1. Introduction

Self-regulated learning (SRL) holds that learners succeed when they control their learning environment by directing and regulating their actions toward a learning goal [1,2]. SRL is characterized by active involvement in the learning process, for example, engaging with the learning material, adapting one’s habits to achieve the learning goals, and assuming responsibility for the learning outcomes [3]. Key theoretical foundations include metacognitive theories focusing on awareness and control of cognition [4]; Zimmerman’s cyclical phases model (forethought, performance, self-reflection) [5]; Pintrich’s model emphasizing motivation [6]; Bandura’s social cognitive theory highlighting self-efficacy [7]; and Deci and Ryan’s self-determination theory emphasizing autonomy, competence, and relatedness [8]. Implementing SRL in education involves teaching metacognitive strategies, promoting goal setting, fostering a growth mindset, and providing constructive feedback. Although the various theories and models bring different perspectives, they agree that SRL comprises the general objectives of goal setting, plan implementation, and process evaluation [9].

SRL has psychological and social-cognitive benefits for both the learner and the instructor. Studies of the inherent benefits of SRL suggest that it positively affects academic achievement, motivation, and students’ beliefs in their abilities [10]. For the learner, SRL facilitates the development of self-efficacy. The process of self-regulation allows learners to actively monitor their progress, set learning goals, and receive feedback to develop an effective learning strategy. As learners experience success and make progress, their confidence grows, leading to increased self-efficacy and motivation. Other psychological benefits include improved metacognitive awareness, enhanced goal orientation, and positive emotional well-being [11,12,13,14]. By cultivating SRL skills, learners can develop important psychological resources and competencies that support lifelong learning, personal growth, and success in various domains of life. When students take responsibility for their learning, educators can focus more on guiding and facilitating rather than constantly directing and controlling inside and outside the classroom, maximizing instructional efficiency [15,16]. This shared responsibility and independence reduce the teacher’s workload and provide a more balanced and supportive learning environment. By empowering students to take control of their learning, instructors can witness increased engagement, motivation, and independence among students. This further reaffirms teachers’ belief in their ability to impact students’ academic and social lives, fostering a sense of professional accomplishment [17].

In the age of generative artificial intelligence (GenAI), where both instructors and learners are inclined to use artificial intelligence to facilitate teaching and learning, GenAI has clearly emerged as the disruptive technology that has brought prompt-based, contextualized content to every computer. GenAI can produce novel content such as text, images, or other forms of media that resemble human-created content. It does so by learning patterns from large datasets and generating content based on those patterns. In recent years, GenAI has been used to create question banks for students to answer based on their current level of understanding and achievement; to generate personalized study plans based on students’ performance, strengths, weaknesses, needs, and interests; and to provide tutoring or feedback to students using natural language processing [18]. For example, TutorAI (https://www.tutorai.me/, accessed on 13 June 2023) is an educational platform that generates interactive content on a topic of the user’s choice, and NOLEJ (https://nolej.io/, accessed on 13 June 2023) offers an e-learning module with interactive video, a glossary, practice, and a summary for a user-chosen topic. Both platforms use GenAI to produce their content far faster than any educator can, and ever more capable GenAI tools continue to be released at a rapid pace. To facilitate learning, students can use GenAI to seek clarification or to have a concept explained in simpler terms. They can obtain immediate, personalized text feedback from GenAI tools that identifies areas for improvement and suggests enhancements to assignments, essays, or even code submissions, in some cases from AI bots designed for these specific uses. Feedback tailored to the learner in this way helps them understand their strengths and weaknesses. Likewise, instructors can use GenAI to generate engaging ideas for lesson materials for a targeted learner group. The capability of GenAI tools to mass-produce materials for multiple learning pathways has eased lesson planning for many instructors. GenAI can take the instructor and class profile into account to provide appropriate scaffolding, learning materials, and activities that cater to each class’s specific needs, supporting a more effective and tailored learning experience.

In particular, chatbots can act as virtual tutors in the absence of an instructor, adult, or peer. GenAI chatbots can engage in personalized conversations with learners, providing an interactive and adaptive learning environment in which they answer questions, explain concepts, and offer instant support, assistance, and guidance. Chatbots in education have gained significant traction in recent years [19]. In a systematic review, Okonkwo and Ade-Ibijola [20] concluded that the benefit of using chatbots in an education context is that “Chatbots encourage personalized learning, provide instant support to users, and allow multiple users to access the same information at the same time”. Educational chatbots can also serve as knowledge repositories, allowing students to retrieve information, review concepts, and engage interactively in practice exercises, thereby supporting their self-regulated learning. By conversing with students, chatbots can also help them articulate their learning objectives, break down goals into actionable steps, and create realistic timelines. This guidance helps students develop a structured approach to their learning and fosters self-regulation in setting and pursuing goals. Although chatbots still have considerable room for improvement, especially in providing motivational and emotional support [21,22,23], their extensive use in education calls for learning analytics research into how chatbots are used for SRL.

Like learning management systems [24,25,26] and massive open online courses [27,28,29,30], whose trace data have been analyzed in previous SRL research, GenAI is an educational tool, one that is emerging at a rapid rate. In recent months, new research has emerged aligning the SRL process with the adoption of GenAI, signalling a new frontier for research in this domain. The work by Chiu et al. examines how motivation and the processes described by self-determination theory can be fostered with ChatGPT [31]. Another work, by Kong and Yang, focuses on domain knowledge learning among adolescent learners [32]. Building on this new and emerging research, this work seeks to answer the following questions: (1) Which SRL model helps describe learning processes for GenAI chatbots? (2) How do we identify and classify SRL processes for prompts used for educational chatbots? (3) What learning analytics can we perform?

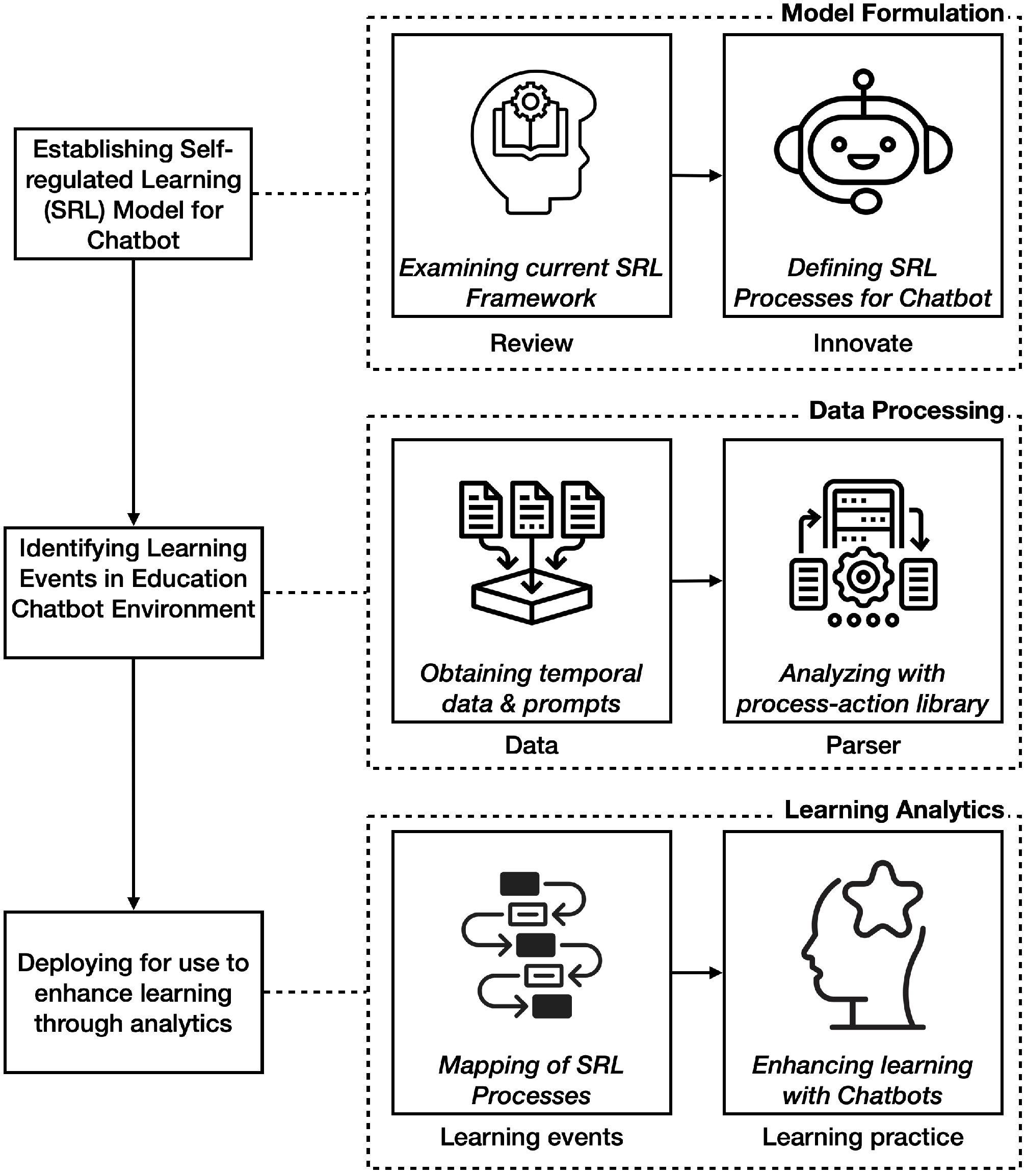

To answer these research questions, we examine existing SRL frameworks in Section 2 and aggregate our findings to develop a refreshed framework suitable for examining SRL in the prompts provided to chatbots during the learning process. Next, as a proof-of-concept, we analyze two students’ interactions with a chatbot in Section 3, classifying their prompts according to the framework. The various stages of the learning process are then examined for learning analytics, as reported in Section 4. The scope for establishing an SRL framework for GenAI chatbots is illustrated in Figure 1.

2. SRL Framework for Chatbots

Research into self-regulated learning began as early as the 1970s. Since then, several frameworks, theories, and models of SRL have emerged and been developed, some more popular than others and some more closely aligned with one another. This section examines a few SRL models to answer the first research question. As this is not a systematic review, we do not evaluate all existing models but explain why specific models were chosen. We recommend the systematic review by Panadero [9] for an overview of the other models. All the models highlighted in this section and chosen for SRL analysis with GenAI chatbots are aligned in how they describe and develop the metacognitive aspects of SRL. This gives us solid ground upon which to develop our framework, and we encourage readers and researchers to explore using other models to answer the same research question.

2.1. Existing SRL Frameworks and Models

Zimmerman is a prominent researcher who has developed three different SRL models, each with a different focus. The first model, developed with Pons, is a set of 15 self-regulation strategies that comprise self-regulated learning behaviors [33]. This model is useful in identifying the traits of a self-regulated learner. By extension, trace data and prompts provided by the learner to the chatbot can be identified with one or more of these traits, which provides evidence of SRL. The second model, termed the Triadic Analysis of SRL, focuses on the interactions between the environment, behavior, and the person [10]. The triadic analysis applies Bandura’s triadic model of social cognition, which asserts the concept of reciprocal determinism, whereby a person’s behavior is influenced by cognitive processes and environmental factors such as social stimuli [7]. While the triadic analysis helps explain behaviors broadly, it is not immediately apparent how it can be made process-driven, which limits its use as a framework within which to answer the research question. The third model, which draws on social-cognitive theory, is Zimmerman’s Cyclical Phases Model [34,35]; it explains the interrelation of metacognitive and motivational processes at the individual level in three phases. The model classifies sub-processes under each phase: forethought (task analysis and self-motivating beliefs), performance (self-control and self-observation), and self-reflection (self-judgment and self-reaction). Owing to the comprehensiveness of the first model and the popularity and depth of the third, these models are often used for analysis in SRL research.
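The cyclical structure of the third model can be made concrete in a few lines. The sketch below is a minimal illustration, not part of Zimmerman’s work: it encodes the three phases and their sub-processes as listed above and assumes, as the word cyclical implies, that self-reflection feeds back into forethought. The names `CYCLICAL_PHASES`, `next_phase`, and `phase_of` are hypothetical.

```python
# Zimmerman's Cyclical Phases Model as described in the text (illustrative encoding).
CYCLICAL_PHASES = {
    "forethought": ("task analysis", "self-motivating beliefs"),
    "performance": ("self-control", "self-observation"),
    "self-reflection": ("self-judgment", "self-reaction"),
}
# Dicts preserve insertion order, so this lists the phases in cycle order.
PHASE_ORDER = list(CYCLICAL_PHASES)


def next_phase(phase: str) -> str:
    """Return the phase that follows `phase`; self-reflection wraps back to forethought."""
    i = PHASE_ORDER.index(phase)
    return PHASE_ORDER[(i + 1) % len(PHASE_ORDER)]


def phase_of(subprocess: str) -> str:
    """Return the phase under which a sub-process is classified."""
    for phase, subprocesses in CYCLICAL_PHASES.items():
        if subprocess in subprocesses:
            return phase
    raise KeyError(f"unknown sub-process: {subprocess}")


print(next_phase("self-reflection"))  # forethought
print(phase_of("self-observation"))   # performance
```

The same lookup-plus-successor pattern is one way a tagged prompt could be related back to a phase of an established SRL model.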

Pintrich’s work in the field of SRL has been influential owing to his contributions to clarifying the conceptual framework of SRL [6]. His efforts to advance empirical research on the relationship between SRL and motivation, as well as his development of the widely used Motivated Strategies for Learning Questionnaire (MSLQ), established him in the field of SRL research. According to Pintrich’s SRL model, there are four phases: forethought, planning, and activation; monitoring; control; and reaction and reflection. Each phase includes different areas for self-regulation, namely cognition, motivation/affect, behavior, and context. The model also integrates SRL processes, including target goal setting, activating prior knowledge, making efficacy judgments, and self-observing behavior. The well-organized phases and areas of SRL make it easy for an early adopter to adapt the model to a different context.

Winne and Hadwin’s SRL model strongly emphasizes the metacognitive perspective. They view self-regulated students as actively managing their learning through monitoring and the use of cognitive strategies [36,37]. Their model highlights the goal-driven nature of SRL and the influence of self-regulatory actions on motivation. The development of Winne and Hadwin’s SRL model is guided by Information Processing Theory [38,39], focusing on the cognitive and metacognitive aspects of SRL in greater detail than the aforementioned models. The model consists of four interconnected and recursive phases: task definition; goal setting and planning; enacting study tactics and strategies; and metacognitively adapting studying. The model further includes five facets of tasks: Conditions, Operations, Products, Evaluations, and Standards. Conditions refer to the resources and constraints of the task or environment; Operations encompass the cognitive processes and strategies used by students; Products represent the information created through these operations; Evaluations involve feedback on the fit between products and standards; and Standards refer to the criteria against which products are monitored [36]. Winne and Hadwin’s SRL model has been widely used, particularly in research involving computer-supported learning settings, which makes it a good candidate for use in the chatbot setting [9,40].

2.2. Proposed Framework for Chatbot

We amalgamate the SRL processes from the previous section to discover the underlying patterns of how learners use chatbots for SRL. We note that there are multiple ways to perform this aggregation. Because the technology is evolving rapidly, the proposed framework is tailored to the current capabilities of conversational chatbots for educational purposes. The SRL framework introduced here is a simple starting point from which to study the SRL process from a data perspective. Conversation data are the most accessible data that chatbots provide; by codifying these data, we can study students’ SRL processes by integrating Zimmerman’s, Pintrich’s, and Winne and Hadwin’s models into one.

The process–action framework is developed by drawing strongly on the metacognitive perspective of Winne and Hadwin’s model (called “process”), the four phases of SRL by Pintrich, and Zimmerman’s sub-processes (called “actions”). Accordingly, the proposed SRL model for chatbots has four learning processes, namely defining, seeking, engaging, and reflecting, each with its associated learning actions.

Table 1 summarizes these processes, with a description and an example prompt for each process–action. Note that chatbots are often not used in isolation; therefore, certain actions may reflect user interaction with the chatbot in support of another tool. With Table 1, we can tag each prompt submitted to the chatbot with an associated process and action.
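A process–action library of this kind can be held as a simple lookup from action codes to processes. In the minimal sketch below, the four process names come from the framework, but the action codes (`D1`, `S1`, and so on) are hypothetical placeholders, since the actual codes and descriptions are those of Table 1; the helper names are likewise illustrative. Deriving each prompt’s process from its action keeps every action inside exactly one process.

```python
from dataclasses import dataclass

PROCESSES = ("defining", "seeking", "engaging", "reflecting")

# Hypothetical action codes standing in for the rows of Table 1.
# Each action maps to exactly one process.
ACTION_TO_PROCESS = {
    "D1": "defining",
    "D2": "defining",
    "S1": "seeking",
    "S2": "seeking",
    "E1": "engaging",
    "E2": "engaging",
    "R1": "reflecting",
}
assert set(ACTION_TO_PROCESS.values()) <= set(PROCESSES)


@dataclass(frozen=True)
class TaggedPrompt:
    text: str
    action: str

    @property
    def process(self) -> str:
        # The process is derived from the action, never stored separately.
        return ACTION_TO_PROCESS[self.action]


def tag(prompts_with_codes):
    """Wrap (prompt text, action code) pairs chosen by an annotator as TaggedPrompts."""
    tagged = []
    for text, code in prompts_with_codes:
        if code not in ACTION_TO_PROCESS:
            raise ValueError(f"unknown action code: {code}")
        tagged.append(TaggedPrompt(text, code))
    return tagged


tagged = tag([("<prompt 1>", "S1"), ("<prompt 2>", "E1")])
print([p.process for p in tagged])  # ['seeking', 'engaging']
```

An unknown code raises an error rather than being silently accepted, which helps catch annotation slips before any analysis is run.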

3. Data Processing and Learning Process-Action Map

This section introduces how the data processing is performed to answer the second research question. To understand how chatbots can be useful in SRL, we now turn to concepts in process mining to highlight what the SRL processes are when using educational chatbots. Raw data are collected from learners by exporting their chat conversation history. This can be carried out natively in ChatGPT, the chatbot of choice in this work. In other educational contexts and class sizes, specialized chatbots can be built to automatically store conversation histories for processing.

As shown in Figure 1, data processing involves parsing the raw data, which contain the prompts submitted by the learner, through the process–action library described in Table 1. Each prompt is manually assigned the process–action code that best fits its description and example prompt. For brevity, processes and actions are generally referred to by their codes.

In this exploratory work, we simplified the process by subjecting the classification of prompts to the following constraints: (1) prompts are arranged in the order in which they are supplied to the chatbot; (2) each prompt is assigned exactly one action label; (3) each action label belongs to exactly one process; (4) if successive prompts belong to actions in the same process, the step between them is self-referential; and (5) if two successive prompts belong to actions in different processes, the step between them is transitionary. Variations on these constraints could yield different outcomes, so it is instructive to compare different methods of assigning process–action labels.
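Constraints (4) and (5) reduce to comparing the processes of each pair of successive prompts. A minimal sketch, assuming the prompts have already been tagged and placed in submission order; the function name and the example sequence are hypothetical and not taken from either learner:

```python
from typing import List, Tuple


def classify_steps(processes: List[str]) -> List[Tuple[str, str, str]]:
    """Label each pair of successive prompts as self-referential or transitionary.

    `processes` holds the process of each prompt in the order the prompts were
    supplied to the chatbot (constraint 1).
    """
    steps = []
    for src, dst in zip(processes, processes[1:]):
        kind = "self-referential" if src == dst else "transitionary"
        steps.append((src, dst, kind))
    return steps


seq = ["defining", "seeking", "seeking", "engaging"]
for step in classify_steps(seq):
    print(step)
# ('defining', 'seeking', 'transitionary')
# ('seeking', 'seeking', 'self-referential')
# ('seeking', 'engaging', 'transitionary')
```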

Following this classification and labeling of prompts with actions and processes, we perform process mining to discover how learners use chatbots. Process mining is a data-driven approach, used primarily in business analytics, that aims to discover, monitor, and improve real-life processes by analyzing event logs recorded by information systems. In the context of SRL, the events in the log correspond to the prompts, and the process being analyzed is the learning process. This involves extracting insights and knowledge from the learning data generated during chatbot interactions to better understand how SRL processes work.
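In process-mining terms, each learner (or conversation) is a case and each tagged prompt is an event whose activity is its action code. The minimal sketch below, with hypothetical learner ids, codes, and function names, flattens tagged sessions into such an event log, using the prompt position as a stand-in for a timestamp. If the log were loaded into a dedicated process-mining library such as PM4Py, the columns would likely need renaming to that library’s conventions (for example, XES-style names such as `case:concept:name` and `concept:name`); that step is not shown.

```python
import csv
import io


def to_event_log(sessions):
    """Flatten tagged sessions into event-log rows: one row per prompt.

    `sessions` maps a case id (a learner or conversation) to that case's
    action codes in prompt order; `position` stands in for a timestamp.
    """
    rows = []
    for case_id, actions in sessions.items():
        for position, action in enumerate(actions, start=1):
            rows.append({"case_id": case_id, "position": position, "activity": action})
    return rows


def to_csv(rows) -> str:
    """Serialize event-log rows as CSV text for use in other tools."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["case_id", "position", "activity"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical learners and action codes.
log = to_event_log({"learner_A": ["D1", "S1", "E1"], "learner_B": ["D1", "D2"]})
print(to_csv(log))
```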

Following the collection of prompt data, an annotator processes the data with the process–action library by assigning a code to each conversation line. In more complex annotation tasks that require agreement across multiple annotators, Inter-Annotator Agreement (IAA) methods can be adopted. IAA is used in various fields, such as natural language processing, information retrieval, and content analysis, where multiple annotators labeling or codifying data independently need to reach a measurable degree of agreement [41,42]. Once reasonable agreement is reached, the final agreed codes are used to process the data for analysis. One form of analysis is a process–action map, generated by constructing an adjacency matrix that counts how often each code is immediately followed by another in the prompt flow. This adjacency matrix is then converted into a Markov chain, where each action is a node and directed edges from one node to another show the sequence of the learning process.
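The text does not prescribe a particular agreement statistic. One common choice for two annotators is Cohen’s kappa, κ = (p_o − p_e)/(1 − p_e), which corrects the observed proportion of matching codes p_o for the agreement p_e expected by chance. The minimal sketch below implements it from that definition, assuming both annotators coded the same prompts in the same order; the example labels are hypothetical. scikit-learn offers an equivalent `cohen_kappa_score`, and Fleiss’ kappa is a common alternative for more than two annotators.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who coded the same prompts in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must code the same non-empty list of prompts")
    n = len(labels_a)
    # p_o: proportion of prompts on which the annotators agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: agreement expected by chance from each annotator's code frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1:
        return 1.0  # both annotators used one identical code throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes from two annotators for the same four prompts.
annotator_1 = ["S1", "S1", "E1", "E1"]
annotator_2 = ["S1", "E1", "E1", "E1"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on three of four prompts (p_o = 0.75), chance agreement is 0.5, and κ = 0.5. What counts as "reasonable agreement" is a threshold the research team would need to set.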

Proof-of-Concept

As a proof-of-concept, we provide two examples of students engaging OpenAI’s ChatGPT (GPT-3.5). Two learners were tasked with choosing a topic they would like to learn and engaging the chatbot as a virtual intelligent tutor. The learners could stop when they felt they had sufficiently learned the topic. The first student is new to a topic in statistics and is about to learn about the Shapiro–Wilk test, a topic covered in an intermediate-level undergraduate statistics course. The prompts provided to ChatGPT are listed in order in Table 2 (note that typographical errors are deliberately left unedited), together with their associated process–action tags. The adjacency matrix of this prompt flow is given in Table 3.
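The step from adjacency matrix to Markov chain can be sketched with NumPy. The action codes and sequence below are hypothetical and do not reproduce Table 3, and the function names are illustrative. Each entry A[i, j] counts how often action i is immediately followed by action j; row-normalizing A gives the transition probabilities that can serve as directed edge weights in a process–action map.

```python
import numpy as np


def adjacency_matrix(actions, codes):
    """A[i, j] counts how often action codes[i] is immediately followed by codes[j]."""
    index = {code: k for k, code in enumerate(codes)}
    A = np.zeros((len(codes), len(codes)), dtype=int)
    for src, dst in zip(actions, actions[1:]):
        A[index[src], index[dst]] += 1
    return A


def transition_matrix(A):
    """Row-normalize counts into Markov transition probabilities.

    Rows with no outgoing transitions (e.g. a session's final action) stay zero.
    """
    totals = A.sum(axis=1, keepdims=True)
    return np.divide(A, totals, out=np.zeros(A.shape, dtype=float), where=totals > 0)


# Hypothetical action codes and prompt sequence (not the data in Table 3).
codes = ["D1", "S1", "E1", "R1"]
actions = ["D1", "S1", "E1", "S1", "E1", "E1", "R1"]
A = adjacency_matrix(actions, codes)
P = transition_matrix(A)

# Directed, weighted edges of the process-action map.
edges = [(codes[i], codes[j], round(float(P[i, j]), 2)) for i, j in zip(*np.nonzero(A))]
print(edges)
# [('D1', 'S1', 1.0), ('S1', 'E1', 1.0), ('E1', 'S1', 0.33), ('E1', 'E1', 0.33), ('E1', 'R1', 0.33)]
```

Leaving the row of a final action at zero is one modeling choice; treating such an action as an absorbing state (a 1 on the diagonal) would make every row a proper probability distribution.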

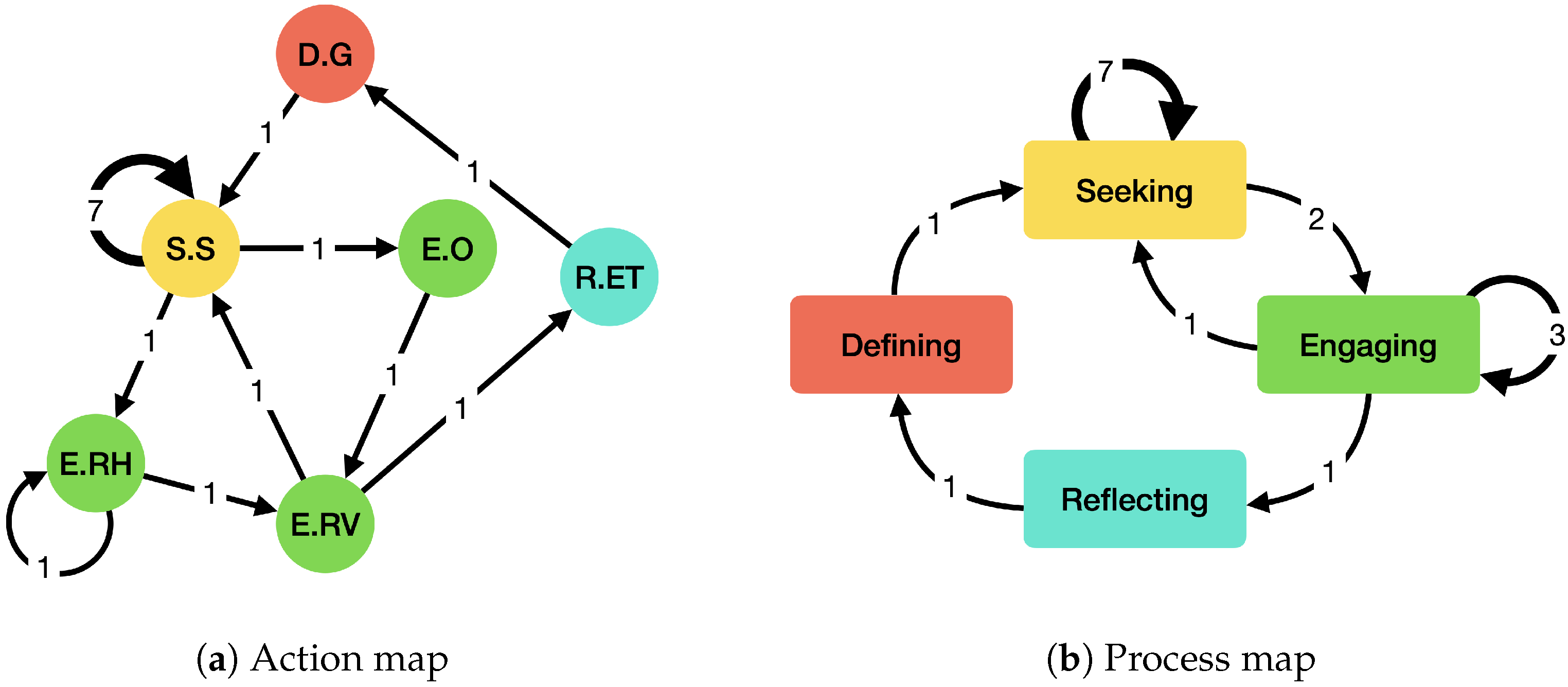

Correspondingly,

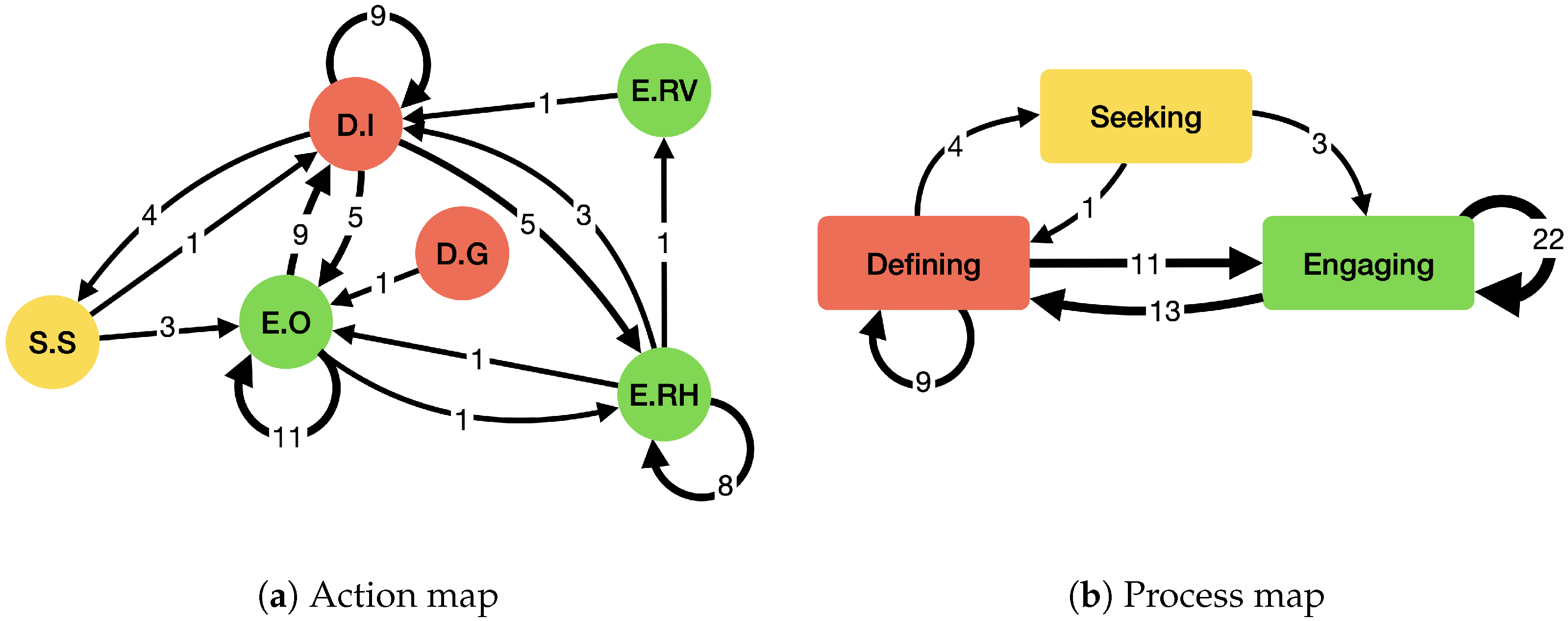

Figure 2 shows the learning process map for this student at both the action and process levels. In the second example, an adult learner has prior knowledge of the topic and is studying project management in preparation for the Certified Associate in Project Management exam. The learning process map is shown in

Figure 3.

In our first example, we note that the learner engaged with the chatbot using relatively few prompts (17), with most of the SRL process in the seeking and engaging phases. The second learner engaged with the chatbot more in the defining and engaging phases. Even this cursory information provides some insight into the SRL of these two learners, albeit in different learning contexts.
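Observations such as "most of the SRL process in the seeking and engaging phases" can be quantified as the share of prompts falling in each process. A minimal sketch, with a hypothetical function name and a hypothetical sequence rather than either learner’s actual prompts:

```python
from collections import Counter

PROCESSES = ("defining", "seeking", "engaging", "reflecting")


def process_profile(processes):
    """Share of prompts in each SRL process, reported in framework order."""
    if not processes:
        raise ValueError("no prompts to profile")
    counts = Counter(processes)
    return {p: counts[p] / len(processes) for p in PROCESSES}


# Hypothetical tagged sequence (process of each prompt, in order).
sequence = ["defining", "seeking", "seeking", "engaging",
            "engaging", "engaging", "seeking", "reflecting"]
print(process_profile(sequence))
# {'defining': 0.125, 'seeking': 0.375, 'engaging': 0.375, 'reflecting': 0.125}
```

Reporting shares rather than raw counts makes learners with very different numbers of prompts easier to compare.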

4. Learning Analytics Discussion

A key benefit of process mining is that SRL researchers need not reinvent the wheel. Process mining is an established method in business analytics with a track record of optimizing processes, and its advanced tools can likewise drive SRL research. The process–action map introduced in this work covers only a subset of what process mining offers. For example, to know where students spent the most time engaging a chatbot in SRL, prompts alone are not sufficient as raw data. Instead, temporal data must be collected and fed into the parser to assign weighted edges that display temporal information. Similarly, for finer resolution on how students interact with the chatbot, and hence a better classification of learning actions and processes (for example, when a student highlights, copies, and pastes answers), the chatbot infrastructure must provide the means to collect such data. Regardless, we see benefits in a chatbot’s potential as part of a person’s SRL ecosystem, with considerable potential not only for learners and educators but also for instructional designers and researchers.
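If timestamps were recorded alongside each prompt, temporally weighted edges could be computed as in the minimal sketch below. It assumes, as a simplification of our own, that the time between two prompts is spent on the transition joining them; the timestamps, codes, and function name are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def temporal_edge_weights(events):
    """Mean seconds elapsed on each directed edge between successive actions.

    `events` is a list of (ISO timestamp, action code) pairs in prompt order.
    The gap between two prompts is attributed to the edge joining them, as a
    rough proxy for time spent before moving on.
    """
    durations = defaultdict(list)
    for (t0, a0), (t1, a1) in zip(events, events[1:]):
        gap = (datetime.fromisoformat(t1) - datetime.fromisoformat(t0)).total_seconds()
        durations[(a0, a1)].append(gap)
    return {edge: sum(gaps) / len(gaps) for edge, gaps in durations.items()}


# Hypothetical timestamps and action codes.
events = [
    ("2024-01-01T10:00:00", "S1"),
    ("2024-01-01T10:02:00", "E1"),
    ("2024-01-01T10:03:30", "S1"),
    ("2024-01-01T10:04:00", "E1"),
]
weights = temporal_edge_weights(events)
print(weights)  # {('S1', 'E1'): 75.0, ('E1', 'S1'): 90.0}
```

Gaps between prompts also include time spent reading, thinking, or working outside the chatbot, which is why richer interaction data would sharpen this measure.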

By applying process mining techniques, educators can analyze the sequence of events and actions learners perform during the learning process: in particular, which learning activities students engage in, how they engage with learning materials, what strategies they employ, and how their learning behaviors evolve. In this work, we demonstrate one of the basic functions of process mining: visualizing learning processes. The learning process–action map lets instructors visualize learners’ activities as process flowcharts. These visualizations can help identify patterns, bottlenecks, and deviations from expected learning behaviors, allowing for a deeper understanding of the learning process. In the first example, we note that the student participates actively in seeking and engaging, but much less in defining and reflecting. This could be interpreted as indicating that (1) the student is not participating fully in the SRL cycle, (2) the student is unsure how to prompt the chatbot to help regulate these two processes, or (3) the student has found the chatbot unhelpful in facilitating these two processes and thus did not perform these steps. These possible inferences should be confirmed by further educational research. For the learner, this could translate into personalized recommendations to engage in learning actions absent from the student’s process–action map.
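Such recommendations could start from a simple check of which processes are missing or rare in a learner’s sequence. The sketch below is a minimal illustration: the function names, the `min_share` threshold, and the suggestion texts are hypothetical choices, loosely based on the chatbot uses described in the Introduction, and would need validating with educators.

```python
PROCESSES = ("defining", "seeking", "engaging", "reflecting")

# Hypothetical nudges, loosely based on the chatbot uses described in the Introduction.
SUGGESTIONS = {
    "defining": "Ask the chatbot to help you state a learning goal and break it into steps.",
    "seeking": "Ask the chatbot to explain or clarify a concept you are unsure about.",
    "engaging": "Work through a practice exercise with the chatbot.",
    "reflecting": "Ask the chatbot to quiz you on, or help you review, what you have learned.",
}


def underused_processes(processes, min_share=0.1):
    """Processes whose share of a learner's prompts falls below `min_share` (hypothetical threshold)."""
    if not processes:
        return list(PROCESSES)
    total = len(processes)
    return [p for p in PROCESSES if processes.count(p) / total < min_share]


def recommendations(processes, min_share=0.1):
    """Suggestion texts for each underused process."""
    return [SUGGESTIONS[p] for p in underused_processes(processes, min_share)]


sequence = ["seeking"] * 4 + ["engaging"] * 5 + ["reflecting"]
print(underused_processes(sequence, min_share=0.2))  # ['defining', 'reflecting']
```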

On a broader scale, with multiple students’ process–action maps, comparative studies can be conducted by analyzing the prompts given to the chatbot and the associated learning actions and processes. Educators can identify indicators of effective SRL, such as time management, goal setting, metacognitive awareness, and self-assessment techniques. These insights can be used to evaluate the effectiveness of interventions or support tools that promote SRL for individuals and groups of students. Furthermore, by understanding how groups of learners interact with the chatbot and the learning resources it provides, instructors can identify usability issues, content gaps, or mismatches between learner expectations and the chatbot learning environment. Further analysis using process mining techniques can also help instructors and instructional designers identify areas for improvement in learning materials. By understanding the limitations of chatbots, instructors can supplement these materials rather than leave students prompting repeatedly without receiving a satisfactory answer.
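A first comparison across groups of students can average each learner’s process shares within a group. The minimal sketch below uses hypothetical group names, learners, and function names; it weights every learner equally regardless of how many prompts they submitted, which is one design choice among several (pooling all prompts in a group would instead weight heavy users more).

```python
from collections import Counter
from statistics import mean

PROCESSES = ("defining", "seeking", "engaging", "reflecting")


def shares(processes):
    """Share of one learner's prompts falling in each process."""
    counts = Counter(processes)
    return {p: counts[p] / len(processes) for p in PROCESSES}


def compare_groups(groups):
    """Mean per-learner process shares for each group of learners.

    `groups` maps a group name (e.g. a class or an intervention arm) to a list
    of learners, each given as the process labels of their prompts in order.
    """
    result = {}
    for name, learners in groups.items():
        profiles = [shares(seq) for seq in learners]
        result[name] = {p: mean(prof[p] for prof in profiles) for p in PROCESSES}
    return result


# Hypothetical groups, not data from this study.
groups = {
    "group_A": [["seeking", "engaging"], ["seeking", "seeking"]],
    "group_B": [["defining", "seeking", "engaging", "reflecting"]],
}
for name, profile in compare_groups(groups).items():
    print(name, profile)
```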

At a more advanced level, developers can collaborate with instructors to build chatbots that provide learners with personalized real-time feedback or act as intelligent tutoring systems. By monitoring learners’ actions and progress, process mining techniques can identify moments when learners may benefit from specific interventions, such as reminders, prompts, or suggestions to adopt more effective learning strategies. Process mining can also help identify meaningful indicators or metrics for learning analytics. Researchers can identify key performance indicators or process metrics that correlate with successful learning outcomes. These indicators can then be used to measure and monitor learners’ progress and evaluate the effectiveness of interventions. Process mining can further provide insights into the most effective learning paths taken by successful learners. By studying the process–action maps of students in different performance groups, researchers can identify common sequences of actions or activities associated with positive learning outcomes. This knowledge can be used to optimize the sequencing and organization of learning materials, guiding learners along efficient and effective learning paths toward their learning goals. Lastly, natural language processing techniques such as text classification could be used to automate the assignment of process–action tags, with inter-annotator agreement measures used to check the automated labels against those of human annotators.
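One simple way to look for common sequences is to count short runs of consecutive actions (n-grams) across the learners in a performance group. This is a deliberately simple stand-in for the sequential-pattern and process-discovery methods found in process mining tools; the function name and the example sequences are hypothetical.

```python
from collections import Counter


def common_ngrams(sequences, n=3, top=5):
    """Most frequent runs of n consecutive actions across a group of learners.

    Each n-gram is counted at most once per learner, so a count is the number
    of learners whose sequence contains that run.
    """
    counts = Counter()
    for seq in sequences:
        runs = {tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)}
        counts.update(runs)
    return counts.most_common(top)


# Hypothetical action sequences for learners in one performance group.
high_performers = [
    ["D1", "S1", "E1", "R1"],
    ["D1", "S1", "E1", "E1"],
    ["S1", "E1", "R1"],
]
print(common_ngrams(high_performers, n=2, top=1))  # [(('S1', 'E1'), 3)]
```

Running the same count on a lower-performing group and comparing the two lists would be one way to surface candidate learning paths for further study.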

5. Implementation Considerations and Conclusions

This work considers SRL at the individual level and how it could potentially be aggregated to derive subject- and standard-specific insights. However, SRL is a wide field with many models focusing on various aspects of learning, each with its own merit. Therefore, while this work seeks to address the cognitive, metacognitive, and motivational aspects, in-depth research is still needed to integrate other important aspects of SRL in the age of rapid GenAI adoption. These include behavioral models, where chatbot design would focus on observable behaviors and reinforcement; constructivist models, where the focus is on active learning and the construction of knowledge through interaction; and collaborative learning models, where chatbots would facilitate interactions between multiple users, or between users and the chatbot acting as a mediator in group activities [43,44].

One example of such an expansion is collaborative learning environments, augmented by chatbots, that foster shared understanding among learners. Chatbots designed with collaborative learning models can facilitate group discussions, provide feedback, and promote cooperative problem-solving. This collaborative approach can deepen learners’ understanding of the subject matter through shared perspectives and insights while giving them the added advantage of access to the knowledge and information that GenAI provides. Such collaborative learning environments also facilitate and encourage peer teaching and feedback mechanisms within the learning process. Groups of students can also leverage GenAI to synthesize and summarize information from diverse sources. AI algorithms can analyze vast amounts of data and distill key insights, facilitating the creation of shared knowledge bases for complex topics.

In leveraging advanced technologies such as ChatGPT, it is imperative to confront the inherent limitations and ethical considerations associated with their deployment. One of the primary concerns surrounding GenAI is the potential for generating misinformation, including chatbot hallucinations. While the model is designed to provide accurate and contextually relevant information, it may inadvertently produce misleading or inaccurate content. In the context of learning, this could be detrimental, especially when students are unaware that the information they received is false. Recognizing this limitation and implementing measures to mitigate the risk of disseminating unreliable information is vital. We advocate a human-in-the-loop approach to address the potential for misinformation and other limitations. This involves educator oversight of and intervention in the use of ChatGPT, so that the technology can be used while reducing the chance of learners receiving misinformation. By incorporating human judgment, we can enhance the reliability of the generated content and minimize the risks associated with automated decision-making.

To bolster the credibility and acceptance of our proposed solution, it is imperative to conduct user acceptance studies. These studies gather feedback and insights from potential end-users, stakeholders, and relevant communities. Understanding user perspectives and preferences will significantly refine our approach, ensuring it aligns with real-world needs and concerns. To ensure that validation is ongoing, we recommend a continuous and iterative approach incorporating feedback from pilot implementations and user studies to refine and adapt the solution over time. By engaging in an iterative validation process, we can adapt to evolving requirements and fine-tune our approach for optimal performance. Validation efforts, including scenario-based applications such as the one presented in our proof-of-concept, play a pivotal role in strengthening the overall credibility of our proposed solution. A robust validation process instills confidence in stakeholders and provides empirical evidence of our approach’s practical benefits and effectiveness.

In conclusion, this exploratory research aimed to investigate and answer three fundamental research questions relating to SRL processes for GenAI chatbots. Firstly, we explored the SRL models that effectively describe the learning processes for these chatbots. Through an in-depth analysis of the chatbot interactions and event logs, we identified a process–action library that provides a comprehensive framework for understanding and explaining the SRL behaviors exhibited by learners engaging with GenAI chatbots. Next, we addressed identifying and classifying SRL processes for prompts used in educational chatbots. We successfully developed a methodology to categorize and classify the SRL processes associated with different prompts by examining the process–action tagging and leveraging process mining techniques. This classification approach provides a deeper understanding of the specific SRL strategies employed by learners and allows for the customization and adaptation of prompts to enhance the SRL experience. Lastly, we explored the potential applications of learning analytics that can be performed to extract valuable insights from the SRL processes observed from interacting with educational chatbots. By employing process mining techniques, we could derive meaningful learning analytics indicators. Leveraging these analytics enables educators and designers to monitor learners’ progress, evaluate the effectiveness of interventions, and provide personalized feedback, ultimately fostering enhanced SRL experiences.

This research has shed light on the SRL processes exhibited by learners in their interactions with GenAI chatbots. We have advanced our understanding of effectively supporting and optimizing SRL in an age where educational chatbots are the mainstay. These findings have significant implications for the design of personalized learning environments, the improvement of learning outcomes, and the promotion of learner autonomy and skills. Future research can build upon these findings to further explore the dynamics of SRL in chatbot-assisted learning and refine the methodologies and techniques employed for even more robust and effective self-regulated learning experiences.