3. Method/Approach

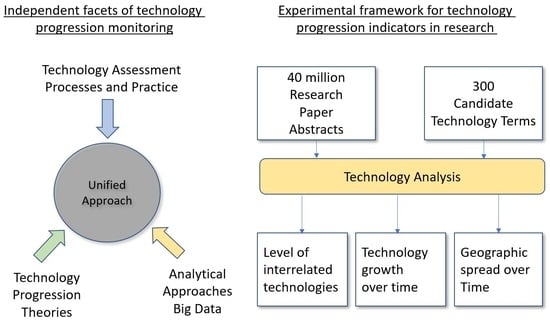

To test the research question, indicators of the progression of a set of technologies within research were developed. These indicators measured the prevalence and relationship of a set of ‘input technologies’ in academic material, using open and public data, to produce measures of the progression. The input was a set of technology terms, with a date against each showing when they were first identified. Several sources of such terms were used, as described below. The sources chosen offered technology terms over a period of years (between 10 and 20), allowing the opportunity for historic analysis.

This paper seeks not to devise methods of identifying new technologies themselves, but instead to assess candidate technologies over time. The measures of technology progression devised were:

The level of activity or interest in a technology topic over time in academic papers.

The geographical progression of technology topics over time, where they begin and how they progress geographically.

The correlation between the progression of the technology topics, identifying relationships between them.

The occurrence of technologies over time in research related to specific subject areas such as medicine, social sciences, or law.

The approach taken is to exploit available information on technology progression over two decades (i.e., perform retrospective analysis).

Given the successful technologies from the last two decades are now known, the method’s success in identifying the growth of a set of technologies over others can be assessed over the period proposed (two decades from 2000 to 2020). The key elements of the experimentation were:

A list of technologies which have evolved in the last 20 years, and where available an indication of when they became mature.

A representative set of information describing the academic research undertaken in the last 20 years. The abstract of a paper is sufficient to understand its subject/aim, it was decided that reviewing the title/abstract and publication date would be sufficient.

Software to ingest and process the reference sources of technology progression and to provide relevant analytics to allow several indicators to be produced and assessed.

A capability to compare the indicator output for each year over the last 20 years to the external technology sources chosen (in 1 above) and thus assess the viability of the approach.

3.1. Selection of Technology Sources

Sources of technology progression which were considered as potential inputs were measured against the following criteria:

Publicly available commentary on technology

A widely recognized source of technology assessment

Available for at least 10 years of the last two decades.

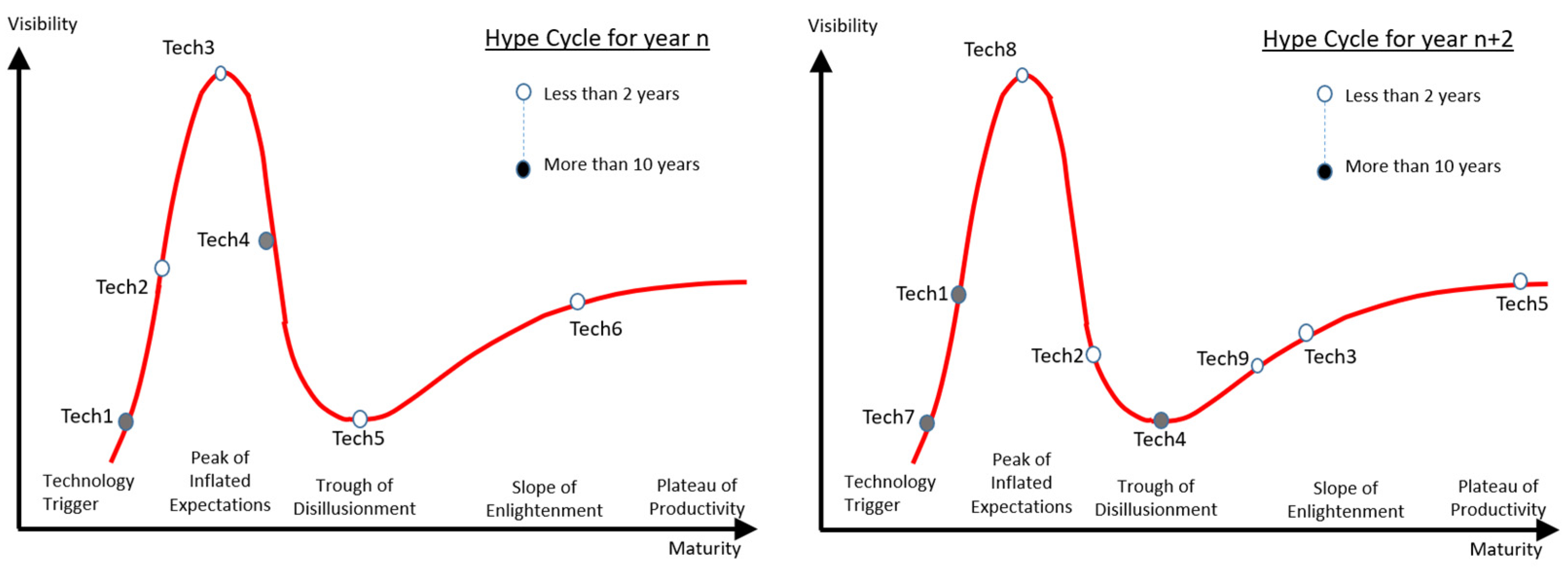

Two enduring sources meeting the criteria above were business research organization Gartner and the Economist magazine. Both Gartner and the Economist have published information on technology progression over the period considered. Gartner has openly published (annually) a technology assessment over the last 20 years (the annotated hype cycle diagram), which is covered further in the third research question. The Economist has a regular section covering science and technology, and a regular quarterly bulletin.

With both Gartner and the Economist, a progression over time of the technologies they highlight-and in Gartner’s case the relative maturity were available.

Gartner’s public offering, the ‘hype cycle’, published annually, is a list of technologies identified on a curve showing their assessed point of evolution (

Figure 4).

The concept of the ‘hype cycle’ is outlined in Linden & Fenn (2003) [

1]. Technology topics low on the cycle (to the left) are considered immature candidates and topics nearer the right of the diagram are considered a success. Using the technology topics identified each year (either the first occurrence or the mature end of the graph) gives a reference for comparison (when the technology was noted by Gartner’s assessment and when they believe it became ‘mature’).

Each week the Economist newspaper looks at the implications of various technologies. It also includes regular quarterly reviews which focus on emerging technology topics. Copies of the Economist were available for two decades.

By reviewing Gartner’s hype cycles over the two decades chosen and the Economist newspaper over the same period a list of technology terms was identified together with the date occurrence. This was a relatively manual process, and it is difficult to ensure total reliability in detection of terms. It should therefore be noted that these sources were simply used to generate representative lists of technology for each year. This paper does not try to assess the accuracy of these sources. They potentially offer between them an indication of what technologies were considered as emerging from 2000 to 2020.

3.2. Selection of Academic Paper Data

Paper metadata (paper title, abstract and publication date) was used to perform the analysis. If a technology term was not identified in the title or abstract, then it was unlikely to be a significant part of the research. Other information—such as the authors, the geographic location of the research and the topic area of the paper (e.g., medicine) would also be beneficial. Paper metadata is generally publicly available whereas the full paper is often not. Thus, a wider coverage could be achieved simply using the metadata.

Several sources of academic paper summaries were considered including Google Scholar and academic reference organizations such as Elsevier. For this work though, it was important to be able to harvest information programmatically, which is problematic with most sources (e.g., Google) as automated processing requires special permissions; this was found to be true of most commercial sources. However, most academic organizations are now contributing to the Open Archive Initiative (OAI), which provides a common, machine access mechanism definition with which organizations comply, and a list of contributing organizations and the URLs for their OAI access point. The OAI started in the late 1990s and is now supported by a significant number of academic organizations and journals globally. There are currently around 7000 stated member organizations. Some of these do not currently publish an OAI URL or have some form of protection on their URL. The latter may be resolvable with a specific request to the organization but without such action, 3219 sites can be accessed on an open basis (this number was established by interrogation and validation of each URL response automatically) and these have all been used in the experiment. Although many academic and not for profit organizations have joined the OAI initiative later than the 1990s, they are typically loading all historic papers. This means that at least two decades of paper metadata is typically available for each new organization as it comes online.

3.3. Analysis Framework

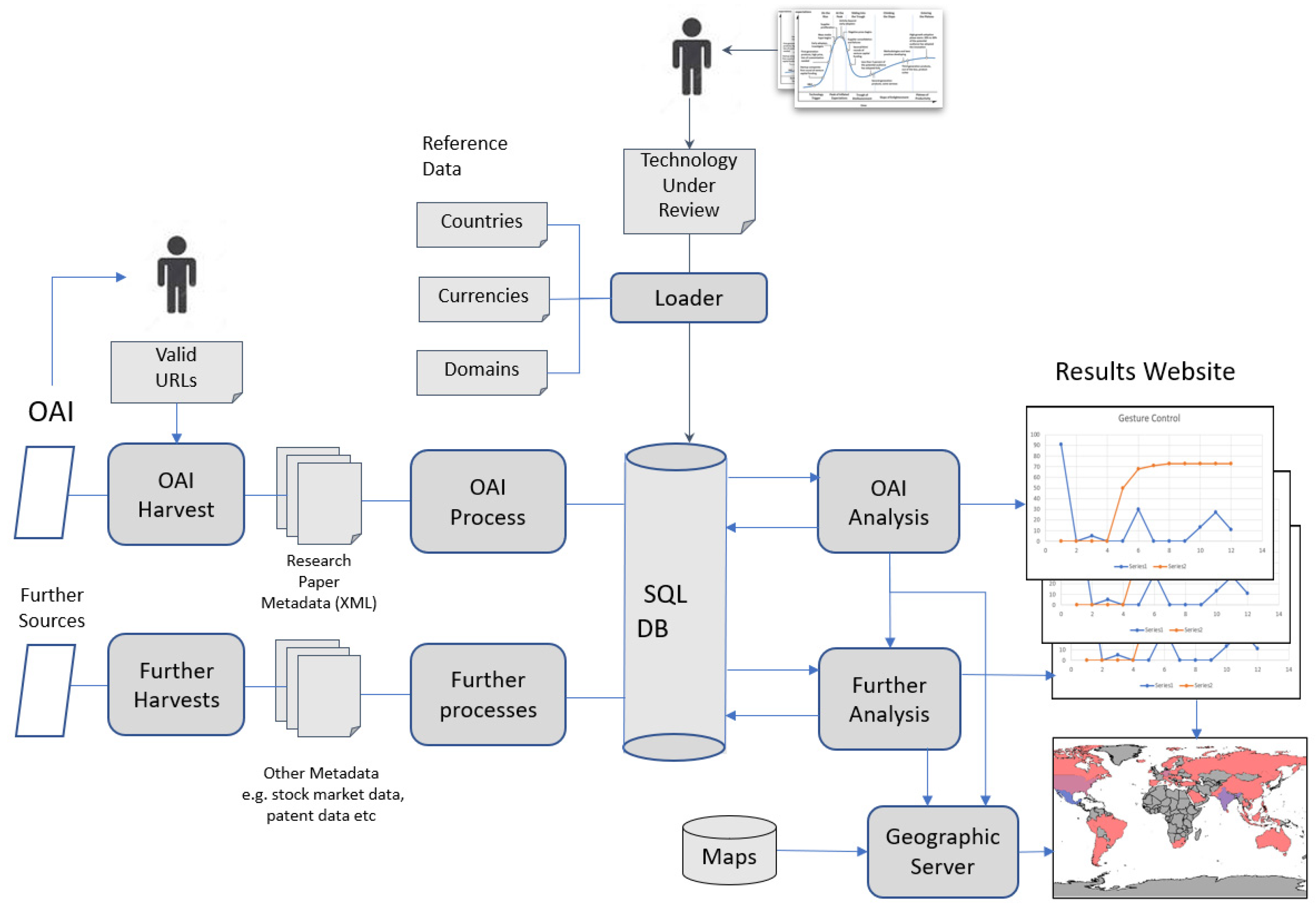

The analysis of the paper abstracts required the development of several pieces of technology. Components were developed as needed to undertake the necessary processes. These are shown in

Figure 5.

The framework was designed to allow further extension with additional data sources, harvesters and analyses. Further papers will describe these analyses and their results as the research progresses.

The technology choices were made specifically for the type of data and measures an analysis required. For example, because the research paper data were largely tabular, and efficient text searching was needed, a relational storage model and thus a RDBMS was used. Some of the outputs are spatial and others network related. The framework is extensible to integrate other forms of analysis—for example, it currently generates HTML and csv but could easily output a graph representation of the relationship data (in for example GraphQL or RDF).

3.4. Harvester/Loader

After considerable investigation and experimentation, the OAI resources were determined to be the most useful and complete open and exploitable source of paper abstracts, and it proved to be an extensive resource for the experiment. The amount of data (with 40,000,000 papers’ metadata, from 3219 OAI libraries), was of the scale needed.

The harvesting and population of a database was initially time consuming (even though largely automated), but the concept of ‘incremental refresh’ means the resource is easy to keep up to date. The approach of downloading data rather than using it online meant that various experiments could be performed with no concerns about being blocked by websites for excessive requests for data. It also made repeatable experiments possible.

Because of the need for repeated and detailed analysis and the amount of data, it was necessary to harvest the paper metadata into a central database (this avoided overloading the individual organizations’ websites). A harvester program was implemented to retrieve the records. This connected to each organization, requested the metadata for each available paper, and stored this in a relational database. The first harvest was performed in early 2019 and in early 2021 papers added since the 2019 harvest (including from new contributing organizations) were added. In total 41,974,693 paper abstracts were harvested from the 3219 organizations’ OAI interfaces, with publication dates from 1994, and with origins in over 100 countries. The initial harvesting and loading process (in 2019) took approximately three weeks and the update process (in 2021), approximately 5 days.

3.5. Data Cleansing/Enhancement

With the number of organizations included, and the amount of data published and subsequently harvested, significant data gaps/issues exist; thus, a cleaning process was performed prior to analysis. For example, a missing and badly formatted publication date could be problematic. For the analysis, only the publication year was required. If the publication date was not valid, as a backup the year of entry into the OAI library was used as an alternative.

Geographic location is not present in OAI Paper metadata. An inference can be made by looking at the publishing organization. The location for this had to be derived from the publishers URL (for example ac.uk is unique to the UK) or if still unresolved by manual examination (e.g., the description, e.g., ‘Stanford’ identifies a paper as US). Approximately 60% of the 3219 organizations country could be inferred from the URL, the remainder were classified manually or left as ‘worldwide’.

There is the risk of significant duplication as papers are often published both in a journal and concurrently on a university website. Since the title is typically unchanged when republished, removal of duplicates is relatively easy. Queries were developed that could mark them as excluded from the analytics search.

In addition to the academic papers, the manually created spreadsheets of technology terms and occurrence dates from the reference sets were cleaned and consistently formatted for input into the analysis process.

3.6. Database Design

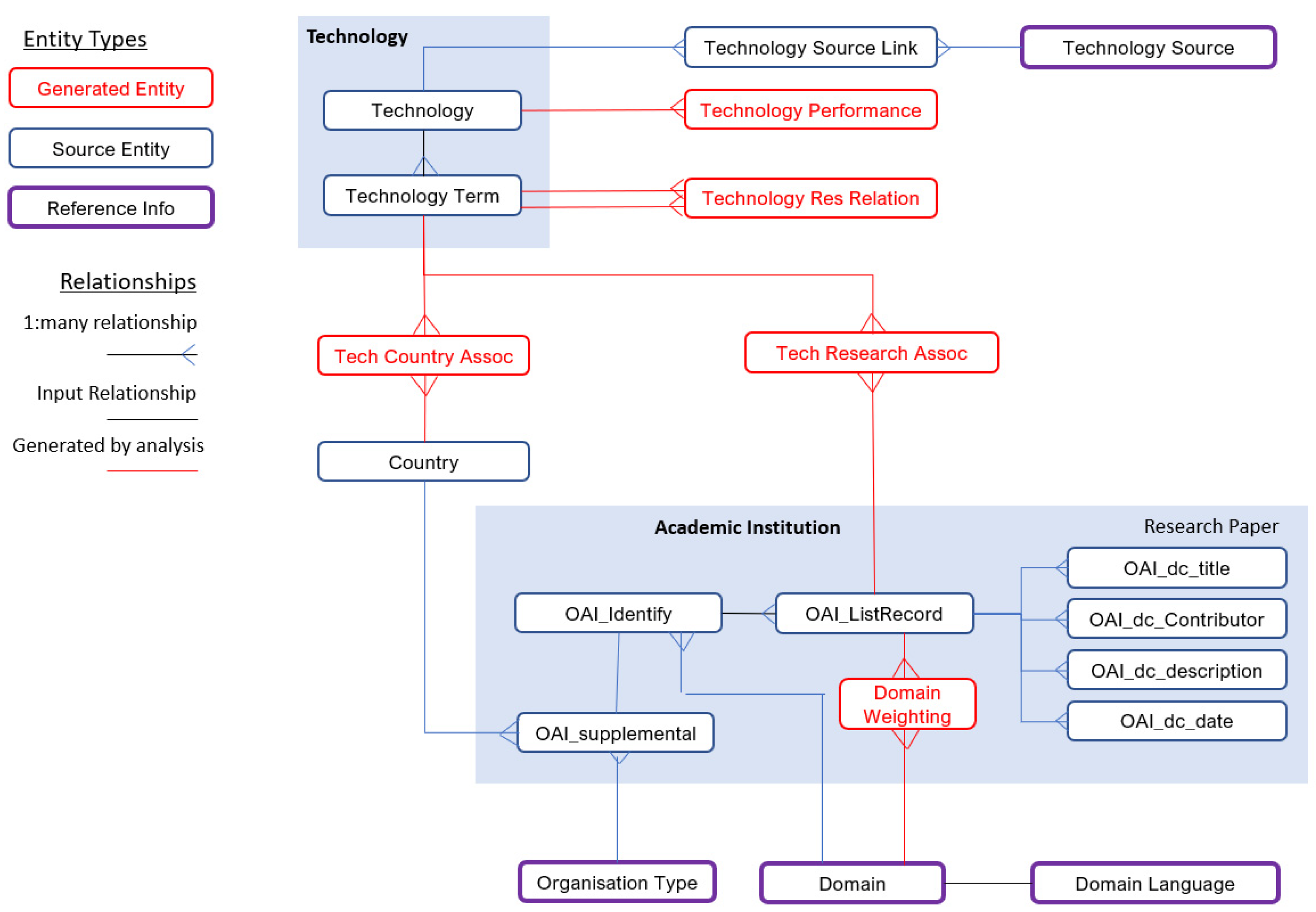

The database objects used to support the initial experiment are shown in

Figure 6.

The elements of the model include the following elements, populated with data either by the harvest process or by the analytic process (see the key above):

Source entities are items which are loaded from two sources, research data from the Open Archive Initiative (OAI) and technology terms from internet sources and journal review.

Reference information such as domains/sectors and technology classification types and country codes are loaded from reference files.

Generated Entities include computed data and metrics, including associations between technologies and research.

3.7. Analysis and Results

For each technology term in the reference list (Gartner, Economist, etc.), a query was performed to obtain a list of papers in which the term appears in the abstract. The input to the process was a file of the form shown in

Table 1.

Queries were derived which analyzed both the formatted fields in the data (dates, locations, etc.), and scanned the free text in the title and abstract in the metadata for all papers. Several techniques were used to avoid falsely detecting short words in other words (e.g., ‘VR’ in manoeuVRe) by carefully conditioning the query and post processing.

The following measures were generated:

Profile over time of a technology term (number of occurrences each year).

Profile over time of the term within different subject areas (technology, medicine, law, etc.).

Profile over time of the publication country of papers containing the term.

Co-occurrence with other terms (how often do other technology terms appear in papers relating to one technology).

Each measure offers a different insight into technology progression—addressing the overall growth of a technology, how it starts to pervade different subject areas (applications), its geographic spread, and its alignment with other technologies. The latter supports identification of co-dependence, or shared relevance to a problem.

The results of the analysis are presented in textual and graphical form in a linked HTML structure (results website), allowing for the revision of the analysis of a given collection of technologies. In addition to the measures pages per technology, there is an overview page, providing an index for the results. A typical overview page output for a processing run, with a list of input technologies, is shown in

Figure 7.

In addition to the human readable outputs (HTML) a series of data outputs were generated, comma separated variable (CSV), JavaScript object notation (JSON) and resource description framework (RDF) files per technology. These allow other tools to be used to assess the data.

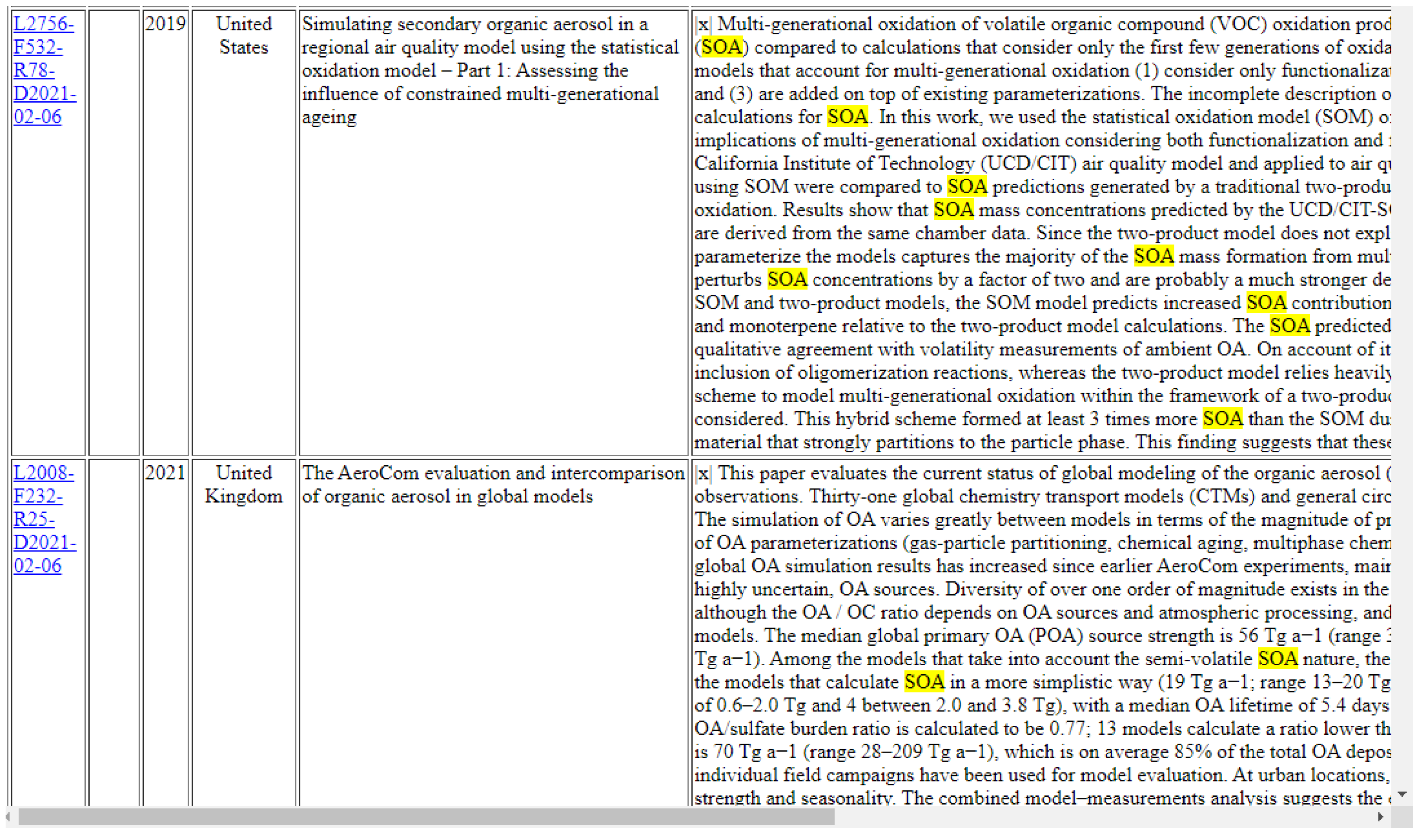

To validate the results or review specific technology topic results, both the titles and the paper abstracts were presented in HTML for a given technology topic (

Figure 8).

The abstract table is helpful in identifying erroneous detections, particularly with the implementation of highlighting showing where the detections occurred. A typical issue was, for example, the term ‘OLED’ (organic light emitting diode) is a common string in many words (e.g., pooled). This was easily avoided once identified (by conditioning the queries) to add spaces around small terms “oled” and “(oled)” and also some further processing. This is not perfect as it then misses some potential results but is more stable.

For a given set of reference technology topics (over 300 in total), all the analyses described in the previous section were calculated automatically and hyperlinked to the relevant technology topic presented in an HTML table as shown in

Figure 7.

Various steps were taken to validate the harvesting and analysis.

An extensive logging of errors was implemented and retained, allowing all failures in processing to be reviewed.

To test if the set of paper abstracts was representative, the papers present for each of the authors of this paper were searched for (as we are each aware of the papers we have published and so can check their presence, relevance, and publication dates). The results were as expected.

The abstracts of a sample of occurrences of a term were examined to verify they were in general correct and not false detections (the highlighting of terms in the abstract helped here)

The categorization of papers based on words in the abstract (as law, medicine, etc.) was verified by passing through papers from institutions specializing solely in one of the disciplines and checking that discipline scored highly.

Association level was checked using two sets of terms which were generally unrelated. The expectation was to find close grouping within each set and little cross linking, which was the case.

While not exhaustive, these tests provide the basis for confidence in the results.

3.8. Technology Used for the Experiment

The experimental software was largely developed in the Java programming language. This included all key components (the harvester/database loader, the cleansing software and the analysis component). Supporting this, the PostgreSQL database was used to store all paper metadata and to query results during analysis. In addition, GeoServer was used to visualize the map displays. Results were generated in the form of HTML, so can be visualized using any browser. Lastly, some other tools such as Microsoft Excel were used to generate, for example, the reference terms lists and to undertake some specific analyses. JavaScript graphing packages such as Chart.js and cytoscape.js libraries were used to provide specific graphical visualizations and some bespoke JavaScript was developed. The software used was predominantly open source.

In terms of computing, two Windows machines with Intel I9 processors, 64GB of memory and 2TB RAID SSDs as well as HDD backup storage were used as the main computing resource (with the database cloned to each machine).

Comparison of Effectiveness of the Results

As indicated a secondary goal of the experiment was to compare/correlate the results with the ‘perceived technology status’ for each year. Thus the above includes a measure of this.

To compare/correlate the technologies from the reference list with the outputs of the analysis, the occurrence profile of a term over time in research was superimposed with the occurrence year of the technology term from the reference. In addition, an absolute measure was produced of how many years before or after a term occurred in the reference list did it occur in published papers (see

Section 3).

4. Results

The following presents the various measures, and the form of the results. The detailed output for each technology is available in HTML form alongside this paper.

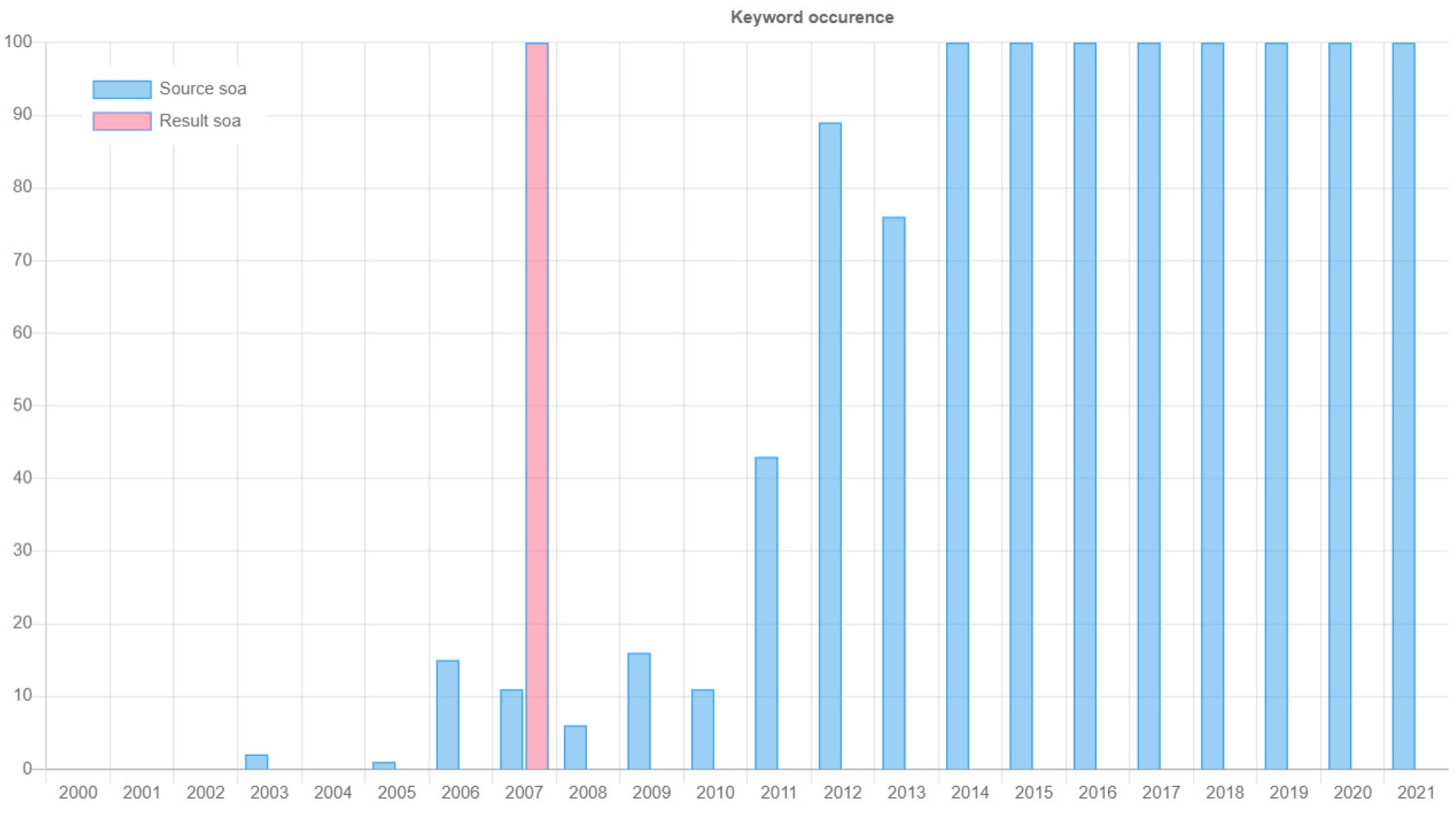

The first and most basic measure (e.g., SOA, visualized in

Figure 9) was the number of papers containing the technology term in each year—blue bars (the number was clipped at 100 to allow the initial point of growth to be seen more clearly). The actual occurrence numbers are available in a tabular output alongside the graph. The date the term first appeared in the reference was also included, for comparison purposes—red bar. The goal of this analysis was to provide a metric of the level of research applied to a term.

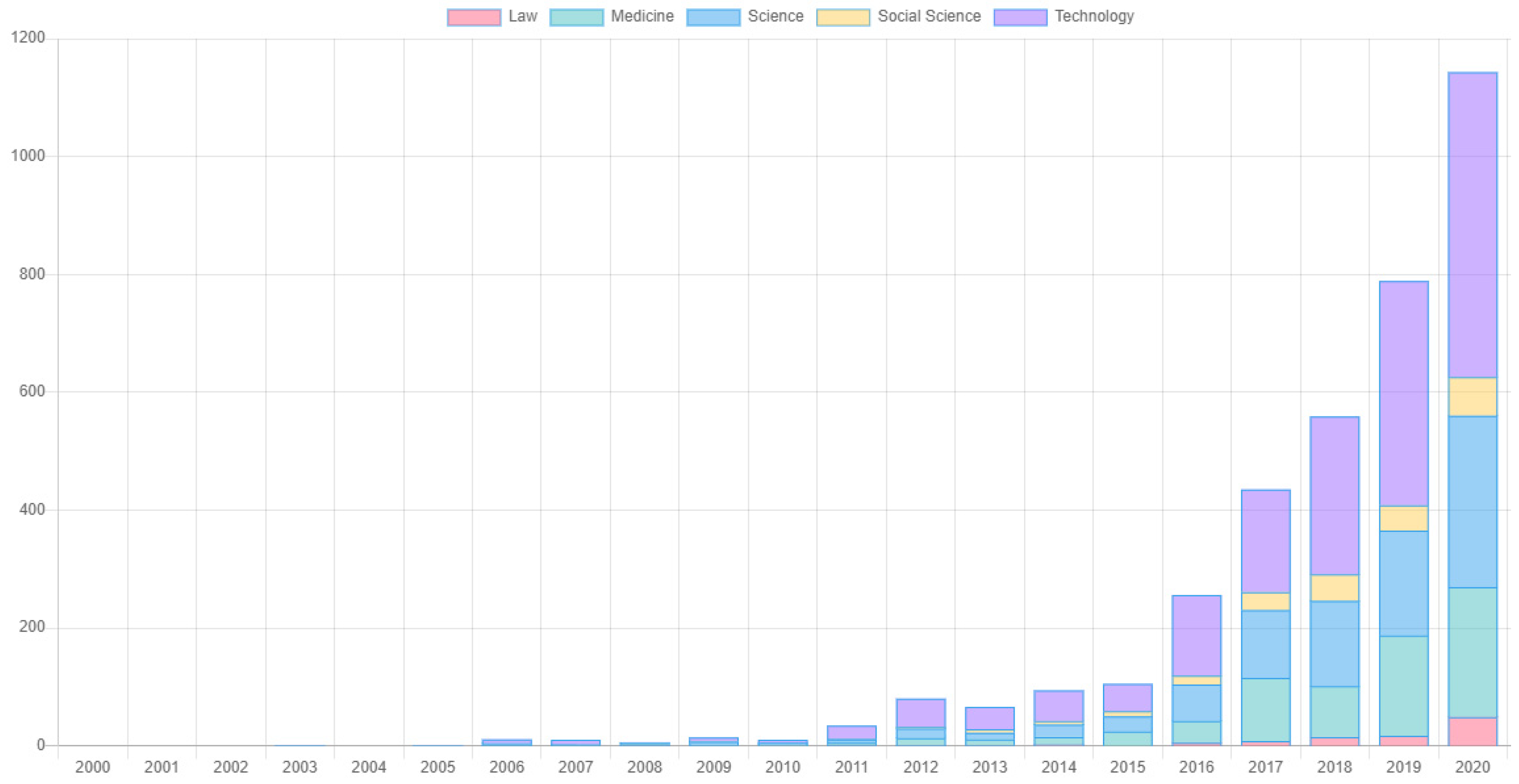

The next level of complexity was to assess in what subject areas the term was appearing. For example, was it occurring purely in technology-related papers (implying it was still in development), or was it also appearing in medical, social science or law papers, which might indicate progression into actual use? Because the subject area of the paper was not available in the metadata, a technique to try and identify it was developed. This involved creating 100 ‘keywords’ for each subject area (e.g., for Medicine this might include ‘operation’, ‘pathology’; for Law it might include ‘case’ or ‘jury’). Depending on the score of these words, the paper could be ranked as say 60% law related, 20% technology. Both a table and a graph were then produced for each technology showing this breakdown over time (

Figure 10).

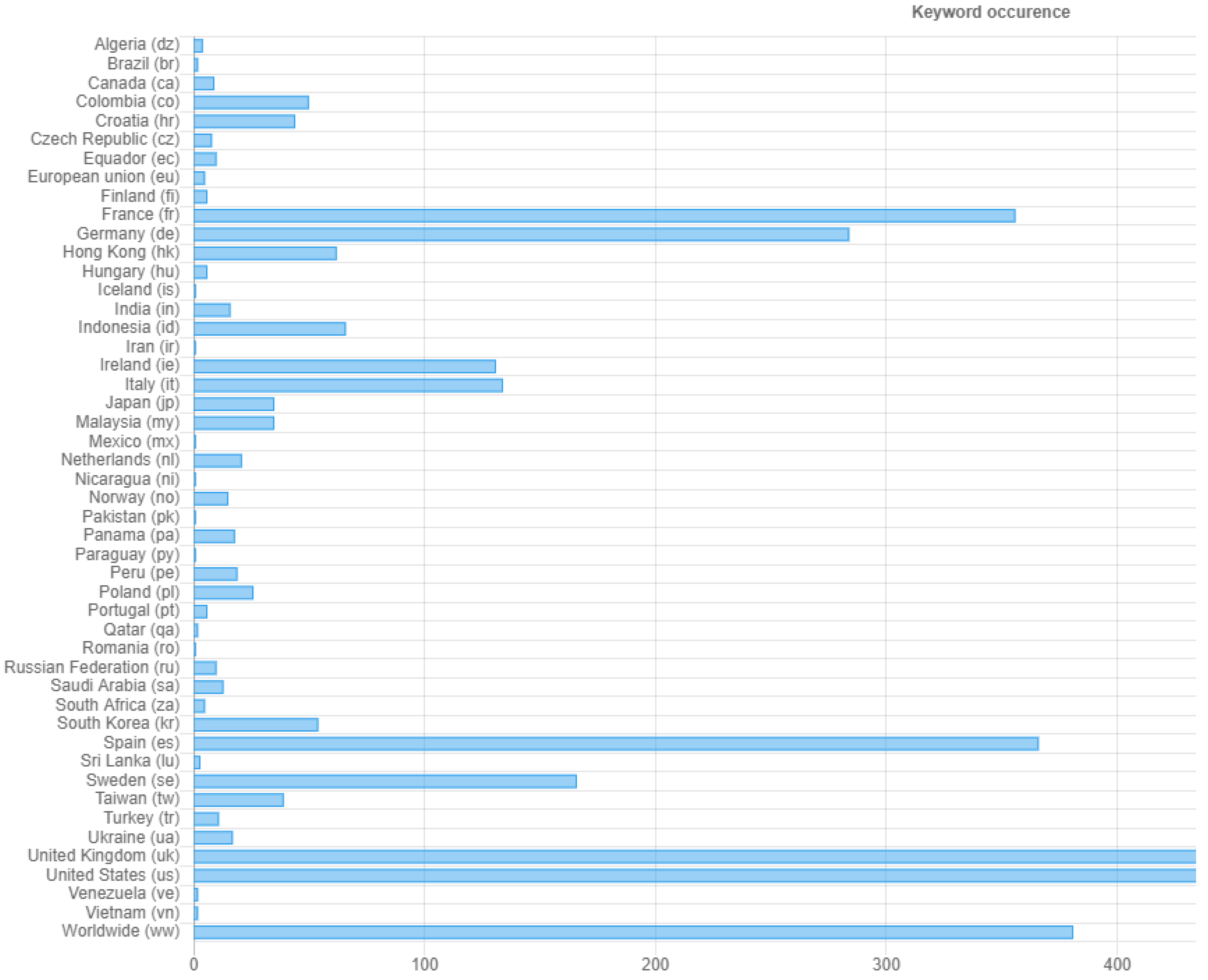

The paper abstracts containing the technology term were also categorized by country. The goal was to examine whether technology progression formed a particular pattern. Arthur (2009) [

5] suggests that technology often forms in geographic pockets, meaning specific areas would show a high prevalence initially. Martin (2015) [

37] has suggested this geographic focus only occurs for specific technologies, such as where physical resources are important (e.g., in drug development where specialist laboratory facilities are needed).

The country association to papers using the publisher library location was used as described in

Section 3. This is therefore an approximation but does give an indication of where the research was undertaken (

Figure 11).

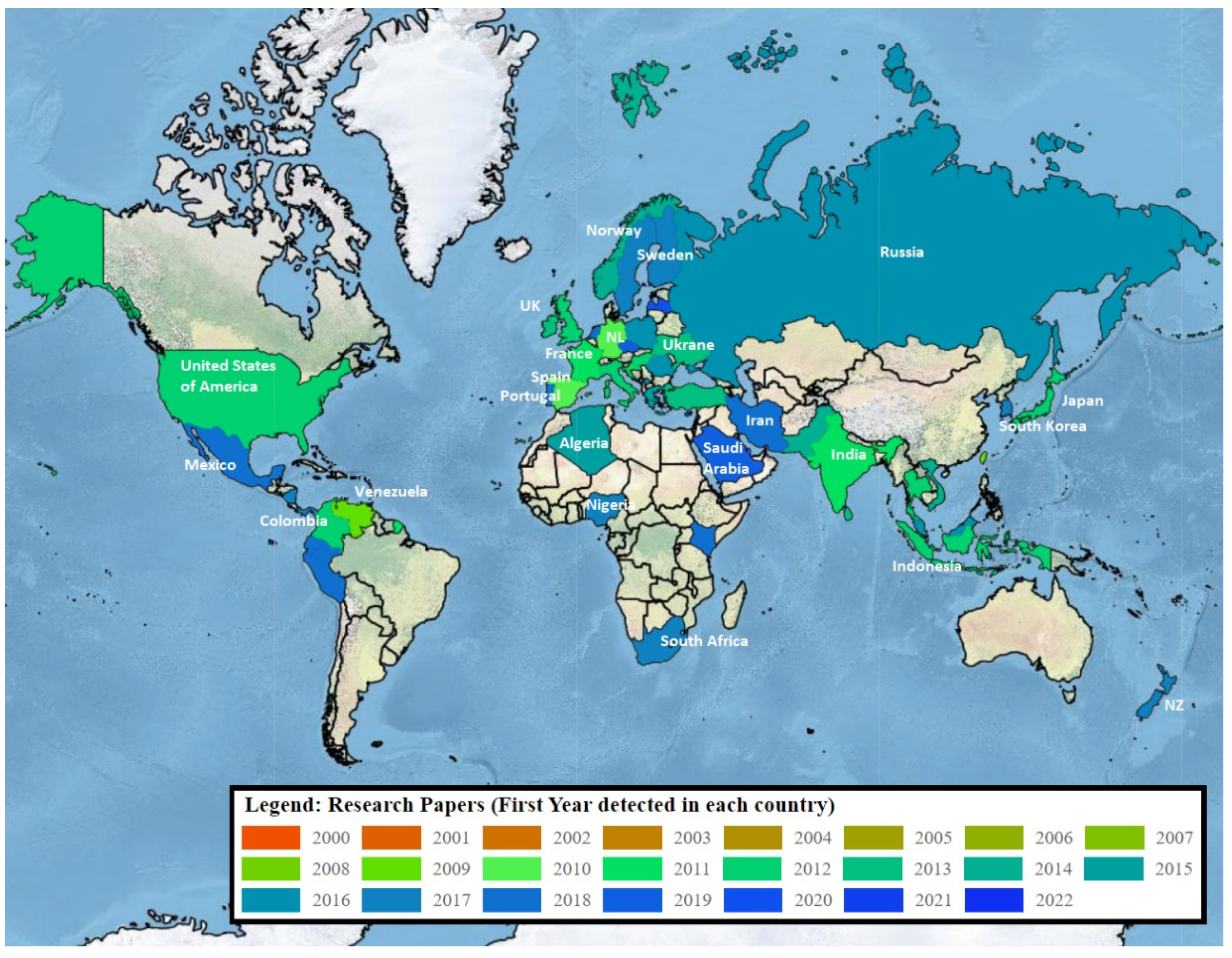

The result was also visualized geographically (

Figure 12); color was used to indicate geographic progression over time. The results show that some technologies have a dominant location from where they grow (an origin). In some cases, technologies remain tightly grouped geographically, but may start to spread to other locations because of the availability of specific researchers and skills in those geographic areas. This pattern is common for technologies which require a complex infrastructure—for example vaccine development and testing. Others spread quickly and uniformly after initial occurrence in a single location. Work by Schmidt (2015) [

31] suggests just such an effect is likely to occur, suggesting that there is an element of ‘mobility’ in some technologies, for example IT related, compared to technologies which require significant research or production capability.

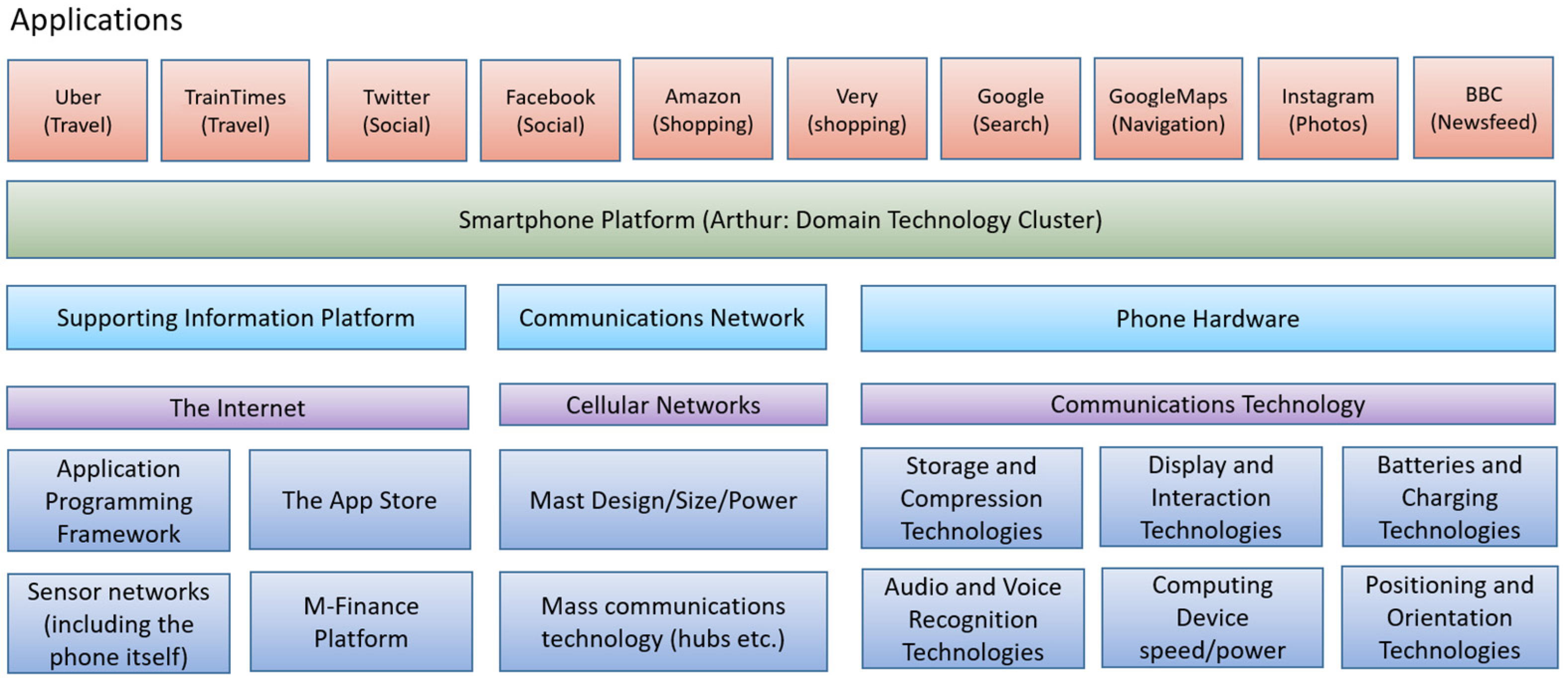

In Brackin et al. (2019) [

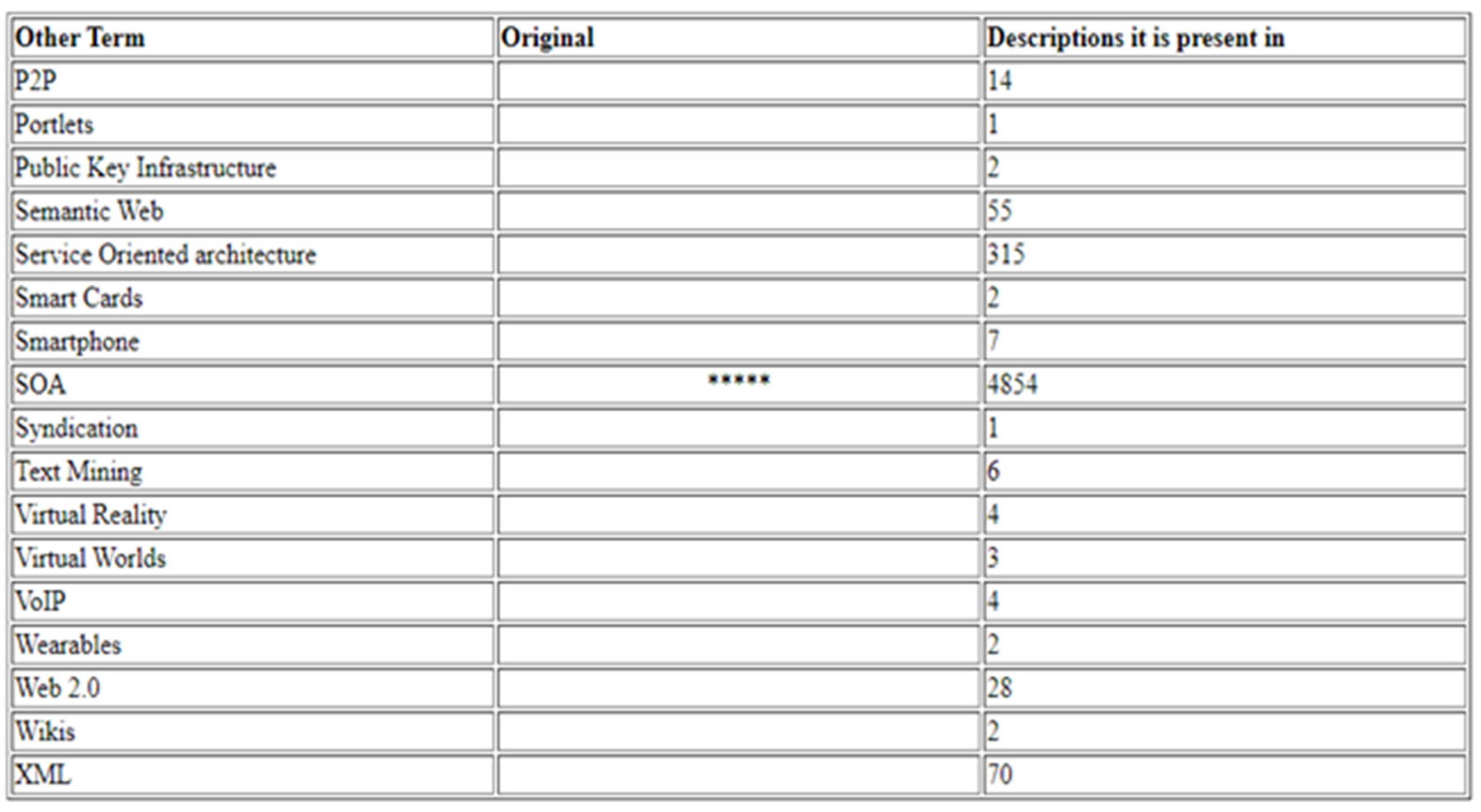

16], the issue of technology groupings was a key element of technology progression. The example given specifically was the smartphone, and the relationship between technologies that form a cluster and progress in parallel. Looking for such clusters was identified as a useful measure. A technique was devised to calculate and visualize this. For each technology in the set being analyzed, the papers which contain the technology term searched for are known. Given those results, for each technology, it is possible to then see how many times each other technology in the set occurs in each technology set results. This amounts to an occurrence cross-product for each technology pair. If two technologies share no common papers, then no link is assumed. If two technologies share all, or a high number, a strong link is assumed.

Figure 13 shows the result for SOA. The ***** symbol in column 2 indicates that this was the reference technology (original source of the papers) with which were searched for comparison with other technologies.

This result was also visualized graphically as a network graph—both for a specific technology topic, and as relationships between all technology topics. The technology link is shown by the presence of a line between the nodes (which are the technology topics), and the line thickness shows the level of commonality.

Figure 14 shows the form of the diagram.

Only primary relationships are shown (i.e., technology topics directly related to the technology topic of interest). If the second level is added (topics related to that first set of topics), the diagram becomes significantly more complex.

The graph generation technology used does allow interactive browsing and so exploration of the full network is possible (as opposed to the simple hierarchical view shown in

Figure 14).

Further composite analysis is potentially possible—for example looking at the geographic coincidence of technologies over time as well as their occurrence. Alternatively, one could analyze whether technology growth in a subject area occurs in a geographic pattern. Each of these analyses could offer further insight into technology progression. Additional measures are envisaged, which build on the comparison of technologies to demonstrate the principle of path dependence explored by Arthur (1994) [

22], thereby allowing technology competition to be identified (relative growth).

In summary, a significant metadata resource was assembled for academic papers, and several analytical metrics were produced which corresponded to the common questions in relation to technology progression:

How fast is a technology developing over time (showing interest in the technology)?

In what fields is it gaining the most traction (showing the level of relevance in those fields)?

How is a technology spreading geographically and are there particular geographic clusters?

How is a technology linked to other technologies to form clusters?

The metrics described here try to provide insight into each of these questions for a set of input technologies.

Taking topic areas, the technique of classifying data based on these does show value. Virtual and augmented reality use in medicine can be seen as the technology evolves. The results also show several papers emerging related to law and examining these they are clearly valid detections, with the papers addressing some of the legal and social aspects of augmented reality (e.g., public right infringement).

The geographic spread of technologies also shows value, although the lack of accurate origin of papers makes it less valuable, and it seems that the spread of technology topics is not particularly geographically bounded (although the analysis does allow some degree of pinpointing of the origin).

Lastly, the ability to visualize the relationships between technologies does allow an understanding to be drawn of clustering. It is quite clear that terms related to the smartphone such as internet of things, SOA, Web 2.0 and HTML5 are related technologies, as are technology clusters such as virtual reality, augmented reality, virtual worlds, and the smartphone are also strongly shown in this group too. This ‘links’ view also allowed synonyms/acronyms to be identified (e.g., Service Oriented Architecture and SOA are shown as strongly related on the graph).

The ability to drill down Into th” res’lts and see the actual detected technology terms in the abstracts, and to review the abstracts in a specific technology topic, was also useful. It allows the reviewer of the summary information to investigate specific results and identify false term detections and associations or points of particular interest.

The above results demonstrate that objective measures of technology progression, measured on various dimensions (time, space, application domain, co-occurrence with other technology growth) is possible using automated techniques. This confirms research question 2.

The framework does output a comparison of the input technologies and the profile of those technologies over time and space. This does allow an assessment of the technology and therefore an assessment of the reference.

The result of the analysis phase is 4 measures, 6 analyses most with both tabular and graphical representations/presentations for each of the 337 reference technology terms used as input. The analysis results page generated for each of these then provides links to 3370 artefacts (tables and graphs) generated automatically by the analysis process and accessible from the summary web page of the analysis, as shown in

Figure 3.

For the reference technology terms list, the percentages of reference technology terms that could be detected in the academic paper abstracts was calculated, together with the difference between the point the term occurred in academic material, and the time it was seen in the reference technology terms list. Lastly, there is the total number of occurrences of a technology as a measure. Overall statistics were calculated; for the 337 terms, 75% were detected in academic abstracts. The detection failures seem largely to be in three-word technology terms and where there are potentially more likely names, for example: ‘defending delivery drones’, where ‘drone defense’ is a more likely generic technology term. Manual entry of alternative terms was included. a future iteration could potentially try to identify alternative name combinations (for example automated inclusion of acronyms (e.g., simply taking the first letter of each tech term, virtual reality and VR for example. This would not though associate Unmanned Aerial Vehicle (UAV) and drone. There were also potentially some technologies which were not matched because they simply did not progress through an academic route, for example Tablet PC.

The above largely provides visualizations of the assessments. There are though specific metrics available in the results. The first is the year of first occurrence (when the term was first detected) and the year when the rate of change of occurrence in papers was significant (in fact the threshold used for ‘significant’ was an increase of 100 paper detections per year).

There is also the time difference in years between a term occurring in the academic material (publication date), and the date in the reference technology terms list, was calculated. Additionally, whether the time was positive (academic detection occurred first), zero (detection was at the same time) or negative (the term occurred first in the reference list) was used to set one of three criteria–‘precursor’, ‘the same time’ and ‘not precursor’, respectively.

53% of technologies were discovered in academic paper abstracts prior to the occurrence date in the reference technology terms list.

8% were detected in academic papers at the same time as first seen in the selected media.

So overall and accepting that the input list was not created by the experiment and so its utility is limited at present, the automation does provide indicators in the same time range as occurrence in other sources. The analyses of geographic spread, subject area spread and relationships between technologies potentially offers additional insight.

The results also reinforce the idea that academic papers alone are not a sufficient measure of technology origin; an analysis capability requires multiple measures. Items such as Tablet PC were not detected before the reference, probably because its origins were industrial/commercial, rather than academic. This is highlighted by the fact that the tablet PC entered the Gartner model at a late stage too (i.e., it was not detected early by Gartner either). Similarly, with Bluetooth. Conversely, virtual reality, augmented reality and 3D scanners were all detected in papers considerably before noted on Gartner or in the Economist newspaper. These are composite technologies rather than individual innovations. The processing undertaken does also provide a considerable amount of detailed analysis of relationships not explicitly available from the reference sources.

Other quantitative measures were created in the output analytics. These are shown in

Table 2. They provide an overview with the option of the user to drill down to examine the detail (as shown in the various visual representations in this section).

In terms of the overall research question “To what extent is it possible to identify objective measures/indicators of technology progression using historic data in academic research”, this question is addressed by the results above, i.e., the measures, although limited to academic research, do provide indicators of various aspects of technology progression in academic research. The results do also show several measures, for example the total number of occurrences of a technology topic as an absolute measure, or the number of occurrences in a given country or again the year in which the research occurrences was greater than 100.

In terms of the subsidiary element of the question, does this correlate with other methods, then an indication of this is possible (with the described before, same time and after measure against the reference technologies derived from sources such as Gartner and the Economist). It could be applied more rigorously if run as an on-going assessment using a team of experts operating using the normal method of panel assessment.

The final aspect of the experiment is whether the approach can offer objective validation of the theoretical concepts described in

Section 2. Of the theories, an initial assessment of this is shown in

Table 1.

Arthur (2009) [

5] offers a narrative description of the nature of technology and the eco-systems that surround it. This research has realized that as a database model and populated parts of that model relating to research activity. Ongoing work will extend this to populate other elements of the model, for example industrial use of technologies. At that point the model would provide the basis for a continually updated model of multiple technologies’ progression. The conclusion of the work so far is that Arthur’s concepts do offer value in modelling technology progression and potentially the eco-systems around it.

Christensen (1997) [

9] proposed the concept of disruptive technology. The indicators developed do seem to support the proposition that growth of technology is non-linear. In most cases the graph of occurrences of a technology term within research papers shows a tipping point with initial 1–10 occurrences and then a growth in the next year or two peaking at many thousands. Examples of this include Service Oriented Architecture, Internet of Things, and Virtual Reality. Further work in progress which contrasts research growth with commercialization and looks at the relative timelines offers further insight into this aspect.

Mazzucuto’s work in papers relating to state funding of research, again cannot be fully proven by this work but the indicators show that many technologies identified by consultants or the press did occur previously in research. A good example of this is Internet of Things.

Lastly, the concept of the platform, described by Langley & Leyshon (2017) [

10] is borne out by the significant linking that occurs between groups of technology (measured by the number of paper abstracts in which multiple technologies are mentioned). Further work to show the alignment of growth of these connected technologies would reinforce this and assessing their adoption or relative commercial success.

5. Discussion

The goal of this work was to identify measures can be created using automated means and to help identify the direction of technology travel at a macro level (the research question). This has been demonstrated. The measures and processes require further refinement but were only intended as proof of concept. The general approaches used were in line with big data principles, as outlined in Mayer-Schoenberger & Cuckier (2013) [

38]. The analyses used here provide an analytical view of technology progression based on academic paper metadata, which aligns with the outputs of manual forecasting techniques.

The techniques documented in this paper do not, on their own, offer a way to identify candidate technologies, or even significantly improve on human approaches typically used to rank technologies. They do offer the opportunity to potentially support the human view and provide extra insight and analysis to those undertaking technology forecasting. The analysis in this paper is based on one measurement point (academic research); multiple measures would be required for a more universal technology progression monitoring and forecasting capability. Measures of financial success of a technology, for example, would add another measure to indicate progression and further work in this area is the subject of a paper in preparation.

There is a rich collection of analyses of different aspects of technology growth as described in

Section 1. Other research that could provide a theoretical basis for indicators include Gladwell (2000) [

39] looking at the ‘tipping point’; Langley (2014) [

9], Simon (2011) [

17] and Srnicek (2017) [

18], looking at the concept of platforms; and Lepore (2014) [

40], looking at the more negative aspects of progression.

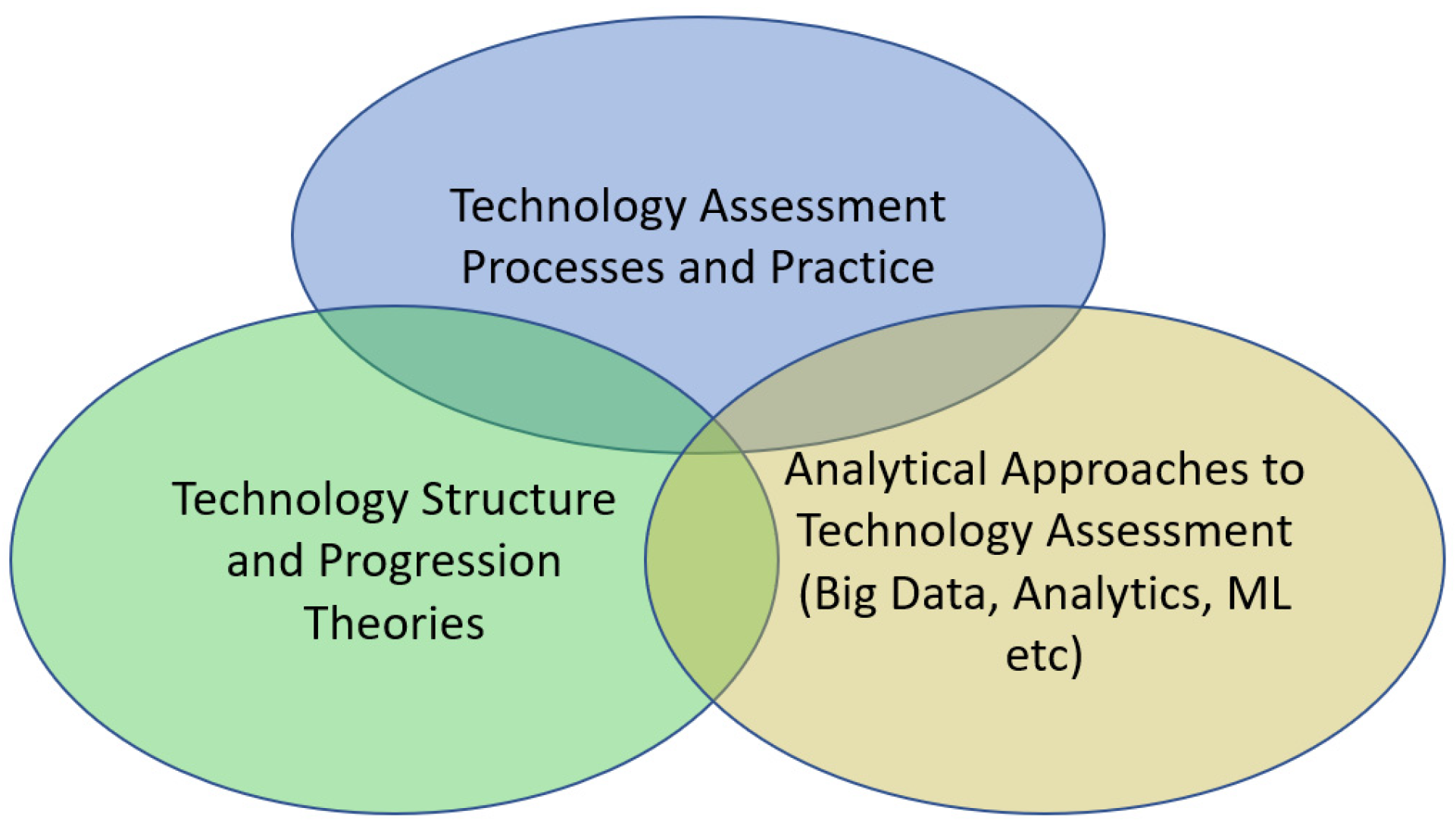

The authors envision a series of monitors based on open data in various areas of the ecosystem and a unified model which can support the equivalent modelling undertaken in environmental and economic modelling. This paper is a first step in suggesting how such indicators could be constructed and most importantly integrated. There is in this, the chance to exploit the plethora of related big data, machine learning approaches which exist. Others have looked at different aspects of measuring technology progression, for example Carbonell (2018) [

34] and Calleja-Sanz (2020) [

32] and Dellermann (2021) [

33]. In general, there are many opportunities for the application of big data and machine learning in this field, particularly with the model shown in

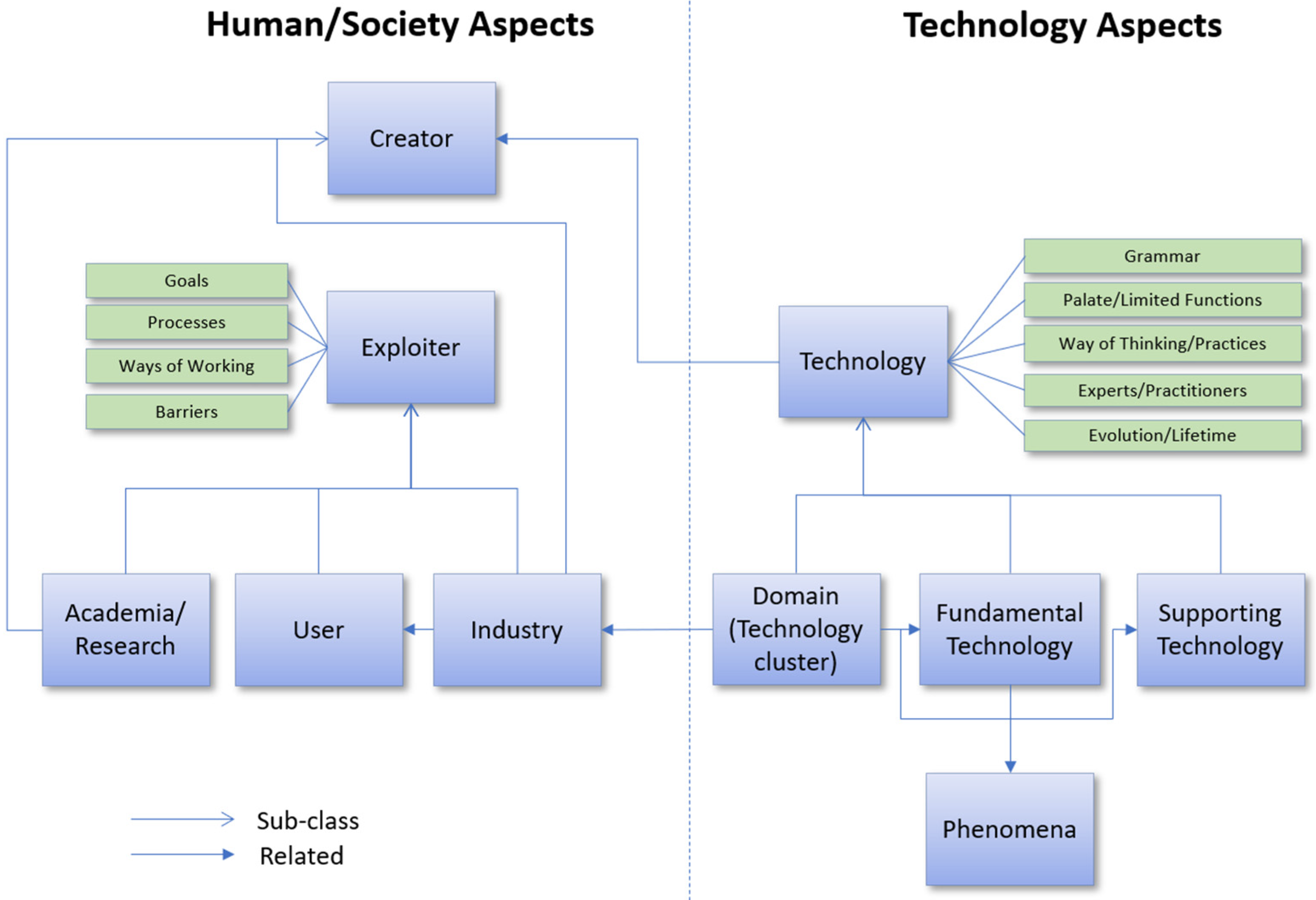

Figure 1 as the basis.

Several approaches could be considered in taking this work from purely monitoring of technologies to a predictive capability. The first is to undertake an analysis of unusual words used in papers related to a topic. For example, detecting that ‘virtual worlds’ has recently occurred as a new term in paper abstracts. This could be done across the entire paper abstract set, or in existing topic areas. An initial version of this was produced, but it had a high level of false terms, as the libraries have been growing quickly (with lots of organizations putting papers online). However, the application of big data techniques and machine learning could make an approach like this viable.

The technique for classifying papers based on a domain dictionary match (legal words, medical words, etc.) could be refined to detect a broader set of subject areas. The refinement of the dictionaries could also exploit machine learning as there are, for example, many libraries which contain only legal or medical papers, allowing training datasets to be efficiently created. The result would be richer classification information.

The use of the author/contributor information present in the paper metadata would also allow for the tracing of technology progression (authors typically have subject area specializations)-both in space and time. This was considered, but it does potentially have identity infringement issues, so was avoided in this initial research. There are also issues with ambiguity for the identification of authors (as the reference is typically surname and initial).

The most important next step is a unified, fully machine processable model based on the various sub-models described in Brackin et al. (2019) [

16] and refinement to the point where the models can be created and informed by the sort of automated approach documented in this paper. The authors intend to continue this work in that direction. There is a strong base of overall (macro) approaches from Arthur (2009) [

5] and Christensen (1997) [

9], as well as several conceptual models for parts of the technology ecosystem identified by Mazzucato (2011) [

6] and others on which to base a unified model.

The creation of further indicators which exploit other sources (perhaps the internet more generally) will be needed to support a unified model. Some of the techniques described in this paper will be valuable in creating these further indicators. Some will require new or automated approaches.

Lastly, more specific analysis of the results of this work would be valuable. This would help prove the results are useful. Insight into the temporal, spatial and subject area-related progression of specific technologies (for example autonomous vehicles) is an area that the approach could be applied to. Strambach (2012) [

41] offers some existing analysis of the geography of knowledge, which could be further developed in terms.