Characterization of Shrub Fuel Structure and Spatial Distribution Using Multispectral and 3D Multitemporal UAV Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Experimental Design and Analytical Overview

2.3. RPAS Data Acquisition and Preprocessing

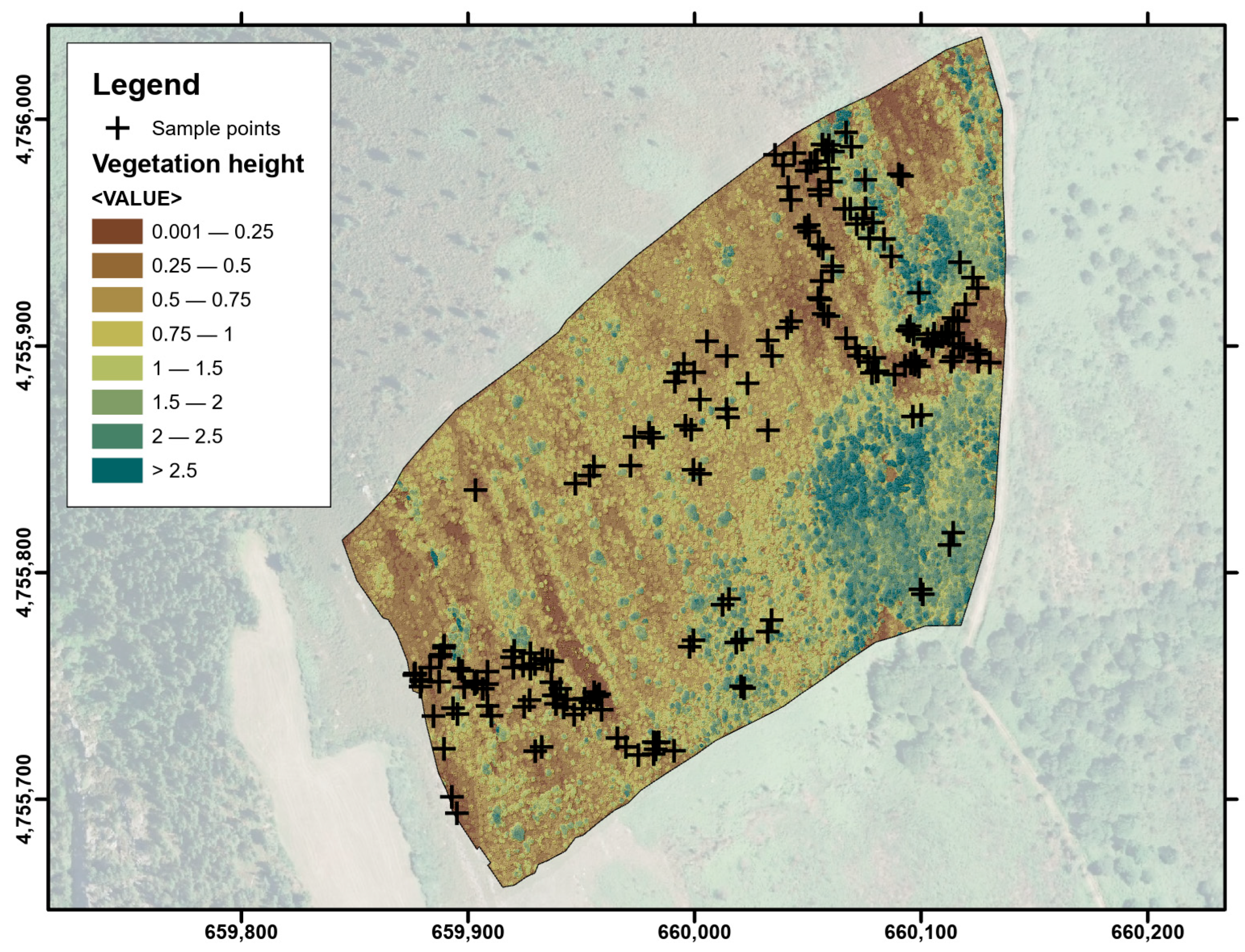

2.4. Vegetation Height Estimation

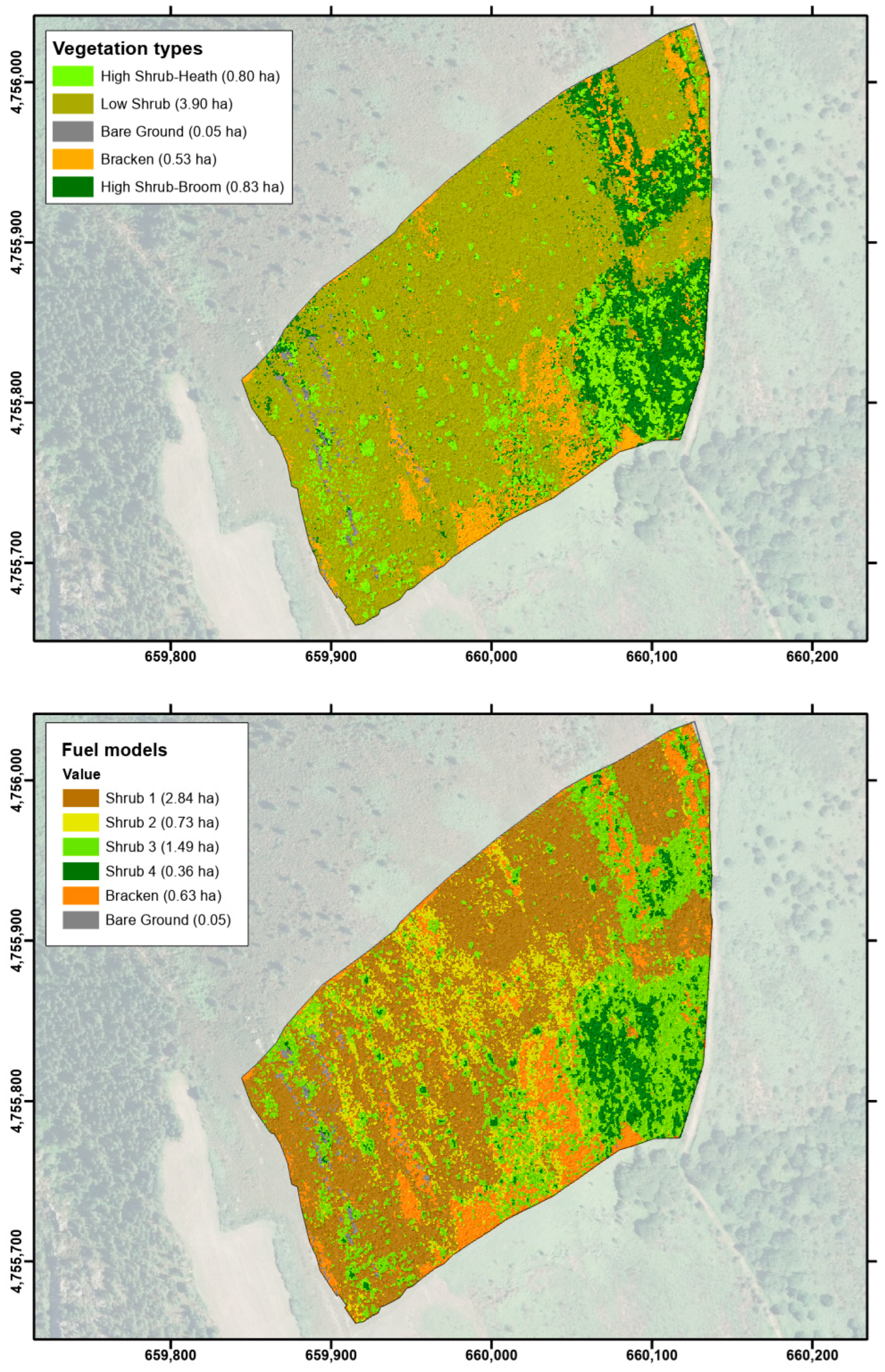

2.5. Vegetation and Fuel Model Classification

3. Results

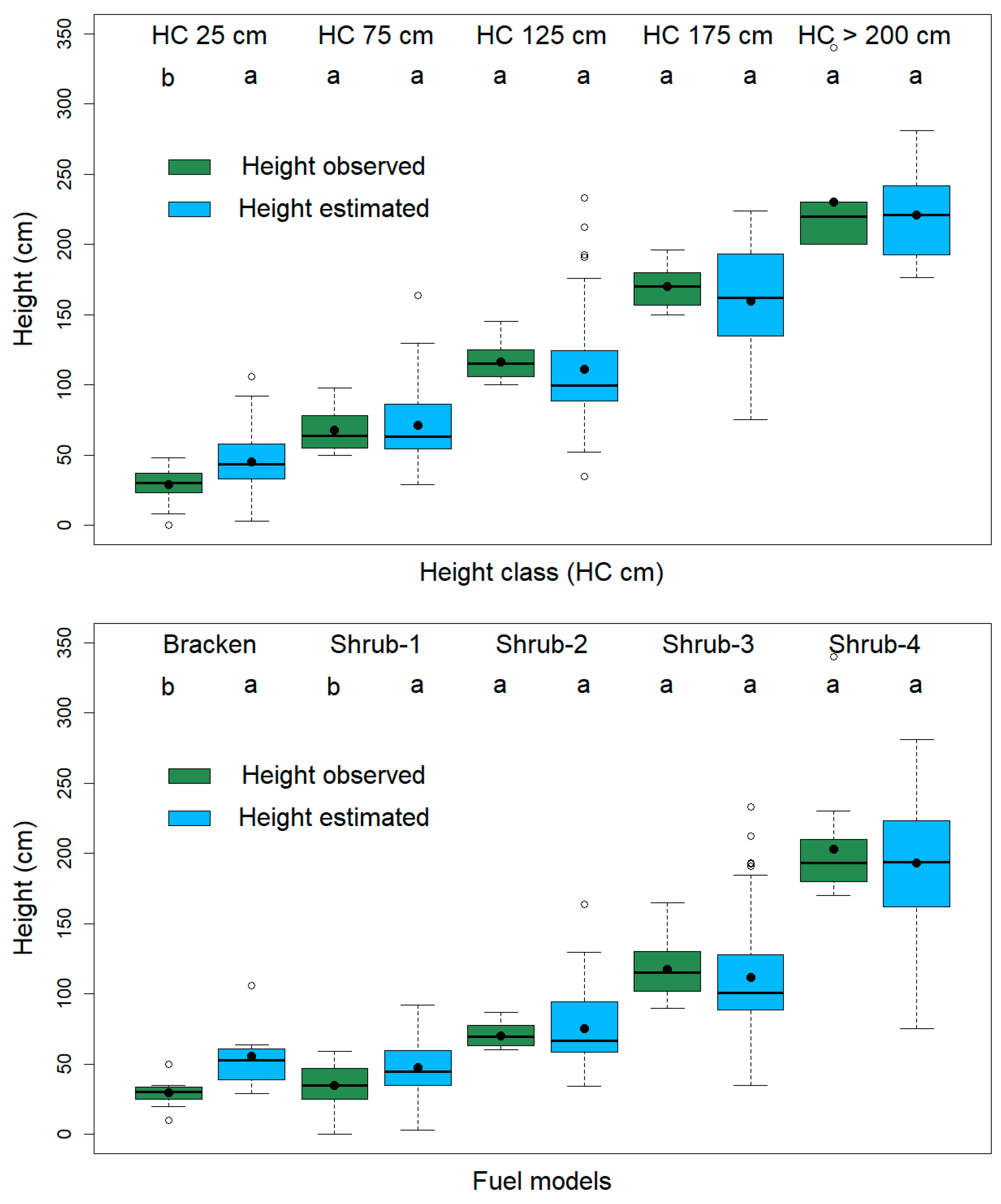

3.1. Height Estimation

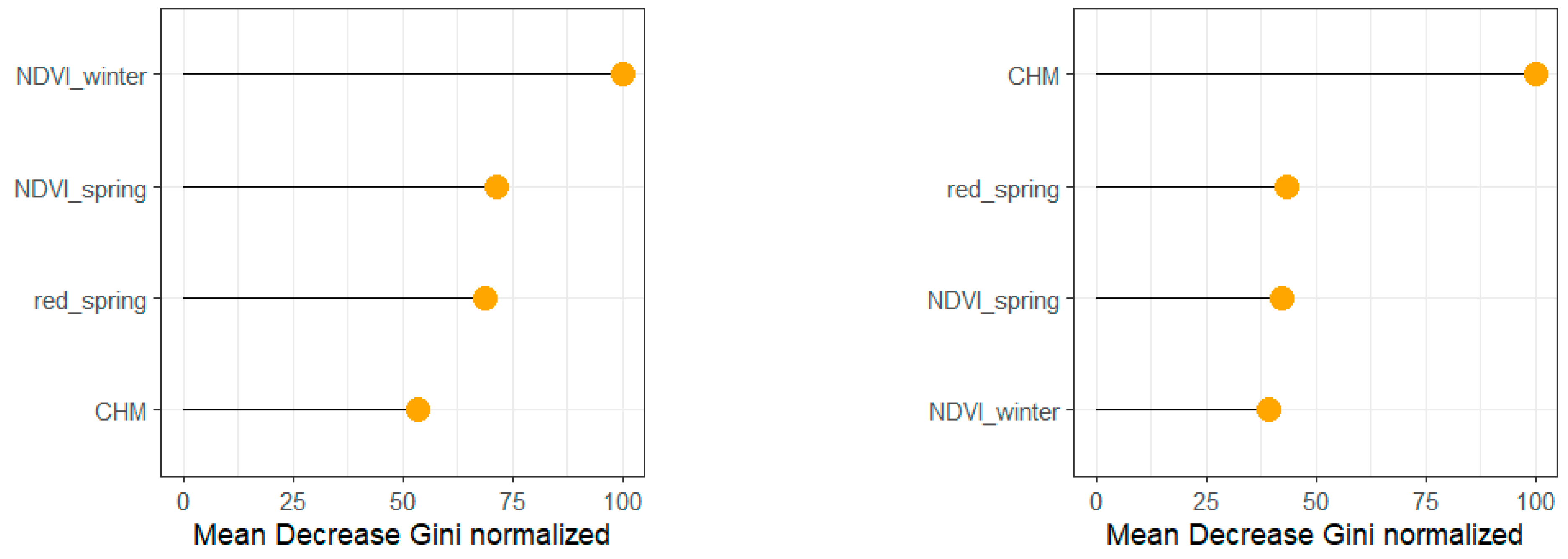

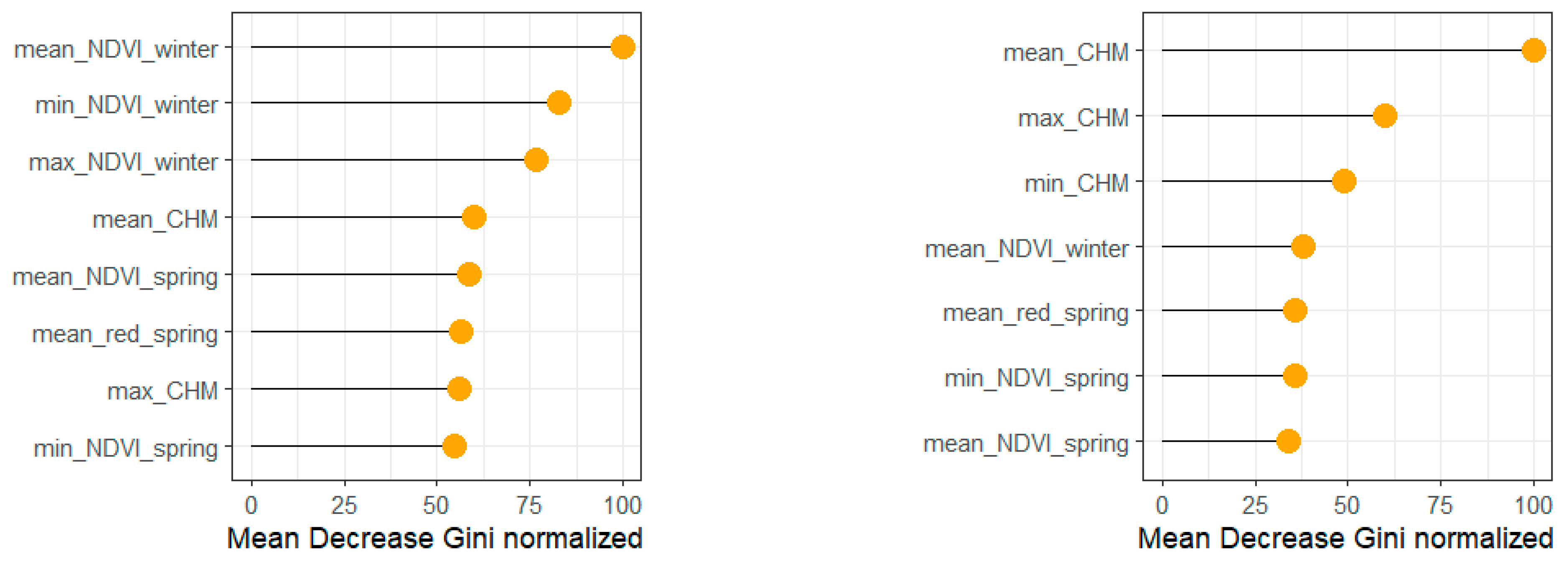

3.2. Pixel-Based Classification

3.3. Object-Based Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Vegetation Class | Description |
|---|---|
| High shrub—heath | Formation of high, dense shrubs (>1 m height) dominated by Erica australis and Erica arborea |
| High shrub—broom | Formation of high, dense shrubs (>1 m height) dominated by Cytisus spp. |
| Low shrub | Shrub formations of variable cover and low or dwarf stature (<1 m height), dominated by various woody species (Erica cinerea, Calluna vulgaris, Pterospartum tridentatum, Halimium lasianthum, and Ulex gallii, among others), with a variable share of herbaceous species other than bracken (Pteridium aquilinum) |
| Bracken | Dense formations of Pteridium aquilinum |
| Bare ground | Areas with little or no vegetation cover (rocky habitats and bare ground) |

| Fuel Model | Description |
|---|---|
| Shrub-1 | Young shrub communities of low height (<60 cm) and low fuel loads, or non-senescent communities dominated by Erica umbellata, E. mackaiana, or Cistus ladanifer |
| Shrub-2 | Shrub communities with relatively low mean heights (<90 cm), although taller than Shrub-1, but with much larger fuel loads, especially of fine fuels (diameter < 0.6 cm) |
| Shrub-3 | Shrub communities taller (90 to 170 cm) and with greater fuel loads than the two previous models, and with the highest loads of both live and dead fine fuels, the dead fraction representing about 40% of the total fine fuel load |
| Shrub-4 | Adult communities dominated mainly by Cytisus spp., Erica australis or E. arborea, and Ulex europaeus, with the greatest heights (>170 cm) and the largest total and coarse fuel loads (diameter ≥ 0.6 cm) |
| Bracken | Dense formations of Pteridium aquilinum |
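
The height ranges in the table can be read as a simple lookup. The sketch below is a hypothetical, height-only approximation written for illustration, not the procedure used to assign fuel models: the models are also defined by fuel load and species composition (Shrub-1, for instance, also covers non-senescent Erica umbellata, E. mackaiana, or Cistus ladanifer communities, and Shrub-2 differs from Shrub-1 mainly in fine-fuel load), so height alone cannot reproduce them. The function name and the handling of the exact boundary values (60, 90, and 170 cm) are assumptions.

```python
def fuel_model_from_height(mean_height_cm: float) -> str:
    """Hypothetical height-only lookup for the four shrub fuel models.

    Thresholds taken from the fuel model table: Shrub-1 < 60 cm,
    Shrub-2 < 90 cm, Shrub-3 from 90 to 170 cm, Shrub-4 > 170 cm.
    Fuel load and species composition, which also define the models,
    are deliberately ignored in this sketch.
    """
    if mean_height_cm < 0:
        raise ValueError("height must be non-negative")
    if mean_height_cm < 60:
        return "Shrub-1"
    if mean_height_cm < 90:
        return "Shrub-2"
    # Assumption: 170 cm itself is treated as the upper end of Shrub-3.
    if mean_height_cm <= 170:
        return "Shrub-3"
    return "Shrub-4"


for height in (35, 75, 120, 210):
    print(height, fuel_model_from_height(height))
```

Bracken is left out because it is defined by species (Pteridium aquilinum) rather than by height.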

| Date | RPAS | Sensor | Data Type | No. of Acquisitions | Pixel Size (cm) | Rationale |
|---|---|---|---|---|---|---|
| 18 April 2018 (spring, pre-burn) | DJI Phantom 3 Pro | Parrot Sequoia | Four-band multispectral | 1026 | 7.5 | Vegetation and fuel classification |
| 13 February 2019 (winter, pre-burn) | Atyges FV-8 | Sony Alpha 6300 (Tokyo, Japan) | RGB | 256 | 3.5 | Fuel height |
| 13 February 2019 (winter, pre-burn) | Atyges FV-8 | MicaSense RedEdge™ (Seattle, WA, USA) | Five-band multispectral | 618 | 9.4 | Vegetation and fuel classification |
| 15 March 2019 (early spring, post-burn) | Atyges FV-8 | Sony Alpha 6300 (Tokyo, Japan) | RGB | 287 | 3.2 | Ground reference |
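
The Rationale column pairs a pre-burn RGB survey (13 February 2019, used for fuel height) with a post-burn RGB survey (15 March 2019, used as ground reference). A common way to exploit such a pair, assumed here rather than taken from the text, is to subtract a terrain model derived from the post-burn survey from a surface model of the pre-burn survey, clamping small negative differences (noise or co-registration error) to zero. The sketch works on two co-registered grids of equal shape given as nested lists; raster I/O, co-registration, and resampling between the 3.5 cm and 3.2 cm pixel sizes are omitted, and the function name, the nodata value, and the toy elevations are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def canopy_height_model(dsm_pre_burn: Grid, dtm_post_burn: Grid,
                        nodata: float = -9999.0) -> Grid:
    """Per-cell vegetation height = pre-burn surface - post-burn ground.

    Both grids are assumed to be co-registered on the same cell size.
    Cells flagged as nodata in either input remain nodata; negative
    differences are clamped to 0.
    """
    if len(dsm_pre_burn) != len(dtm_post_burn):
        raise ValueError("grids must have the same number of rows")
    chm: Grid = []
    for row_dsm, row_dtm in zip(dsm_pre_burn, dtm_post_burn):
        if len(row_dsm) != len(row_dtm):
            raise ValueError("grids must have the same number of columns")
        out_row = []
        for z_surface, z_ground in zip(row_dsm, row_dtm):
            if z_surface == nodata or z_ground == nodata:
                out_row.append(nodata)
            else:
                out_row.append(max(0.0, z_surface - z_ground))
        chm.append(out_row)
    return chm


# Toy 2 x 3 elevations in metres (invented values for illustration only).
dsm = [[512.40, 513.10, 511.95], [512.80, -9999.0, 512.05]]
dtm = [[511.20, 511.30, 512.00], [511.90, 511.85, 511.60]]
print(canopy_height_model(dsm, dtm))
```

Clamping to zero is a design choice: on bare or burned ground, photogrammetric noise can make the pre-burn surface slightly lower than the post-burn ground, and a negative vegetation height has no physical meaning.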

| Estimated Fuel Model (rows) / Observed Fuel Model (columns) | Shrub-1 | Shrub-2 | Shrub-3 | Shrub-4 | Total | Producer's Acc. | User's Acc. |
|---|---|---|---|---|---|---|---|
| Shrub-1 | 15 | 5 | 2 | 0 | 22 | 0.79 | 0.68 |
| Shrub-2 | 3 | 4 | 13 | 0 | 20 | 0.31 | 0.20 |
| Shrub-3 | 1 | 4 | 48 | 19 | 72 | 0.70 | 0.67 |
| Shrub-4 | 0 | 0 | 6 | 31 | 37 | 0.62 | 0.84 |
| Total | 19 | 13 | 69 | 50 | 151 | | |
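
Producer's and user's accuracy follow directly from the matrix counts. With rows as estimated and columns as observed classes, producer's accuracy for a class is its diagonal count divided by the column (observed) total, and user's accuracy is the diagonal count divided by the row (estimated) total. The sketch below applies these standard definitions to the Shrub-1 to Shrub-4 counts above; the function and variable names are hypothetical, and returning 0.0 for an empty row or column is an assumed convention.

```python
from typing import Dict, Sequence, Tuple


def class_accuracies(matrix: Sequence[Sequence[int]],
                     labels: Sequence[str]) -> Dict[str, Tuple[float, float]]:
    """Return {label: (producer's accuracy, user's accuracy)}.

    Rows are estimated (map) classes and columns are observed
    (reference) classes. Producer's = diagonal / column total;
    user's = diagonal / row total.
    """
    n = len(labels)
    if len(matrix) != n or any(len(row) != n for row in matrix):
        raise ValueError("matrix must be square and match the labels")
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    result = {}
    for i, label in enumerate(labels):
        diag = matrix[i][i]
        row_total = sum(matrix[i])
        producers = diag / col_totals[i] if col_totals[i] else 0.0
        users = diag / row_total if row_total else 0.0
        result[label] = (producers, users)
    return result


labels = ["Shrub-1", "Shrub-2", "Shrub-3", "Shrub-4"]
fuel_matrix = [
    [15, 5, 2, 0],
    [3, 4, 13, 0],
    [1, 4, 48, 19],
    [0, 0, 6, 31],
]
for name, (pa, ua) in class_accuracies(fuel_matrix, labels).items():
    print(f"{name}: producer's {pa:.2f}, user's {ua:.2f}")
```

Computing both measures from the raw counts, rather than copying them, is a quick way to confirm that the accuracy columns of a confusion matrix match its orientation.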

| Estimated Vegetation Class (rows) / Observed Vegetation Class (columns) | High Shrub—Heath | High Shrub—Broom | Low Shrub | Bracken | Bare Ground | Total | Producer's Acc. | User's Acc. |
|---|---|---|---|---|---|---|---|---|
| High Shrub—Heath | 66 | 5 | 2 | 0 | 0 | 73 | 0.97 | 0.90 |
| High Shrub—Broom | 2 | 32 | 1 | 0 | 0 | 35 | 0.84 | 0.91 |
| Low Shrub | 0 | 1 | 19 | 0 | 0 | 20 | 0.86 | 0.95 |
| Bracken | 0 | 0 | 0 | 30 | 3 | 33 | 1.00 | 0.91 |
| Bare Ground | 0 | 0 | 0 | 0 | 19 | 19 | 0.86 | 1.00 |
| Total | 68 | 38 | 22 | 30 | 22 | 180 | | |

| Estimated Fuel Model (rows) / Observed Fuel Model (columns) | Bracken | Bare Ground | Shrub-1 | Shrub-2 | Shrub-3 | Shrub-4 | Total | Producer's Acc. | User's Acc. |
|---|---|---|---|---|---|---|---|---|---|
| Bracken | 30 | 3 | 0 | 0 | 0 | 0 | 33 | 1.00 | 0.91 |
| Bare Ground | 0 | 19 | 0 | 0 | 0 | 0 | 19 | 0.86 | 1.00 |
| Shrub-1 | 0 | 0 | 13 | 4 | 0 | 0 | 17 | 0.81 | 0.76 |
| Shrub-2 | 0 | 0 | 1 | 0 | 1 | 0 | 2 | 0.00 | 0.00 |
| Shrub-3 | 0 | 0 | 2 | 5 | 57 | 7 | 71 | 0.95 | 0.80 |
| Shrub-4 | 0 | 0 | 0 | 0 | 2 | 36 | 38 | 0.84 | 0.95 |
| Total | 30 | 22 | 16 | 9 | 60 | 43 | 180 | | |

| Estimated Vegetation Class (rows) / Observed Vegetation Class (columns) | High Shrub—Heath | High Shrub—Broom | Low Shrub | Bracken | Bare Ground | Total | Producer's Acc. | User's Acc. |
|---|---|---|---|---|---|---|---|---|
| High Shrub—Heath | 67 | 5 | 0 | 0 | 0 | 72 | 0.99 | 0.93 |
| High Shrub—Broom | 1 | 33 | 0 | 0 | 0 | 34 | 0.87 | 0.97 |
| Low Shrub | 0 | 0 | 22 | 1 | 0 | 23 | 1.00 | 0.96 |
| Bracken | 0 | 0 | 0 | 29 | 0 | 29 | 0.97 | 1.00 |
| Bare Ground | 0 | 0 | 0 | 0 | 22 | 22 | 1.00 | 1.00 |
| Total | 68 | 38 | 22 | 30 | 22 | 180 | | |

| Estimated Fuel Model (rows) / Observed Fuel Model (columns) | Bracken | Bare Ground | Shrub-1 | Shrub-2 | Shrub-3 | Shrub-4 | Total | Producer's Acc. | User's Acc. |
|---|---|---|---|---|---|---|---|---|---|
| Bracken | 30 | 0 | 0 | 0 | 0 | 0 | 30 | 1.00 | 1.00 |
| Bare Ground | 0 | 22 | 0 | 0 | 0 | 0 | 22 | 1.00 | 1.00 |
| Shrub-1 | 0 | 0 | 15 | 5 | 0 | 0 | 20 | 0.94 | 0.75 |
| Shrub-2 | 0 | 0 | 1 | 1 | 0 | 0 | 2 | 0.11 | 0.50 |
| Shrub-3 | 0 | 0 | 0 | 3 | 60 | 4 | 67 | 1.00 | 0.90 |
| Shrub-4 | 0 | 0 | 0 | 0 | 0 | 39 | 39 | 0.91 | 1.00 |
| Total | 30 | 22 | 16 | 9 | 60 | 43 | 180 | | |
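
The four 180-sample matrices can also be summarized by overall accuracy (diagonal sum divided by N) and Cohen's kappa, which corrects overall agreement for the agreement expected by chance from the row and column totals: kappa = (N·Σ n_ii − Σ n_i+·n_+i) / (N² − Σ n_i+·n_+i), where n_ii are diagonal counts, n_i+ row totals, n_+i column totals, and N the number of samples. These statistics do not appear in the tables, so the sketch below is only an illustrative recomputation from the published counts; the dictionary keys simply follow table order and are hypothetical names.

```python
from typing import Sequence, Tuple


def overall_accuracy_and_kappa(matrix: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("matrix must be square")
    total = sum(sum(row) for row in matrix)
    diagonal = sum(matrix[i][i] for i in range(n))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    # Sum of row total x column total per class (chance-agreement term).
    chance = sum(r * c for r, c in zip(row_totals, col_totals))
    oa = diagonal / total
    kappa = (total * diagonal - chance) / (total * total - chance)
    return oa, kappa


# Counts copied from the four 180-sample matrices, in table order
# (rows = estimated, columns = observed).
matrices = {
    "vegetation, first matrix": [
        [66, 5, 2, 0, 0], [2, 32, 1, 0, 0], [0, 1, 19, 0, 0],
        [0, 0, 0, 30, 3], [0, 0, 0, 0, 19],
    ],
    "fuel model, first matrix": [
        [30, 3, 0, 0, 0, 0], [0, 19, 0, 0, 0, 0], [0, 0, 13, 4, 0, 0],
        [0, 0, 1, 0, 1, 0], [0, 0, 2, 5, 57, 7], [0, 0, 0, 0, 2, 36],
    ],
    "vegetation, second matrix": [
        [67, 5, 0, 0, 0], [1, 33, 0, 0, 0], [0, 0, 22, 1, 0],
        [0, 0, 0, 29, 0], [0, 0, 0, 0, 22],
    ],
    "fuel model, second matrix": [
        [30, 0, 0, 0, 0, 0], [0, 22, 0, 0, 0, 0], [0, 0, 15, 5, 0, 0],
        [0, 0, 1, 1, 0, 0], [0, 0, 0, 3, 60, 4], [0, 0, 0, 0, 0, 39],
    ],
}

for name, m in matrices.items():
    oa, kappa = overall_accuracy_and_kappa(m)
    print(f"{name}: OA = {oa:.3f}, kappa = {kappa:.3f}")
```

Working with integer counts until the final division keeps the result exact up to a single floating-point rounding.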

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Díaz-Varela, R.A.; Alonso-Rego, C.; Arellano-Pérez, S.; Briones-Herrera, C.I.; Álvarez-González, J.G.; Ruiz-González, A.D. Characterization of Shrub Fuel Structure and Spatial Distribution Using Multispectral and 3D Multitemporal UAV Data. Forests 2025, 16, 676. https://doi.org/10.3390/f16040676

Díaz-Varela RA, Alonso-Rego C, Arellano-Pérez S, Briones-Herrera CI, Álvarez-González JG, Ruiz-González AD. Characterization of Shrub Fuel Structure and Spatial Distribution Using Multispectral and 3D Multitemporal UAV Data. Forests. 2025; 16(4):676. https://doi.org/10.3390/f16040676

Chicago/Turabian Style: Díaz-Varela, Ramón Alberto, Cecilia Alonso-Rego, Stéfano Arellano-Pérez, Carlos Iván Briones-Herrera, Juan Gabriel Álvarez-González, and Ana Daría Ruiz-González. 2025. "Characterization of Shrub Fuel Structure and Spatial Distribution Using Multispectral and 3D Multitemporal UAV Data" Forests 16, no. 4: 676. https://doi.org/10.3390/f16040676

APA Style: Díaz-Varela, R. A., Alonso-Rego, C., Arellano-Pérez, S., Briones-Herrera, C. I., Álvarez-González, J. G., & Ruiz-González, A. D. (2025). Characterization of Shrub Fuel Structure and Spatial Distribution Using Multispectral and 3D Multitemporal UAV Data. Forests, 16(4), 676. https://doi.org/10.3390/f16040676