1. Introduction

Bamboo strips are the fundamental feedstock for producing bamboo products, and their quality directly determines product performance. In practice, a variety of surface defects often occur—arising from environmental stress and pest damage during growth, as well as from mechanical damage introduced during processing, transport, and storage. Typical defects include wormholes, chipped edges, decay, green bark residue, yellow pith residue, and splinters [

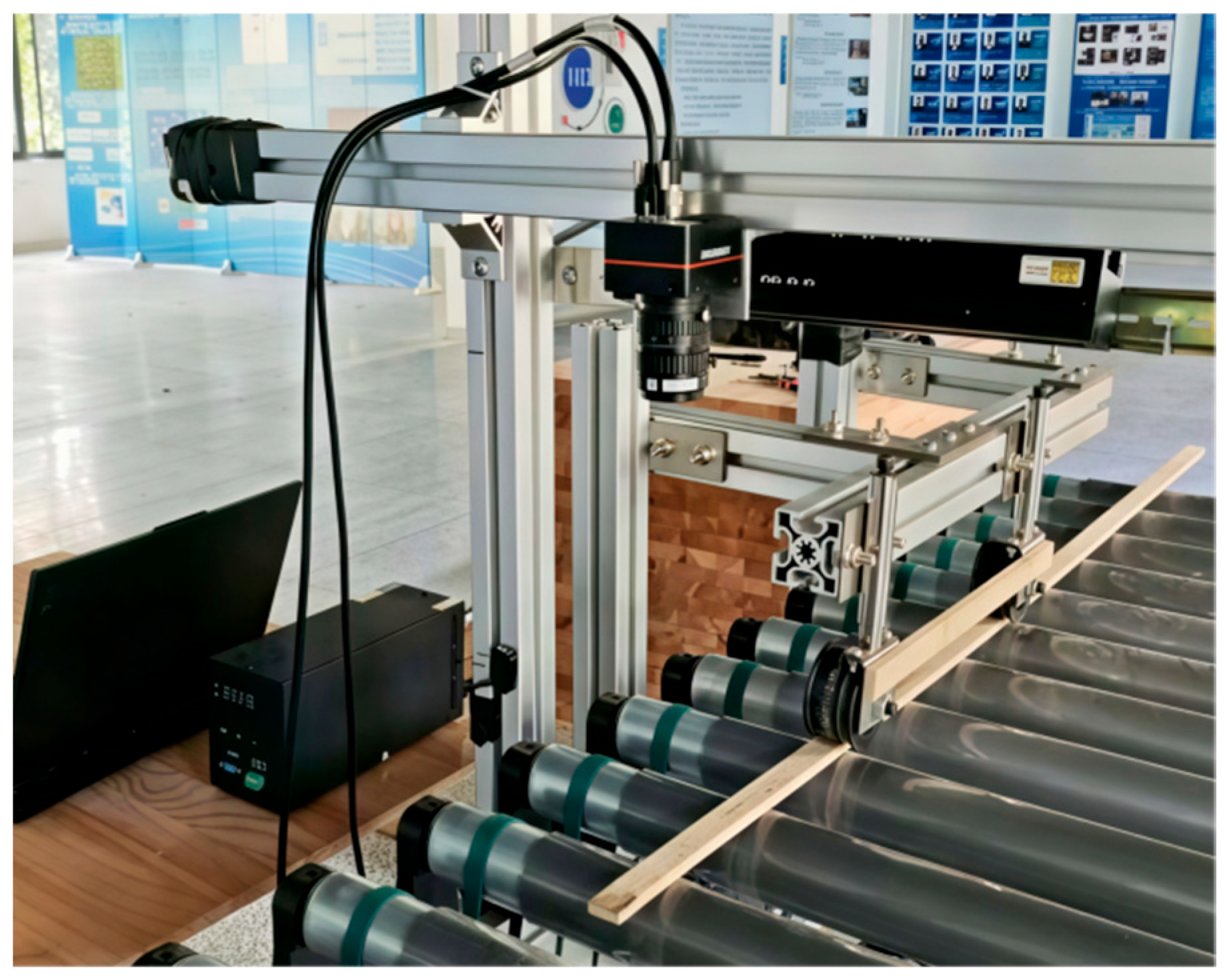

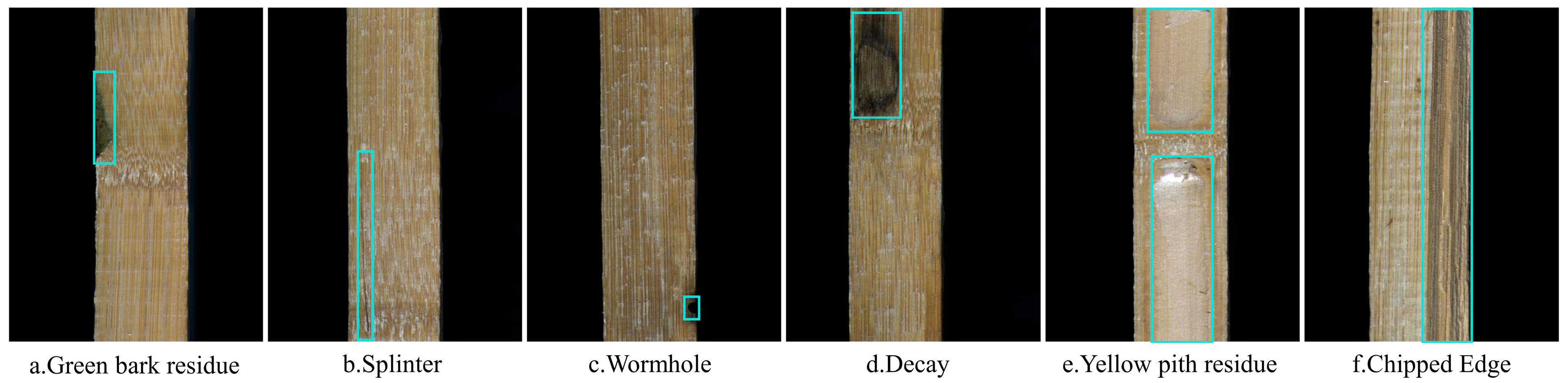

1]. These defects diminish mechanical strength and stability, shorten service life, and impair surface quality, ultimately reducing market value. A critical intermediate in bamboo laminated lumber [

2] manufacturing is the rough-planed bamboo strip; its surface quality directly affects adhesive bonding, grading, and material yield. During panel manufacture, multiple strips are bonded to form a single panel; however, the presence of just one defective strip can compromise the entire panel, resulting in a downgraded grade at best, or in scrappage and production stoppage at worst. By conservative estimates, defects lead to industry-wide profit losses approaching USD 140 million each year [

3]. At the global scale, the cross-border trade value of bamboo and rattan reached approximately USD 4.12 billion in 2022 [

4], indicating steady international demand and an active supply chain. Among downstream applications directly linked to bamboo strips, the bamboo flooring segment was about USD 1.43 billion in 2024 and is projected to reach USD 2.02 billion by 2034; in a broader scope, the global bamboo industry was on the order of USD 67.1 billion in 2024 [

5]. Accordingly, reliable inspection and screening of bamboo strips are critical for improving the quality of bamboo products and reducing manufacturing costs.

Current approaches to bamboo-strip surface defect detection fall into two categories: traditional machine-vision methods and deep-learning-based object detection methods [6]. Traditional machine-vision methods primarily exploit hand-crafted cues such as color, texture, and edges for defect detection [7]. Zeng et al. [8] extracted color features via color moments and texture features via the gray-level co-occurrence matrix (GLCM), applied principal component analysis (PCA) for dimensionality reduction, and then used a support vector machine (SVM) to classify bamboo into eight color grades, achieving promising recognition accuracy. Kuang et al. [9] extracted regions of interest via thresholding and built a dual-modal texture representation by combining local binary pattern (LBP) and GLCM features, thereby validating the effectiveness of multi-feature fusion for bamboo-strip classification. However, classical machine-vision approaches suffer from labor-intensive feature engineering, weak cross-scene generalization, and nontrivial computational overhead [10], and therefore fall short of the efficiency and real-time requirements of modern bamboo-strip surface defect inspection.
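As an illustration of the hand-crafted features discussed above, the following minimal Python sketch computes the three color moments of one channel and a GLCM contrast value for a tiny quantized image. The function names, the four gray levels, and the horizontal pixel offset are assumptions made for illustration only; they are not taken from refs. [8,9], and the PCA and SVM stages are omitted.

```python
from math import sqrt


def color_moments(channel):
    """Return mean, standard deviation, and skewness moment of a flat pixel list."""
    n = len(channel)
    mean = sum(channel) / n
    var = sum((v - mean) ** 2 for v in channel) / n
    third = sum((v - mean) ** 3 for v in channel) / n
    # Signed cube root keeps the third moment in pixel units.
    skew = (abs(third) ** (1 / 3)) * (1 if third >= 0 else -1)
    return mean, sqrt(var), skew


def glcm(image, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for the pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    return [[v / total for v in row] for row in counts]


def glcm_contrast(p):
    """Contrast: co-occurrence probabilities weighted by squared gray-level difference."""
    size = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(size) for j in range(size))


# Hypothetical 4x4 patch already quantized to 4 gray levels.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = list(color_moments([v for row in patch for v in row]))
features.append(glcm_contrast(glcm(patch, levels=4)))
print(features)
```

In a pipeline of this kind, such a feature vector would be computed per strip image and passed to a classifier; every new cue requires another hand-written function, which is the feature-engineering burden noted above.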

In recent years, deep-learning–based image classification and object detection have gained significant traction [

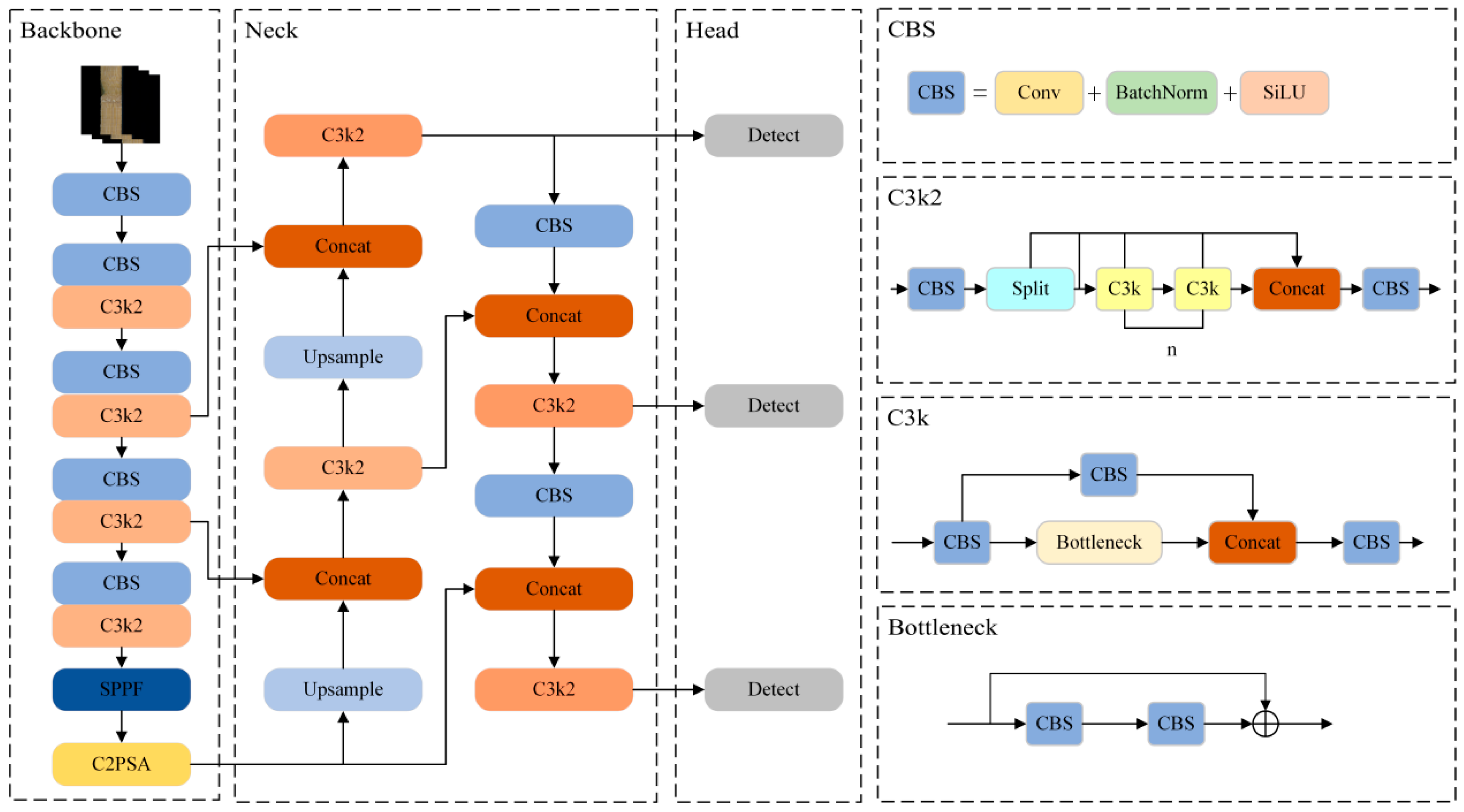

11]. Detectors are generally divided into one-stage methods [

12] and two-stage methods [

13]. Compared with their two-stage counterparts, one-stage detectors offer substantially higher speed while preserving accuracy; representative examples include YOLO [

14], SSD [

15], and RetinaNet [

16]. The YOLO family performs object classification and bounding-box regression simultaneously, enabling fast response, non-contact operation, and flexible deployment, and has been widely applied to surface-defect inspection of bamboo and wood [

17]. Nonetheless, in aspects most pertinent to real industrial deployments, common shortcomings persist. First, the continuity of elongated defects and the preservation of their topology are inadequate: high aspect ratio splinters and chipped edges are often fragmented into multiple detection boxes during inference, with poor boundary adherence, so that a single physical defect appears as disjoint predictions. Second, publicly available datasets specific to this task are scarce and the literature remains limited; moreover, datasets used in existing studies are typically small in scale, which leads to limited model generalization. Yang et al. [

18] introduced a Coordinate Attention (CA) module to enhance feature extraction for five defect types and refined the hierarchy with C2f, improving accuracy while reducing parameters for a lightweight model. In their later work, Yang et al. [

19] adapted an improved YOLOv8 to bamboo-strip defect detection, with targeted optimizations for small objects and complex textures, yielding a significant accuracy gain. Guo et al. [

20] addressed the peculiarity of high aspect-ratio, slender defects on bamboo surfaces by strengthening horizontal responses with an asymmetric convolution block and introducing a hybrid attention mechanism for joint spatial we adopt channel enhancement, achieving high accuracy across six typical defect classes. Accordingly, YOLO was adopted as the baseline in this study for further improvements.
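The fragmentation problem described above can be made concrete with a small diagnostic. The sketch below is a hypothetical helper, not part of the method in this paper: it counts how many predicted boxes lie mostly inside one ground-truth box and shows that each fragment on its own has a low IoU with the full defect. The box format `(x1, y1, x2, y2)`, the function names, and the 0.5 containment threshold are all assumptions.

```python
def box_area(box):
    """Area of an axis-aligned box given as (x1, y1, x2, y2)."""
    return max(box[2] - box[0], 0.0) * max(box[3] - box[1], 0.0)


def intersection_area(a, b):
    """Overlap area of two axis-aligned boxes."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(w, 0.0) * max(h, 0.0)


def iou(a, b):
    """Intersection over union of two boxes."""
    inter = intersection_area(a, b)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def fragments_per_defect(gt_box, pred_boxes, min_inside=0.5):
    """Count predictions whose area lies mostly inside one ground-truth box."""
    count = 0
    for pred in pred_boxes:
        area = box_area(pred)
        if area > 0 and intersection_area(gt_box, pred) / area >= min_inside:
            count += 1
    return count


# One elongated splinter (aspect ratio 10:1) and four hypothetical predictions.
splinter = (0, 0, 100, 10)
predictions = [(0, 0, 30, 10), (35, 0, 60, 10), (65, 1, 100, 9), (200, 0, 230, 10)]
print(fragments_per_defect(splinter, predictions))
print([round(iou(splinter, p), 2) for p in predictions])
```

A count above one for a single annotated defect indicates fragmentation; because each fragment covers only part of the defect, none of them would match the ground truth at a typical IoU threshold of 0.5.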

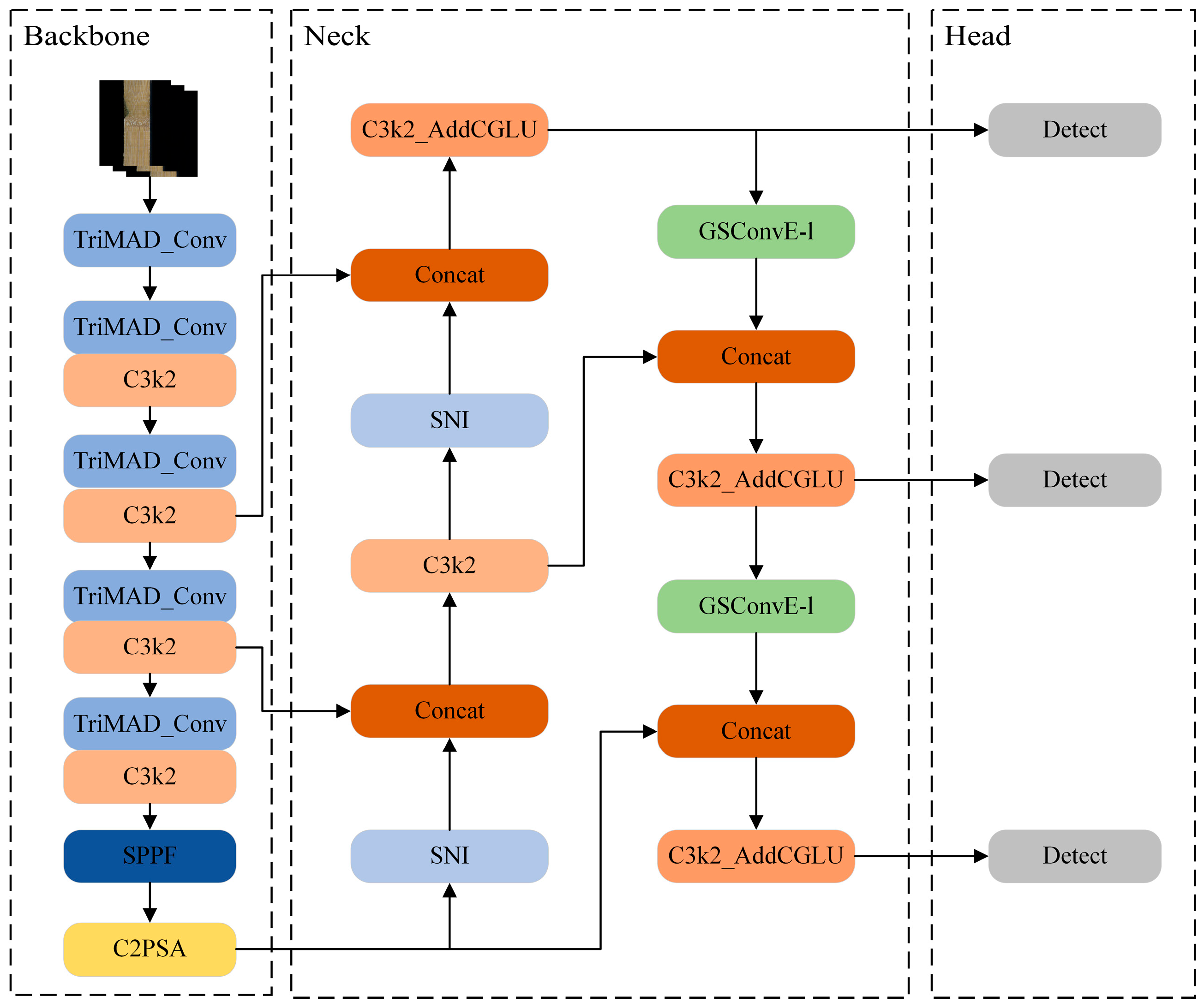

To address six surface defect types on rough-planed bamboo strips, this study proposes an improved YOLO11n-based detection method. The main contributions are as follows:

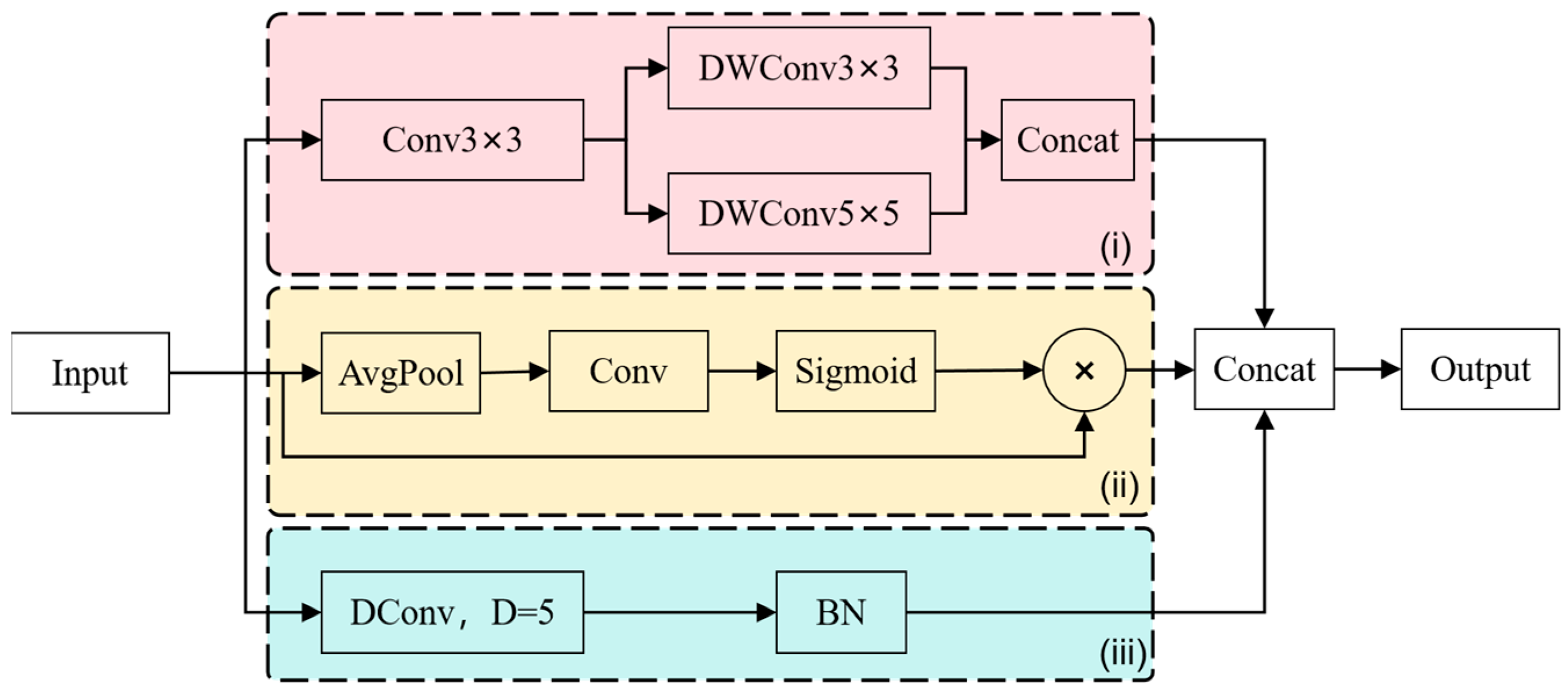

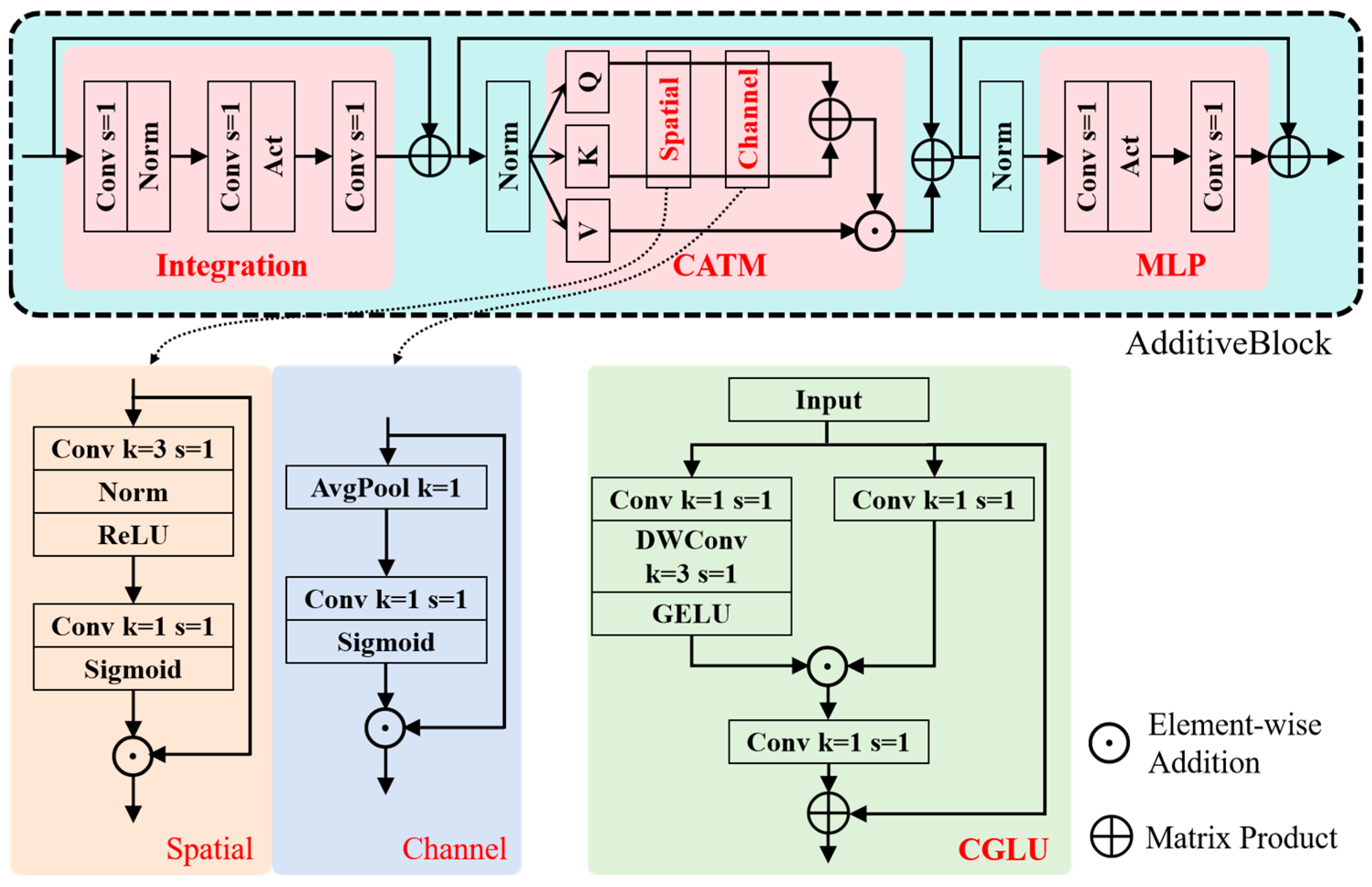

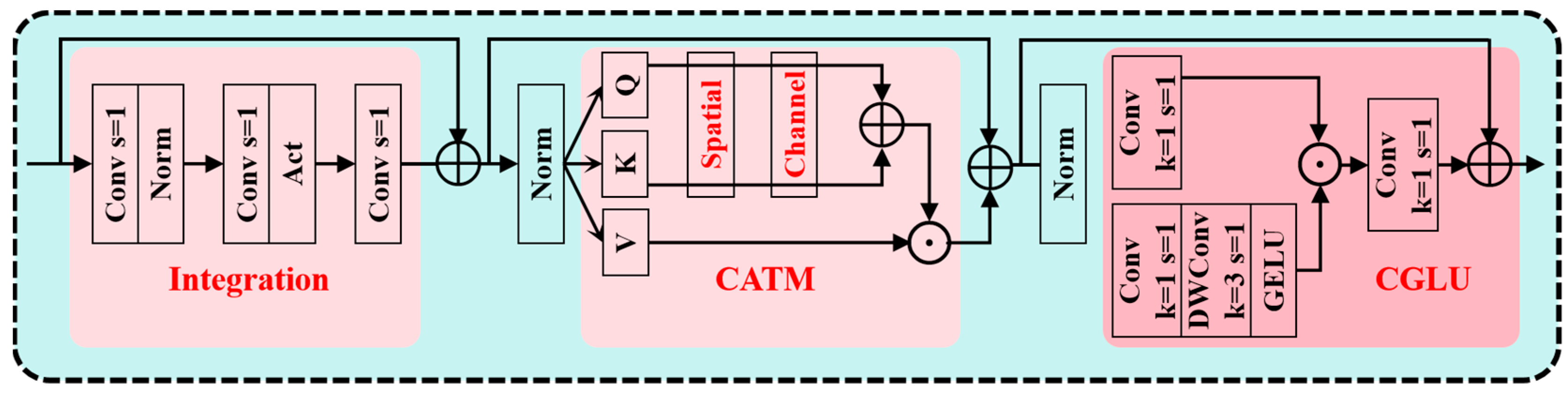

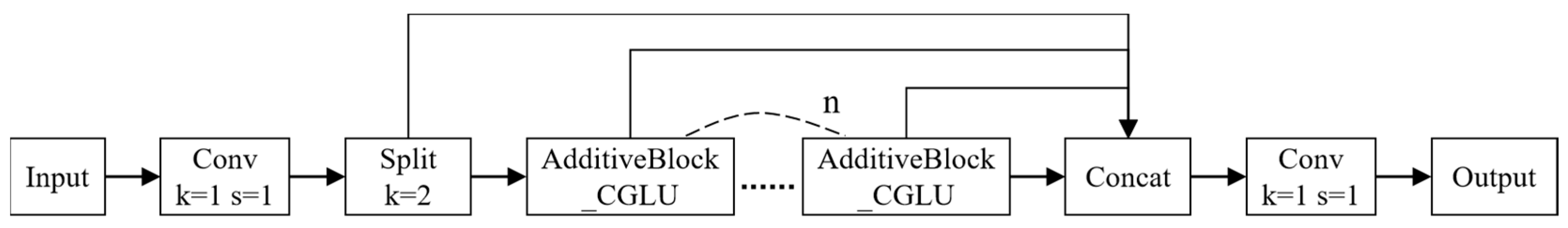

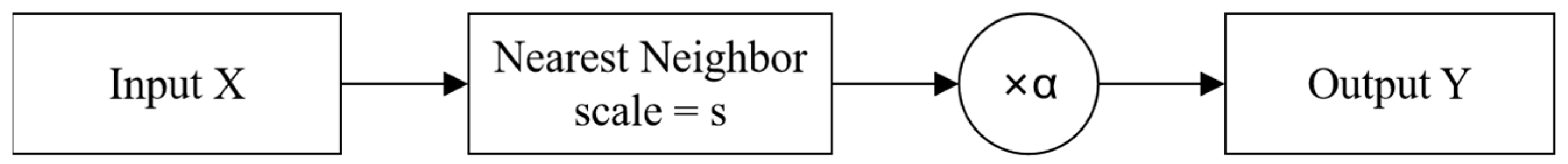

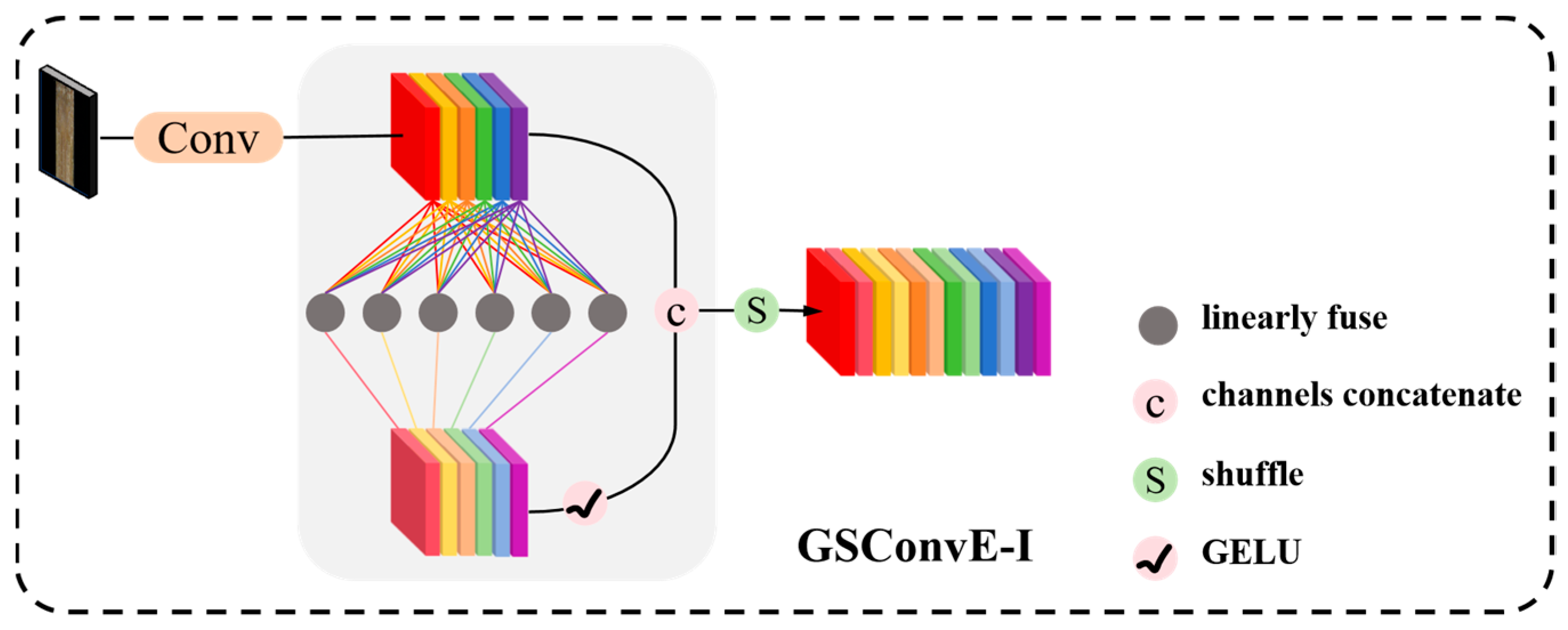

(1) To mitigate the under-detection of wormholes and of small-area green bark residue and decay, a tri-branch unit was designed to capture fine edges while preserving surrounding texture, with channel attention suppressing interference from bamboo grain bands and knots. A dilated-convolution branch widened the effective field of view, improving small-object visibility and detection stability.

(2) C3k2_AddCGLU was integrated at the C3k2 stages, leveraging additive residual interaction and CGLU gating to sharpen channel selection and preserve details. This accentuated the differences between defects (splinters and chipped edges) and the bamboo grain band, reducing false positives and improving precision.

(3) To mitigate fragmentation and drift when aggregating elongated along-grain defects, SNI was adopted as the upsampling strategy in the neck, and cross-scale features were then fused with GSConvE-I. This combination suppressed the blocky, stair-step artifacts introduced by upsampling. Under an acceptable real-time budget, predictions for splinters and chipped edges became more contiguous and better aligned to edges, and wormhole detections appeared rounder.
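To illustrate why a dilated-convolution branch widens the field of view without adding weights, the following sketch applies the same three-tap kernel to a 1-D signal at two dilation rates. It is a simplified stand-in written for this explanation, not the tri-branch unit itself; the kernel values and dilation rates are assumptions, since the text above does not specify them.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span, in input samples, covered by a dilated kernel."""
    return (kernel_size - 1) * dilation + 1


def dilated_conv1d(signal, kernel, dilation=1):
    """'Valid' 1-D cross-correlation with taps spaced `dilation` samples apart."""
    span = effective_kernel_size(len(kernel), dilation)
    out = []
    for start in range(len(signal) - span + 1):
        out.append(
            sum(w * signal[start + i * dilation] for i, w in enumerate(kernel))
        )
    return out


signal = [1, 2, 3, 4, 5, 6, 7]
edge_kernel = [1, 0, -1]  # simple difference filter, three weights in both cases

print(effective_kernel_size(3, 1), dilated_conv1d(signal, edge_kernel, dilation=1))
print(effective_kernel_size(3, 2), dilated_conv1d(signal, edge_kernel, dilation=2))
```

With dilation 2, the same three weights compare samples four positions apart instead of two, so each output value draws on a wider neighborhood at the same parameter count.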

4. Discussion

Amid global timber scarcity and mounting environmental pressures, bamboo, a fast-growing, renewable, non-wood resource, has become strategically important. Bamboo strips are the basic units of bamboo laminated lumber; their surface quality governs product grade and application range. Surface defects markedly degrade mechanical performance and bonding, weakening overall strength, stability, and durability, and thus limiting bamboo’s role in “bamboo-for-plastic” and “bamboo-for-wood” initiatives. As sustainability agendas advance, bamboo’s strong carbon sequestration and benign degradation make it a compelling substitute; however, uncontrolled strip-surface defects hinder quality improvement and scale-up, waste resources, and erode bamboo’s position in green supply chains. Consequently, improving strip surface quality and developing high-accuracy defect inspection are essential for high-value utilization of bamboo, alleviating timber shortages, and reducing plastic pollution [30].

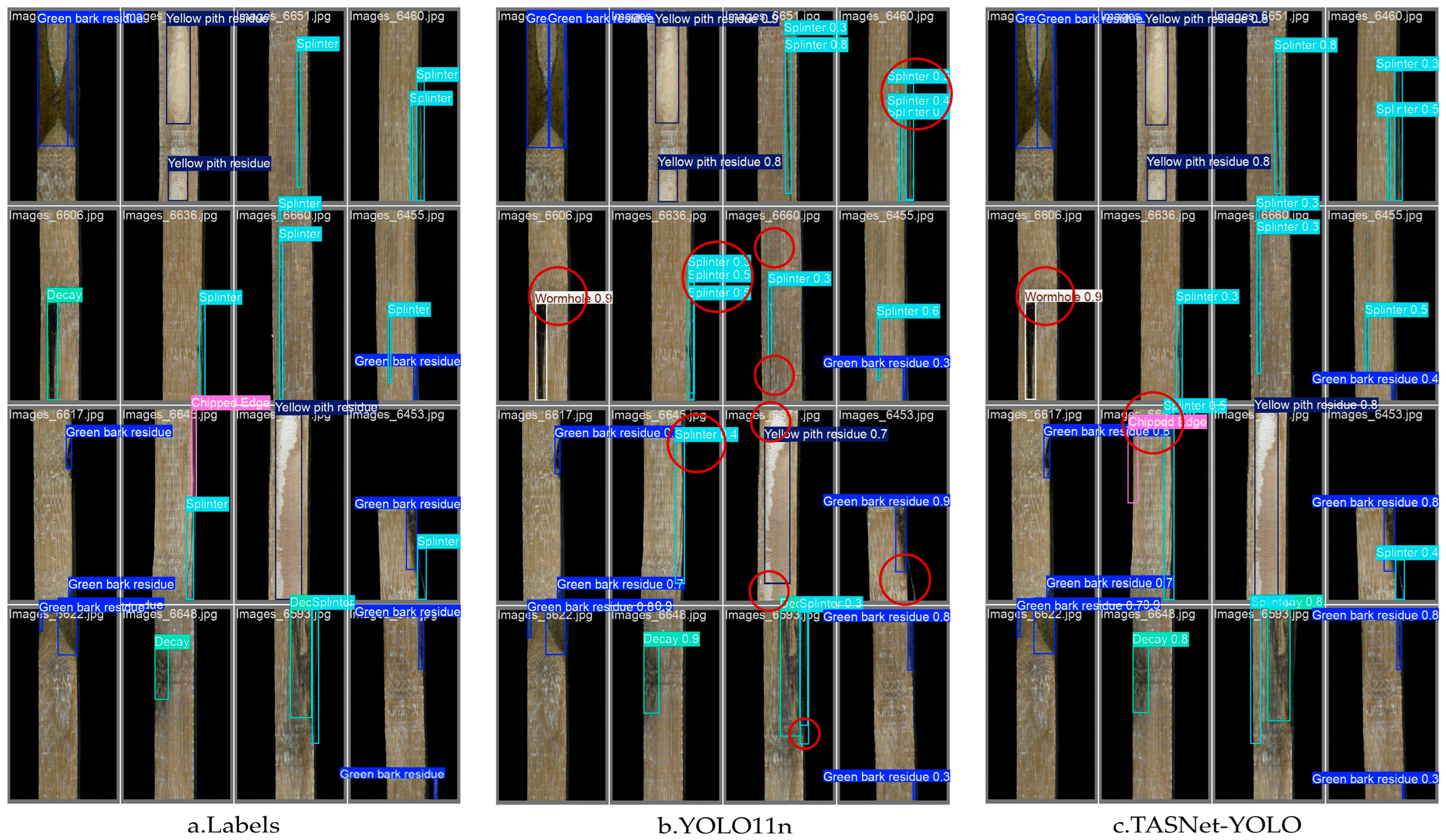

A systematic evaluation of bamboo-strip surface defect detection in an industrial setting was conducted in this study. Visual comparisons showed that the improved model was more stable on small targets and in complex textures: boundaries for wormholes, fine splinters, and chipped edges were more continuous; boxes adhered to edges more consistently; and false and missed detections decreased under strong periodic grain, glare, and local contamination (Figure 11). These observations align with the quantitative gains, indicating better localization and higher recall on low-contrast targets.

These gains can be attributed to the individual components. TriMAD_Conv enriches local texture and long-range context through a lightweight multi-branch design with dilated receptive fields, helping preserve fine defects that might otherwise be lost during down-sampling. C3k2_AddCGLU performs channel-wise gated recalibration, suppressing non-discriminative responses over large homogeneous grain and uneven illumination while emphasizing truly anomalous details. In the neck, SNI softens nearest-neighbor upsampling to curb cross-layer misalignment and jagged artifacts, preserving shallow texture. GSConvE-I strengthens cross-channel communication and the effective receptive field via channel shuffle and lightweight aggregation, improving multi-scale fusion without additional compute. Together, these components deliver a better balance among accuracy, computation, and latency.
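Two of the neck operations mentioned above can be sketched in a few lines of plain Python. The sketch assumes that SNI denotes soft nearest-neighbor interpolation, in which nearest-neighbor upsampled features are scaled by a factor of 1/factor²; this scaling is an assumption, as the text here does not state it. The channel shuffle shown is the generic two-group interleave and is not claimed to reproduce GSConvE-I. All function names are illustrative.

```python
def nearest_upsample(fmap, factor=2):
    """Nearest-neighbor upsampling of a 2-D feature map stored as nested lists."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def soft_nearest_upsample(fmap, factor=2):
    """Nearest-neighbor upsampling scaled by alpha = 1 / factor**2 (assumed SNI form)."""
    alpha = 1.0 / (factor ** 2)
    return [[alpha * v for v in row] for row in nearest_upsample(fmap, factor)]


def channel_shuffle(channels, groups=2):
    """Interleave channels across groups so later layers mix information between them."""
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


deep_features = [[4.0, 8.0]]
print(nearest_upsample(deep_features))
print(soft_nearest_upsample(deep_features))
print(channel_shuffle(["a0", "a1", "a2", "b0", "b1", "b2"]))
```

Under this assumed scaling, the upsampled map keeps the same total activation as its input, so replicated deep features carry less weight relative to the shallow features they are fused with, which is consistent with the softening effect described above.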

Nevertheless, there remains room for improvement. Although the overall parameter count is comparable to that of YOLO11n, computation and latency can be further reduced. By integrating pruning, distillation, and quantization without sacrificing accuracy, a more favorable balance among accuracy, speed, and model size can be achieved, thereby meeting stricter real-time requirements on edge deployments. Meanwhile, it is necessary to increase the proportion of hard examples in the dataset. For categories such as splinters, decay, and chipped edges, which exhibit relatively low per-class detection accuracy, targeted data augmentation can partially compensate for biases caused by sample scarcity and thereby improve accuracy and generalization for these minority defect classes. Future application-oriented work will focus on broadening the method’s applicability. Specifically, we will investigate domain adaptation on unlabeled target production lines to mitigate cross-plant and cross-season distribution shifts, pursue transfer learning across bamboo species to accommodate interspecies differences in texture and epidermal structure, and extend the model to other natural materials (wood, rattan, fiber composites) and to edge-device deployments, while preserving real-time performance to ensure deployability and operational stability.
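As a rough illustration of one of the compression options mentioned above, the sketch below applies symmetric per-tensor int8 quantization to a short list of weights and measures the round-trip error. It is a generic textbook scheme chosen for illustration, not a procedure evaluated in this study; the function names and example weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 codes back to approximate float weights."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]  # hypothetical layer weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of four, and the round-trip error is bounded by half the scale; whether detection accuracy survives such a step would have to be verified experimentally.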

5. Conclusions

Rough-planed bamboo strips present several detection challenges. Wormholes and small patches of green bark residue or decay are difficult to detect; splinters and chipped edges are easily masked by the periodic grain; and elongated defects are often fragmented into multiple boxes during detection. To address these issues, we propose TASNet-YOLO, an improved detector built on YOLO11n. By incorporating TriMAD_Conv, C3k2_AddCGLU, and SNI-GSNeck, the model enhances sensitivity to tiny defects, improves feature discrimination under complex textures, and produces more coherent results for long, along-grain defects. On our in-house dataset, it achieves 81.8% mAP and 106.4 FPS, striking a solid balance between accuracy and efficiency and demonstrating strong practical potential. The study also validates, at a methodological level, the effectiveness of multi-branch design, gated mechanisms, and lightweight convolutional fusion for bamboo-defect detection, offering a transferable recipe for detecting weak targets in textured backgrounds. Remaining gaps include recognition of chipped edges and generalization under extreme conditions; future work will focus on enlarging difficult classes and refining annotation protocols to advance deployment in industrial settings.