SegForest: A Segmentation Model for Remote Sensing Images

Abstract

1. Introduction

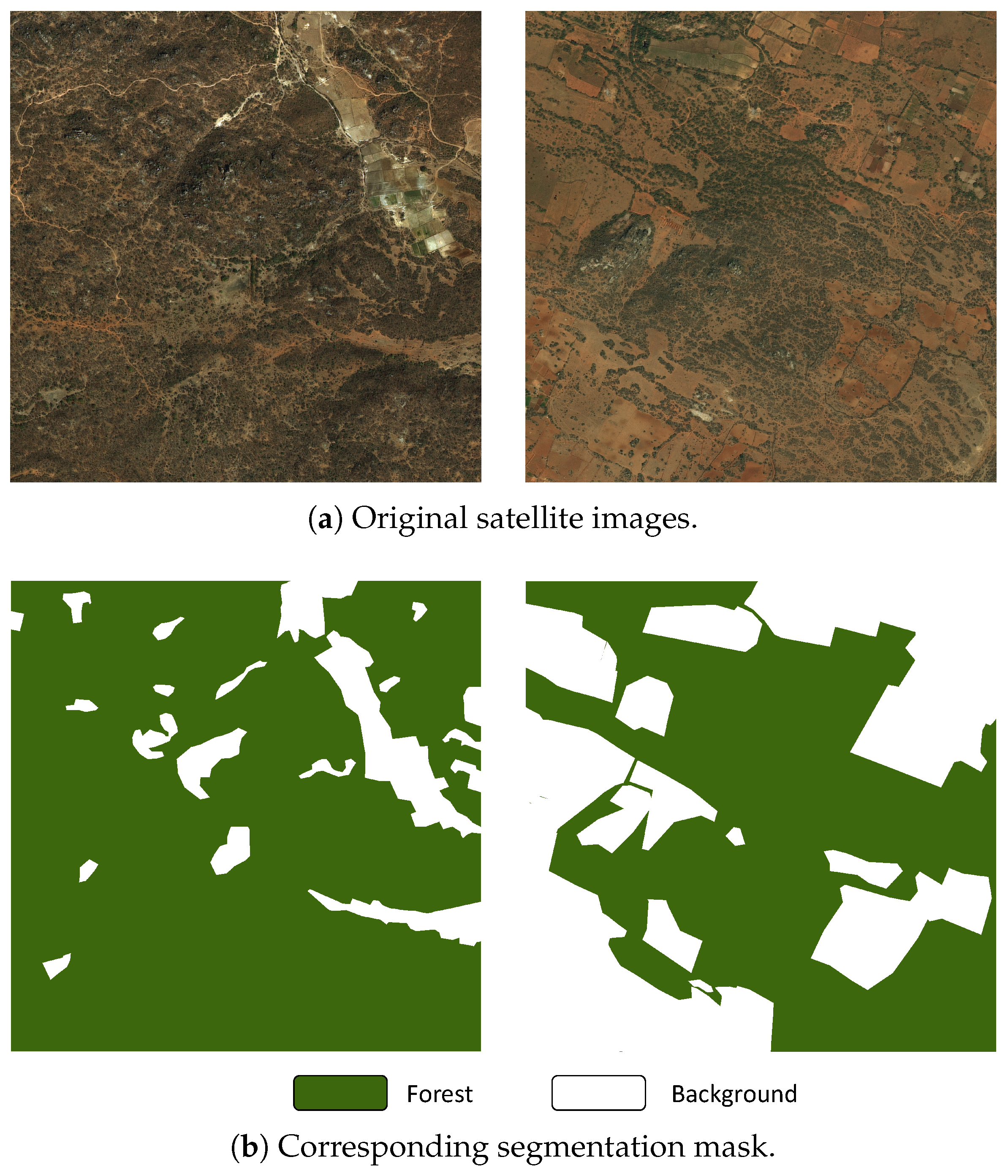

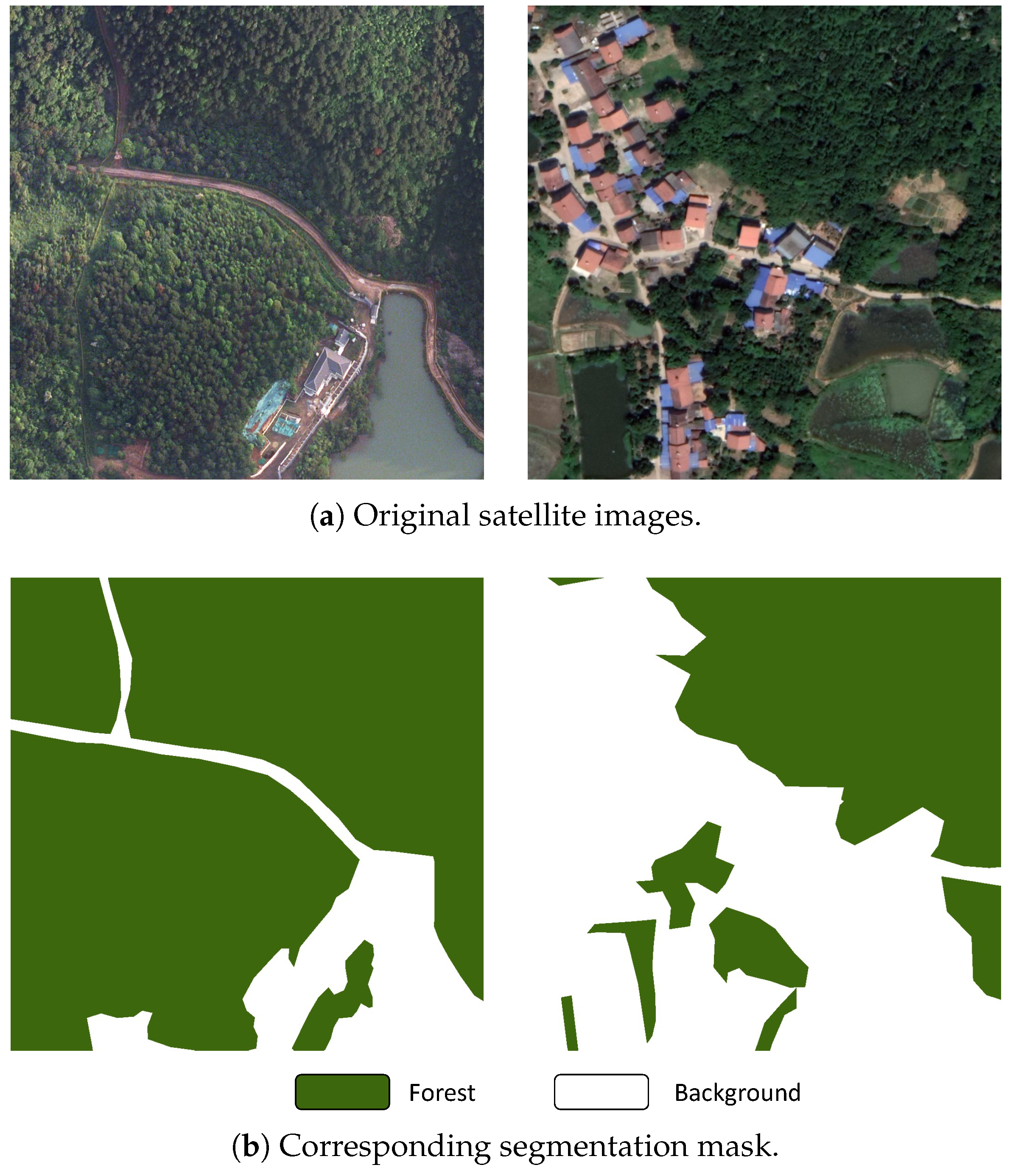

2. Datasets

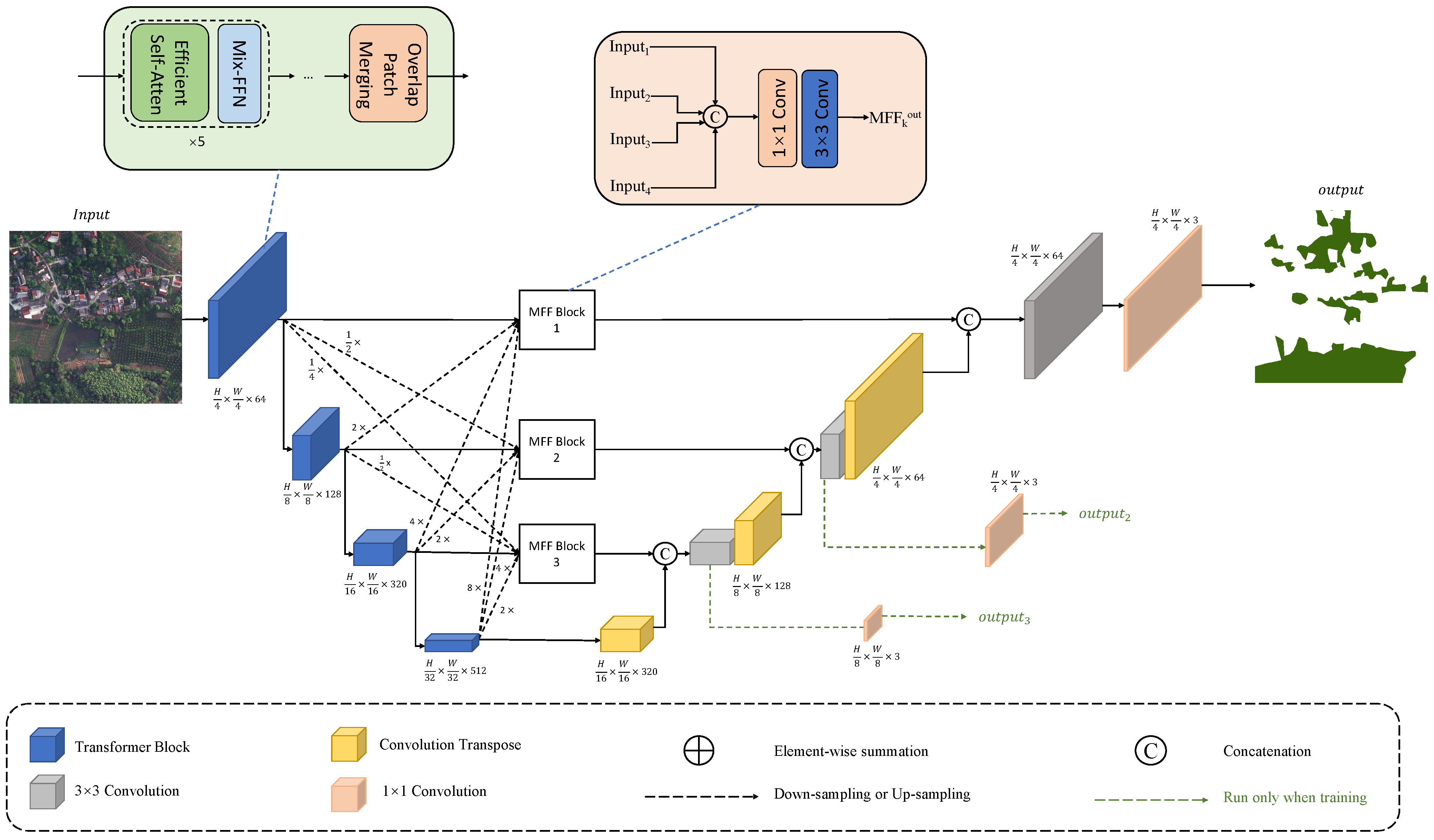

3. Methods

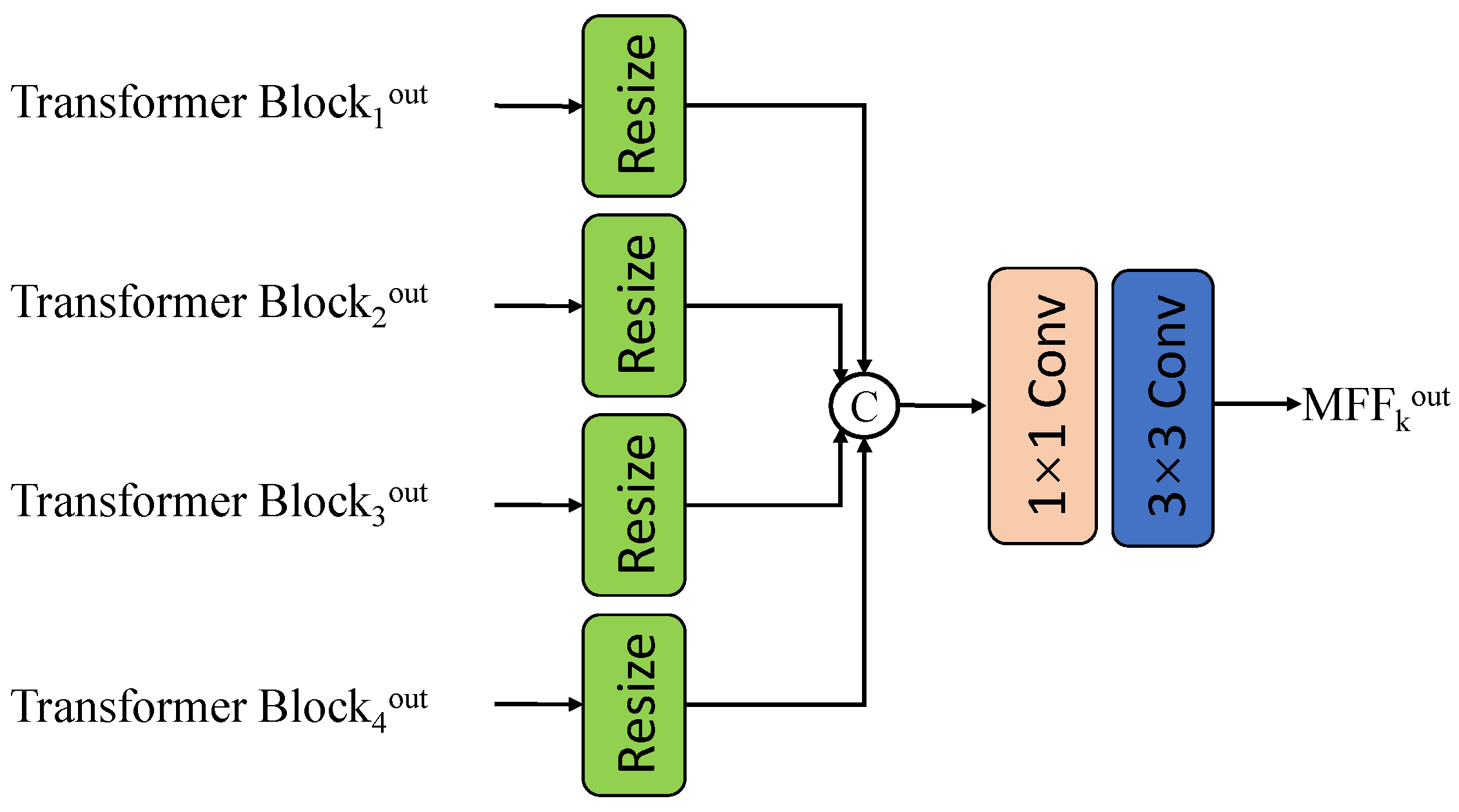

3.1. Multi-Scale Feature Fusion

3.2. Multi-Scale Multi-Decoder

3.3. Loss Function

4. Experiments

4.1. Experimental Settings

4.2. Evaluation Metrics
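The result tables in this article report IoU, mIoU, Accuracy, and mAcc for the two classes (Background = 0, Forest = 1). The sketch below shows one common way to compute these quantities from a predicted mask and a ground-truth mask. It assumes the usual definitions, IoU = TP / (TP + FP + FN) and per-class accuracy = TP / (TP + FN), which may differ in detail from the definitions the authors used; the function names `confusion_counts` and `per_class_metrics` are hypothetical.

```python
def confusion_counts(pred, target, positive):
    """Count true positives, false positives and false negatives for one class."""
    tp = fp = fn = 0
    for p, t in zip(pred, target):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
    return tp, fp, fn


def per_class_metrics(pred, target, labels=(0, 1)):
    """Return per-class IoU and accuracy plus their means (assumed definitions)."""
    iou, acc = {}, {}
    for label in labels:
        tp, fp, fn = confusion_counts(pred, target, label)
        # Assumed definitions: IoU = TP / (TP + FP + FN), accuracy = TP / (TP + FN).
        iou[label] = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
        acc[label] = tp / (tp + fn) if (tp + fn) else 0.0
    return {
        "iou": iou,
        "miou": sum(iou.values()) / len(labels),
        "acc": acc,
        "macc": sum(acc.values()) / len(labels),
    }


# Toy flattened masks: 1 = Forest, 0 = Background.
pred = [1, 1, 0, 0, 1, 0]
target = [1, 0, 0, 1, 1, 0]
metrics = per_class_metrics(pred, target)
print(metrics)
```

In practice the masks would be flattened image arrays rather than short lists, and the counts would be accumulated over the whole test set before dividing.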

4.3. Comparison to State of the Art Methods

4.4. Ablation Studies

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Class | Label | Pixel Count | Proportion |
|---|---|---|---|
| Background | 0 | 537.13 M | 46.20% |
| Forest | 1 | 625.46 M | 53.80% |

| Class | Label | Pixel Count | Proportion |
|---|---|---|---|
| Background | 0 | 332.37 M | 48.32% |
| Forest | 1 | 355.49 M | 51.68% |
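The proportions in the two class-distribution tables follow directly from the pixel counts. As a minimal sketch (the helper name `class_proportions` and the dictionary names are illustrative, not from the article), the arithmetic can be reproduced as follows:

```python
def class_proportions(pixel_counts):
    """Return each class's share of the total pixel count, in percent."""
    total = sum(pixel_counts.values())
    return {name: 100.0 * count / total for name, count in pixel_counts.items()}


# Pixel counts in millions, copied from the two tables above.
first_table = {"Background": 537.13, "Forest": 625.46}
second_table = {"Background": 332.37, "Forest": 355.49}

for counts in (first_table, second_table):
    shares = class_proportions(counts)
    print({name: f"{share:.2f}%" for name, share in shares.items()})
```

Both datasets are close to balanced between the two classes, with Forest slightly in the majority in each.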

| Method | IoU (Forest) | IoU (Background) | mIoU | Accuracy (Forest) | Accuracy (Background) | mAcc |
|---|---|---|---|---|---|---|
| Deeplabv3+ | 77.69 | 79.67 | 78.68 | 87.42 | 88.70 | 88.06 |
| Pidnet-s | 78.78 | 80.96 | 79.87 | 87.35 | 90.21 | 88.78 |
| Pspnet | 79.86 | 80.79 | 80.33 | 91.17 | 87.22 | 89.20 |
| Knet-s3-r50 | 80.23 | 81.22 | 80.73 | 91.24 | 87.63 | 89.44 |
| Segformer | 79.98 | 81.71 | 80.85 | 89.02 | 89.80 | 89.41 |
| Mask2former | 80.52 | 81.61 | 81.06 | 91.09 | 88.17 | 89.63 |
| Segnext | 80.60 | 81.84 | 81.22 | 90.69 | 88.71 | 89.70 |
| SegForest | 82.80 | 83.99 | 83.39 | 91.79 | 90.20 | 91.00 |

| Method | IoU (Forest) | IoU (Background) | mIoU | Accuracy (Forest) | Accuracy (Background) | mAcc |
|---|---|---|---|---|---|---|
| Deeplabv3+ | 64.22 | 75.88 | 70.05 | 80.37 | 84.85 | 82.61 |
| Pidnet-s | 64.36 | 74.08 | 69.22 | 84.82 | 80.85 | 82.84 |
| Pspnet | 62.68 | 77.08 | 69.88 | 73.93 | 89.18 | 81.56 |
| Knet-s3-r50 | 65.99 | 76.16 | 71.08 | 84.11 | 83.45 | 83.78 |
| Segformer | 64.63 | 76.31 | 70.47 | 80.36 | 85.35 | 82.86 |
| Mask2former | 65.67 | 76.83 | 71.25 | 81.69 | 85.30 | 83.50 |
| Segnext | 64.42 | 76.96 | 70.69 | 78.31 | 87.01 | 82.66 |
| SegForest | 68.38 | 79.04 | 73.71 | 82.98 | 87.14 | 85.06 |
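In the comparison table above, each mIoU and mAcc value matches the unweighted mean of the two per-class values to within rounding. The sketch below checks this for every row; the tolerance and the names `mean_metric` and `inconsistent_rows` are assumptions made for illustration.

```python
def mean_metric(per_class_values):
    """Unweighted mean over classes, as used for mIoU and mAcc."""
    return sum(per_class_values) / len(per_class_values)


# (method, IoU Forest, IoU Background, mIoU, Acc Forest, Acc Background, mAcc),
# copied from the table above.
rows = [
    ("Deeplabv3+", 64.22, 75.88, 70.05, 80.37, 84.85, 82.61),
    ("Pidnet-s", 64.36, 74.08, 69.22, 84.82, 80.85, 82.84),
    ("Pspnet", 62.68, 77.08, 69.88, 73.93, 89.18, 81.56),
    ("Knet-s3-r50", 65.99, 76.16, 71.08, 84.11, 83.45, 83.78),
    ("Segformer", 64.63, 76.31, 70.47, 80.36, 85.35, 82.86),
    ("Mask2former", 65.67, 76.83, 71.25, 81.69, 85.30, 83.50),
    ("Segnext", 64.42, 76.96, 70.69, 78.31, 87.01, 82.66),
    ("SegForest", 68.38, 79.04, 73.71, 82.98, 87.14, 85.06),
]

# Half a unit in the last reported digit, plus slack for floating-point error.
TOLERANCE = 0.006


def inconsistent_rows(table):
    """Return the methods whose reported means differ from the recomputed ones."""
    bad = []
    for name, iou_f, iou_b, miou, acc_f, acc_b, macc in table:
        if abs(mean_metric((iou_f, iou_b)) - miou) > TOLERANCE:
            bad.append(name)
        elif abs(mean_metric((acc_f, acc_b)) - macc) > TOLERANCE:
            bad.append(name)
    return bad


print(inconsistent_rows(rows))
```

An empty list means every reported mean is consistent with its per-class values at two-decimal precision.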

| MFF / MSMD / WBCE (✓ per enabled module) | IoU (Forest) | IoU (Background) | mIoU | Accuracy (Forest) | Accuracy (Background) | mAcc |
|---|---|---|---|---|---|---|
| none | 79.98 | 81.71 | 80.85 | 89.02 | 89.80 | 89.41 |
| ✓ | 81.54 | 83.75 | 82.65 | 89.83 | 90.13 | 89.98 |
| ✓ | 80.53 | 81.64 | 81.09 | 88.71 | 90.18 | 89.45 |
| ✓ ✓ | 81.31 | 82.90 | 82.11 | 89.95 | 90.12 | 90.04 |
| ✓ ✓ ✓ | 82.80 | 83.99 | 83.39 | 91.79 | 90.20 | 91.00 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, H.; Hu, C.; Zhang, R.; Qian, W. SegForest: A Segmentation Model for Remote Sensing Images. Forests 2023, 14, 1509. https://doi.org/10.3390/f14071509
