Rapid Estimation of Decameter FPAR from Sentinel-2 Imagery on the Google Earth Engine

Abstract

1. Introduction

2. Materials and Methods

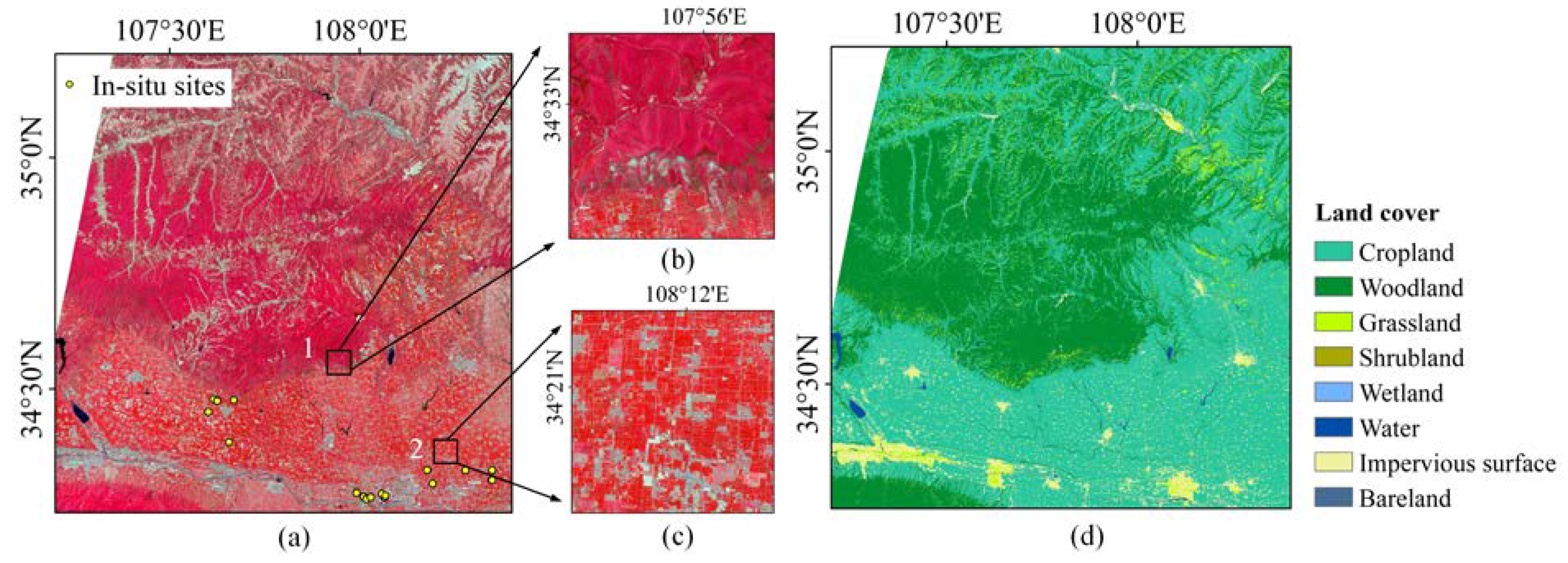

2.1. Study Areas

2.2. Data Acquisition and Processing

2.2.1. Sentinel-2 Data

2.2.2. MODIS FPAR Product

2.2.3. In Situ Measurement

2.3. Scaling-Based Method

1. Unsupervised classification

2. Sample screening

3. Band selection

4. Fine-resolution FPAR estimation
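The steps listed above can be illustrated with a small, heavily simplified sketch. It is not the authors' implementation. It assumes a plain multiple linear regression between coarse-resolution FPAR and band reflectance averaged over each coarse cell, and a hypothetical screening rule that keeps only cells whose within-cell coefficient of variation is low. Unsupervised classification is omitted and the band set is taken as given. All function names and the `max_cv` threshold are illustrative assumptions.

```python
from statistics import mean, pstdev


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_coarse_model(coarse_cells, max_cv=0.3):
    """Fit FPAR ~ intercept + sum(coef_i * band_i) at the coarse scale.

    coarse_cells: list of (coarse_fpar, fine_pixels), where fine_pixels is a
    list of per-pixel band reflectance lists falling inside that coarse cell.
    max_cv: assumed homogeneity threshold (hypothetical screening rule).
    """
    design, targets = [], []
    for coarse_fpar, fine_pixels in coarse_cells:
        per_band = list(zip(*fine_pixels))
        band_means = [mean(band) for band in per_band]
        # Assumed screening: drop cells that are spatially heterogeneous.
        cvs = [pstdev(band) / mu if mu else float("inf")
               for band, mu in zip(per_band, band_means)]
        if max(cvs) <= max_cv:
            design.append([1.0] + band_means)
            targets.append(coarse_fpar)
    k = len(design[0])
    # Normal equations: (X^T X) coefs = X^T y
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict_fine_fpar(coefs, bands):
    """Apply the coarse-scale model to one fine pixel; clamp to [0, 1]."""
    value = coefs[0] + sum(c * b for c, b in zip(coefs[1:], bands))
    return min(1.0, max(0.0, value))
```

The model is fitted once per scene on the screened coarse cells and then applied pixel by pixel to the fine-resolution reflectance, which is why this family of methods can be fast. On the Google Earth Engine the same idea would be expressed with image reducers rather than Python loops.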

2.4. SNAP Algorithm

2.5. Validation and Comparison

3. Results

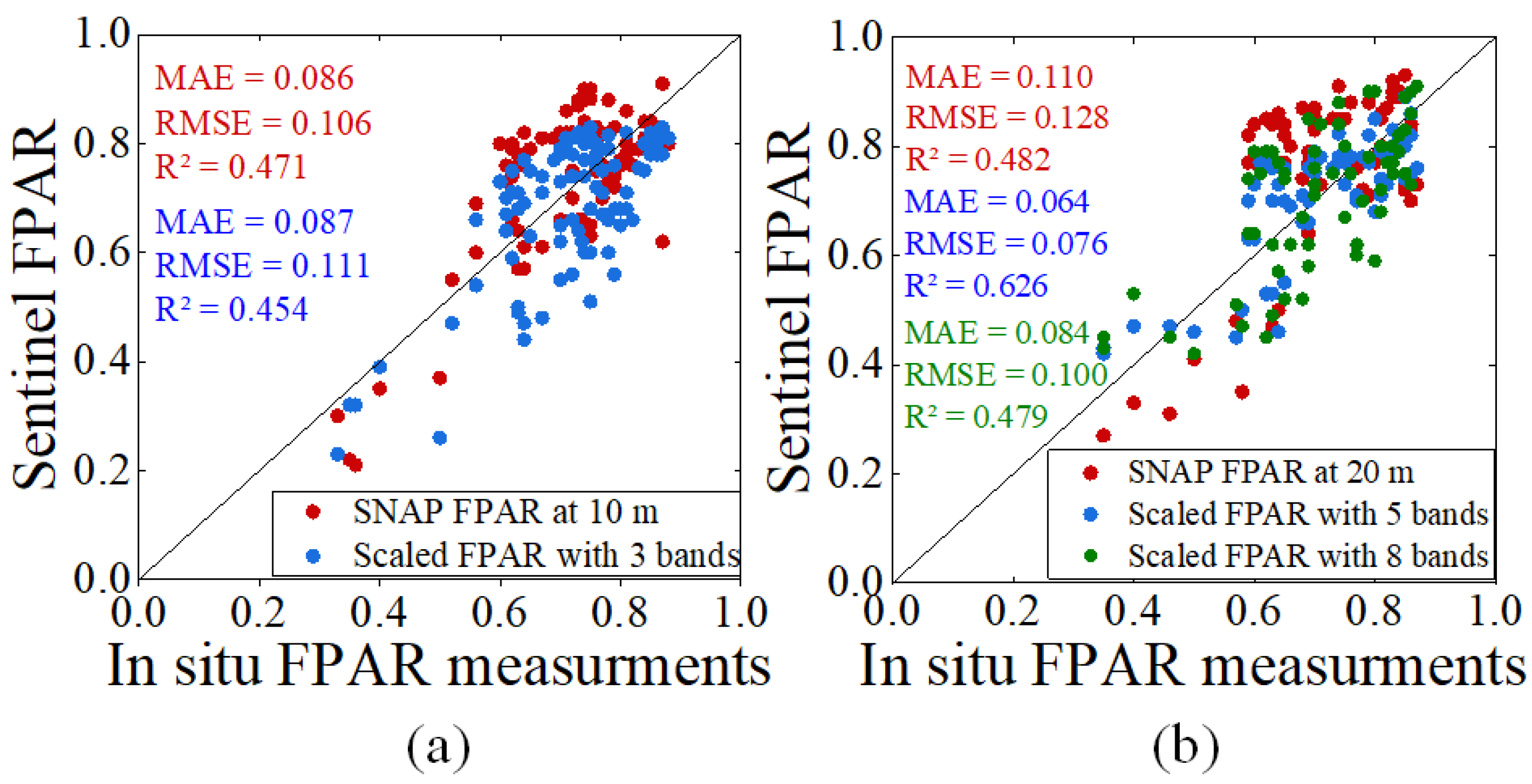

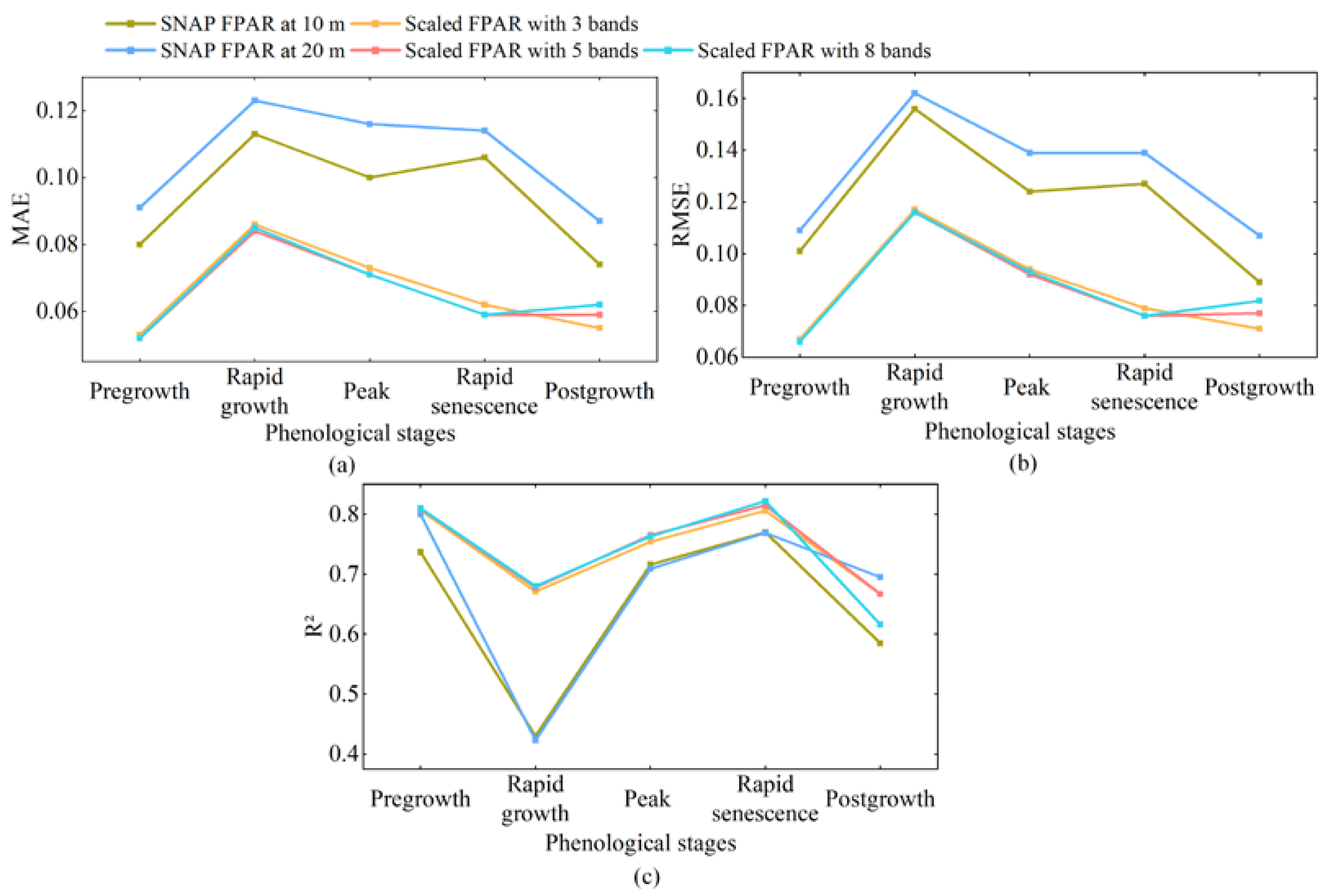

3.1. Validation with In Situ Measurements

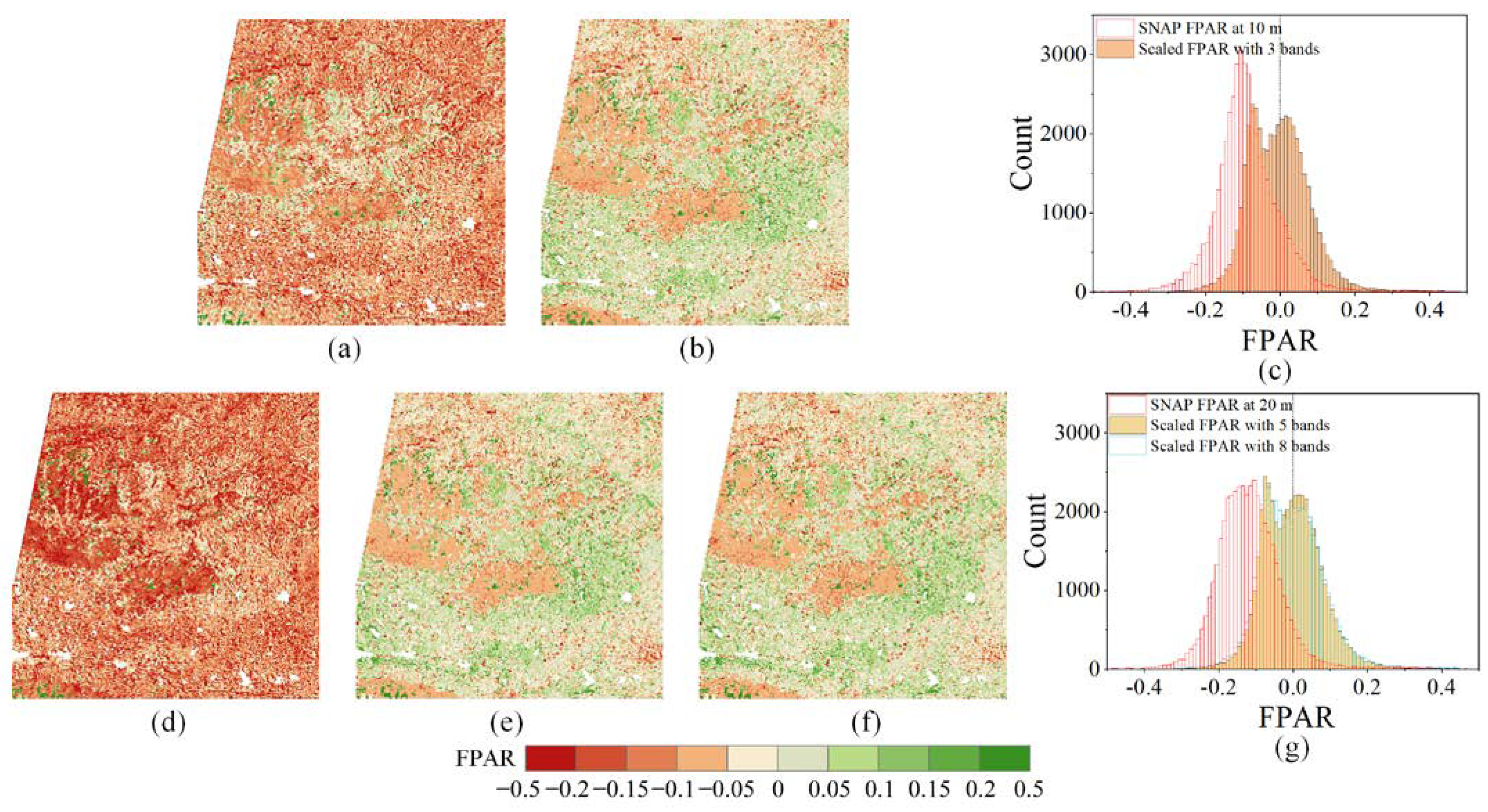

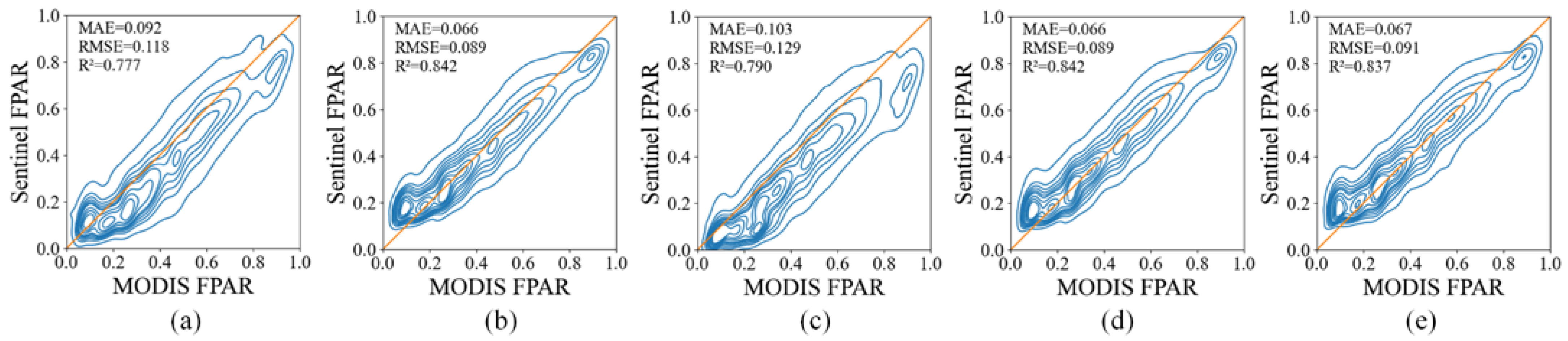

3.2. Consistency with MODIS FPAR

3.3. Computational Efficiency Comparison

4. Discussion

5. Conclusions

- (1) Validated against the in situ FPAR measurements, the scaling-based method using five bands at 20 m resolution was the most accurate, outperforming both the SNAP method (at 10 m and 20 m resolution) and the scaling-based method using eight bands. At 10 m resolution, the SNAP method outperformed the scaling-based method using three bands. The five-band scaling-based method is therefore the best choice at 20 m resolution, or whenever accuracy is the only criterion, whereas the SNAP method is the best choice at 10 m resolution.

- (2) Compared with the MODIS FPAR product, the SNAP method systematically underestimated FPAR, especially in densely vegetated and sparsely vegetated areas. This underestimation was more pronounced at 20 m resolution than at 10 m resolution. The scaling-based method achieved very good consistency with the MODIS FPAR product in all three configurations (three, five, and eight bands), better than the SNAP method.

- (3) The scaling-based method was implemented on the GEE and is more efficient than the SNAP method: it estimates FPAR from a single Sentinel-2 scene in only 30 s, whereas the SNAP method takes 10 min on average, a roughly 20-fold difference. The scaling-based method is therefore well suited to the operational estimation of FPAR from Sentinel-2 images.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Band | Central Wavelength (nm) | Spatial Resolution (m) | Name |
|---|---|---|---|
| B1 | 443 | 60 | Coastal |
| B2 | 490 | 10 | Blue |
| B3 | 560 | 10 | Green |
| B4 | 665 | 10 | Red |
| B5 | 705 | 20 | Red edge 1 |
| B6 | 740 | 20 | Red edge 2 |
| B7 | 783 | 20 | Red edge 3 |
| B8 | 842 | 10 | NIR |
| B8A | 865 | 20 | NIR |
| B9 | 940 | 60 | Water vapor |
| B10 | 1375 | 60 | Cirrus |
| B11 | 1610 | 20 | SWIR1 |
| B12 | 2190 | 20 | SWIR2 |

| Strategy | Combination of Bands |
|---|---|
| Scaled FPAR with three bands (10 m) | B3, B4, B8 (10 m) |
| Scaled FPAR with five bands (20 m) | B3, B4, B8A, B11, B12 (20 m) |
| Scaled FPAR with eight bands (20 m) | B3, B4, B5, B6, B7, B8A, B11, B12 (20 m) |
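
The two tables above can be captured as a small configuration, which makes it easy to see which bands of each strategy must be aggregated from a finer native grid to the working resolution. The sketch below is only an illustration: the dictionary keys and function names are hypothetical, while the band names, resolutions, and combinations are copied from the tables.

```python
# Native spatial resolution (m) of each Sentinel-2 band, from the band table.
NATIVE_RESOLUTION_M = {
    "B1": 60, "B2": 10, "B3": 10, "B4": 10, "B5": 20, "B6": 20, "B7": 20,
    "B8": 10, "B8A": 20, "B9": 60, "B10": 60, "B11": 20, "B12": 20,
}

# Band combinations per strategy, from the strategy table.
# The keys are hypothetical labels chosen for this example.
STRATEGIES = {
    "three_bands_10m": {"resolution_m": 10, "bands": ["B3", "B4", "B8"]},
    "five_bands_20m": {
        "resolution_m": 20,
        "bands": ["B3", "B4", "B8A", "B11", "B12"],
    },
    "eight_bands_20m": {
        "resolution_m": 20,
        "bands": ["B3", "B4", "B5", "B6", "B7", "B8A", "B11", "B12"],
    },
}


def bands_needing_aggregation(strategy_key):
    """Bands whose native grid is finer than the working resolution."""
    strategy = STRATEGIES[strategy_key]
    return [
        band for band in strategy["bands"]
        if NATIVE_RESOLUTION_M[band] < strategy["resolution_m"]
    ]


def is_feasible(strategy_key):
    """True if no band is natively coarser than the working resolution."""
    strategy = STRATEGIES[strategy_key]
    return all(
        NATIVE_RESOLUTION_M[band] <= strategy["resolution_m"]
        for band in strategy["bands"]
    )
```

For example, `bands_needing_aggregation("five_bands_20m")` returns the two 10 m visible bands (B3 and B4), while the three-band strategy uses only bands that are natively 10 m.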

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Zhan, Y.; Xie, D.; Liu, J.; Huang, H.; Zhao, D.; Xiao, Z.; Zhou, X. Rapid Estimation of Decameter FPAR from Sentinel-2 Imagery on the Google Earth Engine. Forests 2022, 13, 2122. https://doi.org/10.3390/f13122122
