A Review: Individual Tree Species Classification Using Integrated Airborne LiDAR and Optical Imagery with a Focus on the Urban Environment

Abstract

1. Introduction

2. Urban Tree Species Classification from Optical Remote Sensing Imagery

2.1. Pixel-Based vs. Object-Based Classification

2.2. Development and Limitations

3. Urban Tree Species Classification from LiDAR Data

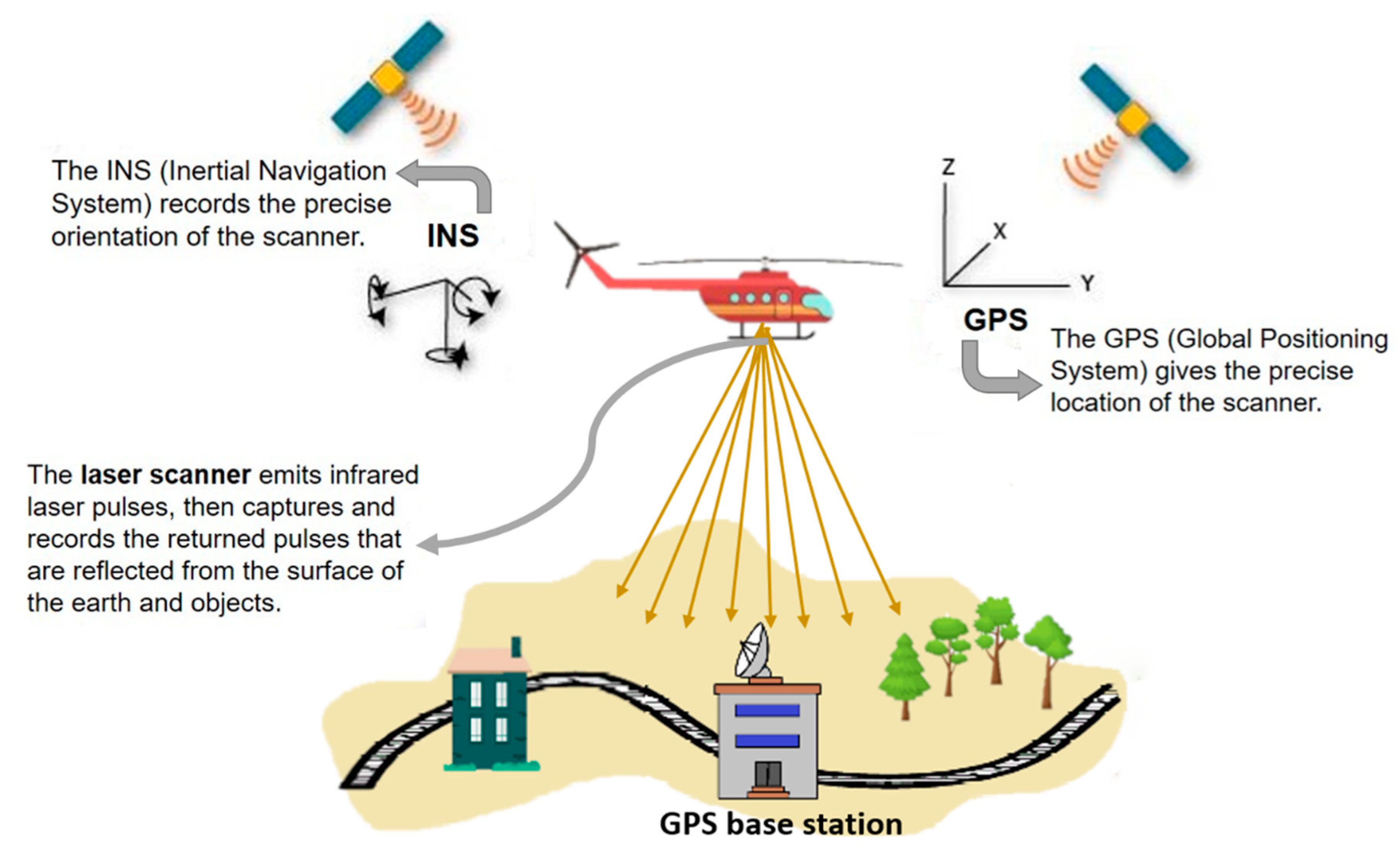

3.1. Introduction to LiDAR

3.2. LiDAR in Urban Tree Species Classification

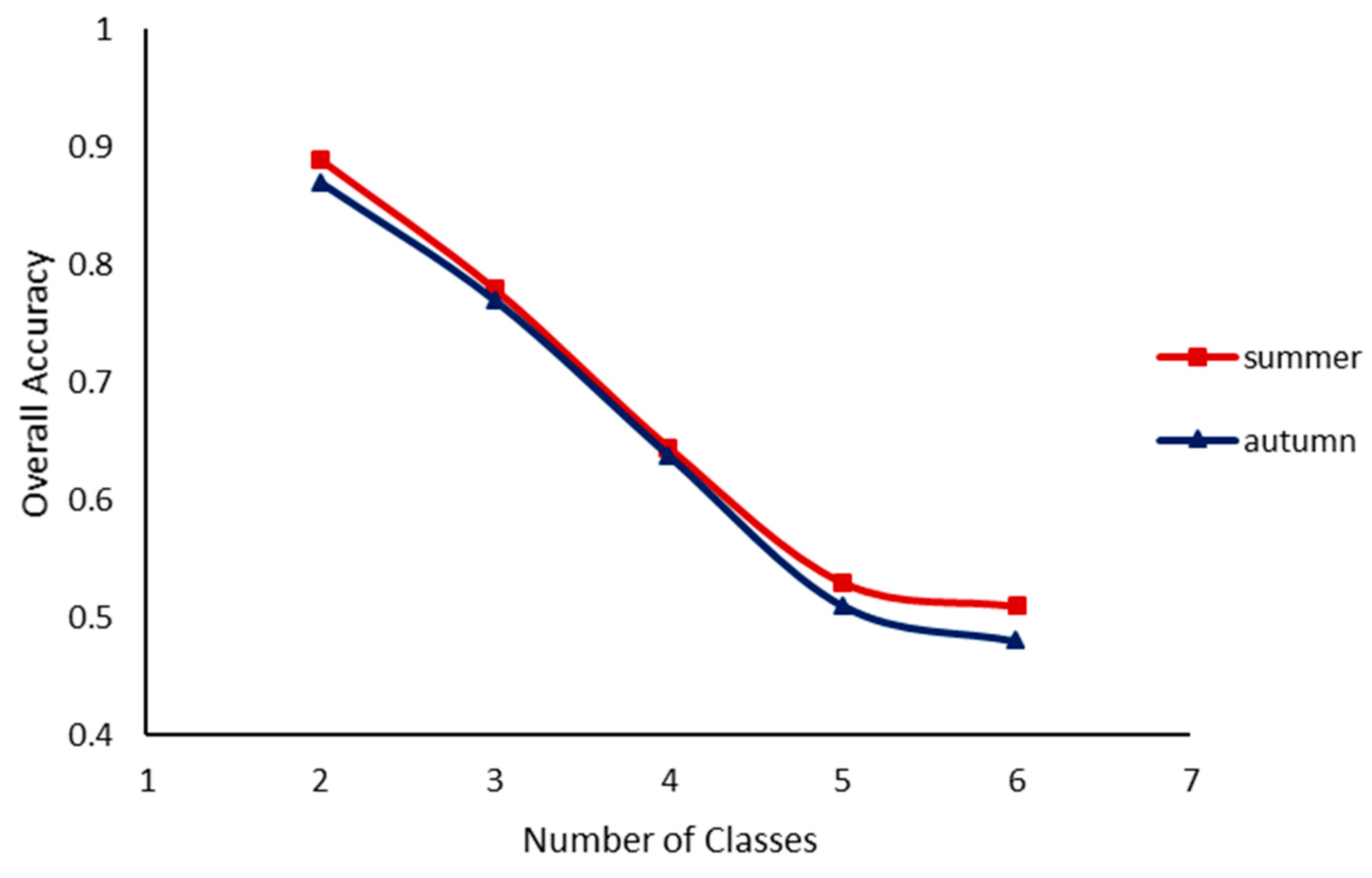

3.2.1. Urban Tree Species Classification through Image Fusion

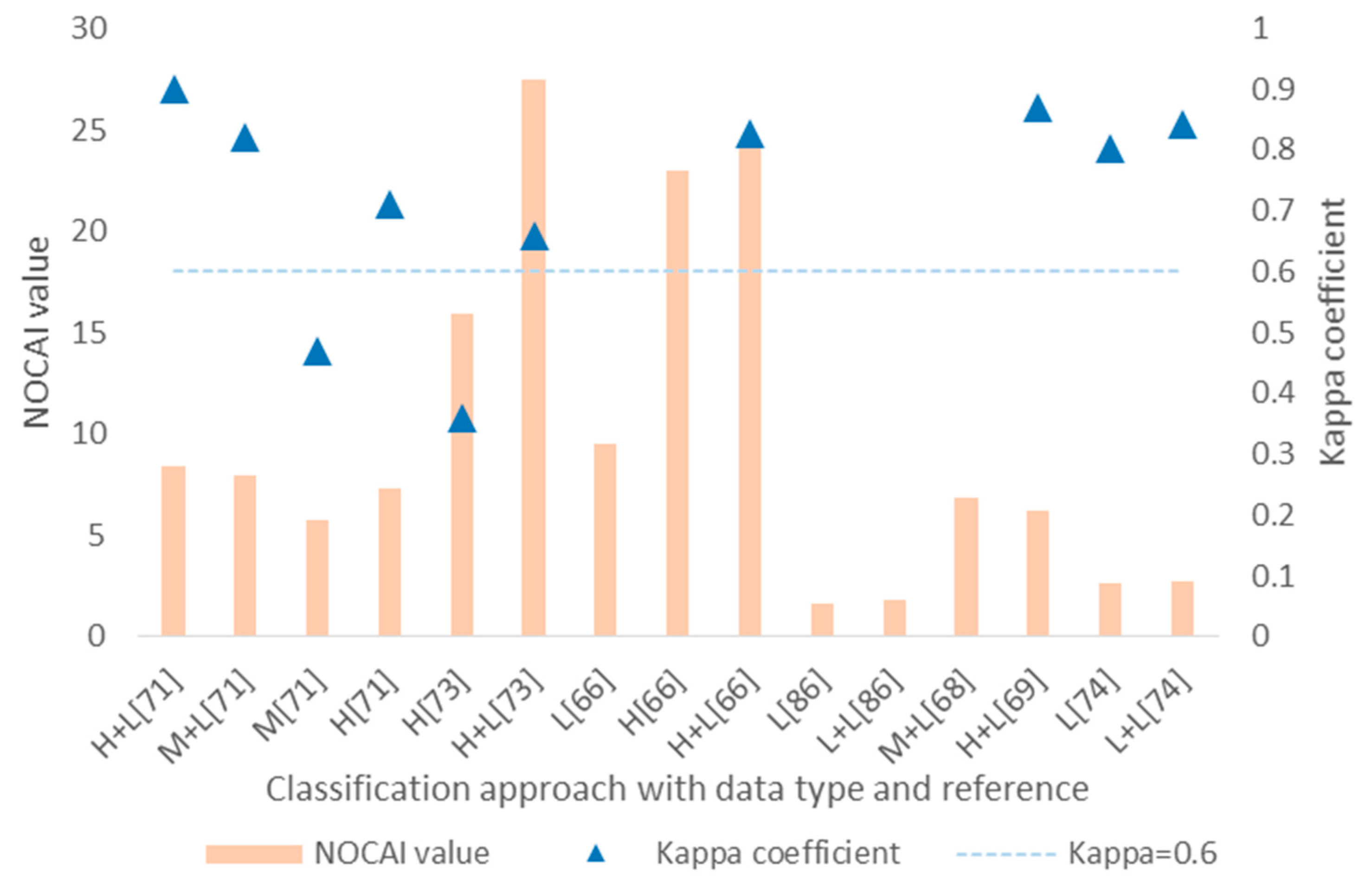

3.2.2. Accuracy Comparison of Urban Tree Species Classification

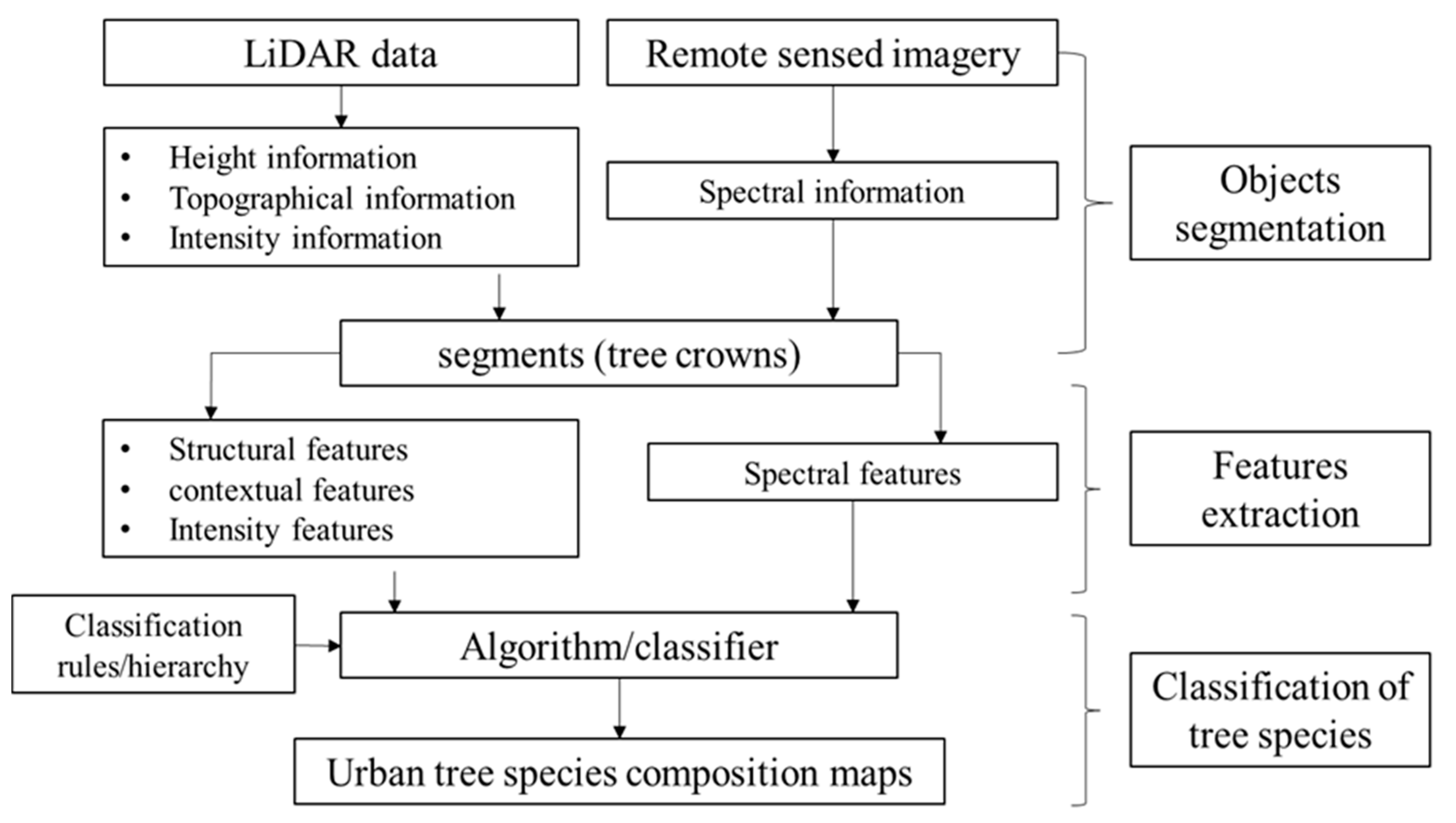
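Accuracy comparisons like the one in this section are usually reported as overall accuracy (OA). OA is the proportion of correctly classified reference samples, which equals the diagonal of a confusion matrix divided by its total. The reference list also cites Cohen's kappa [77], κ = (p_o − p_e) / (1 − p_e). Kappa discounts the agreement p_e that would be expected by chance. The sketch below computes both metrics in pure Python. The function names and the example matrix are hypothetical. The studies compared here may have used different sampling and assessment protocols.

```python
from typing import Sequence

# Hypothetical convention: rows = reference (ground-truth) classes,
# columns = predicted classes, each cell = number of samples.
ConfusionMatrix = Sequence[Sequence[int]]


def overall_accuracy(cm: ConfusionMatrix) -> float:
    """Proportion of samples on the diagonal, i.e. correctly classified."""
    total = sum(sum(row) for row in cm)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return sum(cm[i][i] for i in range(len(cm))) / total


def cohens_kappa(cm: ConfusionMatrix) -> float:
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = overall_accuracy(cm)  # observed agreement
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    # Chance agreement: sum over classes of (reference share * predicted share).
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Toy two-class example (e.g. deciduous vs. coniferous); counts are hypothetical.
    cm = [[45, 5], [10, 40]]
    print(f"OA = {overall_accuracy(cm):.2f}")    # OA = 0.85
    print(f"kappa = {cohens_kappa(cm):.2f}")     # kappa = 0.70
```

In this toy matrix the OA is 0.85. Kappa is lower (0.70) because half of the agreement would be expected by chance alone, given the class proportions.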

3.2.3. A General Workflow of Urban Tree Species Classification by Combining LiDAR Data

3.3. Potential Contributions of LiDAR to Urban Tree Species Classification

3.4. Future Considerations for LiDAR

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Strongina, M. Social and Economic Problems of Urbanization (Survey of the Literature). Probl. Econ. 1974, 17, 23–43.

2. UN Habitat. Cities and Climate Change: Global Report on Human Settlements 2011; Earthscan: London, UK, 2011.

3. Escobedo, F.J.; Nowak, D.J. Spatial heterogeneity and air pollution removal by an urban forest. Landsc. Urban Plan. 2009, 90, 102–110.

4. McCarthy, H.R.; Pataki, D.E. Drivers of variability in water use of native and non-native urban trees in the greater Los Angeles area. Urban Ecosyst. 2010, 13, 393–414.

5. Manning, W.J. Plants in urban ecosystems: Essential role of urban forests in urban metabolism and succession toward sustainability. Int. J. Sustain. Dev. World Ecol. 2008, 15, 362–370.

6. Nowak, D.J. Air pollution removal by Chicago’s urban forest. In Chicago’s Urban Forest Ecosystem: Results of the Chicago Urban Forest Climate Project; McPherson, E.G., Nowak, D.J., Rowntree, R.A., Eds.; USDA Forest Service: Radnor, PA, USA, 1994; pp. 63–81.

7. Beckett, K.P.; Freer-Smith, P.H.; Taylor, G. Urban woodlands: Their role in reducing the effects of particulate pollution. Environ. Pollut. 1998, 99, 347–360.

8. Akbari, H.; Pomerantz, M.; Taha, H. Cool surfaces and shade trees to reduce energy use and improve air quality in urban areas. Sol. Energy 2001, 70, 295–310.

9. Yang, J.; McBride, J.; Zhou, J.; Sun, Z. The urban forest in Beijing and its role in air pollution reduction. Urban For. Urban Green. 2005, 3, 65–78.

10. Beckett, K.P.; Freer-Smith, P.; Taylor, G. Effective tree species for local air-quality management. J. Arboric. 2000, 26, 12–19.

11. Xiao, Q.; McPherson, E.G. Rainfall interception by Santa Monica’s municipal urban forest. Urban Ecosyst. 2002, 6, 291–302.

12. Akbari, H.; Konopacki, S. Calculating energy-saving potentials of heat-island reduction strategies. Energy Policy 2005, 33, 721–756.

13. Kirby, K.R.; Potvin, C. Variation in carbon storage among tree species: Implications for the management of a small-scale carbon sink project. For. Ecol. Manag. 2007, 246, 208–221.

14. Jenkins, J.C.; Chojnacky, D.C.; Heath, L.S.; Birdsey, R.A. National-scale biomass estimators for United States tree species. For. Sci. 2003, 49, 12–35.

15. Duro, D.C.; Coops, N.C.; Wulder, M.A.; Han, T. Development of a large area biodiversity monitoring system driven by remote sensing. Prog. Phys. Geogr. 2007, 31, 235–260.

16. Zhang, K.; Hu, B. Individual Urban Tree Species Classification Using Very High Spatial Resolution Airborne Multi-Spectral Imagery Using Longitudinal Profiles. Remote Sens. 2012, 4, 1741–1757.

17. Lefsky, M.A.; Cohen, W.B.; Parker, G.G.; Harding, D.J. Lidar Remote Sensing for Ecosystem Studies. BioScience 2002, 52, 19–30.

18. Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of studies on tree species classification from remotely sensed data. Remote Sens. Environ. 2016, 186, 64–87.

19. Swain, P.H.; Davis, S.M. Remote Sensing: The Quantitative Approach. IEEE Trans. Pattern Anal. Mach. Intell. 1981, 6, 713–714.

20. Wulder, M. Optical remote-sensing techniques for the assessment of forest inventory and biophysical parameters. Prog. Phys. Geogr. 1998, 22, 449.

21. Heller, R.C.; Doverspike, G.E.; Aldrich, R.C. Identification of Tree Species on Large-Scale Panchromatic and Color Aerial Photographs; Forest Service: Beltsville, MD, USA, 1964; Volume 261.

22. Franklin, S.E.; Wulder, M.A.; Gerylo, G.R. Texture analysis of IKONOS panchromatic data for Douglas-fir forest age class separability in British Columbia. Int. J. Remote Sens. 2001, 22, 2627–2632.

23. Brandtberg, T. Individual tree-based species classification in high spatial resolution aerial images of forests using fuzzy sets. Fuzzy Sets Syst. 2002, 132, 371–387.

24. Xiao, Q.; Ustin, S.L.; McPherson, E.G. Using AVIRIS data and multiple-masking techniques to map urban forest tree species. Int. J. Remote Sens. 2004, 25, 5637–5654.

25. Enderle, D.; Weih, R.C., Jr. Integrating supervised and unsupervised classification methods to develop a more accurate land cover classification. Ark. Acad. Sci. 2005, 59, 65–73.

26. Ke, Y.; Quackenbush, L.J.; Im, J. Synergistic use of QuickBird multispectral imagery and LIDAR data for object-based forest species classification. Remote Sens. Environ. 2010, 114, 1141–1154.

27. De Jong, S.M.; Hornstra, T.; Maas, H.G. An Integrated Spatial and Spectral Approach to the Classification of Mediterranean Land Cover Types: The SSC Method. Int. J. Appl. Earth Obs. Geoinf. 2001, 3, 176–183.

28. Gao, Y.; Mas, J.F. A Comparison of the Performance of Pixel Based and Object Based Classifications over Images with Various Spatial Resolutions. Online J. Earth Sci. 2014, 2, 27–35.

29. Treitz, P.; Howarth, P. Integrating spectral, spatial, and terrain variables for forest ecosystem classification. Photogramm. Eng. Remote Sens. 2000, 66, 305–318.

30. Franklin, S.E. Using spatial cooccurrence texture to increase forest structure and species composition classification accuracy. Photogramm. Eng. Remote Sens. 2001, 67, 849–855.

31. Zhang, C.; Wulder, M.A. Geostatistical and texture analysis of airborne-acquired images used in forest classification. Int. J. Remote Sens. 2004, 25, 859–865.

32. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

33. Opitz, D.; Blundell, S. Object recognition and image segmentation: The Feature Analyst® approach. In Object-Based Image Analysis; Springer: Berlin/Heidelberg, Germany, 2008; pp. 153–167.

34. Blaschke, T.; Lang, S.; Hay, G.J. Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer Science & Business Media: Berlin, Germany, 2008; pp. 2–16.

35. Yu, Q.; Gong, P.; Clinton, N.; Biging, G.; Kelly, M.; Schirokauer, D. Object-based Detailed Vegetation Classification with Airborne High Spatial Resolution Remote Sensing Imagery. Photogramm. Eng. Remote Sens. 2006, 72, 799–811.

36. Hay, G.; Castilla, G. Object-based image analysis: Strengths, weaknesses, opportunities and threats (SWOT). In Proceedings of the 1st International Conference on OBIA: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Salzburg, Austria, 4–5 July 2006; pp. 4–5.

37. Weih, R.C.; Riggan, N.D. Object-based classification vs. Pixel-based classification: Comparative importance of multi-resolution imagery. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Orlando, FL, USA, 6–18 November 2010; p. C7.

38. Brockhaus, J.A.; Khorram, S. A comparison of SPOT and Landsat-TM data for use in conducting inventories of forest resources. Int. J. Remote Sens. 1992, 13, 3035–3043.

39. Rogan, J.; Miller, J. Land-Cover Change Monitoring with Classification Trees Using Landsat TM and Ancillary Data. Photogramm. Eng. Remote Sens. 2003, 69, 793–804.

40. Salovaara, K.J.; Thessler, S.; Malik, R.N.; Tuomisto, H. Classification of Amazonian primary rain forest vegetation using Landsat ETM+ satellite imagery. Remote Sens. Environ. 2005, 97, 39–51.

41. Carleer, A.; Wolff, E. Exploitation of Very High Resolution Satellite Data for Tree Species Identification. Photogramm. Eng. Remote Sens. 2004, 70, 135–140.

42. Johansen, K.; Phinn, S. Mapping Structural Parameters and Species Composition of Riparian Vegetation Using IKONOS and Landsat ETM+ Data in Australian Tropical Savannahs. Photogramm. Eng. Remote Sens. 2006, 72, 71–80.

43. Katoh, M. Classifying tree species in a northern mixed forest using high-resolution IKONOS data. J. For. Res. 2004, 9, 7–14.

44. Buddenbaum, H.; Schlerf, M.; Hill, J. Classification of coniferous tree species and age classes using hyperspectral data and geostatistical methods. Int. J. Remote Sens. 2005, 26, 5453–5465.

45. Rocchini, D.; Balkenhol, N.; Carter, G.A.; Foody, G.M.; Gillespie, T.W.; He, K.S.; Kark, S.; Levin, N.; Lucas, K.; Luoto, M. Remotely sensed spectral heterogeneity as a proxy of species diversity: Recent advances and open challenges. Ecol. Inform. 2010, 5, 318–329.

46. Clark, M.L.; Roberts, D.A.; Clark, D.B. Hyperspectral discrimination of tropical rain forest tree species at leaf to crown scales. Remote Sens. Environ. 2005, 96, 375–398.

47. Gong, P.; Howarth, P.J. The use of structural information for improving land-cover classification accuracies at the rural-urban fringe. Photogramm. Eng. Remote Sens. 1990, 56, 67–73.

48. Gong, P.; Howarth, P.J. An Assessment of Some Factors Influencing Multispectral Land-Cover Classification. Photogramm. Eng. Remote Sens. 1990, 56, 597–603.

49. Sugumaran, R.; Pavuluri, M.K.; Zerr, D. The use of high-resolution imagery for identification of urban climax forest species using traditional and rule-based classification approach. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1933–1939.

50. Pu, R.; Landry, S. A comparative analysis of high spatial resolution IKONOS and WorldView-2 imagery for mapping urban tree species. Remote Sens. Environ. 2012, 124, 516–533.

51. Alonzo, M.; Roth, K.; Roberts, D. Identifying Santa Barbara’s urban tree species from AVIRIS imagery using canonical discriminant analysis. Remote Sens. Lett. 2013, 4, 513–521.

52. Hughes, G. On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 1968, 14, 55–63.

53. Boschetti, M.; Boschetti, L.; Oliveri, S.; Casati, L.; Canova, I. Tree species mapping with Airborne hyper-spectral MIVIS data: The Ticino Park study case. Int. J. Remote Sens. 2007, 28, 1251–1261.

54. Bachman, C.G. Laser Radar Systems and Techniques; Artech House Inc.: Dedham, MA, USA, 1979; pp. 57–58.

55. Geospatial Modeling & Visualization. Available online: http://gmv.cast.uark.edu/scanning-2/airborne-laser-scanning/ (accessed on 11 September 2018).

56. Guo, Q.H.; Liu, J.; Tao, S.L.; Xue, B.L.; Li, L.; Xu, G.; Li, W.; Wu, F.; Li, Y.; Chen, L.H. Perspectives and prospects of LiDAR in forest ecosystem monitoring and modeling. Chin. Sci. Bull. 2014, 59, 459. (In Chinese)

57. Lim, K.; Treitz, P.; Wulder, M.; St-Onge, B.; Flood, M. LiDAR remote sensing of forest structure. Prog. Phys. Geogr. 2003, 27, 88–106.

58. Reitberger, J.; Krzystek, P.; Stilla, U. Analysis of full waveform LIDAR data for the classification of deciduous and coniferous trees. Int. J. Remote Sens. 2008, 29, 1407–1431.

59. Drake, J.B.; Dubayah, R.O.; Clark, D.B.; Knox, R.G.; Blair, J.B.; Hofton, M.A.; Chazdon, R.L.; Weishampel, J.F.; Prince, S. Estimation of tropical forest structural characteristics using large-footprint lidar. Remote Sens. Environ. 2002, 79, 305–319.

60. Drake, J.B.; Knox, R.G.; Dubayah, R.O.; Clark, D.B.; Condit, R.; Blair, J.B.; Hofton, M. Above-ground biomass estimation in closed canopy Neotropical forests using lidar remote sensing: Factors affecting the generality of relationships. Glob. Ecol. Biogeogr. 2010, 12, 147–159.

61. Lefsky, M.A.; Cohen, W.B.; Acker, S.A.; Parker, G.G.; Spies, T.A.; Harding, D. Lidar Remote Sensing of the Canopy Structure and Biophysical Properties of Douglas-Fir Western Hemlock Forests. Remote Sens. Environ. 1999, 70, 339–361.

62. Maltamo, M.; Packalen, P.; Yu, X.; Eerikainen, K.; Hyyppa, J.; Pitkanen, J. Identifying and quantifying structural characteristics of heterogeneous boreal forests using laser scanner data. For. Ecol. Manag. 2005, 216, 41–50.

63. Dalponte, M.; Bruzzone, L.; Gianelle, D. Tree species classification in the Southern Alps based on the fusion of very high geometrical resolution multispectral/hyperspectral images and LiDAR data. Remote Sens. Environ. 2012, 123, 258–270.

64. Hollaus, M.; Mücke, W.; Höfle, B.; Dorigo, W.; Pfeifer, N.; Wagner, W.; Bauerhansl, C.; Regner, B. Tree species classification based on full-waveform airborne laser scanning data. In Proceedings of the Silvilaser, College Station, TX, USA, 14–16 October 2009; pp. 54–62.

65. Brandtberg, T. Classifying individual tree species under leaf-off and leaf-on conditions using airborne lidar. ISPRS J. Photogramm. Remote Sens. 2007, 61, 325–340.

66. Alonzo, M.; Bookhagen, B.; Roberts, D.A. Urban tree species mapping using hyperspectral and lidar data fusion. Remote Sens. Environ. 2014, 148, 70–83.

67. Pohl, C.; Van Genderen, J.L. Multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.

68. Tigges, J.; Lakes, T.; Hostert, P. Urban vegetation classification: Benefits of multitemporal RapidEye satellite data. Remote Sens. Environ. 2013, 136, 66–75.

69. Zhang, Z.; Kazakova, A.; Moskal, L.; Styers, D. Object-Based Tree Species Classification in Urban Ecosystems Using LiDAR and Hyperspectral Data. Forests 2016, 7, 122.

70. Holmgren, J.; Persson, Å.; Söderman, U. Species identification of individual trees by combining high resolution LiDAR data with multi-spectral images. Int. J. Remote Sens. 2008, 29, 1537–1552.

71. Sugumaran, R.; Voss, M. Object-Oriented Classification of LIDAR-Fused Hyperspectral Imagery for Tree Species Identification in an Urban Environment. In Proceedings of the Urban Remote Sensing Joint Event, Paris, France, 11–13 April 2007; pp. 1–6.

72. Voss, M.; Sugumaran, R. Seasonal Effect on Tree Species Classification in an Urban Environment Using Hyperspectral Data, LiDAR, and an Object-Oriented Approach. Sensors 2008, 8, 3020–3036.

73. Zhang, C.; Qiu, F. Mapping Individual Tree Species in an Urban Forest Using Airborne Lidar Data and Hyperspectral Imagery. Photogramm. Eng. Remote Sens. 2012, 78, 1079–1087.

74. Korpela, I.; Ørka, H.O.; Maltamo, M.; Tokola, T.; Hyyppä, J. Tree species classification using airborne LiDAR: Effects of stand and tree parameters, downsizing of training set, intensity normalization, and sensor type. Silva Fenn. 2010, 44, 319–339.

75. Congalton, R.G. A Quantitative Method to Test for Consistency and Correctness in Photointerpretation. Photogramm. Eng. Remote Sens. 1983, 49, 69–74.

76. Liu, X.H.; Skidmore, A.K.; Van Oosten, H. Integration of classification methods for improvement of land-cover map accuracy. ISPRS J. Photogramm. Remote Sens. 2002, 56, 257–268.

77. Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

78. Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

79. Strijbos, J.W.; Martens, R.; Prins, F.; Jochems, W. Content analysis: What are they talking about? Comput. Educ. 2006, 46, 29–48.

80. Viera, A.J.; Garrett, J.M. Understanding interobserver agreement: The kappa statistic. Fam. Med. 2005, 37, 360–363.

81. Shi, Y.; Skidmore, A.K.; Wang, T.; Holzwarth, S.; Heiden, U.; Pinnel, N.; Zhu, X.; Heurich, M. Tree species classification using plant functional traits from LiDAR and hyperspectral data. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 207–219.

82. Ghosh, A.; Fassnacht, F.E.; Joshi, P.K.; Koch, B. A framework for mapping tree species combining hyperspectral and LiDAR data: Role of selected classifiers and sensor across three spatial scales. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 49–63.

83. Holmgren, J.; Persson, Å. Identifying species of individual trees using airborne laser scanner. Remote Sens. Environ. 2004, 90, 415–423.

84. Liu, L.; Coops, N.C.; Aven, N.W.; Pang, Y. Mapping urban tree species using integrated airborne hyperspectral and LiDAR remote sensing data. Remote Sens. Environ. 2017, 200, 170–182.

85. Currie, D.J.; Paquin, V. Large-scale biogeographical patterns of species richness of trees. Nature 1987, 329, 326–327.

86. Key, T.; Warner, T.A.; McGraw, J.B.; Fajvan, M.A. A Comparison of Multispectral and Multitemporal Information in High Spatial Resolution Imagery for Classification of Individual Tree Species in a Temperate Hardwood Forest. Remote Sens. Environ. 2001, 75, 100–112.

87. Kim, S.Y.; McGaughey, R.J.; Andersen, H.E.; Schreuder, G. Tree species differentiation using intensity data derived from leaf-on and leaf-off airborne laser scanner data. Remote Sens. Environ. 2009, 113, 1575–1586.

88. Jones, T.G.; Coops, N.C.; Sharma, T. Assessing the utility of airborne hyperspectral and LiDAR data for species distribution mapping in the coastal Pacific Northwest, Canada. Remote Sens. Environ. 2010, 114, 2841–2852.

89. Korpela, I.; Ørka, H.O.; Hyyppä, J.; Heikkinen, V.; Tokola, T. Range and AGC normalization in airborne discrete-return LiDAR intensity data for forest canopies. ISPRS J. Photogramm. Remote Sens. 2010, 65, 369–379.

90. Yu, X.; Hyyppä, J.; Litkey, P.; Kaartinen, H.; Vastaranta, M.; Holopainen, M. Single-Sensor Solution to Tree Species Classification Using Multispectral Airborne Laser Scanning. Remote Sens. 2017, 9, 108.

91. Babcock, C.; Finley, A.O.; Cook, B.D.; Weiskittel, A.; Woodall, C.W. Modeling forest biomass and growth: Coupling long-term inventory and LiDAR data. Remote Sens. Environ. 2016, 182, 1–12.

92. Dubayah, R.; Goetz, S.J.; Blair, J.B.; Fatoyinbo, T.E.; Hansen, M.; Healey, S.P.; Hofton, M.A.; Hurtt, G.C.; Kellner, J.; Luthcke, S.B. The Global Ecosystem Dynamics Investigation. In Proceedings of the AGU Fall Meeting, San Francisco, CA, USA, 15–19 December 2014; p. 07.

93. Neeck, S.P.; Volz, S.M. NASA Earth Science Flight Program. In Proceedings of the SPIE Sensors, Systems, and Next-Generation Satellites XVI, Edinburgh, UK, 24–27 September 2012; p. 85330E.

94. Fu, B.; Wang, Y.; Campbell, A.; Li, Y.; Zhang, B.; Yin, S.; Xing, Z.; Jin, X. Comparison of object-based and pixel-based Random Forest algorithm for wetland vegetation mapping using high spatial resolution GF-1 and SAR data. Ecol. Indic. 2017, 73, 105–117.

95. Qiao, X.; Li, L.L.; Li, D.; Gan, Y.L.; Hou, A.Y. Application of Machine Learning in Urban Greenery Land Cover Extraction. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 1409–1413.

96. Wallace, L.; Lucieer, A.; Turner, D.; Watson, C. Error assessment and mitigation for hyper-temporal UAV-borne LiDAR surveys of forest inventory. In Proceedings of the 11th International Conference on LiDAR Applications for Assessing Forest Ecosystems, Tasmania, Australia, 16–20 October 2011; pp. 1–13.

97. Feng, Q.; Liu, J.; Gong, J. UAV Remote Sensing for Urban Vegetation Mapping Using Random Forest and Texture Analysis. Remote Sens. 2015, 7, 1074–1094.

98. Wallace, L. Assessing the stability of canopy maps produced from UAV-LiDAR data. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Melbourne, Australia, 21–26 July 2013; Volume 1, pp. 3879–3882.

| Contribution of LiDAR data: image segmentation | Contribution of LiDAR data: feature extraction | Overall accuracy with LiDAR | Overall accuracy without LiDAR | Citation |
|---|---|---|---|---|
| LiDAR-derived height models as a threshold for image segmentation | - | 85.5% | - | [68] |
| LiDAR-derived CHM to delineate tree crowns | - | 88.9% | - | [69] |
| Crown segments derived from LiDAR point cloud data | Height distribution; canopy shape; proportion of pulse types; intensity of returns | 96% | 91% | [70] |
| Segmentation based on LiDAR-derived layers: DEM, DSM, height and intensity | Topography; height; intensity | 94% | 89% | [26] |
| - | Height features | 83% | 74.1% | [63] |
| Segments created using LiDAR layers (elevation and intensity); LiDAR-derived DEM as reference for geometric correction | Classification rules based on elevation | 81% | 93% | [71] |
| Individual segments isolated on the LiDAR-derived CHM, then overlaid on the AVIRIS image | Structural features: crown heights, crown widths, intensity, crown porosity | 83.4% | 79.2% | [66] |
| DTM derivation; individual tree detection; crown delineation | - | 68.8% | - | [73] |
| Treetop positioning; crown segments based on LiDAR point cloud data | Structural features: heights, intensity | 90.8% | - | [74] |
| Non-tree area mask derived from LiDAR vertical structure; crown outlining and treetop positioning from the LiDAR CHM | Crown shape; laser point distribution; laser return intensity | 51.1% | 70% | [84] |
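Several rows of the table above build on the same LiDAR-derived steps. The first is a canopy height model (CHM), obtained as the per-cell difference between a digital surface model (DSM) and a digital terrain model (DTM). A height threshold then masks out non-tree cells. The last steps are treetop positioning and crown delineation. The sketch below walks through these steps on a toy raster in pure Python. It is a minimal illustration, not the procedure of any cited study. The function names, the 2 m height threshold and the 3 × 3 local-maximum window are assumptions chosen for illustration. The nearest-treetop crown assignment is also an assumption; it is a deliberate simplification of the watershed or region-growing methods more commonly used.

```python
from typing import List, Sequence, Tuple

Grid = List[List[float]]


def canopy_height_model(dsm: Sequence[Sequence[float]],
                        dtm: Sequence[Sequence[float]]) -> Grid:
    """CHM = DSM - DTM per cell; negative values (noise below ground) are clipped to 0."""
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must have the same shape")
    return [[max(s - t, 0.0) for s, t in zip(srow, trow)]
            for srow, trow in zip(dsm, dtm)]


def detect_treetops(chm: Grid, min_height: float = 2.0,
                    radius: int = 1) -> List[Tuple[int, int]]:
    """Treetops as local maxima of the CHM (hypothetical helper).

    A cell qualifies if it reaches `min_height` (the height threshold that
    masks ground, shrubs and other low objects) and no cell in its
    (2*radius+1) x (2*radius+1) window is taller. Flat-topped crowns
    (ties) can yield several adjacent treetops.
    """
    rows, cols = len(chm), len(chm[0])
    tops = []
    for i in range(rows):
        for j in range(cols):
            h = chm[i][j]
            if h < min_height:
                continue
            window_max = max(
                chm[y][x]
                for y in range(max(0, i - radius), min(rows, i + radius + 1))
                for x in range(max(0, j - radius), min(cols, j + radius + 1))
            )
            if h >= window_max:
                tops.append((i, j))
    return tops


def assign_crowns(chm: Grid, tops: List[Tuple[int, int]],
                  min_height: float = 2.0) -> List[List[int]]:
    """Label each canopy cell with the index of its nearest treetop (-1 = not canopy).

    Nearest-treetop (Voronoi-style) partitioning is a simplification chosen
    for brevity; ties go to the treetop listed first.
    """
    labels = [[-1] * len(row) for row in chm]
    if not tops:
        return labels
    for i, row in enumerate(chm):
        for j, h in enumerate(row):
            if h >= min_height:
                dists = [(ty - i) ** 2 + (tx - j) ** 2 for ty, tx in tops]
                labels[i][j] = dists.index(min(dists))
    return labels


if __name__ == "__main__":
    # Hypothetical 5 x 5 rasters in metres: flat ground at 10 m with two
    # trees whose crowns reach 8 m and 10 m above ground.
    dsm = [
        [10, 10, 10, 10, 10],
        [10, 18, 14, 10, 10],
        [10, 14, 12, 15, 10],
        [10, 10, 13, 20, 13],
        [10, 10, 10, 12, 10],
    ]
    dtm = [[10] * 5 for _ in range(5)]
    chm = canopy_height_model(dsm, dtm)
    tops = detect_treetops(chm)
    print(tops)  # [(1, 1), (3, 3)]
    for row in assign_crowns(chm, tops):
        print(row)
```

In a real workflow, the resulting crown segments would then carry the structural features listed in the table (height, intensity, crown shape). They would also be overlaid on the optical imagery so that spectral features can be extracted per crown before classification.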

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, K.; Wang, T.; Liu, X. A Review: Individual Tree Species Classification Using Integrated Airborne LiDAR and Optical Imagery with a Focus on the Urban Environment. Forests 2019, 10, 1. https://doi.org/10.3390/f10010001
