1. Introduction

A wide range of dynamical systems in ecology [1], power systems [2], social networks [3], epidemics [4], and other areas can be seen as groups of interacting components. The interaction patterns among individual components give rise to complex dynamic behaviors at the component level, while also inducing emergent complexity at the system level. The classical approach to modeling interactions between individuals is through complex networks [5]. In practice, we can only access component observations through sensors, and the underlying interactions remain a black box. However, these unknown interactions, i.e., the network structure, are of great importance to the dynamic evolution. For example, identifying synaptic adjacency relationships between neurons can reveal abnormal discharge propagation pathways in epileptic seizures [6]. Accurately identifying physical adjacency relationships among generator nodes is critical for predicting cascading failures. Likewise, identifying adjacency relationships in social contact networks enables precise prediction of virus transmission hot spots [7]. Thus arises the problem of network structure inference in nonlinear dynamical systems.

Classic structure identification methods primarily rely on observations to reconstruct network topology. This approach fails to fully exploit the inherent physical properties of the underlying dynamical systems. In fact, the dynamical evolution process embodies substantial physical information, including but not limited to initial conditions, boundary conditions, and the governing equations themselves. Moreover, the numerical solvers of dynamical evolution are intrinsically coupled with network structure, where each iteration can be viewed as an interaction among initial conditions, network topology, and individual dynamics. Certain numerical solvers inherently generate physical information during iterative computations, such as automatic differentiation of parameters. However, such critical information remains underutilized in traditional identification paradigms.

The advancement of artificial intelligence algorithms has given rise to diverse methodologies capable of exploiting physical system information from multiple perspectives [8]. First, regarding observational data, whereas classic approaches require extensive sampling to predict short-term system states, contemporary neural network-based methods demand significantly fewer samples while aiming for extended temporal prediction horizons [9]. Second, from the perspective of solver interaction, parameter estimation methods that interact with the solver have been developed for individual physical oscillators [10]. However, for network structure identification in systems composed of multiple oscillators, the number of unknown parameters grows from a fixed number to one that is quadratic in the number of oscillators, rendering solver-interactive network structure identification exceptionally challenging. Third, from the perspective of physical information, Physics-Informed Neural Networks (PINNs) have emerged in recent years [11]. These networks embed physical constraints into the loss function, thereby combining the learning capacity of neural networks with physical principles to enhance learning efficiency and identification accuracy. However, in the context of network structure identification, PINNs face challenges similar to those encountered in solver-interactive methods. How to extend their advantages in single-oscillator parameter identification to network topology inference remains an open challenge.

In response to these challenges, we propose an integrated physical information-based solver-interactive network structure reconstruction framework (PISI). Specifically, our framework employs a physical information-based graph neural network (PIGNN) as the foundational component and embeds the PIGNN into each solver iteration. By leveraging automatic differentiation (AD) information generated during the solving process, the proposed framework achieves end-to-end network structure learning. We validate the effectiveness of the PISI method in state prediction and structure reconstruction using a Runge–Kutta (RK) solver for two Kuramoto systems. The primary innovations and contributions of this research are outlined as follows.

The proposed PISI is solver-interactive, enabling simultaneous estimation of network topology and system states.

By integrating multiple information sources, PISI dramatically reduces the sample size required for high network structure identification accuracy.

The proposed PISI method extends single-node parameter identification to multi-agent system structure reconstruction by leveraging the interaction between graph neural networks and solvers.

The rest of this study is organized as follows.

Section 2 reviews related works in three aspects.

Section 3 describes the methodology module of this study.

Section 4 presents the results of this study and discusses the state of the art of the methodology.

Section 5 concludes this study.

2. Related Work

In this section, we present research related to our work in three aspects: (1) graph neural network (GNN)-based structure reconstruction, (2) PINN-based parameter estimation of nonlinear dynamics, and (3) solver-interactivity parameter estimation of single oscillators.

2.1. GNN Based Network Structure Reconstruction

GNNs were initially designed to process data with known graph structures by employing message-passing mechanisms to predict or estimate node states [12]. Subsequent research has gradually expanded to inferring or reconstructing unknown network structures from observed data (such as node states or interaction behaviors), forming several important branches in this direction [13]. Thomas et al. [14] adopted a variational auto-encoder in which the latent code represents the underlying interaction graph and the reconstruction is based on graph neural networks. Zang et al. [15] proposed combining ordinary differential equation systems (ODEs) and GNNs to learn continuous-time dynamics on complex networks in a data-driven manner. Rakshit et al. [16] presented DyRep, a modeling framework for dynamic graphs that posits representation learning as a latent mediation process bridging two observed processes, namely the dynamics of the network (realized as topological evolution) and the dynamics on the network (realized as activities between nodes). Zhang et al. [17] proposed an LLM-based robust graph structure inference framework, LLM4RGNN, which distills the inference capabilities of GPT-4 into a local LLM for identifying malicious edges and an LM-based edge predictor for finding missing important edges, so as to recover a robust graph structure. Although GNNs are capable of handling structure estimation and state prediction tasks, they lack the physics-informed mechanisms of our PISI, which results in a high demand for training data. For example, in [14], more than 50,000 samples are needed to reconstruct a system consisting of five oscillators.

2.2. PINN Based Parameter Estimation of Nonlinear Dynamics

PINNs integrate neural networks with physical equations by using automatic differentiation to compute PDE residuals, which are incorporated into the loss function. Because the neural network can be trained solely on the PDE constraints, the use of physical information removes the need for large training datasets [18]. Raissi et al. devised a new family of data-efficient spatio-temporal function approximators for continuous systems; for discrete systems, the model allows the use of arbitrarily accurate implicit Runge–Kutta time-stepping schemes with an unlimited number of stages. Ji et al. [19] investigated the performance of PINNs on stiff chemical kinetic problems governed by stiff ordinary differential equations. Their results elucidate the challenges of applying PINNs to stiff ODE systems. However, compared to our PISI, the PINN loss function faces difficulties when applied to network structure reconstruction. The aforementioned applications of PINNs have primarily focused on individual systems, with insufficient research on network structure identification.

2.3. Solver-Interactivity Parameter Estimation of Nonlinear Dynamics

In both dynamical evolution and inverse-problem parameter estimation, differential equation solvers for dynamical systems play a crucial role [20]. In all of the literature reviewed in Section 2.1 and Section 2.2 above, iterative solvers have been consistently employed. Therefore, integrating parameter estimation and structure reconstruction with solver iterations is of critical importance. Kiwon et al. [21] integrated the solver into the training loop and thereby allowed the model to interact with the PDE during training. Philipp et al. [22] presented a hierarchical predictor-corrector scheme enabling neural networks to learn to understand and control complex nonlinear physical systems over long time frames, in which the predictor network plans optimal trajectories and the control network infers the corresponding control parameters. Drawing inspiration from classical numerical integration rules for differential equations, Zhai et al. [10] proposed a new recurrent neural network architecture that takes PINN cells as its basic integration units to introduce prior physics-based biases. Although existing research has explored the integration of solver-in-the-loop approaches with PINNs, these methods, unlike our PISI, do not sufficiently account for graph properties in network structure identification tasks, which hinders their ability to address such problems effectively.

3. Methodology

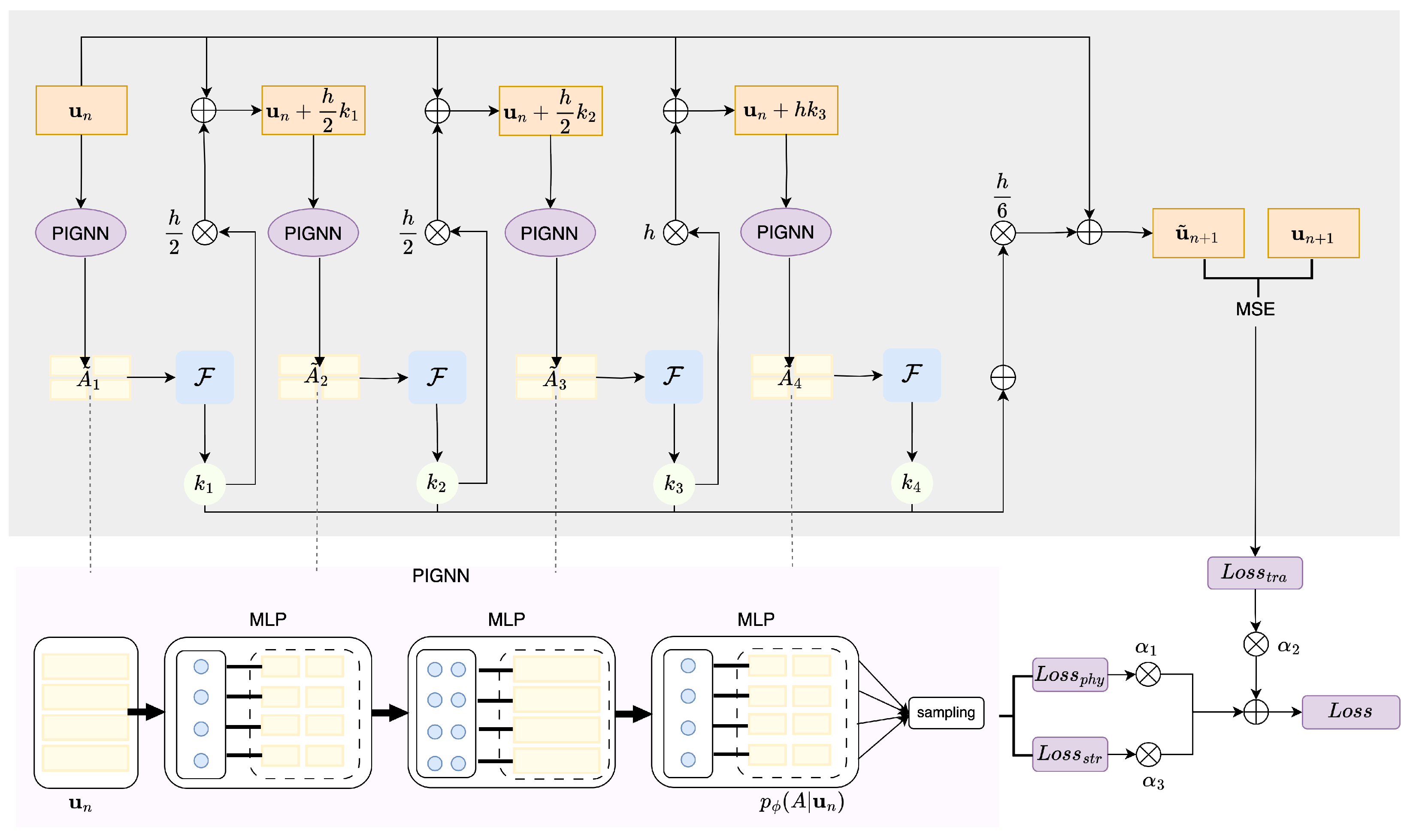

In this study, we propose a unified neural network framework that integrates graph, physical, and solver information, aiming to reconstruct the network structure of nonlinear dynamical systems while simultaneously predicting the system state. The framework comprises two modules: a physical information-based GNN and an iterative solver. Together, they form an end-to-end network structure reconstruction solution. The method employs the fourth-order Runge–Kutta (RK4) method for differential equation solving as its backbone; RK4 serves as an illustrative example, and other differential equation solvers are equally applicable. The PIGNN takes the observation data as input and outputs the edge type posterior. A sampling module then yields the estimated network structure, which compensates for the unknown terms in the dynamical system and thereby enables the operation of the RK4 solver. The PIGNN and RK4 modules interact as follows. At each stage of RK4, the PIGNN provides the structure estimate and receives the stage node state to obtain the physical loss. At the end of each RK4 iteration, the state loss is provided to the PIGNN, thereby achieving end-to-end training across all four PIGNNs involved.

3.1. Modeling of the Network Structure Reconstruction Problem

Consider a system composed of

n coupled oscillators, captured by parametrized and nonlinear differential equations of the general form

where

consists of solutions

defined in Banach space

, and

denotes the time derivative term of each oscillator.

is a parametrized nonlinear operator, and

represents system coupling strength parameters, where

is a discrete categorical variable representing the edge type between oscillators

and

.

Without loss of generality, for each oscillator

i, the nonlinear operator

consists of three parts

with

describing the intrinsic oscillatory linear properties,

describing the phase interaction between oscillators, and

describing the spatial diffusion/dissipation of the phase field. This decomposition represents many scenarios in mathematical physics, including the extended Kuramoto model describing power grid frequency synchronization [23], the XY model [24], and the theta-gamma coupled oscillation model [25] for brain rhythm synchronization [26]. As an important model that we will focus on in the following discussion, the Kuramoto model corresponds to the case when

,

,

, with

, and

K as the time-independent constant. The first problem we address is how to couple the solver with the network inference procedure so as to improve the state prediction accuracy. The second problem is how to infer the coupling strength, i.e., the network structure A, from as few observations as possible.
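For orientation, the classical first-order Kuramoto model on a network is commonly written in the textbook form below. The symbols are assumptions chosen here for illustration and may differ from the notation used elsewhere in this section: $\theta_i$ is the phase of oscillator $i$, $\omega_i$ its natural frequency, $K$ the constant coupling strength, and $A_{ij}$ the adjacency entry between oscillators $i$ and $j$.

```latex
\dot{\theta}_i = \omega_i + K \sum_{j=1}^{n} A_{ij} \sin\left(\theta_j - \theta_i\right),
\qquad i = 1, \dots, n .
```

Read against the three-part decomposition, the natural frequency would be the intrinsic term, the sine coupling would be the phase interaction that carries the unknown structure A, and the diffusion term would be absent.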

3.2. Runge–Kutta Methods

The inputs consist of trajectories of the N oscillators. We denote the observed trajectories of the oscillator at time t as . All observed data for N oscillators from time are denoted as , with the as all trajectories of N oscillator at time t. We denote the trajectories of node as .

To solve the two problems considered, we first introduce one of the most widely used families of differential equation solvers. The Runge–Kutta method can advance temporal solutions for non-stiff ordinary differential equations and semi-discretized partial differential equations. Its flexible and extensible framework accommodates both explicit and implicit formulations for temporal discretization. RK4 is self-starting and eliminates the dependency on historical data required by multi-step methods. Runge–Kutta methods are linear extrapolations based on Taylor series expansion. Since

A is unknown during the iteration, we explicitly represent

A in

. Next, we employ the fourth-order Runge–Kutta (RK4) method for temporal discretization as an illustrative example. For time step

, from time

to

, the solution can be written as

where
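For reference, the classical RK4 scheme has the standard textbook form below, with one update equation and four stage equations. The notation is an assumption made here for illustration: $h$ is the time step, $x_n$ the state at time $t_n$, $f$ the vector field with the unknown structure $A$ written as an explicit argument, and $k_1, \dots, k_4$ the stage slopes.

```latex
\begin{aligned}
x_{n+1} &= x_n + \frac{h}{6}\left(k_1 + 2k_2 + 2k_3 + k_4\right), \\
k_1 &= f\left(t_n,\; x_n;\; A\right), \\
k_2 &= f\left(t_n + \tfrac{h}{2},\; x_n + \tfrac{h}{2}\, k_1;\; A\right), \\
k_3 &= f\left(t_n + \tfrac{h}{2},\; x_n + \tfrac{h}{2}\, k_2;\; A\right), \\
k_4 &= f\left(t_n + h,\; x_n + h\, k_3;\; A\right).
\end{aligned}
```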

3.3. Design of PISI

Note that, within the RK4 procedure, the discretization of the phase interaction encodes the underlying reconstructed A and hence the inferred vector field. During the RK4 procedure, the unknown vector field is evaluated four times at distinct intermediate stages. Higher-order Runge–Kutta methods require progressively more evaluations of the vector field. Thus, the key to structure reconstruction and state prediction lies in inferring this unknown vector field. Our basic idea is to approximate it with a graph-based neural network during the RK4 procedure. The designed neural network is invoked four times during a single RK4 iteration, with its parameters shared across all calls. In designing the neural network, we prioritize three key considerations. First, the architecture should effectively utilize intermediate RK iteration data and physical information. Second, the PISI should effectively incorporate the graph attributes of the matrix A to be identified. Finally, the network’s input and output data should remain compatible with the RK4 iterative procedure.
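The four evaluations per step can be made concrete with a minimal sketch. It is not the PISI implementation: `rk4_step`, `CountingField`, and the toy field dx/dt = -x are hypothetical names and choices used only to show that one RK4 step calls the same vector-field object four times, which is where a parameter-shared network would be invoked.

```python
import math


def rk4_step(f, t, x, h):
    """Advance x by one classical RK4 step of size h for dx/dt = f(t, x)."""
    k1 = f(t, x)
    k2 = f(t + h / 2, x + h / 2 * k1)
    k3 = f(t + h / 2, x + h / 2 * k2)
    k4 = f(t + h, x + h * k3)
    return x + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


class CountingField:
    """Wrap a vector field and count how often it is evaluated."""

    def __init__(self, f):
        self.f = f
        self.calls = 0

    def __call__(self, t, x):
        self.calls += 1
        return self.f(t, x)


# Toy field with known solution x(t) = exp(-t); stands in for the learned field.
field = CountingField(lambda t, x: -x)
x1 = rk4_step(field, 0.0, 1.0, 0.01)

print("vector-field evaluations in one step:", field.calls)
print("error against exp(-h):", abs(x1 - math.exp(-0.01)))
```

Because the same object is called at every stage, any parameters it holds are shared across the four calls by construction.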

3.3.1. The Physical Information-Based Graph Neural Network

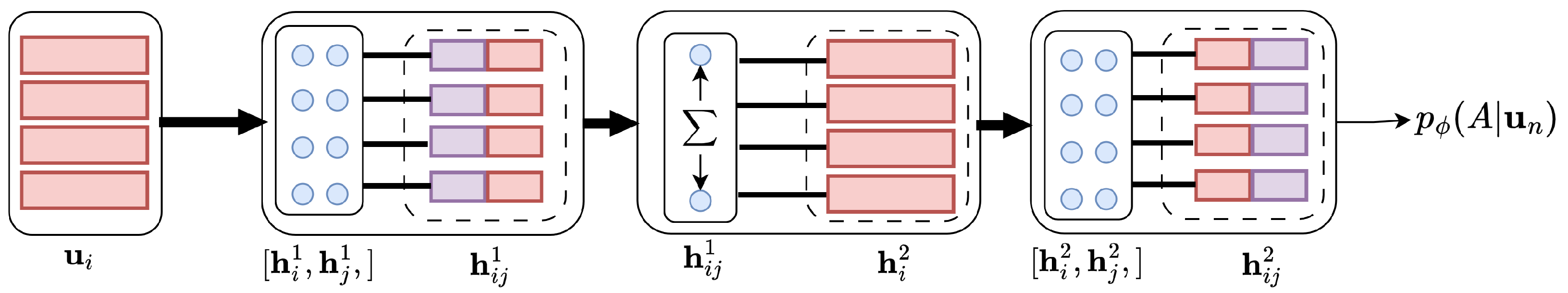

Overall, we adopt the graph neural network structure from [14] but redesign the loss function to satisfy the aforementioned three requirements. The PIGNN supports the RK4 procedure by predicting the interaction graph from the given trajectories.

We first describe the graph neural network part of the PIGNN and then present how physical information is incorporated into the traditional GNN. The PIGNN outputs an edge type posterior, with parameters shared across the four PIGNNs, and returns a factorized distribution of A.

We briefly introduce GNNs, which operate directly on graph-structured data by passing local messages [27]. We define a single node-to-node message passing operation in a GNN as follows:

in which the

and

are the embedding of node

i and edge between nodes

i and

j. The function

denotes a neural network, such as an MLP [28] or a CNN [29], with trainable parameters. Equation (8) enables iterative bidirectional mapping between edge and node representations through successive message-passing operations.
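For reference, the node-to-node message passing of the NRI model [14], from which the GNN structure is adopted, is usually written as a node-to-edge step followed by an edge-to-node step. The symbols below follow that formulation and are an assumption about the notation used here: $h_i^l$ is the embedding of node $i$ in layer $l$, $h_{(i,j)}^l$ the embedding of the edge between nodes $i$ and $j$, $x_{(i,j)}$ and $x_j$ optional input features, $\mathcal{N}_j$ the set of nodes sending to $j$, and $f_e$, $f_v$ the edge and node networks.

```latex
\begin{aligned}
v \to e:\quad & h_{(i,j)}^{l} = f_e^{l}\left(\left[h_i^{l},\; h_j^{l},\; x_{(i,j)}\right]\right), \\
e \to v:\quad & h_j^{l+1} = f_v^{l}\left(\left[\textstyle\sum_{i \in \mathcal{N}_j} h_{(i,j)}^{l},\; x_j\right]\right).
\end{aligned}
```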

Adopting the GNN structure from [14], we design a message-passing procedure consisting of two node-to-edge passes, as shown in Figure 1: the first encodes the information of local node pairs, and the second encodes global information relevant to the considered node pair. Specifically, starting from a fully connected graph without self-loops, the two node-to-edge message passes of the graph neural network are as follows:

Then, from the global feature, we can calculate the edge type posterior.
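The two node-to-edge passes can be sketched in a few lines of NumPy. This is an illustrative stand-in rather than the trained PIGNN: the single-layer `mlp` helper with fixed random weights, the sizes `N`, `T`, `H`, `K`, and the variable names are all assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)


def mlp(in_dim, out_dim):
    """One-layer stand-in for an MLP with fixed random weights (illustrative only)."""
    W = rng.normal(scale=0.5, size=(in_dim, out_dim))
    return lambda z: np.tanh(z @ W)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


N, T, H, K = 5, 49, 8, 2           # nodes, time steps, hidden size, edge types
x = rng.normal(size=(N, T))        # one scalar trajectory per node

# All ordered pairs (i, j) with i != j: fully connected graph without self-loops.
send, recv = np.where(~np.eye(N, dtype=bool))

f_emb, f_e1, f_v1 = mlp(T, H), mlp(2 * H, H), mlp(H, H)
W_out = rng.normal(scale=0.5, size=(2 * H, K))

h1 = f_emb(x)                                             # node embeddings
e1 = f_e1(np.concatenate([h1[send], h1[recv]], axis=1))   # pass 1: local node pairs
agg = np.zeros((N, H))
np.add.at(agg, recv, e1)                                  # edge-to-node aggregation
h2 = f_v1(agg)                                            # nodes now carry global context
logits = np.concatenate([h2[send], h2[recv]], axis=1) @ W_out  # pass 2: global pair feature
q = softmax(logits)                                       # edge type posterior per edge

print(q.shape)
```

Each row of `q` is a categorical distribution over edge types for one ordered node pair, which is the factorized form of the posterior over A.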

To bridge the gap between the PIGNN and the RK4 procedure, we sample from the edge type posterior to obtain the estimated structure (see Appendix A for the sampling method). The resulting edge values are substituted into the partially unknown vector field that feeds Equations (4)–(7).

In summary, the PIGNN and RK4 interact as follows. In the n-th time period of RK4, the first PIGNN is run on the input trajectories to obtain the first structure estimate. This estimate is substituted into the RK4 procedure to obtain the evolution function, from which the second intermediate variable is calculated. The process is repeated until the fourth stage of RK4 is reached. From Equation (3), we then obtain the estimated trajectories. Within one RK4 time step, we employ the adjacency matrices estimated at the four stages to enhance the model’s learning capacity. To ensure the numerical stability of the RK4 algorithm, we introduce a consistency loss term for the adjacency matrices within each time step, guaranteeing evolutionary continuity between stages. The specific design of the penalty term is detailed in the next subsection.
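The stage-wise interaction can be sketched as follows. This is a simplified illustration under stated assumptions: the vector field is the first-order Kuramoto coupling, and `estimate_A` is a hypothetical callback standing in for the PIGNN plus sampling step; neither the names nor the toy values come from the paper.

```python
import numpy as np


def kuramoto_field(theta, omega, A, K):
    """dtheta_i/dt = omega_i + K * sum_j A_ij * sin(theta_j - theta_i)."""
    diff = theta[None, :] - theta[:, None]    # diff[i, j] = theta_j - theta_i
    return omega + K * (A * np.sin(diff)).sum(axis=1)


def rk4_step_stagewise(theta, omega, estimate_A, K, h):
    """One RK4 step that queries a structure estimator at each of the four stages."""
    A_stages, k = [], []
    stage_state = theta
    for weight in (0.5, 0.5, 1.0, None):
        A_k = estimate_A(stage_state)         # hypothetical PIGNN stand-in
        A_stages.append(A_k)
        k.append(kuramoto_field(stage_state, omega, A_k, K))
        if weight is not None:
            stage_state = theta + weight * h * k[-1]
    theta_next = theta + h / 6 * (k[0] + 2 * k[1] + 2 * k[2] + k[3])
    return theta_next, A_stages


rng = np.random.default_rng(1)
A_true = np.array([[0, 1, 0, 1],
                   [1, 0, 1, 0],
                   [0, 1, 0, 1],
                   [1, 0, 1, 0]], dtype=float)   # 4-node ring, illustrative only
theta0 = rng.uniform(-np.pi, np.pi, 4)
omega = rng.normal(size=4)

# With a perfect estimator the step reduces to ordinary RK4 on the true system.
theta1, A_stages = rk4_step_stagewise(theta0, omega, lambda s: A_true, K=0.5, h=0.01)
print(len(A_stages), theta1)
```

The returned `A_stages` list is what a consistency penalty between stages would operate on.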

3.3.2. Loss Function

The four PIGNNs share parameters, and the training loss consists of three components: (1) physical information, i.e., the automatic differentiation of the structure, (2) the trajectory prediction loss term, and (3) the structure reconstruction loss. Incorporating these three sources of information makes our method sample-efficient.

Automatic differentiation is a numerical method for computing derivatives of functions with respect to the input, serving as the core operation in PINNs. Its fundamental principle involves decomposing complex functions into elementary operations and systematically applying the chain rule, making it inherently compatible with most modern neural network training frameworks. The underlying structure can be calculated from the

k-th PIGNN as

with

the automatic differentiation calculation in the PIGNN training procedure. As the four PIGNNs share parameters and the ground-truth structure A is time-invariant across the four stages of RK4, we take the coefficient of variation as the physical information loss

where

is the coefficient of variation and

the mean of

.
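A coefficient-of-variation penalty across the four stage estimates could look like the sketch below. The exact form used in the paper is not recoverable from the text, so the entry-wise definition (standard deviation over absolute mean, averaged over all entries), the `eps` guard, and the function name are assumptions.

```python
import numpy as np


def cv_consistency_loss(A_stages, eps=1e-8):
    """Mean entry-wise coefficient of variation (std / |mean|) across the stage axis."""
    A = np.stack(A_stages)             # shape (4, N, N)
    mean = A.mean(axis=0)
    std = A.std(axis=0)
    return float((std / (np.abs(mean) + eps)).mean())


A = np.array([[0.0, 1.0],
              [1.0, 0.0]])
same = cv_consistency_loss([A, A, A, A])               # identical stages
drift = cv_consistency_loss([A, 0.9 * A, 1.1 * A, A])  # stages disagree

print(same, drift)
```

Identical stage estimates give a zero penalty, while any disagreement between stages is penalized relative to the size of the mean estimate.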

For the trajectory prediction, we focus on the end-to-end loss. In one iteration of RK4, the end-to-end loss uses the sample

and the output

. The PIGNN parameter is trained via the MSE of the trajectories as follows

For the structure reconstruction loss, we delve into the four stages of RK4 while maintaining an end-to-end learning process. For each stage, the Kullback–Leibler (KL) divergence is employed. Across different stages, we constrain the overall distribution matching by averaging the KL divergences, thereby compelling all stages to jointly optimize the alignment between latent variables and priors, rather than focusing on any single network. The resulting structure reconstruction loss of the

k-th step at the

n-th time period

is as follows:

The prior

is a factorized uniform distribution over edge types. The core assumption of the factorized uniform prior is that edge types are independent and unbiased.
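With a factorized uniform prior over K edge types, the KL divergence of a categorical posterior q has the closed form sum_k q_k (log q_k + log K). The sketch below averages this quantity over the stages. The function names and the unnormalized sum over edges are assumptions; the paper's exact normalization is not recoverable from the text.

```python
import numpy as np


def kl_to_uniform(q, eps=1e-12):
    """Sum over edges of KL(q_edge || uniform) for q of shape (E, K)."""
    K = q.shape[-1]
    return float((q * (np.log(q + eps) + np.log(K))).sum())


def stage_averaged_kl(q_stages):
    """Average the per-stage KL terms over all stages."""
    return sum(kl_to_uniform(q) for q in q_stages) / len(q_stages)


uniform = np.full((3, 2), 0.5)          # posterior equal to the prior
peaked = np.array([[0.9, 0.1]] * 3)     # confident posterior

print(kl_to_uniform(uniform), kl_to_uniform(peaked))
print(stage_averaged_kl([uniform, peaked, uniform, peaked]))
```

Averaging over stages means that no single stage can lower the loss on its own; all four posteriors are pulled toward the prior together.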

The total training loss combines the above three losses, using the weighting parameters

,

and

to control the relative importance of each part of the optimization process. We define the total training loss

as follows:

By employing the combined loss function

, the model can jointly optimize state prediction and structure estimation. Now that we have described all the elements of the framework in Figure 2, the training proceeds as follows.
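As a small sketch of how the three terms might be combined in a training step, the snippet below forms a weighted sum. The function names, the weight names `w_phys`, `w_traj`, `w_struct`, and all numeric values are hypothetical and are not taken from the paper.

```python
def mse(pred, target):
    """Mean squared error between two equally long sequences of floats."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def total_loss(l_phys, l_traj, l_struct, w_phys, w_traj, w_struct):
    """Weighted sum of the physical, trajectory, and structure terms."""
    return w_phys * l_phys + w_traj * l_traj + w_struct * l_struct


# Illustrative values only.
l_traj = mse([0.1, 0.2, 0.3], [0.0, 0.2, 0.5])
full = total_loss(0.04, l_traj, 1.1, w_phys=1.0, w_traj=1.0, w_struct=1.0)
no_physics = total_loss(0.04, l_traj, 1.1, w_phys=0.0, w_traj=1.0, w_struct=1.0)

print(l_traj, full, no_physics)
```

Setting the physical weight to zero removes the physics term entirely, leaving only the trajectory and structure terms.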

4. Experiment

We used PyTorch (version 2.7.0) to implement our network. All models in the experiment were trained for 300 epochs. We trained the framework with Adam [30], using an initial learning rate of 3 ×

that is halved every 10 epochs. The entire network was trained and evaluated on an NVIDIA GeForce RTX 4090 GPU with a batch size of 64. Grid search was used to select the weights of the three loss terms.
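The halving schedule can be written as a one-line step decay. The sketch below is framework-free and takes the initial rate as an argument rather than assuming its value; the function name is hypothetical. In PyTorch, the same behavior is typically obtained with `torch.optim.lr_scheduler.StepLR` using `step_size=10` and `gamma=0.5`.

```python
def lr_at_epoch(lr0, epoch, step=10, factor=0.5):
    """Learning rate after `epoch` epochs when it is multiplied by `factor` every `step` epochs."""
    return lr0 * factor ** (epoch // step)


# Relative schedule for a unit initial rate over the first few decay boundaries.
for epoch in (0, 9, 10, 25, 30):
    print(epoch, lr_at_epoch(1.0, epoch))
```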

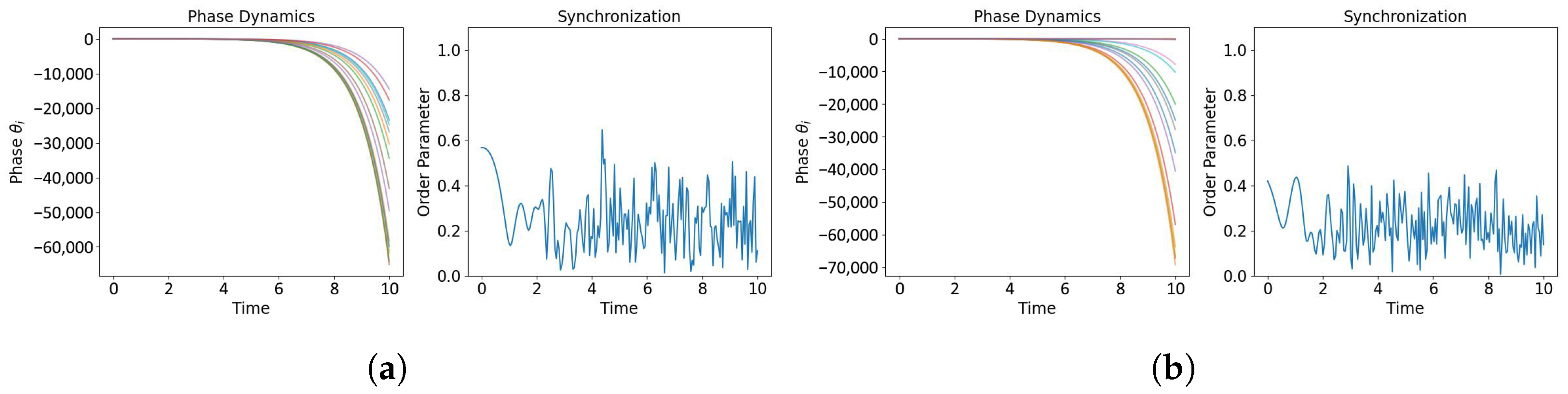

4.1. Kuramoto Model

The Kuramoto model was independently introduced in the context of power grids in [31] and has a long history of study there. We use the following notation:

where

denotes the phase angle of oscillator

i, and the first-order and second derivative of

denote voltage angle

and its time derivatives, respectively. The injected power

is selected in

.

is the uniform coupling coefficient [32]. The classical Kuramoto model can be transformed into an equivalent state-space representation for trajectory sampling:

The initial voltage angle is a random vector from

. The initial phase angle is sampled from a logistic map to generate highly dispersed initial phase angles. We introduce an outlier value to prevent the system from reaching synchronization prematurely, as synchronization can significantly interfere with structural identification [33,34]. Specifically, we multiply the logistic map by an outlier parameter during the generation of initial values. Figure 3 presents the order parameter over the sampling period to quantify the level of synchronization. The outlier value is selected from

.
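The effect of the initial-value generator on synchronization can be illustrated with a short sketch. Two assumptions are made that the text does not confirm: the outlier parameter is treated as the multiplier r in the logistic map x <- r x (1 - x), and map values are converted to phases by scaling with 2π. The order parameter is the standard Kuramoto definition, the modulus of the mean of exp(iθ).

```python
import cmath
import math


def logistic_sequence(r, x0, n):
    """Iterate x <- r * x * (1 - x) and return the n generated values."""
    xs, x = [], x0
    for _ in range(n):
        x = r * x * (1.0 - x)
        xs.append(x)
    return xs


def order_parameter(phases):
    """Kuramoto order parameter |mean(exp(i * theta))|, between 0 and 1."""
    return abs(sum(cmath.exp(1j * p) for p in phases) / len(phases))


low = logistic_sequence(0.5, 0.3, 20)     # values collapse toward 0
high = logistic_sequence(3.8, 0.3, 20)    # chaotic regime: widely spread values

r_low = order_parameter([2 * math.pi * x for x in low])
r_high = order_parameter([2 * math.pi * x for x in high])
print(r_low, r_high)
```

A small multiplier yields nearly identical initial phases and thus an order parameter close to 1, whereas a multiplier in the chaotic regime spreads the phases and lowers it.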

4.2. System Structure

Given the initial conditions, we simulate the trajectories by solving Equation (21) with the RK4 method. The RK4 time step is set to 0.01. By default, training and validation trajectories have length 49, while test trajectories continue for another 20 time steps. We explicitly hard-code the first edge type as a “non-edge”, i.e., no messages are passed along edges of this type. The MLPs have hidden and output dimensions of 256. The overall input/output dimension of our model is 2 for the Kuramoto model experiments. During training, we use teacher forcing at every 10th time step (i.e., at every 10th time step the model receives a ground truth input; otherwise, it receives its previous prediction as input). The detailed PIGNN structure is shown in Figure 1. To characterize the small-world [35] properties of smart grids and demonstrate the applicability of our method to diverse network topologies, we employ two distinct small-world networks. Small-world networks are characterized by high clustering coefficients and short average path lengths. We simulate two networks, with 10 and 20 nodes, representing the small-world network structures in Figure 4. The generator nodes are connected with a global connection strength of 0.01. We implement the classical Watts–Strogatz model using the NetworkX library [36]. The network parameters are listed in Table 1.
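A Watts–Strogatz topology of this kind can be generated with NetworkX as sketched below. The neighbor count `k=4`, rewiring probability `p=0.1`, and seed are placeholder values chosen for illustration, not the settings of Table 1; the helper name is hypothetical. The connected variant of the generator is used so that the resulting graph has no isolated component.

```python
import networkx as nx
import numpy as np


def small_world_adjacency(n, k, p, seed=0, strength=0.01):
    """Return a weighted adjacency matrix of a connected Watts-Strogatz graph."""
    G = nx.connected_watts_strogatz_graph(n, k, p, seed=seed)
    A = nx.to_numpy_array(G)          # symmetric 0/1 matrix, zero diagonal
    return strength * A, G


A10, G10 = small_world_adjacency(10, k=4, p=0.1)
A20, G20 = small_world_adjacency(20, k=4, p=0.1)

# The two quantities that characterize small-world structure.
print(nx.average_clustering(G10), nx.average_shortest_path_length(G10))
print(nx.average_clustering(G20), nx.average_shortest_path_length(G20))
```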

4.3. Physical Information

According to Equation (21), we derive the automatic differentiation information for the Kuramoto system. We denote

Then, we have

In PIGNN training,

does not directly depend on the variable

in PyTorch’s automatic differentiation. Therefore, we introduce an intermediate variable

, and obtain the AD information as follows:

Here,

is from the teacher forcing

(i.e., the ground truth of next time step).
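The derivative that carries the structural information can be checked numerically. For a sine coupling term of the form K Σ_j A_ij sin(θ_j − θ_i), the derivative with respect to A_ij is K sin(θ_j − θ_i). The sketch below verifies this against a finite difference; it illustrates the underlying calculus only, assumes this standard coupling form, and does not reproduce the paper's intermediate-variable construction in PyTorch.

```python
import numpy as np


def coupling(theta, A, K):
    """Coupling term c_i = K * sum_j A_ij * sin(theta_j - theta_i)."""
    diff = theta[None, :] - theta[:, None]
    return K * (A * np.sin(diff)).sum(axis=1)


def coupling_grad_wrt_A(theta, K):
    """Analytic derivative d c_i / d A_ij = K * sin(theta_j - theta_i)."""
    return K * np.sin(theta[None, :] - theta[:, None])


rng = np.random.default_rng(2)
theta = rng.uniform(-np.pi, np.pi, 4)
A = rng.integers(0, 2, size=(4, 4)).astype(float)
K, eps, i, j = 0.5, 1e-6, 0, 2

A_pert = A.copy()
A_pert[i, j] += eps
finite_diff = (coupling(theta, A_pert, K)[i] - coupling(theta, A, K)[i]) / eps
analytic = coupling_grad_wrt_A(theta, K)[i, j]

print(finite_diff, analytic)
```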

4.4. Experimental Results

We compare our model with state-of-the-art structure reconstruction methods and evaluate it on the two Kuramoto systems, with 10 and 20 nodes, in both trajectory prediction and structure reconstruction. To demonstrate our method’s sample efficiency as stated in Section 3.3.2, we conduct comparative Mean Squared Error (MSE) tests across different models with varying sample quantities.

Table 2 compares the state prediction MSE of the single LSTM, the joint LSTM [37], the global PISI, the local PISI, and a static baseline under different sample sizes for systems with 20 nodes. The best result under each sample size is highlighted in bold. We run all the methods with

time steps, and predict the following 20 time steps. The LSTM baseline labeled “single” employs separate but weight-shared LSTMs for each object. The alternative “joint” approach concatenates all state vectors as input to a single LSTM trained for simultaneous multi-object state prediction. The global PISI corresponds to the two-pass message-passing GNN in Equation (10). The local PISI corresponds to the classic GNN in Equation (8). The static baseline uses

. In RK4PINN [10], a total of 6 K samples are required for estimating three parameters in the Lorenz system. We set the minimum sample size to 50 K, as the neural relational inference (NRI) [14] model reconstructs 10-node graphs using only graph information at this sample size. The results demonstrate that our method exhibits advantages when the sample size is limited. When the sample size exceeds 60 K, our global PISI reaches an MSE on the order of 0.01.

Table 3 reports the MSE of state prediction for 20 nodes and a sample size of 100 K as the number of prediction steps increases. For one-step prediction, the joint LSTM, the local PISI, and the global PISI reach a 1 ×

level. Our global PISI shows advantages for prediction steps 1 and 20.

For the structure reconstruction accuracy, we compare our PISI with the correlation LSTM, the correlation path method, and NRI in Table 4, with node sizes of 10 and 20 and increasing sample sizes. The final accuracy is the average over all batches, and the accuracy for each batch is calculated as

with

the Kronecker delta function. The correlation LSTM method trains an LSTM network with shared parameters [38] to individually model each trajectory, computes correlations between the final hidden states, and generates an interaction matrix through thresholding. The correlation path method estimates the interaction graph by applying thresholding to the correlation matrix between trajectory feature vectors.

Table 4 demonstrates a slight improvement in reconstruction accuracy as the sample size increases. Our global PISI demonstrates superior performance with 20 nodes, outperforming state-of-the-art methods by 0.5% with sample size 100 K.

Table 5 investigates the impact of the outlier value and the dynamic evolution time step on the recognition results. As stated in Section 4.1, system synchronization is detrimental to network structure identification. We set the outlier values to 0.5, 3, and 3.8 with an evolution time step of 50. When the outlier value is 0.5, the network achieves synchronization at time step 10, and all four methods considered fail to reconstruct the structure. When the outlier reaches 3.5, the system fails to achieve synchronization. Compared to baseline methods, our approach shows improved reconstruction accuracy. The evolution time scales linearly with the sampling size, where the scaling factor is the sampling interval. We set it to 10, 50, and 100 to maintain consistency with the state prediction experiment.

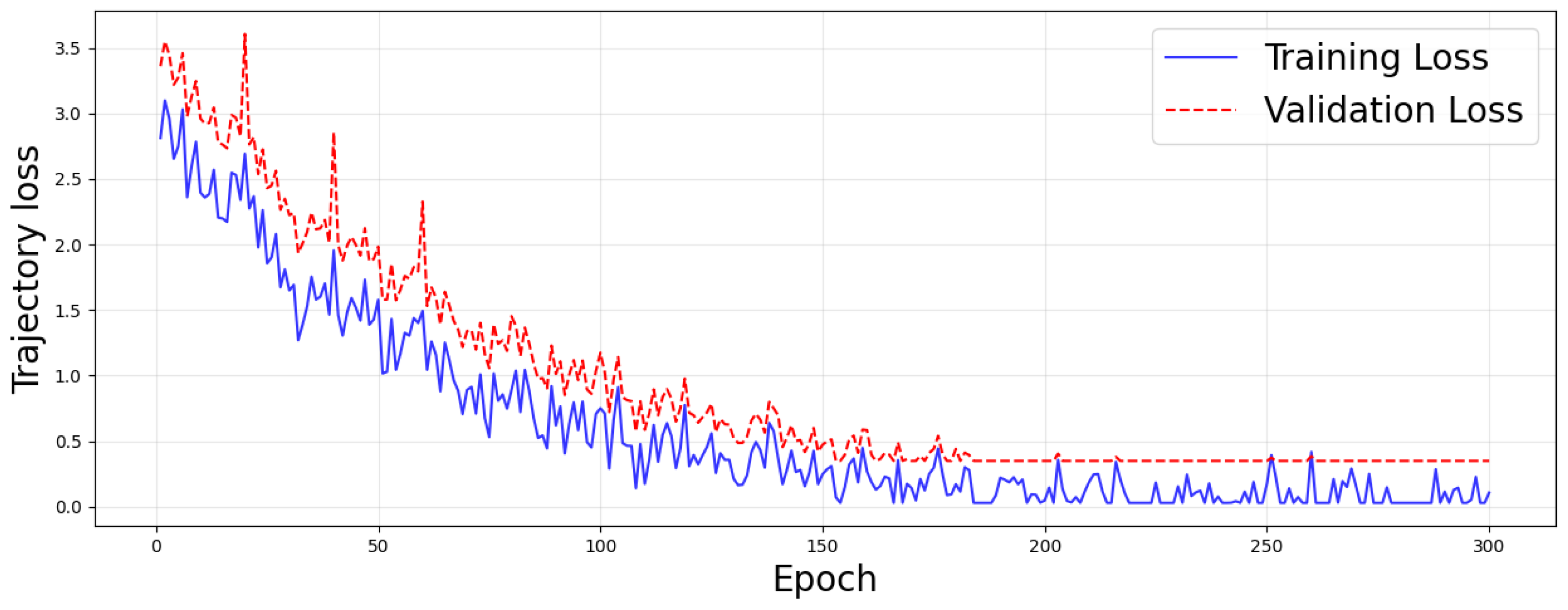

4.5. Ablation Studies

Finally, we set

,

, and

to balance the loss terms for different tasks. In this subsection, we will first perform an ablation study on weight parameters

,

, and

to verify the effect of each loss term in Equation (19). Among the three weight parameters,

and

capture the structure importance.

captures the trajectory importance. Increasing the weight parameter

accelerates the convergence of the trajectory loss term. Figure 5 shows the trajectory loss convergence with

,

, and

.

When

, PISI degenerates into a structure identification algorithm that does not rely on physical information but depends only on the graph neural network and solver iteration. In Table 6, we investigate the impact of physical information on structural identification for networks with

. It can be observed that when

, the structural identification accuracy decreases. This is due to insufficient utilization of physical information from the adjacency matrix. When

is too large (relative to

), the structural identification accuracy also declines, as excessive physical information loss leads to slower convergence in fitting node states.

5. Conclusions

In this study, we propose a physical information-based solver-interactive network structure reconstruction method. PISI effectively predicts the system trajectory and, in addition, outputs the adjacency matrix of the system structure. The core concept of PISI lies in employing neural networks to approximate the unknown dynamic functions during the solution process of dynamical systems, thereby integrating physical information, system structure, and trajectory data. Specifically, PISI comprises two main modules: the structure reconstruction PIGNNs and the dynamic solver RK4. For the input observation, the RK4 module evolves the state and, at the end of each RK4 iteration, provides the trajectory loss to the PIGNN. The PIGNN provides the estimated structure for each RK4 stage. We employ four parameter-shared PIGNNs to approximate the unknown dynamic function in RK4, and the four PIGNNs are trained end-to-end within an RK4 iteration. Each PIGNN internally consists of a global GNN, which uses node-to-edge and edge-to-node message passing operations to exploit global structural information more efficiently. Each information channel is processed by an MLP. Finally, the PISI loss function comprises three components: the trajectory loss from the RK4 output, the physics-informed loss derived from physical constraints at each RK4 stage, and the structural loss for stage-wise topology reconstruction. We evaluate our method on two Kuramoto systems. Compared to the baselines, the global PISI shows advantages in both trajectory prediction and structure reconstruction. Ablation studies demonstrate that the balance among the three loss terms significantly impacts recognition performance.

Author Contributions

Conceptualization, J.L. and G.M.; methodology, J.L. and G.M.; software, J.L. and G.M.; validation, J.L. and G.M.; formal analysis, J.L. and G.M.; investigation, J.L. and G.M.; resources, J.L. and G.M.; data curation, J.L. and G.M.; writing—original draft preparation, J.L.; writing—review and editing, J.L. and G.M.; visualization, J.L. and G.M.; supervision, J.L. and G.M.; project administration, J.L. and G.M.; funding acquisition, J.L. and G.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Fundamental Research Funds for the Central Universities under Grants BLX202338. This work was supported by National Natural Science Foundation of China under Grants 62303052. This work was supported by the PNRR project FAIR-Future AI Research (PE00000013), under the NRRP MUR program funded by the NextGenerationEU.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy.

Acknowledgments

We thank the editor and the anonymous reviewers for their valuable suggestions to improve the quality of this study.

Conflicts of Interest

The authors declare that they have no known conflicting financial interests or personal relationships that could have appeared to influence the work reported in this study.

Appendix A

To enable backpropagation through sampling from our discrete latent variables, we sample from the continuous approximation of

. Specifically, we employ the Concrete distribution [39] with the sampling procedure defined as follows:

in which

is an i.i.d. sample from the Gubel(0,1) distribution, and

is a a parameter that controls the “smoothness” of the samples.
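The Concrete (Gumbel-softmax) sampling procedure described in this appendix can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions rather than the code used in the study: the function names, the two-class logits, and the temperature values are hypothetical, and the standard library stands in for an automatic-differentiation framework.

```python
import math
import random

def sample_gumbel(rng):
    """Draw one Gumbel(0, 1) sample via the inverse CDF: g = -log(-log(u))."""
    u = rng.random()
    while u == 0.0:  # random() may return exactly 0.0, which would break log(u)
        u = rng.random()
    return -math.log(-math.log(u))

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def gumbel_softmax_sample(logits, tau, rng):
    """Relaxed one-hot sample: softmax((logits + Gumbel noise) / tau)."""
    noisy = [(l + sample_gumbel(rng)) / tau for l in logits]
    return softmax(noisy)

# Hypothetical logits for two edge types, e.g. "edge" and "no edge".
rng = random.Random(0)
logits = [2.0, 0.5]
soft = gumbel_softmax_sample(logits, tau=5.0, rng=rng)    # high temperature: smoother sample
sharp = gumbel_softmax_sample(logits, tau=0.05, rng=rng)  # low temperature: closer to one-hot
```

As the temperature decreases, samples approach one-hot vectors, and the index of the largest component follows the categorical distribution given by the softmax of the logits (the Gumbel-max property). Larger temperatures give smoother samples.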

References

1. Hu, J.; Jeff, G. Emergent phases of ecological diversity and dynamics mapped in microcosms. Science 2022, 378, 85–89.

2. Ehsan, A.; Milad, S. A Novel Modified Fuzzy-predictive Control of Permanent Magnet Synchronous Generator Based Wind Energy Conversion System. Chin. J. Electr. Eng. 2023, 9, 107–121.

3. Nurisso, M.; Raviola, M.; Tosin, A. Network-based kinetic models: Emergence of a statistical description of the graph topology. Eur. J. Appl. Math. 2024.

4. Zhang, X.; Barzel, B. Epidemic spreading under mutually independent intra- and inter-host pathogen evolution. Nat. Commun. 2022, 13, 6218.

5. Battiston, F.; Amico, E.; Barrat, A. The Physics of Higher-Order Interactions in Complex Systems. Nat. Phys. 2021, 17, 1093–1098.

6. Jiang, S.; Pei, H.; Chen, J.; Li, H.; Liu, Z.; Wang, Y.; Gong, J.; Wang, S.; Li, Q.; Duan, M.; et al. Striatum- and Cerebellum-Modulated Epileptic Networks Varying Across States with and without Interictal Epileptic Discharges. Int. J. Neural Syst. 2024, 34, 2450017.

7. Kuchler, T.; Russel, D.; Stroebel, J. The Geographic Spread of COVID-19 Correlates with the Structure of Social Networks as Measured by Facebook. Available online: https://www.ifo.de/en/cesifo/publications/2020/working-paper/geographic-spread-covid-19-correlates-structure-social-networks (accessed on 6 August 2025).

8. Zhang, L.; Xu, Z.; Wang, S.; He, G. Clustering Dimensionless Learning for Multiple-Physical-Regime Systems. Comput. Methods Appl. Mech. Eng. 2024, 415, 116728.

9. Chen, C.; Ma, H.; Aihara, K. Predicting future dynamics from short-term time series by anticipated learning machine. Natl. Sci. Rev. 2020, 7, 1070–1091.

10. Zhai, W.; Tao, D.; Bao, W. Parameter estimation and modeling of nonlinear dynamical systems based on Runge–Kutta physics-informed neural network. Nonlinear Dyn. 2023, 111, 21117–21130.

11. Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

12. Scarselli, F.; Gori, M.; Tsoi, A. The Graph Neural Network Model. IEEE Trans. Neural Netw. 2009, 20, 61–80.

13. Zhou, J.; Cui, G.; Hu, S. Graph Neural Networks for Network Reconstruction: From Dynamics to Topology. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 3124–3140.

14. Kipf, T.; Fetaya, E.; Wang, K.; Welling, M.; Zemel, R. Neural Relational Inference for Interacting Systems. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 2688–2697.

15. Zang, C.; Wang, F. Neural Dynamics on Complex Networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD ’20), Virtual Event, 6–10 July 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 892–902.

16. Trivedi, D.; Farajtabar, M.; Biswal, P.; Zha, H. Learning Representations Over Dynamic Graphs. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

17. Zhang, Z.; Wang, X.; Zhou, H.; Yu, Y.; Zhang, M.; Yang, C.; Shi, C. Can Large Language Models Improve the Adversarial Robustness of Graph Neural Networks? In Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’25), Toronto, ON, Canada, 3–7 August 2025; ACM: New York, NY, USA, 2025.

18. Khoo, Y.; Lu, J.; Ying, L. Solving Parametric PDE Problems with Artificial Neural Networks. J. Comput. Phys. 2021, 430, 110092.

19. Ji, W.; Qiu, W.; Shi, Z.; Pan, S.; Deng, S. Stiff-PINN: Physics-Informed Neural Network for Stiff Chemical Kinetics. J. Phys. Chem. A 2021, 125, 8098–8106.

20. Morrison, M.; Kutz, J.N. Solving Nonlinear Ordinary Differential Equations Using the Invariant Manifolds and Koopman Eigenfunctions. SIAM J. Appl. Dyn. Syst. 2024, 23, 924–960.

21. Um, K.; Brand, R.; Fei, Y.; Holl, P.; Thuerey, N. Solver-in-the-Loop: Learning from Differentiable Physics to Interact with Iterative PDE-Solvers. Adv. Neural Inf. Process. Syst. 2020, 33, 6111–6122.

22. Holl, P.; Koltun, V.; Thuerey, N.; Um, K. Learning to Control PDEs with Differentiable Physics. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021; Volume 139, pp. 1234–1245.

23. Skardal, P.; Arenas, A. Higher-order interactions in complex networks of phase oscillators promote abrupt synchronization switching. Commun. Phys. 2020, 3, 218.

24. Wang, Y.; Han, R.; Liu, X.; Horiguchi, T. Phase transitions of XY model in ultra-thin magnetic film with a triangular lattice. Acta Phys. Sin. 2023, 52, 1776–1782.

25. Pirazzini, G.; Ursino, M. Modeling the contribution of theta-gamma coupling for sequential memory. Front. Neural Circuits 2024, 18, 1326609.

26. Lin, A.; Liu, K.; Bartsch, R. Dynamic network interactions among distinct brain rhythms as a hallmark of physiologic state and function. Commun. Biol. 2020, 3, 197.

27. Gori, M.; Monfardini, G.; Scarselli, F. A new model for learning in graph domains. In Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, Montreal, QC, Canada, 31 July–4 August 2005; Volume 2, pp. 729–734.

28. Wu, Z.; Pan, S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

29. Chen, G.; Wang, G.; Wang, K.; Hu, M.; Deng, J. KCNN: A Neural Network Lightweight Method and Hardware Implementation Architecture. J. Comput. Res. Dev. 2025, 62, 532–541.

30. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

31. Bergen, A.; Hill, D. A Structure Preserving Model for Power System Stability Analysis. IEEE Trans. Power Appar. Syst. 1981, PAS-100, 25–35.

32. Nitzbon, J.; Schultz, P.; Heitzig, J.; Kurths, J.; Hellmann, F. Deciphering the imprint of topology on nonlinear dynamical network stability. New J. Phys. 2017, 19, 033029.

33. Shanmugasundaram, S.; Kashkynbayev, A.; Udhayakumar, K.; Rakkiyappan, R. Centralized and decentralized controller design for synchronization of coupled delayed inertial neural networks via reduced and non-reduced orders. Neurocomputing 2022, 469, 91–104.

34. Udhayakumar, K.; Fathalla, A.R.; Li, R.R. Quasi-bipartite synchronisation of multiple inertial signed delayed neural networks under distributed event-triggered impulsive control strategy. IET Control Theory Appl. 2021, 15, 1615–1627.

35. Watts, D.J.; Strogatz, S.H. Collective dynamics of ‘small-world’ networks. Nature 1998, 393, 440–442.

36. Schultz, P.; Heitzig, J.; Kurths, J. A random growth model for power grids and other spatially embedded infrastructure networks. Eur. Phys. J. Spec. Top. 2014, 223, 2593–2610.

37. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

38. Alahi, A.; Goel, K.; Ramanathan, V.; Robicquet, A.; Fei-Fei, L.; Savarese, S. Social LSTM: Human Trajectory Prediction in Crowded Spaces. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 961–971.

39. Jang, E.; Gu, S.; Poole, B. Categorical Reparameterization with Gumbel-Softmax. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).