1. Introduction

As a critical component of power transmission lines, insulators play a key role in ensuring safe isolation from grounded structures and maintaining the stable operation of the power grid. However, due to harsh environmental conditions and natural weather disasters such as strong winds, heavy rain, snow, and high temperatures, insulators are prone to defects like self-explosion, string drop, damage, and flashover discharge. These defects not only compromise the integrity of the insulators but can also lead to power safety incidents [1,2,3]. Moreover, the complex environments where transmission lines are located often feature diverse and cluttered backgrounds, including trees, buildings, and utility poles, which further increase the difficulty of defect detection. Therefore, timely and accurate detection of defects in transmission line insulators is an essential measure for ensuring the safety of the power system.

In the early stages, insulator defect detection relied on manual inspection, which was not only labor-intensive and time-consuming but also unreliable in detection quality. In recent years, with technological advancements, machine vision-based methods have become the primary approach for insulator defect detection due to their strong real-time performance and accuracy [4,5,6,7,8,9,10]. For example, utilizing drone-captured images enables the timely and precise identification of damaged insulators. Currently, the two mainstream approaches to machine vision-based insulator defect detection are lightweight deep learning model design [11,12,13,14,15,16,17,18,19] and feature extraction framework optimization [20,21,22,23,24,25].

Dahua Li et al. [11] proposed a LiteYOLO-ID insulator defect detection model with strong generalization ability by designing a new lightweight convolution module ECA-GhostNet-C2f and a neck network EGC PANet. Yanping Chen et al. [12] made lightweight improvements to the backbone network Faster R-CNN, greatly reducing model parameters and improving its detection accuracy. Zhibin Qiu et al. [13] used a MobileNet lightweight convolutional neural network to optimize the YOLOv4 model structure, enhancing the accuracy and speed of insulator defect detection. Zhong Cao et al. [14] introduced CAM and CSO into the original YOLOv8m, improving detection accuracy and reducing model parameters. Yang Lu et al. [15] designed a lightweight attention mechanism and introduced GSConv and C3Ghost convolution modules to reduce redundant parameters in the model. Yong Jiang et al. [16] adopted a new lightweight module C2f-RBE in the backbone architecture, which replaces traditional bottlenecks with RepViTBlocks and significantly improves detection efficiency and performance. Cong Liu et al. [17] integrated the Ghost module and introduced C3Ghost as an alternative to the backbone network, proposing a lightweight detection algorithm for multiple defects in insulators based on an improved YOLOv5s. Weiyu Han et al. [18] improved the C2f module by introducing the SCConv module, thereby enhancing the backbone network, reducing space and channel redundancy, and lowering computational complexity and parameter count. Liangliang Wei et al. [19] proposed an automatic detection method based on an improved lightweight YOLOv5s model and used the GIoU loss function, Mish activation function, and CBAM module to identify and locate insulator defects.

Zheng He et al. [20] integrated the Adaptive Spatial Feature Fusion (ASFF) module, which enables the network to learn the relationships between different feature maps, enhance semantic information, and improve the network’s ability to detect minor defects. Qiang Zhang et al. [21] constructed the C3 Global Pool Fusion (C3-GPF) module, aiming to enhance the focus on key data in the extraction and fusion stages of insulator defect features. Chuang Gong et al. [22] enhanced the C2f structure of YOLOv8 and improved its multi-scale feature extraction and multi-level feature fusion capabilities by integrating the expansion direction residual module and heavy parameter module. Bao Liu et al. [23] introduced spatially aware convolution in the task and structure dual decoupling head regression branch, enhancing the ability to extract spatial feature information in both horizontal and vertical directions. Zhongsheng Li et al. [24] replaced the traditional PANet structure with a BiFPN-P feature fusion module to improve the extraction of shallow features. Zhuye Xu et al. [25] proposed a new attention mechanism (MAP-CA) that effectively integrates global and local feature information by combining mean pooling and max pooling, achieving higher accuracy in insulator defect recognition.

Although the above studies have achieved valuable results, the lightweight models in [11,12,13,14,15,16,17,18,19] face a trade-off between model complexity and recognition accuracy, and the manually optimized feature extraction methods in [20,21,22,23,24,25] have limited generalization ability.

Moreover, current mainstream object detection algorithms still face significant challenges in small object recognition. For instance, YOLOv5s only achieves a mAP of 21.5% for small objects (area < 32 × 32 pixels) on the COCO dataset, which is substantially lower than its performance on medium (44.6%) and large objects (56.2%) [26]. YOLO models often exhibit low accuracy and sensitivity to background noise in small object detection, where deeper networks may cause small object features to be lost or suppressed [27]. Since insulator defects often appear in small sizes and concealed forms, existing methods struggle to balance detection accuracy and efficiency, particularly in scenarios with complex backgrounds and low contrast. In light of this, this paper proposes DGNCA-Net, an improved insulator defect detection framework based on YOLOv11 [28] that uses a lightweight Ghost-based network as its backbone. The primary contributions of this paper can be summarized as follows.

- (1)

Deformable convolution (DC) with GhostNet combined in parallel with a 3 × 3 convolution to form the Large-Kernel Deformable Ghost Parallel Convolution (LKDGPC) module, which replaces original convolution layers in YOLOv11.

- (2)

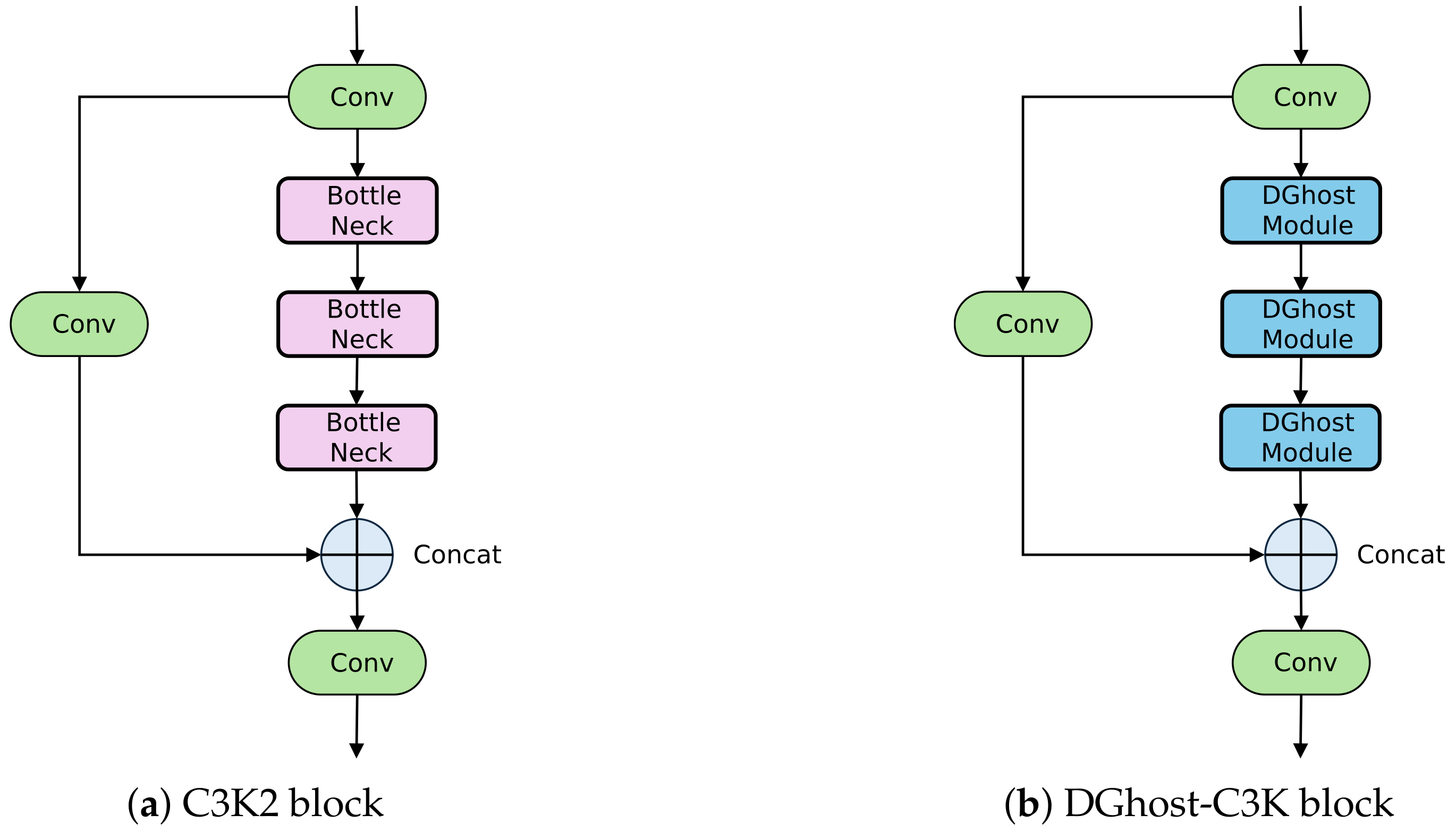

A DGhost-C3K (DG-C3K) module is developed based on the Deformable Ghost (DGhost) module to replace the original C3K2 module, aiming to improve feature extraction efficiency and representation.

- (3)

A Cross Stage Partial Coordinate Attention (CSPCA) module is introduced to replace the C2PAS module in the backbone’s final stage, enhancing object localization capabilities.

- (4)

A Progressive Upsampling Feature Pyramid Network (PUFPN) with deformable convolution is proposed as a redesigned neck (DC-PUFPN), enabling better feature fusion and improved detection of small insulator defects.

The structure of this article is organized as follows: Section 2 provides a comprehensive introduction and analysis of the proposed model. Section 3 presents detailed quantitative and qualitative experimental results, validates the effectiveness of each component through ablation studies, and demonstrates the superiority and advanced performance of the proposed model through comparative experiments. Finally, Section 4 concludes the article and summarizes the main findings and key insights.

2. DGNCA-Net Insulator Defect Detection Model

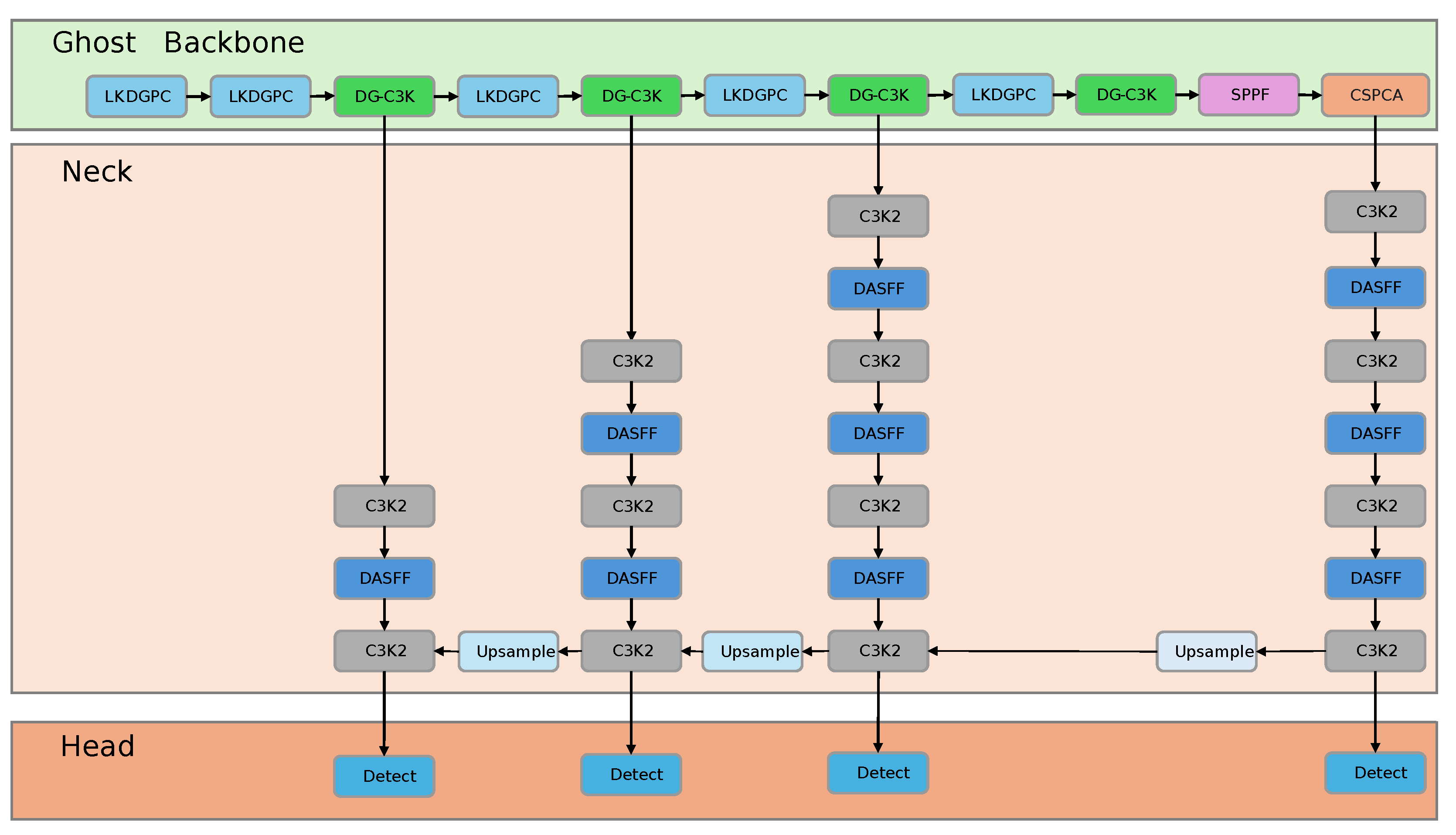

YOLOv11 is a model based on the Cross Stage Partial Network (CSPNet) architecture, with its core being the C3K2 module, which is an improved version of the C2f module in YOLOv8. The backbone network and neck network adopt CNN and Path Aggregation Network (PANet) structures, respectively. However, both the CNN model and the PANet structure have shortcomings such as insufficient global feature extraction ability and repeated feature fusion, resulting in low detection accuracy and excessive computational redundancy. In light of this, this paper improves the feature extraction, feature fusion, and loss function components of the YOLOv11 framework, proposing an efficient and lightweight DGNCA-Net insulator defect detection algorithm. The model framework is shown in Figure 1.

2.1. Large-Kernel Deformable Ghost Parallel Convolution Block

Traditional CNNs suffer from high computational redundancy and insufficient model lightweighting during feature extraction, making it difficult to meet the efficiency requirements of real-time detection tasks. Despite continuous advancements in exploring lightweight network structures, the key challenge remains how to reduce the number of parameters while maintaining or even enhancing feature representation capabilities.

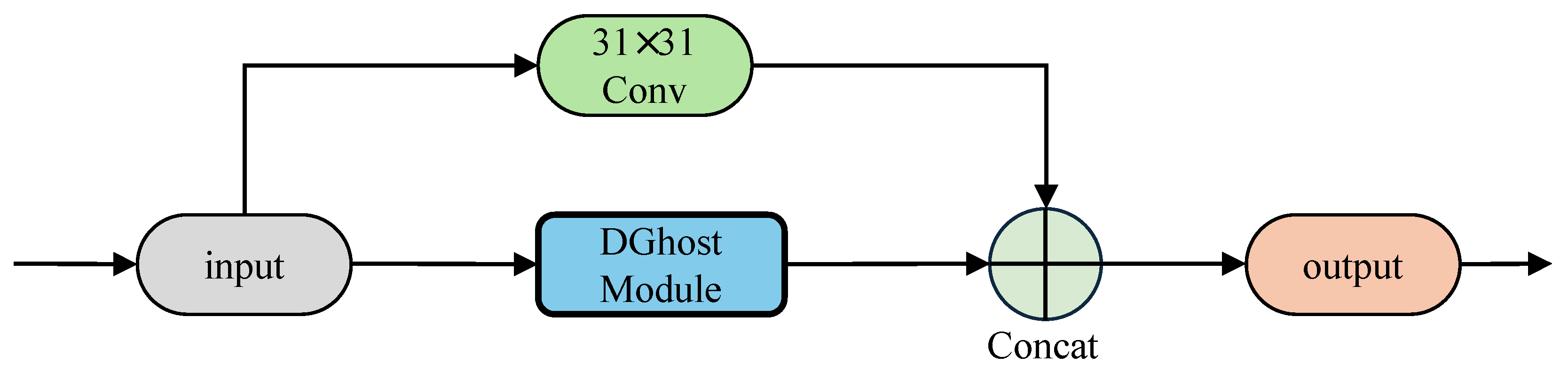

Inspired by [29,30], this paper proposes an LKDGPC module (as shown in Figure 2) that employs 31 × 31 large-kernel convolution and DGhost for dual-path feature extraction. LKDGPC feeds input features into two branches. One is a large-kernel convolution with a large receptive field, which significantly enhances the network’s ability to capture multi-scale contextual information. It addresses the limited receptive field of small-kernel convolution layers, thereby improving the detection of insulator defects at various scales, while also partially mitigating the optimization difficulties associated with increased model depth. The other branch is a lightweight DGhost module that efficiently generates diverse and rich local detail features through cheap linear operations, enhancing sensitivity to subtle and small-scale defects while keeping computational cost low. Finally, the features from the two branches are concatenated and fused, balancing broad spatial context with fine-grained details. Moreover, the deformable convolution in the large-kernel branch dynamically adjusts sampling positions, boosting the model’s adaptability to geometric transformations and irregular shapes common in insulator defects. Compared to the replaced standard convolutions, this architectural change not only improves multi-scale perception and local feature extraction but also reduces overall parameter count and computational complexity, leading to better detection accuracy and faster inference.

The DGhost module is an improved variant of the core component in GhostNet, namely the Ghost module. The fundamental design philosophy of this module is to generate informative and discriminative Ghost feature maps from the original feature maps through a series of cheap linear operations, which significantly reduces computational cost and parameter count while maintaining competitive performance.

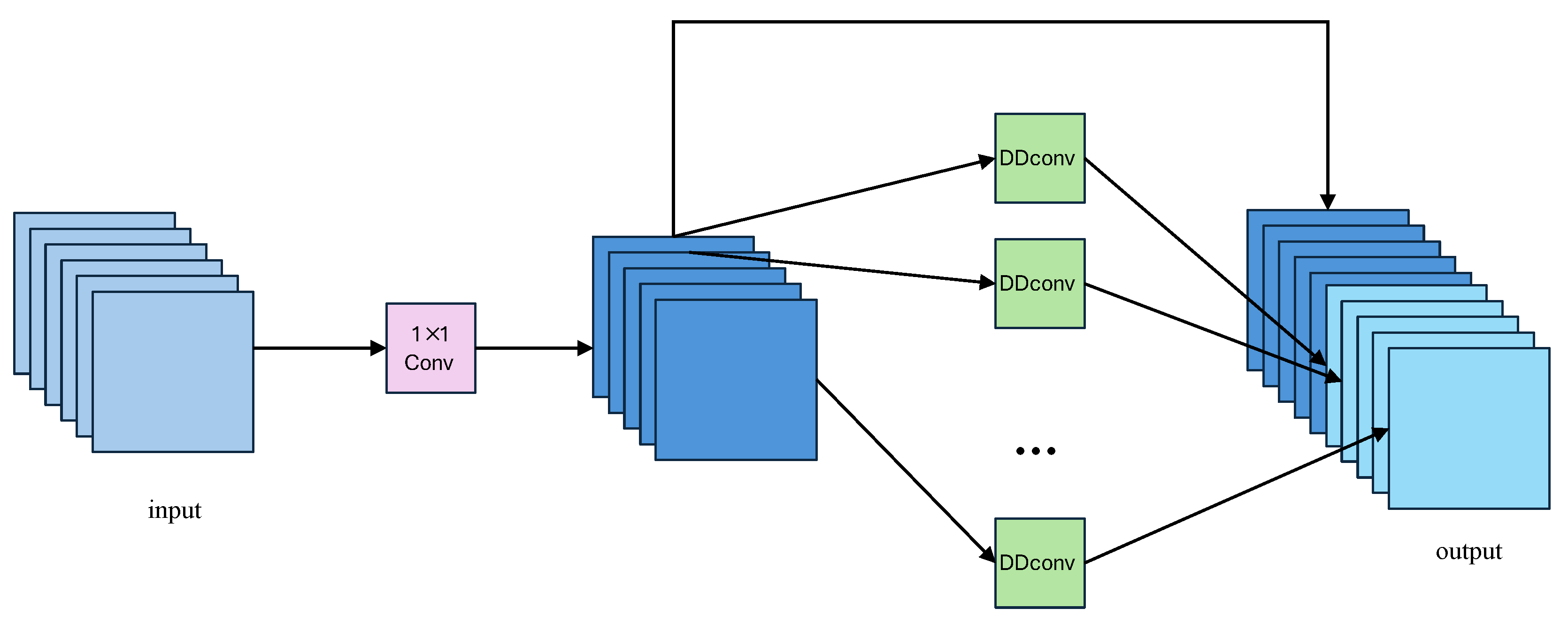

As illustrated in Figure 3, the DGhost module integrates both standard convolution and lightweight linear operations. Specifically, a portion of the input feature maps is first processed through standard convolution to produce intrinsic features. The remaining features are then generated by applying multiple cheap linear transformations to the intrinsic ones. Finally, these two sets of features are concatenated to form the complete output feature map. Compared to conventional convolutional operations, the DGhost module enables more diverse and informative feature representations at a significantly lower computational cost, thereby improving the inference efficiency and deployment friendliness of the network.
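To make the cost argument concrete, the following minimal sketch counts the weights of a standard convolution and of a plain Ghost-style module (a primary convolution producing the intrinsic maps plus depthwise cheap operations producing the rest). The function names, the ratio `s`, and the cheap-kernel size `d` are illustrative assumptions rather than values taken from this paper, and the extra offset-prediction weights of the deformable variant are not counted.

```python
def conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias omitted)."""
    return c_in * c_out * k * k


def ghost_params(c_in, c_out, k, s=2, d=3):
    """Weights in a Ghost-style module (assumed GhostNet-like layout).

    s: ratio of total output maps to intrinsic maps (assumption)
    d: kernel size of the cheap depthwise operation (assumption)
    """
    if c_out % s != 0:
        raise ValueError("c_out must be divisible by s in this sketch")
    intrinsic = c_out // s
    primary = conv_params(c_in, intrinsic, k)   # standard conv -> intrinsic maps
    cheap = (s - 1) * intrinsic * d * d         # one d x d depthwise filter per ghost map
    return primary + cheap


standard = conv_params(64, 128, 3)
ghost = ghost_params(64, 128, 3)
print(standard, ghost, round(standard / ghost, 2))
```

With `s = 2` the weight count is roughly halved, which is the source of the savings described above.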

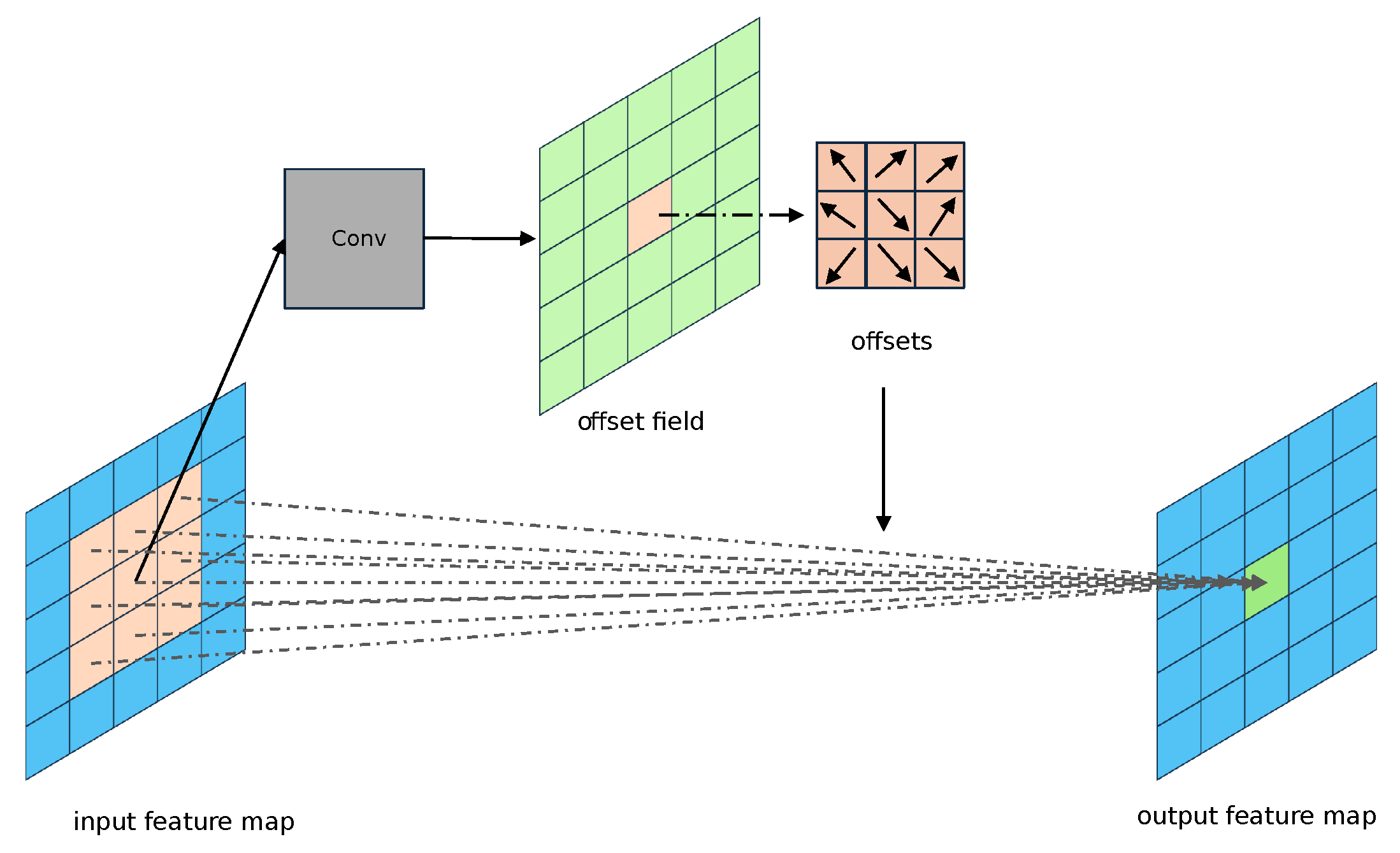

On top of this, the DGhost module further modifies the original Ghost module by introducing DC (as shown in Figure 4) into the original depthwise convolution, forming a deformable depthwise convolution (DDConv). This enhancement allows the module to better model geometric transformations and complex object structures. By dynamically adapting the sampling locations, DC increases the spatial flexibility of the convolution operation, thereby enabling the network to more effectively capture irregular object shapes and deformations.

For any point $p_0$ on the input feature map, the traditional convolution operation can be expressed as follows:

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n)$$

where $w(p_n)$ is the convolution kernel weight at this position, $x$ is the input feature map, and $p_n$ represents the offset of each point in the convolution kernel sampling grid $R$ relative to the center point.

In DC, an additional offset $\Delta p_n$ is introduced for each point, and the convolution operation of DC can be expressed as follows:

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n + \Delta p_n)$$
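As an illustration of how a learned offset changes the sampling positions, the sketch below evaluates a single output location of a 3 × 3 deformable convolution on a single-channel map in pure Python. Fractional positions are read by bilinear interpolation, positions outside the map read as zero, and the offsets are passed in by hand instead of being predicted by a convolution layer; all function names are hypothetical.

```python
import math


def bilinear(x, r, c):
    """Bilinearly sample the 2-D list x at fractional (r, c); outside reads 0."""
    h, w = len(x), len(x[0])
    r0, c0 = math.floor(r), math.floor(c)
    value = 0.0
    for rr in (r0, r0 + 1):
        for cc in (c0, c0 + 1):
            if 0 <= rr < h and 0 <= cc < w:
                weight = (1 - abs(r - rr)) * (1 - abs(c - cc))
                value += weight * x[rr][cc]
    return value


def deform_conv_at(x, w, p0, offsets=None):
    """Sum over the 3 x 3 grid of w(p_n) * x(p0 + p_n + dp_n).

    w: flat list of 9 kernel weights in row-major order
    offsets: list of 9 (d_row, d_col) pairs; None means an ordinary convolution
    """
    grid = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
    if offsets is None:
        offsets = [(0.0, 0.0)] * len(grid)
    total = 0.0
    for (dr, dc), (odr, odc), wn in zip(grid, offsets, w):
        total += wn * bilinear(x, p0[0] + dr + odr, p0[1] + dc + odc)
    return total


feature = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
kernel = [1.0] * 9
plain = deform_conv_at(feature, kernel, (1, 1))
shifted = deform_conv_at(feature, kernel, (1, 1), [(0.0, 0.5)] * 9)
print(plain, shifted)
```

With all offsets at zero the result equals the ordinary convolution; shifting every sample half a pixel to the right changes the value because the kernel now reads interpolated, partly out-of-map positions.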

2.2. DGhost-C3K3 Block

The C3K2 module is a key component of YOLOv11 (as shown in Figure 5a). It is an enhanced version of the traditional C3 module, designed to improve feature extraction capabilities. However, the convolutional kernel size in C3K2 is fixed, making it difficult to capture large-scale or elongated insulator features and to model long-range dependencies. Additionally, the spatial sampling of standard convolution follows a rigid regular grid and cannot dynamically align with irregular cracks, pits, and other deformations on the insulator surface, which makes it difficult to precisely locate tiny defects.

In light of this, this paper proposes a DG-C3K3 module (as shown in Figure 5b), which replaces the bottleneck in the original C3K2 with a DGhost module. This architectural change brings several concrete advantages. First, by integrating deformable convolution within the DGhost module, the DG-C3K3 can dynamically adjust sampling positions based on the insulator surface texture, enabling more flexible spatial sampling that better aligns with irregular cracks, concave–convex edges, and other complex geometric deformations. This flexibility overcomes the fixed, rigid sampling grid of the original C3K2, improving the network’s ability to precisely capture fine-grained defect features. Second, the Ghost mechanism inside the DGhost module efficiently generates diverse and informative feature maps through inexpensive linear transformations, which enhances feature richness and discrimination without a large computational burden. Although the inclusion of deformable convolution slightly increases the parameter count, the Ghost mechanism compensates by significantly reducing redundant computations in feature generation. Overall, this replacement enhances the module’s adaptability to irregular defect shapes, improves multi-scale and long-range feature representation, and achieves substantial performance gains with only minimal computational overhead.

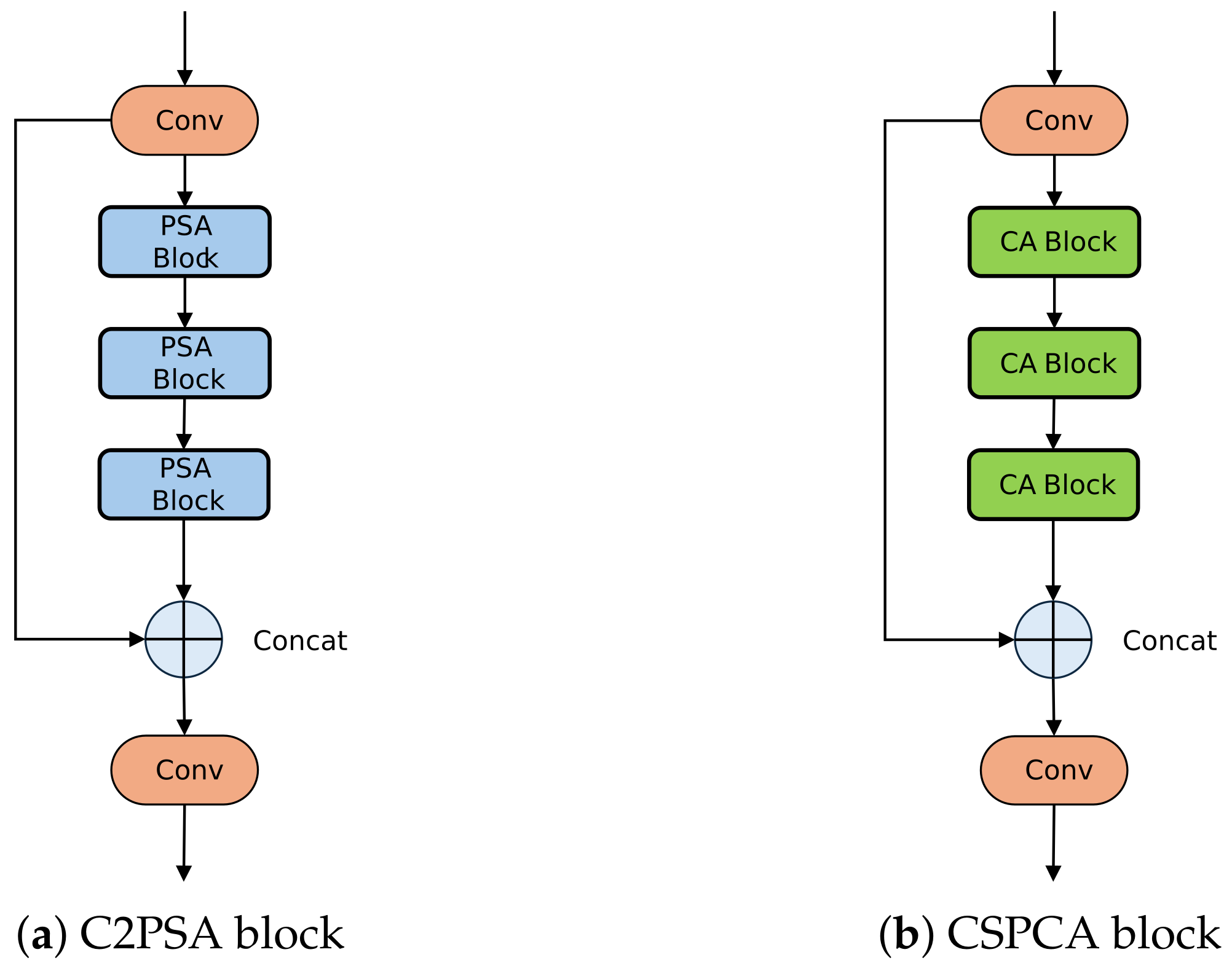

2.3. Cross Stage Partial Coordinate Attention Block

In the YOLOv11 model, as the network depth increases, deep feature extraction faces challenges such as information loss and insufficient semantic representation. The attention mechanism in the original C2PSA module (as shown in Figure 6a) has limited capability in fusing spatial positional information and channel features, making it difficult to effectively capture fine-grained structures and long-range dependencies of the target. This results in imprecise deep feature representations, thereby affecting the model’s detection performance in complex scenarios.

To address this issue, this paper replaces the Pyramid Split Attention mechanism in the C2PSA module with Coordinate Attention and proposes a new set of CSPCA modules (as shown in Figure 6b) to enhance the model’s ability to capture and represent deep semantic features more effectively.

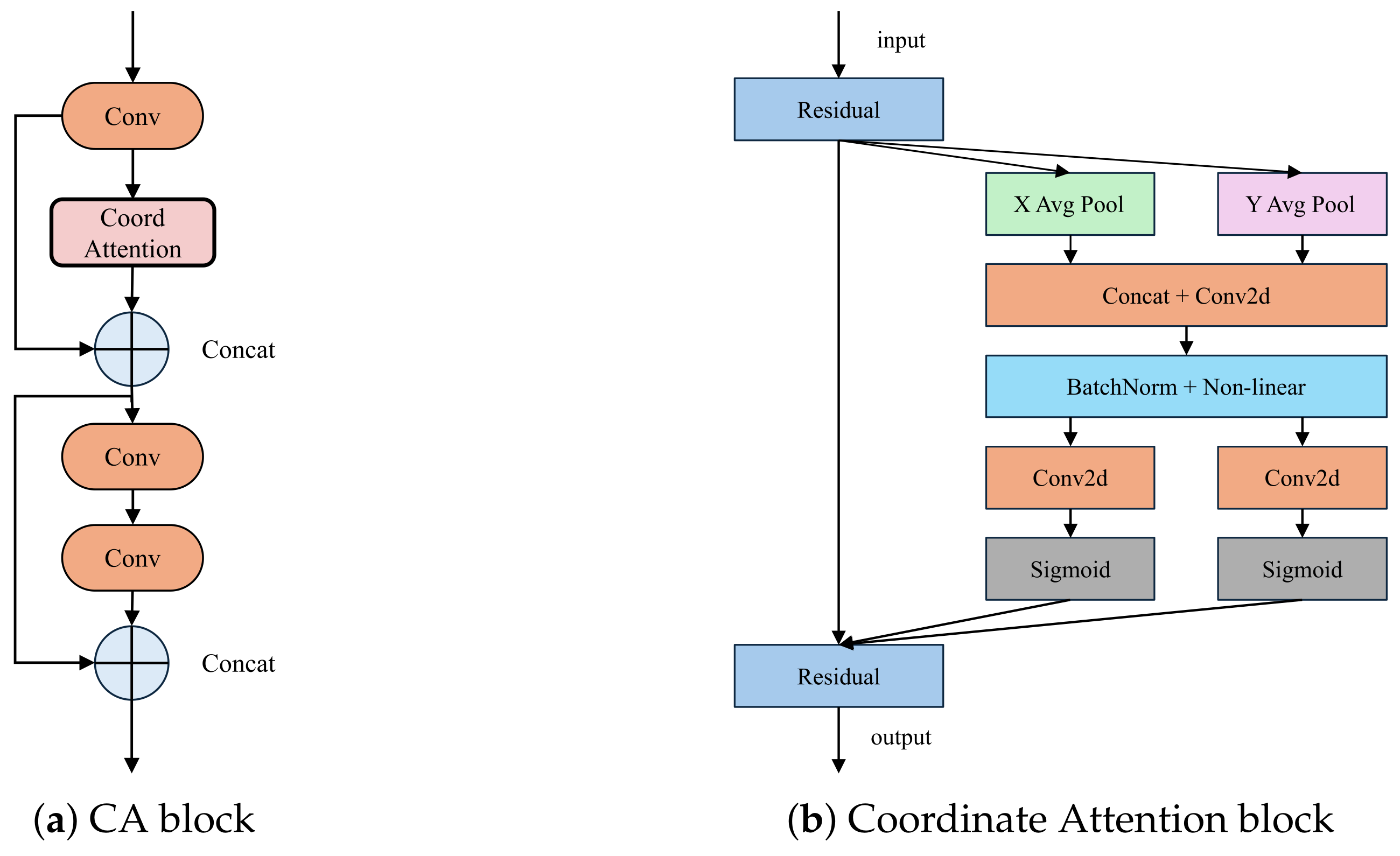

The CA block (as shown in Figure 7a) consists of convolution and a Coordinate Attention (CA) module, achieving feature enhancement through feature concatenation and residual connections. The coordinate attention mechanism first applies channel-wise attention weighting to the input features, followed by spatial coordinate-aware optimization to refine feature representation. Finally, it fuses the refined features with the original ones to improve the model’s ability to capture both the target’s location and semantic information.

The Coordinate Attention block (as shown in Figure 7b) is based on a residual structure. It first performs average pooling along the horizontal and vertical directions to capture long-range spatial dependencies. The pooled features are then concatenated and passed through a series of operations including convolution, normalization, and non-linear activation to generate attention weights for both horizontal and vertical directions. These weights are finally fused with the residual branch to produce the output. By decoupling attention along the horizontal and vertical dimensions, the module can precisely locate the target’s row and column positions, effectively enhancing feature extraction for insulators of various scales and poses, while also reducing computational redundancy.
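The directional pooling and reweighting idea can be sketched for a single channel as follows. This is a deliberately stripped-down illustration: the shared convolution, normalization, and non-linear activation that sit between pooling and the sigmoid are replaced by the identity, and the residual connection is omitted, so it shows only how row-wise and column-wise statistics yield position-aware weights. The function names are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_reweight(x):
    """x: 2-D list (H x W) for one channel; returns the reweighted map."""
    h, w = len(x), len(x[0])
    # Average pooling along each row and each column (directional pooling).
    pool_h = [sum(row) / w for row in x]
    pool_w = [sum(x[i][j] for i in range(h)) / h for j in range(w)]
    # Learned transform omitted in this sketch: weights come straight from a sigmoid.
    a_h = [sigmoid(v) for v in pool_h]
    a_w = [sigmoid(v) for v in pool_w]
    # Each position is scaled by its row weight and its column weight.
    return [[x[i][j] * a_h[i] * a_w[j] for j in range(w)] for i in range(h)]


feature = [[0.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 0.0]]
print(coordinate_reweight(feature)[1][1])
```

Because the row and column weights are computed separately, a strong response is emphasized at the intersection of its row and its column, which is the localization effect described above.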

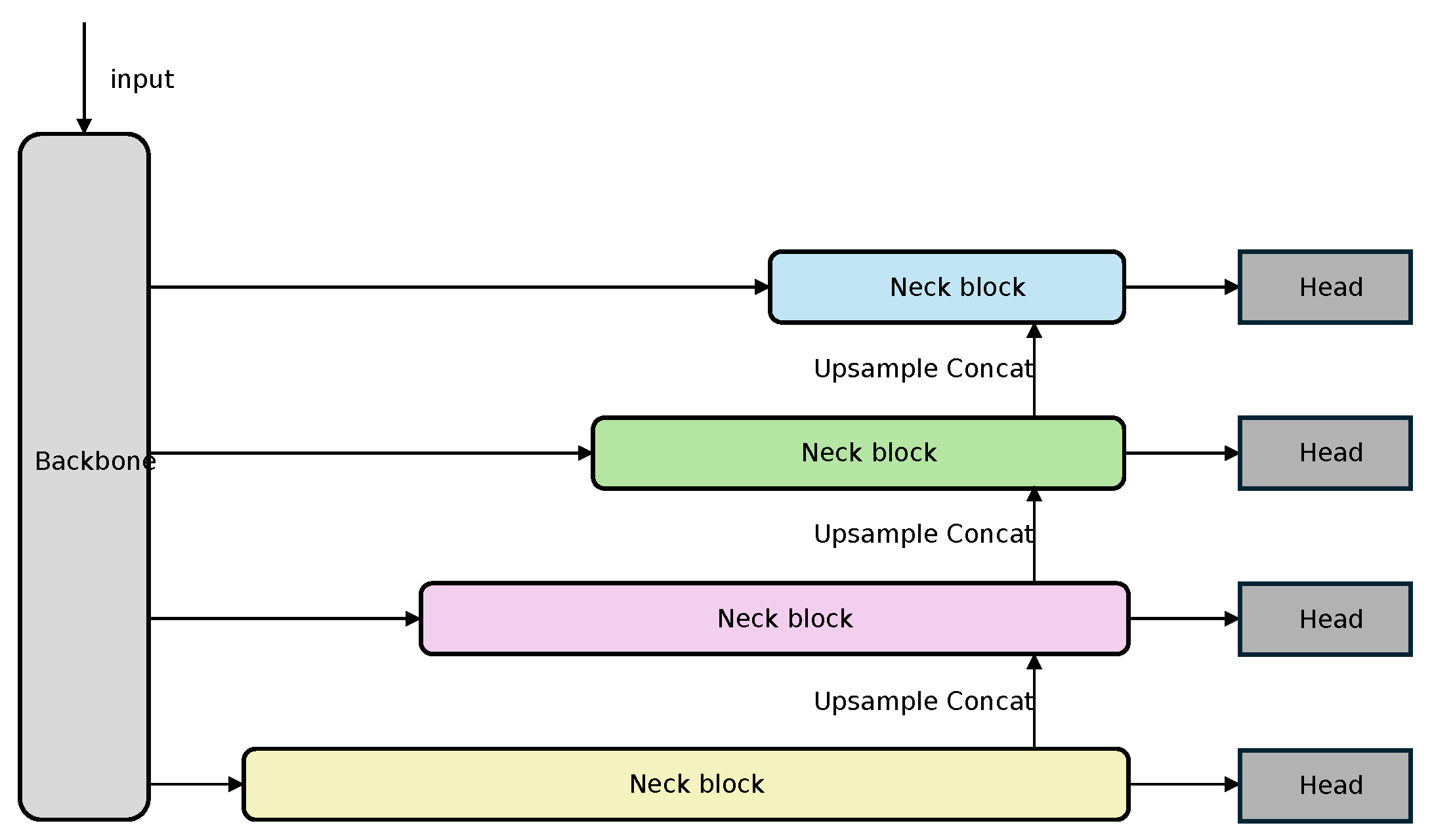

2.4. DC-PUFPN Block

The neck network of YOLOv11 adopts the PANet structure, which fuses features with static weights and therefore lacks dynamic adaptability. Because the fusion strategy cannot be adjusted according to the input content, PANet is poorly suited to small-object detection tasks. Meanwhile, PANet repeatedly performs feature fusion, generating a large amount of redundant information and increasing computational overhead. This paper proposes a PUFPN structure (see Figure 8), which adopts a unidirectional, progressive upsampling fusion strategy to reduce redundant processing. By controlling the number of upsampling operations in an orderly manner, the structure effectively reduces computational redundancy and memory consumption. The fused features at each scale are directly fed into the corresponding detection head, avoiding feature contamination caused by repeated transmission. This structure enables accurate recognition of targets at different scales in insulator images.

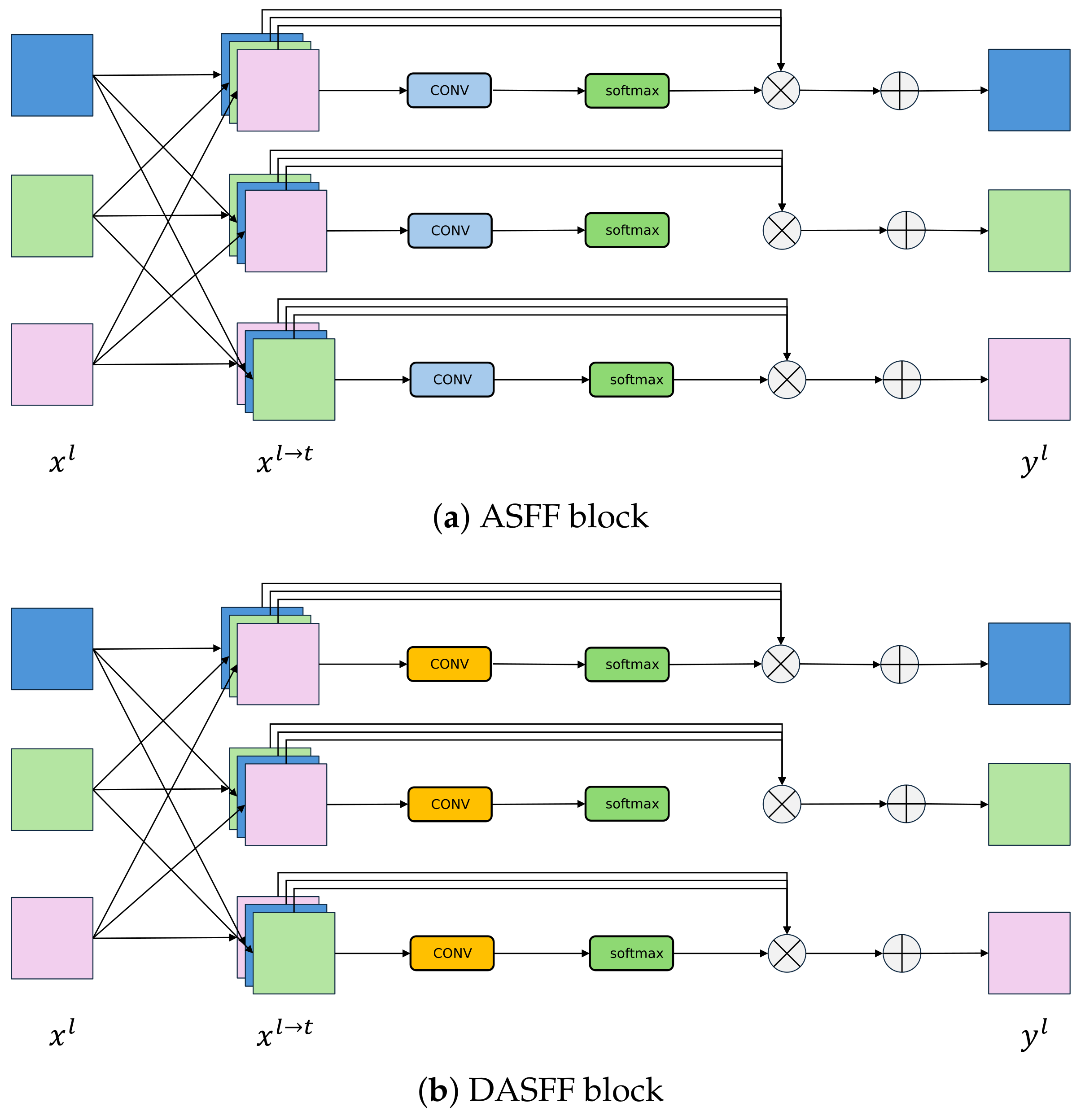

In addition, the PUFPN structure employs the Adaptive Spatial Feature Fusion (ASFF) module (see Figure 9a) for feature fusion. Building on this, this paper introduces further improvements by drawing inspiration from the optimization strategies used in the backbone network. Specifically, the standard convolution layers in the ASFF module are replaced with DC, resulting in the proposed Deformable Adaptive Spatial Feature Fusion (DASFF) module (see Figure 9b), which enhances the network’s ability to model spatial structures.
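The core of adaptive spatial fusion, a per-pixel softmax over the feature levels, can be sketched as below. The sketch assumes the feature maps have already been resized to a common resolution and takes the per-level logits as given inputs; in an actual module they would be predicted by convolution layers (deformable ones in DASFF). The function names are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_fuse(features, logits):
    """features, logits: lists of L maps (each H x W) at one common resolution."""
    h, w = len(features[0]), len(features[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            # Fusion weights are computed per position and sum to 1 across levels.
            weights = softmax([lg[i][j] for lg in logits])
            fused[i][j] = sum(wt * f[i][j] for wt, f in zip(weights, features))
    return fused


levels = [[[1.0]], [[2.0]], [[6.0]]]
equal = [[[0.0]], [[0.0]], [[0.0]]]
print(adaptive_fuse(levels, equal))
```

Unlike a fixed-weight sum, the weights here depend on position, so a small defect can draw mostly on the level where it is best resolved.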

2.5. Loss Function

The CIoU loss function commonly used in YOLO series algorithms has certain limitations when addressing challenges such as small objects, complex backgrounds, and class imbalance. Insulator defect detection is a typical small-object detection task often set in cluttered environments. Moreover, variations in lighting conditions, aerial shooting distances, and camera angles can lead to significant differences in image quality, resulting in an imbalance between low-quality and high-quality samples. These factors further degrade the accuracy and robustness of insulator defect detection in real-world applications.

To improve the network’s convergence speed and detection accuracy, this paper proposes a combined loss function that integrates Wise-IoUv3 and Focal Loss [31]. Wise-IoUv3 uses a dynamic non-monotonic focusing mechanism to adaptively weight anchor boxes based on quality, reducing low-quality interference and improving localization. Focal Loss mitigates class imbalance by focusing on hard samples. Together, they jointly optimize regression and classification, enhancing overall performance and training stability.

Wise-IoUv3 is an improved bounding box regression loss function that introduces a dynamic non-monotonic focusing mechanism to intelligently assess anchor quality and dynamically adjust gradient allocation. This reduces the negative impact of low-quality anchors during training, enhances the model’s focus on medium-quality anchors, and improves overall detection performance. The expression for the non-monotonic focusing Wise-IoUv3 loss function is as follows:

$$L_{WIoUv3} = r \, L_{WIoUv1}, \quad r = \frac{\beta}{\delta \alpha^{\beta - \delta}}$$

$$L_{WIoUv1} = R_{WIoU} \, L_{IoU}, \quad R_{WIoU} = \exp\left(\frac{(x - x_{gt})^2 + (y - y_{gt})^2}{\left(W_g^2 + H_g^2\right)^*}\right)$$

where $\beta$ is the outlier adjustment factor, which represents the quality of the anchor box, while $\beta = L_{IoU}^* / \overline{L_{IoU}} \in [0, +\infty)$. $\alpha$ and $\delta$ are model learning parameters, $(x, y)$ are the predicted box center coordinates, and $(x_{gt}, y_{gt})$ are the ground truth box center coordinates. $W_g$ and $H_g$ represent the size of the minimum bounding box; the superscript * indicates that $W_g$ and $H_g$ are detached from the computation graph. $L_{IoU}$ is the generalized IoU loss, used to evaluate and optimize the matching degree between predicted bounding boxes and ground truth bounding boxes. Focal Loss helps handle class imbalance by focusing training on hard-to-classify examples and reducing the impact of easy ones, improving model performance on difficult samples. The expression for the Focal Loss function is as follows:

$$FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$$

where $\alpha_t$ is the class balancing factor, $\gamma$ stands for the focusing factor, and $p_t$ is the predicted probability of the model for a certain class.
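For readers who want to experiment with the two ingredients, the sketch below evaluates Focal Loss for one prediction and a Wise-IoU v3 style loss for one box pair. It is written under several assumptions: boxes are `(x1, y1, x2, y2)` tuples, the IoU loss is taken as `1 - IoU`, the running mean of the IoU loss is supplied by the caller, the defaults `alpha=1.9`, `delta=3.0`, `alpha_t=0.25`, and `gamma=2.0` are common choices and not values reported in this paper, and gradient detaching is irrelevant because only plain floats are used.

```python
import math


def focal_loss(p_t, alpha_t=0.25, gamma=2.0):
    """Focal Loss for the probability p_t assigned to the true class."""
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def focusing_coefficient(beta, alpha=1.9, delta=3.0):
    """Non-monotonic factor r = beta / (delta * alpha ** (beta - delta))."""
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3_loss(pred, gt, mean_l_iou, alpha=1.9, delta=3.0):
    """Wise-IoU v3 style loss for one box pair (mean_l_iou must be > 0)."""
    l_iou = 1.0 - iou(pred, gt)
    px, py = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gx, gy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp(((px - gx) ** 2 + (py - gy) ** 2) / (wg ** 2 + hg ** 2))
    beta = l_iou / mean_l_iou             # outlier degree of this anchor
    return focusing_coefficient(beta, alpha, delta) * r_wiou * l_iou


print(focal_loss(0.9), focal_loss(0.1))
print(wiou_v3_loss((0, 0, 2, 2), (1, 1, 3, 3), mean_l_iou=0.5))
```

The focusing coefficient equals 1 when the outlier degree equals `delta` and shrinks for both very small and very large outlier degrees, which is how easy and very poor anchors receive less gradient than medium-quality ones.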

The combination of Wise-IoUv3 and Focal Loss can accelerate the model’s convergence, strengthen its focus on small targets, and screen out low-quality samples, thereby improving the model’s generalization performance. The loss function designed in this paper is as follows:

where

are hyper-parameters controlling weight dynamics,

t denotes the number of epochs during training, and

is the adaptive weight for the regression–classification balance.

3. Results and Analysis

3.1. Experimental Preparation

Experimental Environment: The experiment was conducted locally, and the experimental environment is shown in Table 1.

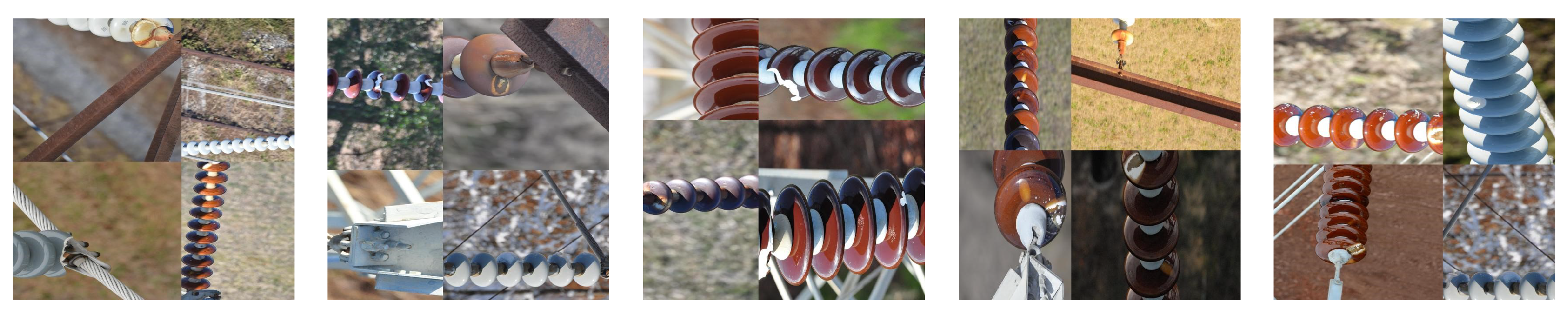

Experimental Data: To improve the model’s generalization ability, the transmission line insulator defect dataset used in this paper consists of two parts, with a total of 4648 images. The dataset is randomly split into training, validation, and testing sets in a 7:2:1 ratio. The first part is a self-built dataset containing 3800 insulator images sized 640 × 640 pixels. As shown in Figure 10, these images were captured across different regions using drones and cameras, then stitched to form composites with multiple insulators, which enhances data diversity and complexity. The dataset includes various environmental conditions and viewpoints, reflecting real-world challenges such as lighting changes, occlusions, and complex backgrounds. The second part is the CPLID public dataset [5], which contains 848 images of insulators. These images include complete insulator string images from real-world scenarios. The dataset used in this paper contains not only subtle defect features of the insulators but also the complex backgrounds found in real-world environments, so the experimental results better reflect the quality of insulator defect detection in real environments.
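A random 7:2:1 split of the 4648 images can be reproduced along the following lines. The seed, the rounding rule (truncate the training and validation counts and give the remainder to the test set), and the function name are assumptions, since the text does not specify them.

```python
import random


def split_indices(n, ratios=(0.7, 0.2, 0.1), seed=0):
    """Shuffle range(n) and cut it into train/val/test index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)   # fixed seed (assumed) for repeatability
    n_train = int(n * ratios[0])
    n_val = int(n * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]       # remainder goes to the test set
    return train, val, test


train, val, test = split_indices(4648)
print(len(train), len(val), len(test))
```

Under these assumptions the three subsets are disjoint and together cover every image exactly once.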

The model’s training hyperparameters are shown in Table 2.

3.2. Evaluation Metrics

In this paper, the model’s detection performance is evaluated using metrics such as precision ($P$), recall ($R$), and Mean Average Precision ($mAP$), and the mathematical expressions are as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$AP = \int_0^1 P(R) \, dR$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $TP$ represents the number of true positives correctly identified as positive, $FP$ represents the number of false positives incorrectly identified as positive, and $FN$ denotes the number of false negatives incorrectly identified as negative. $AP$ is the Average Precision for a single class, calculated as the area under the precision–recall ($P$–$R$) curve. $mAP$ is the mean value of $AP$ across all $N$ classes, with higher values indicating better model precision.
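These metrics can be computed with a few lines of Python. The sketch below is a simplified illustration: the area under the precision-recall curve is approximated with the trapezoidal rule over caller-supplied points sorted by recall, whereas detection toolkits usually apply an interpolated variant, and the function names are hypothetical.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls, precisions):
    """Trapezoidal area under a precision-recall curve sorted by recall."""
    area = 0.0
    for i in range(1, len(recalls)):
        width = recalls[i] - recalls[i - 1]
        area += width * (precisions[i] + precisions[i - 1]) / 2.0
    return area


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


print(precision(8, 2), recall(8, 2))
print(average_precision([0.0, 0.5, 1.0], [1.0, 1.0, 0.0]))
```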

3.3. Ablation Experiment

To verify the advantages of the proposed DGNCA-Net model in insulator defect detection, an ablation experiment is conducted based on the YOLOv11 model. The experimental results are shown in Table 3.

All variants were trained using the same training data and hyperparameter settings. After 600 epochs, the evaluation metrics P, R, and mAP were calculated on the same test set. The results from the eight experimental groups show that the progressive integration of the Ghost-Backbone, DC-PUFPN, and combined loss function components significantly improves model performance. Starting from the baseline, adding the Ghost-Backbone, DC-PUFPN, or combined loss function individually led to steady increases in P, R, and mAP. Combining any two of these modules improved performance further, demonstrating their complementary effects. Finally, the complete integration of all three modules resulted in the highest performance, with P, R, and mAP reaching 91.07%, 79.21%, and 85.46%, respectively. Compared to the baseline, this reflects improvements of 4.55%, 4.32%, and 3.79%. These results confirm that the proposed DGNCA-Net achieves a substantial cumulative gain in detection accuracy through the joint contribution of each component.

3.4. Comparative Experiments

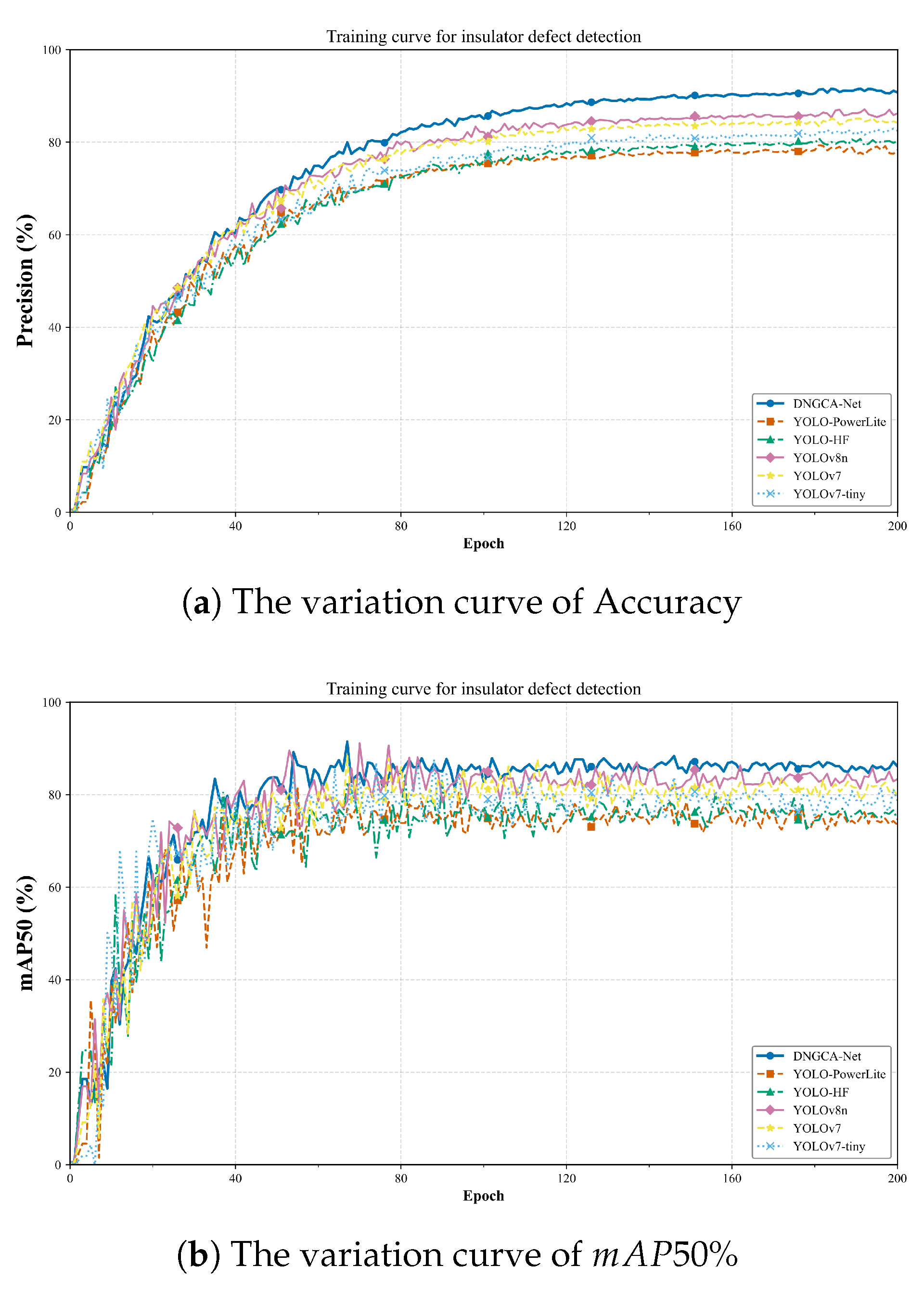

To comprehensively evaluate the superiority of the proposed DGNCA-Net model in terms of model size, computational complexity, and insulator defect detection accuracy, a comparison was made with four different defect detection models under the same dataset and hardware environment. The compared deep learning models and the comparison results are shown in Table 4 and Figure 11.

In addition to evaluating the model’s accuracy in insulator defect detection using P, R, and mAP, we introduce two additional metrics, the number of parameters and GFLOPs, to comprehensively assess the model’s scale and computational complexity. The number of parameters represents the total count of trainable weights in the model; higher values indicate a more complex structure. A lower GFLOPs value signifies reduced computational complexity and a lighter computational load.

Based on the comparative results of P, R, and mAP, the proposed DGNCA-Net algorithm outperforms models such as YOLO-PowerLite, YOLO-HF, YOLOv8n, YOLOv7, and YOLOv7-tiny in defect detection accuracy, while maintaining a smaller parameter size. This reflects a well-balanced design between lightweight architecture and detection performance. In achieving higher accuracy, DGNCA-Net also significantly reduces the number of parameters and computational overhead, making it more suitable for deployment on resource-constrained edge devices or in real-time scenarios. Therefore, DGNCA-Net not only ensures reliable detection performance but also demonstrates excellent efficiency and adaptability, offering a more practical and effective solution for real-world engineering applications.

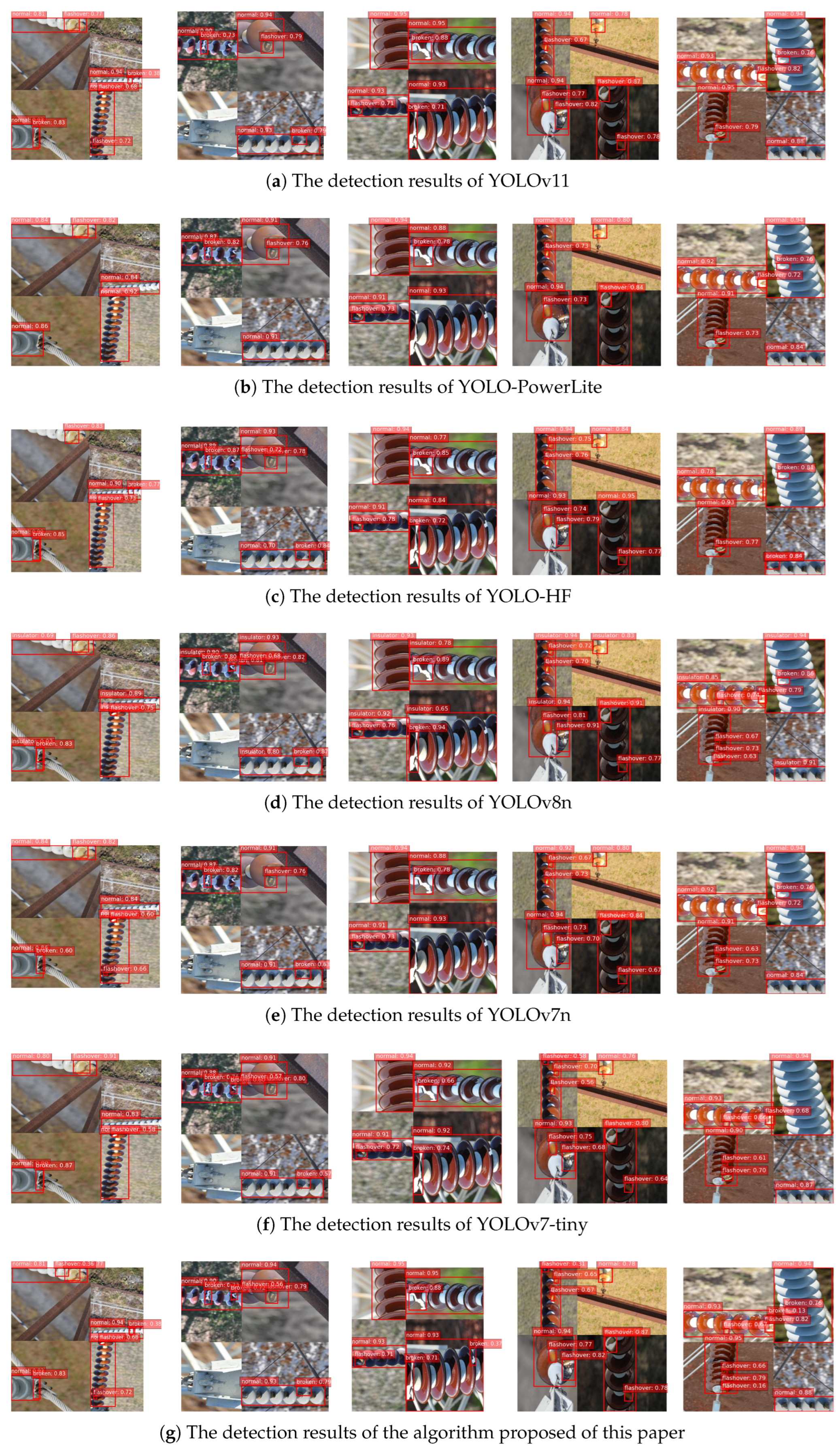

3.5. Visualization Comparison Experiment

To further verify the superiority of the proposed algorithm, the visual comparison results of insulator defect detection between the algorithm proposed in this study, the YOLOv11 algorithm, and the algorithms listed in Table 4 are presented in Figure 12.

Based on the results presented in Figure 12 and Table 5, the proposed DGNCA-Net demonstrates superior detection performance compared to other mainstream models. It is the only model that achieves zero missed detections across all categories. In contrast, other models such as YOLOv11 and YOLO-PowerLite exhibit significantly higher total missed cases, with 12 and 19, respectively. Even lightweight detectors like YOLOv8n and YOLOv7-tiny show total misses of 8 and 9. These results highlight the robustness and reliability of DGNCA-Net, especially in accurately identifying challenging defect types. Overall, the findings confirm that DGNCA-Net offers substantial improvements in detection completeness and is highly suitable for real-world insulator defect detection scenarios where precision is critical.

4. Conclusions

This paper proposes a lightweight insulator defect detection algorithm, DGNCA-Net, based on the YOLOv11 framework. By integrating an improved Ghost-based backbone, the DC-PUFPN neck network, and a refined loss function, the model effectively enhances both detection accuracy and computational efficiency for multi-scale small target defects in insulators. The research offers a lightweight and high-precision solution for power line inspections, significantly reducing manual inspection costs and safety risks, and holds important practical value for improving the intelligence level of power grid operation and maintenance. Although the proposed method demonstrates excellent detection performance, some challenges remain in real-world applications: (1) Under extreme conditions (e.g., strong backlighting, rain, or snow), image quality degrades significantly, which affects the model’s detection accuracy and stability. (2) In cluttered backgrounds or scenarios where insulators are densely arranged, target overlap and occlusion may occur, hindering accurate localization and identification of defects. Future work will focus on enhancing the model’s adaptability to complex environments and improving its robustness against image noise and interference in extreme scenarios, in order to further increase its practicality.