In-Context Learning for Low-Resource Machine Translation: A Study on Tarifit with Large Language Models

Abstract

1. Introduction

1.1. Background

1.2. Challenges and Motivations

1.3. Literature Survey

1.4. Contributions and Novelties

1.5. Organization of This Paper

2. Related Work

2.1. Amazigh Language Processing and Machine Translation

2.2. LLM-Based In-Context Machine Translation

2.3. Tarifit-Specific Research and Digital Linguistic Landscape

3. Tarifit Language Background

3.1. Geographic Distribution and Multilingual Context

3.2. Writing Systems and Orthographic Variation

3.3. Linguistic Features and Computational Challenges

4. Methodology

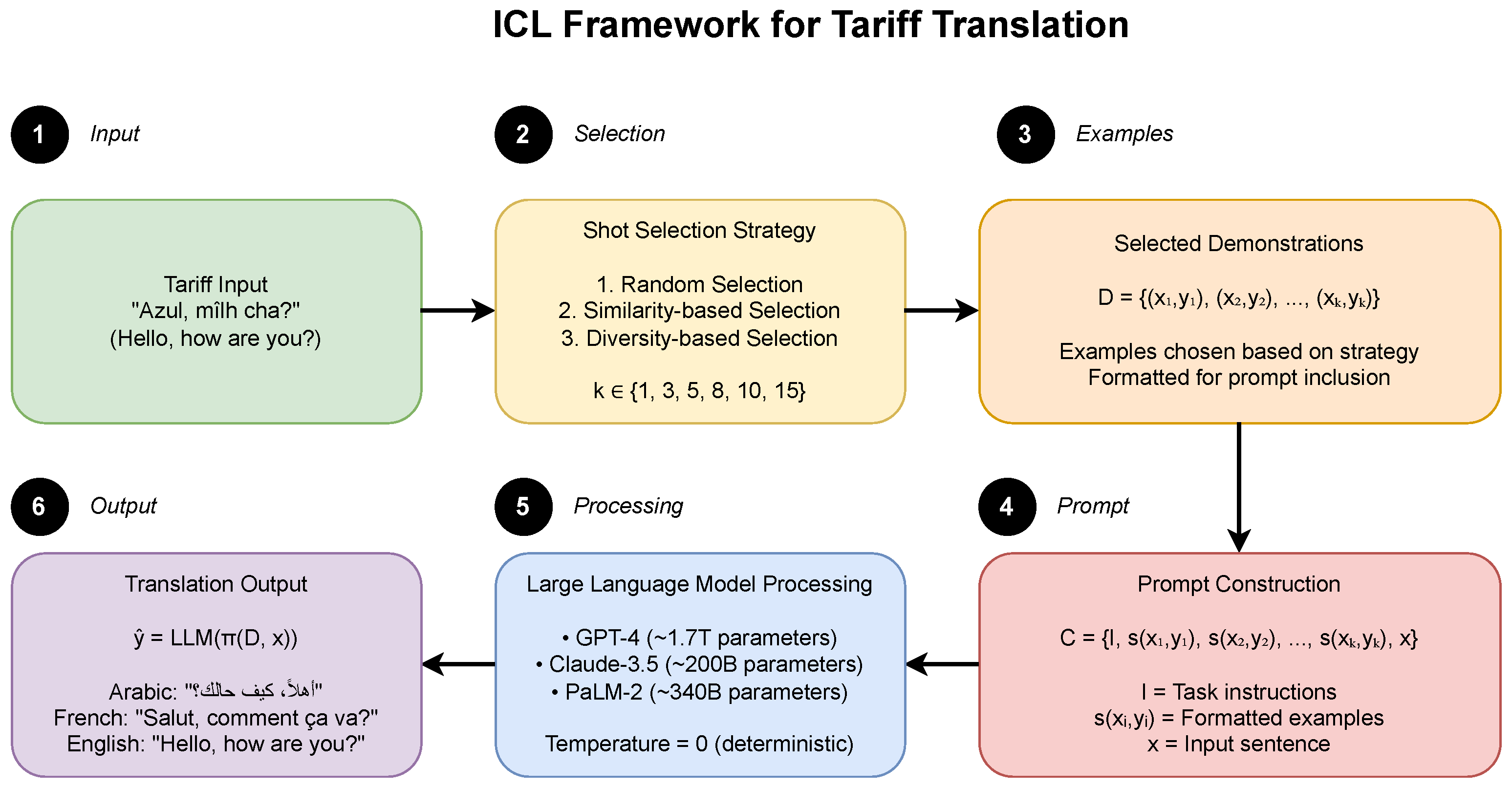

4.1. In-Context Learning Framework

4.2. Dataset Construction

4.3. Shot Selection Strategies

4.3.1. Random Selection

4.3.2. Similarity-Based Selection

4.3.3. Diversity-Based Selection

4.4. Model Configuration

4.5. Evaluation Framework

4.5.1. Automatic Metrics

4.5.2. Human Evaluation

4.5.3. Cross-Validation Protocol

5. Results

5.1. Model Performance and Cross-Lingual Analysis

5.2. In-Context Learning Optimization

5.3. Error Analysis and Human Evaluation

6. Discussion and Conclusions

6.1. Discussion

6.2. Societal and Educational Implications

6.3. Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

Appendix A. Experimental Reproducibility Details

Appendix A.1. Sample ICL Prompt Templates

Appendix A.2. Human Evaluation Protocol

- Native Tarifit speakers

- Fluent in target languages (Arabic/French/English)

- Linguistic or translation background preferred

- Adequacy (1–5): Does the translation convey the meaning of the source text?
  - 5: Complete meaning preserved
  - 4: Most meaning preserved, minor gaps
  - 3: Essential meaning preserved
  - 2: Some meaning preserved
  - 1: Little or no meaning preserved

- Fluency (1–5): Is the translation natural in the target language?
  - 5: Perfect fluency
  - 4: Good fluency, minor issues
  - 3: Acceptable fluency
  - 2: Disfluent but understandable
  - 1: Very disfluent

Appendix A.3. Sample Human Evaluation Examples

- Tarifit Source: Azul, mlih cha?

- GPT-4 Translation: Hello, how are you?

- Reference Translation: Hello, how are you?

- Evaluator Scores: Adequacy: 5/5, Fluency: 5/5

- Comments: Perfect translation preserving greeting convention

- Tarifit Source: Tamghart ni tsawar tamazight

- GPT-4 Translation: That woman speaks Amazigh

- Reference Translation: That woman speaks Berber

- Evaluator Scores: Adequacy: 4/5, Fluency: 5/5

- Comments: Accurate but uses “Amazigh” instead of more common “Berber”

- Tarifit Source: Netta wa ditis cha

- GPT-4 Translation: He will not coming

- Reference Translation: He will not come

- Evaluator Scores: Adequacy: 3/5, Fluency: 2/5

- Comments: Meaning preserved but grammatical error in English

- Tarifit Source: Chha tsa3at?

- GPT-4 Translation: How many hours?

- Reference Translation: What time is it?

- Evaluator Scores: Adequacy: 2/5, Fluency: 4/5

- Comments: Literal translation misses idiomatic time-asking expression
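The adequacy and fluency scores in the four examples above can be summarized as a mean and a standard deviation per dimension. The following is a minimal sketch, not the authors' aggregation procedure: the helper name `summarize` is hypothetical, and the use of the sample (rather than population) standard deviation is an assumption.

```python
from statistics import mean, stdev

# (adequacy, fluency) pairs copied from the four examples above
ratings = [(5, 5), (4, 5), (3, 2), (2, 4)]


def summarize(scores):
    """Validate 1-5 ratings and return (mean, sample standard deviation)."""
    if any(not 1 <= s <= 5 for s in scores):
        raise ValueError("ratings must lie on the 1-5 scale")
    return mean(scores), stdev(scores)


adequacy = summarize([a for a, _ in ratings])
fluency = summarize([f for _, f in ratings])
print(f"Adequacy: {adequacy[0]:.1f} ± {adequacy[1]:.1f}")
print(f"Fluency:  {fluency[0]:.1f} ± {fluency[1]:.1f}")
```

Four examples are far too few for a meaningful estimate; the sketch only illustrates how per-item ratings map onto a "mean ± deviation" summary.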

Appendix A.4. Data Collection Methodology

- Source Selection: Sentences collected from social media posts, traditional stories, and conversational recordings with speaker consent

- Translation Process: Each sentence translated independently by three qualified native speakers

- Quality Control: Disagreements resolved through consensus discussion

- Cultural Sensitivity: All materials reviewed for cultural appropriateness before inclusion

Appendix A.5. Shot Selection Algorithm

Algorithm 1: Similarity-Based Shot Selection
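The body of Algorithm 1 is not reproduced in this version of the text, so the following is only a minimal sketch of what similarity-based shot selection could look like, not the authors' procedure. Everything in it is an assumption made for illustration: the similarity measure (cosine similarity over character trigram counts), the function names `char_ngrams`, `cosine`, and `select_shots`, the value of `k`, and the toy candidate pool, which reuses the sentence pairs from Appendix A.3.

```python
from collections import Counter
from math import sqrt


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count character n-grams of a lowercased, space-padded string."""
    padded = f" {text.lower().strip()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = sqrt(sum(c * c for c in a.values())) * sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def select_shots(query, pool, k=3):
    """Return the k (source, target) pairs whose source is most similar to query."""
    q = char_ngrams(query)
    ranked = sorted(pool, key=lambda pair: cosine(q, char_ngrams(pair[0])), reverse=True)
    return ranked[:k]


# Toy candidate pool (assumption): source/reference pairs from Appendix A.3
pool = [
    ("Azul, mlih cha?", "Hello, how are you?"),
    ("Tamghart ni tsawar tamazight", "That woman speaks Berber"),
    ("Netta wa ditis cha", "He will not come"),
    ("Chha tsa3at?", "What time is it?"),
]
shots = select_shots("Tamghart ni thsawar tamazight", pool, k=2)
```

Character n-grams are used here because they tolerate the spelling variation of Latin-script Tarifit (for example `tsawar` versus `thsawar`) without requiring a trained embedding model; an embedding-based similarity would slot into `select_shots` in the same way.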

Appendix A.6. Statistical Analysis Details

- Significance Testing: Paired t-tests for performance comparisons

- Confidence Intervals: Bootstrap sampling with 1000 iterations

- Effect Size: Cohen’s d for practical significance assessment

- Multiple Comparisons: Bonferroni correction applied where appropriate
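The four steps listed above can be sketched with the Python standard library alone. This is an illustrative sketch under stated assumptions, not the authors' analysis code: the function names are hypothetical, the score lists are made-up placeholders rather than results from the paper, the effect size shown is the paired variant of Cohen's d (mean difference divided by the standard deviation of the differences), and the confidence interval is a percentile bootstrap. The p-value of the paired t-test is omitted because it requires the t distribution with n − 1 degrees of freedom, which the standard library does not provide.

```python
import random
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """t statistic of the paired differences a[i] - b[i] (df = n - 1)."""
    diffs = [x - y for x, y in zip(a, b)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


def cohens_d_paired(a, b):
    """Paired Cohen's d: mean difference over the SD of the differences."""
    diffs = [x - y for x, y in zip(a, b)]
    return mean(diffs) / stdev(diffs)


def bootstrap_ci(a, b, iterations=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean paired difference."""
    rng = random.Random(seed)
    diffs = [x - y for x, y in zip(a, b)]
    means = sorted(mean(rng.choices(diffs, k=len(diffs))) for _ in range(iterations))
    low = means[int((alpha / 2) * iterations)]
    high = means[int((1 - alpha / 2) * iterations) - 1]
    return low, high


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold after Bonferroni correction."""
    return alpha / n_comparisons


# Hypothetical per-fold scores (placeholders, NOT results from the paper)
scores_a = [19.1, 20.4, 18.7, 19.9, 20.3]
scores_b = [17.0, 17.9, 16.8, 17.5, 17.8]

t_stat = paired_t_statistic(scores_a, scores_b)
d = cohens_d_paired(scores_a, scores_b)
ci_low, ci_high = bootstrap_ci(scores_a, scores_b)
alpha_adj = bonferroni_alpha(0.05, 3)
```

With `iterations=1000` the bootstrap matches the iteration count stated above; the fixed `seed` only makes the sketch reproducible.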


| Region/Country | Speakers | Primary Contact Languages |
|---|---|---|
| Northern Morocco | 3,000,000 | Arabic, French |
| Belgium | 700,000 | Dutch, French |
| Netherlands | 600,000 | Dutch |
| France | 300,000 | French |
| Spain | 220,000 | Spanish, Catalan |
| Other Europe | 180,000 | Various |
| Total | 5,000,000 | - |

| Script | Text | Usage Context |
|---|---|---|
| Tifinagh | | Cultural, digital, academic, and formal contexts |
| Latin | Azul, mlih cha? | |
| Berber Latin | Aẓul, mliḥ ca? | |
| Arabic | | |

Translation: “Hello, how are you?”

Additional Examples in Context:

| Script | Text | Translation |
|---|---|---|
| Latin | Yossid qbar i thmadith | He came before noon |
| Latin | Tamghart ni thsawar tamazight | That woman speaks Amazigh |
| Latin | Wanin bo awar ni | They don’t say that word |

| Domain | Sentences | Avg. Length | Source |
|---|---|---|---|
| Conversational | 500 | 8.2 | Social media, interviews |
| Literary | 330 | 12.4 | Traditional stories, poetry |
| Cultural | 170 | 15.1 | Proverbs, oral traditions |
| Total | 1000 | 10.3 | - |

| Specification | GPT-4 | Claude-3.5 | PaLM-2 |
|---|---|---|---|
| Parameters | ∼1.7 T | ∼200 B | 540 B |
| Context Window | 8192 tokens | 200 K tokens | 8192 tokens |
| Access Method | OpenAI API (Paid) | Anthropic API (Paid) | Google API (Free tier) |
| Temperature | 0 | 0 | 0 |
| Max Tokens | 1500 | 1500 | 1500 |
| API Rate Limit | 10K RPM | 5K RPM | 1K RPM |

| Model | Tarifit→Arabic BLEU | Tarifit→Arabic chrF | Tarifit→Arabic BERT | Tarifit→French BLEU | Tarifit→French chrF | Tarifit→French BERT | Tarifit→English BLEU | Tarifit→English chrF | Tarifit→English BERT |
|---|---|---|---|---|---|---|---|---|---|
| GPT-4 | 20.2 | 38.7 | 69.4 | 14.8 | 32.1 | 61.2 | 10.9 | 27.8 | 56.8 |
| Claude-3.5 | 18.6 | 36.3 | 67.1 | 13.1 | 29.6 | 58.9 | 9.4 | 25.2 | 54.3 |
| PaLM-2 | 16.9 | 33.8 | 64.2 | 11.7 | 27.4 | 56.1 | 8.1 | 23.1 | 51.7 |

| Strategy | Arabic BLEU | Arabic chrF | Arabic BERT | French BLEU | French chrF | French BERT | English BLEU | English chrF | English BERT |
|---|---|---|---|---|---|---|---|---|---|
| Random | 17.4 | 34.2 | 65.1 | 11.9 | 28.7 | 57.8 | 7.8 | 24.1 | 52.3 |
| Similarity | 19.7 | 37.5 | 68.2 | 13.6 | 31.4 | 60.5 | 9.5 | 26.8 | 55.1 |
| Diversity | 18.8 | 36.1 | 66.9 | 12.7 | 29.9 | 59.2 | 8.9 | 25.4 | 53.7 |
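The BLEU gains of each strategy over random selection follow directly from the table above. The short sketch below only redoes that arithmetic; the dictionary layout and the helper name `gain_over_random` are illustrative choices, not part of the original study.

```python
# BLEU scores copied from the shot-selection strategy table above
bleu = {
    "Random":     {"Arabic": 17.4, "French": 11.9, "English": 7.8},
    "Similarity": {"Arabic": 19.7, "French": 13.6, "English": 9.5},
    "Diversity":  {"Arabic": 18.8, "French": 12.7, "English": 8.9},
}


def gain_over_random(strategy):
    """Absolute and relative (%) BLEU gain of a strategy over random selection."""
    gains = {}
    for lang, base in bleu["Random"].items():
        delta = bleu[strategy][lang] - base
        gains[lang] = (round(delta, 1), round(100 * delta / base, 1))
    return gains


similarity_gain = gain_over_random("Similarity")
for lang, (absolute, relative) in similarity_gain.items():
    print(f"{lang}: +{absolute} BLEU ({relative}% relative)")
```

For similarity-based selection this gives +2.3 BLEU for Arabic, +1.7 for French, and +1.7 for English; in relative terms the gain is largest for English (about 21.8%), the direction with the lowest baseline.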

| Language | Adequacy | Fluency | BLEU |
|---|---|---|---|
| Arabic | 3.4 ± 0.7 | 3.6 ± 0.6 | 20.2 |
| French | 2.8 ± 0.8 | 3.0 ± 0.7 | 14.8 |
| English | 2.5 ± 0.9 | 2.7 ± 0.8 | 10.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Akallouch, O.; Fardousse, K. In-Context Learning for Low-Resource Machine Translation: A Study on Tarifit with Large Language Models. Algorithms 2025, 18, 489. https://doi.org/10.3390/a18080489