Development of Digital Training Twins in the Aircraft Maintenance Ecosystem

Abstract

1. Introduction

2. Materials and Methods

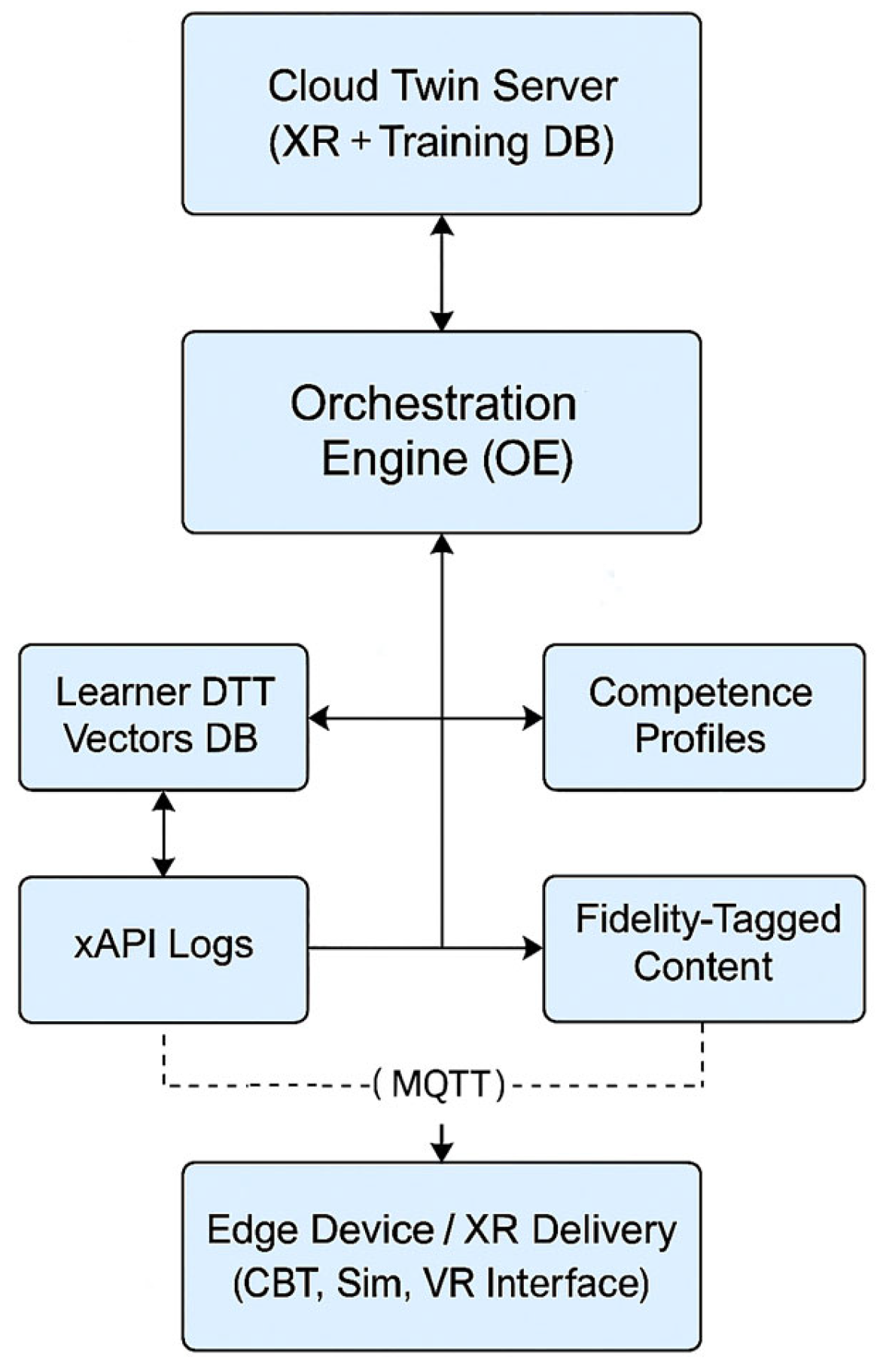

2.1. Digital Training Twin Model

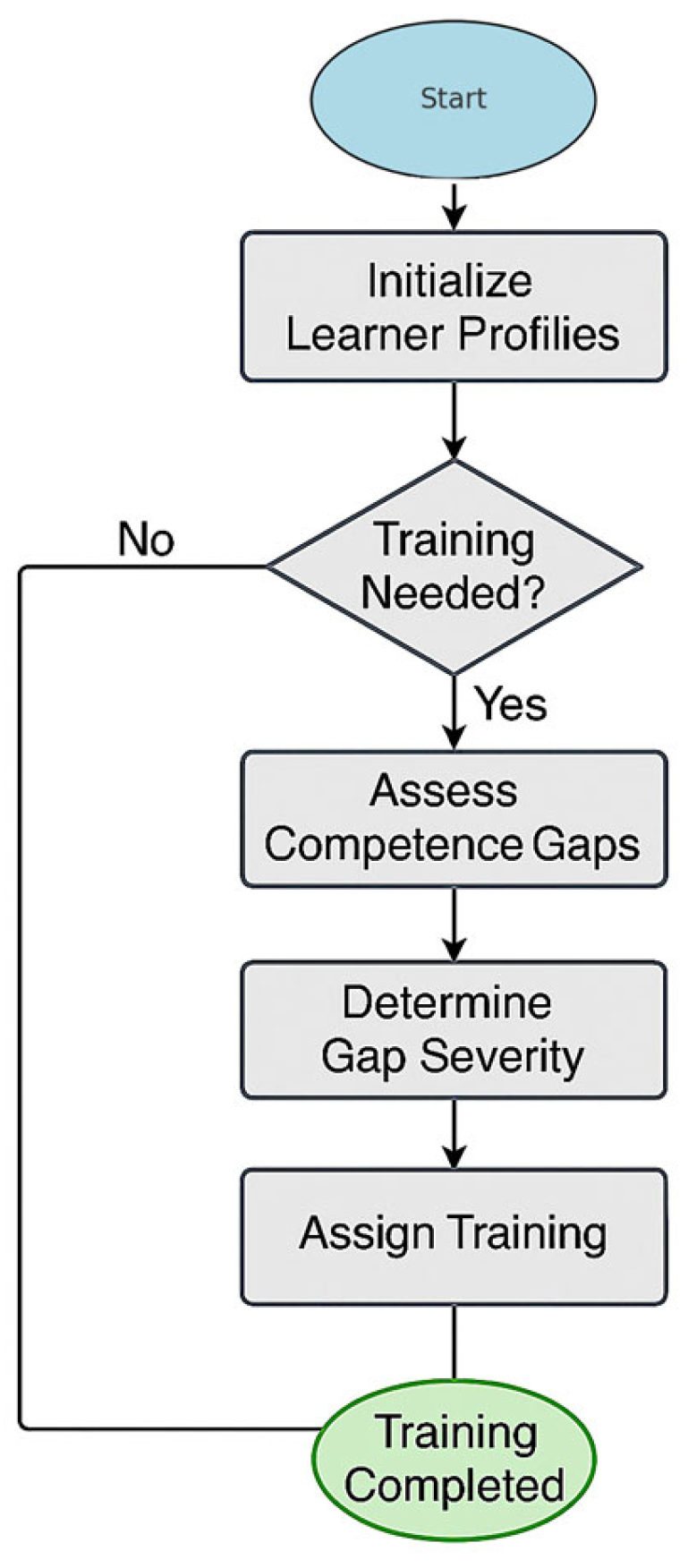

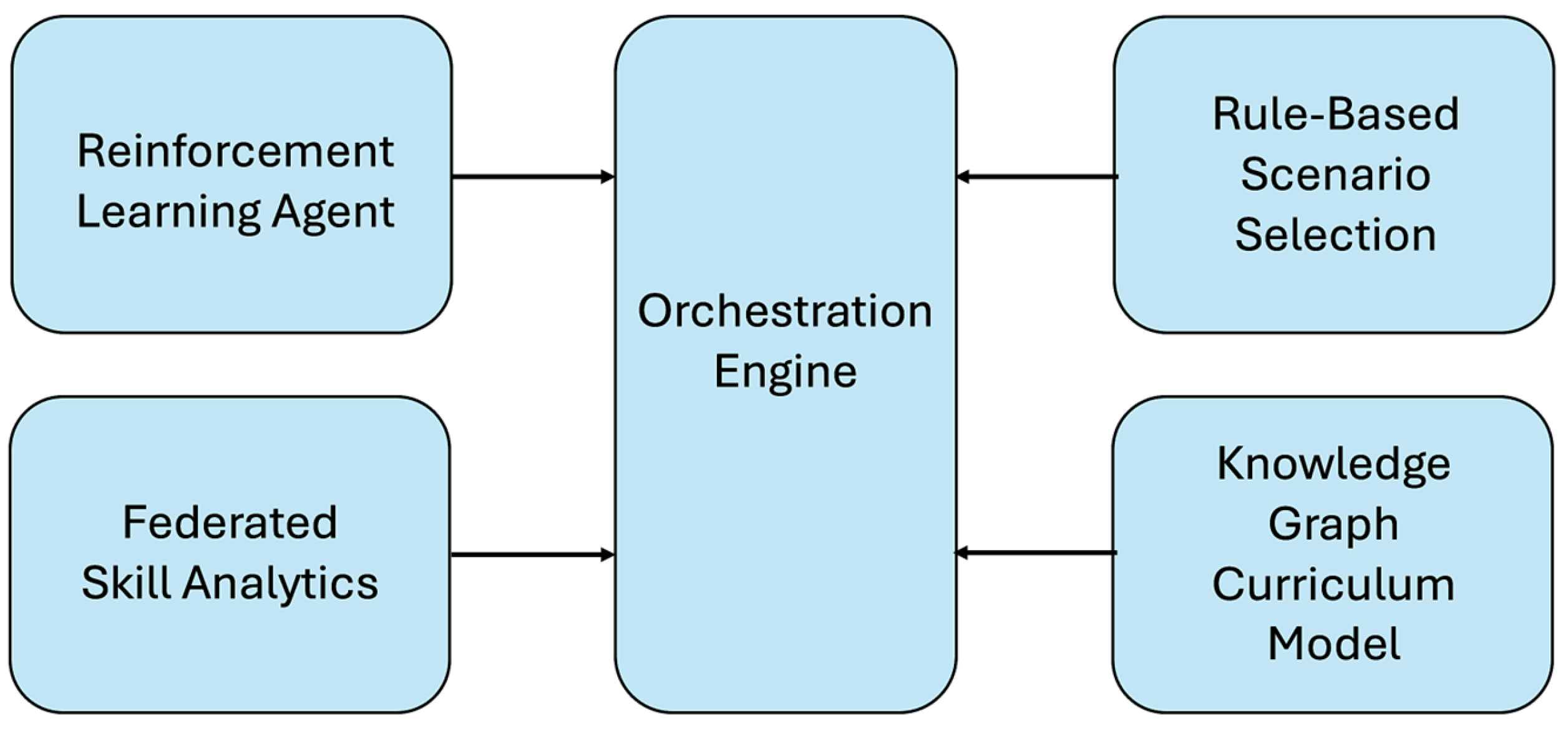

2.2. Algorithmic Orchestration Workflow

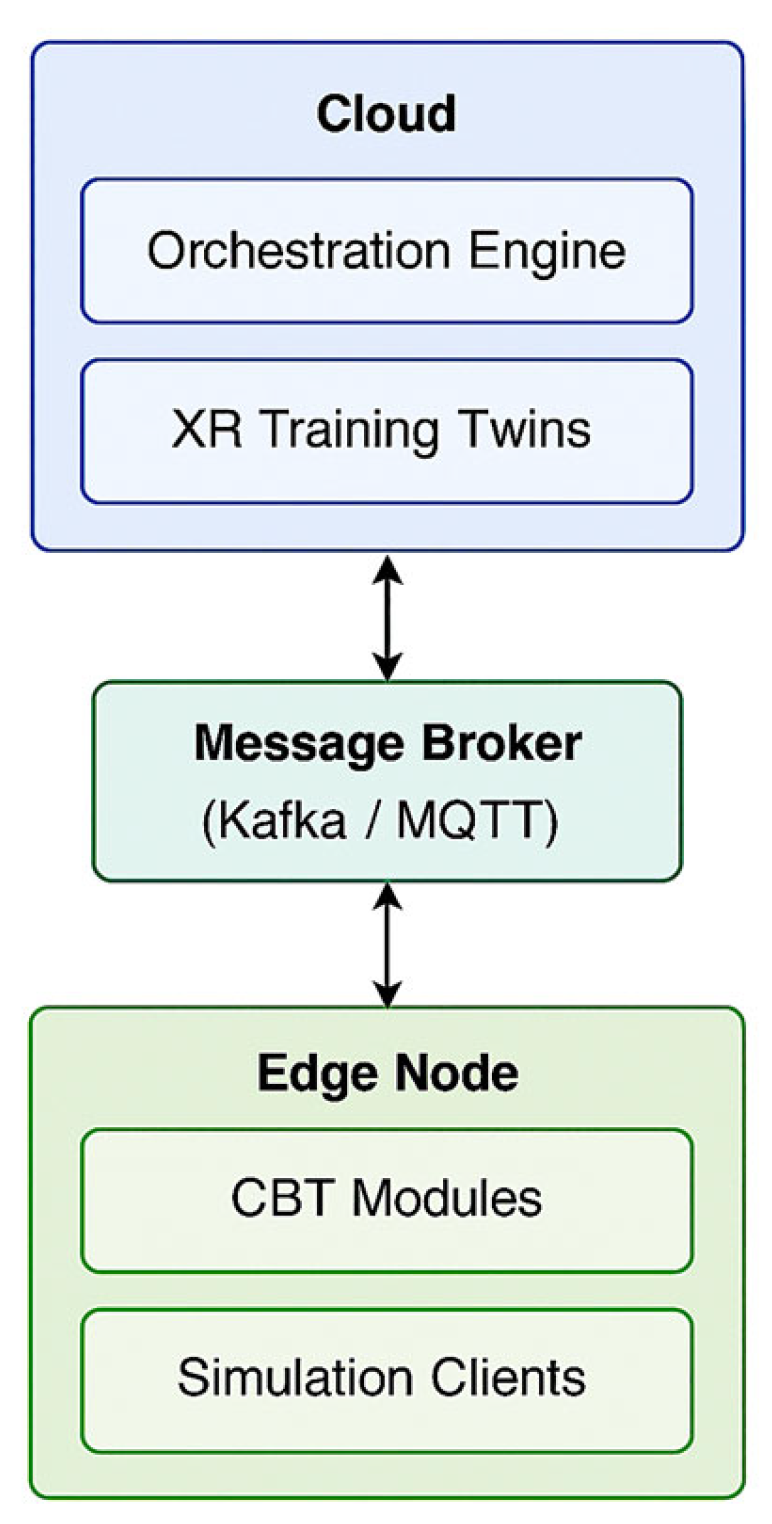

2.3. Cloud–Edge Deployment Architecture

2.4. Algorithms for Orchestration and Analytics

2.5. Simulation Methodology

3. Results

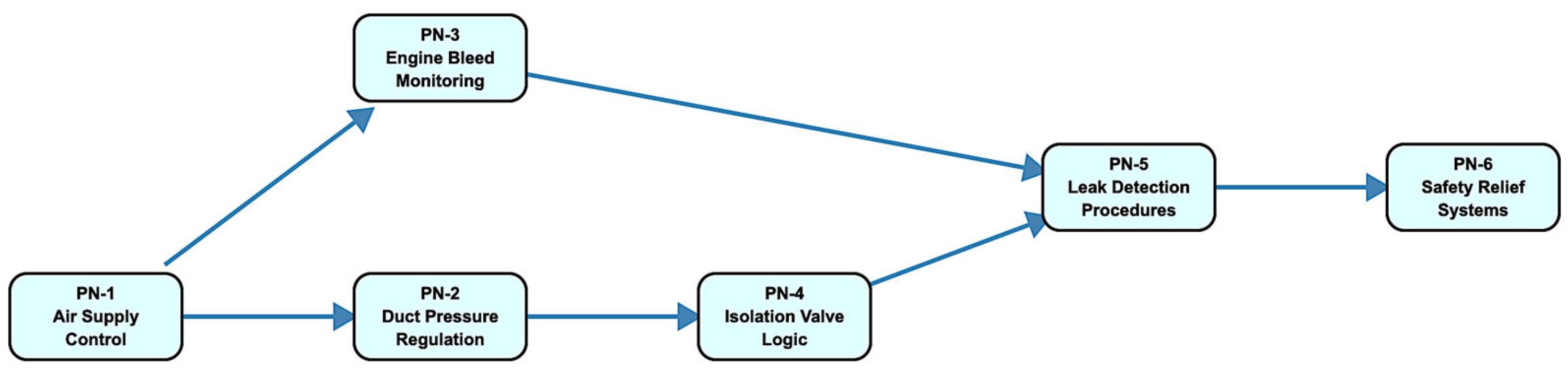

3.1. Learner Initialization and Competence Profiles

- PN-1: Air Supply Control;

- PN-2: Duct Pressure Regulation;

- PN-3: Engine Bleed Monitoring;

- PN-4: Isolation Valve Logic;

- PN-5: Leak Detection Procedures;

- PN-6: Safety Relief Systems.

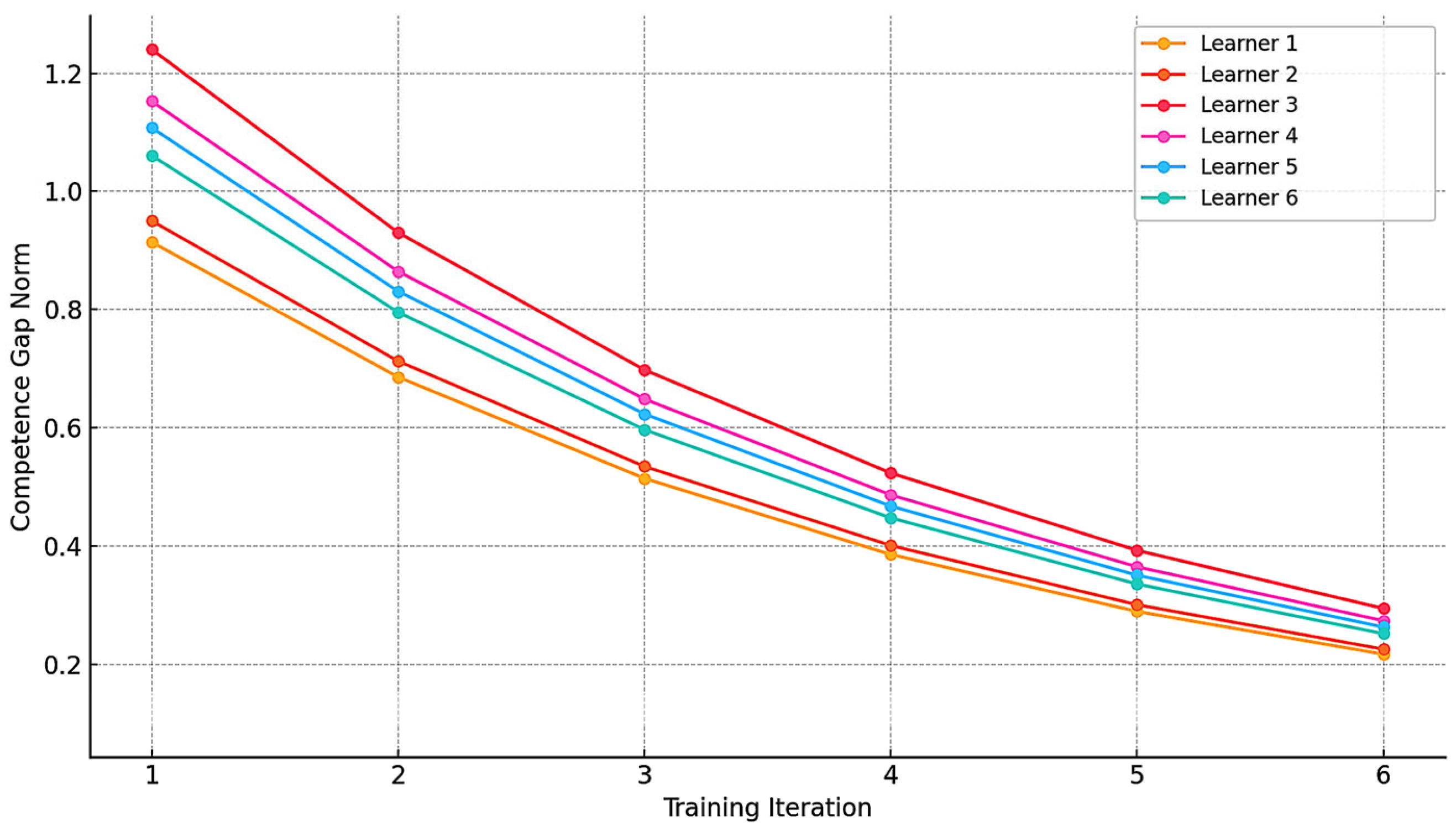

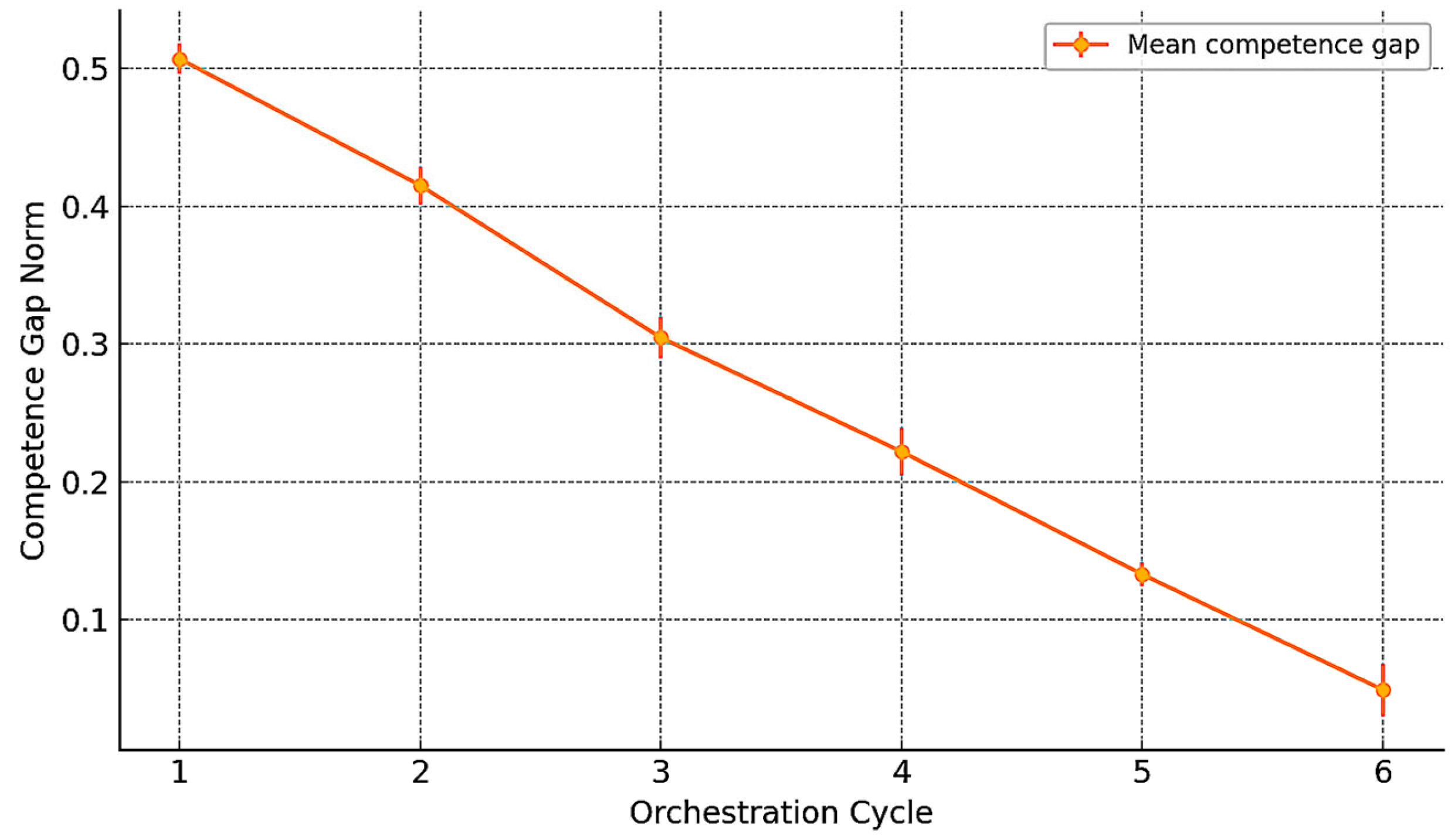

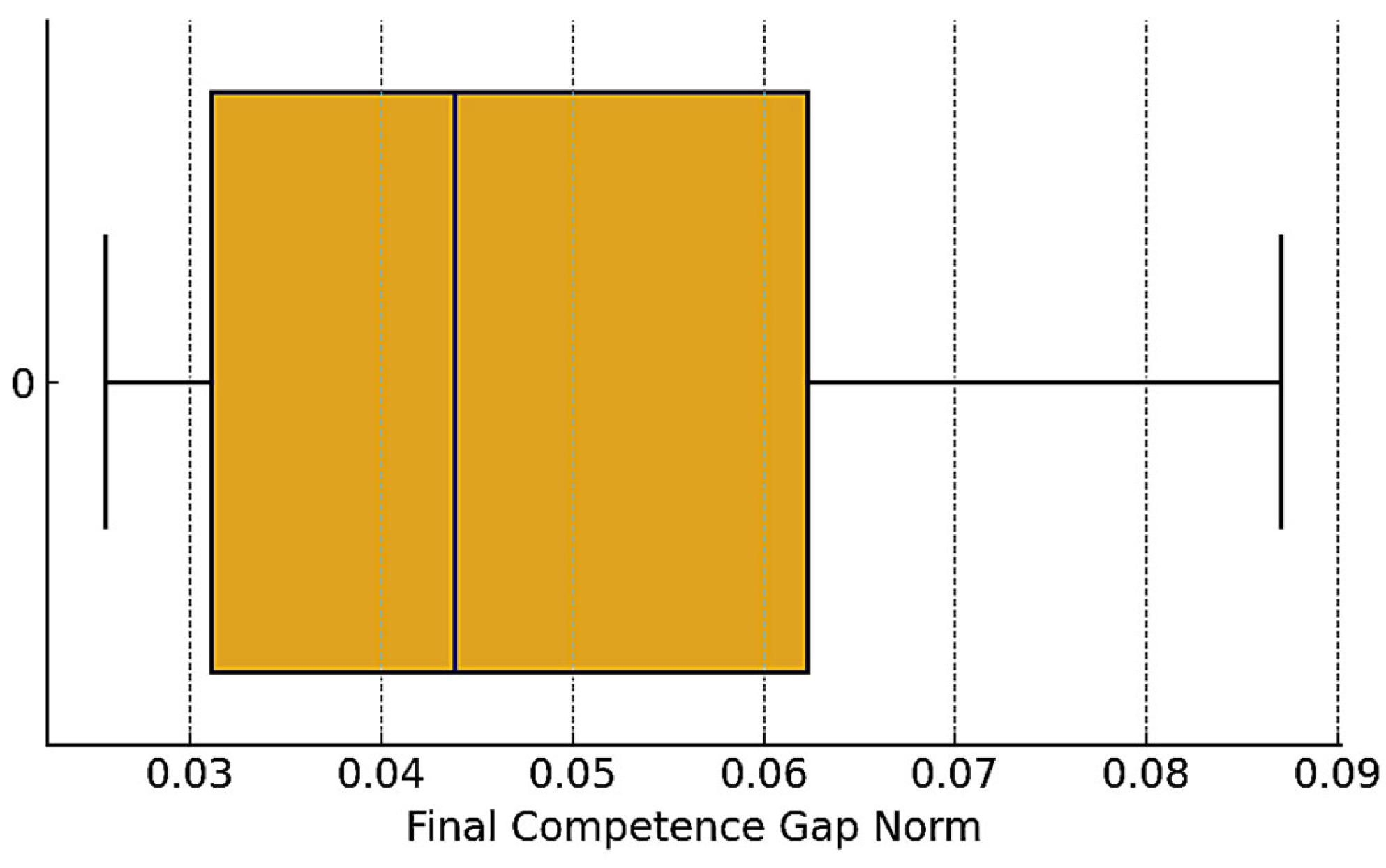

3.2. Orchestration Cycles and Convergence Behavior

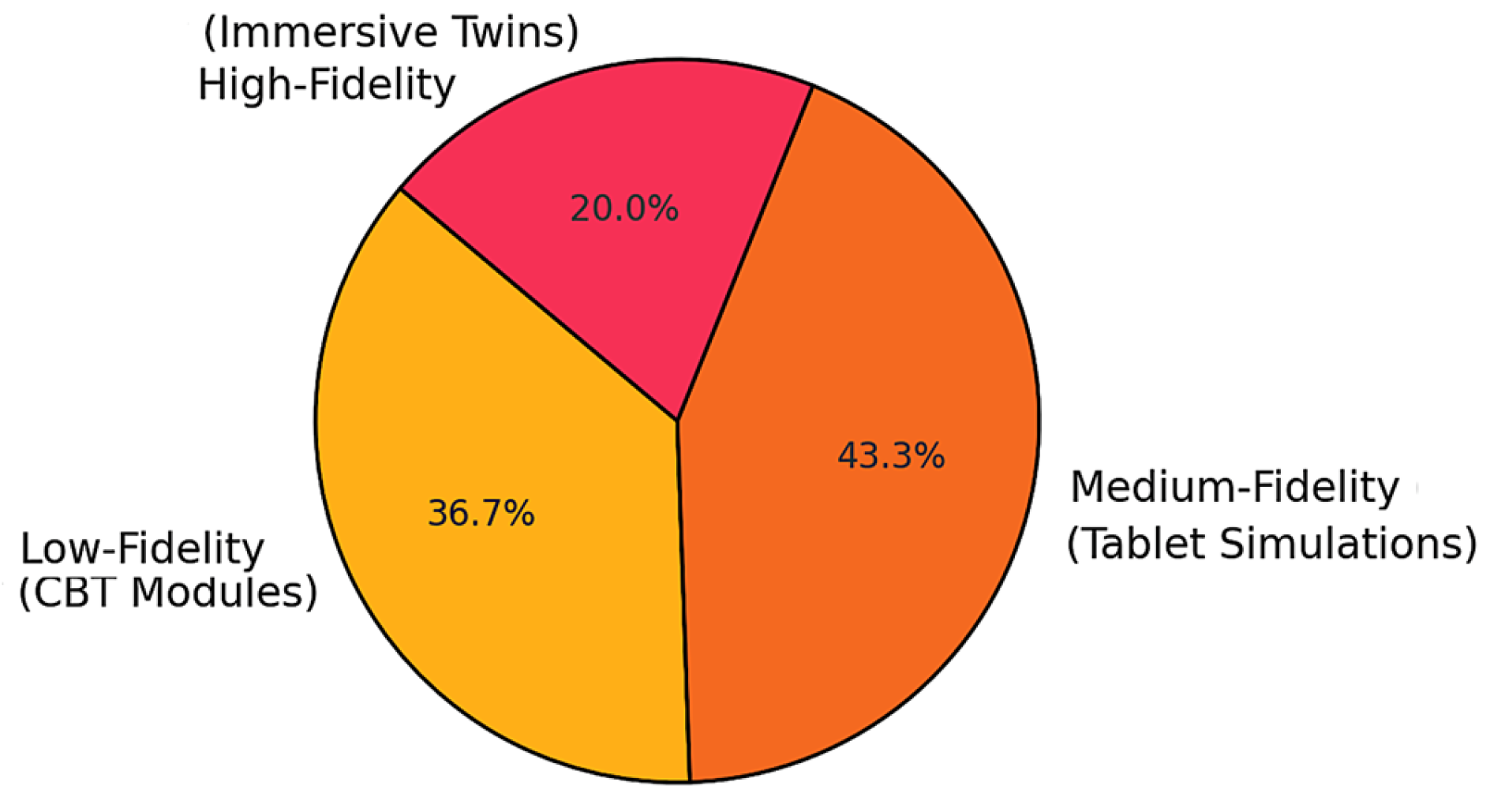

3.3. Fidelity Allocation Behavior

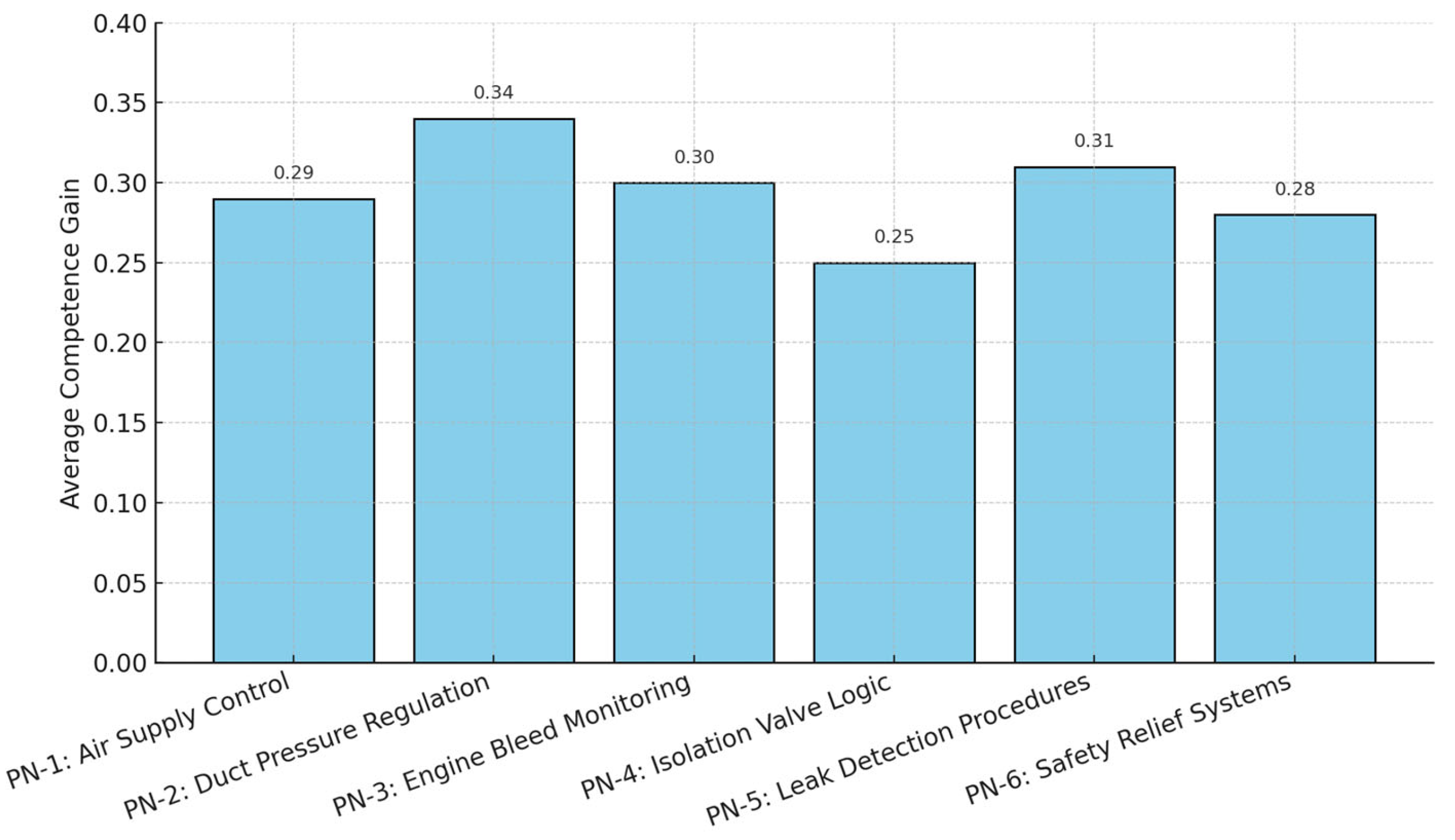

3.4. Domain-Specific Competence Gains

- PN-2: Duct Pressure Regulation, average gain +0.34;

- PN-5: Leak Detection Procedures, average gain +0.31;

- PN-3: Engine Bleed Monitoring, average gain +0.30.

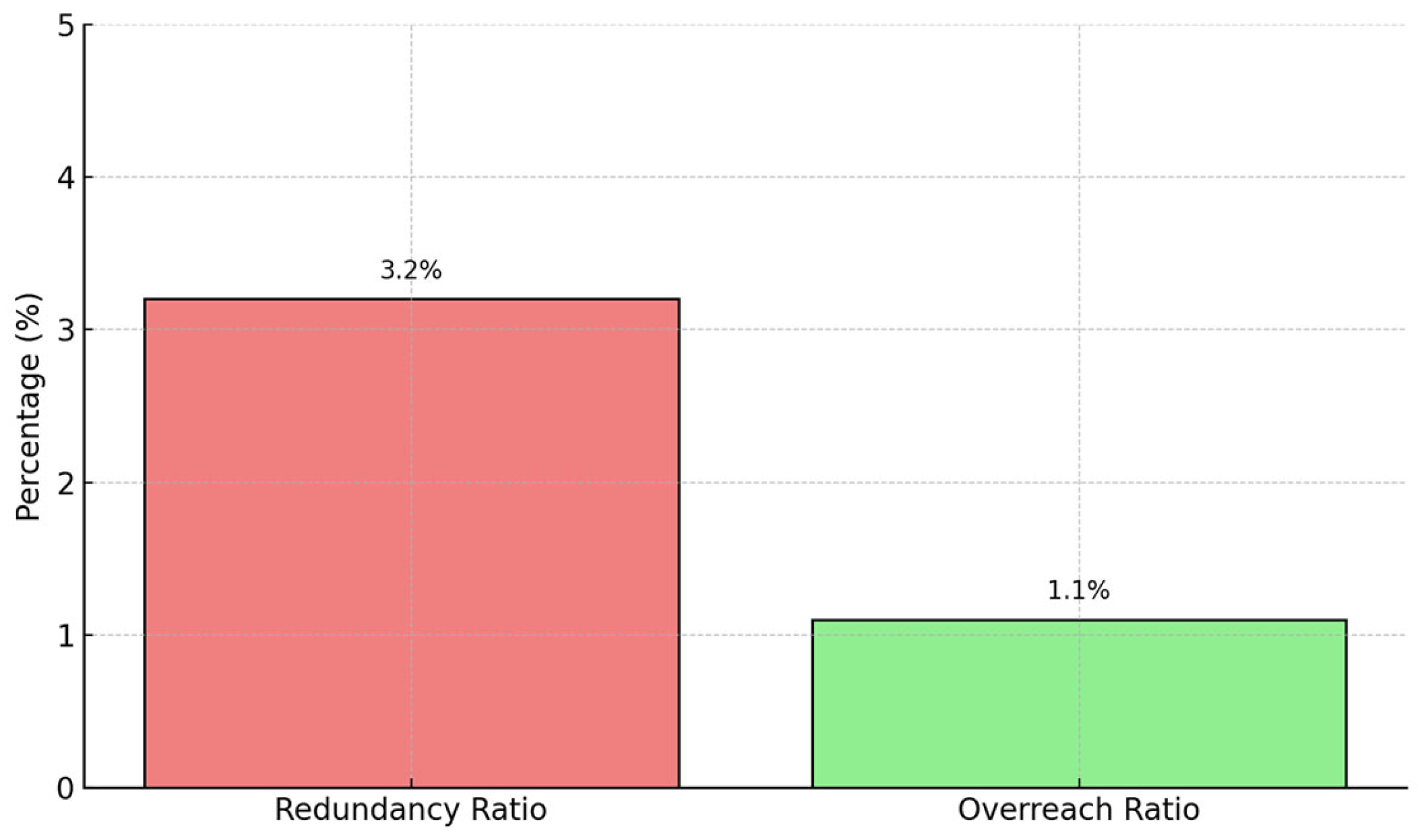

3.5. Personalization Accuracy Metrics

3.6. System Traceability and Auditability

- Learner ID—uniquely identifies each learner within the system;

- Iteration—sequential orchestration cycle;

- Skill Domain—specific competence dimension addressed during the session;

- Pre-Training Gap—calculated skill gap prior to resource assignment;

- Resource ID—internal identifier of the training asset assigned;

- Fidelity Tier—resource fidelity classification (low, medium, high);

- Bloom Level—cognitive complexity level based on Bloom’s Taxonomy (levels 1–6);

- Session Duration—scheduled or actual training time for the assignment;

- Instructional Effectiveness Coefficient—resource-specific learning gain multiplier;

- Post-Training Competence—updated competence value following session completion.
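The log fields listed above map naturally onto a typed record. The following is a minimal sketch, not the author's implementation: the class name `AuditRecord`, the attribute names, and the validation rules are assumptions chosen for illustration. Only the field meanings, the three fidelity tiers, and the Bloom range 1–6 come from the list. The sample values are taken from the first row of the example log table.

```python
from dataclasses import dataclass

# Fidelity tiers named in the field list; lowercase spelling is an assumption.
FIDELITY_TIERS = ("low", "medium", "high")


@dataclass(frozen=True)
class AuditRecord:
    """One orchestration log entry (hypothetical field names)."""

    learner_id: str
    iteration: int
    skill_domain: str
    pre_training_gap: float
    resource_id: str
    fidelity_tier: str
    bloom_level: int
    session_duration_min: int
    effectiveness: float
    post_training_competence: float

    def __post_init__(self) -> None:
        # Reject values outside the ranges stated in the field list.
        if self.fidelity_tier not in FIDELITY_TIERS:
            raise ValueError(f"unknown fidelity tier: {self.fidelity_tier!r}")
        if not 1 <= self.bloom_level <= 6:
            raise ValueError("Bloom level must be between 1 and 6")
        # Assumption: orchestration cycles are numbered from 1.
        if self.iteration < 1:
            raise ValueError("iteration must be a positive cycle number")


# Sample entry using the values of the first row of the example log.
record = AuditRecord("L1", 1, "PN-2", 0.40, "R102", "low", 2, 20, 0.3, 0.62)
print(record)
```

Freezing the dataclass keeps each entry immutable once written, which suits an audit trail. A production log would likely also need timestamps and a tamper-evident store, neither of which is specified here.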

4. Discussion

4.1. Integrated Evaluation of Orchestration Efficiency

4.2. Ethical and Regulatory Considerations

4.3. Limitations and Future Research Directions

- The current competence update model assumes fixed responsiveness coefficients across learners. Incorporating adaptive learning rate estimation based on real-world learner behavior could further improve personalization precision.

- The simulation presently focuses on a single ATA domain (ATA 36 Pneumatic Systems); expansion to multi-domain, cross-ATA orchestration remains an important next step.

- Integration of real-world learner data into the federated learning modules will enable predictive modeling for skill gap evolution and facilitate early intervention strategies.

- Extending orchestration algorithms to incorporate real-time instructor feedback may enable hybrid human-in-the-loop personalization models that balance algorithmic optimization with expert pedagogical judgment.

- While federated learning was implemented in a horizontal architecture for privacy-preserving updates, no adversarial scenarios (e.g., model poisoning or node dropout) were simulated. Future work should explore robust federated learning techniques, including differential privacy, secure aggregation, and federated unlearning, to improve resilience and trust in decentralized training environments.

5. Conclusions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Skill Domain | Target | Learner 1 | Learner 2 | Learner 3 | Learner 4 | Learner 5 | Learner 6 |
|---|---|---|---|---|---|---|---|
| PN-1: Air Supply Control | 0.92 | 0.55 | 0.66 | 0.61 | 0.61 | 0.64 | 0.58 |
| PN-2: Duct Pressure Regulation | 0.88 | 0.48 | 0.47 | 0.56 | 0.64 | 0.66 | 0.67 |
| PN-3: Engine Bleed Monitoring | 0.90 | 0.52 | 0.54 | 0.49 | 0.55 | 0.56 | 0.61 |
| PN-4: Isolation Valve Logic | 0.89 | 0.62 | 0.54 | 0.63 | 0.67 | 0.52 | 0.68 |
| PN-5: Leak Detection Procedures | 0.91 | 0.44 | 0.50 | 0.42 | 0.50 | 0.55 | 0.51 |
| PN-6: Safety Relief Systems | 0.93 | 0.49 | 0.60 | 0.46 | 0.53 | 0.63 | 0.52 |
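The per-domain skill gaps implied by the table above can be derived by subtracting each learner's initial competence from the target. The sketch below does this for the first two learners. The helper names `skill_gaps` and `rms_gap` are hypothetical, and the root-mean-square summary is only one possible scalar measure: this excerpt does not define the "gap norm" reported elsewhere, so the values computed here should not be expected to reproduce it.

```python
import math

DOMAINS = ["PN-1", "PN-2", "PN-3", "PN-4", "PN-5", "PN-6"]

# Target and initial competence values copied from the table above.
TARGET = dict(zip(DOMAINS, [0.92, 0.88, 0.90, 0.89, 0.91, 0.93]))
LEARNERS = {
    "Learner 1": dict(zip(DOMAINS, [0.55, 0.48, 0.52, 0.62, 0.44, 0.49])),
    "Learner 2": dict(zip(DOMAINS, [0.66, 0.47, 0.54, 0.54, 0.50, 0.60])),
}


def skill_gaps(target, current):
    """Per-domain shortfall against the target, floored at zero."""
    # Rounded to two decimals to match the table's precision.
    return {d: round(max(target[d] - current[d], 0.0), 2) for d in target}


def rms_gap(gaps):
    """Root-mean-square gap (an assumed summary, not the source's definition)."""
    return math.sqrt(sum(g * g for g in gaps.values()) / len(gaps))


for name, profile in LEARNERS.items():
    gaps = skill_gaps(TARGET, profile)
    worst = max(gaps, key=gaps.get)  # first domain with the largest gap
    print(f"{name}: largest gap in {worst} ({gaps[worst]:.2f}), "
          f"RMS gap {rms_gap(gaps):.3f}")
```

Flooring the gap at zero means a learner who already exceeds a target contributes no gap in that domain, which is a design choice of this sketch rather than something the source states.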

| Metric | Value | Interpretation |
|---|---|---|
| Initial Competence Gap Norm | 0.38–0.60 | Significant variability in baseline learner profiles |
| Final Competence Gap Norm | <0.10 | Full regulatory convergence achieved in all learners |
| Convergence Iterations | ≤6 iterations | Rapid adaptation within limited training cycles |
| Redundancy Ratio | 3.2% | Minimal unnecessary assignments |
| Overreach Ratio | 1.1% | Very few inappropriate high-fidelity assignments |
| High-Fidelity Usage (XR) | 24% | Immersive twins used sparingly for refinement |
| Low-Fidelity Usage (CBT) | 44% | Broadly used for gap remediation |
| Regulatory Threshold Achievement | 100% | Full compliance across entire learner cohort |

| Learner ID | Iteration | Skill Domain | Pre-Training Gap | Resource ID | Fidelity Tier | Bloom Level | Session Duration (min) | Effectiveness Coefficient | Post-Training Competence |
|---|---|---|---|---|---|---|---|---|---|
| L1 | 1 | PN-2 | 0.40 | R102 | Low | 2 | 20 | 0.3 | 0.62 |
| L1 | 2 | PN-5 | 0.25 | R205 | Medium | 3 | 35 | 0.5 | 0.72 |
| L2 | 1 | PN-3 | 0.38 | R315 | Low | 2 | 25 | 0.3 | 0.75 |
| L3 | 1 | PN-2 | 0.42 | R102 | Low | 2 | 20 | 0.3 | 0.60 |
| L4 | 3 | PN-5 | 0.18 | R205 | High | 5 | 50 | 0.8 | 0.88 |
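Log rows such as those above can be aggregated into fidelity-tier usage shares. The sketch below counts tiers over the five example rows only. The function name `tier_shares` and the tuple layout are assumptions for illustration, and because the rows are an excerpt, the resulting shares are not expected to match cohort-level usage figures.

```python
from collections import Counter

# (learner, iteration, skill domain, fidelity tier) from the five rows above.
ROWS = [
    ("L1", 1, "PN-2", "Low"),
    ("L1", 2, "PN-5", "Medium"),
    ("L2", 1, "PN-3", "Low"),
    ("L3", 1, "PN-2", "Low"),
    ("L4", 3, "PN-5", "High"),
]

TIERS = ("Low", "Medium", "High")


def tier_shares(rows):
    """Fraction of assignments per fidelity tier (all zeros for an empty log)."""
    counts = Counter(tier for *_, tier in rows)
    total = sum(counts.values())
    if total == 0:
        return {tier: 0.0 for tier in TIERS}
    return {tier: counts[tier] / total for tier in TIERS}


shares = tier_shares(ROWS)
for tier in TIERS:
    print(f"{tier}: {shares[tier]:.0%}")
```

The same grouping could be applied per learner or per iteration to see how tier usage shifts as gaps close.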


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kabashkin, I. Development of Digital Training Twins in the Aircraft Maintenance Ecosystem. Algorithms 2025, 18, 411. https://doi.org/10.3390/a18070411
