Abstract

Nowadays, portrait drawing has become increasingly popular as a means of developing artistic skills and nurturing emotional expression. However, it is challenging for novices to start learning it, as they usually lack a solid grasp of the proportions and structure of facial features. To address this problem, we have studied the Portrait Drawing Learning Assistant System (PDLAS), which guides novices by providing auxiliary lines of facial features generated with the OpenPose and OpenCV libraries. For PDLAS, we have also presented the exactness assessment method, which evaluates drawing accuracy with the Normalized Cross-Correlation (NCC) algorithm by calculating the similarity score between the drawing result and the original portrait photo. Unfortunately, the current method does not assess the hair drawing, although the hair occupies a large part of a portrait and often determines its quality. In this paper, we present a hair drawing evaluation algorithm for the exactness assessment method to offer comprehensive feedback to users in PDLAS. To emphasize hair lines, this algorithm extracts the texture of the hair region by computing the eigenvalues and eigenvectors of the hair image. For evaluations, we applied the proposal to drawing results by seven students from Okayama University, Japan, and confirmed its validity. In addition, we observed NCC score improvements in PDLAS when users modified the face parts that received low similarity scores from the exactness assessment method.

1. Introduction

Nowadays, the practice of portrait drawing has become increasingly popular as a means of fostering artistic talent and empathy [1]. Despite its popularity, portrait drawing remains challenging, especially for novices, who frequently find it difficult to understand the proportions and structure of facial features without professional help [2]. To address this problem, we have studied and implemented the Portrait Drawing Learning Assistant System (PDLAS), which guides beginners in drawing a portrait by providing auxiliary lines of facial features [3]. The OpenPose [4] and OpenCV [5] libraries are used together to construct and integrate the auxiliary lines from a given face photo. In PDLAS, the user sketches the portrait on an iPad with a drawing application, following the auxiliary lines as guidance. To give feedback to the user, we have designed and implemented the exactness assessment method, which quantifies the similarity of individual face parts, such as the mouth, the nose, and the eyes, between the user’s drawing result and the face photo using the normalized cross-correlation (NCC) algorithm [6]. This method extracts a rectangle called a bounding box that surrounds each face part, and uses the NCC value between the corresponding bounding boxes in the face photo and the drawing result as the similarity score of that part. Unfortunately, the currently implemented method does not assess the hair drawing, although the hair occupies a large part of a portrait and often determines its quality. In this paper, we present a hair drawing evaluation algorithm for the exactness assessment method to offer comprehensive feedback to users in PDLAS. A new procedure is defined for extracting the bounding box of the hair. In addition, to emphasize hair lines, the texture inside this bounding box is extracted by computing the eigenvalues and eigenvectors of the hair image before the NCC algorithm is applied.
For evaluations, we applied the proposed hair drawing evaluation algorithm to the drawing results created with PDLAS by seven students at Okayama University, Japan, and confirmed its validity. In particular, by comparing the NCC values before and after considering the texture, we verified that considering the texture improves the evaluation. As an overall evaluation of PDLAS, we asked three students to repeatedly modify the face parts that received low similarity scores from the exactness assessment method, and observed NCC score improvements for all three. This paper is structured as follows:

Section 2 introduces related works in the literature. Section 3 reviews the Portrait Drawing Learning Assistant System (PDLAS). Section 4 proposes the hair drawing evaluation algorithm. Section 5 evaluates the proposal through its application to portrait drawing results by students. Section 6 evaluates iterative drawing with PDLAS using the drawing exactness evaluation method. Section 7 discusses the advantages of the adopted approaches and the targets for future studies. Finally, Section 8 concludes this paper with future works.

2. Related Works in the Literature

This section provides an overview of related work in the literature. In [7], Huang Z. et al. developed an artificial intelligence painting agent capable of sequentially applying strokes to a canvas to recreate a given image. They used neural networks to generate parameters that determine the position, shape, color, and transparency of the strokes. While previous studies had focused on teaching agents skills such as sketching, doodling, and character writing, this work aimed to train the agent for more complex tasks, such as painting portraits of humans and natural scenes, which are characterized by intricate textures and complex structural compositions. In [8], Yi R. et al. introduced a quality metric for portrait drawings based on human perception, and incorporated a quality loss to steer the neural network toward producing more visually appealing portrait drawings. They noted that existing unpaired translation approaches such as CycleGAN often embed hidden reconstruction details indiscriminately across the entire drawing. This is attributed to the significant imbalance in information between the photo and portrait drawing domains, which results in the omission of crucial facial features. In [9], Takagi S. et al. developed an image-to-pencil translation method capable of not only producing high-quality pencil sketches but also simulating the drawing process. Unlike traditional pencil sketch algorithms, which focus on texture rendering rather than directly replicating strokes, this approach goes beyond delivering a final result by also showing the step-by-step drawing process. In [10], Looi L. et al. introduced a method of generating drawing guidelines from a front-facing facial image. Creating a portrait drawing involves breaking the face down into fundamental guidelines that serve as the basis for the artwork.
Their approach leverages a modified Histogram of Oriented Gradients (HOG) and a linear support vector machine (SVM) to detect the face’s region of interest within the image. To determine the positions of the key facial landmarks, they employed an ensemble of randomized regression trees. Using selected points from the detected landmarks and data on average human head proportions, they estimated the drawing guidelines. In [11], Takagi S. et al. designed a learning support system to assist beginners in pencil drawing, focusing on the foundational aspects of creating pictures. The system takes a dataset of motifs and a user’s sketch as input and returns tailored advice. The process involves four main functions: extracting features from motifs, extracting features from sketches, identifying errors, and generating and presenting advice. A prototype system was developed and tested, with its scope limited to basic motifs and primary advice. In [12], Huang Z. et al. introduced dualFace, a portrait drawing interface designed to help users of varying skill levels create recognizable and realistic face sketches. Inspired by traditional portrait drawing techniques, dualFace provides assistance in two stages to offer both global and local visual guidance. The first stage supports users in drawing contour lines for the overall geometric structure of the portrait, while the second stage aids in adding detailed features to facial parts so that they align with the contour lines the users have drawn. In [13], Yi R. et al. proposed APDrawingGAN, a novel GAN-based architecture built upon hierarchical generators and discriminators that combine a global network for the image as a whole and local networks for individual facial regions. This allows dedicated drawing strategies to be learned for different facial features.
Since artists’ drawings may not have lines perfectly aligned with image features, they developed a novel loss based on distance transforms to measure the similarity between generated and artists’ drawings, leading to improved strokes in portrait drawing. To train APDrawingGAN, they constructed an artistic drawing dataset containing high-resolution portrait photos and corresponding professional artistic drawings. In [14], Huang Z. et al. introduced an AI-driven system designed to assist users in creating professional-quality anime portraits by progressively refining their sketches. Unlike traditional sketch-to-image (S2I) approaches, which struggle to generate high-quality images from incomplete rough sketches, this system maintains consistency and aesthetic quality throughout the sketching process. They addressed the challenge of producing high-quality outputs from low-completion sketches by exploring the latent space of StyleGAN combined with a two-stage training strategy. Specifically, they introduced the concept of stroke-level disentanglement, in which freehand sketch strokes are aligned with structural attributes in the latent space of StyleGAN. In [15], Singh J. et al. were motivated by the need to learn more human-intelligible painting sequences in order to facilitate the use of autonomous painting systems in a more interactive context. To this end, they proposed a painting approach that learns to generate output canvases while exhibiting a painting style that is more relatable to human users. Their painting pipeline, Intelli-Paint, has three features. (1) A progressive layering strategy allows the agent to first paint a natural background scene before progressively adding each of the foreground objects. (2) A sequential brushstroke guidance strategy helps the painting agent shift its attention between different image regions in a semantic-aware manner.
(3) A brushstroke regularization strategy allows for a 60–80% reduction in the total number of required brushstrokes without any perceivable difference in the quality of generated canvases. In [16], Li S. et al. introduced AgeFace, an interactive drawing interface designed to help people create facial features with age-specific characteristics based on their input strokes. To assess its effectiveness, they conducted a user experience study and a comparative experiment against baseline methods. The results demonstrated that AgeFace outperformed the baseline systems in usability and provided better support for the creative process. In conclusion, although these methods and approaches have significantly enhanced the picture drawing process, they mainly focus on improving the final result or on automated drawing by agents. They do not directly mentor novices during the learning process, nor do they offer detailed guidelines to help beginners improve their drawing. Furthermore, most of these studies lack an assessment system that gives feedback to users. This gap calls for further studies and for a system that teaches beginners the fundamentals of portrait drawing with guidance, feedback, and instructions.

3. Review of Portrait Drawing Learning Assistant System

This section provides an overview of Portrait Drawing Learning Assistant System (PDLAS) [17].

3.1. System Overview

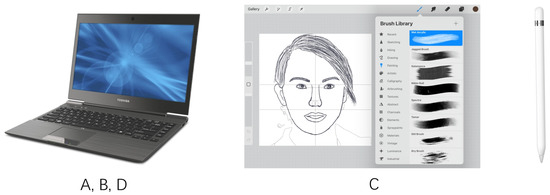

We have designed and developed PDLAS to support beginners in drawing portraits on electronic devices, such as tablets or personal computers (PCs), equipped with a digital pen and drawing software. In our system setup, we use a conventional PC, an iPad [18] with Apple Pencil [19] as the drawing device, and Procreate v5.3.14 [20] as the drawing application, as illustrated in Figure 1.

Figure 1.

Devices used in PDLAS.

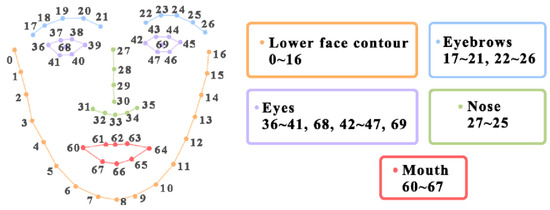

3.2. Auxiliary Lines

To assist novice users in creating portraits, an image with auxiliary lines is generated on a PC by the proposed auxiliary line generation algorithm and added in Procreate as a separate layer, distinct from the drawing layer. This algorithm, implemented as a Python 3.7 program, processes a given face photo using the OpenPose and OpenCV libraries. OpenPose extracts the coordinates of 70 keypoints from a face image, as shown in Figure 2. These keypoints mark the locations that define the structure of the face. Based on these coordinates, the algorithm generates auxiliary lines for facial features such as the eyes, eyebrows, nose, and mouth. OpenCV is then employed to create auxiliary lines for additional elements, including the hair and eyeglasses.

Figure 2.

Face keypoints by OpenPose [3].

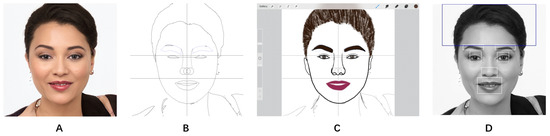

3.3. System Utilization Flow

Figure 3 illustrates the utilization flow of PDLAS. The process begins on a PC, where the original face image is imported and the auxiliary line image is generated (Processes A and B). The guidelines in the auxiliary line image serve as a reference that helps users draw a portrait more accurately. Next, the auxiliary line image is transferred to the iPad and imported into the digital drawing software Procreate (Process C). Then, using Apple Pencil and the drawing tools in Procreate, the user draws the portrait while referring to the auxiliary lines. Once the drawing is completed, the drawing result is transferred back to the PC for evaluation (Process D). The assessment method analyzes the drawing result and calculates similarity scores. The user can review the scores and make adjustments to improve the artwork. This iterative process allows users to refine their drawings with systematic feedback, enhancing both their accuracy and artistic skills.

Figure 3.

Flow of using PDLAS: (A) face photo; (B) auxiliary lines; (C) drawing; and (D) assessment.

The detailed utilization procedure of PDLAS is described as follows:

1. Import a portrait photo file as the face image to a PC.

2. Run the auxiliary line generation algorithm on the PC to generate the auxiliary line image.

3. Run Procreate on the iPad.

4. Import the face image and the auxiliary line image into Procreate on the iPad as separate layers.

5. Draw the portrait using the brushes and layer functions provided in Procreate.

6. Save the drawing result image as a PNG file.

7. Apply the drawing exactness evaluation method to the result image and calculate the NCC scores for the hair, the eyes, the eyebrows, the mouth, and the nose.

8. If the NCC scores of some face parts are low, modify those parts and go back to Step 7.
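Steps 7 and 8 form a feedback loop in which the user revisits the weakest face parts. As a minimal Python sketch of how those parts could be picked out, assuming the per-part NCC scores are available as a dictionary, the hypothetical helper below returns the parts whose score falls below a threshold, lowest first. The function name, the part names, and the default threshold of 0.5 are illustrative assumptions, not values defined by PDLAS.

```python
def parts_to_revise(scores, threshold=0.5):
    """Return the face parts whose NCC score is below `threshold`,
    ordered from the lowest score to the highest.

    `scores` maps a part name to its NCC score in [-1, 1].
    The default threshold is a hypothetical choice for illustration.
    """
    low = [(part, score) for part, score in scores.items() if score < threshold]
    low.sort(key=lambda item: item[1])  # weakest part first
    return [part for part, _ in low]


# Made-up scores, used only to show the call.
example = {"hair": 0.31, "nose": 0.61, "mouth": 0.45, "left eye": 0.70}
print(parts_to_revise(example))  # ['hair', 'mouth']
```

Ordering by score lets the user start with the part that deviates most from the face photo before re-running the evaluation.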

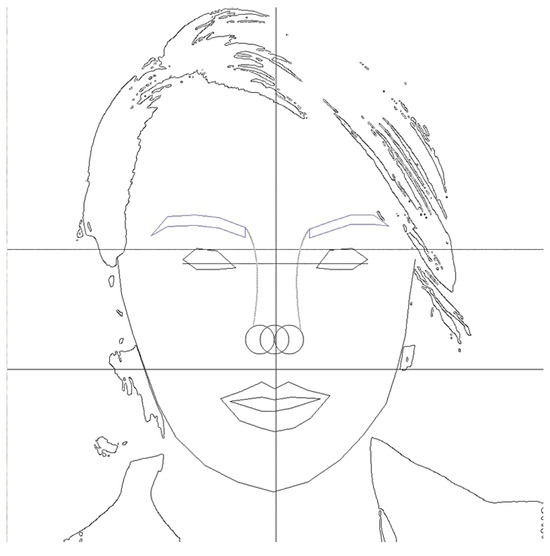

3.4. Auxiliary Line Image

Figures 4 and 5 show an example face photo and the auxiliary line image generated from it by the algorithm, respectively. The auxiliary lines highlight the key facial features and structural elements. With these guiding lines, users can focus on their artistic interpretation and on refining details without being overwhelmed by the complexity of freehand sketching.

Figure 4.

Example face photo.

Figure 5.

Auxiliary line image.

The auxiliary lines are designed to help users understand the overall facial structure and accurately place the individual face parts when drawing a portrait. They include the following:

- Triangular guidelines that are commonly employed in traditional drawing techniques [21];

- Contours that outline the eyes, the mouth, and the lower facial shape;

- Three circular guidelines that indicate the structure of the nose;

- Nose lines drawn with Bézier curves to mimic the shape of a human nose;

- Boundary lines defining the hair, the eyebrows, and the eyeglasses.
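The nose lines in this list are drawn as Bézier curves. The source does not state the curve degree or which keypoints serve as control points, so the following Python sketch simply assumes a quadratic Bézier curve with two end points and one control point (for example, points derived from OpenPose nose keypoints) and samples it into a polyline. The function name `quadratic_bezier` and the example coordinates are hypothetical.

```python
def quadratic_bezier(p0, p1, p2, n=20):
    """Sample n points on the quadratic Bezier curve
    B(t) = (1 - t)^2 * p0 + 2 (1 - t) t * p1 + t^2 * p2, t in [0, 1].

    p0 and p2 are the curve's end points; p1 is the control point that
    pulls the curve toward itself. All points are (x, y) tuples.
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    points = []
    for i in range(n):
        t = i / (n - 1)  # evenly spaced parameter values from 0 to 1
        u = 1.0 - t
        x = u * u * p0[0] + 2 * u * t * p1[0] + t * t * p2[0]
        y = u * u * p0[1] + 2 * u * t * p1[1] + t * t * p2[1]
        points.append((x, y))
    return points


# Hypothetical curve: end points and control point are made up.
print(quadratic_bezier((0, 0), (5, 10), (10, 0), n=3))
# [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]
```

The sampled points could then be rounded to integers and drawn as a polyline on the auxiliary line image, for instance with OpenCV's `cv2.polylines`.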

3.5. Auxiliary Line Generation Algorithm

Among the above auxiliary lines, the first three are generated from the coordinates of the corresponding keypoints found by OpenPose, and the remaining ones are generated using OpenCV library functions. Table 1 shows the keypoint indices used to generate the corresponding auxiliary lines.

Table 1.

OpenPose keypoints used for auxiliary line calculations [3].

3.6. Drawing Practice on iPad

The auxiliary line image is imported into Procreate on the iPad for users to draw portraits. The combination of the auxiliary line image and the intuitive tools in Procreate provides a seamless, low-barrier workflow that helps users produce high-quality portraits with ease and precision. This process not only simplifies drawing tasks but also fosters learning and skill development, helping students in particular improve their portrait drawing techniques.

3.7. Drawing Exactness Evaluation Method

To evaluate users’ drawing results in PDLAS, we have proposed the drawing exactness evaluation method using normalized cross-correlation (NCC).

3.7.1. Normalized Cross-Correlation

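This approach utilizes the normalized cross-correlation (NCC) algorithm [22] to assess the similarity between a user’s drawing and the given face photo. NCC is a reliable measure of the resemblance between two signals and is especially well suited to image processing [23,24,25,26]. As a widely used similarity measure in matching tasks, NCC stands out for its simplicity and effectiveness [27]. In template matching, NCC finds where a template best matches an image by locating the maximum correlation value [28,29]. The algorithm provides a robust framework for pixel-by-pixel evaluation of likeness, focusing primarily on how accurately the shapes and positions of facial features are reproduced. For a region $I$ of the face photo and the corresponding region $T$ of the drawing result, both of the same size, NCC is calculated in its standard zero-mean form as follows [30]:

$$\mathrm{NCC}(I,T)=\frac{\sum_{x,y}\bigl(I(x,y)-\bar{I}\bigr)\bigl(T(x,y)-\bar{T}\bigr)}{\sqrt{\sum_{x,y}\bigl(I(x,y)-\bar{I}\bigr)^{2}\,\sum_{x,y}\bigl(T(x,y)-\bar{T}\bigr)^{2}}}$$

where $I(x,y)$ and $T(x,y)$ are the pixel values at position $(x,y)$, and $\bar{I}$ and $\bar{T}$ are the mean pixel values of the two regions. The score ranges from −1 to 1, where 1 indicates identical patterns up to brightness and contrast.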

3.7.2. NCC Score for Face Component

The method calculates the NCC score for each individual face part, including the eyes, nose, eyebrows, and mouth, to provide precise and comprehensive feedback. To achieve this, the bounding box, a rectangular frame surrounding each face part, is first extracted from the 70 keypoints detected by OpenPose [31]. The NCC score is then calculated between the bounding-box region of the face photo and the corresponding region of the drawing result. The NCC score for each facial feature is calculated in the following steps:

1. Choose Region: The region of the image that corresponds to the component to be assessed is identified.

2. Extract Feature Vector: The pixel values in this region are extracted to construct the feature vector; pre-processing is required to minimize the impact of noise and variations.

3. Normalize Feature Vector: The feature vector is normalized to zero mean and unit variance, which mitigates the effects of lighting or exposure differences between the images.

4. Compute Dot Product: The similarity is measured by computing the dot product of the two normalized feature vectors.

5. Normalize Similarity Score: The final NCC value is obtained by dividing the dot product by the product of the magnitudes of the normalized feature vectors, yielding the normalized correlation score.
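The five steps above can be sketched in plain Python as follows. This is a minimal illustration, not the PDLAS implementation: regions are assumed to be equally sized lists of pixel rows, the explicit unit-variance scaling of step 3 is folded into step 5 (dividing by the vector magnitudes cancels any scale factor), and returning 0.0 for a constant region is our own assumption to avoid division by zero.

```python
import math


def ncc(region_a, region_b):
    """Zero-mean normalized cross-correlation of two equally sized
    grayscale regions, each given as a list of pixel rows."""
    # Step 2: flatten each region into a feature vector.
    a = [float(v) for row in region_a for v in row]
    b = [float(v) for row in region_b for v in row]
    if not a or len(a) != len(b):
        raise ValueError("regions must be non-empty and of the same size")
    # Step 3: subtract the mean of each vector (zero-mean normalization).
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    a = [v - mean_a for v in a]
    b = [v - mean_b for v in b]
    # Step 4: dot product of the centred vectors.
    dot = sum(x * y for x, y in zip(a, b))
    # Step 5: divide by the product of the magnitudes; this also removes
    # any scale factor, so explicit unit-variance scaling is unnecessary.
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        return 0.0  # assumption: a constant region is treated as uncorrelated
    return dot / norm


photo = [[10, 20, 30], [40, 50, 60]]
brighter = [[2 * v + 5 for v in row] for row in photo]
inverted = [[255 - v for v in row] for row in photo]
print(round(ncc(photo, brighter), 6))  # 1.0
print(round(ncc(photo, inverted), 6))  # -1.0
```

Because the brighter copy differs from the photo region only by a gain and an offset, its score stays at 1.0, which illustrates why the normalization reduces the influence of lighting and exposure differences; an inverted copy scores −1.0.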

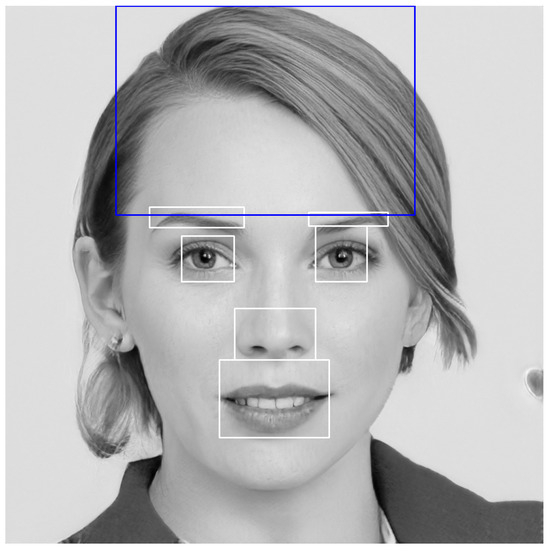

3.7.3. Bounding Box for Face Part

To evaluate the drawn face parts one by one, we extract the rectangle surrounding each part, called the bounding box [32]. In our previous study, the target face parts were both eyebrows, both eyes, the nose, and the mouth. The NCC algorithm is then applied to each bounding box to evaluate the drawing of each face part independently. The bounding box for each part is extracted from the locations of the corresponding keypoints found by OpenPose. Since the bounding box is rectangular, it is determined by the leftmost x value, the rightmost x value, the topmost y value, and the bottommost y value of these keypoints. Table 2 presents the indices of the keypoints used to define the bounding box for each facial feature.

Table 2.

Keypoints for bounding boxes [3].

Figure 6 shows the bounding boxes extracted by the algorithm for the face photo in Figure 4.

Figure 6.

Example bounding boxes (cited from INCIT2024) [32].
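The bounding-box extraction described in this subsection reduces to taking minima and maxima over the selected keypoints. The Python sketch below assumes the keypoints are given as a list of (x, y) pixel coordinates in image space, with y growing downward. The per-part indices are those listed in Table 2, which are not reproduced here, so the keypoints and indices in the example are made up; the optional `margin` padding and both function names are hypothetical.

```python
def bounding_box(keypoints, indices, margin=0):
    """Axis-aligned box (left, top, right, bottom) around selected keypoints.

    keypoints: list of (x, y) pixel coordinates, e.g. OpenPose face points.
    indices:   positions in `keypoints` that belong to one face part.
    margin:    hypothetical extra padding, in pixels, on every side.
    """
    xs = [keypoints[i][0] for i in indices]
    ys = [keypoints[i][1] for i in indices]
    return (min(xs) - margin, min(ys) - margin,
            max(xs) + margin, max(ys) + margin)


def crop(image, box):
    """Cut an inclusive box out of an image stored as a list of pixel rows
    (row index = y, column index = x); negative corners are clamped to 0."""
    left, top, right, bottom = (int(round(v)) for v in box)
    left, top = max(left, 0), max(top, 0)
    return [row[left:right + 1] for row in image[top:bottom + 1]]


# Made-up keypoints for one face part (not real OpenPose output).
part = [(12, 30), (20, 25), (28, 31), (20, 36)]
print(bounding_box(part, [0, 1, 2, 3]))  # (12, 25, 28, 36)
```

Assuming the drawing result is aligned with the face photo, as it is when drawn over the auxiliary line layer, cropping both images with the same box yields the two equally sized regions that the NCC comparison needs.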

4. Proposal of Hair Drawing Evaluation Algorithm

In this section, we propose a hair drawing evaluation algorithm for the exactness assessment method in PDLAS.

4.1. Bounding Box for Hair

First, we define the bounding box that covers the hair. Table 3 shows the OpenPose keypoint indices that determine the four corners of the bounding box. Figure 6 shows the bounding box for the hair in blue.

Table 3.

Keypoints for hair bounding box.

4.2. Hair Texture

The hair texture is an important feature that determines the visual impression of the hair. Hair texture is inherently complex due to its fine details, irregular patterns, and varying densities, which makes the hair the most challenging face part to evaluate in our system [33]. Unlike simpler face parts such as an eye or a mouth, the hair requires a detailed texture analysis, because it involves intricate patterns and the interplay of shading and density. To address this challenge, we adopt a preprocessing step of texture feature detection. This step extracts the texture characteristics from both the original face image and the user’s drawing result using algorithms designed to capture fine-grained details. The extracted features are then compared by evaluating their similarity with the normalized cross-correlation (NCC) method.

Hair Drawing Evaluation Procedure

The hair drawing evaluation algorithm calculates the NCC score of the hair texture as follows:

1. Grayscale conversion: The color input image is converted to a grayscale image.

2. Edge detection: The Canny edge detection algorithm is applied to the grayscale image to identify edges for locating the hair region.

3. Morphological processing: Dilation and erosion operations are applied to refine the edge detection result into a mask.

4. Hair region extraction: The grayscale image is combined with this mask to isolate the hair region.

5. Texture feature detection: The cornerEigenValsAndVecs function in the OpenCV library is applied to compute, for each pixel, the eigenvalues and eigenvectors of the derivative covariance matrix over its neighboring block, producing the texture image of the hair region.

6. NCC score calculation: The NCC score is calculated between the texture images of the original face image and the user’s drawing result.
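Step 5 relies on OpenCV's `cv2.cornerEigenValsAndVecs(src, blockSize, ksize)`, which, for every pixel, sums products of Sobel derivatives over a `blockSize × blockSize` neighborhood into a 2 × 2 covariance matrix and stores its eigenvalues and eigenvectors as six channels (λ1, λ2, x1, y1, x2, y2). To show the idea without OpenCV, the pure-Python sketch below builds the same kind of matrix with a 3 × 3 Sobel kernel and keeps only the larger eigenvalue as the texture value of each pixel. Using the larger eigenvalue as the texture image is our assumption, since the source does not say which output channel PDLAS uses; border handling and scaling are also simplified compared with OpenCV.

```python
import math


def sobel(img):
    """3x3 Sobel derivatives (dI/dx, dI/dy); border pixels are left at 0."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = float(
                (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1])
                - (img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1]))
            gy[y][x] = float(
                (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1])
                - (img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1]))
    return gx, gy


def eigen_texture(img, block_size=3):
    """For every pixel, the larger eigenvalue of the 2x2 derivative
    covariance matrix summed over a block_size x block_size neighborhood."""
    h, w = len(img), len(img[0])
    gx, gy = sobel(img)
    r = block_size // 2
    texture = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sxx = sxy = syy = 0.0
            # Neighborhood clipped at the image border.
            for yy in range(max(0, y - r), min(h, y + r + 1)):
                for xx in range(max(0, x - r), min(w, x + r + 1)):
                    dx, dy = gx[yy][xx], gy[yy][xx]
                    sxx += dx * dx
                    sxy += dx * dy
                    syy += dy * dy
            # Closed-form eigenvalues of [[sxx, sxy], [sxy, syy]].
            half_trace = (sxx + syy) / 2.0
            spread = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy * sxy)
            texture[y][x] = half_trace + spread  # larger eigenvalue
    return texture


# A vertical edge: dark on the left, bright on the right.
edge = [[0, 0, 0, 100, 100, 100] for _ in range(6)]
tex = eigen_texture(edge)
print(tex[2][0], tex[2][2])  # 0.0 960000.0
```

A flat area gives zero texture, while pixels next to a stroke or hair edge give a large value. The texture maps computed for the hair bounding boxes of the face photo and the drawing result would then be compared with NCC, as in step 6.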

5. Evaluation of Hair Drawing Evaluation Algorithm

In this section, we evaluate the proposed hair drawing evaluation algorithm through its application to 12 drawing results created with PDLAS by students at Okayama University, Japan.

5.1. Target Drawing Results

Table 4 shows, for each of the 12 drawing results, the profile of the user and of the person in the face photo used for portrait drawing. These face photos were downloaded from [34].

Table 4.

Target drawing results.

5.2. NCC Score Results

Table 5 lists the NCC scores of the face parts in the drawing results, where the hair is scored by the proposed method both with and without considering the texture. The hair scores are compared with the scores for the eyes, nose, mouth, and eyebrows. The table suggests that considering the texture brings the NCC score for the hair closer to the scores for the other face parts. In the following, we focus on analyzing the details of the hair drawing.

Table 5.

NCC scores for drawing results.

We analyze the hair NCC scores separately for four types of persons in the face photos: a child, a female adult, a male adult, and a female senior.

5.3. User 1 Result Analysis

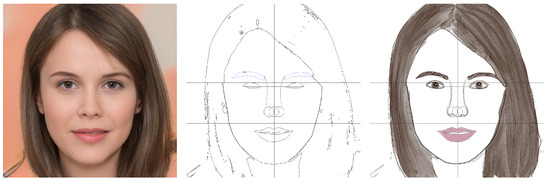

First, we discuss the NCC score for the hair of a child. Figure 7 shows the face photo and the drawing result by User 1.

Figure 7.

Face photo and drawing result by User 1 [32].

This drawing result received the highest NCC score among the seven. It captured the shape of the hair in the face photo well, especially on the top and left sides. It also reproduced the outline and direction of the hair well, with the overall shape and flow of the hair consistent with the face photo. This user used richer strokes, especially at the top of the head and along the hairline. As a result, the drawing conveys the three-dimensionality and volume of the hair and avoids a flat appearance.

5.4. User 3 Result Analysis

Second, we discuss the NCC score for the hair of a female adult. Figure 8 shows the face photo and the drawing result by User 3.

Figure 8.

Face photo and drawing result by User 3 [32].

User 3 used detailed lines to depict the hair, giving texture to the whole hair and conveying its layers. The locations of the hairlines match those in the face photo well. However, on the right side, the layering and subtle variations of the hair are not well represented, and the hair appears too smooth. This user could be advised to use shorter, more delicate strokes to make the hair look natural.

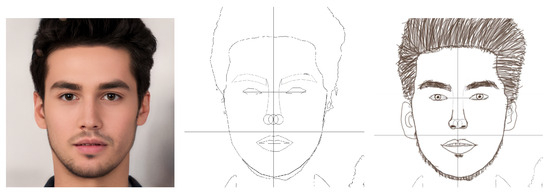

5.5. User 7 Result Analysis

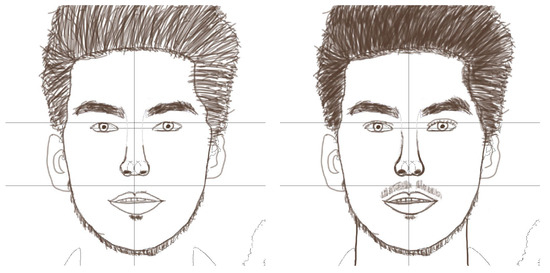

Third, we discuss the NCC score for the hair of a male adult. Figure 9 illustrates the face photo and the drawing result by User 7.

Figure 9.

Face photo and drawing result by User 7.

In this case, the hair density has a large impact on the evaluation. In the face photo, the hair is dense and has fine texture variations. In contrast, the drawing result represents the hair with sparse lines, which cannot capture those details. In addition, the contours of the hair do not exactly match those in the face photo. As a result, the visual impressions of the two differ considerably.

5.6. User 12 Result Analysis

Fourth, we discuss the NCC score for the hair of a female senior. Figure 10 shows the face photo and the drawing result by User 12.

Figure 10.

Face photo and drawing result by User 12.

User 12 received a relatively low NCC score of 0.24 for the hair. Unlike dark or black hair, which typically has stronger edges and higher contrast, this silver/gray hair has softer gradients and lower contrast, which can reduce the correlation between the user’s hair drawing and the original image. In the user’s drawing, the hair is represented mainly with strokes and lacks the shading needed to mimic the soft gray tones of the original. Whereas black hair can be outlined with clear, bold strokes, gray hair requires more shading and subtle variations to achieve a closer match.

6. Evaluation of Iterative Drawing with PDLAS

In this section, we evaluate the effectiveness of iterative portrait drawing with PDLAS. We investigate how the drawing results improve when users repeat redrawing and exactness evaluation.

6.1. NCC Score Improvements

For this evaluation, we invited User 1, User 3, and User 7 in Table 4 to join the experiment. We asked them to modify mainly the face parts that received low NCC scores in their drawing results. Then, we calculated the NCC scores of the modified drawing results. Table 6 presents the NCC scores of the initial and modified drawing results for each of the three users. In the following subsections, we discuss the results of each user in detail.

Table 6.

NCC score improvements by iterative drawing.

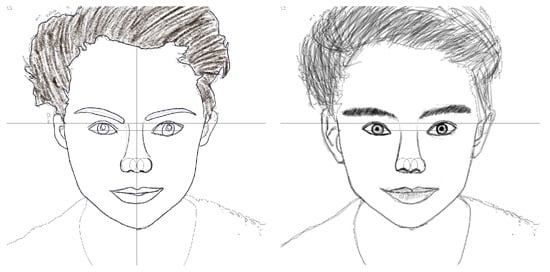

6.2. User 1 Result Analysis

Figure 11 shows the drawing results of User 1. The modified result is visibly improved over the initial one.

Figure 11.

Initial and modified results for User 1.

The average NCC score improved by 0.04 after the modification. The scores of the face parts that had low scores in the initial result improved, except for the right eyebrow. Both eyes and the nose improved substantially: their boundary lines became solid, and the pupils and nostrils were filled in. On the other hand, both eyebrows received low scores compared with the other face parts in both the initial and modified results. This may be due to the eyebrow color, which is brown in the face photo rather than white or black. How to improve this will be investigated in future studies.

6.3. User 3 Result Analysis

Figure 12 displays the drawing results of User 3.

Figure 12.

Initial and modified results for User 3.

The average NCC score improved by 0.06 after the modification, and the score of every face part improved. The hair and the nose improved substantially as their color became darker and their boundary lines became solid.

6.4. User 7 Result Analysis

Figure 13 shows the drawing results of User 7. The modified result is visibly improved over the initial one.

Figure 13.

Initial and modified results for User 7.

The average NCC score improved by 0.02 after the modification. The score of every face part improved except for the mouth. Both eyes improved to some extent as eyelashes and tarsal border lines were added. On the other hand, the hair still received a low score after the modification compared with the other face parts. This may be due to the color and density of the hair: in the face photo, it is black and dense, with little texture. How to improve this hair will be further investigated in future studies.

7. Discussion of Portrait Drawing Evaluation

In this section, we discuss the advantages of the adopted approaches in this proposal and the next targets for future studies.

7.1. Advantages of Auxiliary Lines

The proposed system, PDLAS, has been designed to improve users’ drawing accuracy by showing auxiliary lines during the drawing process. These lines help users correctly grasp the positions and structure of facial features. Without auxiliary lines, users, especially beginners, may often misplace key facial elements such as the eyes, nose, and mouth, which can significantly reduce the overall likeness of the portrait. By providing the auxiliary lines, PDLAS allows users to establish proper proportions and feature placements from the beginning, making it easier for them to achieve accurate and balanced drawings. This structured approach is particularly beneficial for beginners, as it helps them develop a better understanding of facial proportions and composition, leading to more precise, higher-quality portraits and making portrait drawing more enjoyable.

7.2. Advantages of NCC

The hair quality assessment is inherently challenging due to its complex texture, irregular patterns, and varying densities. Traditional evaluation methods often rely on subjective human judgments, which can lead to results that vary significantly between raters. To ensure an objective and quantifiable assessment, our proposal employs a pixel-wise similarity comparison using the normalized cross-correlation (NCC) method. The advantages of the proposed method are as follows:

- Texture feature extraction: The fine hair details are extracted by preprocessing both the reference image and the user’s drawing using edge detection and eigenvalue-based texture analysis.

- Pixel-by-pixel comparison: The NCC score is calculated by comparing corresponding pixels in the texture maps of both images, which ensures that even subtle differences in the texture and density are captured.

- Numerical assessment: The NCC score, ranging from −1 to 1, provides an objective measure of the similarity. A higher score indicates a more accurate reproduction of the hair texture, while a lower score highlights discrepancies in the structure and shading.

These steps ensure that our system provides a detailed and objective evaluation of the hair texture in the user’s drawing. By combining edge detection, texture analysis, and NCC scoring, the proposed system can effectively capture fine details and subtle variations in the hair density.

7.3. Subjective Evaluation

Currently, our system only provides a numerical score based on the NCC similarity measurement. However, this score may not always match human evaluations of portrait drawing quality. People, especially art experts, may consider other factors such as artistic expression, brush strokes, and overall composition. To improve the reliability of our system, it is important to examine how well the NCC score agrees with evaluations by both experts and non-experts. This will reveal the extent to which our scoring method reflects human perception.
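One possible way to quantify this agreement, sketched here under our own assumptions, is Spearman's rank correlation between the NCC scores and the mean human ratings of the same drawings. A rank-based coefficient is a reasonable choice because the NCC score and a rating scale need not be linearly related; only their orderings are compared. The function names, the 1–5 rating scale, and the example values are hypothetical and are not data from our experiments.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den if den > 0 else 0.0


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical example: NCC scores of four drawings and their mean ratings (1-5 scale).
ncc_scores = [0.62, 0.48, 0.71, 0.55]
ratings = [4, 3, 5, 3]
print(spearman(ncc_scores, ratings))
```

A coefficient close to 1 would indicate that the raters and the NCC score rank the drawings in the same order. Computing it separately for expert and non-expert raters would show whether the two groups agree with the score to different extents.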

7.4. Drawing Cartoon Characters with PDLAS

Currently, our system relies on a real face photo and OpenPose, which is primarily designed for human pose estimation and facial keypoint detection in real-world images. Unfortunately, OpenPose does not support feature extraction for cartoon or anime-style characters, since their facial proportions and structures often differ significantly from those of real human faces. For example, anime characters tend to have larger eyes, simplified noses, and exaggerated facial expressions, which do not conform to the predefined face model of OpenPose. To enable cartoon-style drawing guidance, alternatives to OpenPose will be needed, such as deep learning-based facial landmark detection tailored to cartoon characters using models trained on anime or stylized datasets. These approaches will be explored in our future work.

8. Conclusions

This paper presented a hair drawing evaluation algorithm for the exactness assessment method using the localized normalized cross-correlation (NCC) algorithm to offer comprehensive feedback to users in Portrait Drawing Learning Assistant System (PDLAS). PDLAS has been designed as a self-learning tool to enhance accessibility and engagement in art education for novice artists. To emphasize hair lines, this algorithm extracts the texture of the hair region by computing the eigenvalues and eigenvectors of the hair image. For evaluations, the proposal was applied to drawing results produced with PDLAS by seven students from Okayama University, Japan, and its validity was confirmed. In addition, NCC score improvements were observed after modifying the face parts with low similarity scores from the exactness assessment method in PDLAS. This suggests the effectiveness of PDLAS in supporting iterative learning through redrawing and evaluation. However, the current implementation of PDLAS still has several limitations. First, the exactness assessment method only shows the NCC score of each face part in the drawing result, without specific guidance or suggestions on how to improve the part. Second, the subjects in our experiments were limited to university students; subjects more diverse in age, gender, profession, and country should be involved in evaluations. These limitations will be addressed in our future work.

Author Contributions

Y.Z. developed code in line with the research objectives, focusing primarily on implementing parts of the auxiliary line algorithm and the evaluation section, as well as collecting user-generated results. N.F. guided the research from the beginning and defined the research objectives and the system functionality. E.C.F. and A.S. collected data to evaluate the proposal. C.H. refined the writing of the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The authors confirm that all participants involved in this research were fully informed, and all portrait images presented are AI-generated, ensuring there are no portrait rights concerns. Additionally, the authors have strictly complied with all ethical guidelines, including obtaining participant consent and ensuring data protection.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Blake, W.; Lawn, J. Portrait Drawing; Watson-Guptill Publications Inc.: New York City, NY, USA, 1981; pp. 4–5.

2. Brandt, G.B. Bridgman’s Complete Guide to Drawing from Life; Sterling Publishing Co., Inc.: New York City, NY, USA, 2001.

3. Zhang, Y.; Kong, Z.; Funabiki, N.; Hsu, C.-C. A study of a drawing exactness assessment method using localized normalized cross-correlations in a portrait drawing learning assistant system. Computers 2024, 13, 215.

4. CMU Perceptual Computing Lab. Available online: https://cmu-perceptual-computing-lab.github.io/openpose/web/html/doc/index.html (accessed on 1 January 2025).

5. OpenCV. Available online: https://opencv.org/ (accessed on 1 January 2025).

6. Sarvaiya, J.; Patnaik, S.; Bombaywala, S. Image Registration by Template Matching Using Normalized Cross-Correlation. In Proceedings of the International Conference on Advances in Computing, Control, and Telecommunication Technologies, Bangalore, India, 28–29 December 2009.

7. Huang, Z.; Heng, W.; Zhou, S.; Inc, M. Learning to Paint with Model-based Deep Reinforcement Learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

8. Yi, R.; Liu, Y.-J.; Lai, Y.-K.; Rosin, P.L. Quality Metric Guided Portrait Line Drawing Generation from Unpaired Training Data. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 905–918.

9. Tong, Z.; Chen, X.; Ni, B.; Wang, X. Sketch Generation with Drawing Process Guided by Vector Flow and Grayscale. arXiv 2020, arXiv:2012.09004.

10. Looi, L.; Green, R. Estimating Drawing Guidelines for Portrait Drawing. In Proceedings of the IVCNZ, Tauranga, New Zealand, 8–10 December 2021.

11. Takagi, S.; Matsuda, N.; Soga, M.; Taki, H.; Shima, T.; Yoshimoto, F. An educational tool for basic techniques in beginner’s pencil drawing. In Proceedings of the PCGI, Tokyo, Japan, 9–11 July 2003.

12. Huang, Z.; Peng, Y.; Hibino, T.; Zhao, C.; Xie, H. DualFace: Two-stage drawing guidance for freehand portrait sketching. Comput. Vis. Media 2022, 8, 63–77.

13. Yi, R.; Liu, Y.-J.; Lai, Y.-K.; Rosin, P.L. APDrawingGAN: Generating Artistic Portrait Drawings from Face Photos with Hierarchical GANs. In Proceedings of the 2019 IEEE/CVF, Long Beach, CA, USA, 15–20 June 2019.

14. Huang, Z.; Xie, H.; Fukusato, T.; Miyata, K. AniFaceDrawing: Anime Portrait Exploration during Your Sketching. In Proceedings of the ACM SIGGRAPH 2023 Conference (SIGGRAPH ’23), Association for Computing Machinery, New York, NY, USA, 6–10 August 2023.

15. Singh, J.; Smith, C.; Echevarria, J.I.; Zheng, L. Intelli-Paint: Towards Developing Human-like Painting Agents. arXiv 2021, arXiv:2112.08930.

16. Li, S.; Xie, H.; Yang, X.; Chang, C.-M.; Miyata, K. A Drawing Support System for Sketching Aging Anime Faces. In Proceedings of the International Conference on Cyberworlds (CW), Kanazawa, Japan, 27–29 September 2022.

17. Kong, Z.; Zhang, Y.; Funabiki, N.; Huo, Y.; Kuribayashi, M.; Harahap, D.P. A Proposal of Auxiliary Line Generation Algorithm for Portrait Drawing Learning Assistant System Using OpenPose and OpenCV. In Proceedings of the GCCE, Nara, Japan, 10–13 October 2023.

18. Henderson, S.; Yeow, J. iPad in Education: A Case Study of iPad Adoption and Use in a Primary School. In Proceedings of the 45th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2012.

19. Fernandez, A.L. Advances in Teaching Inorganic Chemistry Volume 2: Laboratory Enrichment and Faculty Community; Department of Chemistry and Biochemistry, George Mason University: Fairfax, VA, USA, 2020; pp. 79–93.

20. Yongyeon, C. Tutorials of Visual Graphic Communication Programs for Interior Design 2; Iowa State University Digital Press: Ames, IA, USA, 2022; pp. 117–140.

21. Hiromasa, U. Hiromasa’s Drawing Course: How to Draw Faces; Kosaido Pub.: Tokyo, Japan, 2014.

22. Gonzalez, R.C.; Woods, R.E.; Eddins, S.L. Digital Image Processing with Matlab; Gatesmark Publishing: Knoxville, TN, USA, 2020; pp. 311–315.

23. Pele, O.; Werman, M. Robust Real-Time Pattern Matching Using Bayesian Sequential Hypothesis Testing. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1427–1443.

24. Li, G. Stereo Matching Using Normalized Cross-Correlation in LogRGB Space. In Proceedings of the International Conference on Computer Vision in Remote Sensing, Xiamen, China, 16–18 December 2012.

25. Dawoud, N.N.; Samir, B.; Janier, J. N-mean Kernel Filter and Normalized Correlation for Face Localization. In Proceedings of the IEEE 7th International Colloquium on Signal Processing and Its Applications, Penang, Malaysia, 4–6 March 2011.

26. Chandran, S.; Mogiloju, S. Algorithm for Face Matching Using Normalized Cross-Correlation. Int. J. Eng. Adv. Technol. 2013, 2, 2249–8958.

27. Feng, Z.; Qingming, H.; Wen, G. Image Matching by Normalized Cross-Correlation. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Toulouse, France, 14–19 May 2006.

28. Zhang, B.; Yang, H.; Yin, Z. A Region-Based Normalized Cross-Correlation Algorithm for the Vision-Based Positioning of Elongated IC Chips. IEEE Trans. Semicond. Manuf. 2015, 28, 345–352.

29. Hisham, M.B.; Yaakob, S.N.; Raof, R.A.A.; Nazren, A.B.A.; Wafi, N.M. Template Matching Using Sum of Squared Difference and Normalized Cross-Correlation. In Proceedings of the IEEE Student Conference on Research and Development, Kuala Lumpur, Malaysia, 13–14 December 2015.

30. Ban, K.D.; Lee, J.; Hwang, D.H.; Chung, Y.K. Face Image Registration Methods Using Normalized Cross-Correlation. In Proceedings of the International Conference on Control, Automation, and Systems, Seoul, Republic of Korea, 14–17 October 2008.

31. Gao, Q.; Chen, H.; Yu, R.; Yang, J.; Duan, X. A Robot Portraits Pencil Sketching Algorithm Based on Face Component and Texture Segmentation. In Proceedings of the IEEE International Conference on Industrial Technology, Melbourne, VIC, Australia, 13–15 February 2019.

32. Zhang, Y.; Kong, Z.; Funabiki, N.; Hsu, C.-C. An Extension of Drawing Exactness Assessment Method to Hair Evaluation in Portrait Drawing Learning Assistant System. In Proceedings of the 2024 8th International Conference on Information Technology (InCIT), Kanazawa, Japan, 14–15 November 2024.

33. Muhammad, U.R.; Svanera, M.; Leonardi, R.; Benini, S. Hair Detection, Segmentation, and Hairstyle Classification in the Wild. Image Vis. Comput. 2018, 71, 25–37.

34. Person Photo. Available online: https://www.quchao.net/Generated-Photos.html (accessed on 1 January 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).