1. Introduction

Coalition formation is extensively employed to enable coordination among autonomous agents that deploy their resources to accomplish collaborative tasks [1]. Determining the best possible coalition to form is a challenging task for participating agents because of the vast number of feasible alternative coalitions in large environments. This is further exacerbated by the fact that the agent population, along with the characteristics of the tasks that require the agents’ joint efforts, might evolve dynamically [2]. Furthermore, in multiagent settings, the efficiency of the formed coalitions is often affected by inherent uncertainty regarding various aspects of the agents or the environment, e.g., agent types (intuitively corresponding to agent skills or capabilities), the agents’ resource contribution potential, the difference between expected and realized utility, and inherent communication constraints and restrictions [2,3,4].
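To make the size of this search space concrete, the following Python sketch (an illustration added here, not part of the proposed method; the function names are hypothetical) counts the non-empty coalitions that $N$ agents can form, $2^N - 1$, and the coalition structures, i.e., partitions of the agents into disjoint coalitions, which are given by the Bell numbers.

```python
from functools import lru_cache
from math import comb


def num_coalitions(n: int) -> int:
    """Number of non-empty coalitions (subsets) that n agents can form."""
    return 2 ** n - 1


@lru_cache(maxsize=None)
def num_coalition_structures(n: int) -> int:
    """Bell number B(n): ways to partition n agents into disjoint coalitions.

    Recurrence: B(0) = 1 and B(n) = sum_{k=0}^{n-1} C(n-1, k) * B(k).
    """
    if n == 0:
        return 1
    return sum(comb(n - 1, k) * num_coalition_structures(k) for k in range(n))


for n in (5, 10, 20):
    print(f"N={n}: {num_coalitions(n)} coalitions, "
          f"{num_coalition_structures(n)} coalition structures")
```

Already for $N = 10$ there are 1,023 coalitions and 115,975 coalition structures, and the number of coalition structures grows faster than exponentially in $N$.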

In large-scale autonomous systems, such as smart cities or IoT networks, coalition formation is critical for accomplishing complex tasks that require collaboration among multiple, possibly heterogeneous entities. Autonomy implies that agents need to make decisions on their own, e.g., whether or not to participate in a coalition, given only limited knowledge of the environment, such as the capabilities of the other participants in the coalition and the gains that would be achieved by completing a task. Moreover, rewards in such settings are often stochastic. Thus, traditional coalition formation models, which often assume a deterministic and known characteristic function determining the value of coalitions, are ill-equipped to handle the prevalent uncertainty [2]. Learning the unknown values is a natural remedy; nevertheless, in large and dynamic settings, individual learning may easily become impractical. This is because training instances might be rare and interaction opportunities among agents limited, especially since other agents’ past experiences constitute private information and the reward scheme can be uncertain. This scarcity of interactions hinders the agents’ ability to learn, further amplifying the associated challenges.

To tackle such issues, a suitable solution is to incorporate Graph Neural Networks (GNNs) [5]. Such neural network designs possess a number of properties that are desirable in large-scale coalition formation. Unlike traditional neural networks that process grid-structured data such as images or sequences, GNNs can effectively handle the complex relationships and dependencies inherent in graph-structured data [6]. A key advantage of GNNs in coalition formation environments lies in their ability to aggregate information from each node’s neighborhood. By iteratively aggregating and propagating information across the graph, GNNs can efficiently gather knowledge from multiple sources, even when direct interactions are limited or training instances are scarce. This aggregation mechanism enables GNNs to learn more robust representations of complex relationships. By modeling the graph structure as a representation of agent interactions, GNNs can identify key nodes and edges that facilitate information exchange [6]. In this way, agents can focus on critical relationships and adapt their behavior accordingly, leading to the formation of coalitions that optimize social welfare. As a positive side effect, strong assumptions such as common priors among the agents or self-interest hypotheses become unnecessary, since the GNN is fitted to the real data observed so far rather than to, e.g., arbitrarily defined probability distributions.
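The neighborhood aggregation described above can be illustrated with a minimal NumPy sketch of one generic message-passing step on an ordinary graph: each node averages its own and its neighbors’ features, applies a linear map, and then a ReLU. This textbook form and the function name are illustrative assumptions, not the architecture used in this paper. Applying the step twice lets information travel two hops.

```python
import numpy as np


def mean_aggregate(adj: np.ndarray, x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One message-passing step with mean aggregation over each neighborhood."""
    a_hat = adj + np.eye(adj.shape[0])      # add self-loops so a node keeps its own state
    deg = a_hat.sum(axis=1, keepdims=True)  # neighborhood sizes (including self)
    h = (a_hat @ x) / deg                   # mean of neighborhood features
    return np.maximum(h @ w, 0.0)           # linear map followed by ReLU


adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)    # path graph 0 - 1 - 2
x = np.array([[1.0], [2.0], [3.0]])
w = np.eye(1)
h1 = mean_aggregate(adj, x, w)              # node 0 sees nodes 0 and 1
h2 = mean_aggregate(adj, h1, w)             # node 0 now also depends on node 2
```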

However, a shortcoming of classical GNN architectures is their limited capability to represent previously formed coalitions, as their edges can connect only two nodes [7]. This restriction makes it challenging for GNNs to capture the intricate dynamics of coalition formation settings, particularly when multiple agents with diverse skills and contribution potentials need to collaborate. An answer to this is the Hypergraph Neural Network (HGNN) design, which uses hyperedges instead. Hyperedges, each corresponding to a task undertaken by a particular coalition, allow the representation of connections among multiple nodes, i.e., among the agents that undertook the task [7,8]. Moreover, the HGNN’s capability of assigning features to the hyperedges allows us to encode task-specific characteristics such as task identifiers, coalitional type vectors, and task rewards. As a result, an HGNN can learn information regarding a hyperedge’s value, i.e., a coalition–task pair’s utility, which is effectively represented via embeddings. These, in turn, can be used to assess the expected utility of new tasks to be undertaken by particular coalitions of agent types. Thus, HGNNs allow for a more realistic modeling of agent interactions, particularly in dynamic coalition formation scenarios, where varying dependencies and connections among multiple agents constantly emerge. Indeed, HGNNs can play an important role in capturing higher-order interactions among agent types in this setting.

Nevertheless, as is common in neural network-based solutions, the trained models provide regression estimates but cannot work in reverse; for example, they cannot generate the input that would lead to the maximum output value. While various optimization methods (e.g., Bayesian optimization or other informed search techniques) could, in principle, be employed to solve this inverse problem, we opt for Genetic Algorithms (GAs) and Simulated Annealing (SA) due to their simplicity, their robustness in high-dimensional search spaces, and their natural compatibility with the discrete coalition structure representation of the domain of interest. These metaheuristics are employed to discover the task coalitions that yield maximal payoffs: they search the space of possible coalition structures for each task by iteratively querying the HGNN and using its predictions in their objective functions.

Overall, this work puts forward an HGNN-based coalition formation framework that is applicable to settings where (a) the set of available agents and collaborative tasks is large, (b) access to historical data and to the characteristics of other agents is limited, and (c) the rewards obtained by completing the coalitional tasks are unknown. The HGNN is trained on a subset of the solution space to estimate the rewards of coalition–task pairs, where a coalition is seen as a collection of types; i.e., the HGNN learns the values of agent types’ synergies towards completing various tasks and can thus be used as a surrogate reward function. To find the coalitions that extract the most value from each task, informed search approaches query the HGNN for coalition-specific reward estimates. The experimental results verify the ability of the proposed method to identify rewarding coalitional configurations; the method can thus, in principle, be employed in any large multiagent setting that requires the formation of coalitions for task execution under uncertainty regarding the effectiveness of the agent synergies.

In summary, the contributions of this paper are the following:

An HGNN, which learns the unknown coalition–task values, is combined with informed search, namely a GA and SA, to identify high-value coalitions. This gives rise to two novel HGNN-based methods for coalition formation under uncertainty: HGNN-GA and HGNN-SA, respectively. To the best of our knowledge, this is the first work in the literature to propose such a methodology.

Unlike previous works in the literature, in this paper, the coalitional value uncertainty is due to uncertainty regarding the values of synergies among the different agent types: the unknown values of the agent types’ synergies in completing various coalitional tasks are what is learned by the HGNN.

The incorporation of a shared HGNN effectively allows for the formation of the best possible set of teams (i.e., partition of the agent space) to tackle a set of tasks. As such, the approach is geared towards solving a coalition structure generation problem [9] and maximizing social welfare.

At the same time, the proposed combined approach can serve as a tool, proposing to any querying agent the best possible coalition to join to serve a task, given the so-far-inferred values of the underlying agent synergies.

The effectiveness of the approach is verified via extensive simulation experiments. The results reveal that the proposed hybrid HGNN-SA method consistently outperforms a baseline DQN [10], the proposed HGNN-GA variant, and another simple baseline, and statistical analysis confirms that this performance improvement is significant. These findings highlight the advantage of integrating Simulated Annealing into the proposed HGNN framework.

The framework and algorithms introduced in this paper can find applications in a wide range of real-world settings, for instance, in the transportation domain [11], in warehouse robotics [12], or in the Smart Grid [13], where individual agents of varying capabilities often need to join forces to complete tasks, e.g., to jointly transport groups of passengers, to coordinate the transportation of large numbers of boxes of various sizes, or to jointly shift electricity consumption to different time slots to help stabilize the electricity grid and allow for the optimal exploitation of renewable energy sources.

The rest of this paper is structured as follows. Section 2 presents the necessary background and briefly reviews related work. Section 3 outlines the details of the proposed HGNN framework, which incorporates informed search algorithms for learning to identify rewarding coalition–task configurations. Section 4 then details the experimental evaluation conducted and its results. Finally, Section 5 concludes and discusses directions for future work.

2. Background and Related Work

Coalition formation theory has long been used in the context of cooperative game theory to model various microeconomic settings where rational autonomous agents need to form teams in order to gain some joint reward that they can divide among themselves (as in so-called transferable utility (TU) games), or to gain individual payoffs from the completion of a joint task (e.g., in some non-transferable utility (NTU) games) [9]. As such, coalition formation is regularly used to model the negotiation and cooperation dynamics of economic systems, highlighting strategies for effective resource utilization and decision-making in complex environments. A number of important cooperative game-theoretic solution concepts (e.g., stability or fairness notions such as the core or the Shapley value) are often the focus of attention in such settings [9].

From a more practical machine learning standpoint, however, the focus in coalition formation settings is on the creation of rewarding teams that can operate effectively under uncertainty, without their members necessarily adhering to hard-to-satisfy equilibrium notions (though exceptions exist, as in the work of [14], for instance). Hence, in recent years, a number of deep reinforcement learning (DRL)-based coalition formation approaches have been proposed, addressing problems such as coalition action selection [15], task allocation for teams of mobile robots [16], and a more generic, privacy-preserving coalition formation paradigm recently put forward by Koresis et al. [17]. DRL, and reinforcement learning (RL) in general, however, typically use separate models for individual agents and require a large number of iterations to sufficiently explore the action space. This makes the training process more complex than in a centralized approach, which could also exploit knowledge shared among the individuals. Moreover, RL approaches rely on a number of assumptions that might not hold in all coalition formation settings, such as the need for sequential decision-making or the validity of the Markov property.

The Best Reply with Experimentation (BRE) protocol [14,18] offers a decentralized solution to the problem of reaching stable coalitional configurations when the allocation of coalitional rewards among the members is taken into account, as is standard in cooperative game-theoretic approaches to coalition formation. BRE allows agents to experiment with suboptimal actions [18], and its performance can be further enhanced with learning capabilities [3,14,19]. Specifically, by incorporating a stochastic exploration component (essentially, an ε-greedy experimentation one) into a “best-reply” process, BRE allows the agents to move away from coalitional states that are absorbing yet suboptimal in terms of payoff acquisition. It guarantees asymptotic convergence to a stable solution concept, namely the core [9] when no uncertainty regarding coalitional values is assumed, or the Strong Bayesian Core when agent-type-related uncertainty is taken into account [3,14,19], provided that such a solution concept is non-empty. However, its reliance on myopic optimization and predefined belief models can lead to slow convergence in complex environments. The current paper does not deal with stable or fair (in the game-theoretic sense [9]) payoff distribution among coalitional members; the members are assumed to share the coalitional payoff equally. Incorporating such considerations into the current model is interesting future work.
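The ε-greedy core of such a best-reply process can be sketched in Python as follows. This is a simplified, hypothetical illustration of a single agent’s decision rule only: the full BRE protocol of [14,18] also involves coalition proposals and the consent of existing members, which are omitted here. Payoffs are computed under the equal-sharing assumption adopted in this paper, and the coalition values are made up.

```python
import random
from typing import Callable, Hashable, Sequence


def epsilon_greedy_best_reply(
    candidates: Sequence[Hashable],
    expected_payoff: Callable[[Hashable], float],
    epsilon: float,
    rng: random.Random,
) -> Hashable:
    """With probability epsilon, experiment with a uniformly random candidate
    coalition; otherwise return the candidate with the highest expected payoff."""
    if rng.random() < epsilon:
        return rng.choice(list(candidates))
    return max(candidates, key=expected_payoff)


# Hypothetical coalition values for an agent "a"; payoffs are equal shares.
values = {("a",): 1.0, ("a", "b"): 5.0, ("a", "c"): 3.0}


def equal_share(coalition) -> float:
    return values[coalition] / len(coalition)


rng = random.Random(0)
greedy = epsilon_greedy_best_reply(list(values), equal_share, epsilon=0.0, rng=rng)
exploring = epsilon_greedy_best_reply(list(values), equal_share, epsilon=1.0, rng=rng)
```

With ε = 0 the rule reduces to a myopic best reply, here the coalition ("a", "b") with an equal share of 2.5; with ε = 1 the agent always experiments.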

Generally, the study of coalition formation in multiagent systems (MASs) is a vital area of research. Examples of application include the Smart Grid, where agents form coalitions for peer-to-peer energy trading or for the control of vehicle-to-grid (V2G) operations [13,20], the scheduling of fleets for transfers [21], and task allocation to teams [22], among others.

In this context, Graph Neural Networks (GNNs) offer a novel approach for modeling and learning the complex interactions between agents and tasks, as they are well-suited to handling pairwise relationships between nodes. In coalition formation problems, GNNs can be used to model these relationships between agents and tasks, allowing for the efficient computation of coalition stability. However, in complex MAS settings characterized by a large number of agents and overlapping coalitions, GNNs may struggle with the computational complexity associated with higher-order interactions [5].

An interesting work highlighting the use of a GNN in MAS environments is that of [6]. The paper proposes a novel game abstraction mechanism based on a two-stage attention network called G2ANet, which models the relationships between agents as a complete graph in order to detect interactions and their importance. G2ANet addresses the problem of policy learning in large-scale multiagent systems, where the large number of agents and complex game relationships severely complicate the learning process. Existing methods capture agent interactions using predefined rules, which, however, are not suitable for large-scale environments because of the complexity of transforming all kinds of interactions into rules.

Recently, Ref. [23] proposed the use of GNNs (in conjunction with mixed-integer linear programming) to deal with an instance of the optimal coalition structure generation problem, with the added requirement that the coalitions formed be as “balanced” as possible in terms of utility gained. Note, however, that no uncertainty regarding coalitional values is assumed in that paper.

The fact that each edge of a GNN connects only two nodes limits its representational power, particularly in multiagent systems and dynamic, open coalition formation environments. In such environments, numerous connections form and change between the nodes, and keeping track of these changes in the neighborhoods, the node features, and the edges’ connections and features becomes computationally taxing in large settings.

By contrast, hypergraphs are better suited to representing complex multiagent settings, and HGNNs are particularly well-suited to MAS settings with a large number of agents and (potentially overlapping) coalitions. Such variants extend traditional graph-based models by representing multiagent coalitions as hypergraphs, where each hyperedge can connect more than two agents, capturing the higher-order interactions that are critical in coalition formation settings [24]. Simply put, in contrast to a GNN, an HGNN can connect more than two nodes through its hyperedges. Additionally, it is easier to update the set of nodes connected to a hyperedge, as well as the hyperedge’s features.
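A small Python illustration (agent names are hypothetical) of why pairwise edges are a lossy encoding of coalitions: replacing each coalition by all pairwise edges among its members (its clique expansion) needs $k(k-1)/2$ edges for a coalition of size $k$. Moreover, one three-member coalition becomes indistinguishable from three separate two-member coalitions. A hypergraph, by contrast, stores each coalition as exactly one hyperedge.

```python
from itertools import combinations


def clique_expansion(coalitions):
    """Pairwise edges needed to encode the given coalitions in an ordinary graph."""
    edges = set()
    for members in coalitions:
        edges.update(frozenset(pair) for pair in combinations(sorted(members), 2))
    return edges


coalitions = [{"a1", "a2", "a3"}, {"a3", "a4", "a5", "a6"}]
print(len(clique_expansion(coalitions)), "pairwise edges vs", len(coalitions), "hyperedges")

# One three-agent coalition and three two-agent coalitions yield the same graph.
one_triple = [{"a", "b", "c"}]
three_pairs = [{"a", "b"}, {"b", "c"}, {"a", "c"}]
print(clique_expansion(one_triple) == clique_expansion(three_pairs))
```

The first print reports 9 pairwise edges (3 + 6) against 2 hyperedges, and the second prints True.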

For instance, in [25], hypergraphs are employed to solve a multi-criteria coalition formation problem instance for the Vehicle-to-Grid (V2G) domain, where electric vehicles can sell their battery-stored energy to stabilize electricity demand and supply in the main electricity network. In that approach, the nodes correspond to vehicles and the hyperedges to vehicle characteristics. Based on these hypergraphs, coalitions are formed that optimize reliability, capacity, and discharge rate. No HGNN learning was used in that work, however.

Another work, that of [26], incorporates hypergraphs to represent the complex, higher-order relationships between agents in multiagent systems. In this case, agents are represented by nodes, and hyperedges connect the multiple agents present in a group. Each group includes two types of interaction, i.e., message-passing and force-based interactions. The first corresponds to agent interactions and the second to the representational similarity of agents, i.e., how well agents match within a group.

The capability of an HGNN to offer a more flexible and powerful representational framework by connecting any number of nodes through hyperedges is exemplified in [27]. The authors use directed hyperedges with multi-node tails and singleton heads to explicitly model group influences on an agent’s navigation decisions. By replacing GNN layers with attentional HGNN layers, they mitigate attention dilution, a common issue in dense scenarios where pairwise attention is spread too thinly, and effectively learn coordinated group behaviors. In [28], hyperedges dynamically group robots, humans, and points of interest based on spatial context to coordinate task allocation and social navigation. Unlike traditional graph-based methods that capture only pairwise interactions, this hypergraph structure is dynamically updated via a hypergraph diffusion mechanism, which propagates contextual information to balance the graph’s dynamics and infer latent correlations.

The hyperedge structure not only facilitates the aggregation of information from multiple nodes simultaneously but also simplifies the updating process: features from all nodes connected by a hyperedge can be efficiently aggregated into a unified hyperedge representation, which is then propagated back to influence all connected nodes. Mechanisms such as the hypergraph diffusion in [28] or the attentional message passing in [27] thus allow for a more efficient update of node states based on complex group dynamics, overcoming the attention dilution and limited relational reasoning of pairwise GNNs, especially in dense multiagent settings.
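The two-stage update described above (node to hyperedge, then hyperedge back to node) can be sketched in NumPy with plain mean pooling. The sketch deliberately omits learnable weights, the diffusion mechanism of [28], and the attention of [27]; the function name is hypothetical.

```python
import numpy as np


def node_edge_node(h: np.ndarray, x: np.ndarray):
    """Two-stage mean aggregation on a hypergraph.

    h: incidence matrix, shape (num_nodes, num_edges); h[v, e] = 1 if node v is in e
       (every hyperedge is assumed to be non-empty)
    x: node features, shape (num_nodes, d)
    """
    edge_size = h.sum(axis=0)                   # nodes per hyperedge
    node_degree = h.sum(axis=1)                 # hyperedges per node
    edge_repr = (h.T @ x) / edge_size[:, None]  # stage 1: average the member nodes
    # Stage 2: each node averages the hyperedges it belongs to (isolated nodes get 0).
    node_repr = (h @ edge_repr) / np.maximum(node_degree, 1)[:, None]
    return edge_repr, node_repr


h = np.array([[1, 0], [1, 0], [1, 1], [0, 1]], dtype=float)  # e0 = {0, 1, 2}, e1 = {2, 3}
x = np.array([[1.0], [2.0], [3.0], [4.0]])
edge_repr, node_repr = node_edge_node(h, x)
```

Node 2, which belongs to both hyperedges, ends up with the average of the two hyperedge representations (2.0 and 3.5), i.e., 2.75.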

Given their representation power, we believe that HGNNs constitute a powerful tool for studying coalition structures, where the success of a coalition depends not only on the pairwise interactions but also on how groups of agents with different capabilities collaborate to complete tasks. The current paper explores the application of HGNNs to coalition formation problems with inherent uncertainty regarding the values of synergies among different agent types.

Indeed, previous work has demonstrated that the computational complexity of coalition formation often depends on the number of distinct agent types rather than on the total number of agents [1,2]. This is because in real-world MAS settings, the values of synergies are in fact determined by agent capabilities and not by the identity of the agents per se [2,14,19,29]. Since the number of agent types in such settings is often small relative to the total number of agents, this can significantly simplify various aspects of the coalition formation problem. For instance, when the number of agent types is fixed, problems such as coalition stability or core-emptiness become computationally tractable [30]. This insight is crucial in the design of HGNN-based models, as it allows for efficient handling of agent heterogeneity by grouping agents of the same type and learning generalized embeddings for each type.
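The effect of reasoning about types rather than identities can be quantified with a short Python calculation. Assuming, purely for illustration, $N = 100$ agents split evenly over $M = 4$ types, there are $2^{100} - 1$ distinct coalitions of agents but only $26^4 - 1 = 456{,}975$ distinct coalition compositions when only the number of members of each type matters. The function names are hypothetical.

```python
from math import prod


def distinct_coalitions(num_agents: int) -> int:
    """Non-empty coalitions when agents are distinguished by identity."""
    return 2 ** num_agents - 1


def distinct_type_compositions(agents_per_type) -> int:
    """Non-empty coalitions when only the number of members of each type matters:
    choose 0..n_k agents of each type k, minus the empty choice."""
    return prod(n + 1 for n in agents_per_type) - 1


agents_per_type = [25, 25, 25, 25]  # hypothetical: N = 100 agents, M = 4 types
print(distinct_coalitions(sum(agents_per_type)))    # 2**100 - 1, about 1.27e30
print(distinct_type_compositions(agents_per_type))  # 26**4 - 1 = 456975
```

When every agent has its own type, the two counts coincide, as expected.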

Furthermore, although HGNNs (and most NN variants in general) are quite successful in fitting unknown functions, they offer no functionality for determining the input values that result in optimized output values: both GNNs and HGNNs receive an input in the form of a graph and generate a prediction. To this end, informed search methods can be combined with them to help discover such inputs. A suitable method is the Genetic Algorithm (GA), a population-based metaheuristic inspired by Darwinian evolution [31,32]. The GA maintains a population of individuals, representing candidate solutions, and iteratively improves them through selection (favoring high-fitness solutions), crossover (combining traits of parents), and mutation (introducing random perturbations). By exploring diverse regions of the solution space in parallel, GAs are effective optimizers but may nevertheless suffer from premature convergence, e.g., when the search space is not smooth.
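A compact Python sketch of a GA used in this way, i.e., to search for inputs that maximize a learned model’s output. It is an illustration under stated assumptions, not the HGNN-GA implementation of this paper: a candidate coalition is encoded as a vector counting how many agents of each type it contains (bounded by the available agents per type), `toy_surrogate` is a hypothetical stand-in for the trained HGNN’s reward estimate, and tournament selection, uniform crossover, ±1 mutation, and elitism are common but arbitrary design choices. All names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Genome = Tuple[int, ...]  # number of agents of each type in a candidate coalition


def genetic_search(
    fitness: Callable[[Genome], float],
    max_per_type: Sequence[int],  # at least one entry must be positive
    pop_size: int = 30,
    generations: int = 50,
    mutation_rate: float = 0.2,
    seed: int = 0,
) -> Tuple[Genome, float, List[float]]:
    """Maximize `fitness` over type-count vectors; returns (best, score, history)."""
    rng = random.Random(seed)
    m = len(max_per_type)

    def random_genome() -> Genome:
        while True:  # resample until the coalition is non-empty
            g = tuple(rng.randint(0, k) for k in max_per_type)
            if sum(g) > 0:
                return g

    def crossover(a: Genome, b: Genome) -> Genome:
        child = tuple(a[i] if rng.random() < 0.5 else b[i] for i in range(m))
        return child if sum(child) > 0 else a  # uniform crossover, kept non-empty

    def mutate(g: Genome) -> Genome:
        genes = list(g)
        for i in range(m):
            if rng.random() < mutation_rate:  # add or remove one agent of type i
                genes[i] = min(max_per_type[i], max(0, genes[i] + rng.choice((-1, 1))))
        return tuple(genes) if sum(genes) > 0 else g

    def tournament(pop: List[Genome], k: int = 3) -> Genome:
        return max(rng.sample(pop, k), key=fitness)

    population = [random_genome() for _ in range(pop_size)]
    best = max(population, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):
        children = [best]  # elitism: the incumbent always survives
        while len(children) < pop_size:
            parent_a, parent_b = tournament(population), tournament(population)
            children.append(mutate(crossover(parent_a, parent_b)))
        population = children
        best = max(population, key=fitness)
        history.append(fitness(best))
    return best, fitness(best), history


def toy_surrogate(g: Genome) -> float:
    """Hypothetical stand-in for the trained HGNN's reward estimate."""
    target = (2, 1, 3)
    return -float(sum((a - b) ** 2 for a, b in zip(g, target)))


best, score, history = genetic_search(toy_surrogate, max_per_type=(4, 4, 4))
```

Because of elitism, the best score recorded in `history` never decreases from one generation to the next; in a real setting, `fitness` would build the candidate hyperedge and query the trained HGNN.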

Simulated Annealing (SA) [33], in turn, iteratively explores the solution space starting from a single candidate solution: it always accepts improvements and accepts worse solutions with a probability governed by a control parameter termed temperature, which is gradually lowered so that the search increasingly focuses on refining the current solution. In contrast to the population-based approach of the GA, the focus of SA on a single solution offers complementary advantages: SA requires fewer hyperparameters and adapts dynamically to rugged reward landscapes, while the GA can explore diverse regions in parallel.
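A matching Python sketch of SA, under the same hypothetical assumptions as a GA-based search would use: coalitions are encoded as per-type member counts, neighbors add or remove one agent of a random type, `toy_surrogate` stands in for the trained HGNN, and the Metropolis acceptance rule with geometric cooling is one common choice among several. It is not the HGNN-SA implementation of this paper, and all names are hypothetical.

```python
import math
import random
from typing import Callable, Sequence, Tuple

Genome = Tuple[int, ...]  # number of agents of each type in a candidate coalition


def simulated_annealing(
    fitness: Callable[[Genome], float],
    start: Genome,
    max_per_type: Sequence[int],
    t0: float = 5.0,
    cooling: float = 0.95,
    steps: int = 500,
    seed: int = 0,
) -> Tuple[Genome, float]:
    """Maximize `fitness` from `start`; returns the best coalition found and its score."""
    rng = random.Random(seed)
    current, current_f = start, fitness(start)
    best, best_f = current, current_f
    temperature = t0
    for _ in range(steps):
        # Neighbor: add or remove one agent of a randomly chosen type, within bounds.
        i = rng.randrange(len(current))
        genes = list(current)
        genes[i] = min(max_per_type[i], max(0, genes[i] + rng.choice((-1, 1))))
        candidate = tuple(genes)
        if sum(candidate) > 0:  # skip the empty coalition
            cand_f = fitness(candidate)
            delta = cand_f - current_f  # positive means improvement
            # Always accept improvements; accept worse moves with prob. exp(delta / T).
            if delta >= 0 or rng.random() < math.exp(delta / temperature):
                current, current_f = candidate, cand_f
                if current_f > best_f:
                    best, best_f = current, current_f
        temperature = max(temperature * cooling, 1e-6)  # geometric cooling schedule
    return best, best_f


def toy_surrogate(g: Genome) -> float:
    """Hypothetical stand-in for the trained HGNN's reward estimate."""
    target = (2, 1, 3)
    return -float(sum((a - b) ** 2 for a, b in zip(g, target)))


best, best_f = simulated_annealing(toy_surrogate, start=(4, 4, 0), max_per_type=(4, 4, 4))
```

Early on, the high temperature lets the search accept worse coalitions and escape poor regions; as the temperature decays, it behaves almost like greedy hill climbing.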

The combination of Graph Neural Networks (GNNs) and informed search approaches, especially the GA, has been explored primarily in the context of optimizing neural architectures (e.g., hyperparameter tuning [34]) or enhancing the dynamics of GNN training [35]. However, this synergy has yet to be extended to coalition formation problems, where the objective is to discover reward-maximizing coalition structures. The current work bridges this gap by employing an HGNN as a surrogate model of coalition–task rewards and then leveraging informed search methods to discover the best coalitions, i.e., well-performing inputs of the network. As such, the proposed approach can be used for learning the values of coalition–task pairs (where a coalition is seen as a collection of agent types) in any coalitional task execution environment of interest. Moreover, to the best of our knowledge, this is the first application of an HGNN-informed search hybrid for learning the values of agent synergies in large coalitional task execution settings.

3. A Novel HGNN-Based Coalition Formation Framework

This section presents the multiagent environment adopted and describes in detail the HGNN computational model employed by the proposed framework for coalition formation. It also introduces the HGNN model’s combination with an informed search approach (e.g., GA or SA) that can be used to explore the input space of the HGNN, i.e., the different coalitions for each particular task. To assess the quality of the various candidate coalition structures, the GA and SA incorporate the output of the trained HGNN into their objective functions.

3.1. Identifying Valuable Coalitions in a Multiagent Task Execution Environment

The environment consists of $N$ agents in the set $\mathcal{A} = \{\alpha_1, \dots, \alpha_N\}$. Each agent $\alpha_i \in \mathcal{A}$ possesses a discrete quantity of resources, denoted as $r_i \in \mathbb{N}$. An agent’s resources $r_i$ indicate its suitability for participating in coalitions $C \in \mathcal{C}$, where $\mathcal{C}$ denotes the space of all possible coalitions. Each agent $\alpha_i$ is formally characterized by its type $\theta_i \in \Theta$, where $\Theta$, with $|\Theta| = M$, represents the finite set of possible agent types. In practical settings, the cardinality $M$ of $\Theta$ is significantly smaller than the total number of agents $N$, i.e., $M \ll N$ [36,37]. To enhance clarity in subsequent discussions, the notation $\alpha_i^{\theta_i}$ is adopted when explicitly referring to an agent alongside its associated type.

Next, the coalition type vector, denoted as $\mathbf{t}_C$, describes the composition of a coalition $C$ in terms of its agent types. The type of an agent dictates the amount of resources the agent contributes upon joining a coalition. Agents are assumed to possess limited knowledge: they are only aware of (i) their own type (they do not observe the types of others) and (ii) the historical coalition–task pairs (along with the corresponding rewards) in which they have participated so far. Consequently, agents cannot predict the rewards associated with not-yet-observed coalition–task pairs on their own, but can use the services of an HGNN to this end.

The strategic decision of whether to participate in a particular coalition is a resource allocation problem, and it affects the results of the collective endeavor at hand. Each task $\tau \in \mathcal{T}$ is assigned to a particular coalition $C$ and is characterized by its requirements, i.e., a quota $q_\tau$ of resources that must be collected and spent by the coalescing agents in order for the task to be completed. Note that $q_\tau$ is not assumed to be provided to the agents beforehand; the agents are in fact tasked with determining, over time, the effectiveness of coalitions in completing tasks and gathering task-specific rewards that depend on the coalition members’ types. In addition, and as is typical of coalition formation settings, the quota $q_\tau$ need not be met by agents of a single type; it can be met by coalitions composed of agents of possibly different types.
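A minimal Python sketch of these definitions, assuming that agent types are indexed from 0 to M−1 and that the coalition type vector counts the members of each type (the text does not fix its encoding). The `Agent` class and both helper functions are hypothetical. Since the quota is not revealed to the agents, `meets_quota` would be evaluated by the environment rather than by the agents themselves.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Agent:
    name: str
    agent_type: int  # index into the type set, 0..M-1
    resources: int   # discrete resource quantity contributed on joining


def type_vector(coalition: Iterable[Agent], num_types: int) -> List[int]:
    """Coalition type vector, encoded (by assumption) as members per type."""
    counts = Counter(agent.agent_type for agent in coalition)
    return [counts[k] for k in range(num_types)]


def meets_quota(coalition: Iterable[Agent], quota: int) -> bool:
    """Environment-side check: do the members' pooled resources reach the quota?"""
    return sum(agent.resources for agent in coalition) >= quota


coalition = [Agent("a1", 0, 3), Agent("a2", 2, 5), Agent("a3", 2, 5)]
print(type_vector(coalition, num_types=3))  # [1, 0, 2]
print(meets_quota(coalition, quota=12))     # True, since 3 + 5 + 5 = 13
```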

Given that a coalition $C$ is formed and assigned to a task $\tau$, a reward is generated and granted to the agents that constitute $C$. In particular, the reward function $R$ is given by Equation (1); its details are not available to the agents, i.e., it is considered unknown:

$$R(\mathbf{t}_C, \tau) = \sum_{\alpha_i, \alpha_j \in C,\; i < j} v(\theta_i, \theta_j, \tau), \qquad (1)$$

where $\mathbf{t}_C$ represents the type vector containing the agent types that make up the coalition $C$, $\theta_i$ and $\theta_j$ are the types of agents $\alpha_i$ and $\alpha_j$, and $v$ is a (characteristic) function determining the effectiveness of collaboration between any two agents $\alpha_i$, $\alpha_j$ with specific types in $\mathbf{t}_C$ participating in $C$ to complete task $\tau$; $v$ could, in principle, be stochastic. The characteristic function $v$ samples values from a normal distribution for each agent type pair in the type vector of the coalition $C$ participating in task $\tau$, and it is different for each task, as is the quota.

An example of such a matrix for a task, whose values the reward function sums, can be found in Table 1.
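The pairwise-sum reward described above can be sketched in Python under several labeled assumptions: the reward sums the synergy value of every unordered pair of coalition members, the per-task matrix of values (as in Table 1) is sampled once from a normal distribution and made symmetric, and same-type pairs use the diagonal entries. Whether an unmet quota alters the reward is not modeled. The function names and distribution parameters are hypothetical; resampling the matrix at each evaluation would emulate a stochastic $v$.

```python
from itertools import combinations

import numpy as np


def sample_synergy_matrix(num_types: int, rng: np.random.Generator,
                          mean: float = 0.0, std: float = 1.0) -> np.ndarray:
    """One task's synergy values v(type_a, type_b), drawn from a normal distribution
    and symmetrized so that v(a, b) == v(b, a)."""
    upper = np.triu(rng.normal(mean, std, size=(num_types, num_types)))
    return upper + np.triu(upper, k=1).T


def coalition_reward(member_types, synergy: np.ndarray) -> float:
    """Sum of synergy values over all unordered pairs of coalition members."""
    return float(sum(synergy[a, b] for a, b in combinations(member_types, 2)))


# Hypothetical hand-written matrix for one task with three agent types.
V = np.array([[1.0, 2.0, 0.5],
              [2.0, -1.0, 3.0],
              [0.5, 3.0, 0.0]])
reward = coalition_reward([0, 1, 1], V)  # pairs (0,1), (0,1), (1,1): 2.0 + 2.0 - 1.0 = 3.0
task_matrix = sample_synergy_matrix(4, np.random.default_rng(0))
```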

The aim within a multiagent task execution environment is to identify the most valuable task-executing coalitions for the agents to participate in. The proposed approach does so by combining (a) an HGNN that learns the aforementioned reward function (in other words, the value of coalition–task configurations) and (b) an informed search algorithm of choice, used to identify highly valuable coalition–task configurations within the space of HGNN-evaluated configurations.

An overview of the paper’s approach is provided in Figure 1.

The coalitions and their corresponding rewards are represented as hypergraphs that constitute a training dataset. The HGNN model samples batches of hypergraphs from the dataset and is trained to estimate the reward of each coalition–task pair by minimizing the loss between its estimate and the actual payoff of that pair.

After the HGNN model is fitted, the informed search approach is configured to use the HGNN predictions as its objective function in order to aid in the discovery process of high-rewarding coalitions for given tasks, leading to the respective algorithm variants HGNN-GA and HGNN-SA.

The following subsections provide the details for the HGNN and the informed search algorithms used in this work.

3.2. Hypergraph Neural Network

A Hypergraph Neural Network (HGNN) is a type of Graph Neural Network designed to handle complex relationships between nodes that go beyond pairwise connections. Unlike traditional Graph Neural Networks (GNNs), which only consider first-order neighborhoods, HGNNs can capture higher-order correlations by incorporating hyperedges into their modeling [8].

The core idea behind an HGNN is to represent the input data as a hypergraph, where each node corresponds to a data point and each hyperedge connects a subset of nodes. The network processes this hypergraph structure using a set of learnable embeddings for both nodes and hyperedges [7]. Specifically, HGNNs consist of three primary components: (i) hypergraph construction, which creates the hypergraph representation from the input data; (ii) node embeddings, which capture the local and global properties of individual nodes; and (iii) message-passing mechanisms, which aggregate information from neighboring nodes connected by hyperedges.

The input to an HGNN can take various forms, including the node features or attributes associated with each data point; optional additional information about the relationships between nodes, such as edge weights or labels; and a set of hyperedges that define the connectivity between nodes. Specifically, the input tensors of an HGNN model are (i) the node feature matrix, (ii) the hyperedge feature matrix, and (iii) the hyperincidence matrix, which specifies which nodes are connected to which hyperedges. The instantiation of these notions for the specific setting, particularly of the nodes connected via hyperedges and of the hyperedge features, is described below. The output of an HGNN can also take a variety of forms, such as classifications, regression values, clustering assignments for individual data points, or even properties of the graph configuration [24]. In our case, the output of the model consists of the hyperedge embeddings, which are then used to estimate the reward that each coalition–task pair is anticipated to acquire.

The hypergraph-based representation used by HGNNs enables the effective handling of large-scale graphs and improves the robustness of neural network models [8]. Moreover, HGNNs have been shown to outperform GNNs on various tasks, including node classification and graph regression, due to their ability to incorporate global information from the entire graph structure [7]. HGNNs can be applied to a wide range of domains, including social network analysis, molecular graph analysis, and recommender systems [24].

In this work, the coalition formation environment is represented as a hypergraph. The nodes of the hypergraph correspond to the available agent types. The node features encode the value of each agent type and the number of agents that belong to that type. A hyperedge represents a formed coalition C that attempts to complete a task. Thus, each agent that participates in a coalition is linked to the rest of the agents in the coalition via a hyperedge. Recall that a hyperedge can connect more than two nodes, whereas an edge in a classical graph connects only two nodes. Representing each coalition–task pair by a single hyperedge therefore results in more concise and compact data structures. The hyperedge features are the total amount of resources gathered by the coalition and the coalition's type vector, which records the types of the participating agents.

In the example in Figure 2, there are nine agents of different types, n tasks, and two coalitions, $C_1$ and $C_2$, each undertaking one task. The hyperedge representing $C_1$ is composed of three members and reaches its task's quota. The hyperedge for $C_2$ also consists of three members but does not manage to reach the quota of its task. This is represented as a hypergraph, with the coalitions' rewards also added as hyperedge labels.
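As an illustration, the minimal sketch below builds the three HGNN input tensors for a toy environment: node features, hyperincidence matrix, and hyperedge features. It rests on several assumptions of ours:

- Each node's features are a per-type value plus the number of agents of that type.
- A hyperedge connects the type nodes present in a coalition.
- Each hyperedge's features are the coalition's total resources followed by its type vector.

The function name `build_hypergraph` and all inputs are hypothetical and are not taken from the authors' code.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def build_hypergraph(agent_types: Sequence[int], resources: Sequence[float],
                     type_values: Sequence[float],
                     coalitions: Sequence[Sequence[int]]) -> Tuple[Matrix, List[List[int]], Matrix]:
    """Return (node features X, hyperincidence H, hyperedge features E_in).

    Nodes are agent types; each coalition (a list of agent ids) becomes one hyperedge.
    """
    num_types = len(type_values)
    counts = [0] * num_types
    for t in agent_types:
        counts[t] += 1
    # Node features (assumed): [value of the type, number of agents of that type].
    X = [[float(type_values[t]), float(counts[t])] for t in range(num_types)]

    H = [[0] * len(coalitions) for _ in range(num_types)]  # num_types x num_edges
    E_in: Matrix = []
    for e, members in enumerate(coalitions):
        type_vector = [0] * num_types
        for agent in members:
            t = agent_types[agent]
            type_vector[t] += 1
            H[t][e] = 1  # type node t belongs to hyperedge e
        total_resources = float(sum(resources[a] for a in members))
        # Hyperedge features (assumed): [total resources] + type vector.
        E_in.append([total_resources] + [float(c) for c in type_vector])
    return X, H, E_in


# Toy example: four agents of three types forming two coalitions.
X, H, E_in = build_hypergraph(agent_types=[0, 1, 1, 2], resources=[1.0, 2.0, 3.0, 4.0],
                              type_values=[0.5, 0.7, 0.9], coalitions=[[0, 1], [2, 3]])
```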

In essence, the HGNN is used to learn embeddings for agent types and tasks. The hyperedge convolution operation [38] in particular allows the model to aggregate information about which types can be assigned to which tasks, considering the type capabilities. Then, the learned embeddings can be used to evaluate potential coalitions based on their ability to fulfill task requirements. The HGNN can help identify the most effective coalitions by analyzing the relationships and dependencies among agent types and tasks.

In the proposed HGNN approach, hyperedge features are incorporated to capture the elements of coalition formation. In addition, an attention mechanism between nodes and hyperedges is employed, enabled by the hyperedge features. Each hyperedge's embedding represents the utility that the corresponding group of agents expects from forming that particular coalition. The hypergraph convolution for producing the hyperedge embeddings is given by the following formula:

$$\mathbf{E}_{\text{out}} = \mathbf{D}_e^{-1}\left(\mathbf{A} \odot \mathbf{H}\right)^{\top}\mathbf{X}\mathbf{W} + \mathbf{E}_{\text{in}}\mathbf{W}_E,$$

where $\mathbf{E}_{\text{out}}$ is the output hyperedge embedding matrix (of size num_edges × output_dim). The entry $A_{v,e} = \alpha_{v,e}$ is the attention score for node v in hyperedge e, and $\odot$ denotes element-wise multiplication. $\mathbf{D}_e$ is the diagonal hyperedge degree matrix, with $(\mathbf{D}_e)_{ee} = \delta(e)$, where $\delta(e)$ is the number of nodes in hyperedge e. The hyperincidence matrix $\mathbf{H}$ (of size num_nodes × num_edges) maps nodes to hyperedges, and $\mathbf{X}$ corresponds to the node features. $\mathbf{W}$ is a learnable weight matrix applied to the node features, $\mathbf{E}_{\text{in}}$ is the input edge feature matrix, and, lastly, $\mathbf{W}_E$ is a learnable weight matrix for the edge features.
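The following pure-Python sketch shows one way to compute hyperedge embeddings. Each embedding is an attention-weighted, degree-normalized aggregation of the projected features of a hyperedge's member nodes, plus a projection of the input hyperedge features.

The attention score used here is an assumption made for illustration. It is a softmax, over the nodes of each hyperedge, of the dot product between a node's projected features and the hyperedge's projected features. The name `hyperedge_conv` is hypothetical. In practice, learnable weights, gradients, and batching would be handled by a deep learning framework.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def hyperedge_conv(X: Matrix, H: List[List[int]], E_in: Matrix,
                   W: Matrix, W_E: Matrix) -> Matrix:
    """Hyperedge embeddings (num_edges x out_dim); every hyperedge needs >= 1 member."""
    XW = matmul(X, W)       # projected node features, num_nodes x out_dim
    EW = matmul(E_in, W_E)  # projected hyperedge features, num_edges x out_dim
    num_nodes, num_edges, out_dim = len(H), len(H[0]), len(W[0])
    E_out: Matrix = []
    for e in range(num_edges):
        members = [v for v in range(num_nodes) if H[v][e] != 0]
        # Hypothetical attention: softmax over members of <x_v W, e_in W_E>.
        scores = [sum(p * q for p, q in zip(XW[v], EW[e])) for v in members]
        m = max(scores)
        exp_scores = [math.exp(s - m) for s in scores]
        z = sum(exp_scores)
        alpha = [s / z for s in exp_scores]
        degree = len(members)  # delta(e): number of nodes in hyperedge e
        row = [sum(a * XW[v][j] for a, v in zip(alpha, members)) / degree + EW[e][j]
               for j in range(out_dim)]
        E_out.append(row)
    return E_out


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three type nodes, two features each
H = [[1, 0], [1, 1], [0, 1]]               # two hyperedges: {0, 1} and {1, 2}
E_in = [[1.0], [2.0]]                      # one input feature per hyperedge
W = [[1.0, 0.0], [0.0, 1.0]]               # identity node projection
W_E = [[0.0, 0.0]]                         # zero edge projection for the toy example
E_out = hyperedge_conv(X, H, E_in, W, W_E)
```

With a zero edge projection, the attention becomes uniform. Each row is then the mean of the member nodes' features, divided once more by the hyperedge degree.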

The primary objective of training an HGNN is to learn an accurate approximation of the reward function associated with each task. Specifically, the model is designed to predict the expected reward that a given coalition structure would obtain upon attempting to complete a particular task. In this way, the trained HGNN serves as an effective predictive tool for estimating the cooperative value of candidate coalitions within the defined environment. This enables informed decision-making regarding coalition formation and task allocation. To collect training data for the HGNN, a number of exploratory coalition formation trials are performed. These trials can be generated by any method, such as random agent selection or RL-backed strategies.
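A minimal sketch of exploratory trials based on random agent selection follows. It assumes that coalitions are disjoint sets of at least two agents, capped at a maximum size. The name `random_configuration` is hypothetical. In a full pipeline, each generated configuration would be executed in the environment, and the observed rewards would become the hyperedge labels used for training.

```python
import random
from typing import List


def random_configuration(num_agents: int, num_tasks: int, max_size: int,
                         rng: random.Random) -> List[List[int]]:
    """One exploratory trial: a random coalition of distinct agents per task."""
    pool = list(range(num_agents))
    rng.shuffle(pool)
    coalitions: List[List[int]] = []
    for _ in range(num_tasks):
        if len(pool) < 2:
            break  # fewer than two agents left: no further coalition can be formed
        size = rng.randint(2, min(max_size, len(pool)))
        coalitions.append(sorted(pool[:size]))
        pool = pool[size:]  # agents already used cannot join another coalition
    return coalitions


rng = random.Random(0)
# Each configuration would then be run in the environment to obtain reward labels.
trials = [random_configuration(num_agents=40, num_tasks=5, max_size=10, rng=rng)
          for _ in range(3)]
```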

Following the training phase, the model’s predictive performance is evaluated to ensure its reliability in reward estimation. Once reliability is validated, the next step involves the application of an optimization algorithm—such as a Genetic Algorithm, Simulated Annealing, or another suitable method, e.g., Bayesian optimization—to discover the best coalition for each task with respect to reward maximization, according to the “belief” of the trained HGNN.

Note that the current focus of this work is on learning the value of coalitions in a single time step. Capturing the sequential aspects of the coalition formation process is an interesting direction for future work. In fact, the work in the current paper can be extended for this purpose by intertwining HGNN learning with deep reinforcement learning (DRL), for instance, with the methods proposed in a recent paper by Koresis et al. [17]. This is outlined in the concluding section of this document.

3.3. Genetic Algorithm and Simulated Annealing for Discovering High-Quality Coalitions

Having trained the HGNN to approximate reward functions specific to distinct tasks, there is also an accompanying “inverse” challenge: finding coalitions that maximize these rewards. In light of the combinatorial characteristics inherent to the search space, this paper adopts two metaheuristic methods, a Genetic Algorithm (GA) and Simulated Annealing (SA), to efficiently explore high-reward solutions. While the GA is able to perform parallel exploration in diverse regions of the solution space, SA provides a targeted and adaptive mechanism that manages to escape local optima.

3.3.1. Genetic Algorithm

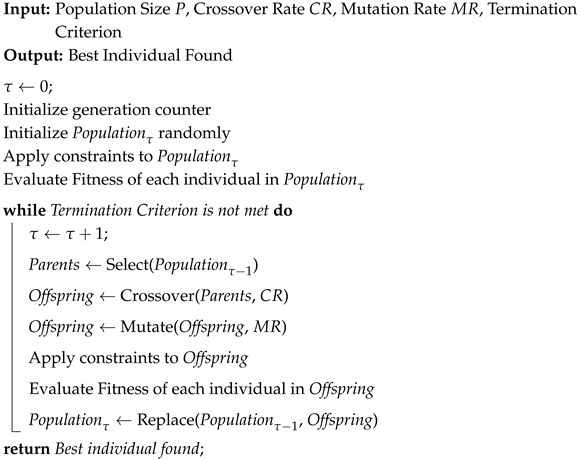

A Genetic Algorithm is a well-known metaheuristic search method [32] that operates on a population and is inspired by natural selection. In the proposed approach, each GA individual represents a set of candidate coalition–task pairings. The HGNN serves as a surrogate fitness evaluator, thus enabling accurate reward estimation. The process presented in Algorithm 1 is elaborated upon immediately below.

Algorithm 1: Genetic Algorithm (GA).

To integrate the hypergraph structure into the Genetic Algorithm (GA) framework, the appropriate chromosomal representation for each individual in the population is first defined. Specifically, each individual is encoded with n chromosomes, where n is the total number of tasks in the environment. Each chromosome is an integer index referencing a possible coalition type vector.

The referenced type vector must be valid. For each type, the number of instances it contains must not exceed the number of agents of that type that have enough resources to participate in a coalition. The total number of possible coalition type vectors is given by the combinatorial expression

$$\sum_{k=2}^{M}\binom{M+k-1}{k},$$

where M is the number of types and k is the potential number of agents in a coalition (ranging from 2 to M agents, as coalitions require at least two members).
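The number of possible coalition type vectors, and the mapping from a chromosome index to a type vector, can be sketched as follows. The sketch assumes that a type vector is a multiset of agent types, so that the number of type vectors with k members is $\binom{M+k-1}{k}$. It also assumes that chromosome indices enumerate these vectors in a fixed order; both the ordering and the helper names are hypothetical.

With M = 10 types and coalitions of 2 to 10 agents (values we assume here), the count is 184,745. Multiplied by 25 tasks, this gives the 4,618,625 coalition–task pairs reported in Section 4.1.

```python
from itertools import combinations_with_replacement
from math import comb
from typing import List, Tuple


def num_type_vectors(M: int, max_size: int, min_size: int = 2) -> int:
    """Number of multisets of min_size..max_size agent types drawn from M types."""
    return sum(comb(M + k - 1, k) for k in range(min_size, max_size + 1))


def enumerate_type_vectors(M: int, max_size: int,
                           min_size: int = 2) -> List[Tuple[int, ...]]:
    """All type vectors in a fixed order; a chromosome stores an index into this list."""
    vectors = []
    for k in range(min_size, max_size + 1):
        for combo in combinations_with_replacement(range(M), k):
            counts = [0] * M
            for t in combo:
                counts[t] += 1  # number of coalition members of type t
            vectors.append(tuple(counts))
    return vectors


print(num_type_vectors(10, 10))       # 184745 possible coalition type vectors
print(num_type_vectors(10, 10) * 25)  # 4618625 coalition-task pairs for 25 tasks
```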

A crucial aspect of employing the GA is how the fitness function is defined to evaluate an individual. To that end, the trained HGNN’s reward predictions are employed to calculate the fitness score of the hypergraph that the chromosomes of an individual represent. Additionally, it is crucial to ensure that all individuals that are produced from the operations of the GA are valid. To that end, several constraints are applied regarding the individuals’ chromosomes:

- A task cannot be completed simultaneously by more than one coalition.
- A coalition cannot complete more than one task at the same time.
- The number of chromosomes must equal the number of tasks.
- No chromosome may reference an invalid type vector.
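The constraints listed above can be checked with a small validity test, such as the hypothetical `is_valid_individual` below.

- Encoding exactly one chromosome per task already prevents a task from being assigned to two coalitions.
- The remaining requirement (no coalition undertaking two tasks, i.e., no agent being used twice) is interpreted here as a per-type capacity check against the number of available agents. This reading is our assumption and not the authors' stated implementation.

```python
from typing import Sequence, Tuple


def is_valid_individual(individual: Sequence[int],
                        type_vectors: Sequence[Tuple[int, ...]],
                        available: Sequence[int], num_tasks: int) -> bool:
    """Check the GA constraints for one individual (one chromosome per task)."""
    # Exactly one chromosome per task: a task cannot get two coalitions.
    if len(individual) != num_tasks:
        return False
    used = [0] * len(available)
    for index in individual:
        # Every chromosome must reference an existing type vector.
        if not 0 <= index < len(type_vectors):
            return False
        for t, count in enumerate(type_vectors[index]):
            used[t] += count
    # Assumed reading of "a coalition cannot complete more than one task": no agent is
    # used twice, so coalitions together cannot exceed the available agents of each type.
    return all(u <= a for u, a in zip(used, available))


type_vectors = [(2, 0), (1, 1), (0, 2)]  # toy type vectors over two types
available = [2, 2]                       # agents of each type with enough resources
```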

While the GA can explore the solution space in a broad sense, the following shortcomings might be faced: (i) premature convergence in complex and non-smooth reward landscapes (e.g., sparse high-reward regions) and (ii) increased execution time due to the overhead for evaluating the whole population of candidate solutions in each iteration.
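A compact GA sketch in the spirit of this section follows. It uses tournament selection, one-point crossover, per-gene mutation that replaces a type vector index, and elitism. These operator choices, the default crossover and mutation probabilities, and the names `genetic_algorithm`, `fitness`, and `is_valid` are placeholders of ours. The `fitness` callback stands in for the trained HGNN's reward prediction. Only the default population size of 300 is taken from the experimental setup.

```python
import random
from typing import Callable, List, Sequence


def genetic_algorithm(fitness: Callable[[Sequence[int]], float],
                      is_valid: Callable[[Sequence[int]], bool],
                      num_tasks: int, num_vectors: int, pop_size: int = 300,
                      p_cross: float = 0.8, p_mut: float = 0.05,
                      generations: int = 100, seed: int = 0) -> List[int]:
    rng = random.Random(seed)

    def score(ind: Sequence[int]) -> float:
        # Invalid individuals are never preferred over valid ones.
        return fitness(ind) if is_valid(ind) else float("-inf")

    def tournament(pop: List[List[int]], scores: List[float], k: int = 3) -> List[int]:
        picks = rng.sample(range(len(pop)), k)
        return pop[max(picks, key=lambda i: scores[i])]

    # Each individual holds one type vector index per task.
    population = [[rng.randrange(num_vectors) for _ in range(num_tasks)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        scores = [score(ind) for ind in population]
        best = population[max(range(pop_size), key=lambda i: scores[i])]
        next_pop = [list(best)]  # elitism: the current best survives unchanged
        while len(next_pop) < pop_size:
            a, b = tournament(population, scores), tournament(population, scores)
            child = list(a)
            if rng.random() < p_cross and num_tasks > 1:
                cut = rng.randrange(1, num_tasks)  # one-point crossover
                child = a[:cut] + b[cut:]
            # Mutation: replace a gene with a random type vector index.
            child = [rng.randrange(num_vectors) if rng.random() < p_mut else g
                     for g in child]
            next_pop.append(child)
        population = next_pop
    scores = [score(ind) for ind in population]
    return population[max(range(pop_size), key=lambda i: scores[i])]


# Toy surrogate in place of the HGNN: the reward is simply the sum of the indices.
best = genetic_algorithm(fitness=lambda ind: float(sum(ind)), is_valid=lambda ind: True,
                         num_tasks=5, num_vectors=10, pop_size=50, generations=30)
```

Because the whole population is scored in every generation, each generation issues pop_size surrogate queries. This is the evaluation overhead mentioned above.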

3.3.2. Simulated Annealing

To address the GA's limitations, an alternative version of the optimization framework is also considered. It uses Simulated Annealing (SA), which adaptively balances exploration and exploitation through a temperature-controlled acceptance criterion. In this variant, the GA's chromosomal representation of a hypergraph is re-employed for consistency: an n-vector of coalition type vector indices.

At each iteration of SA, a neighboring solution is generated by randomly replacing one type vector index in the current solution vector. A "temperature" variable T is used to balance exploration (high T) and exploitation (low T). It decays exponentially as $T \leftarrow \alpha T$, with $0 < \alpha < 1$. Suboptimal solutions are accepted with probability

$$P = \exp\left(-\frac{\Delta R}{T}\right),$$

where $\Delta R$ is the reward difference between the current and candidate solutions. The above process is also described in Algorithm 2.

Similarly to the GA, SA queries the HGNN for reward predictions, but its single-solution focus reduces the computational overhead. This is particularly advantageous for large-scale tasks, where population-based methods can become computationally expensive.
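The SA loop described above can be sketched as follows, assuming the same index-vector encoding as the GA. The initial temperature, cooling rate, iteration budget, and the names `simulated_annealing` and `reward` are illustrative placeholders. The `reward` callback stands in for the HGNN's prediction. The small floor on the temperature only guards against division by zero.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def simulated_annealing(reward: Callable[[Sequence[int]], float],
                        is_valid: Callable[[Sequence[int]], bool],
                        initial: Sequence[int], num_vectors: int,
                        T0: float = 1.0, alpha: float = 0.99,
                        iterations: int = 5000, seed: int = 0) -> Tuple[List[int], float]:
    rng = random.Random(seed)
    current = list(initial)
    current_r = reward(current)
    best, best_r = list(current), current_r
    T = T0
    for _ in range(iterations):
        # Neighbour: replace one randomly chosen type vector index.
        candidate = list(current)
        candidate[rng.randrange(len(candidate))] = rng.randrange(num_vectors)
        if is_valid(candidate):
            cand_r = reward(candidate)
            delta = current_r - cand_r  # positive when the candidate is worse
            # Always accept improvements; accept worse moves with prob exp(-delta / T).
            if delta <= 0 or rng.random() < math.exp(-delta / T):
                current, current_r = candidate, cand_r
                if current_r > best_r:
                    best, best_r = list(current), current_r
        T = max(alpha * T, 1e-12)  # exponential cooling, floored to avoid T = 0
    return best, best_r


# Toy surrogate in place of the HGNN: the reward is simply the sum of the indices.
best, best_r = simulated_annealing(reward=lambda s: float(sum(s)), is_valid=lambda s: True,
                                   initial=[0] * 5, num_vectors=10)
```

Only one candidate is evaluated per iteration. This is the source of the lower per-iteration cost compared with the population-based GA.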

Algorithm 2: Simulated Annealing (SA).

3.4. Aspects of Real-World Applicability

As mentioned earlier in the text, coalition formation of agents with heterogeneous types for the collective undertaking of given tasks is a timely problem with many real-world applications. As such, the developed solutions must capture the corresponding requirements, i.e., (a) to achieve high performance, (b) to be computationally tractable, (c) to preserve users' privacy, and (d) to adopt a realizable system architecture. The proposed approach addresses requirements (a) to (c):

- (a) It is able to tackle the complexity of the environment and lead to satisfactory agent rewards despite the inherent uncertainties, as also illustrated by the results in the following section.
- (b) It combines the computational efficiency of GNNs, the representational power of HGNNs in the coalition formation domain, and the well-established effectiveness of informed search methods. This combination can scale to very large numbers of agents.
- (c) Agent privacy is maintained, as the only information that must be shared is the agent type. The type is not unique to each agent and cannot be used to identify specific agents.

Regarding (d), a conceptual architecture is shown in Figure 3. First, a trusted third party is required to train, execute, and maintain the HGNN-SA. This third party must be networked with the agents in order to exchange the required messages. These include task requests, coalition proposals, acceptance or rejection of participation, and reward-acquisition-related information. The training data for the HGNN can be obtained in one of two ways. They can be gathered gradually during a "setup" period in which coalitions are formed manually. Alternatively, a transfer learning approach can provide a pre-trained model, e.g., one trained on data originating from another location or time instance.

4. Experimental Evaluation

This section thoroughly evaluates the performance of the HGNN-GA and HGNN-SA hybrids against two baselines: a vanilla DQN [10] algorithm and a plain random coalition formation approach. The implementation is openly available at https://github.com/GerasimosKoresis/HGNN-MDPI, accessed on 13 November 2025.

4.1. Setup

The experimentation environment consists of a population of agents of M types. The number of available tasks is n, and at most 10 agents are allowed in a single coalition. Each agent i's resources are sampled from a uniform distribution. The types' contributions are generated with the same type of distribution, but with a different range. The quota of each task is sampled from a normal distribution.

The function v quantifies the synergy between all pairs of types in a type vector. For each type pair, the synergy value is sampled from a normal distribution, as also shown in Figure 4. In this experimental setting, we empirically selected the parameters of this distribution so that the v-values degrade gracefully around the mean (see Figure 4). Note, however, that the method is not tied to this choice and would operate with any distribution.

These values reflect how well specific type combinations perform together, with higher values indicating stronger cooperative potential. The coalition–task space consists of 4,618,625 such pairs. This is calculated as $n\sum_{k=2}^{M}\binom{M+k-1}{k}$, where k is the potential number of agents in a coalition. The number of potential coalitions is therefore 4,618,625/25, which equals 184,745.

By contrast, the number of potential coalitional configurations is astronomically larger. These are the potential coalition structures, i.e., the arrangements of coalitions undertaking tasks at a time. This is also the potential number of hypergraphs that could be constructed for training.

The HGNN consists of one hypergraph convolution layer followed by three linear layers, each with 128 neurons. It is trained on a constructed dataset of 1000 hypergraphs, each consisting of n coalitions completing the environment's tasks, so training covers 25,000 coalitions in the setup. A separate set of 200 previously unseen hypergraphs is also created, on which the trained HGNN model is evaluated.

Notice that the dataset size of 1000 hypergraphs is very small compared to the potential number of coalitional configurations that can give rise to corresponding hypergraphs (as calculated above). To ensure that the model learns to estimate the rewards of both successful and unsuccessful coalitions, half of the training dataset consisted of unsuccessful hypergraph representations, and profitable ones made up the other half. The Huber loss is used as the loss function, together with a non-linear activation function for the network's layers.
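For reference, the experiments use the Huber loss for both the HGNN-based methods and the DQN. It is quadratic for small errors and linear for large ones, which makes it less sensitive to outlying rewards than the squared error. A minimal sketch follows. It uses the common threshold parameter `delta`, whose value here is an assumption. In PyTorch, `torch.nn.HuberLoss` provides the same mean-reduced loss.

```python
from typing import Sequence


def huber_loss(predictions: Sequence[float], targets: Sequence[float],
               delta: float = 1.0) -> float:
    """Mean Huber loss: quadratic for |error| <= delta, linear beyond it."""
    total = 0.0
    for p, y in zip(predictions, targets):
        error = abs(p - y)
        if error <= delta:
            total += 0.5 * error * error
        else:
            total += delta * (error - 0.5 * delta)
    return total / len(predictions)


print(huber_loss([0.5, 3.0], [0.0, 0.0]))  # (0.125 + 2.5) / 2 = 1.3125
```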

Figure 5 illustrates the loss values during the HGNN’s training for 200 K steps, indicating that the applied configuration manages to adequately fit the underlying model of the agent synergy potential. Judging by the figure, the training process could have been terminated at 50 K steps since there is no significant improvement after this point. This result suggests not only that convergence is reached quite fast but also that the training is stable, since the loss remains at low levels throughout the rest of the training process.

For HGNN-GA, a population of 300 individuals was used. As already mentioned, the HGNN model was used to calculate the individuals' fitness scores. The crossover and mutation probabilities were fixed, and the termination criterion was a maximum number of generations.

For HGNN-SA, the HGNN model is again used as a reward estimator for the candidate solutions examined during the search procedure. The temperature parameter was initialized to a fixed value and cooled at a constant rate.

As previously mentioned, one of the selected baselines is DQN [10], which is perhaps the most well-known deep reinforcement learning algorithm. DQN integrates deep learning with Q-learning to identify optimal actions in sequential decision-making settings. The DQN model used in the comparison is the specific DQN implementation of [17]. It consists of five fully connected linear layers, each with 32 neurons. Exploration was performed with the ε-greedy action selection strategy, and the model was trained for 12,800 episodes without any HGNN involvement.

To use the DQN baseline to form coalitions, agents have to employ a coalition formation protocol that unfolds in episodes:

- Each episode proceeds in rounds. In each round, a randomly selected proposer suggests one coalition–task pair, based on the agent types it believes to be sufficient for task completion.
- Each proposal must be unanimously accepted by the addressed agents; otherwise, a new proposal is made.
- The proposer role rotates once the proposer reaches a fixed proposal limit or exhausts its resources.
- An episode ends when all tasks are "covered", or when all agents have served as proposers without further viable coalitions.

Notice that DQN (or any other deep reinforcement learning algorithm) is not a natural candidate for the coalition structure generation problem, i.e., proposing or evaluating a complete collection of coalitions that each perform a task. This is because it requires the aforementioned formation protocol.
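The round-based protocol described above can be sketched as follows. The names `run_episode`, `propose`, `accepts`, and `toy_propose` are hypothetical. In the baseline, `propose` would be backed by the trained DQN. Treating a proposer who has joined a coalition as having exhausted their resources is a simplifying assumption of this sketch.

```python
import random
from typing import Callable, FrozenSet, List, Optional, Sequence, Set, Tuple

Proposal = Optional[Tuple[List[int], int]]  # (coalition members, task) or None


def run_episode(agents: Sequence[int], tasks: Sequence[int],
                propose: Callable[[int, FrozenSet[int], FrozenSet[int]], Proposal],
                accepts: Callable[[int, List[int], int], bool],
                proposal_limit: int,
                rng: random.Random) -> List[Tuple[FrozenSet[int], int]]:
    free: Set[int] = set(agents)
    open_tasks: Set[int] = set(tasks)
    formed: List[Tuple[FrozenSet[int], int]] = []
    order = list(agents)
    rng.shuffle(order)  # proposers are selected in random order, each serving once
    for proposer in order:
        for _ in range(proposal_limit):  # rotate after the proposal limit is reached
            if not open_tasks or proposer not in free:
                break  # all tasks covered, or the proposer's resources are committed
            proposal = propose(proposer, frozenset(free), frozenset(open_tasks))
            if proposal is None:
                break  # no further viable coalition from this proposer
            coalition, task = proposal
            if all(accepts(a, coalition, task) for a in coalition):  # unanimity
                formed.append((frozenset(coalition), task))
                free -= set(coalition)
                open_tasks.discard(task)
        if not open_tasks:
            break  # the episode ends once every task is covered
    return formed


def toy_propose(proposer: int, free: FrozenSet[int], open_tasks: FrozenSet[int]) -> Proposal:
    """Hypothetical stand-in for a DQN-driven proposal: pair with the lowest free agent."""
    others = sorted(a for a in free if a != proposer)
    if not others or not open_tasks:
        return None
    return [proposer, others[0]], min(open_tasks)


formed = run_episode(agents=range(6), tasks=[0, 1], propose=toy_propose,
                     accepts=lambda agent, coalition, task: True,
                     proposal_limit=3, rng=random.Random(1))
```

The sketch makes the limitation discussed above concrete. A complete coalition structure emerges only as a by-product of many sequential proposals, rather than being proposed or evaluated as a whole.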

Finally, to further illustrate the learning capabilities of the methods put forward in this paper, the results of a uniform random selection mechanism regarding the agents that constitute the coalitions (Random) are also presented. In this simple baseline case, no information regarding agent types or capabilities is taken into account, and no guarantees exist that the formed coalitions will be able to successfully complete the given tasks.

Experiments were run on an i7-13700 CPU with 64 GB of RAM and an RTX 4060Ti-16GB GPU. The seeds used for the experiments were generated randomly: [82, 84, 67, 40, 85, 57, 66, 90, 71, 64]. Python 3.9.18 with PyTorch v.2.2.1 was used, along with the Adam optimizer with a fixed learning rate; the DQN likewise used a fixed discount factor. The hyperparameter values for the HGNN-{GA,SA} and DQN architectures were fine-tuned by grid search in similar, though smaller-scale, trials. The Huber loss function was used for both the HGNN-based methods and the DQN.

A hyperparameter sensitivity analysis was performed on the batch size and the learning rate. Tests with larger learning rates showed that they make the model learn too fast, which hinders rather than helps convergence. The tested batch sizes were 16, 32, 64, and 128. Larger batch sizes were found to provide better stability and to speed up the learning process.

The dataset used for training the HGNN model consists of possible hypergraph instances of the environment. Each hypergraph has M nodes for the agent types and n hyperedges representing the coalition–task pairs. Each hyperedge is labeled with the reward of the corresponding coalition attempting its task (as also presented in Section 3.2).

DQN requires approximately 12 h to complete its training process in this setting, and the trained DQN takes about 7 s to generate a complete coalition–task configuration. The HGNN needs less than 3 h to train on the batches of the dataset. As explained above, this dataset consists of 1000 random hypergraph representations of possible coalition–task configurations, drawn from a vastly larger configuration space. The trained model then evaluates a configuration of the environment in a fraction of a second.

4.2. Performance Evaluation

The DQN requires training on samples from a replay buffer that gathers successful coalition formations in order to learn its policy. The HGNN variants also require training, but in this case, the samples constitute complete coalition–task configurations of the environment.

For the sake of fair comparison, all three methods were run for 10 random seeds. The results are shown in Table 2. The reported total reward values represent the payoff that coalitions acquire for completing a set of tasks, after each model has been trained following the procedures previously described.

It is evident that Random performs extremely poorly, DQN and HGNN-GA perform similarly, and HGNN-SA performs better in a statistically significant manner. As expected, Random is not a good solution for such a dynamic multiagent environment. Its performance, shown in Table 2, underlines the need for some kind of learning process in order to tackle this coalition formation setting.

Regarding HGNN-GA, the results in Table 3 show that the difference between its performance and that of DQN is not statistically significant. Overall, HGNN-GA appears to get trapped in local optima, which prevents it from converging early. Nevertheless, it discovers a well-performing, although not the best-performing, solution.

By contrast, the HGNN-SA method clearly performs better. In terms of average reward acquired, it shows a 3.14% increase over DQN and a 4.40% increase over HGNN-GA. We attribute this improvement to its temperature mechanism, which allows it to escape local optima. Notice that the standard deviation of the method's results is also lower than those of its competitors. Importantly, Table 3 indicates that HGNN-SA outperforms both competitors in a statistically significant manner: the p-values are below the significance threshold for both the t-test and the Wilcoxon test.

The results in Table 2 and Table 3 are also visualized in Figure 6 and Figure 7, with Figure 7 focusing on the performance of HGNN-SA, HGNN-GA, and DQN. In those figures, boxplots depict the rewards that each method achieved across all simulation trials. All learning-based methods are shown to outperform Random by a huge margin. The statistically significant superiority of HGNN-SA, however, is clear in Figure 7.

Moreover, we stress again the advantage of the HGNN methods over DQN in terms of time performance. As mentioned in Section 4.1, DQN requires 12 h of training time, more than four times the training time of the HGNN methods. Furthermore, DQN needs 7 s to propose a coalition–task configuration "at runtime". This is 35 times longer than the near-instantaneous response of the HGNN when evaluating a given coalition structure.

The experimental results highlight a shortcoming of the GA: it requires the tuning of a high number of hyperparameters. By contrast, HGNN-SA performs well with minimal tuning. This occurs because the GA initializes a number of random configurations and then uses the crossover operation to try to reach the best formation of coalition–task pairs in the environment. This leaves considerable room for chance, as the initial population may be nowhere near the optimal configuration, and the GA may thus need a great amount of time to even approach convergence.

SA, on the other hand, does not face this issue, since it works on a single solution and changes its components one at a time to find the best combination of coalition–task pairs. It always accepts changes that increase the solution's reward. Changes that decrease the reward are accepted only with a probability that shrinks as the temperature cools.

To summarize, HGNN-SA has a clear—indeed, a statistically significant—advantage in accumulating higher rewards compared to DQN and HGNN-GA. Regardless, the aim of the experiments is not to advocate for a particular algorithm over another—e.g., regarding the preferred choices for the informed search approach—but to illustrate the advantage of combining HGNNs with other algorithms. The results indicate that there is substantial merit from such combinations, and that hybrid approaches have the potential to achieve even better performance than “plain” ones.