1. Introduction

Artificial intelligence (AI) is rapidly reshaping the foundations of assessment in higher education. Contemporary systems for automated feedback and intelligent tutoring report positive effects on performance and large-scale personalization; however, the iterative nature of assessment–feedback cycles remains under-theorized from a mathematical and algorithmic standpoint, limiting analyses of convergence, sensitivity, and robustness in learning processes [1,2,3]. Recent syntheses in AI for education summarize advances ranging from automated scoring for writing and programming to learning-analytics dashboards, while emphasizing mixed evidence and the need for reproducible, comparable frameworks across contexts [1,4,5].

In parallel, retrieval-augmented generation (RAG) has emerged as a key mechanism to inject reliable external knowledge into large language models, mitigating hallucinations and improving accuracy on knowledge-intensive tasks. The 2023–2024 survey wave systematizes architectures, training strategies, and applications, providing a technical basis for contextualized and traceable feedback in education [6,7,8]. Closely related prompting/reasoning frameworks (e.g., ReAct) support verifiable, tool-using feedback workflows [9].

In this work, we use “agentic RAG” in an operational and auditable sense: (i) planning, i.e., rubric-aligned task decomposition of the feedback job; (ii) tool use beyond retrieval, i.e., invoking tests, static/dynamic analyzers, and a rubric checker with logged inputs/outputs; and (iii) self-critique, i.e., a checklist-based verification for evidence coverage, rubric alignment, and actionability prior to delivery (details in Section 3.2).

Study-design note (clarifying iteration and deliverables). Throughout the paper, t indexes six distinct, syllabus-aligned programming tasks (not re-submissions of the same task). Each iteration comprises submission, auto-evaluation, feedback delivery, and student revision; the next performance score $S_{i,t+1}$ is computed at the subsequent task’s auto-evaluation, once the revision has led to a new submission. The cap at six iterations is determined by the course calendar. We make this timing explicit in the Methods (Section 3.1 and Section 3.3) and in the caption of Figure 1.

Within programming education, systematic reviews and venue reports (e.g., ACM Learning@Scale and EDM) document the expansion of auto-grading and LLM-based formative feedback, alongside open questions about reliability, transfer, and institutional scalability [10,11,12,13,14]. In writing, recent studies and meta-analyses report overall positive but heterogeneous effects of automated feedback, with moderators such as task type, feedback design, and outcome measures; these factors call for models that capture the temporal evolution of learning rather than single-shot performance [3,15]. Meanwhile, the knowledge tracing (KT) literature advances rich sequential models, from classical Bayesian formulations to Transformer-based approaches, typically optimized for predictive fit on next-step correctness rather than for prescribing algorithmic feedback policies with interpretable convergence guarantees [16,17,18,19,20]. Our approach is complementary: KT focuses on state estimation of latent proficiency, whereas we formulate and analyze a policy-level design for the feedback loop itself, with explicit update mechanics.

Beyond improving traditional evaluations, AI-mediated higher education raises a broader pedagogical question: what forms of knowledge and understanding should be valued, and how should they be assessed when AI can already produce sophisticated outputs? In our framing, product quality is necessary but insufficient; we elevate process and provenance to first-class assessment signals. Concretely, the platform exposes (a) planning artifacts (rubric-aligned decomposition), (b) tool-usage telemetry (unit/integration tests, static/dynamic analyzers, and rubric checks), (c) testing strategy and outcomes, and (d) evidence citations from retrieval. These signals, natively logged by the agentic controller and connectors (Methods, Section 3.2), enable defensible formats (trace-based walkthroughs, oral/code defenses, and revision-under-constraints) that are auditable and scalable. We return to these epistemic implications in the Discussion (Section 5.4), where we outline rubric alignment to these signals and institutional deployment considerations.

This study frames assessment and feedback as a discrete-time, policy-level algorithmic process. We formalize two complementary models: (i) a difference equation linking per-iteration gain to the gap-to-target and feedback quality via interpretable parameters $(\eta_i, \lambda_i)$, which yield iteration-complexity and stability conditions (Propositions 1 and 2), and (ii) a logistic convergence model describing the asymptotic approach to a performance objective. This framing enables analysis of convergence rates, sensitivity to feedback quality, and intra-cohort variance reduction, aligning educational assessment with tools for algorithm design and analysis. Empirically, we validate the approach in a longitudinal study with six feedback iterations in a technical programming course, estimating model parameters via nonlinear regression and analyzing individual and group trajectories. Our results show that higher-quality, evidence-grounded feedback predicts larger next-iteration gains and faster convergence to target performance, while cohort dispersion decreases across cycles. These patterns are consistent with prior findings in intelligent tutoring, automated feedback, and retrieval-augmented LLMs [6,7,8,11].

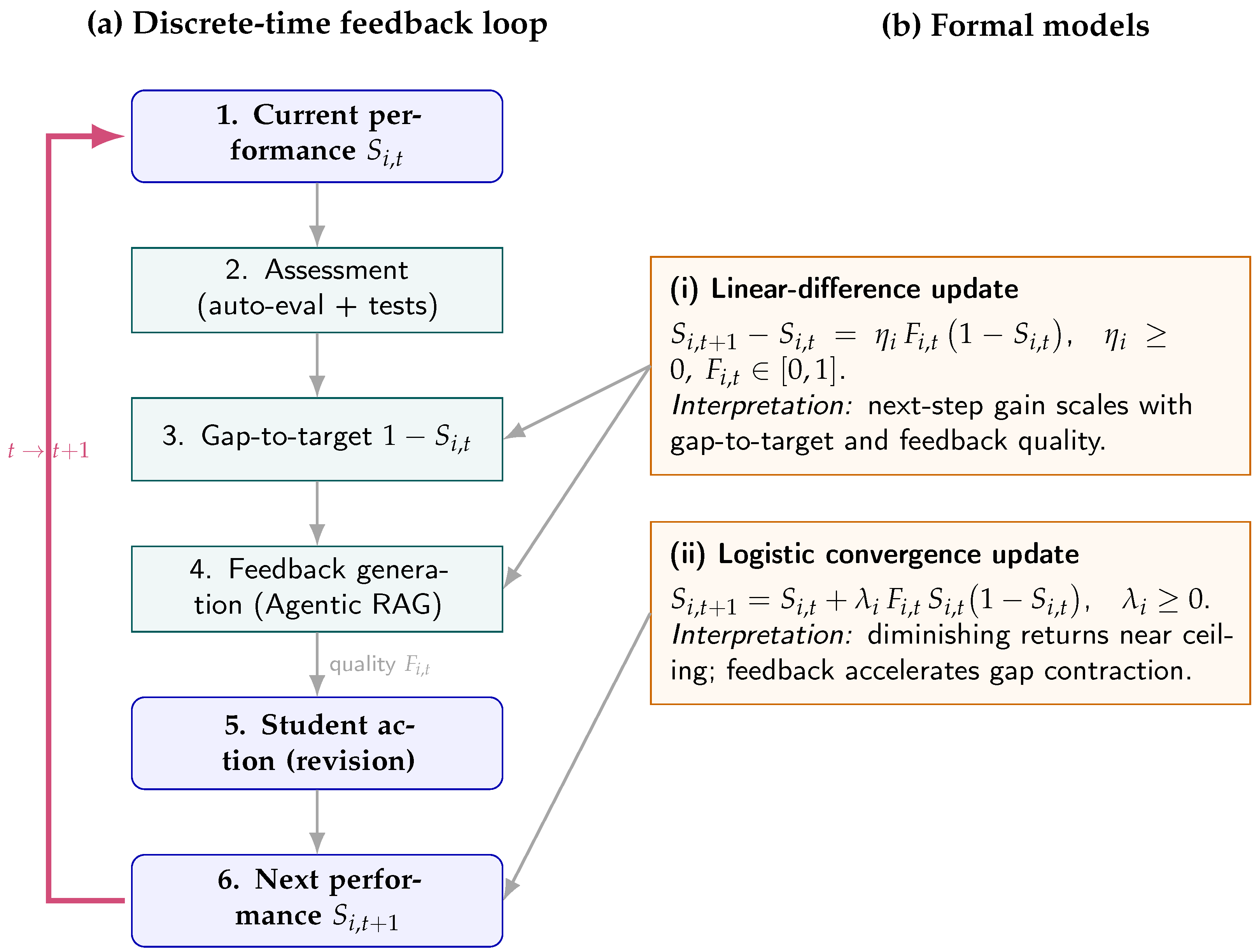

Conceptual overview. Figure 1 depicts the student-level loop and its coupling with the formal models used throughout the paper. The process moves the performance state $S_{i,t}$ to $S_{i,t+1}$ via targeted feedback whose quality is summarized by $F_{i,t}$. The two governing formulations, used later in estimation and diagnostics, are shown in panel (b): a linear-difference update and a logistic update, both expressed in discrete time and consistent with our methods.

Scope and contributions. The contribution is threefold: (1) a formal, interpretable policy design for iterative assessment with explicit update mechanics and parameters $(\eta_i, \lambda_i)$ that connect feedback quality to the pace and equity of learning, enabling iteration-complexity and stability analyses (Propositions 1 and 2); (2) an empirical validation in a real course setting showing sizable gains in means and reductions in dispersion over six iterations (Section 4); and (3) an explicit pedagogical stance for AI-rich contexts that elevates process and provenance (planning artifacts, tool-usage traces, test outcomes, evidence citations) and outlines defensible assessment formats (trace-based walkthroughs and oral/code defenses) that the platform can instrument at scale. This positions our work as a design model for the feedback loop itself, complementary to state-estimation approaches such as knowledge tracing.

2. Theoretical Framework

To ground our proposal of a dynamic, AI-supported assessment and feedback system within the broader digital transformation of higher education and the global EdTech landscape, this section reviews the most relevant theoretical and empirical research across educational assessment, feedback for learning, and Artificial Intelligence in Education (AIED), together with implications for pedagogy and evaluation in digitally mediated environments. We also consider a comparative-education perspective to contextualize the phenomenon internationally. Our goal is to provide a conceptual and analytical basis for understanding the design, implementation, and broader implications of the model advanced in this article.

Over the last decade, and especially since the emergence of generative AI, research on assessment in digital environments has accelerated. Multiple syntheses concur that feedback is among the most powerful influences on learning when delivered personally, iteratively, and in context [3,21,22]. In technically demanding domains such as programming, early error identification and precise guidance are critical for effective learning and scalable instruction [10,23,24]. Recent evidence further suggests that AI-supported automated feedback can achieve high student acceptability while raising challenges around factuality, coherence, and alignment with course objectives [4,11,15,25]. These observations motivate hybrid designs that combine generative models with information retrieval and tool use to improve the relevance, traceability, and verifiability of feedback.

2.1. Assessment and Feedback in Technical Disciplines and Digital Settings

Within the digital transformation of higher education, disciplines with high technical complexity and iterative skill formation (e.g., engineering, computational design, and especially programming) require assessment approaches that support the rapid, personalized, and precise adjustment of performance as students progress. Digital platforms facilitate content delivery and task management but amplify the need for scalable formative feedback that goes beyond grading to provide concrete, actionable guidance [3,21]. In programming education, research documents expansion in auto-grading, AI-mediated hints, and LLM-based formative feedback, alongside open questions about reliability, transfer, and equity at scale [10,11,12,13,14,24]. Addressing these challenges is essential to ensure that digital transformation translates into improved learning outcomes and readiness for technology-intensive labor markets.

2.2. Advanced AI for Personalized Feedback: RAG and Agentic RAG

Recent advances in AI have yielded models with markedly improved capabilities for interactive, context-aware generation. Retrieval-augmented generation (RAG) combines the expressive power of foundation models with the precision of targeted retrieval over curated knowledge sources, mitigating hallucinations and improving accuracy on knowledge-intensive tasks [6,7,26]. Agentic variants extend this paradigm with planning, tool use, and self-critique cycles, enabling systems to reason over tasks, fetch evidence, and iteratively refine outputs [8,9].

We use “agentic RAG” in an explicit, auditable sense: (i) planning, i.e., rubric-aligned task decomposition of the feedback job; (ii) tool use beyond retrieval, i.e., invoking tests, static/dynamic analyzers, and a rubric checker with logged inputs/outputs; and (iii) self-critique, i.e., a checklist-based verification for evidence coverage, rubric alignment, and actionability prior to delivery. These capabilities are implemented as controller-enforced steps (not opaque reasoning traces), supporting reproducibility and auditability (see Section 3 and Section 4; implementation details in Section 3.2).

Study-design crosswalk: in the empirical setting, iteration t indexes six distinct, syllabus-aligned programming tasks (i.e., six deliverables over the term); the next state $S_{i,t+1}$ is computed at the subsequent task’s auto-evaluation, after students revise based on the feedback from iteration t (Section 3.1 and Section 3.3).

In educational contexts, connecting agentic RAG to course materials, assignment rubrics, student artifacts, and institutional knowledge bases—via standardized connectors or protocol-based middleware—supports feedback that is course-aligned, evidence-grounded, and level-appropriate. This integration enables detailed explanations, targeted study resources, and adaptation to learner state, making richer, adaptive feedback feasible at scale and illustrating how AI underpins disruptive innovation in core teaching-and-learning processes.

2.3. Epistemic and Pedagogical Grounding in AI-Mediated Assessment: What to Value and How to Assess

Beyond refining traditional evaluation, AI-rich contexts prompt a broader question: what forms of knowledge and understanding should be valued, and how should they be assessed when AI can already produce sophisticated outputs? In our framework, product quality is necessary but insufficient. We elevate process and provenance to first-class assessment signals and align them with platform instrumentation:

Planning artifacts: rubric-aligned problem decomposition and rationale (controller step “plan”); mapped to rubric rows on problem understanding and strategy.

Tool-usage traces: calls to unit/integration tests, static/dynamic analyzers, and rubric checker with inputs/outputs logged; mapped to testing adequacy, correctness diagnostics, and standards compliance.

Testing strategy and outcomes: coverage, edge cases, and failing-to-passing transitions across iterations; mapped to engineering practice and evidence of improvement.

Evidence citations: retrieved sources with inline references; mapped to factual grounding and traceability of feedback.

Revision deltas: concrete changes from $t$ to $t+1$ (files, functions, complexity footprints); mapped to actionability and responsiveness.

Task- and criterion-level structure. Each iteration t corresponds to a distinct task with a common four-criterion rubric (accuracy, relevance, clarity, and actionability). The Feedback Quality Index $F_{i,t}$ aggregates these criteria, while supporting criterion-level and task-level disaggregation (e.g., reporting distributions of the per-criterion ratings $r_{i,t,c}$ by task, where $c$ indexes rubric criteria). This enables rubric-sensitive interpretation of cohort gains and dispersion and motivates the per-criterion commentary provided in Section 4 and Section 5.

These signals support defensible assessment formats (trace-based walkthroughs, oral/code defenses, and revision-under-constraints) without sacrificing comparability. They are natively produced by the agentic controller (Section 3), enabling equity-aware analytics (means, dispersion, and tails) and reproducible audits. While our Feedback Quality Index (FQI) aggregates accuracy, relevance, clarity, and actionability, these process/provenance signals can be reported alongside FQI, incorporated as covariates (e.g., $P_{i,t}$ in Equation (7)), or organized in an epistemic alignment matrix (Appendix A.1) that links constructs to rubric rows and platform traces; complementary exploratory associations and timing checks are provided in Appendix A.2, and criterion-by-task distributions in Appendix A.3. This grounding complements the formal models by clarifying what is valued and how it is evidenced at scale.

2.4. Mathematical Modeling of Assessment–Feedback Dynamics

Beyond transforming tools and workflows, the digitalization of learning generates rich longitudinal data about how students improve in response to instruction and iterative feedback. Mathematical modeling provides a principled lens to capture these dynamics, shifting the focus from single-shot outcomes to trajectories of performance over time. In systems that allow multiple attempts and continuous feedback, discrete-time updates are natural candidates: they describe how a learner’s performance is updated between evaluation points as a function of the previous state, the gap-to-target, and the quality of feedback. Throughout the paper, we consider two complementary formulations at the level of student $i$ and iteration $t$:

$$S_{i,t+1} = S_{i,t} + \eta_i F_{i,t}\,(1 - S_{i,t}) + \varepsilon_{i,t} \tag{1}$$

$$S_{i,t+1} = S_{i,t} + \lambda_i F_{i,t}\,S_{i,t}\,(1 - S_{i,t}) \tag{2}$$

Here $S_{i,t} \in [0,1]$ denotes a normalized performance score (with target $S^{*} = 1$), $F_{i,t} \in [0,1]$ summarizes feedback quality (accuracy, relevance, clarity, actionability), $\eta_i$ and $\lambda_i$ parameterize sensitivity and effectiveness, and $\varepsilon_{i,t}$ captures unmodeled shocks. Crucially, these are policy-level update rules: they prescribe how the system should act (via feedback quality) to contract the learning gap at a quantifiable rate, rather than estimating a latent proficiency state.
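As a rough illustration of the two update rules described above, the following Python sketch applies a linear gap-closing step and a logistic step over six iterations. The names `s` (normalized score), `f` (feedback quality), `eta`, and `lam` (rate parameters), as well as the numeric values, are assumptions chosen for illustration; they are not estimates from the study.

```python
def linear_update(s, f, eta, shock=0.0):
    """Linear-difference rule: close a fraction eta*f of the remaining gap (1 - s)."""
    return s + eta * f * (1.0 - s) + shock


def logistic_update(s, f, lam):
    """Logistic rule: the gain scales with both the current score s and the gap (1 - s)."""
    return s + lam * f * s * (1.0 - s)


def trajectory(update, s0, feedback, rate):
    """Apply one update per feedback value and return every visited state."""
    states = [s0]
    for f in feedback:
        states.append(update(states[-1], f, rate))
    return states


# Hypothetical inputs: constant feedback quality over six iterations.
feedback = [0.8] * 6
linear_path = trajectory(linear_update, 0.4, feedback, 0.5)
logistic_path = trajectory(logistic_update, 0.4, feedback, 0.5)
```

Under these assumed values both paths rise toward the target of 1, with the logistic path starting more slowly because its gain is damped by the low initial score.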

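Proposition 1 (Monotonicity, boundedness, and iteration complexity for (1)). Assume no shocks ($\varepsilon_{i,t} \equiv 0$), an initial score $S_{i,1} \in [0,1]$, sensitivity $\eta_i \in (0,1)$, and feedback quality $F_{i,t} \in [0,1]$. Then, we have the following:

1. (Monotonicity and boundedness) $S_{i,t}$ is nondecreasing and remains in $[0,1]$ for all t.

2. (Geometric convergence) If there exists $F_{\min} > 0$ such that $F_{i,t} \ge F_{\min}$ for all t, then, after $k$ further iterations,

$$1 - S_{i,t+k} \le (1 - \eta_i F_{\min})^{k}\,(1 - S_{i,t}).$$

3. (Iteration complexity) To achieve $1 - S_{i,t+k} \le \delta$ with $\delta \in (0,1)$, it suffices that

$$k \ge \frac{\ln\big((1 - S_{i,t})/\delta\big)}{-\ln(1 - \eta_i F_{\min})}.$$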
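The iteration-complexity statement can be checked numerically. The sketch below assumes the noise-free linear rule in which each step multiplies the remaining gap by `1 - eta * f`, with feedback quality bounded below by `f_min`; the function names and the numbers are hypothetical and only illustrate how such a bound could be evaluated.

```python
import math


def iterations_needed(gap0, delta, eta, f_min):
    """Smallest k with (1 - eta*f_min)**k * gap0 <= delta under the noise-free linear rule."""
    if gap0 <= delta:
        return 0
    rate = 1.0 - eta * f_min
    if not 0.0 < rate < 1.0:
        raise ValueError("need 0 < eta * f_min < 1")
    return math.ceil(math.log(gap0 / delta) / -math.log(rate))


def gap_after(gap0, eta, f_values):
    """Remaining gap after applying the noise-free update once per feedback value."""
    gap = gap0
    for f in f_values:
        gap *= 1.0 - eta * f
    return gap


# Hypothetical values: initial gap 0.6, tolerance 0.1, eta = 0.5, feedback quality >= 0.6.
k = iterations_needed(gap0=0.6, delta=0.1, eta=0.5, f_min=0.6)
final_gap = gap_after(0.6, 0.5, [0.6] * k)
```

Because the bound uses the worst-case feedback quality, any trajectory with higher-quality feedback reaches the tolerance at least as fast.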

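Proposition 2 (Stability and convergence for (2)). Assume an initial score $S_{i,1} \in (0,1)$, $\lambda_i > 0$, feedback quality bounded away from zero ($F_{i,t} \ge F_{\min} > 0$), and let $\bar{F} = \sup_t F_{i,t}$.

1. (Local stability at the target) If $0 < \lambda_i \bar{F} < 2$, then $S^{*} = 1$ is locally asymptotically stable. In particular, if $\lambda_i F_{i,t} \le 1$ for all t, then $S_{i,t}$ increases monotonically to 1.

2. (Convergence without oscillations) If $\lambda_i \bar{F} \le 1$, then $S_{i,t}$ is nondecreasing and converges to 1 without overshoot.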

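Proof. Define $g(S) = S + \lambda F\,S(1 - S)$ with $F \in [0,1]$. Fixed points satisfy $g(S) = S$, giving $S \in \{0, 1\}$. The derivative $g'(S) = 1 + \lambda F\,(1 - 2S)$ yields $g'(1) = 1 - \lambda F$. Local stability requires $|g'(1)| < 1$, i.e., $0 < \lambda F < 2$. If $0 < \lambda F \le 1$, then $g'(S) \ge 0$ for $S \in [0,1]$, so the map is increasing and contractive near the target, implying monotone convergence. □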
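The two regimes in the stability argument can be seen in a small simulation. This sketch assumes the logistic map with a constant combined gain `lam_f` (the product of the rate parameter and feedback quality, held fixed); the names and starting values are hypothetical.

```python
def logistic_map(s, lam_f):
    """One noise-free logistic step with combined gain lam_f = lambda * F."""
    return s + lam_f * s * (1.0 - s)


def slope_at_target(lam_f):
    """Derivative of the map at the fixed point s = 1; stability needs |slope| < 1."""
    return 1.0 - lam_f


def simulate(s0, lam_f, steps):
    """Iterate the map and return the full path, including the starting point."""
    path = [s0]
    for _ in range(steps):
        path.append(logistic_map(path[-1], lam_f))
    return path


smooth = simulate(0.3, 0.8, 40)  # gain <= 1: monotone approach, no overshoot
bumpy = simulate(0.3, 1.8, 40)   # 1 < gain < 2: still converges, but overshoots 1
```

In the second run the state temporarily exceeds 1, which has no meaning on a bounded score scale; this is one way to read the no-overshoot condition as a practical design constraint.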

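Corollary 1 (Cohort variance contraction (linearized)). Let $\bar{S}_t$ be the cohort mean, $\sigma_t^2 = \operatorname{Var}_i(S_{i,t})$ the cohort variance, and suppose shocks are independent across students with variance $\sigma_\varepsilon^2$. Linearizing (1) around $\bar{S}_t$ and defining $\bar{F}_t$ as the cohort-average feedback quality at iteration t,

$$\sigma_{t+1}^2 \approx (1 - \bar{\eta}\,\bar{F}_t)^2\,\sigma_t^2 + \sigma_\varepsilon^2,$$

where $\bar{\eta}$ denotes the cohort-average sensitivity. Hence, if $0 < \bar{\eta}\,\bar{F}_t < 1$ and $\sigma_\varepsilon^2$ is small, dispersion contracts geometrically toward a low-variance regime, aligning equity improvements with iterative feedback.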
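The variance-contraction claim can be illustrated with a simulated cohort. The sketch below is a simplification under stated assumptions: every student shares one sensitivity `eta` and one feedback quality per iteration, shocks are Gaussian, and scores are clipped to the unit interval. The cohort size, seed, and parameter values are hypothetical and unrelated to the study data.

```python
import random
import statistics


def cohort_sd_path(n_students, eta, feedback, shock_sd, seed=0):
    """Simulate the linear rule for a cohort and return the score SD after each step."""
    rng = random.Random(seed)
    scores = [rng.uniform(0.2, 0.8) for _ in range(n_students)]
    sds = [statistics.pstdev(scores)]
    for f in feedback:
        scores = [
            min(1.0, max(0.0, s + eta * f * (1.0 - s) + rng.gauss(0.0, shock_sd)))
            for s in scores
        ]
        sds.append(statistics.pstdev(scores))
    return sds


def predicted_variance(var0, eta, feedback, shock_var):
    """Linearized recursion: var_next = (1 - eta*f)**2 * var + shock_var."""
    out = [var0]
    for f in feedback:
        out.append((1.0 - eta * f) ** 2 * out[-1] + shock_var)
    return out


feedback = [0.7] * 6
sds = cohort_sd_path(n_students=200, eta=0.5, feedback=feedback, shock_sd=0.01)
pred = predicted_variance(sds[0] ** 2, 0.5, feedback, 0.01 ** 2)
```

Comparing `sds` with the square roots of `pred` gives a quick sense of how closely the linearized recursion tracks the simulated dispersion under these assumptions.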

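For (1) with $\varepsilon_{i,t} \equiv 0$ and $F_{i,t} \ge F_{\min} > 0$, the gap half-life satisfies $t_{1/2} \le \ln 2 / \big(-\ln(1 - \eta_i F_{\min})\big)$, linking estimated $\eta_i$ and a lower bound on feedback quality to expected pacing. With mean-zero noise, a Lyapunov potential $V_t = (1 - S_{i,t})^2$ yields $\mathbb{E}[V_{t+1} \mid S_{i,t}] \le \rho^2 V_t + \sigma_\varepsilon^2$ for $\rho = 1 - \eta_i F_{\min} \in (0,1)$, implying bounded steady-state error. These properties justify monitoring both mean trajectories and dispersion ($\sigma_t$) as first-class outcomes.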
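The pacing and steady-state quantities mentioned above can be computed directly. This sketch assumes a per-step contraction factor `rho = 1 - eta * f_min` for the gap and an additive shock variance `shock_var`; both helper names and all numbers are hypothetical illustrations rather than the paper's estimates.

```python
import math


def gap_half_life(eta, f_min):
    """Upper bound on the iterations needed to halve the gap (noise-free linear rule)."""
    rho = 1.0 - eta * f_min
    if not 0.0 < rho < 1.0:
        raise ValueError("need 0 < eta * f_min < 1")
    return math.log(2.0) / -math.log(rho)


def steady_state_bound(eta, f_min, shock_var):
    """Limit of the recursion V <- rho**2 * V + shock_var, i.e. shock_var / (1 - rho**2)."""
    rho = 1.0 - eta * f_min
    return shock_var / (1.0 - rho ** 2)


half_life = gap_half_life(eta=0.5, f_min=0.6)
bound = steady_state_bound(eta=0.5, f_min=0.6, shock_var=1e-4)
```

A shorter half-life and a smaller bound both follow from raising the guaranteed feedback quality `f_min`, which is the lever the policy-level framing emphasizes.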

2.5. Relation to Knowledge Tracing and Longitudinal Designs

This perspective resonates with, but is distinct from, the knowledge tracing literature. KT offers powerful sequential predictors (from Bayesian variants to Transformer-based approaches), yet the emphasis is often on predictive fit (next-step correctness) rather than on prescribing feedback policies with interpretable convergence guarantees and explicit update mechanics [16,17,18,19,20]. Our formulation foregrounds the policy: a mapping from current state and feedback quality to the next state with parameters $(\eta_i, \lambda_i)$ that enable stability analysis, iteration bounds, and variance dynamics (Propositions 1 and 2, Corollary 1).

Complementarity. KT can inform design by supplying calibrated state estimates or difficulty priors that modulate $F_{i,t}$ (e.g., stricter scaffolding and targeted exemplars when KT indicates fragile mastery). This preserves analytical tractability while exploiting KT’s sequential inference for policy targeting. Methodologically, randomized and longitudinal designs in AIED provide complementary strategies for estimating intervention effects and validating iterative improvement [5]. In our empirical study (Section 3 and Section 4), we instantiate this foundation with six iterations and report both mean trajectories and dispersion, together with parameter estimates that connect feedback quality to the pace and equity of learning.

2.6. Comparative-Education Perspective

From a comparative-education viewpoint, the algorithmic framing of assessment raises cross-system questions about adoption, policy, and equity: how do institutions with different curricula, languages, and governance structures instrument feedback loops; how is feedback quality ensured across contexts; and which safeguards (privacy, auditability, and accessibility) condition transferability at scale? Because the models here are interpretable and rely on auditable quantities ($S_{i,t}$, $F_{i,t}$, and dispersion $\sigma_t$), they are amenable to standardized reporting across institutions and countries, facilitating international comparisons and meta-analyses that move beyond single-shot accuracy to longitudinal, equity-aware outcomes.

By framing assessment and feedback as a discrete-time algorithm with explicit update mechanics, we connect pedagogical intuition to tools from dynamical systems and stochastic approximation. This yields actionable parameters ($\eta_i$, $\lambda_i$), interpretable stability conditions ($0 < \lambda_i F_{i,t} < 2$), iteration bounds (Proposition 1), and cohort-level predictions (variance contraction; Corollary 1) that inform the design of scalable, equity-aware feedback in digitally transformed higher education, while making explicit the mapping between iterations and deliverables to avoid ambiguity in empirical interpretations.

3. Materials and Methods

3.1. Overview and Study Design

We conducted a longitudinal observational study with six consecutive evaluation iterations ($t = 1, \dots, 6$) to capture within-student learning dynamics under AI-supported assessment. The cohort comprised students enrolled in a Concurrent Programming course, selected for its sequential and cumulative competency development. Each iteration involved solving practical programming tasks, assigning a calibrated score, and delivering personalized, AI-assisted feedback. Scores were defined on a fixed scale and rescaled to $[0,1]$ for modeling, with target $S^{*} = 1$. Feedback quality was operationalized as $F_{i,t} \in [0,1]$ (Section 3.3).

Each iteration t corresponds to a distinct, syllabus-aligned deliverable (six core tasks across the term). Students submit the task for iteration t, receive the score $S_{i,t}$ and AI-assisted feedback of quality $F_{i,t}$, and then revise their approach and code base for the next task at $t+1$. Within-task micro-revisions (e.g., rerunning tests while drafting) may occur but do not generate an additional score within the same iteration; the next evaluated score is $S_{i,t+1}$ at the subsequent task. This design ensures comparability across iterations and aligns with course pacing.

The cap of six iterations is set by the course syllabus, which comprises six major programming tasks (modules) that build cumulatively (e.g., synchronization, deadlocks, thread-safe data structures, concurrent patterns, and performance). The platform can support more frequent micro-cycles, but for this study we bound t to the six course deliverables to preserve instructional fidelity and cohort comparability.

The primary outcome is the per-iteration change in scaled performance, $\Delta S_{i,t} = S_{i,t+1} - S_{i,t}$, and its dependence on feedback quality $F_{i,t}$ (Equations (3) and (4)). Secondary outcomes include (i) the relative gain $G_{i,t}$ (Equation (6)), (ii) cohort dispersion $\sigma_t$ (SD of $S_{i,t}$) and tail summaries (Q10/Q90), (iii) interpretable parameters $(\eta_i, \lambda_i)$ linking feedback quality to the pace and equity of learning, and (iv) exploratory process/provenance signals (planning artifacts, tool-usage traces, testing outcomes, evidence citations, revision deltas) aligned with the broader pedagogical aims of AI-mediated assessment (see Section 3.4).

3.2. System Architecture for Feedback Generation

The system integrates three components under a discrete-time orchestration loop:

Agentic RAG feedback engine. A retrieval-augmented generation pipeline with agentic capabilities (planning, tool use, and self-critique) that produces course-aligned, evidence-grounded feedback tailored to each submission. Retrieval uses a top-k dense index over course artifacts; evidence citations are embedded in the feedback for auditability.

Connector/middleware layer (MCP-like). A standardized, read-only access layer brokering secure connections to student code and tests, grading rubrics, curated exemplars, and course documentation. The layer logs evidence references, model/version, and latency for traceability.

Auto-evaluation module. Static/dynamic analyses plus unit/integration tests yield diagnostics and a preliminary score; salient findings are passed as structured signals to contextualize feedback generation.

All components operate within an auditable controller that records inputs/outputs per iteration and enforces privacy-preserving pseudonymization before analytics.

We implement “agentic RAG” as a sequence of controller-enforced actions (not opaque reasoning traces):

Planning (rubric-aligned task decomposition): the controller compiles a plan with sections (e.g., correctness, concurrency hazards, and style) and required deliverables (fix-steps and code pointers).

Tool use (beyond retrieval), with logged inputs/outputs and versions: (i) test-suite runner (unit/integration); (ii) static/dynamic analyzers (e.g., race/deadlock detectors); (iii) a rubric-check microservice that scores coverage/level; (iv) a citation formatter that binds evidence IDs into the feedback.

Self-critique (checklist-based verification): a bounded one-round verifier checks (1) evidence coverage, (2) rubric alignment, (3) actionability (step-wise fixes), and (4) clarity/consistency. Failing checks trigger exactly one constrained revision.

S (system): “You are a feedback agent for Concurrent Programming. Use only auditable actions; cite evidence IDs.”

C (context):

A1 (plan): make_plan(sections=[correctness, concurrency, style], deliverables=[fix-steps, code-links])

A2 (retrieve): retrieve_topk(k) → {evidence IDs}

T1 (tests): run_tests() → {failing: test_3}

T2 (analysis): static_analyze(x) → {data_race: line 84}

T3 (rubric): rubric_check(draft) → {coverage:0.92}

G1 (draft): generate_feedback(context+evidence) → draft_v1

V1 (self-critique): checklist(draft_v1) → {missing code pointer} ⇒ revise() → draft_v2

F (finalize): attach_citations(draft_v2, evidence_ids) ; log {tool calls, versions, latencies}
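The action sequence above can be mirrored by a small controller that calls each tool by name and logs every call. The following Python sketch is a minimal illustration, not the platform's implementation: the `Controller` class, the stub tools, and their return values are all hypothetical, and the rubric check is placed after drafting here as an assumption, since it takes the draft as input.

```python
import time


class Controller:
    """Runs a fixed sequence of named actions and logs each call for auditing."""

    def __init__(self, tools):
        self.tools = tools
        self.log = []

    def call(self, step, name, *args):
        """Invoke one tool and record its inputs, output, and latency."""
        start = time.perf_counter()
        result = self.tools[name](*args)
        self.log.append({
            "step": step,
            "tool": name,
            "inputs": args,
            "output": result,
            "latency_s": time.perf_counter() - start,
        })
        return result

    def run(self, submission):
        plan = self.call("A1", "make_plan", ["correctness", "concurrency", "style"])
        evidence = self.call("A2", "retrieve_topk", submission, 3)
        tests = self.call("T1", "run_tests", submission)
        analysis = self.call("T2", "static_analyze", submission)
        draft = self.call("G1", "generate_feedback", plan, evidence, tests, analysis)
        self.call("T3", "rubric_check", draft)
        issues = self.call("V1", "checklist", draft)
        if issues:  # failing checks trigger exactly one constrained revision
            draft = self.call("V1", "revise", draft, issues)
        return self.call("F", "attach_citations", draft, evidence)


# Stub tools standing in for the real services (all hypothetical).
stub_tools = {
    "make_plan": lambda sections: {"sections": sections},
    "retrieve_topk": lambda code, k: [f"ev-{i}" for i in range(1, k + 1)],
    "run_tests": lambda code: {"failing": ["test_3"]},
    "static_analyze": lambda code: {"data_race": 84},
    "generate_feedback": lambda plan, ev, tests, analysis: "Fix the data race.",
    "rubric_check": lambda draft: {"coverage": 0.92},
    "checklist": lambda draft: [] if "line" in draft else ["missing code pointer"],
    "revise": lambda draft, issues: draft + " See line 84.",
    "attach_citations": lambda draft, ev: draft + " [" + ", ".join(ev) + "]",
}

controller = Controller(stub_tools)
feedback = controller.run("def worker(): ...")
```

Keeping the sequence in ordinary control flow, rather than in model-generated reasoning, is what makes the single-revision bound and the audit log straightforward to enforce in a design like this.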

3.3. Dynamic Assessment Cycle

Each cycle ($t = 1, \dots, 6$) followed five phases:

Submission. Students solved a syllabus-aligned concurrent-programming task.

Auto-evaluation. The system executed the test suite and static/dynamic checks to compute the score $S_{i,t}$ and extract diagnostics $d_{i,t}$.

Personalized feedback (Agentic RAG). Detailed, actionable comments grounded in the submission, rubric, and retrieved evidence were generated and delivered together with $S_{i,t}$.

Feedback Quality Index. Each feedback instance was rated on Accuracy, Relevance, Clarity, and Actionability (5-point scale); the mean was linearly normalized to $[0,1]$ to form $F_{i,t}$. A stratified subsample was double-rated for reliability (Cohen’s $\kappa$) and internal consistency (Cronbach’s $\alpha$).

Revision. Students incorporated the feedback to prepare the next submission. Operationally, feedback from $t-1$ informs the change observed at t.

There is no additional score issued immediately after Step 5 within the same iteration. The next evaluated score is $S_{i,t+1}$, computed at the start of the subsequent iteration (Step 2 of $t+1$). Internally, tests/analyzers may be re-run while drafting feedback (tool-use traces), but these do not constitute interim grading events.
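The Feedback Quality Index and the inter-rater check from the phases above can be sketched in a few lines. This example assumes that the 5-point scale runs from 1 to 5 and that "linearly normalized" means mapping the mean rating from that range onto the unit interval; it also uses unweighted Cohen's kappa. The function names and the sample ratings are hypothetical.

```python
from collections import Counter
from statistics import mean

CRITERIA = ("accuracy", "relevance", "clarity", "actionability")


def feedback_quality_index(ratings, low=1, high=5):
    """Mean of the four criterion ratings, linearly rescaled from [low, high] to [0, 1]."""
    values = [ratings[c] for c in CRITERIA]
    if any(not low <= v <= high for v in values):
        raise ValueError("ratings must lie on the rubric scale")
    return (mean(values) - low) / (high - low)


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1.0 - expected)


fqi = feedback_quality_index(
    {"accuracy": 5, "relevance": 4, "clarity": 4, "actionability": 3}
)
kappa = cohens_kappa([5, 4, 4, 3, 5, 2], [5, 4, 3, 3, 5, 2])
```

For ordinal rubric levels a weighted kappa may be more appropriate; the unweighted form is used here only to keep the sketch short.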

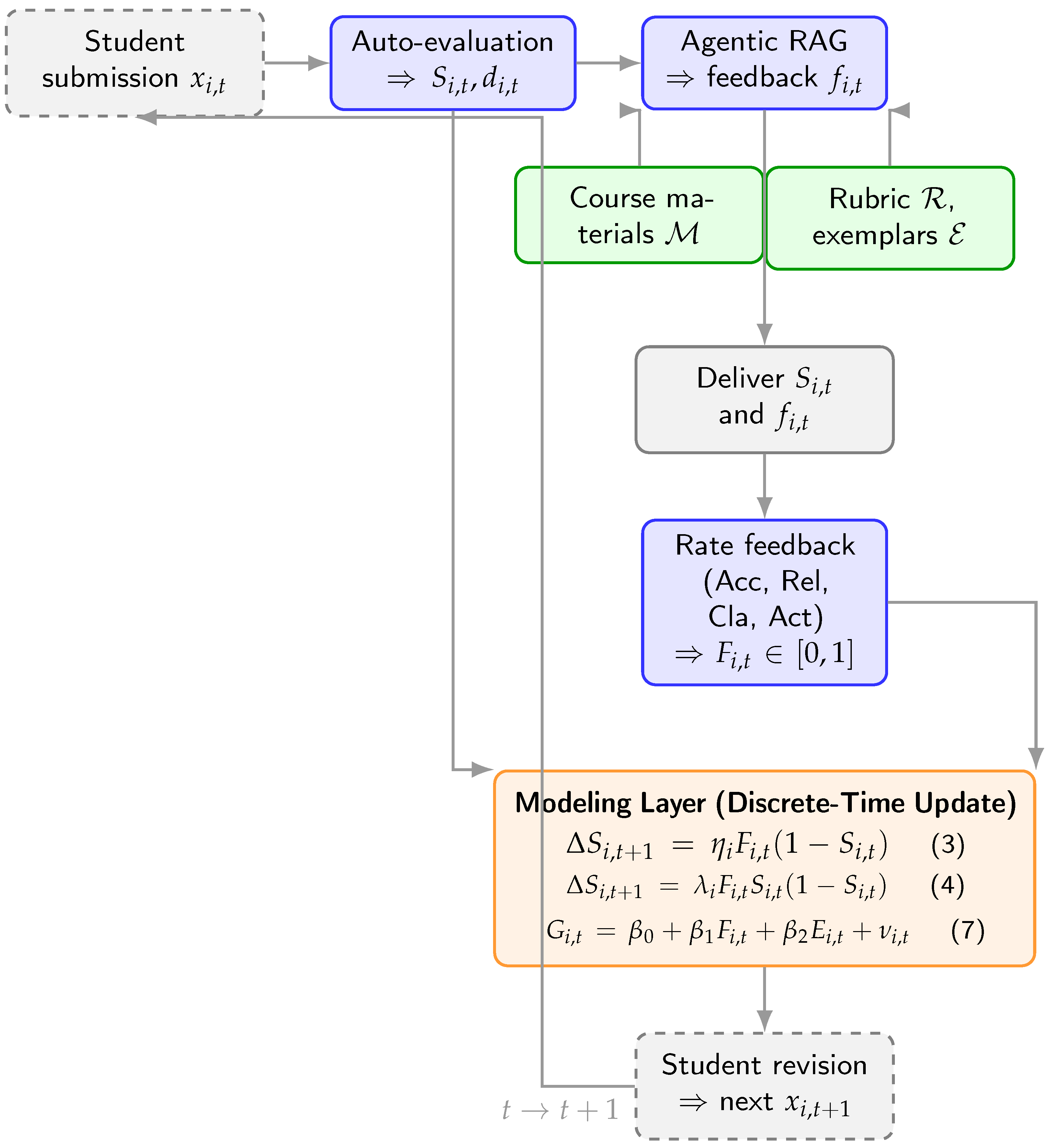

In Figure 2, the discrete-time assessment–feedback cycle couples student submissions, auto-evaluation, and agentic RAG feedback with the modeling layer defined in Equations (3)–(7).

3.4. Process and Provenance Signals and Epistemic Alignment

To address the broader educational question of what to value and how to assess in AI-rich contexts, we treat process and provenance as first-class signals that the platform natively logs:

Planning artifacts: rubric-aligned decomposition and rationale produced at action A1.

Tool-usage telemetry: calls and outcomes for T1–T3 (tests, static/dynamic analyzers, and rubric check), with versions and inputs/outputs.

Testing strategy and outcomes: coverage/edge cases; failing-to-passing transitions across iterations.

Evidence citations: retrieved sources bound into feedback at F, enabling traceability.

Revision deltas: concrete changes between $t$ and $t+1$ (files/functions touched; error classes resolved).

These signals can (i) be reported descriptively alongside $S_{i,t}$ and $F_{i,t}$, (ii) enter Equation (7) as covariates $P_{i,t}$ or as moderators via interactions $F_{i,t} \times P_{i,t}$, and (iii) inform defensible assessment formats (trace-based walkthroughs; oral/code defenses; revision-under-constraints) without sacrificing comparability. A concise epistemic alignment matrix mapping constructs to rubric rows and platform traces is provided in Appendix A.1 and referenced in the Discussion (Section 5.4).

In addition to the aggregate FQI, we pre-specify criterion-level summaries (accuracy, relevance, clarity, and actionability) by task and by iteration, as well as distributions of per-criterion ratings $r_{i,t,c}$. This supports analyses such as: “Given that $F_{i,t}$ is high, which criteria most frequently reach level 5, and in which tasks?” Corresponding summaries are reported in the Results (Section 4); the criterion-by-task breakdowns are compiled in Appendix A.3 and discussed in Section 4.

3.5. Model Specifications

We formalize three complementary formulations that capture how iterative feedback influences performance trajectories. The definitions are stated here in full and are referenced throughout by equation number. Importantly, these are policy-level update rules (design of the feedback loop), consistent with the theoretical results on iteration complexity and stability.

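(1) Linear difference model.

$$S_{i,t+1} - S_{i,t} = \eta_i F_{i,t}\,(1 - S_{i,t}) + \varepsilon_{i,t}, \tag{3}$$

where $S_{i,t}$ is the scaled score, $F_{i,t}$ is feedback quality, $\eta_i$ encodes individual sensitivity to feedback, and $\varepsilon_{i,t}$ captures unexplained variation. Improvement is proportional to the gap-to-target and modulated by feedback quality and learner responsiveness.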

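(2) Logistic convergence model.

$$S_{i,t+1} = S_{i,t} + \lambda_i F_{i,t}\,S_{i,t}\,(1 - S_{i,t}), \tag{4}$$

with $\lambda_i$ governing how feedback accelerates convergence. In multiplicative-gap form,

$$1 - S_{i,t+1} = (1 - S_{i,t})\,(1 - \lambda_i F_{i,t} S_{i,t}), \tag{5}$$

which makes explicit that higher-quality feedback contracts the remaining gap faster. A useful rule-of-thumb is the gap half-life, obtained by treating the contraction factor as locally constant:

$$t_{1/2} \approx \frac{\ln 2}{-\ln(1 - \lambda_i F_{i,t} S_{i,t})},$$

mapping estimated $\lambda_i$ and observed $F_{i,t}$ to expected pacing.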

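(3) Relative-gain model. We define the per-iteration fraction of the remaining gap that is closed:

$$G_{i,t} = \frac{S_{i,t+1} - S_{i,t}}{1 - S_{i,t}}, \tag{6}$$

and regress

$$G_{i,t} = \beta_0 + \beta_1 F_{i,t} + \beta_2 E_{i,t} + \gamma_t + u_i + \epsilon_{i,t}, \tag{7}$$

where $E_{i,t}$ optionally captures effort/time-on-task; $\gamma_t$ are iteration fixed effects (time trends and task difficulty); $u_i$ is a student-level random intercept; and $\epsilon_{i,t}$ is an error term. The coefficient $\beta_1$ estimates the average marginal effect of feedback quality on progress per iteration, net of temporal and difficulty factors. In sensitivity analyses, we optionally augment the specification with a process/provenance vector $P_{i,t}$ and the interaction term $F_{i,t} \times P_{i,t}$.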

3.6. Identification Strategy, Estimation, and Diagnostics

Given the observational design, we mitigate confounding via (i) within-student modeling (student random intercepts; cluster-robust inference), (ii) iteration fixed effects to partial out global time trends and task difficulty, and (iii) optional effort covariates where available. In sensitivity checks, we add lagged outcomes (where appropriate) and verify that inferences on $\beta_1$ remain directionally stable. Where recorded, we include $P_{i,t}$ (process/provenance) as covariates or moderators.

Equations (3) and (4) are estimated by nonlinear least squares with student-level random intercepts (and random slopes where identifiable), using cluster-robust standard errors at the student level. Equation (7) is fit as a linear mixed model with random intercepts by student and fixed effects $\gamma_t$. Goodness-of-fit is summarized with RMSE/MAE (levels) and $R^2$ (gains); calibration is assessed via observed vs. predicted trajectories. Model comparison uses AIC/BIC and out-of-sample K-fold cross-validation.

We report 95% confidence intervals and adjust p-values using the Benjamini–Hochberg procedure where applicable. Robustness checks include the following: (i) trimming top/bottom changes, (ii) re-estimation with Huber loss, (iii) alternative weighting schemes in $F_{i,t}$ (e.g., upweighting Accuracy/Actionability), and (iv) a placebo timing test regressing $G_{i,t}$ on future $F_{i,t+1}$ to probe reverse-timing artefacts (expected null).
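For readers unfamiliar with the Benjamini–Hochberg adjustment mentioned above, a minimal stdlib sketch of the step-up procedure follows. The function name and the example p-values are hypothetical; in practice an established routine (for instance in StatsModels) would normally be preferred.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone in rank.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical raw p-values from four tests.
adjusted = benjamini_hochberg([0.01, 0.04, 0.03, 0.20])
```

Each adjusted value is compared against the chosen false-discovery rate; an adjusted value never falls below its raw p-value.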

We normalize $S_{i,t}$ and $F_{i,t}$ to the $[0,1]$ scale. When a value is missing for a single iteration, we apply last-observation-carried-forward (LOCF) and conduct sensitivity checks using complete cases and within-student mean imputation. Students with consecutive missing iterations are excluded from model-based analyses but retained in descriptive summaries. To contextualize inference given the moderate cohort size, per-iteration participation counts $n_t$ are reported in Results (Section 4).
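The missing-data handling described above can be sketched as follows. The example assumes missing values are encoded as `None`, fills only isolated gaps by carrying the previous observation forward, and flags students for exclusion when their longest run of missing iterations exceeds a threshold. The `max_run` parameter is a hypothetical stand-in, since the exact exclusion threshold is not recoverable from the text.

```python
def locf_single_gaps(series):
    """Fill isolated missing values (None) with the previous observation."""
    filled = list(series)
    for t, value in enumerate(filled):
        if value is not None:
            continue
        prev_ok = t > 0 and series[t - 1] is not None
        next_missing = t + 1 < len(series) and series[t + 1] is None
        if prev_ok and not next_missing:
            filled[t] = series[t - 1]
    return filled


def longest_missing_run(series):
    """Length of the longest run of consecutive missing values."""
    longest = current = 0
    for value in series:
        current = current + 1 if value is None else 0
        longest = max(longest, current)
    return longest


def keep_for_modeling(series, max_run=1):
    """Exclusion rule sketch: drop students whose missing run exceeds max_run."""
    return longest_missing_run(series) <= max_run


# Hypothetical six-iteration record with one isolated gap and one two-step gap.
scores = [0.4, None, 0.6, 0.7, None, None]
filled = locf_single_gaps(scores)
```

Runs of two or more missing iterations are left unfilled on purpose, so that the exclusion rule rather than imputation decides how those students enter the model-based analyses.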

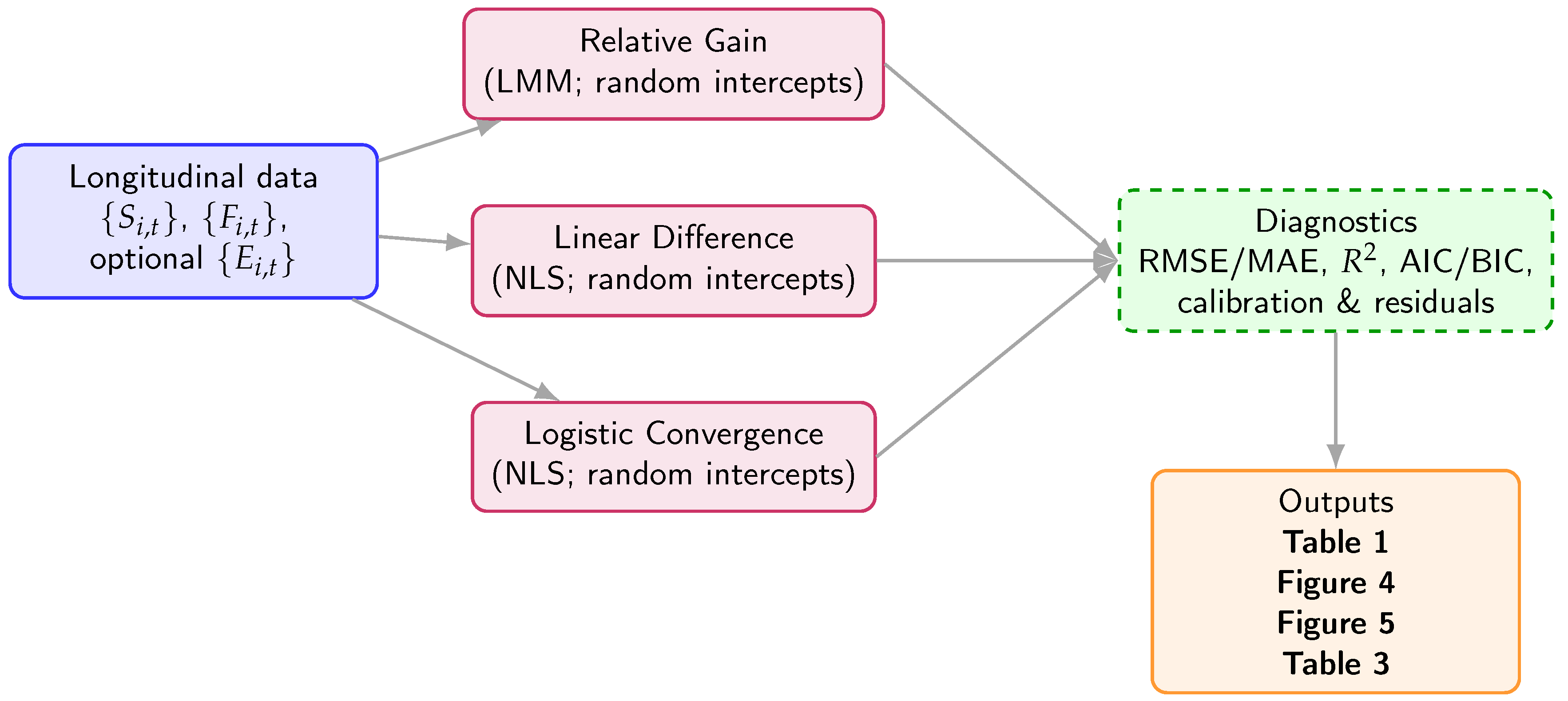

In Figure 3, we organize the estimation workflow from longitudinal inputs to model fitting, diagnostics, and study outputs, matching the identification and validation procedures detailed in this section.

3.7. Course AI-Use Policy and Disclosure

Students received an explicit course policy specifying permissible AI assistance (e.g., debugging hints, explanation of diagnostics, literature lookup) and requiring disclosure of AI use in submissions; the platform’s controller logs (planning artifacts, tool calls, and citations) support provenance checks without penalizing legitimate assistance. Self-reports were collected as optional meta-data and may be cross-checked with telemetry for plausibility.

3.8. Threats to Validity and Mitigations

Internal validity. Without randomized assignment of feedback pathways, causal claims must remain cautious. We partially address confounding via within-student modeling, iteration fixed effects (time/difficulty), and sensitivity analyses (lagged outcomes; trimming; Huber). Practice and ceiling effects are explicitly modeled by the gap-to-target terms in Equations (3) and (4).

Construct validity. The Feedback Quality Index aggregates four criteria; we report inter-rater agreement (Cohen’s κ) and internal consistency (Cronbach’s α) in Section 4. Calibration plots and residual diagnostics are used to check score comparability across iterations. In AI-rich settings, potential construct shift is mitigated by process/provenance logging (plan, tests, analyzers, citations, and revision deltas) and disclosure (Section 3.7), which align observed outcomes with epistemic aims.

External validity. Results originate from one course and one institution with a moderate cohort size. Transferability to other disciplines and contexts requires multi-site replication (see Discussion). Equity-sensitive outcomes (dispersion, tails) are included to facilitate cross-context comparisons.

3.9. Software, Versioning, and Reproducibility

Analyses were conducted in Python 3.12 (NumPy, SciPy, StatsModels). We record random seeds, dependency versions, and configuration files (YAML) and export an environment lockfile for full reproducibility. Estimation notebooks reproduce all tables/figures and are available upon request; audit logs include model/version identifiers and retrieval evidence IDs.

3.10. Data and Code Availability

The dataset (scores, feedback-quality indices, and model-ready covariates) is available from the corresponding author upon reasonable request, subject to institutional policies and anonymization standards. Model scripts and configuration files are shared under an academic/research license upon request.

Appendix A.1 documents the epistemic alignment matrix (constructs ↔ rubric rows ↔ platform traces).

3.11. Statement on Generative AI Use

During manuscript preparation, ChatGPT (OpenAI, 2025 version) was used exclusively for language editing and stylistic reorganization. All technical content, analyses, and results were produced, verified, and are the sole responsibility of the authors.

3.12. Ethics

Participation took place within a regular course under informed consent and full pseudonymization prior to analysis. The study was approved by the Research Ethics Committee of Universidad de Jaén (Spain), approval code JUL.22/4-LÍNEA. Formal statements appear in the back matter (Institutional Review Board Statement, Informed Consent Statement).

3.13. Algorithmic Specification and Visual Summary

In what follows, we provide an operational specification of the workflow. Algorithm 1 details the step-by-step iterative dynamic assessment cycle with agentic RAG, from submission intake and automated evaluation to evidence-grounded feedback delivery and quality rating across six iterations. Algorithm 2 complements this by formalizing the computation of the Feedback Quality Index from criterion-level ratings and by quantifying reliability via linear-weighted Cohen’s κ (on a 20% double-rated subsample) and Cronbach’s α (across the four criteria). Together, these specifications capture both the process layer (workflow) and the measurement layer (scoring and reliability) required to reproduce our analyses.

| Algorithm 1. Iterative dynamic assessment cycle with agentic RAG |
| --- |
| Require: course materials, rubric, exemplars, test suite; cohort of students |
| 1: Initialize connectors (MCP-like), audit logs, and pseudonymization |
| 2: for t = 1 to 6 do ▹ Discrete-time learning loop |
| 3: for each student i in the cohort do |
| 4: Receive submission |
| 5: Auto-evaluation: run the test suite + static/dynamic checks ⇒ diagnostics; compute the score |
| 6: Build context |
| 7: Agentic RAG: retrieve top-k evidence; draft → self-critique → finalize feedback |
| 8: Deliver the score and feedback to student i |
| 9: Feedback Quality Rating: rate {accuracy, relevance, clarity, actionability} on 1–5 |
| 10: Normalize/aggregate ⇒ Feedback Quality Index; (optional) collect effort/time |
| 11: (Optional inference) update predictions via Equations (3), (4), (7) |
| 12: Log the iteration record with pseudonym IDs |
| 13: end for |
| 14: end for |
| 15: Output: longitudinal dataset (scores, feedback-quality indices, optional effort); evidence/audit logs |

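| Algorithm 2. Computation of the Feedback Quality Index and reliability metrics (Cohen’s κ, Cronbach’s α) |
| --- |
| Require: feedback instances with rubric ratings on each criterion c ∈ {accuracy, relevance, clarity, actionability}; 20% double-rated subsample |
| Ensure: Feedback Quality Index per instance; Cohen’s κ on the double-rated subsample; Cronbach’s α across criteria |
| 1: for each feedback instance do |
| 2: Handle missing ratings: if any are missing, impute with the within-iteration criterion mean |
| 3: for each criterion c do |
| 4: Rescale the 1–5 rating on criterion c ▹ Normalize to [0, 1] |
| 5: end for |
| 6: Aggregate: average the four normalized criteria ▹ Equal weights; alternative weights in Section 3.6 |
| 7: end for |
| 8: Inter-rater agreement (κ): compute linear-weighted Cohen’s κ on the double-rated subsample |
| 9: Internal consistency (α): with four criteria, compute Cronbach’s α |
| 10: Outputs: Feedback Quality Index for modeling; κ and α reported in Results |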
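The measurement layer of Algorithm 2 can be sketched in a few lines of plain Python. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, the normalization assumes the usual min-max mapping (r − 1)/4 from the 1–5 scale to [0, 1], κ uses linear disagreement weights |i − j|/(k − 1), and α uses the standard variance-ratio formula with sample variances.

```python
from statistics import mean, variance

CRITERIA = ("accuracy", "relevance", "clarity", "actionability")


def feedback_quality_index(ratings):
    """Equal-weight index in [0, 1] from 1-5 ratings on the four criteria."""
    return mean((ratings[c] - 1) / 4 for c in CRITERIA)


def linear_weighted_kappa(rater_a, rater_b, levels=(1, 2, 3, 4, 5)):
    """Linear-weighted Cohen's kappa for two raters on an ordinal scale."""
    n, k = len(rater_a), len(levels)
    pos = {level: j for j, level in enumerate(levels)}
    # Observed mean disagreement, scaled to [0, 1].
    observed = sum(abs(pos[a] - pos[b]) for a, b in zip(rater_a, rater_b))
    observed /= n * (k - 1)
    # Disagreement expected by chance from the two marginal distributions.
    pa = [rater_a.count(level) / n for level in levels]
    pb = [rater_b.count(level) / n for level in levels]
    expected = sum(pa[i] * pb[j] * abs(i - j)
                   for i in range(k) for j in range(k)) / (k - 1)
    if expected == 0:
        raise ValueError("kappa is undefined when both raters are constant")
    return 1 - observed / expected


def cronbach_alpha(rows):
    """Cronbach's alpha; each row holds one instance's item scores."""
    k = len(rows[0])
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Illustrative ratings, not study data.
    print(feedback_quality_index(
        {"accuracy": 5, "relevance": 4, "clarity": 3, "actionability": 4}))
```

Alternative criterion weights (Section 3.6) would replace the plain mean with a weighted mean whose weights sum to one.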

4. Results

This section presents quantitative evidence for the effectiveness of the AI-supported dynamic-assessment and iterative-feedback system. We first report model-based parameter estimates for the three formulations (linear difference, logistic convergence, and relative gain;

Table 1). We then describe cohort-level dynamics across six iterations—means and dispersion (

Figure 4) and variance contraction (

Figure 5)—with numerical companions in

Table 2 and per-iteration participation counts in

Table 3; the repeated-measures ANOVA is summarized in

Table 4. Next, we illustrate heterogeneous responsiveness via simulated individual trajectories (

Figure 6). We subsequently summarize predictive performance (

Table 5) and calibration (

Figure 7). We conclude with placebo-timing tests, sensitivity to missingness, and robustness checks, with additional details compiled in

Appendix A.

Parameter estimates for the three model formulations are summarized in

Table 1.

4.1. Model Fitting, Parameter Estimates, and Effect Sizes

Parameter estimates for the linear-difference, logistic-convergence, and relative-gain models are summarized in

Table 1. All scores used for estimation were normalized to

(with

and

), whereas descriptive figures are presented on the 0–100 scale for readability. Three results stand out and directly support the

policy-level design view:

Interpretable sensitivity to feedback (Proposition 1). The average learning-rate parameter linked to feedback quality is positive and statistically different from zero in the linear-difference model, consistent with geometric gap contraction when .

Stability and pacing (Proposition 2). The logistic model indicates accelerated convergence at higher and satisfies the stability condition across the cohort.

Marginal progress per iteration. The relative-gain model yields a positive , quantifying how improvements in feedback quality increase the fraction of the remaining gap closed at each step.

Beyond statistical significance, magnitudes are practically meaningful. Two interpretable counterfactuals:

Per-step effect at mid-trajectory. At and , the linear-difference model implies an expected gain (i.e., ∼7.7 points on a 0–100 scale). Increasing F by at the same adds (≈1.0 point).

Gap contraction in the logistic view. Using (

5), the multiplicative contraction factor of the residual gap is

. For

and

, the factor is

, i.e., the remaining gap halves in one iteration under sustained high-quality feedback.

Exploratory control for process/provenance. Augmenting the gain model (

7) with the process/provenance vector

(planning artifacts, tool-usage telemetry, test outcomes, evidence citations, revision deltas; see

Section 3.4) and interactions

yields

modest improvements in fit while leaving

positive and statistically significant (

Appendix A.2). This suggests that the effect of feedback quality persists after accounting for how feedback is

produced and used, aligning with a policy-level interpretation.

On the stratified 20% double-rated subsample, linear-weighted Cohen’s

indicated

substantial inter-rater agreement, and Cronbach’s

indicated

high internal consistency:

(95% CI

–

) and

(95% CI

–

). Per-criterion

: Accuracy

(0.73–0.88), Relevance

(0.69–0.85), Clarity

(0.65–0.83), Actionability

(0.67–0.84). These results support the construct validity of

as a predictor in Equations (

3)–(

7).

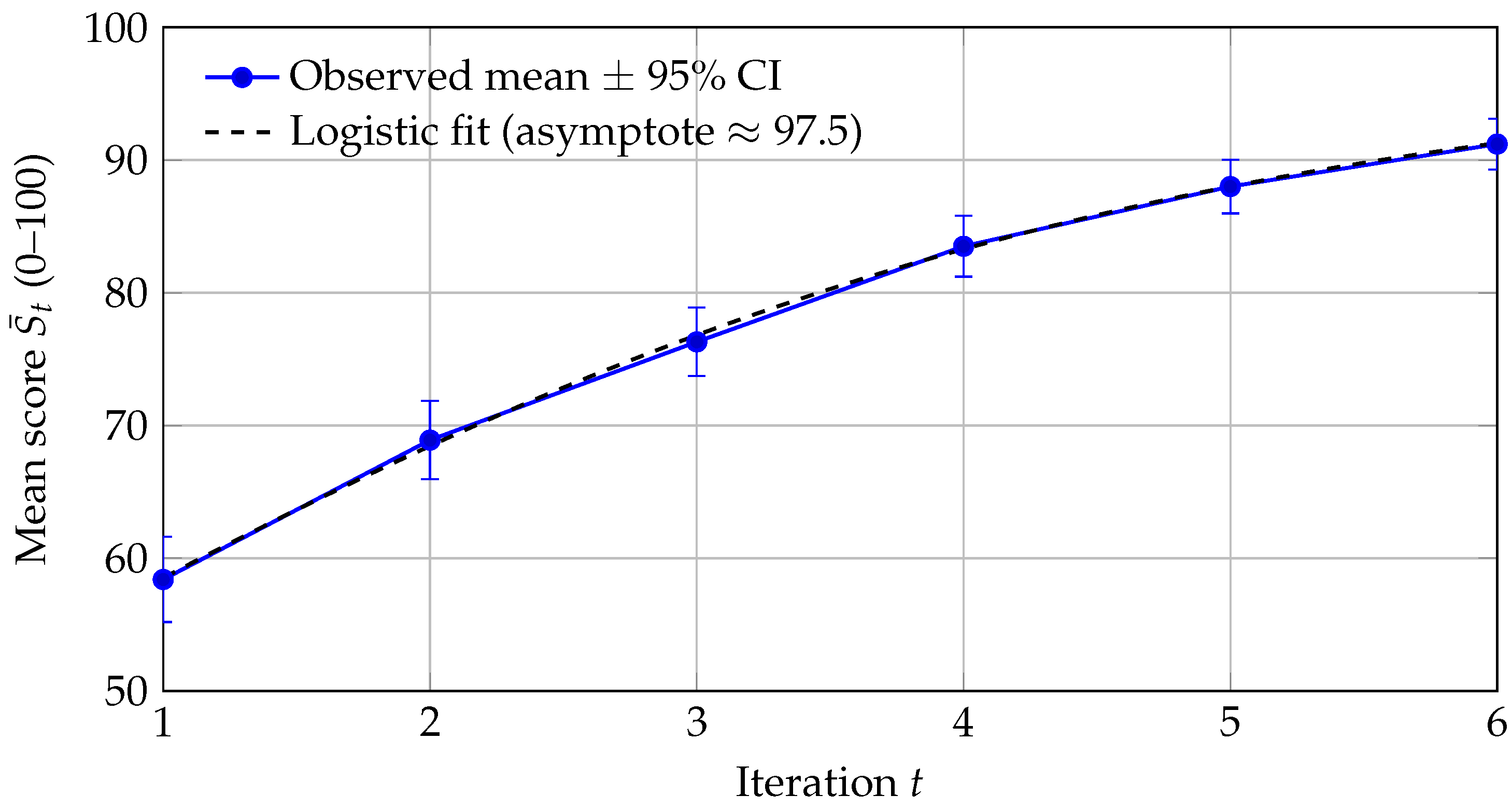

4.2. Cohort Trajectories Across Iterations

Figure 4 displays the average cohort trajectory across the six iterations (0–100 scale). Means increase from

at

to

at

, a

-point absolute gain (

relative to baseline). A shifted-logistic fit (dashed) closely tracks the observed means and suggests an asymptote near

, consistent with diminishing-returns dynamics as the cohort approaches ceiling. The fitted curve (0–100 scale) is

with evaluations at

given by

, which closely match the observed means.

As a numeric companion to

Figure 4,

Table 2 reports per-iteration means, standard deviations, and 95% confidence intervals (

).

Table 3 provides the corresponding participation counts

per iteration.

4.3. Variance Dynamics, Equity Metrics, and Group Homogeneity

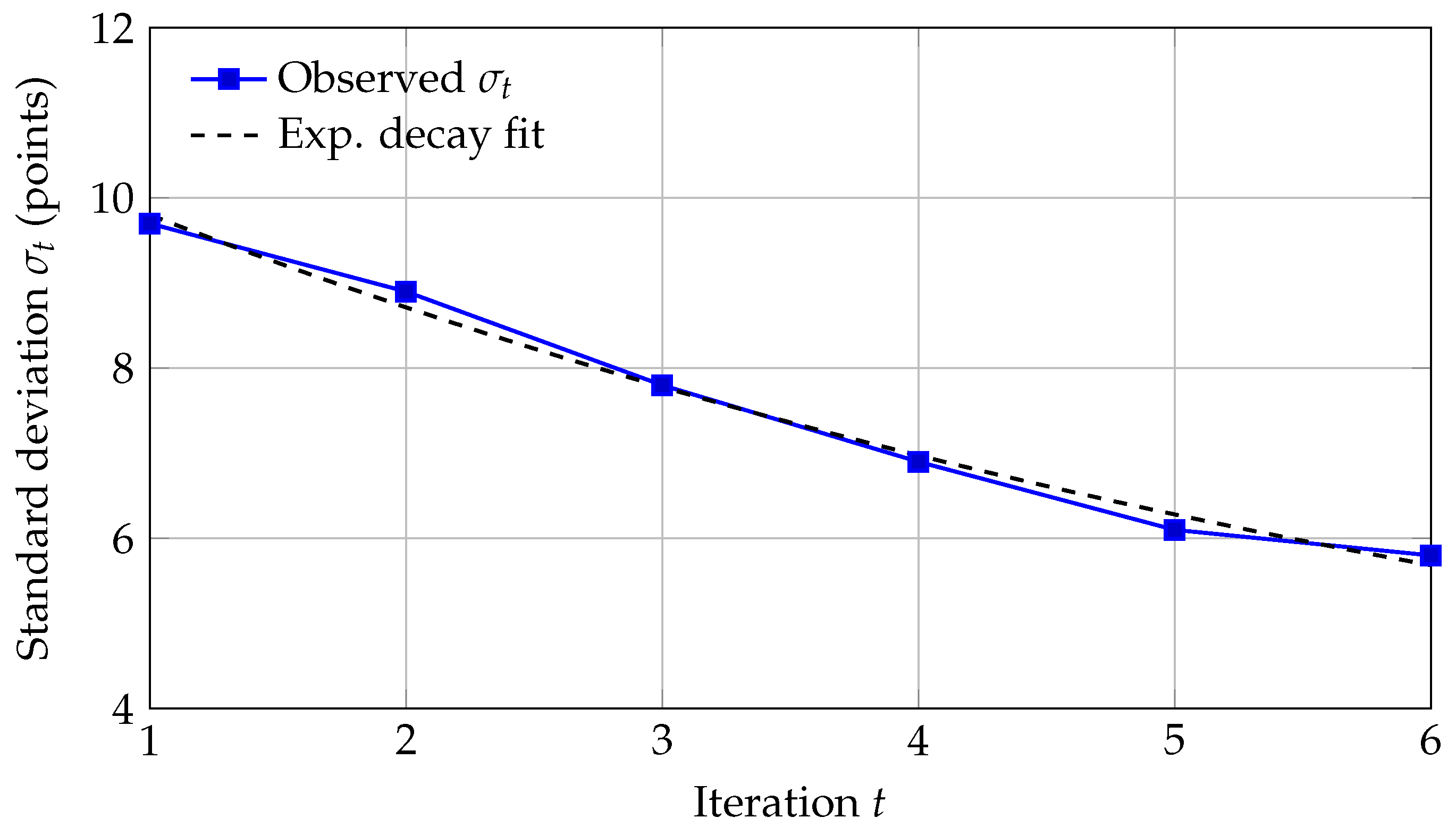

Dispersion shrinks markedly across iterations (

Figure 5): the standard deviation decreases from

at

to

at

(relative change

), and the cohort coefficient of variation drops from

to

. A repeated-measures ANOVA on scores across

t indicates significant within-student change (sphericity violated; Greenhouse–Geisser corrected), and the exponential-decay fit illustrates the variance contraction over time. Analytically, this pattern is consistent with the variance-contraction prediction in Corollary 1 (

Section 2): as

,

contracts toward a low-variance regime.

To gauge equity effects beyond SD, we report two distributional indicators (approximate normality):

Inter-decile spread (Q90–Q10). Using the normal approximation Q90 − Q10 ≈ 2.563 SD, the spread drops from ≈24.9 points at t = 1 to ≈14.9 at t = 6 (a reduction of ≈40%), indicating tighter clustering of outcomes.

Tail risk. The proportion below an 80-point proficiency threshold moves from ≈98.7% at t = 1 (z = 2.23) to ≈2.7% at t = 6 (z = −1.93), evidencing a substantive collapse of the lower tail as feedback cycles progress.

Pedagogically, these patterns align with equity aims: improving feedback quality not only lifts the mean but also narrows within-cohort gaps and shrinks the low-performance tail.
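The two distributional indicators above follow directly from the normal approximation, and the arithmetic can be checked with the standard library. The sketch below uses hypothetical function names; the only inputs taken from the text are the reported spreads (24.9 and 14.9 points) and z-values (2.23 and −1.93).

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def interdecile_spread(sd):
    """Q90 - Q10 of a normal distribution with the given SD."""
    return 2 * STD_NORMAL.inv_cdf(0.90) * sd


def sd_from_spread(spread):
    """Back out the SD implied by a reported inter-decile spread."""
    return spread / (2 * STD_NORMAL.inv_cdf(0.90))


def share_below(threshold, mean, sd):
    """Proportion of a normal cohort scoring below the threshold."""
    return NormalDist(mean, sd).cdf(threshold)


if __name__ == "__main__":
    # Relative change in spread between the first and last iteration.
    print((14.9 - 24.9) / 24.9)
    # Tail shares implied by the reported z-values.
    print(STD_NORMAL.cdf(2.23), STD_NORMAL.cdf(-1.93))
    # SDs implied by the reported spreads under normality.
    print(sd_from_spread(24.9), sd_from_spread(14.9))
```

Because both indicators rest on approximate normality, empirical quantiles and observed proportions remain the preferable summaries when the full score distribution is available.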

The corresponding repeated-measures ANOVA summary appears in

Table 4.

4.4. Criterion- and Task-Level Patterns (Rubric)

Given that the cohort mean exceeds 90 at t = 6, we examine rubric criteria at the close of the cycle. Descriptively, a majority of submissions attain level 5 in at least two of the four criteria (accuracy, relevance, clarity, and actionability). Accuracy and actionability are most frequently at level 5 by t = 6, while clarity and relevance show gains but remain somewhat more task dependent (e.g., tasks emphasizing concurrency patterns invite more concise, targeted explanations than early debugging-focused tasks). Criterion-by-task distributions and exemplars are summarized in Appendix A.3. These patterns align with the observed performance asymptote and with process improvements (planning coverage, testing outcomes, and evidence traceability) reported below, suggesting that high scores at t = 6 reflect both product quality and process competence.

4.5. Epistemic-Alignment and Provenance Signals: Descriptive Outcomes and Exploratory Associations

We now report descriptive outcomes for the

process and provenance signals (

Section 3.4) and their exploratory association with gains, addressing what kinds of knowledge/understanding are being valued and how they are assessed in AI-rich settings:

Planning coverage (rubric-aligned). The fraction of rubric sections explicitly addressed in the agentic plan increased across iterations, indicating growing alignment between feedback structure and targeted competencies.

Tool-usage and testing outcomes. Test coverage and pass rates improved monotonically, while analyzer-detected concurrency issues (e.g., data races and deadlocks) declined; revisions increasingly targeted higher-level refactoring after correctness issues were resolved.

Evidence citations and traceability. The share of feedback instances with bound evidence IDs remained high and grew over time, supporting auditability and explainable guidance rather than opaque suggestions.

Revision deltas. Code diffs show a shift from broad patches early on to focused edits later, consistent with diminishing returns near ceiling (logistic convergence).

Exploratory associations. Adding

to Equation (

7) yields (i) stable, positive

for

; (ii) small but consistent gains in model fit; and (iii) positive interactions for

actionability with

revision deltas and

test outcomes, suggesting that high-quality feedback that is

also acted upon translates into larger relative gains (

Appendix A,

Table A2). A lead-placebo on selected process variables is null (

Appendix A.2), mirroring the timing result for

and supporting temporal precedence. These findings indicate that the system measures—and learners increasingly demonstrate—process-oriented competencies (planning, testing strategies, and evidence use) alongside product performance, directly engaging the broader pedagogical question raised in the Introduction and Discussion.

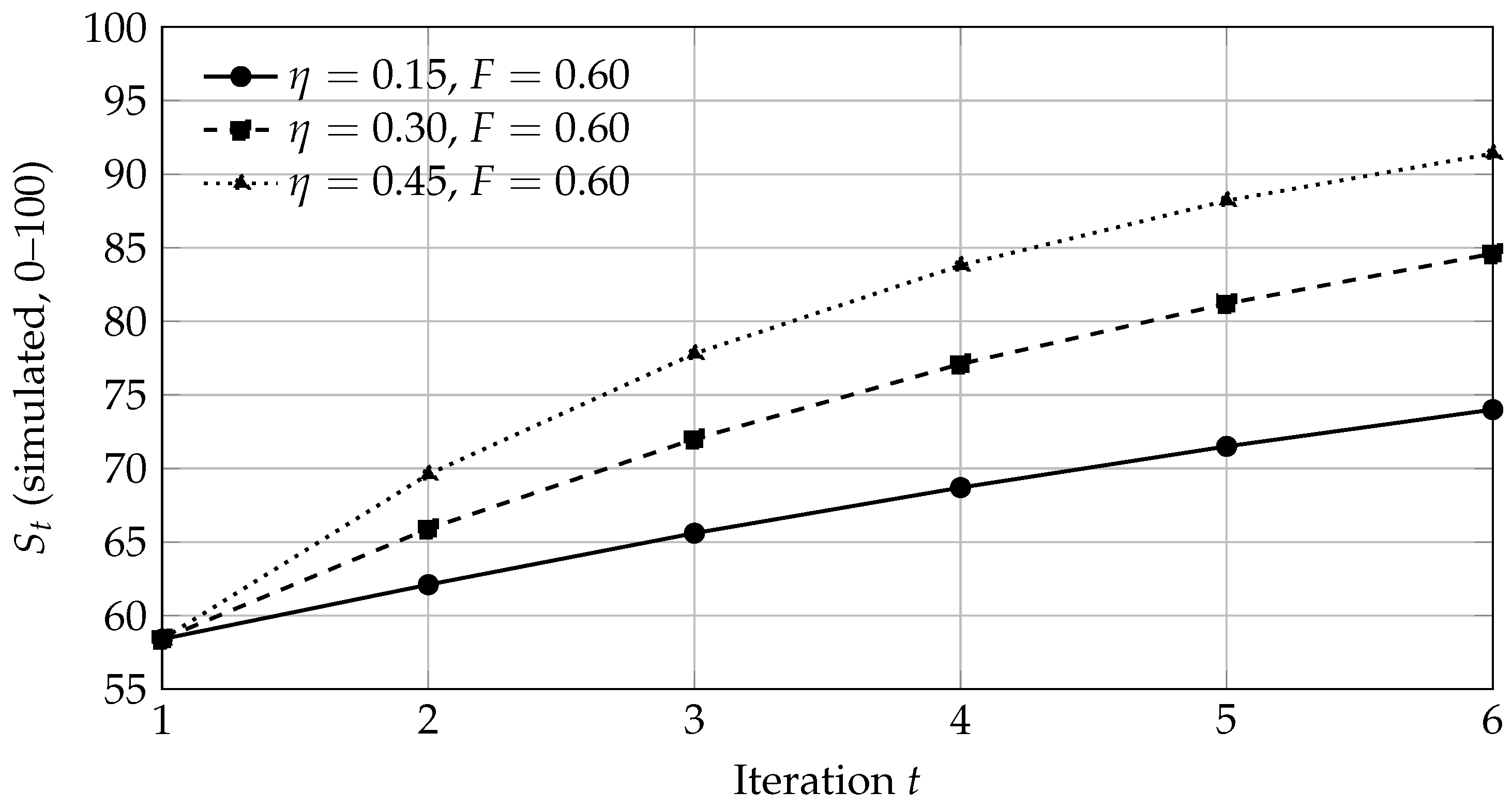

4.6. Individual Trajectories: Heterogeneous Responsiveness

To illustrate heterogeneous responsiveness to feedback,

Figure 6 simulates three trajectories under the linear-difference mechanism for different sensitivities

at a moderate feedback level (

) with initial score

(0–100). Higher

approaches the target faster, while lower

depicts learners who may require improved feedback quality or additional scaffolding. In practice, agentic RAG can be tuned to prioritize actionability/clarity for low-

profiles.
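A simulation of this kind can be sketched as follows. The update rule used here is an assumption made for illustration: the next score equals the current score plus sensitivity × feedback quality × (target − current score), which matches the description of a step size scaled by the gap-to-target but is not a reproduction of Equation (3). All parameter values are hypothetical and are not the ones used for Figure 6.

```python
def simulate_linear_difference(start, target, sensitivity,
                               feedback_quality, steps):
    """Simulate scores under an assumed gap-proportional update rule."""
    scores = [start]
    for _ in range(steps):
        gap = target - scores[-1]
        # Each step closes a fixed fraction of the remaining gap.
        scores.append(scores[-1] + sensitivity * feedback_quality * gap)
    return scores


if __name__ == "__main__":
    # Three hypothetical sensitivity profiles on the normalized [0, 1] scale,
    # five updates linking six iterations.
    for sensitivity in (0.2, 0.5, 0.8):
        path = simulate_linear_difference(
            start=0.5, target=1.0, sensitivity=sensitivity,
            feedback_quality=0.8, steps=5)
        print(sensitivity, [round(100 * s, 1) for s in path])
```

Under this assumed rule the residual gap shrinks by the constant factor 1 − sensitivity × feedback quality at every step, so low-sensitivity profiles can be moved toward the target either by raising feedback quality or by adding scaffolding that raises responsiveness.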

4.7. Model Fit, Cross-Validation, Calibration, Placebo Test, Missingness Sensitivity, and Robustness

Out-of-sample

K-fold cross-validation (

) yields satisfactory predictive performance. For the relative-gain LMM, mean

(SD

) across folds. For the level models (NLS), the linear-difference specification yields RMSE

(SD

) and MAE

(SD

); the logistic-convergence specification yields RMSE

(SD

) and MAE

(SD

). To visualize the heterogeneity implied by these fits, see

Figure 6. Full summaries appear in

Table 5.

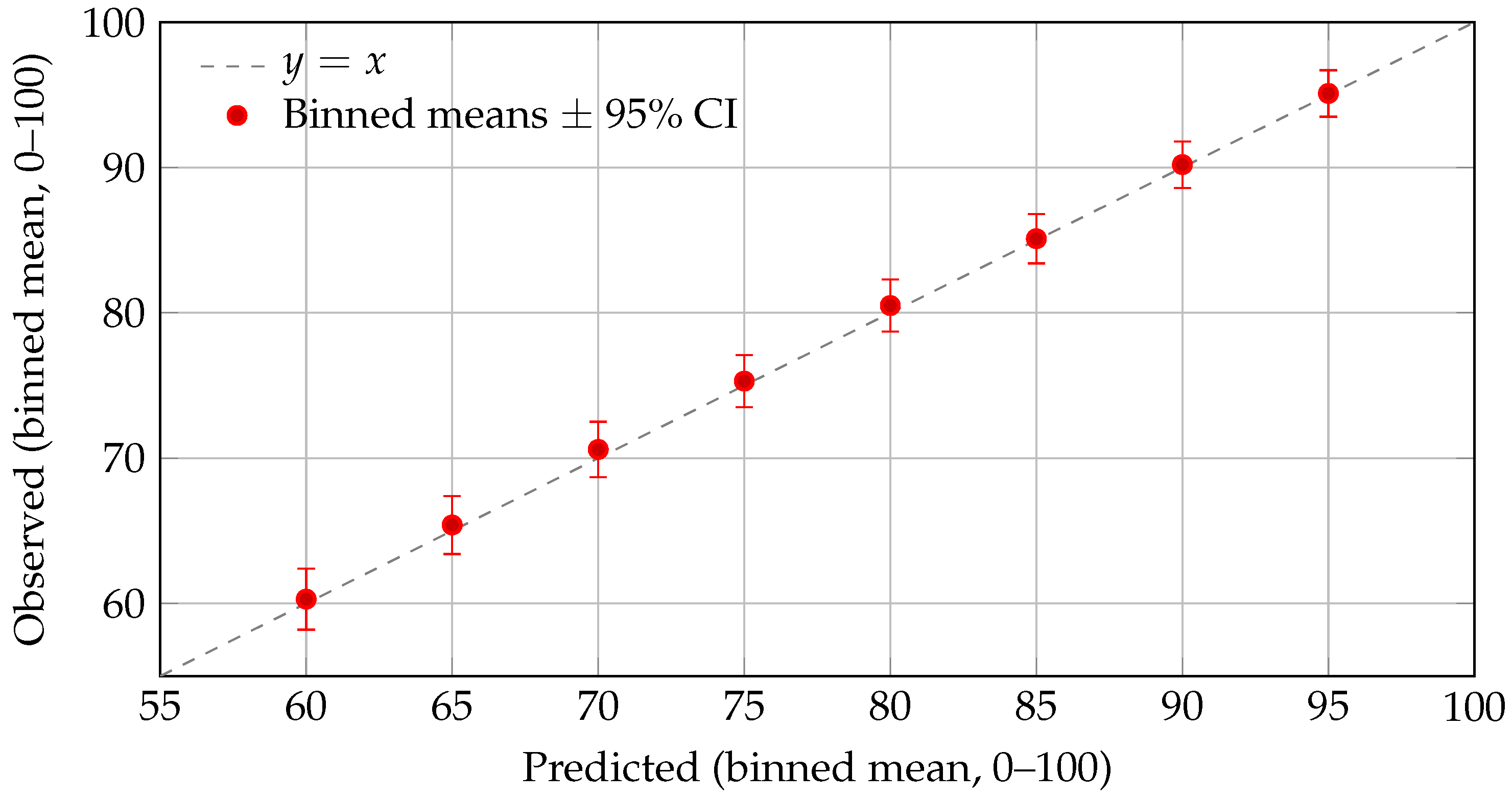

A calibration-by-bins plot using individual predictions (deciles of the predicted score) appears in

Figure 7, showing close alignment to the

line with tight 95% CIs. This complements the cohort-level fit in

Figure 4 and indicates that predictive layers used within the update models are well calibrated across the score range.

To probe reverse timing, we regressed

on

future (same controls as Equation (

7)). The lead coefficient was null as expected:

(95% CI

to

),

—consistent with temporal precedence of feedback quality. A parallel lead-placebo on selected process signals in

was also null (

Appendix A.2).

Results are stable across missing-data strategies: replacing LOCF with complete-case analysis changes by (absolute), and within-student mean imputation changes it by . Leave-one-student-out influence checks vary within , and vary and means within reported CIs, indicating no single-student leverage.

Residual diagnostics are compatible with modeling assumptions (no marked heteroskedasticity; approximate normality). Robustness checks (2.5% trimming, Huber loss, and alternative rubric weights in the Feedback Quality Index, e.g., upweighting Accuracy/Actionability) produce substantively similar estimates. As anticipated, the linear-difference specification is more sensitive to fluctuations in feedback quality than the logistic and gain models.

The joint pattern of (i) higher means, (ii) lower dispersion, (iii) inter-decile spread reduction, and (iv) a significant positive feedback-quality effect suggests that improving feedback quality at scale is associated with faster progress per iteration and more homogeneous trajectories, which is relevant for platform and course design in large cohorts. Empirically, the estimates satisfy the stability condition (Proposition 2), and the reduction in dispersion matches the variance-contraction mechanism of Corollary 1. Additionally, the upward trends in planning coverage, testing outcomes, and evidence traceability indicate that the system not only improves product scores but also cultivates process-oriented competencies that current AI-mediated higher education seeks to value and assess.

5. Discussion: Implications for Assessment in the AI Era

5.1. Principal Findings and Their Meaning

The evidence supports an

algorithmic, policy-level view of learning under iterative, AI-assisted feedback. At the cohort level, the mean score increased from

to

across six iterations while dispersion decreased from

to

points (0–100 scale), as shown in

Figure 4 and

Figure 5 with descriptives in

Table 2. Model estimates in

Table 1 indicate that (i) higher feedback quality is associated with larger next-step gains (

linear-difference:

), (ii) convergence accelerates when feedback quality is high while remaining in the stable regime (

logistic:

with

), and (iii) the fraction of the remaining gap closed per iteration increases with feedback quality (

relative-gain:

). These results are robust: the lead-placebo is null (

, 95% CI

,

), cross-validated

for the gain model averages

and level-model errors are low (

Table 5), and the Feedback Quality Index (FQI) shows

substantial inter-rater agreement and

high internal consistency (

,

). Taken together, the joint pattern—higher means, lower dispersion, and a positive marginal effect of

—suggests that dynamic, evidence-grounded feedback simultaneously raises average performance and promotes more homogeneous progress. Importantly,

is not an abstract proxy: it is

operationally tied to the agentic capabilities enforced by the controller (planning, tool use, and self-critique;

Section 3.2) and to process/provenance signals that improve over time (

Section 4.5).

Each iteration t corresponds to a distinct, syllabus-aligned task (not multiple resubmissions of a single task). The “Revision” phase uses feedback from iteration t to prepare the next submission, which is then evaluated at t + 1 (see Figure 2 and Section 3.3). The number of iterations (six) reflects the six assessment windows embedded in the course schedule. For readability with a small cohort, per-iteration participation counts are reported in Table 3.

5.2. Algorithmic Interpretation and Links to Optimization

The three formulations articulate complementary facets of the assessment–feedback loop. The

linear-difference update (Equation (

3)) behaves like a gradient step with data-driven step size

scaled by the

gap-to-target; early iterations (larger gaps) yield larger absolute gains for a given feedback quality. The

logistic model (Equations (

4) and (

5)) captures diminishing returns near the ceiling and makes explicit how feedback multiplicatively contracts the residual gap; the cohort fit in

Figure 4 is consistent with an asymptote near

. The

relative-gain regression (Equation (

7)) quantifies the marginal effect of feedback quality on progress as a share of the remaining gap, which is useful for targeting: for mid-trajectory states (

), improving

F by

increases the expected one-step gain by ≈1 point on the 0–100 scale.

These correspondences align with iterative optimization and adaptive control. Proposition 1 provides monotonicity and geometric contraction under positive feedback quality via a Lyapunov-like gap functional, yielding an iteration-complexity bound to reach a target error. Proposition 2 ensures local stability around the target under its stability condition, which is met empirically. Corollary 1 predicts cohort-level variance contraction when average feedback quality is positive; this mirrors the observed decline in dispersion and the reduction in inter-decile spread. In short, the update rules are not only predictive but prescriptive: they specify how changes in feedback quality translate into pace (convergence rate) and equity (dispersion).
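To make the iteration-complexity idea concrete, the sketch below counts how many updates are needed before the residual gap falls below a tolerance. It assumes a constant per-step contraction factor 1 − step, where step stands for the product of sensitivity and feedback quality and lies strictly between 0 and 1; this is a simplification for illustration and not the statement of Proposition 1. Function names and values are hypothetical.

```python
import math


def iterations_to_target(initial_gap, tolerance, step):
    """Count updates until the gap is at or below the tolerance."""
    if not 0 < step < 1:
        raise ValueError("step must lie strictly between 0 and 1")
    gap, count = initial_gap, 0
    while gap > tolerance:
        gap *= 1 - step  # geometric contraction of the residual gap
        count += 1
    return count


def closed_form_bound(initial_gap, tolerance, step):
    """Real-valued bound ln(gap0 / tol) / ln(1 / (1 - step))."""
    return math.log(initial_gap / tolerance) / math.log(1 / (1 - step))


if __name__ == "__main__":
    # Hypothetical values on the normalized [0, 1] scale.
    print(iterations_to_target(0.5, 0.05, 0.4))
    print(closed_form_bound(0.5, 0.05, 0.4))
```

The closed form makes the design lever explicit: a larger step (higher feedback quality at a given sensitivity) lowers the number of iterations needed to reach the same tolerance.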

5.3. Criterion- and Task-Level Interpretation

A cohort mean above 90 at t = 6 does not require every rubric criterion to be saturated at level 5 for every submission. Descriptively (Results Section 4.4; Appendix A.3), accuracy and actionability most frequently attain level 5 at t = 6, while clarity and relevance show strong gains but remain more task contingent. Tasks emphasizing concurrency patterns and synchronization benefited from concise, targeted explanations and code pointers, whereas earlier debugging-focused tasks prioritized correctness remediation. This criterion-by-task profile aligns with the logistic asymptote (diminishing returns near ceiling) and with observed process improvements (planning coverage, testing discipline, evidence traceability), indicating that high endline performance reflects both product quality and process competence.

5.4. Epistemic Aims in AI-Mediated Higher Education: What to Value and How to Assess

A broader educational question is what knowledge should be valued, and how it should be assessed, when AI systems can produce sophisticated outputs. Our study points toward valuing, and explicitly measuring, process-oriented, epistemic competencies alongside product performance:

Problem decomposition and planning (rubric-aligned): structuring the fix-path and articulating criteria.

Testing and evidence use: designing/running tests; invoking analyzers; binding citations; judging evidence quality.

Critical judgment and self-correction: checklist-based critique, revision discipline, and justification of changes.

Tool orchestration and transparency: deciding when and how to use AI, and documenting provenance.

Transfer and robustness: sustaining gains across tasks as ceilings approach (diminishing returns captured by the logistic model).

These aims translate into the following assessment-design steps:

Treat process/provenance artifacts (plans, test logs, analyzer outputs, evidence IDs, and revision diffs) as graded evidence, not mere exhaust (Section 4.5).

Extend the FQI with two auditable criteria: Epistemic Alignment (does feedback target the right concepts/processes?) and Provenance Completeness (citations, test references, and analyzer traces).

Use hybrid products: process portfolios plus brief oral/code walk-throughs and time-bounded authentic tasks to check understanding and decision rationales.

Adopt disclose-and-justify policies for AI use: students document which tools were used, for what, and why—leveraging controller logs for verification.

Optimize with multi-objective policies: maximize expected gain (via or ) subject to constraints on dispersion (equity) and thresholds on process metrics (planning coverage, test discipline, and citation completeness).

Conceptually, these steps align what is valued (epistemic process) with what is measured (our policy-level and process signals), addressing the curricular question posed at the start of this subsection and reinforcing the role of agentic RAG as a vehicle for explainable, auditable feedback.

5.5. Design and Policy Implications for EdTech at Scale

Treating assessment as a discrete-time process with explicit update mechanics yields concrete design levers:

Instrument the loop. Per iteration, log submission inputs, diagnostics, feedback text, evidence citations, feedback quality, optional effort, model/versioning, and latency for auditability and controlled A/B tests over templates and tools.

Raise feedback quality systematically. Use agentic RAG to plan (rubric-aligned decomposition), use tools beyond retrieval (tests, static/dynamic analyzers, rubric checker), and self-critique (checklist) before delivery. Empirically, higher feedback quality is associated with both a faster convergence rate and a larger relative gain.

Optimize for equity, not only means. Track dispersion, Q10/Q90 spread, and proficiency tails as first-class outcomes; our data show a drop in SD and a collapse of the lower tail across cycles.

Personalize pacing. Use predicted gains (Equation (

7)) to adjust intensity (granular hints, exemplars) for low-responsiveness profiles (small

), under latency/cost constraints.

Value and score epistemic process. Add Epistemic Alignment and Provenance Completeness to the FQI; include controller-verified process metrics in dashboards and, where appropriate, in grading.

5.6. Threats to Validity and Limitations

External validity is bounded by a single course (Concurrent Programming) and a moderate cohort; multi-site replication is warranted. Construct validity hinges on the FQI; while inter-rater agreement and internal consistency are strong (Section 4.1), future work should triangulate with student-perceived usefulness and effort mediation. Causal interpretation remains cautious given an observational design; the longitudinal signal (RM-ANOVA), cross-validation, calibration, and placebo timing tests help, but randomized or stepped-wedge designs are needed to isolate counterfactuals. Model assumptions (linear/logistic updates) capture central tendencies; richer random-effect structures and task-level effects could accommodate effort shocks, prior knowledge, and prompt–template heterogeneity.

Risk of metric gaming (Goodhart). Emphasizing process metrics may invite optimization toward the metric rather than the competency. We mitigate via (i) checklists tied to

substantive evidence (tests/analyzers/evidence IDs), (ii) randomized exemplar/test variants and oral defenses, and (iii) withholding a holdout task distribution for summative checks. We also monitor the stability of

after adding process covariates (

Section 4.5) to detect overfitting to proxy behaviors.

5.7. Future Work

Three immediate avenues follow. Experimental designs: randomized or stepped-wedge trials varying grounding (citations), scaffolding depth, and timing to estimate causal effects on and to test fairness-aware objectives. Personalization policies: bandit/Bayesian optimization over prompts and exemplars with relative-gain predictions as rewards, plus risk-aware constraints on dispersion and tail mass; extend to multi-objective optimization that jointly targets product outcomes and process thresholds (planning coverage, testing discipline, provenance completeness). Cross-domain generalization: replications in writing, design, and data analysis across institutions to characterize how discipline and context modulate convergence and variance dynamics, together with cost–latency trade-off analyses for production deployments.

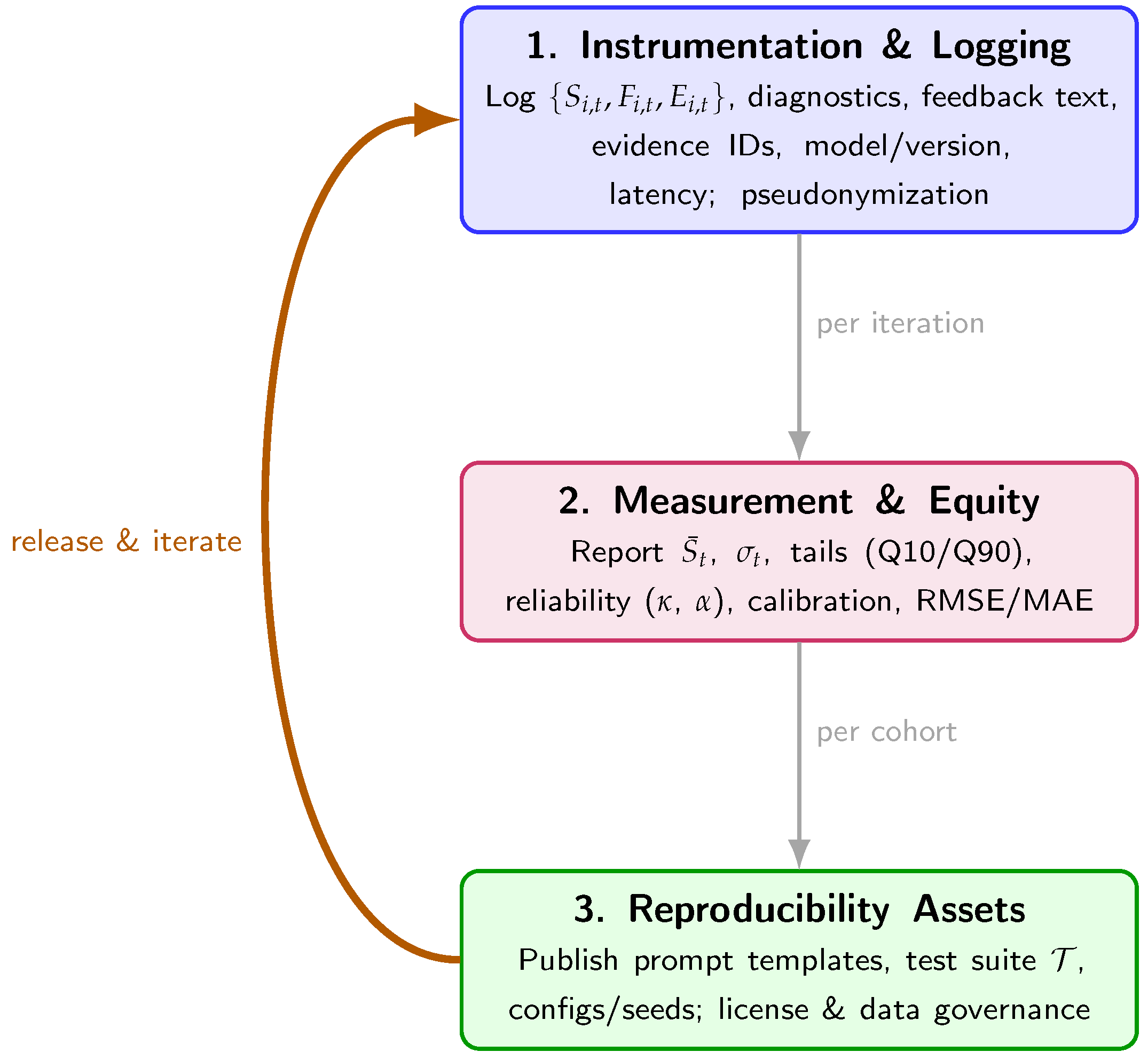

5.8. Concluding Remark and Implementation Note

As a deployment aid,

Figure 8 summarizes the implementation roadmap for the discrete-time assessment–feedback system.

Implementation note for Algorithms readers (text-only guidance). Treat the pipeline as auditable: log every update with full provenance (submission inputs, diagnostics, feedback text, evidence citations, feedback quality, model/versioning, latency); report cohort dispersion and tail shares alongside means with reliability (Cohen’s κ, Cronbach’s α) and calibration; and publish reproducibility assets (prompt templates, the test suite, and configuration files with seeds and versions) under an institutional or research license with appropriate anonymization.

6. Conclusions

This study

formalizes AI-assisted dynamic assessment as an explicit, discrete-time

policy design for iterative feedback and

validates it in higher education. Across six assessment iterations in a

Concurrent Programming course (

), cohort performance rose from

to

points while dispersion fell from

to

points (0–100 scale), evidencing simultaneous gains in central tendency and equity (

Section 4;

Figure 4 and

Figure 5,

Table 2). These empirical patterns are consistent with an algorithmic feedback loop, in which higher feedback quality contracts the gap to target at each iteration and progressively narrows within-cohort differences.

Each iteration t corresponds to a distinct, syllabus-aligned task. Students use the feedback from iteration t during the “Revision” phase to prepare the next submission, which is then evaluated at t + 1 (Methods Section 3.3; Figure 2). The total of six iterations matches the six assessment windows in the course schedule (not six resubmissions of a single task). For transparency with a small cohort, per-iteration participation counts are provided in Table 3 (Results Section 4).

Methodologically, three complementary formulations—the linear-difference update, the logistic-convergence model, and the relative-gain regression—yield

interpretable parameters that link feedback quality to both the

pace and

magnitude of improvement. Estimates in

Table 1 indicate that higher-quality, evidence-grounded feedback is associated with larger next-step gains (positive

), faster multiplicative contraction of the residual gap within the stable regime (positive

with

), and a greater fraction of the gap closed per iteration (positive

). Together with the repeated-measures ANOVA (

Table 4), these findings support an algorithmic account in which feedback acts as a measurable accelerator under realistic classroom conditions. Notably, Propositions 1 and 2 and Corollary 1 provide iteration-complexity, stability, and variance-contraction properties that align with the observed trajectories.

Reaching cohort means above 90 at t = 6 does not require every criterion to be saturated at level 5 for every submission. Descriptively, accuracy and actionability most frequently attain level 5 at endline, while clarity and relevance show strong gains but remain more task-contingent; criterion-by-task summaries are reported in Appendix A.3 and discussed in Section 5.3. This profile is consistent with diminishing returns near the ceiling and with the observed improvements in planning coverage, testing discipline, and evidence traceability (Section 4.5).

Practically, the framework shows how agentic RAG—operationalized via planning (rubric-aligned task decomposition), tool use beyond retrieval (tests, static/dynamic analyzers, and rubric checker), and self-critique (checklist-based verification)—can deliver scalable, auditable feedback when backed by standardized connectors to course artifacts, rubrics, and exemplars. Treating assessment as an instrumented, discrete-time pipeline enables the reproducible measurement of progress (means, convergence) and equity (dispersion, tails), and exposes actionable levers for platform designers: modulating feedback intensity, timing, and evidence grounding based on predicted gains and observed responsiveness.

Beyond refining traditional evaluation, our results and instrumentation clarify

what should be valued and

how it should be assessed when AI systems can produce high-quality outputs. In addition to product scores, programs should value

process-oriented, epistemic competencies—problem decomposition and planning, test design/usage, evidence selection and citation, critical self-correction, and transparent tool orchestration. These can be assessed using controller-verified process/provenance artifacts (plans, test logs, analyzer traces, evidence IDs, and revision diffs; cf.

Section 3.2) and by extending the Feedback Quality Index with

Epistemic Alignment and

Provenance Completeness. Operationally, institutions can adopt

multi-objective policies that maximize expected learning gains (via

or

) subject to explicit constraints on equity (dispersion, tails) and minimum thresholds on process metrics; this aligns curricular aims with measurable, auditable signals discussed in

Section 5.4.

Conceptually, our contribution differs from knowledge tracing (KT): whereas KT prioritizes latent-state estimation optimized for predictive fit, our approach is a design model of the feedback loop with explicit update mechanics and analyzable convergence/stability guarantees. KT remains complementary as a signal for targeting (e.g., scaffolding and exemplar selection), while the proposed update rules preserve interpretability and analytical tractability at deployment time.

Limitations are typical of a single-course longitudinal study: one domain and one institution with a moderate sample. Generalization requires multi-site replications across disciplines and contexts. Stronger causal identification calls for randomized or stepped-wedge designs comparing feedback pathways or grounding strategies; production deployments should also incorporate fairness-aware objectives and cost–latency analyses to ensure sustainable scaling.

Framing assessment and feedback as an explicit, data-driven algorithm clarifies why and how feedback quality matters for both the speed (convergence rate) and the equity (variance contraction) of learning. The models and evidence presented here provide a reproducible basis for designing, monitoring, and improving AI-enabled feedback loops in large EdTech settings, with direct implications for scalable personalization and outcome equity in digital higher education.