Deep Learning for Brain MRI Tissue and Structure Segmentation: A Comprehensive Review

Abstract

1. Introduction

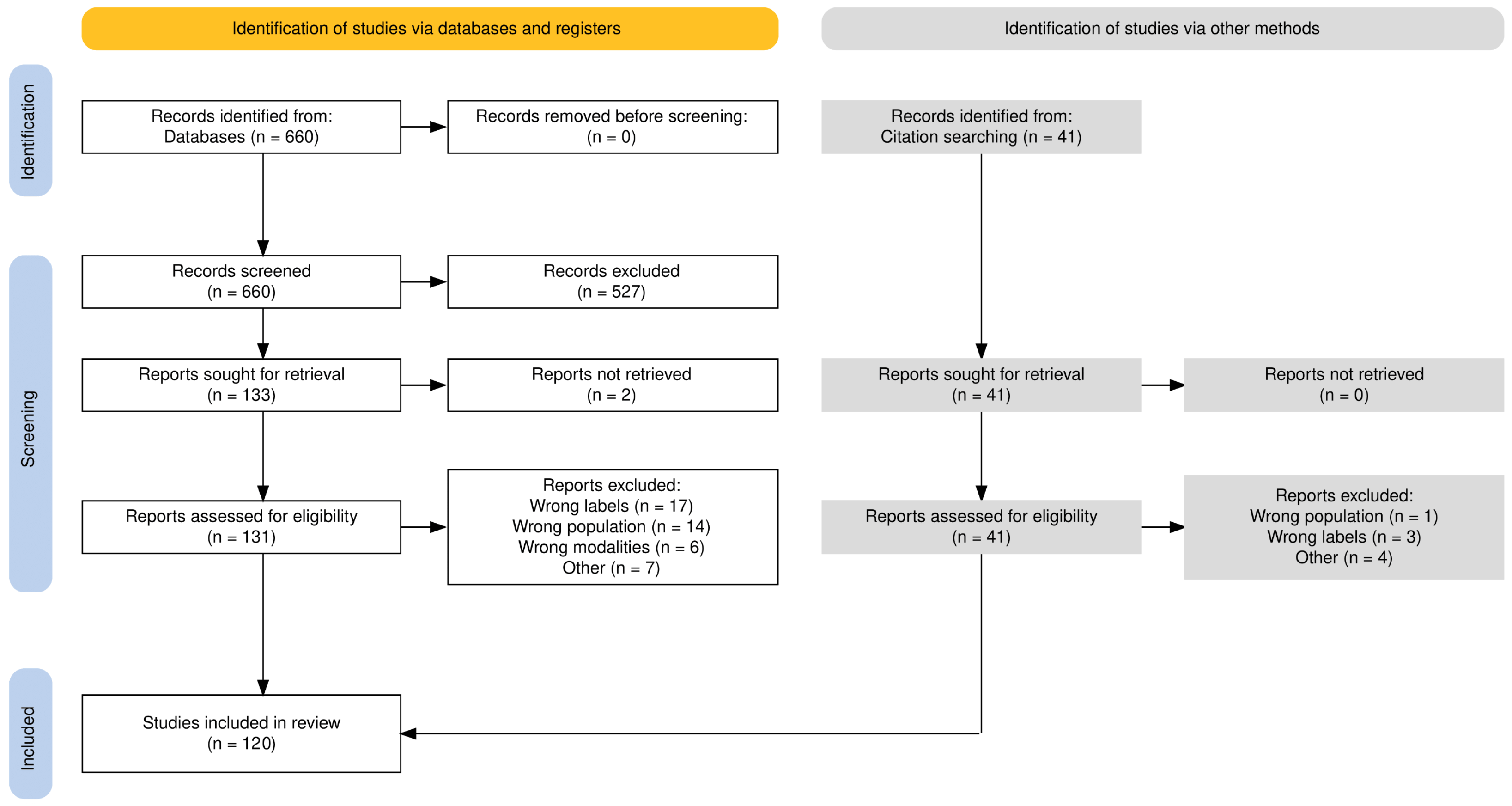

2. Methods

2.1. Literature Search

(brain[MeSH Terms]) AND (magnetic resonance imaging[MeSH Terms]) AND (segmentation[Title/Abstract] OR segmentations[Title/Abstract]) AND ("neural network"[Title/Abstract] OR "neural networks"[Title/Abstract] OR "deep learning"[Title/Abstract] OR transformer[Title/Abstract])

2.2. Definition and Applications

2.3. Traditional Segmentation Approaches

2.4. Deep Learning Segmentation

2.5. Segmentation Architectures

2.6. Patch-Based and Whole-Image Based Models

| Method | Modalities | Labels | Dim. | Input | Arch. | Avail. | DSC (%) |
|---|---|---|---|---|---|---|---|
| Brébisson et al. [83] | T1 | 133 | 2.5D and 3D | Patch | CNN | No | 72.5 |
| Shakeri et al. [88] | T1 | 8 subcortical | 2D | Full | FCN | Yes | 82.4 |
| Moeskops et al. [84] | T1, T2 | 8 | 2D | Patch | CNN | No | 89.8 |
| Milletari et al. [89] | QSM | 26 subcortical | 2D, 2.5D, or 3D | Patch | CNN | No | 77 |
| Bao et al. [90] | T1 | 7 subcortical | 2D | Patch | CNN | No | 82.22 |
| Moeskops et al. [58] | T1 | 7 | 2D | Patch | FCN | No | 92 |
| Dolz et al. [91] | T1 | 8 subcortical | 3D | Patch | FCN | Yes | 89 |
| Kushibar et al. [81] | T1 | 14 subcortical | 2.5D | Patch | CNN | Yes | 86.9 |
| Mehta et al. [85] | T1 | 32-134 | 2D and 3D | Patch | CNN | No | 84.4 |
| Mehta et al. [92] | T1 | 7 subcortical | 2D and 3D | Patch | U-Net | No | 83 |
| Wachinger et al. [93] | T1 | 25 | 3D | Patch | CNN | Yes | 92 |
| Li et al. [57] | T1 | 155 | 3D | Patch | Dilated | Yes | 84.3 |
| Karani et al. [94] | T1, T2 | 7 subcortical | / | / | U-Net | No | 89.3 |
| Roy et al. [47] | T1 | 27 | 2.5D | Full | U-Net | Yes | 90.1 |
| Roy et al. [63] | T1 | 27 | / | / | U-Net + Attention | No | 86.2 |
| Li et al. [59] | T1, T1-IR, FLAIR | 10 | 2D | Full | U-Net | No | 80.9 |
| Kaku et al. [95] | T1 | 102 | 2D | Full | U-Net | Yes | 81.9 |
| Huo et al. [96] | T1 | 133 | 3D | Patch | U-Net | Yes | 77.6 |
| Jog et al. [97] | T1, T2 | 9, 12 | 3D | Patch | U-Net | Yes | 94 |
| Novosad et al. [98] | T1 | 8 and 12 subcortical | 3D | Patch | FCN | Yes | 89.5 |
| Novosad et al. [99] | T1 | 12 subcortical | 3D | Patch | CNN | Yes | 80.7 |
| Sun et al. [64] | T1, T1-IR, FLAIR | Tissues, 25 | 3D | Patch | U-Net + Attention | No | 84.8 |
| Dai et al. [100] | T1 | 15-138 | 3D | Patch | U-Net | No | 87.9 |
| Roy et al. [101] | T1 | 33 | 2.5D | Full | U-Net | Yes | 88 |
| Luna et al. [102] | T1, T1-IR, FLAIR | 8 | 3D | Patch | U-Net | No | 85.5 |
| McClure et al. [61] | T1 | 50 | 3D | Patch | Dilated | Yes | 83.7 |
| Dalca et al. [103] | T1, PD | 12 | 3D | Full | U-Net | Yes | 83.5 |
| Coupe et al. [104] | T1 | 133 | 3D | Patch | U-Net | Yes | 79 |
| Ramzan et al. [62] | T1, T1-IR, FLAIR | Tissues, 8 | 3D | Patch | Dilated | No | 91.4 |
| Henschel et al. [26] | T1 | 95 | 2.5D | Full | U-Net | Yes | 89 |
| Bontempi et al. [80] | T1 | 8 | 3D | Full | U-Net | Yes | 91.3 |
| Liu et al. [105] | T1 | 14 subcortical | 3D | Patch | U-Net + LSTM | No | 88.7 |
| Lee et al. [49] | T1 | 33, 100+ | 3D | Patch | U-Net + Attention | No | 89.7 |
| Zopes et al. [106] | T1, T2, DWI, CT | 27 | 3D | Patch | U-Net | No | 85.3 |
| Li et al. [16] | T1 | 8 subcortical | 3D | Patch | C-LSTM | No | 97.6 |
| Li et al. [107] | T1 | 8 subcortical | 3D | Patch | U-Net | No | 96.8 |
| Svanera et al. [108] | T1 | 8 | 3D | Full | U-Net | Yes | 97.8 |
| Li et al. [109] | T1 | 133 | 2D | Full | U-Net + Attention | Yes | 89.7 |
| Greve et al. [110] | T1 | 12 subcortical | 3D | Full | U-Net | Yes | 77.8 |
| Li et al. [40] | T1 | 54 | 3D | Full | FCN | Yes | 83.1 |
| Meyer et al. [41] | T1 | 8 | 3D | Patch | U-Net | Yes | 93.2 |
| Wu et al. [86] | T1 | 14, 54 | 3D | Patch | M-FCN | No | 92.2 |
| Nejad et al. [111] | T1 | 12 | 2D | Patch | U-Net | Yes | 89.3 |
| Liu et al. [112] | T1 | 5, 7 | 3D | Full | CLMorph | No | 76.3 |
| Ghazi et al. [113] | T1 | 133 | 2.5D | Full | U-Net | Yes | 81 |
| Henschel et al. [48] | T1 | 95 | 2.5D | Full | U-Net | Yes | 89.9 |
| Laiton-Bonadiez et al. [70] | T1 | 37 | 3D | Patch | Transformer | No | 90 |
| Wei et al. [114] | T1 | 136 | 2D | Full | U-Net + Attention | Yes | 86 |
| Yee et al. [82] | T1 | 102 | 3D | Patch | U-Net | No | 84 |
| Baniasadi et al. [115] | T1 | 30 subcortical | 3D | Patch | U-Net | Yes | 89 |
| Billot et al. [116] | T1, T2, PD, DBS | 110 | 3D | Full | U-Net | Yes | 88 |
| Billot et al. [117] | T1, T2, DBS, FLAIR, PD, CT | 33 | 3D | Full | U-Net | Yes | 88 |
| Cao et al. [69] | T1 | 31 subcortical | 3D | Patch | Transformer | No | 87.2 |
| Li et al. [46] | T1 | 28, 139 | 2D | Full | U-Net + Attention | Yes | 87.7 |
| Moon et al. [118] | T1 | 109 | 3D | / | U-Net | No | / |
| Cao et al. [74] | T1 | 31 subcortical | 3D | Patch | Mamba | Yes | 88.4 |
| Diaz et al. [119] | T1, T2, FLAIR | 7 | 3D | Patch | U-Net | Yes | 88 |
| Kujawa et al. [120] | T1 | 108 | 3D | Patch | U-Net | No | 87.5 |
| Lorzel et al. [25] | T1 | 58 | 3D | Patch | U-Net | No | 81 |
| Svanera et al. [52] | T1 | 7 | 3D | Full | LOD-brain | Yes | 93 |
| Goto et al. [121] | T1 | 107 | 3D | Full | U-Net | No | / |
| Le Bot et al. [122] | FLAIR | 133 | 3D | Patch | U-Net | No | 91 |
| Li et al. [65] | T1, PET | 45 | 3D | Full | Transformer | No | 85.3 |
| Li et al. [17] | T1 | 12 | 3D | Patch | U-Net | Yes | 90 |
| Puzio et al. [123] | T1, T2 | 38 | 3D | Patch | U-Net | No | 87 |
| Wei et al. [73] | T1 | 122 GM | 3D | Patch | Mamba | No | 91.1 |
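
The "Input" column of the table above separates patch-based models from whole-image ("Full") models. A patch-based model has to reassemble a complete label map at inference time; one common way to do this is to tile the volume with overlapping patches and average the predicted class probabilities where patches overlap. The NumPy sketch below illustrates that idea only. The patch size, stride, and the `predict_patch` callable (a hypothetical stand-in for a trained network) are illustrative assumptions, not settings taken from any of the surveyed methods.

```python
import numpy as np

def patch_starts(size, patch, stride):
    """Start indices along one axis so that the patches cover [0, size) completely."""
    if size <= patch:
        return [0]
    starts = list(range(0, size - patch + 1, stride))
    if starts[-1] != size - patch:
        starts.append(size - patch)  # one extra patch flush with the far border
    return starts

def sliding_window_predict(volume, predict_patch, n_classes,
                           patch=(32, 32, 32), stride=(16, 16, 16)):
    """Patch-based inference: average class probabilities over overlapping 3D patches.

    `predict_patch` is a hypothetical stand-in for a trained network: it maps a patch
    of shape (d, h, w) to class probabilities of shape (n_classes, d, h, w).
    """
    probs = np.zeros((n_classes,) + volume.shape)
    counts = np.zeros(volume.shape)
    starts = [patch_starts(s, p, st) for s, p, st in zip(volume.shape, patch, stride)]
    for z in starts[0]:
        for y in starts[1]:
            for x in starts[2]:
                window = (slice(z, z + patch[0]), slice(y, y + patch[1]), slice(x, x + patch[2]))
                probs[(slice(None),) + window] += predict_patch(volume[window])
                counts[window] += 1.0
    probs /= counts  # every voxel is covered by at least one patch
    return probs.argmax(axis=0)  # hard label map with the same shape as `volume`

def toy_predictor(p):
    # Hypothetical "network": three classes from fixed intensity thresholds.
    labels = np.digitize(p, [0.33, 0.66])
    return np.stack([(labels == k).astype(float) for k in range(3)])

rng = np.random.default_rng(0)
vol = rng.random((40, 50, 37))
seg = sliding_window_predict(vol, toy_predictor, n_classes=3)
print(seg.shape)  # (40, 50, 37)
```

A whole-image model would instead call the network once on the entire volume (or slice), which avoids the overlap bookkeeping but requires the full input to fit in GPU memory; the overlap ratio here trades extra computation for smoother predictions at patch borders.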

2.7. Model Dimensionality

| Method | Modalities | Dim. | Input | Arch. | Avail. | DSC (%) |
|---|---|---|---|---|---|---|
| Stollenga et al. [128] | T1, T1-IR, T2-FLAIR | 3D | Patch | PyraMiD-LSTM | No | 85.7 |
| Nguyen et al. [129] | T1 | 2.5D | Patch | CNN | No | 86 |
| Fedorov et al. [130] | T1 | 3D | Patch | Dilated | No | 86.5 |
| Chen et al. [43] | T1, T1-IR, T2-FLAIR | 3D | Patch | FCN | No | 86.6 |
| Khagi et al. [131] | T1 | 2D | Full | SegNet | No | 76.2 |
| Rajchl et al. [132] | T1 | 3D | Patch | FCN | Yes | 93 |
| Kumar et al. [53] | T1 | 2D | Patch | SegNet | No | 80 |
| Gottapu et al. [133] | T1 | 2D | Patch | CNN | No | 67.3 |
| Mahbod et al. [134] | T1, T1-IR, FLAIR | / | / | ANN | No | 85.3 |
| Chen et al. [135] | T1, T1-IR, T2-FLAIR | 2D | Patch | OctopusNet | No | 82.9 |
| Kong et al. [136] | T1 | 2D | Patch | CNN | No | / |
| Bernal et al. [77] | T1; T1 and T2 | 2D, 3D | Patch | FCN, U-Net | Yes | 92.9 |
| Dolz et al. [45] | T1, T1-IR, T2-FLAIR | 3D | Patch | FCN | Yes | 87.2 |
| Gabr et al. [137] | T1, T2, T2-FLAIR, PD | 2D | Full | U-Net | Yes | 93 |
| Ito et al. [138] | T1 | 3D | Patch | FCN | No | 86 |
| Yogananda et al. [139] | T1 | 3D | Patch | U-Net | No | 86.6 |
| Mujica-Vargas et al. [140] | T1, T2, T2-FLAIR | 2D | Full | U-Net | No | 93.1 |
| Wang et al. [141] | T1 | 3D | Patch | U-Net | No | 90.4 |
| Xie et al. [142] | T1, T2, PD | 2D | Full | LSTM | No | 98.7 |
| Yan et al. [143] | T1 | 3D | Full | GCN | No | 91.6 |
| Li et al. [60] | T1, T1-IR, T2-FLAIR | 2.5D | Full | Dilated | Yes | 87 |
| Wei et al. [51] | T1 | 2.5D | Patch | U-Net | No | 96.3 |
| Sun et al. [64] | T1, T1-IR, T2-FLAIR | 3D | Patch | U-Net + Attention | No | 87 |
| Ramzan et al. [62] | T1, T1-IR, T2-FLAIR | 3D | Patch | Dilated | No | 88 |
| Lee et al. [79] | T1 | 2D | Patch | U-Net | Yes | 93.6 |
| Mostapha et al. [39] | T1 | 3D | Full | U-Net | No | 90.3 |
| Narayana et al. [144] | T1, T2, T2-FLAIR, PD | 2D | Full | U-Net | Yes | 92.5 |
| Yamanakkanavar et al. [78] | T1 | 2D | Patch | U-Net | No | 95.2 |
| Sendra-Balcells et al. [24] | T1 | 2D | Full | U-Net | No | 88.5 |
| Dayananda et al. [54] | T1 | 2D | Patch | Squeeze U-Net | No | 95.3 |
| Basnet et al. [10] | T1, T2 | 3D | Patch | U-Net | Yes | 93.1 |
| Long et al. [145] | T1, T1-IR, T2-FLAIR | 3D | Patch | MSCD-UNet | No | 88.5 |
| Woo et al. [125] | T1 | 3D | Full | U-Net | No | 94.9 |
| Yamanakkanavar et al. [50] | T1 | 2D | Patch | U-Net | No | 95.7 |
| Zhang et al. [146] | Diffusion, T1, T2 | 2.5D | Full | U-Net | No | 85 |
| Zhang et al. [147] | T1 | 3D | Full | GCN | No | 92.3 |
| Wei et al. [66] | T1 | 3D | Patch | Nes-Net | No | 88.5 |
| Niu et al. [67] | T1 | 2D | Full | U-Net | Yes | 91.1 |
| Goyal et al. [148] | T1 | 2D | Patch | SegNet | No | 83 |
| Prajapati et al. [124] | T1 | 2D | Full | U-Net | No | 95.7 |
| Rao et al. [68] | T1 | 3D | Full | Transformer | Yes | 95.6 |
| Yamanakkanavar et al. [50] | T1 | 2D | Patch | Squeeze U-Net | No | 96 |
| Yamanakkanavar et al. [149] | T1 | 2D | Patch | MF2-Net | No | 95.3 |
| Dayananda et al. [44] | T1 | 2D | Patch | Squeeze U-Net | No | 95.7 |
| Clerigues et al. [150] | T1, T2-FLAIR | 3D | Patch | U-Net | Yes | 94.6 |
| Guven et al. [151] | T1 | 2D | Full | GAN | No | / |
| Gi et al. [8] | T2 | 2.5D, 3D | Patch, Full | U-Net | No | 91.3 |
| Oh et al. [152] | T1 | 3D | Full | U-Net | Yes | 88.5 |
| Simarro et al. [9] | T1 | 3D | Patch | U-Net | Yes | / |
| Hossain et al. [127] | T1 | 3D | Patch | U-Net | Yes | 93.7 |
| Liu et al. [38] | T1 | 3D | Patch | U-Net + Attention | Yes | 98.9 |
| Mohammadi et al. [72] | T1, T2 | 2D | Full | Transformer | No | 84.4 |
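
The "Dim." column distinguishes 2D, 2.5D, and 3D models. A 2.5D model is still a 2D network, but each input slice carries its neighbouring slices as extra channels, which gives it some through-plane context at roughly 2D cost. The NumPy sketch below shows only that input construction. The slicing axis, the one-slice context on each side, and edge replication at the volume borders are all assumptions chosen for illustration, not the configuration of any specific surveyed method.

```python
import numpy as np

def make_25d_stacks(volume, axis=2, context=1):
    """Return an array of shape (n_slices, 2*context + 1, H, W): one multi-channel
    2D input per slice along `axis`, with `context` neighbouring slices on each side.

    Slices beyond the volume border are filled by repeating the edge slice
    (an assumed padding choice).
    """
    vol = np.moveaxis(volume, axis, 0)  # index slices along the first axis
    pad = [(context, context)] + [(0, 0)] * (vol.ndim - 1)
    padded = np.pad(vol, pad, mode="edge")
    k = 2 * context + 1
    # After padding, original slice i sits at padded[i + context], so
    # padded[i:i + k] spans slices i - context .. i + context.
    return np.stack([padded[i:i + k] for i in range(vol.shape[0])])

volume = np.random.default_rng(0).random((64, 64, 48))  # toy volume, slices along axis 2
stacks = make_25d_stacks(volume, axis=2, context=1)
print(stacks.shape)  # (48, 3, 64, 64)
```

With `context=0` the same function yields plain single-slice 2D inputs, while 3D models consume the volume (or 3D patches of it) directly. Some 2.5D pipelines additionally repeat this along all three orthogonal axes and fuse the per-view predictions.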

2.8. Generalization Strategies

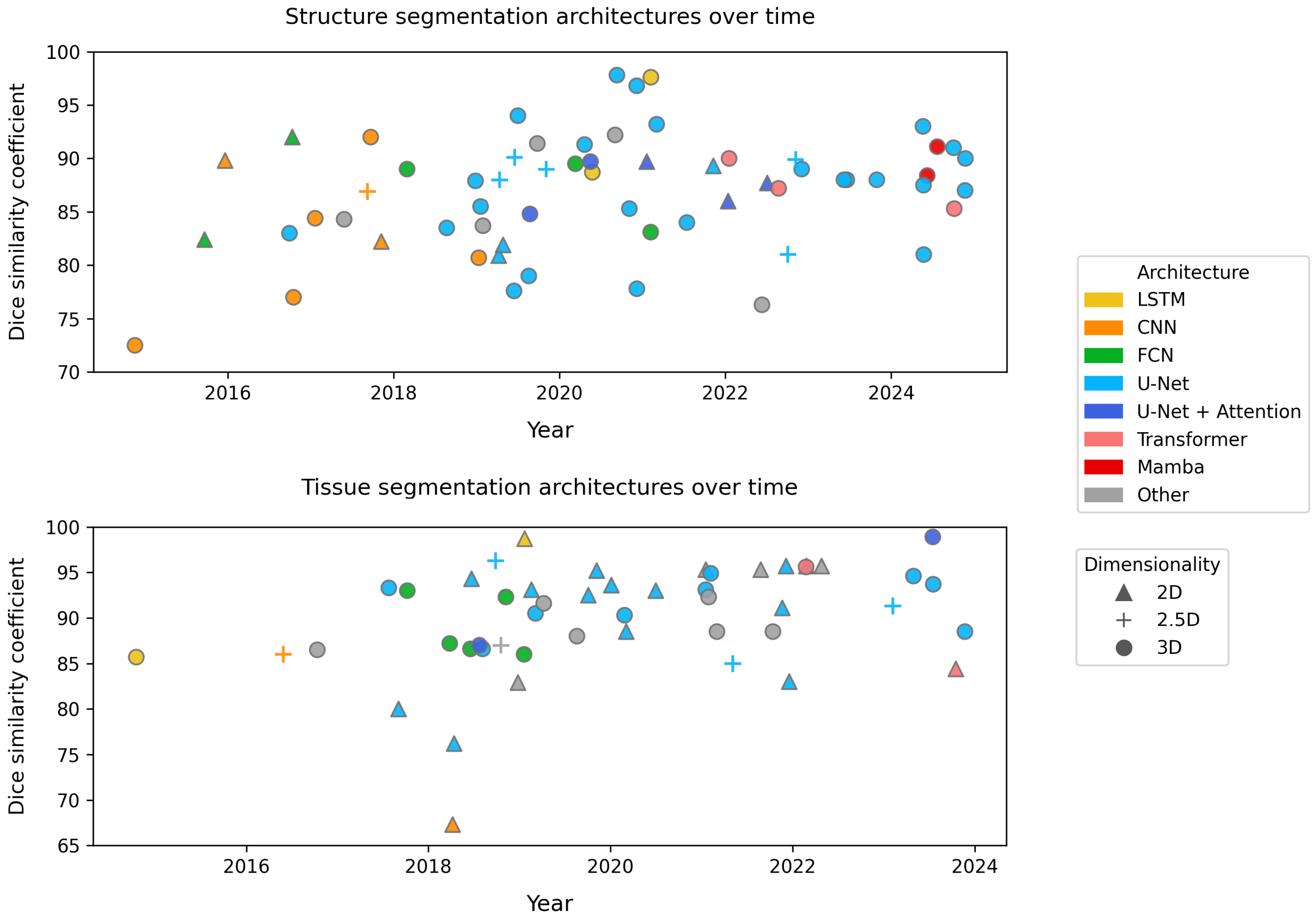

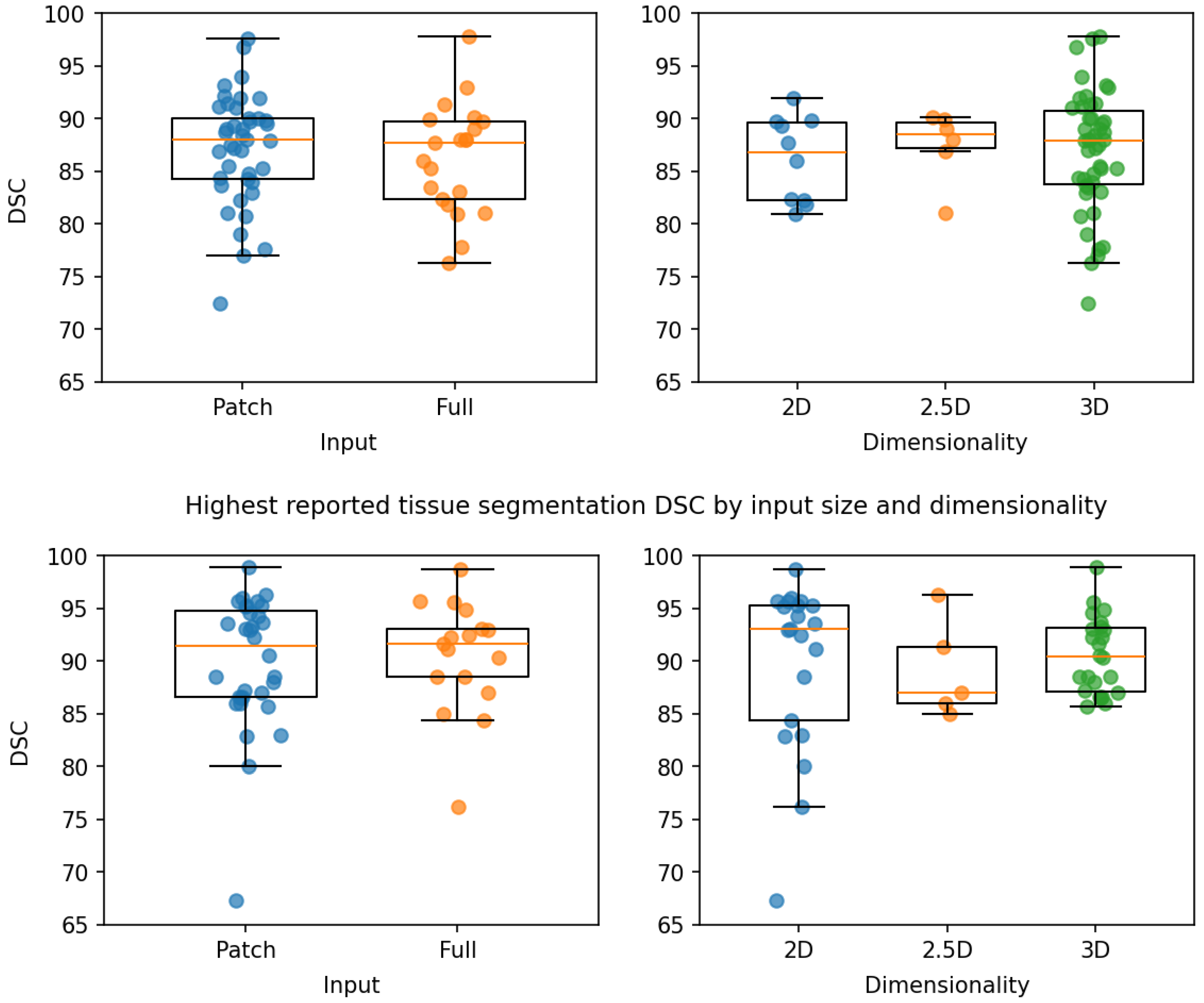

3. Results

3.1. Validation Strategies

3.2. Results
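Both tables in Section 2 report the Dice similarity coefficient (DSC) in percent. For a given label, with A the set of voxels the model assigns to it and B the set of reference voxels, DSC = 2|A ∩ B| / (|A| + |B|). Studies differ in which labels they average over and how (for example, whether background is included or whether the mean is weighted), so the sketch below, which takes an unweighted mean over a caller-supplied label list, is one assumed convention rather than the protocol behind any reported number.

```python
import numpy as np

def dice_per_label(pred, ref, labels):
    """DSC = 2|A ∩ B| / (|A| + |B|) for each label, where A and B are the voxels
    assigned that label in the prediction and in the reference."""
    scores = {}
    for lab in labels:
        a = pred == lab
        b = ref == lab
        denom = int(a.sum()) + int(b.sum())
        # Assumed convention: a label absent from both maps counts as a perfect match.
        scores[lab] = 1.0 if denom == 0 else 2.0 * int(np.logical_and(a, b).sum()) / denom
    return scores

def mean_dice_percent(pred, ref, labels):
    """Unweighted mean DSC over `labels`, in percent (background excluded unless listed)."""
    per_label = dice_per_label(pred, ref, labels)
    return 100.0 * sum(per_label.values()) / len(per_label)

pred = np.array([[1, 1, 0], [2, 2, 0]])
ref = np.array([[1, 0, 0], [2, 2, 2]])
print(round(mean_dice_percent(pred, ref, labels=[1, 2]), 2))  # 73.33
```

In this toy example label 1 scores 2/3 and label 2 scores 0.8; adding background (label 0) to the list would change the mean, which is one reason DSC values from different studies are not directly comparable.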

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Yepes-Calderon, F.; McComb, J.G. Eliminating the need for manual segmentation to determine size and volume from MRI. A proof of concept on segmenting the lateral ventricles. PLoS ONE 2023, 18, e0285414.

- Zhou, S.K.; Greenspan, H.; Davatzikos, C.; Duncan, J.S.; Van Ginneken, B.; Madabhushi, A.; Prince, J.L.; Rueckert, D.; Summers, R.M. A Review of Deep Learning in Medical Imaging: Imaging Traits, Technology Trends, Case Studies with Progress Highlights, and Future Promises. Proc. IEEE 2021, 109, 820–838.

- Magadza, T.; Viriri, S. Deep Learning for Brain Tumor Segmentation: A Survey of State-of-the-Art. J. Imaging 2021, 7, 19.

- Zeng, C.; Gu, L.; Liu, Z.; Zhao, S. Review of Deep Learning Approaches for the Segmentation of Multiple Sclerosis Lesions on Brain MRI. Front. Neuroinform. 2020, 14, 610967.

- Ciceri, T.; Squarcina, L.; Giubergia, A.; Bertoldo, A.; Brambilla, P.; Peruzzo, D. Review on deep learning fetal brain segmentation from Magnetic Resonance images. Artif. Intell. Med. 2023, 143, 102608.

- Wu, L.; Wang, S.; Liu, J.; Hou, L.; Li, N.; Su, F.; Yang, X.; Lu, W.; Qiu, J.; Zhang, M.; et al. A survey of MRI-based brain tissue segmentation using deep learning. Complex Intell. Syst. 2025, 11.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Gi, Y.; Oh, G.; Jo, Y.; Lim, H.; Ko, Y.; Hong, J.; Lee, E.; Park, S.; Kwak, T.; Kim, S.; et al. Study of multistep Dense U-Net-based automatic segmentation for head MRI scans. Med. Phys. 2024, 51, 2230–2238.

- Simarro, J.; Meyer, M.I.; Van Eyndhoven, S.; Phan, T.V.; Billiet, T.; Sima, D.M.; Ortibus, E. A deep learning model for brain segmentation across pediatric and adult populations. Sci. Rep. 2024, 14, 11735.

- Basnet, R.; Ahmad, M.O.; Swamy, M. A deep dense residual network with reduced parameters for volumetric brain tissue segmentation from MR images. Biomed. Signal Process. Control 2021, 70, 103063.

- Bethlehem, R.A.I.; Seidlitz, J.; White, S.R.; Vogel, J.W.; Anderson, K.M.; Adamson, C.; Adler, S.; Alexopoulos, G.S.; Anagnostou, E.; Areces-Gonzalez, A.; et al. Brain charts for the human lifespan. Nature 2022, 604, 525–533.

- Singh, V.; Chertkow, H.; Lerch, J.P.; Evans, A.C.; Dorr, A.E.; Kabani, N.J. Spatial patterns of cortical thinning in mild cognitive impairment and Alzheimer’s disease. Brain 2006, 129, 2885–2893.

- Alves, F.; Kalinowski, P.; Ayton, S. Accelerated Brain Volume Loss Caused by Anti-β-Amyloid Drugs: A Systematic Review and Meta-analysis. Neurology 2023, 100, e2114–e2124.

- Chu, R.; Kim, G.; Tauhid, S.; Khalid, F.; Healy, B.C.; Bakshi, R. Whole brain and deep gray matter atrophy detection over 5 years with 3T MRI in multiple sclerosis using a variety of automated segmentation pipelines. PLoS ONE 2018, 13, e0206939.

- Uhr, V.; Diaz, I.; Rummel, C.; McKinley, R. Exploring Robustness of Cortical Morphometry in the presence of white matter lesions, using Diffusion Models for Lesion Filling. arXiv 2025.

- Li, H.; Zhang, H.; Johnson, H.; Long, J.D.; Paulsen, J.S.; Oguz, I. Longitudinal subcortical segmentation with deep learning. In Proceedings of the Medical Imaging 2021: Image Processing, Online, 15–19 February 2021; p. 43.

- Li, M.; Magnússon, M.; Kristjánsdóttir, I.; Lund, S.H.; Van Eimeren, T.; Ellingsen, L.M. Region-based U-nets for fast, accurate, and scalable deep brain segmentation: Application to Parkinson Plus Syndromes. NeuroImage Clin. 2025, 47, 103807.

- Sacchet, M.D.; Livermore, E.E.; Iglesias, J.E.; Glover, G.H.; Gotlib, I.H. Subcortical volumes differentiate Major Depressive Disorder, Bipolar Disorder, and remitted Major Depressive Disorder. J. Psychiatr. Res. 2015, 68, 91–98.

- Hokama, H.; Shenton, M.E.; Nestor, P.G.; Kikinis, R.; Levitt, J.J.; Metcalf, D.; Wible, C.G.; O’Donnell, B.F.; Jolesz, F.A.; McCarley, R.W. Caudate, putamen, and globus pallidus volume in schizophrenia: A quantitative MRI study. Psychiatry Res. Neuroimaging 1995, 61, 209–229.

- Moazzami, K.; Shao, I.Y.; Chen, L.Y.; Lutsey, P.L.; Jack, C.R.; Mosley, T.; Joyner, D.A.; Gottesman, R.; Alonso, A. Atrial Fibrillation, Brain Volumes, and Subclinical Cerebrovascular Disease (from the Atherosclerosis Risk in Communities Neurocognitive Study [ARIC-NCS]). Am. J. Cardiol. 2020, 125, 222–228.

- Yu, S.Y.; Chen, X.Y.; Chen, Z.Y.; Dong, Z.; Liu, M.Q. Regional volume changes of the brain in migraine chronification. Neural Regen. Res. 2020, 15, 1701.

- Walker, K.A.; Gottesman, R.F.; Wu, A.; Knopman, D.S.; Mosley, T.H.; Alonso, A.; Kucharska-Newton, A.; Brown, C.H. Association of Hospitalization, Critical Illness, and Infection with Brain Structure in Older Adults. J. Am. Geriatr. Soc. 2018, 66, 1919–1926.

- Auer, D.P.; Wilke, M.; Grabner, A.; Heidenreich, J.O.; Bronisch, T.; Wetter, T.C. Reduced NAA in the thalamus and altered membrane and glial metabolism in schizophrenic patients detected by 1H-MRS and tissue segmentation. Schizophr. Res. 2001, 52, 87–99.

- Sendra-Balcells, C.; Salvador, R.; Pedro, J.B.; Biagi, M.C.; Aubinet, C.; Manor, B.; Thibaut, A.; Laureys, S.; Lekadir, K.; Ruffini, G. Convolutional neural network MRI segmentation for fast and robust optimization of transcranial electrical current stimulation of the human brain. bioRxiv 2020.

- Lorzel, H.M.; Allen, M.D. Development of the next-generation functional neuro-cognitive imaging protocol—Part 1: A 3D sliding-window convolutional neural net for automated brain parcellation. NeuroImage 2024, 286, 120505.

- Henschel, L.; Conjeti, S.; Estrada, S.; Diers, K.; Fischl, B.; Reuter, M. FastSurfer—A fast and accurate deep learning based neuroimaging pipeline. NeuroImage 2020, 219, 117012.

- Despotović, I.; Goossens, B.; Philips, W. MRI Segmentation of the Human Brain: Challenges, Methods, and Applications. Comput. Math. Methods Med. 2015, 2015, 450341.

- Fischl, B. FreeSurfer. NeuroImage 2012, 62, 774–781.

- Friston, K.J. (Ed.) Statistical Parametric Mapping: The Analysis of Functional Brain Images, 1st ed.; Elsevier: Amsterdam, The Netherlands; Academic Press: Boston, MA, USA, 2007.

- Gaser, C.; Dahnke, R.; Thompson, P.M.; Kurth, F.; Luders, E.; Alzheimer’s Disease Neuroimaging Initiative. CAT: A computational Anatomy Toolbox for the Analysis of Structural MRI Data. GigaScience 2024, 13, giae049. [Google Scholar] [CrossRef]

- Puonti, O.; Iglesias, J.E.; Van Leemput, K. Fast and sequence-adaptive whole-brain segmentation using parametric Bayesian modeling. NeuroImage 2016, 143, 235–249. [Google Scholar] [CrossRef]

- Ciresan, D.C.; Giusti, A.; Gambardella, L.M.; Schmidhuber, J. Deep Neural Networks Segment Neuronal Membranes in Electron Microscopy Images. In Proceedings of the Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar] [CrossRef]

- Li, X.; Ding, H.; Yuan, H.; Zhang, W.; Pang, J.; Cheng, G.; Chen, K.; Liu, Z.; Loy, C.C. Transformer-Based Visual Segmentation: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10138–10163. [Google Scholar] [CrossRef]

- Ma, J.; Li, F.; Wang, B. U-Mamba: Enhancing Long-range Dependency for Biomedical Image Segmentation. arXiv 2024. [Google Scholar] [CrossRef]

- Roy, S.; Kügler, D.; Reuter, M. Are 2.5D approaches superior to 3D deep networks in whole brain segmentation? In Proceedings of the International Conference on Medical Imaging with Deep Learning, Zurich, Switzerland, 6–8 July 2022. [Google Scholar]

- Klein, A.; Tourville, J. 101 Labeled Brain Images and a Consistent Human Cortical Labeling Protocol. Front. Neurosci. 2012, 6, 171. [Google Scholar] [CrossRef]

- Liu, Y.; Song, C.; Ning, X.; Gao, Y.; Wang, D. nnSegNeXt: A 3D Convolutional Network for Brain Tissue Segmentation Based on Quality Evaluation. Bioengineering 2024, 11, 575. [Google Scholar] [CrossRef]

- Mostapha, M.; Mailhe, B.; Chen, X.; Ceccaldi, P.; Yoo, Y.; Nadar, M. Braided Networks for Scan-Aware MRI Brain Tissue Segmentation. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 136–139. [Google Scholar] [CrossRef]

- Li, Y.; Cui, J.; Sheng, Y.; Liang, X.; Wang, J.; Chang, E.I.C.; Xu, Y. Whole brain segmentation with full volume neural network. Comput. Med. Imaging Graph. 2021, 93, 101991. [Google Scholar] [CrossRef]

- Meyer, M.I.; De La Rosa, E.; Pedrosa De Barros, N.; Paolella, R.; Van Leemput, K.; Sima, D.M. A Contrast Augmentation Approach to Improve Multi-Scanner Generalization in MRI. Front. Neurosci. 2021, 15, 708196. [Google Scholar] [CrossRef] [PubMed]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Chen, H.; Dou, Q.; Yu, L.; Qin, J.; Heng, P.A. VoxResNet: Deep voxelwise residual networks for brain segmentation from 3D MR images. NeuroImage 2018, 170, 446–455. [Google Scholar] [CrossRef] [PubMed]

- Dayananda, C.; Choi, J.Y.; Lee, B. A Squeeze U-SegNet Architecture Based on Residual Convolution for Brain MRI Segmentation. IEEE Access 2022, 10, 52804–52817. [Google Scholar] [CrossRef]

- Dolz, J.; Gopinath, K.; Yuan, J.; Lombaert, H.; Desrosiers, C.; Ben Ayed, I. HyperDense-Net: A Hyper-Densely Connected CNN for Multi-Modal Image Segmentation. IEEE Trans. Med. Imaging 2019, 38, 1116–1126. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, C.; Zhang, Y.; Wang, X.; Ma, X.; Zhang, H.; Wu, S. CAN: Context-assisted full Attention Network for brain tissue segmentation. Med. Image Anal. 2023, 85, 102710. [Google Scholar] [CrossRef]

- Guha Roy, A.; Conjeti, S.; Navab, N.; Wachinger, C. QuickNAT: A fully convolutional network for quick and accurate segmentation of neuroanatomy. NeuroImage 2019, 186, 713–727. [Google Scholar] [CrossRef]

- Estrada, S.; Kügler, D.; Bahrami, E.; Xu, P.; Mousa, D.; Breteler, M.M.; Aziz, N.A.; Reuter, M. FastSurfer-HypVINN: Automated sub-segmentation of the hypothalamus and adjacent structures on high-resolutional brain MRI. Imaging Neurosci. 2023, 1, 1–32. [Google Scholar] [CrossRef]

- Lee, M.; Kim, J.; Ey Kim, R.; Kim, H.G.; Oh, S.W.; Lee, M.K.; Wang, S.M.; Kim, N.Y.; Kang, D.W.; Rieu, Z.; et al. Split-Attention U-Net: A Fully Convolutional Network for Robust Multi-Label Segmentation from Brain MRI. Brain Sci. 2020, 10, 974. [Google Scholar] [CrossRef] [PubMed]

- Yamanakkanavar, N.; Choi, J.Y.; Lee, B. SM-SegNet: A Lightweight Squeeze M-SegNet for Tissue Segmentation in Brain MRI Scans. Sensors 2022, 22, 5148. [Google Scholar] [CrossRef] [PubMed]

- Wei, J.; Xia, Y.; Zhang, Y. M3Net: A multi-model, multi-size, and multi-view deep neural network for brain magnetic resonance image segmentation. Pattern Recognit. 2019, 91, 366–378. [Google Scholar] [CrossRef]

- Svanera, M.; Savardi, M.; Signoroni, A.; Benini, S.; Muckli, L. Fighting the scanner effect in brain MRI segmentation with a progressive level-of-detail network trained on multi-site data. Med. Image Anal. 2024, 93, 103090. [Google Scholar] [CrossRef]

- Kumar, P.; Nagar, P.; Arora, C.; Gupta, A. U-Segnet: Fully Convolutional Neural Network Based Automated Brain Tissue Segmentation Tool. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3503–3507. [Google Scholar] [CrossRef]

- Dayananda, C.; Choi, J.Y.; Lee, B. Multi-Scale Squeeze U-SegNet with Multi Global Attention for Brain MRI Segmentation. Sensors 2021, 21, 3363. [Google Scholar] [CrossRef]

- Yamanakkanavar, N.; Lee, B. A novel M-SegNet with global attention CNN architecture for automatic segmentation of brain MRI. Comput. Biol. Med. 2021, 136, 104761. [Google Scholar] [CrossRef]

- Paschali, M.; Gasperini, S.; Roy, A.G.; Fang, M.Y.S.; Navab, N. 3DQ: Compact Quantized Neural Networks for Volumetric Whole Brain Segmentation. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2019; Shen, D., Liu, T., Peters, T.M., Staib, L.H., Essert, C., Zhou, S., Yap, P.T., Khan, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11766, pp. 438–446. [Google Scholar] [CrossRef]

- Li, W.; Wang, G.; Fidon, L.; Ourselin, S.; Cardoso, M.J.; Vercauteren, T. On the Compactness, Efficiency, and Representation of 3D Convolutional Networks: Brain Parcellation as a Pretext Task. In Information Processing in Medical Imaging; Niethammer, M., Styner, M., Aylward, S., Zhu, H., Oguz, I., Yap, P.T., Shen, D., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10265, pp. 348–360. [Google Scholar] [CrossRef]

- Moeskops, P.; Veta, M.; Lafarge, M.W.; Eppenhof, K.A.J.; Pluim, J.P.W. Adversarial Training and Dilated Convolutions for Brain MRI Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Cardoso, M.J., Arbel, T., Carneiro, G., Syeda-Mahmood, T., Tavares, J.M.R., Moradi, M., Bradley, A., Greenspan, H., Papa, J.P., Madabhushi, A., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10553, pp. 56–64. [Google Scholar] [CrossRef]

- Li, H.; Zhygallo, A.; Menze, B. Automatic Brain Structures Segmentation Using Deep Residual Dilated U-Net. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Crimi, A., Bakas, S., Kuijf, H., Keyvan, F., Reyes, M., Van Walsum, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11383, pp. 385–393. [Google Scholar] [CrossRef]

- Li, J.; Yu, Z.L.; Gu, Z.; Liu, H.; Li, Y. MMAN: Multi-modality aggregation network for brain segmentation from MR images. Neurocomputing 2019, 358, 10–19. [Google Scholar] [CrossRef]

- McClure, P.; Rho, N.; Lee, J.A.; Kaczmarzyk, J.R.; Zheng, C.Y.; Ghosh, S.S.; Nielson, D.M.; Thomas, A.G.; Bandettini, P.; Pereira, F. Knowing What You Know in Brain Segmentation Using Bayesian Deep Neural Networks. Front. Neuroinform. 2019, 13, 67. [Google Scholar] [CrossRef]

- Ramzan, F.; Khan, M.U.G.; Iqbal, S.; Saba, T.; Rehman, A. Volumetric Segmentation of Brain Regions From MRI Scans Using 3D Convolutional Neural Networks. IEEE Access 2020, 8, 103697–103709. [Google Scholar] [CrossRef]

- Roy, A.G.; Navab, N.; Wachinger, C. Concurrent Spatial and Channel ‘Squeeze & Excitation’ in Fully Convolutional Networks. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11070, pp. 421–429. [Google Scholar] [CrossRef]

- Sun, L.; Ma, W.; Ding, X.; Huang, Y.; Liang, D.; Paisley, J. A 3D Spatially Weighted Network for Segmentation of Brain Tissue From MRI. IEEE Trans. Med. Imaging 2020, 39, 898–909. [Google Scholar] [CrossRef]

- Li, W.; Huang, Z.; Zhang, Q.; Zhang, N.; Zhao, W.; Wu, Y.; Yuan, J.; Yang, Y.; Zhang, Y.; Yang, Y.; et al. Accurate Whole-Brain Segmentation for Bimodal PET/MR Images via a Cross-Attention Mechanism. IEEE Trans. Radiat. Plasma Med. Sci. 2025, 9, 47–56. [Google Scholar] [CrossRef]

- Wei, J.; Wu, Z.; Wang, L.; Bui, T.D.; Qu, L.; Yap, P.T.; Xia, Y.; Li, G.; Shen, D. A cascaded nested network for 3T brain MR image segmentation guided by 7T labeling. Pattern Recognit. 2022, 124, 108420. [Google Scholar] [CrossRef] [PubMed]

- Niu, K.; Guo, Z.; Peng, X.; Pei, S. P-ResUnet: Segmentation of brain tissue with Purified Residual Unet. Comput. Biol. Med. 2022, 151, 106294. [Google Scholar] [CrossRef] [PubMed]

- Rao, V.M.; Wan, Z.; Arabshahi, S.; Ma, D.J.; Lee, P.Y.; Tian, Y.; Zhang, X.; Laine, A.F.; Guo, J. Improving across-dataset brain tissue segmentation for MRI imaging using transformer. Front. Neuroimaging 2022, 1, 1023481. [Google Scholar] [CrossRef]

- Cao, A.; Rao, V.M.; Liu, K.; Liu, X.; Laine, A.F.; Guo, J. TABSurfer: A Hybrid Deep Learning Architecture for Subcortical Segmentation. arXiv 2023. [Google Scholar] [CrossRef]

- Laiton-Bonadiez, C.; Sanchez-Torres, G.; Branch-Bedoya, J. Deep 3D Neural Network for Brain Structures Segmentation Using Self-Attention Modules in MRI Images. Sensors 2022, 22, 2559. [Google Scholar] [CrossRef]

- Yu, X.; Tang, Y.; Yang, Q.; Lee, H.H.; Bao, S.; Huo, Y.; Landman, B.A. Enhancing hierarchical transformers for whole brain segmentation with intracranial measurements integration. In Proceedings of the Medical Imaging 2024: Clinical and Biomedical Imaging, San Diego, CA, USA, 18–22 February 2024; p. 18. [Google Scholar] [CrossRef]

- Mohammadi, Z.; Aghaei, A.; Moghaddam, M.E. CycleFormer: Brain tissue segmentation in the presence of Multiple Sclerosis lesions and Intensity Non-Uniformity artifact. Biomed. Signal Process. Control 2024, 93, 106153. [Google Scholar] [CrossRef]

- Wei, Y.; Jagtap, J.M.; Singh, Y.; Khosravi, B.; Cai, J.; Gunter, J.L.; Erickson, B.J. Comprehensive Segmentation of Gray Matter Structures on T1-Weighted Brain MRI: A Comparative Study of Convolutional Neural Network, Convolutional Neural Network Hybrid-Transformer or -Mamba Architectures. Am. J. Neuroradiol. 2025, 46, 742–749. [Google Scholar] [CrossRef]

- Cao, A.; Li, Z.; Jomsky, J.; Laine, A.F.; Guo, J. MedSegMamba: 3D CNN-Mamba Hybrid Architecture for Brain Segmentation. arXiv 2024. [Google Scholar] [CrossRef]

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211. [Google Scholar] [CrossRef]

- Isensee, F.; Wald, T.; Ulrich, C.; Baumgartner, M.; Roy, S.; Maier-Hein, K.; Jaeger, P.F. nnU-Net Revisited: A Call for Rigorous Validation in 3D Medical Image Segmentation. arXiv 2024. [Google Scholar] [CrossRef]

- Bernal, J.; Kushibar, K.; Cabezas, M.; Valverde, S.; Oliver, A.; Llado, X. Quantitative Analysis of Patch-Based Fully Convolutional Neural Networks for Tissue Segmentation on Brain Magnetic Resonance Imaging. IEEE Access 2019, 7, 89986–90002. [Google Scholar] [CrossRef]

- Yamanakkanavar, N.; Lee, B. Using a Patch-Wise M-Net Convolutional Neural Network for Tissue Segmentation in Brain MRI Images. IEEE Access 2020, 8, 120946–120958. [Google Scholar] [CrossRef]

- Lee, B.; Yamanakkanavar, N.; Choi, J.Y. Automatic segmentation of brain MRI using a novel patch-wise U-net deep architecture. PLoS ONE 2020, 15, e0236493. [Google Scholar] [CrossRef]

- Bontempi, D.; Benini, S.; Signoroni, A.; Svanera, M.; Muckli, L. CEREBRUM: A fast and fully-volumetric Convolutional Encoder-decodeR for weakly-supervised sEgmentation of BRain strUctures from out-of-the-scanner MRI. Med. Image Anal. 2020, 62, 101688. [Google Scholar] [CrossRef]

- Kushibar, K.; Valverde, S.; González-Villà, S.; Bernal, J.; Cabezas, M.; Oliver, A.; Lladó, X. Automated sub-cortical brain structure segmentation combining spatial and deep convolutional features. Med. Image Anal. 2018, 48, 177–186. [Google Scholar] [CrossRef]

- Yee, E.; Ma, D.; Popuri, K.; Chen, S.; Lee, H.; Chow, V.; Ma, C.; Wang, L.; Beg, M.F. 3D hemisphere-based convolutional neural network for whole-brain MRI segmentation. Comput. Med. Imaging Graph. 2022, 95, 102000. [Google Scholar] [CrossRef]

- De Brebisson, A.; Montana, G. Deep neural networks for anatomical brain segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 20–28.

- Moeskops, P.; Viergever, M.A.; Mendrik, A.M.; De Vries, L.S.; Benders, M.J.N.L.; Isgum, I. Automatic Segmentation of MR Brain Images With a Convolutional Neural Network. IEEE Trans. Med. Imaging 2016, 35, 1252–1261.

- Mehta, R.; Majumdar, A.; Sivaswamy, J. BrainSegNet: A convolutional neural network architecture for automated segmentation of human brain structures. J. Med. Imaging 2017, 4, 024003.

- Wu, J.; Tang, X. Brain segmentation based on multi-atlas and diffeomorphism guided 3D fully convolutional network ensembles. Pattern Recognit. 2021, 115, 107904.

- Wu, Y.; He, K. Group Normalization. Int. J. Comput. Vis. 2020, 128, 742–755.

- Shakeri, M.; Tsogkas, S.; Ferrante, E.; Lippe, S.; Kadoury, S.; Paragios, N.; Kokkinos, I. Sub-cortical brain structure segmentation using F-CNN’S. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016; pp. 269–272.

- Milletari, F.; Ahmadi, S.A.; Kroll, C.; Plate, A.; Rozanski, V.; Maiostre, J.; Levin, J.; Dietrich, O.; Ertl-Wagner, B.; Bötzel, K.; et al. Hough-CNN: Deep learning for segmentation of deep brain regions in MRI and ultrasound. Comput. Vis. Image Underst. 2017, 164, 92–102.

- Bao, S.; Chung, A.C.S. Multi-scale structured CNN with label consistency for brain MR image segmentation. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2018, 6, 113–117.

- Dolz, J.; Desrosiers, C.; Ben Ayed, I. 3D fully convolutional networks for subcortical segmentation in MRI: A large-scale study. NeuroImage 2018, 170, 456–470.

- Mehta, R.; Sivaswamy, J. M-net: A Convolutional Neural Network for deep brain structure segmentation. In Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, Australia, 18–21 April 2017; pp. 437–440.

- Wachinger, C.; Reuter, M.; Klein, T. DeepNAT: Deep convolutional neural network for segmenting neuroanatomy. NeuroImage 2018, 170, 434–445.

- Karani, N.; Chaitanya, K.; Baumgartner, C.; Konukoglu, E. A Lifelong Learning Approach to Brain MR Segmentation Across Scanners and Protocols. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2018; Frangi, A.F., Schnabel, J.A., Davatzikos, C., Alberola-López, C., Fichtinger, G., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 11070, pp. 476–484.

- Kaku, A.; Hegde, C.V.; Huang, J.; Chung, S.; Wang, X.; Young, M.; Radmanesh, A.; Lui, Y.W.; Razavian, N. DARTS: DenseUnet-based Automatic Rapid Tool for brain Segmentation. arXiv 2019, arXiv:1911.05567.

- Huo, Y.; Xu, Z.; Xiong, Y.; Aboud, K.; Parvathaneni, P.; Bao, S.; Bermudez, C.; Resnick, S.M.; Cutting, L.E.; Landman, B.A. 3D whole brain segmentation using spatially localized atlas network tiles. NeuroImage 2019, 194, 105–119.

- Jog, A.; Hoopes, A.; Greve, D.N.; Van Leemput, K.; Fischl, B. PSACNN: Pulse sequence adaptive fast whole brain segmentation. NeuroImage 2019, 199, 553–569.

- Novosad, P.; Fonov, V.; Collins, D.L.; Alzheimer’s Disease Neuroimaging Initiative. Accurate and robust segmentation of neuroanatomy in T1-weighted MRI by combining spatial priors with deep convolutional neural networks. Hum. Brain Mapp. 2020, 41, 309–327.

- Novosad, P.; Fonov, V.; Collins, D.L. Unsupervised domain adaptation for the automated segmentation of neuroanatomy in MRI: A deep learning approach. bioRxiv 2019.

- Dai, C.; Mo, Y.; Angelini, E.; Guo, Y.; Bai, W. Transfer Learning from Partial Annotations for Whole Brain Segmentation. In Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data; Wang, Q., Milletari, F., Nguyen, H.V., Albarqouni, S., Cardoso, M.J., Rieke, N., Xu, Z., Kamnitsas, K., Patel, V., Roysam, B., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11795, pp. 199–206.

- Roy, A.G.; Conjeti, S.; Navab, N.; Wachinger, C. Bayesian QuickNAT: Model uncertainty in deep whole-brain segmentation for structure-wise quality control. NeuroImage 2019, 195, 11–22.

- Luna, M.; Park, S.H. 3D Patchwise U-Net with Transition Layers for MR Brain Segmentation. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Crimi, A., Bakas, S., Kuijf, H., Keyvan, F., Reyes, M., Van Walsum, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11383, pp. 394–403.

- Dalca, A.V.; Yu, E.; Golland, P.; Fischl, B.; Sabuncu, M.R.; Eugenio Iglesias, J. Unsupervised Deep Learning for Bayesian Brain MRI Segmentation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019; Shen, D., Liu, T., Peters, T.M., Staib, L.H., Essert, C., Zhou, S., Yap, P.T., Khan, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11766, pp. 356–365.

- Coupé, P.; Mansencal, B.; Clément, M.; Giraud, R.; Denis De Senneville, B.; Ta, V.T.; Lepetit, V.; Manjon, J.V. AssemblyNet: A large ensemble of CNNs for 3D whole brain MRI segmentation. NeuroImage 2020, 219, 117026.

- Liu, L.; Hu, X.; Zhu, L.; Fu, C.W.; Qin, J.; Heng, P.A. ψ-Net: Stacking Densely Convolutional LSTMs for Sub-Cortical Brain Structure Segmentation. IEEE Trans. Med. Imaging 2020, 39, 2806–2817.

- Zopes, J.; Platscher, M.; Paganucci, S.; Federau, C. Multi-Modal Segmentation of 3D Brain Scans Using Neural Networks. Front. Neurol. 2021, 12, 653375.

- Li, H.; Zhang, H.; Johnson, H.; Long, J.D.; Paulsen, J.S.; Oguz, I. MRI subcortical segmentation in neurodegeneration with cascaded 3D CNNs. In Proceedings of the Medical Imaging 2021: Image Processing, Online, 15–19 February 2021; p. 25.

- Svanera, M.; Benini, S.; Bontempi, D.; Muckli, L. CEREBRUM-7T: Fast and Fully Volumetric Brain Segmentation of 7 Tesla MR Volumes. Hum. Brain Mapp. 2021, 42, 5563–5580.

- Li, Y.; Li, H.; Fan, Y. ACEnet: Anatomical context-encoding network for neuroanatomy segmentation. Med. Image Anal. 2021, 70, 101991.

- Greve, D.N.; Billot, B.; Cordero, D.; Hoopes, A.; Hoffmann, M.; Dalca, A.V.; Fischl, B.; Iglesias, J.E.; Augustinack, J.C. A deep learning toolbox for automatic segmentation of subcortical limbic structures from MRI images. NeuroImage 2021, 244, 118610.

- Nejad, A.; Masoudnia, S.; Nazem-Zadeh, M.R. A Fast and Memory-Efficient Brain MRI Segmentation Framework for Clinical Applications. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 2140–2143.

- Liu, L.; Aviles-Rivero, A.I.; Schönlieb, C.B. Contrastive Registration for Unsupervised Medical Image Segmentation. arXiv 2022, arXiv:2011.08894.

- Ghazi, M.M.; Nielsen, M. FAST-AID Brain: Fast and Accurate Segmentation Tool Using Artificial Intelligence Developed for Brain. In Image Analysis; Petersen, J., Dahl, V.A., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2025; Volume 15725, pp. 161–176.

- Wei, C.; Yang, Y.; Guo, X.; Ye, C.; Lv, H.; Xiang, Y.; Ma, T. MRF-Net: A multi-branch residual fusion network for fast and accurate whole-brain MRI segmentation. Front. Neurosci. 2022, 16, 940381.

- Baniasadi, M.; Petersen, M.V.; Gonçalves, J.; Horn, A.; Vlasov, V.; Hertel, F.; Husch, A. DBSegment: Fast and robust segmentation of deep brain structures considering domain generalization. Hum. Brain Mapp. 2023, 44, 762–778.

- Billot, B.; Magdamo, C.; Cheng, Y.; Arnold, S.E.; Das, S.; Iglesias, J.E. Robust machine learning segmentation for large-scale analysis of heterogeneous clinical brain MRI datasets. Proc. Natl. Acad. Sci. USA 2023, 120, e2216399120.

- Billot, B.; Greve, D.N.; Puonti, O.; Thielscher, A.; Van Leemput, K.; Fischl, B.; Dalca, A.V.; Iglesias, J.E. SynthSeg: Segmentation of brain MRI scans of any contrast and resolution without retraining. Med. Image Anal. 2023, 86, 102789.

- Moon, C.M.; Lee, Y.Y.; Hyeong, K.E.; Yoon, W.; Baek, B.H.; Heo, S.H.; Shin, S.S.; Kim, S.K. Development and validation of deep learning-based automatic brain segmentation for East Asians: A comparison with Freesurfer. Front. Neurosci. 2023, 17, 1157738.

- Diaz, I.; Geiger, M.; McKinley, R.I. Leveraging SO(3)-steerable convolutions for pose-robust semantic segmentation in 3D medical data. Mach. Learn. Biomed. Imaging 2024, 2, 834–855.

- Kujawa, A.; Dorent, R.; Ourselin, S.; Vercauteren, T. Label Merge-and-Split: A Graph-Colouring Approach for Memory-Efficient Brain Parcellation. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2024; Linguraru, M.G., Dou, Q., Feragen, A., Giannarou, S., Glocker, B., Lekadir, K., Schnabel, J.A., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; Volume 15009, pp. 350–360.

- Goto, M.; Kamagata, K.; Andica, C.; Takabayashi, K.; Uchida, W.; Goto, T.; Yuzawa, T.; Kitamura, Y.; Hatano, T.; Hattori, N.; et al. Deep Learning-based Hierarchical Brain Segmentation with Preliminary Analysis of the Repeatability and Reproducibility. Magn. Reson. Med. Sci. 2025, 24.

- Bot, E.L.; Giraud, R.; Mansencal, B.; Tourdias, T.; Manjon, J.V.; Coupé, P. FLAIRBrainSeg: Fine-grained brain segmentation using FLAIR MRI only. arXiv 2025.

- Puzio, T.; Matera, K.; Karwowski, J.; Piwnik, J.; Białkowski, S.; Podyma, M.; Dunikowski, K.; Siger, M.; Stasiołek, M.; Grzelak, P.; et al. Deep learning-based automatic segmentation of brain structures on MRI: A test-retest reproducibility analysis. Comput. Struct. Biotechnol. J. 2025, 28, 128–140.

- Prajapati, R.; Kwon, G.R. SIP-UNet: Sequential Inputs Parallel UNet Architecture for Segmentation of Brain Tissues from Magnetic Resonance Images. Mathematics 2022, 10, 2755.

- Woo, B.; Lee, M. Comparison of tissue segmentation performance between 2D U-Net and 3D U-Net on brain MR Images. In Proceedings of the 2021 International Conference on Electronics, Information, and Communication (ICEIC), Jeju, Republic of Korea, 31 January–3 February 2021; pp. 1–4.

- Avesta, A.; Hossain, S.; Lin, M.; Aboian, M.; Krumholz, H.M.; Aneja, S. Comparing 3D, 2.5D, and 2D Approaches to Brain Image Auto-Segmentation. Bioengineering 2023, 10, 181.

- Hossain, M.I.; Amin, M.Z.; Anyimadu, D.T.; Suleiman, T.A. Comparative Study of Probabilistic Atlas and Deep Learning Approaches for Automatic Brain Tissue Segmentation from MRI Using N4 Bias Field Correction and Anisotropic Diffusion Pre-processing Techniques. arXiv 2024.

- Stollenga, M.F.; Byeon, W.; Liwicki, M.; Schmidhuber, J. Parallel Multi-Dimensional LSTM, With Application to Fast Biomedical Volumetric Image Segmentation. In Proceedings of the Neural Information Processing Systems, Istanbul, Turkey, 9–12 November 2015.

- Nguyen, D.M.H.; Vu, H.T.; Ung, H.Q.; Nguyen, B.T. 3D-Brain Segmentation Using Deep Neural Network and Gaussian Mixture Model. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 815–824.

- Fedorov, A.; Johnson, J.; Damaraju, E.; Ozerin, A.; Calhoun, V.; Plis, S. End-to-end learning of brain tissue segmentation from imperfect labeling. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 3785–3792.

- Khagi, B.; Kwon, G.R. Pixel-Label-Based Segmentation of Cross-Sectional Brain MRI Using Simplified SegNet Architecture-Based CNN. J. Healthc. Eng. 2018, 2018, 3640705.

- Rajchl, M.; Pawlowski, N.; Rueckert, D.; Matthews, P.M.; Glocker, B. NeuroNet: Fast and Robust Reproduction of Multiple Brain Image Segmentation Pipelines. arXiv 2018, arXiv:1806.04224.

- Gottapu, R.D.; Dagli, C.H. DenseNet for Anatomical Brain Segmentation. Procedia Comput. Sci. 2018, 140, 179–185.

- Mahbod, A.; Chowdhury, M.; Smedby, O.; Wang, C. Automatic brain segmentation using artificial neural networks with shape context. Pattern Recognit. Lett. 2018, 101, 74–79.

- Chen, Y.; Chen, J.; Wei, D.; Li, Y.; Zheng, Y. OctopusNet: A Deep Learning Segmentation Network for Multi-modal Medical Images. In Multiscale Multimodal Medical Imaging; Li, Q., Leahy, R., Dong, B., Li, X., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 11977, pp. 17–25.

- Kong, Z.; Li, T.; Luo, J.; Xu, S. Automatic Tissue Image Segmentation Based on Image Processing and Deep Learning. J. Healthc. Eng. 2019, 2019, 2912458.

- Gabr, R.E.; Coronado, I.; Robinson, M.; Sujit, S.J.; Datta, S.; Sun, X.; Allen, W.J.; Lublin, F.D.; Wolinsky, J.S.; Narayana, P.A. Brain and lesion segmentation in multiple sclerosis using fully convolutional neural networks: A large-scale study. Mult. Scler. J. 2020, 26, 1217–1226.

- Ito, R.; Nakae, K.; Hata, J.; Okano, H.; Ishii, S. Semi-supervised deep learning of brain tissue segmentation. Neural Netw. 2019, 116, 25–34.

- Yogananda, C.G.B.; Wagner, B.C.; Murugesan, G.K.; Madhuranthakam, A.; Maldjian, J.A. A Deep Learning Pipeline for Automatic Skull Stripping and Brain Segmentation. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 727–731.

- Mújica-Vargas, D.; Martínez, A.; Matuz-Cruz, M.; Luna-Alvarez, A.; Morales-Xicohtencatl, M. Non-parametric Brain Tissues Segmentation via a Parallel Architecture of CNNs. In Pattern Recognition; Carrasco-Ochoa, J.A., Martínez-Trinidad, J.F., Olvera-López, J.A., Salas, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; Volume 11524, pp. 216–226.

- Wang, L.; Xie, C.; Zeng, N. RP-Net: A 3D Convolutional Neural Network for Brain Segmentation From Magnetic Resonance Imaging. IEEE Access 2019, 7, 39670–39679.

- Xie, K.; Wen, Y. LSTM-MA: A LSTM Method with Multi-Modality and Adjacency Constraint for Brain Image Segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 240–244.

- Zhang, Y.; Kong, Y.; Wu, J.; Coatrieux, G.; Shu, H. Brain Tissue Segmentation based on Graph Convolutional Networks. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1470–1474.

- Narayana, P.A.; Coronado, I.; Sujit, S.J.; Wolinsky, J.S.; Lublin, F.D.; Gabr, R.E. Deep-Learning-Based Neural Tissue Segmentation of MRI in Multiple Sclerosis: Effect of Training Set Size. J. Magn. Reson. Imaging 2020, 51, 1487–1496.

- Long, J.S.; Ma, G.Z.; Song, E.M.; Jin, R.C. Learning U-Net Based Multi-Scale Features in Encoding-Decoding for MR Image Brain Tissue Segmentation. Sensors 2021, 21, 3232.

- Zhang, F.; Breger, A.; Kevin Cho, K.I.; Ning, L.; Westin, C.F.; O’Donnell, L.J.; Pasternak, O. Deep Learning Based Segmentation of Brain Tissue from Diffusion MRI. NeuroImage 2021.

- Zhang, Y.; Li, Y.; Kong, Y.; Wu, J.; Yang, J.; Shu, H.; Coatrieux, G. GSCFN: A graph self-construction and fusion network for semi-supervised brain tissue segmentation in MRI. Neurocomputing 2021, 455, 23–37.

- Goyal, P. Shallow SegNet with bilinear interpolation and weighted cross-entropy loss for Semantic segmentation of brain tissue. In Proceedings of the 2022 IEEE International Conference on Signal Processing, Informatics, Communication and Energy Systems (SPICES), Thiruvananthapuram, India, 10–12 March 2022; pp. 361–365.

- Yamanakkanavar, N.; Lee, B. MF2-Net: A multipath feature fusion network for medical image segmentation. Eng. Appl. Artif. Intell. 2022, 114, 105004.

- Clèrigues, A.; Valverde, S.; Salvi, J.; Oliver, A.; Lladó, X. Minimizing the effect of white matter lesions on deep learning based tissue segmentation for brain volumetry. Comput. Med. Imaging Graph. 2023, 103, 102157.

- Altun Güven, S.; Talu, M.F. Brain MRI high resolution image creation and segmentation with the new GAN method. Biomed. Signal Process. Control 2023, 80, 104246.

- Oh, K.; Lee, J.; Heo, D.W.; Shen, D.; Suk, H.I. Transferring Ultrahigh-Field Representations for Intensity-Guided Brain Segmentation of Low-Field Magnetic Resonance Imaging. arXiv 2024.

- Fletcher, E.; DeCarli, C.; Fan, A.P.; Knaack, A. Convolutional Neural Net Learning Can Achieve Production-Level Brain Segmentation in Structural Magnetic Resonance Imaging. Front. Neurosci. 2021, 15, 683426.

- Taha, A.A.; Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med. Imaging 2015, 15, 29.

- Mendrik, A.M.; Vincken, K.L.; Kuijf, H.J.; Breeuwer, M.; Bouvy, W.H.; De Bresser, J.; Alansary, A.; De Bruijne, M.; Carass, A.; El-Baz, A.; et al. MRBrainS Challenge: Online Evaluation Framework for Brain Image Segmentation in 3T MRI Scans. Comput. Intell. Neurosci. 2015, 2015, 813696.

- Rohlfing, T. Image Similarity and Tissue Overlaps as Surrogates for Image Registration Accuracy: Widely Used but Unreliable. IEEE Trans. Med. Imaging 2012, 31, 153–163.

- Landman, B.A.; Warfield, S.K. (Eds.) MICCAI 2012 Workshop on Multi-Atlas Labeling; CreateSpace Independent Publishing Platform: Scotts Valley, CA, USA, 2012.

- Worth, A.; Tourville, J. Acceptable values of similarity coefficients in neuroanatomical labeling in MRI. In Proceedings of the Society for Neuroscience Annual Meeting, Chicago, IL, USA, 17–21 October 2015. Program No. 829.21.

- Valverde, S.; Oliver, A.; Cabezas, M.; Roura, E.; Lladó, X. Comparison of 10 brain tissue segmentation methods using revisited IBSR annotations. J. Magn. Reson. Imaging 2015, 41, 93–101.

- Jenkinson, M.; Beckmann, C.F.; Behrens, T.E.; Woolrich, M.W.; Smith, S.M. FSL. NeuroImage 2012, 62, 782–790.

- Datta, S.; Narayana, P.A. A comprehensive approach to the segmentation of multichannel three-dimensional MR brain images in multiple sclerosis. NeuroImage Clin. 2013, 2, 184–196.

- Avants, B.B.; Tustison, N.J.; Song, G.; Cook, P.A.; Klein, A.; Gee, J.C. A reproducible evaluation of ANTs similarity metric performance in brain image registration. NeuroImage 2011, 54, 2033–2044.

- Cardoso, M.J.; Modat, M.; Wolz, R.; Melbourne, A.; Cash, D.; Rueckert, D.; Ourselin, S. Geodesic Information Flows: Spatially-Variant Graphs and Their Application to Segmentation and Fusion. IEEE Trans. Med. Imaging 2015, 34, 1976–1988.

- Asman, A.J.; Landman, B.A. Hierarchical performance estimation in the statistical label fusion framework. Med. Image Anal. 2014, 18, 1070–1081.

- Struyfs, H.; Sima, D.M.; Wittens, M.; Ribbens, A.; Pedrosa De Barros, N.; Phan, T.V.; Ferraz Meyer, M.I.; Claes, L.; Niemantsverdriet, E.; Engelborghs, S.; et al. Automated MRI volumetry as a diagnostic tool for Alzheimer’s disease: Validation of icobrain dm. NeuroImage Clin. 2020, 26, 102243.

- Kondrateva, E.; Barg, S.; Vasiliev, M. Benchmarking the Reproducibility of Brain MRI Segmentation Across Scanners and Time. arXiv 2025, arXiv:2504.15931.

- Battaglini, M.; Jenkinson, M.; De Stefano, N.; Alzheimer’s Disease Neuroimaging Initiative. SIENA-XL for improving the assessment of gray and white matter volume changes on brain MRI. Hum. Brain Mapp. 2018, 39, 1063–1077.

- Cocosco, C.A.; Kollokian, V.; Kwan, R.K.S.; Evans, A.C. BrainWeb: Online Interface to a 3D MRI Simulated Brain Database. NeuroImage 1997, 5, 425.

| Segmentation Type | Comparison | Method 1 | Method 2 | Mean Difference | p-Value |
|---|---|---|---|---|---|
| Structure | Input Size | Patch | Full | 0.3 | 0.41 |
| | Dimensionality | 2D | 2.5D | −1.67 | 0.56 |
| | | 2D | 3D | −1.1 | 0.58 |
| | | 2.5D | 3D | 0.56 | 0.83 |
| Tissue | Input Size | Patch | Full | −0.02 | 0.97 |
| | Dimensionality | 2D | 2.5D | 6.1 | 0.7 |
| | | 2D | 3D | 2.7 | 0.36 |
| | | 2.5D | 3D | −3.4 | 0.34 |
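
The comparison table above reports a mean value and a p-value for each pair of design choices, but this section does not state which statistical test produced them. The sketch below assumes that the mean is the average DSC of Method 1 minus the average DSC of Method 2 across the reviewed studies, and it uses an exact two-sided permutation test as a stand-in; it illustrates one reasonable way to obtain such numbers and is not necessarily the authors' procedure. The function names and the example DSC values are hypothetical.

```python
from itertools import combinations
from statistics import mean


def mean_difference(method1, method2):
    """Average DSC of Method 1 minus average DSC of Method 2."""
    return mean(method1) - mean(method2)


def permutation_p_value(method1, method2):
    """Exact two-sided permutation p-value for a difference in means.

    Stand-in test (assumption): the review does not name the test it used.
    Every split of the pooled scores into groups of the original sizes is
    enumerated, so this is only practical for small groups of studies.
    """
    pooled = list(method1) + list(method2)
    n1 = len(method1)
    observed = abs(mean_difference(method1, method2))
    extreme = 0
    total = 0
    for chosen in combinations(range(len(pooled)), n1):
        chosen_set = set(chosen)
        group1 = [pooled[i] for i in chosen]
        group2 = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        total += 1
        # Small tolerance so splits tying with the observed one are counted.
        if abs(mean(group1) - mean(group2)) >= observed - 1e-9:
            extreme += 1
    return extreme / total


if __name__ == "__main__":
    # Hypothetical per-study DSC (%) values, not taken from the review.
    patch_based = [80.0, 85.0, 90.0]
    full_image = [70.0, 75.0, 80.0]
    print(mean_difference(patch_based, full_image))      # 10.0
    print(permutation_p_value(patch_based, full_image))  # 0.2
```

A permutation test makes no normality assumption, which suits small groups of published results; a Welch t-test would be a common parametric alternative.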

| Reference | Method | Training | Labels | DSC (%) |
|---|---|---|---|---|
| Kaku et al. [95] | DenseUNet | No | 102 | 74.31 |
| | | Yes | 102 | 81.9 |
| | U-Net | No | 102 | 73.29 |
| | | Yes | 102 | 80 |
| Henschel et al. [26] | FastSurfer | No | 33 subcortical | 80.19 |
| | | | 62 cortical | 80.65 |
| | 3D U-Net | | 33 subcortical | 78.65 |
| | | | 62 cortical | 79 |
| Li et al. [109] | ACEnet | Yes | 62 cortical | 82.5 |
| | QuickNAT v2 | | | 77.7 |
| Laiton-Bonadiez et al. [70] | Proposed | Yes | 37 | 75 |
| Liu et al. [112] | CLMorph | No | 5 combined | 64.6 |
| Henschel et al. [48] | FastSurferVINN | No | 33 subcortical | 80.06 |
| | | | 62 cortical | 81.89 |
| | FastSurfer | | 33 subcortical | 80.06 |
| | | | 62 cortical | 81.23 |
| Cao et al. [69] | TABSurfer | Yes | 31 subcortical | 79.2 |
| | FastSurfer | No | | 75.8 |
| | FreeSurfer | / | | 74 |
| Kujawa et al. [120] | Proposed | No | 108 | 74 |
| Lorzel et al. [25] | AutoParch | Yes | 58 combined | 77.8 |
| | FreeSurfer | | | 85.7 |
| Svanera et al. [52] | LOD-Brain | No | 7 combined | 95.5 |
| | FastSurfer | | | 96.5 |
| Diaz et al. [119] | e3nn | Yes | 7 subcortical | 88 |
| | nnU-Net | | | 89 |
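
The DSC (%) columns report Dice similarity coefficients scaled to percent, so a score given as a fraction (for example 0.88) corresponds to 88%. For multi-label tasks such as the 33 subcortical or 62 cortical labels above, DSC is commonly reported as the per-label Dice averaged over the listed structures, although individual papers may average differently (per subject, per label, weighted or unweighted). The minimal sketch below computes the unweighted per-label mean, assuming flattened integer label maps and that the background label 0 is left out of the label list; the function names are hypothetical.

```python
from statistics import mean


def dice_per_label(pred, truth, labels):
    """Dice similarity coefficient, 2|A ∩ B| / (|A| + |B|), for each label.

    `pred` and `truth` are equally long flat sequences of integer labels,
    e.g. a 3D label volume flattened voxel by voxel.
    """
    if len(pred) != len(truth):
        raise ValueError("prediction and reference must have the same number of voxels")
    scores = {}
    for label in labels:
        intersection = sum(1 for p, t in zip(pred, truth) if p == label and t == label)
        size_pred = sum(1 for p in pred if p == label)
        size_truth = sum(1 for t in truth if t == label)
        denominator = size_pred + size_truth
        # Assumed convention: a label absent from both maps counts as a perfect match.
        scores[label] = 1.0 if denominator == 0 else 2.0 * intersection / denominator
    return scores


def mean_dsc_percent(pred, truth, labels):
    """Unweighted mean of the per-label DSC, expressed in percent."""
    return 100.0 * mean(list(dice_per_label(pred, truth, labels).values()))


if __name__ == "__main__":
    # Toy example: background 0 plus two structures, labels 1 and 2.
    truth = [0, 1, 1, 2, 2, 2]
    pred = [0, 1, 2, 2, 2, 1]
    print(dice_per_label(pred, truth, [1, 2]))              # {1: 0.5, 2: 0.6666666666666666}
    print(round(mean_dsc_percent(pred, truth, [1, 2]), 2))  # 58.33
```

For real volumes a vectorized array implementation would be used instead of Python loops, but the per-label formula and the averaging step are the same.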

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Šišić, N.; Rogelj, P. Deep Learning for Brain MRI Tissue and Structure Segmentation: A Comprehensive Review. Algorithms 2025, 18, 636. https://doi.org/10.3390/a18100636

Šišić N, Rogelj P. Deep Learning for Brain MRI Tissue and Structure Segmentation: A Comprehensive Review. Algorithms. 2025; 18(10):636. https://doi.org/10.3390/a18100636

Chicago/Turabian Style: Šišić, Nedim, and Peter Rogelj. 2025. "Deep Learning for Brain MRI Tissue and Structure Segmentation: A Comprehensive Review" Algorithms 18, no. 10: 636. https://doi.org/10.3390/a18100636

APA Style: Šišić, N., & Rogelj, P. (2025). Deep Learning for Brain MRI Tissue and Structure Segmentation: A Comprehensive Review. Algorithms, 18(10), 636. https://doi.org/10.3390/a18100636