Mapping the Distribution of High-Value Broadleaf Tree Crowns through Unmanned Aerial Vehicle Image Analysis Using Deep Learning

Abstract

1. Introduction

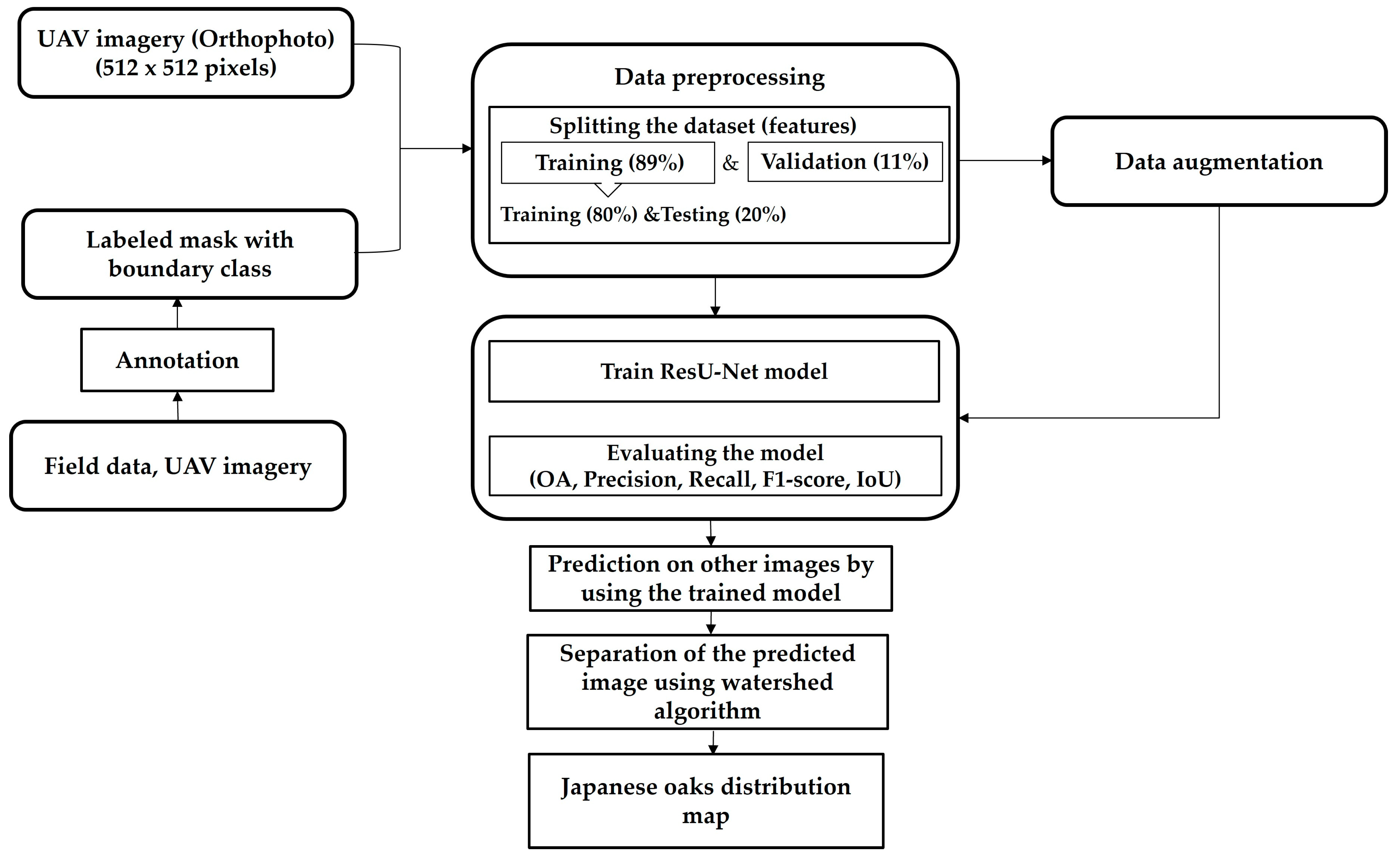

2. Materials and Methods

2.1. Study Site

2.2. Data Collection

2.2.1. Field Data

2.2.2. UAV Image Collection and Processing

2.2.3. Image Data Augmentation

2.3. Data Analysis

2.3.1. Deep Learning Algorithm

2.3.2. Identification of Japanese Oak Crowns Using the ResU-Net Model

2.3.3. Evaluation Metrics for the Model

- TP (True Positives): Pixels correctly predicted as positive (belonging to the target class).

- FP (False Positives): Pixels incorrectly predicted as positive (model predicts the class, but it is not actually present).

- FN (False Negatives): Pixels incorrectly predicted as negative (model fails to predict the class when it should have).
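The counts defined above feed the scores reported in the results tables (OA, precision, recall, F1-score, IoU). The following is a minimal sketch of how these scores are conventionally computed from per-pixel counts, not the authors' implementation. It assumes binary masks flattened to sequences of 0/1, and it introduces TN (true negatives, pixels correctly predicted as background), which overall accuracy needs but the list above does not define. The function names `confusion_counts` and `segmentation_metrics` are hypothetical.

```python
from typing import Dict, Sequence


def confusion_counts(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, int]:
    """Count TP, FP, FN and TN for flattened binary masks (1 = target class)."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must contain the same number of pixels")
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for p, t in zip(pred, truth):
        if p == 1 and t == 1:
            counts["TP"] += 1      # predicted target, actually target
        elif p == 1 and t == 0:
            counts["FP"] += 1      # predicted target, actually background
        elif p == 0 and t == 1:
            counts["FN"] += 1      # missed a target pixel
        else:
            counts["TN"] += 1      # assumption: TN is not defined in the list above
    return counts


def _ratio(numerator: float, denominator: float) -> float:
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def segmentation_metrics(pred: Sequence[int], truth: Sequence[int]) -> Dict[str, float]:
    """Compute the conventional pixel-wise scores from the confusion counts."""
    c = confusion_counts(pred, truth)
    tp, fp, fn, tn = c["TP"], c["FP"], c["FN"], c["TN"]
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "OA": _ratio(tp + tn, tp + fp + fn + tn),
        "Precision": precision,
        "Recall": recall,
        "F1": _ratio(2 * precision * recall, precision + recall),
        "IoU": _ratio(tp, tp + fp + fn),
    }


if __name__ == "__main__":
    # Toy 8-pixel example, not data from the study.
    predicted = [1, 1, 1, 0, 0, 0, 1, 0]
    reference = [1, 1, 0, 0, 0, 1, 1, 0]
    for name, value in segmentation_metrics(predicted, reference).items():
        print(f"{name}: {value:.2f}")
```

In the toy example there are 3 TP, 1 FP, 1 FN and 3 TN, so precision and recall are both 3/4 and IoU is 3/5. Returning 0.0 for an empty denominator is a design choice of this sketch; other conventions (for example, reporting the score as undefined) are equally defensible.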

3. Results

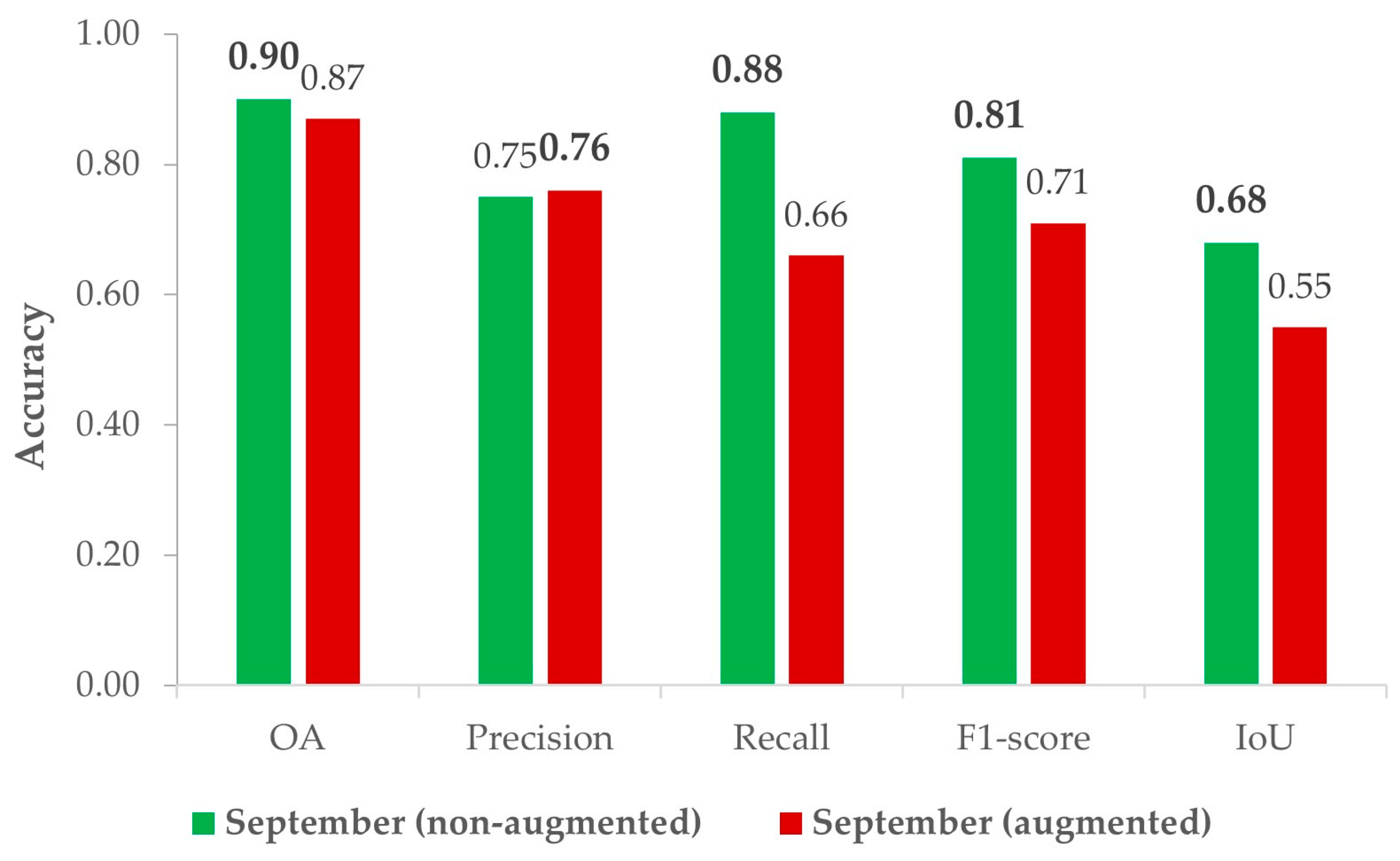

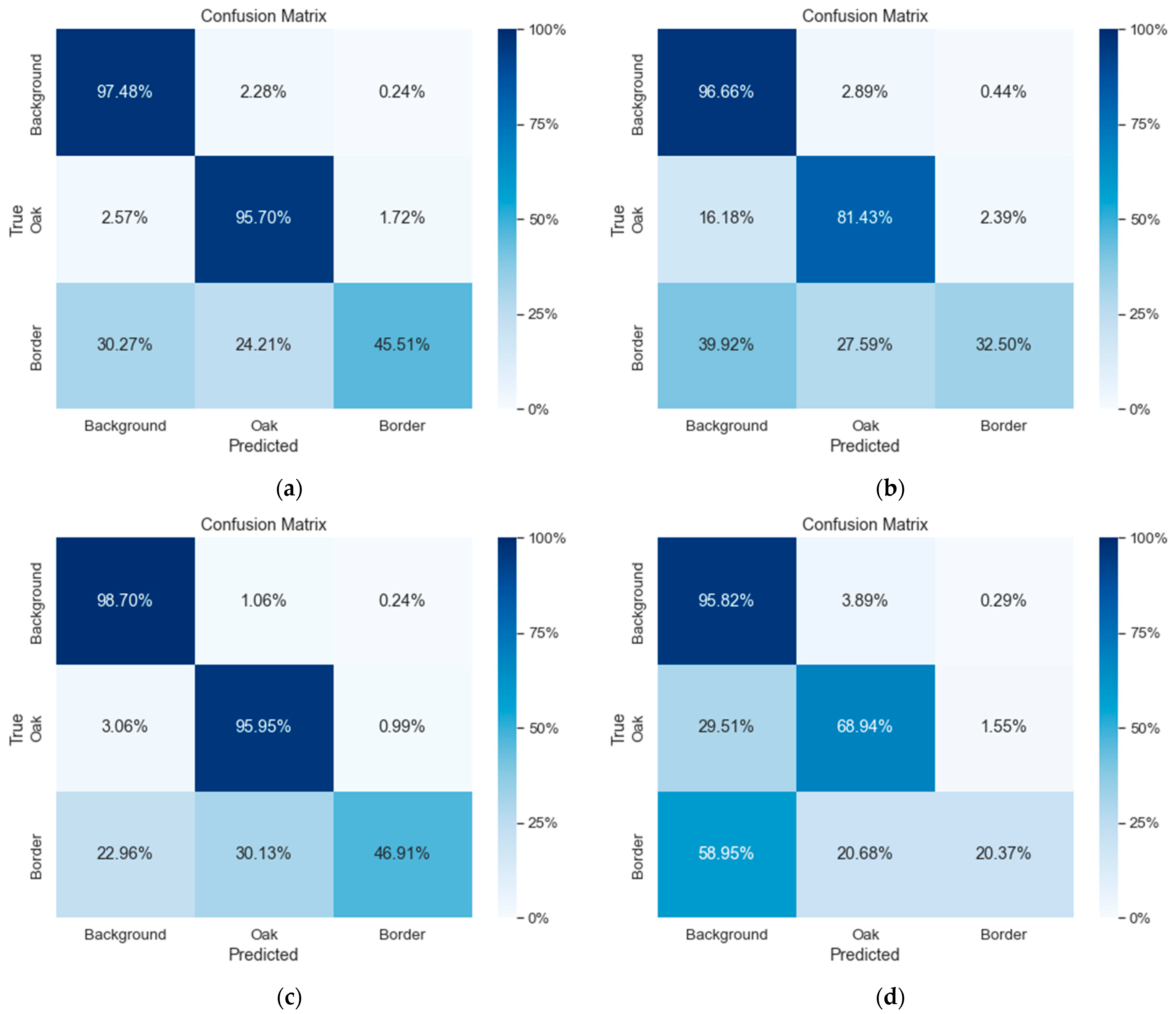

3.1. Japanese Oak Segmentation Using the ResU-Net Model and the UAV Datasets Acquired before the Leaf Color Change (without and with Augmentation)

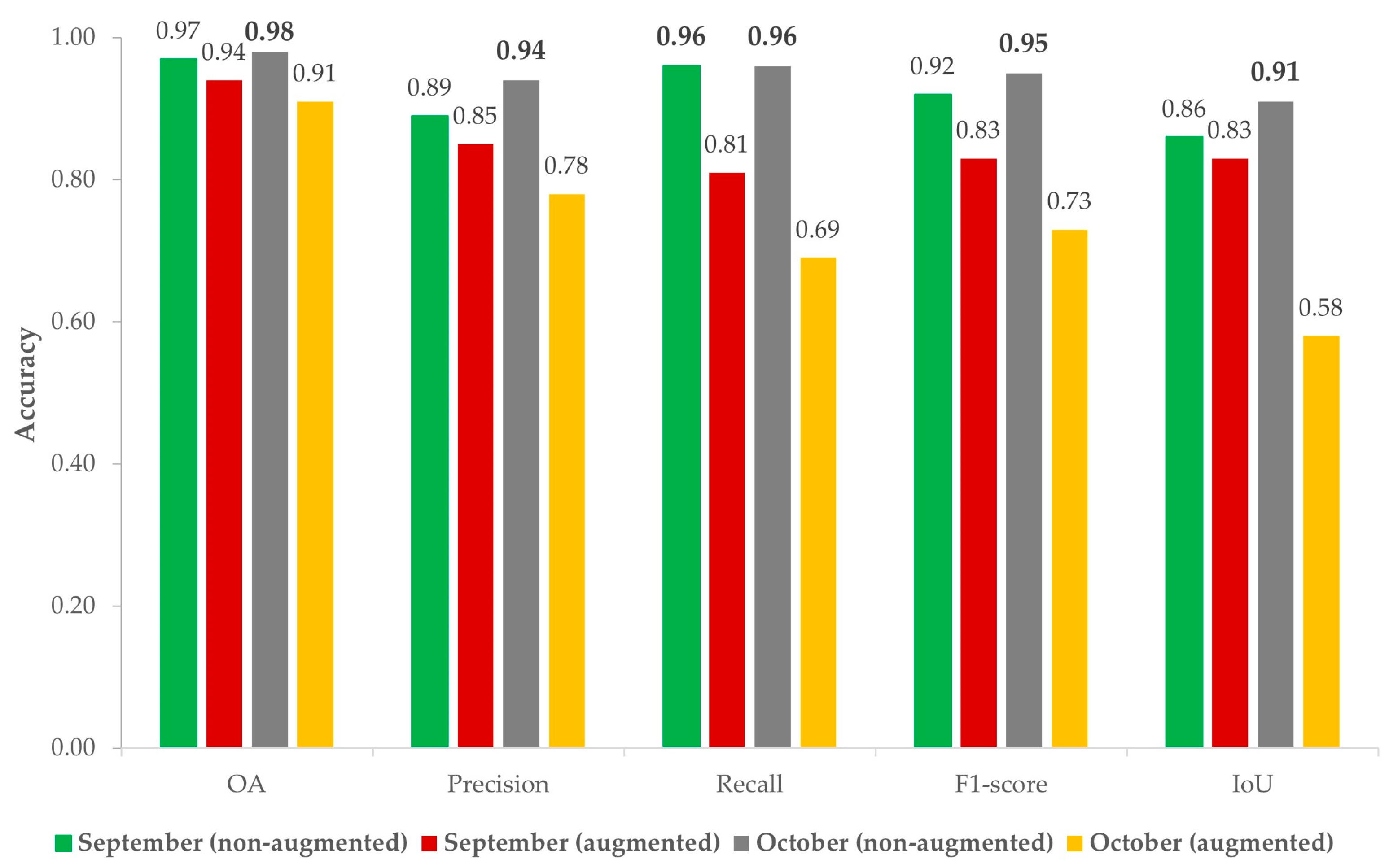

3.2. Japanese Oak Segmentation Using the ResU-Net Model and the UAV Datasets Acquired after the Change in Leaf Color (without and with Augmentation)

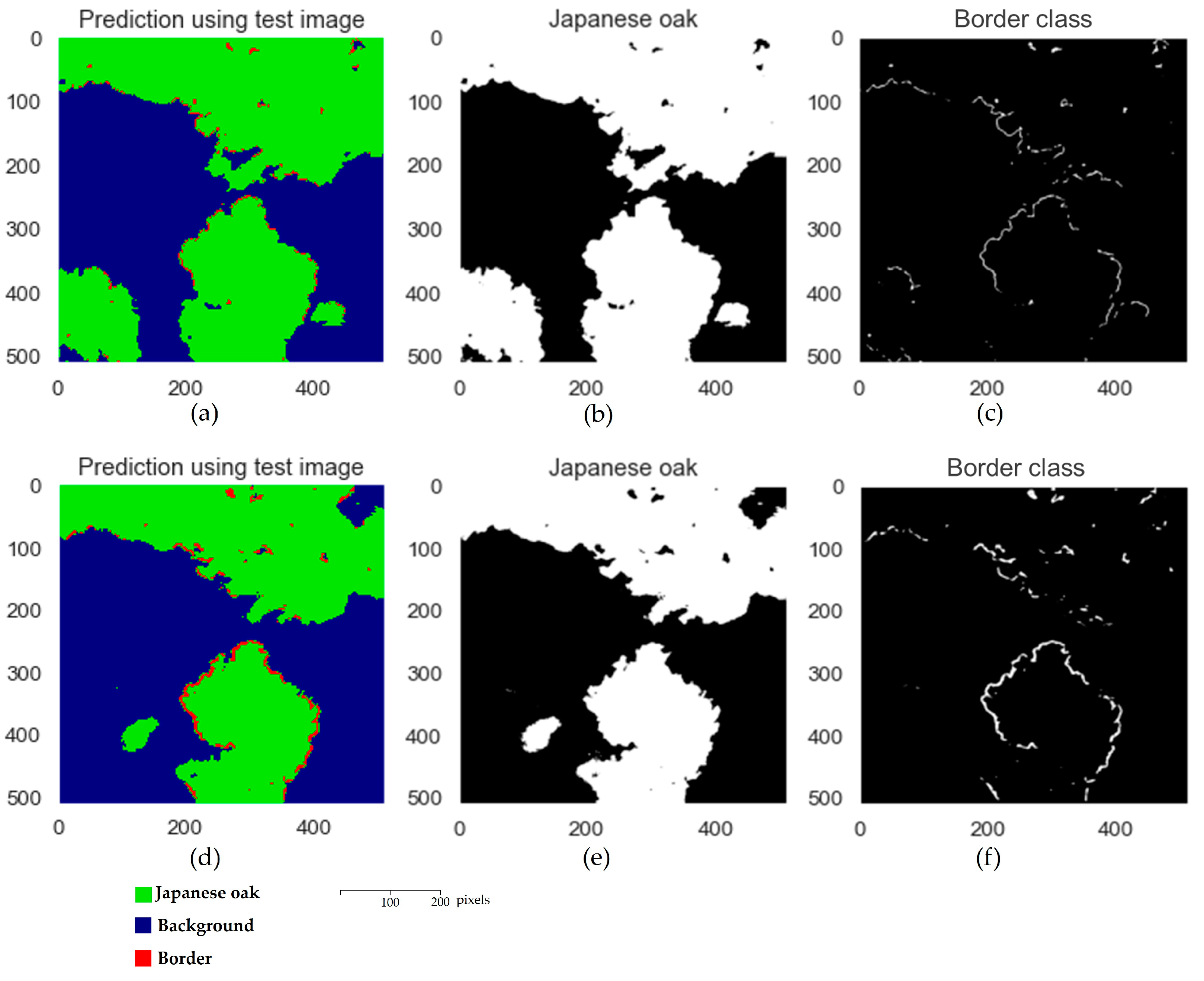

3.3. Performance of the ResU-Net Model with UAV Datasets for Mapping Japanese Oak Crowns Distribution in an Uneven-Aged Mixed Forest

4. Discussion

4.1. Performance of the ResU-Net Model for Individual Tree Crown Segmentation

4.2. Impact of Data Augmentation on the Classification Results

4.3. Importance of Preparing a Representative Validation Dataset for the Diversity of the Entire Dataset

4.4. Response of the ResU-Net Model to Two Different Seasonal UAV Datasets for Japanese Oak Crown Detection

4.5. General Discussion of the Misclassifications

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| UAV Dataset | OA | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|---|
| September (non-augmented) | 0.90 | 0.75 | 0.88 | 0.81 | 0.68 |
| September (augmented) | 0.87 | 0.76 | 0.66 | 0.71 | 0.55 |

| UAV Dataset | OA | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|---|
| October (non-augmented) | 0.90 | 0.78 | 0.82 | 0.80 | 0.67 |
| October (augmented) | 0.89 | 0.82 | 0.70 | 0.76 | 0.61 |

| UAV Dataset | OA | Precision | Recall | F1-Score | IoU |
|---|---|---|---|---|---|
| September (non-augmented) | 0.97 | 0.89 | 0.96 | 0.92 | 0.86 |
| September (augmented) | 0.94 | 0.85 | 0.81 | 0.83 | 0.83 |
| October (non-augmented) | 0.98 | 0.94 | 0.96 | 0.95 | 0.91 |
| October (augmented) | 0.91 | 0.78 | 0.69 | 0.73 | 0.58 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Htun, N.M.; Owari, T.; Tsuyuki, S.; Hiroshima, T. Mapping the Distribution of High-Value Broadleaf Tree Crowns through Unmanned Aerial Vehicle Image Analysis Using Deep Learning. Algorithms 2024, 17, 84. https://doi.org/10.3390/a17020084
