Numerical Algorithms in III–V Semiconductor Heterostructures

Abstract

1. Introduction

2. Materials and Methods

2.1. Theory

2.2. The Genetic Algorithm Used

- Initialization Step

- (a) Set the total number of chromosomes.

- (b) Set the maximum number of generations allowed.

- (c) Define K as the number of weights for the neural networks.

- (d) Randomly produce the initial chromosomes. Every chromosome consists of two equal parts: the first half holds the parameters of the first artificial neural network and the second half holds the parameters of the second artificial neural network. The size of each part is set to 3K, where K is the number of weights.

- (e) Set the selection rate.

- (f) Set the mutation rate.

- (g) Set iter = 0.

- Fitness calculation Step

- (a) For every chromosome in the population, do

- Calculate the fitness of the chromosome. The chromosome consists of two equal parts. The first part (elements 1 to 3K) represents the parameters of the first artificial neural network and the second part (elements 3K+1 to 6K) represents the parameters of the second artificial neural network. The calculation of the fitness has the following steps:

- Set the parameters of the first network to the first part of the chromosome.

- Set the parameters of the second network to the second part of the chromosome.

- Set the fitness to the value of Equation (14).

- (b) EndFor

- Genetic operations step

- (a) Selection procedure: The chromosomes are sorted according to their fitness values. Those with the lowest fitness values are copied to the next generation and the rest are replaced by offspring produced during the crossover procedure.

- (b) Crossover procedure: Two new offspring are created for every selected pair of parent chromosomes. The parents are selected using tournament selection. Each offspring is produced from the two parents with the help of a randomly drawn number [42].

- (c) Mutation procedure: For every element of each chromosome, a random number is drawn. If this number is below the mutation rate, the element is altered randomly.

- Termination Check Step

- (a) Set iter = iter + 1.

- (b) The termination rule used here was initially proposed in the work of Tsoulos [43]. The algorithm computes the variance of the best-located fitness value at every iteration. If no better value has been discovered for a number of generations, this is good evidence that the algorithm should terminate. The rule is formulated in terms of the best fitness of the population, the associated variance at generation iter, and the last generation where a new minimum was found.

- (c) If the termination rule is not satisfied, go to the Fitness calculation Step.

- Local Search Step

- (a) Obtain the best chromosome of the population.

- (b) Apply a local search procedure to the best chromosome. In the current implementation, the BFGS variant published by Powell [44] was used as the local search procedure.
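The genetic operations step can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The blend rule in `crossover` and its coefficient range [-0.5, 1.5] are assumed forms commonly used in real-coded genetic algorithms. The share of chromosomes copied unchanged is taken to be one minus the selection rate. The mutation test, the tournament size and the redraw interval `bound` are likewise assumptions. All function and parameter names are hypothetical.

```python
import random


def crossover(x, y, rng, low=-0.5, high=1.5):
    """Blend two parents element-wise into two offspring (assumed rule)."""
    z, w = [], []
    for xi, yi in zip(x, y):
        a = rng.uniform(low, high)  # assumed range of the random coefficient
        z.append(a * xi + (1.0 - a) * yi)
        w.append(a * yi + (1.0 - a) * xi)
    return z, w


def tournament(population, fitness, rng, size=4):
    """Draw `size` distinct chromosomes at random and return the fittest."""
    picks = rng.sample(range(len(population)), min(size, len(population)))
    return population[min(picks, key=lambda i: fitness[i])]


def mutate(chromosome, rate, rng, bound=10.0):
    """Redraw each element uniformly in [-bound, bound] with probability `rate`."""
    return [rng.uniform(-bound, bound) if rng.random() < rate else g
            for g in chromosome]


def next_generation(population, fitness, select_rate, mutate_rate, rng):
    """One pass of selection, crossover and mutation (lower fitness is better)."""
    n = len(population)
    order = sorted(range(n), key=lambda i: fitness[i])
    # Assumption: a fraction (1 - select_rate) of the population survives as-is.
    n_keep = n - int(round(select_rate * n))
    new_pop = [list(population[i]) for i in order[:n_keep]]
    while len(new_pop) < n:
        z, w = crossover(tournament(population, fitness, rng),
                         tournament(population, fitness, rng), rng)
        new_pop.append(z)
        if len(new_pop) < n:
            new_pop.append(w)
    # Mutation is applied to every element of every chromosome, as in the text.
    return [mutate(c, mutate_rate, rng) for c in new_pop]
```

With the blend rule assumed here, the two offspring of a pair always sum, element by element, to the sum of their parents, which gives a quick sanity check on any implementation of this form.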

2.3. The PSO Variant Used

- Initialization Step

- (a) Set iter = 0, the current iteration.

- (b) Set m as the total number of particles.

- (c) Set the maximum number of allowed generations.

- (d) Set the local search rate.

- (e) Initialize the positions of the m particles. Each particle consists of two equal parts, as in the genetic algorithm case.

- (f) Perform a random initialization of the respective velocities.

- (g) For every particle i, set its best position to its initial position. This vector holds the best located values for the position of each particle i.

- (h) Set the best overall position to the best of the particles' best positions.

- Termination Check. The termination criterion used here is the same as in the genetic algorithm case.

- For every particle i, do

- (a) Update the velocity of the particle as a function of its current position, its best located position, and the best position of the swarm, where

- The random parameters are numbers selected in [0,1].

- The remaining constant parameters lie in a bounded range.

- The inertia value is calculated from a random number r [56]. With this velocity calculation mechanism, the particles have greater freedom of movement and are not restricted to only small or only large changes, so they cover the search space of the objective problem more efficiently.

- (b) Update the position of the particle by adding the updated velocity to its current position.

- (c) Pick a random number. If it is below the local search rate, replace the position of the particle with the result of a local search procedure started from that position. In the current work, the BFGS variant of Powell used in the genetic algorithm is also utilized here.

- (d) Calculate the fitness of particle i with the same procedure as in the genetic algorithm case.

- (e) If the new fitness is not worse than the fitness of the particle's best position, then set the best position of particle i to its current position.

- End For

- Update the best overall position of the swarm.

- Set iter = iter + 1.

- Go to the Termination Check step.
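The PSO loop above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' code. The velocity update is the canonical PSO form, and the inertia is assumed to follow the random rule 0.5 + r/2 commonly attributed to Eberhart and Shi. The constants `c1` and `c2`, the initialization interval `bound` and the objective `sphere` are placeholders; the actual fitness is defined through Equation (14). The variance-based termination rule is replaced here by a plain iteration limit, and `local_search` is a hypothetical callable standing in for the BFGS step.

```python
import random


def sphere(x):
    """Stand-in objective; the source's fitness comes from its Equation (14)."""
    return sum(v * v for v in x)


def pso(fitness, dim, m=20, max_iter=100, c1=1.5, c2=1.5, bound=5.0, seed=0,
        local_search=None, local_rate=0.0):
    rng = random.Random(seed)
    # Initialization: random positions and velocities, best positions = start.
    x = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(m)]
    u = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(m)]
    p = [xi[:] for xi in x]
    fp = [fitness(xi) for xi in x]
    g = min(range(m), key=lambda i: fp[i])
    p_best, f_best = p[g][:], fp[g]
    for _ in range(max_iter):  # simplification: iteration limit only
        for i in range(m):
            omega = 0.5 + rng.random() / 2.0  # assumed random inertia rule
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                u[i][j] = (omega * u[i][j]
                           + r1 * c1 * (p[i][j] - x[i][j])
                           + r2 * c2 * (p_best[j] - x[i][j]))
                x[i][j] += u[i][j]
            # Occasional local refinement, applied with probability local_rate.
            if local_search is not None and rng.random() < local_rate:
                x[i] = local_search(x[i])
            fx = fitness(x[i])
            if fx <= fp[i]:
                p[i], fp[i] = x[i][:], fx
        # Refresh the best overall position after all particles have moved.
        g = min(range(m), key=lambda i: fp[i])
        p_best, f_best = p[g][:], fp[g]
    return p_best, f_best
```

A call such as `pso(sphere, dim=3)` returns the best position found and its fitness. Because each particle's best fitness can only improve, the returned fitness is never worse than the best value in the initial swarm.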

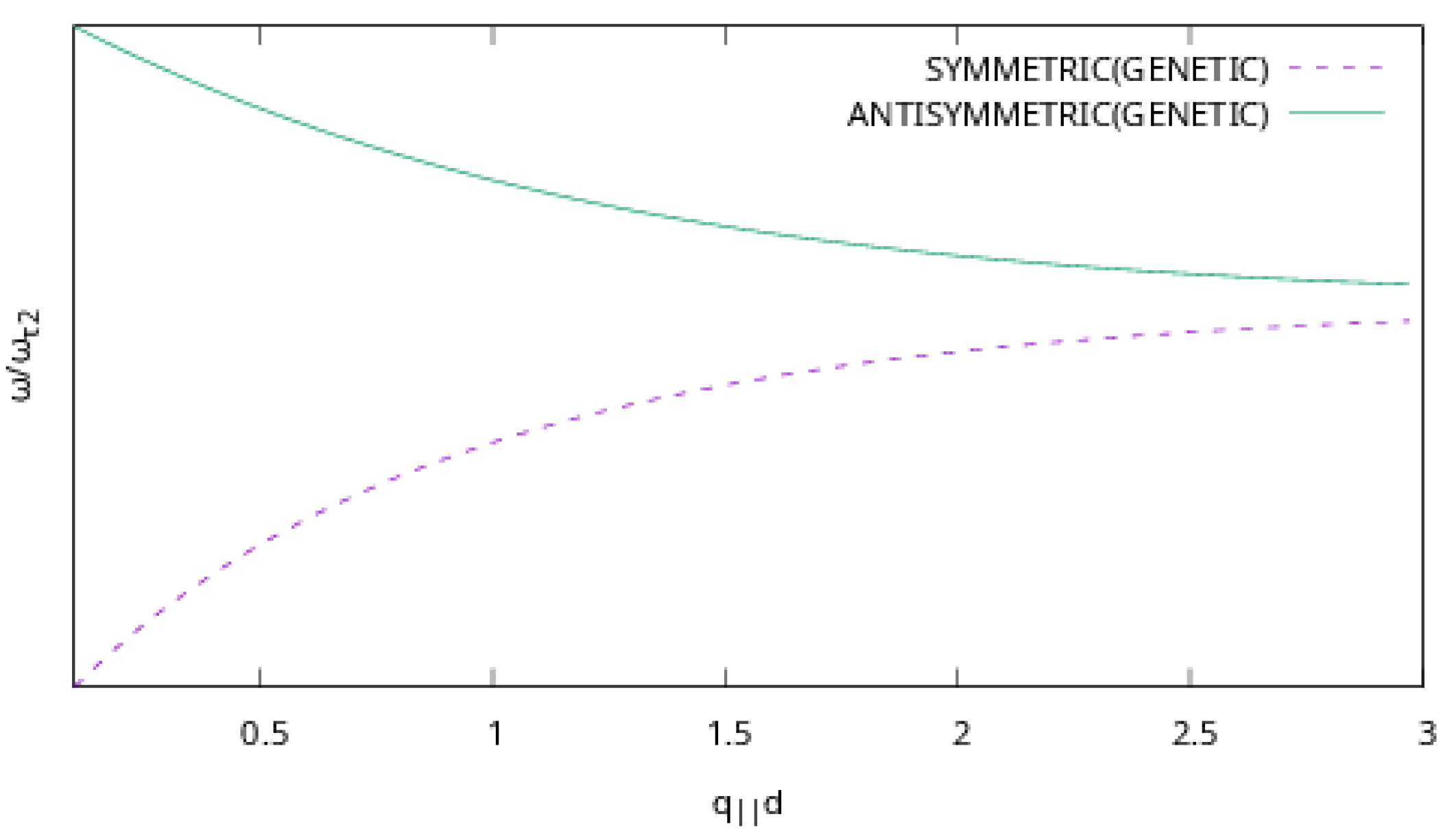

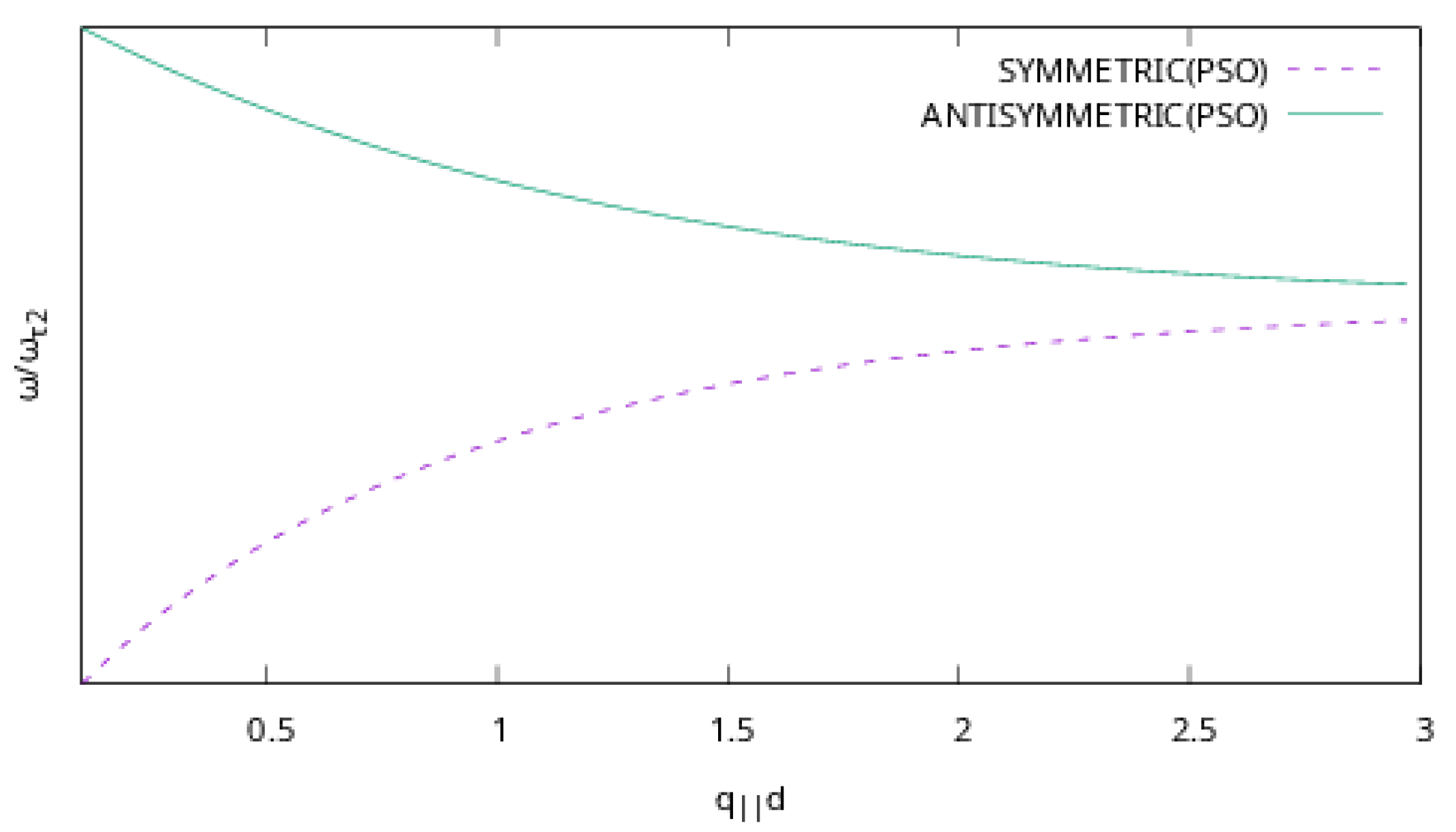

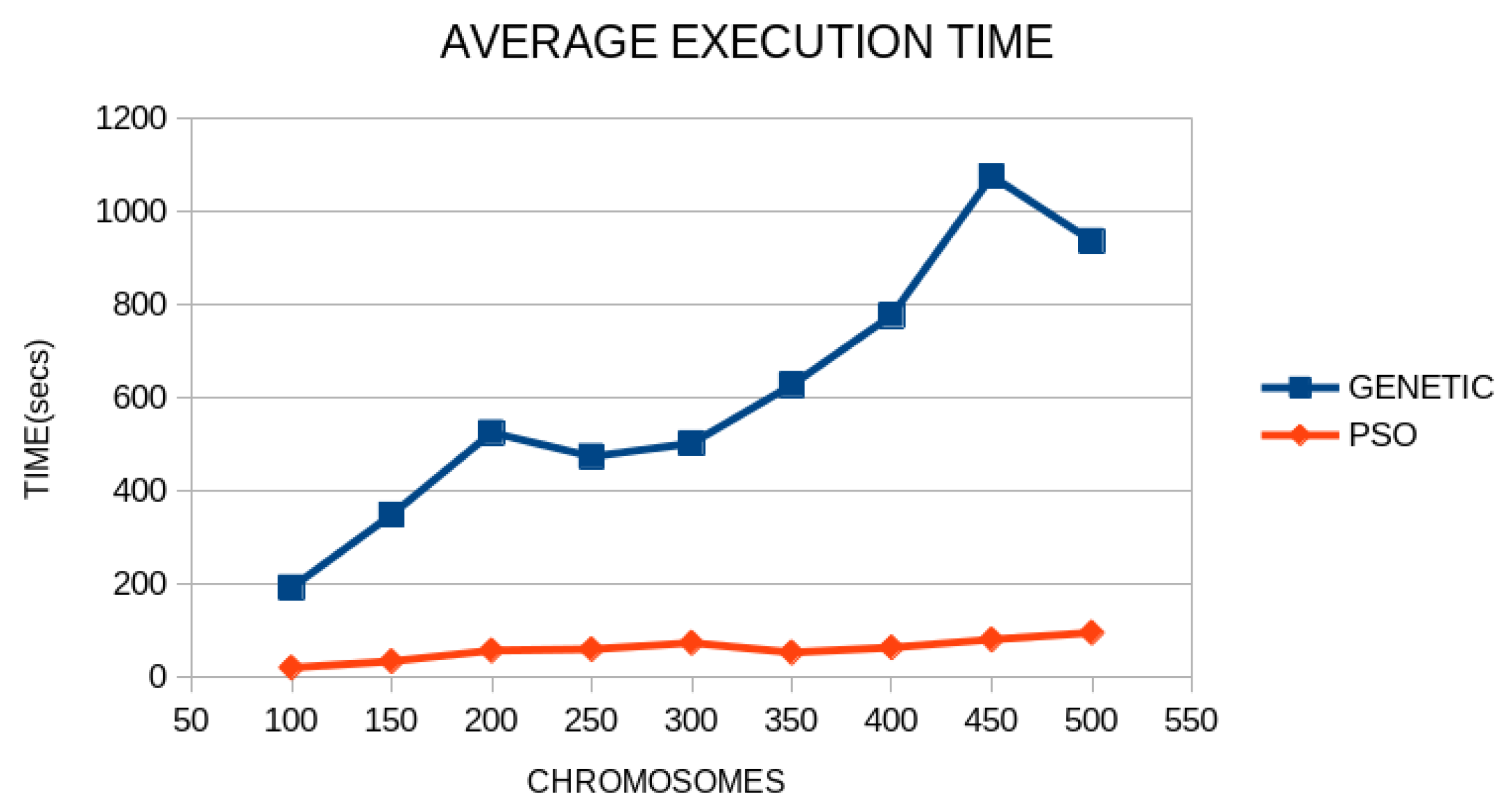

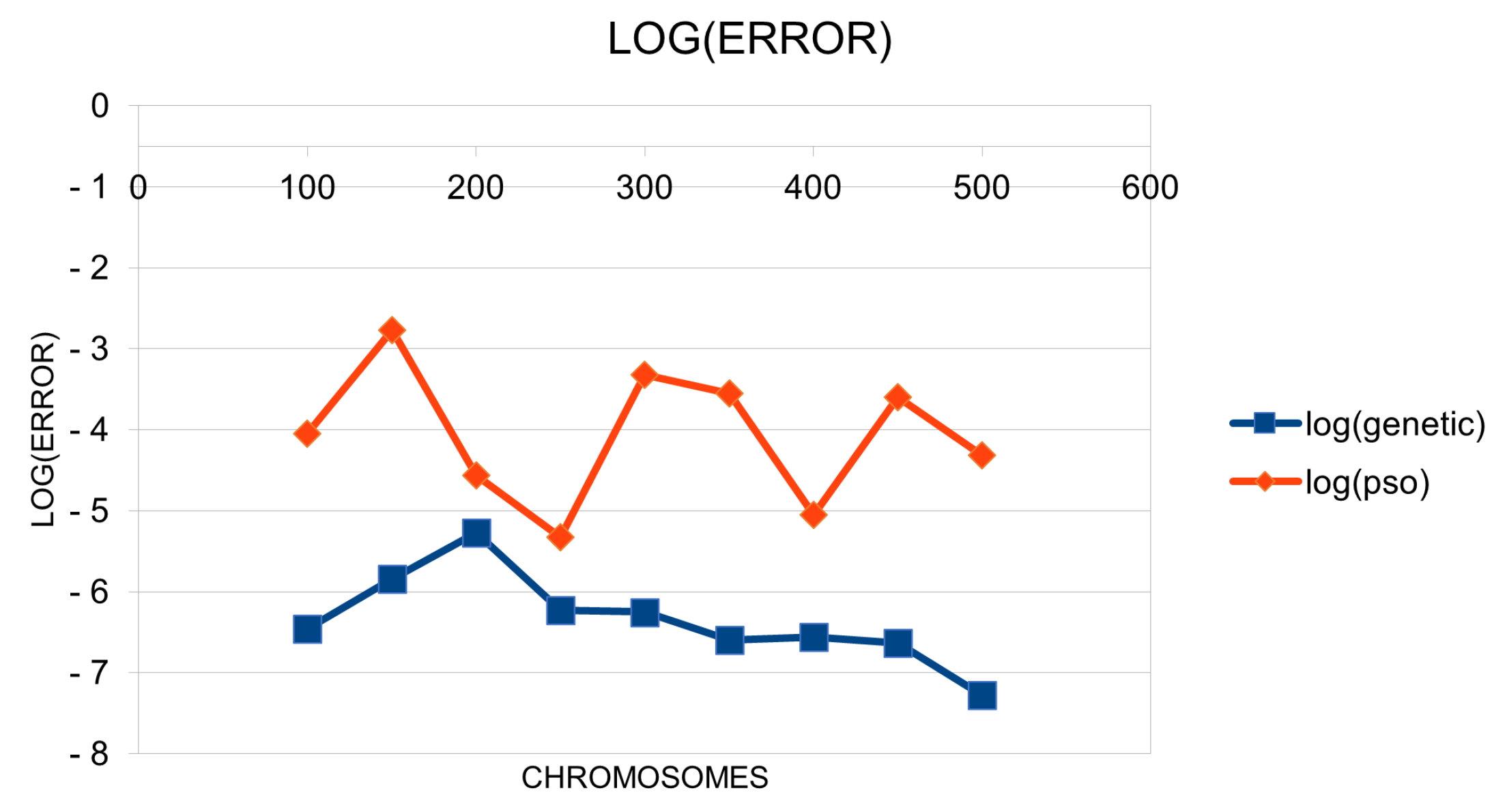

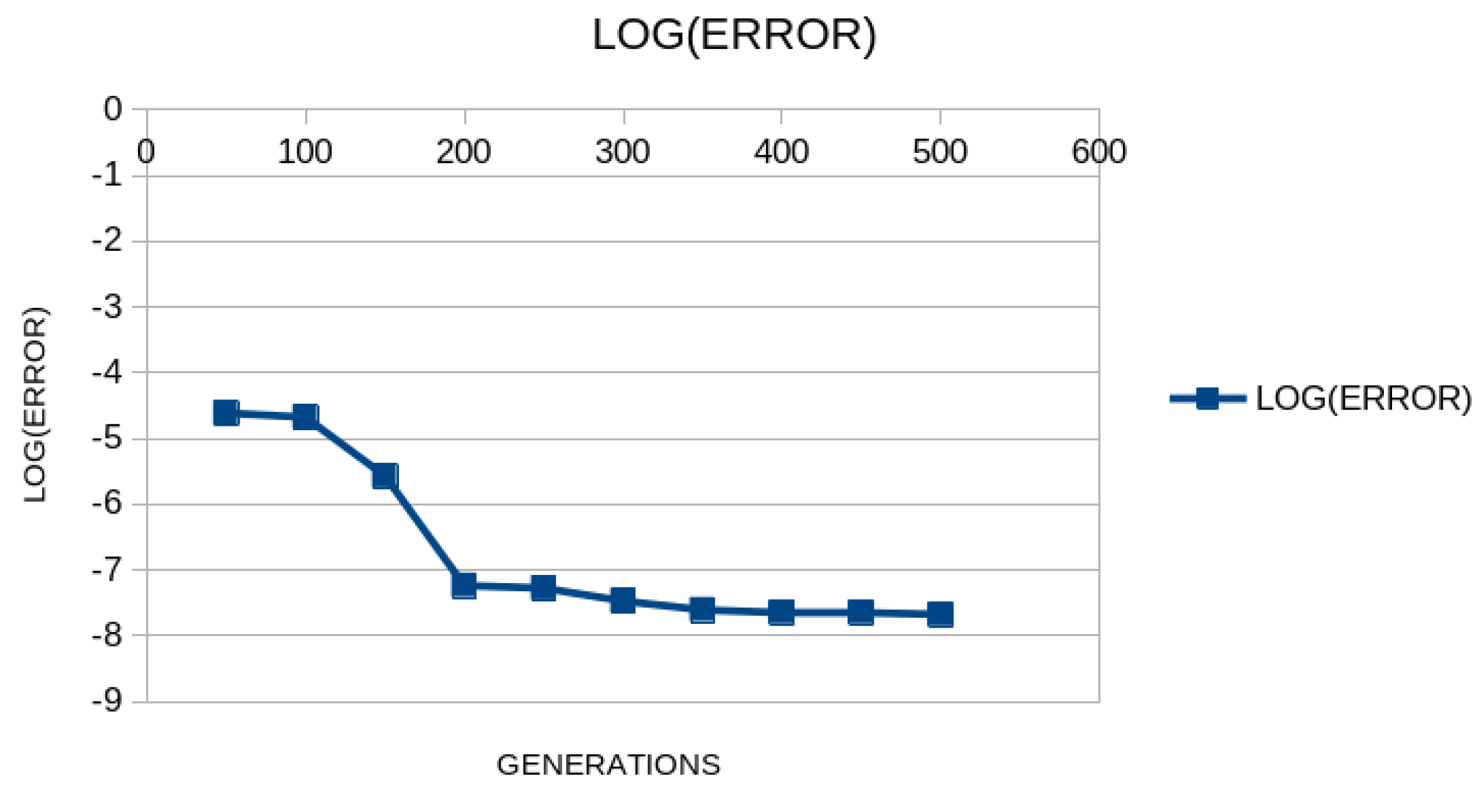

3. Results

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Geng, H. Semiconductor Manufacturing Handbook, 1st ed.; McGraw-Hill Education: New York, NY, USA, 2005.

2. Li, G.; Bleu, O.; Levinsen, J.; Parish, M.M. Theory of polariton-electron interactions in semiconductor microcavities. Phys. Rev. B 2021, 103, 195307.

3. Al-Dossary, O.; Babiker, M.; Constantinou, N.C. Fuchs-Kliewer interface polaritons and their interactions with electrons in GaAs/AlAs double heterostructures. Semicond. Sci. Technol. 1992, 7, 891–893.

4. Chu, H.; Chang, Y.-C. Phonon-polariton modes in superlattices: The effect of spatial dispersion. Phys. Rev. B 1988, 38, 12369.

5. Zhou, K.; Zhong, X.; Cheng, Q.; Wu, X. Actively tunable hybrid plasmon-phonon polariton modes in ferroelectric/graphene heterostructure systems at low-THz frequencies. Opt. Mater. 2022, 131, 112623.

6. Fuchs, R.; Kliewer, K.L. Optical Modes of Vibration in an Ionic Crystal Slab. Phys. Rev. A 1965, 140, 2076.

7. Fuchs, R.; Kliewer, K.L. Optical Modes of Vibration in an Ionic Crystal Slab Including Retardation. II. Radiative Region. Phys. Rev. 1966, 150, 573.

8. Rogalski, A. Infrared Detectors, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2010.

9. Feng, K.; Shi, Z.-C. Finite element methods. In Mathematical Theory of Elastic Structures; Springer: Berlin/Heidelberg, Germany, 1996; pp. 289–385.

10. Stefanou, G. The stochastic finite element method: Past, present and future. Comput. Methods Appl. Mech. Eng. 2009, 198, 1031–1051.

11. Schenk, O.; Bollhöfer, M.; Römer, R.A. On large-scale diagonalization techniques for the Anderson model of localization. SIAM J. Sci. Comput. 2006, 28, 963–983.

12. Bishop, C. Neural Networks for Pattern Recognition; Oxford University Press: Oxford, UK, 1995.

13. Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control. Signals Syst. 1989, 2, 303–314.

14. Baldi, P.; Cranmer, K.; Faucett, T.; Sadowski, P.; Whiteson, D. Parameterized neural networks for high-energy physics. Eur. Phys. J. C 2016, 76, 235.

15. Valdas, J.J.; Bonham-Carter, G. Time dependent neural network models for detecting changes of state in complex processes: Applications in earth sciences and astronomy. Neural Netw. 2006, 19, 196–207.

16. Carleo, G.; Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 2017, 355, 602–606.

17. Shirvany, Y.; Hayati, M.; Moradian, R. Multilayer perceptron neural networks with novel unsupervised training method for numerical solution of the partial differential equations. Appl. Soft Comput. 2009, 9, 20–29.

18. Malek, A.; Beidokhti, R.S. Numerical solution for high order differential equations using a hybrid neural network-optimization method. Appl. Math. Comput. 2006, 183, 260–271.

19. Topuz, A. Predicting moisture content of agricultural products using artificial neural networks. Adv. Eng. Softw. 2010, 41, 464–470.

20. Escamilla-García, A.; Soto-Zarazúa, G.M.; Toledano-Ayala, M.; Rivas-Araiza, E.; Gastélum-Barrios, A. Applications of Artificial Neural Networks in Greenhouse Technology and Overview for Smart Agriculture Development. Appl. Sci. 2020, 10, 3835.

21. Shen, L.; Wu, J.; Yang, W. Multiscale Quantum Mechanics/Molecular Mechanics Simulations with Neural Networks. J. Chem. Theory Comput. 2016, 12, 4934–4946.

22. Manzhos, S.; Dawes, R.; Carrington, T. Neural network-based approaches for building high dimensional and quantum dynamics-friendly potential energy surfaces. Int. J. Quantum Chem. 2015, 115, 1012–1020.

23. Wei, J.N.; Duvenaud, D.; Aspuru-Guzik, A. Neural Networks for the Prediction of Organic Chemistry Reactions. ACS Cent. Sci. 2016, 2, 725–732.

24. Falat, L.; Pancikova, L. Quantitative Modelling in Economics with Advanced Artificial Neural Networks. Procedia Econ. Financ. 2015, 34, 194–201.

25. Namazi, M.; Shokrolahi, A.; Maharluie, M.S. Detecting and ranking cash flow risk factors via artificial neural networks technique. J. Bus. Res. 2016, 69, 1801–1806.

26. Tkacz, G. Neural network forecasting of Canadian GDP growth. Int. J. Forecast. 2001, 17, 57–69.

27. Baskin, I.I.; Winkler, D.; Tetko, I.V. A renaissance of neural networks in drug discovery. Expert Opin. Drug Discov. 2016, 11, 785–795.

28. Bartzatt, R. Prediction of Novel Anti-Ebola Virus Compounds Utilizing Artificial Neural Network (ANN). Chem. Fac. Publ. 2018, 49, 16–34.

29. Tsoulos, I.; Gavrilis, D.; Glavas, E. Neural network construction and training using grammatical evolution. Neurocomputing 2008, 72, 269–277.

30. Rem, B.S.; Käming, N.; Tarnowski, M.; Asteria, L.; Fläschner, N.; Becker, C.; Sengstock, K.; Weitenberg, C. Identifying quantum phase transitions using artificial neural networks on experimental data. Nat. Phys. 2019, 15, 917–920.

31. Hermann, J.; Schätzle, Z.; Noé, F. Deep-neural-network solution of the electronic Schrödinger equation. Nat. Chem. 2020, 12, 891–897.

32. Cai, S.; Wang, Z.; Wang, S.; Perdikaris, P.; Karniadakis, G.E. Physics-Informed Neural Networks for Heat Transfer Problems. ASME J. Heat Transf. 2021, 143, 060801.

33. Zhu, Q.; Liu, Z.; Yan, J. Machine learning for metal additive manufacturing: Predicting temperature and melt pool fluid dynamics using physics-informed neural networks. Comput. Mech. 2021, 67, 619–635.

34. Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

35. Stender, J. Parallel Genetic Algorithms: Theory & Applications; IOS Press: Amsterdam, The Netherlands, 1993.

36. Doorly, D.J.; Peiró, J. Supervised Parallel Genetic Algorithms in Aerodynamic Optimisation. In Artificial Neural Nets and Genetic Algorithms; Springer: Vienna, Austria, 1997; pp. 229–233.

37. Sarma, K.C.; Adeli, H. Bilevel Parallel Genetic Algorithms for Optimization of Large Steel Structures. Comput. Aided Civ. Infrastruct. Eng. 2001, 16, 295–304.

38. Fan, Y.; Jiang, T.; Evans, D.J. Volumetric segmentation of brain images using parallel genetic algorithms. IEEE Trans. Med. Imaging 2002, 21, 904–909.

39. Leung, F.H.F.; Lam, H.K.; Ling, S.H.; Tam, P.K.S. Tuning of the structure and parameters of a neural network using an improved genetic algorithm. IEEE Trans. Neural Netw. 2003, 14, 79–88.

40. Sedki, A.; Ouazar, D.; El Mazoudi, E. Evolving neural network using real coded genetic algorithm for daily rainfall-runoff forecasting. Expert Syst. Appl. 2009, 36, 4523–4527.

41. Majdi, A.; Beiki, M. Evolving neural network using a genetic algorithm for predicting the deformation modulus of rock masses. Int. J. Rock Mech. Min. Sci. 2010, 47, 246–253.

42. Kaelo, P.; Ali, M.M. Integrated crossover rules in real coded genetic algorithms. Eur. J. Oper. Res. 2007, 176, 60–76.

43. Tsoulos, I.G. Modifications of real code genetic algorithm for global optimization. Appl. Math. Comput. 2008, 203, 598–607.

44. Powell, M.J.D. A Tolerant Algorithm for Linearly Constrained Optimization Calculations. Math. Program. 1989, 45, 547–566.

45. Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization: An overview. Swarm Intell. 2007, 1, 33–57.

46. de Moura Meneses, A.A.; Machado, M.D.; Schirru, R. Particle Swarm Optimization applied to the nuclear reload problem of a Pressurized Water Reactor. Prog. Nucl. Energy 2009, 51, 319–326.

47. Shaw, R.; Srivastava, S. Particle swarm optimization: A new tool to invert geophysical data. Geophysics 2007, 72, F75–F83.

48. Ourique, C.O.; Biscaia Jr, E.C.; Pinto, J.C. The use of particle swarm optimization for dynamical analysis in chemical processes. Comput. Chem. Eng. 2002, 26, 1783–1793.

49. Fang, H.; Zhou, J.; Wang, Z.; Qiu, Z.; Sun, Y.; Lin, Y.; Chen, K.; Zhou, X.; Pan, M. Hybrid method integrating machine learning and particle swarm optimization for smart chemical process operations. Front. Chem. Sci. Eng. 2022, 16, 274–287.

50. Wachowiak, M.P.; Smolíková, R.; Zheng, Y.; Zurada, J.M.; Elmaghraby, A.S. An approach to multimodal biomedical image registration utilizing particle swarm optimization. IEEE Trans. Evol. Comput. 2004, 8, 289–301.

51. Marinakis, Y.; Marinaki, M.; Dounias, G. Particle swarm optimization for pap-smear diagnosis. Expert Syst. Appl. 2008, 35, 1645–1656.

52. Park, J.B.; Jeong, Y.W.; Shin, J.R.; Lee, K.Y. An Improved Particle Swarm Optimization for Nonconvex Economic Dispatch Problems. IEEE Trans. Power Syst. 2010, 25, 156–166.

53. Zhang, C.; Shao, H.; Li, Y. Particle swarm optimisation for evolving artificial neural network. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Nashville, TN, USA, 8–11 October 2000; pp. 2487–2490.

54. Yu, J.; Wang, S.; Xi, L. Evolving artificial neural networks using an improved PSO and DPSO. Neurocomputing 2008, 71, 1054–1060.

55. Charilogis, V.; Tsoulos, I.G. Toward an Ideal Particle Swarm Optimizer for Multidimensional Functions. Information 2022, 13, 217.

56. Eberhart, R.C.; Shi, Y.H. Tracking and optimizing dynamic systems with particle swarms. In Proceedings of the Congress on Evolutionary Computation, Seoul, Republic of Korea, 27–30 May 2001.

57. Cantú-Paz, E.; Goldberg, D.E. Efficient parallel genetic algorithms: Theory and practice. Comput. Methods Appl. Mech. Eng. 2000, 186, 221–238.

58. Wang, K.; Shen, Z. A GPU-Based Parallel Genetic Algorithm for Generating Daily Activity Plans. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1474–1480.

59. Kečo, D.; Subasi, A.; Kevric, J. Cloud computing-based parallel genetic algorithm for gene selection in cancer classification. Neural Comput. Appl. 2018, 30, 1601–1610.

60. Gropp, W.; Lusk, E.; Doss, N.; Skjellum, A. A high-performance, portable implementation of the MPI message passing interface standard. Parallel Comput. 1996, 22, 789–828.

61. Chandra, R.; Dagum, L.; Kohr, D.; Maydan, D.; McDonald, J.; Menon, R. Parallel Programming in OpenMP; Morgan Kaufmann Publishers Inc.: San Diego, CA, USA, 2001.

| PARAMETER | MEANING | VALUE |
|---|---|---|
| d | Well width | 5 nm |
| | Left bound of Equation (14) | 0.1 |
| | Right bound of Equation (14) | 3.0 |
| | Number of points used to divide the interval | 100 |
| ℏω | Longitudinal-optical phonon energy of material 1 (AlAs) | 50.09 meV |
| ℏω | Transverse-optical phonon energy of material 1 (AlAs) | 44.88 meV |
| ℏω | Longitudinal-optical phonon energy of material 2 (GaAs) | 36.25 meV |
| ℏω | Transverse-optical phonon energy of material 2 (GaAs) | 33.29 meV |
| | High-frequency dielectric constant of material 1 (AlAs) | 8.16 |
| | High-frequency dielectric constant of material 2 (GaAs) | 10.89 |
| | Number of chromosomes/particles | 500 |
| | Maximum number of allowed generations | 200 |
| | Selection rate | 0.90 |
| | Mutation rate | 0.05 |
| | Local search rate | 0.01 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tsoulos, I.G.; Stavrou, V.N. Numerical Algorithms in III–V Semiconductor Heterostructures. Algorithms 2024, 17, 44. https://doi.org/10.3390/a17010044
