3.2. Numerical Results

The parameter settings for the mentioned algorithms were taken from the original papers. For example, for L-SHADE-RSP, the initial population size was set to and memory size ; for NL-SHADE-RSP, the initial population size , and memory size ; and for NL-SHADE-LBC, the population size , and . For the L-NTADE algorithm, the following values were used: , selective pressure parameter , biased parameter adaptation coefficient , and mutation strategy r-new-to-ptop/n/t. These values were set, as they enable us to achieve good results on both CEC 2017 and CEC 2022 benchmarks. The algorithms with crossover rate sorting were marked with _s, e.g., L-SHADE-RSP_s. It should be mentioned that the NL-SHADE-RSP and NL-SHADE-LBC had the sorting procedure in their standard setting, so here, the standard setting is denoted as NL-SHADE-RSP_s and NL-SHADE-LBC_s.

The efficiency of the tested algorithms was compared using the Mann–Whitney statistical test with normal approximation and tie-breaking, applied to the variants with and without crossover rate sorting. The normal approximation is applicable because the sample sizes are sufficient (30 or 51 independent runs). It also makes it possible to calculate the standard score (Z-score), which simplifies reasoning about, and estimating, the difference between algorithm variants. If the decision about the significance of such a difference is to be made, the significance level equal to is used, which corresponds to the threshold Z score of (two-tailed test). In addition to the Mann–Whitney test, the Friedman ranking procedure was used to analyze the performance of the algorithms. The ranks were calculated for every function and then summed, with lower ranks being better.
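As an illustration of the test described above, the following is a minimal sketch of the Mann–Whitney standard score under the normal approximation with a correction for ties. It is not the code used in the experiments; the function names `average_ranks` and `mann_whitney_z` and the sign convention (positive Z when the first sample tends to be larger) are assumptions made for this example.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the block of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_z(a, b):
    """Z-score of the Mann-Whitney U statistic (normal approximation).

    Assumed convention: Z > 0 means values in `a` tend to exceed those in `b`.
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = list(a) + list(b)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2.0
    mean_u = n1 * n2 / 2.0
    # Tie correction: each group of t equal values reduces the variance.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return 0.0  # all observations identical: no evidence of a difference
    return (u1 - mean_u) / math.sqrt(var_u)
```

In use, `a` and `b` would hold the final results of two algorithm variants over the independent runs on one test function, and the returned value would be compared against the chosen threshold.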

In the first set of experiments, the algorithms are compared on the CEC 2017 benchmark. The results are shown in Table 1 and Table 2, the latter of which contains the Friedman ranking of the results.

Each cell in Table 1 gives the number of wins/ties/losses in the comparison between the algorithm without crossover rate sorting and the algorithm with it; these three numbers sum to the number of test functions. The number in brackets is the total standard score, i.e., the sum of the standard scores of the Mann–Whitney test over all test functions. The results indicate that the effect of sorting crossover rates is not always positive. For example, for L-NTADE, the sorting results in worse performance in most cases, although for

functions, there are 4 improvements out of 30. However, the NL-SHADE-LBC algorithm receives significant improvements, as well as L-SHADE-RSP, which becomes better for 7 functions out of 30. At the same time, the NL-SHADE-RSP algorithm does not seem to be influenced by this modification, and its performance stays almost at the same level. The Friedman ranking demonstrates that the algorithms with crossover rate sorting received lower or equal ranks in almost all cases, except L-NTADE in

and

.
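The way a table cell is assembled from per-function standard scores can be sketched as follows. This is an illustrative example only: the function name `tally`, the sign convention (a positive score counted as a win) and the `threshold` argument are assumptions, and the threshold is left as a parameter rather than fixed to a particular value.

```python
def tally(z_scores, threshold):
    """Summarize per-function Z-scores as (wins, ties, losses, total score).

    Assumed convention: Z > threshold is a win, Z < -threshold is a loss,
    and anything in between is a tie (no significant difference).
    """
    wins = sum(1 for z in z_scores if z > threshold)
    losses = sum(1 for z in z_scores if z < -threshold)
    ties = len(z_scores) - wins - losses
    return wins, ties, losses, sum(z_scores)
```

By construction, the three counts always sum to the number of test functions, and the fourth value corresponds to the total standard score given in brackets.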

Table 3 shows the comparison on the CEC 2022 benchmark, and Table 4 contains the Friedman ranking of the results.

The results of the modified algorithms with crossover rate sorting, shown in Table 3, are much better, with the only exception being the NL-SHADE-RSP algorithm in the

case. Other algorithms achieved improvements on up to 6 functions out of 12, often without performance losses. However, it is important to mention that most of the improvements are observed on functions that are easily solved by the algorithm, and the improvement is in terms of convergence speed, i.e., the number of function evaluations required to reach the optimum. For the NL-SHADE-RSP algorithm, a performance loss was observed on the 10th function, i.e., Composition Function 2, which combines Rotated Schwefel’s Function, Rotated Rastrigin’s Function and the HGBat Function. The Friedman ranking in Table 4 shows that crossover rate sorting leads to significant performance improvements in all cases.
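The rank-summing step of the Friedman ranking procedure can be sketched as follows. This is a simplified illustration, not the procedure's exact implementation: it assumes one score per algorithm and function (lower is better), and the name `friedman_rank_sums` and the input layout are hypothetical.

```python
def friedman_rank_sums(scores):
    """Sum per-function ranks for each algorithm (lower total is better).

    `scores[f][a]` is the score of algorithm `a` on function `f`; this layout
    is an assumption of the sketch. Tied scores share the average rank.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            j = i
            # Find the block of algorithms tied with the one at position i.
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            avg_rank = (i + j) / 2.0 + 1.0
            for k in range(i, j + 1):
                totals[order[k]] += avg_rank
            i = j + 1
    return totals
```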

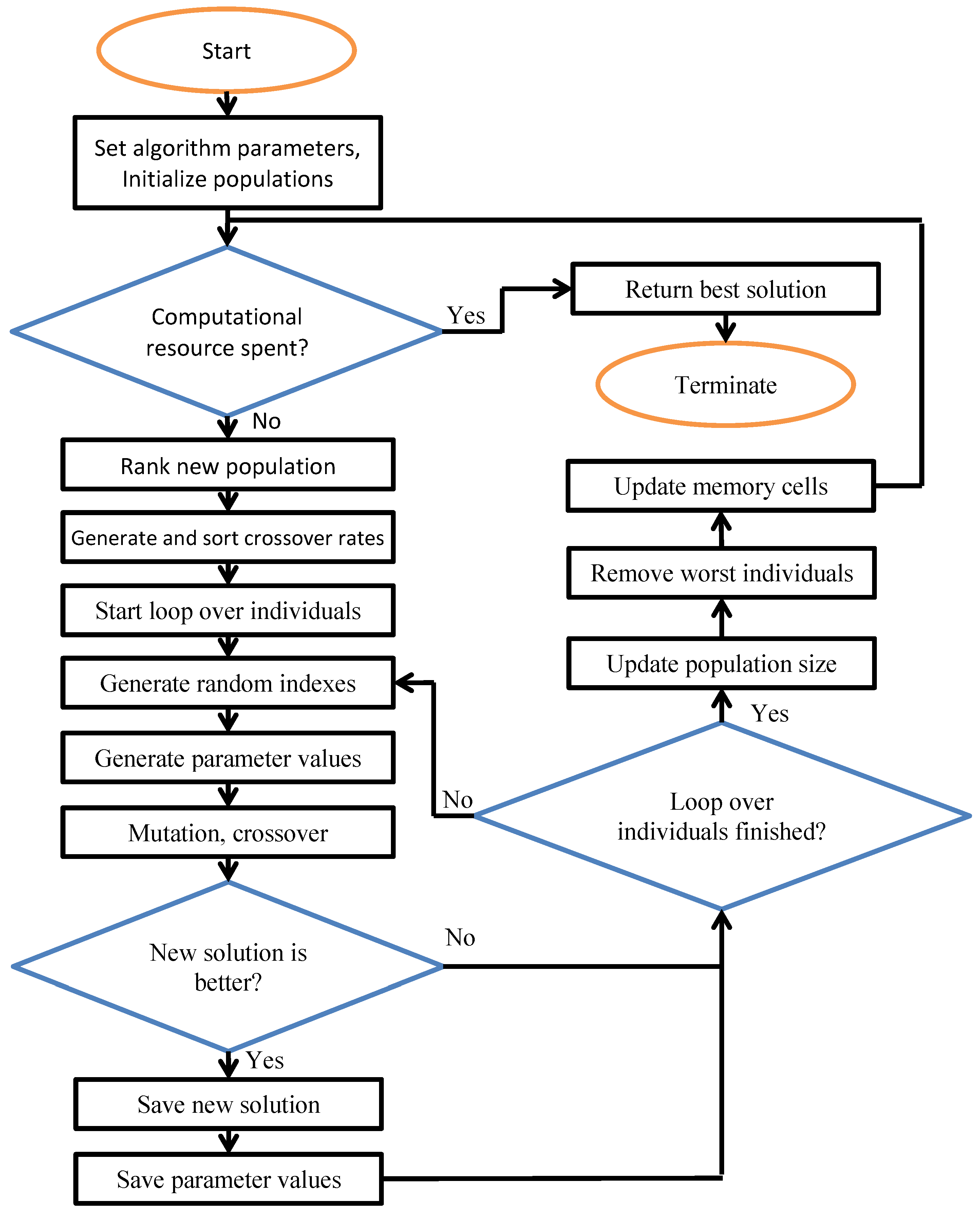

The presented comparison shows that there is a certain effect of the crossover rate sorting; however, it is important to understand the reasons for the algorithms’ different behaviors and convergence speeds. For this purpose, first of all, the diversity measure is introduced as the average pairwise distance (

) between individuals:

Figure 2 shows the diversity of the newest population in the L-NTADE algorithm with and without crossover rate sorting (CRS), CEC 2022,

case.

The graphs in Figure 2 demonstrate that applying crossover rate sorting results in earlier diversity loss in the population in most cases. The only exception is F4, the Shifted and Rotated Non-Continuous Rastrigin’s Function, where the diversity stays at a high level for a longer period. Hence, the improvement on the CEC 2022 benchmark can be explained by faster convergence. However, this convergence may be premature, for example on F10, where applying crossover rate sorting leads to an early stop of the search process. Two other important cases are the hybrid functions F7 and F8, where the diversity oscillates but is again lower with CRS. Similar trends are observed for the other algorithms on both the CEC 2022 and CEC 2017 benchmarks.
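A diversity measure of this kind, the average pairwise distance between individuals, can be computed as in the sketch below. The use of the Euclidean distance and the averaging over all unordered pairs of distinct individuals are assumptions of this example, as is the function name `average_pairwise_distance`; the exact normalization used for the figures may differ.

```python
import math


def average_pairwise_distance(population):
    """Mean Euclidean distance over all unordered pairs of individuals.

    `population` is a sequence of equal-length coordinate sequences.
    Returns 0.0 when fewer than two individuals are present.
    """
    n = len(population)
    if n < 2:
        return 0.0
    total = 0.0
    for i in range(n - 1):
        for j in range(i + 1, n):
            total += math.dist(population[i], population[j])
    return total / (n * (n - 1) / 2)
```

A value close to zero indicates that the population has collapsed to a single point, which corresponds to the diversity loss visible in the graphs.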

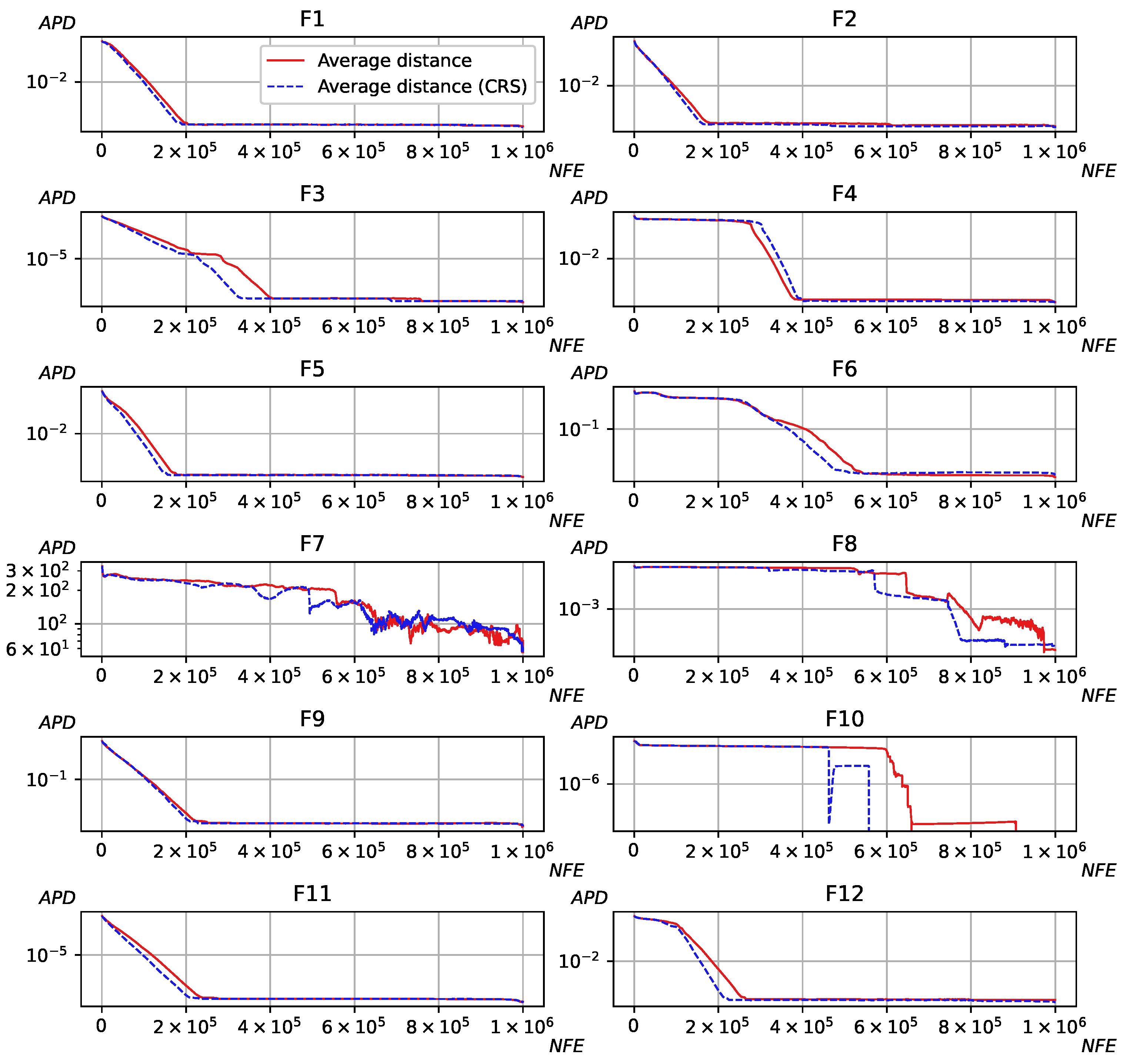

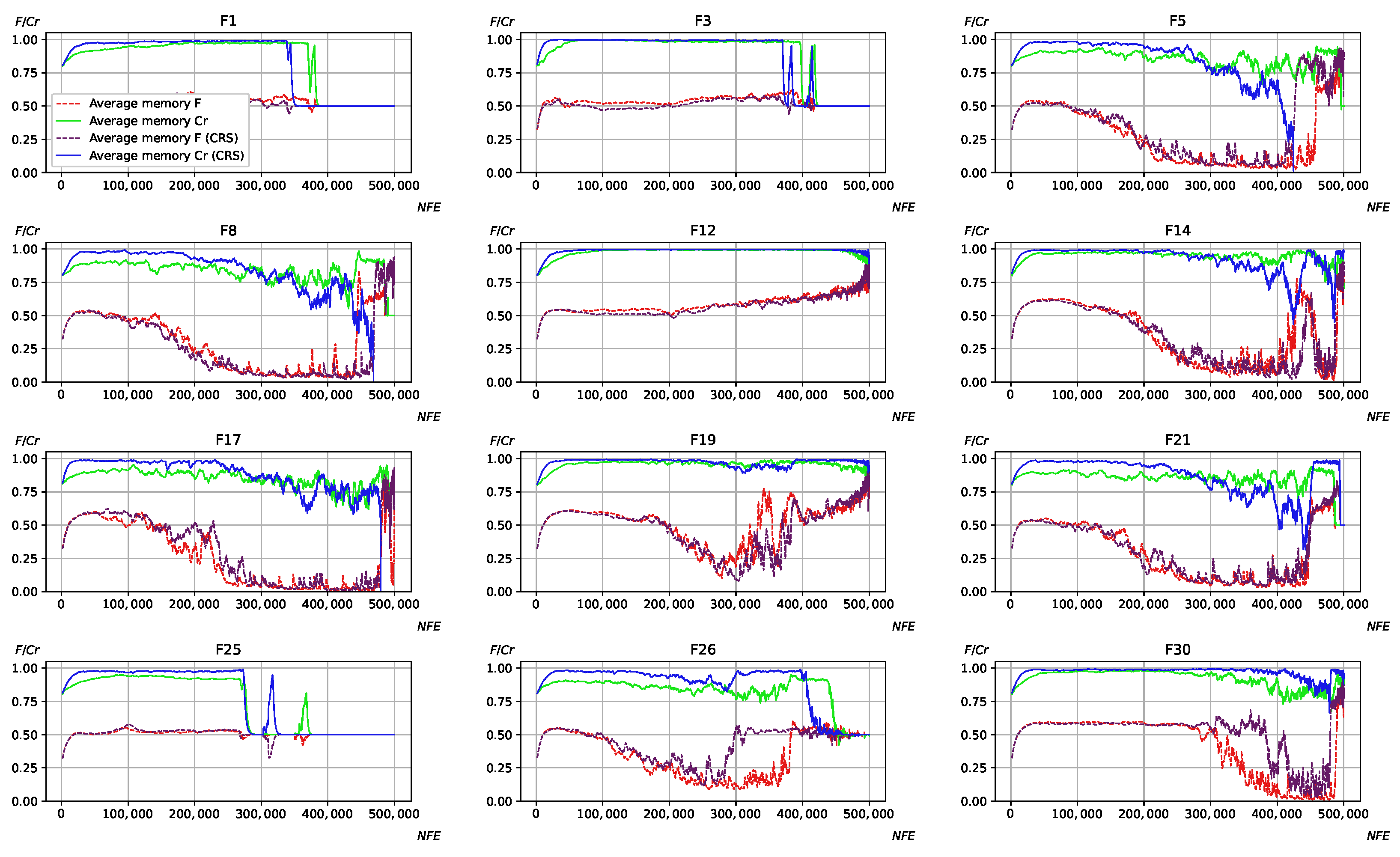

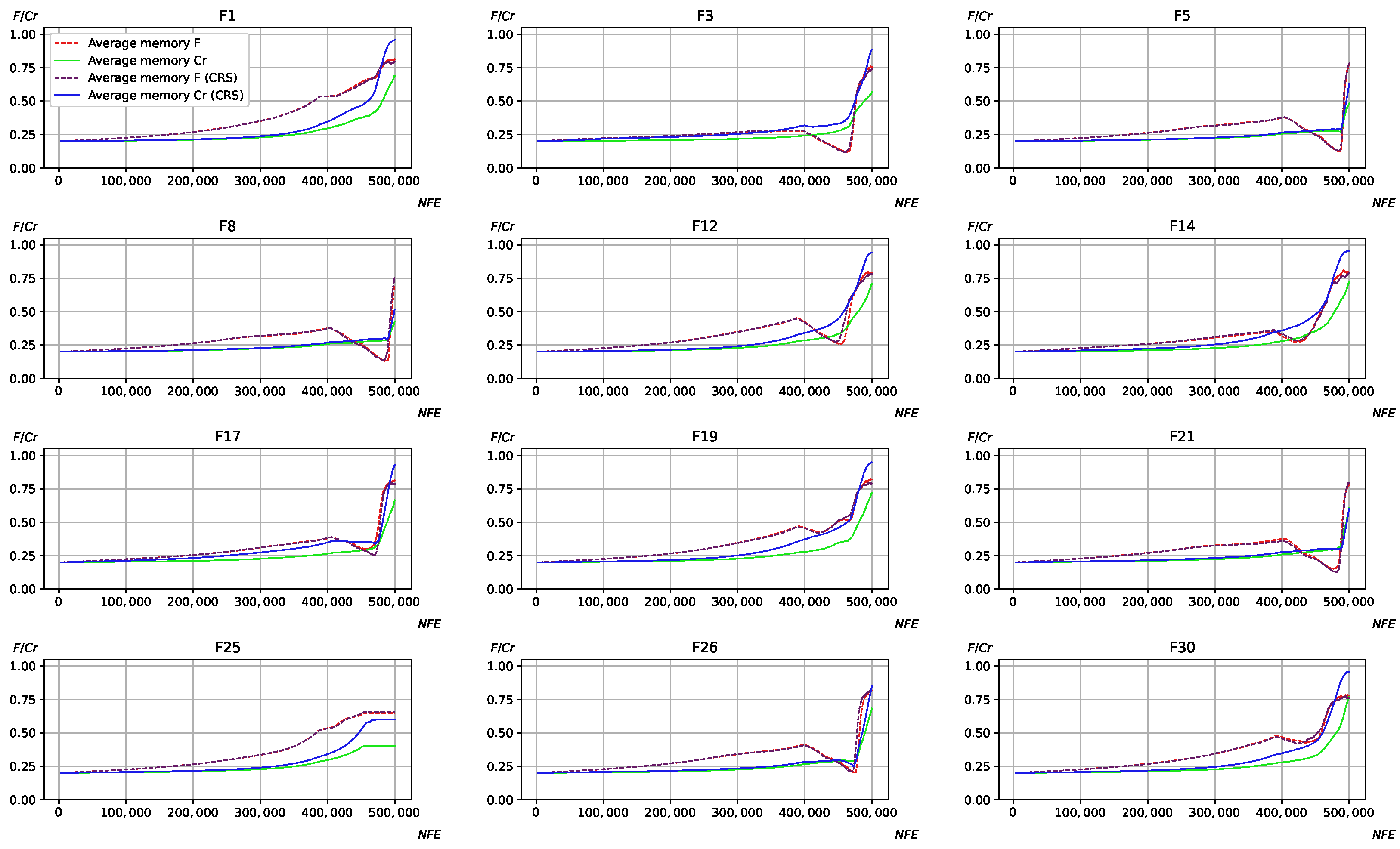

Another question worth investigating is the influence of crossover rate sorting on the process of parameter adaptation. For this purpose, the average values of all memory cells were calculated for F and Cr for all algorithms.

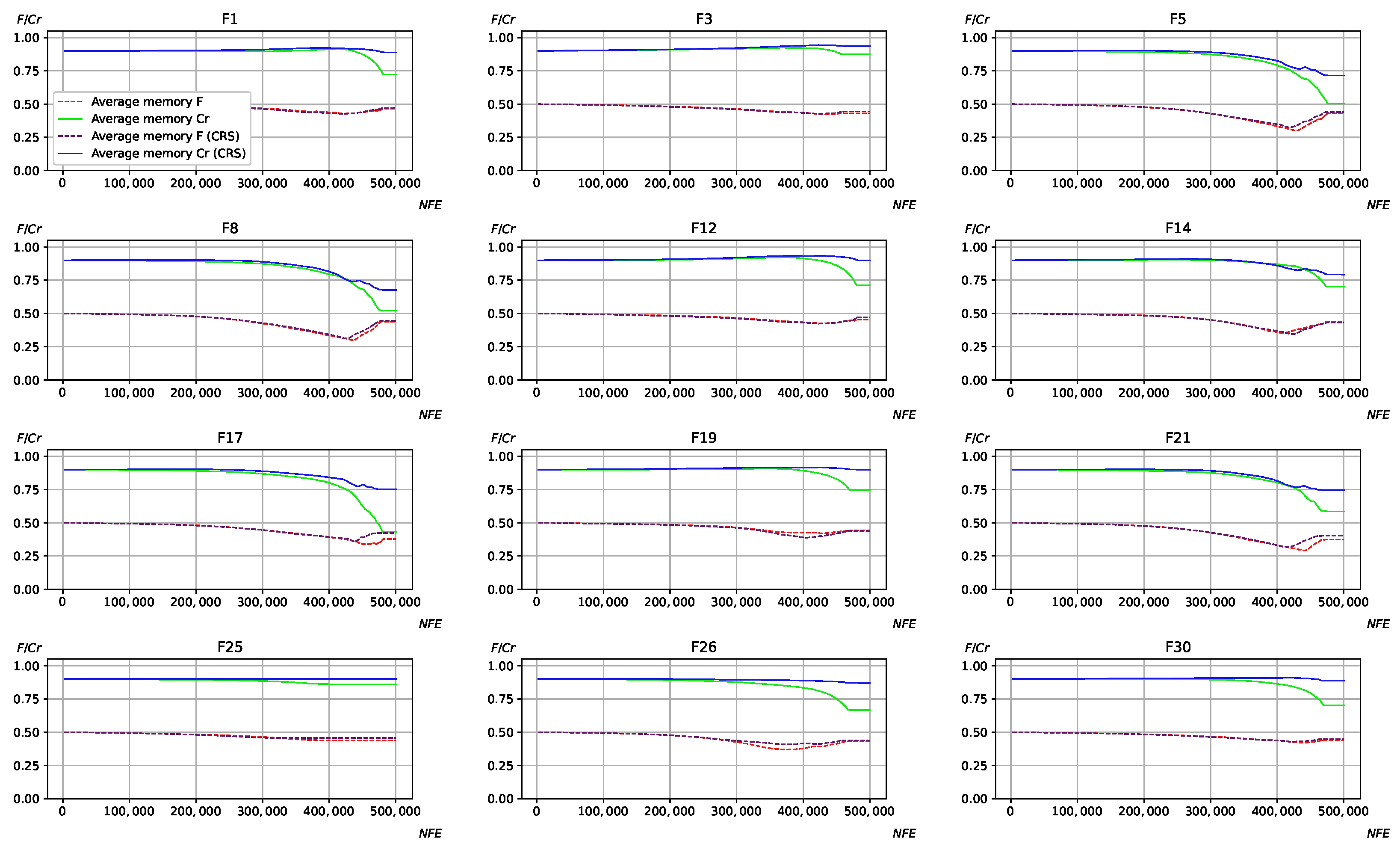

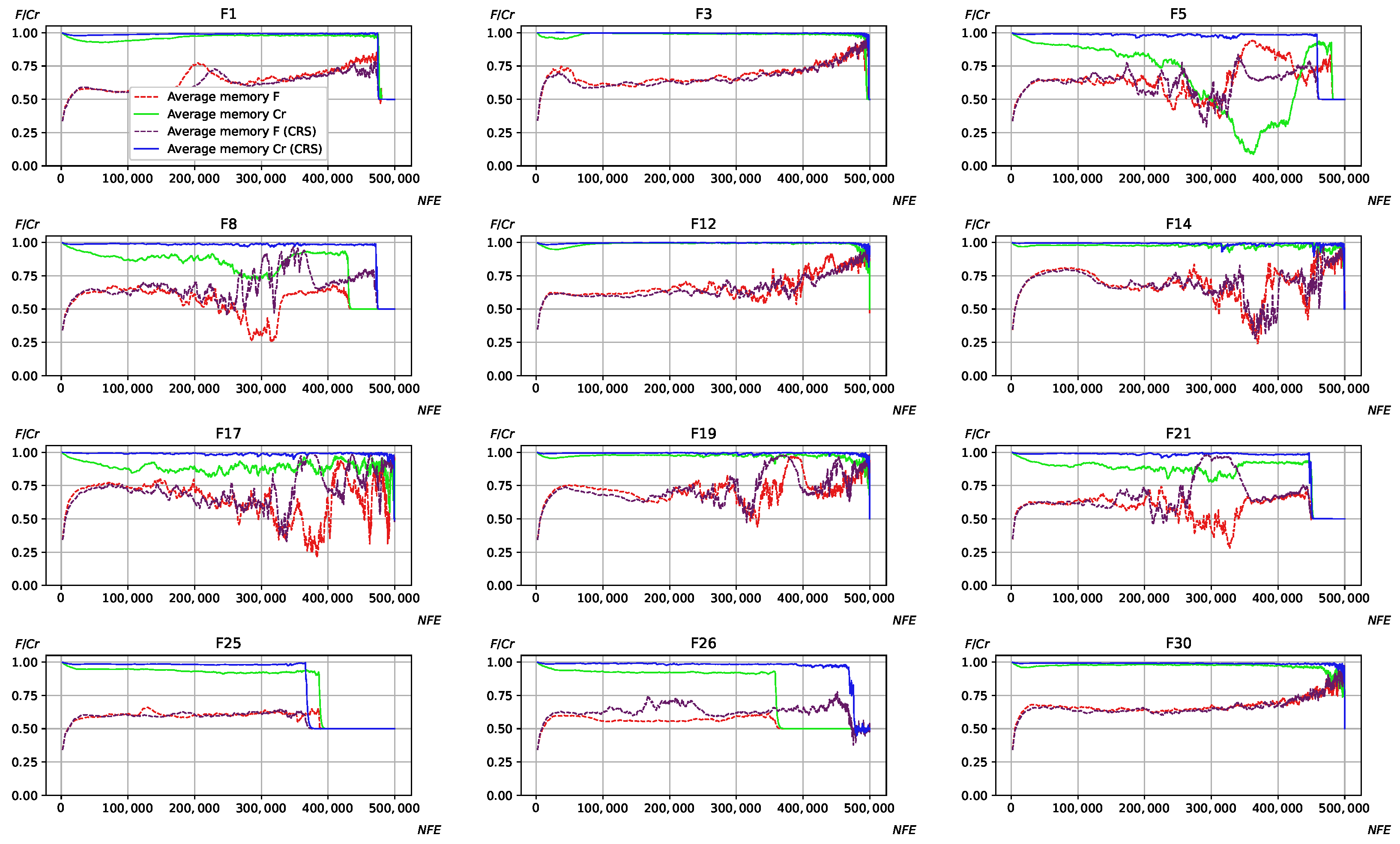

Figure 3, Figure 4, Figure 5 and Figure 6 show the adaptation process on CEC 2017,

case.

Considering the results in Figure 3, Figure 4, Figure 5 and Figure 6, it can be observed that applying CRS results in larger Cr values stored in the memory cells in the majority of cases. Moreover, Cr often reaches 1 and rarely decreases, especially for L-SHADE-RSP and L-NTADE, where the memory size is rather small. NL-SHADE-RSP is characterized by a more rapid increase in the average of the Cr values in the memory cells, while NL-SHADE-LBC keeps larger Cr values for a longer period of time. The scaling factor parameter F is also affected, especially for the L-NTADE algorithm, where its behavior may differ significantly, e.g., in cases such as F8, F17, and F21. One explanation could be that decreased diversity makes different F values more promising, usually resulting in larger F values in the memory cells.

The presented graphs may lead to the following questions: are the faster convergence and the larger generated Cr values the only effects of crossover rate sorting? Is it possible to achieve the same results simply by setting larger Cr values, without any sorting? To answer these questions, another set of experiments was performed. In these experiments, the biased parameter adaptation approach [23] was applied; in particular, the Lehmer mean parameter p in the memory cell update was increased. The standard setting for this parameter in L-SHADE-RSP, NL-SHADE-RSP and L-NTADE is 2, and it was increased to 4. In NL-SHADE-LBC, this parameter is linearly controlled, so it was increased by 2. Larger values of p lead to a bias toward larger generated parameter values.
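The effect of the parameter p can be illustrated with a weighted Lehmer mean in the form commonly used in SHADE-type memory updates, i.e., the ratio of the weighted sums of the p-th and (p-1)-th powers. This is a sketch under that assumption; the function name `weighted_lehmer_mean` and the example values are hypothetical, and the weights, which in SHADE-type algorithms are typically derived from fitness improvements, are simply passed in.

```python
def weighted_lehmer_mean(values, weights, p=2.0):
    """Weighted Lehmer mean: sum(w * v**p) / sum(w * v**(p - 1)).

    `values` are successful parameter values (assumed non-negative) and
    `weights` their non-negative weights. Returns 0.0 if the denominator
    vanishes, e.g., when all values are zero.
    """
    numerator = sum(w * v ** p for v, w in zip(values, weights))
    denominator = sum(w * v ** (p - 1) for v, w in zip(values, weights))
    if denominator == 0:
        return 0.0
    return numerator / denominator


# Example: the same successful values give a larger mean as p grows.
successful_values = [0.2, 0.9]
equal_weights = [0.5, 0.5]
mean_p2 = weighted_lehmer_mean(successful_values, equal_weights, p=2.0)
mean_p4 = weighted_lehmer_mean(successful_values, equal_weights, p=4.0)
```

For these example values, the mean with p = 4 lies closer to the larger value 0.9 than the mean with p = 2, which is the bias toward larger parameter values referred to above.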

Table 5 and Table 6 show the comparison of the four algorithms used with the increased Lehmer mean parameter (with _lm in the name) and with crossover rate sorting on CEC 2017 and CEC 2022, respectively.

Comparing Table 1 and Table 5, it can be noted that, in terms of the number of wins/ties/losses, applying the biased Lehmer mean does not change the situation in most cases. However, the performance of NL-SHADE-RSP on high-dimensional problems improves, and the algorithm with crossover rate sorting becomes much worse, especially in

. Similar trends are observed for CEC 2022 in Table 6, where a significant difference may be observed for NL-SHADE-RSP in the

case; here, a more strongly biased mean calculation brings the performance closer to that of NL-SHADE-RSP_s in terms of convergence speed.

Table 7 shows the comparison of algorithms with crossover rate sorting with some of the top-performing algorithms, such as EBOwithCMAR [36] (first place in 2017), jSO [7] (second place) and LSHADE-SPACMA [37] (fourth place) on the CEC 2017 benchmark.

Table 8 contains the comparison on the CEC 2022 benchmark with EA4eigN100_10 [38] (first place in competition), NL-SHADE-RSP-MID [39] (third place in competition) and APGSK-IMODE [40].

The statistical test results are shown in Table 7; it can be seen that the NL-SHADE-RSP and NL-SHADE-LBC algorithms did not perform well on this benchmark, as they were designed for the CEC 2021 and CEC 2022 benchmarks. However, L-SHADE-RSP_s was able to outperform EBOwithCMAR and jSO on high-dimensional problems, despite losing several times in low-dimensional cases. As for L-NTADE_s, its performance is also good, and it won against EBOwithCMAR and jSO in

,

and

, despite performing worse than L-SHADE-SPACMA.

In the case of the CEC 2022 benchmark, shown in Table 8, the L-SHADE-RSP_s performed quite well, even in comparison with the best methods in the

problems, but showed worse results in

, unlike NL-SHADE-RSP_s, which had worse performance. The NL-SHADE-LBC with crossover rate sorting showed similar performance compared to the top algorithms, and the L-NTADE_s was worse in the

case but comparable in

. It is important to note here that the L-NTADE_s is capable of achieving high performance in both CEC 2017 and CEC 2022 benchmarks against different algorithms developed for these problem sets.