Abstract

A traditional total variation (TV) model for infrared image deblurring amid salt-and-pepper noise produces a severe staircase effect. A TV model with low-order overlapping group sparsity (LOGS) suppresses this effect; however, it considers only the prior information of the low-order gradient of the image. This study proposes an image-deblurring model (Lp_HOGS) based on the LOGS model to mine the high-order prior information of an infrared (IR) image amid salt-and-pepper noise. An Lp-pseudo-norm was used to model the salt-and-pepper noise more accurately. Simultaneously, a second-order total variation regularization term with overlapping group sparsity was introduced into the proposed model to further mine the high-order prior information of the image and preserve additional image details. The proposed model is solved with the alternating direction method of multipliers, which obtains the solution of the overall model by solving several simple decoupled subproblems. Experimental results show that the model has better subjective and objective performance than Lp_LOGS and other advanced models, especially when eliminating motion blur.

1. Introduction

Images are a primary source of information because they contain large volumes of information and are well-aligned with the cognitive functions of the brain. In the absence of visible light, infrared (IR) radiation generated by an object can be converted into visible IR images using an IR thermal imaging system. The brightness of each pixel in the image corresponds to the change in the intensity of the object’s radiation energy [1]. IR thermal-imaging systems are widely used in biomedicine, military, industrial, and agricultural applications, owing to their strong environmental adaptability, concealment, anti-interference, and identification abilities. However, they have disadvantages such as high noise, low contrast, and blurring. Among the various types of noise, the salt-and-pepper and the Gaussian noises have the greatest impact on IR images [2].

Salt-and-pepper noise is a common random noise exhibiting sparsity in mathematics and statistics. The common methods for removing salt-and-pepper noise include median filter, partial differential equation (PDE) model-based methods, and total variation (TV) model-based methods. Although the median filter method can effectively remove salt-and-pepper noise, it produces deblurred images with incomplete image details [3]. In the last decade, PDE-based models have been developed for various physical applications in image restoration [4]. TV-based models can answer fundamental questions related to image restoration better than other models [5]. The main advantage of this method is that it preserves the image edge. Traditional TV models, including anisotropic TV (ATV) [6] and isotropic TV (ITV) [7], can only approximate the piecewise constant function, causing staircase effects in the restored images [8,9]. Scholars have proposed several variants of the TV model to alleviate the staircase effect. For example, Liu et al. used the L1 norm to describe the statistical characteristics of noise and introduced overlapping group sparsity TV into the salt-and-pepper noise model, achieving good results [10]. However, many non-convex models have achieved better sparse constraints in practical applications than those based on the L1 norm at low sampling rates. Therefore, Yuan and Ghanem proposed a new sparse optimization model (L0TVPADMM) that used an L0 norm as the fidelity term to solve the reconstruction problem based on the TV model [11]. Adam et al. proposed the HNHOTV-OGS method, which combined non-convex high-order total variation and overlapping group sparse regularization [12]. Chartrand proposed a non-convex optimization problem with the minimization of the Lp-pseudo-norm as the objective function [13,14]. Subsequently, Chartrand and Staneva provided a theoretical condition of the Lp-pseudo-norm to recover an arbitrary sparse signal [15]. Wu and Chen [16] and Wen et al. [17] theoretically demonstrated the superiority of methods based on the Lp-pseudo-norm. The Lp-pseudo-norm exhibited a stronger sparse representation ability than the L1 norm. Therefore, it has garnered extensive research interest in recent years [18,19,20,21,22,23]. For example, Lin et al. imposed sparse constraints on the high-order gradients of the image, combined the Lp-pseudo-norm with the total generalized variation model, and proposed an image restoration algorithm that achieved good performance [22]. Based on the mathematical model in [22], Wang et al. replaced the L1 norm with the Lp-pseudo-norm to describe the statistical characteristics of salt-and-pepper noise and proposed an image denoising method based on the Lp-pseudo-norm with low-order overlapping group sparsity (Lp_LOGS) [23].

Among these algorithms, Lp_LOGS performed the best in removing salt-and-pepper noise. However, this method only considers the prior information of the low-order gradient of an image. This study proposes an image-deblurring model, called Lp_HOGS, that builds on the Lp_LOGS model to mine the high-order prior information of an IR image containing salt-and-pepper noise. A second-order TV regularization term with overlapping group sparsity was introduced into the LOGS model, and the Lp-pseudo-norm was retained in the salt-and-pepper noise model. Experimental results show that, compared with Lp_LOGS and other advanced models, the proposed Lp_HOGS model achieved better peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and gradient magnitude similarity deviation (GMSD). Additionally, the proposed model retained more image details, so the restored images were visually closer to the original images. Finally, the proposed model facilitates subsequent target recognition and tracking.

2. Materials and Methods

2.1. The Background to Deblurring Algorithms

2.1.1. The Lp-Pseudo-Norm

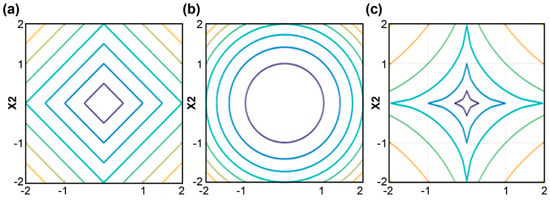

The Lp norm measures distance in a vector space and generalizes the usual concept of “distance.” The Lp norm of a matrix $X$ is defined as $\|X\|_p = \left(\sum_{i}\sum_{j} |X_{ij}|^p\right)^{1/p}$; $p = 1$ and $p = 2$ give the L1 and L2 norms, respectively. The Lp-pseudo-norm is defined as $\|X\|_p^p = \sum_{i}\sum_{j} |X_{ij}|^p$. This study focused on the case where $0 < p < 1$. The contour maps of different norms are presented in Figure 1. The Lp-pseudo-norm has one more degree of freedom than the L1 and L2 norms, and its contour lies closest to the coordinate axes. Hence, the solution has a higher probability of being a point that is on or close to an axis, that is, a sparse point. For these reasons, the Lp-pseudo-norm has a stronger sparse representation ability. Therefore, the Lp-pseudo-norm was introduced into the model for salt-and-pepper denoising in this study.

Figure 1.

Contour maps of the different norms. (a) the L1 norm, (b) the L2 norm, and (c) the Lp-pseudo-norm.
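To make the sparsity argument concrete, the following minimal Python sketch (NumPy assumed; the function name `lp_pseudo_norm` and the two small matrices are illustrative and not taken from the source) compares a sparse and a dense matrix that share the same L1 norm. For an exponent between 0 and 1, the sum of the p-th powers of the absolute entries is smaller for the sparse matrix, which is the behaviour the contour maps suggest.

```python
import numpy as np

def lp_pseudo_norm(x, p):
    """Return sum(|x_ij|**p), i.e. the Lp-pseudo-norm raised to the power p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("this sketch only covers 0 < p <= 1")
    return float(np.sum(np.abs(x) ** p))

# Two hypothetical 2 x 2 matrices with the same L1 norm (2.0).
sparse = np.array([[2.0, 0.0], [0.0, 0.0]])
dense = np.array([[0.5, 0.5], [0.5, 0.5]])

l1_sparse = lp_pseudo_norm(sparse, 1.0)
l1_dense = lp_pseudo_norm(dense, 1.0)
half_sparse = lp_pseudo_norm(sparse, 0.5)  # sqrt(2)
half_dense = lp_pseudo_norm(dense, 0.5)    # 4 * sqrt(0.5)

# The L1 norm cannot tell the two apart; p = 0.5 favours the sparse one.
print(l1_sparse == l1_dense, half_sparse < half_dense)
```

With p = 1 the two matrices are indistinguishable, whereas p = 0.5 assigns the sparse matrix the smaller penalty.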

2.1.2. The Overlapping Group Sparse TV Regularization Term

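The overlapping group sparse TV regularization term is expressed as:

$$R_{\mathrm{OGS}}(F) = \varphi(K_h * F) + \varphi(K_v * F), \tag{1}$$

where $*$ represents the convolution operator, $K_h$ is the first-order horizontal difference convolution kernel, $F$ is the original image, and $K_v$ is the first-order vertical difference convolution kernel. $\varphi(\cdot)$ is the function for calculating the combined gradient and is expressed as:

$$\varphi(V) = \sum_{i}\sum_{j} \left\| \tilde{V}_{i,j,K} \right\|_2, \tag{2}$$

where $\tilde{V}_{i,j,K}$ represents the overlapping group sparsity matrix, which is defined as:

$$\tilde{V}_{i,j,K} = \begin{bmatrix} V_{i-m_1,\,j-m_1} & V_{i-m_1,\,j-m_1+1} & \cdots & V_{i-m_1,\,j+m_2} \\ V_{i-m_1+1,\,j-m_1} & V_{i-m_1+1,\,j-m_1+1} & \cdots & V_{i-m_1+1,\,j+m_2} \\ \vdots & \vdots & \ddots & \vdots \\ V_{i+m_2,\,j-m_1} & V_{i+m_2,\,j-m_1+1} & \cdots & V_{i+m_2,\,j+m_2} \end{bmatrix} \in \mathbb{R}^{K \times K}, \tag{3}$$

where $K$ represents the length or width of the matrix of the combined gradient, $m_1 = \lfloor (K-1)/2 \rfloor$, $m_2 = \lfloor K/2 \rfloor$, and $\lfloor \cdot \rfloor$ means round down to the nearest integer.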

As indicated in Equation (3), the overlapping group sparse TV regularization term takes the gradient at each pixel as the center, constructs a K × K matrix around it, combines the entries using the L2 norm, and uses this combined value in place of the independent gradient of the pixel. Compared with the traditional anisotropic TV regularization term, the overlapping group sparse TV regularization term fully mines the gradient information of each pixel and considers the structural information of the image, thereby increasing the difference between the smooth and the edge areas of the image.
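The grouping described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' implementation: the helper names (`window_sum`, `ogs_functional`, `ogs_tv`) are hypothetical, groups are zero-padded at the image border, and first-order differences use periodic boundaries. Each pixel contributes the L2 norm of the K × K block of gradient values around it.

```python
import numpy as np

def window_sum(a, before, after):
    """Sum `a` over a sliding square window of side before + after + 1 (zero padding)."""
    size = before + after + 1
    rows, cols = a.shape
    padded = np.pad(a, ((before, after), (before, after)), mode="constant")
    total = np.zeros((rows, cols))
    for di in range(size):
        for dj in range(size):
            total += padded[di:di + rows, dj:dj + cols]
    return total

def ogs_functional(v, K=3):
    """Sum over all pixels of the L2 norm of the K x K group around each pixel."""
    m1, m2 = (K - 1) // 2, K // 2
    energy = window_sum(v.astype(float) ** 2, m1, m2)
    return float(np.sqrt(energy).sum())

def ogs_tv(image, K=3):
    """First-order overlapping group sparse TV (periodic forward differences assumed)."""
    dh = np.roll(image, -1, axis=1) - image  # horizontal difference
    dv = np.roll(image, -1, axis=0) - image  # vertical difference
    return ogs_functional(dh, K) + ogs_functional(dv, K)

# A single unit spike belongs to nine overlapping 3 x 3 groups.
spike = np.zeros((5, 5))
spike[2, 2] = 1.0
print(ogs_functional(spike, K=3), ogs_functional(spike, K=1))
```

With K = 1 the functional reduces to the sum of absolute values, that is, the anisotropic TV penalty on the gradient; larger K makes isolated gradient spikes more expensive than gradients that form a coherent structure.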

2.1.3. The Lp_LOGS Model

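Blurred images are generally modeled as images corrupted by a blur kernel and additive noise. They can be represented by the following linear mathematical model:

$$G = H * F + N, \tag{4}$$

where $G$ represents the degraded image to be deblurred, $H$ is the blur kernel, $F$ is the original image, $*$ is the convolution operator, and $N$ is the additive noise, specifically the salt-and-pepper noise in this study.

Recovering $F$ from $G$ is an ill-posed inverse problem. The Lp_LOGS model addresses it by solving:

$$\min_{F}\ \|H * F - G\|_p^p + \mu R_{\mathrm{OGS}}(F), \tag{5}$$

where $\|H * F - G\|_p^p$ is the fidelity term, $R_{\mathrm{OGS}}(F)$, the overlapping group sparse TV regularization term of Section 2.1.2, is the prior term, and $\mu$ is the balance coefficient used to balance the prior and the fidelity terms.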

The Lp_LOGS model leverages the Lp-pseudo-norm and overlapping group sparse TV regularization term, vastly outperforming the traditional ATV model in terms of deblurring. However, the Lp_LOGS model only considers the overlapping group sparse constraints of the low-order gradient information of the image.

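To mine the prior information of the high-order gradient of an image, the second-order overlapping group sparse TV regularization term was introduced into the Lp_LOGS model. Writing $F$ for the original image, $G$ for the degraded image, $H$ for the blur kernel, $K_h$ and $K_v$ for the first-order difference kernels, $\varphi(\cdot)$ for the combined-gradient function of Section 2.1.2, and $\mu$ for the first-order balance coefficient, the proposed novel deblurring model is expressed as:

$$\min_{F}\ \|H * F - G\|_p^p + \mu\left[\varphi(K_h * F) + \varphi(K_v * F)\right] + \omega\left[\varphi(K_{hh} * F) + \varphi(K_{vv} * F) + \varphi(K_{hv} * F)\right], \tag{6}$$

where $\omega$ represents the balance coefficient, $K_{hh}$ is the second-order horizontal difference convolution kernel, $K_{vv}$ is the second-order vertical difference convolution kernel, and $K_{hv}$ is the second-order mixed difference convolution kernel.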

This model was named Lp_HOGS to represent a deblurring model based on the Lp-pseudo-norm with high-order overlapping group sparsity regularization.

2.2. The Solution of the Lp_HOGS Model

The alternating direction method of multipliers (ADMM) was used to solve the Lp_HOGS model. When solving this model, ADMM transformed the original complex problem into several relatively simple subproblems by introducing decoupling variables.

According to the ADMM solution framework, the intermediate variables were included and the original problem was transformed into an optimization problem with constraints, which is expressed as:

The corresponding Lagrange multiplier and quadratic penalty coefficient were used to transform Equation (7) into an unconstrained optimization problem, i.e., the augmented Lagrange objective function of the original problem, which is expressed as:

where represents the inner product of the matrices.
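As a reading aid, one common way to write the split problem and its augmented Lagrangian is sketched below. Every symbol is an assumption introduced for illustration: $F$, $G$, and $H$ denote the latent image, the observed image, and the blur kernel; $Z$ and $X_1, \dots, X_5$ are the auxiliary variables; $\Lambda$ and $\Lambda_i$ are the multipliers; $K_1, \dots, K_5$ stand for the two first-order and three second-order difference kernels; $c_i$ are the corresponding balance coefficients; and a single penalty coefficient $\beta$ is used throughout. The sign and scaling conventions may differ from those of Equations (7) and (8).

```latex
\begin{aligned}
&\min_{F,\,Z,\,X_1,\dots,X_5}\ \|Z\|_p^p + \sum_{i=1}^{5} c_i\,\varphi(X_i)
\quad \text{s.t.} \quad Z = H * F - G,\quad X_i = K_i * F,\ \ i = 1,\dots,5, \\[4pt]
&\mathcal{L} = \|Z\|_p^p
 - \langle \Lambda,\ Z - (H * F - G) \rangle
 + \frac{\beta}{2}\,\bigl\| Z - (H * F - G) \bigr\|_2^2 \\
&\qquad + \sum_{i=1}^{5} \Bigl[ c_i\,\varphi(X_i)
 - \langle \Lambda_i,\ X_i - K_i * F \rangle
 + \frac{\beta}{2}\,\bigl\| X_i - K_i * F \bigr\|_2^2 \Bigr].
\end{aligned}
```

Under these conventions, completing the square turns each inner-product and penalty pair into a single quadratic of the form $\frac{\beta}{2}\|\cdot - \Lambda/\beta\|_2^2$ plus a constant, which is why each subproblem reduces to a least-squares solve, a shrinkage step, or a group-sparse denoising step.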

Subsequently, adding Equation (9) to the right side of Equation (8) obtains:

Each subproblem must first be solved to solve the objective function. As the introduced variables , , and were decoupled, the objective function corresponding to the subproblems of became:

The convolution theorem was used to apply the Fourier transform to both sides of Equation (11), which is expressed as:

where denotes the dot product operator and is the Fourier transform of the matrix.

The partial derivative of can be calculated using:

where represents the conjugate matrix of the matrix.

Here, Equation (13) is assumed to be equal to a zero matrix and can be rearranged as:

where

and

The updated formula of is expressed as:

where represents the dot division operator and is the inverse Fourier transform of .
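The Fourier-domain update can be illustrated with a generic least-squares problem of the same shape: minimize the sum of squared residuals between several circular convolutions of the unknown image and given target images. The sketch below assumes periodic boundary conditions and equal weights on all terms; the helper names (`psf2otf`, `circular_conv`, `solve_quadratic_fft`) and the test kernels are hypothetical, and the multiplier and penalty terms of the actual update are assumed to have been folded into the targets.

```python
import numpy as np

def psf2otf(kernel, shape):
    """Zero-pad a small kernel to `shape`, centre it at (0, 0), and take its 2-D FFT."""
    kh, kw = kernel.shape
    padded = np.zeros(shape)
    padded[:kh, :kw] = kernel
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)

def circular_conv(image, kernel):
    """Circular convolution computed through the convolution theorem."""
    return np.real(np.fft.ifft2(psf2otf(kernel, image.shape) * np.fft.fft2(image)))

def solve_quadratic_fft(kernels, targets):
    """Minimise sum_i ||kernel_i * F - target_i||^2 over F, element-wise in frequency."""
    shape = targets[0].shape
    numerator = np.zeros(shape, dtype=complex)
    denominator = np.zeros(shape)
    for kernel, target in zip(kernels, targets):
        otf = psf2otf(kernel, shape)
        numerator += np.conj(otf) * np.fft.fft2(target)  # conjugate times target
        denominator += np.abs(otf) ** 2                   # squared magnitude
    return np.real(np.fft.ifft2(numerator / denominator))  # dot division

# Consistency check: targets generated from a known image are inverted exactly.
rng = np.random.default_rng(0)
truth = rng.random((32, 32))
kernels = [np.full((3, 3), 1.0 / 9.0),      # box blur
           np.array([[-1.0, 1.0]]),         # horizontal difference
           np.array([[-1.0], [1.0]])]       # vertical difference
targets = [circular_conv(truth, k) for k in kernels]
recovered = solve_quadratic_fft(kernels, targets)
print(np.max(np.abs(recovered - truth)))
```

Because every operator is diagonal in the Fourier basis, the normal equations decouple per frequency, so the update costs only a handful of FFTs per iteration.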

The objective function to solve the subproblem is expressed as:

According to the Lp shrinkage operator:

The updated formula of is expressed as:
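For orientation, a widely used form of the Lp shrinkage operator is the p-shrinkage associated with Chartrand [14], sketched below in Python. That the source uses exactly this form is an assumption, and the function name `lp_shrink` and its argument names are illustrative. For p = 1 the operator reduces to ordinary soft thresholding; for p < 1 it shrinks large values less and small values more.

```python
import numpy as np

def lp_shrink(x, tau, p):
    """Element-wise p-shrinkage: sign(x) * max(|x| - tau**(2 - p) * |x|**(p - 1), 0).

    Assumes tau > 0 and 0 < p <= 1.
    """
    magnitude = np.abs(x)
    # For p < 1, zero entries give an infinite threshold and therefore map to zero.
    with np.errstate(divide="ignore"):
        threshold = tau ** (2.0 - p) * magnitude ** (p - 1.0)
    return np.sign(x) * np.maximum(magnitude - threshold, 0.0)

values = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
soft = lp_shrink(values, tau=1.0, p=1.0)   # soft thresholding
half = lp_shrink(values, tau=1.0, p=0.5)   # less bias on the large entries
print(soft, half)
```

In an ADMM iteration the operator would be applied element-wise to the current residual of the fidelity constraint, with a threshold tied to the reciprocal of the penalty coefficient.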

Additionally, the objective function of the subproblem is expressed as:

According to the ADMM algorithm, the updated formula of is expressed as:

where is the vector matricization operator, is the matrix vectorization operator, is the identity matrix, and is the diagonal matrix with diagonal elements.

where represents an arbitrary matrix.
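The diagonal-matrix update can be read as a majorization-minimization (MM) step for a group-sparse denoising problem. The Python sketch below is a generic version under stated assumptions, not the authors' code: it minimizes half the penalty coefficient times the squared distance to an input plus the overlapping group sparsity functional, uses zero padding at the border, and adds a small `eps` to avoid division by zero. All names (`window_sum`, `ogs_mm`, `objective`) are hypothetical.

```python
import numpy as np

def window_sum(a, before, after):
    """Sum `a` over a sliding square window of side before + after + 1 (zero padding)."""
    size = before + after + 1
    rows, cols = a.shape
    padded = np.pad(a, ((before, after), (before, after)), mode="constant")
    total = np.zeros((rows, cols))
    for di in range(size):
        for dj in range(size):
            total += padded[di:di + rows, dj:dj + cols]
    return total

def ogs_functional(x, K=3):
    m1, m2 = (K - 1) // 2, K // 2
    return float(np.sqrt(window_sum(x ** 2, m1, m2)).sum())

def ogs_mm(v0, beta, K=3, iterations=10, eps=1e-12):
    """Approximate argmin_x 0.5 * beta * ||x - v0||^2 + ogs_functional(x) by MM."""
    m1, m2 = (K - 1) // 2, K // 2
    x = v0.astype(float).copy()
    for _ in range(iterations):
        # Inverse L2 norm of the group anchored at every pixel.
        inv_norm = 1.0 / np.sqrt(window_sum(x ** 2, m1, m2) + eps)
        # Diagonal weight: sum of inverse norms over all groups containing the pixel.
        weight = window_sum(inv_norm, m2, m1)
        # Minimiser of the quadratic majoriser (the system matrix is diagonal).
        x = v0 / (1.0 + weight / beta)
    return x

def objective(x, v0, beta, K=3):
    return 0.5 * beta * float(np.sum((x - v0) ** 2)) + ogs_functional(x, K)

rng = np.random.default_rng(1)
v0 = rng.normal(size=(16, 16))
x = ogs_mm(v0, beta=2.0)
print(objective(v0, v0, 2.0), objective(x, v0, 2.0))
```

Because the majorizer is separable, the matrix to be inverted is diagonal and the update is a pixel-wise division, which keeps each MM iteration cheap.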

Similarly, the updated formulas of can be obtained, which are expressed as:

The objective function to solve subproblem is expressed as:

According to the gradient ascent method, the updated formula of is expressed as:

where is the learning rate.

The objective function to solve subproblem is expressed as:

According to the gradient ascent method, the updated formula of is expressed as:

Similarly, the updated formulas of can be obtained, which are expressed as:

Hence, the subproblems are solved.

Given the above descriptions, the Lp_HOGS algorithm is summarized in Algorithm 1, where tol represents the threshold.

| Algorithm 1. Lp_HOGS |
| --- |
| Input Observed image . |
| Output Deblurred image . |
| Initialize: |
| 1: If do |
| 2: Use Equations (15)–(17) to update ; |
| 3: Use Equation (20) to update ; |
| 4: Use Equations (22) and (24) to update ; |
| 5: Use Equation (26) to update ; |
| 6: Use Equations (28) and (29) to update ; |
| 7: ; |
| 8: ; |
| 9: End if |
| 10: Return as . |

3. Results and Discussion

The IR test images used in the experiment were downloaded from the publicly available datasets found at http://adas.cvc.uab.es/elektra/datasets/far-infra-red/ (accessed on 5 January 2022) and http://www.dgp.toronto.edu/~nmorris/data/IRData/ (accessed on 5 January 2022). This study evaluated the quality of the denoised images from subjective and objective aspects. The objective evaluation metrics used in this study are PSNR, SSIM, and GMSD, which are defined using Equations (30)–(32), respectively:

where and are the original and the restored image, respectively. and represent the mean values of and , respectively. and represent the variances of and , respectively. represents the covariance of and . and are constants to ensure that SSIM is not zero.

where

where is a constant to ensure that the denominator is a non-zero number. and refer to the gradient amplitude of the image in the horizontal and vertical directions, respectively.

Larger PSNR, closer-to-1 SSIM, and smaller GMSD values indicate better deblurring performance.
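The three indicators can be sketched in Python as follows. This is a simplified illustration, not the evaluation code used in the study: images are assumed to be scaled to [0, 1], SSIM is computed once over the whole image from the global means, variances, and covariance (practical implementations usually average over local windows), and GMSD uses Prewitt gradients with periodic boundaries and omits the downsampling step of the original metric. The function names and the constant `c` are assumptions.

```python
import numpy as np

def psnr(reference, restored, peak=1.0):
    mse = np.mean((reference - restored) ** 2)
    return float(10.0 * np.log10(peak ** 2 / mse))

def global_ssim(reference, restored, peak=1.0):
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2  # stabilise the division
    mu_x, mu_y = reference.mean(), restored.mean()
    var_x, var_y = reference.var(), restored.var()
    cov_xy = np.mean((reference - mu_x) * (restored - mu_y))
    return float(((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2))
                 / ((mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)))

def prewitt_magnitude(image):
    def smooth(a, axis):
        return (np.roll(a, 1, axis=axis) + a + np.roll(a, -1, axis=axis)) / 3.0
    gx = smooth(np.roll(image, -1, axis=1) - np.roll(image, 1, axis=1), axis=0)
    gy = smooth(np.roll(image, -1, axis=0) - np.roll(image, 1, axis=0), axis=1)
    return np.sqrt(gx ** 2 + gy ** 2)

def gmsd(reference, restored, c=0.0026):
    m_r, m_d = prewitt_magnitude(reference), prewitt_magnitude(restored)
    gms = (2.0 * m_r * m_d + c) / (m_r ** 2 + m_d ** 2 + c)  # similarity map
    return float(np.std(gms))                                 # its deviation

rng = np.random.default_rng(2)
clean = rng.random((32, 32))
noisy = np.clip(clean + 0.1 * rng.normal(size=clean.shape), 0.0, 1.0)
print(psnr(clean, noisy), global_ssim(clean, noisy), gmsd(clean, noisy))
```

A perfect restoration gives an SSIM of 1 and a GMSD of 0, which matches the direction of improvement stated above.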

The variable parameters were preset before the experiment to focus on PSNR optimization before optimizing SSIM and GMSD. The variable parameters of the Lp_HOGS model were set as follows:

The maximum number of iterations and the learning rate were set to 500 and 0.618, respectively.

For each model, the balance coefficient , the quadratic penalty coefficient , and the Lp-pseudo-norm p were manually optimized to achieve the best deblurring effect on the IR image and ensure a fair experiment.

A sensitivity analysis revealed that the group size K was essential: the image quality indicators were optimal when the overlapping group matrix had size K = 3. The structured information of the image could not be fully mined if K was too small. Conversely, unstructured information could be introduced if K was excessively large.

3.1. The Comparison of Lp_HOGS with Lp_LOGS

Lp_HOGS and Lp_LOGS were compared to verify the effect of adding the second-order overlapping group sparse TV regularization term. We added 30%, 40%, and 50% salt-and-pepper noise to images degraded by the Gaussian, box, and motion blurs to obtain nine degradation combinations and compared the quality of the deblurred images. Nine distinct IR images were selected as the test images. Images of a passerby, a station, a truck, a car, and some buildings were 384 × 288 pixels each, and images of a garden, some stairs, a corridor, and a zebra crossing were 506 × 408 pixels each.

3.1.1. The Gaussian Blur

The noise was generated by the MATLAB built-in function “imnoise(I, type, parameters)”. For example, 30% salt-and-pepper noise was generated with imnoise(I, ‘salt & pepper’, 0.3). We added 30%, 40%, and 50% salt-and-pepper noise to the test images with a 7 × 7 Gaussian blur. The experimental results are summarized in Table 1.
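The degradation pipeline described here (blur first, then salt-and-pepper noise) can be mimicked in Python as a rough sketch. Several details are assumptions because the source does not state them: the Gaussian standard deviation (`sigma=1.5`), periodic boundary handling, an intensity range of [0, 1], and an equal split between salt and pepper pixels. The function names are hypothetical.

```python
import numpy as np

def gaussian_kernel(size=7, sigma=1.5):
    """Normalised size x size Gaussian kernel (sigma is an assumed value)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()

def circular_blur(image, kernel):
    """Blur with periodic boundaries via the FFT."""
    kh, kw = kernel.shape
    padded = np.zeros_like(image, dtype=float)
    padded[:kh, :kw] = kernel
    # Centre the kernel at index (0, 0) so the blur does not shift the image.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))

def add_salt_and_pepper(image, density, rng):
    """Set roughly `density` of the pixels to 0 (pepper) or 1 (salt)."""
    noisy = image.copy()
    u = rng.random(image.shape)
    noisy[u < density / 2.0] = 0.0
    noisy[(u >= density / 2.0) & (u < density)] = 1.0
    return noisy

rng = np.random.default_rng(0)
flat = np.full((64, 64), 0.5)                     # stand-in for a test image
blurred = circular_blur(flat, gaussian_kernel(7, 1.5))
noisy = add_salt_and_pepper(blurred, 0.3, rng)    # 30% noise level
changed = float(np.mean(noisy != blurred))
print(changed)
```

The fraction of corrupted pixels is only approximately equal to the requested density, since each pixel is corrupted independently at random.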

Table 1.

The Lp_HOGS and Lp_LOGS deblurring effects on images with the 7 × 7 Gaussian blur. The optimal indicators for each condition are denoted in bold to facilitate data observation.

All three performance indicators obtained by the Lp_HOGS model were better than those of the Lp_LOGS model, demonstrating that the proposed method achieved better deblurring and denoising effects. The Lp_HOGS model achieved average PSNR values that were 0.304, 0.784, and 1.287 dB higher than those of the Lp_LOGS model when the salt-and-pepper noise was 30%, 40%, and 50%, respectively. Thus, the advantage of the Lp_HOGS model grew as the noise level increased.

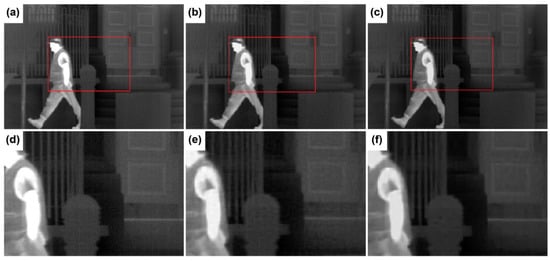

The passerby images with different degrees of degradation are shown in Figure 2. The deblurring effects using Lp_LOGS and Lp_HOGS are compared in Figure 3.

Figure 2.

The passerby image with different degrees of degradation. (a) The original image, (b) the 7 × 7 Gaussian blur, and (c) the 7 × 7 Gaussian blur + 30% salt-and-pepper noise.

Figure 3.

A comparison of the deblurring effects on a passerby image. (a) The original passerby image, (b) the image deblurred using Lp_LOGS, and (c) the image deblurred using Lp_HOGS. (d–f) Enlarged views of the red boxes in (a–c), respectively.

A comparison of Figure 2a,c with Figure 3b,c revealed that the image deblurred by Lp_LOGS had more speckle noise than the one deblurred by Lp_HOGS. The images in the red boxes in Figure 3b,c were enlarged to further visualize the difference between the two and the results are displayed in Figure 3e,f, respectively.

The right halves of Figure 3e,f were largely smooth areas. Figure 3f had less salt-and-pepper noise than Figure 3e, and its visual effect was closer to that of the original image. The left halves were mostly edge areas, so the denoising effect was difficult to see there. However, Figure 3f retained more details; for example, the lines of the iron fence are clearer and more continuous. Overall, the visual effect of Figure 3f was closer to that of the original image, indicating that the deblurring performance of Lp_HOGS under the Gaussian blur was better than that of Lp_LOGS. Lp_HOGS also preserved more details while suppressing the staircase effect of deblurred images.

3.1.2. The Box Blur

We added 30%, 40%, and 50% salt-and-pepper noise to the test images with a 7 × 7 box blur. The experimental results are listed in Table 2.

Table 2.

The Lp_HOGS and Lp_LOGS deblurring effects on images with a 7 × 7 box blur. The optimal indicators for each condition are denoted in bold.

All three performance indicators obtained by the Lp_HOGS model were better than those obtained by the Lp_LOGS model. The Lp_HOGS model achieved average PSNR values that were 0.362, 0.805, and 1.356 dB higher than those of the Lp_LOGS model when the salt-and-pepper noise was 30%, 40%, and 50%, respectively. In terms of the difference in the PSNR value, Lp_HOGS had a marginally greater advantage over Lp_LOGS in the 7 × 7 box blur than in the 7 × 7 Gaussian blur.

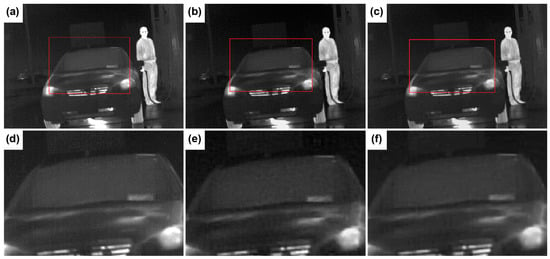

A station image with different degrees of degradation is shown in Figure 4. The images that were deblurred by Lp_LOGS and Lp_HOGS are compared in Figure 5.

Figure 4.

The station image with different degrees of degradation. (a) The original image, (b) the 7 × 7 box blur, and (c) the 7 × 7 box blur + 40% salt-and-pepper noise.

Figure 5.

A comparison of deblurring effects of the two models on a station image. (a) The original station image, (b) the image deblurred using Lp_LOGS, and (c) the image deblurred using Lp_HOGS. (d–f) Enlarged views of the red boxes in (a–c), respectively.

A comparison of Figure 4a,c with Figure 5b,c revealed satisfactory overall deblurring effects of Lp_LOGS and Lp_HOGS on images with the box blur. However, the image deblurred by Lp_LOGS contained more speckle noise than the image deblurred by Lp_HOGS. The images in the red boxes in Figure 5b,c are enlarged in Figure 5e,f, respectively.

The upper halves of Figure 5e,f were mostly smooth areas. The model used to create Figure 5f removed salt-and-pepper noise more thoroughly than that used on Figure 5e and its visual effect was closer to the original image. The lower half mostly contained the edge area. Thus, the denoising effect was not evident. However, Figure 5f retained more detail from the original image. For example, the car logo is clearer. Overall, the visual effect of Figure 5f was closer to that of the original image, indicating that Lp_HOGS exhibited better deblurring performance than Lp_LOGS under the box blur. It was also reconfirmed that Lp_HOGS preserved more detail while suppressing the staircase effect of the deblurred images.

3.1.3. The Motion Blur

Finally, 30%, 40%, and 50% salt-and-pepper noise were added to the test images with a 7 × 7 motion blur. The experimental results are listed in Table 3.

Table 3.

The Lp_HOGS and Lp_LOGS deblurring effects on images with the 7 × 7 motion blur. The optimal indicators under each condition are denoted in bold.

All three performance indicators obtained by the Lp_HOGS model were better than those obtained by the Lp_LOGS model. The Lp_HOGS model achieved average PSNR values that were 1.387, 1.774, and 2.372 dB higher than those of the Lp_LOGS model when the salt-and-pepper noise was 30%, 40%, and 50%, respectively. The difference in the PSNR values showed that Lp_HOGS had more obvious advantages over Lp_LOGS in the motion blur than in the Gaussian and box blurs.

The images of a truck with different degrees of degradation are shown in Figure 6. The deblurring effects of Lp_LOGS and Lp_HOGS are compared in Figure 7.

Figure 6.

The truck image with different degrees of degradation. (a) The original image, (b) the 7 × 7 motion blur, and (c) the 7 × 7 motion blur + 50% salt-and-pepper noise.

Figure 7.

A comparison of deblurring effects of the two models on a truck image. (a) The original truck image, (b) the image deblurred using Lp_LOGS, and (c) the image deblurred using Lp_HOGS. (d–f) Enlarged views of the red boxes in (a–c), respectively.

A comparison of Figure 6a,c with Figure 7b,c revealed that Lp_LOGS and Lp_HOGS achieved satisfactory deblurring effects in terms of motion blur. Furthermore, the performance edge of the proposed model was similar to that under the Gaussian and the box blurs. The deblurring effects are depicted in Figure 7b,c,e,f.

3.2. The Comparison with Other Methods

This section compares the proposed model with existing models, including ATV [6], ITV [7], L0TVPADMM [11], and HNHOTV-OGS [12]. Nine distinct IR images were selected as test images. The truck, the buildings, the car, and the figure images were 384 × 288 pixels, and the garden, the stairs, the corridor, the road, and the zebra crossing images were 506 × 408 pixels. The experimental results are listed in Table 4, with PSNR reported in dB.

Table 4.

The deblurring effects of Lp_HOGS and the other methods on the images with a 7 × 7 Gaussian blur. The optimal indicators under each condition are denoted in bold.

ITV performed the worst, whereas Lp_HOGS outperformed the other four methods in terms of PSNR, SSIM, and GMSD. The PSNR of Lp_HOGS under 30%, 40%, and 50% salt-and-pepper noise was at least 1.2 dB higher than that of L0TVPADMM, which had the second-best PSNR. Therefore, the proposed Lp_HOGS model achieved better performance for removing salt-and-pepper noise than the other state-of-the-art methods. In addition, the IR image after deblurring obtained a better visual effect, which is conducive to subsequent image analysis and processing.

4. Conclusions

This study proposed an image-deblurring model based on the LOGS model to mine the high-order prior information of an IR image containing salt-and-pepper noise. The model combines the advantage of the Lp-pseudo-norm in describing salt-and-pepper noise with a high-order overlapping group sparsity term that supplements the low-order LOGS regularization term. The proposed IR image-deblurring model (Lp_HOGS) successfully deblurred an IR image under salt-and-pepper noise. Lp_HOGS achieved average PSNR values that were 0.304, 0.784, and 1.287 dB higher for salt-and-pepper noise at 30%, 40%, and 50%, respectively, than those of the Lp_LOGS model for a Gaussian blur. Similarly, the PSNR of Lp_HOGS was 0.362, 0.805, and 1.356 dB higher for the box blur and 1.387, 1.774, and 2.372 dB higher for the motion blur. The findings of this study resulted in the following conclusions:

- Upon adding the Gaussian blur and different levels of salt-and-pepper noise to a given test image, the Lp_HOGS model exhibited a better deblurring effect than the existing models. This result implies that the Lp-pseudo-norm had a stronger sparse representation ability and the overlapping group sparsity regularization term increased the difference between the smooth and the edge areas of an image.

- Upon adding the different types of blur and levels of salt-and-pepper noise to a given test image, Lp_HOGS yielded stronger indicators than Lp_LOGS. This advantage became greater as the noise level increased. Therefore, the high-order prior information of the image improved the quality of the deblurred IR images and the stability of salt-and-pepper noise removal.

- The advantage of Lp_HOGS over Lp_LOGS was most obvious in terms of the motion blur, indicating that adding the prior constraints of the high-order gradient to the model could significantly improve the IR image deblurring effect amid the motion blur.

A limitation of this approach is that the application of the Lp_HOGS model is time-consuming. In our future work, we will accelerate the process by introducing accelerated ADMM to improve the performance and efficiency of the proposed method. Nevertheless, the proposed Lp_HOGS model provides a new approach to reduce the salt-and-pepper noise impact on IR images.

Author Contributions

Conceptualization, Z.Y. and Y.C.; Methodology, Z.Y.; Software, Z.Y. and J.H.; Validation, Z.Y. and X.O.; Formal analysis, Z.Y.; Investigation, Z.Y. and J.H.; Resources, Y.C.; Data curation, Z.Y. and X.O.; Writing—original draft preparation, Z.Y. and X.O.; Writing—review and editing, Z.Y. and Y.C.; Visualization, Z.Y.; Supervision, Y.C.; Project administration, Y.C.; Funding acquisition, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation Project of Fujian Province, grant numbers 2020J05169 and 2020J01816; Principal Foundation of Minnan Normal University, grant number KJ19019; and High-Level Science Research Project of Minnan Normal University, grant number GJ19019.

Data Availability Statement

The data presented in this study are openly available at http://adas.cvc.uab.es/elektra/datasets/far-infra-red/ (accessed on 5 January 2022) and http://www.dgp.toronto.edu/~nmorris/data/IRData/ (accessed on 5 January 2022). Additionally, the complete code and data for the proposed method in this paper are available upon request from the corresponding author.

Acknowledgments

Thank you to X.L. of the University of Electronic Science and Technology of China for sharing the FTV4Lp code.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Li, J.; Yang, W.; Zhang, X. Infrared Image Processing, Analysis, and Fusion; Science Press: Beijing, China, 2009; pp. 7–12. Available online: https://max.book118.com/html/2019/0105/5210100330001344.shtm (accessed on 15 January 2022).

2. Fan, Z.; Bi, D.; He, L.; Ma, S. Noise suppression and details enhancement for infrared image via novel prior. Infrared Phys. Technol. 2016, 74, 44–52.

3. Faragallah, O.S.; Ibrahem, H.M. Adaptive switching weighted median filter framework for suppressing salt-and-pepper noise. Int. J. Electron. Commun. 2016, 70, 1034–1040.

4. Afraites, L.; Hadri, A.; Laghrib, A. A denoising model adapted for impulse and Gaussian noises using a constrained-PDE. Inverse Probl. 2020, 36, 025006.

5. Lim, H.; Williams, T.N. A Non-standard Anisotropic Diffusion for Speckle Noise Removal. Syst. Cybern. Inform. 2007, 5, 12–17. Available online: http://www.iiisci.org/Journal/pdv/sci/pdfs/P610066.pdf (accessed on 16 August 2022).

6. Osher, S.; Burger, M.; Goldfarb, D.; Jinjun, X.; Wotao, Y. An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 2005, 4, 460–489.

7. Peng, Z.; Chen, Y.; Pu, T.; Wang, Y.; He, Y. Image denoising based on sparse representation and regularization constraint: A Review. J. Data Acquis. Process. 2018, 33, 1–11. Available online: http://www.cnki.com.cn/Article/CJFDTotal-SJCJ201801001.htm (accessed on 16 December 2021).

8. Li, S.; He, Y.; Chen, Y.; Liu, W.; Yang, X.; Peng, Z. Fast multi-trace impedance inversion using anisotropic total p-variation regularization in the frequency domain. J. Geophys. Eng. 2018, 15, 2171–2182.

9. Wu, H.; He, Y.; Chen, Y.; Shu, L.; Peng, Z. Seismic acoustic impedance inversion using mixed second-order fractional ATpV regularization. IEEE Access 2020, 8, 3442–3452.

10. Liu, G.; Huang, T.Z.; Liu, J.; Lv, X.G. Total variation with overlapping group sparsity for image deblurring under impulse noise. PLoS ONE 2015, 10, e0122562.

11. Yuan, G.; Ghanem, B. l0tv: A new method for image restoration in the presence of impulse noise. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5369–5377.

12. Adam, T.; Paramesran, R. Image denoising using combined higher order non-convex total variation with overlapping group sparsity. J. Multidimens. Syst. Signal Process. 2019, 30, 503–527.

13. Chartrand, R. Exact reconstruction of sparse signals via nonconvex minimization. IEEE Signal Process. Lett. 2007, 14, 707–710.

14. Chartrand, R. Shrinkage mappings and their induced penalty functions. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 1026–1029.

15. Chartrand, R.; Staneva, V. Restricted isometry properties and nonconvex compressive sensing. Inverse Probl. 2008, 24, 035020.

16. Wu, R.; Chen, D.R. The improved bounds of restricted isometry constant for recovery via l(p)-minimization. IEEE Trans. Inf. Theory 2013, 59, 6142–6147.

17. Wen, J.; Li, D.; Zhu, F. Stable recovery of sparse signals via lp-minimization. Appl. Comput. Harmon. Anal. 2015, 38, 161–176.

18. Chen, Y.; Peng, Z.; Gholami, A.; Yan, J.; Li, S. Seismic signal sparse time-frequency representation by Lp-quasinorm constraint. Digital Signal Process. 2019, 87, 43–59.

19. Liu, X.; Chen, Y.; Peng, Z.; Wu, J. Total variation with overlapping group sparsity and Lp quasinorm for infrared image deblurring under salt-and-pepper noise. J. Electron. Imaging 2019, 28, 043031.

20. Liu, X.; Chen, Y.; Peng, Z.; Wu, J.; Wang, Z. Infrared image super-resolution reconstruction based on quaternion fractional order total variation with Lp quasinorm. Appl. Sci. 2018, 8, 1864.

21. Xu, J.; Chen, Y. Method of removing salt and pepper noise based on total variation technique and Lp pseudo-norm. J. Data Acquis. Process. 2020, 35, 89–99. Available online: https://qikan.cqvip.com/Qikan/Article/Detail?id=7100955546 (accessed on 16 August 2022).

22. Lin, F.; Chen, Y.; Chen, Y.; Yu, F. Image deblurring under impulse noise via total generalized variation and non-convex shrinkage. Algorithms 2019, 12, 221.

23. Wang, L.; Chen, Y.; Lin, F.; Chen, Y.; Yu, F.; Cai, Z. Impulse noise denoising using total variation with overlapping group sparsity and Lp-pseudo-norm shrinkage. Appl. Sci. 2018, 8, 2317.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).