Abstract

The slime mold algorithm (SMA) is a swarm-based metaheuristic algorithm inspired by the natural oscillatory patterns of slime molds. Compared with other algorithms, the SMA is competitive but still suffers from an imbalance between exploitation and exploration and a tendency to fall into local optima. To overcome these drawbacks, an improved SMA with a dynamic quantum rotation gate and opposition-based learning (DQOBLSMA) is proposed in this paper. Specifically, for the first time, two mechanisms are used simultaneously to improve the robustness of the original SMA: the dynamic quantum rotation gate and opposition-based learning. Unlike the original quantum rotation gate, the dynamic quantum rotation gate uses an adaptive parameter control strategy based on fitness to balance exploitation and exploration. The opposition-based learning strategy enhances population diversity and helps the search avoid local optima. The superiority of the DQOBLSMA is verified on twenty-three benchmark test functions, and its ability to solve practical problems is demonstrated on three typical engineering design problems. Experimental results show that the proposed algorithm outperforms other comparative algorithms in convergence speed, convergence accuracy, and reliability.

1. Introduction

In the optimization field, solving an optimization problem usually means finding the optimal value to maximize or minimize a set of objective functions without violating constraints [1]. Optimization methods can be divided into two main categories: exact algorithms and metaheuristics [2]. While exact algorithms can provide global optima precisely, their execution times increase exponentially with the number of variables, so they are considered less suitable and practical [3]. In contrast, metaheuristic algorithms can identify the best or a near-optimal solution in a reasonable amount of time [4]. During the last two decades, metaheuristic algorithms have gained much attention and have been developed extensively owing to their flexibility, simplicity, and global optimization capability. Thus, they are widely used for solving optimization problems in almost every domain, such as big data text clustering [5], tuning of fuzzy control systems [6,7], path planning [8,9], feature selection [10,11,12], training neural networks [13], parameter estimation for photovoltaic cells [14,15,16], image segmentation [17,18], tomography analysis [19], and permutation flowshop scheduling [20,21].

Metaheuristic algorithms simulate natural phenomena or laws of physics and are usually classified into three categories: evolutionary algorithms, physical and chemical algorithms, and swarm-based algorithms. Evolutionary algorithms are a class of algorithms that simulate the laws of evolution in nature. The best known is the genetic algorithm (GA) [22], which was developed from Darwin's theory of survival of the fittest. Other algorithms in this class include differential evolution (DE) [23], which simulates the crossover and mutation mechanisms of inheritance, evolutionary programming (EP) [24], and evolutionary strategies (ES) [25]. Physical and chemical algorithms search for the optimum by simulating chemical laws or physical phenomena of the universe. Algorithms in this category include simulated annealing (SA) [26], electromagnetic field optimization (EFO) [27], equilibrium optimizer (EO) [28], and the Archimedes optimization algorithm (ArchOA) [29]. Swarm-based algorithms simulate the behavior of social groups of animals or humans. Examples of such algorithms include the whale optimization algorithm (WOA) [30], salp swarm algorithm (SSA) [31], moth search algorithm (MSA) [32], aquila optimizer (AO) [33], grey wolf optimizer (GWO) [34], Harris hawks optimization (HHO) [35], and particle swarm optimization (PSO) [36].

However, the no free lunch (NFL) theorem [37] proves that no single algorithm can solve all optimization problems well. An algorithm that is particularly effective for one class of problems may not be able to solve other classes of optimization problems. This motivates us to propose new algorithms or improve the existing ones. The slime mold algorithm (SMA) [38] is a new metaheuristic algorithm proposed by Li et al. in 2020. The basic idea of the SMA is based on the foraging behavior of slime mold, which exhibits different feedback behaviors depending on the food quality. Different search mechanisms have been introduced into the SMA to solve various optimization problems. For example, Zhao et al. [39] introduced a diffusion mechanism and association strategy into the SMA and applied the proposed algorithm to the segmentation of CT images. Salah et al. [40] applied the slime mold algorithm to optimize an artificial neural network model for predicting monthly stochastic urban water demand. Wang et al. [41] developed a parallel slime mold algorithm for the distribution network reconfiguration problem with distributed generation. Tang et al. [42] introduced chaotic opposition-based learning and spiral search strategies into the SMA and proposed two adaptive parameter control strategies. Their simulation results show that the proposed algorithms outperform other similar algorithms. Örnek et al. [43] proposed an enhanced SMA that combines the sine cosine algorithm with the position update of the SMA. Their experimental results show that the proposed hybrid algorithm has a better ability to jump out of local optima with faster convergence.

Although the SMA, as a new algorithm, is competitive with other algorithms, it also has some shortcomings. Like many other swarm-based metaheuristic algorithms, the SMA suffers from slow convergence and premature convergence to a local optimum [44]. In addition, the update strategy of the SMA reduces its exploration capability and population diversity. To address these problems, an improved algorithm based on the SMA, called the dynamic quantum rotation gate and opposition-based learning SMA (DQOBLSMA), is proposed. In this paper, we introduce two mechanisms, the dynamic quantum rotation gate (DQRG) and opposition-based learning (OBL), into the SMA simultaneously. Both mechanisms address the shortcomings of the original algorithm in terms of slow convergence and the tendency to fall into local optima. First, DQRG rotates the search individuals toward the optimum, improving the diversity of the population and enhancing the global exploration capability of the algorithm. At the same time, OBL explores part of the solutions in the opposite direction, improving the algorithm's ability to jump out of local optima. The performance of the DQOBLSMA was evaluated by comparing it with the original SMA and with other advanced algorithms. In addition, three different constrained engineering problems were used to further verify the performance of the DQOBLSMA: the welded beam design problem, the tension/compression spring design problem, and the pressure vessel design problem.

The main contributions of this paper are summarized as follows:

1. DQRG and OBL strategies were introduced into SMA to improve the exploration capabilities of SMA.

2. The DQRG strategy is proposed in order to balance the exploration and exploitation phases.

3. By comparing five well-known metaheuristic algorithms, experiments show that the proposed DQOBLSMA is more robust and effective.

4. Experiments on three engineering design optimization problems show that the DQOBLSMA can be effectively applied to practical engineering problems.

This paper is organized as follows. Section 2 describes the slime mold algorithm, quantum rotation gate, and opposition-based learning. Section 3 presents the proposed improved slime mold algorithm. Section 4 presents the experimental study and discussion using benchmark functions. The DQOBLSMA is applied to solve the three engineering problems in Section 5. Finally, the conclusion and future work are given in Section 6.

2. Materials and Methods

2.1. Slime Mold Algorithm

The slime mold algorithm (SMA) [38] is a swarm-based metaheuristic algorithm recently developed by Li et al. The algorithm simulates a range of foraging behaviors of the slime mold. The SMA uses adaptive weights to simulate the positive and negative feedback of the propagation wave that the slime mold generates with a biological oscillator while foraging for a food source. Three special behaviors of the slime mold are mathematically formulated in the SMA: approaching food, wrapping food, and grabbing food. The process of approaching food can be expressed as

$$\vec{X}(t+1) = \begin{cases} \vec{X}_b(t) + \vec{vb} \cdot \left( \vec{W} \cdot \vec{X}_A(t) - \vec{X}_B(t) \right), & r < p \\ \vec{vc} \cdot \vec{X}(t), & r \ge p \end{cases} \tag{1}$$

where t is the number of current iterations, $\vec{X}(t+1)$ is the newly generated position, $\vec{X}_b(t)$ denotes the best position found by the slime mold in iteration t, $\vec{X}_A(t)$ and $\vec{X}_B(t)$ are two random positions selected from the population of slime mold, and r is a random value in [0, 1].

$\vec{vb}$ and $\vec{vc}$ are the coefficients that simulate the oscillation and contraction mode of slime mold, respectively, and $\vec{vc}$ is designed to linearly decrease from one to zero during the iterations. The range of $\vec{vb}$ is from $-a$ to $a$, and a is computed as

$$a = \operatorname{arctanh}\left( -\frac{t}{T} + 1 \right) \tag{2}$$

where T is the maximum number of iterations.

According to Equations (1) and (2), it can be seen that as the number of iterations increases, the slime mold will wrap the food.

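W is an important factor that indicates the weight of the slime mold, and it is calculated as follows:

$$\vec{W}(\mathrm{SmellIndex}(i)) = \begin{cases} 1 + r \cdot \log\left( \dfrac{bF - S(i)}{bF - wF} + 1 \right), & 1 \le i \le \dfrac{N}{2} \\ 1 - r \cdot \log\left( \dfrac{bF - S(i)}{bF - wF} + 1 \right), & \dfrac{N}{2} < i \le N \end{cases} \tag{3}$$

$$\mathrm{SmellIndex} = \operatorname{sort}(S) \tag{4}$$

where N is the size of the population, i represents the i-th individual in the population, $i = 1, 2, \ldots, N$, r denotes a random value in the interval [0, 1], bF denotes the optimal fitness obtained in the current iteration, wF denotes the worst fitness obtained in the current iteration, S(i) represents the fitness of X, and SmellIndex denotes the sequence of sorted fitness values. The parameter p in Equation (1) is given by

$$p = \tanh\left| S(i) - DF \right| \tag{5}$$

where DF denotes the best fitness obtained in all iterations.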

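Finally, when the slime mold has found the food, it still has a certain chance z to search for new food, which is formulated as

$$\vec{X}(t+1) = \begin{cases} rand \cdot (UB - LB) + LB, & rand < z \\ \vec{X}_b(t) + \vec{vb} \cdot \left( \vec{W} \cdot \vec{X}_A(t) - \vec{X}_B(t) \right), & r < p \\ \vec{vc} \cdot \vec{X}(t), & r \ge p \end{cases} \tag{6}$$

where UB and LB are the upper and lower limits, respectively, and rand is a random value in [0, 1]. z is set to 0.03 in the original SMA.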

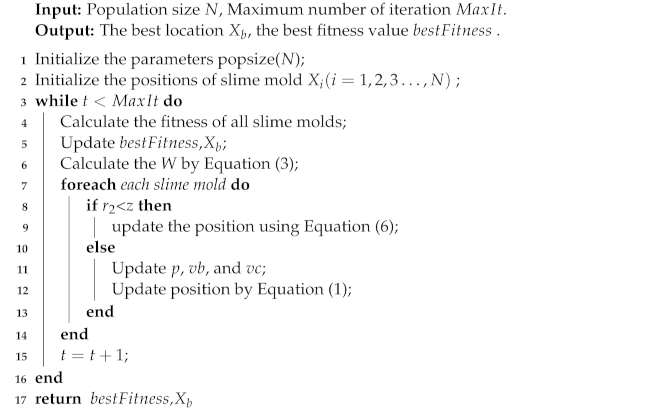

Finally, the pseudo-code of SMA is given in Algorithm 1.

Algorithm 1: Pseudo-code of the slime mold algorithm (SMA)
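The update rules described in Section 2.1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `sma_minimize`, the scalar bounds, the clamping of new positions to the bounds, the base-10 logarithm in the weight, and the uniform sampling of the contraction coefficient from a linearly shrinking interval are all choices made here for the sketch.

```python
import math
import random


def sma_minimize(f, lb, ub, dim, n=30, max_iter=200, z=0.03, seed=0):
    """Minimal SMA sketch for minimization; returns (best_x, best_f)."""
    rng = random.Random(seed)
    eps = 1e-12  # avoids division by zero when all fitness values are equal
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    best_x, best_f = None, math.inf  # best fitness over all iterations (DF)

    for t in range(1, max_iter + 1):
        fit = [f(x) for x in pop]
        order = sorted(range(n), key=lambda i: fit[i])  # sorted fitness sequence
        bF, wF = fit[order[0]], fit[order[-1]]
        if bF < best_f:
            best_f, best_x = bF, pop[order[0]][:]

        # Weights: the better half gets 1 + r*log(...), the rest 1 - r*log(...).
        # log10 is an assumption of this sketch.
        w = [[1.0] * dim for _ in range(n)]
        for rank, i in enumerate(order):
            sign = 1.0 if rank < n / 2 else -1.0
            ratio = (bF - fit[i]) / (bF - wF - eps)
            for j in range(dim):
                w[i][j] = 1.0 + sign * rng.random() * math.log10(ratio + 1.0)

        a = math.atanh(1.0 - t / max_iter)  # vb is drawn from [-a, a]
        b = 1.0 - t / max_iter              # vc is drawn from [-b, b]
        for i in range(n):
            if rng.random() < z:            # random relocation with chance z
                pop[i] = [rng.uniform(lb, ub) for _ in range(dim)]
                continue
            p = math.tanh(abs(fit[i] - best_f))
            xa, xb = pop[rng.randrange(n)], pop[rng.randrange(n)]
            new_x = []
            for j in range(dim):
                if rng.random() < p:
                    v = best_x[j] + rng.uniform(-a, a) * (w[i][j] * xa[j] - xb[j])
                else:
                    v = rng.uniform(-b, b) * pop[i][j]
                new_x.append(min(max(v, lb), ub))  # clamp to the search bounds
            pop[i] = new_x
    return best_x, best_f


def sphere(x):
    """Simple unimodal test function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


if __name__ == "__main__":
    x, fx = sma_minimize(sphere, lb=-10.0, ub=10.0, dim=5)
    print(fx)
```

The sketch tracks the best solution only among evaluated positions, so the returned fitness always corresponds to the returned position.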

2.2. Description of the Quantum Rotation Gate

2.2.1. Quantum Bit

In quantum computer systems, communication systems, and other quantum information systems, the fundamental storage unit is the quantum bit [45]. The difference between quantum bits and classical bits is that a quantum bit can be in a superposition of two states simultaneously, whereas a classical bit can be in only one state at any given time. The state of a quantum bit is defined as Equation (7).

$$|\psi\rangle = \alpha|0\rangle + \beta|1\rangle \tag{7}$$

where $\alpha$ and $\beta$ represent the probability amplitudes of the two superposition states. $|\alpha|^2$ and $|\beta|^2$ are the probabilities that the qubit is in the states “0” and “1”, respectively, and the relationship between them is shown in Equation (8).

$$|\alpha|^2 + |\beta|^2 = 1 \tag{8}$$

Thus, a quantum bit can represent one state or be in both states at the same time.

2.2.2. Quantum Rotation Gate

In the DQOBLSMA, the QRG strategy is introduced to update the position of some search individuals to enhance the exploitation of the algorithm. In quantum computing, the quantum rotation gate is used as a state processing technique. Quantum bits are binary, whereas the position information generated by the swarm-based algorithm is floating-point data. In order to process the position information, the discrete representation of the quantum bits needs to be adapted to the algorithm's continuous data. The information of each dimension of the search agent is rotated in pairs and updated by a quantum rotation gate. The update process and adjustment operation of QRG are as follows. The matrix in Equation (9) represents the quantum rotation gate.

$$R(\theta_i) = \begin{bmatrix} \cos\theta_i & -\sin\theta_i \\ \sin\theta_i & \cos\theta_i \end{bmatrix} \tag{9}$$

The updating process is as follows:

$$\begin{bmatrix} \alpha_i' \\ \beta_i' \end{bmatrix} = R(\theta_i) \begin{bmatrix} \alpha_i \\ \beta_i \end{bmatrix} = \begin{bmatrix} \cos\theta_i & -\sin\theta_i \\ \sin\theta_i & \cos\theta_i \end{bmatrix} \begin{bmatrix} \alpha_i \\ \beta_i \end{bmatrix} \tag{10}$$

where $(\alpha_i, \beta_i)^T$ is the state of the i-th quantum bit of the chromosome before the update of the quantum rotation gate, and $(\alpha_i', \beta_i')^T$ is the state of the quantum bit after the update. $\theta_i$ denotes the rotation angle of the i-th quantum bit, the size and sign of which are pre-set, and its adjustment strategy is shown in Table 1.
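As a small illustration of the rotation in Equation (9), the following Python sketch applies the gate to a single amplitude pair and checks that the normalization of the amplitudes is preserved. The function name `rotate_qubit` and the angle used in the example are hypothetical and chosen only for demonstration.

```python
import math


def rotate_qubit(alpha, beta, theta):
    """Apply the 2x2 rotation gate to one amplitude pair (alpha, beta)."""
    new_alpha = math.cos(theta) * alpha - math.sin(theta) * beta
    new_beta = math.sin(theta) * alpha + math.cos(theta) * beta
    return new_alpha, new_beta


if __name__ == "__main__":
    # Start from an equal superposition; the angle is an illustrative value.
    alpha, beta = 1 / math.sqrt(2), 1 / math.sqrt(2)
    a2, b2 = rotate_qubit(alpha, beta, 0.05 * math.pi)
    # The squared amplitudes still sum to 1 (up to rounding).
    print(a2 ** 2 + b2 ** 2)
```

Because the gate is a pure rotation, a positive angle moves probability from one state to the other without changing the total, which is why only the size and sign of the angle need to be chosen.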

Table 1.

Strategies for specifying rotation angle in QRG.

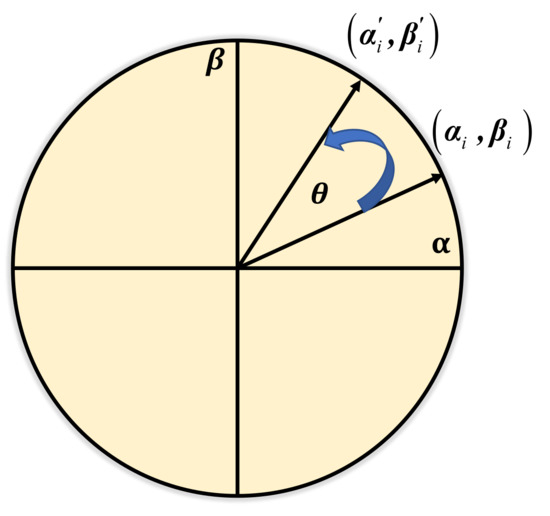

In Table 1, the rotation angle is given by $\theta_i = s(\alpha_i, \beta_i) \cdot \Delta\theta_i$, where $s(\alpha_i, \beta_i)$ denotes the rotation direction toward the target and $\Delta\theta_i$ represents the magnitude of the i-th rotation. The position state of the i-th search agent in the population is compared with the position state of the optimal search agent in the whole population. By comparing the fitness values of the current target and the optimal target, the direction of the target with higher fitness is selected to rotate the individual, thereby expanding the search space. If the current target has the higher fitness, the algorithm evolves toward the current target. Otherwise, the quantum bit state vector is transformed toward the direction of the optimal individual [46]. Figure 1 shows the quantum bit state vector transformation process.

Figure 1.

The process of updating the state of a quantum bit.

2.3. Opposition-Based Learning (OBL)

Tizhoosh proposed OBL in 2005 [47]. This technique can increase the convergence speed of metaheuristic algorithms by searching for a potentially better solution in the opposite direction of the current one and replacing the current solution with it. With this approach, a population with better solutions can be generated after each iteration, which accelerates convergence. The OBL strategy has been successfully used in various metaheuristic algorithms to avoid stagnation in local optima [48], and the mathematical expression is as follows:

$$\tilde{X} = LB + UB - X \tag{11}$$

where UB and LB are the upper and lower bounds of the search space. In opposition-based learning, the original solution $X$ and the opposite solution $\tilde{X}$ are compared according to their fitness, and the better of the two is kept. Finally, for a minimization problem, the slime mold position for the next iteration is updated as follows:

$$X(t+1) = \begin{cases} \tilde{X}, & f(\tilde{X}) < f(X) \\ X, & \text{otherwise} \end{cases} \tag{12}$$
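The basic OBL step described above can be sketched as follows for a minimization problem. The helper names `opposite` and `obl_select` are hypothetical, and per-dimension bound lists are an assumption of this sketch.

```python
def opposite(x, lb, ub):
    """Reflect each coordinate across the centre of its [lb, ub] interval."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lb, ub)]


def obl_select(x, lb, ub, f):
    """Keep whichever of x and its opposite point has the lower objective value."""
    x_opp = opposite(x, lb, ub)
    return x_opp if f(x_opp) < f(x) else x


def sphere(x):
    """Illustrative objective with its minimum at the origin."""
    return sum(v * v for v in x)


if __name__ == "__main__":
    lb, ub = [-5.0, -5.0], [10.0, 10.0]
    print(obl_select([9.0, 9.0], lb, ub, sphere))   # the opposite point is better here
    print(obl_select([4.0, -3.0], lb, ub, sphere))  # the original point is kept here
```

The greedy comparison means the step can never make an individual worse, at the cost of one extra function evaluation per opposite point.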

3. Proposed Method

3.1. Improved Quantum Rotation Gate

The magnitude of the rotation angle of the QRG significantly affects the convergence speed. A relatively large angle leads to premature convergence, whereas a small angle leads to slow convergence. In particular, the rotation angle of the original quantum rotation gate is fixed, which is not conducive to the balance between exploration and exploitation. Based on this, we propose a new dynamic adaptation strategy to adjust the rotation angle of the quantum rotation gate. In the early exploration stage, the rotation angle should be increased when the current individual is far from the best. In the exploitation stage, the rotation angle should be decreased. This method allows the search process to adapt to different solutions and is more conducive to searching for the global optimum. In detail, this improved method determines the value of the rotation angle from the difference between the current individual's fitness and the best fitness obtained so far. The rotation angle is defined as

where and are the maximum and minimum values of the range of , respectively. The maximum and minimum values take and , respectively. is defined as:

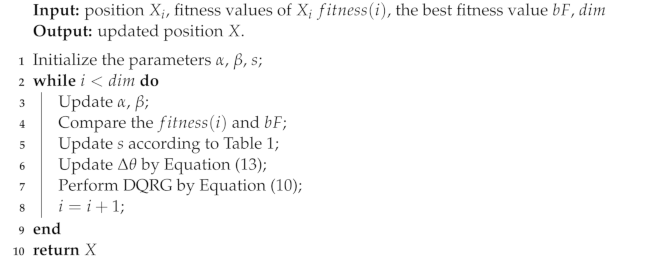

The pseudo-code of DQRG (Algorithm 2) is as follows:

Algorithm 2: Pseudo-code of the dynamic quantum rotation gate (DQRG).

3.2. OBL

In this work, we further propose an improved method for obtaining the opposite solution. Specifically, instead of using only the lower and upper bounds to find the opposite point, the influence of the current better solutions, namely the optimal, suboptimal, and third optimal solutions, is added to the calculation of the opposite point. The new formula of the opposite point is expressed as follows:

where is the average of three better solutions, is the current best solution, is the suboptimal solution, and is the third optimal solution.

where is the improved opposite solution, denotes the random value in the interval of [0, 1], and and are the upper and lower limits.

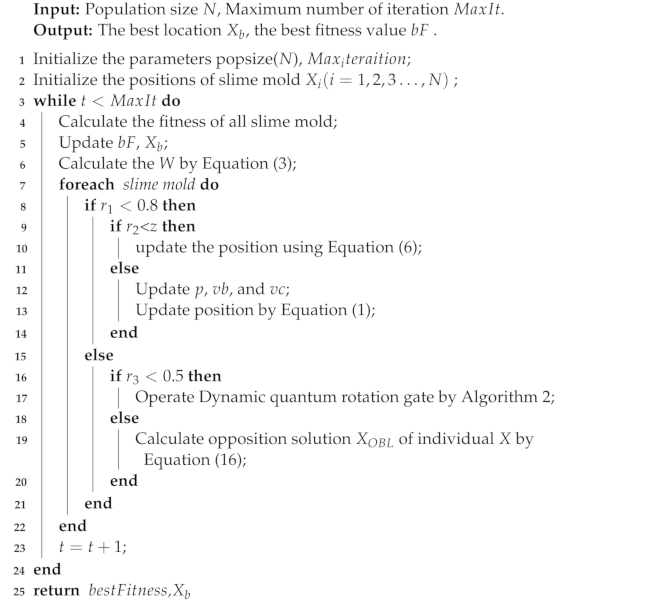

3.3. Improved SMA

To explore the solution space of complex optimization problems more efficiently, we propose two strategies based on the original SMA algorithm: the DQRG and OBL strategies. In the proposed method, two main conditions are considered to execute the proposed policy procedures. The first condition is the execution of SMA or two other strategies. If , then SMA is executed to update the position. Otherwise, the second condition is checked to determine the strategy to adopt. If in the second condition, the solution will be updated using the DQRG; otherwise, OBL will be executed for the searched individual. The pseudo-code of the DQOBLSMA is shown as Algorithm 3:

Algorithm 3: Pseudo-code of the DQOBLSMA

3.4. Computational Complexity Analysis

The computational complexity of the DQOBLSMA depends on the population size (N), dimension size (D), and maximum iterations (T). First, the DQOBLSMA produces the search agents randomly in the search space, so the computational complexity is $O(N \times D)$. Second, the computational complexity of calculating the fitness of all agents is $O(N)$. The quick-sort of all search agents is $O(N \log N)$. Moreover, updating the positions of agents in the original SMA is $O(N \times D)$. Therefore, the total computational complexity of the original SMA is $O(N \times D + T \times N \times (1 + \log N + D))$.

Updating the positions through the DQRG is $O(N \times D)$ (maximum), and the OBL is $O(N)$ (maximum). Updating the position using DQRG and the original SMA will not be done simultaneously. Therefore, the final time complexity is $O(\text{DQOBLSMA}) = O(N \times D + T \times N \times (1 + \log N + D))$ (maximum). In summary, the improved strategy proposed in this paper does not increase the computational complexity when compared with the original SMA.

4. Experiments and Discussion

We conducted a series of experiments to verify the performance of the DQOBLSMA. The classical benchmark functions are introduced in Section 4.1. In the experiments of test functions, the impacts of two mechanisms were analyzed; see Section 4.2. In Section 4.3, the DQOBLSMA is compared with several advanced algorithms. In Section 4.4, the convergence of the algorithms is analyzed.

The performance of the DQOBLSMA was investigated using the mean result (Mean) and standard deviation (Std). To draw statistically sound conclusions, the results of the benchmark test functions were ranked using the Friedman test. In addition, Wilcoxon's rank-sum test was used to assess the average performances of the algorithms in a statistical sense. In this study, it was used to test, in pairwise comparisons, whether the results of the DQOBLSMA differed from those of the other algorithms. When the p-value is less than 0.05, the difference between the two compared algorithms is considered significant. The symbols “+”, “−”, and “=” indicate that the DQOBLSMA is better than, inferior to, or equal to the other algorithm, respectively.
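The ranking step behind the Friedman test can be illustrated with a short Python sketch that computes the mean rank of each algorithm across functions, assigning tied results their average rank. It covers only the ranking, not the test statistic or its p-value. The function name `average_ranks` is hypothetical, and the numbers in the example are made-up toy values, not results from this paper.

```python
def average_ranks(scores):
    """scores[f][a] is the mean error of algorithm a on function f (lower is better).

    Returns the mean rank of each algorithm; ties share the average rank.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            # Extend the group while the next algorithm has the same score.
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1  # average of the 1-based ranks in the group
            for k in range(pos, end + 1):
                totals[order[k]] += shared
            pos = end + 1
    return [total / len(scores) for total in totals]


if __name__ == "__main__":
    # Toy data: three functions (rows) and three algorithms (columns).
    toy = [
        [0.0, 0.1, 0.2],
        [0.0, 0.0, 0.5],
        [0.3, 0.1, 0.2],
    ]
    print(average_ranks(toy))
```

A lower mean rank indicates better overall performance, which is how the rankings reported at the end of the result tables should be read.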

4.1. Benchmark Function Validation and Parameter Settings

In this study, the test set for the DQOBLSMA comparison experiment was the 23 classical test functions used in the literature [34]. The details are shown in Table 2. These classical test functions are divided into unimodal functions, multimodal functions, and fixed-dimension multimodal functions. The unimodal functions (F1–F7) have a single global optimum and no other local optima and are usually used to evaluate the local exploitation ability of the algorithm. Multimodal functions (F8–F13) are often used to test the exploration ability of the algorithm. F14–F23 are fixed-dimension multimodal functions with many local optimal points and low dimensionality, which can be used to evaluate the stability of the algorithm.

Table 2.

The classic benchmark functions.

The DQOBLSMA was compared with the original SMA and five other algorithms: the slime mold algorithm improved by opposition-based learning and Levy flight distribution (OBLSMAL) [48], the equilibrium slime mold algorithm (ESMA) [49], the equilibrium optimizer with a mutation strategy (MEO) [50], the adaptive differential evolution with an optional external archive (JADE) [51], and the grey wolf optimizer based on random walk (RWGWO) [52]. The parameter settings of each algorithm are shown in Table 3, and the experimental parameters for all optimization algorithms were chosen to be the same as those reported in the original works.

Table 3.

Parameter settings for the comparative algorithms.

In order to maintain a fair comparison, each algorithm was independently run 30 times. The population size (N) and the maximum number of function evaluations of all experimental methods were fixed at 30 and 15,000, respectively. All comparative experiments were run under the same test conditions. The proposed method was coded in Python 3.8 and tested on a PC with an AMD R5-4600H CPU at 3.00 GHz, 16 GB of RAM, and the Windows 11 operating system.

4.2. Impacts of Components

In this section, different versions of the improvement are investigated. The proposed DQOBLSMA adds two different mechanisms to the original SMA. To verify their respective effects, each mechanism is also evaluated separately. The combinations of SMA and the two mechanisms are listed below:

- SMA combined with DQRG and OBL (DQOBLSMA);

- SMA combined with DQRG (DQSMA);

- SMA combined with OBL (OBLSMA);

- Original SMA.

Table 4 gives the comparison results between the original SMA and the improved algorithm after adding the mechanism. The ranking of the four algorithms is given at the end of the table, and it can be seen that the first-ranked algorithm is the DQOBLSMA. This ranking was obtained using the Friedman ranking test [53] and reveals the overall performance rankings of the compared algorithms against the tested functions. In these cases, the ranking from best to worst was roughly as follows: DQOBLSMA > OBLSMA > SMA > DQSMA. With the addition of both mechanisms, the performance of the DQOBLSMA is more stable, and the global search capability is much improved. When comparing DQSMA with OBLSMA, we can see that OBLSMA is much stronger than DQSMA, indicating that the contribution of OBL to the performance of SMA is more significant than the contribution of DQRG to the performance of SMA. When comparing DQSMA with SMA, we can see that DQSMA becomes worse on unimodal functions but stronger on most multimodal and fixed-dimensional multimodal functions than the original SMA in terms of optimization.

Table 4.

Search results (comparisons of the DQOBLSMA, DQSMA, OBLSMA, SMA).

Wilcoxon’s rank-sum test was used to verify the significance of the differences between the DQOBLSMA and the original SMA as well as the SMA variants with a single mechanism. The results are shown in Table 5. Based on these results and those in Table 4, the DQOBLSMA outperformed SMA on 13 benchmark functions, DQSMA on 17 benchmark functions, and OBLSMA on 8 benchmark functions. These results support combining DQRG with OBL in the DQOBLSMA: although DQSMA and OBLSMA can both find the solutions, combining the two strategies yields greater benefits. In conclusion, the DQOBLSMA offers better optimization performance and is significantly better than SMA, DQSMA, and OBLSMA.

Table 5.

Test statistical results of Wilcoxon’s rank-sum test.

4.3. Benchmark Function Experiments

As seen from Table 6, on unimodal benchmark functions (F1–F7), the DQOBLSMA can achieve better results than other optimization algorithms. For F1, F3, and F6, the DQOBLSMA could find the theoretical optimal value. For all unimodal functions, the DQOBLSMA obtained the smallest mean values and standard deviations compared to other algorithms, showing the best accuracy and stability.

Table 6.

Results of unimodal benchmark test functions.

From the results shown in Table 7 and Table 8, the DQOBLSMA outperformed the other algorithms for most of the multimodal and fixed-dimension multimodal functions. For the multimodal functions F8–F13, the DQOBLSMA obtained almost all the best mean and standard deviation values and obtained the global optimal solution for four functions (F8–F11). As shown in Table 8, the DQOBLSMA obtained theoretically optimal values in 8 of the 10 fixed-dimension multimodal functions (F14–F23). Although the DQOBLSMA did not outperform JADE in F14–F23, it exceeded ESMA and OBLSMAL in overall performance. These results show that the DQOBLSMA also provides powerful and robust exploration capabilities.

Table 7.

Results of multi-modal benchmark functions.

Table 8.

Results of fixed-dimension multi-modal benchmark functions.

In addition, Table 9 presents the results of Wilcoxon’s rank-sum test, which was used to verify the significance of the differences between the DQOBLSMA and the other five algorithms. A p-value of less than 0.05 indicates a significant difference between the respective pair of compared algorithms. The DQOBLSMA outperformed all other algorithms to varying degrees: it outperformed OBLSMAL, ESMA, MEO, JADE, and RWGWO on 14, 15, 16, 15, and 18 benchmark functions, respectively. Table 10 shows the statistical results of the Friedman test, where the DQOBLSMA ranked first in F1–F7 and F8–F13 and second after JADE by a small margin in F14–F23. The DQOBLSMA received the best ranking overall. In summary, the DQOBLSMA provided better results on almost all benchmark functions than the other algorithms.

Table 9.

Test statistical results of Wilcoxon’s rank-sum test.

Table 10.

Test statistical results of the Friedman test.

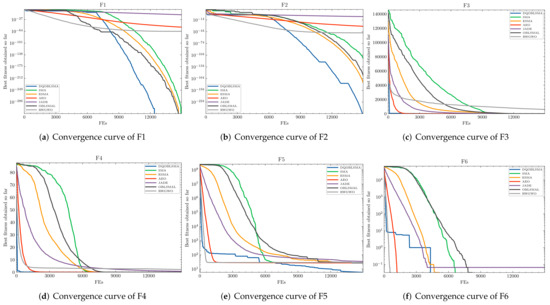

4.4. Convergence Analysis

To demonstrate the effectiveness of the proposed DQOBLSMA, Figure 2 shows the convergence curves of the DQOBLSMA, SMA, ESMA, MEO, JADE, and RWGWO for the classical benchmark functions. The convergence curves show that the initial convergence of the DQOBLSMA was the fastest in most cases; on the remaining functions, RWGWO had faster initial convergence. For some functions, all comparison algorithms converged quickly to the global optimum, and the DQOBLSMA did not show a significant advantage. In Figure 2, a step or cliff drop in the DQOBLSMA’s convergence curve can be observed, which indicates outstanding exploration capability. In almost all test cases, the DQOBLSMA had a better convergence rate than SMA and the SMA variants, indicating that the SMA’s convergence results can be significantly improved by applying the proposed search strategies. In conclusion, the DQOBLSMA is not only robust and effective at producing the best results, but also has a higher convergence speed than the other algorithms.

Figure 2.

Convergence figures on test functions F1–F23.

5. Engineering Design Problems

In this section, the DQOBLSMA is evaluated using three engineering design problems: the welded beam design problem, tension/compression springs, and the pressure vessel design problem. These engineering problems are well known and have been widely used to verify the effectiveness of methods for solving complex real-world problems [54]. The proposed method is compared with the state-of-the-art algorithms: OBLSMAL, ESMA, MEO, JADE, and RWGWO. The population size (N) and the maximum number of iterations were fixed at 30 and 500 for all comparison algorithms.

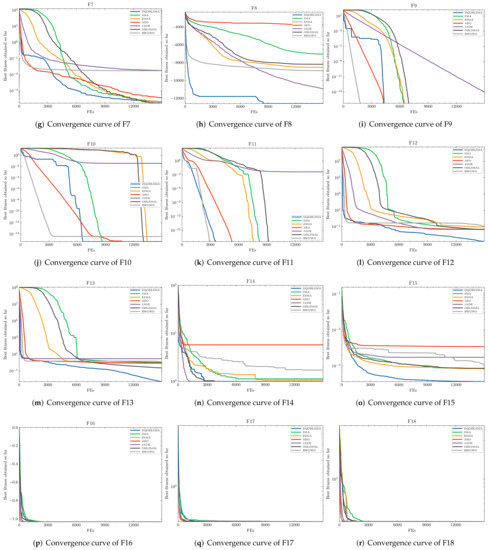

5.1. Welded Beam Design Problem

The design diagram for the structural problem of a welded beam [55] is shown in Figure 3. The objective of structural design optimization of welded beams is to minimize the total cost subject to constraints on the shear stress ($\tau$), the bending stress on the beam ($\sigma$), the buckling load ($P_c$), and the deflection of the beam ($\delta$). Four variables are considered in this problem: weld thickness (h), bar length (l), bar height (t), and bar thickness (b).

Figure 3.

Welded beam design problem.

The mathematical equations of this problem are shown below:

Consider:

minimize:

subject to:

where:

range of variables:

In Table 11, the results of the proposed DQOBLSMA and other well-known comparative optimization algorithms are given. It is clear from Table 11 that the proposed DQOBLSMA provides promising results for the optimal variables compared to other well-known optimization algorithms. The DQOBLSMA obtained a minimum cost of 1.695436 when h = 0.205598, l = 3.255605, t = 9.036367, and b = 0.205741.
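As a quick sanity check on the reported solution, the cost can be recomputed from the design variables. The sketch below assumes the standard welded beam cost function commonly used in the literature; the function name `welded_beam_cost` is hypothetical, and the constraints are not evaluated here.

```python
def welded_beam_cost(h, l, t, b):
    """Welded beam fabrication cost (standard literature form, assumed here)."""
    return 1.10471 * h ** 2 * l + 0.04811 * t * b * (14.0 + l)


if __name__ == "__main__":
    # Design variables reported for the DQOBLSMA in the text above.
    cost = welded_beam_cost(0.205598, 3.255605, 9.036367, 0.205741)
    print(round(cost, 4))
```

Under this assumed cost function, the recomputed value agrees with the reported minimum cost to within rounding of the printed design variables.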

Table 11.

Comparison in welded beam design.

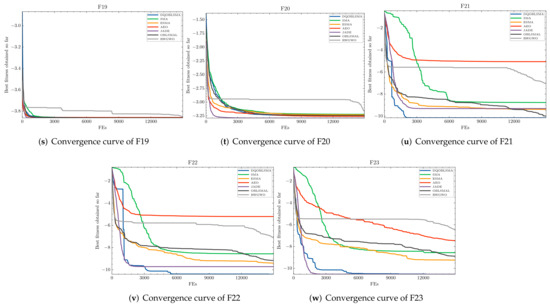

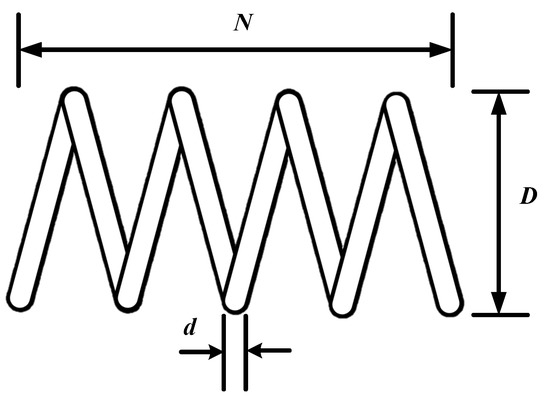

5.2. Tension/Compression Spring Design

The design goal for tension/compression springs [56] is to obtain the minimum weight under four constraints: deviation ($g_1$), shear stress ($g_2$), surge frequency ($g_3$), and deflection ($g_4$). As shown in Figure 4, three variables need to be considered: the wire diameter (d), the mean coil diameter (D), and the number of active coils (N). The mathematical description of this problem is given below:

Figure 4.

Tension/compression spring design problem.

Consider:

minimize:

subject to:

range of variables:

The results of the DQOBLSMA and other comparative algorithms are presented in Table 12. The proposed DQOBLSMA achieved the best solution to the problem. The DQOBLSMA obtained a minimum cost of 0.012719 when d = 0.050000, D = 0.317425, and N = 14.028013.
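The reported optimum can be checked by recomputing the spring weight from the design variables. The sketch assumes the standard objective used for this problem in the literature; the function name `spring_weight` is hypothetical, and the constraints are not evaluated.

```python
def spring_weight(d, D, N):
    """Tension/compression spring weight (standard literature form, assumed here)."""
    return (N + 2.0) * D * d ** 2


if __name__ == "__main__":
    # Design variables reported for the DQOBLSMA in the text above.
    weight = spring_weight(0.050000, 0.317425, 14.028013)
    print(round(weight, 6))
```

Under this assumed objective, the recomputed value matches the reported minimum to the printed precision.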

Table 12.

Comparison for the tension/compression spring design problem.

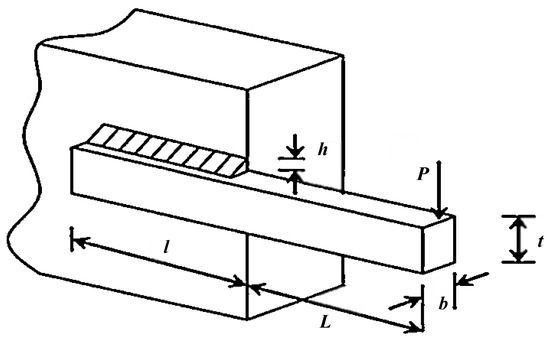

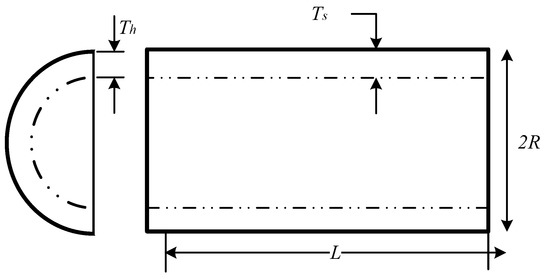

5.3. Pressure Vessel Design

The pressure vessel design problem is a four-variable, four-constraint industrial problem that aims to reduce the total cost of a given cylindrical pressure vessel [57]. The four variables studied are the thickness of the shell ($T_s$), the thickness of the head ($T_h$), the inner radius (R), and the length of the cylindrical section (L), as shown in Figure 5. The objective function and the four optimization constraints can be described as follows:

Figure 5.

Pressure vessel design problem.

Consider:

minimize:

subject to:

range of variables:

Table 13 shows how the DQOBLSMA compares with the competitor algorithms. The results show that the DQOBLSMA is able to find the solution with the lowest cost, obtaining an optimal cost of 5885.623524 when $T_s$ = 0.778246, $T_h$ = 0.384708, R = 40.323469, and L = 199.950065.
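The reported cost can be recomputed from the design variables with a few lines of Python. The sketch assumes the standard pressure vessel cost function used in the literature; the function name `pressure_vessel_cost` and its argument names are hypothetical, and the constraints are not evaluated.

```python
def pressure_vessel_cost(ts, th, r, length):
    """Pressure vessel cost (standard literature form, assumed here).

    ts: shell thickness, th: head thickness, r: inner radius,
    length: length of the cylindrical section.
    """
    return (0.6224 * ts * r * length
            + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * length
            + 19.84 * ts ** 2 * r)


if __name__ == "__main__":
    # Design variables reported for the DQOBLSMA in the text above.
    cost = pressure_vessel_cost(0.778246, 0.384708, 40.323469, 199.950065)
    print(round(cost, 2))
```

Under this assumed cost function, the recomputed value agrees with the reported cost to within rounding of the printed design variables.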

Table 13.

Comparison in pressure vessel design.

6. Conclusions

In this paper, an enhanced SMA (DQOBLSMA) was proposed by introducing two mechanisms, DQRG and OBL, into the original SMA. In the DQOBLSMA, these two strategies further enhance the global search capability of the original SMA: DQRG enhances the exploration capability of the original SMA, and OBL increases the population diversity. The DQOBLSMA overcomes the weaknesses of the original search method and avoids premature convergence. The performance of the proposed DQOBLSMA was analyzed by using 23 classical mathematical benchmark functions.

First, the DQOBLSMA and the individual combinations of these two strategies were analyzed and discussed. The results showed that the proposed strategies are effective, and SMA achieved the best performance with the combination of the two mechanisms. Second, the results of the DQOBLSMA were compared with those of five state-of-the-art algorithms: ESMA, MEO, JADE, OBLSMAL, and RWGWO. The results show that the DQOBLSMA is competitive with other advanced metaheuristic algorithms. To further validate the superiority of the DQOBLSMA, it was applied to three industrial engineering design problems. The experimental results show that the DQOBLSMA also achieves better results when solving engineering problems and significantly improves on the original solutions.

As a future perspective, a multi-objective version of the DQOBLSMA will be considered. The proposed algorithm has promising applications in scheduling problems, image segmentation, parameter estimation, multi-objective engineering problems, text clustering, feature selection, test classification, and web applications.

Author Contributions

Conceptualization, S.D. and Y.Z.; software, Y.Z.; validation, S.D. and Q.Z.; formal analysis, S.D. and Y.Z.; investigation, S.D. and Y.Z.; resources, S.D.; writing—original draft preparation, Y.Z.; writing—review and editing, S.D. and Y.Z.; visualization, Y.Z.; funding acquisition, S.D. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge the support of the Key R&D Projects of Zhejiang Province (Nos. 2022C01236 and 2019C01060), the National Natural Science Foundations of China (Grant Nos. 21875271, U20B2021, 21707147, 51372046, 51479037, 91226202, and 91426304), the Entrepreneurship Program of Foshan National Hi-tech Industrial Development Zone, the Major Project of the Ministry of Science and Technology of China (Grant No. 2015ZX06004-001), and the Ningbo Natural Science Foundations (Grant Nos. 2014A610006, 2016A610273, and 2019A610106).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).