Abstract

A new bio-inspired method for optimizing an objective function on a parallelepiped set of admissible solutions is proposed. It uses a model of the behavior of tomtits searching for food. The algorithm combines several techniques for finding the extremum of the objective function, such as a memory matrix and the Levy flight from the cuckoo algorithm. The trajectories of tomtits are described by jump-diffusion processes. The algorithm is applied to classical and nonseparable optimal control problems for deterministic discrete dynamical systems. Control problems of this type can often be solved using the discrete maximum principle or more general necessary optimality conditions, or the Bellman equation, but sometimes this is extremely difficult or even impossible. For this reason, new methods for solving these problems are needed. The new metaheuristic algorithm makes it possible to obtain solutions of acceptable quality in an acceptable time. The efficiency of the method is demonstrated and analyzed by solving a number of deterministic discrete open-loop optimal control problems: nonlinear nonseparable problems (Luus–Tassone and Li–Haimes) and separable problems for linear dynamical control systems.

1. Introduction

Global optimization algorithms are widely used to solve engineering, financial, and optimal control problems, as well as problems of clustering, classification, deep machine learning, and many others [1,2,3,4,5,6,7,8,9,10]. To solve complex applied problems, both deterministic methods of mathematical programming [11,12,13] and stochastic metaheuristic optimization algorithms can be used [14,15,16,17,18,19,20,21,22,23,24,25,26]. The advantage of the first group is guaranteed convergence to the global extremum, and the advantage of the second group is the possibility of obtaining a good-quality solution at acceptable computational cost, even in the absence of convergence guarantees. Among metaheuristic optimization algorithms, various groups are conventionally distinguished: evolutionary methods, swarm intelligence methods, algorithms generated by the laws of biology and physics, multi-start, multi-agent, memetic, and human-based methods. The classification is conditional, since the same algorithm can belong to several groups at once. Four characteristic groups of metaheuristic algorithms can be distinguished, in which heuristics that have proven themselves in solving various optimization problems are coordinated by a higher-level algorithm.

Evolutionary methods, in which the search process is associated with the evolution of a set of solutions called a population, include Genetic Algorithms (GA), Self-Organizing Migrating Algorithm (SOMA), Memetic Algorithms (MA), Differential Evolution (DE), Covariance Matrix Adaptation Evolution Strategy (CMAES), Scatter Search (SS), Artificial Immune Systems (AIS), Variable Mesh Optimization (VMO), Invasive Weed Optimization (IWO), and Cuckoo Search (CS) [3,4,14,15,16,17,18,20].

The group of Swarm Intelligence algorithms includes Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Artificial Bee Colony (ABC), Bacterial Foraging Optimization (BFO), Bat-Inspired Algorithm (BA), Fish School Search (FSS), Cat Swarm Optimization (CSO), Firefly Algorithm (FA), Gray Wolf Optimizer (GWO), Whale Optimization Algorithm (WOA), Glowworm Swarm Optimization (GSO), Shuffled Frog-Leaping Algorithm (SFLA), Krill Herd (KH), Elephant Herding Optimization (EHO), Lion Pride Optimization Algorithm (LPOA), Spotted Hyena Optimizer (SHO), Spider Monkey Optimization (SMO), Imperialist Competitive Algorithm (ICA), Stochastic Diffusion Search (SDS), Human Group Optimization Algorithm (HGOA), and Perch School Search Algorithm (PSS). In the methods of this group, swarm members (solutions) exchange information during the search process, using information about the absolute leaders and local leaders among neighbors of each solution and their own best positions [2,15,16,17,20,22,23,24,25,26,27,28].

The group of physics-based algorithms includes Simulated Annealing (SA), Adaptive Simulated Annealing (ASA), Central Force Optimization (CFO), Big Bang-Big Crunch (BB-BC), Harmony Search (HS), Fireworks Algorithm (FA), Grenade Explosion Method (GEM), Spiral Dynamics Algorithm (SDA), Intelligent Water Drops Algorithm (IWD), Electromagnetism-like Mechanism (EM), and the Gravitational Search Algorithm (GSA) [15,16,17,19,23,24,25,26,27,28].

The group of multi-start-based algorithms includes the Greedy Randomized Adaptive Search Procedure (GRASP) and Tabu Search (TS) [15,16,17].

Among these four groups, bio-inspired optimization algorithms can be distinguished as a part of nature-inspired algorithms [2,7,15,22,23,24,25,26,27,28]. In turn, among bio-inspired methods, bird-inspired algorithms that imitate the characteristic features of the behavior of flocks of various birds during foraging, migration, and hunting are widely used: Bird Mating Optimizer (BMO), Chicken Swarm Optimization (CSO), Crow Search Algorithm (CSA), Cuckoo Search (CS), Cuckoo Optimization Algorithm (COA), Emperor Penguin Optimizer (EPO), Emperor Penguins Colony (EPC), Harris Hawks Optimization (HHO), Migrating Bird Optimization (MBO), Owl Search Algorithm (OSA), Pigeon Inspired Optimization (PIO), Raven Roosting Optimization (RRO), Satin Bowerbird Optimizer (SBO), Seagull Optimization Algorithm (SOA), and the Sooty Tern Optimization Algorithm (STOA) [15,24,25,26].

One of the applications of bio-inspired optimization algorithms is the problem of finding control laws for discrete dynamical systems [1,10]. As a rule, this class of optimal control problems is solved by applying necessary optimality conditions, which reduce the problem to a boundary value problem for a system of difference equations. For systems with convex variation, the discrete maximum principle is applied. An alternative is to apply sufficient optimality conditions in the form of the Bellman equation. In this case, the optimal control is found in feedback form, i.e., it depends on the state vector of the system, which is preferable. However, it is well known that the computational cost of the dynamic programming procedure grows significantly as the dimension of the state vector increases. Particular difficulties arise in nonseparable problems, as well as in problems in which the vector variation is not convex and, therefore, the discrete maximum principle is not valid. Since the problem of optimal control of discrete systems is finite dimensional, the use of efficient bio-inspired optimization methods to solve it is natural.

This article develops a method that belongs to the swarm intelligence and bird-inspired groups. It is based on observing how a flock of tomtits searches for food, with the sequential movement of the birds organized under the influence of the flock leader. To simulate the trajectory of each tomtit, the solution of a stochastic differential equation with jumps is used. The parameters of the random process (the drift vector and the diffusion matrix) depend on the positions of all members of the flock and their individual achievements. The method is hybrid because it also uses the ideas of particle swarm optimization methods [15,16,17], methods that imitate the behavior of cuckoos with Levy flights [29], and the Luus–Jaakola method with successive reduction and further incomplete restoration of the search area [1]. Based on the No Free Lunch Theorem [30], it can be argued that the problem of developing new efficient global optimization algorithms for solving complex optimal control problems remains relevant. In particular, the method should allow us to solve both separable and non-classical nonseparable optimization problems for discrete deterministic dynamical control systems [1,10]. As a benchmark for assessing the accuracy of the method and its computational cost, a set of nonlinear nonseparable and classical linear separable problems with known best or exact solutions was used.

The paper is organized as follows. Section 2.1 contains the statement of the discrete deterministic open-loop control problem. Section 2.2 provides a description of the solution search strategy and a step-by-step novel bio-inspired metaheuristic optimization method. In Section 3, the application of the new optimization method described in Section 2.2 for the representative set of optimal control problems described in Section 2.1 is given. Recommendations on the choice of method hyperparameters are given, time costs are estimated to obtain numerical results of acceptable quality, and comparison with the results obtained by other known metaheuristic algorithms is presented.

2. Materials and Methods

2.1. Open-Loop Control Problem

Let us consider a nonlinear discrete deterministic dynamical control system described by a state equation of the form

where is the discrete time with number of stages ; is the state vector; is the control vector; and are known continuous functions.

Initial condition:

Let us define a set of pairs where is a trajectory, is an open-loop control, satisfying the state equation (1) and initial condition (2).

The performance index to be minimized is defined on the set as

or

where are known continuous functions. The notation form (3) is typical for separable optimal control problems, and the form (4) is typical for nonseparable ones.

It is required to find an optimal pair that minimizes the performance index, i.e.,

To solve problem (5), an algorithm that imitates the behavior of a flock of tomtits is proposed. This algorithm belongs to the nature-inspired (more precisely, bird-inspired) and swarm intelligence metaheuristic optimization algorithms [2,15,16,17]. The same problem has previously been solved using iterative dynamic programming and the Luus–Jaakola algorithm [1] and by the Perch School Search optimization algorithm [10]. A comparative analysis of the results is presented below in the solved examples.
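Because the control sequence has finitely many components, the open-loop problem above reduces to ordinary finite-dimensional minimization: a candidate control is rolled forward through the state equation, and the performance index is evaluated on the resulting pair. The following is a minimal sketch of that evaluation for the separable form, assuming a stage cost plus a terminal cost. The function names (`rollout`, `separable_index`) and the scalar test system are hypothetical illustrations and are not taken from the paper.

```python
def rollout(f, x0, controls):
    """Apply the state equation stage by stage; returns x(0), ..., x(N)."""
    trajectory = [list(x0)]
    for t, u in enumerate(controls):
        trajectory.append(f(t, trajectory[-1], u))
    return trajectory


def separable_index(stage_cost, terminal_cost, trajectory, controls):
    """Sum of stage costs plus a terminal term (assumed separable form)."""
    total = sum(stage_cost(t, trajectory[t], u) for t, u in enumerate(controls))
    return total + terminal_cost(trajectory[-1])


# Hypothetical scalar system: x(t+1) = x(t) + u(t), two stages.
def f(t, x, u):
    return [x[0] + u[0]]


controls = [[-0.5], [-0.25]]
traj = rollout(f, [1.0], controls)
value = separable_index(
    lambda t, x, u: u[0] ** 2,   # hypothetical stage cost
    lambda x: x[0] ** 2,         # hypothetical terminal cost
    traj,
    controls,
)
print(traj, value)
```

A nonseparable index would simply replace `separable_index` with any function of the whole trajectory and control sequence; the optimizer only needs the scalar value.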

2.2. Bio-Inspired Metaheuristic Optimization Method

The problem of finding the global minimum of the objective function on the set of feasible solutions of the form is considered.

The Tomtit Flock Optimization (TFO) method, which simulates the behavior of a flock of tomtits, is a hybrid algorithm for finding the global constrained extremum of functions of many variables. It is related to both swarm intelligence methods and bio-inspired methods.

Tomtits unite in flocks. They obey the commands of the flock leader and have some freedom in choosing the way to search for food. Tomtits are distinguished from other birds and animals by their special cohesion, coordination of collective actions, intensive use of a food source once found until it disappears, and clear and friendly execution of commands common to members of the flock.

Finite sets of possible solutions, called populations, are used to solve the problem of finding a global constrained minimum of the objective function, where is a tomtit-individual (potential feasible solution) with number , and is the population size.

At the beginning, when the number of iterations , the method creates an initial population of tomtits using the uniform distribution law on the feasible solution set . The value of the objective function is calculated for each tomtit, which is a possible solution. The solution with the best value of the objective function is the position of the flock leader. The leader does not search for food in the current iteration. It waits for the search results of the other tomtits, processes them, and then stores them in the memory matrix:

The memory matrix size is , where is a given maximum number of records. The best result achieved on each -th iteration is added to the matrix until the matrix is full. The number defines the number of iterations in one pass. Results in the matrix are ordered by the following rule: the first record in the matrix is the best solution ; the other records are ordered by nondecreasing value of the objective function. When the memory matrix is full, the best result is placed in a special set Pool (the set of the best results of passes), after which the matrix is cleared.
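The bookkeeping described above can be sketched as a small container that keeps records sorted by objective value and hands its best record to the Pool when it fills up. This is only an illustrative sketch: the class name `MemoryMatrix`, its method names, and the sample records are assumptions, and the paper's matrix layout (coordinates plus objective value in each row) is represented here as `(value, position)` tuples.

```python
class MemoryMatrix:
    """Bounded, sorted store of the best result of each iteration."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.records = []  # (value, position) tuples, best (smallest) first

    def add(self, position, value):
        self.records.append((value, list(position)))
        # Keep records in nondecreasing order of the objective value.
        self.records.sort(key=lambda rec: rec[0])

    def is_full(self):
        return len(self.records) >= self.capacity

    def flush_best_to(self, pool):
        """Move the best record to the pool, then clear the matrix."""
        pool.append(self.records[0])
        self.records.clear()


memory = MemoryMatrix(capacity=3)
pool = []
# Hypothetical per-iteration best results (position, objective value).
for position, value in [([0.4, 0.1], 0.17), ([0.2, 0.0], 0.04), ([0.3, 0.3], 0.18)]:
    memory.add(position, value)
    if memory.is_full():
        memory.flush_best_to(pool)
print(pool)
```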

The new position of the leader is randomly generated according to the Levy distribution [29]:

where is a coordinate of the leader’s position on the -th iteration, and is a movement step, . Studies of animal behavior suggest that the Levy distribution describes the trajectories of birds and insects well. Due to the “heavy tails” of the Levy distribution, the probability of significant deviations of the random variable from the mean is high. Therefore, according to the above expression, sufficiently large increments are possible for each coordinate of the vector . If the new value of a certain coordinate does not belong to the set of feasible solutions, that is, , then the generation process should be repeated.

This process describes the flight of the flock leader from one place to another. The other tomtits fly after it at the command of the leader. The positions of these tomtits are modeled using a uniform distribution on a parallelepiped set. The center of the parallelepiped set is determined by the position of the flock leader; the lengths of the sides are equal to , , where , , and is the reduction parameter of the search set; if , then the current pass is stopped and a new one starts. Upon reaching iterations or when the condition is fulfilled, the pass is considered complete, the pass counter is increased: , and the parameter that describes the size of the next search space is set equal to , where is the reconstruction parameter of the search set. The coordinate values found determine the initial conditions for the search at the current iteration (the initial position of each tomtit).

It is assumed that each -th individual has a memory that stores:

- Current iteration count ;

- Current position and the corresponding value of objective function ;

- The best position and the corresponding value of objective function in population ;

- The best position of a tomtit during all iterations and the corresponding value of objective function ;

- The best position among all tomtits located in the vicinity of the -th individual of radius and the corresponding value of objective function .
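The per-individual memory listed above maps naturally onto a small record type, and the last item (the best position within a given radius) is a simple neighborhood query. The sketch below is an illustration under stated assumptions: the `Tomtit` class, its field names, and the use of the Euclidean distance for the neighborhood are not specified in the text and are chosen here for concreteness.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Tomtit:
    position: list
    value: float
    best_position: list = field(default_factory=list)
    best_value: float = math.inf

    def remember(self):
        """Update the individual's own best position over all iterations."""
        if self.value < self.best_value:
            self.best_value = self.value
            self.best_position = list(self.position)


def local_best(flock, j, radius):
    """Best individual within `radius` of flock[j] (Euclidean, assumed)."""
    me = flock[j]
    # The individual itself is at distance 0, so the list is never empty.
    neighbours = [b for b in flock
                  if math.dist(b.position, me.position) <= radius]
    return min(neighbours, key=lambda b: b.value)


flock = [Tomtit([0.0, 0.0], 5.0), Tomtit([0.5, 0.0], 2.0), Tomtit([3.0, 3.0], 1.0)]
for bird in flock:
    bird.remember()
print(local_best(flock, 0, radius=1.0).value)
```

The global best of the population is then just `min(flock, key=lambda b: b.value)`; only the local query needs the radius.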

The trajectory of movement of each individual (for all tomtits ) on the segment , during which the search is carried out at the current iteration, is described by the solution of the stochastic differential equation:

where is a standard Wiener stochastic process, is the time allotted by the flock leader to the members of the flock for searching at the current iteration, and is the Poisson component, which can be written as:

—asymmetric delta function, —moments of jumps. In random moments of time the position of a tomtit experiences random increments , forming a Poisson stream of events of a given intensity . The solution of the equation determines the trajectories of the tomtit’s movement that implement the diffusion search procedure with jumps.

Drift vector is described by the equation:

i.e., it takes into account information about the best solution in the population: the position of the global leader of the flock.

Diffusion matrix takes into account information about the best solution obtained by a given individual for all past iterations, about the best solution in the vicinity of the current solution, determined by the radius :

where , , —effect coefficients; —random parameters uniformly distributed on the segment . Parameter determines the process of forgetting about one’s search history; parameter describes the leader’s effect among neighbors.

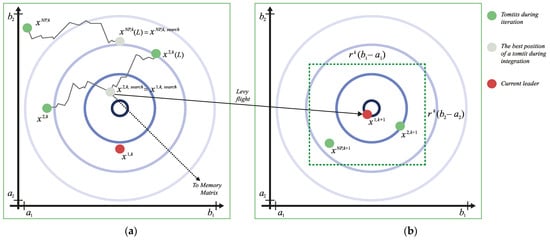

The solution of a stochastic differential equation is a random process, the trajectories of which have sections of continuous change, interrupted by jumps of a given intensity. It describes the movement of the tomtit, accompanied by relatively short jumps. This solution can be found by numerical integration with a step size . If any coordinate value hits the boundary of the search area or goes beyond it, then it is taken equal to the value on this boundary. The best position achieved during the current iteration is chosen as the new position of the tomtit. This process is shown in Figure 1a. Among all the new positions of tomtits, the best one is selected. It is recorded in the memory matrix and identified with the final position of the leader of the flock at the current iteration, after which the next iteration begins with the procedure for finding a new position of the leader of the flock and the initial positions of the members of the flock relative to it. This procedure is shown in Figure 1b. The method terminates when the maximum number of passes P is reached.

Figure 1.

Movement of tomtits; (a) stochastic movement of tomtits, choosing the best solution and adding into the memory matrix, (b) new position of the leader and flight results of other tomtits.

The proposed hybrid method combines several ideas: evolutionary methods are used to create the initial population [15,16,17,18]; the method imitating the behavior of cuckoos, based on Levy flights, is used to simulate the jump of the flock leader [29]; a modified Euler–Maruyama numerical method is applied to solve the stochastic differential equation describing the movement of individuals in the population [31]; the particle swarm optimization technique is used to describe the interaction of individuals in the flock; a modified method of artificial immune systems is used to update the population; and the Luus–Jaakola method is used to update the search set at the end of each pass [1].

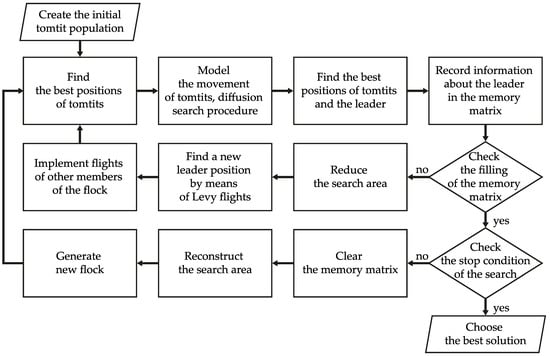

Figure 2 below illustrates the block diagram of the algorithm.

Figure 2.

A block diagram of the proposed TFO algorithm.

Below is a detailed description of the algorithm.

Step 1. Creation of the initial tomtit population:

Step 1.1. Set parameters of method:

- Number of tomtits in population ;

- Flock leader movement step ;

- Reduction parameter of search set ;

- Reconstruction parameter of search set ;

- Levy distribution parameter ;

- Tomtit’s neighborhood radius size ;

- Parameters , , , which describe drift vector and diffusion matrix in the stochastic differential equation;

- Number of maximum records in memory matrix ;

- Integration step size ;

- Number of maximum discrete steps ;

- Time required for searching by tomtits ;

- Number of maximum passes ;

- Jump intensity parameter .

Set the value (the number of passes), .

Step 1.2. Create initial population of solutions (tomtits) with randomly generated coordinates from the segment using a uniform distribution:

where is the uniform distribution law on the segment .

Step 2. Movement of flock members. Implementation of the diffusion search procedure with jumps.

Step 2.1. Set the value: (iteration counter).

Step 2.2. For each member of the flock, calculate the value of the objective function: . Order the flock members by nondecreasing objective function value. The solution corresponds to the best value, i.e., the position of the leader.

Step 2.3. Process current information about flock members.

For set the following. The best position is and the corresponding value of objective function in population.

For all other flock members find:

- The best position of a tomtit during all iterations and the corresponding value of objective function ;

- The best position among all tomtits located in the vicinity of the -th individual of radius and the corresponding value of objective function .

Step 2.4. For each find the numerical solution of the stochastic differential equation with step size on the segment using the Euler–Maruyama method.

Step 2.4.1. Set the value: and .

Step 2.4.2. Find the diffusion part of the solution

where

are random parameters uniformly distributed on the segment and is a random variable that has a standard normal distribution with zero mean and unit variance. This variable can be modeled using the Box–Muller method:

in which and are independent random variables uniformly distributed on the segment .
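For reference, the Box–Muller transform mentioned above can be sketched in a few lines. It maps two independent uniform variates to one standard normal variate using the cosine branch of the transform. The function name `box_muller` and the sample-size check are illustrative choices, not part of the paper.

```python
import math
import random


def box_muller(rng):
    """Return one standard normal variate from two uniform variates."""
    a1 = 1.0 - rng.random()  # lies in (0, 1], which avoids log(0)
    a2 = rng.random()
    return math.sqrt(-2.0 * math.log(a1)) * math.cos(2.0 * math.pi * a2)


rng = random.Random(0)
samples = [box_muller(rng) for _ in range(20000)]
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
print(round(mean, 2), round(variance, 2))
```

With this many samples, the empirical mean and variance should be close to 0 and 1, respectively, which gives a quick sanity check on the generator.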

Step 2.4.3. Check jump condition: , where is a random variable uniformly distributed on the segment . In the process of integration, one should check whether the solution belongs to the set of feasible solutions: if any coordinate value of the solution hits the boundary of the search area or goes beyond it, then it is taken equal to the corresponding value on the boundary.

If the jump condition is satisfied, then set the value:

where is a random increment, in which coordinates are modeled according to the uniform distribution law: .

Otherwise, set the value:

Step 2.4.4. Check the stop condition of the moving process of a flock’s member.

If , set the value and go to step 2.4.2. Otherwise, go to step 2.5.

Step 2.5. For each flock member (), find the best solution among all solutions obtained: . Denote it as .
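Steps 2.4 and 2.5 can be sketched as an Euler–Maruyama walk with occasional jumps that records the best point visited. Since the drift and diffusion expressions are not reproduced in the text here, the forms used below are assumptions: the drift pulls toward the flock leader, the diffusion amplitude depends on the individual's own best and its local best, a jump occurs when a uniform variate falls below the intensity times the step size, and a jump adds a uniform increment that keeps the point inside the box. All function and parameter names are hypothetical.

```python
import math
import random


def clip(x, lower, upper):
    """Project each coordinate back onto [lower_i, upper_i]."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]


def diffusion_search(objective, start, leader, own_best, nb_best,
                     lower, upper, h, steps, c1, c2, c3, mu, rng):
    """Euler-Maruyama walk with jumps; returns the best point visited."""
    x = list(start)
    best_x, best_val = list(x), objective(x)
    for _ in range(steps):
        r1, r2, r3 = rng.random(), rng.random(), rng.random()
        new_x = []
        for v, g, p, nb in zip(x, leader, own_best, nb_best):
            drift = c1 * r1 * (g - v)                      # assumed form
            sigma = c2 * r2 * (p - v) + c3 * r3 * (nb - v)  # assumed form
            noise = rng.gauss(0.0, 1.0)
            new_x.append(v + h * drift + math.sqrt(h) * sigma * noise)
        x = clip(new_x, lower, upper)
        if rng.random() < mu * h:  # assumed jump test
            # Assumed jump law: uniform increment that stays inside the box.
            x = clip([v + rng.uniform(lo - v, hi - v)
                      for v, lo, hi in zip(x, lower, upper)], lower, upper)
        val = objective(x)
        if val < best_val:
            best_x, best_val = list(x), val
    return best_x, best_val


def sphere(x):
    return sum(v * v for v in x)


rng = random.Random(1)
start = [2.0, -1.5]
point, value = diffusion_search(sphere, start, [0.1, 0.1], start, [0.5, -0.5],
                                [-3.0, -3.0], [3.0, 3.0], h=0.1, steps=50,
                                c1=2.0, c2=0.5, c3=0.5, mu=0.2, rng=rng)
print(point, value)
```

Returning the best visited point, rather than the final point, mirrors Step 2.5, where the best solution along the trajectory becomes the tomtit's new position.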

Step 2.6. Among positions of tomtits (solutions) find the best one. Record it in the memory matrix. This is the leader’s position at the end of the current iteration .

Step 2.7. Check the stop condition of a pass. If (memory matrix is full) or , then stop the pass. From the memory matrix, choose the best solution and put it in the set Pool, then go to step 3. If , set the value , and go to step 2.8.

Step 2.8. Find the new position of the leader:

To generate a random variable according to the Levy distribution, for each coordinate generate a number , by the uniform distribution law on the set , where is the distinguishability constant, and carry out the following [10]:

- Generate numbers and , , where is a distribution parameter;

- Calculate values of coordinates:

If the obtained value of the coordinate does not belong to the set of feasible solutions; that is , then repeat the generation process for the coordinate .

If, after ten unsuccessful generations, the coordinate does not belong to the set of feasible solutions, then generate by uniform distribution law on the set .
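The retry logic of Step 2.8 can be sketched as follows. Note that this sketch swaps in Mantegna's algorithm to draw the heavy-tailed step, which is a different generator from the procedure of [10] described above; the additive update (old coordinate plus movement step times the Levy variate) is also an assumption, as are all names. What the sketch does take from the text is the per-coordinate regeneration, the limit of ten attempts, and the uniform fallback.

```python
import math
import random


def levy_step(lam, rng):
    """Heavy-tailed step via Mantegna's algorithm (swapped-in technique)."""
    sigma = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
             / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u = rng.gauss(0.0, sigma)
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # guard against division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / lam)


def move_leader(leader, lower, upper, alpha, lam, rng, max_tries=10):
    """Levy flight of the leader with per-coordinate retries and fallback."""
    new_position = []
    for x, lo, hi in zip(leader, lower, upper):
        for _ in range(max_tries):
            candidate = x + alpha * levy_step(lam, rng)  # assumed update rule
            if lo <= candidate <= hi:
                break
        else:
            # After ten unsuccessful attempts, fall back to a uniform draw.
            candidate = rng.uniform(lo, hi)
        new_position.append(candidate)
    return new_position


rng = random.Random(42)
positions = [move_leader([0.9, -0.9], [-1.0, -1.0], [1.0, 1.0],
                         alpha=0.5, lam=1.5, rng=rng) for _ in range(200)]
print(positions[0])
```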

Step 2.9. Carry out the flight of the rest of the tomtits.

Positions of all other tomtits () are modeled using a uniform distribution on the parallelepiped set (see step 1.2). The center of the parallelepiped set is determined by the position of the leader of the flock (see step 2.8), and the lengths of the sides are equal , .

If the value of tomtit’s coordinate does not belong to the set of feasible solutions, then generate a new position using uniform distribution:

- On the set when ;

- On the set when .

Go to step 2.2.
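Step 2.9 can be illustrated with a short sampler that scatters the flock uniformly in a box centered on the leader. The side lengths and the two regeneration sets are not reproduced in the text above, so the choices below are assumptions: each side is the reduction factor times the width of the feasible interval, and an infeasible coordinate is redrawn uniformly on the part of the box that lies inside the feasible interval. The function name `scatter_flock` is hypothetical.

```python
import random


def scatter_flock(leader, lower, upper, r, count, rng):
    """Sample `count` positions in a box of relative size r around the leader."""
    flock = []
    for _ in range(count):
        position = []
        for c, lo, hi in zip(leader, lower, upper):
            half = 0.5 * r * (hi - lo)  # assumed half side length
            candidate = rng.uniform(c - half, c + half)
            if candidate < lo:
                # Assumed regeneration set when the lower bound is violated.
                candidate = rng.uniform(lo, min(c + half, hi))
            elif candidate > hi:
                # Assumed regeneration set when the upper bound is violated.
                candidate = rng.uniform(max(c - half, lo), hi)
            position.append(candidate)
        flock.append(position)
    return flock


rng = random.Random(7)
leader = [0.9, 0.0]
flock = scatter_flock(leader, [-1.0, -1.0], [1.0, 1.0], r=0.4, count=50, rng=rng)
print(flock[:3])
```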

Step 3. Check the stop condition of the search. If , then stop the process and go to step 4. If , clear the memory matrix, increase the counter of passes: , and set the value , where is a reconstruction parameter of the search set.

Generate a new flock of tomtits. Choose the best solution from Pool set: . Positions of all other tomtits () are modeled using a uniform distribution on the parallelepiped set (see step 1.2). The center of the parallelepiped set is determined by the position of the leader of the flock , and the lengths of the sides are equal , .

If the value of the tomtit’s coordinate does not belong to the set of feasible solutions, then generate a new position using uniform distribution:

- On the set when ;

- On the set when .

Go to step 2.

Step 4. Choosing the best solution. Among solutions in the set , find the best one, which is considered to be the approximate solution of the optimization problem.
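The pass and iteration bookkeeping of Steps 2 to 4 can be summarized in a deliberately simplified skeleton. It is not the TFO algorithm itself: the diffusion search with jumps and the Levy flight of the leader are replaced by plain uniform sampling around the leader, so that only the structure remains (memory per pass, Pool of pass results, shrinking of the search box within a pass, and partial restoration between passes). The update rules `r *= gamma` and `r = eta ** p` follow the Luus–Jaakola idea and are assumptions about the missing formulas; all names and default values are hypothetical.

```python
import random


def simplified_passes(objective, lower, upper, flock_size=20, k_max=30,
                      p_max=5, gamma=0.9, eta=0.8, seed=0):
    """Skeleton of the pass/iteration loop with uniform sampling only."""
    rng = random.Random(seed)
    leader = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
    pool = []
    r = 1.0
    for p in range(1, p_max + 1):
        memory = []
        for _ in range(k_max):
            flock = [leader]  # keep the leader so the best never gets worse
            for _ in range(flock_size):
                flock.append([
                    rng.uniform(max(lo, c - 0.5 * r * (hi - lo)),
                                min(hi, c + 0.5 * r * (hi - lo)))
                    for c, lo, hi in zip(leader, lower, upper)
                ])
            leader = min(flock, key=objective)
            memory.append(leader)
            r *= gamma                       # assumed reduction rule
        pool.append(min(memory, key=objective))
        leader = min(pool, key=objective)    # restart from the best in Pool
        r = eta ** p                         # assumed restoration rule
    return min(pool, key=objective)


def sphere(x):
    return sum(v * v for v in x)


best = simplified_passes(sphere, [-5.0, -5.0], [5.0, 5.0])
print(best, sphere(best))
```

Restoring the box only partially after each pass lets later passes re-explore around the best point found so far without returning to the full feasible set.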

As a result of generalizing the data obtained both in solving classical separable and nonclassical nonseparable optimal control problems, recommendations for choosing the values of hyperparameters were developed: number of tomtits in population ; flock leader movement step ; reduction parameter of search set ; reconstruction parameter of search set ; Levy distribution parameter ; tomtit’s neighborhood radius size ; parameters , , ; number of maximum records in memory matrix ; integration step size ; number of maximum discrete steps ; number of maximum passes ; jump intensity parameter .

3. Results

3.1. Example 1. The One-Dimensional Optimal Control Problem with an Exact Solution

The dynamical system is described by the state equation:

where . All variables, i.e., the coordinates of the state vector of the dynamical system and the coordinates of the control vector, are hereinafter written in normalized form.

It is required to find such a pair of trajectory and control that the value of the performance index is minimal:

In this case, it is possible to solve this problem analytically:

In this problem, the initial condition is known: , and the constraints on the control are also given: . For this task, the number of stages is set: ; the exact value of the performance index is

To solve the problems under consideration with the new TFO algorithm, a computer with the following characteristics was used: an Intel Core i7-2860QM processor with a clock frequency of 3.3 GHz and 16 GB of RAM. The software package was implemented in the C# programming language (version 7.2) with Microsoft .NET Framework version 4.7.2, available from https://dotnet.microsoft.com/en-us/ (accessed on 25 August 2022).

When solving this problem, the approximate value of the performance index obtained by the TFO algorithm was , and the runtime was 1:07 min. The relative error of the obtained value of the performance index was . The same problem was solved by the Perch School Search algorithm [10]; its runtime was 5:36 min, and the value of the performance index was . In this example, the new TFO algorithm obtained a solution of sufficient accuracy about five times faster.

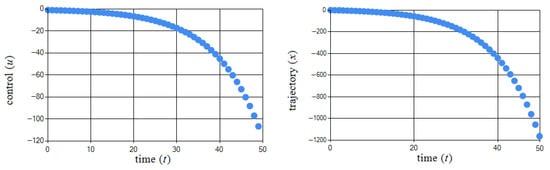

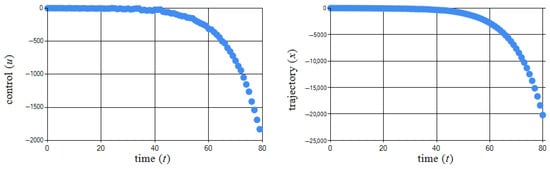

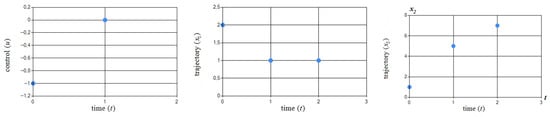

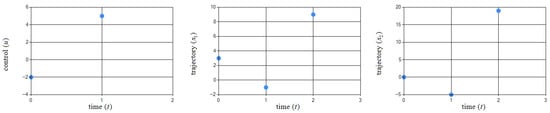

To solve this problem, the TFO algorithm was used with the parameters shown in Table 1. Figure 3 shows the obtained pair .

Table 1.

The set of parameters of the TFO algorithm in Example 1.

Figure 3.

Graphical illustration of obtained pair in Example 1.

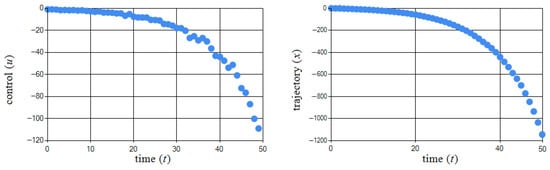

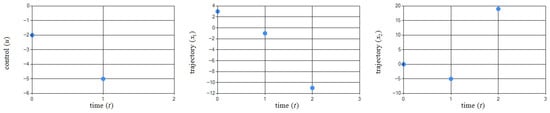

In order to analyze the effect of algorithm parameters on the result obtained, the same problem was solved with another set of algorithm parameters, which are presented in Table 2. Figure 4 shows the obtained pair .

Table 2.

The set of parameters of the TFO algorithm in Example 1.

Figure 4.

Graphical illustration of obtained pair in Example 1.

The approximate value of the performance index obtained by the TFO algorithm was , and the runtime was 14.92 s. With this parameter set, the resulting value of the performance index was worse, although the change in relative error was not significant, while the runtime dropped from 1:07 min to 14.92 s. This example shows the importance of selecting parameters, which is a rather difficult task: a change in the parameters can significantly affect the result of solving the problem and the time needed to obtain it.

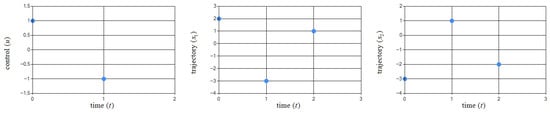

To demonstrate how the runtime grows with problem size, the optimal control and trajectory were also sought with the algorithm parameters from Table 1 and an increased number of stages: ; the parameters of the system remained unchanged.

Figure 5 shows the obtained pair .

Figure 5.

Graphical illustration of obtained pair in Example 1.

The exact value of the performance index was . The obtained value of the performance index was , with a runtime of 1:58 min.

Example 1 illustrates the possibilities of the method in solving financial optimization problems that take the discounting effect into account.

3.2. Example 2. Luus–Tassone Nonseparable Control Problem

The dynamical system is described by three difference equations:

where , , and .

It is required to find such a pair of trajectory and control that the value of the performance index I is minimal:

In this problem, the initial condition: , and the constraints on the control are also given: , , . For this task, the number of stages is set: .

The best known value of the performance index for this problem was obtained in [1]: . The approximate value of the performance index obtained by the TFO algorithm was , and the runtime was 16.47 s. The same problem was solved by the Perch School Search algorithm [10]; its runtime was 49.92 s, and the value of the performance index was . The new TFO algorithm thus obtained its solution about three times faster. However, the obtained solution is still not as accurate as that of the author of the problem [1]. Selecting the algorithm parameters for this problem turned out to be extremely difficult.

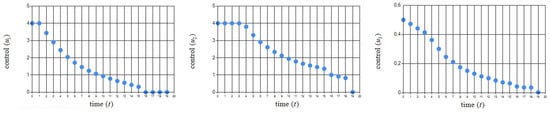

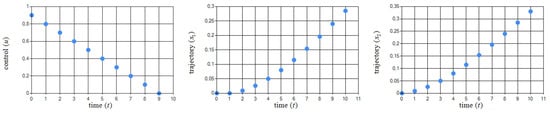

To solve this problem, the TFO algorithm was used with the parameters shown in Table 3. Figure 6 and Figure 7 show the obtained pair .

Table 3.

The set of parameters of the TFO algorithm in Example 2.

Figure 6.

Graphical illustration of obtained in Example 2.

Figure 7.

Graphical illustration of obtained in Example 2.

3.3. Example 3. Li–Haimes Nonseparable Control Problem

The dynamical system is described by three difference equations:

where , , and .

It is required to find such a pair of trajectory and control that the value of the performance index is minimal:

In this problem, the initial condition is known: , and the constraints on the control are also given: , . For this task, the number of stages is set: . The best-known value of the performance index for this nonseparable control problem was obtained in [1]: .

The approximate value of the performance index obtained by the TFO algorithm was , and the runtime was 0.13 s. The same problem was solved by the Perch School Search algorithm [10]; its runtime was 0.19 s, and the value of the performance index was . The new TFO algorithm makes it possible to obtain almost the same solution somewhat faster.

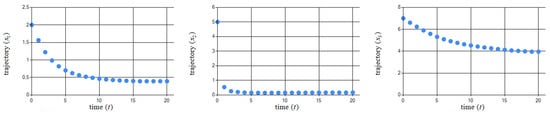

To solve this problem, the TFO algorithm was used with the parameters shown in Table 4. Figure 8 shows the obtained pair . The values of the obtained control and trajectory are shown in Table 5.

Table 4.

The set of parameters of the TFO algorithm in Example 3.

Figure 8.

Graphical illustration of obtained pair in Example 3.

Table 5.

The values of the obtained control and trajectory.

Examples 2 and 3 illustrate the possibilities of solving complex optimal control problems with nonseparable performance indexes, for which obtaining solutions using known optimality conditions is extremely difficult.

3.4. Example 4. The Two-Dimensional Lagrange Optimal Control Problem with an Exact Solution

The dynamical system is described by two difference equations:

where , , and .

It is required to find such a pair of trajectory and control that the value of the performance index is minimal:

In this problem, the initial condition and the constraints on the control are given: ; the number of stages is set: . The exact value of the performance index is . For this control problem, the analytical solution can be found: ; ; .

The approximate value of the performance index obtained by the TFO algorithm was and the runtime was less than 0.01 s. The relative error of the obtained value of the performance index was . The same problem was solved by the Perch School Search algorithm [10]. The runtime was 1.58 s and the value of the performance index was . The new TFO algorithm makes it possible to obtain solutions much faster and with almost the same accuracy.

To solve this problem, the TFO algorithm was used with the parameters shown in Table 6. Figure 9 shows the obtained pair .

Table 6.

The set of parameters of the TFO algorithm in Example 4.

Figure 9.

Graphical illustration of obtained pair in Example 4.

3.5. Example 5. The Two-Dimensional Mayer Optimal Control Problem with an Exact Solution

The dynamical system is described by two difference equations:

where , , and .

It is required to find such a pair of trajectory and control that the value of the performance index is minimal:

In this problem, the initial condition vector is known: , and the constraints on the control are also given: . For this task, the number of stages is set: ; the exact value of the performance index is

The approximate value of the performance index obtained by the TFO algorithm was and the runtime was less than 0.01 s. The same problem was solved by the Perch School Search algorithm [10]. The runtime was 0.25 s and the value of the performance index was . The new TFO algorithm obtains the solution much faster (under 0.01 s versus 0.25 s) and with almost the same accuracy.

To solve this problem, the TFO algorithm was used with the parameters shown in Table 7. Figure 10 shows the obtained pair . The values of the obtained control and trajectory components are shown in Table 8.

Table 7.

The set of parameters of the TFO algorithm in Example 5.

Figure 10.

Graphical illustration of obtained pair in Example 5.

Table 8.

The values of the obtained control and trajectory.

Examples 4 and 5 illustrate that the algorithm can find the optimal open-loop (program) control in classical control problems for linear discrete deterministic dynamical systems with a quadratic performance index.
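To apply a metaheuristic to problems of this kind, the whole control sequence is typically treated as the decision vector, and the objective is the performance index evaluated along the trajectory generated by the difference equations. The sketch below shows one way this evaluation could be written in Python. It is an illustration under stated assumptions, not the authors' implementation: the names `simulate`, `performance_index`, and `is_admissible` are hypothetical, and the linear system and quadratic costs in the usage lines are invented for the example rather than taken from Examples 4 and 5.

```python
from typing import Callable, List, Sequence

State = List[float]


def simulate(step: Callable[[int, State, float], State],
             x0: Sequence[float],
             controls: Sequence[float]) -> List[State]:
    """Roll x(t+1) = step(t, x(t), u(t)) forward from x0 under an open-loop control."""
    trajectory = [list(x0)]
    for t, u in enumerate(controls):
        trajectory.append(step(t, trajectory[-1], u))
    return trajectory


def performance_index(trajectory, controls, stage_cost, terminal_cost) -> float:
    """Sum of stage costs plus a terminal cost (Bolza form).

    A zero terminal cost gives a Lagrange problem;
    a zero stage cost gives a Mayer problem.
    """
    running = sum(stage_cost(t, trajectory[t], u) for t, u in enumerate(controls))
    return running + terminal_cost(trajectory[-1])


def is_admissible(controls, lower: float, upper: float) -> bool:
    """Check the box (parallelepiped) constraints lower <= u(t) <= upper."""
    return all(lower <= u <= upper for u in controls)


# Hypothetical linear system and quadratic costs (not from the paper's examples).
def step(t, x, u):
    return [x[0] + x[1], x[1] + u]


def stage_cost(t, x, u):
    return u * u


def terminal_cost(x):
    return x[0] ** 2 + x[1] ** 2


controls = [-1.0, 1.0]
trajectory = simulate(step, [1.0, 0.0], controls)
value = performance_index(trajectory, controls, stage_cost, terminal_cost)
```

An optimizer then only needs to propose admissible control sequences and compare the returned values; the trajectory is recovered from the best sequence by one more call to `simulate`.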

3.6. Example 6. The Two-Dimensional Bolza Optimal Control Problem with an Exact Solution

The dynamical system is described by two difference equations:

where , , and .

It is required to find such a pair of trajectory and control that the value of the performance index is minimal:

In this case, it is possible to solve this problem analytically:

In this problem, the initial condition vector is known: , and the constraints on the control are also given: . For this task, the number of stages is set: ; the exact value of the performance index is

When solving this problem, the approximate value of the performance index obtained by the TFO algorithm was and the runtime was 0.13 s. The relative error of the obtained value of the performance index was . The same problem was solved by the Perch School Search algorithm [10]. The runtime was 7.39 s and the value of the performance index was . The new algorithm obtains the solution much faster (0.13 s versus 7.39 s) and with almost the same accuracy.

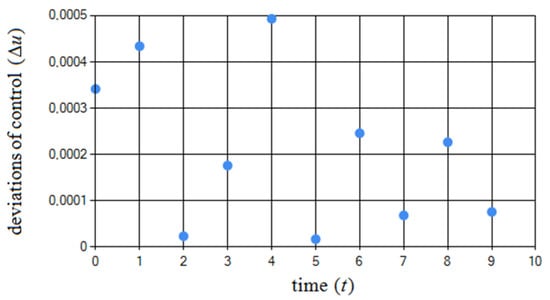

To solve this problem, the TFO algorithm was used with the parameters shown in Table 9. Figure 11 shows the obtained pair . Figure 12 shows deviations of approximate control values from exact ones at different moments of the dynamic system operation, i.e., .
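The deviations plotted in Figure 12 are a stage-by-stage comparison of two control sequences. A minimal Python sketch of that computation is shown below. The sign convention (approximate minus exact) and the function name `control_deviations` are assumptions, and the numbers are placeholders rather than the values behind the figure.

```python
from typing import List, Sequence


def control_deviations(approx: Sequence[float], exact: Sequence[float]) -> List[float]:
    """Stage-by-stage difference between approximate and exact control values."""
    if len(approx) != len(exact):
        raise ValueError("control sequences must cover the same stages")
    return [a - e for a, e in zip(approx, exact)]


# Hypothetical control sequences (placeholders, not the values behind Figure 12).
deviations = control_deviations([0.5, 0.25, 0.0], [0.5, 0.5, -0.25])
largest = max(abs(d) for d in deviations)
```

The largest absolute deviation gives a single-number summary of how closely the approximate control follows the exact one.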

Table 9.

The set of parameters of the TFO algorithm in Example 6.

Figure 11.

Graphical illustration of obtained pair in Example 6.

Figure 12.

Deviations of approximate control values from the exact ones.

3.7. Example 7. The Two-Dimensional Mayer Optimal Control Problem with an Exact Solution

The dynamical system is described by two difference equations:

where , , and .

It is required to find such a pair of trajectory and control that the value of the performance index is minimal:

In this problem, the initial condition vector is known: , and the constraints on the control are also given: . For this task, the number of stages is set: ; the exact value of the performance index is

In this example, the convex varying condition is not satisfied, which means that the discrete maximum principle cannot be applied, while the necessary optimality conditions are satisfied.

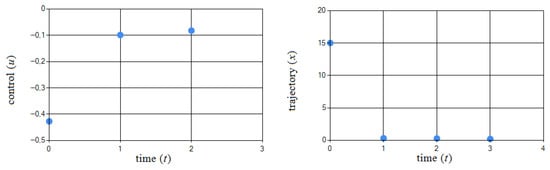

This problem has two solutions: , , and , , ; the TFO algorithm successfully finds both solutions.

When solving this problem, the approximate values of the performance index obtained by the TFO algorithm for both solutions were: (the runtime was less than 0.01 s for the first solution); (the runtime was less than 0.01 s for the second solution). The relative errors of the obtained values of the performance index were and , respectively. The same problem was solved by the Perch School Search algorithm [10]. Runtimes were 0.30 s and 0.31 s, and the values of the performance index were and , respectively. The TFO algorithm obtains both solutions much faster and with almost the same accuracy.

To solve this problem, the TFO algorithm was used with the parameters shown in Table 10. Figure 13 and Figure 14 show the obtained pairs for both solutions. The values of the obtained control and trajectory components are shown in Table 11 and Table 12.

Table 10.

The set of parameters of the TFO algorithm in Example 7.

Figure 13.

Graphical illustration of obtained pair for the first solution in Example 7.

Figure 14.

Graphical illustration of obtained pair for the second solution in Example 7.

Table 11.

The values of the obtained control and trajectory components for the first solution.

Table 12.

The values of the obtained control and trajectory components for the second solution.

4. Conclusions

In this paper, a new bio-inspired metaheuristic optimization algorithm named TFO, used to find the solution to open-loop control problems for discrete deterministic dynamical systems, is proposed.

This algorithm combines a number of new ideas with known techniques from several algorithms: for example, the numerical solution of stochastic differential equations with jumps by the Euler–Maruyama method to describe the movement of tomtits when foraging, compression and recovery of the search area, and exchange of information between members of the flock. These ideas allow the algorithm to solve applied optimization problems in a short runtime, as shown by the examples of finding the optimal control and trajectories of discrete dynamical systems. In most cases, the solutions obtained are extremely close to, or coincide with, the analytical solutions when these are known. Compared with the previously developed PSS algorithm, this algorithm is more efficient at finding the optimal control and trajectory, as shown by the comparison of the running times and relative errors.
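As a rough illustration of the jump-diffusion movement mentioned above, the following Python sketch applies the Euler–Maruyama scheme to a one-dimensional process with occasional jumps. It is a generic sketch, not the TFO update rule: the constant drift and diffusion coefficients, the Gaussian jump sizes, the at-most-one-jump-per-step approximation, the clipping to the search interval, and the function name `euler_maruyama_jump` are all assumptions made for the example.

```python
import math
import random
from typing import List


def euler_maruyama_jump(x0: float, drift: float, sigma: float,
                        jump_rate: float, jump_scale: float,
                        dt: float, n_steps: int,
                        lower: float, upper: float,
                        rng: random.Random) -> List[float]:
    """Simulate dX = drift*dt + sigma*dW + dJ on [lower, upper] by Euler-Maruyama.

    J is a compound Poisson process; at most one jump per step is allowed,
    which is a reasonable approximation when jump_rate * dt is small.
    """
    path = [x0]
    x = x0
    for _ in range(n_steps):
        # Brownian increment over one step has standard deviation sqrt(dt).
        diffusion = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        # A jump occurs in this step with probability jump_rate * dt.
        jump = rng.gauss(0.0, jump_scale) if rng.random() < jump_rate * dt else 0.0
        x = x + drift * dt + diffusion + jump
        x = min(max(x, lower), upper)  # keep the point inside the search interval
        path.append(x)
    return path


path = euler_maruyama_jump(x0=0.0, drift=0.0, sigma=1.0, jump_rate=2.0,
                           jump_scale=0.5, dt=0.01, n_steps=100,
                           lower=-1.0, upper=1.0, rng=random.Random(0))
```

The diffusion term produces small local moves, while the rare jumps let a point leave the neighbourhood it is currently exploring.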

In the future, we plan to improve this algorithm or create new algorithms based on the TFO algorithm in order to obtain methods that are more accurate without being slower.

The direction of further development of this work can be the application of the developed metaheuristic optimization algorithm in solving applied problems of optimal control of aircraft of various types and optimal control problems with full and incomplete feedback on the measured variables, as well as solving problems in the presence of uncertainty when setting the initial state vector.

Author Contributions

Conceptualization, A.V.P.; methodology, A.V.P. and A.A.K.; software, A.A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Source code at https://github.com/AeraConscientia/N-dimensionalTomtitOptimizer (accessed on 30 May 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Luus, R. Iterative Dynamic Programming, 1st ed.; Chapman & Hall/CRC: London, UK, 2000.

2. Yang, X.S.; Chien, S.F.; Ting, T.O. Bio-Inspired Computation and Optimization, 1st ed.; Morgan Kaufmann: New York, NY, USA, 2015.

3. Goldberg, D. Genetic Algorithms in Search, Optimization and Machine Learning, 1st ed.; Addison-Wesley Publishing Company: Boston, MA, USA, 1989.

4. Michalewicz, Z.; Fogel, D. How to Solve It: Modern Heuristics, 2nd ed.; Springer: New York, NY, USA, 2004.

5. Panteleev, A.V.; Lobanov, A.V. Application of Mini-Batch Metaheuristic algorithms in problems of optimization of deterministic systems with incomplete information about the state vector. Algorithms 2021, 14, 332.

6. Roni, H.K.; Rana, M.S.; Pota, H.R.; Hasan, M.; Hussain, S. Recent trends in bio-inspired meta-heuristic optimization techniques in control applications for electrical systems: A review. Int. J. Dyn. Control 2022, 10, 999–1011.

7. Floudas, C.A.; Pardalos, P.M.; Adjiman, C.S.; Esposito, W.R.; Gümüs, Z.H.; Harding, S.T.; Klepeis, J.L.; Meyer, C.A.; Schweiger, C.A. Handbook of Test Problems in Local and Global Optimization, 1st ed.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1999.

8. Roman, R.C.; Precup, R.E.; Petriu, E.M. Hybrid data-driven fuzzy active disturbance rejection control for tower crane systems. Eur. J. Control 2021, 58, 373–387.

9. Chi, R.; Li, H.; Shen, D.; Hou, Z.; Huang, B. Enhanced P-type control: Indirect adaptive learning from set-point updates. IEEE Trans. Autom. Control 2022.

10. Panteleev, A.V.; Kolessa, A.A. Optimal open-loop control of discrete deterministic systems by application of the perch school metaheuristic optimization algorithm. Algorithms 2022, 15, 157.

11. Sergeyev, Y.D.; Kvasov, D.E.; Mukhametzhanov, M.S. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 453.

12. Sergeyev, Y.D.; Kvasov, D.E. Deterministic Global Optimization: An Introduction to the Diagonal Approach, 1st ed.; Springer: New York, NY, USA, 2017.

13. Pinter, J.D. Global Optimization in Action (Continuous and Lipschitz Optimization: Algorithms, Implementations and Applications), 1st ed.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1996.

14. Chambers, D.L. Practical Handbook of Genetic Algorithms, Applications, 2nd ed.; Chapman & Hall/CRC: London, UK, 2001.

15. Floudas, C.; Pardalos, P. Encyclopedia of Optimization, 2nd ed.; Springer: New York, NY, USA, 2009.

16. Gendreau, M. Handbook of Metaheuristics, 2nd ed.; Springer: New York, NY, USA, 2010.

17. Glover, F.W.; Kochenberger, G.A. (Eds.) Handbook of Metaheuristics; Kluwer Academic Publishers: Boston, MA, USA, 2003.

18. Neri, F.; Cotta, C.; Moscato, P. Handbook of Memetic Algorithms, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2012.

19. Chattopadhyay, S.; Marik, A.; Pramanik, R. A brief overview of physics-inspired metaheuristic optimization techniques. arXiv 2022, arXiv:2201.12810v1.

20. Beheshti, Z.; Shamsuddin, S.M. A review of population-based meta-heuristic algorithms. Int. J. Adv. Soft Comput. Appl. 2013, 5, 1–35.

21. Locatelli, M.; Schoen, F. (Global) Optimization: Historical notes and recent developments. EURO J. Comput. Optim. 2021, 9, 100012.

22. Dragoi, E.N.; Dafinescu, V. Review of metaheuristics inspired from the animal kingdom. Mathematics 2021, 9, 2335.

23. Brownlee, J. Clever Algorithms: Nature-Inspired Programming Recipes, 1st ed.; LuLu.com: Raleigh, NC, USA, 2011.

24. Tzanetos, A.; Fister, I.; Dounias, G. A comprehensive database of nature-inspired algorithms. Data Brief 2020, 31, 105792.

25. Fister, I., Jr.; Yang, X.-S.; Fister, I.; Brest, J.; Fister, D. A brief review of nature-inspired algorithms for optimization. arXiv 2013, arXiv:1307.4186.

26. Yang, X.S. Nature-Inspired Metaheuristic Algorithms, 2nd ed.; Luniver Press: Frome, UK, 2010.

27. Panteleev, A.V.; Belyakov, I.A.; Kolessa, A.A. Comparative analysis of optimization strategies by software complex “Metaheuristic nature-inspired methods of global optimization”. J. Phys. Conf. Ser. 2022, 2308, 012002.

28. Del Ser, J.; Osaba, E.; Molina, D.; Yang, X.-S.; Salcedo-Sanz, S.; Camacho, D.; Das, S.; Suganthan, P.N.; Coello Coello, C.A.; Herrera, F. Bio-inspired computation: Where we stand and what’s next. Swarm Evol. Comput. 2019, 48, 220–250.

29. Yang, X.S.; Deb, S. Cuckoo search via Levy flights. In Proceedings of the World Congress on Nature and Biologically Inspired Computing, Coimbatore, India, 9–11 December 2009; pp. 210–214.

30. Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evolut. Comput. 1997, 1, 67–82.

31. Averina, T.A.; Rybakov, K.A. Maximum cross section method in optimal filtering of jump-diffusion random processes. In Proceedings of the 15th International Asian School-Seminar Optimization Problems of Complex Systems, Novosibirsk, Russia, 26–30 August 2019; pp. 8–11.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).