1. Introduction

The TSP is one of the most intensively studied and relevant problems in the Combinatorial Optimization (CO) field [

1]. Its simple definition—despite the membership to the NP-complete class—and its huge impact on real applications [

2] make it an appealing problem to many researchers. As evidence of this, the last seventy years have seen the development of extensive literature, which brought valuable enhancement to the CO field. Concepts such as the Held-Karp algorithm [

3], powerful meta-heuristics such as the Ant Colony Optimization [

4], and effective implementations of local search heuristics such as the Lin-Kernighan-Helsgaun [

5] have been suggested to solve the TSP. These contributions, along with others, have supported the development of various applied research domains such as logistics [

6], genetics [

7], telecommunications [

8] and neuroscience [

9].

In particular, during the last five years, an increasing number of ML-driven heuristics have appeared to make their contribution to the field [

10,

11]. The surge of interest was probably moved by the rich literature, and by the interesting opportunities provided by CO applications. Among the many works recently proposed it is worth mentioning those that have introduced empowering concepts such as the opportunity to leverage knowledge from past solutions [

12,

13], the ability to imitate computationally expensive operations [

14], and the faculty of devising innovative strategies via reinforcement learning paradigms [

15].

In light of the new features being brought by ML approaches, we wish to couple these ML qualities with well-known heuristic concepts, aiming to introduce a new kind of hybrid algorithm. The scope is to engineer an efficient interlocking between ML and optimization algorithms, which seeks robust enhancements with respect to classic approaches. Many attempts have been proposed so far, but none of them until now has succeeded in preserving the improvements while scaling up to larger problems. A promising idea to contrast the scaling up issue is to use CLs [

16]. A CL identifies a subset of edges that are considered promising for the solution. Using CLs can help the solver to restrict the solution searching space since most of the edges are marked as unpromising and will not be considered in the optimization. Moreover, the employment of CL allows for a

divide et conquer abstraction, which favors generalization. It can be argued that the generalization issue which emerged in the previous ML-driven approaches is caused mostly by the lack of a proper consideration of the ML weaknesses and limitations [

17,

18]. In fact, ML is known for having troubles with imbalanced datasets, outliers and extrapolation tasks. Such cases could lead to significant obstacles in achieving good performances with most ML systems. The aforementioned statistical studies were very useful to achieve fundamental insights which allowed our

ML-Constructive to bypass these weaknesses. More details on these typical ML weaknesses, with our proposed solutions, will be provided in

Section 2.2.

Our main contribution is the introduction of the first ML-driven heuristic that actively uses ML to construct partial TSP solutions without losing quality when scaling. The

ML-Constructive heuristic is composed of two phases. The first phase uses ML to identify edges that are very likely to be optimal, the second completes the solution by a classic heuristic. The resulting overall heuristic shows good performance when tested on representative instances selected from the TSPLIB library [

19]. The instances considered present up to 1748 vertices, and surprisingly

ML-Constructive exhibits slightly better solutions on larger instances rather than on smaller ones, as shown in the experiments. Despite the fact that good results are shown in terms of quality, our heuristic presents an unappealing large constant computation time for training in the current state of the implementation. However, we prove that for the creation of a solution a number of operations bounded by

is required after training (which is executed only once).

ML-Constructive learns exclusively using local information, and it employs a simple ResNet architecture [

20] to recognize some patterns from the CLs through images. The use of images, even if not optimal in terms of computation, allowed us to plainly see the input of the network and to get a better understanding of the internal processes in the network. We have finally introduced a novel loss function to help the network to understand how to make a substantial contribution when building tours.

The TSP is formally stated in

Section 1.1, and a literature review is presented in

Section 1.2. The concept of constructive heuristic is described in detail in

Section 2.1 while statistical and exploratory studies on the CLs are spotlighted in

Section 2.2. The general idea of the new method is discussed in

Section 2.3, the

ML-Constructive heuristic is explained in

Section 2.4 and the ML model with the training procedure is discussed in

Section 2.5. To conclude, experiments are presented in

Section 3, and conclusions are stated in

Section 4.

1.1. The Traveling Salesman Problem

Given a complete graph

with

n vertices belonging to the set

, and edges

for each vertex

with

, let

be the cost for the directed edge

starting from vertex

i and reaching vertex

j. The objective of the Traveling Salesman Problem is to find the shortest possible route that visits each vertex in

V exactly once and creates a loop returning to the starting vertex [

1].

The [

21] formulation of the TSP is an integer linear program describing the requirements that must be met to find an optimal solution to the problem. The variable

defines if the optimal route found picks the edge that goes from vertex

i to vertex

j with

, if the route does not pick such edge then

. A solution is defined as a matrix

X with entries

and dimension

. The objective function is to minimize the route cost, as shown in Equation (

1).

There are the following constraints: each vertex is arrived at from exactly one other vertex (Equation (

2)), each vertex is a departure to exactly one other vertex (Equation (

3)) and no inner-loop between vertices for any proper subset

Q is created (Equation (

4)). The constraints in Equation (

4) prevent that the solution

X is the union of smaller tours. To conclude, each edge

in solution must be not fractional (Equation (

5)). We point out that the graphs used in this work are symmetric and placed in a two-dimension space.

A CL with cardinality k for vertex i is defined as the set of edges , with , such that the vertices j are the closest k vertices to vertex i. Note that for each vertex there is a CL, and for each CL there are at most two optimal edges.

1.2. Literature Review

The first constructive heuristic documented for the TSP is the Nearest Neighbor (NN), which is a greedy strategy that repeats the rule

“take the closest vertex from the set of unvisited vertices”. This procedure is very simple, but it is not very efficient. While the NN is choosing the best vertex to join the solution, the Multi-Fragment (MF) [

22,

23] and the Clarke-Wright (CW) [

24] are alternatives to add the most promising edge in the solution. Note that the NN grows a single fragment of the tour by adding the closest vertex to the fragment extreme considered during the construction. On the other hand, MF and CW grow, join and give birth to many fragments of the final tour [

25]. The approaches using many fragments show superior quality performances, and also come up with very low computational costs.

A different way of constructing TSP tours is known as insertion heuristic, such as the Furthest-Insertion (FI) [

23]. These approaches iteratively expand the tour generated by the previous iteration. Considering that—at the

mth iteration—the insertion heuristic has created a feasible tour with

vertices (iteration one starts with two vertices) belonging to the inserted edges subset

. At iteration

, the expansion is carried out, and a new vertex, e.g., vertex

j, is inserted into the subset

. To preserve the feasibility of the expanded tour, one edge is removed from the previous tour and two edges are added to connect the released vertices to the new vertex

j. The removal is done in such a way that the lowest cost for the new tour is achieved.

In case the insertion policy is

Furthest, the new inserting vertex is always the farthest from all the vertices belonging to the current tour. Once the last iteration is reached, a complete feasible tour passing through each vertex in

V has been constructed. For further details about the computational complexity and the functioning of these broadly used constructives (MF, CW and FI) we suggest chapter six of Gerhard Reinelt’s book [

26].

Initially, the exploration in the literature produced to introduce ML concepts was about a setup similar to the NN. Systems such as pointer networks [

27] or graph-based transformers [

12,

13,

28] were engaged to select the next vertex in the NN iteration. These architectures were engaged to predict values for each valid departure edge of the extreme considered on the single fragment approach. Then, the best choice (next vertex) according to these values was added to the fragment. These systems were applying stochastic sampling as well, so they could produce thousands of solutions in parallel using GPU processing. Unfortunately, these ML approaches failed to scale, since all vertices were given as input to the networks, and TSP instances with different dimensions exhibit inconsistent intrinsic features.

To attempt to overcome the generalization problem, several works proposed systems arguably claimed to be able to generalize [

16,

29,

30]. Results however showed that the proposed

structure2vec [

29] and Graph Pointer Network [

30] keep their performances close to the FI [

23] up to 250 vertices instances, then they lose their ability to generalize. The model called Att-GCN [

16] is used to pre-process the TSP by initializing CLs. Even if solutions are promising in this case, the ML was not actively used to construct TSP tours. Furthermore, no comparisons with other CL constructors as: POPMUSIC [

31], Delunay Triangolarization [

32], minimum spanning 1-tree [

1] and a recent CL constructor driven by ML [

33] were provided in the paper.

The use of Reinforcement Learning (RL) to tackle TSP was proposed as well [

34]. The ability to learn heuristics without the use of optimal solutions is very appealing. These architectures were trained just via the supervision of the objective function shown in Equation (

1). Several RL algorithms were applied so far to solve TSP (and CO problems in general [

35]). The actor-critic algorithm was employed in [

36]. Later, ref. [

13] used the actor-critic paradigm with an additional reward defined by the easy to compute minimum spanning tree cost (resembling a TSP solution, see [

37]). Moreover, the Q-learning framework was used by [

29], the Monte Carlo Tree Search was employed by [

16] and Hierarchical RL applied in [

30]. It is worth mentioning that [

38] explicitly advised rethinking about the generalization issues to solve TSP employing ML, suggesting that a more hybrid approach was necessary. In Miki [

39,

40] was proposed for the first time the use of image processing techniques to solve the TSP. The choice—even if not obvious in terms of efficiency—has the advantage to get a better understanding of internal network processes.

2. Materials and Methods

For the sake of clarity, materials and methods employed in this work have been divided into subsections. In

Section 2.1, constructive heuristics, based on fragments growth, are reviewed with some examples. The statistical study and the main intuitions behind our heuristic are presented in

Section 2.2. The general idea of the

ML-Constructive heuristic is described in

Section 2.3, the overall algorithm with its complexity is demonstrated in

Section 2.4. The ML system, the image creation process and the training procedure are explained in detail in

Section 2.5.

2.1. Constructive Heuristics

Constructive heuristics are employed to create TSP solutions from scratch, when just the initial formulation described in

Section 1.1 is available. They exploit intrinsic features during the solution creation, generally provide quick solutions with modest quality, and exhibit low polynomial computational complexity [

1,

24,

41].

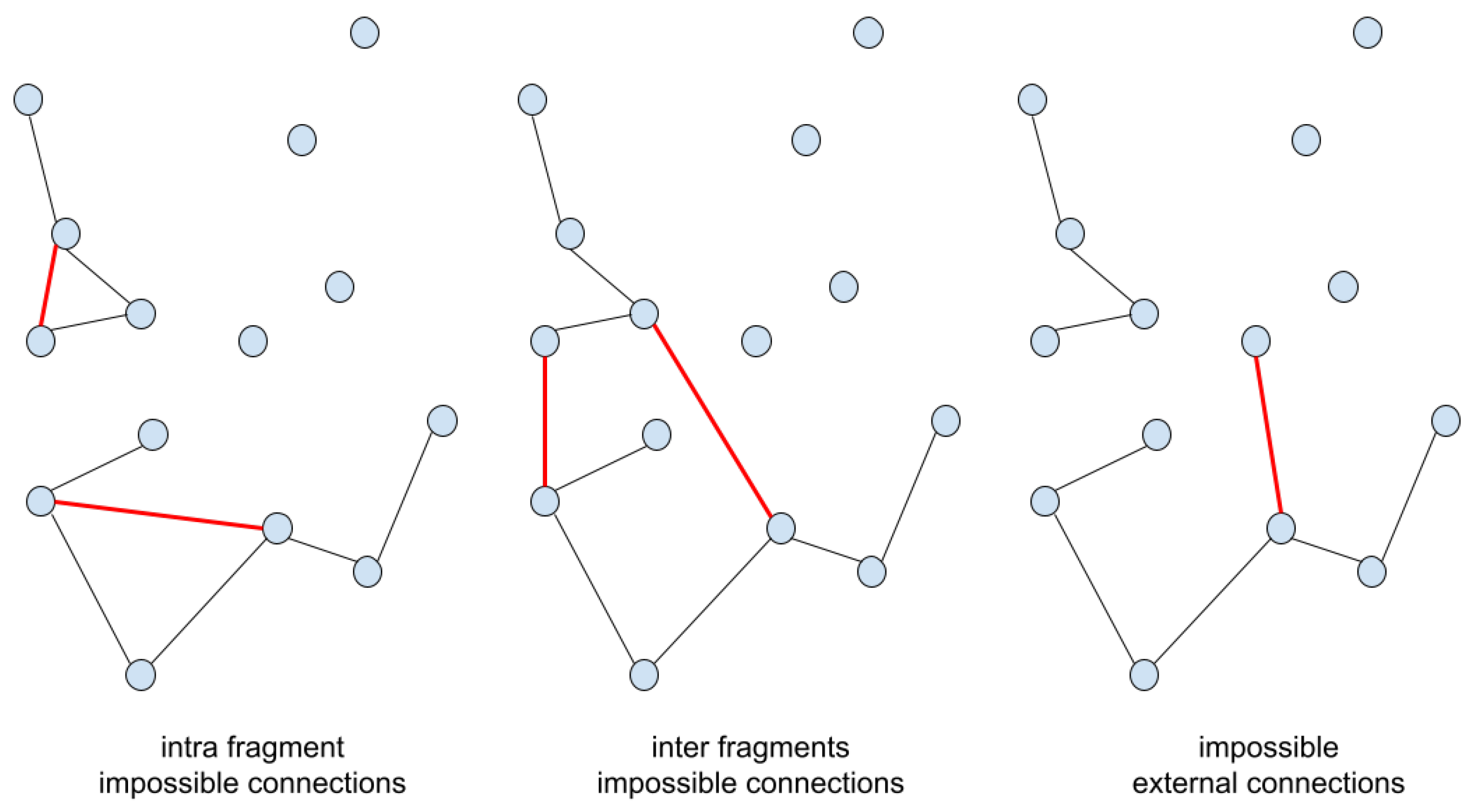

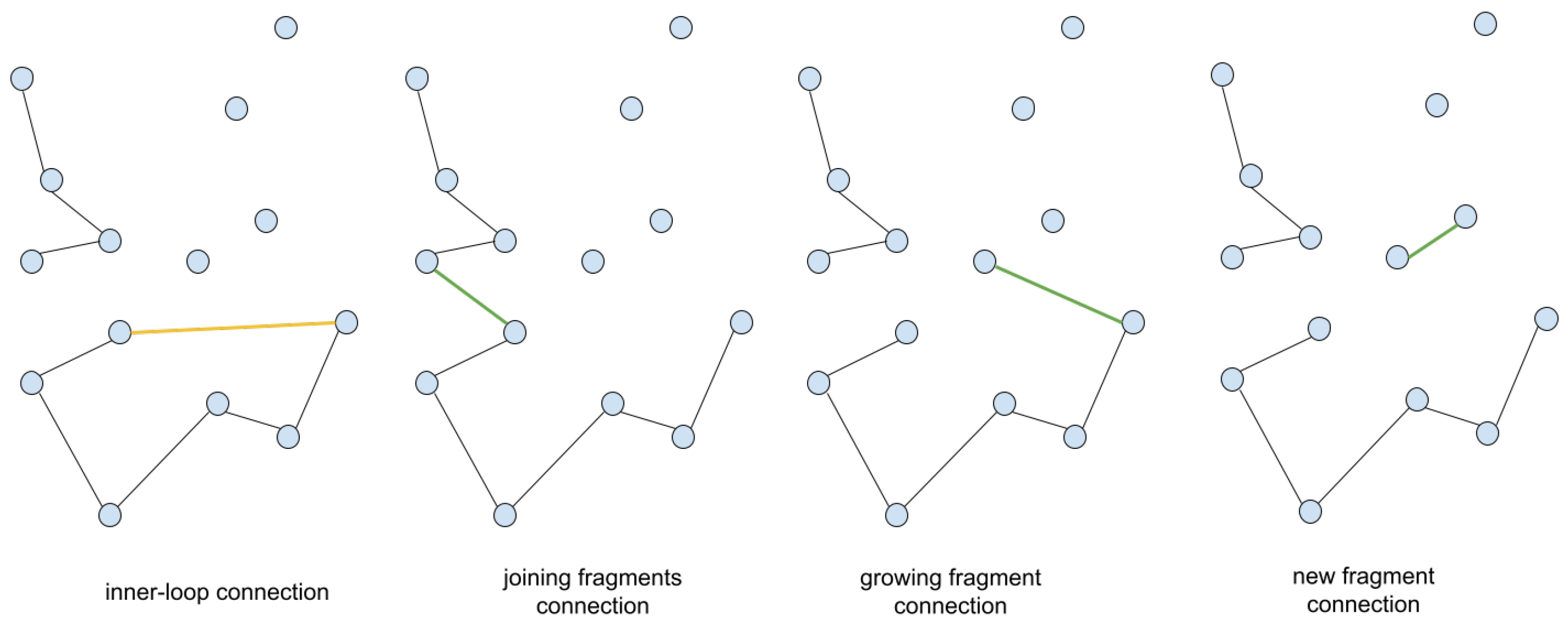

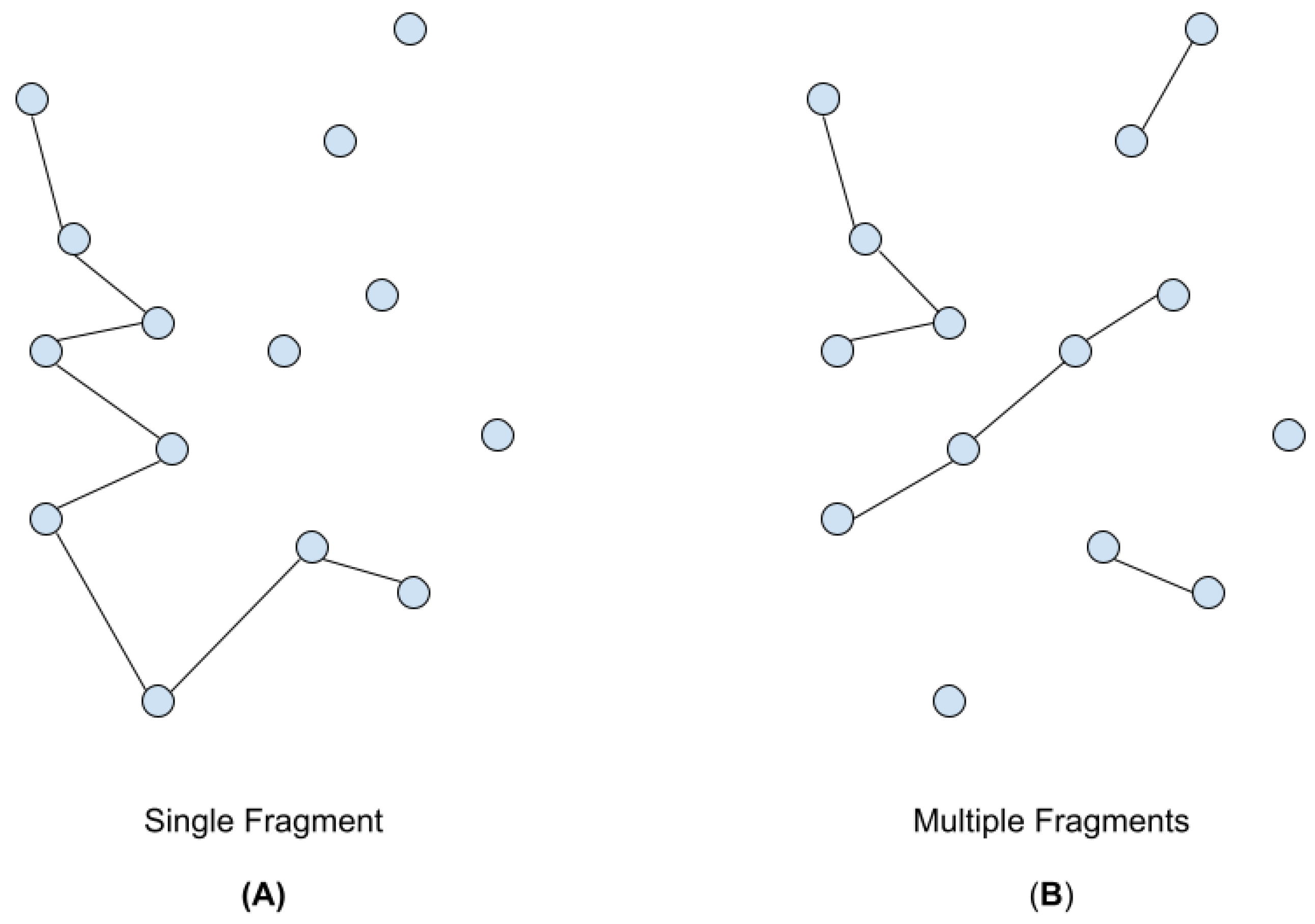

In this paper, we further develop constructive heuristics. Particularly, those that take their decisions on edges are addressed. These approaches grow many fragments of the tour, in opposition to NN that grows just a single fragment (

Figure 1). Since at each addition the procedure must avoid inner-loops and no vertex can be connected with more than two other vertices, they take an extra effort concerning the NN to preserve the TSP constraint. However, the computational time needed to construct a tour remains limited [

26].

As introduced by [

42,

43], two main choices are required to design an original constructive heuristic driven by edge choices: the order for the examination of the edges, and the constraints that ensure a correct addition. Note that to construct an optimal TSP solution the examination order of the edges must be optimal as well (there are more optimal orders). So, theoretically, the objective of an efficient constructive is to examine the edges in the best possible order.

The examination order is arranged according to the relevance that each edge of the instance exhibits. The relevance of an edge is related to the probability that we expect that such an edge is in the optimal solution. The higher is the relevance the earlier that edge should be examined. Different strategies are possible to measure the edges’ relevance, the most famous ones are the MF and the CW policies. The MF relevance is expressed by the cost values, the smaller is the cost, the higher is the probability of being added. Instead, the CW relevance depends on the saving value, which was designed on purpose to rethink the examination order. Saving is the gain obtained when rather than passing through a hub node

h, the salesman uses the straight connection between vertex

i and vertex

j. Note that the hub vertex is chosen to exhibit the shortest Total Distance (TD) from the other vertices. Equation (

6) describes the formulas used to find the hub, while Equation (

7) shows the function employed to compute the saving values.

The second important choice is to define simple constraints that ensure correct additions. The edge’s addition checker is the algorithm the allows to add feasible edges at each iteration. For both approaches (MF and CW), it is checked that the examined edge does not exhibit extremes vertices with already two connections (Equations (

2) and (

3)), and that does not create inner-loops (Equation (

4)) with the current partial solution.

Tracker is called the subroutine that checks if the examined edge can create an inner-loop, and it uses a quadratic number of operations for the worst-case scenario in our implementation (

Appendix A). Note that there exist more efficient data structures and algorithms for the tracker subroutine that even runs in

[

43].

2.2. Statistical Study

As mentioned earlier, the generalization issues of ML approaches are likely caused by a poor consideration of well-known deep learning weaknesses [

17,

18,

38]. It is known that dealing with imbalanced datasets, outliers and extrapolation tasks can critically affect the overall performances of an ML system. So one of the main reasons for using ML for TSP is to reduce the number of wrong forecasts during the construction of the solution.

An outlier arises when a data point differs significantly from other observations, outliers can cause problems during back-propagation [

44] and in their pattern recognition [

45]. Finally, with extrapolation it is mean the phenomenon that occurs when a learned system is required to operate beyond the space of known training examples, since it wants to extend the intrinsic features of the problem to similar but different tasks [

46].

To overcome the aforementioned problems, we suggest supporting the ML with an optimization heuristic. We instruct the model to act as a decision-taker, and we engineer to place it in a context that allows it to act confidently. We emphasize the significance of designing a good heuristic that avoids gross errors, e.g., by omitting imbalanced class skews and outlier points. Choosing wisely a context for the ML that does not change too frequently during the algorithm iterations, can help it to deal with extrapolation tasks as well.

We addressed the challenge by introducing two operational components: the use of a subroutine and the employment of ML as a decision-taker for the solution construction. The subroutine consists of the detection of optimal edges from the elements of a CL. As stated in

Section 1.1, CL identifies the most promising edges to be part of the optimal solution. For instance, [

47] proved that just around

of the edges need to be taken into account by an optimal solver for large instances with more than a thousand points. The use of CL with ML was firstly suggested by [

16]; but, as mentioned, they employed ML to initialize CL rather than constructing tours.

To understand the decision-taker task, an exploratory study was carried out to check the distribution of the optimal edges within the CLs. It was observed that after sorting the edges in the CL from the shortest to the longest, the occurring of an optimal edge was not uniform concerning the positions in the sorted CL, but followed a logarithmic distribution, as shown in

Figure 2. Such a pattern unfortunately revealed a severe class distribution skew for some positions. In fact, some positions displayed the presence of optimal edges much more frequently than other positions.

Figure 2 also shows the rate of optimal edges found for each position. Note that an optimal edge occurrence arises when the optimal tour passes through the edge in the position taken into consideration by the CL. This study emphasizes the relevance of detecting when the CL’s shortest edge is optimal since about

for the evaluation data-set and

for the test data-set of the times these edges belong to the optimal tour. However, it reveals as well that detecting with ML when the shortest edge is not optimal is a hard task due to the over-represented situation.

Instead, considering the second shortest edge, a balanced scenario is observed. About half of the occurrences are positive and the other half is negative for both data sets. On the other hand, the rapid growth of under-represented positions can be observed from the third position onwards. Note that up to the fifth position the under-representation is not too severe, and imbalanced learning techniques could make their contribution to infer some useful patterns [

48]. From the sixth on instead, the optimal occurrences are too rare to be able to recognize any useful pattern, even if these positions could be interpreted as very useful ones in terms of construction. Considering the rate of optimal edges shown in

Figure 2, the sum of optimal occurrences for the first five positions in the CL represents about

of the total optimal edges available. Therefore, the selection of such a subset of edges is promising in regards to ML pattern recognition, in such a way as to avoid all the under-represented scenarios.

The empirical probability density functions (PDF) shown in

Figure 2 were computed using 1000 uniform random euclidean TSP instances—sampled in the unit side square with a total number of vertices varying uniformly between 500 and 1000—for the evaluation data-set and a representative selection of TSPLIB instances as test data-set. The latter data-set was select in such a way that all the instances available in the TSPLIB library with a total number of vertices varying between 100 and 2000 were included. Furthermore, these instances were required to be stacked in a two-dimensional space as well. The optimal solutions were computed with the Concorde solver [

49] for both cases.

After that the most promising edges were selected, a relevant choice to be made in our heuristic is the examination order of these edges. As mentioned, edges selected in earlier stages of the construction exhibit higher probabilities to be accepted regarding the later ones. To explore the effectiveness of different strategies, we tested the behavior of classic constructive solvers such as MF, FI and CW (

Figure 3). Using the representative TSPLIB instances [

19], these solvers were investigated on some classic metrics such as the TPR, the FPR, the accuracy and the precision. Considering each constructive solver as a predictor, each position in the CL as a sample point, and the optimal edge positions as the actual targets to be predicted. For each CL, there are two position targets and two positions predicted.

A true-positive occurs when the predicted edge is also optimal, a false-positive instead occurs if the predicted edge is not optimal. Note that avoiding false-positive cases is crucial since they block other optimal edges in the process. To take care also of this aspect, the precision of the predicting heuristic was considered in

Table 1 as well. Let

be the dataset of all the edges available in the

p positions for each CL. If the predictor truly finds an optimal edge in the observation

i, the variable

will be equal to one, otherwise, it is zero. Similarly, it is for the false-positive

variable. Note that a positive (

) or negative (

) observation occur when the observation is optimal or not, respectively, and the frequency of these events varies according to the

p position. Hence, studying the predictor performances by position is important since each position has different importance during the solution construction and for the optimal frequency.

To get a better look at the results shown in

Figure 3,

Table 1 emphasizes the values obtained during the experiment. MF exhibits a higher TPR for shorter edges as expected, while CW performs better with longer edges. However, less obvious is MF’s higher FPR in the first position. The latter fact brings attention to the importance of decreasing the FPR for the most frequent position. Note that the CW’s precision is higher concerning the other ones for the first position, which can be read as the main reason why CW comes up with better TSP solutions than MF. So, one of the main reasons for using ML for TSP is to reduce the FPR during the construction of the solution.

2.3. The General Idea

In light of the statistical study presented in

Section 2.2, we propose a constructive heuristic called

ML-Constructive. The heuristic follows the edge addition process (see MF and CW in

Section 2.1) extended by an auxiliary operation that asks the ML to agree for any attaching edge during a first phase. The goal is to avoid as much as possible adding bad edges in the solution while allowing the addition of promising edges which are considered auspicious by the ML model. We emphasize that our focus is not on the development of highly efficient ML architectures, but rather on the successful interaction between ML and optimization techniques. Therefore, the ML is conceived to act as a decision-taker and the optimization heuristics as the texture of the solution building story. The result is a new hybrid constructive heuristic that succeeds in scaling up to big problems while keeping the improvements achieved thanks to ML.

As aforementioned, the ML is exploited just in situations where the data do not suggest underrepresented cases, and since about

of the optimal edges are connections with one of the closest five vertices of a CL, only such subset of edges is initially considered to test the ML performances. Recall that it is a common practice to avoid employing ML in the prediction of rare events. Instead, it is commonly suggested to apply ML preferably in cases where a certain level of pattern recognition can be confidently detected. For this reason, our solution is designed to construct TSP tours in two phases. The first employs ML to construct partial solutions (

Figure 4). While the second uses the CW heuristic to connect the remaining free vertices and complete the TSP tour.

Initially, during the first phase, considered most likely edges to be found in the optimal tour are collected in the list of promising edges

. To appropriately choose these edges, several experiments were carried out and results are shown in

Table 2. The strategy used to build the promising list was to include the edges of the first

m vertices of each CL, considering the

m value ranging from 1 to 5. The ML was in charge of predicting whether the edges under consideration in

were in the optimal solution or not. Experiments were handled adopting the same ResNet [

20] architecture and procedure explained in

Section 2.5, but the ML was trained on different data to be consistent with the

m tested value.

Several classic metrics were compared for the different

m: the True Positive Rate (TPR), the False Positive Rate (FPR), and their difference [

50]. Please note that ML objectives are to keep the FPR small, meanwhile to obtain good results in terms of TPR. Keeping a small FPR ensures that during the second phase the

ML-Constructive has an higher probability in detecting good edges, meanwhile with a high TPR the search space for the second phase is hopefully reduced (

Appendix B).

In terms of TPR, it seems to be the best choice to include just the shortest edge in

(

), but by checking the FPR in

Table 2 it becomes obvious that the ML has learned to predict almost always an agreement for

. Therefore, such a behavior is undesirable and it leads to a high FPR, and hence to worse solutions during the second phase. However, if the difference between TPR and FPR is taken into account, the best arrangement is when the first two shortest edges are put into the list (

). Although other arrangements might show to be effective as well, the selection through positions in the CLs and the selection of the first two shortest edges in each CL are proven to be efficient by the results. Recall that too many edges in

can be confusing since outliers and classes with severe distribution skew can appear. For example, detecting optimal edges from the fourth position onwards is very difficult since they are very under-represented (

Figure 2), and the creation of images connecting edges that are in the fourth position in their CL is very uncommon—causing outliers in the third channel (

Section 2.5).

After engineering the promising list structure,

is sorted according to a heuristic that seeks to anticipate the processing of good edges. It is crucial to find an effective sorting heuristic for the promising list since the order of it affects the learning process and the

ML-Constructive algorithm as well. An edge belonging to the optimal tour and being straightforwardly detected by the ML model is regarded as good. Therefore, for simplicity, in this work, the list is sorted by edge’s position in the CL and cost length, but other approaches could be propitious, perhaps using ML. Note that, as repeatedly mentioned, the earlier examination of the most promising edges increases the probability to find good tours employing the multiple fragment paradigm (

Appendix B).

At this point, the edges belonging to the sorted promising list are drawn in images and fed to the ML one at a time. If the represented edge meets the TSP constraints considering the partial solution found at the current iteration, the ML system will be challenged to detect if the edge is in the optimal solution. If the ML agrees with a given level of confidence, the heuristic will add the edge to the solution. Assuming that some CLs information provides enough details to detect common patterns from previous solutions, the images represent just a small subset of vertices given by the CLs of each edge extremes of the edge that is processed. The partial solution visible in such local context and available up to the insertions made by moving through the promising list is represented as well.

Once all the edges of the promising list have been processed, the second phase of the algorithm will complete the tour. Initially, it detects the remaining free vertices to connect (

Figure 4), then it connects such vertices employing the CW. Note that CW usually captures the optimal long edges better than MF, as emphasized in

Figure 3 and

Table 1. Therefore CW represents a promising candidate solver to connect the remaining free fragment extremes of the partial solution into the final tour. However, other arrangements employing local searches or meta-heuristics may be considered promising as well even if more time-consuming [

51].

2.4. The ML-Constructive Algorithm

The ML-Constructive starts as a modified version of MF, then concludes the tour exploiting the CW heuristic. The ML model behaves consequently as a glue since it is crucial to determine the partial solution available at the switch between solvers.

The list of promising edges

and the confidence level of the ML decision-taker are critical specifications to set in the heuristic before than it runs. The reasons behind our promising list building choices were widely discussed in

Section 2.3. While the confidence level is used to handle the exploitation vs exploration trade-off. It consists of a simple threshold applied to the predictions made by the ML system. If the predicted probability that validates the insertion is greater than such threshold, then the insertion is applied. The value of

has been verified to provide good results on tested instances. Since, lower values increase the occurrence of false-positive cases, thus leading to the inclusion of edges that are not optimal. On the other hand, higher values of it decrease the occurrence of true-positive cases, hence increasing the challenge of the second phase.

The overall pseudo-code for the heuristic is shown in Algorithm 1. Firstly, the CLs for each vertex in the instance are computed (line 2). We noticed that considering just the closest thirty vertices for each CL was a good option. As mentioned, just the first two connections are considered in , while the other vertices are used to create the local context in the image. The CL construction takes a linear number of operations for each vertex, and the overall time complexity for constructing it is . Since finding the nearest vertex of a given vertex it takes linear time, the search for the second nearest takes the same time (after removing the previous from the neighborhood). So on until the thirtieth nearest vertex is found. As only the first thirty edges are searched, the operation can be completed in linear time. Then, promising edges are inserted in (line 3), then repeating edges are deleted to avoid unnecessary operations (line 4). The list is sorted according to the position in the CL and the cost values (line 5). All the edges that are the first nearest will be found first and sorted according to , then the second nearest and so on. Since only the first two edges for each CL can be in the list, the sorting task is completed in . Several orders for were preliminary tested—e.g., employing descending cost values or even savings to sort the edges in —but experiments suggested that sorting the list according to ascending cost values is the best arrangement.

The first phase of

ML-Constructive takes part. An empty solution

is initialized (line 6), and following the order in

a variable

l is updated with the edge considered for the addition (line 7). At first,

l is checked to ensure that the edge complies the TSP constraints (Equations (

2)–(

4)). Then the ML decision-taker is queried to confirm the addition of the edge

l. If the predicted probability is higher than the confidence level, the edge is added to the partial solution (lines 11 and 14). To evaluate the number of operations that this phase consumes, we must split the task according to the various sub-routines which are acted at each new addition in the solution. The “if” statements (lines 9, 13, 23 and 26) check that the constraints (

2) and (

3) are complied.

They verify that both extremes of the attaching edge

l exhibit at most one connection in the current solution. The operation is computed with the help of hash maps, and it takes constant time for each considered edge

l. The tracker verification (lines 10 and 24) ensures that

l will not create an inner-loop (Equation (

4)). This sub-routine is applied only after it is checked that both extremes of

l have exactly one connection, each in the current partial solution. It takes overall

operations up to the final tour (proof in

Appendix A).

Once all the constraints of the TSP have been met, the edge l is processed by the ML decision-taker (lines 11 and 14). Even if time-consuming, such sub-routine is completed in constant time for each l. Initially, the image depicting the l edge and its local information is created, then it is given as input to the neural network. To create the image, the vertices of the CLs and the existing connections in the current partial solution must be retrieved. Hash maps are used for both tasks, and since the image can include up to sixty vertices, this operation takes a constant amount of time for each l edge in . The size of the neural network does not vary with the number of vertices in the problem as well but remains constant for each edge in .

To complete the tour, the second phase starts by identifying the hub vertex (line 15). It considers all the vertices in the problem (free and not), following the rule explained in Equation (

6). Free vertices are selected from the partial solution, and edges connecting such vertices are inserted in the difficult edges list

(line 16). The saving for each edge in

is computed (line 17), and the list is sorted according to these values (line 18) in

. At this point (lines 20 to 27), the solution is completed employing the classical multiple fragment steps, which are known to be

[

41].

Therefore, the complexity of the worst-case scenario for the

ML-Constructive is:

Note that to complete the tour we proposed the use of CW, but rather hybrid approaches that also use some sort of exhaustive search could be very promising as well, although more time-consuming.

| Algorithm 1 ML-Constructive. |

-

Require:

TSP graph -

Ensure:

a feasible tour X - 1:

procedure ML-Constructive() - 2:

create CL for each vertex - 3:

insert the shortest two vertices for each CL into - 4:

remove from all duplicate edges in their higher positions - 5:

sort according to the position in the CL and the ascending costs - 6:

- 7:

for l in do - 8:

select the extreme vertices of l - 9:

if vertex iand vertex j have exactly one connection each in X then: - 10:

if l do not creates a inner-loop then: - 11:

if the ML agrees the addition of lthen: - 12:

else - 13:

if vertex iand vertex j have less than two connections each in X then: - 14:

if the ML agrees the addition of lthen: - 15:

find the hub vertex h - 16:

select all the edges that connects free vertices and insert them into - 17:

compute the saving values with respect to h for each edge in - 18:

sort according to the descending savings - 19:

- 20:

while the solution X is not complete do - 21:

, - 22:

select the extreme vertices of l - 23:

if vertex iand vertex j have less than two connections each in X then: - 24:

if l do not creates a inner-loop then:

|

2.5. The ML Decision-Taker

The ML decision-taker validates the insertions made by the ML-Constructive during the first phase. Its scope is to exploit the ML pattern recognition to increase the occurrences of finding good edges while reducing them for the bad edges.

Two data sets were specifically created to fit the ML decision-taker; the first was used to train the ML system while the second was to evaluate and choose the best model. The training data-set is composed of 38,400 instances uniformly sampled in the unit-sided square. The total number of vertices for instance

n ranges uniformly from 100 to 300. On the other hand, the evaluation data-set is composed of 1000 instances uniformly sampled from the unit-sided square. The total number of vertices varies in this case from 500 to 1000. The data-set has been used to create the results in

Table 2. The optimal solutions were found (in both cases) using the Concorde solver [

49]. The creation of the training instances and their optimal tours took about 12 hours on a single CPU thread, while a total time of 24 CPU hours was needed for the evaluation data-set creation (since it includes instances of greater size). Note that, in comparison with other approaches that use RL, good results were achieved here even though we used far fewer training instances [

13].

To get the ML input ready, the promising list

was created for each instance in the data sets. In case

(best scenario), the two shortest edges of each CL were inserted into the list. To avoid repetitions, edges that occur several times in the list were inserted just once at the shortest available position. For example, if edge

is the first shortest edge in CL

and the second shortest edge in CL

, it will only occur in

once such as the first position for vertex

i. After that all promising edges had been inserted into

, the list was sorted. Note that the list can contain at most

items in it. An image with a dimension of

pixels was created for each edge belonging to

. Three channels (red, green, and blue) were set up to provide the information used to feed into the neural network. Each channel depicts some information inside a square with sides of 96 pixels each (

Figure 5). The first channel of the image (red) shows each vertex in the local view, the second one (green) shows the edge

l considered for the insertion with its extremes and the third one (blue) shows all the existing fragments currently in the partial solution and visible from the local view drawn in the first channel.

As mentioned, the local view was formed by merging the vertices belonging to the CLs of each extreme of the inserting edge. These vertices were collected and their positions normalized to fill the image. The normalization required having the middle of the inserting edge l such as the image center, whereas all the vertices visible in the local view were interior to a virtual sphere inscribed into the squared image, such that the maximum distance between the image center and the vertices in the local view was less than the radius of such sphere. The scope of the normalization was to keep consistency among the images created for the various instances.

The third channel was concerned with giving a temporal indication to aid the ML system in its decision. Representing those edges that had been inserted during the previous stages of the ML-Constructive, this information gave a helpful hint in the interpretation of which edges the final solution needs most. Two different policies were employed in the construction of it: the optimal policy (offline) and the ML adaptive policy (online). The first used the optimal tour and the order to create this channel (just on training), while the second one used the ML previous validations (train and test).

A simple ResNet [

20] with 10 layers was adopted to agree on the inclusion of the edges into the solution. The choice of the model is motivated by the easiness that image processing ML models show on the understanding of the learning process. The architecture is shown in

Figure 6. There are four residual connections, containing two convolutional layers each. The first layer in each residual connection is characterized by a stride equal to two (/2). As usual for the ResNet the kernels are set to

, and the number of features increases by multiplying by 2 at each residual connection, to balance the downscale of the images. The output is preceded by a fully connected layer with 9 neurons (fc) and by an average pool operation (avg pool) that shrinks the information in a single vector with 1024 features. For additional details we refer to [

20]. The model is very compact, with the scope of avoiding the computational burden and other complexities.

The output of the network is represented by two neurons. One neuron is predicting the probability that the considered edge l is in the optimal solution, while the other is predicting if the edge is not optimal. The sum of both probabilities is equal to one. The choice of using two neurons as output instead of just one is due to the exploitation of the Cross-Entropy loss function, which is recommended to train classification problems. In fact, this loss penalizes especially those predictions that are confident and wrong. The network will know if the inserting edge l is optimal or not during train, while the ResNet should predict the probability that the edge is optimal during the test.

To train the network two loss functions were jointly utilized: the Cross-Entropy loss (Equation (

10)) [

52] and a reinforcement loss (Equation (

11)) which was developed specifically for the task at hand. Initially, the first loss is employed alone up to convergence (about 1000 back-prop iterations), then the second loss is also engaged in the training. At each iteration of back-propagation the first loss updates the network firstly, then (after 1000 iterations) the second loss updates the network as well. The gradient of the second loss function is approximated by the REINFORCE algorithm [

53] with a simple moving average with 100 periods used as a baseline.

In Equations (

10) and (

11),

is the image of the inserting edge

l, the function identifying whether

l is optimal is accounted as

p, while

is the ResNet approximation to it. Moreover, the

T function returns one if the prediction made by

is true (TP or TN), and zero otherwise. While the

F function returns one if the prediction is false. Note that the second loss exhibit an expected value with respect to

measure, since the third channel is updated using the ML adaptive policy (online), while the first loss uses the optimal policy (offline). The introduction of a second loss had the purpose of increasing the occurrences of true-positive while decreasing the false-positive cases. Note that it employs the same policy that will occur during the

ML-Constructive test run.

3. Experiments & Results

To test the efficiency of the proposed heuristic, experiments were carried out on 54 standard instances. Such instances were taken from the TSPLIB collection [

19] and their vertex set cardinality varies from 100 to 1748 vertices. Non-euclidean instances, such as the ones involving geographical distances (GEO) or special distance functions (ATT), were addressed as well. We recall that the ResNet model was trained on small (100 to 300 vertices) uniform random euclidean instances, evaluated on medium-large (500 to 1000 vertices) uniform random euclidean instances, and tested on TSPLIB instances. We emphasize that TSPLIB instances are generally not uniformly distributed, and, as mentioned, these instances were selected among all available instances in such a way to have no less than 100 total vertices, no more than 2000 total vertices and with the ability to be described in a two-dimensional space.

All the experiments were handled employing python 3.8.5 [

54] for the algorithmic component, and pytorch 1.7.1 [

55] to manage the neural networks. The following hardware was utilized:

During training, all hardware was exploited, while just the CPU was used to test.

The experiments presented in

Table 3 compare

ML-Constructive (ML-C) results—achieved employing the ResNet—to other famous strategies based on fragments, such as the MF and the CW. The first is equivalent to include all the existing edges in the list of promising edges

, sort the list according to the ascending cost values—without considering the CL positions—and then substituting the ML decision taker with a rule that always inserts the considered edge. While the second strategy is equivalent to keeping the list

empty, this means that the first phase of

ML-Constructive does not create any fragment, while the construction is made completely in the second phase. For the sake of completeness, the FI was tested as well and added to

Table 3. Even if FI is not a growing fragment approach, and therefore is not an alternative strategy of our

ML-Constructive heuristic, it is nevertheless a good benchmark solver to compare.

To explore, evaluate and interpret the behavior of our two-phase algorithm, other strategies were investigated as well. The ML decision-taker can act in very different ways, and a comparison with expert-made heuristic rules can be significant. Deterministic and stochastic heuristic rules were created to explore the optimality gap variation. The aim was to prove that the learned ML model would produce a higher gain for the heuristic rules, as corroborated by

Table 3. The heuristic rules were substituting the ML decision-taker component within the

ML-Constructive (lines 10 and 13 of Algorithm 1). No changes in the selecting and sorting strategies were applied to create the lists

and

. The First (F) rule decides to deterministically add the

l edge if one of its extremes is the first closest vertices in the CL of the other extreme. The Second (S) rule is similar, but it adds

l only if one extreme is the second shortest in the CL of the other. The policy that always validates (Y) the insertion of the edges in

was examined as well. It represents with CW the extreme cases where the ML decision-taker always validates or not, respectively, the edges in

. A stochastic strategy called empirical (E) was tested as well, which adds the edges in

according to the distribution seen for the evaluation data-set in

Figure 2. Therefore, it inserts the edge if one of the extremes is the first with probability

or the second with probability

. Twenty runs of the empirical strategy were made, and in (AE) we show the average results, while in (BE) we show the best from all runs. Finally, to check the behavior of the

ML-Constructive in case the ML system validates with

accuracy (the ML decision-taker is a perfect oracle), the Super Confident (ML-SC) policy was examined. This policy always answers correctly for all the edges in the promising list

, and is achieved by exploiting the known optimal tours. To capture the potentiality of a super-accurate network for the first phase, the partial solution created by the ML-SC policy in the first phase and the solution constructed in the second phase are shown in

Figure 7. Note that some crossing edges are created in the second phase. In fact, despite the solution created being very close to the optimal, the second phase sometimes adds bad edges to the solution. This last artificial policy has been added to demonstrate how much leverage we can still gain from the ML point of view.

The results obtained by the optimal policy (ML-SC) lead us to two interesting aspects. The first one, as mentioned before, shows the possible leverage from the ML perspective (first phase). While the second one gives us an idea of how much improvement is possible from the heuristic point of view (second phase). To emphasize these aspects the ML-SC (gap) column in

Table 3 shows the difference, in terms of percentage error, between the ML-SC solution and the best solution found by the other heuristics (in bold). In fact, the top nine columns were compared with each other to find the best solution, while the tenth column (ML-SC) was later compared with all the others. The average, the standard deviation (std), and the number of times when the heuristic is best are shown as well for each strategy. The gap column is of interest since it reveals that occasionally the solution is highly affected by the behavior of the second phase of the heuristic. Even if the heuristic behaves greatly for promising edges the second phase can still ruin the solution.

Among the many policies shown in

Table 3, the First (F) and the ML-based (ML-C) policies exhibit comparable average gaps. To prove that the enhancement introduced by the ML system is on average statistically significant, a statistical test was conducted. A T-test on the percentage errors obtained for the 54 instances in

Table 3 shows that the p-value against the hypothesis both policies are similar in terms of the average optimal gap is equal to 0.03. The result proves that the enhancement is relevant, and that these systems have a promising role in improving the quality of TSP solvers.

To check the behavior of the heuristics concerning time,

Table 4 shows the CPU time for each policy and heuristic shown in

Table 3. Note that for each query to the ResNet the input procedure produces an image that increases the computational burden. Therefore, future work could be proposed to speed the ML component, even though the computation times remain short and acceptable for many online optimization scenarios.

Finally, to make a comparison with the metrics presented for the MF, FI, and CW in

Section 2.2, the results in

Table 5 show the final tour achievements for the F, ML-C, and ML-SC policies across the various positions in the CL. Note that although the TPR of ML-SC is

, its FPR is not equal to zero since during the second phase some edges in the first and second position can be inserted. Also note that the accuracy of ML-C is consistently better than F, while the FPR for the first position is lower resulting in a higher TPR for the second position.