Methodology for Analyzing the Traditional Algorithms Performance of User Reviews Using Machine Learning Techniques

Abstract

1. Introduction

- We scraped recent Android application reviews using web-scraping tools, i.e., Beautiful Soup 4 (bs4), request and regular expressions (re);

- We scraped raw data from the Google Play Store, collected these data in chunks and normalized the dataset for our analysis;

- We compared the accuracy of various machine-learning algorithms and identified the best-performing algorithm from the results;

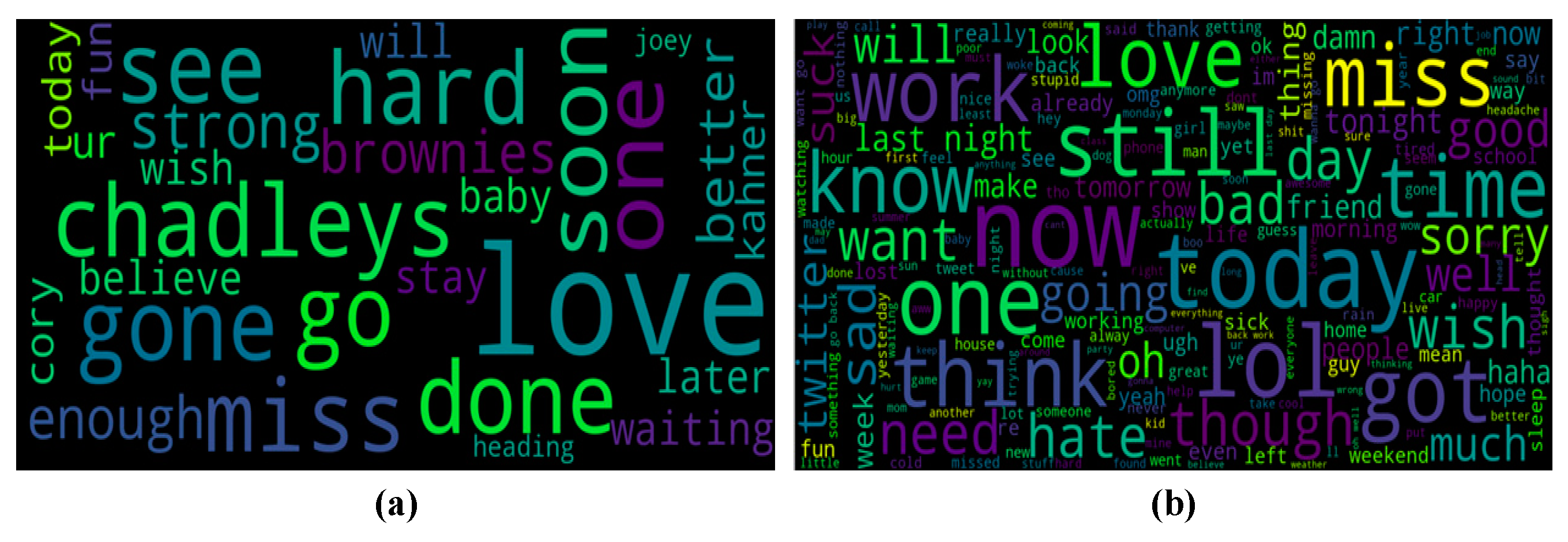

- The algorithms classify the sentiment polarity of each review as positive, negative or neutral; a word cloud of the corpus also illustrates this;

- One of the key findings is that logistic regression outperforms random forest and multinomial naïve Bayes on multi-class data in most application categories;

- The quality of preprocessing affects the performance of machine-learning models;

- After preprocessing, term frequency (TF) features gave better overall results than term frequency-inverse document frequency (TF-IDF) features.
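The last contribution contrasts TF and TF-IDF features. As a minimal sketch of the difference between the two weightings, the code below computes both for whitespace-tokenized, already cleaned reviews. It assumes the smoothed IDF formula that scikit-learn's `TfidfVectorizer` uses by default, idf(t) = ln((1 + n) / (1 + df(t))) + 1, and skips the row normalization that library also applies; the paper does not state which variant it used, and the function names and toy reviews are hypothetical.

```python
import math
from collections import Counter

def tf_vectors(docs):
    """Term-frequency (raw count) vector for each whitespace-tokenized review."""
    return [Counter(doc.split()) for doc in docs]

def tfidf_vectors(docs):
    """TF weighted by smoothed IDF: idf(t) = ln((1 + n) / (1 + df(t))) + 1, no normalization."""
    tfs = tf_vectors(docs)
    n = len(docs)
    df = Counter(term for tf in tfs for term in tf)  # number of reviews containing each term
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    return [{t: count * idf[t] for t, count in tf.items()} for tf in tfs]

reviews = ["good game good graphics", "bad game", "good app"]
tf = tf_vectors(reviews)
tfidf = tfidf_vectors(reviews)
print(tf[0]["good"], round(tfidf[1]["bad"], 3), round(tfidf[1]["game"], 3))  # 2 1.693 1.288
```

In this toy corpus TF treats "bad" and "game" in the second review equally, while TF-IDF gives the rarer word "bad" more weight than "game", which occurs in two of the three reviews.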

2. Literature Review

3. Materials and Methods

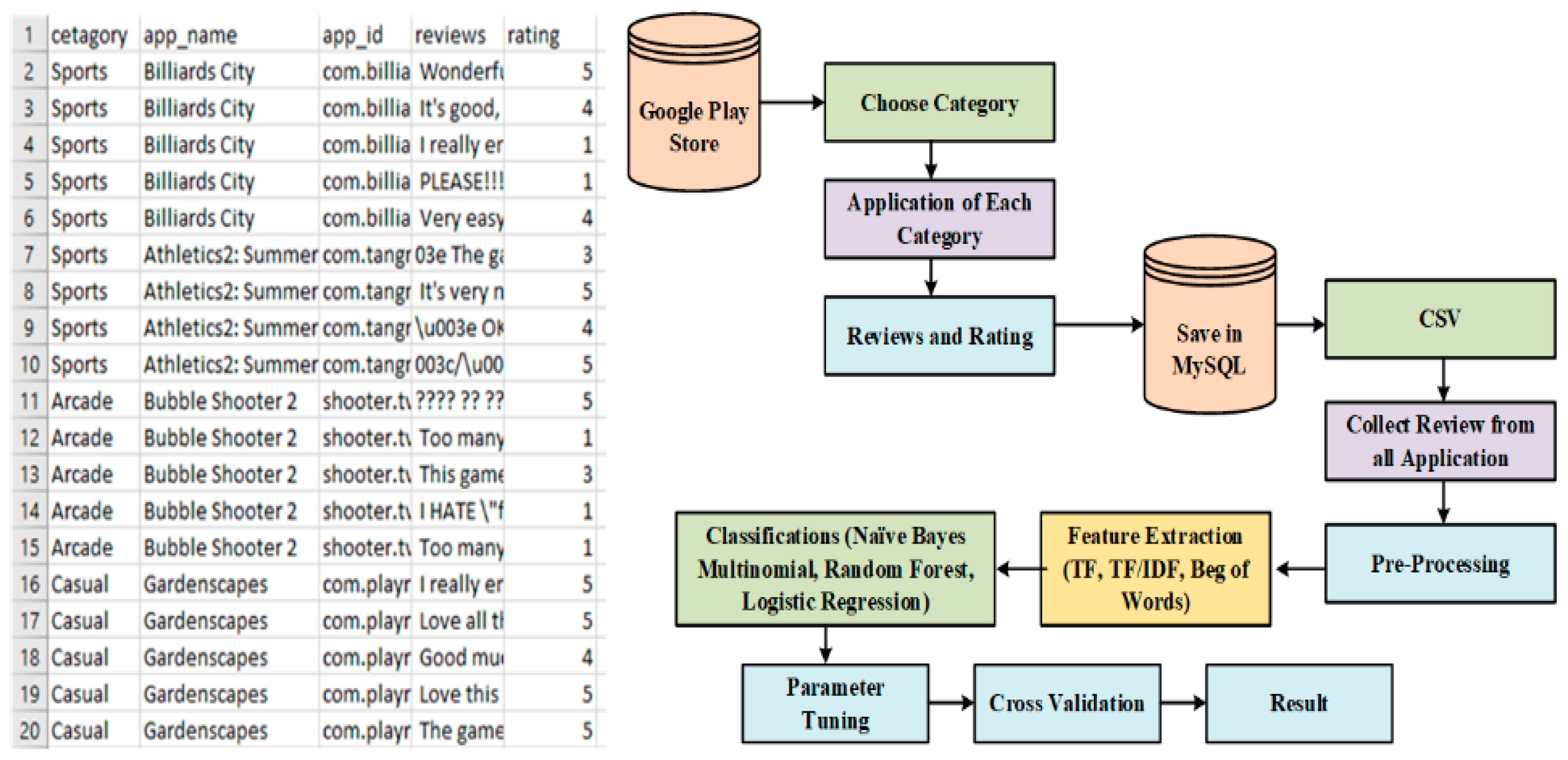

3.1. Data Collection Description

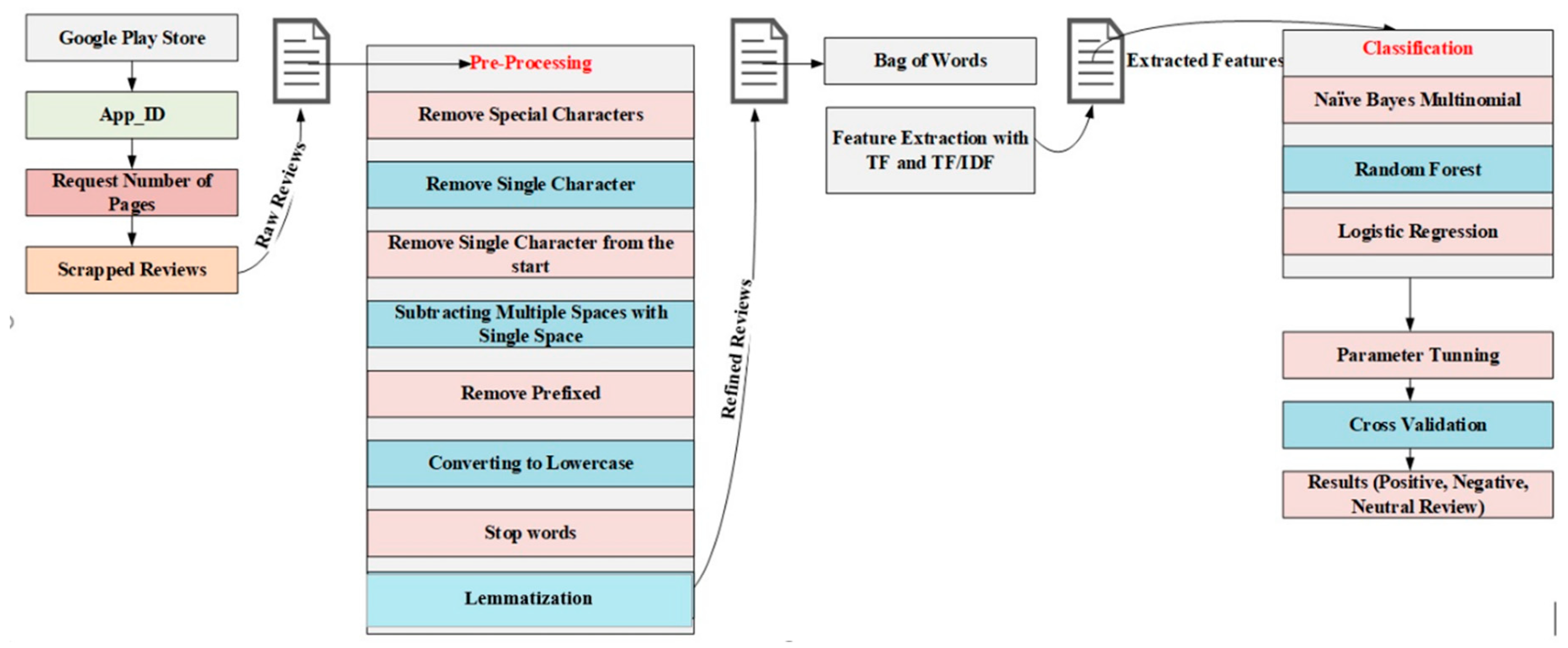

3.2. Methodology

3.3. Supervised Machine Learning Models

3.4. Classifier Used for Reviews

3.4.1. Logistic Regression
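For multi-class review labels, logistic regression is usually applied in its multinomial (softmax) form: each class c gets a linear score w_c · x + b_c, and p(c | x) = exp(w_c · x + b_c) / Σ_k exp(w_k · x + b_k). The paper's exact configuration is not given in this section, so the following is only a minimal sketch, assuming bag-of-words count features and plain stochastic gradient descent on the cross-entropy loss. The class name `SoftmaxRegression`, the learning rate, the epoch count and the toy reviews are hypothetical; in practice a library implementation such as scikit-learn's `LogisticRegression` would be used.

```python
import math
from collections import Counter

def softmax(scores):
    """Turn a dict of class scores into probabilities (shifted by the max for stability)."""
    top = max(scores.values())
    exps = {c: math.exp(s - top) for c, s in scores.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}

class SoftmaxRegression:
    """Multinomial logistic regression on bag-of-words counts, trained with plain SGD."""

    def __init__(self, lr=0.1, epochs=50):
        self.lr = lr
        self.epochs = epochs

    def _scores(self, feats):
        # Linear score per class: bias plus word weights times word counts.
        return {c: self.b[c] + sum(self.w[c].get(t, 0.0) * v for t, v in feats.items())
                for c in self.classes}

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.w = {c: {} for c in self.classes}
        self.b = {c: 0.0 for c in self.classes}
        data = [(Counter(d.split()), y) for d, y in zip(docs, labels)]
        for _ in range(self.epochs):
            for feats, y in data:
                probs = softmax(self._scores(feats))
                for c in self.classes:
                    # Gradient of cross-entropy w.r.t. the class-c score: p_c - 1[c == y].
                    g = probs[c] - (1.0 if c == y else 0.0)
                    self.b[c] -= self.lr * g
                    for t, v in feats.items():
                        self.w[c][t] = self.w[c].get(t, 0.0) - self.lr * g * v
        return self

    def predict_proba(self, doc):
        return softmax(self._scores(Counter(doc.split())))

    def predict(self, doc):
        probs = self.predict_proba(doc)
        return max(probs, key=probs.get)

docs = ["great fun game", "love this app", "bad crash bug",
        "crash again bad", "okay average app", "average game okay"]
labels = ["positive", "positive", "negative", "negative", "neutral", "neutral"]
model = SoftmaxRegression().fit(docs, labels)
print(model.predict("great fun"), model.predict("bad bug"), model.predict("average okay"))
```

The softmax form handles the three (or more) sentiment classes in a single model rather than training one binary classifier per class.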

3.4.2. Random Forest
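A random forest (Breiman, 2001) trains many decision trees, each on a bootstrap sample of the training data and a random subset of the features, and combines them by majority vote. The sketch below illustrates only those two ideas under a deliberate simplification: every tree is a depth-1 tree (a decision stump) that splits on the presence or absence of one word, chosen by weighted Gini impurity. It is not the paper's implementation and not a full random forest (real forests grow deep trees, as scikit-learn's `RandomForestClassifier` does); all function names, the number of trees, the seed and the toy reviews are hypothetical.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, features):
    """Pick the word whose presence/absence split has the lowest weighted Gini impurity.

    Returns (word, label_if_present, label_if_absent); word is None when no split is possible.
    """
    best = None
    for f in features:
        left = [y for r, y in zip(rows, labels) if f in r]
        right = [y for r, y in zip(rows, labels) if f not in r]
        if not left or not right:
            continue
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if best is None or score < best[0]:
            best = (score, f, majority(left), majority(right))
    if best is None:
        label = majority(labels)
        return (None, label, label)
    return best[1:]

def fit_forest(docs, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    rows = [set(d.split()) for d in docs]
    vocab = sorted(set().union(*rows))
    k = max(1, round(len(vocab) ** 0.5))  # about sqrt(|V|) candidate words per tree
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # bootstrap sample with replacement
        candidates = rng.sample(vocab, k)               # random feature subset
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], candidates))
    return forest

def predict_forest(forest, doc):
    words = set(doc.split())
    votes = Counter(present if w in words else absent for w, present, absent in forest)
    return votes.most_common(1)[0][0]

docs = ["good great love", "love good great", "great love good",
        "bad awful hate", "hate bad awful", "awful hate bad"]
labels = ["positive"] * 3 + ["negative"] * 3
forest = fit_forest(docs, labels)
print(predict_forest(forest, "good great"), predict_forest(forest, "hate awful"))
```

Bagging and random feature subsets make the individual trees differ from one another, so their majority vote is more stable than any single tree.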

3.4.3. Naïve Bayes Multinomial
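Multinomial naïve Bayes scores each class c by log P(c) + Σ_w log P(w | c), where the word probabilities are estimated from counts with add-α (Laplace) smoothing: P(w | c) = (count(w, c) + α) / (Σ_w' count(w', c) + α|V|). A minimal sketch, assuming whitespace tokenization and α = 1 (the default `alpha=1.0` of scikit-learn's `MultinomialNB`); the class name and the toy reviews are hypothetical, not the authors' code.

```python
import math
from collections import Counter, defaultdict

class MultinomialNaiveBayes:
    """Multinomial naive Bayes over word counts with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)      # documents per class, for the prior
        self.word_counts = defaultdict(Counter)  # class -> word -> count
        for doc, y in zip(docs, labels):
            self.word_counts[y].update(doc.split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.n_docs = len(labels)
        return self

    def joint_log_likelihood(self, doc, c):
        """log P(c) + sum of log P(w | c) over the review's words (unnormalized log posterior)."""
        denom = sum(self.word_counts[c].values()) + self.alpha * len(self.vocab)
        score = math.log(self.class_counts[c] / self.n_docs)
        for w in doc.split():
            if w in self.vocab:  # words never seen in training are skipped
                score += math.log((self.word_counts[c][w] + self.alpha) / denom)
        return score

    def predict(self, doc):
        return max(self.class_counts, key=lambda c: self.joint_log_likelihood(doc, c))

docs = ["great fun game", "love this app", "bad crash bug",
        "crash again bad", "okay average app", "average game okay"]
labels = ["positive", "positive", "negative", "negative", "neutral", "neutral"]
model = MultinomialNaiveBayes().fit(docs, labels)
print(model.predict("great fun"), model.predict("bad bug"))
```

Smoothing keeps a word that never appeared with a class from forcing that class's probability to zero.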

4. Results and Discussion

4.1. Analytical Measurement and Visualization after Preprocessing

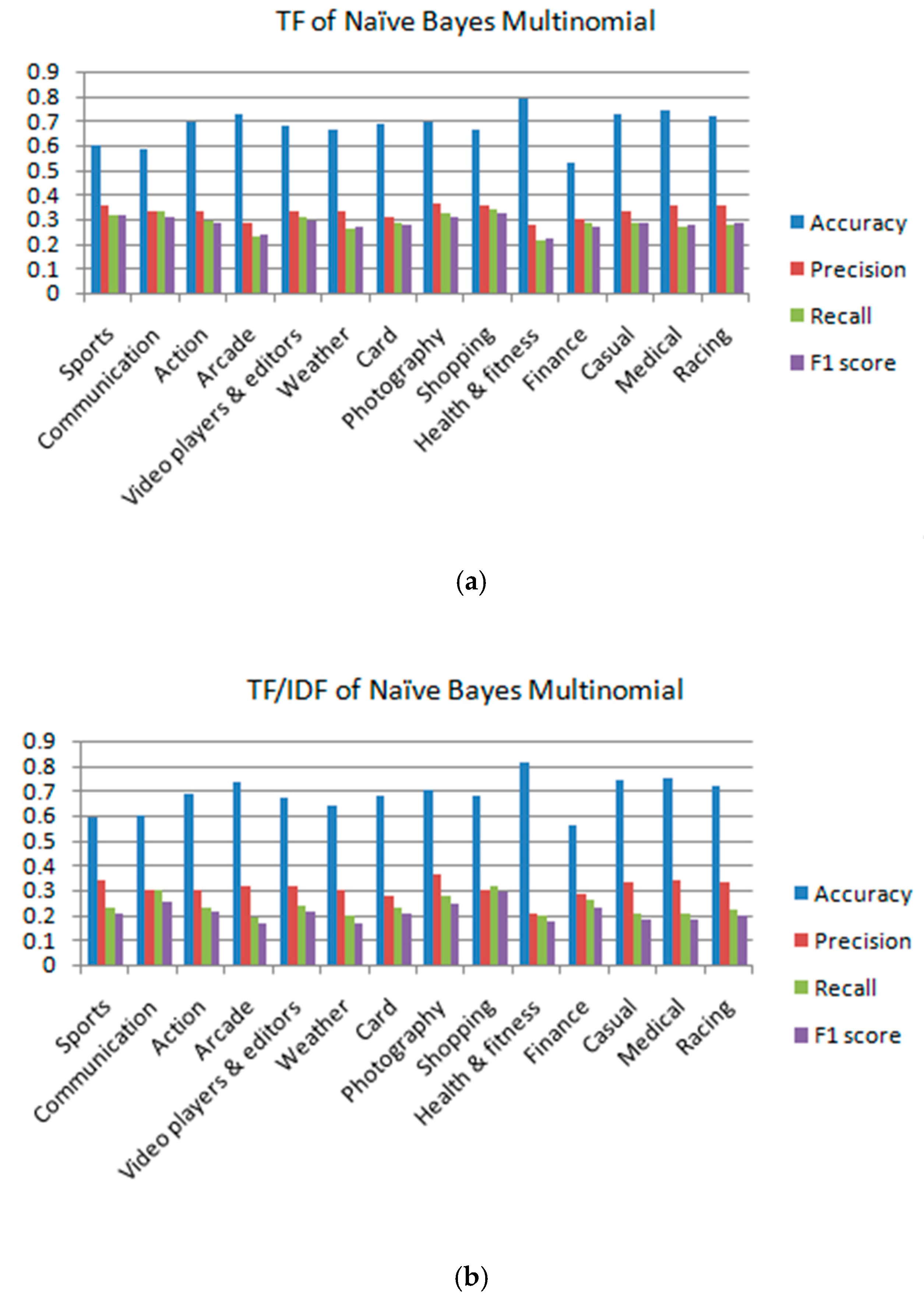

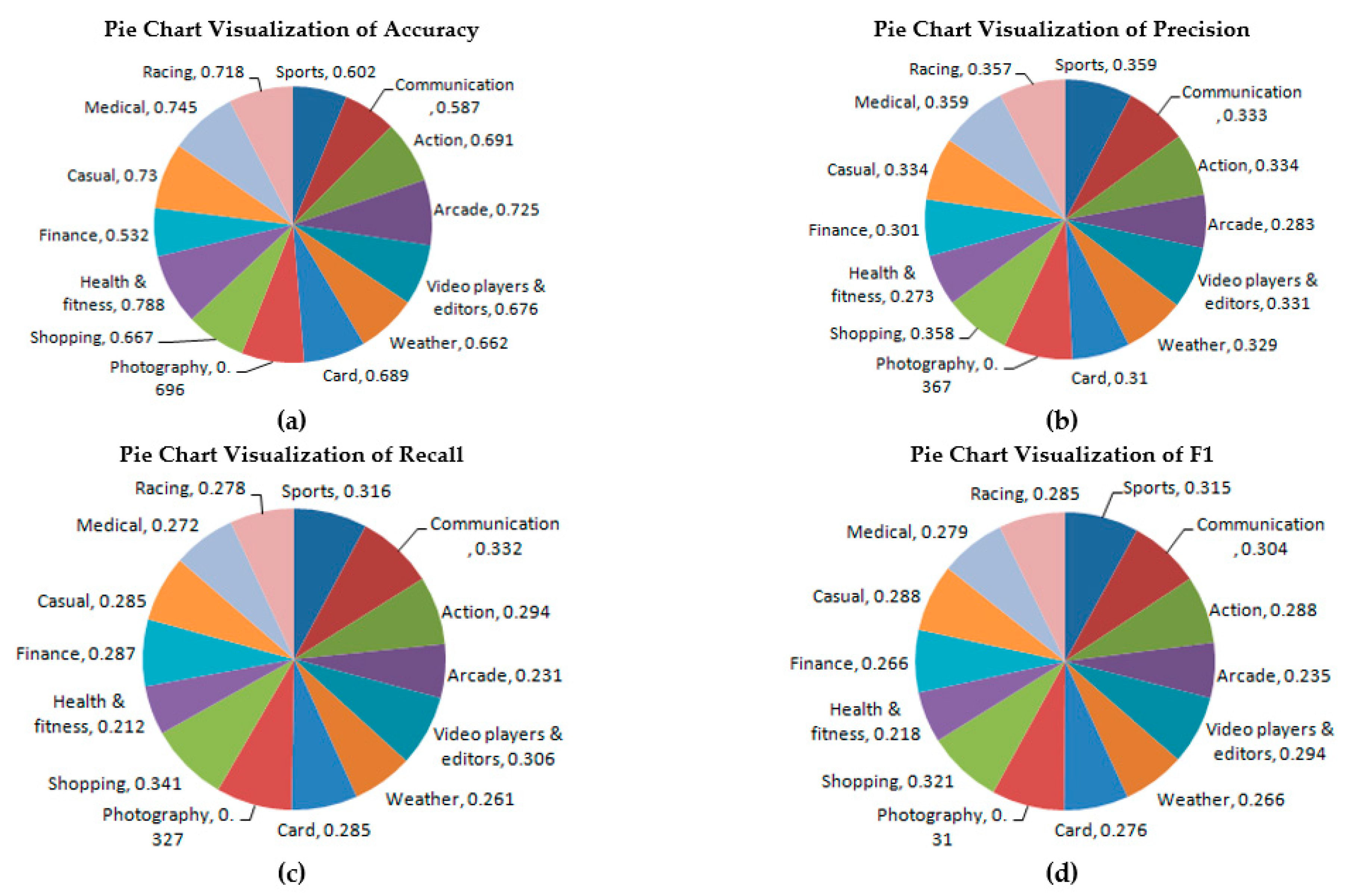

4.1.1. Naïve Bayes Multinomial

4.1.2. Random Forest Algorithm

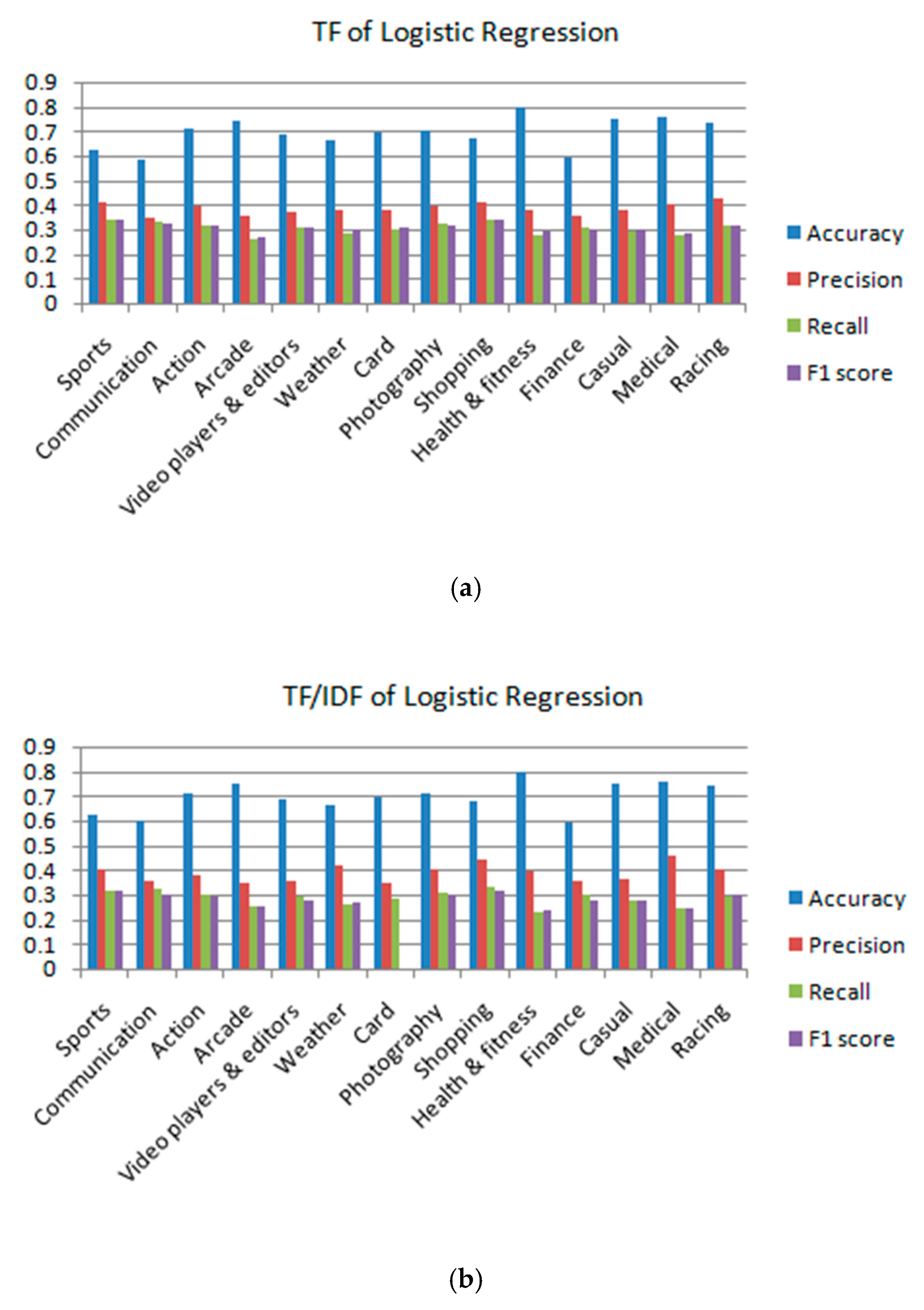

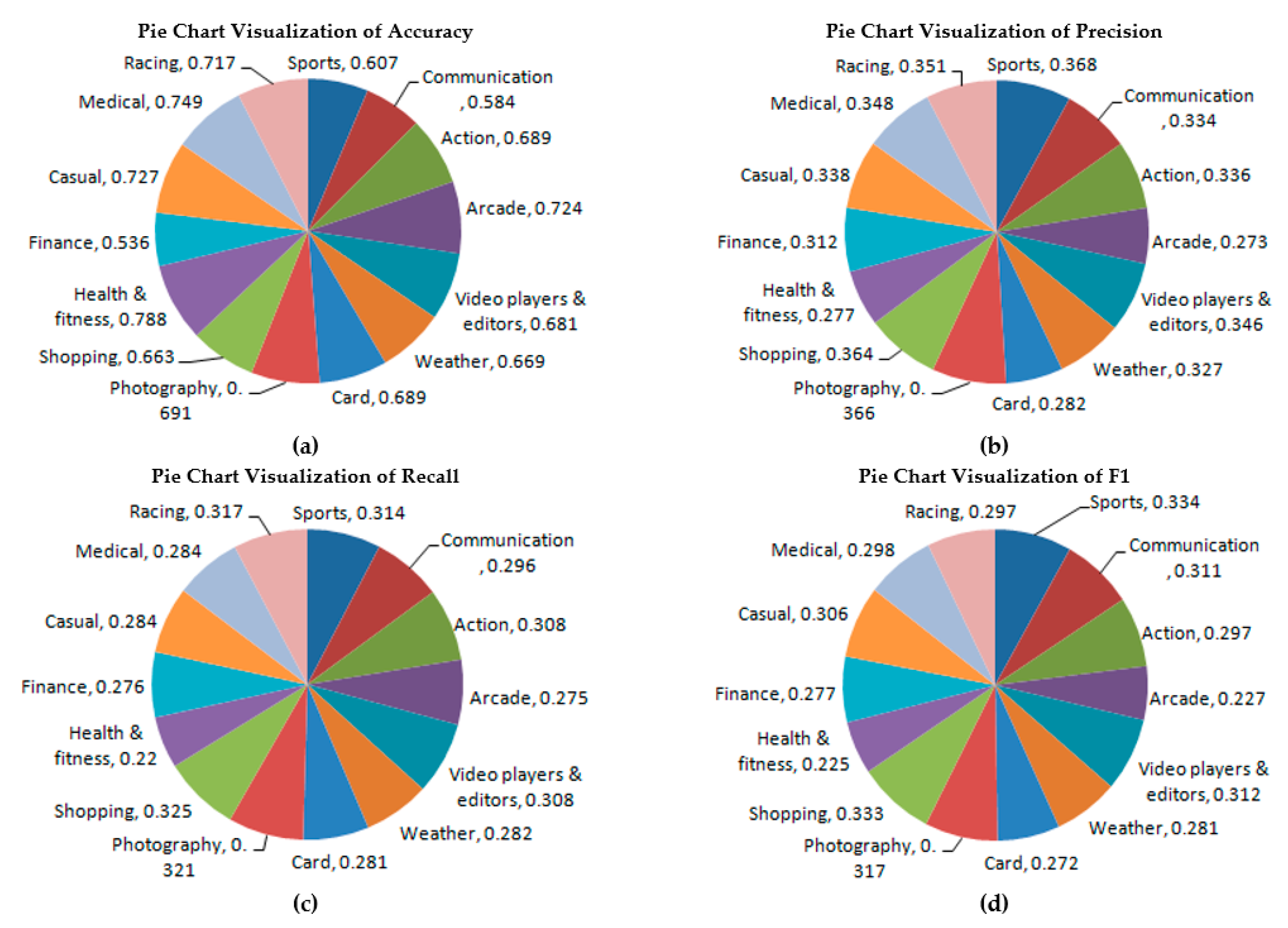

4.1.3. Logistic Regression Algorithm

4.2. Different Machine-Learning Algorithm Comparison after Preprocessing

4.3. Analytical Measurement and Visualization without Preprocessing of Dataset

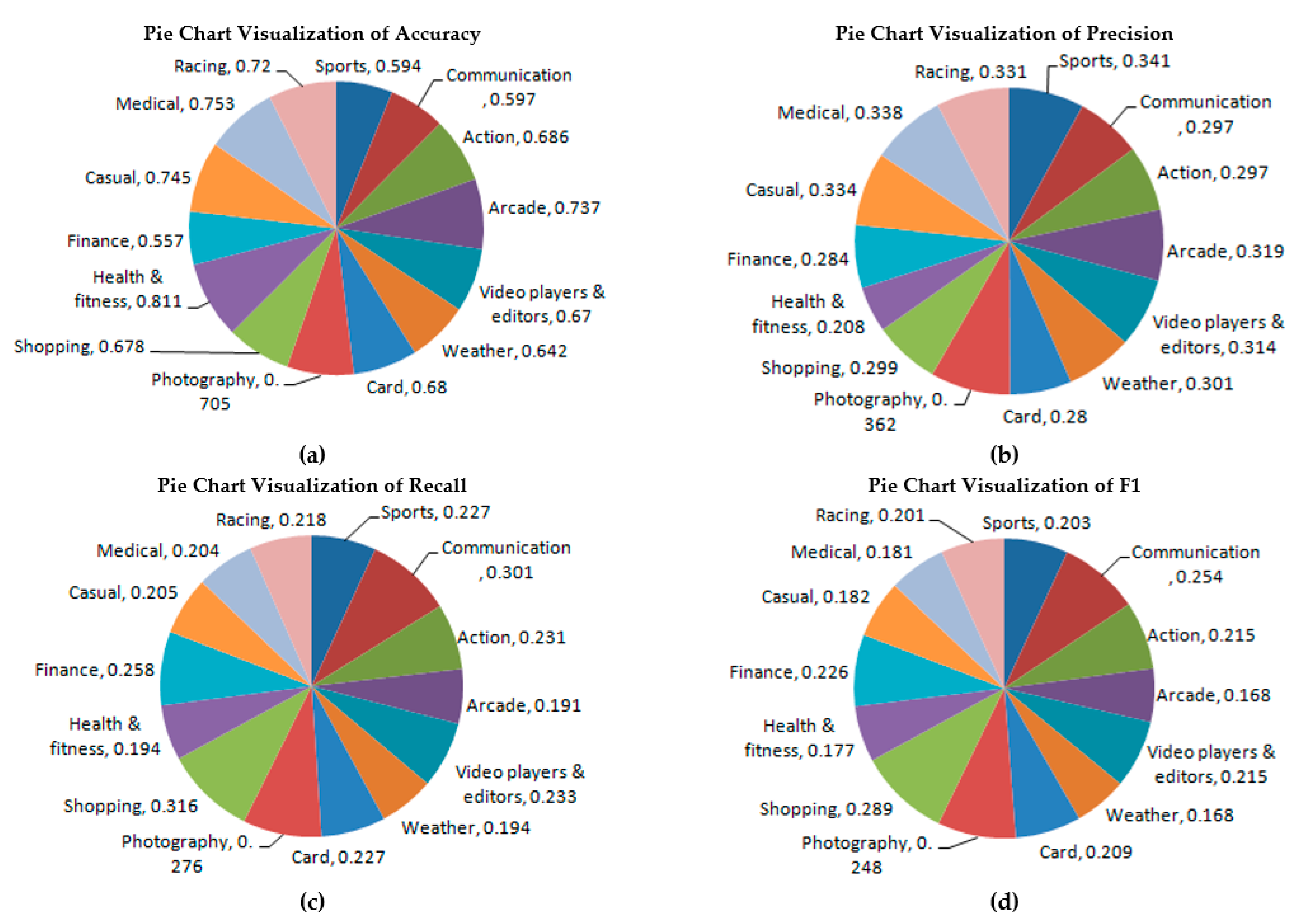

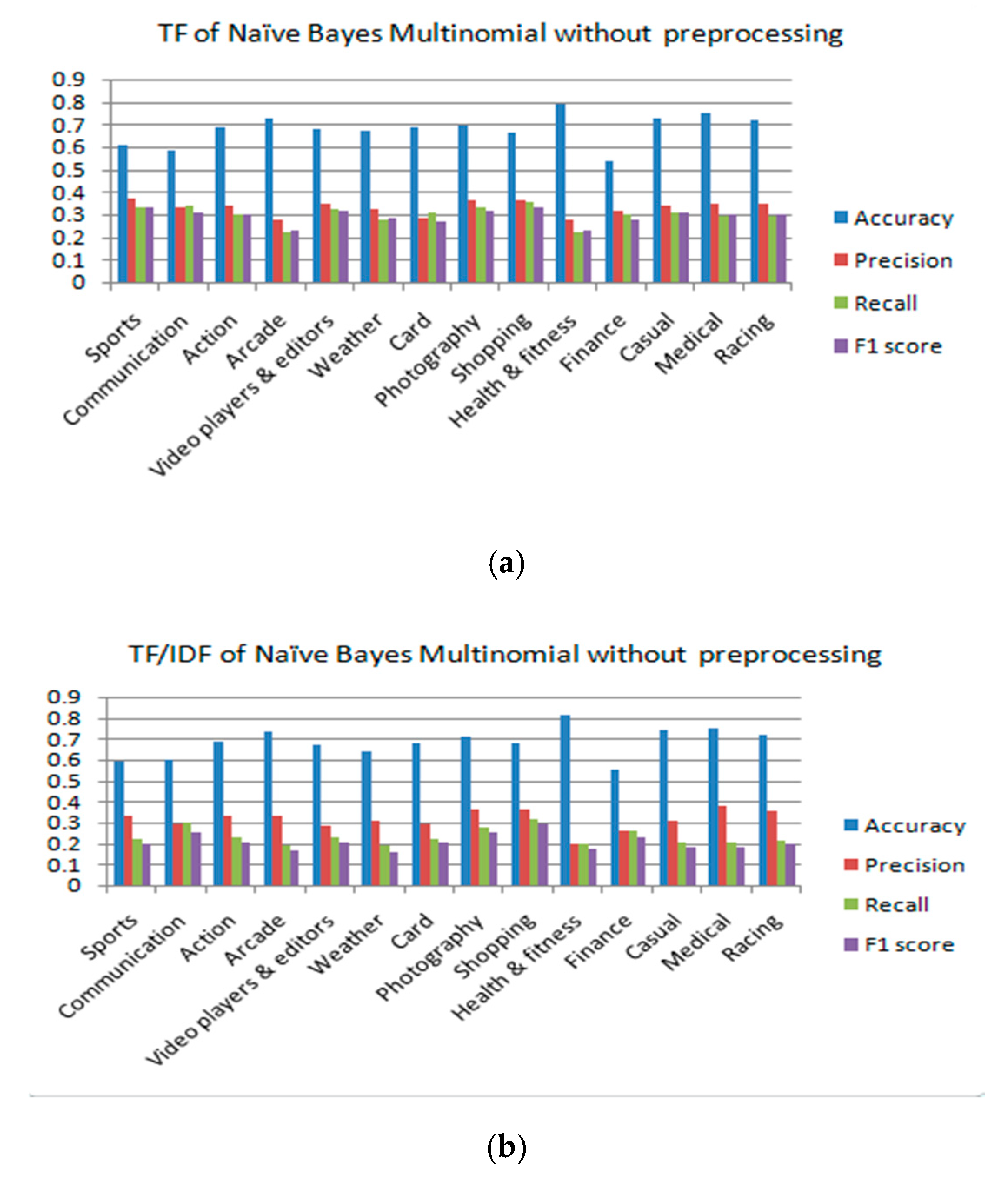

4.3.1. Naïve Bayes Multinomial

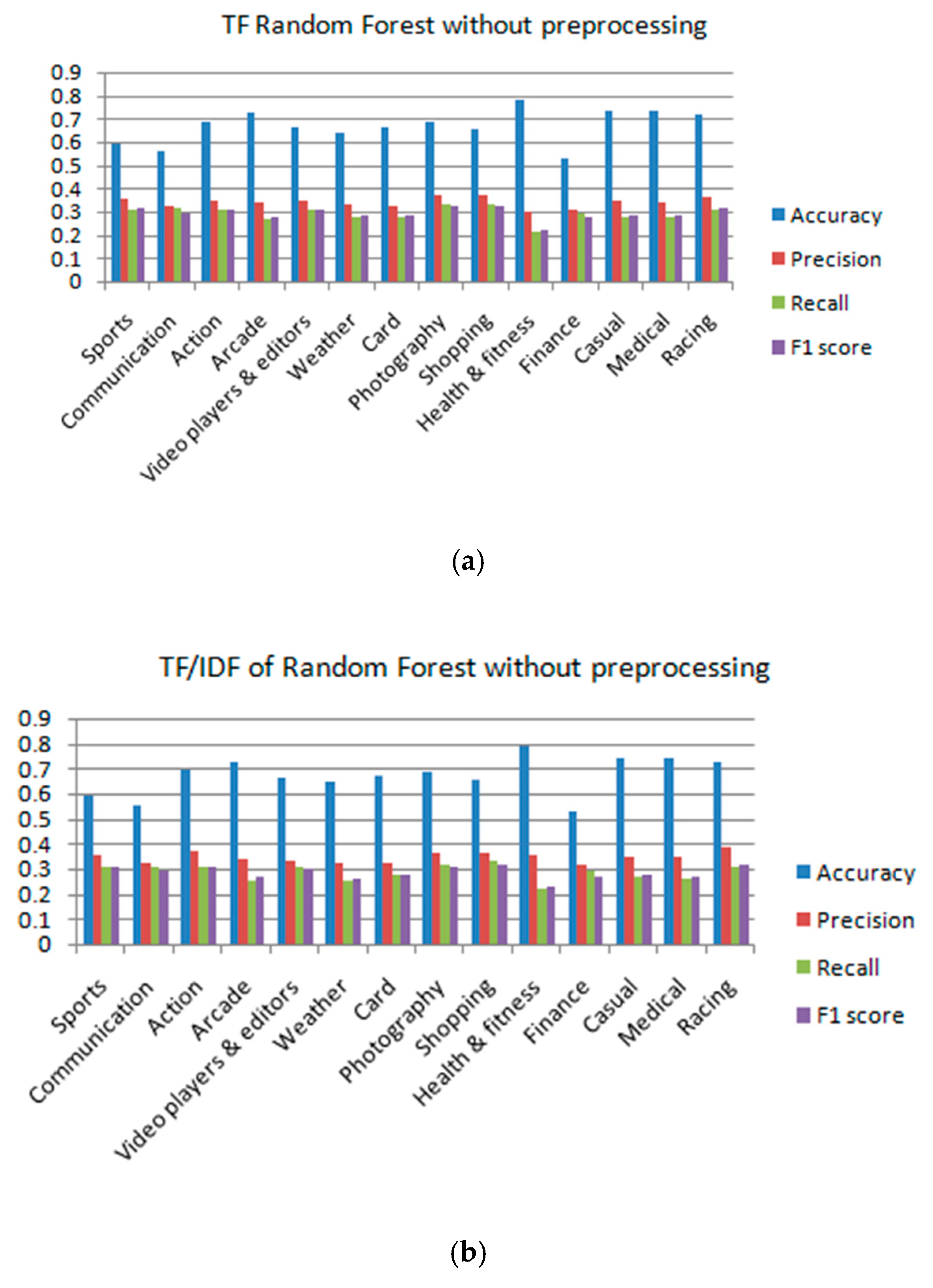

4.3.2. Random Forest Algorithm

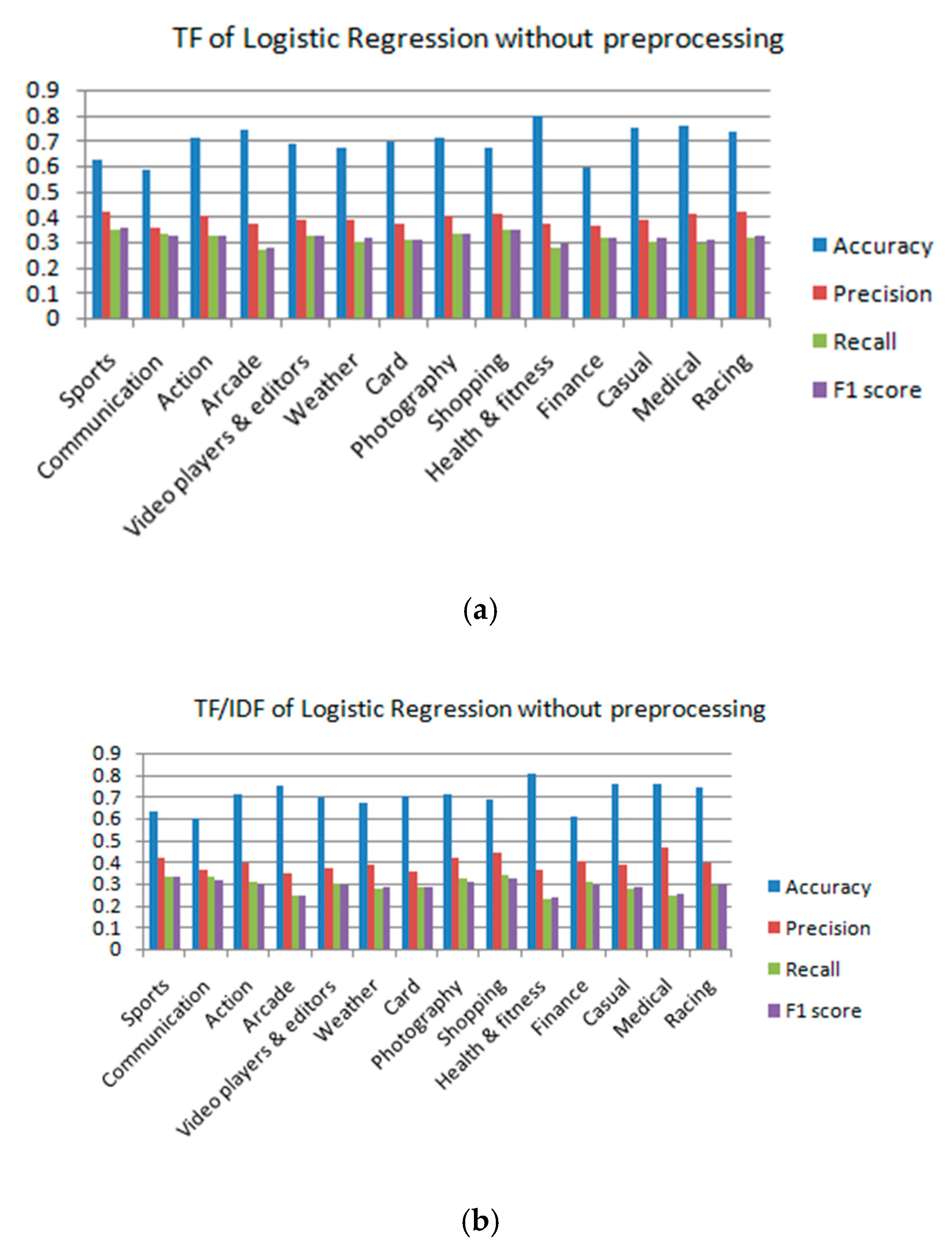

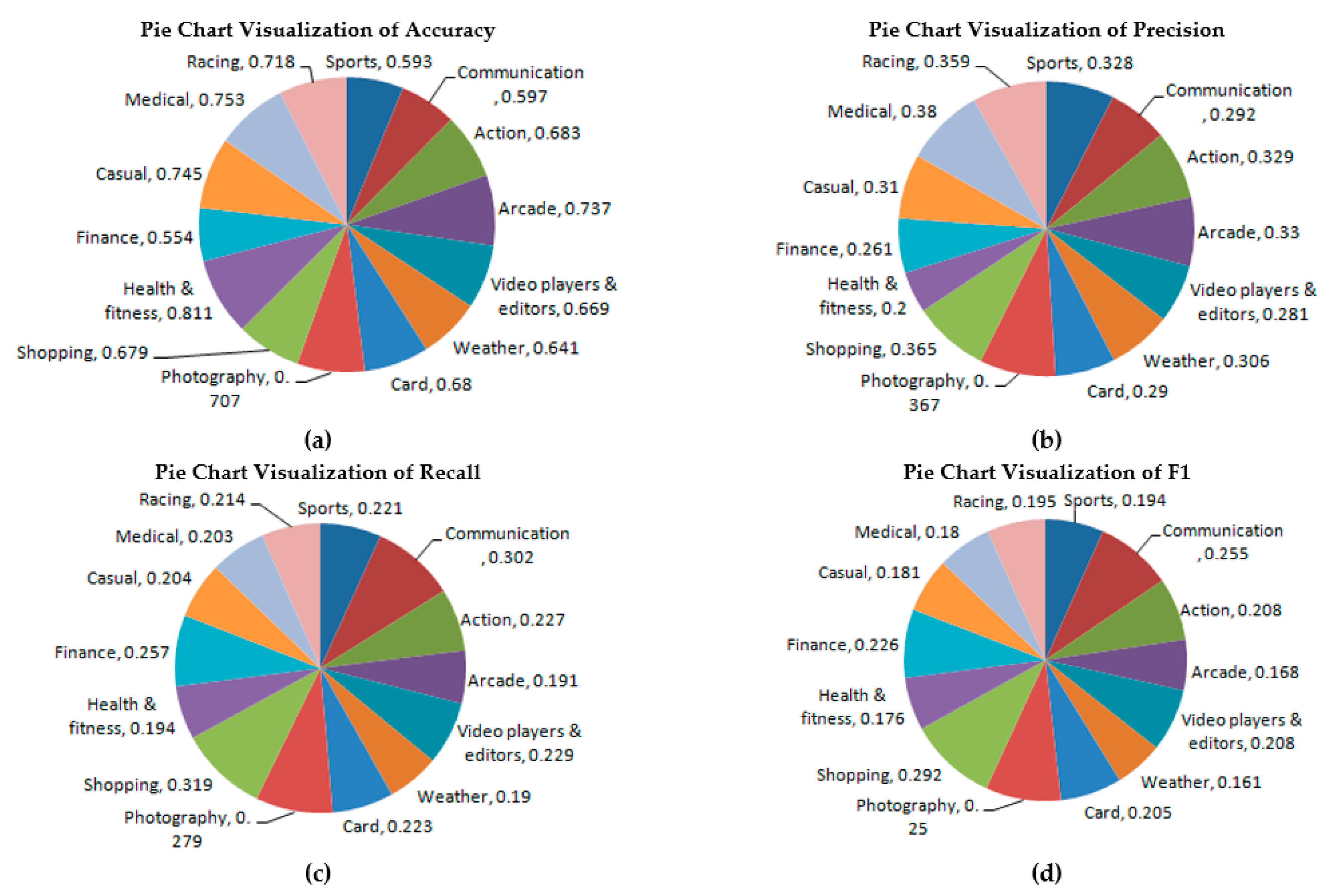

4.3.3. Logistic Regression Algorithm

4.4. Different Machine-Learning Algorithm Comparison without Preprocessing of Dataset

5. Semantic Analysis of Google Play Store Applications Reviews Using Logistic Regression Algorithm

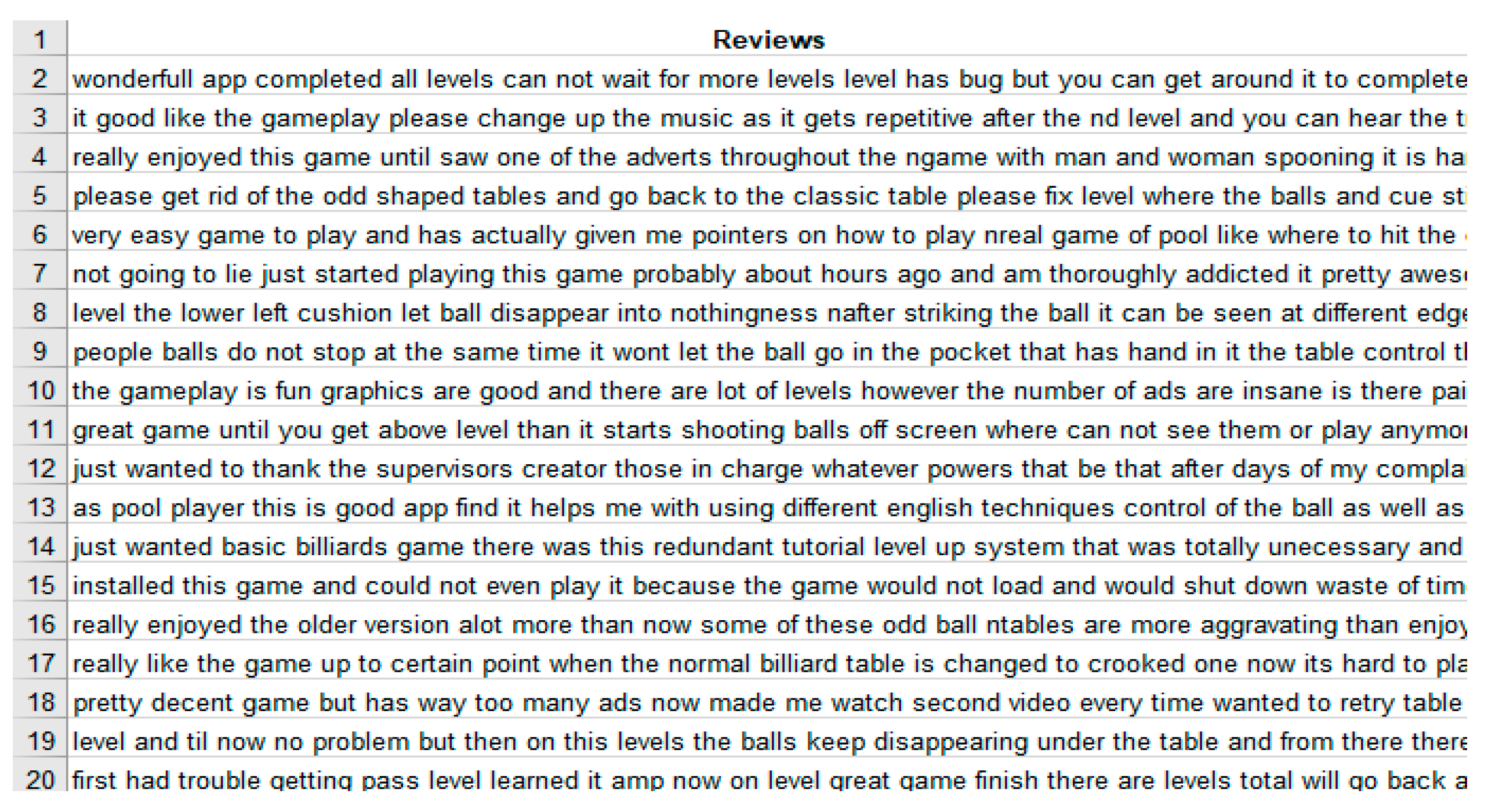

5.1. Steps for Data Preparation and Cleaning of Reviews

- HTML Decoding: convert HTML entities such as “&amp;” and “&quot;” that appear at the start, end or elsewhere in the text field back into plain text.

- ‘@’ Mention: a mention (“@” followed by a user name) carries information that must be dealt with during cleaning.

- URL Links: remove all URLs that appear in reviews.

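- UTF-8 BOM (Byte Order Mark): character patterns such as “\xef\xbf\xbd” often appear in scraped text. Strictly speaking, this sequence is the UTF-8 encoding of the Unicode replacement character (U+FFFD); the UTF-8 BOM is the byte sequence EF BB BF, which allows a reader to identify a file as UTF-8 encoded. Both kinds of debris should be removed.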

- Hashtag/Numbers: hashtag text can carry useful information about the comment, so removing the whole token together with the “#” would be too aggressive. Instead, the “#” symbol, numbers and other special characters are stripped while the word itself is kept.

- Negation Handling: negation words (e.g., “not”, “isn’t”) reverse the polarity of a review, so they should not be removed during cleaning.

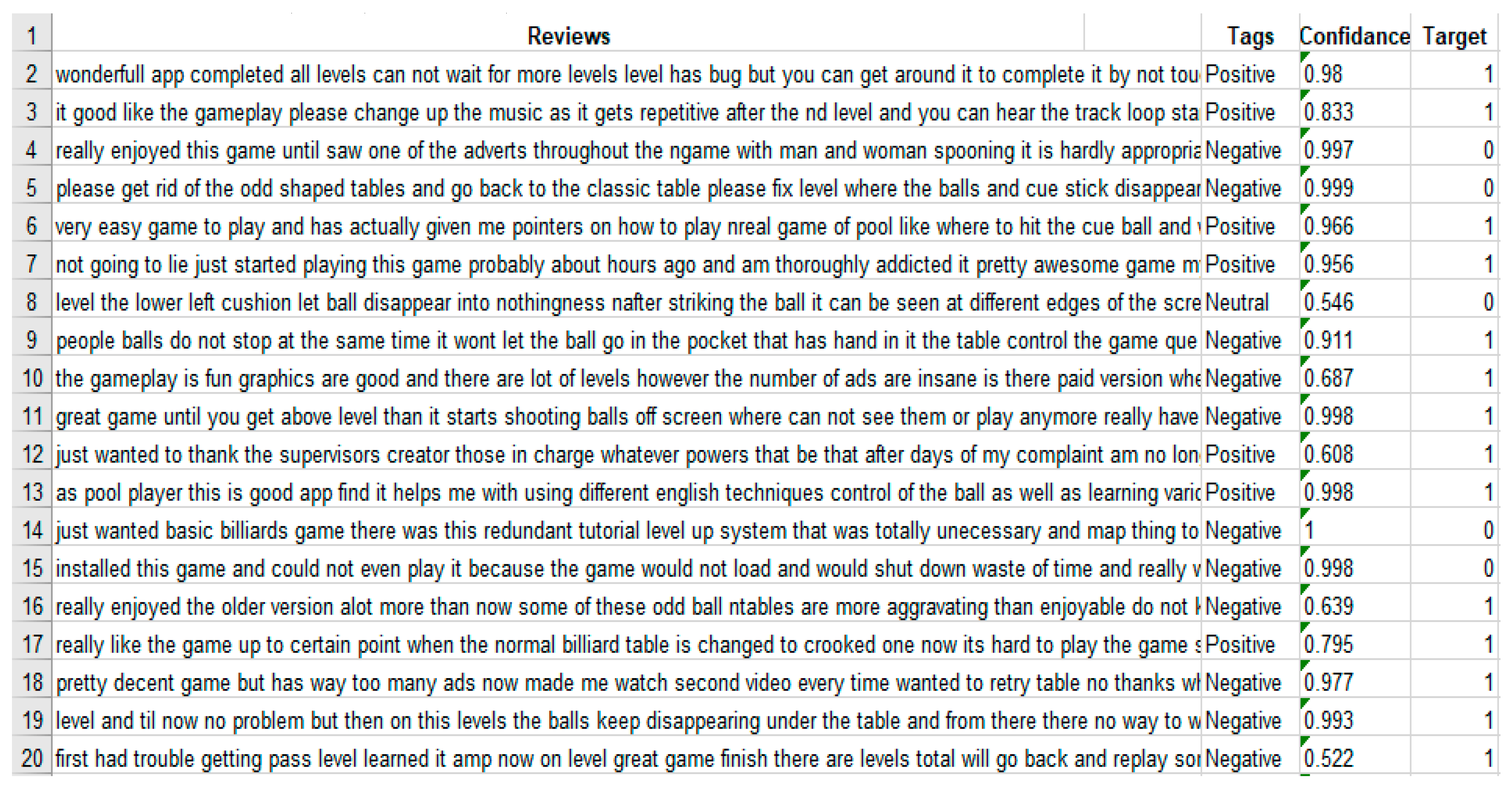

- Tokenizing and Joining: split each comment into tokens and then join them again with single spaces. After these cleaning rules are applied, the reviews take the cleaned form shown in Figure 14.
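The cleaning rules above can be sketched as a single function built on Python's standard `html` and `re` modules. This is an illustrative version, not the authors' code: the regular expressions, the order of the steps and the small contraction table `NEGATIONS` (a hypothetical subset, used so that negations survive the later removal of non-letter characters) are assumptions, and mentions are assumed to start with “@”.

```python
import html
import re

# Hypothetical, illustrative subset of contractions; expanding them keeps the
# negation once apostrophes are stripped with the other non-letter characters.
NEGATIONS = {
    "isn't": "is not", "aren't": "are not", "wasn't": "was not",
    "don't": "do not", "doesn't": "does not", "didn't": "did not",
    "can't": "can not", "won't": "will not", "couldn't": "could not",
}
NEGATION_RE = re.compile(r"\b(" + "|".join(map(re.escape, NEGATIONS)) + r")\b")
MENTION_RE = re.compile(r"@\w+")
URL_RE = re.compile(r"https?://\S+|www\.\S+")

def clean_review(text: str) -> str:
    text = html.unescape(text)                                     # HTML decoding: "&amp;" -> "&"
    text = text.replace("\ufeff", "").replace("\ufffd", "")        # BOM and U+FFFD replacement chars
    text = MENTION_RE.sub(" ", text)                               # '@' mentions
    text = URL_RE.sub(" ", text)                                   # URL links
    text = text.lower().replace("\u2019", "'")                     # lowercase; curly to straight apostrophe
    text = NEGATION_RE.sub(lambda m: NEGATIONS[m.group(1)], text)  # negation handling
    text = re.sub(r"[^a-z]", " ", text)                            # drop '#', digits, punctuation; keep hashtag words
    return " ".join(text.split())                                  # tokenizing and joining

print(clean_review("Can't stop playing!!! &amp; love it #bestgame 10/10 https://example.com @dev"))
# can not stop playing love it bestgame
```

Expanding contractions before stripping punctuation matters: otherwise “can't” would become the two tokens “can” and “t”, and the negation would be lost.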

5.2. Finding Null Entries in Reviews

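Output of `DataFrame.info()` for the review DataFrame (`<class 'pandas.core.frame.DataFrame'>`, Int64Index: 400000 entries, 0 to 399999; memory usage: 9.2+ MB):

| Column | Non-null count | Dtype |
|---|---|---|
| text | 399208 | object |
| target | 400000 | int64 |

The `text` column therefore contains 792 null entries (400,000 minus 399,208).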
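Null reviews like these can be located and dropped with pandas before vectorization. The following is a minimal sketch on a hypothetical toy DataFrame with the same two columns; the rows and target values are invented for illustration, and the index line printed by `df.info()` depends on the index type and the pandas version.

```python
import pandas as pd

# Toy stand-in for the review DataFrame (hypothetical rows; same two columns).
df = pd.DataFrame({
    "text": ["great app", None, "crashes on start", None, "love it"],
    "target": [1, 0, 0, 1, 1],
})

df.info()                                               # per-column non-null counts and dtypes
null_counts = df.isnull().sum()                         # missing values per column
print(null_counts["text"])                              # number of reviews with no text
df = df.dropna(subset=["text"]).reset_index(drop=True)  # drop them and renumber the rows
```

Dropping only on `subset=["text"]` keeps rows whose other columns are complete, since only the review text is needed as input to the classifiers.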

5.3. Negative and Positive Words Dictionary
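One simple way to build dictionaries of positive and negative words is to count each word in positive and in negative reviews and rank words by the difference between the two counts. This is a hypothetical sketch, not the authors' procedure; the function name, the `top_n` parameter and the toy reviews are assumptions.

```python
from collections import Counter

def polarity_dictionary(docs, labels, top_n=3):
    """Rank words by (count in positive reviews) minus (count in negative reviews)."""
    pos, neg = Counter(), Counter()
    for doc, y in zip(docs, labels):
        if y == "positive":
            pos.update(doc.split())
        elif y == "negative":
            neg.update(doc.split())
    margin = {w: pos[w] - neg[w] for w in set(pos) | set(neg)}
    # Sort by margin, breaking ties alphabetically so the output is deterministic.
    positive = sorted((w for w, m in margin.items() if m > 0), key=lambda w: (-margin[w], w))
    negative = sorted((w for w, m in margin.items() if m < 0), key=lambda w: (margin[w], w))
    return positive[:top_n], negative[:top_n]

docs = ["love this game", "love the graphics", "game crashes",
        "crashes and lags", "great game"]
labels = ["positive", "positive", "negative", "negative", "positive"]
positive_words, negative_words = polarity_dictionary(docs, labels)
print(positive_words, negative_words)
```

Here the function word “and” lands in the negative list, which shows why stop words are usually removed before such dictionaries are built.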

5.4. Semantic Analysis of Google Play Store Applications Reviews Using Logistic Regression Algorithm

6. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Action (App Name) | Reviews | Card (App Name) | Reviews | Arcade (App Name) | Reviews |
|---|---|---|---|---|---|
| Bush Rush | 4001 | 29 Card Game | 4001 | Angry Birds Rio | 4481 |
| Gun Shot Fire War | 3001 | Blackjack 21 | 1601 | Bubble Shooter 2 | 4001 |
| Metal Soldiers | 4001 | Blackjack | 4481 | Jewels Legend | 4001 |
| N.O.V.A Legacy | 4364 | Callbreak Multiplayer | 4001 | Lep World 2 | 3001 |
| Real Gangster Crime | 4001 | Card Game 29 | 3066 | Snow Bros | 3001 |
| Shadow Fight 2 | 4481 | Card Words Kingdom | 4472 | Sonic Dash | 4481 |
| Sniper 3D Gun Shooter | 4481 | Gin Rummy | 3616 | Space Shooter | 4401 |
| Talking Tom Gold Run | 4001 | Spider Solitaire | 2801 | Subway Princess Runner | 3001 |
| Temple Run 2 | 3001 | Teen Patti Gold | 4481 | Subway Surfers | 4481 |
| Warship Battle | 4001 | World Series of Poker | 4001 | Super Jabber Jump 3 | 2912 |
| Zombie Frontier 3 | 4001 | | | | |
| Zombie Hunter King | 3782 | | | | |

| Communication (App Name) | Reviews | Finance (App Name) | Reviews | Health and Fitness (App Name) | Reviews |
|---|---|---|---|---|---|
| Dolphin Browser | 3001 | bKash | 4481 | Home Workout - No Equipment | 4481 |
| Firefox Browser | 3001 | CAIXA | 1220 | Home Workout for Men | 1163 |
| Google Duo | 3001 | CAPTETEB | 697 | Lose Belly Fat In 30 Days | 4481 |
| Hangout Dialer | 3001 | FNB Banking App | 2201 | Lose It! - Calorie Counter | 4001 |
| KakaoTalk | 3001 | Garanti Mobile Banking | 859 | Lose Weight Fat In 30 Days | 4481 |
| LINE | 3001 | Monobank | 605 | Nike+Run Club | 1521 |
| Messenger Talk | 3001 | MSN Money-Stock Quotes & News | 3001 | Seven - 7 Minutes Workout | 4425 |
| Opera Mini Browser | 3001 | Nubank | 1613 | Six Pack In 30 Days | 3801 |
| UC Browser Mini | 3001 | PhonePe-UPI Payment | 4001 | Water Drink Reminder | 4481 |
| | 3001 | QIWI Wallet | 1601 | YAZIO Calorie Counter | 1590 |
| | | Yahoo Finance | 3001 | | |
| | | YapiKredi Mobile | 1952 | | |
| | | Stock | 3001 | | |

| Photography (App Name) | Reviews | Shopping (App Name) | Reviews | Sports (App Name) | Reviews |
|---|---|---|---|---|---|
| B612 - Beauty & Filter Camera | 4001 | AliExpress | 4481 | Billiards City | 4481 |
| BeautyCam | 4001 | Amazon for Tablets | 4481 | Real Cricket 18 | 3001 |
| BeautyPlus | 4001 | Bikroy | 4481 | Real Football | 3001 |
| Candy Camera | 4481 | Club Factory | 4001 | Score! Hero | 3001 |
| Google Photos | 4481 | Digikala | 4001 | Table Tennis 3D | 3001 |
| HD Camera | 4001 | Divar | 4001 | Tennis | 3001 |
| Motorola Camera | 4001 | Flipkart Online Shopping App | 4481 | Volleyball Champions 3D | 3001 |
| Music Video Maker | 4001 | Lazada | 4481 | World of Cricket | 4481 |
| Sweet Selfie | 4481 | Myntra Online Shopping App | 4481 | Pool Billiards Pro | 4001 |
| Sweet Snap | 4001 | ShopClues | 4481 | Snooker Star | 2801 |

| Video Players & Editors (App Name) | Reviews | Weather (App Name) | Reviews | Casual (App Name) | Reviews |
|---|---|---|---|---|---|
| KineMaster | 1441 | NOAA Weather Radar & Alerts | 3601 | Angry Birds POP | 4481 |
| Media Player | 2713 | The Weather Channel | 4001 | BLUK | 3281 |
| MX Player | 3001 | Transparent Weather & Clock | 1441 | Boards King | 4481 |
| Power Director Video Editor App | 1641 | Weather & Clock Widget for Android | 4481 | Bubble Shooter | 4481 |
| Video Player All Format | 1041 | Weather & Radar - Free | 3601 | Candy Crush Saga | 4481 |
| Video Player KM | 3001 | Weather Forecast | 1681 | Farm Heroes Super Saga | 4481 |
| Video Show | 1321 | Weather Live Free | 1721 | Hay Day | 4481 |
| VivaVideo | 4190 | Weather XL PRO | 1401 | Minion Rush | 4481 |
| You Cut App | 1241 | Yahoo Weather | 4361 | My Talking Tom | 4481 |
| YouTube | 1201 | Yandex Weather | 1045 | Pou | 4481 |
| | | | | Shopping Mall Girl | 4481 |
| | | | | Gardenscapes | 4481 |

| Medical (App Name) | Reviews | Racing (App Name) | Reviews |
|---|---|---|---|
| Anatomy Learning | 2401 | Asphalt Nitro | 4481 |
| Diseases & Dictionary | 3201 | Beach Buggy Racing | 4481 |
| Disorder & Diseases Dictionary | 2401 | Bike Mayhem Free | 4481 |
| Drugs.com | 2401 | Bike Stunt Master | 2745 |
| Epocrates | 1001 | Dr. Driving 2 | 4481 |
| Medical Image | 1423 | Extreme Car Driving | 4481 |
| Medical Terminology | 1448 | Hill Climb Racing 2 | 3801 |
| Pharmapedia Pakistan | 4134 | Racing Fever | 4481 |
| Prognosis | 2401 | Racing in Car 2 | 4481 |
| WikiMed | 3201 | Trial Xtreme 4 | 4481 |

| Application Category | Naïve Bayes Accuracy | Random Forest Accuracy | Logistic Regression Accuracy | Naïve Bayes Precision | Random Forest Precision | Logistic Regression Precision | Naïve Bayes Recall | Random Forest Recall | Logistic Regression Recall | Naïve Bayes F1 Score | Random Forest F1 Score | Logistic Regression F1 Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sports | 0.602 | 0.585 | 0.622 | 0.359 | 0.34 | 0.414 | 0.316 | 0.312 | 0.343 | 0.315 | 0.308 | 0.343 |
| Communication | 0.587 | 0.544 | 0.585 | 0.333 | 0.314 | 0.349 | 0.332 | 0.313 | 0.329 | 0.304 | 0.294 | 0.32 |
| Action | 0.691 | 0.683 | 0.707 | 0.334 | 0.338 | 0.395 | 0.294 | 0.31 | 0.312 | 0.288 | 0.308 | 0.313 |
| Arcade | 0.725 | 0.721 | 0.744 | 0.283 | 0.32 | 0.353 | 0.231 | 0.27 | 0.262 | 0.235 | 0.274 | 0.266 |
| Video players & editors | 0.676 | 0.664 | 0.684 | 0.331 | 0.347 | 0.37 | 0.306 | 0.313 | 0.306 | 0.294 | 0.304 | 0.304 |
| Weather | 0.662 | 0.632 | 0.667 | 0.329 | 0.285 | 0.379 | 0.261 | 0.243 | 0.288 | 0.266 | 0.248 | 0.299 |
| Card | 0.689 | 0.665 | 0.696 | 0.31 | 0.312 | 0.379 | 0.285 | 0.285 | 0.301 | 0.276 | 0.279 | 0.305 |
| Photography | 0.696 | 0.683 | 0.703 | 0.367 | 0.353 | 0.391 | 0.327 | 0.32 | 0.321 | 0.31 | 0.312 | 0.315 |
| Shopping | 0.667 | 0.648 | 0.67 | 0.358 | 0.354 | 0.407 | 0.341 | 0.333 | 0.342 | 0.321 | 0.324 | 0.336 |
| Health & fitness | 0.788 | 0.765 | 0.796 | 0.273 | 0.324 | 0.38 | 0.212 | 0.248 | 0.278 | 0.218 | 0.254 | 0.295 |
| Finance | 0.532 | 0.517 | 0.592 | 0.301 | 0.309 | 0.352 | 0.287 | 0.291 | 0.311 | 0.266 | 0.27 | 0.303 |
| Casual | 0.73 | 0.728 | 0.747 | 0.334 | 0.341 | 0.381 | 0.285 | 0.284 | 0.29 | 0.288 | 0.292 | 0.302 |
| Medical | 0.745 | 0.729 | 0.754 | 0.359 | 0.33 | 0.401 | 0.272 | 0.28 | 0.277 | 0.279 | 0.285 | 0.288 |
| Racing | 0.718 | 0.714 | 0.737 | 0.357 | 0.359 | 0.428 | 0.278 | 0.317 | 0.312 | 0.285 | 0.319 | 0.318 |

| Application Category | Naïve Bayes Accuracy | Random Forest Accuracy | Logistic Regression Accuracy | Naïve Bayes Precision | Random Forest Precision | Logistic Regression Precision | Naïve Bayes Recall | Random Forest Recall | Logistic Regression Recall | Naïve Bayes F1 Score | Random Forest F1 Score | Logistic Regression F1 Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sports | 0.594 | 0.589 | 0.621 | 0.341 | 0.344 | 0.404 | 0.227 | 0.308 | 0.319 | 0.203 | 0.304 | 0.315 |
| Communication | 0.597 | 0.545 | 0.599 | 0.297 | 0.307 | 0.352 | 0.301 | 0.312 | 0.327 | 0.254 | 0.288 | 0.301 |
| Action | 0.686 | 0.691 | 0.71 | 0.297 | 0.347 | 0.38 | 0.231 | 0.306 | 0.299 | 0.215 | 0.302 | 0.293 |
| Arcade | 0.737 | 0.729 | 0.747 | 0.319 | 0.334 | 0.351 | 0.191 | 0.262 | 0.25 | 0.168 | 0.269 | 0.252 |
| Video players & editors | 0.67 | 0.664 | 0.687 | 0.314 | 0.34 | 0.352 | 0.233 | 0.304 | 0.289 | 0.215 | 0.295 | 0.276 |
| Weather | 0.642 | 0.638 | 0.667 | 0.301 | 0.305 | 0.421 | 0.194 | 0.252 | 0.262 | 0.168 | 0.255 | 0.265 |
| Card | 0.68 | 0.673 | 0.698 | 0.28 | 0.321 | 0.344 | 0.227 | 0.284 | 0.283 | 0.209 | 0.277 | 0.271 |
| Photography | 0.705 | 0.69 | 0.71 | 0.362 | 0.352 | 0.405 | 0.276 | 0.315 | 0.311 | 0.248 | 0.301 | 0.297 |
| Shopping | 0.678 | 0.653 | 0.682 | 0.299 | 0.359 | 0.444 | 0.316 | 0.33 | 0.332 | 0.289 | 0.316 | 0.315 |
| Health & fitness | 0.811 | 0.779 | 0.801 | 0.208 | 0.315 | 0.391 | 0.194 | 0.235 | 0.23 | 0.177 | 0.24 | 0.235 |
| Finance | 0.557 | 0.52 | 0.593 | 0.284 | 0.31 | 0.353 | 0.258 | 0.293 | 0.298 | 0.226 | 0.27 | 0.276 |
| Casual | 0.745 | 0.732 | 0.753 | 0.334 | 0.342 | 0.364 | 0.205 | 0.274 | 0.277 | 0.182 | 0.28 | 0.28 |
| Medical | 0.753 | 0.739 | 0.759 | 0.338 | 0.336 | 0.459 | 0.204 | 0.265 | 0.244 | 0.181 | 0.271 | 0.245 |
| Racing | 0.72 | 0.724 | 0.74 | 0.331 | 0.37 | 0.401 | 0.218 | 0.306 | 0.295 | 0.201 | 0.311 | 0.297 |

| Application Category | Naïve Bayes Accuracy | Random Forest Accuracy | Logistic Regression Accuracy | Naïve Bayes Precision | Random Forest Precision | Logistic Regression Precision | Naïve Bayes Recall | Random Forest Recall | Logistic Regression Recall | Naïve Bayes F1 Score | Random Forest F1 Score | Logistic Regression F1 Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sports | 0.607 | 0.589 | 0.623 | 0.368 | 0.359 | 0.416 | 0.314 | 0.314 | 0.35 | 0.334 | 0.314 | 0.353 |
| Communication | 0.584 | 0.559 | 0.588 | 0.334 | 0.321 | 0.355 | 0.296 | 0.296 | 0.334 | 0.311 | 0.296 | 0.326 |
| Action | 0.689 | 0.686 | 0.71 | 0.336 | 0.35 | 0.405 | 0.308 | 0.308 | 0.32 | 0.297 | 0.308 | 0.324 |
| Arcade | 0.724 | 0.725 | 0.744 | 0.273 | 0.338 | 0.369 | 0.275 | 0.275 | 0.271 | 0.227 | 0.275 | 0.278 |
| Video players & editors | 0.681 | 0.665 | 0.69 | 0.346 | 0.351 | 0.39 | 0.308 | 0.308 | 0.323 | 0.312 | 0.308 | 0.323 |
| Weather | 0.669 | 0.641 | 0.674 | 0.327 | 0.335 | 0.386 | 0.282 | 0.282 | 0.303 | 0.281 | 0.282 | 0.316 |
| Card | 0.689 | 0.666 | 0.697 | 0.282 | 0.325 | 0.373 | 0.281 | 0.281 | 0.306 | 0.272 | 0.281 | 0.31 |
| Photography | 0.691 | 0.689 | 0.707 | 0.366 | 0.372 | 0.403 | 0.321 | 0.321 | 0.332 | 0.317 | 0.321 | 0.328 |
| Shopping | 0.663 | 0.654 | 0.674 | 0.364 | 0.37 | 0.411 | 0.325 | 0.325 | 0.351 | 0.333 | 0.325 | 0.346 |
| Health & fitness | 0.788 | 0.778 | 0.794 | 0.277 | 0.299 | 0.369 | 0.22 | 0.22 | 0.28 | 0.225 | 0.22 | 0.295 |
| Finance | 0.536 | 0.532 | 0.595 | 0.312 | 0.311 | 0.363 | 0.276 | 0.276 | 0.319 | 0.277 | 0.276 | 0.314 |
| Casual | 0.727 | 0.735 | 0.747 | 0.338 | 0.345 | 0.385 | 0.284 | 0.284 | 0.3 | 0.306 | 0.284 | 0.314 |
| Medical | 0.749 | 0.737 | 0.757 | 0.348 | 0.342 | 0.41 | 0.284 | 0.284 | 0.295 | 0.298 | 0.284 | 0.31 |
| Racing | 0.717 | 0.719 | 0.738 | 0.351 | 0.361 | 0.419 | 0.317 | 0.317 | 0.317 | 0.297 | 0.317 | 0.325 |

| Application Category | Naïve Bayes Accuracy | Random Forest Accuracy | Logistic Regression Accuracy | Naïve Bayes Precision | Random Forest Precision | Logistic Regression Precision | Naïve Bayes Recall | Random Forest Recall | Logistic Regression Recall | Naïve Bayes F1 Score | Random Forest F1 Score | Logistic Regression F1 Score |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sports | 0.593 | 0.595 | 0.629 | 0.328 | 0.352 | 0.416 | 0.221 | 0.307 | 0.331 | 0.194 | 0.309 | 0.328 |
| Communication | 0.597 | 0.555 | 0.602 | 0.292 | 0.32 | 0.361 | 0.302 | 0.309 | 0.334 | 0.255 | 0.29 | 0.312 |
| Action | 0.683 | 0.695 | 0.712 | 0.329 | 0.369 | 0.398 | 0.227 | 0.306 | 0.304 | 0.208 | 0.307 | 0.299 |
| Arcade | 0.737 | 0.729 | 0.747 | 0.33 | 0.336 | 0.349 | 0.191 | 0.256 | 0.246 | 0.168 | 0.265 | 0.247 |
| Video players & editors | 0.669 | 0.665 | 0.693 | 0.281 | 0.335 | 0.375 | 0.229 | 0.306 | 0.3 | 0.208 | 0.298 | 0.291 |
| Weather | 0.641 | 0.647 | 0.674 | 0.306 | 0.323 | 0.384 | 0.19 | 0.255 | 0.275 | 0.161 | 0.264 | 0.282 |
| Card | 0.68 | 0.669 | 0.699 | 0.29 | 0.322 | 0.359 | 0.223 | 0.274 | 0.288 | 0.205 | 0.273 | 0.281 |
| Photography | 0.707 | 0.69 | 0.714 | 0.367 | 0.363 | 0.422 | 0.279 | 0.318 | 0.322 | 0.25 | 0.308 | 0.309 |
| Shopping | 0.679 | 0.653 | 0.686 | 0.365 | 0.361 | 0.444 | 0.319 | 0.328 | 0.339 | 0.292 | 0.315 | 0.324 |
| Health & fitness | 0.811 | 0.788 | 0.803 | 0.2 | 0.354 | 0.363 | 0.194 | 0.22 | 0.232 | 0.176 | 0.225 | 0.239 |
| Finance | 0.554 | 0.529 | 0.604 | 0.261 | 0.317 | 0.401 | 0.257 | 0.294 | 0.308 | 0.226 | 0.272 | 0.29 |
| Casual | 0.745 | 0.739 | 0.755 | 0.31 | 0.346 | 0.385 | 0.204 | 0.267 | 0.28 | 0.181 | 0.277 | 0.285 |
| Medical | 0.753 | 0.743 | 0.759 | 0.38 | 0.351 | 0.468 | 0.203 | 0.259 | 0.246 | 0.18 | 0.268 | 0.249 |
| Racing | 0.718 | 0.726 | 0.74 | 0.359 | 0.383 | 0.391 | 0.214 | 0.307 | 0.295 | 0.195 | 0.317 | 0.297 |
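The tables above report accuracy together with precision, recall and F1. In several rows the F1 score is below both precision and recall (e.g., Sports with naïve Bayes in the first table: precision 0.359, recall 0.316, F1 0.315). A single harmonic mean of one precision and one recall always lies between the two, so this pattern is consistent with macro averaging, in which F1 is computed per class and then averaged; that averaging method is inferred from the numbers rather than stated in the tables. Likewise, accuracy between roughly 0.52 and 0.81 alongside precision, recall and F1 values all below 0.47 is consistent with imbalanced classes, where the majority class dominates accuracy. The sketch below computes these macro-averaged scores from label lists; the function name and the toy labels are hypothetical, and scikit-learn's `average="macro"` option follows the same per-class-then-average definition.

```python
from collections import Counter

def macro_scores(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall and F1 (per-class scores, then mean)."""
    classes = sorted(set(y_true) | set(y_pred))
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    predicted = Counter(y_pred)
    actual = Counter(y_true)
    precision, recall, f1 = [], [], []
    for c in classes:
        p = hits[c] / predicted[c] if predicted[c] else 0.0
        r = hits[c] / actual[c] if actual[c] else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    k = len(classes)
    accuracy = sum(hits.values()) / len(y_true)
    return accuracy, sum(precision) / k, sum(recall) / k, sum(f1) / k

# Imbalanced toy labels: five reviews of class A, one of class B.
y_true = ["A", "A", "A", "A", "A", "B"]
y_pred = ["A", "B", "B", "B", "B", "B"]
acc, p, r, f1 = macro_scores(y_true, y_pred)
print(round(acc, 3), round(p, 3), round(r, 3), round(f1, 3))
```

Here class A has precision 1.0 and recall 0.2 while class B has precision 0.2 and recall 1.0, so macro precision and macro recall are both 0.6, yet each per-class F1 is about 0.333 and so is their mean: the macro F1 falls below both averages, as in the table rows noted above.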

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Karim, A.; Azhari, A.; Belhaouri, S.B.; Qureshi, A.A.; Ahmad, M. Methodology for Analyzing the Traditional Algorithms Performance of User Reviews Using Machine Learning Techniques. Algorithms 2020, 13, 202. https://doi.org/10.3390/a13080202
