Moving Deep Learning to the Edge

Abstract

1. Introduction

- Smart cities: DL algorithms help improve data traffic management, the privacy of city data, local management, and the response time of smart city services [15];

- Smart homes: DL improves home living by analyzing personal data and adapting services to each person's behavior. Here too, personal data privacy is important and requires data processing closer to the user [16];

- Automated driving: Self-driving vehicles such as cars and drones require real-time response and highly accurate object classification and recognition algorithms. High-performance edge computing running DL algorithms is necessary to guarantee both accuracy and real-time response [10];

- Industrial applications: accurate DL algorithms are needed to detect failures in production lines, foster automation in industrial production, and improve industrial management. These workloads must also be processed locally and in real time [17].

2. Survey Methodology

- Evolution of deep learning models from accuracy-oriented to edge-oriented;

- Application areas of deep learning when executed on the edge;

- Optimization of deep learning models to improve inference and training;

- Computing devices to execute deep learning models on the edge;

- Edge computing solutions to run deep learning models;

- Methods to train deep learning models on the edge.

3. Deep Learning

3.1. Deep Neural Network Models

3.2. Deep Learning Applications

- Image and video: Most deep neural networks are associated with image classification [51,52] and object detection [53,54]. These tasks support image and video analysis in a wide range of applications, such as video surveillance, obstacle detection, and face recognition. Many of these applications require real-time analysis and must therefore be executed at the edge. Wireless cameras are frequently used to detect objects and people [55,56]. Given the computational limitations of edge platforms, some works propose integrating edge and cloud computing [57] to guarantee high accuracy with low latency. Some commercial solutions [58] adopt this integrated approach: the local edge device forwards an image to the cloud only if it classifies the image locally as important.

- Natural language: Natural language processing [59,60] and machine translation [61,62] have also adopted DNNs to improve accuracy. Applications such as question answering require low latency between the query and the answer to avoid unwanted idle times. Well-known examples of natural language processing are the voice assistants Alexa from Amazon [63] and Siri from Apple [64,65]. These voice assistants combine edge and cloud: a simple local network at the edge detects the wake word, and once it is detected, the device records the voice and sends it to the cloud, which processes it and generates a response.

- Smart home and smart city: The smart home collects and processes data from home devices and human activity to provide a set of services that improve home living. Examples include indoor localization [66], home robotics [67], and human activity monitoring [68]. This smart concept was extended to cities to improve many aspects of city living [69], such as traffic flow management [70]. Traffic-state data from different roads can be used to predict road traffic flow and suggest alternative routes [71]. Object detection is also used to track incidents on the roads and warn drivers about alternative routes that avoid them [72].

- Medical: Medicine is another field where DNNs are being applied successfully to predict diseases and analyze images. In genetics, DNNs have successfully extracted hidden features from genetic information that allow predicting diseases such as autism [73,74]. Medical image analysis with DNNs can detect different kinds of cancer [75,76,77,78] and extract information from an image that is difficult for a human to detect.

- Agriculture: Smart agriculture is a new approach to improving agricultural productivity [79] in order to feed a growing population. Deep learning is applied in several agricultural areas [80], including fruit counting [81], plant identification and recognition [82], land analysis and classification [83,84], disease identification [85], and crop-type classification [86].
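Both the image-forwarding scheme of commercial edge cameras and the wake-word trigger of voice assistants follow the same pattern: a small local model decides which inputs deserve expensive cloud inference. The Python sketch below illustrates that gating idea under stated assumptions; it is not the implementation of any cited product. `EdgeGate`, `energy_score`, the 0.25 threshold and the toy frames are hypothetical, the scoring function stands in for a real on-device network, and a list stands in for the uplink to the cloud.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

Frame = Sequence[float]


@dataclass
class EdgeGate:
    """Hypothetical edge-side filter: a cheap local model scores each input,
    and only inputs scoring at or above the threshold are sent to the cloud."""

    local_score: Callable[[Frame], float]  # stand-in for a small on-device network
    threshold: float = 0.5                 # assumed importance cut-off
    uplink: List[Frame] = field(default_factory=list)  # simulates data sent to the cloud

    def process(self, frame: Frame) -> bool:
        """Return True if the frame was forwarded for cloud inference."""
        if self.local_score(frame) >= self.threshold:
            self.uplink.append(frame)  # a real device would upload here
            return True
        return False  # dropped locally: no bandwidth used, data stays on the device


def energy_score(frame: Frame) -> float:
    """Toy local 'model': mean squared amplitude of the frame."""
    return sum(x * x for x in frame) / len(frame)


# Usage: three toy sensor frames; only the high-energy one is forwarded.
gate = EdgeGate(local_score=energy_score, threshold=0.25)
frames = [[0.1, 0.0, -0.1], [0.9, -0.8, 0.7], [0.2, 0.1, 0.0]]
sent = [gate.process(f) for f in frames]
print(sent, f"{len(gate.uplink)}/{len(frames)} frames forwarded")
```

The threshold controls the trade-off: a higher value sends less data off the device (saving bandwidth and exposing less private data) but risks missing events that the larger cloud model would have recognized.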

4. Deep Learning Inference on Edge Devices

4.1. Edge-Oriented Deep Neural Networks

4.2. Hardware-Oriented Deep Neural Network Optimizations

4.3. Computing Devices for Deep Neural Networks at the Edge

4.4. Edge Computing Architectures for Deep Learning

5. Deep Learning Training on Edge Devices

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AE | Auto-Encoder |
| AI | Artificial Intelligence |
| ANN | Artificial Neural Network |
| ASIC | Application-Specific Integrated Circuit |
| BM | Boltzmann Machine |
| BNN | Binary Neural Network |
| CNN | Convolutional Neural Network |
| DBM | Deep Boltzmann Machine |
| DBN | Deep Belief Network |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| DRP | Dynamically Reconfigurable Processor |
| DSP | Digital Signal Processor |
| FPGA | Field-Programmable Gate Array |
| GOP | Giga Operations |
| GPU | Graphics Processing Unit |
| IoT | Internet of Things |
| IP | Intellectual Property |
| LSTM | Long Short-Term Memory |
| MAC | Multiply and Accumulate |
| NAS | Neural Architecture Search |
| NoC | Network-on-Chip |
| PE | Processing Element |
| PNAS | Progressive Neural Architecture Search |
| RBM | Restricted Boltzmann Machine |
| ReLU | Rectified Linear Unit |
| RNN | Recurrent Neural Network |
| SE | Squeeze and Excitation |
| SMBO | Sequential Model-Based Optimization |
| SoC | System-on-Chip |
| TMAC | Tera Multiply and Accumulate |
| TOP | Tera Operations |
| ZB | Zettabytes |

References

- LeCun, Y.; Bengio, Y.; Hinton, G.E. Deep learning. Nature 2015, 521, 436–444.

- Najafabadi, M.; Villanustre, F.; Khoshgoftaar, T.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2, 1.

- Hwang, K. Cloud Computing for Machine Learning and Cognitive Applications; The MIT Press: Cambridge, MA, USA, 2017.

- Varghese, B.; Buyya, R. Next generation cloud computing: New trends and research directions. Future Gener. Comput. Syst. 2018, 79, 849–861.

- Khan, I.; Alam, M. Cloud computing: Issues and future direction. Glob. Sci. Tech. 2017, 9, 37–44.

- Statista. Number of Internet of Things (IoT) Connected Devices Worldwide in 2018, 2025 and 2030. 2020. Available online: https://www.statista.com/statistics/802690/worldwide-connected-devices-by-access-technology/ (accessed on 29 April 2020).

- Cisco. Cisco Global Cloud Index: Forecast and Methodology. Available online: https://www.cisco.com/c/en/us/solutions/collateral/executive-perspectives/annual-internet-report/white-paper-c11-741490.pdf (accessed on 5 May 2020).

- Barbera, M.V.; Kosta, S.; Mei, A.; Stefa, J. To offload or not to offload? The bandwidth and energy costs of mobile cloud computing. In Proceedings of the IEEE INFOCOM, Turin, Italy, 14–19 April 2013; pp. 1285–1293.

- Pan, J.; McElhannon, J. Future Edge Cloud and Edge Computing for Internet of Things Applications. IEEE Internet Things J. 2018, 5, 439–449.

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

- Usha, D.; Bobby, M. Privacy issues in smart home devices using Internet of Things—A survey. Int. J. Adv. Res. 2018, 6, 566–568.

- Hassan, N.; Gillani, S.; Ahmed, E.; Yaqoob, I.; Imran, M. The Role of Edge Computing in Internet of Things. IEEE Commun. Mag. 2018, 56, 110–115.

- Wang, X.; Han, Y.; Leung, V.; Niyato, D.; Yan, X.; Chen, X. Convergence of Edge Computing and Deep Learning: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020.

- Shi, W.; Pallis, G.; Xu, Z. Edge Computing [Scanning the Issue]. Proc. IEEE 2019, 107, 1474–1481.

- Ullah, Z.; Al-Turjman, F.; Mostarda, L.; Gagliardi, R. Applications of Artificial Intelligence and Machine learning in smart cities. Comput. Commun. 2020, 154, 313–323.

- Popa, D.; Pop, F.; Serbanescu, C.; Castiglione, A. Deep Learning Model for Home Automation and Energy Reduction in a Smart Home Environment Platform. Neural Comput. Appl. 2019, 31, 1317–1337.

- Wang, J.; Ma, Y.; Zhang, L.; Gao, R.X.; Wu, D. Deep learning for smart manufacturing: Methods and applications. J. Manuf. Syst. 2018, 48, 144–156.

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence With Edge Computing. Proc. IEEE 2019, 107, 1738–1762.

- Ren, J.; Zhang, D.; He, S.; Zhang, Y.; Li, T. A Survey on End Edge-Cloud Orchestrated Network Computing Paradigms: Transparent Computing, Mobile Edge Computing, Fog Computing, and Cloudlet. ACM Comput. Surv. 2019, 52, 1–36.

- Zhao, Y.; Wang, W.; Li, Y.; Colman Meixner, C.; Tornatore, M.; Zhang, J. Edge Computing and Networking: A Survey on Infrastructures and Applications. IEEE Access 2019, 7, 101213–101230.

- Huang, Y.; Ma, X.; Fan, X.; Liu, J.; Gong, W. When deep learning meets edge computing. In Proceedings of the 2017 IEEE 25th International Conference on Network Protocols (ICNP), Toronto, ON, Canada, 10–13 October 2017; pp. 1–2.

- Véstias, M.P. Deep Learning on Edge: Challenges and Trends. In Smart Systems Design, Applications, and Challenges; IGI Global: Hershey, PA, USA, 2020; pp. 23–42.

- Kang, Y.; Hauswald, J.; Gao, C.; Rovinski, A.; Mudge, T.; Mars, J.; Tang, L. Neurosurgeon: Collaborative Intelligence Between the Cloud and Mobile Edge. ACM SIGARCH Comput. Archit. News 2017, 45, 615–629.

- Chen, J.; Ran, X. Deep Learning With Edge Computing: A Review. Proc. IEEE 2019, 107, 1655–1674.

- Sze, V.; Chen, Y.; Yang, T.; Emer, J.S. Efficient Processing of Deep Neural Networks: A Tutorial and Survey. Proc. IEEE 2017, 105, 2295–2329.

- Aggarwal, C. Neural Networks and Deep Learning: A Textbook, 1st ed.; Springer: Berlin, Germany, 2018.

- LeCun, Y. 1.1 Deep Learning Hardware: Past, Present, and Future. In Proceedings of the 2019 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 17–21 February 2019; pp. 12–19.

- Hassoun, M. Fundamentals of Artificial Neural Networks; MIT Press: Cambridge, MA, USA, 2003.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- LeCun, Y.; Boser, B.E.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.E.; Jackel, L.D. Handwritten Digit Recognition with a Back-Propagation Network. In Advances in Neural Information Processing Systems 2; Touretzky, D.S., Ed.; Morgan-Kaufmann: Burlington, MA, USA, 1990; pp. 396–404.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

- Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.

- Glorot, X.; Bordes, A.; Bengio, Y. Deep Sparse Rectifier Neural Networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 14 June 2011; pp. 315–323.

- Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065.

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

- Le Cun, Y.; Jackel, L.D.; Boser, B.; Denker, J.S.; Graf, H.P.; Guyon, I.; Henderson, D.; Howard, R.E.; Hubbard, W. Handwritten digit recognition: Applications of neural network chips and automatic learning. IEEE Commun. Mag. 1989, 27, 41–46.

- Yu, A.W.; Lee, H.; Le, Q.V. Learning to Skim Text. arXiv 2017, arXiv:1704.06877.

- Hinton, G.E.; Sejnowski, T.J. Learning and Relearning in Boltzmann Machines. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volume 1: Foundations; MIT Press: Cambridge, MA, USA, 1986; pp. 282–317.

- Hopfield, J. Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

- Bengio, Y.; Lamblin, P.; Popovici, D.; Larochelle, H. Greedy Layer-Wise Training of Deep Networks. Available online: http://papers.nips.cc/paper/3048-greedy-layer-wise-training-of-deep-networks.pdf (accessed on 5 May 2020).

- Bourlard, H.; Kamp, Y. Auto-Association by Multilayer Perceptrons and Singular Value Decomposition. Biol. Cybern. 1988, 59, 291–294.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Available online: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf (accessed on 5 May 2020).

- Zeiler, M.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556. Available online: https://arxiv.org/pdf/1409.1556.pdf (accessed on 5 May 2020).

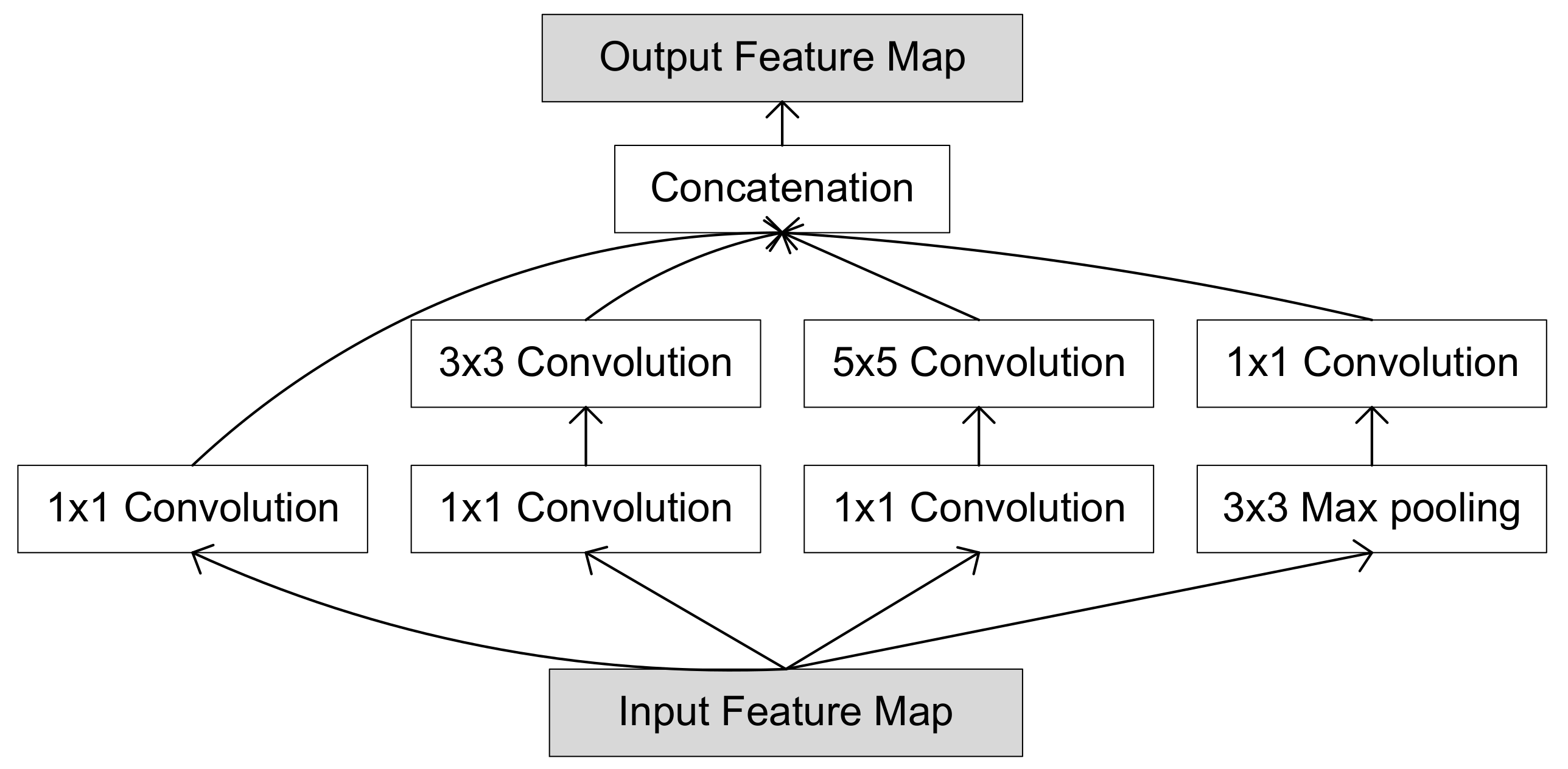

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

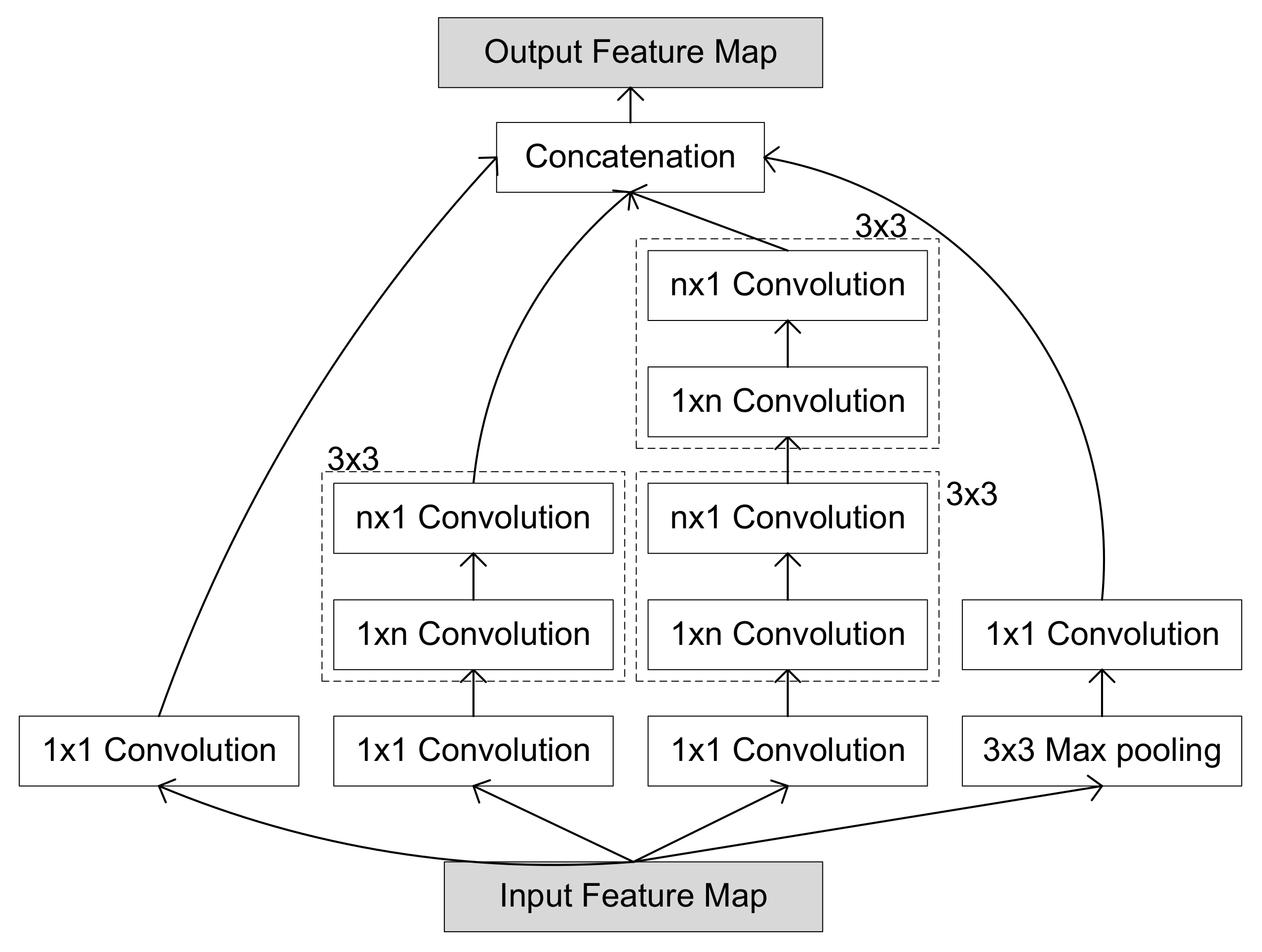

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Xie, S.; Girshick, R.B.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 18–22 June 2018; pp. 7132–7141.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Aggarwal, V.; Kaur, G. A review: Deep learning technique for image classification. ACCENTS Trans. Image Process. Comput. Vis. 2018, 4, 21–25.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

- Jiang, X.; Hadid, A.; Pang, Y.; Granger, E.; Feng, X. Deep Learning in Object Detection and Recognition; Springer: Berlin, Germany, 2019.

- Zhang, T.; Chowdhery, A.; Bahl, P.V.; Jamieson, K.; Banerjee, S. The Design and Implementation of a Wireless Video Surveillance System. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 426–438.

- Sreenu, G.; Durai, M.A.S. Intelligent video surveillance: A review through deep learning techniques for crowd analysis. J. Big Data 2019, 6, 1–27.

- Hung, C.C.; Ananthanarayanan, G.; Bodík, P.; Golubchik, L.; Yu, M.; Bahl, V.; Philipose, M. VideoEdge: Processing Camera Streams using Hierarchical Clusters. In Proceedings of the ACM/IEEE Symposium on Edge Computing (SEC), Seattle, WA, USA, 25–27 October 2018.

- Amazon. AWS Deeplens. 2019. Available online: https://aws.amazon.com/deeplens/ (accessed on 5 May 2020).

- Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuoglu, K.; Kuksa, P. Natural Language Processing (Almost) from Scratch. J. Mach. Learn. Res. 2011, 12, 2493–2537.

- Li, D.; Liu, Y. Deep Learning in Natural Language Processing; Springer: Berlin, Germany, 2018.

- Deng, L.; Li, J.; Huang, J.; Yao, K.; Yu, D.; Seide, F.; Seltzer, M.; Zweig, G.; He, X.; Williams, J.; et al. Recent advances in deep learning for speech research at Microsoft. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8604–8608.

- Singh, S.P.; Kumar, A.; Darbari, H.; Singh, L.; Rastogi, A.; Jain, S. Machine translation using deep learning: An overview. In Proceedings of the 2017 International Conference on Computer, Communications and Electronics (Comptelix), Jaipur, India, 1–2 July 2017; pp. 162–167.

- Amazon. Alexa Voice Service. 2016. Available online: https://developer.amazon.com/en-US/docs/alexa/alexa-voice-service/api-overview.html (accessed on 5 May 2020).

- Apple. Deep Learning for Siri’s Voice: On-Device Deep Mixture Density Networks for Hybrid Unit Selection Synthesis. 2017. Available online: https://machinelearning.apple.com/2017/08/06/sirivoices.html (accessed on 5 May 2020).

- Apple. Hey Siri: An On-Device DNN-Powered Voice Trigger for Apple’s Personal Assistant. 2017. Available online: https://machinelearning.apple.com/2017/10/01/hey-siri.html (accessed on 5 May 2020).

- Wang, X.; Gao, L.; Mao, S.; Pandey, S. CSI-Based Fingerprinting for Indoor Localization: A Deep Learning Approach. IEEE Trans. Veh. Technol. 2017, 66, 763–776.

- Erol, B.A.; Majumdar, A.; Lwowski, J.; Benavidez, P.; Rad, P.; Jamshidi, M. Improved Deep Neural Network Object Tracking System for Applications in Home Robotics. In Computational Intelligence for Pattern Recognition; Pedrycz, W., Chen, S.M., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 369–395.

- Mannini, A.; Sabatini, A.M. Machine Learning Methods for Classifying Human Physical Activity from On-Body Accelerometers. Sensors 2010, 10, 1154–1175.

- Souza, J.; Francisco, A.; Piekarski, C.; Prado, G. Data Mining and Machine Learning to Promote Smart Cities: A Systematic Review from 2000 to 2018. Sustainability 2019, 11, 1077.

- Zantalis, F.; Koulouras, G.; Karabetsos, S.; Kandris, D. A Review of Machine Learning and IoT in Smart Transportation. Future Internet 2019, 11, 94.

- Huang, W.; Song, G.; Hong, H.; Xie, K. Deep Architecture for Traffic Flow Prediction: Deep Belief Networks With Multitask Learning. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2191–2201.

- Zhang, Z.; He, Q.; Gao, J.; Ni, M. A deep learning approach for detecting traffic accidents from social media data. Transp. Res. Part C Emerg. Technol. 2018, 86, 580–596.

- Xiong, H.; Alipanahi, B.; Lee, L.; Bretschneider, H.; Merico, D.; Yuen, R.; Hua, Y.; Gueroussov, S.; Najafabadi, H.; Hughes, T.; et al. The human splicing code reveals new insights into the genetic determinants of disease. Science 2015, 347, 1254806.

- Alipanahi, B.; Delong, A.; Weirauch, M.; Frey, B. Predicting the sequence specificities of DNA- and RNA-binding proteins by deep learning. Nat. Biotechnol. 2015, 33, 831–838.

- Esteva, A.; Kuprel, B.; Novoa, R.; Ko, J.; Swetter, S.; Blau, H.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Jermyn, M.; Desroches, J.; Mercier, J.; Tremblay, M.A.; St-Arnaud, K.; Guiot, M.C.; Petrecca, K.; Leblond, F. Neural networks improve brain cancer detection with Raman spectroscopy in the presence of operating room light artifacts. J. Biomed. Opt. 2016, 21, 094002.

- Wang, D.; Khosla, A.; Gargeya, R.; Irshad, H.; Beck, A. Deep Learning for Identifying Metastatic Breast Cancer. arXiv 2016, arXiv:1606.05718.

- Tsochatzidis, L.; Costaridou, L.; Pratikakis, I. Deep Learning for Breast Cancer Diagnosis from Mammograms—A Comparative Study. J. Imaging 2019, 5, 37.

- Tyagi, A.C. Towards a Second Green Revolution. Irrig. Drain. 2016, 65, 388–389.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Rahnemoonfar, M.; Sheppard, C. Deep Count: Fruit Counting Based on Deep Simulated Learning. Sensors 2017, 17, 905.

- Sun, Y.; Liu, Y.; Wang, G.; Zhang, H. Deep Learning for Plant Identification in Natural Environment. Comput. Intell. Neurosci. 2017, 2017, 7361042.

- Ienco, D.; Gaetano, R.; Dupaquier, C.; Maurel, P. Land Cover Classification via Multi-temporal Spatial Data by Recurrent Neural Networks. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1685–1689.

- Ali, I.; Greifeneder, F.; Stamenkovic, J.; Neumann, M.; Notarnicola, C. Review of Machine Learning Approaches for Biomass and Soil Moisture Retrievals from Remote Sensing Data. Remote Sens. 2015, 7, 16398–16421.

- Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep neural networks based recognition of plant diseases by leaf image classification. Comput. Intell. Neurosci. 2016, 2016, 3289801.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Silver, D.; Hubert, T.; Schrittwieser, J.; Antonoglou, I.; Lai, M.; Guez, A.; Lanctot, M.; Sifre, L.; Kumaran, D.; Graepel, T.; et al. A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science 2018, 362, 1140–1144.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

- Addo, P.; Guegan, D.; Hassani, B. Credit Risk Analysis Using Machine and Deep Learning Models. Risks 2018, 6, 38.

- Leo, M.; Sharma, S.; Maddulety, K. Machine Learning in Banking Risk Management: A Literature Review. Risks 2019, 7, 29.

- Gensler, A.; Henze, J.; Sick, B.; Raabe, N. Deep Learning for solar power forecasting—An approach using AutoEncoder and LSTM Neural Networks. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 2858–2865.

- Trappey, A.; Chen, P.; Trappey, C.; Ma, L. A Machine Learning Approach for Solar Power Technology Review and Patent Evolution Analysis. Appl. Sci. 2019, 9, 1478.

- Chen, C.; Fu, C.; Hu, X.; Zhang, X.; Zhou, J.; Li, X.; Bao, F.S. Reinforcement Learning for User Intent Prediction in Customer Service Bots. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 1265–1268.

- Miklosik, A.; Kuchta, M.; Evans, N.; Zak, S. Towards the Adoption of Machine Learning-Based Analytical Tools in Digital Marketing. IEEE Access 2019, 7, 85705–85718.

- Roy, A.; Sun, J.; Mahoney, R.; Alonzi, L.; Adams, S.; Beling, P. Deep learning detecting fraud in credit card transactions. In Proceedings of the 2018 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 27 April 2018; pp. 129–134.

- Fujiyoshi, H.; Hirakawa, T.; Yamashita, T. Deep learning-based image recognition for autonomous driving. IATSS Res. 2019, 43, 244–252.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244.

- Zoph, B.; Le, Q.V. Neural Architecture Search with Reinforcement Learning. arXiv 2016, arXiv:1611.01578.

- Yang, T.; Howard, A.G.; Chen, B.; Zhang, X.; Go, A.; Sze, V.; Adam, H. NetAdapt: Platform-Aware Neural Network Adaptation for Mobile Applications. arXiv 2018, arXiv:1804.03230.

- Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <1MB model size. arXiv 2016, arXiv:1602.07360.

- Gholami, A.; Kwon, K.; Wu, B.; Tai, Z.; Yue, X.; Jin, P.H.; Zhao, S.; Keutzer, K. SqueezeNext: Hardware-Aware Neural Network Design. arXiv 2018, arXiv:1803.10615.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Huang, G.; Liu, S.; van der Maaten, L.; Weinberger, K.Q. CondenseNet: An Efficient DenseNet Using Learned Group Convolutions. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2752–2761.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning Transferable Architectures for Scalable Image Recognition. arXiv 2017, arXiv:1707.07012.

- Liu, C.; Zoph, B.; Neumann, M.; Shlens, J.; Hua, W.; Li, L.J.; Li, F.; Yuille, A.; Huang, J.; Murphy, K. Progressive Neural Architecture Search. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 19–35.

- Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized Evolution for Image Classifier Architecture Search. arXiv 2018, arXiv:1802.01548.

- Liu, H.; Simonyan, K.; Yang, Y. DARTS: Differentiable Architecture Search. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Tan, M.; Chen, B.; Pang, R.; Vasudevan, V.; Sandler, M.; Howard, A.; Le, Q.V. MnasNet: Platform-Aware Neural Architecture Search for Mobile. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2815–2823.

- Xiong, Y.; Kim, H.J.; Hedau, V. ANTNets: Mobile Convolutional Neural Networks for Resource Efficient Image Classification. arXiv 2019, arXiv:1904.03775.

- Gonçalves, A.; Peres, T.; Véstias, M. Exploring Data Bitwidth to Run Convolutional Neural Networks in Low Density FPGAs. In Proceedings of the International Symposium on Applied Reconfigurable Computing, Toledo, OH, USA, 9–11 April 2019; pp. 387–401.

- Micikevicius, P.; Narang, S.; Alben, J.; Diamos, G.F.; Elsen, E.; García, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed Precision Training. arXiv 2017, arXiv:1710.03740.

- Wang, N.; Choi, J.; Brand, D.; Chen, C.; Gopalakrishnan, K. Training Deep Neural Networks with 8 bit Floating Point Numbers. arXiv 2018, arXiv:1812.08011.

- Gysel, P.; Motamedi, M.; Ghiasi, S. Hardware-oriented Approximation of Convolutional Neural Networks. In Proceedings of the 4th International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

- Gupta, S.; Agrawal, A.; Gopalakrishnan, K.; Narayanan, P. Deep Learning with Limited Numerical Precision. In Proceedings of the 32nd International Conference on International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1737–1746.

- Anwar, S.; Hwang, K.; Sung, W. Fixed point optimization of deep convolutional neural networks for object recognition. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, QLD, Australia, 19–24 April 2015; pp. 1131–1135.

- Lin, D.D.; Talathi, S.S.; Annapureddy, V.S. Fixed Point Quantization of Deep Convolutional Networks. In Proceedings of the 33rd International Conference on International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; pp. 2849–2858.

- Faraone, J.; Kumm, M.; Hardieck, M.; Zipf, P.; Liu, X.; Boland, D.; Leong, P.H.W. AddNet: Deep Neural Networks Using FPGA-Optimized Multipliers. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020, 28, 115–128.

- Zhang, H.; Chen, D.; Ko, S. New Flexible Multiple-Precision Multiply-Accumulate Unit for Deep Neural Network Training and Inference. IEEE Trans. Comput. 2020, 69, 26–38.

- Suda, N.; Chandra, V.; Dasika, G.; Mohanty, A.; Ma, Y.; Vrudhula, S.; Seo, J.S.; Cao, Y. Throughput-Optimized OpenCL-based FPGA Accelerator for Large-Scale Convolutional Neural Networks. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 21 February 2016; pp. 16–25.

- Wang, J.; Lou, Q.; Zhang, X.; Zhu, C.; Lin, Y.; Chen, D. A Design Flow of Accelerating Hybrid Extremely Low Bit-width Neural Network in Embedded FPGA. In Proceedings of the 28th International Conference on Field-Programmable Logic and Applications, Barcelona, Spain, 27–31 August 2018.

- Véstias, M.P.; Policarpo Duarte, R.; de Sousa, J.T.; Neto, H. Hybrid Dot-Product Calculation for Convolutional Neural Networks in FPGA. In Proceedings of the 2019 29th International Conference on Field Programmable Logic and Applications (FPL), Barcelona, Spain, 9–13 September 2019; pp. 350–353.

- Umuroglu, Y.; Fraser, N.J.; Gambardella, G.; Blott, M.; Leong, P.H.W.; Jahre, M.; Vissers, K.A. FINN: A Framework for Fast, Scalable Binarized Neural Network Inference. arXiv 2016, arXiv:1612.07119.

- Liang, S.; Yin, S.; Liu, L.; Luk, W.; Wei, S. FP-BNN: Binarized neural network on FPGA. Neurocomputing 2018, 275, 1072–1086.

- Courbariaux, M.; Bengio, Y. BinaryNet: Training Deep Neural Networks with Weights and Activations Constrained to +1 or −1. arXiv 2016, arXiv:1602.02830.

- Lee, S.; Kim, K.; Kim, J.; Kim, Y.; Myung, H. Spike-inspired Deep Neural Network Design Using Binary Weight. In Proceedings of the 2018 18th International Conference on Control, Automation and Systems (ICCAS), Pyeongchang, Korea, 17–20 October 2018; pp. 247–250.

- Nakahara, H.; Fujii, T.; Sato, S. A fully connected layer elimination for a binarizec convolutional neural network on an FPGA. In Proceedings of the 2017 27th International Conference on Field Programmable Logic and Applications (FPL), Barcelona, Spain, 4–8 September 2017; pp. 1–4.

- Hubara, I.; Courbariaux, M.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized Neural Networks. In Advances in Neural Information Processing Systems 29; Lee, D.D., Sugiyama, M., Luxburg, U.V., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; pp. 4107–4115.

- Chang, Y.; Wu, X.; Zhang, S.; Yan, J. Ternary Weighted Networks with Equal Quantization Levels. In Proceedings of the 2019 25th Asia-Pacific Conference on Communications (APCC), Ho Chi Minh, Vietnam, 6–8 November 2019; pp. 126–130.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Network with Pruning, Trained Quantization and Huffman Coding. arXiv 2015, arXiv:1510.00149.

- Luo, J.; Zhang, H.; Zhou, H.; Xie, C.; Wu, J.; Lin, W. ThiNet: Pruning CNN Filters for a Thinner Net. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2525–2538.

- Yang, C.; Yang, Z.; Khattak, A.M.; Yang, L.; Zhang, W.; Gao, W.; Wang, M. Structured Pruning of Convolutional Neural Networks via L1 Regularization. IEEE Access 2019, 7, 106385–106394.

- Tung, F.; Mori, G. Deep Neural Network Compression by In-Parallel Pruning-Quantization. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 568–579.

- Peres, T.; Gonçalves, A.M.V. Faster Convolutional Neural Networks in Low Density FPGAs using Block Pruning. In Proceedings of the International Symposium on Applied Reconfigurable Computing, Toledo, Spain, 9–11 April 2019; pp. 402–416.

- Yu, J.; Lukefahr, A.; Palframan, D.; Dasika, G.; Das, R.; Mahlke, S. Scalpel: Customizing DNN Pruning to the Underlying Hardware Parallelism. SIGARCH Comput. Archit. News 2017, 45, 548–560.

- Albericio, J.; Judd, P.; Hetherington, T.; Aamodt, T.; Jerger, N.E.; Moshovos, A. Cnvlutin: Ineffectual-Neuron-Free Deep Neural Network Computing. In Proceedings of the 2016 ACM/IEEE 43rd Annual International Symposium on Computer Architecture (ISCA), Seoul, Korea, 18–22 June 2016; pp. 1–13.

- Kim, D.; Kim, S.; Yoo, S. FPGA Prototyping of Low-Precision Zero-Skipping Accelerator for Neural Networks. In Proceedings of the 2018 International Symposium on Rapid System Prototyping (RSP), Torino, Italy, 4–5 October 2018; pp. 104–110.

- Véstias, M.P.; Duarte, R.P.; de Sousa, J.T.; Neto, H.C. Fast Convolutional Neural Networks in Low Density FPGAs Using Zero-Skipping and Weight Pruning. Electronics 2019, 8, 1321.

- Nurvitadhi, E.; Venkatesh, G.; Sim, J.; Marr, D.; Huang, R.; Ong Gee Hock, J.; Liew, Y.T.; Srivatsan, K.; Moss, D.; Subhaschandra, S.; et al. Can FPGAs Beat GPUs in Accelerating Next-Generation Deep Neural Networks? In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 5–14.

- Struharik, R.J.; Vukobratović, B.Z.; Erdeljan, A.M.; Rakanović, D.M. CoNNa–Hardware accelerator for compressed convolutional neural networks. Microprocess. Microsyst. 2020, 73, 102991.

- Zhang, C.; Wu, D.; Sun, J.; Sun, G.; Luo, G.; Cong, J. Energy-Efficient CNN Implementation on a Deeply Pipelined FPGA Cluster. In Proceedings of the 2016 International Symposium on Low Power Electronics and Design, ISLPED ’16, San Francisco, CA, USA, 10–12 August 2016; ACM: New York, NY, USA, 2016; pp. 326–331.

- Aydonat, U.; O’Connell, S.; Capalija, D.; Ling, A.C.; Chiu, G.R. An OpenCL™ Deep Learning Accelerator on Arria 10. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 55–64.

- Shen, Y.; Ferdman, M.; Milder, P. Escher: A CNN Accelerator with Flexible Buffering to Minimize Off-Chip Transfer. In Proceedings of the 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Napa, CA, USA, 30 April–2 May 2017; pp. 93–100.

- Winograd, S. Arithmetic Complexity of Computations; Society for Industrial and Applied Mathematics (SIAM): University City, PA, USA, 1980.

- Lavin, A.; Gray, S. Fast Algorithms for Convolutional Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4013–4021.

- Lu, L.; Liang, Y.; Xiao, Q.; Yan, S. Evaluating Fast Algorithms for Convolutional Neural Networks on FPGAs. In Proceedings of the 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Napa, CA, USA, 30 April–2 May 2017; pp. 101–108.

- Zhao, Y.; Wang, D.; Wang, L. Convolution Accelerator Designs Using Fast Algorithms. Algorithms 2019, 12, 112.

- Zhao, Y.; Wang, D.; Wang, L.; Liu, P. A Faster Algorithm for Reducing the Computational Complexity of Convolutional Neural Networks. Algorithms 2018, 11, 159.

- Kala, S.; Jose, B.R.; Mathew, J.; Nalesh, S. High-Performance CNN Accelerator on FPGA Using Unified Winograd-GEMM Architecture. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 2816–2828.

- Gyrfalcon Technology. Lightspeeur 2803S Neural Accelerator. 2018. Available online: https://www.gyrfalcontech.ai/solutions/2803s/ (accessed on 5 May 2020).

- The Linley Group. Ceva NeuPro Accelerates Neural Nets. 2018. Available online: https://www.ceva-dsp.com/wp-content/uploads/2018/02/Ceva-NeuPro-Accelerates-Neural-Nets.pdf (accessed on 5 May 2020).

- Synopsys. DesignWare EV6x Vision Processors. 2017. Available online: https://www.synopsys.com/dw/ipdir.php?ds=ev6x-vision-processors (accessed on 29 April 2020).

- Cadence. Tensilica DNA Processor IP For AI Inference. 2017. Available online: https://ip.cadence.com/uploads/datasheets/TIP_PB_AI_Processor_FINAL.pdf (accessed on 5 May 2020).

- Intel. Intel Movidius Myriad X VPU. 2017. Available online: https://software.intel.com/sites/default/files/managed/c3/ec/Intel-Vision-Accelerator-Design-with-Intel-Movidius-Vision-Processing-Unit-IoT-Intel-Software.pdf (accessed on 5 May 2020).

- Shin, D.; Lee, J.; Lee, J.; Yoo, H. 14.2 DNPU: An 8.1TOPS/W reconfigurable CNN-RNN processor for general-purpose deep neural networks. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 240–241.

- Qualcomm. Snapdragon 865 5G Mobile Platform. 2019. Available online: https://www.qualcomm.com/news/releases/2020/02/25/flagship-qualcomm-snapdragon-865-5g-mobile-platform-powers-first-wave-2020 (accessed on 5 May 2020).

- HiSilicon. Kirin 950 5G. 2019. Available online: https://consumer.huawei.com/en/press/media-coverage/2015/hw-462405/ (accessed on 5 May 2020).

- Huawei. Ascend 910 AI Processor. Available online: https://e.huawei.com/se/products/cloud-computing-dc/atlas/ascend910 (accessed on 5 May 2020).

- Véstias, M.P. A Survey of Convolutional Neural Networks on Edge with Reconfigurable Computing. Algorithms 2019, 12, 154.

- Chen, Y.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks. IEEE J. Solid-State Circuits 2017, 52, 127–138.

- Yin, S.; Ouyang, P.; Tang, S.; Tu, F.; Li, X.; Zheng, S.; Lu, T.; Gu, J.; Liu, L.; Wei, S. A High Energy Efficient Reconfigurable Hybrid Neural Network Processor for Deep Learning Applications. IEEE J. Solid-State Circuits 2018, 53, 968–982.

- Fujii, T.; Toi, T.; Tanaka, T.; Togawa, K.; Kitaoka, T.; Nishino, K.; Nakamura, N.; Nakahara, H.; Motomura, M. New Generation Dynamically Reconfigurable Processor Technology for Accelerating Embedded AI Applications. In Proceedings of the 2018 IEEE Symposium on VLSI Circuits, Honolulu, HI, USA, 18–22 June 2018; pp. 41–42.

- Guo, K.; Zeng, S.; Yu, J.; Wang, Y.; Yang, H. A Survey of FPGA Based Neural Network Accelerator. arXiv 2017, arXiv:1712.08934.

- Qiao, Y.; Shen, J.; Xiao, T.; Yang, Q.; Wen, M.; Zhang, C. FPGA-accelerated deep convolutional neural networks for high throughput and energy efficiency. Concurr. Comput. Pract. Exp. 2017, 29, e3850.

- Liu, Z.; Dou, Y.; Jiang, J.; Xu, J.; Li, S.; Zhou, Y.; Xu, Y. Throughput-Optimized FPGA Accelerator for Deep Convolutional Neural Networks. ACM Trans. Reconfig. Technol. Syst. 2017, 10, 17:1–17:23.

- Venieris, S.I.; Bouganis, C. FpgaConvNet: Mapping Regular and Irregular Convolutional Neural Networks on FPGAs. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 326–342.

- Guo, K.; Sui, L.; Qiu, J.; Yu, J.; Wang, J.; Yao, S.; Han, S.; Wang, Y.; Yang, H. Angel-Eye: A Complete Design Flow for Mapping CNN Onto Embedded FPGA. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2018, 37, 35–47.

- Véstias, M.; Duarte, R.P.; Sousa, J.T.d.; Neto, H. Lite-CNN: A High-Performance Architecture to Execute CNNs in Low Density FPGAs. In Proceedings of the 28th International Conference on Field Programmable Logic and Applications, Barcelona, Spain, 27–31 August 2018.

- Deng, Y. Deep Learning on Mobile Devices: A review. 2019. Available online: http://toc.proceedings.com/50445webtoc.pdf (accessed on 5 May 2020).

- Ran, X.; Chen, H.; Zhu, X.; Liu, Z.; Chen, J. DeepDecision: A Mobile Deep Learning Framework for Edge Video Analytics. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 15–19 April 2018; pp. 1421–1429.

- Cuervo, E.; Balasubramanian, A.; Cho, D.k.; Wolman, A.; Saroiu, S.; Chandra, R.; Bahl, P. MAUI: Making Smartphones Last Longer with Code Offload. In Proceedings of the 8th International Conference on Mobile Systems, Applications, and Services, San Francisco, CA, USA, 15 June 2010; pp. 49–62.

- Jeong, H.J.; Lee, H.J.; Shin, C.H.; Moon, S.M. IONN: Incremental Offloading of Neural Network Computations from Mobile Devices to Edge Servers. In Proceedings of the ACM Symposium on Cloud Computing, Carlsbad, CA, USA, 11–13 October 2018; pp. 401–411.

- Teerapittayanon, S.; McDanel, B.; Kung, H.T. Distributed Deep Neural Networks Over the Cloud, the Edge and End Devices. In Proceedings of the 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS), Atlanta, GA, USA, 5–8 June 2017; pp. 328–339.

- Mao, J.; Chen, X.; Nixon, K.W.; Krieger, C.; Chen, Y. MoDNN: Local distributed mobile computing system for Deep Neural Network. In Proceedings of the Design, Automation Test in Europe Conference Exhibition (DATE), Lausanne, Switzerland, 27–31 March 2017; pp. 1396–1401.

- Zhao, Z.; Barijough, K.M.; Gerstlauer, A. DeepThings: Distributed Adaptive Deep Learning Inference on Resource-Constrained IoT Edge Clusters. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2018, 37, 2348–2359.

- Zhang, S.; Choromanska, A.; LeCun, Y. Deep Learning with Elastic Averaging SGD. In Proceedings of the 28th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2015; MIT Press: Cambridge, MA, USA; pp. 685–693.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-Edge AI: Intelligentizing Mobile Edge Computing, Caching and Communication by Federated Learning. IEEE Netw. 2019, 33, 156–165.

- Samarakoon, S.; Bennis, M.; Saad, W.; Debbah, M. Distributed Federated Learning for Ultra-Reliable Low-Latency Vehicular Communications. IEEE Trans. Commun. 2020, 68, 1146–1159.

- Abad, M.S.H.; Ozfatura, E.; Gündüz, D.; Ercetin, O. Hierarchical Federated Learning Across Heterogeneous Cellular Networks. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 8866–8870.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated Learning: Strategies for Improving Communication Efficiency. arXiv 2016, arXiv:1610.05492.

- Liu, S.; Lin, Y.; Zhou, Z.; Nan, K.; Liu, H.; Du, J. On-Demand Deep Model Compression for Mobile Devices: A Usage-Driven Model Selection Framework. In Proceedings of the 16th Annual International Conference on Mobile Systems, Applications, and Services, New York, NY, USA, 11–15 June 2018; pp. 389–400.

- Wang, S.; Tuor, T.; Salonidis, T.; Leung, K.K.; Makaya, C.; He, T.; Chan, K. Adaptive Federated Learning in Resource Constrained Edge Computing Systems. IEEE J. Sel. Areas Commun. 2019, 37, 1205–1221.

- Nishio, T.; Yonetani, R. Client Selection for Federated Learning with Heterogeneous Resources in Mobile Edge. arXiv 2018, arXiv:1804.08333.

- Tang, H.; Gan, S.; Zhang, C.; Zhang, T.; Liu, J. Communication Compression for Decentralized Training. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, NIPS’18, Montreal, QC, Canada, 3–8 December 2018; Curran Associates Inc.: Red Hook, NY, USA; pp. 7663–7673.

- Lin, Y.; Han, S.; Mao, H.; Wang, Y.; Dally, W.J. Deep Gradient Compression: Reducing the Communication Bandwidth for Distributed Training. arXiv 2017, arXiv:1712.01887.

- Xie, C.; Koyejo, O.; Gupta, I. Practical Distributed Learning: Secure Machine Learning with Communication-Efficient Local Updates. arXiv 2019, arXiv:1903.06996.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24 October 2016; pp. 308–318.

- Zhang, T.; He, Z.; Lee, R.B. Privacy-preserving Machine Learning through Data Obfuscation. arXiv 2018, arXiv:1807.01860.

- A Privacy-Preserving Deep Learning Approach for Face Recognition with Edge Computing. In Proceedings of the USENIX Workshop on Hot Topics in Edge Computing (HotEdge 18), Boston, MA, USA, 10 July 2018.

- Wang, J.; Zhang, J.; Bao, W.; Zhu, X.; Cao, B.; Yu, P.S. Not Just Privacy: Improving Performance of Private Deep Learning in Mobile Cloud. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 2407–2416.

- Anil, R.; Pereyra, G.; Passos, A.T.; Ormandi, R.; Dahl, G.; Hinton, G. Large scale distributed neural network training through online distillation. arXiv 2018, arXiv:1804.03235.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. In Proceedings of the NIPS Deep Learning and Representation Learning Workshop, Montreal, QC, Canada, 12 December 2015.

- Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual Lifelong Learning with Neural Networks: A Review. arXiv 2018, arXiv:1802.07569.

- Tao, Y.; Tu, Y.; Shyu, M. Efficient Incremental Training for Deep Convolutional Neural Networks. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 286–291.

- Awasthi, A.; Sarawagi, S. Continual Learning with Neural Networks: A Review. In Proceedings of the ACM India Joint International Conference on Data Science and Management of Data, Swissotel, India, 3–5 January 2019; pp. 362–365.

- Losing, V.; Hammer, B.; Wersing, H. Incremental On-line Learning: A Review and Comparison of State of the Art Algorithms. Neurocomputing 2017, 275, 1261–1274.

| Metric | Cloud Computing | Edge Computing |
|---|---|---|
| Computing Power | Cloud servers are powerful computing machines, but they are shared by many users | Edge servers and devices are computationally less powerful, but they are shared only within a limited area |
| Latency | Services run faster on cloud servers, but the servers are accessed through public communication networks with high and variable latency | The proximity of edge servers and devices to the data source reduces the latency between service request and response, enabling real-time services |
| Bandwidth | Since all processing is done in the cloud, all data must be transmitted to the cloud servers, which requires high communication bandwidth | Since some or all of the processing is done at the edge, close to the data source, less communication bandwidth is required |
| Cost | Cloud usage has a cost and requires large, costly bandwidth to transfer data; both grow as new data sources are added | Local processing on edge servers and devices reduces communication costs |
| Security | Transferring sensitive data to the cloud over public, shared communication links reduces security and privacy | Edge computing reduces transfers of sensitive data, which increases security and keeps data private |
| Services | Cloud services are offered at the application level and are generic enough to satisfy many customers | Distributed, local computing allows task-level services directed at the specific needs of a user or customer |
| Management | Central services are easier to manage; from the user's point of view, management comes at no extra effort | Edge services are harder to manage since they are distributed and closer to the user |
| Energy | Cloud computing platforms are not energy-constrained; the main goal is performance | Edge and end devices are energy-constrained; performance is traded off against energy |

| Model | Depth | Parameters (M) | Operations (G) | Top-5 Error (%) | Top-1 Error (%) |
|---|---|---|---|---|---|
| AlexNet | 8 | 61 | 1.45 | 15.4 | 36.7 |
| ZfNet | 8 | 60 | 1.47 | — | 36.0 |
| VGG16 | 16 | 138 | 31.0 | 8.1 | 25.6 |
| Inception-v1 | 22 | 5 | 2.86 | 10.1 | 30.2 |
| Inception-v2 | 42 | 11 | 4.0 | 7.8 | 25.2 |
| Inception-v3 | 48 | 24 | 11.4 | 5.6 | 21.2 |
| Inception-v4 | 76 | 35 | 24.5 | 4.9 | 19.9 |
| ResNet-152 | 152 | 60 | 22.6 | 4.5 | 19.4 |
| ResNeXt | 50 | 68 | 8.4 | 4.4 | 19.1 |
| DenseNet-201 | 201 | 20 | 8.4 | 6.4 | 23.6 |
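The parameter and operation counts in the table above can be reproduced layer by layer. The sketch below is a minimal illustration, assuming that operations are counted as two per multiply-accumulate (MAC), one multiply and one add; this convention appears consistent with the table, since VGG16's roughly 15.5 G MACs correspond to the 31.0 G operations listed. The helpers `conv2d_cost` and `fc_cost` are hypothetical names, and strides, padding, and pooling are assumed to be already folded into the output size passed in.

```python
def conv2d_cost(h_out, w_out, c_in, c_out, k, bias=True):
    """Return (parameters, operations) of one standard k x k convolution layer.

    Operations are counted as 2 per multiply-accumulate (MAC): one multiply, one add.
    """
    params = k * k * c_in * c_out + (c_out if bias else 0)
    # Each output value is a dot product of length k * k * c_in.
    macs = h_out * w_out * c_out * (k * k * c_in)
    return params, 2 * macs


def fc_cost(n_in, n_out, bias=True):
    """Return (parameters, operations) of a fully connected layer."""
    params = n_in * n_out + (n_out if bias else 0)
    return params, 2 * n_in * n_out


if __name__ == "__main__":
    # First VGG16 convolution: 224 x 224 output, 3 -> 64 channels, 3 x 3 kernels.
    print(conv2d_cost(224, 224, 3, 64, 3))  # (1792, 173408256)
    # First VGG16 fully connected layer: 7 * 7 * 512 inputs -> 4096 outputs.
    print(fc_cost(7 * 7 * 512, 4096))       # (102764544, 205520896)
```

The first fully connected layer alone holds about 103 M of VGG16's 138 M parameters while contributing only about 0.2 G of its 31.0 G operations, which illustrates why memory footprint and compute load have to be assessed separately when targeting edge devices.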

| Model | Parameters (M) | Operations (G) | Top-5 Error (%) | Top-1 Error (%) |
|---|---|---|---|---|
| SqueezeNet | 1.2 | 1.72 | 19.7 | — |
| SqueezeNext | 3.2 | 1.42 | 11.8 | 32.5 |
| ShuffleNet x2 | 5.4 | 1.05 | 10.2 | 29.1 |
| MobileNetV1 | 4.2 | 1.15 | 10.5 | 29.4 |
| MobileNetV2 | 3.4 | 0.60 | 9.0 | 28.0 |
| MobileNetV3-Large | 5.4 | 0.44 | 7.8 | 24.8 |
| MobileNetV3-Small | 2.9 | 0.11 | 12.3 | 32.5 |
| CondenseNet G=C=4 | 2.9 | 0.55 | 10.0 | 29.0 |
| CondenseNet G=C=8 | 4.8 | 1.06 | 8.3 | 26.2 |
| NASNet-A | 5.3 | 1.13 | 8.4 | 26.0 |
| NASNet-B | 5.3 | 0.98 | 8.7 | 27.2 |
| NASNet-C | 4.9 | 1.12 | 9.0 | 27.5 |
| PNASNet | 5.1 | 1.18 | 8.1 | 25.7 |
| MNASNet-A1 | 3.9 | 0.62 | 7.5 | 24.8 |
| MNASNet-small | 2.0 | 0.14 | — | 34.0 |
| AmoebaNet-A | 5.1 | 1.11 | 8.0 | 25.5 |
| AmoebaNet-C | 6.4 | 1.14 | 7.6 | 24.3 |
| ANTNets | 3.7 | 0.64 | 8.8 | 26.8 |
| DARTS | 4.9 | 1.19 | 9.0 | 26.9 |
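Several models in the table above, including the MobileNet family, keep their operation counts low largely by replacing standard convolutions with depthwise separable ones: a k × k depthwise convolution (one filter per input channel) followed by a 1 × 1 pointwise convolution that mixes channels. The sketch below compares the MAC counts of the two for a single layer; it assumes stride 1, a square f × f output, m input channels, and n output channels, and the function names are hypothetical.

```python
def standard_conv_macs(f, m, n, k):
    """MACs of a k x k standard convolution with an f x f output, m -> n channels."""
    return f * f * m * n * k * k


def depthwise_separable_macs(f, m, n, k):
    """MACs of a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = f * f * m * k * k  # one k x k filter applied to each input channel
    pointwise = f * f * m * n      # 1 x 1 convolution combines the m channels into n
    return depthwise + pointwise


if __name__ == "__main__":
    f, m, n, k = 112, 32, 64, 3
    std = standard_conv_macs(f, m, n, k)
    sep = depthwise_separable_macs(f, m, n, k)
    # The ratio equals 1/n + 1/k**2 analytically.
    print(std, sep, round(sep / std, 4))  # 231211008 29302784 0.1267
```

For 3 × 3 kernels and 64 output channels, the cost ratio is 1/64 + 1/9 ≈ 0.127, so the separable layer needs roughly eight times fewer MACs; the saving grows with n and approaches 1/k² for wide layers.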

| Technique | References |
|---|---|
| Data Quantization | |
| 16 bit floating-point | [113] |
| 8 bit floating-point | [114] |
| 8 bit fixed-point | [115,116,117,118] |
| <8 bit fixed-point | [119,120] |
| Hybrid fixed-point | [121,122,123] |
| Binary | [124,125,126,127] |
| Ternary | [128,129,130] |
| Data and Operation Reduction | |
| Pruning | [131,132,133,134,135,136] |
| Zero skipping | [137,139,140,141] |
| Batch | [142,143,144] |
| Winograd | [146,147,148,149,150] |
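Most techniques in the table above can be illustrated on short lists of weights. The sketch below is a minimal, hardware-agnostic illustration and not the method of any cited work; all function names are hypothetical. `to_fixed_point` assumes a power-of-two scale with saturation, `ternarize` assumes a zeroing threshold of 0.7 × mean(|w|) in the style of Ternary Weight Networks (the cited works differ in detail), `magnitude_prune` uses a plain magnitude threshold, and `winograd_f23` implements the 1D F(2,3) minimal filtering algorithm described by Lavin and Gray, which produces two outputs of a 3-tap filter with 4 multiplications instead of 6 once the filter transform is precomputed.

```python
def to_fixed_point(values, total_bits=8, frac_bits=6):
    """Round to signed fixed-point with `frac_bits` fractional bits, saturating on overflow."""
    lo, hi = -(2 ** (total_bits - 1)), 2 ** (total_bits - 1) - 1
    codes = [max(lo, min(hi, round(v * 2 ** frac_bits))) for v in values]
    return codes, [c / 2 ** frac_bits for c in codes]  # integer codes, dequantized values


def binarize(values):
    """Binary quantization: sign(x), with 0 mapped to +1."""
    return [1 if v >= 0 else -1 for v in values]


def ternarize(values, delta_factor=0.7):
    """Threshold ternarization to {-alpha, 0, +alpha}.

    Weights with |w| <= delta_factor * mean(|w|) become 0; alpha is the mean
    magnitude of the weights that survive the threshold.
    """
    mean_abs = sum(abs(v) for v in values) / len(values)
    delta = delta_factor * mean_abs
    kept = [abs(v) for v in values if abs(v) > delta]
    alpha = sum(kept) / len(kept) if kept else 0.0
    return [alpha if v > delta else -alpha if v < -delta else 0.0 for v in values]


def magnitude_prune(weights, threshold):
    """Pruning: zero every weight whose magnitude is below `threshold`."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def zero_skipping_dot(activations, weights):
    """Dot product that issues a multiply only for nonzero activations.

    Returns the result and the number of multiplications actually performed.
    """
    acc, mults = 0.0, 0
    for a, w in zip(activations, weights):
        if a != 0:  # zero activations (e.g. after ReLU) are skipped
            acc += a * w
            mults += 1
    return acc, mults


def winograd_f23(d, g):
    """Winograd F(2,3): two outputs of a 3-tap filter over 4 inputs with 4 multiplies."""
    d0, d1, d2, d3 = d
    g0, g1, g2 = g
    # Filter transform; in inference it is computed once per filter, not per tile.
    u0, u1, u2, u3 = g0, (g0 + g1 + g2) / 2, (g0 - g1 + g2) / 2, g2
    m1 = (d0 - d2) * u0
    m2 = (d1 + d2) * u1
    m3 = (d2 - d1) * u2
    m4 = (d1 - d3) * u3
    return [m1 + m2 + m3, m2 - m3 - m4]


def direct_conv_1d(d, g):
    """Reference valid 1D correlation (6 multiplies for 2 outputs of a 3-tap filter)."""
    return [sum(d[i + j] * g[j] for j in range(len(g))) for i in range(len(d) - len(g) + 1)]


if __name__ == "__main__":
    w = [0.5, -0.3, 1.9, 0.01]
    print(to_fixed_point(w))  # ([32, -19, 122, 1], [0.5, -0.296875, 1.90625, 0.015625])
    print(binarize(w))        # [1, -1, 1, 1]
    print(winograd_f23([1, 2, 3, 4], [1, 2, 3]), direct_conv_1d([1, 2, 3, 4], [1, 2, 3]))
    # [14.0, 20.0] [14, 20]
```

In hardware, the benefit of the Winograd variant comes from reusing the transformed filter across many input tiles, which trades multiplications for cheaper additions; the quantization helpers, in turn, shrink each multiplication itself.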

| Accelerator | Year | Model | Performance | Efficiency |
|---|---|---|---|---|
| ASIC Technology | | | | |
| Gyrfalcon [151] | 2018 | CNN | 16.8 TOPs | 24 TOPs/W |
| Linley Group [152] | 2018 | CNN | 12.5 TOPs | — |
| EV6x [153] | 2017 | DNN | 9.0 TOPs | 4 TOPs/W |
| Tensilica DNA [154] | 2017 | DNN | 24 TOPs | 6.8 TOPs/W |
| Myriad X [155] | 2017 | DNN | 4 TOPs | — |
| [156] | 2017 | CNN | — | 3.9 TOPs/W |
| Snapdragon 865 [157] | 2019 | DNN | 15 TOPs | 3 TOPs/W |
| Kirin 900 [158] | 2019 | DNN | 8 TOPs | — |
| Ascend 910 [159] | 2019 | DNN | 512 TOPs | 1.7 TOPs/W |
| Reconfigurable Computing Technology | | | | |
| Eyeriss [161] | 2018 | CNN | 46.2 GOPs | 166 GOPs/W |
| Thinker chip [162] | 2018 | CNN | 368 GOPs | 1 TOPs/W |
| DRP [163] | 2018 | CNN | 960 GOPs | — |
| [167] | 2018 | CNN | 13 GOPs | — |
| [168] | 2018 | CNN | 84 GOPs | — |
| [169] | 2018 | CNN | 363 GOPs | 33 GOPs/W |
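The performance and efficiency columns above can be combined with the operation counts of the model tables for a back-of-the-envelope estimate. The sketch below assumes the accelerator sustains its reported peak throughput and efficiency on the chosen model, which is optimistic: peak figures are typically reported for specific precisions and workloads, so real latency and energy are usually higher. It relies on the identity 1 GOPs/W = 1 GOP per joule; the function name `ideal_inference_cost` is hypothetical.

```python
def ideal_inference_cost(model_gops, accel_gops, accel_gops_per_watt=None):
    """Lower-bound latency (ms) and energy (mJ) of one inference at peak figures.

    model_gops: operations per inference, in G operations.
    accel_gops: accelerator throughput, in G operations per second.
    accel_gops_per_watt: efficiency, in G operations per joule (optional).
    """
    latency_ms = model_gops / accel_gops * 1e3
    energy_mj = None
    if accel_gops_per_watt is not None:
        energy_mj = model_gops / accel_gops_per_watt * 1e3
    return latency_ms, energy_mj


if __name__ == "__main__":
    # MobileNetV2 (0.60 G operations) on Eyeriss (46.2 GOPs, 166 GOPs/W).
    lat, energy = ideal_inference_cost(0.60, 46.2, 166)
    print(f"{lat:.1f} ms, {energy:.2f} mJ")  # 13.0 ms, 3.61 mJ
```

The same arithmetic for VGG16 (31.0 G operations) gives a latency about 50 times longer on the same device, which is why the lighter models of the second table are the usual starting point for edge deployment.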

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Véstias, M.P.; Duarte, R.P.; de Sousa, J.T.; Neto, H.C. Moving Deep Learning to the Edge. Algorithms 2020, 13, 125. https://doi.org/10.3390/a13050125
