Abstract

This paper addresses the identification of non-linear dynamic systems in the presence of random noise. The class of considered systems is relatively general, in the sense that it is not limited to block-oriented structures such as Hammerstein or Wiener models. It is shown that the proposed algorithm can be generalized to a two-stage strategy. In step 1 (non-parametric), the system is approximated by multi-dimensional regression functions for a given set of excitations, treated as a representative set of points in a multi-dimensional space. The curse of dimensionality is addressed by using specific (quantized or periodic) input sequences. Next, in step 2, the non-parametric estimates are plugged into a least squares criterion to support model selection and estimation of the system parameters. The proposed strategy decomposes the identification problem, which can be crucial from the numerical point of view. The “estimation points” in step 1 are selected to ensure good conditioning of the task in step 2. Moreover, the non-parametric procedure plays the role of data compression. We discuss the selection of the scale of the non-parametric model and analyze the asymptotic properties of the method. The results of a simple simulation are presented to illustrate how the method works. Finally, the proposed method is successfully applied to a Differential Scanning Calorimeter (DSC) to analyze aging processes in chalcogenide glasses.

1. Introduction

The problem of non-linear system modeling has been intensively examined over the past four decades. Owing to many potential applications (see [1]) and the interdisciplinary scope of the topic, both scientists and engineers look for more precise and numerically efficient identification algorithms. The first attempts to generalize linear system identification theory to non-linear models were based on the Volterra series representation ([2]). The traditional Volterra series-based approach leads to relatively high numerical complexity, which is often not acceptable from a practical point of view. To cope with this problem, regularization and tensor network techniques have been proposed recently ([3,4]). However, strong restrictions are imposed on the system characteristics (e.g., smoothness of the nonlinearity and short memory of the dynamics). Alternatively, the concept of block-oriented models was introduced ([5]). It was assumed that the system can be represented, or approximated with satisfactory accuracy, by structural models consisting of interconnected elementary blocks of two types: linear dynamics and static nonlinearities ([6]). The most popular structures in this class are the Hammerstein system (see e.g., [7,8,9,10,11,12]), in which a static nonlinearity is followed by linear dynamics, and the Wiener system ([13,14,15,16,17,18]), which includes the same blocks connected in reverse order.

Traditionally, an identification method needs two kinds of knowledge: a set of input–output measurements and an a priori parametric formula describing the system characteristics and including a finite number of unknown parameters ([6,19]). Usually, a polynomial model of the static characteristic and a difference equation with known orders are assumed. This convention leads to relatively fast convergence of the parameter estimates, but a risk of systematic approximation error appears if the assumed model is not correct. As an alternative, the theory of non-parametric system identification ([20,21,22,23]) was proposed to solve this problem. The algorithms work under mild prior restrictions, such as stability of the linear dynamic block and local continuity of the static non-linear characteristic. Although the estimates converge to the true characteristics, the rate of convergence is relatively slow in practice, as a consequence of the relaxed assumptions.

This paper presents the idea of a combined, i.e., parametric-non-parametric, approach (see e.g., [24,25,26,27,28,29,30]), in which parametric and non-parametric algorithms support each other to achieve the best possible identification results for a moderate number of measurements and to guarantee asymptotic consistency (i.e., convergence to the true system characteristics) when the number of observations tends to infinity. Since the preliminary step of structure selection is treated rather cursorily in the literature, generalizations of the approach towards wider classes of systems seem to be of high importance from a practical point of view. The paper was also motivated by the Differential Scanning Calorimeter project ([31]) developed in the team, and particularly by the modeling of the heating process used to examine the properties of chalcogenide glasses.

The main contribution of the paper lies in the following aspects:

- the proposed identification algorithm is run without any prior knowledge about the system structure or the parametric representation of the nonlinearity,

- the non-parametric multi-dimensional kernel regression estimate is generalized to the modeling of non-linear dynamic systems, and the dimensionality problem is solved by using special input sequences,

- the scheme elaborated in the paper was successfully applied in a Differential Scanning Calorimeter for testing parameters of chalcogenide glasses.

The paper is organized as follows. In Section 2 the general class of considered systems and the identification problem are formulated in detail. Next, in Section 3.1, the purely non-parametric, regression-based approach is presented, and its disadvantages are discussed. Then, to cope with the dimensionality problem, the idea of specific input sequences is presented in Section 3.2. Owing to that, the system characteristics are identified only at selected points, but the convergence is much faster. The idea of the combined, two-stage strategy is introduced in Section 4. It allows the use of prior knowledge to extend the model to the whole input space. The results of a simple simulation are included in Section 5 to illustrate and discuss some practical aspects of the approach. Finally, in Section 6, the algorithm is successfully applied in a Differential Scanning Calorimeter to model aging properties of modern materials (chalcogenide glasses).

2. Problem Statement

2.1. Class of Systems

We consider a discrete-time non-linear dynamic system with the general representation

where is bounded random input sequence (), is zero mean random disturbance. The transformation is Lipschitz with respect to all arguments, and has the property of exponential forgetting (fading memory), i.e., if we put for and define the cut-off sequence as

We assume that it holds that

with some unknown , and . Similar class of fading memory systems, in which the output depends less and less on historical inputs, was considered in [32]. The goal is to identify the system (build the model ) using the sequence of N input–output measurements .

Hysteresis is not admitted in the considered class of systems.

2.2. Examples

In this section, we show that some systems popular in applications fit the above description as special cases.

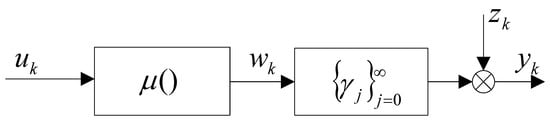

2.2.1. Hammerstein System

For Hammerstein system (see Figure 1), described by the equation

with the Lipschitz non-linear characteristic, i.e., such that

and asymptotically stable dynamics, i.e., , we get

Figure 1. Hammerstein system.

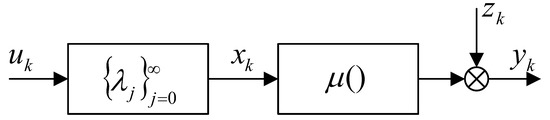

2.2.2. Wiener System

Analogously, for Wiener system (Figure 2), where the stable linear dynamics is followed by the Lipschitz static non-linear block

Figure 2. Wiener system.

We get

Remark 1.

Analogously, it can be simply shown that also Wiener–Hammerstein (L–N–L) and Hammerstein–Wiener (N–L–N) sandwich systems belong to the assumed class.

2.2.3. Finite Memory Bilinear System

Another important special case, often met in applications, is the bilinear system of finite order m. It is described by the formula

i.e., for we get

for we have that

Since does not depend on , for we get , and obviously .

The considered example falls into the more general class of Volterra representations. The presented approach works without knowledge of the parametric representation. As regards applicability to the class (1), for we have a finite memory system, i.e., , as . Moreover, since the input is assumed to be bounded (), the resulting mapping fulfills the Lipschitz condition (as an ordinary polynomial on a compact support).
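The fading-memory property used in Section 2.1 can be checked numerically for a Hammerstein system. The sketch below is only an illustration under stated assumptions: the geometric impulse response with pole `lam`, the nonlinearity `tanh` (Lipschitz, zero at zero, bounded by 1) and all variable names are hypothetical choices that do not come from the paper. It compares the noise-free output for the full input history with the output obtained when only the s most recent inputs are kept, and checks that the difference stays below the geometric bound `lam**s / (1 - lam)`.

```python
import numpy as np

def hammerstein_output(u, gamma, mu):
    """Noise-free Hammerstein output at the last instant: sum_i gamma[i] * mu(u_{k-i})."""
    w = mu(u[::-1][:len(gamma)])          # w[i] = mu(u_{k-i}), most recent sample first
    return float(np.dot(gamma[:len(w)], w))

rng = np.random.default_rng(0)
lam = 0.5                                 # hypothetical pole of the linear block
gamma = lam ** np.arange(60)              # impulse response, truncated at 60 taps
mu = np.tanh                              # hypothetical Lipschitz nonlinearity, mu(0) = 0
u = rng.uniform(-1.0, 1.0, size=60)       # bounded input history

full = hammerstein_output(u, gamma, mu)
errors, bounds = [], []
for s in range(1, 11):
    u_cut = u.copy()
    u_cut[:-s] = 0.0                      # keep only the s most recent inputs
    errors.append(abs(full - hammerstein_output(u_cut, gamma, mu)))
    bounds.append(lam ** s / (1.0 - lam)) # geometric tail bound, since |tanh| <= 1
```

Under these assumptions every entry of `errors` is dominated by the matching entry of `bounds`, which decays exponentially in s.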

3. Non-Parametric Regression

3.1. General Overview

Let us introduce the s-dimensional input regressor

and the regression function

with the argument vector

In particular, for we get

and for

The non-parametric kernel estimate ([20,21,22,23,33]) of has the following form

where denotes Euclidean norm, plays the role of kernel function, e.g.,

and h is a bandwidth parameter, responsible for the balance between the bias and the variance of the estimate. The class of possible kernels can obviously be generalized. Nevertheless, previous experience shows that the kind of kernel function used for estimation is of secondary importance, whereas the behavior of the bandwidth h with respect to N is fundamental. We limit the presentation to the Parzen (window) kernel for clarity of exposition. It fulfills all general assumptions made for kernels, i.e., it is even, non-negative and square integrable. The system (1) is thus approximated by the model

and s can be interpreted as its complexity. Obviously, both s and h need to be set depending on the number of measurements N. Observing that

the mean squared error of the model can be expressed as follows

and introducing the true finite-dimensional regression function we get

Since both as and as , these components can be set arbitrarily small by using appropriate scale s. Owing to above, for fixed s we focus on the first component of the MSE error of the form

where

It can simply be shown that

The bias order follows directly from Lipschitz condition, and the fact that for all k’s selected by kernel. Moreover,

For window kernel, Lipschitz function , and strictly positive input probability density function around the estimation point, probability of selection in s-dimensional space can be obviously evaluated from below by , where c is some constant. Hence, expected number of successes is proportional to (not less than). The variance order is thus a simple consequence of Wald’s identity. Hence, to assure the convergence , as in the mean square sense, the following conditions must be fulfilled

which leads to typical setting

To obtain the best asymptotic trade-off between squared bias and variance and comparing its orders we get

Moreover, to assure the balance between the estimation error and approximation error of order , connected with neglecting the tail we get

where . Owing to the above, the scale s must not grow faster than , i.e.,

which illustrates how slowly the admissible model complexity may increase in the general case. The property (38), commonly known as the “curse of dimensionality”, illustrates the main drawback of the multi-dimensional non-parametric regression approach to system modeling in its traditional form. The reason is that the probability of kernel selection

decreases rapidly when s grows large. We also refer the reader to the proof of Theorem 3 in [26], where a detailed discussion concerning an analogous problem can be found.
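As an illustration of the estimate discussed in this section, the following sketch implements a window-kernel regression on s-dimensional input regressors and counts how many measurements the kernel selects as s grows. It is a minimal sketch, not the authors' implementation: the simulated system (`tanh` of a two-tap filter output), the bandwidth `h = 0.3`, the convention that a point is selected when its Euclidean distance from the estimation point is at most h, and the helper names `build_regressors` and `window_kernel_estimate` are all assumptions made for the example.

```python
import numpy as np

def build_regressors(u, s):
    """Rows are (u_k, u_{k-1}, ..., u_{k-s+1}) for k = s-1, ..., N-1."""
    N = len(u)
    return np.column_stack([u[s - 1 - i : N - i] for i in range(s)])

def window_kernel_estimate(x, U, y, h):
    """Mean of the outputs whose regressor lies within Euclidean distance h of x."""
    selected = np.linalg.norm(U - x, axis=1) <= h
    if not selected.any():
        return np.nan, 0                  # no data selected by the kernel
    return float(y[selected].mean()), int(selected.sum())

rng = np.random.default_rng(1)
N = 20000
u = rng.uniform(-1.0, 1.0, size=N)
u_prev = np.concatenate(([0.0], u[:-1]))
# Hypothetical system used only for this illustration.
y = np.tanh(u + 0.5 * u_prev) + 0.1 * rng.standard_normal(N)

h = 0.3
counts = []
for s in range(1, 5):
    U = build_regressors(u, s)
    _, n_selected = window_kernel_estimate(np.zeros(s), U, y[s - 1:], h)
    counts.append(n_selected)             # shrinks quickly as s grows

U2 = build_regressors(u, 2)
estimate_at_origin, _ = window_kernel_estimate(np.zeros(2), U2, y[1:], h)
```

With a continuous input density the number of selected points drops sharply with each added dimension, which is the effect that the special input sequences of Section 3.2 are designed to avoid.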

3.2. Dimension Reduction

To cope with the problem shown in (39) we consider two cases of some specific input excitation processes to speed up the rate of convergence.

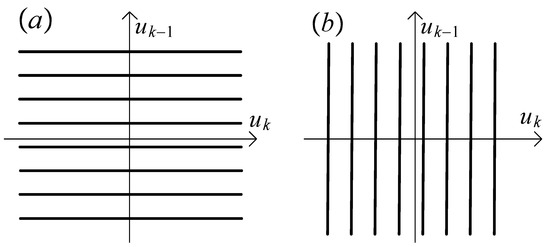

3.2.1. Discrete Input

In case 1 we assume that in each s-element input sub-sequence , there exist d inputs with a discrete distribution on a finite set of possible realizations. Consequently, all the points lie on a finite number of separable and compact subspaces with the internal dimension

and for we have

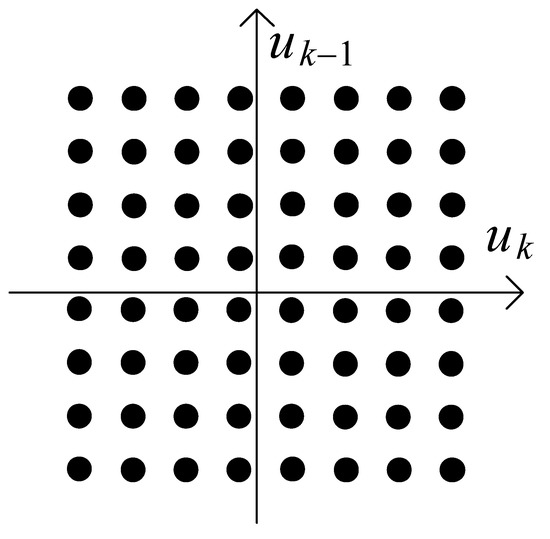

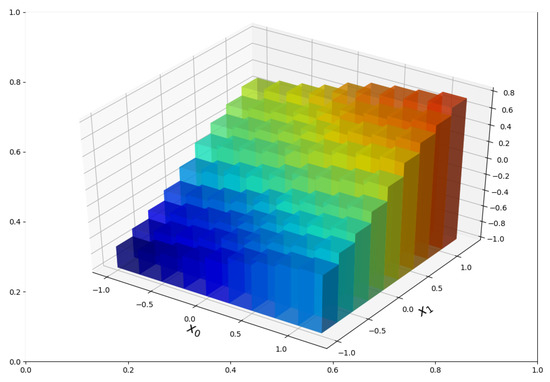

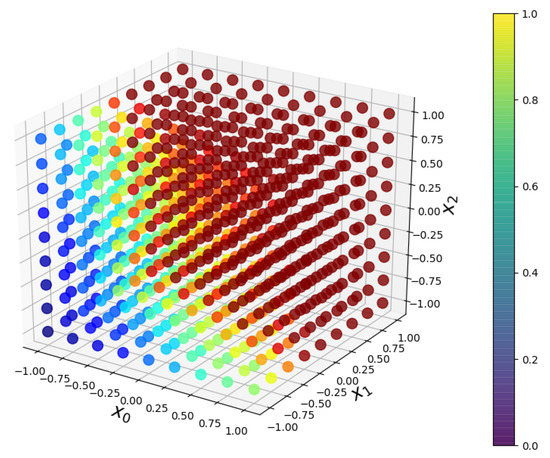

For each measurement point probability of kernel selection behaves like , where , , is internal dimension of this subspace. In particular, for (all input variables quantized) we get . The sets of possible input sequences for are illustrated in Figure 3 and Figure 4.

Figure 3. Input space for and , (a) k odd, (b) k even.

Figure 4. Input space for and .
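For the fully quantized case (all s inputs discrete), the regressor can take only finitely many values, so the kernel estimate with a sufficiently small bandwidth reduces to averaging the outputs observed for each distinct input tuple. The sketch below illustrates this under assumed settings: three quantization levels, s = 2, a hypothetical test system and the helper name `cell_averages` are illustrative choices, not taken from the paper.

```python
import numpy as np
from collections import defaultdict

def cell_averages(u, y, s):
    """Average the outputs over identical tuples (u_k, ..., u_{k-s+1}) of a quantized input."""
    sums, counts = defaultdict(float), defaultdict(int)
    for k in range(s - 1, len(u)):
        key = tuple(u[k - s + 1 : k + 1][::-1].tolist())   # most recent input first
        sums[key] += y[k]
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}, dict(counts)

rng = np.random.default_rng(2)
levels = np.array([-1.0, 0.0, 1.0])       # hypothetical quantization levels
N, s = 5000, 2
u = rng.choice(levels, size=N)
u_prev = np.concatenate(([0.0], u[:-1]))
# Hypothetical system used only for this illustration.
y = np.tanh(u + 0.5 * u_prev) + 0.1 * rng.standard_normal(N)

estimates, counts = cell_averages(u, y, s)
```

Here the 9 possible tuples each collect a fixed fraction of the N measurements regardless of the bandwidth, so the averaging error decays like that of an ordinary sample mean.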

3.2.2. Periodic Input

In case 2 we assume the input is periodic with the period , i.e., for each . Then, the value of the regressor (see (13)) evaluates to one of distinct points in , ,

with probabilities

Measurements are uniformly distributed on the finite set of distinct points

Narrowing of does not affect the kernel estimator asymptotically (i.e., ). Consequently, we get the best possible convergence rate . However, it must be admitted that the estimators are calculated only for a finite number of points, and that increasing causes an increase of the variance of the regression estimator at particular points (as the number of selected data is of order ).
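The periodic case can be illustrated in the same spirit. In the sketch below the period `T = 8`, the regressor length `s = 3`, the sample size and the test system are hypothetical values chosen for the example. The regressor built from a periodic input visits only T distinct points, each roughly N/T times, and the estimate at each point is the plain average of the outputs recorded there.

```python
import numpy as np

rng = np.random.default_rng(3)
T, s, N = 8, 3, 4000                      # hypothetical period, regressor length, sample size
one_period = rng.uniform(-1.0, 1.0, size=T)
u = np.tile(one_period, N // T)           # periodic input, u[k + T] == u[k]

# Hypothetical system; np.roll supplies u_{k-1} consistently because u is periodic.
y = np.tanh(u + 0.5 * np.roll(u, 1)) + 0.1 * rng.standard_normal(N)

# Rows are (u_k, u_{k-1}, ..., u_{k-s+1}) for k = s-1, ..., N-1.
U = np.column_stack([u[s - 1 - i : N - i] for i in range(s)])
points, inverse, visits = np.unique(U, axis=0, return_inverse=True, return_counts=True)
inverse = inverse.ravel()                 # guard against shape differences across NumPy versions

# Estimate at each distinct regressor point: average of the outputs observed there.
means = np.bincount(inverse, weights=y[s - 1:]) / visits
noise_free = np.tanh(points[:, 0] + 0.5 * points[:, 1])
max_error = float(np.max(np.abs(means - noise_free)))
```

Each point receives about N/T measurements, so a longer period gives more estimation points at the cost of a larger variance at each of them.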

4. Hybrid/Combined Parametric-Non-Parametric Approach

Since the special input excitations allow fast recovery of the system characteristics only at some points, additional prior knowledge is needed to extend the model to an arbitrary process . In this section we assume that the transformation belongs to the given (a priori known), finite dimensional class of systems

with unknown parameter vector . In the proposed methodology, one of the input excitations described in Section 3.2 is applied. The system is identified on the finite set of representative points , where , and . Let us denote the true and unknown vector of system parameters by . We assume that is identifiable, i.e., the following property holds

Moreover, let the quality index

be convex for , where is some neighborhood of the true

The following two-step algorithm is proposed.

Step 1. (non-parametric) Using the input–output observations for compute the estimates

Step 2. (parametric) Minimize the empirical version of the least squares criterion (47)

Lemma 1.

Let be Lipschitz with respect to all ’s included in and all parameters in θ. If the error of non-parametric estimate behaves like in the mean square sense, for all , then

in the parametric step 2.

Proof.

The property (51) can be proven following the lines of the proof of Theorem 1 in [25]. □
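The two-step algorithm can be sketched as follows for a model class that is linear in its parameters, so that step 2 reduces to ordinary least squares. Everything specific here is an assumption made for illustration: the bilinear-type model y_k = θ₁u_k + θ₂u_k u_{k−1} + z_k, the parameter values in `theta_true`, the three-level quantized input and the noise level. For a class that is non-linear in θ, step 2 would instead require an iterative non-linear least squares solver.

```python
import numpy as np

rng = np.random.default_rng(4)
theta_true = np.array([1.5, -0.7])        # hypothetical true parameters
levels = np.array([-1.0, 0.0, 1.0])       # hypothetical quantized input levels
N = 6000
u = rng.choice(levels, size=N)
u_prev = np.concatenate(([0.0], u[:-1]))
y = theta_true[0] * u + theta_true[1] * u * u_prev + 0.2 * rng.standard_normal(N)

# Step 1 (non-parametric): regression estimates at the representative points
# (u_k, u_{k-1}) obtained by averaging the outputs observed at each point.
points = np.array([(a, b) for a in levels for b in levels])
R_hat = np.array([
    y[1:][(u[1:] == a) & (u_prev[1:] == b)].mean() for a, b in points
])

# Step 2 (parametric): least squares fit of the assumed model class to the
# pattern {(point, R_hat)} instead of the N raw measurements.
Phi = np.column_stack([points[:, 0], points[:, 0] * points[:, 1]])
theta_hat, *_ = np.linalg.lstsq(Phi, R_hat, rcond=None)
```

Note that step 2 works with only 9 pattern points rather than the 6000 measurements, which is the data-compression effect mentioned in the paper.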

Remark 2.

Example 1.

For the system

in step 1 we can estimate two-dimensional () regression function in representative points , and , i.e., compute the pattern

Since the true values of the regression function are respectively and , in step 2 we get trivial estimates of parameters

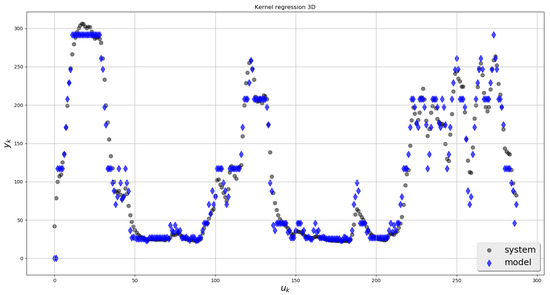

5. Simulation Example

To illustrate the proposed method, we simulated a simple Wiener system (see Figure 2) with

excited by random process uniformly distributed on equidistant set of points

and uniformly distributed output disturbance

For simulated input–output pairs , the non-parametric models were computed for and compared with respect to the following empirical error

The results are presented in Table 1, Figure 5 and Figure 6. Explicit derivation of the true finite-order (2D or 3D) regression function is problematic, owing to the fact that the neglected part of the input signal, i.e., the ‘tail’ connected with terms , is transferred through the non-linear characteristic . Figure 5 and Figure 6 illustrate the non-parametric character and non-linear properties of the model, and give a general view of the shape of the input–output relationship, which can be helpful for subsequent parametrization. Quantized input (59) can be a good choice, when , and the non-parametric estimate plays a supporting role for non-linear least squares-based parameter estimation in step 2. Nevertheless, in the purely non-parametric approach, i.e., for , the number of possible realizations of increases exponentially. In the considered example, for 9 points in (59), the probability of kernel selection for each behaves like and the estimate becomes sensitive to the noise .

Table 1. Mean squared errors of model outputs for , compared to best linear approximation (BLA).

Figure 5. Two-dimensional regression-based model .

Figure 6. Three-dimensional regression-based model .

Table 1 illustrates the reduction of the error with increasing scale of the regression. The results are also compared with the best linear approximation of the Wiener system. We emphasize that the improvement is achieved under mild prior restrictions, i.e., the non-linear model is built from measurement data only.
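A comparison of this kind can be reproduced in outline with the sketch below. It does not use the paper's system or reproduce Table 1: the Wiener system (first-order filter with pole 0.5 followed by the characteristic v + v²), the noise range, the sample size and the train/validation split are all hypothetical. The input takes 9 equidistant values, the non-parametric model of scale s averages the outputs over identical input tuples, and a least-squares FIR fit with an intercept serves as a stand-in for the best linear approximation.

```python
import numpy as np

rng = np.random.default_rng(5)
levels = np.linspace(-1.0, 1.0, 9)        # 9 equidistant input values
N = 20000
idx = rng.integers(0, 9, size=N)          # index of the input level at each instant
u = levels[idx]

# Hypothetical Wiener system: linear dynamics followed by a static nonlinearity.
v = np.zeros(N)
for k in range(N):
    v[k] = u[k] + (0.5 * v[k - 1] if k > 0 else 0.0)
y = v + v ** 2 + rng.uniform(-0.1, 0.1, size=N)

s_max = 3
ks = np.arange(s_max - 1, N)              # instants with a complete regressor
train = ks < N // 2                       # first half: estimation, second half: validation

def cell_ids(s):
    """Integer label of the tuple (u_k, ..., u_{k-s+1}) for every k in ks."""
    return sum(idx[ks - i] * 9 ** i for i in range(s))

mse = {}
for s in range(1, s_max + 1):
    c = cell_ids(s)
    sums = np.bincount(c[train], weights=y[ks][train], minlength=9 ** s)
    cnts = np.bincount(c[train], minlength=9 ** s)
    # Empty cells fall back to the global mean of the estimation data.
    means = np.where(cnts > 0, sums / np.maximum(cnts, 1), y[ks][train].mean())
    mse[s] = float(np.mean((y[ks][~train] - means[c[~train]]) ** 2))

# Linear FIR(s_max) model with intercept, fitted by least squares.
Phi = np.column_stack([np.ones(len(ks))] + [u[ks - i] for i in range(s_max)])
coef, *_ = np.linalg.lstsq(Phi[train], y[ks][train], rcond=None)
mse_fir = float(np.mean((y[ks][~train] - Phi[~train] @ coef) ** 2))
```

In this hypothetical setting the validation error decreases with the scale s and falls below that of the linear fit, which mirrors the qualitative behavior described for Table 1.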

6. Application in Testing of Chalcogenide Glasses with the Use of DSC Method

In this section, we apply the proposed algorithm to the identification of the heating process in a Differential Scanning Calorimeter [31].

6.1. Chalcogenide Glasses

Materials with non-linear optical properties play a key role in frequency conversion and optical switching. Chalcogenide glasses are among the most promising materials in this area, because of their good non-linear, passive and active properties. They are considered to be the optical medium for the fibers of the 21st century. Chalcogenide glass fibers transmit into the IR, hence they have numerous potential applications in the civil, medical and military areas. The IR light sources, lasers and amplifiers developed using these phenomena will be very useful in many areas of industry. High-speed optical communication requires ultra-fast all-optical processing and switching capabilities.

In a DSC experiment, the energy (heat flow) can be established as a function of time or temperature. Energy from an external source is required to bring the temperature difference between the tested and reference samples to zero. Both samples are heated or cooled in a controlled mode, which enables the detection of thermal events occurring in physical or chemical transformations under a temperature that changes in a specific manner. Owing to that, many thermodynamically important parameters can be established, e.g., the glass transition or softening temperature, the melting temperature, and the melting enthalpy. The results also allow observation of physical aging processes. The goal is to control the temperature of the heating module precisely and to ensure its linearity. It is planned to design a Model Following Control (MFC) structure to optimize the quality indexes of temperature control. Below we present the results of the identification experiment.

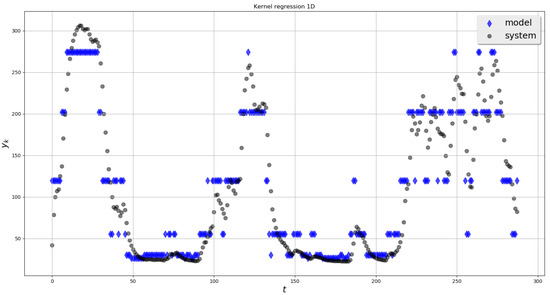

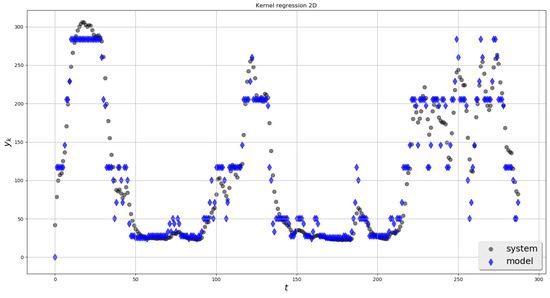

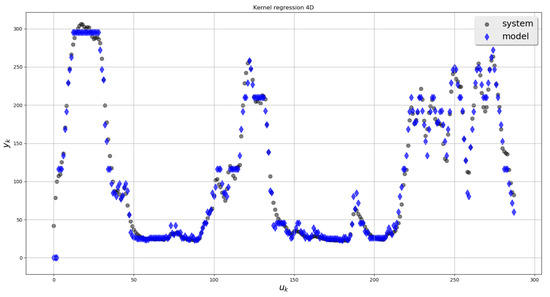

6.2. Results of Experiment

Treating the sample temperature as system output , and the power of the heating element as input , the non-parametric multi-dimensional regression model was computed for . The results are shown in Figure 7, Figure 8, Figure 9 and Figure 10.

Figure 7. Experimental data: 1-dimensional regression.

Figure 8. Experimental data: 2-dimensional regression.

Figure 9. Experimental data: 3-dimensional regression.

Figure 10. Experimental data: 4-dimensional regression.

The Differential Scanning Calorimeter for chalcogenide glasses (built by members of the team) was first approximated by a linear model, and the results were not satisfactory. To improve accuracy, the Hammerstein model was applied, with the model structure chosen arbitrarily. To avoid the risk of bad parameterization, the general approach presented in the paper was applied. The results are comparable, although obtained without making any restrictive assumptions about the block-oriented structure of the model.

In Table 2 the resulting mean squared errors for various scales are shown. The strong point of the method is that asymptotically, as , the model becomes free of approximation error, in contrast to the linear or block-oriented representation.

Table 2. Mean squared errors of model outputs for .

The results have been compared to the FIR(s) linear model and the parametric Hammerstein model. The non-parametric algorithms for Hammerstein systems proposed by Greblicki and Pawlak in the 1980s [20] suffer from correlation of the input, and are not applicable here. On the other hand, for the parametric Hammerstein model, the results strongly depend on the arbitrarily selected basis functions of the nonlinearity. In our experiment we applied a 3rd order polynomial function connected in cascade with an FIR(s) linear dynamic filter. Table 2 shows that our method is more accurate, even though it works under mild prior restrictions.

7. Conclusions

The main contribution of the work lies in the fact that the model is built without prior knowledge about the structure of the system and its characteristics. No decision to use a particular Hammerstein or Wiener model is needed to start the procedure. The obtained non-parametric estimates can subsequently be plugged into the least squares optimization criterion in step 2, to provide a parametric representation of the relationship. Parametric and non-parametric methods can thus be combined into a strategy that retains the advantages of both approaches. Step 1 (non-parametric) is run to estimate selected points of the system characteristic. It is done effectively thanks to the generation of a specific input excitation (discrete or periodic), which avoids the problem of high dimensionality. Moreover, appropriate selection of the estimation points can significantly decrease the difficulty of the non-linear optimization task in step 2. The rate of convergence of the parameter estimates is the same as for the non-parametric ones.

The scheme presented in the paper is universal for a broad class of systems including Hammerstein and Wiener structures, and their interconnections. Non-parametric data pre-filtering also plays the role of a compression algorithm, and the result of step 1 can be treated as a simplified pattern of the system for subsequent structure detection and selection of its best parametric model. The regression-based non-parametric model can be computed only for the set of selected points, and the resulting pairs can be used as a compressed pattern (as ) of the system, instead of N data points .

The non-parametric pattern can support the selection of a model from a list of potential candidates, and model competitions can be performed in user-defined regions of interest, e.g., at the working points.

Author Contributions

Formal analysis, G.M.; methodology, Z.H.; software, P.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research (including APC) was funded and supported by the National Science Centre, Poland, Grant No. 2016/21/B/ST7/02284.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Giannakis, G.; Serpedin, E. A bibliography on nonlinear system identification. Signal Process. 2001, 81, 533–580.

2. Schetzen, M. The Volterra and Wiener Theories of Nonlinear Systems; John Wiley & Sons: Hoboken, NJ, USA, 1980.

3. Batselier, K.; Chen, Z.; Wong, N. Tensor Network alternating linear scheme for MIMO Volterra system identification. Automatica 2017, 84, 26–35.

4. Birpoutsoukis, G.; Marconato, A.; Lataire, J.; Schoukens, J. Regularized non-parametric Volterra kernel estimation. Automatica 2017, 82, 324–327.

5. Narendra, K.; Gallman, P.G. An iterative method for the identification of nonlinear systems using the Hammerstein model. IEEE Trans. Autom. Control 1966, 11, 546–550.

6. Giri, F.; Bai, E.W. Block-Oriented Nonlinear System Identification; Lecture Notes in Control and Information Sciences; Springer: Berlin/Heidelberg, Germany, 2010.

7. Bai, E.; Li, D. Convergence of the iterative Hammerstein system identification algorithm. IEEE Trans. Autom. Control 2004, 49, 1929–1940.

8. Billings, S.; Fakhouri, S. Identification of systems containing linear dynamic and static nonlinear elements. Automatica 1982, 18, 15–26.

9. Chang, F.; Luus, R. A noniterative method for identification using Hammerstein model. IEEE Trans. Autom. Control 1971, 16, 464–468.

10. Giri, F.; Rochdi, Y.; Chaoui, F. Parameter identification of Hammerstein systems containing backlash operators with arbitrary-shape parametric borders. Automatica 2011, 47, 1827–1833.

11. Śliwiński, P. Nonlinear System Identification by Haar Wavelets; Lecture Notes in Statistics; Springer: Berlin/Heidelberg, Germany, 2013.

12. Stoica, P.; Söderström, T. Instrumental-variable methods for identification of Hammerstein systems. Int. J. Control 1982, 35, 459–476.

13. Bershad, N.; Celka, P.; Vesin, J. Analysis of stochastic gradient tracking of time-varying polynomial Wiener systems. IEEE Trans. Signal Process. 2000, 48, 1676–1686.

14. Chen, H.F. Recursive identification for Wiener model with discontinuous piece-wise linear function. IEEE Trans. Autom. Control 2006, 51, 390–400.

15. Hagenblad, A.; Ljung, L.; Wills, A. Maximum likelihood identification of Wiener models. Automatica 2008, 44, 2697–2705.

16. Lacy, S.; Bernstein, D. Identification of FIR Wiener systems with unknown, non-invertible, polynomial non-linearities. Int. J. Control 2003, 76, 1500–1507.

17. Vörös, J. Parameter identification of Wiener systems with multisegment piecewise-linear nonlinearities. Syst. Control Lett. 2007, 56, 99–105.

18. Wigren, T. Convergence analysis of recursive identification algorithms based on the nonlinear Wiener model. IEEE Trans. Autom. Control 1994, 39, 2191–2206.

19. Pintelon, R.; Schoukens, J. System Identification: A Frequency Domain Approach; Wiley-IEEE Press: Hoboken, NJ, USA, 2004.

20. Greblicki, W.; Pawlak, M. Nonparametric System Identification; Cambridge University Press: Cambridge, UK, 2008.

21. Györfi, L.; Kohler, M.; Krzyżak, A.; Walk, H. A Distribution-Free Theory of Nonparametric Regression; Springer: New York, NY, USA, 2002.

22. Härdle, W. Applied Nonparametric Regression; Cambridge University Press: Cambridge, UK, 1990.

23. Wand, M.; Jones, H. Kernel Smoothing; Chapman and Hall: London, UK, 1995.

24. Hasiewicz, Z.; Mzyk, G. Combined parametric-nonparametric identification of Hammerstein systems. IEEE Trans. Autom. Control 2004, 48, 1370–1376.

25. Hasiewicz, Z.; Mzyk, G. Hammerstein system identification by non-parametric instrumental variables. Int. J. Control 2009, 82, 440–455.

26. Mzyk, G. A censored sample mean approach to nonparametric identification of nonlinearities in Wiener systems. IEEE Trans. Circuits Syst. 2007, 54, 897–901.

27. Mzyk, G. Combined Parametric-Nonparametric Identification of Block-Oriented Systems; Lecture Notes in Control and Information Sciences; Springer: Berlin/Heidelberg, Germany, 2014.

28. Mzyk, G.; Wachel, P. Kernel-based identification of Wiener-Hammerstein system. Automatica 2017, 83, 275–281.

29. Mzyk, G.; Wachel, P. Wiener system identification by input injection method. Int. J. Adapt. Control Signal Process. 2020, 34, 1105–1119.

30. Wachel, P.; Mzyk, G. Direct identification of the linear block in Wiener system. Int. J. Adapt. Control Signal Process. 2016, 30, 93–105.

31. Kozdraś, B.; Mzyk, G.; Mielcarek, P. Identification of the heating process in Differential Scanning Calorimetry with the use of Hammerstein model. In Proceedings of the 2020 21st International Carpathian Control Conference (ICCC), High Tatras, Slovakia, 27–29 October 2020.

32. Boyd, S.; Chua, L. Fading memory and the problem of approximating nonlinear operators with Volterra series. IEEE Trans. Circuits Syst. 1985, 32, 1150–1161.

33. Van der Vaart, A.W. Asymptotic Statistics; Cambridge University Press: Cambridge, UK, 2000.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).