Abstract

A large amount of time series data is generated every day in a wide range of sensor application domains. Symbolic aggregate approximation (SAX) is a well-known time series representation method that discretizes continuous time series and whose distance measure lower-bounds the Euclidean distance. SAX has been widely used in various domains, such as mobile data management, financial investment, and shape discovery. However, the SAX representation has a limitation: symbols are mapped from the average values of segments, and SAX does not consider the boundary distance within the segments. Different segments with similar average values may be mapped to the same symbols, in which case the SAX distance between them is 0. In this paper, we propose a novel representation named SAX-BD (boundary distance), which integrates the SAX distance with a weighted boundary distance. The experimental results show that SAX-BD significantly outperforms the SAX, ESAX, and SAX-TD representations.

1. Introduction

Time series data are being generated every day in a wide range of application domains [1], such as bioinformatics, finance, engineering, etc. [2]. The parallel explosions of interest in streaming data and data mining of time series [3,4,5,6,7,8,9] have had little intersection. Time series classification methods can be divided into three main categories [10]: feature based, model based and distance based. There are many methods for feature extraction, for example: (1) spectral analysis such as discrete Fourier transform (DFT) [11], (2) discrete wavelet transform (DWT) [12], where features of the frequency domain are considered, and (3) singular value decomposition (SVD) [13], where eigenvalue analysis is carried out in order to find an optimal set of features. The model-based classification methods include auto-regressive models [14,15] or hidden Markov models [16], among others. In distance-based methods, 1-NN [1] has been a widely used method due to its simplicity and good performance.

Until now, almost all research in distance-based classification has been oriented toward defining different types of distance measures and then exploiting them within 1-NN classifiers. The 1-NN classifier is probably the simplest of all classifiers, yet it performs well. Using Dynamic Time Warping (DTW) [17] as the distance measure for the 1-NN classifier achieved the highest classification accuracy at the time. However, the high dimensionality, high volume, high feature correlation, and heavy noise of time series pose great challenges to classification and can even make DTW unusable. In fact, the performance of all non-trivial data mining and indexing algorithms degrades exponentially with dimensionality. For example, above 16–20 dimensions, index structures degrade to sequential scanning [18]. The Piecewise Aggregate Approximation (PAA) [19] and the Symbolic Aggregate Approximation (SAX) [20] were proposed to reduce the dimensionality of time series while lower-bounding the Euclidean distance. Because the distance in the SAX representation lower-bounds the Euclidean distance, the SAX representation speeds up the data mining process of time series data while maintaining the quality of the mining results. SAX has been widely used in mobile data management [21], financial investment [22], and feature extraction [23]. In recent years, with the popularity of deep learning, applying deep learning methods to multivariate time series classification has also received attention [24].

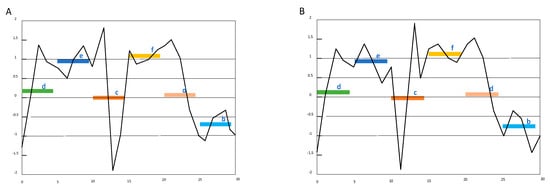

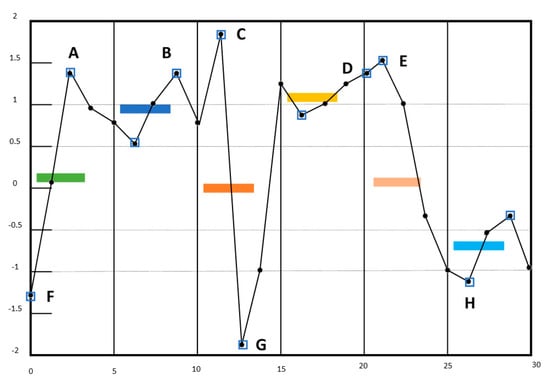

SAX allows a time series of arbitrary length n to be reduced to a string of arbitrary length w [20] (w < n, typically w << n). The alphabet size α is also an arbitrary integer. The SAX representation has a major limitation: symbols are mapped from the average values of segments, so some important features may be lost. For example, in Figure 1, if w = 6 and α = 6, time series A is represented as ‘decfdb’.

Figure 1.

Financial time series A and B have the same SAX symbolic representation ‘decfdb’ in the same condition where the length of time series is 30, the number of segments is 6 and the size of symbols is 6. However, they are different time series.

However, it can be clearly seen from Figure 1 that the time series change drastically. Therefore, a compromise is needed that reduces the dimension of time series while improving the accuracy. The ESAX representation can express the characteristics of time series in more detail [25]. It chooses the maximum, the minimum, and the average value in each time series segment as new features, and then maps these features to strings according to the SAX method. For example, time series A in Figure 1 is represented as ‘adfeeffcaefffdaabc’.

The SAX-TD (trend distance) method improves on the accuracy of ESAX and reduces the complexity of the symbolic representation [26]. It uses fewer values than ESAX because each segment needs only one trend distance. In Figure 1, time series A is represented as ‘−1.4d0.13e0.75c0.13f1.25d−0.25b−0.25’.

In this paper, we propose a new method, SAX-BD, in which BD stands for boundary distance. Each time series segment has a maximum point and a minimum point, and we call the distances from these points to the average value of the segment the boundary distance. Time series A and B in Figure 1 have a high probability of being identified as the same if SAX-TD is used. In our method, however, time series A is represented as ‘d(−1.4,0.63)e(−0.38,0.38)c(1.36,−1.39)f(−0.25,0.36)d(1.5,−1)b(−0.38,0.38)’ and time series B is represented as ‘d(−1.4,1.2)e(0.38,−0.5)c(−1.8,1.9)f(0.38,−0.25)d(1.5,−1.0)b(0.45,−0.55)’. Obviously, there is a big difference between the two representations.

Our work makes three main contributions. First, we propose an intuitive boundary distance measure on time series segments. The average value of a segment together with its boundary distance helps measure different trends of time series more accurately, so our representation captures the trends in time series better than the SAX, ESAX, and SAX-TD representations. Second, we discuss the SAX-TD and ESAX algorithms and explain that our method is in fact a generalization of these two methods. On the data sets where they perform poorly, our method improves the results to a certain extent; on the data sets where they perform better, the loss in accuracy of our method stays within a very small range. Third, we prove that our improved distance measure not only lower-bounds the Euclidean distance, but also achieves a tighter lower bound than the original SAX distance.

2. Related Work

Given that normalized time series have a highly Gaussian distribution, we can simply determine the “breakpoints” that produce equal-sized areas under the Gaussian curve. The idea of the SAX algorithm is to assume that the average value of each segment falls into each of these areas with equal probability; each segment is then projected into its own area. The parameter w determines to how many dimensions the n-dimensional time series is reduced. The smaller w is, the larger n/w becomes, indicating that more information is compressed.

2.1. The Distance Calculation by SAX

A time series of length n is converted into w symbols. The specific steps are as follows:

First, the time series is divided into w segments of the same size according to the Piecewise Aggregate Approximation (PAA) algorithm, and the average value of each segment is computed. A time series $C = c_1, \ldots, c_n$ is thus represented by $\bar{C} = \bar{c}_1, \ldots, \bar{c}_w$, where the i-th element $\bar{c}_i$ is the average of the i-th segment and is calculated by the following equation:

$$\bar{c}_i = \frac{w}{n} \sum_{j = \frac{n}{w}(i-1)+1}^{\frac{n}{w} i} c_j$$

where $c_j$ is one time point of time series C.

Second, the breakpoints that divide the space into α equiprobable regions are determined. These breakpoints are arranged in a sorted list $B = \beta_1, \ldots, \beta_{\alpha-1}$. They follow the Gaussian distribution, and the area under the $N(0, 1)$ Gaussian curve between $\beta_i$ and $\beta_{i+1}$ is 1/α.

Finally, the w time series segments are represented by means of these breakpoints: the SAX algorithm maps the average values of the segments to alphabetic symbols. The mapping rule of SAX is as follows: an average value smaller than the smallest breakpoint is mapped to ‘a’, an average value greater than or equal to the smallest breakpoint and smaller than the second smallest breakpoint is mapped to ‘b’, and so on. The resulting symbols roughly describe the time series.
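The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the experiments: it assumes the input is already z-normalized and that n is divisible by w, and the function names `paa`, `breakpoints`, and `sax` are our own.

```python
from bisect import bisect_right
from statistics import NormalDist, fmean
import string


def paa(series, w):
    """Average of each of w equal-length segments (assumes len(series) % w == 0)."""
    n = len(series)
    if n % w != 0:
        raise ValueError("this sketch assumes n is divisible by w")
    seg = n // w
    return [fmean(series[i * seg:(i + 1) * seg]) for i in range(w)]


def breakpoints(alpha):
    """The alpha - 1 cut points that split N(0, 1) into alpha equiprobable regions."""
    nd = NormalDist()
    return [nd.inv_cdf(k / alpha) for k in range(1, alpha)]


def sax(series, w, alpha):
    """Map each segment average to a letter; values below the first breakpoint give 'a'."""
    cuts = breakpoints(alpha)
    # bisect_right counts the breakpoints <= mean, which is the 0-based symbol index.
    return "".join(string.ascii_lowercase[bisect_right(cuts, m)] for m in paa(series, w))


print(sax([-2, -2, 0, 0, 2, 2], w=3, alpha=3))  # abc
```

Computing the breakpoints from the inverse normal CDF avoids hard-coding a lookup table, at the cost of a few extra function calls per alphabet size.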

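Given two time series Q and C of the same length n, each divided into w segments, let $\hat{Q} = \hat{q}_1, \ldots, \hat{q}_w$ and $\hat{C} = \hat{c}_1, \ldots, \hat{c}_w$ be the symbol strings obtained by transforming them into the SAX representation. The SAX distance between Q and C can then be expressed as follows:

$$MINDIST(\hat{Q}, \hat{C}) = \sqrt{\frac{n}{w}} \sqrt{\sum_{i=1}^{w} \left( dist(\hat{q}_i, \hat{c}_i) \right)^2}$$

The value of $dist(\hat{q}_i, \hat{c}_i)$ can be obtained from the breakpoints in Table 1. For two symbols with alphabet indices r and c, the lookup can be expressed as the following equation:

$$dist(r, c) = \begin{cases} 0, & |r - c| \le 1 \\ \beta_{\max(r,c)-1} - \beta_{\min(r,c)}, & \text{otherwise} \end{cases}$$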

Table 1.

A lookup table for breakpoints with the alphabet size from 3 to 10.
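The breakpoints and the symbol-to-symbol distance can also be computed instead of looked up. The sketch below is illustrative only; `breakpoints`, `symbol_dist`, and `mindist` are hypothetical helper names, and symbols are handled as 0-based indices ('a' = 0), which shifts the breakpoint indices by one relative to the usual 1-based notation.

```python
from math import sqrt
from statistics import NormalDist


def breakpoints(alpha):
    """The alpha - 1 cut points that split N(0, 1) into alpha equiprobable regions."""
    nd = NormalDist()
    return [nd.inv_cdf(k / alpha) for k in range(1, alpha)]


def symbol_dist(r, c, cuts):
    """Distance between two 0-based symbol indices; equal or adjacent symbols give 0."""
    if abs(r - c) <= 1:
        return 0.0
    return cuts[max(r, c) - 1] - cuts[min(r, c)]


def mindist(q_word, c_word, n, alpha):
    """SAX distance between two SAX words of equal length w for series of length n."""
    cuts = breakpoints(alpha)
    w = len(q_word)
    total = sum(
        symbol_dist(ord(a) - ord("a"), ord(b) - ord("a"), cuts) ** 2
        for a, b in zip(q_word, c_word)
    )
    return sqrt(n / w) * sqrt(total)


# Breakpoints for alphabet sizes 3 to 10, rounded to two decimals.
for alpha in range(3, 11):
    print(alpha, [round(b, 2) for b in breakpoints(alpha)])
```

Note that two words made only of equal or adjacent symbols have distance 0, which is exactly the limitation the boundary distance is meant to address.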

2.2. An Improvement of SAX Distance Measure for Time Series

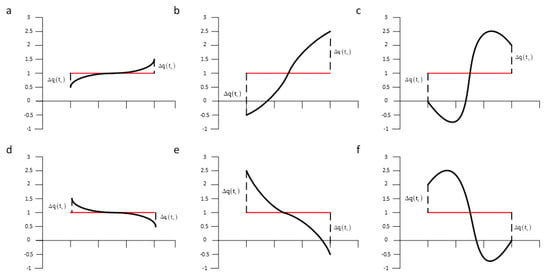

As the first method to symbolize time series in a way that can be effectively applied to time series classification, SAX has been recognized by many experts and scholars; however, its shortcoming is also obvious. The smaller w and α are, the more features of the time series are lost. To keep as much important information as possible, the trend of the time series needs to be preserved during SAX dimensionality reduction. For example, reference [26] discusses some limitations of using the SAX algorithm for time series classification. These cases are listed separately in Figure 2.

Figure 2.

Several typical segments with the same average value but different trends [26]. Segments a and d, b and e, and c and f have opposite directions but the same mean value.

In Figure 2, the average values of a and d, of b and e, and of c and f are the same, but their trends are clearly different. To describe this difference correctly, the authors of [26] propose the SAX-TD method. According to the calculation rules of SAX-TD, the trend distance td(q, c) of two time series segments q and c is calculated first. It is defined as follows:

$$td(q, c) = \sqrt{\left( \Delta q(t_s) - \Delta c(t_s) \right)^2 + \left( \Delta q(t_e) - \Delta c(t_e) \right)^2}$$

where $t_s$ and $t_e$ are the start point and the end point of the time segment of q and c, respectively. $\Delta q(t)$ is defined as follows:

$$\Delta q(t) = q(t) - \bar{q}$$

$\Delta c(t)$ is calculated in the same way. In [26], the authors refer to this quantity as the tendency of a time segment.

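With the SAX-TD method, the time series Q and C are represented as follows:

$$Q: \Delta q(1)\,\hat{q}_1\,\Delta q(2)\,\hat{q}_2 \cdots \Delta q(w)\,\hat{q}_w\,\Delta q(w+1)$$

$$C: \Delta c(1)\,\hat{c}_1\,\Delta c(2)\,\hat{c}_2 \cdots \Delta c(w)\,\hat{c}_w\,\Delta c(w+1)$$

where $\hat{q}_1, \ldots, \hat{q}_w$ is the sequence symbolized by SAX, $\Delta q(1), \ldots, \Delta q(w)$ are the trend variations, and $\Delta q(w+1)$ is the change of the last point.

The distance between two time series can be defined based on the trend distance as follows:

$$TDIST(\hat{Q}, \hat{C}) = \sqrt{\frac{n}{w}} \sqrt{\sum_{i=1}^{w} \left( \left( dist(\hat{q}_i, \hat{c}_i) \right)^2 + \frac{w}{n} \left( td(q_i, c_i) \right)^2 \right)} \qquad (6)$$

where $\hat{Q}$ and $\hat{C}$ denote the representations of time series Q and C, respectively, n is the length of Q and C, and w is the number of time segments. The distance between time series Q and C can be calculated by Equation (6). In [26], the authors proved that this distance lower-bounds the Euclidean distance, and their experimental results showed that the method improves classification accuracy compared with ESAX.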
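To make the trend-distance idea concrete, the following sketch computes a SAX-TD style distance directly from raw segments. It is a simplified illustration under stated assumptions: n is divisible by w, the series are already normalized, the first and last points of each segment are taken as its start and end points, and all function names are our own rather than those of [26].

```python
from bisect import bisect_right
from math import sqrt
from statistics import NormalDist, fmean


def breakpoints(alpha):
    nd = NormalDist()
    return [nd.inv_cdf(k / alpha) for k in range(1, alpha)]


def segments(series, w):
    seg = len(series) // w  # assumes len(series) is divisible by w
    return [series[i * seg:(i + 1) * seg] for i in range(w)]


def symbol_dist(r, c, cuts):
    """SAX distance between two 0-based symbol indices."""
    return 0.0 if abs(r - c) <= 1 else cuts[max(r, c) - 1] - cuts[min(r, c)]


def trend_distance(q_seg, c_seg):
    """Compare the deviations of the start and end points from the segment means."""
    q_mean, c_mean = fmean(q_seg), fmean(c_seg)
    d_start = (q_seg[0] - q_mean) - (c_seg[0] - c_mean)
    d_end = (q_seg[-1] - q_mean) - (c_seg[-1] - c_mean)
    return sqrt(d_start ** 2 + d_end ** 2)


def tdist(q, c, w, alpha):
    """SAX distance per segment plus the trend distance weighted by w / n."""
    n = len(q)
    cuts = breakpoints(alpha)
    total = 0.0
    for q_seg, c_seg in zip(segments(q, w), segments(c, w)):
        r = bisect_right(cuts, fmean(q_seg))
        s = bisect_right(cuts, fmean(c_seg))
        total += symbol_dist(r, s, cuts) ** 2 + (w / n) * trend_distance(q_seg, c_seg) ** 2
    return sqrt(n / w) * sqrt(total)


rising, falling = [0, 1, 2, 3], [3, 2, 1, 0]
# Both segments have mean 1.5, so the plain SAX distance is 0,
# while the trend term separates them.
print(tdist(rising, falling, w=1, alpha=4))
```

In this toy case the two segments share the same symbol, yet the result is positive and stays below their Euclidean distance.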

3. SAX-BD: Boundary Distance-Based Method For Time Series

3.1. An Analysis of SAX-TD

Figure 3.

Several typical segments with the same average value and the same trend but different boundary distances. Segments b and c, and e and f, have the same SAX representation and trend distance although they are different segments.

The difference between b and e, and between c and f, can be identified by the SAX-TD algorithm because their trend distances differ, so the final calculation results distinguish these time series. However, an attempt to identify the difference between b and c, or between e and f, is very likely to fail. The trend distances of b and c are the same, as are those of e and f, so according to the calculation rules of SAX-TD they will be judged to be the same time series.

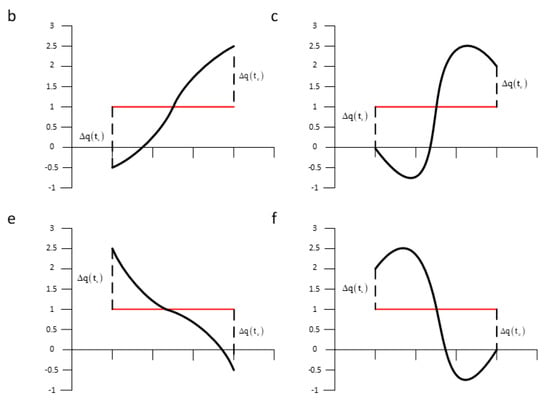

3.2. Our Method SAX-BD

To solve these problems, we propose using the boundary distance as a new reference in place of the trend distance. The details are as follows:

From Figure 4, we can see that this method resembles ESAX in some respects but differs from it.

Figure 4.

Several typical segments with the same average value but different boundary distances. Segments a and d, b and e, and c and f have opposite directions but the same mean value. The trend distance is replaced by the boundary distance.

The maximum and minimum values of each time segment are its boundaries. The boundary distances of c and f are shown in Equations (7) and (8):

The tendency changes of c and f calculated by the SAX-BD algorithm differ, so our method can also distinguish them well. For b and c, the distance calculated using SAX-TD is the same, but with our method, SAX-BD, the distance is not equal to 0, indicating that the time series can be distinguished. For the cases of g and h, our method gives the following:

3.3. Difference from ESAX

In the ESAX method, the maximum, minimum, and mean values in each time segment are mapped and arranged according to the following formula:

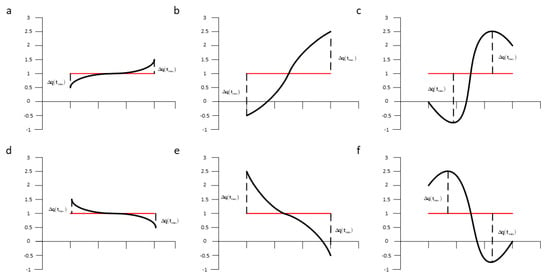

However, for the same feature points, we do not map these points directly in the way the ESAX method does, mainly for the following two reasons:

Firstly, if the feature points in Figure 5 are mapped as in the ESAX method, then for α = 6 the points A, B, C, D, and E are all mapped to the same character ‘f’, and F, G, and H are all mapped to ‘a’. We instead retain these feature points directly and calculate the boundary distance. The specific values of A, B, C, D, and E, and of F, G, and H, can then be discriminated better.

Figure 5.

Time series represented as ‘adfeeffcaefffdaabc’ by ESAX [25], where the length of the time series is 30, the number of segments is 6, and the alphabet size is 6. The capital letters A–H represent the maximum and minimum values in every segment.

Secondly, if we follow the ESAX method, we can see from Equation (11) that a total of 6 comparisons may be needed. With our method, only two comparisons are needed. Since our distance measure is constructed in the same way as that of SAX-TD, the lower-bounding relation between Equation (13) and the Euclidean distance follows the proof given in the SAX-TD paper [26].

Finally, time series Q and C can be expressed as follows according to our method SAX-BD:

The equation for calculating the distance between Q and C can be expressed as follows:
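As an illustration only, the sketch below shows one way a boundary-distance measure of this kind could be computed. It is not a transcription of the equations of this paper: we assume that the boundary distance compares the deviations of the segment maximum and minimum from the segment mean, and that it is combined with the SAX distance using the same w/n weighting as in SAX-TD. The names `boundary_distance` and `bdist` are hypothetical.

```python
from bisect import bisect_right
from math import sqrt
from statistics import NormalDist, fmean


def breakpoints(alpha):
    nd = NormalDist()
    return [nd.inv_cdf(k / alpha) for k in range(1, alpha)]


def segments(series, w):
    seg = len(series) // w  # assumes len(series) is divisible by w
    return [series[i * seg:(i + 1) * seg] for i in range(w)]


def symbol_dist(r, c, cuts):
    """SAX distance between two 0-based symbol indices."""
    return 0.0 if abs(r - c) <= 1 else cuts[max(r, c) - 1] - cuts[min(r, c)]


def boundary_distance(q_seg, c_seg):
    """ASSUMPTION: compare max and min deviations from the respective segment means."""
    q_mean, c_mean = fmean(q_seg), fmean(c_seg)
    d_max = (max(q_seg) - q_mean) - (max(c_seg) - c_mean)
    d_min = (min(q_seg) - q_mean) - (min(c_seg) - c_mean)
    return sqrt(d_max ** 2 + d_min ** 2)


def bdist(q, c, w, alpha):
    """ASSUMPTION: same structure as the SAX-TD distance, with bd in place of td."""
    n = len(q)
    cuts = breakpoints(alpha)
    total = 0.0
    for q_seg, c_seg in zip(segments(q, w), segments(c, w)):
        r = bisect_right(cuts, fmean(q_seg))
        s = bisect_right(cuts, fmean(c_seg))
        total += symbol_dist(r, s, cuts) ** 2 + (w / n) * boundary_distance(q_seg, c_seg) ** 2
    return sqrt(n / w) * sqrt(total)


# Same mean, same start and end points, but different extremes:
# a start/end trend distance would be 0 here, the boundary term is not.
q, c = [0, 2, -2, 0], [0, 1, -1, 0]
print(bdist(q, c, w=1, alpha=4))
```

The example pair has identical means and identical start and end points, so it corresponds to the kind of case in Figure 3 that a trend distance alone cannot separate.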

3.4. Lower Bound

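One of the most important characteristics of SAX is that it provides a lower-bounding distance measure. A lower bound is very useful for controlling errors and speeding up computation. Below, we show that our proposed distance also lower-bounds the Euclidean distance.

According to [19,20], the PAA distance lower-bounds the Euclidean distance as follows:

$$\sqrt{\sum_{i=1}^{n} (q_i - c_i)^2} \ge \sqrt{\frac{n}{w}} \sqrt{\sum_{i=1}^{w} (\bar{q}_i - \bar{c}_i)^2} \qquad (14)$$

To prove that the SAX-BD distance also lower-bounds the Euclidean distance, we repeat some of the proofs here. Let $\bar{Q}$ and $\bar{C}$ be the means of time series Q and C, respectively. We first consider only the single-frame case (i.e., w = 1), in which Equation (14) can be rewritten as follows:

$$\sum_{i=1}^{n} (q_i - c_i)^2 \ge n (\bar{Q} - \bar{C})^2 \qquad (15)$$

Recall that $\bar{Q}$ is the average of the time series Q, so each point $q_i$ can be represented in terms of $\bar{Q}$ as $q_i = \bar{Q} + \Delta q_i$. The same applies to each point $c_i$ in C. Because the deviations $\Delta q_i$ and $\Delta c_i$ each sum to zero, the cross term vanishes and Equation (15) can be rewritten as follows:

$$n (\bar{Q} - \bar{C})^2 + \sum_{i=1}^{n} (\Delta q_i - \Delta c_i)^2 \ge n (\bar{Q} - \bar{C})^2 \qquad (16)$$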

Recalling the definitions in Equation (9) and Equation (12), we can obtain the following inequality (the boundary points obviously lie within the segment):

Substituting Equation (16) into Equation (17), we get:

According to [20], the MINDIST lower-bounds the PAA distance, that is:

$$n (\bar{Q} - \bar{C})^2 \ge n \left( dist(\hat{Q}, \hat{C}) \right)^2$$

where $\hat{Q}$ and $\hat{C}$ are the symbolic representations of Q and C in the original SAX, respectively. By transitivity, the following inequality is true

Recall Equation (15), this means

N frames can be obtained by applying the single-frame proof on every frame, that is

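The quality of a lower-bounding distance is usually measured by the tightness of lower bounding (TLB):

$$TLB = \frac{\text{Lower Bounding Distance}(Q, C)}{\text{Euclidean Distance}(Q, C)}$$

The value of TLB is in the range [0, 1]. The larger the TLB value, the better the quality. Recalling the distance measure in Equation (13), the SAX-BD distance adds a non-negative boundary distance term to the original SAX distance and is therefore never smaller than it, which means the SAX-BD distance has a tighter lower bound than the original SAX distance.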
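The tightness of a lower bound is easy to check numerically. The sketch below does this for the PAA distance, whose lower-bounding property is stated above; it assumes n is divisible by w, and the helper names `paa_distance` and `tlb` are ours. The same `tlb` function could be applied to any other lower-bounding distance.

```python
from math import sqrt
from statistics import fmean


def euclidean(q, c):
    return sqrt(sum((a - b) ** 2 for a, b in zip(q, c)))


def paa(series, w):
    seg = len(series) // w  # assumes len(series) is divisible by w
    return [fmean(series[i * seg:(i + 1) * seg]) for i in range(w)]


def paa_distance(q, c, w):
    """PAA distance: sqrt(n / w) times the Euclidean distance of the segment means."""
    n = len(q)
    return sqrt(n / w) * euclidean(paa(q, w), paa(c, w))


def tlb(lower_bound, true_distance):
    """Tightness of lower bounding: ratio of the lower bound to the true distance."""
    return lower_bound / true_distance


q, c = [0, 1, 2, 3], [3, 2, 1, 0]
lb = paa_distance(q, c, w=2)
print(lb, euclidean(q, c), tlb(lb, euclidean(q, c)))
```

A TLB close to 1 means that little of the true distance is lost by the reduced representation.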

4. Experimental Validation

In this section, we present the results of our experimental validation. We used a stand-alone desktop computer with an Intel(R) Core(TM) i5-4440 CPU @ 3.10 GHz.

Firstly, we introduce the data sets used, the comparison methods and parameter settings. Then, in order to show experimental results more conveniently, we evaluate the performances of the proposed method in terms of classification accuracy rate shown in figures and classification error rate shown in tables.

4.1. Data Sets

We use the latest version of the UCR time series archive, UCRArchive2018. To make the experimental results more credible, 100 data sets were selected after removing those containing null values; they are shown in Table 2. Each data set is divided into a training set and a testing set and comes with detailed documentation. The data sets contain between 2 and 60 classes, and the lengths of their time series vary from 15 to 2844. In addition, the types of the data sets are diverse, including image, sensor, motion, ECG, etc. [27].

Table 2.

100 different types of time series datasets.

4.2. Comparison Methods and Parameter Settings

We compare our results with those of the above-mentioned ESAX and SAX. We also compare with SAX-TD, a more recent improvement of SAX based on the trend distance. We evaluate on the classification task, in which the accuracy is determined by the distance measure, so the comparison shows directly whether our method improves on SAX-TD. To compare the classification accuracy, we conduct the experiments using the 1-nearest-neighbor (1-NN) classifier, following Sun et al. [26].
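Because the classifier is fixed, the distance measure is the only component that changes between methods. A minimal sketch of 1-NN evaluation with a pluggable distance function is given below; the data layout (lists of `(series, label)` pairs) and the function names are assumptions made for illustration, and the Euclidean distance merely stands in for any of the compared measures.

```python
from math import sqrt


def euclidean(q, c):
    return sqrt(sum((a - b) ** 2 for a, b in zip(q, c)))


def one_nn_predict(query, train, distance):
    """Return the label of the training series closest to the query."""
    _, label = min(train, key=lambda item: distance(query, item[0]))
    return label


def error_rate(train, test, distance=euclidean):
    """Fraction of test series whose 1-NN label differs from the true label."""
    wrong = sum(one_nn_predict(series, train, distance) != label for series, label in test)
    return wrong / len(test)


# Toy data, for illustration only.
train = [([0.0, 0.0, 0.0], "low"), ([5.0, 5.0, 5.0], "high")]
test = [([0.2, 0.0, 0.1], "low"), ([4.8, 5.0, 5.1], "high")]
print(error_rate(train, test))  # 0.0
```

Any function with the signature `distance(q, c)` can be passed in, which is how the different representations can be compared under identical conditions.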

To make the comparison fair for each method, we use the testing data to search for the best parameters w and α. For a given time series of length n, w and α are picked using the following criteria [28]:

For w, we search for the value from 2 up to n/2 and double the value of w each time.

For α, we search for the value from 3 up to 10.

If two sets of parameter settings produce the same classification error rate, we choose the smaller parameters.
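The search criteria above amount to a small grid search. The sketch below enumerates the candidate pairs and picks the best one; `evaluate` is a hypothetical callback returning the classification error rate for a given (w, α), and breaking ties by the smaller w first and then the smaller α is our reading of "the smaller parameters".

```python
def candidate_parameters(n):
    """Yield (w, alpha) pairs: w = 2, 4, 8, ... up to n / 2, and alpha = 3, ..., 10."""
    w = 2
    while w <= n // 2:
        for alpha in range(3, 11):
            yield w, alpha
        w *= 2


def best_parameters(n, evaluate):
    """Pick the pair with the lowest error rate; ties go to the smaller parameters."""
    return min(candidate_parameters(n), key=lambda p: (evaluate(*p), p[0], p[1]))


# With a constant error rate, the smallest candidate pair wins the tie-break.
print(best_parameters(16, lambda w, alpha: 0.1))  # (2, 3)
```

For a series of length 16 this gives 24 candidate pairs (w in {2, 4, 8} and α from 3 to 10).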

The dimensionality reduction ratios are defined as follows:

4.3. Result Analysis

To make the table fit all the data, we abbreviate SAX-TD as SAXTD and SAX-BD as SAXBD. The overall classification results are listed in Table 3, where the entries with the lowest classification error rates are highlighted. SAX-BD has the lowest error rate on most of the data sets (69/100), followed by SAX-TD (22/100) and the Euclidean distance (EU, 15/100). In some cases, it performs much better than the other methods, and even achieves a classification error rate of 0.

Table 3.

1-NN classification error rates of different methods.

We use the Wilcoxon signed ranks test to test the significance of our method against the other methods. The test results are displayed in Table 4, where n*, n0, and n denote the numbers of data sets on which the error rates of SAX-BD are lower than, larger than, and equal to those of another method, respectively. The p-values (the smaller the p-value, the more significant the improvement) demonstrate that our distance measure achieves a significant improvement over the other four methods in classification accuracy.

Table 4.

The Wilcoxon signed ranks test results of SAX-BD vs. the other methods. A p-value less than or equal to 0.05 indicates a significant improvement. n* means positive, n means equal, and n0 means negative. The larger the value of n*, the better the performance of SAX-BD.

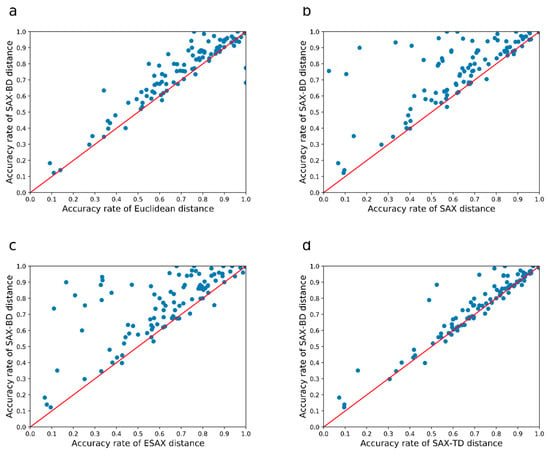

To provide a more intuitive illustration of the performance of the different measures compared in Table 3 and Table 4, we use scatter plots for pairwise comparisons. In a scatter plot, the accuracy rates of the two measures under comparison are used as the x and y coordinates of a dot, where each dot represents a data set. When a dot falls above the diagonal line, the ‘y’ method performs better than the ‘x’ method. In addition, the further a dot is from the diagonal line, the greater the margin of the accuracy improvement. The side of the diagonal with more dots indicates the better of the two methods.

In the following, we explain the results in Figure 6.

Figure 6.

Accuracy comparison of the SAX-BD algorithm with the other algorithms. Panels (a–d) compare SAX-BD with the Euclidean distance, SAX, ESAX, and SAX-TD, respectively. The more dots lie above the red diagonal line, the better SAX-BD performs.

We illustrate the performance of our distance measure against the Euclidean distance, SAX distance, ESAX distance, SAX-TD distance in Figure 6a–d, respectively. Our method outperforms the other four methods by a large margin, both in the number of points and the distance of these points from the diagonals. From these figures, we can see that most of the points are far away from the diagonals, which indicates that our method has much lower error rates on most of the data sets.

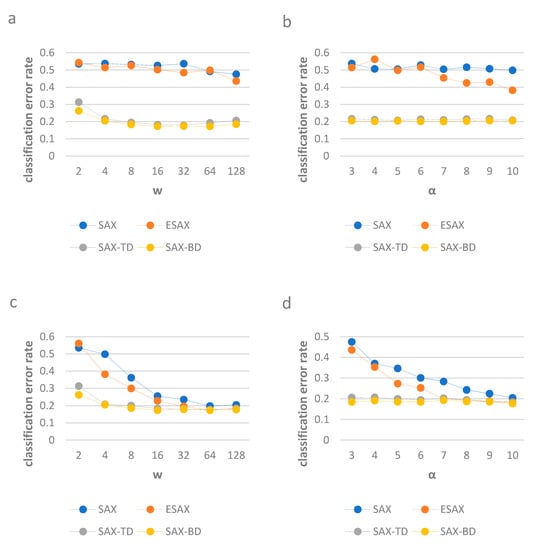

To show how our method and the other three methods behave as the parameters vary, we run the experiments on the data sets Gun-Point and Yoga. We first compare the classification error rates with different w while α is fixed at 3, and then with different α while w is fixed at 4 (to illustrate the classification error rates using small parameters). We then vary w while α is fixed at 10, and vary α while w is fixed at 128 (to illustrate the classification error rates using large parameters).

SAX-TD and SAX-BD have lower error rates than the other two methods for both small and large parameters, and SAX-BD has lower error rates than SAX-TD. The results are shown in Figure 7.

Figure 7.

The classification error rates of SAX, ESAX, SAX-TD, and SAX-BD with different parameters w and α. In (a), on Gun-Point, w varies while α is fixed at 3; in (b), on Gun-Point, α varies while w is fixed at 4. In (c), on Yoga, w varies while α is fixed at 10; in (d), on Yoga, α varies while w is fixed at 128.

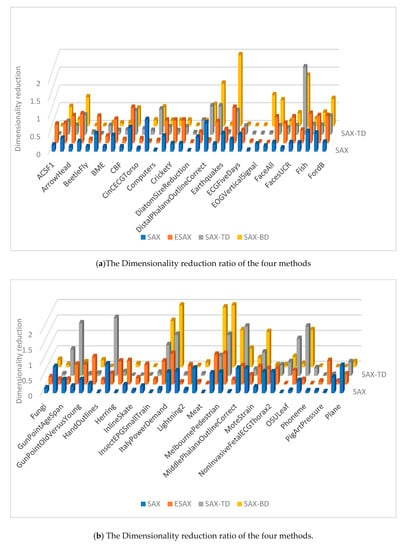

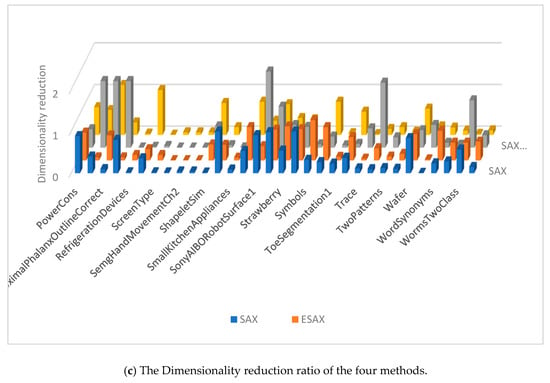

The dimensionality reduction ratios are calculated using the w at which the four methods achieve their smallest classification error rates on each data set, as shown in Figure 8. The SAX-TD and SAX-BD representations use more values than SAX, and SAX-TD uses fewer values than ESAX. In fact, our method has a low dimensionality reduction ratio on the majority of data sets, and even uses fewer values than SAX-TD.

Figure 8.

Dimensionality reduction ratio of the four methods.

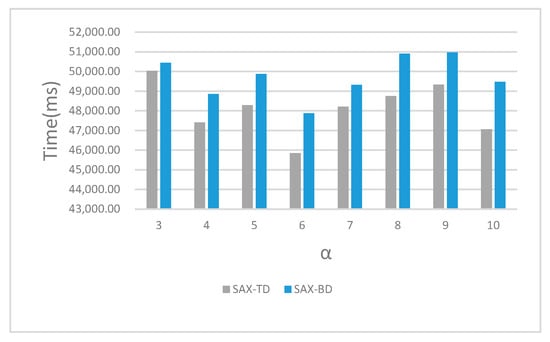

We also recorded the running time of SAX-TD and SAX-BD with α ranging from 3 to 10, as shown in Figure 9. The experimental results indicate that our method achieves a greater improvement at the cost of only a little additional time, which we consider worthwhile.

Figure 9.

The running time of different methods with different values of α.

5. Conclusions

Our proposed SAX-BD algorithm uses the boundary distance as a new distance metric to obtain a new time series representation. We analyzed some cases that ESAX and SAX-TD cannot handle; it is known that the classification accuracy of the ESAX algorithm is not as good as that of SAX-TD. We combined the advantages of these two methods and derived our method as an extension of both. We also proved that our improved distance measure lower-bounds the Euclidean distance, and showed that it has a low dimensionality reduction ratio on the majority of data sets. In terms of the representation complexity of time series, SAX-BD and ESAX use three times as many values as SAX and two times as many as SAX-TD. However, in terms of running time, our method costs only a little more, while the classification accuracy improves considerably, which means that a good compromise has been made between dimensionality reduction and classification accuracy. For future work, we intend to modify our algorithm to reduce its running time.

Author Contributions

Methodology, Z.H. and S.L.; Project administration, Z.H.; Software, H.Z.; Writing—review & editing, X.M. All authors have read and agreed to the published version of the manuscript.

Funding

The work described in this paper was supported by the National Natural Science Foundation of China (41972306, U1711267, 41572314) and the geo-disaster data processing and intelligent monitoring project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Abanda, A.; Mori, U.; Lozano, J.A. A review on distance based time series classification. Data Min. Knowl. Discov. 2018, 33, 378–412.

- Keogh, E.J.; Kasetty, S. On the Need for Time Series Data Mining Benchmarks: A Survey and Empirical Demonstration. Data Min. Knowl. Discov. 2003, 7, 349–371.

- Vlachos, M.; Kollios, G.; Gunopulos, D. Discovering similar multidimensional trajectories. In Proceedings of the 18th International Conference on Data Engineering, San Jose, CA, USA, 26 February–1 March 2002; p. 673.

- Lonardi, J.; Patel, P. Finding motifs in time series. In Proceedings of the 2nd Workshop on Temporal Data Mining, Washington, DC, USA, 24–27 August 2002.

- Keogh, E.; Lonardi, S.; Chiu, B.Y. Finding surprising patterns in a time series database in linear time and space. In Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Edmonton, AB, Canada, 23–25 July 2002.

- Kalpakis, K.; Gada, D.; Puttagunta, V. Distance measures for effective clustering of ARIMA time-series. In Proceedings of the 2001 IEEE International Conference on Data Mining, San Jose, CA, USA, 29 November–2 December 2001; pp. 273–280.

- Huang, Y.-W.; Yu, P.S. Adaptive query processing for time-series data. In Proceedings of the Fifth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining—KDD ’99, San Diego, CA, USA, 15–18 August 1999; pp. 282–286.

- Chan, K.-P.; Fu, A.W.-C. Efficient time series matching by wavelets. In Proceedings of the 15th International Conference on Data Engineering (Cat. No.99CB36337), Sydney, Australia, 23–26 March 1999; pp. 126–133.

- Dasgupta, D.; Forrest, S. Novelty detection in time series data using ideas from immunology. In Proceedings of the International Conference on Intelligent Systems, Ahmedabad, India, 15–16 November 1996.

- Xing, Z.; Pei, J.; Keogh, E. A brief survey on sequence classification. ACM SIGKDD Explor. Newsl. 2010, 12, 40–48.

- Faloutsos, C.; Ranganathan, M.; Manolopoulos, Y. Fast subsequence matching in time-series databases. ACM Sigmod Rec. 1994, 23, 419–429.

- Popivanov, I.; Miller, R. Similarity search over time-series data using wavelets. In Proceedings of the 18th International Conference on Data Engineering, Washington, DC, USA, 26 February–1 March 2002.

- Korn, F.; Jagadish, H.V.; Faloutsos, C. Efficiently supporting ad hoc queries in large datasets of time sequences. ACM Sigmod Rec. 1997, 26, 289–300.

- Bagnall, A.; Janacek, G. A Run Length Transformation for Discriminating Between Auto Regressive Time Series. J. Classif. 2013, 31, 154–178.

- Corduas, M.; Piccolo, D. Time series clustering and classification by the autoregressive metric. Comput. Stat. Data Anal. 2008, 52, 1860–1872.

- Smyth, P. Clustering sequences with hidden Markov models. In Proceedings of the Advances in Neural Information Processing Systems, Curitiba, Brazil, 2–5 November 1997.

- Berndt, D.J.; Clifford, J. Using dynamic time warping to find patterns in time series. In Proceedings of the KDD Workshop, Seattle, WA, USA, 31 July 1994.

- Keogh, E.J.; Chakrabarti, K.; Pazzani, M.J.; Mehrotra, S. Dimensionality Reduction for Fast Similarity Search in Large Time Series Databases. Knowl. Inf. Syst. 2001, 3, 263–286.

- Hellerstein, J.M.; Koutsoupias, E.; Papadimitriou, C.H. On the analysis of indexing schemes. In Proceedings of the Sixteenth ACM SIGACT-SIGMOD-SIGART Symposium on Principles of Database Systems—PODS ’97, Tucson, AZ, USA, 12–14 May 1997.

- Lin, J.; Keogh, E.; Lonardi, S.; Chiu, B. A symbolic representation of time series, with implications for streaming algorithms. In Proceedings of the 8th ACM SIGMOD Workshop on Research Issues in Data Mining and Knowledge Discovery, San Diego, CA, USA, 13 June 2003.

- Tayebi, H.; Krishnaswamy, S.; Waluyo, A.B.; Sinha, A.; Abouelhoda, M.; Waluyo, A.B.; Sinha, A. RA-SAX: Resource-Aware Symbolic Aggregate Approximation for Mobile ECG Analysis. In Proceedings of the 2011 IEEE 12th International Conference on Mobile Data Management, Lulea, Sweden, 6–9 June 2011; Volume 1, pp. 289–290.

- Canelas, A.; Neves, R.F.; Horta, N. A new SAX-GA methodology applied to investment strategies optimization. In Proceedings of the Fourteenth International Conference on Genetic and Evolutionary Computation Conference Companion—GECCO Companion ’12, Philadelphia, PA, USA, 7–11 July 2012; pp. 1055–1062.

- Rakthanmanon, T.; Keogh, E. Fast shapelets: A scalable algorithm for discovering time series shapelets. In Proceedings of the 2013 SIAM International Conference on Data Mining, Austin, TX, USA, 2–4 May 2013.

- Zheng, Y.; Liu, Q.; Chen, E.; Ge, Y.; Zhao, J.L. Time Series Classification Using Multi-Channels Deep Convolutional Neural Networks. In Proceedings of the Lecture Notes in Computer Science, Leipzig, Germany, 22–26 June 2014; Springer Science and Business Media LLC: Macau, China, 2014; pp. 298–310.

- Lkhagva, B.; Suzuki, Y.; Kawagoe, K. New Time Series Data Representation ESAX for Financial Applications. In Proceedings of the 22nd International Conference on Data Engineering Workshops (ICDEW’06), Atlanta, GA, USA, 3–7 April 2006; p. 115.

- Sun, Y.; Li, J.; Liu, J.; Sun, B.; Chow, C. An improvement of symbolic aggregate approximation distance measure for time series. Neurocomputing 2014, 138, 189–198.

- Dau, H.A.; Bagnall, A.; Kamgar, K.; Yeh, C.-C.M.; Zhu, Y.; Gharghabi, S.; Ratanamahatana, C.A.; Keogh, E. The UCR time series archive. IEEE/CAA J. Autom. Sin. 2019, 6, 1293–1305.

- Lin, J.; Keogh, E.; Wei, L.; Lonardi, S. Experiencing SAX: A novel symbolic representation of time series. Data Min. Knowl. Discov. 2007, 15, 107–144.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).