Abstract

We present a detailed survey of results and two new results on graphical models of uncertainty and associated optimization problems. We focus on two well-studied models, namely, the Random Failure (RF) model and the Linear Reliability Ordering (LRO) model. We present an FPT algorithm parameterized by the product of treewidth and max-degree for maximizing expected coverage in an uncertain graph under the RF model. We then consider the problem of finding the maximal core in a graph, which is known to be polynomial time solvable. We show that the Probabilistic-Core problem is polynomial time solvable in uncertain graphs under the LRO model. On the other hand, under the RF model, we show that the Probabilistic-Core problem is W[1]-hard for the parameter d, where d is the minimum degree of the core. We then design an FPT algorithm for the parameter treewidth.

1. Introduction

Network data analytics has come to play a key role in many scientific fields. Many real-world networks have an associated uncertainty, and optimization problems on them must be solved taking this uncertainty into account. The uncertainty arises from the data collection process, from machine-learning methods employed at preprocessing, from privacy-preserving requirements and from unknown causes during the operation of the network. Throughout this work, we study the case where the uncertainty is associated with the availability or the nature of the relationship between the vertices of the network. The vertices themselves are assumed to be always available; in other words, the vertices are assumed to be certain. The concepts can be naturally modified to associate the uncertainty with the vertices instead. Road networks [1,2] are a natural source of optimization problems where the uncertainty is due to the traffic. Indeed, in uncertain traffic networks [2], the travel-time on a road is inherently uncertain. One way of modeling this uncertainty is to treat the travel-time as a random variable. This random variable is quite complex, since the probability that it takes a certain value depends on other parameters such as the day of the week and the time of the day. However, our focus is only on the fact that uncertainty is modeled by an appropriately defined random variable. The natural optimization problem on an uncertain traffic network is to compute the expected minimum-time s-t path. In biological networks [3], the protein-protein interaction (PPI) network is an uncertain network. In a PPI network, proteins are represented by vertices and interactions between proteins are represented by edges. The interactions between proteins are derived through noisy and error-prone experiments, which cause uncertainty. Sometimes the interactions are predicted from the nature of the proteins instead of from experiments.
In this example, the uncertainty can be of two types: it can be on the presence or the absence of a protein-protein interaction, or on the strength of an interaction between two proteins. Similarly, social networks are another example of uncertain networks where the members of the network are known, and the uncertainty is on the link between two members in the network. The interactions between members of an uncertain network associated with a social network are obtained using link prediction and by evaluating peer influence [4]. In all three examples of networks with uncertainty, the uncertainty on the edges can be modeled by random variables. A random variable can be used to indicate the presence or the absence of an edge. In this case, the random variable takes values from the set $\{0, 1\}$, with each value having an associated probability. A random variable can also be used to model the strength of the interaction between two entities in the network, in which case the random variable corresponding to an edge takes values from a set of values, each of which has an associated probability.

In this work, we survey the different models of uncertainty and the associated optimization problems. We then present our results on optimization problems on uncertain networks when the networks have bounded treewidth. The fundamental motivation for this direction of study is that a typical optimization problem on an uncertain graph is an expectation computation over many graphs implicitly represented by the uncertain graph. A natural question is whether there are problems which have efficient algorithms on a graph in which the edges have no uncertainty but become hard on uncertain graphs. The typical optimization problems considered on uncertain graphs are shortest path [5], reliability [6], minimum spanning trees [7,8], maxflows [9,10], maximum coverage [11,12,13], influence maximization [14] and densest subgraph [15,16]. This article is structured partly as a survey and partly as an original research article. In Section 1.1, we formally present the concepts in uncertain graphs and then present subsequent details on uncertain graphs in Section 2. In Section 1.2, we survey the different algorithmic results on uncertain graphs and in Section 1.3 we outline our results.

1.1. Uncertain Graphs-Definition and Semantics

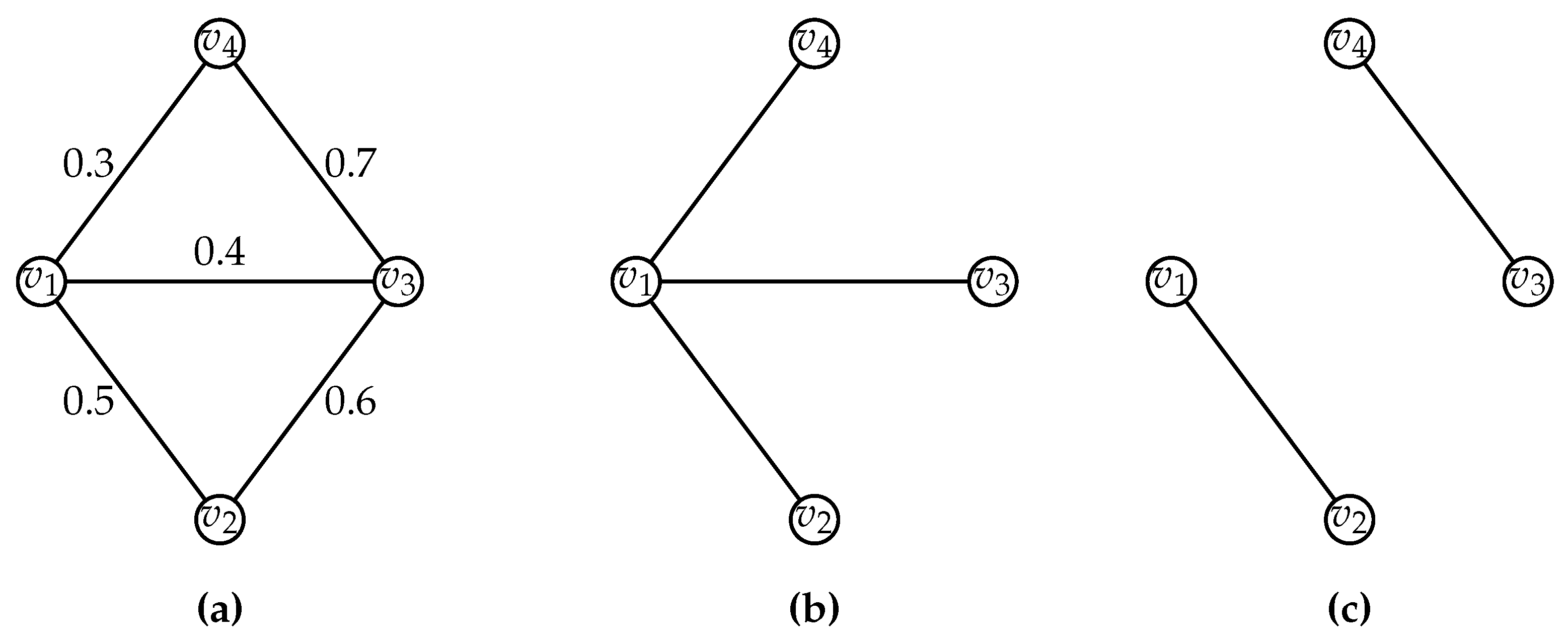

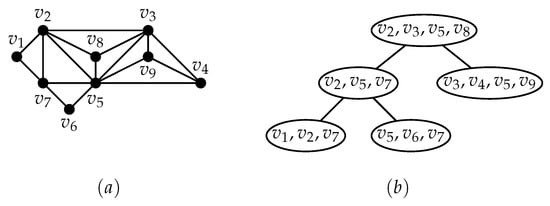

We consider graphs with uncertain edges and certain vertices. An uncertain graph, denoted by $\mathcal{G}$, is a triple $(V, E, \{A_e\}_{e \in E})$ that consists of a vertex set V, an edge set E and a set of outcomes. For each edge $e \in E$, the outcome $A_e$ is a set of values with an associated probability distribution on $A_e$. The outcome is considered to be an interval or a finite set. For each $e \in E$, if the outcome $A_e$ is an interval, the associated probability distribution is called a continuous distribution, and if it is a finite set, the associated probability distribution is called a discrete distribution. In either case, the natural distribution is the uniform distribution. For the case in which, for each edge $e \in E$, $A_e$ is a closed interval $[a_e, b_e]$, the probability density at each value in $[a_e, b_e]$ is $1/(b_e - a_e)$. In case, for each $e \in E$, $A_e$ is a finite set under the uniform distribution, the probability that e gets a specific value from $A_e$ is $1/|A_e|$. Such uncertain graphs were the focus of the earliest results [5,7,9,10] and we survey these in Section 1.2. However, in general, the uncertainty could be modeled by any probability distribution on $A_e$. Therefore, the uncertain graph is a succinct representation of the set of all edge-weighted graphs such that for each edge e its weight is a value from $A_e$. This set is uncountable when $A_e$ is an interval, and it is finite when each $A_e$ is a finite set. In this paper, we present our results for the case when, for each edge e, the outcome $A_e$ is the set of values $\{0, 1\}$. Naturally, 0 models the absence of an edge and 1 models the presence of an edge. An uncertain graph under this condition is a succinct representation of the set of all edge subgraphs of $G = (V, E)$. In this case, the uncertain graph is represented as a triple $\mathcal{G} = (V, E, p)$ where $p : E \to [0, 1]$ is a function defined on E and $p(e)$ is said to be the survival probability of the edge e. The failure probability of an edge e is $1 - p(e)$. The set of all graphs represented by an uncertain graph is well-known as the possible world semantics (PWS) [17,18] of the uncertain graph.
For each $E' \subseteq E$, the graph $H = (V, E')$ is called a possible world of $\mathcal{G}$, and this is denoted by $H \sqsubseteq \mathcal{G}$. For an uncertain graph $\mathcal{G}$ with $m = |E|$ edges, there are $2^m$ possible worlds. An uncertain graph and two of its possible worlds are illustrated in Figure 1.

Figure 1.

(a) A probabilistic graph ; (b) A possible world of with ; (c) Another possible world of with .

The probability associated with a possible world depends on the probability distributions on the edges and, most importantly, on the dependence among the edge samples. A distribution model is a specification of the dependence among edge samples. Further, a distribution model uniquely determines a probability distribution on the possible worlds. For example, if the edge samples are all independent, the distribution model is called the Random Failure (RF) model. Under the RF model, the probability of a possible world H is given by $\Pr(H) = \prod_{e \in E(H)} p(e) \prod_{e \in E \setminus E(H)} (1 - p(e))$. Depending on the dependence among the edge samples, the literature offers many different distributions on the possible worlds. The distributions of interest in this paper are the Random Failure (RF) model, the Independent Cascade (IC) model, the Set-based Dependency (SBD) model and the Linear Reliability Ordering (LRO) model. These distribution models are all well-motivated by practical considerations on uncertain graphs in influence maximization, facility location and network reliability, to name a few. The detailed description of these distribution models and the corresponding distributions on the possible worlds are discussed in Section 2. In short, an uncertain graph along with a distribution model is a succinct description of a unique probability distribution on the PWS of the uncertain graph. Therefore, an uncertain graph along with a distribution model is equivalent to a sampling procedure to obtain a random sample from the corresponding probability distribution on the PWS of the uncertain graph. In Section 2, we describe the different distribution models by describing the corresponding sampling procedures of the edges in E.

The typical computational problem is posed for a fixed nature of the dependence among the edge samples from an input uncertain graph. The distributions on the edges and the dependence among the edge samples uniquely define the probability distribution on the possible worlds. Therefore, for a fixed dependence among the edge samples, the generic computational problem is to compute a solution that optimizes the expected value of a function over the distribution on the possible worlds. The problems that have been extensively studied are facility location to maximize coverage [19] and a selection of influentials on a social network to maximize influence [14]. Clearly, both these problems are coverage problems, studied two decades apart motivated by different considerations. In this paper, we are also motivated by understanding the parameterized complexity of problems on uncertain graphs. Historically, the earliest results considered different graph problems in which the distributions are on the set of values of the edge weights. We next present a survey of those results.

1.2. Survey of Optimization Problems in Uncertain Graphs

Graphs have been used to represent the relationships between entities. A graph is denoted by $G = (V, E)$ where V is the set of vertices and $E \subseteq V \times V$ is the set of edges representing relationships between pairs of vertices. PPI networks in bioinformatics, road networks and social networks are graphs with uncertainty on the edges and are considered as uncertain graphs. The earliest ideas on graphs with uncertain edge weights were introduced by Frank and Hakimi [9] in 1965. Frank and Hakimi studied the maximum flow in a directed graph with uncertain capacities. On an input consisting of an uncertain digraph and a continuous random variable with the uniform probability distribution on the capacity of each edge e, the probabilistic flow problem is to find the maximum flow probability and the joint distribution of the cut set values. Later, in 1969, under the same setting, Frank [5] studied the probabilistic shortest path problem on an undirected graph. The probabilistic shortest path problem is to compute, for each ℓ, the probability that the length of the shortest path is at most ℓ. In 1976, J. R. Evans [10] studied the probabilistic maximum flow in a directed acyclic graph (DAG) with a discrete probability distribution on the edge capacities. A relatively recent result, from 2008, is on the minimum spanning tree problem on uncertain graphs, by Erlebach et al. [7], with uncertain edge weights from a continuous distribution. Here the goal is to optimize the number of edges whose weight is sampled to obtain a spanning tree which achieves the expected MST weight over the possible worlds. This problem is different from the optimization problems of interest to us in this paper: given an uncertain graph as input, our goal is to optimize the expected value of a structural parameter over the possible worlds of the uncertain graph.

Optimization problems on uncertain graphs related to connectedness are among the most fundamental problems. Apart from their practical significance, they pose significant algorithmic challenges in different computational models. Further, the computational complexity of the problems increases significantly in the presence of uncertainty. In 1979, Valiant [6] studied the network reliability problem on uncertain graphs. The network reliability problem is a well-studied #P-hard problem [6,17,20,21,22,23]. The reliability problems have numerous applications in communication networks [24,25], biological networks [3] and social networks [26,27]. For a given network, reliability is defined as the ability of the network to remain operational after the failure of some of its links. The input consists of an uncertain graph and a subset and the aim is to compute the probability that S is connected. Clearly, the reliability problem on uncertain graphs generalizes the graph connectivity problem which is polynomial time solvable. The Canadian Traveler Problem (CTP), formulated by Papadimitriou and Yannakakis in 1991 [28], is an online problem on uncertain graphs. Given an uncertain graph , a source s and a destination t, a traveler must find a walk from s to t, where an edge e is known to have survived with probability only when the walk reaches one of its end points, after which it does not fail, conditioned on it having survived. The objective is to minimize the expected path length over the distribution on the possible worlds and the walker’s choices.
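To make the reliability computation concrete, the following is a minimal brute-force sketch, assuming independent edge failures (the RF model of Section 2.1). The function names `connects` and `reliability`, and the dictionary `p` of survival probabilities, are illustrative choices and do not come from the cited works. The enumeration visits all $2^m$ edge subsets, so it is only meant for very small instances and says nothing about how the cited algorithms work.

```python
from itertools import combinations

def connects(world, terminals):
    """Return True if all terminals lie in one component of the edge set `world`."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v in world:
        parent[find(u)] = find(v)
    return len({find(t) for t in terminals}) == 1

def reliability(edges, p, terminals):
    """Probability that `terminals` are connected, summing over all edge subsets."""
    total = 0.0
    for k in range(len(edges) + 1):
        for world in combinations(edges, k):
            chosen = set(world)
            prob = 1.0
            for e in edges:
                prob *= p[e] if e in chosen else 1.0 - p[e]
            if connects(world, terminals):
                total += prob
    return total

# Triangle in which every edge survives with probability 0.5.
tri_edges = [("a", "b"), ("b", "c"), ("a", "c")]
tri_p = {e: 0.5 for e in tri_edges}
tri_rel = reliability(tri_edges, tri_p, ["a", "b"])
```

On the triangle, the terminals a and b are connected in five of the eight equally likely worlds (the four containing the edge ab, plus the world with only ac and bc).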

Coverage in Uncertain Graphs. Apart from the themes of network flows and connectedness, coverage problems are very practical when the uncertainty is on the survival of the edges. In this framework, the uncertainty is on whether an edge will survive a disaster and the goal is to place facilities in the network such that the expected coverage over the possible worlds is maximized. Each possible world can be thought of as the set of edges which survive a disaster. Formally, an uncertain graph is a succinct description of the set of possible worlds that can arise due to a disaster in which an edge e is known to survive with probability . The nature of dependence among the edge samples is used to model the nature of edge failures in the event of a disaster. As mentioned earlier, a fixed nature of dependence among the edge samples uniquely defines a probability distribution on the possible worlds. From this point, in this paper, we consider uncertain graphs where the uncertainty is on the survival of an edge (recall, the other possibility is on the uncertain edge weight). Further, all the results we present are for a fixed dependence among edge samples and such a fixed dependence among the edge samples is called a distribution model. Given the motivation of disasters, the distribution model is specified based on the dependence among the edge failures (recall, the failure probability of an edge e is ). In this framework, for a fixed distribution model, the function to be optimized is the coverage function.

Definition 1

(Coverage within distance r). The input consists of an uncertain graph and an integer k. The goal is to compute a k-sized vertex set S which maximizes the expected total weight of the vertices which are at distance at most r from S. The expectation is over the possible worlds represented by the uncertain graph.

Naturally, we refer to k as the budget, r as the radius of coverage (the number of hops in the network) and S as the set of vertices where facilities have to be located. For certain graphs and $r = 1$, the facility location with unreliable edges problem is the well-studied budgeted dominating set problem, which is known to be NP-hard [29]. The other case of natural interest is $r = \infty$. In this case, the coverage problem is to find a set S of k vertices so as to maximize the expected number of vertices connected to S. In the case of certain graphs, this is polynomial time solvable, as the problem is to find k connected components whose total vertex weight is the maximum.
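The coverage objective of Definition 1 can be illustrated with a small brute-force sketch. It assumes independent edge failures (the RF model), enumerates every possible world and tries every k-sized set, so it is exponential and intended only to make the definition concrete. The names `covered`, `expected_coverage` and `best_facilities` are hypothetical helpers, not algorithms from the literature surveyed here.

```python
from itertools import combinations

def covered(vertices, world, S, r):
    """Vertices within r hops of S in the graph (vertices, world), found layer by layer."""
    adj = {v: set() for v in vertices}
    for u, v in world:
        adj[u].add(v)
        adj[v].add(u)
    seen, frontier = set(S), set(S)
    for _ in range(r):
        frontier = {w for v in frontier for w in adj[v]} - seen
        if not frontier:
            break
        seen |= frontier
    return seen

def expected_coverage(vertices, edges, p, weight, S, r):
    """Expected total weight covered by S, summed over all 2^m possible worlds."""
    total = 0.0
    for k in range(len(edges) + 1):
        for world in combinations(edges, k):
            prob = 1.0
            for e in edges:
                prob *= p[e] if e in world else 1.0 - p[e]
            total += prob * sum(weight[v] for v in covered(vertices, world, S, r))
    return total

def best_facilities(vertices, edges, p, weight, k, r):
    """Try every k-subset of vertices and return one with maximum expected coverage."""
    return max(combinations(vertices, k),
               key=lambda S: expected_coverage(vertices, edges, p, weight, S, r))

# Path a - b - c, unit weights, each edge survives with probability 0.5.
V = ["a", "b", "c"]
E = [("a", "b"), ("b", "c")]
p = {e: 0.5 for e in E}
w = {v: 1.0 for v in V}
best = best_facilities(V, E, p, w, k=1, r=1)
```

Unbounded radius can be simulated by passing `r = len(vertices)`, since no shortest path uses more hops than that.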

Coverage in Social Networks. Coverage problems also have a natural interpretation in social networks. The dynamics of a social network based on the word-of-mouth effect have been of significant interest in marketing and consumer research [30], where a social network is referred to as an interpersonal network. The work by Brown and Reingen [30] state the different hypotheses for estimating the amount of uncertainty in a relationship between two persons in an interpersonal network. With the advent of social networks on the internet, the works of Domingos and Richardson [4,31] formalized the questions relating to the effective marketing of a product based on interpersonal relationships in a social network. In 1969, the work of Bass [32] had modeled the adoption of products as a diffusion process as a global phenomenon, independent of the interpersonal relationships between people in a society. Kempe, Kleinberg and Tardos (KKT) [14] brought together the earlier works on adoption of products in an interpersonal network and posed the question of selecting the most influential nodes in a social network with an aim to influence the maximum number of people to adopt a certain product or opinion. They considered the uncertainty in the social network to be the influence exerted by one individual on another individual and this is naturally modeled as an uncertain graph . The propagation of influence is modeled as a diffusion process which is a function of time as in Bass [32]. The influence maximization problems in KKT are considered under distribution models, which are described as diffusion phenomena, called the Independent Cascade model and the Linear Threshold Model. Among these two models, the Independent Cascade model is defined as a sampling procedure whose outcome is an edge subgraph of a given uncertain graph. Thus, this model is more relevant for our study of uncertain graphs and the IC model is defined in Section 2. 
In the influence maximization problems considered in KKT [14], the aim is to select k influential people S such that the expected number of people connected to S is maximized. The expected number of vertices, over the distribution model, connected to a set S is called the influence of a set S or the expected coverage of S and is denoted by . Thus, the influence maximization problem is to find the set . In Section 2, we discuss the computational complexity of for different distribution models. Thus, the influence maximization problem in Reference [14] is essentially a facility location problem where the distribution model is specified by a diffusion phenomenon, and each input instance consists of an uncertain network and a budget k.

Coverage and Facility Location. The survey due to Snyder [33] in 2006 collects the vast body of results on the facility location problem in uncertain graphs into a single research article. The earliest result known to us, due to Daskin [19] who formulated the maximum expected coverage problem where vertices are uncertain, is different from our case where the uncertainty is on the edges and the vertices are known. To the best of our knowledge, it was Daskin’s work [19] that considered the general setting of dependence among vertex failures. In this case, the probability distribution would have been defined uniquely on the possible worlds which would have been the set of induced subgraphs. Subsequently, many variants of the facility location problem for uncertain graphs have been studied for different distribution models where the uncertainty is on the survival of edges.

Eiselt et al. [34] considered the problem with single edge failure and for . In this case, exactly one edge is assumed to have failed after a disaster and the objective is to place k facilities such that the expected weight of vertices not connected to any facility is minimized. In this case, each subgraph consisting of all the edges except one is a possible world. This problem is known to be polynomial time solvable for any . When the distribution model is the Random Failure model and , the most reliable source (MRS) is well-studied [35,36,37,38]. The input is an uncertain graph and . The goal is to select one vertex v such that the expected number of vertices connected to v is maximized. Melachrinoudis and Helander [38] gave a polynomial time algorithm for the MRS problem on trees, followed by linear time algorithm on uncertain trees by Ding and Xue [37]. Colbourn and Xue [35] gave an -time algorithm for the MRS problem on uncertain series-parallel graphs. Wei Ding [36] gave an -time algorithm for the MRS problem on uncertain ring graphs.

Apart from the RF model, the Linear Reliability Ordering ( LRO) distribution model has been well-studied recently. Under this distribution model, for each integer , the facility location problem is studied as Max-Exp-Cover-r-LRO problem [11,12,13]. For the case when , the problem is known as the Max-Exp-Cover-LRO problem. Hassin et al. [11] presented an algorithm to solve the Max-Exp-Cover-LRO problem via a reduction to the Max-Weighted-k-Leaf-Induced-Subtree problem. They then showed that the Max-Weighted-k-Leaf-Induced-Subtree problem can be solved in polynomial time on trees using a greedy algorithm. Consequently, they showed that the Max-Exp-Cover-LRO problem can be solved in polynomial time. For , the Max-Exp-Cover-1-LRO problem is NP-complete on planar graphs and the hardness follows from the hardness of budgeted dominating set due to Khuller et al. [29]. The Max-Exp-Cover-1-LRO admits a -approximation algorithm [12,13]. Similarly, Kempe et al. [14] shows that the influence maximization under the IC distribution model and the LT distribution model has a approximation algorithm. Both these results naturally follow due to the submodularity of the expected neighborhood size function, the monotonicity (as r increases) and submodularity of the expected coverage function and the result of Nemhauser et al. [39] on greedy maximization of submodular functions. In the setting of parameterized algorithms, the Max-Exp-Cover-1-LRO problem is W[1]-complete for solution size as the parameter and this follows from the hardness of budgeted dominating set problem. An FPT algorithm for the Max-Exp-Cover-1-LRO problem with treewidth as the parameter is presented in Reference [13]. Formally stated, given an instance of the Max-Exp-Cover-1-LRO problem, an optimal solution can be computed in time where t is the treewidth of the graph which is presented in the input as a tree decomposition.
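The greedy strategy analysed by Nemhauser et al. [39] can be sketched generically as follows; for a monotone submodular function under a cardinality constraint it is known to achieve a $(1 - 1/e)$ approximation. The sketch assumes an evaluation oracle `f` that returns the objective value of a list of chosen elements. In the setting above, `f` would be the expected coverage, whose efficient evaluation depends on the distribution model and is not addressed here. The toy set-cover oracle at the end is purely illustrative.

```python
def greedy_max(ground_set, f, k):
    """Pick up to k elements, each time adding the one with the largest marginal gain."""
    chosen = []
    for _ in range(k):
        base = f(chosen)
        best, best_gain = None, 0.0
        for v in ground_set:
            if v in chosen:
                continue
            gain = f(chosen + [v]) - base
            if best is None or gain > best_gain:
                best, best_gain = v, gain
        if best is None:  # ground set exhausted
            break
        chosen.append(best)
    return chosen

# Toy oracle (an assumption for illustration): number of items covered by the chosen sets.
sets = {"x": {1, 2, 3}, "y": {3, 4}, "z": {5}}

def cover_size(chosen):
    covered_items = set()
    for name in chosen:
        covered_items |= sets[name]
    return len(covered_items)

picked = greedy_max(["x", "y", "z"], cover_size, 2)
```

Ties are broken in favour of the element that appears first in `ground_set`, which keeps the procedure deterministic.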

Finding Communities in Uncertain Graphs. Finding communities is a significant problem in social network and in bioinformatics. A natural graph theoretic model for a community is a dense subgraph. A well-known dense subgraph is the core. Given an integer d, a graph is said to be d-core if degree of every vertex is at least d. The way of obtaining the unique maximal induced subgraph of a graph G which is a d-core is to repeatedly discard vertices of degree less than d. If the procedure terminates with a non-empty graph, then the graph is a d-core of the graph G. A vertex v is said to be in a d-core if there is a d-core which contains v. This idea is generalized to the uncertain graph framework as follows: Given an uncertain graph and the distribution model is the RF model, the d-core probability of a vertex , denoted by , is the probability that v is in the d-core of a possible world. In other words, , where is an indicator function that takes value one if and only if there is a d-core of H that contains v, and is probability of the possible world H. In this setting, we consider the -core problem defined by Peng et al. [16]. We refer to this problem as the Individual Core problem and it is defined as follows.

Definition 2

(Individual-Core). The input consists of an uncertain graph , an integer d and a probability threshold . The objective is to compute an such that for each , .

Peng et al. [16] show that the Individual-Core problem is NP-complete. Prior to the results of Peng et al. [16], Bonchi et al. [15] introduced the study of the d-core problem in uncertain graphs. We refer to the d-core problem on uncertain graphs as the Probabilistic-Core problem, defined as follows.

Definition 3

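(Probabilistic-Core). Given an uncertain graph $\mathcal{G} = (V, E, p)$, an integer d and a probability threshold $\theta \in [0, 1]$, the aim of the Probabilistic-Core problem is to find a set $K \subseteq V$ such that the probability that K is a d-core in a possible world of $\mathcal{G}$ is at least θ.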

The problem of deciding on the existence of such a set K can be shown to be NP-hard using the hardness result given in Reference [16]. On the other hand, if $p(e) = 1$ for all $e \in E$, then the d-core problem is polynomial time solvable, as described at the beginning of this discussion.
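The peeling procedure for certain graphs mentioned above (repeatedly discard vertices of degree less than d) can be sketched as follows. The adjacency-dictionary representation and the function name `d_core` are assumptions made for illustration.

```python
def d_core(adj, d):
    """Return the vertex set of the maximal d-core of a simple graph (possibly empty).

    `adj` maps each vertex to the set of its neighbours.
    """
    alive = {v: set(nbrs) for v, nbrs in adj.items()}  # working copy
    queue = [v for v, nbrs in alive.items() if len(nbrs) < d]
    removed = set()
    while queue:
        v = queue.pop()
        if v in removed:
            continue
        removed.add(v)
        for u in alive[v]:
            alive[u].discard(v)
            # A neighbour whose degree drops below d must be discarded as well.
            if u not in removed and len(alive[u]) < d:
                queue.append(u)
        alive[v] = set()
    return set(adj) - removed

# Triangle a, b, c with a pendant vertex x attached to a.
graph = {
    "a": {"b", "c", "x"},
    "b": {"a", "c"},
    "c": {"a", "b"},
    "x": {"a"},
}
core2 = d_core(graph, 2)
```

Each vertex is removed at most once and each edge is touched a constant number of times, so the sketch runs in time linear in the size of the graph.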

A chronological listing of different optimization problems on uncertain graphs is presented in Table 1. The tabulation shows that there are many distribution models under which different optimization problems could be considered. The goal would be to understand the complexity of computing the expectation when the input is presented as an uncertain graph. Indeed, any NP-Complete problem on certain graphs remains NP-Complete for any distribution model when the inputs are uncertain graphs. Therefore, our natural focus is on Exact and Parameterized Computation of the expectation on uncertain graphs. The goal of the area of exact and parameterized computation is to classify problems based on their computational complexity as a function of input parameters other than the input size. The desired solution size, the treewidth of an input graph, and the input size are natural well-studied parameters. An algorithm with running time $f(k) \cdot n^{O(1)}$, where f is a computable function and n is the input size, is said to be a Fixed Parameter Tractable (FPT) algorithm with respect to the parameter k. Interestingly, there are many problems that do not have FPT algorithms with respect to some parameters, while they have FPT algorithms with respect to others. A rich complexity theory has evolved over nearly four decades of research, with the W-hierarchy being the central classification. In this hierarchy, problem classes are ordered in increasing order of computational complexity, and the problems which have FPT algorithms are considered the simplest. The complete history of this line of research can be found in the most recent textbook [40].

Table 1.

A chronology of studies on uncertain graphs.

1.3. Our Questions and Results

Given our focus on exact and parameterized algorithms, we think that it should be possible to classify problems based on the hardness of efficiently computing the expectation over different distribution models. One of the contributions of this paper is to collect many of the known algorithmic results on uncertain graphs and the different distribution models for which results have been obtained. Our focus is on uncertain graph optimization problems on the LRO and RF distribution models, and these models have been the focus of many previous results in the literature. Our results add to the knowledge about these two models and are among the first parameterized algorithms on uncertain graphs. They also give an increased understanding of how treewidth of uncertain graphs can be used along with the structure of the distribution models to compute the expectation in time parameterized by the treewidth. Finally, the motivation for the choice of these two models is that the number of possible worlds with non-zero probability under the LRO model is equal to the number of edges m, while the number of possible worlds under the RF model is an exponential in m, which is the maximum number of possible worlds. We define the different distribution models listed in Section 1.1 in detail and some of their properties are identified from relationships between the models in Section 2.

Our first case study on parameterized algorithms under the RF model is on maximizing expected coverage in uncertain graphs. The starting point of our work is the reduction of the Max-Exp-Cover-LRO problem to the Max-Weighted-k-Leaf-Induced-Subtree problem, which can be solved in polynomial time [11,12]. The reduction is from maximizing the expected coverage to maximizing the total weight of a combinatorial parameter. However, the reduction does not work for Max-Exp-Cover-1-LRO, as that problem is at least as hard as budgeted dominating set. In the case when the graph has bounded treewidth, we showed in a previous work [13] that Max-Exp-Cover-1-LRO has an FPT algorithm parameterized by treewidth. The dynamic programming (DP) algorithm depends on properties specific to the LRO distribution model. On the other hand, the status of the question is unclear when the distribution model is the RF model. We address this by presenting a DP algorithm for the Max-Exp-Cover-1-RF problem in Section 4, which is an FPT algorithm parameterized by the product of the treewidth and max-degree of the input graph.

The second case study on parameterized algorithms under the RF model is on finding a d-core in uncertain graphs. In Section 5, we consider the Probabilistic-Core problem, and design a polynomial time algorithm for the LRO distribution model. For the RF distribution model, we observe that the Probabilistic-Core problem is W[1]-hard for the parameter d, where d is the minimum degree of the core. Then we design a DP algorithm, which is an FPT algorithm for Probabilistic-Core with respect to treewidth as the parameter.

Essentially in both the case studies, given an uncertain graph under the RF model, the DP uses the tree decomposition to efficiently compute the expected value, which is expressed as a weighted summation over the exponentially many possible worlds.

2. Distribution Models for Uncertain Graphs

As mentioned in Section 1.1, a distribution model along with an uncertain graph uniquely describes the probability distribution on the possible worlds. We describe the distribution model by describing a corresponding sampling procedure on the edges of $\mathcal{G}$. This formalism standardizes the nomenclature of optimization problems on uncertain graphs. For example, for the coverage problems on uncertain graphs, the problem names Max-Exp-Cover-1-LRO and Max-Exp-Cover-1-RF clearly state the distribution model and the radius of coverage relevant for the problem. An instance of each of these problems is an uncertain graph $\mathcal{G}$ and an integer k. In this section, we present an edge sampling procedure from a given uncertain graph $\mathcal{G}$ corresponding to a distribution model on $\mathcal{G}$. The outcome of a sampling procedure is a possible world, which is an edge subgraph H of the graph G. An edge $e \in E(H)$ is called a survived edge and an edge $e \in E \setminus E(H)$ is called a failed edge. We also present some new observations regarding the different distribution models.

2.1. Random Failure Model

The Random Failure (RF) model is the most natural distribution model. In this sampling procedure, an edge $e \in E$ is selected with probability $p(e)$ independent of the outcome of every other edge in E. Thus, each edge subgraph of G is a possible world and, for an edge subgraph H, the probability that H is the outcome of the sampling procedure is denoted by $\Pr(H)$, which is given by the equation $\Pr(H) = \prod_{e \in E(H)} p(e) \prod_{e \in E \setminus E(H)} (1 - p(e))$.
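The RF sampling procedure and the resulting distribution on possible worlds can be sketched as follows. The function names and the dictionary `p` of survival probabilities are illustrative assumptions; the enumeration in `all_worlds` is exponential in the number of edges and serves only to check that the world probabilities sum to one on a small example.

```python
import random
from itertools import combinations

def world_probability(edges, p, survived):
    """Probability of the possible world whose edge set is `survived` under RF."""
    prob = 1.0
    for e in edges:
        prob *= p[e] if e in survived else 1.0 - p[e]
    return prob

def all_worlds(edges):
    """Yield every edge subset; an uncertain graph with m edges has 2^m of them."""
    for k in range(len(edges) + 1):
        for subset in combinations(edges, k):
            yield frozenset(subset)

def sample_world(edges, p, rng):
    """Draw one possible world by sampling each edge independently."""
    return frozenset(e for e in edges if rng.random() < p[e])

edges = [("a", "b"), ("b", "c"), ("a", "c")]
p = {("a", "b"): 0.5, ("b", "c"): 0.8, ("a", "c"): 0.1}
total = sum(world_probability(edges, p, w) for w in all_worlds(edges))
one_world = sample_world(edges, p, random.Random(0))
```

Passing an explicit `random.Random` instance keeps the sampling reproducible, which is convenient when estimating expectations by repeated sampling.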

2.2. Independent Cascade Model

The sampling procedure to define the Independent Cascade (IC) model is very naturally described by a diffusion process which proceeds in rounds. The process is as defined in Reference [14]. The diffusion process starts after round 0. In round 0, is a non-empty set of vertices and these are called active vertices. The process maintains a set of edges after round . The vertices in are said to be active. The sampling process is as follows. If a vertex v is in , then it remains active in round t also, that is . The diffusion process in round is as follows: If v is in , that is v first becomes active after round , then consider each edge such that u is not in . Each such edge e is sampled with probability independent of the other outcomes. If is selected (that is, it survives), then u is added to . We reiterate that the edges incident on v will not be sampled in subsequent rounds. The sampling procedure terminates in not more than rounds. The outcome of this sampling procedure is the edge subgraph formed by a set of those edges which were selected when the first end point of the edge becomes active. Kempe et al. [14] showed that for any edge subgraph H of , the probability that H is the outcome is given by

Observation 1.

For an uncertain graph the RF Model and the IC Model are identical.

The next distribution model is a generalization of the RF model and was introduced by Gunnec and Salman [43].

2.3. Set-Based Dependency (SBD) Model

The uncertain graph satisfies the additional properties that E is partitioned into of E, for some . Further, p satisfies the property that for any two edges and that belong to the same part in the partition, . Typically, this partition is a fixed partition of E coming from the domain where the edge failures occur according to the SBD model. The sampling procedure definition of the SBD model is as follows: the edge sets are considered in order from to , and for , the edges in the set are considered before the edges in the set . For each , the edges in are considered in decreasing order of the value p. For each , when an edge is considered, it is sampled with probability independent of the outcome of the previous samples. If the outcome selects the edge e (that is, if e survives), then the next edge in is considered. Otherwise, all the remaining edges of that are to be considered after e, are considered to have failed, and the set is considered. The procedure terminates after considering . The edge subgraph consisting of the selected edges (survived edges) is the outcome of this sampling procedure. The number of possible worlds that have a non-zero probability of being an outcome of the sampling procedure is . To summarize, under the SBD model, for each , the survival of an edge e in a set implies that every edge with a greater survival probability than that of e would have survived. Further, the edge samples of edges in two different sets are mutually independent. As a consequence of this, we have the following observation.

Observation 2.

The RF model on an uncertain graph is identical to the SBD model on when E is partitioned into m sets, each containing a single edge of E.
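The structure of the SBD outcomes can be sketched as follows: within each part, the surviving edges always form a prefix of the part sorted by decreasing survival probability, and the parts are combined independently. The function name `sbd_possible_worlds` and the toy data are hypothetical. The sketch only enumerates the edge sets the procedure can produce; it does not assign probabilities to them.

```python
import itertools


def sbd_possible_worlds(parts, p):
    """Enumerate the edge sets that the SBD sampling procedure can output.

    parts: list of lists of edges (a partition of E);
    p: dict mapping each edge to its survival probability.
    """
    prefix_choices = []
    for part in parts:
        # Edges of a part are considered in decreasing order of p.
        ordered = sorted(part, key=lambda e: p[e], reverse=True)
        # Once an edge fails, all later edges of the part fail too,
        # so the survivors of a part are a prefix of `ordered`.
        prefix_choices.append([ordered[:i] for i in range(len(ordered) + 1)])
    worlds = []
    for combo in itertools.product(*prefix_choices):
        worlds.append(frozenset(e for prefix in combo for e in prefix))
    return worlds


p = {("a", "b"): 0.9, ("b", "c"): 0.5, ("c", "d"): 0.7}
two_parts = sbd_possible_worlds([[("a", "b"), ("b", "c")], [("c", "d")]], p)
singletons = sbd_possible_worlds([[e] for e in p], p)   # one edge per part
one_part = sbd_possible_worlds([list(p)], p)            # a single part
```

With one edge per part the enumeration returns all 2^m edge subsets, matching the RF model, while a single part yields only m + 1 nested worlds.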

2.4. Linear Reliability Ordering (LRO) Model

When the partition of E consists of only one set, the SBD model is called the Linear Reliability Ordering (LRO) model, introduced by Hassin et al. [11]. Let . Then, under the LRO model it follows that for each , . Further, the set of possible worlds is the set of graphs where , , and for each , . The following lemma, shown by Hassin et al. [11], describes the probability distribution on the possible worlds uniquely defined by the LRO model.

Lemma 1

(Hassin et al. [11]). For , the probability of the possible world is given by

Coming back to the SBD model, on the uncertain graph and E partitioned into for some , the probability of an outcome H under the SBD model naturally follows from Lemma 1. The idea is to consider an outcome of the SBD model as ℓ independent samples from the LRO model on the uncertain graphs , where for each , is the function p restricted to .

This completes our description of the distribution models known in the literature. We next present two dynamic programming algorithms on the input uncertain graph , when the graph is presented as a nice tree decomposition. The algorithms compute an expectation under the RF model and have worse running times than that of corresponding algorithms for computing the expectation under the LRO model.

3. Definitions Related to Graphs

Every graph we consider in this work is a simple undirected graph unless stated otherwise. A graph is an undirected graph with vertex set V and edge set E. We denote the number of vertices and edges by n and m, respectively. For a vertex , denotes the set of neighbors of v and is the closed neighborhood of v. For each vertex , denotes the degree of the vertex v in G. When G is clear from the context, is used. The maximum degree of the graph G, denoted by , and the minimum degree of the graph G, denoted by , are the maximum and minimum degree of its vertices. When G is clear from the context, and are used. Other than this, we follow the standard graph-theoretic terminology from Reference [44]. We define some special notation for uncertain graphs as follows. Given a vertex , let denote the set of all edges incident on v. Given a subset of vertices , let denote the edge set of the vertex-induced uncertain subgraph . Similarly, given an edge set , let denote the vertex set of the edge-induced uncertain graph .

We study the parameterized complexity of the coverage problems and k-core problem on uncertain graphs. We follow the standard parameterized complexity terminologies from Reference [40]. We define the parameter treewidth that will be relevant to our discussion.

Definition 4

(Tree Decomposition [45,46]). A tree decomposition of a graph G is a pair such that H is a tree and . For each node , is referred to as bag of i. The following three conditions hold for a tree decomposition of the graph G.

- (a) For each vertex , there is a node such that .

- (b) For each edge , there is a node such that .

- (c) For each vertex , the induced subtree of the nodes in H that contains v is connected.
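The three conditions above can be checked mechanically. The sketch below is an illustrative validator, not part of the paper: the function name `is_tree_decomposition`, the adjacency-dictionary representation of the tree, and the toy example are assumptions, and the code takes for granted that `tree_adj` already describes a tree.

```python
from collections import deque


def is_tree_decomposition(vertices, edges, tree_adj, bags):
    """Check conditions (a), (b), (c) for a candidate tree decomposition.

    tree_adj: dict node -> set of neighbouring tree nodes (assumed to be a tree);
    bags: dict node -> set of graph vertices.
    """
    # (a) Every vertex appears in at least one bag.
    if any(all(v not in bag for bag in bags.values()) for v in vertices):
        return False
    # (b) Both endpoints of every edge appear together in some bag.
    for u, v in edges:
        if not any(u in bag and v in bag for bag in bags.values()):
            return False
    # (c) The tree nodes whose bags contain v induce a connected subtree.
    for v in vertices:
        nodes = {i for i, bag in bags.items() if v in bag}
        start = next(iter(nodes))
        seen = {start}
        queue = deque([start])
        while queue:
            i = queue.popleft()
            for j in tree_adj[i]:
                if j in nodes and j not in seen:
                    seen.add(j)
                    queue.append(j)
        if seen != nodes:
            return False
    return True


# Path graph a-b-c-d with a path-shaped decomposition 0 - 1 - 2.
vertices = ["a", "b", "c", "d"]
edges = [("a", "b"), ("b", "c"), ("c", "d")]
tree_adj = {0: {1}, 1: {0, 2}, 2: {1}}
good_bags = {0: {"a", "b"}, 1: {"b", "c"}, 2: {"c", "d"}}
bad_bags = {0: {"a", "b"}, 1: {"c", "d"}, 2: {"b", "c"}}  # b's nodes 0 and 2 are not adjacent
```

In `bad_bags`, conditions (a) and (b) hold but the bags containing b are not connected in the tree, so condition (c) fails.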

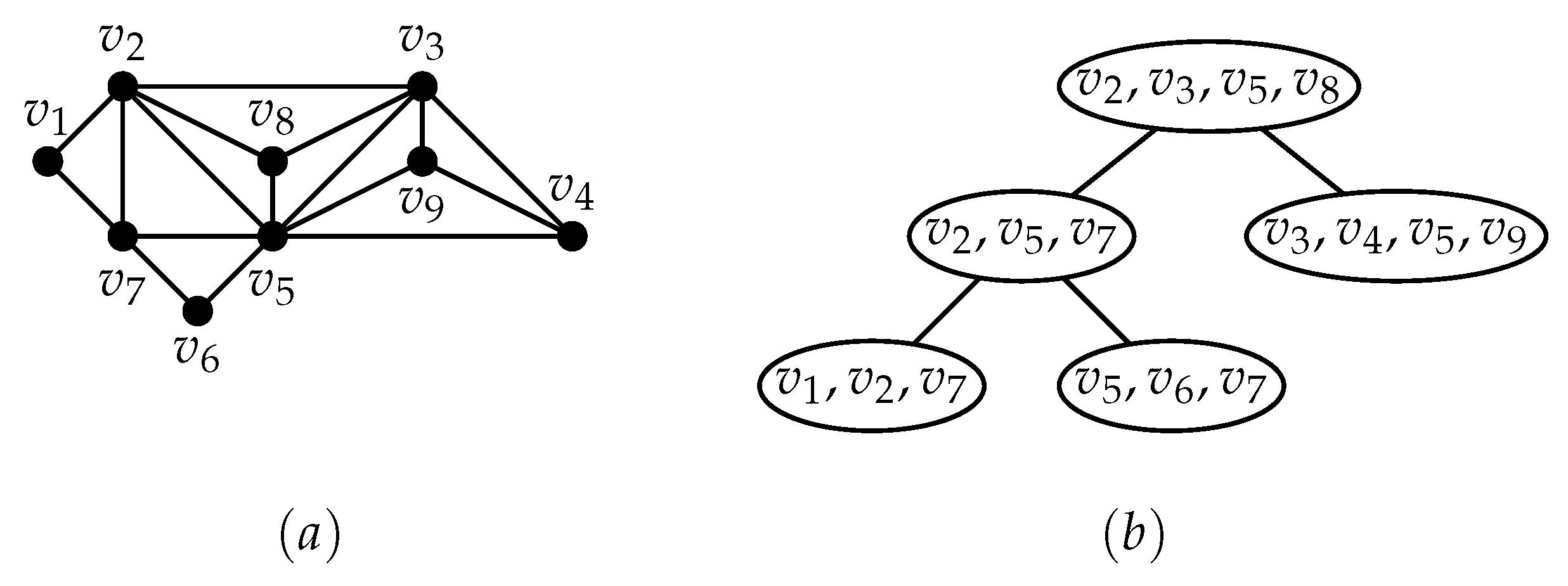

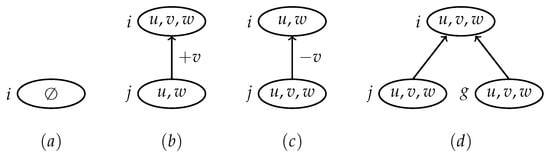

The width of a tree decomposition is the . The treewidth of a graph, denoted by , is the minimum width over all possible tree decompositions of G. An example of a tree decomposition is illustrated in Figure 2. For our algorithm, we require a special kind of decomposition, called the nice tree decomposition which we define below.

Figure 2.

(a) A graph with 9 vertices; (b) An optimal tree decomposition with treewidth 3.

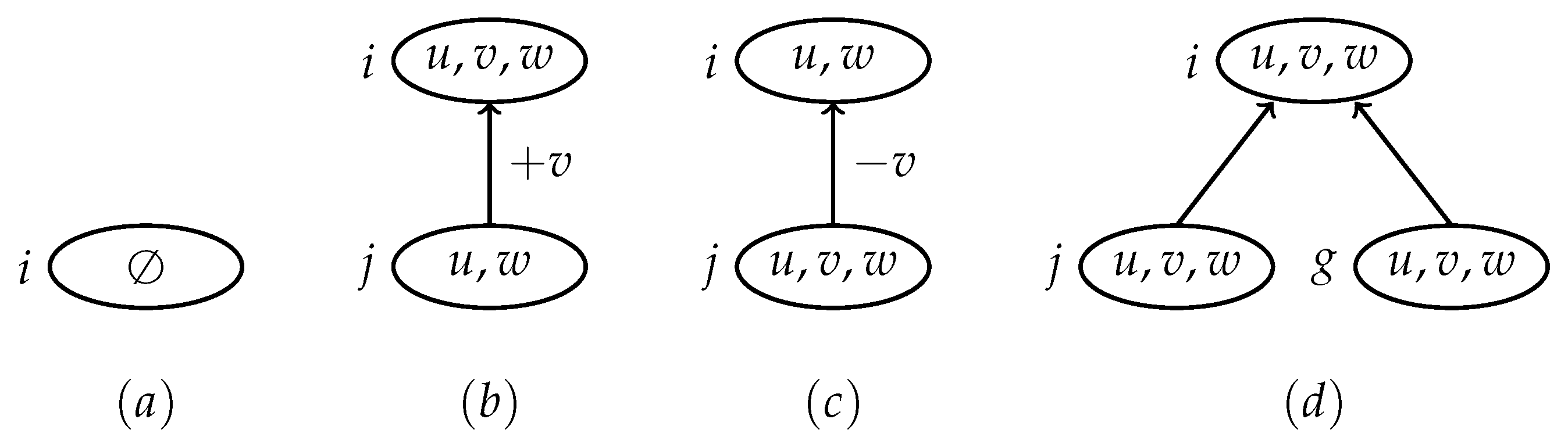

Definition 5

(Nice tree decomposition [46]). A nice tree decomposition is a tree decomposition, rooted at a node r with , and each node in the tree decomposition is one of the following four types of nodes.

- 1. Leaf node. A node with no child and .

- 2. Introduce node. A node with one child j such that for some .

- 3. Forget node. A node with one child j such that for some .

- 4. Join node. A node with two children j and g such that .
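The four node types can be told apart from the bags alone. The following sketch assumes the standard conventions for nice tree decompositions: a leaf has an empty bag, an introduce node adds exactly one vertex to its child's bag, a forget node removes exactly one vertex, and a join node has two children with identical bags. The function name `classify_node` is hypothetical.

```python
def classify_node(bag, child_bags):
    """Return 'leaf', 'introduce', 'forget', 'join', or None if the node is not nice.

    bag: set of vertices in the node's bag;
    child_bags: list with the bags of the node's children.
    """
    if len(child_bags) == 0:
        # Assumption: leaves carry an empty bag.
        return "leaf" if not bag else None
    if len(child_bags) == 1:
        child = child_bags[0]
        # Introduce: the bag is the child's bag plus exactly one new vertex.
        if len(bag) == len(child) + 1 and child < bag:
            return "introduce"
        # Forget: the bag is the child's bag minus exactly one vertex.
        if len(bag) + 1 == len(child) and bag < child:
            return "forget"
        return None
    if len(child_bags) == 2 and bag == child_bags[0] == child_bags[1]:
        return "join"
    return None
```

A decomposition is nice under these conventions when `classify_node` returns a type for every node.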

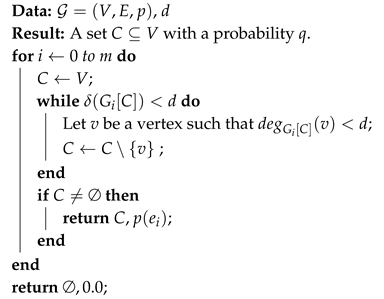

An example of four types of nodes is illustrated in Figure 3. Given a tree decomposition of a graph G with width k, a nice tree decomposition with same width and nodes can be computed in time [46]. Hereafter, we will assume that the tree decompositions considered are nice. For each node , let denote the subtree rooted at i. Let . Let be the set of all vertices in the bag of nodes in the subtree .

where is the set of all children of i in H. We use j and g to denote the two children of a join node i in H, where j denotes the left child and g denotes the right child. In case i has only one child, as in the case of introduce and forget nodes, only the left child j is well-defined and g does not exist. In this case, is taken to be the empty set. We refer to Reference [40] for a thorough introduction to treewidth and its algorithmic properties.

Figure 3.

An example of leaf , introduce , forget and join nodes. Directed edges denote child to parent link.

4. Max-Exp-Cover-1-RF Problem is FPT by the Product of Treewidth and Max-Degree

An instance of Max-Exp-Cover-1-RF consists of a tuple where is an uncertain graph, w is a function that assigns weight to each vertex v, and k is the budget. The goal is to find a set such that and the expected total weight of the vertices dominated by S is maximized. The expectation is computed over the probability distribution uniquely defined on the possible worlds by the RF model. We introduce the function which we refer to as the coverage function. The two arguments of the coverage function are subsets T and S of the vertex set V, and the value is the expected coverage of T by S, where the expectation is computed over the possible worlds. For any subsets , the Coverage of T by S, denoted by , is . For a vertex and , the expected coverage of v by the set S, denoted by , is given by

Note that if a set is a singleton set, then we abuse notation a little and write the element instead of the set.
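To make the coverage function concrete, the sketch below evaluates the expected coverage of a single vertex under the RF model in two ways: with a closed form and by brute-force enumeration of the possible worlds. The closed form is an assumption about the elided equation: a vertex in S contributes its full weight, and a vertex outside S is covered unless every edge to a neighbor in S fails. All names and the toy instance are hypothetical.

```python
import itertools


def expected_coverage(v, S, adj, p, w):
    """Closed-form expected coverage of v by S under the RF model (assumed form)."""
    if v in S:
        return w[v]
    miss = 1.0
    for u in adj[v]:
        if u in S:
            # v stays uncovered by u only if the edge {u, v} fails.
            miss *= 1.0 - p[frozenset((u, v))]
    return w[v] * (1.0 - miss)


def brute_force_coverage(v, S, edges, p, w):
    """Same quantity, obtained by summing over all 2^m possible worlds."""
    total = 0.0
    for r in range(len(edges) + 1):
        for world in itertools.combinations(edges, r):
            prob = 1.0
            for e in edges:
                prob *= p[e] if e in world else 1.0 - p[e]
            # v is covered if it is in S or has a surviving edge to a vertex of S.
            covered = v in S or any(v in e and (e - {v}) & S for e in world)
            if covered:
                total += prob * w[v]
    return total


edges = [frozenset(("a", "b")), frozenset(("b", "c")), frozenset(("a", "c"))]
p = {edges[0]: 0.9, edges[1]: 0.5, edges[2]: 0.2}
w = {"a": 1.0, "b": 2.0, "c": 3.0}
adj = {x: set() for x in w}
for e in edges:
    x, y = tuple(e)
    adj[x].add(y)
    adj[y].add(x)

S = {"a", "c"}
closed = expected_coverage("b", S, adj, p, w)
brute = brute_force_coverage("b", S, edges, p, w)
```

The enumeration is exponential in m and only serves as a check on small instances; the closed form is what a dynamic program over the tree decomposition would decompose.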

Our algorithm for the Max-Exp-Cover-1-RF follows the classical bottom-up approach for dynamic programming based on the nice tree decomposition. We compute a certain number of candidate solutions for the subproblem at each node , using only the candidate solution values maintained in the children of i. At each node , we have candidate solutions which are stored in a table denoted by . The final optimal solution is obtained from the solutions stored in at the root node r of H. At any node i in H, the expected coverage of a vertex by a set S in the subproblem at node i in H is computed by decomposing carefully to avoid over-counting. Towards this end, we introduce notation for the coverage conditioned on the event that some edges have failed. We denote this event by a function f and call this the surviving neighbors (SN) function. The SN-function has the property that for each , . In other words, we are interested in the expected coverage conditioned on the event that for each , all the edges to vertices in have failed.

Definition 6.

Let u be a vertex and be a set and f be an SN-function such that . The conditional coverage of u by S restricted by f is defined to be

Extending this definition to sets, for any two vertex sets , we define .

For each , is the expected coverage of u by conditioned on the event that u is not covered by any vertex from the set . Further, is the product of and the probability of sampling a subgraph in which u has a neighbor in and no neighbor from . We refer to as the conditional coverage of u by S (we leave out the phrase restricted by f).

Lemma 2.

Let and such that , and consider an SN-function f such that . Then .

Proof.

Hence the proof. □

4.1. Recursive Formulation of the Value of a Solution

Let be a set of size k for the Max-Exp-Cover-1-RF problem in . We now present a recursive formulation to compute the expected coverage of V by S. Throughout this section S denotes this set of size k. Let i be a node in H. Let j and g be the children of i. In case i is either introduce node or forget node, then g is considered to be a null node with . In node i, let , and . Let . For the solution S, the set can be partitioned into five sets as follows.

Further, for the solution S, define the SN-function as follows:

Note: f is dependent on S and i, and wherever f is used, it must be used as per the definition at the corresponding node in the tree decomposition.

Let be a subset of size k, i be a node in H in the nice tree decomposition, f be the SN-function for defined using S, be a partition of , and , and be as defined above. The following two lemmas are useful in setting up a recursive definition of and a bottom-up evaluation of the recurrence.

Lemma 3.

.

Proof.

The expected coverage of V by the set S is given by Since V is partitioned into three disjoint sets , , and , we get

By applying Lemma 2 to , it follows that

Further, , since , it follows that

By the definition of f and , . Therefore we get Hence the lemma. □

As a corollary it follows that . Recall that , and f is such that for all . We now show that for each node , can be written in terms of the appropriate sub-problems in children j and g of the node i.

Lemma 4.

Proof.

By definition, the expected coverage of by restricted by f is given by the following equations.

The second equation follows from the first due to the definition of the partition , and the fact that for all , . The third equation follows from the second due to the fact that and , since L and R are sets for which . □

Lemmas 3 and 4 show that the expected coverage of a set S of size k can be computed in a bottom-up manner over the nice tree decomposition. Further, at a node the expected coverage of by a set S is , where f is uniquely determined by S, i and the tree decomposition. Also, is decomposed into six terms based on the partition which is uniquely determined by S, i and the tree decomposition. Of these six terms, five are computed at the node , and one term comes from j and g, which are the children of i. Therefore, the search for the optimum S of size k can be performed in a bottom-up manner by enumerating all possible choices of the five-way partition of and all possible choices of the SN-function f at . For each candidate partition of and the SN-function f, the optimum is computed by considering the compatible solutions at and . This completes the recursive formulation of the expected coverage of a set S of size k. We next present the bottom-up evaluation of the recurrence to compute the optimum set which is .

4.2. Bottom-Up Computation of an Optimal Set

For each node , we associate a table . Each row in the table is a triple which consists of an integer b, a five-way partition of and an SN-function f defined on . Throughout this section we assume that for vertices , . The columns corresponding to a row are a vertex set and a value . Let S denote . To define the value associated with S, consider , and let and . Then, . The set is a set S of size b such that and the associated value is maximized.

Leaf node. Let be a leaf node with bag . The only possible five-way partition of an empty set is the set with five empty sets and the budget is . The only valid SN-function is . Therefore, the value of the corresponding row in the table is

It is clear that the empty set is the set that achieves the maximum for the Max-Exp-Cover-1-RF problem on the empty graph with budget . Therefore, at the leaf nodes in H, the table maintains the optimum solution for each row. The time to update an entry is .

Introduce node. Let i be an introduce node with child j such that for some . Let be an integer, be a five-way partition of and f be the SN-function defined on . The computation of the table entry is split into two cases, depending on whether the vertex v belongs to the set A in the partition or not.

- Case . Define . Let denote the partition of obtained by removing vertex v from the set A of the partition . Let be the SN-function defined as follows: Then,

- Case . Since v is in but not in it follows that . Therefore, the coverage of the vertex v by the vertices that occur only in is zero. Let be the partition of obtained by removing the vertex v from the appropriate set in the partition . The SN-function is defined as follows on the set : For , .

Forget node. Let i be a forget node with child j such that for some . Let be a budget, be a five-way partition of and f be an SN-function. We consider the following five-way partitions of .

Let be the SN-function defined as follows: for , and . Let . is defined as follows:

Join node. Let i be a join node with children j and g such that . Let be a budget, be a five-way partition of and f be an SN-function. We consider the sets and of all SN-functions defined on and , respectively, satisfying the following conditions:

- For each , and .

- For each , and .

- For each , and are defined as follows. For each partition , and .

We then consider all possible candidates for such that and such that . Each such candidate defines a of and a of . Let and denote the set of all such candidate partitions of and , respectively.

Let , , , and be the values at which the maximum value for Equation (2) is achieved.

The value of the row corresponding to in is given as follows:

We now prove that the update steps presented above are correct.

Lemma 5.

For each node , for each row in , the pair , is such that , and this is the maximum possible value.

Proof.

The proof is by induction on the height of a node in H. The height of a node i in a rooted tree H is the distance to the farthest leaf in the subtree rooted at i. The base case is when i is a leaf node in H, and clearly its height is 0. For a leaf node i with , the row with , and is the only valid row entry and its value is 0. This completes the proof of the base case. Let us assume that the claim is true for all nodes of height at most . We prove that if the claim is true at all nodes of height at most , then it is true for a node of height h. Let b be a budget, be a partition of and f be an SN-function on . Let . For any optimal where , we prove that

We proceed by considering the type of node i so that the induction hypothesis can be applied at nodes of height at most .

Case when i is an introduce node. Let j be the child of i and for some . We now consider two cases depending on whether v belongs to A or not.

- Case . Let be the partition of obtained from by removing v from A. Let be the SN-function on such that , if and , otherwise. We know from our claimed optimality of , and that , and the value of , that . Therefore, it follows that . In other words, we have concluded that the value for the row in is not the optimum value. This contradicts our premise at node j, which is of height at most for which, by induction hypothesis, the table maintains the optimal values. Therefore, our assumption that is not optimum is wrong.

- Case . Let be the partition of obtained by removing v from the appropriate set in the partition . Let be the SN-function on such that for each . We know from our claimed optimality of , and that , and the value of that . Therefore, it follows that . In other words, we have concluded that the value for the row in is not the optimum value. This contradicts our premise at node j, which is of height at most for which, by induction hypothesis, the table maintains the optimal values. Therefore, our assumption that is not optimum is wrong.

Forget node. We know that , and v is in but not in , it follows that and . Define to be the SN-function at such that for each and . We have assumed . Further, since and due to the definition of , . Since is computed identically from some row in , let us say , it follows that . This contradicts the premise that the table is at the lowest height in the tree decomposition at which an entry is sub-optimal. Therefore, our premise is wrong.

Join node. We assume that is indeed a better solution than S for the table entry of . Let and . Let . Let and be the partitions of and defined using and , respectively. Note that, and . Let be the SN function on such that for and for . Let be the SN function on such that for , for and . The coverage can be written as , where the coverage of by and by are restricted to the partitions and .

We know that in case of join node. The table entry is updated using the entries and of the table and , respectively. In other words, the values of variables and are obtained from the Equation (2) described in the recursive definition of join node. The values and are also feasible for the range given in the Equation (2). Then, we have . Since is better than S for the entry of , we have . The above inequality shows that at least one of the table entries or is not optimal. This would again contradict the premise that i is the node at the least height at which some table entry is sub-optimal.

This completes the case analysis and our proof. Hence the lemma. □

Running Time. There are -many nodes in the nice tree decomposition H. Each node has a maximum of entries. The comes from the fact that at each vertex in a bag, we enumerate all subsets of neighbors to come up with the SN-functions. It is clear from the description that at the leaf nodes, introduce nodes, and forget nodes, the update time is . At a join node, the time taken to compute an entry depends on three basic operations. The optimal partitions and are computed in time and budget distribution can be done in time. The costliest operation is to enumerate the different SN-functions and for the given SN-function f. Since we need to consider all the -possible ways of distributing the for each vertex v, the distribution takes time. Therefore, the running time for an entry in a join node takes time, and this is . This analysis of the running time and Lemma 5 complete the proof of the following theorem which is our main result.

Theorem 1.

The Max-Exp-Cover-1-RF problem can be solved in time .

5. Parameterized Complexity of Probabilistic-Core Problem

Let be an uncertain graph and d be an integer. Given a set , we define the probability that the set K is a d-core in , denoted by , to be , where I is an indicator variable that takes value 1 if and only if the set K forms a d-core in the graph H. The decision version of the Probabilistic-Core problem is formally stated as follows: given an uncertain graph , an integer d and a probability , decide if there exists a set such that .
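For small instances, the probability that a fixed set K is a d-core under the RF model can be evaluated directly from this definition. The sketch below reads "K forms a d-core in H" as "every vertex of K has at least d neighbors inside K in H", which is an assumption about the elided notation; it also assumes K is non-empty. Only edges with both endpoints in K matter, since the remaining edges are independent and sum out. The function name `d_core_probability` and the toy instance are hypothetical.

```python
import itertools


def d_core_probability(K, d, edges, p):
    """Probability, under the RF model, that every vertex of K has >= d neighbours in K.

    edges: list of frozenset({u, v}); p: dict edge -> survival probability.
    K is assumed to be non-empty.
    """
    K = set(K)
    inner = [e for e in edges if e <= K]  # edges with both endpoints in K
    total = 0.0
    for r in range(len(inner) + 1):
        for world in itertools.combinations(inner, r):
            alive = set(world)
            prob = 1.0
            for e in inner:
                prob *= p[e] if e in alive else 1.0 - p[e]
            degree = {v: 0 for v in K}
            for e in alive:
                for v in e:
                    degree[v] += 1
            if all(degree[v] >= d for v in K):
                total += prob
    return total


# Complete graph on four vertices with uniform survival probability 1/2.
verts = ["a", "b", "c", "d"]
edges = [frozenset(pair) for pair in itertools.combinations(verts, 2)]
p = {e: 0.5 for e in edges}
clique_prob = d_core_probability(verts, 3, edges, p)
triangle_prob = d_core_probability(["a", "b", "c"], 2, edges, p)
```

A k-vertex set can be a (k-1)-core only when all of its k(k-1)/2 internal edges survive, so for the 4-clique above the probability is (1/2)^6.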

We study the Probabilistic-Core problem under the LRO and RF models. First we show that the Probabilistic-Core-LRO problem and the Individual-Core problem are polynomial time solvable, due to the fact that an uncertain graph under the LRO model has only a polynomial number of possible worlds. Then we show that the Probabilistic-Core-RF problem is W[1]-hard for the parameter d and admits an FPT algorithm for the parameter treewidth.

5.1. An Exact Algorithm for the Probabilistic-Core-LRO Problem

We present a polynomial time algorithm (see Algorithm 1) for the Probabilistic-Core-LRO problem. Let be an instance of the Probabilistic-Core-LRO problem. As per the definition of the LRO model, let the set be the possible worlds of , where and for , . For each , we consider the linear order in which precedes .

Algorithm 1: Probabilistic-Core-LRO

Lemma 6.

Algorithm 1 solves the Probabilistic-Core-LRO problem in polynomial time.

Proof.

By definition of the possible worlds, . If , then does not contain a d-core. Moreover, for , since is an edge subgraph of , no graph in the possible worlds has a d-core. This is indicated by the return value-pair of the ∅ and probability . On the other hand if , let be the first graph in the linear ordering of possible worlds for which a non-empty C is computed by Algorithm 1 on exit from the While-loop. Clearly, C induces a d-core in . Since is the first graph which contains a d-core, for each , does not contain a d-core. Further, for every , C is a d-core in since it is an edge-subgraph of . Then,

For any set with , the set K can form a d-core only in the possible worlds . Thus, . This completes the proof. □
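The argument in the proof can be sketched in code. This is a reconstruction from the proof text rather than the authors' pseudocode: it scans the nested possible worlds from the sparsest to the densest, computes the maximal d-core of each by repeatedly removing vertices of degree less than d, and returns the first non-empty core together with the total probability of that world and all denser ones. The world probabilities are taken as input (for example, as given by Lemma 1), and all names are hypothetical.

```python
def maximal_d_core(vertices, edges, d):
    """Peel vertices of degree < d until none remain; return the surviving vertex set."""
    adj = {v: set() for v in vertices}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    alive = set(vertices)
    changed = True
    while changed:
        changed = False
        for v in list(alive):
            if len(adj[v] & alive) < d:
                alive.discard(v)
                changed = True
    return alive


def probabilistic_core_lro(vertices, ordered_edges, world_prob, d):
    """Return (core, probability) for the nested worlds of an LRO instance.

    ordered_edges: e_1, ..., e_m in the LRO order (assumed most reliable first);
    world_prob[i]: probability of the world containing exactly e_1, ..., e_i.
    """
    for i in range(len(ordered_edges) + 1):
        core = maximal_d_core(vertices, ordered_edges[:i], d)
        if core:
            # The core persists in every denser world, so add up their probabilities.
            return core, sum(world_prob[i:])
    # Assumption: an empty set with probability 0 signals that no world has a d-core.
    return set(), 0.0


vertices = ["a", "b", "c", "d"]
ordered_edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d")]
world_prob = [0.10, 0.20, 0.30, 0.25, 0.15]  # illustrative values summing to 1
core, prob = probabilistic_core_lro(vertices, ordered_edges, world_prob, 2)
```

Since there are only m + 1 worlds and each peeling pass is polynomial, the whole scan runs in polynomial time, in line with Lemma 6.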

The following observation again uses the fact that there are only a polynomial number of possible worlds under the LRO model.

Observation 3.

The Individual-Core problem is polynomial time solvable on uncertain graphs under the LRO model.

Proof.

Clearly, for each , the While-loop in Algorithm 1 computes the maximal d-core in . Therefore, for each v, , where i is the smallest index such that the non-empty d-core contains v. Since the number of possible worlds is , for each vertex , the probability can be computed in polynomial time. Thus, for a given uncertain graph , an integer d and probability threshold , the set is the optimum solution to the Individual-Core problem. □

5.2. Parameterized Complexity of the Probabilistic-Core-RF Problem

We show that the Probabilistic-Core-RF problem is W[1]-hard. The reduction that we show for the W[1]-hardness is similar to the hardness result of the Individual-Core problem shown by Peng et al. [16] (they call this the -Core problem). The reduction is from the Clique problem which is as follows: given a graph and an integer k, decide if G has a clique of size at least k. The Clique problem is known to be W[1]-hard [47].

Theorem 2.

The Probabilistic-Core-RF problem is W[1]-hard for the parameter d.

Proof.

Let be an instance of the Clique problem. The output of the reduction is denoted by , and it is an instance of Probabilistic-Core-RF problem. The vertex set and edge set of are same as V and E, respectively, and . Further, for each edge , define in . Now we show that the Clique problem on the instance and the Probabilistic-Core-RF problem on the instance are equivalent.

We prove the forward direction first. Let be a k-clique in G. The set K is indeed a d-core since every vertex has neighbors in K. The probability that K is a d-core in a random sample from the possible worlds of is . Thus, the set K is a feasible solution for the instance of the Probabilistic-Core-RF problem.

Now we prove the reverse direction. We claim that any feasible solution contains exactly vertices. If K has fewer than vertices, then K cannot form a d-core in any possible world. Therefore, we consider the case in which K has more than vertices, and each vertex in the set K has degree at least d. Consequently, the number of edges in any possible world in which K is a d-core is at least . Then, the probability that the set K is a d-core in is at most , and this contradicts the hypothesis that K is a feasible solution. It follows that any feasible solution contains exactly vertices and each vertex has degree d; thus K is a k-clique. Hence the theorem. □

5.3. The Probabilistic-Core-RF Problem is FPT by Treewidth

We show that the Probabilistic-Core-RF problem admits an FPT algorithm with treewidth as the parameter. The input consists of an instance of the Probabilistic-Core-RF problem. A nice tree decomposition of the graph is also given as part of the input. Let i be any node in and and be a pair of functions on . Given a set , the set K is said to be -constrained d-core of G if

- for each , and , and

- for each , .

Let denote the uncertain graph . We define a constrained version of the Probabilistic-Core-RF problem as follows. Given a set K, the probability that the set K is an -constrained d-core in is given by:

where is an indicator function that takes value 1 if and only if K is an -constrained d-core of H. The optimization version of the -constrained Probabilistic-Core-RF problem seeks to find the set K such that is maximized. The solution for -constrained Probabilistic-Core-RF problem for different values of i, and on are partial solutions which are used to come up with a recursive specification of the optimum value. The dynamic programming (DP) formulation on the nice tree decomposition results in an FPT algorithm with treewidth as the parameter.

The dynamic programming formulation maintains a table at every node . Each row in the table is a pair of functions and such that . The column corresponding to the row is a pair which consists of a set and a probability value. Further, the set is an optimal solution for the -constrained Probabilistic-Core-RF problem on the instance .

Intuitively, the functions and defined on stand for the “in-bag-degree” and “out-bag-degree” constraints, respectively, for each vertex . We maintain the candidate solutions in the table for different values of the functions and . We allow candidate solutions that are infeasible at the current stage; vertices whose degree requirement d is not yet satisfied will get neighbors from nodes at a higher level in the tree decomposition. The optimal solution for the instance of the Probabilistic-Core-RF problem can be obtained from the table where r is the root of the tree decomposition .

5.3.1. Dynamic Programming

We now present the dynamic programming formulation on the different types of nodes in . Let be a node with bag . For a pair of functions , we show how to compute the table entry in each type of node as follows.

Leaf node. Let i be a leaf node with bag . We have one row in the table . Let be the pair of functions corresponding to the row and the value of the table entry is given as:

Introduce node. Let i be an introduce node with a child j, and let for some . The row is computed based on the value of and . If , then the row becomes infeasible since . That is,

In the rest of the cases we have . We define to be a function such that for all . When , then the vertex v will not be in the solution . Let be a function such that for all . The value of the row is same as since v is excluded. When , then v is part of . Let . Then, we have . Otherwise, no feasible solution exists for the row . That is, degree constraint of v will not be met in any feasible solution. Assume . Let be a subset of U of size , and . The vertices in Y that are the neighbors of v contribute degree to v. Then, each vertex will lose a degree from in the node j. Define to be

Let

be the best neighbors of v such that the solution obtained from the table is maximized. Then, the recursive definition of the row is given as,

Forget node. Let i be a forget node with a child j, and let for some . From the definition of the tree decomposition, it follows that . Since , either v is part of solution with constraint or v is not part of solution. Let . For each and of size a, we define such that

and

For , let

Let . Then the value of the row is equal to .

Join node. Let i be a join node with children j and g such that . For a function defined on , we consider the function for the child node and for the other child node , consider the function such that for all . Since considers the neighbors from outside , each vertex with will get the d-core neighbors from the set . Since and both the sets are disjoint, we divide into two parts. For each vertex , we try all possible ways of dividing into two parts. For such that for each , , we define and to be and . Let

Then, the recursive definition of the row is given as,

5.3.2. Correctness and Running Time

Lemma 7.

Let i be a node in . For every pair of functions , the row is computed optimally.

Proof.

Let i be a node in . We claim that for each pair of functions , the row computes the optimal solution for the instance of the -constrained Probabilistic-Core problem. That is, the set is an optimal solution for the above instance. Let . We show that for any for some ,

The proof is by induction on the height of a node in . The height of a node i in the rooted tree is the distance to the farthest leaf in the subtree rooted at i. The base case is when i is a leaf node in and height is 0. For a leaf node i with , the row with completes the proof of the base case. Let us assume that the claim is true for all nodes of height at most . We now prove that if the claim is true at all nodes of height at most , then it is true for a node of height h. Let i be a node of height h. Clearly i is not a leaf node.

When i is an introduce node. Let j be the child of i, and for some . If , then no feasible solution exists since . This is captured in our dynamic programming. In the further cases, we consider . Let . Consider the case when . That is, the vertex v is not part of the solution. The recursive definition of the dynamic programming gives

where and are as defined in the dynamic programming. A feasible solution to the row should be feasible for the row . Otherwise, the degree constraints are not met by the solution. For the solution ,

since j is a node at height and by our induction hypothesis. Also,

since . Then, we have

This completes the case when and .

Now we consider the case where . In the solution , we need neighbors of U in the d-core where the set . Let . There exists a of size such that the probability can be written as follows:

The solution is compatible for the row where the vertices in Y are neighbors of v and degree constraint of these vertices is decreased by 1 in such that it will be satisfied by the edge from v. Since W in the dynamic programming is optimal over all possible sized subsets of W, we have

This completes the argument of the case when i is an introduce node.

When i is a forget node. Let j be the child of i, and for some . We consider two cases depending on whether v belongs to S. We first consider the case when . Consider the functions and as defined in the recursive computation of forget node. Since , v will get zero degree constraint in both functions and , and other vertices will have same constraints as and values. Then, the probability can be written as follows:

Since j is a node at height , we have

Secondly, we consider the case when . Let . Since v is in but v is not in its parent node , v should have degree d in the -constrained Probabilistic-Core problem. Then, and for an . Let of size a be the set of vertices that have an edge to v to compensate the degree constraint at v. Then, each vertex will gain a degree constraint and lose a degree constraint . Using the integer a and the set Y, the probability can be written as follows:

Since j is a node at height , we know that the row is computed optimally. That is,

This completes the argument of the case when i is a forget node.

When i is a join node. Let j and g be the children of i, and . The set can be partitioned into sets and where and . Let and . For each vertex , the degree constraint should be satisfied by the edges from U to u. Since , the degree constraint is either satisfied in the node j or node g and not in both. Without loss of generality we assume that the degree constraint is satisfied in the node j and no zero degree constraint in the node g. Then we define to be for every vertex , . For each vertex with , the degree constraint can be satisfied by the sets and together. There exists an integer such that neighbors in the core are obtained from the set and neighbors in the core are obtained from the set . Then, there exists a function such that for each vertex , . Using the function x, the probability can be given as follows:

The functions and for the given function x are defined in the description of dynamic programming. Since both j and g are nodes at height at most , by induction hypothesis, the rows and are computed optimally. Therefore, we have

where y is the optimal distribution of the degree which results in the , as defined in the recursive formulation at a join node. This completes the argument for the case when i is a join node. Hence the lemma. □
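The enumeration at a join node can be illustrated with a minimal sketch. It assumes that each bag vertex carries an integer degree requirement, and it only lists the ways of dividing that requirement between the two children; it does not evaluate the probabilities or handle the special case of zero-constraint vertices treated in the proof. The names `join_splits`, `gamma`, `gamma_j` and `gamma_g` are hypothetical and are not taken from the dynamic programming itself.

```python
from itertools import product


def join_splits(gamma):
    """Yield every pair (gamma_j, gamma_g) of child requirements.

    `gamma` maps each bag vertex to its remaining degree requirement
    (hypothetical representation). Child j is asked to supply x(u)
    neighbours of u and child g the remaining gamma[u] - x(u).
    """
    vertices = sorted(gamma)
    # x(u) ranges over 0..gamma[u] independently for every bag vertex.
    ranges = [range(gamma[u] + 1) for u in vertices]
    for x in product(*ranges):
        gamma_j = dict(zip(vertices, x))
        gamma_g = {u: gamma[u] - gamma_j[u] for u in vertices}
        yield gamma_j, gamma_g


splits = list(join_splits({"a": 2, "b": 1}))
```

In this sketch the number of candidate splits is the product of (requirement + 1) over the bag vertices, so it is at most (d + 1) raised to the bag size when every requirement is at most d.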

In any possible world, the degree of a vertex v is at most the degree of v in G. A vertex with degree less than d in G therefore cannot be an element of a core in any possible world, and such vertices can be excluded throughout the algorithm. After this pruning, either the pruned graph is empty or its minimum degree is at least d. We state the following lemma from Koster et al. [48].

Lemma 8

(Koster et al. [48]). For a graph G with treewidth tw, the minimum degree of G is at most tw.
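The pruning step described before Lemma 8 can be sketched as follows. This is a minimal illustration, assuming that the pruning is applied repeatedly until no vertex of degree less than d remains and that the graph is given as an adjacency dictionary; the function name `prune_low_degree` and the input format are hypothetical.

```python
from collections import deque


def prune_low_degree(adj, d):
    """Return the vertices that survive repeated removal of vertices of degree < d.

    `adj` maps each vertex to the set of its neighbours in the
    underlying (certain) graph G.
    """
    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    removed = set()
    queue = deque(v for v, deg in degree.items() if deg < d)
    while queue:
        v = queue.popleft()
        if v in removed:
            continue
        removed.add(v)
        # Removing v lowers the degree of each surviving neighbour.
        for u in adj[v]:
            if u not in removed:
                degree[u] -= 1
                if degree[u] < d:
                    queue.append(u)
    return set(adj) - removed


# A triangle {1, 2, 3} with a pendant vertex 4 attached to vertex 3.
graph = {1: {2, 3}, 2: {1, 3}, 3: {1, 2, 4}, 4: {3}}
survivors = prune_low_degree(graph, 2)
```

Every surviving vertex has at least d surviving neighbours, so the result is either empty or induces a graph of minimum degree at least d, which is the situation in which Lemma 8 is applied.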

In the following lemma, we analyze the running time of the dynamic programming algorithm.

Lemma 9.

The Probabilistic-Core-RF problem can be solved optimally in time .

Proof.

For each node i in , our dynamic programming generates a table with rows. Each row for some is computed based on the type of node i. When i is a leaf node, a single row exists in and it is computed in time. When i is an introduce node, we consider the two cases and . If , then the functions and can be computed in time . If , the set can be found by enumerating all sized sets in U. This takes time, and using the upper bound on values we get . It follows that if i is an introduce node, then can be computed in time . When i is a forget node, for each value of , we enumerate all a-sized subsets of Y. This requires time. When i is a join node, we need to compute the optimal distribution of for each vertex . This requires time. From Lemma 8, we know that , so we upper bound the value d by . Overall, a row can be computed in time . The entire table can be computed in time . The nice tree decomposition has many nodes and a table on each node can be computed in time . An optimal solution to the Probabilistic-Core-RF problem on the input uncertain graph is obtained from the table of the root node r. That is, is the optimal solution. □

The preceding lemmas result in the following theorem.

Theorem 3.

The Probabilistic-Core problem admits an FPT algorithm for the parameter treewidth.

6. Discussion

There are many natural questions related to the parameterized complexity of algorithms on uncertain graphs under different distribution models. The following are some open questions.

- Are there efficient reductions between distribution models so that we can classify problems based on the efficiency of algorithms under different distribution models? This question is also of practical significance because the distribution models are specified as sampling algorithms. Consequently, the complexity of expectation computation on uncertain graphs under different distribution models is an interesting new parameterization. Further, one concrete question is whether the LRO model is easier than the RF model for other optimization problems on uncertain graphs. For the two case studies considered in this paper, that is the case.

- While our results do support the natural intuition that a tree decomposition is helpful in expectation computation, it is unclear to us how traditional techniques in parameterized algorithms can be carried over to this setting. In particular, it is unclear to us whether, for any distribution model, a kernelization-based algorithm can give an FPT algorithm on uncertain graphs.

- We have considered the coverage and the core problems on uncertain graphs under the LRO and RF models. However, we have not been able to get an FPT algorithm with the parameter treewidth for Max-Exp-Cover-1-RF. Actually, any approach to avoid the exponential dependence on would be very interesting and would give a significant insight on other approaches to evaluate the expected coverage.

- Even though the Individual-Core-RF problem and Probabilistic-Core-RF problem are similar, we have not been able to get an FPT algorithm for the Individual-Core-RF problem with treewidth as the parameter. Even for other structural parameters such as vertex-cover number and feedback vertex set number, FPT results will give a significant insight on the Individual-Core problem.
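Since the distribution models are specified as sampling algorithms, the quantity of interest can be estimated empirically. The following is a minimal Monte Carlo sketch and not one of the algorithms of this paper. It assumes that under the RF model each edge is present independently with its own probability, and that a vertex set forms a d-core in a possible world when every vertex of the set has at least d neighbours inside the set. The function names and the edge-probability dictionary are hypothetical.

```python
import random


def sample_world(edge_prob, rng):
    """Keep each edge independently with its own probability (assumed RF semantics)."""
    return [e for e, p in edge_prob.items() if rng.random() < p]


def is_d_core(vertices, edges, d):
    """Check that every vertex of `vertices` has at least d neighbours inside it."""
    inside = set(vertices)
    degree = {v: 0 for v in inside}
    for u, v in edges:
        if u in inside and v in inside:
            degree[u] += 1
            degree[v] += 1
    return all(deg >= d for deg in degree.values())


def estimate_core_probability(edge_prob, vertices, d, samples=1000, seed=0):
    """Estimate the probability that `vertices` forms a d-core in a random world."""
    rng = random.Random(seed)
    hits = sum(
        is_d_core(vertices, sample_world(edge_prob, rng), d)
        for _ in range(samples)
    )
    return hits / samples


# A triangle whose edges each survive with probability 0.9.
triangle = {(1, 2): 0.9, (2, 3): 0.9, (1, 3): 0.9}
estimate = estimate_core_probability(triangle, {1, 2, 3}, d=2)
```

Such an estimate carries sampling error and gives no optimality guarantee; it is only a baseline against which exact expectation computation under a given distribution model can be compared.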

Author Contributions

Investigation, N.S.N. and R.V.; Writing–original draft, N.S.N. and R.V.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Añez, J.; Barra, T.D.L.; Pérez, B. Dual graph representation of transport networks. Trans. Res. Part B Methodol. 1996, 30, 209–216.

- Hua, M.; Pei, J. Probabilistic Path Queries in Road Networks: Traffic Uncertainty Aware Path Selection. In Proceedings of the 13th International Conference on Extending Database Technology (EDBT ’10), Lausanne, Switzerland, 22–26 March 2010; pp. 347–358.

- Asthana, S.; King, O.D.; Gibbons, F.D.; Roth, F.P. Predicting protein complex membership using probabilistic network reliability. Genome Res. 2004, 14, 1170–1175.

- Domingos, P.; Richardson, M. Mining the Network Value of Customers. In Proceedings of the Seventh ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’01), San Francisco, CA, USA, 26–29 August 2001; pp. 57–66.

- Frank, H. Shortest Paths in Probabilistic Graphs. Oper. Res. 1969, 17, 583–599.

- Valiant, L.G. The Complexity of Enumeration and Reliability Problems. SIAM J. Comput. 1979, 8, 410–421.

- Hoffmann, M.; Erlebach, T.; Krizanc, D.; Mihalák, M.; Raman, R. Computing Minimum Spanning Trees with Uncertainty. In Proceedings of the 25th Annual Symposium on Theoretical Aspects of Computer Science, Bordeaux, France, 21–23 February 2008; pp. 277–288.

- Focke, J.; Megow, N.; Meißner, J. Minimum Spanning Tree under Explorable Uncertainty in Theory and Experiments. In Proceedings of the 16th International Symposium on Experimental Algorithms (SEA 2017), London, UK, 21–23 June 2017; pp. 22:1–22:14.

- Frank, H.; Hakimi, S. Probabilistic Flows Through a Communication Network. IEEE Trans. Circuit Theory 1965, 12, 413–414.

- Evans, J.R. Maximum flow in probabilistic graphs-the discrete case. Networks 1976, 6, 161–183.

- Hassin, R.; Ravi, R.; Salman, F.S. Tractable Cases of Facility Location on a Network with a Linear Reliability Order of Links. In Algorithms-ESA 2009, Proceedings of the 17th Annual European Symposium, Copenhagen, Denmark, 7–9 September 2009; Springer: Berlin, Germany, 2009; pp. 275–276.

- Hassin, R.; Ravi, R.; Salman, F.S. Multiple facility location on a network with linear reliability order of edges. J. Comb. Optim. 2017, 34, 1–25.

- Narayanaswamy, N.S.; Nasre, M.; Vijayaragunathan, R. Facility Location on Planar Graphs with Unreliable Links. In Proceedings of the Computer Science-Theory and Applications-13th International Computer Science Symposium in Russia, CSR 2018, Moscow, Russia, 6–10 June 2018; pp. 269–281.

- Kempe, D.; Kleinberg, J.M.; Tardos, É. Maximizing the spread of influence through a social network. In Proceedings of the Ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 24–27 August 2003; pp. 137–146.

- Bonchi, F.; Gullo, F.; Kaltenbrunner, A.; Volkovich, Y. Core decomposition of uncertain graphs. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’14), New York, NY, USA, 24–27 August 2014; pp. 1316–1325.

- Peng, Y.; Zhang, Y.; Zhang, W.; Lin, X.; Qin, L. Efficient Probabilistic K-Core Computation on Uncertain Graphs. In Proceedings of the 34th IEEE International Conference on Data Engineering (ICDE), Paris, France, 16–19 April 2018; pp. 1192–1203.

- Ball, M.O.; Provan, J.S. Calculating bounds on reachability and connectedness in stochastic networks. Networks 1983, 13, 253–278.

- Zou, Z.; Li, J. Structural-Context Similarities for Uncertain Graphs. In Proceedings of the 2013 IEEE 13th International Conference on Data Mining, Dallas, TX, USA, 7–10 December 2013; pp. 1325–1330.

- Daskin, M.S. A Maximum Expected Covering Location Model: Formulation, Properties and Heuristic Solution. Transp. Sci. 1983, 17, 48–70.

- Ball, M.O. Complexity of network reliability computations. Networks 1980, 10, 153–165.

- Karp, R.M.; Luby, M. Monte-Carlo algorithms for the planar multiterminal network reliability problem. J. Complex. 1985, 1, 45–64.

- Provan, J.S.; Ball, M.O. The Complexity of Counting Cuts and of Computing the Probability that a Graph is Connected. SIAM J. Comput. 1983, 12, 777–788.

- Guo, H.; Jerrum, M. A Polynomial-Time Approximation Algorithm for All-Terminal Network Reliability. SIAM J. Comput. 2019, 48, 964–978.

- Ghosh, J.; Ngo, H.Q.; Yoon, S.; Qiao, C. On a Routing Problem Within Probabilistic Graphs and its Application to Intermittently Connected Networks. In Proceedings of the 26th IEEE International Conference on Computer Communications, Joint Conference of the IEEE Computer and Communications Societies, INFOCOM, Anchorage, AK, USA, 6–12 May 2007; pp. 1721–1729.

- Rubino, G. Network Performance Modeling and Simulation; chapter Network Reliability Evaluation; Gordon and Breach Science Publishers, Inc.: Newark, NJ, USA, 1999; pp. 275–302.

- Swamynathan, G.; Wilson, C.; Boe, B.; Almeroth, K.C.; Zhao, B.Y. Do social networks improve e-commerce?: a study on social marketplaces. In Proceedings of the first Workshop on Online Social Networks (WOSN 2008), Seattle, WA, USA, 17–22 August 2008; pp. 1–6.

- White, D.R.; Harary, F. The Cohesiveness of Blocks In Social Networks: Node Connectivity and Conditional Density. Soc. Methodol. 2001, 31, 305–359.

- Papadimitriou, C.H.; Yannakakis, M. Shortest paths without a map. Theor. Comput. Sci. 1991, 84, 127–150.

- Khuller, S.; Moss, A.; Naor, J. The Budgeted Maximum Coverage Problem. Inf. Process. Lett. 1999, 70, 39–45.

- Brown, J.J.; Reingen, P.H. Social Ties and Word-of-Mouth Referral Behavior. J. Consum. Res. 1987, 14, 350–362.

- Richardson, M.; Domingos, P.M. Mining knowledge-sharing sites for viral marketing. In Proceedings of the Eighth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Edmonton, AB, Canada, 23–26 July 2002; pp. 61–70.

- Bass, F.M. A New Product Growth for Model Consumer Durables. Manag. Sci. 1969, 15, 215–227.

- Snyder, L.V. Facility location under uncertainty: A review. IIE Trans. 2006, 38, 547–564.

- Eiselt, H.A.; Gendreau, M.; Laporte, G. Location of facilities on a network subject to a single-edge failure. Networks 1992, 22, 231–246.

- Colbourn, C.J.; Xue, G. A linear time algorithm for computing the most reliable source on a series-parallel graph with unreliable edges. Theor. Comput. Sci. 1998, 209, 331–345.

- Ding, W. Computing the Most Reliable Source on Stochastic Ring Networks. In Proceedings of the 2009 WRI World Congress on Software Engineering, Xiamen, China, 19–21 May 2009; Volume 1, pp. 345–347.

- Ding, W.; Xue, G. A linear time algorithm for computing a most reliable source on a tree network with faulty nodes. Theor. Comput. Sci. 2011, 412, 225–232.

- Melachrinoudis, E.; Helander, M.E. A single facility location problem on a tree with unreliable edges. Networks 1996, 27, 219–237.

- Nemhauser, G.L.; Wolsey, L.A.; Fisher, M.L. An analysis of approximations for maximizing submodular set functions—I. Math. Program. 1978, 14, 265–294.

- Cygan, M.; Fomin, F.V.; Kowalik, L.; Lokshtanov, D.; Marx, D.; Pilipczuk, M.; Pilipczuk, M.; Saurabh, S. Parameterized Algorithms; Springer: Berlin, Germany, 2015.

- Sigal, C.E.; Pritsker, A.A.B.; Solberg, J.J. The Stochastic Shortest Route Problem. Oper. Res. 1980, 28, 1122–1129.