Abstract

Most of the architectural design problems are basically real-parameter optimization problems. So, any type of evolutionary and swarm algorithms can be used in this field. However, there is a little attention on using optimization methods within the computer aided design (CAD) programs. In this paper, we present Optimus, which is a new optimization tool for grasshopper algorithmic modeling in Rhinoceros CAD software. Optimus implements self-adaptive differential evolution algorithm with ensemble of mutation strategies (jEDE). We made an experiment using standard test problems in the literature and some of the test problems proposed in IEEE CEC 2005. We reported minimum, maximum, average, standard deviations and number of function evaluations of five replications for each function. Experimental results on the benchmark suite showed that Optimus (jEDE) outperforms other optimization tools, namely Galapagos (genetic algorithm), SilverEye (particle swarm optimization), and Opossum (RbfOpt) by finding better results for 19 out of 20 problems. For only one function, Galapagos presented slightly better result than Optimus. Ultimately, we presented an architectural design problem and compared the tools for testing Optimus in the design domain. We reported minimum, maximum, average and number of function evaluations of one replication for each tool. Galapagos and Silvereye presented infeasible results, whereas Optimus and Opossum found feasible solutions. However, Optimus discovered a much better fitness result than Opossum. As a conclusion, we discuss advantages and limitations of Optimus in comparison to other tools. The target audience of this paper is frequent users of parametric design modelling e.g., architects, engineers, designers. The main contribution of this paper is summarized as follows. Optimus showed that near-optimal solutions of architectural design problems can be improved by testing different types of algorithms with respect to no-free lunch theorem. Moreover, Optimus facilitates implementing different type of algorithms due to its modular system.

1. Introduction

1.1. The Necessity of Optimization in Architecture

Architectural design problems have an excessive number of design parameters. All possible combinations of design parameters correspond to thousands of different design alternatives. It is difficult to choose the desirable design within such a big search space. In addition, architectural design requires different performance aspects to be satisfied as design objectives focus on various topics (e.g., social, economic, physiological, health, safety, structural, cultural, sustainability, etc.) [1]. Some of these performance aspects (e.g., energy, and daylight) require non-linear equations, which increase the level of the complexity. All these refer that decision-making is highly important in the early stages of the design process. Because, the decisions that are taken in the early design phases have a great impact on the following design stages. As a result, they influence the overall performance and the appearance of the constructed building. At this point, computational optimization techniques became a necessity in architectural design.

Regarding the computational optimization techniques, metaheuristic algorithms can play a vital role for not only presenting promising design alternatives but also for dealing with complexity [2]. On the other hand, these algorithms do not guarantee to finding the global optimal solutions [3]. However, they can present near-optimal results within a reasonable time. For a decision maker, providing a near-optimal solution in a reasonable time can be more advantageous than presenting the optimal solution within extremely long time. To discover desirable solutions, metaheuristics are usually inspired by the natural processes (such as interactions within swarms and evolution over the generations). Some of these metaheuristic algorithms are harmony search (HS) [4], particle swarm optimization (PSO) [5], differential evolution (DE) [6,7], genetic algorithm (GA) [8], ant colony optimization (ACO) [9], simulated annealing (SA) [10], and evolutionary algorithm (EA) [11]. According to current state of the art, evolutionary computation and swarm optimization algorithms are the most popular metaheuristics in architectural domain [2].

1.2. Performative Computational Architecture Framework

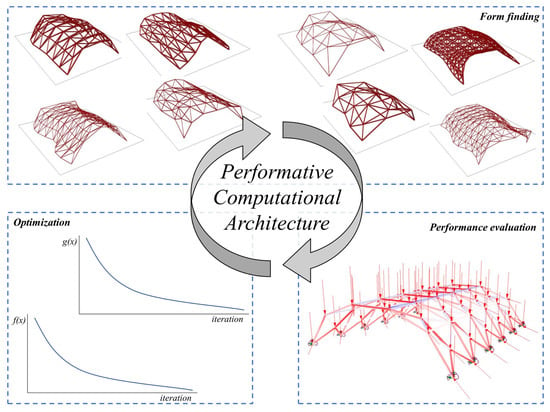

In order to investigate how the desirable solution can be found in the early phase of the design process, a general framework called performative computational architecture (PCA) [1,2] is considered in this paper. PCA proposes an iterative method based on form finding, performance evaluation, and optimization as illustrated in Figure 1. The form-finding stage includes the geometric generation using parameters in algorithmic modeling environments. The performance evaluation stage comprises of the numeric assessments of performance aspects to evaluate how well the form meets with the objectives. Optimization stage corresponds the metaheuristic algorithms for discovering desirable design solutions within a systematic search process.

Figure 1.

Performative computational architecture (PCA) framework.

1.3. Current Optimization Tools in Grasshopper

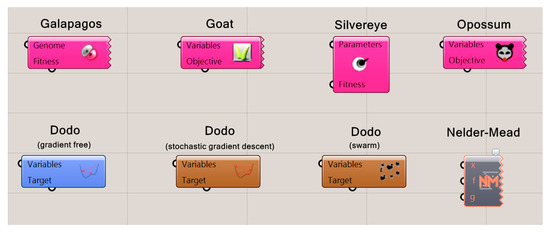

In this section, existing single objective optimization tools for grasshopper (GH) (available in www.food4rhino.com) are reviewed. Algorithm applications for specific optimization problems (such as topology optimization for structure) are not considered. In this context, Galapagos, Goat, Silvereye, Opossum, Dodo, and Nelder–Mead optimization plug-ins, shown in Figure 2, are explained. Some of these plug-ins have been compared in building optimization problems in the literature, that can be found in [12,13,14,15].

Figure 2.

Existing single-objective optimization plug-ins in grasshopper (GH).

1.3.1. Galapagos

Galapagos [16] is one of the first released optimization plug-in for GH. The tool provides two heuristic optimization algorithms, which are GA [8] and SA [10]. Author of the tool suggests SA for rough landscape navigation, whereas evolutionary solver for finding reliable intermediate solutions. Majority of the design optimization papers in the literature utilized Galapagos tool in dealing with energy [17,18,19], daylight [20,21], both energy and daylight [22,23] and structure [24,25,26].

1.3.2. Goat

Goat [27] uses the NLopt library [28] in the graphical user interface of Galapagos. The tool considers a mathematically-rigorous approach (gradient-free optimization algorithm) to reach fast and deterministic results. Goat provides several optimization algorithms as well. These are constrained optimization by linear approximation (COBYLA), bound optimization by quadratic approximation (BOBYQA), subplex algorithm (Sbplx), the dividing rectangles algorithm (DIRECT), and controlled random search 2 (CRS2). Very recently, Goat is used for building energy optimization [14] and structure optimization [29,30].

1.3.3. Silvereye

Despite the gradient-free optimization and evolutionary computation, Silvereye [15] is one of the swarm intelligence optimization plug-ins released for GH. The tool considers ParticleSwarmOptimization.dll, which is a shared library containing an implementation of the core version of the PSO. In the literature, Silvereye is used in the design optimization problems that are focusing on energy [14], micro climate [31] and structural [29].

1.3.4. Opossum

Opossum [32] is the first model-based optimization tool for GH. The solver is based on an open-source library for black-box optimization with costly function evaluations (RBFOpt) [33] such as energy and daylight simulations. RBFOpt library uses the radial basis function with local search while discovering satisfactory solutions with a small number of function evaluations. Opossum is used in several design problems to deal with daylight [34], structure [29,30] and energy [14].

1.3.5. Dodo

Dodo [35] is a plug-in based on different implementation of optimization algorithms. These are non-linear gradient free optimization based on NLopt library [28], stochastic gradient descent algorithm, and swarm optimization. In addition, Dodo also provides several supervised and unsupervised neural network algorithms.

1.3.6. Nelder–Mead Optimization

Nelder–Mead Optimization [36] is the first tool based on the Nelder–Mead method [37], a local search-based optimization algorithm, in GH. Compared to heuristics, Nelder–Mead typically has fewer function evaluations for computationally expensive models. In addition, the implementation of Nelder–Mead Optimization also allows considering multiple constraints using Kreisselmeier-Steinhauser function [38].

1.4. This Study: Optimus

In the field of computer science, different types of metaheuristic algorithms have been suggested to solve real-parameter optimization problems by researchers and engineers in many years. As a common approach, the performance of each developed algorithm is tested by using a set of the standard benchmark problems such as Sphere, Schwefel’s, Rosenbrock’s, Rastrigin’s, etc. For real-world problems, this test is done by using benchmark instances. The main reason for comparing algorithms is based on the no free lunch theorem (NFLT) [39]. The performance of an optimization algorithm depends on the nature of the problem. In other words, one algorithm can outperform another algorithm in a specific problem. Thus, NFLT argues that there is no global optimization algorithm, which can present the best results for all real-world and benchmark problems.

In the field of architecture, testing different types of algorithms for the same architectural design problem is not a common approach. One of the most important reason of this fact is that computer aided design (CAD) tools of architects does not include optimization algorithms in a wide range. According to the current state of the art [40], only 3% of total users of optimization tools are architect in the domain of building performance optimization. This fact clearly shows that there is a little attention on using optimization methods within the CAD programs. Therefore, this paper introduces a new optimization solver, called Optimus, with significant features listed below:

- Compatible with parametric design models created in GH [16] algorithmic modeling for Rhinoceros [41] CAD software.

- Supports PCA framework outlined in previous section.

- Implements a self-adaptive [42] differential evolution algorithm with ensemble of mutation strategies [43] (jEDE), explained in Section 3.

- Presents the highest performance when compared to other optimization algorithms available in GH reviewed in Section 1.3.

- ○

- The performance of the Optimus is tested by benchmark suite, which consists of standard single objective unconstrained problems and some of the test problems proposed in IEEE Congress on Evolutionary Computation 2005 (CEC 2005) [44]. Problem formulations and optimization results of these benchmarks are given in Section 4.1.

- ○

- Finally, Optimus is tested with a design optimization problem. The problem formulation and optimization results are given in Section 4.2.

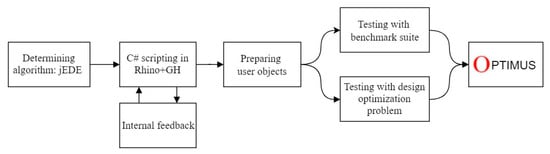

The development process of the Optimus is illustrated in Figure 3.

Figure 3.

Optimus development process.

2. Self-Adaptive Differential Evolution Algorithm with Ensemble of Mutation Strategies

Metaheuristics are one of the most used optimization methods in the domain of architectural design [2]. These algorithms can avoid local minima and maxima while coping with real-parameters in large search spaces. In addition, metaheuristics can present near-optimal results when compared to other direct search methods in a reasonable time [3].

Swarm intelligence (SI) and evolutionary algorithms (EAs) are the most common sub-categories of metaheuristics. These algorithms are inspired by nature using different search strategies. SI is based on interactions of swarms such as flocks of birds, schools of fish, ants and bees. Some examples of SI can be shown as Ant Colony Optimization (ACO) [9] and Particle Swarm Optimization (PSO) [45,46]. EAs are in the class of population-based metaheuristic optimization algorithms. EAs are inspired by the mechanisms of biological evolution that mimics the selection and reproduction processes of living organisms. EAs are very effective to deal with NP-hard problems. Some examples of EAs are Genetic Algorithms (GAs) [8,47]. Genetic Programming (GP) [48], Evolution Strategy (ES) [49], and DE [7].

DE, which is introduced by Storn and Price [7], is potentially one of the most powerful stochastic real-parameter optimization algorithms in the literature. The algorithm can converge very fast in solving real-world problems such as in scheduling [50], optics [51], communication [6], power systems [52], pattern recognition [53] and recently in architectural design [54,55]. A recent survey by Das and Suganthan [56,57] clearly explained the history of DE and its success. DE algorithm has many advantages. The simple code structure of the algorithm facilitates its implementation. Other advantage is that the number of control parameters in DE is few, which are crossover rate (), mutation rate (), and population size (). In classical DE, these control parameters are constant during the whole optimization process. However, a simple change in or generation strategies can significantly improve the performance of the algorithm. Therefore, some variants of DE in the literature focus on parameter settings as presented in [42,58]. Moreover, DE can tackle the large scale and computationally expensive optimization problems. Because, the space complexity of DE is low as mentioned in [59].

2.1. The Basic Differential Evolution

The classical DE has four main stages. There is a recursive process among second, third and fourth steps as follows:

- Initialization for generating the initial target population once at the beginning.

- Reproduction with mutation for generating the mutant population by using the target population.

- Reproduction with crossover for generating the trial population by using the mutant population.

- Selection to choose the next generation among trial and target populations using one-to-one comparison. In each generation, individuals of the current population become the target population.

2.1.1. Initialization

In the basic algorithm, the initial target population has individuals with a -dimensional real-parameter vectors. Each vector is obtained randomly and uniformly within the search space restricted by the given minimum and maximum bounds: . Thus, the initialization of j-th component of i-th vector can be defined as:

where is the i-th target population at generation ; and is a uniform random number in the range .

2.1.2. Mutation

The difference vector in mutation operator is one of the main strengths of DEs [7]. Hence, DE differs from other EAs since it relies on a difference vector with a scale factor . The mutation process is the first step to generate new solutions. In order to obtain mutant population, two individuals are randomly chosen from the target population. The weighted difference of these individuals is added to a third individual from the target population as in Equation (2).

where are three randomly chosen individuals from the target population such that and . is a mutation scale factor influencing the differential variation between two individuals. is the mutant population in generation .

2.1.3. Crossover

To obtain the trial population, a binomial crossover is applied to each variable. If a randomly and uniformly generated number is less than or equal to the crossover rate (), the individuals from mutant population is chosen, otherwise target individuals are chosen. Simply, trial population is generated by recombining mutant individuals with their corresponding target individuals as follows:

where the index is a randomly chosen dimension from . It makes sure that at least one parameter of the trial population will be different from the target population . is a user-defined crossover constant in the range , and is a uniform random number in the interval whereas is the trial population at generation .

When trial population is obtained, parameter values might violate search boundaries. Therefore, the solution can be restricted. For this reason, parameter values that are violating the search range are randomly and uniformly re-generated as in Equation (4).

2.1.4. Selection

For the next generation, selection process is realized, which is based on the survival of the fittest among the trial and target populations. The population that has the lower fitness value is chosen according to one-to-one comparison, as in Equation (5).

2.2. Self-Adaptive Approach

In this paper, self-adaptive DE [42], so called , is employed. The is very simple, effective and converges much faster than the basic DE, especially when the dimensionality of the problem is high or the problem is complex. In the , each individual has its own and values. In this paper, they are initially taken as and and they are updated as follows:

where are uniform random numbers in the range . and represent the probabilities to adjust the and . They are taken as and and .

2.3. Ensemble Approach

In addition to the self-adaptive approach, an ensemble approach [43] is employed in the jDE, so called jEDE. This means that instead of using one type of mutation strategy with fixed parameter setting as in the basic DE, each mutant individual is generated according to different mutation strategies with different parameter settings. In this approach, each dimension has values pool for competition of producing better future offspring according to their success in the past generations. In this paper, following mutation strategies () are considered as in Equations (8)–(10). In , the individuals that formed the mutant population are randomly selected. In and , strategies are benefitted from the information of the best solution (xbest) so far.

where are three randomly selected individuals from the target population such that and . is a mutation scale factor, in our jEDE, it is generated by using self-adaptive procedure. is the strategy used in each population to choose different mutation strategies. The pseudo code of the jEDE is given in Algorithm 1.

| Algorithm 1. The self-adaptive differential evolution algorithm with ensemble of mutation strategies. |

| 1: Set parameters g = 0, NP = 100, = 4 2: Establish initial population randomly 3: 4: Assign a mutation strategy to each individual randomly 5: 6: Evaluate population and find 7: 8: Assign CR[i] = 0.5 and F[i] = 0.9 to each individual 9: Repeat the following for each individual 10: Obtain 11: Obtain 12: Obtain 13: If , 14: If , 15: Update and 16: If the stopping criterion is not met, go to Lines 9–15 17: Else stop and return |

3. Optimus

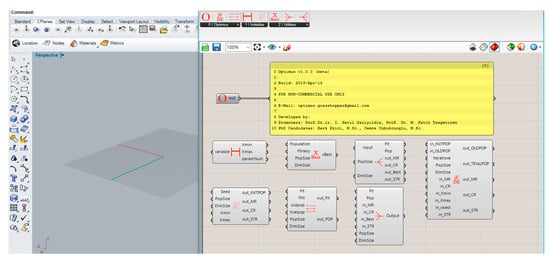

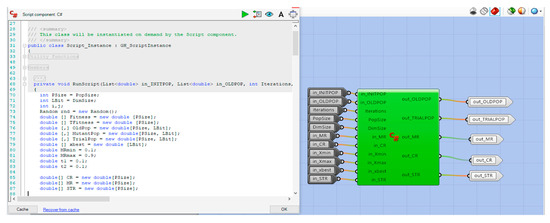

Optimus is a new optimization plug-in (https://www.food4rhino.com/app/optimus) developed for GH. The beta version (1.0.0) of the plug-in implements the self-adaptive [42] differential evolution algorithm with ensemble of mutation strategies [43] (jEDE). The algorithm is coded in C#, which is one of the available programming languages for custom scripting component in the GH. Optimus is based on a modular approach with many C# items. Every step of the optimization process can be observed within these items. Figure 4 shows the eight components of Optimus, which are categorized under three main titles, as follows:

Figure 4.

Components of Optimus v1.0.0 (beta).

- Optimus consists of

- ReadMe (giving details about Optimus),

- jEDE (using jEDE algorithm, generating mutant and trial populations)

- One-to-one (enabling selection process for next generation)

- Initialize consists of

- GetBound (taking the boundaries of design variables in dimensions)

- InitPop (generating initial population for population size in dimensions)

- Utilities consists of

- xBest (finding chromosomes that has the lowest fitness value in the population)

- Merge (collecting the fitness, population, xBest and optimization parameters)

- Unmerge (separating the fitness, population, xBest and optimization parameters)

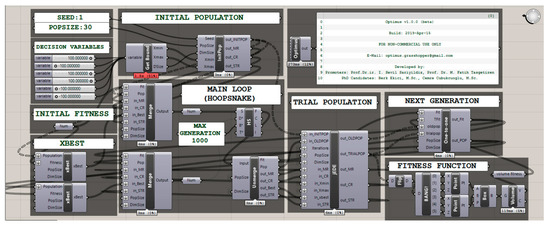

To manage the main loop of the Optimus, the HoopSnake component [60] is used for enabling feedback loops (recursive executions) within the GH.

In the Optimus, each of the eight components is transferred to GH clusters by defining collections of inputs and outputs as shown in Figure 5. This gives a flexibility to the user for improving the C# code according to his/her own implementation purposes. As next step, each cluster is converted to GH user object files, which are provided in the supplementary materials. By this way, Optimus can be used as a plug-in of the GH.

Figure 5.

Example cluster implementation for self-adaptive differential evolution algorithm with ensemble of mutation strategies (jEDE) component.

The user needs to follow several steps for using Optimus:

- Place GetBound on the GH canvas and connect with number sliders.

- Define the population size.

- Get InitPop for initialization using population size and output of GetBound.

- Evaluate initial fitness using the output of InitPop.

- Internalize the initial fitness.

- Place xBest on the GH canvas.

- Get Merge and connect with internalized initial fitness and outputs of InitPop and xBest.

- Connect Merge with starting input (S) of HoopSnake.

- Place UnMerge on the GH canvas and connect with feedback output (F) of HoopSnake.

- Get jEDE and connect outputs of UnMerge, InitPop, GetBound.

- Evaluate trial fitness using the output of jEDE.

- Get One-to-One and connect with initial fitness, trial fitness and outputs of jEDE.

- Place xBest and connect outputs of One-to-one for updating the new best chromosome.

- Get Merge and connect outputs of jEDE, One-to-one, and xBest.

- Complete the loop by connecting the output of Merge to the data input (D) of the HoopSnake.

These steps are visualized in Figure 6 and an example file is shared in supplementary materials.

Figure 6.

Visualization of the Optimus loop.

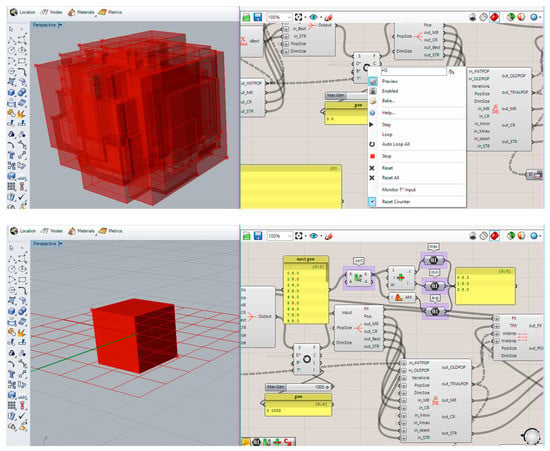

To save some computation time, Optimus does not update number sliders in the GH. During the optimization process of the design problem, the geometry is generated with initial and trial populations. For this reason, size of geometries is observed in each iteration. During the initial stage, various types of design alternatives are generated. However, when the Optimus is converged, similar geometries are observed, as shown in Figure 7.

Figure 7.

Examples of initial and optimized populations.

4. Experiments

In this section, we explain how the experiments were performed to test the performance of the Optimus in comparison to the other chosen plug-ins. As mentioned before, the evaluations are done by using standard benchmark problems in the literature and one architectural design problem proposed by the authors.

4.1. Benchmark Suite

The performance of the Optimus (jEDE algorithm) was firstly tested by using the following benchmark suite, which consists of 20 test functions. The first ten functions in the benchmark suite were classical test problems that have been commonly used in the literature. The remaining ten functions are taken from the benchmark suit presented in the CEC 2005 Special Session on Real-Parameter Optimization. These functions have been modified from the classical test problems in order to locate their global optimum under some circumstances such as shifted and/or rotated landscape, optimum placed on bounds, Gaussian noise and/or bias added etc. [44]. This fact makes these functions more difficult to solve than the classical test functions. In our test suit, to are unimodal, to are multimodal functions. All the benchmark functions are minimization problems. Our benchmark suite is presented in Table 1. These functions are summarized in Appendix A.

Table 1.

Benchmark Suite.

4.1.1. Experimental Setup and Evaluation Criteria

All the benchmark functions were coded in C# as individual components in the GH environment. These components are also available in supplementary materials as GH files to contribute evaluations of further developed architectural design optimization tools. Furthermore, all the benchmark functions ran on a computer that has Intel Core I7-6500U CPU @ 2.50 GHz with 16 GB RAM. Both the number of dimension and the population size are taken as 30 to limit the search space. To make a fair comparison, termination criteria defined as 30 min for each component. For each problem instance, five replications carried out for each function and for each tool (thus, the total run time is 12,000 min). For evaluating the performance of the algorithms (of the components), we simply reported , and where is the minimum fitness value of function , is the maximum fitness value of function and is the average fitness value of function , after all replications for each tool. The maximum number of fitness evaluations () within 30 min for each tool are also recorded, which means how many times the fitness is tested during each 30-min optimization process.

4.1.2. Experimental Results

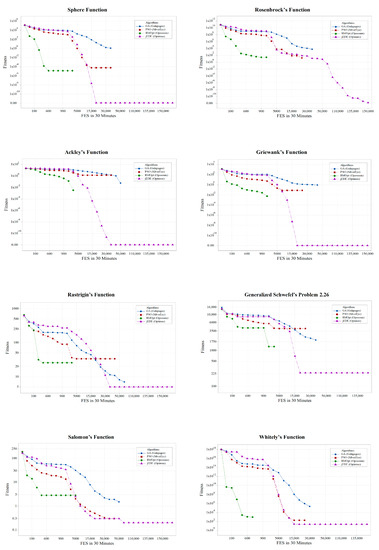

As mentioned before, Galapagos employs GA, SilverEye (v1.1.0) uses PSO and Opossum (v1.5.0) considers RBFOpt for enabling architectural design optimization in the GH environment. We compared results of Optimus, which uses jEDE, with those three optimization tools to present its performance. In addition, all the runs for each component and for each function were taken by the authors. Table 1 shows the fitness results of Optimus, Opossum, SilverEye, and Galapagos together with optimal fitness values of each function. Table 2 clearly indicates the superiority of the Optimus over other optimization tools such that it yielded the lowest minimum function values () in nineteen (19) functions out of twenty (20) functions, significantly. On the other hand, Galapagos favors Optimus in only one function () with a very small difference. Maximum () and average () function values in Table 1 further justify the better performance of the Optimus in such a way that the maximum and average function values of Optimus are closer to optimal fitness values in nineteen (19) functions than those yielded by other tools. Furthermore, the average number of function evaluations () within thirty minutes in each problem were the highest in Optimus. This clearly shows high margins between Optimus and other components where Optimus was tremendously faster in each iteration. For example, when solving , the Optimus (jEDE) approximately realized 5844 FES/minute, whereas GA made 1643 FES/minute, PSO made 1205 FES/minute, RBFOpt made 85 FES/minute. These facts explicitly imply the superiority of Optimus over other components in solving benchmark suite.

Table 2.

Comparison of Optimus, Opossum, Silvereye, Galapagos (D = 30, NP = 30, termination: 30 min).

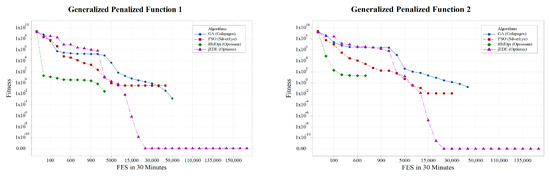

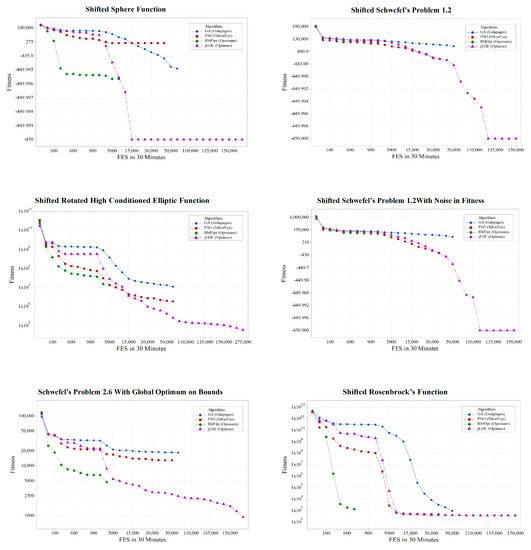

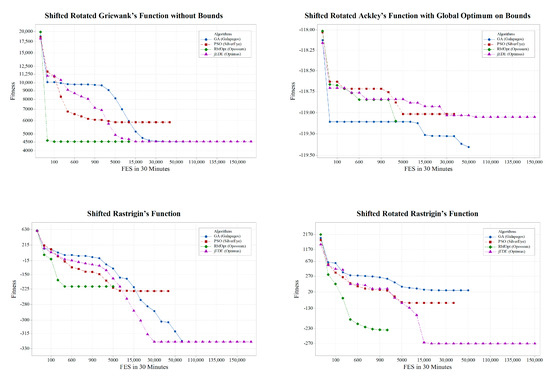

Results presented in Table 1 are provided in supplementary materials containing minimum fitness values of each replication, as well as their corresponding chromosomes. Considering , convergence of standard benchmarks is presented in Figure 8, whereas convergence of CEC 2005 benchmarks is given in Figure 9.

Figure 8.

Convergence graphs of standard benchmark functions.

Figure 9.

Convergence graphs of CEC2005 benchmark functions.

4.2. Design Optimization Problem

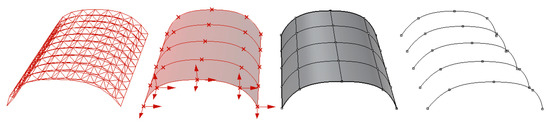

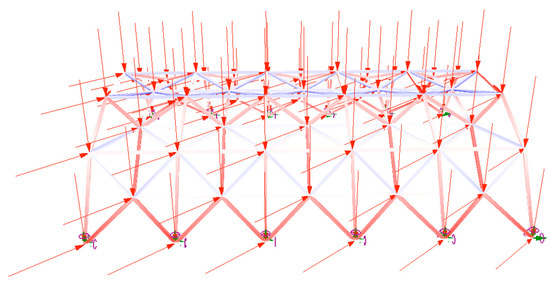

In this part, we present a design problem for a frame structure, which has a span for 30 m by 25 m. Before generating the frame structure, we executed a base surface, which controls the shape of the design. Therefore, a curved surface having 7.5 m height, five axes, and 25 controlling points is generated. Afterwards, 65 parameters are defined for 5 axes to change the shape of the base surface, so the shape of the frame structure. Points on ground level have two parameters for x and y directions, whereas other points have three parameters for changing the positions in all directions. In the next step, using the base surface, we generated the frame structure using truss structure component provided by LunchBox [61] plugin, which consists of several components using different geometrical shapes. In this component, the structure on the base surface is controlled by three parameters. These are division amounts on u and v directions, and the depth of the truss system. Finally, generated frame structure is combined with Karamba 3D plug-in [62], which provides evaluation of parametric structural models in GH. Structural evaluation process is mentioned on the following lines. Development of the parametric model is illustrated in Figure 10.

Figure 10.

Process of parametric frame structure.

To evaluate each generated structure alternative, each line in the structure model is defined as beam, whereas each point located on the ground level is defined as support points. In addition, cross-section and its parameters are also defined by Karamba just before the evaluation process. For the problem on hand, rectangular cross-section is defined using three parameters. These are height, upper and lower widths of the cross-section. To finalize the necessary inputs, we defined gravity and lateral loads. As gravity load, we considered the distribution of the total mass for each intersecting point. For the lateral load, we applied 2 kN on each intersecting point, as well. The material type of the model is assigned as steel. An example of evaluated model is shown in Figure 11.

Figure 11.

Evaluation of the structure model.

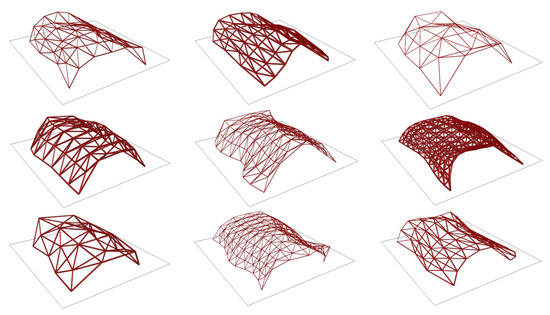

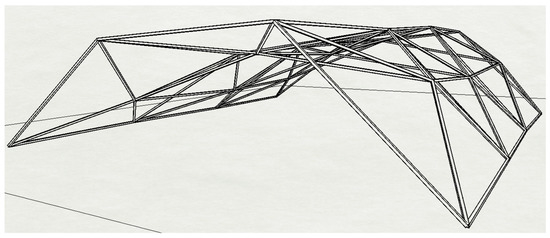

To sum up, 70 parameters are used to define the design optimization problem. This corresponds approximately 1.333 × alternatives in the search space. The reason of giving such a freedom is to investigate the impact of the design on the performance. Moreover, design problems are different than mathematical benchmark problems. A design problem can have more parameters due to an optimization task (e.g., optimizing the layout scheme and the façade design of a building to find the most desirable plan scheme with the lowest energy consumption). Properties of design parameters are given in Table 3. To show the divergence of the design problem, some of the frame structure alternatives are illustrated in Figure 12.

Table 3.

Properties of design parameters.

Figure 12.

Some alternatives of the design problem.

Objective function, which is minimizing the mass () subject to displacement (), is formulated as follows:

where is given by

where is the weight of th element of the frame structure and is the total number of the frame structure elements.

Subject to:

where is the loading force, and is the bending stiffness of the frame structure. To compare the optimization results of Optimus with others, we defined a penalty function by combining and as follows:

To sum up, for safety reasons, the final design should have minimum 0.1 m displacement. For this reason, displacement result that has smaller than 0.1 m is a feasible solution. In addition, for minimizing construction cost, the objective is defined as the minimization of the mass. Therefore, the final design should have the smallest amount of steel usage. The higher the mass is the lower the displacement, and the other way around.

4.2.1. Experimental Setup and Evaluation Criteria

The design optimization problem ran on a computer that had Intel Core I7-6500U CPU @ 2.50 GHz with 16 GB RAM. The number of dimensions was taken as 70, whereas the population size was taken as 50. As such the CEC 2005 benchmark problems, the termination criteria was determined as 30 min for each run. For the instance on hand, only one replication carried out for each optimization tool. To evaluate the performance of different optimization tools, we report , which is the minimum fitness value of the design problem, and , which is the constraint value of the minimum fitness. The maximum number of fitness evaluations () within 30 min for each tool are also recorded, which means how many times the fitness is evaluated during the optimization process. This problem definition is also available in supplementary materials as GH file to contribute evaluations of further developed architectural design optimization tools.

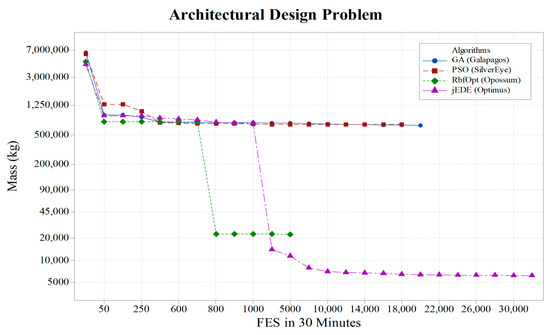

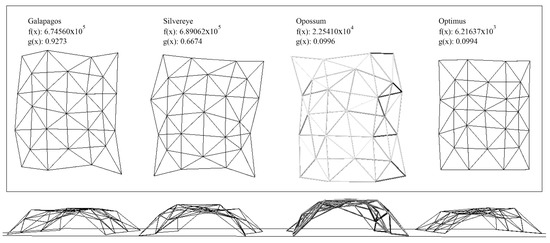

4.2.2. Design Results

After 30 min run for each tool in GH, overview of optimization results is given Table 4. Convergence graph for Optimus, Opossum, SilverEye and Galapagos is also given in Figure 13. In addition, final designs proposed by each algorithm are also illustrated in Figure 14. Based on these results, Optimus and Opossum found feasible solutions, whereas SilverEye and Galapagos discovered infeasible alternatives. Looking at feasible solutions, there is a significant difference between jEDE and RbfOpt. Optimus found smaller mass amount than Opossum. During the optimization process, we also observed that Optimus evaluated fitness function more than other tools. From the point of proposed design alternatives, Galapagos and SilverEye found bigger frame structures with smaller profile dimensions. This causes an increment on both displacement and mass. On the other hand, Opossum presented similar size with Galapagos and Silvereye, but bigger dimension sizes for profile. This suggests smaller amount of displacement. However, the final solution has more than 22 tons of steel. From the point of Optimus, the final design alternative presents the smallest frame structure having small profile sizes. This gives not only an advantage on displacement, but also provides a design alternative having smaller amount of steel comparing with other algorithms. A parametric model with 70 decision variables is a challenging optimization problem in the domain of architecture. From this perspective, the impact of self-adaptive parameter update, and ensemble of mutation strategies can be seen for the architectural design problem.

Table 4.

Comparison of Optimus, Opossum, Silvereye, Galapagos (D = 70, NP = 50, termination: 30 min).

Figure 13.

Convergence graph of architectural design problem.

Figure 14.

Optimized results of design problem.

Results presented in Table 3 are provided in supplementary materials containing minimum fitness values, and their corresponding chromosomes. The best result discovered by Optimus is illustrated in Figure 15.

Figure 15.

Illustration of the best alternative (Optimus) result for design optimization problem.

5. Discussion

In this paper, we presented a new optimization tool called Optimus, which implements jEDE algorithm, for GH. We tested the performance of Optimus using 20 benchmark problems and a design optimization problem against GA, PSO, RBFOpt. Experimental results show that jEDE outperforms these algorithms by finding better fitness values. There are several reasons to explain this fact. First, Optimus uses self-adaptive approach for producing control parameters. This gives an advantage to adapt the algorithm itself according to the nature of the problem. Secondly, Optimus presented significantly smaller fitness values than the other tools. This is related to the ensemble of mutation strategies. While the other algorithms are based on only one mutation operator, Optimus uses three mutation operators. During searching for a better solution, using more than one mutation strategy enlarges the search space. This increases the ability of searching near-optimal results. Thirdly, Optimus does not update number sliders, which corresponds to parameters, for each iteration. Instead, generated populations are directly connected to the geometry. Therefore, required computation time for one generation is less than the other algorithms. Finally, optimal values of some problems are outside of the initialization range (e.g., ). One may argue that this situation can be faced in architectural design problems, as well. Generating chromosome values outside of the initialization range is possible in the Optimus, whereas it is not possible in the other tools.

RBFOpt algorithm is recently compared with metaheuristics using several building optimization problems in [12,13,14,32]. According to the results, RBFOpt found better solutions than metaheuristics. In these studies, termination criterion is defined as number of iterations during the evaluation of different algorithms. This gives an advantage for RBFOpt, due to ability to discover desirable solutions with small number of function evaluations. However, metaheuristics require more function evaluations to find the optimal solutions. In this study, we defined the termination criterion as run time for 30 min to make a fair comparison. Results show that RBFOpt requires more computation time to execute one function than metaheuristics. For this reason, while comparing these algorithms, rather than iterations and/or function evaluations, run time should be considered as termination criterion.

Fitness functions based on simulations (e.g., energy and daylight) may require a huge amount of time for the convergence during the optimization. From the point of metaheuristics, usage of surrogate models [63] can be a solution to overcome this drawback. Because, surrogate models require less amount of function evaluation while approximating the fitness. By this way, researchers can consider many replications in a short run time.

Furthermore, handling constraints is another important topic for architectural design optimization. Most of the real-world problems require design constraints that may restrict the design alternatives during the optimization process. There are many constraint handling methods that can be practically integrated to the optimization algorithms [64]. In the reviewed literature, only Nelder–Mead optimization tool provides a constraint handling method for the design optimization problems in the GH. To make a fair comparison with Galapagos, SilverEye, and Opossum, we considered penalty function as constraint to find the feasible solution in the design optimization problem. However, there are extremely hard constraints that can be tackled in the real-world problems e.g., equality constraints. Currently, this is not available in GH.

In addition to the advantages of Optimus mentioned above, there are some limitations, as well. Firstly, even though modular approach provides a flexibility when we want to use different algorithms, it requires more plug-in items to generate each step of the optimization. This effects the practical usage of Optimus. Another limitation is evaluation strategy of fitness function. In other words, Optimus creating NP size of list, where each element in the list has D size of dimensions. All these parameters (with NPxD size) are sending to the objective function for fitness evaluation. Then, we obtain NP size of fitness results simultaneously. In the other tools, each parameter is sending to the fitness function in sequence. Then, the fitness function is evaluated. This fact gives an advantage for Optimus in terms of computation time but it may not be suitable for some of the architectural design problems.

6. Conclusions

As the conclusion, this paper presented a new optimization plug-in, called Optimus for grasshopper algorithmic modelling (GH) in the Rhinoceros CAD program. A self-adaptive differential evolution algorithm with ensemble of mutation strategies was implemented in Optimus for architectural design optimization problems. To test the algorithm performance, experimental design was made by using standard test problems in the literature, some of the test problems proposed in IEEE CEC 2005 and an architectural design problem. In general, Optimus outperformed other optimization tools in GH. This paper showed that an algorithm that presents a good performance in solving real-parameter benchmark functions can also find more desirable solutions in solving architectural design problems.

As future work, Optimus will be further improved by implementing different types of metaheuristic algorithms due to NFLT. These algorithms can be variants of PSO, GA, HS, and DE. Moreover, Optimus can be updated for constrained optimization problems using near-feasibility threshold [65,66], Superior of Feasibility [67], and Epsilon constraint [68]. Moreover, ensemble of constraint handling techniques [69] can be used in Optimus that may play a crucial role in architectural design problems. Finally, Optimus can be simply extended for multi-objective optimization problems due to its modular system.

Supplementary Materials

The following are available online at https://www.mdpi.com/1999-4893/12/7/141/s1.

Author Contributions

Conceptualization, C.C. and B.E.; Investigation, C.C. and B.E.; Methodology, C.C., B.E., M.F.T. and S.S.; Project administration, M.F.T. and S.S.; Software, C.C., B.E. and M.F.T.; Supervision, M.F.T. and S.S.; Validation, C.C. and B.E.; Writing–original draft, C.C. and B.E.; Writing–review & editing, C.C. and B.E.

Funding

M.F.T. was partially funded by the National Natural Science Foundation of China (Grant No. 51435009).

Acknowledgments

The authors would like to thank Michela Turrin and Pirouz Nourian for their useful feedbacks at the Chair of Design Informatics of the Faculty of Architecture and the Built Environment in TU Delft. M.F.T. acknowledges the HUST Project in Wuhan in China.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Benchmark Functions.

Table A1.

Benchmark Functions.

| : Sphere Function |

| : Rosenbrock’s Function |

| : Ackley’s Function |

| : Griewank’s Function |

| : Rastrigin’s Function |

| : Generalized Schwefel’s Problem 2.26 |

| : Salomon’s Function |

| : Whitely’s Function where |

| : Generalized Penalized Function 1 where and |

| : Generalized Penalized Function 2 |

| : Shifted Sphere Function , |

| : Shifted Schwefel’s Problem 1.2 , |

| : Shifted Rotated High Conditioned Elliptic Function , |

| : Shifted Schwefel’s Problem 1.2 With Noise in Fitness , |

| : Schwefel’s Problem 2.6 with Global Optimum on Bounds , |

| : Shifted Rosenbrock’s Function , |

| : Shifted Rotated Griewank’s Function without Bounds Initialize population in , Global optimum is outside of the initialization range, |

| : Shifted Rotated Ackley’s Function with Global Optimum on Bounds. , |

| : Shifted Rastrigin’s Function , |

| : Shifted Rotated Rastrigin’s Function . |

References

- Sariyildiz, S. Performative Computational Design, Keynote Speech. In Proceedings of the ICONARCH-I: International Congress of Architecture-I, Konya, Turkey, 15–17 November 2012. [Google Scholar]

- Ekici, B.; Cubukcuoglu, C.; Turrin, M.; Sariyildiz, I.S. Performative computational architecture using swarm and evolutionary optimisation: A review. Build. Environ. 2018, 147, 356–371. [Google Scholar] [CrossRef]

- Michalewicz, Z.; Fogel, D.B. How to Solve it: Modern Heuristics; Springer Science & Business Media: New York, NY, USA, 2013. [Google Scholar]

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68. [Google Scholar] [CrossRef]

- Eberhart, R.; Kennedy, J. A New Optimizer Using Particle Swarm Theory. In Proceedings of the MHS’95. Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 1995; pp. 39–43. [Google Scholar]

- Storn, R. On the Usage of Differential Evolution for Function Optimization. In Proceedings of the North American Fuzzy Information Processing, Berkeley, CA, USA, 19–22 June 1996; pp. 519–523. [Google Scholar]

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359. [Google Scholar] [CrossRef]

- Goldberg, D.E.; Holland, J.H. Genetic algorithms and machine learning. Mach. Learn. 1988, 3, 95–99. [Google Scholar] [CrossRef]

- Dorigo, M.; Gambardella, L.M. Ant colony system: A cooperative learning approach to the traveling salesman problem. IEEE Trans. Evol. Comput. 1997, 1, 53–66. [Google Scholar] [CrossRef]

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680. [Google Scholar] [CrossRef] [PubMed]

- Coello, C.A.C.; Lamont, G.B.; van Veldhuizen, D.A. Evolutionary Algorithms for Solving Multi-Objective Problems; Springer: Berlin/Heidelberg, Germany, 2007; Volume 5. [Google Scholar]

- Wortmann, T. Genetic Evolution vs. Function Approximation: Benchmarking Algorithms for Architectural Design Optimization. J. Comput. Des. Eng. 2018, 6, 414–428. [Google Scholar] [CrossRef]

- Wortmann, T.; Waibel, C.; Nannicini, G.; Evins, R.; Schroepfer, T.; Carmeliet, J. Are Genetic Algorithms Really the Best Choice for Building Energy Optimization? In Proceedings of the Symposium on Simulation for Architecture and Urban Design, Toronto, CA, Canada, 22–24 May 2017; p. 6. [Google Scholar]

- Waibel, C.; Wortmann, T.; Evins, R.; Carmeliet, J. Building energy optimization: An extensive benchmark of global search algorithms. Energy Build. 2019, 187, 218–240. [Google Scholar] [CrossRef]

- Cichocka, J.M.; Migalska, A.; Browne, W.N.; Rodriguez, E. SILVEREYE—The Implementation of Particle Swarm Optimization Algorithm in a Design Optimization Tool. In Proceedings of the International Conference on Computer-Aided Architectural Design Futures, Istanbul, Turkey, 10–14 July 2017; pp. 151–169. [Google Scholar]

- Rutten, D. Galapagos: On the logic and limitations of generic solvers. Archit. Des. 2013, 83, 132–135. [Google Scholar] [CrossRef]

- Camporeale, P.E.; del Moyano, P.M.M.; Czajkowski, J.D. Multi-objective optimisation model: A housing block retrofit in Seville. Energy Build. 2017, 153, 476–484. [Google Scholar] [CrossRef]

- Calcerano, F.; Martinelli, L. Numerical optimisation through dynamic simulation of the position of trees around a stand-alone building to reduce cooling energy consumption. Energy Build. 2016, 112, 234–243. [Google Scholar] [CrossRef]

- Anton, I.; Tãnase, D. Informed geometries. Parametric modelling and energy analysis in early stages of design. Energy Procedia 2016, 85, 9–16. [Google Scholar] [CrossRef]

- Tabadkani, A.; Banihashemi, S.; Hosseini, M.R. Daylighting and visual comfort of oriental sun responsive skins: A parametric analysis. Build. Simul. 2018, 11, 663–676. [Google Scholar] [CrossRef]

- Lee, K.; Han, K.; Lee, J. Feasibility study on parametric optimization of daylighting in building shading design. Sustainability 2016, 8, 1220. [Google Scholar] [CrossRef]

- Fathy, F.; Sabry, H.; Faggal, A.A. External Versus Internal Solar Screen: Simulation Analysis for Optimal Daylighting and Energy Savings in an Office Space. In Proceedings of the PLEA, Edinburgh, UK, 16 August 2017. [Google Scholar]

- Lavin, C.; Fiorito, F. Optimization of an external perforated screen for improved daylighting and thermal performance of an office space. Procedia Eng. 2017, 180, 571–581. [Google Scholar] [CrossRef]

- Heidenreich, C.; Ruth, J. Parametric optimization of lightweight structures, In Proceedings of the 11th World Congress on Computational Mechanics, Barcelona, Spain, 21–25 July 2014.

- Eisenbach, P.; Grohmann, M.; Rumpf, M.; Hauser, S. Seamless Rigid Connections of Thin Concrete Shells—A Novel Stop-End Construction Technique for Prefab Elements. In Proceedings of the IASS Annual Symposia, Amsterdam, The Netherlands, 17–20 August 2015; Volume 2015, pp. 1–12. [Google Scholar]

- Almaraz, A. Evolutionary Optimization of Parametric Structures: Understanding Structure and Architecture as a Whole from Early Design Stages. Master’s Thesis, University of Coruna, La Coruña, Spain, 2015. [Google Scholar]

- Simon. Goat. 2013. Available online: https://www.food4rhino.com/app/goat (accessed on 10 July 2019).

- Johnson, S.G. The Nlopt Nonlinear-Optimization Package. Available online: https://nlopt.readthedocs.io/en/latest/ (accessed on 10 July 2019).

- Ilunga, G.; Leitão, A. Derivative-free Methods for Structural Optimization. In Proceedings of the 36th eCAADe Conference, Lodz, Poland, 19–21 September 2018. [Google Scholar]

- Austern, G.; Capeluto, I.G.; Grobman, Y.J. Rationalization and Optimization of Concrete Façade Panels. In Proceedings of the 36th eCAADe Conference, Lodz, Poland, 19–21 September 2018. [Google Scholar]

- Delmas, A.; Donn, M.; Grosdemouge, V.; Musy, M.; Garde, F. Towards Context & Climate Sensitive Urban Design: An Integrated Simulation and Parametric Design Approach. In Proceedings of the 4th International Conference On Building Energy & Environment 2018 (COBEE2018), Melbourne, Australia, 5–9 February 2018. [Google Scholar]

- Wortmann, T. Opossum: Introducing and Evaluating a Model-based Optimization Tool for Grasshopper. In Proceedings of the CAADRIA 2017, Hong Kong, China, 5–8 July 2017. [Google Scholar]

- Costa, A.; Nannicini, G. RBFOpt: An open-source library for black-box optimization with costly function evaluations. Math. Program. Comput. 2018, 10, 597–629. [Google Scholar] [CrossRef]

- Wortmann, T. Model-based Optimization for Architectural Design: Optimizing Daylight and Glare in Grasshopper. Technol. Archit. Des. 2017, 1, 176–185. [Google Scholar] [CrossRef]

- Greco, L. Dodo. 2015. Available online: https://www.food4rhino.com/app/dodo (accessed on 10 July 2019).

- Eckersley O’Callaghan’s Digital Design Group. 2013. Nelder-Mead Optimization. Available online: https://www.food4rhino.com/app/nelder-mead-optimisation-eoc (accessed on 10 July 2019).

- Lagarias, J.C.; Reeds, J.A.; Wright, M.H.; Wright, P.E. Convergence properties of the Nelder--Mead simplex method in low dimensions. SIAM J. Optim. 1998, 9, 112–147. [Google Scholar] [CrossRef]

- Wrenn, G.A. An Indirect Method for Numerical Optimization Using the Kreisselmeir-Steinhauser Function; NASA: Washington, DC, USA, 1989.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef]

- Attia, S.; Hamdy, M.; O’Brien, W.; Carlucci, S. Assessing gaps and needs for integrating building performance optimization tools in net zero energy buildings design. Energy Build. 2013, 60, 110–124. [Google Scholar] [CrossRef]

- Robert McNeel & Associates. Rhinoceros 3D. NURBS Modelling. 2015. Available online: https://www.rhino3d.com/ (accessed on 10 July 2019).

- Brest, J.; Greiner, S.; Boskovic, B.; Mernik, M.; Zumer, V. Self-adapting control parameters in differential evolution: A comparative study on numerical benchmark problems. IEEE Trans. Evol. Comput. 2006, 10, 646–657. [Google Scholar] [CrossRef]

- Mallipeddi, R.; Suganthan, P.N.; Pan, Q.-K.; Tasgetiren, M.F. Differential evolution algorithm with ensemble of parameters and mutation strategies. Appl. Soft Comput. 2011, 11, 1679–1696. [Google Scholar] [CrossRef]

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.-P.; Auger, A.; Tiwari, S. Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization. KanGAL Rep. 2005, 2005005, 2005. [Google Scholar]

- Blackwell, T.M.; Kennedy, J.; Poli, R. Particle swarm optimization. Swarm Intell. 2007, 1, 33–57. [Google Scholar]

- Sengupta, S.; Basak, S.; Peters, R. Particle Swarm Optimization: A survey of historical and recent developments with hybridization perspectives. Mach. Learn. Knowl. Extr. 2019, 1, 157–191. [Google Scholar] [CrossRef]

- Mirjalili, S. Genetic Algorithm. In Evolutionary Algorithms and Neural Networks; Springer: New York, NY, USA, 2019; pp. 43–55. [Google Scholar]

- Koza, J.R. Genetic Programming: On the Programming of Computers by Means of Natural Selection; MIT press: Cambridge, MA, USA, 1992; Volume 1. [Google Scholar]

- Knowles, J.; Corne, D. The Pareto Archived Evolution Strategy: A New Baseline Algorithm for Pareto Multiobjective Optimisation. In Proceedings of the Congress on Evolutionary Computation (CEC99), Washington, DC, USA, 6–9 July 1999; Volume 1, pp. 98–105. [Google Scholar]

- Pan, Q.-K.; Tasgetiren, M.F.; Liang, Y.-C. A discrete differential evolution algorithm for the permutation flowshop scheduling problem. Comput. Ind. Eng. 2008, 55, 795–816. [Google Scholar] [CrossRef]

- Venu, M.K.; Mallipeddi, R.; Suganthan, P.N. Fiber Bragg grating sensor array interrogation using differential evolution. Optoelectron. Adv. Mater. Commun. 2008, 2, 682–685. [Google Scholar]

- Varadarajan, M.; Swarup, K.S. Differential evolution approach for optimal reactive power dispatch. Appl. Soft Comput. 2008, 8, 1549–1561. [Google Scholar] [CrossRef]

- Das, S.; Konar, A. Automatic image pixel clustering with an improved differential evolution. Appl. Soft Comput. 2009, 9, 226–236. [Google Scholar] [CrossRef]

- Chatzikonstantinou, I.; Ekici, B.; Sariyildiz, I.S.; Koyunbaba, B.K. Multi-Objective Diagrid Façade Optimization Using Differential Evolution. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 2311–2318. [Google Scholar]

- Cubukcuoglu, C.; Chatzikonstantinou, I.; Tasgetiren, M.F.; Sariyildiz, I.S.; Pan, Q.-K. A multi-objective harmony search algorithm for sustainable design of floating settlements. Algorithms 2016, 9, 51. [Google Scholar] [CrossRef]

- Das, S.; Suganthan, P.N. Differential evolution: A survey of the state-of-the-art. IEEE Trans. Evol. Comput. 2011, 15, 4–31. [Google Scholar] [CrossRef]

- Das, S.; Mullick, S.S.; Suganthan, P.N. Recent advances in differential evolution—an updated survey. Swarm Evol. Comput. 2016, 27, 1–30. [Google Scholar] [CrossRef]

- Tasgetiren, M.F.; Suganthan, P.N.; Pan, Q.-K.; Mallipeddi, R.; Sarman, S. An Ensemble of Differential Evolution Algorithms for Constrained Function Optimization. In Proceedings of the IEEE congress on evolutionary computation, Vancouver, BC, Canada, 24–29 July 2016; pp. 1–8. [Google Scholar]

- Hansen, N.; Ostermeier, A. Completely derandomized self-adaptation in evolution strategies. Evol. Comput. 2001, 9, 159–195. [Google Scholar] [CrossRef] [PubMed]

- Chatzikonstantinou, I. HoopSnake. 2012. Available online: https://www.food4rhino.com/app/hoopsnake (accessed on 10 July 2019).

- Miller, N. LunchBox. 2012. Available online: https://www.food4rhino.com/app/lunchbox (accessed on 10 July 2019).

- Preisinger, C.; Heimrath, M. Karamba—A toolkit for parametric structural design. Struct. Eng. Int. 2014, 24, 217–221. [Google Scholar] [CrossRef]

- Shan, S.; Wang, G.G. Survey of modeling and optimization strategies to solve high-dimensional design problems with computationally-expensive black-box functions. Struct. Multidiscip. Optim. 2010, 41, 219–241. [Google Scholar] [CrossRef]

- Coello, C.A.C. Theoretical and numerical constraint-handling techniques used with evolutionary algorithms: A survey of the state of the art. Comput. Methods Appl. Mech. Eng. 2002, 191, 1245–1287. [Google Scholar] [CrossRef]

- Tasgetiren, M.F.; Suganthan, P.N. A Multi-Populated Differential Evolution Algorithm for Solving Constrained Optimization Problem. In Proceedings of the 2006 IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 33–40. [Google Scholar]

- Coit, D.W.; Smith, A.E. Penalty guided genetic search for reliability design optimization. Comput. Ind. Eng. 1996, 30, 895–904. [Google Scholar] [CrossRef]

- Deb, K. An efficient constraint handling method for genetic algorithms. Comput. Methods Appl. Mech. Eng. 2000, 186, 311–338. [Google Scholar] [CrossRef]

- Haimes, Y.V. On a bicriterion formulation of the problems of integrated system identification and system optimization. IEEE Trans. Syst. Man. Cybern. 1971, 1, 296–297. [Google Scholar]

- Mallipeddi, R.; Suganthan, P.N. Ensemble of constraint handling techniques. IEEE Trans. Evol. Comput. 2010, 14, 561–579. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).