Optical Flow Estimation with Occlusion Detection

Abstract

1. Introduction

2. Related Work

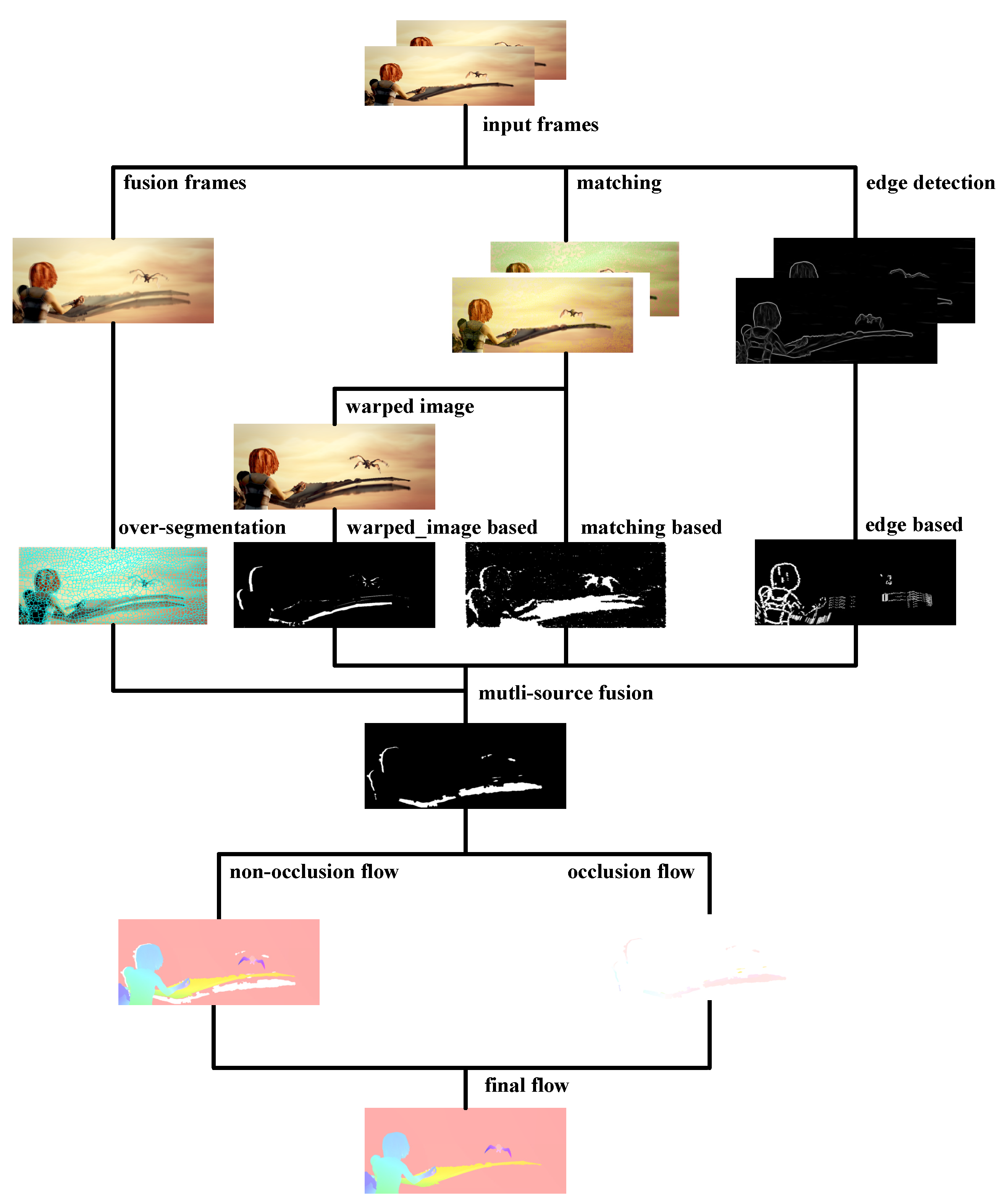

3. Occlusion Detection Strategy

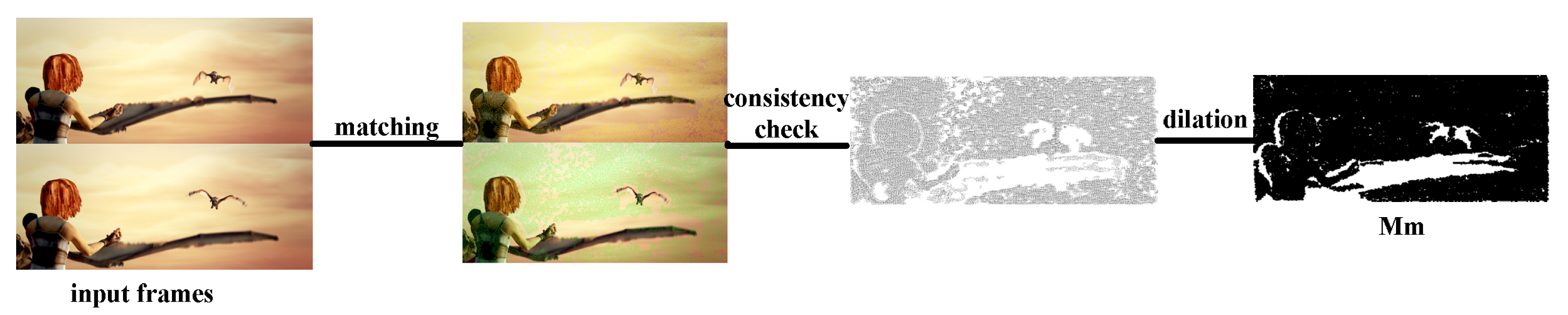

3.1. Matching-Based Strategy

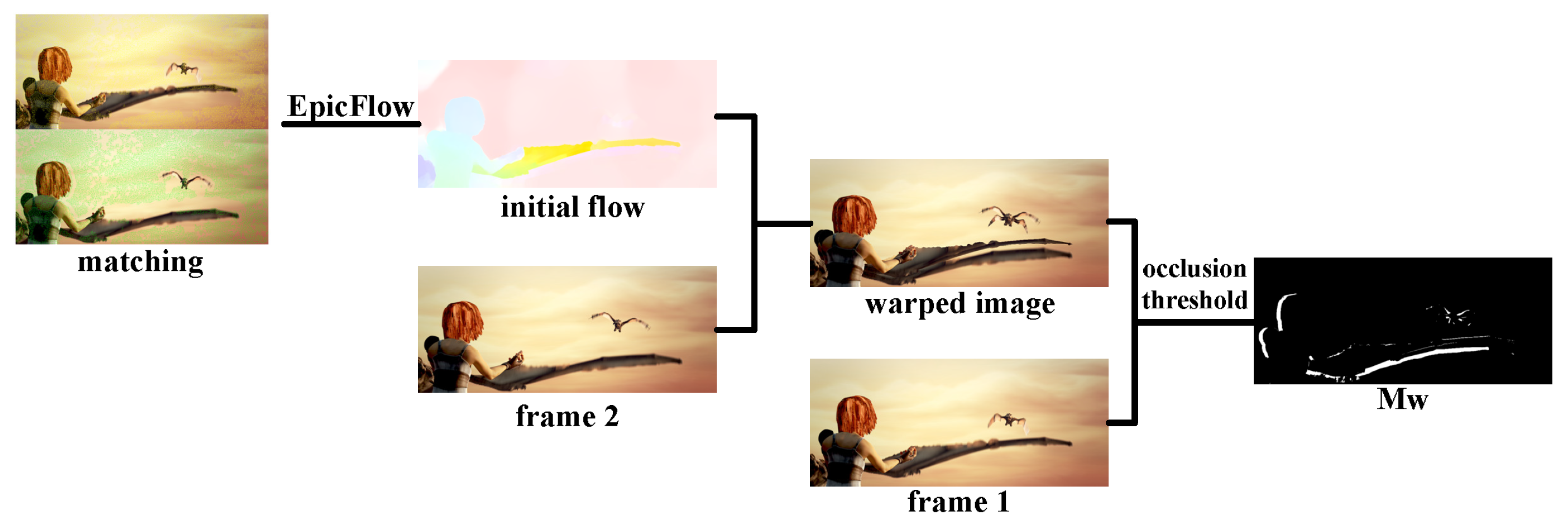

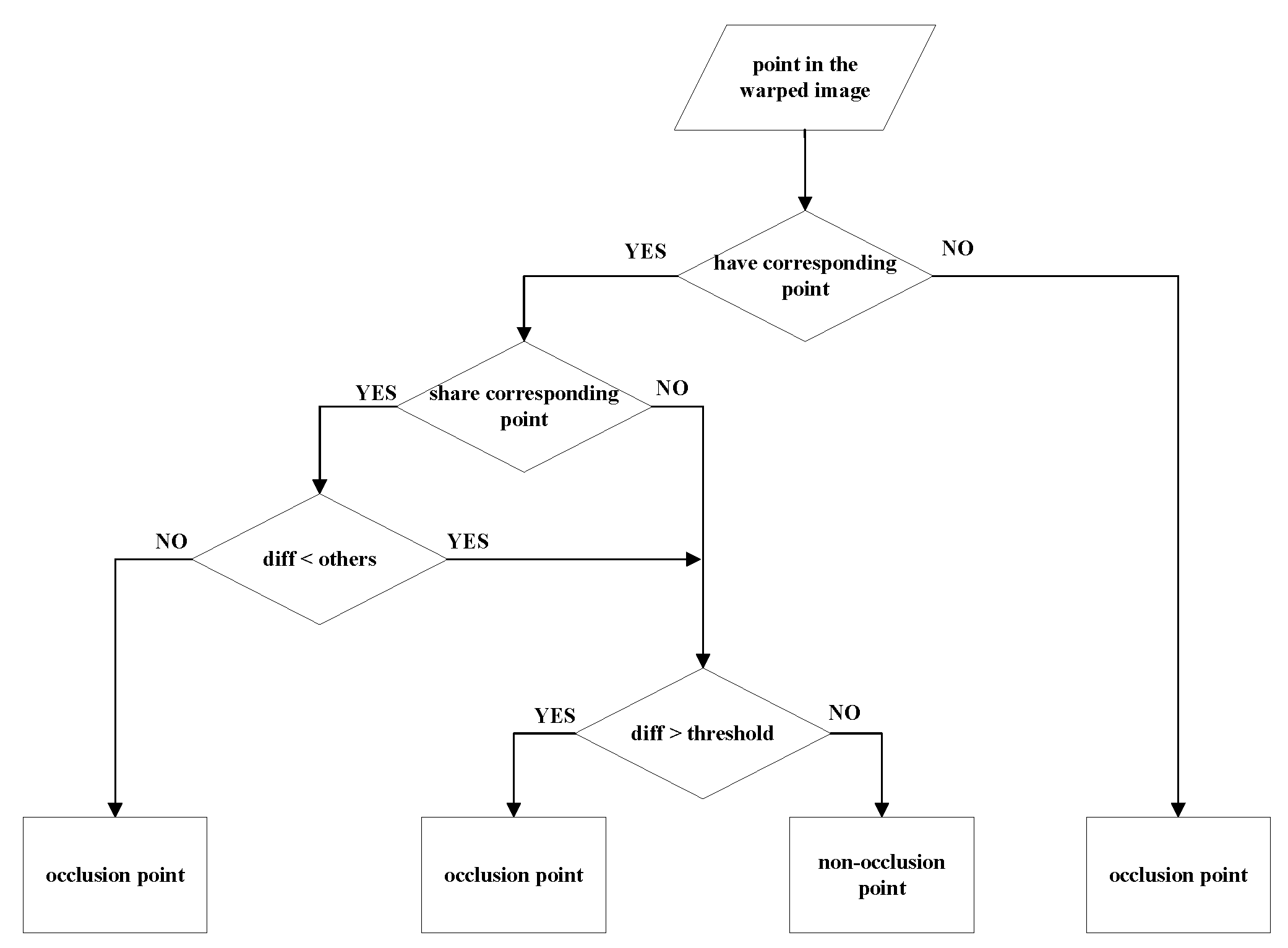

3.2. Warped Image-Based Strategy

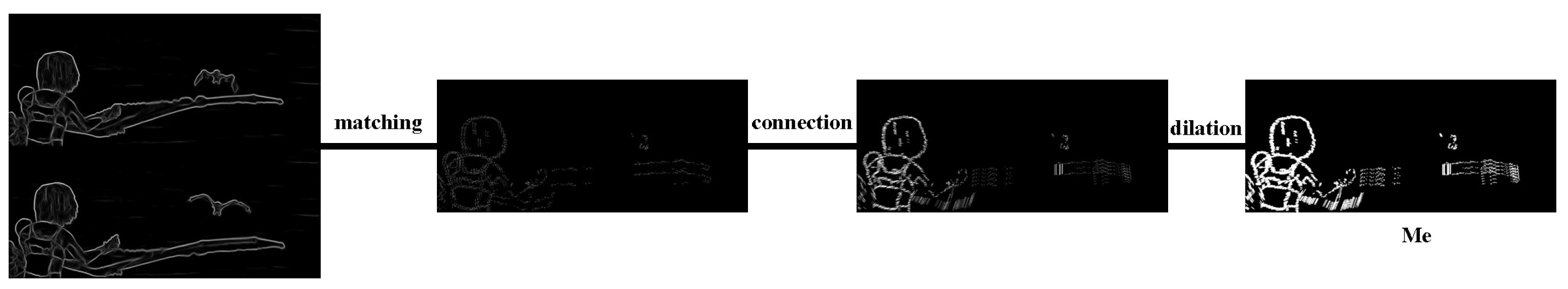

3.3. Edge-Based Strategy

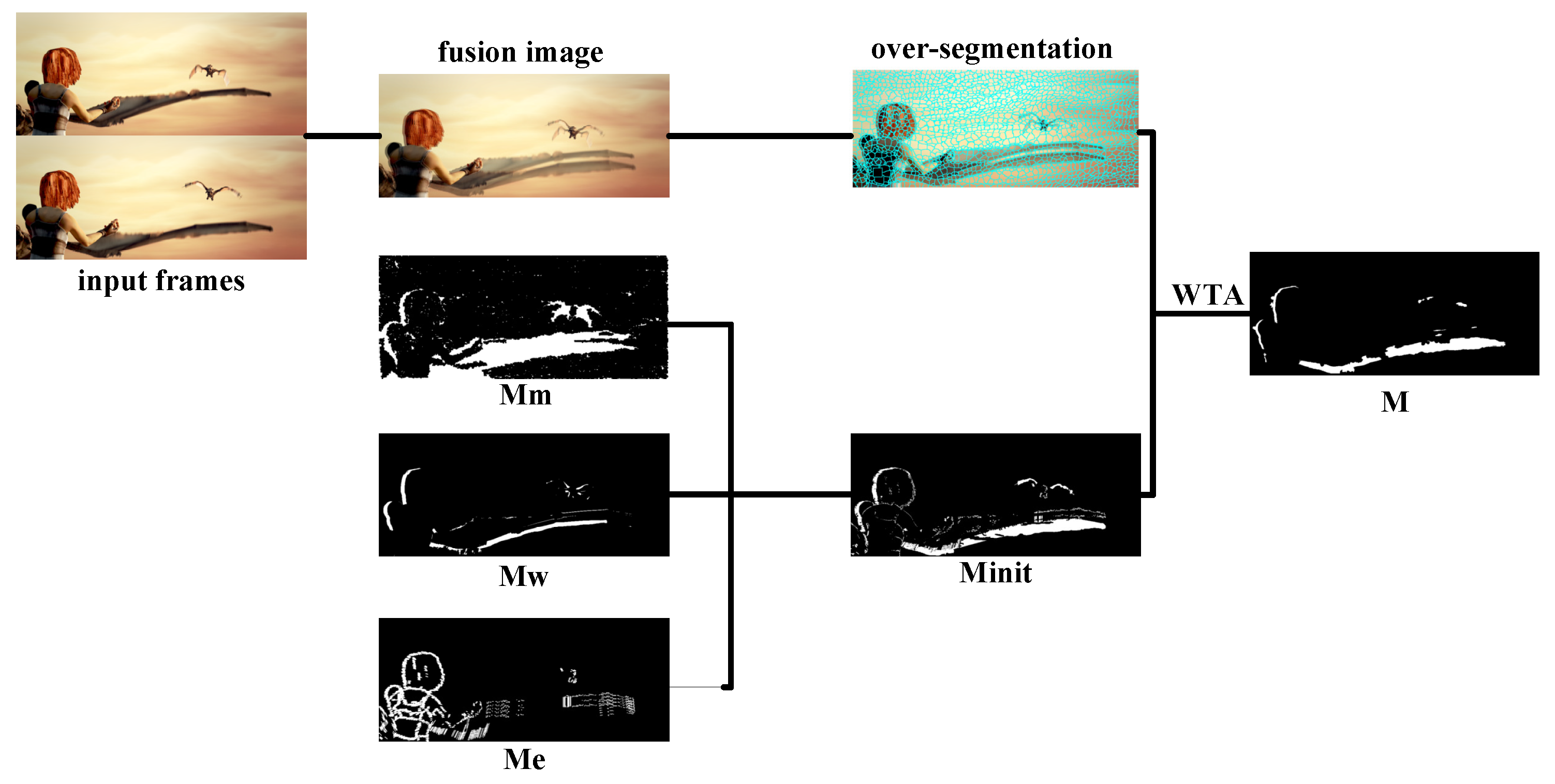

3.4. Regional-Based Fusion Algorithm

4. Occlusion-Aware Optical Flow Estimation

5. Experiments

5.1. Experiments on Occlusion Detection

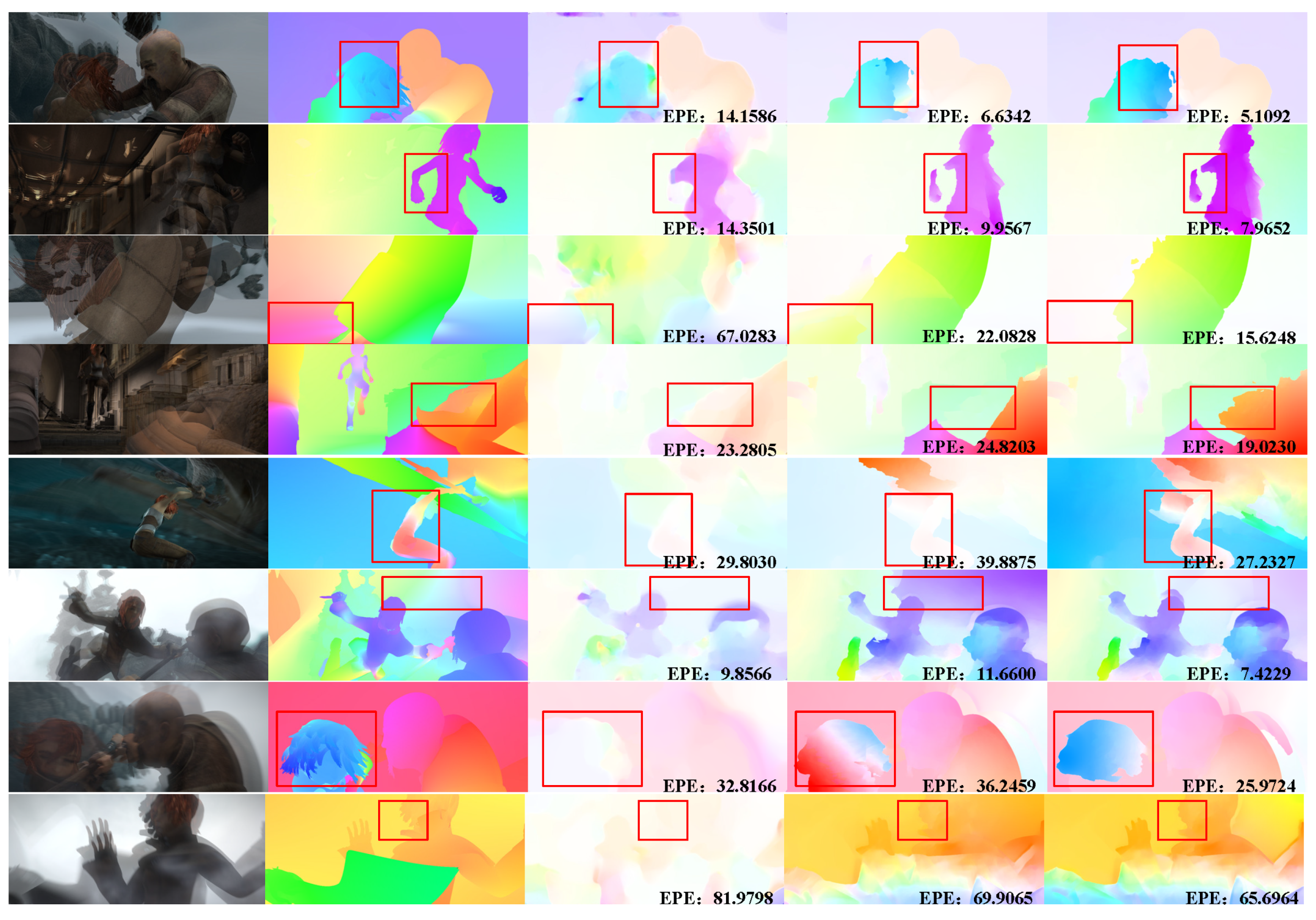

5.2. Experiments on Optical Flow

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Tsai, Y.H.; Yang, M.H.; Black, M.J. Video segmentation via object flow. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3899–3908.

- Baghaie, A.; Tafti, A.P.; Owen, H.A.; D’Souza, R.M.; Yu, Z. Three-dimensional reconstruction of highly complex microscopic samples using scanning electron microscopy and optical flow estimation. PLoS ONE 2017, 12, e0175078.

- Mukherjee, K.; Mukherjee, A. Joint optical flow motion compensation and video compression using hybrid vector quantization. In Proceedings of the DCC’99 Data Compression Conference, Snowbird, UT, USA, 29–31 March 1999; p. 541.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the DARPA Image Understanding Workshop, 24–28 April 1981.

- Weinzaepfel, P.; Revaud, J.; Harchaoui, Z.; Schmid, C. DeepFlow: Large displacement optical flow with deep matching. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 1385–1392.

- Bailer, C.; Taetz, B.; Stricker, D. Flow fields: Dense correspondence fields for highly accurate large displacement optical flow estimation. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 7–13 December 2015; pp. 4015–4023.

- Brox, T.; Malik, J. Large displacement optical flow: descriptor matching in variational motion estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 500–513.

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766.

- Ilg, E.; Mayer, N.; Saikia, T.; Keuper, M.; Dosovitskiy, A.; Brox, T. Flownet 2.0: Evolution of optical flow estimation with deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Ranjan, A.; Black, M.J. Optical flow estimation using a spatial pyramid network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Thewlis, J.; Zheng, S.; Torr, P.H.; Vedaldi, A. Fully-trainable deep matching. arXiv 2016, arXiv:1609.03532.

- Žbontar, J.; LeCun, Y. Computing the stereo matching cost with a convolutional neural network. In Proceedings of the Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1592–1599.

- Gadot, D.; Wolf, L. Patchbatch: A batch augmented loss for optical flow. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4236–4245.

- Sevilla-Lara, L.; Sun, D.; Jampani, V.; Black, M.J. Optical flow with semantic segmentation and localized layers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3889–3898.

- Bai, M.; Luo, W.; Kundu, K.; Urtasun, R. Exploiting semantic information and deep matching for optical flow. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 154–170.

- Shen, X.; Gao, H.; Tao, X.; Zhou, C.; Jia, J. High-quality correspondence and segmentation estimation for dual-lens smart-phone portraits. arXiv 2017, arXiv:1704.02205.

- Black, M.J.; Anandan, P. The robust estimation of multiple motions: Parametric and piecewise-smooth flow fields. Comput. Vis. Image Underst. 1996, 63, 75–104.

- Bruhn, A.; Weickert, J.; Schnörr, C. Lucas/Kanade meets Horn/Schunck: Combining local and global optic flow methods. Int. J. Comput. Vis. 2005, 61, 211–231.

- Lefebure, M.; Alvarez, L.; Esclarin, J.; Sánchez, J. A PDE model for computing the optical flow. In Proceedings of the XVI Congreso de Ecuaciones Diferenciales y Aplicaciones, Las Palmas de Gran Canaria, Spain, 21–24 September 1999; pp. 1349–1356.

- Alvarez, L.; Deriche, R.; Sanchez, J.; Weickert, J. Dense disparity map estimation respecting image discontinuities: A PDE and scale-space based approach. J. Vis. Commun. Image Represent. 2002, 13, 3–21.

- Hur, J.; Roth, S. MirrorFlow: Exploiting symmetries in joint optical flow and occlusion estimation. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Kim, H.; Sohn, K. 3D reconstruction from stereo images for interactions between real and virtual objects. Signal Process. Image Commun. 2005, 20, 61–75.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Anandan, P. A computational framework and an algorithm for the measurement of visual motion. Int. J. Comput. Vis. 1989, 2, 283–310.

- Yang, Y.; Soatto, S. S2F: Slow-to-fast interpolator flow. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Weickert, J.; Bruhn, A.; Brox, T.; Papenberg, N. A survey on variational optic flow methods for small displacements. In Mathematical Models for Registration and Applications to Medical Imaging; Springer: Berlin/Heidelberg, Germany, 2006; pp. 103–136.

- Monzón, N.; Salgado, A.; Sánchez, J. Regularization strategies for discontinuity-preserving optical flow methods. IEEE Trans. Image Process. 2016, 25, 1580–1591.

- Sun, D.; Roth, S.; Black, M.J. A quantitative analysis of current practices in optical flow estimation and the principles behind them. Int. J. Comput. Vis. 2014, 106, 115–137.

- Brox, T.; Bruhn, A.; Papenberg, N.; Weickert, J. High accuracy optical flow estimation based on a theory for warping. In Proceedings of the European Conference on Computer Vision, Prague, Czech Republic, 11–14 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 25–36.

- Alvarez, L.; Deriche, R.; Papadopoulo, T.; Sánchez, J. Symmetrical dense optical flow estimation with occlusions detection. Int. J. Comput. Vis. 2007, 75, 371–385.

- Kennedy, R.; Taylor, C.J. Optical flow with geometric occlusion estimation and fusion of multiple frames. In Proceedings of the International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, Hong Kong, China, 13–16 January 2015; Springer: Cham, Switzerland, 2015; pp. 364–377.

- Chen, Q.; Koltun, V. Full flow: Optical flow estimation by global optimization over regular grids. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4706–4714.

- Wulff, J.; Sevilla-Lara, L.; Black, M.J. Optical flow in mostly rigid scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 2, p. 7.

- He, K.; Sun, J. Computing nearest-neighbor fields via propagation-assisted kd-trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 111–118.

- Luo, W.; Schwing, A.G.; Urtasun, R. Efficient deep learning for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5695–5703.

- Revaud, J.; Weinzaepfel, P.; Harchaoui, Z.; Schmid, C. Epicflow: Edge-preserving interpolation of correspondences for optical flow. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1164–1172.

- Dollár, P.; Zitnick, C.L. Structured forests for fast edge detection. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1841–1848.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Blake, A.; Zisserman, A. Visual Reconstruction; MIT Press: London, UK, 1987.

- Wainwright, M.J.; Jaakkola, T.S.; Willsky, A.S. MAP estimation via agreement on trees: message-passing and linear programming. IEEE Trans. Inf. Theory 2005, 51, 3697–3717.

- Kolmogorov, V. Convergent tree-reweighted message passing for energy minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1568–1583.

- Pérez, J.S.; López, N.M.; de la Nuez, A.S. Robust optical flow estimation. Image Process. Line 2013, 3, 252–270.

- Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A naturalistic open source movie for optical flow evaluation. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 611–625.

- Wang, Y.; Yang, Y.; Yang, Z.; Zhao, L.; Wang, P.; Xu, W. Occlusion aware unsupervised learning of optical flow. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4884–4893.

| Methods | F-Measure | AEE |
|---|---|---|
| | 0.2919 | 3.8244 |
| | 0.4534 | 3.7604 |
| | 0.1167 | 4.1567 |
| | 0.4483 | 3.7828 |
| | 0.4472 | 3.7760 |
| M | 0.4715 | 3.7440 |
| [44] | 0.48 | 6.34 |
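
The two metric columns in the table above are not defined in this section. As a rough guide, the sketch below uses the conventional definitions: AEE as the mean Euclidean distance between estimated and ground-truth flow vectors, and F-measure as the harmonic mean of precision and recall over binary occlusion masks. The function names and the flat-list data layout are hypothetical choices for illustration, and the paper's exact evaluation protocol may differ.

```python
import math


def average_endpoint_error(flow_est, flow_gt):
    """Mean Euclidean distance between estimated and ground-truth flow.

    Both arguments are equal-length sequences of (u, v) pairs, one per pixel.
    (Hypothetical helper; the flat-list layout is an assumption.)
    """
    if len(flow_est) != len(flow_gt) or not flow_gt:
        raise ValueError("flow fields must be non-empty and the same length")
    total = sum(
        math.hypot(ue - ug, ve - vg)
        for (ue, ve), (ug, vg) in zip(flow_est, flow_gt)
    )
    return total / len(flow_gt)


def f_measure(pred_mask, gt_mask):
    """Harmonic mean of precision and recall for binary occlusion masks.

    Truthy entries mark pixels labelled as occluded.
    (Hypothetical helper; standard F1 definition is assumed.)
    """
    pairs = list(zip(pred_mask, gt_mask))
    tp = sum(1 for p, g in pairs if p and g)        # occluded, detected
    fp = sum(1 for p, g in pairs if p and not g)    # false alarm
    fn = sum(1 for p, g in pairs if not p and g)    # missed occlusion
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    est = [(1.0, 0.0), (0.0, 0.0)]
    gt = [(4.0, 4.0), (0.0, 0.0)]
    print(average_endpoint_error(est, gt))
    print(f_measure([1, 1, 0, 0], [1, 0, 1, 0]))
```

Under these definitions a lower AEE and a higher F-measure indicate better results, which is how the rows of the table would be compared.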

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, S.; Wang, Z. Optical Flow Estimation with Occlusion Detection. Algorithms 2019, 12, 92. https://doi.org/10.3390/a12050092