Abstract

System Gramian matrices are a well-known encoding for properties of input-output systems such as controllability, observability or minimality. These so-called system Gramians were developed in linear system theory for applications such as model order reduction of control systems. Empirical Gramians are an extension to the system Gramians for parametric and nonlinear systems as well as a data-driven method of computation. The empirical Gramian framework - emgr - implements the empirical Gramians in a uniform and configurable manner, with applications such as Gramian-based (nonlinear) model reduction, decentralized control, sensitivity analysis, parameter identification and combined state and parameter reduction.

Keywords:

model reduction; model order reduction; decentralized control; sensitivity analysis; parameter identification; empirical gramians; nonlinear systems; reduced order systems; controllability; observability PACS:

03.30. Yy

MSC:

93A15; 93B20; 93C10

Code Meta Data

| name (shortname) | EMpirical GRamian Framework (emgr) |

| version (release-date) | 5.4 (2018-05-05) |

| identifier (type) | doi:10.5281/zenodo.1241532 (doi) |

| authors (ORCIDs) | Christian Himpe (0000-0003-2194-6754) |

| topic (type) | Model Reduction (toolbox) |

| license (type) | 2-Clause BSD (open) |

| repository (type) | git:github.com/gramian/emgr (git) |

| languages | Matlab |

| dependencies | OCTAVE >= 4.2, MATLAB >= 2016b |

| systems | Linux, Windows |

| website | http://gramian.de |

| keywords | empirical-gramians, cross-gramian, combined-reduction |

1. Introduction

Attributes of input-output systems, such as controllability, observability or minimality can be assessed by special matrices. These so-called system Gramian matrices, or short system Gramians (Also: Grammian or Gram matrix.), have manifold applications in system theory, control theory and mathematical engineering.

Originally, Gramian-based methods were developed for linear systems [1]. The empirical Gramian matrices [2] are an extension of Gramian-based methods to nonlinear and parametric systems. This work summarizes the empirical Gramian framework (emgr) [3], a compact software toolbox, which implements various types of empirical Gramians as well as the related empirical covariance matrices.

An important use of empirical Gramian matrices is model order reduction (MOR), utilizing the capability of system Gramians to quantify the input-output importance of the underlying system’s states based on controllability and observability. Several variants of Gramian-based model reduction are available, for example:

- Linear Model Order Reduction [4],

- Robust Model Reduction [5],

- Parametric Model Order Reduction (pMOR) [6],

- Nonlinear Model Order Reduction (nMOR) [2,7,8,9],

- Second-Order System Model Reduction [10],

- Combined State and Parameter Reduction [11].

Beyond model reduction, (empirical) system Gramians can also be utilized for tasks like:

- Sensitivity Analysis [12,13],

- Parameter Identification [14,15],

- Decentralized Control [16,17,18],

- Optimal Sensor Placement [19,20], Optimal Actuator Placement [21],

- Optimal Control [22], Model Predictive Control [23],

- Nonlinearity Quantification [24,25].

In addition, various system invariants and indices are computable using system Gramians, and thus also by empirical Gramians:

- System gain [12],

- Cauchy index [26,27],

- Information entropy index [28],

- Nyquist plot enclosed area [29],

- System Frobenius norm and ellipsoid volume [30],

- System -norm [31].

This wide range of applications and the compatibility to nonlinear systems make empirical Gramians a versatile tool in many system-theoretic computations. Furthermore, the empirical Gramians provide a data-driven method of computation with close relations to proper orthogonal decomposition (POD) and balanced POD (bPOD) [32,33].

Various (Matlab) implementations are available for the computation of linear system Gramians by the solution of associated matrix equations, such as the basic sylvester command, the gram, lyap and covar commands from the Matlab Control Toolbox (http://mathworks.com/products/control) and Octave Control Package (http://octave.sourceforge.net/control). For empirical Gramians, the only other generic implementation, to the author’s best knowledge, is [34], which provides only the empirical controllability Gramian and the empirical observability Gramian, but not any empirical cross Gramian (see Section 3.1.3 and Section 3.1.4). This makes emgr the unique (open-source) implementation of all three: the empirical controllability Gramian , the empirical observability Gramian and the empirical cross Gramian (sometimes also symbolized by and ).

Lastly, it is noted that the term empirical Gramian is used as an umbrella term for the original empirical Gramians [2], the empirical covariance matrices [35], modified empirical Gramians [36] or local Gramians [37].

1.1. Aim

After its initial version (2013) release, accompanied by [38], the empirical Gramian framework (emgr is also listed in the Oberwolfach References on Mathematical Software (ORMS), no. 345: http://orms.mfo.de/project?id=345.) has been significantly enhanced. Apart from extended functionality and accelerated performance, various new concepts and features were implemented. Now, with the release of version (2018) [3], this is a follow-up work illustrating the current state of emgr and its applicability, as well as documenting the flexibility of this toolbox. In short, the major changes involve:

- Non-symmetric cross Gramian variant,

- linear cross Gramian variant,

- distributed cross Gramian variant and interface,

- inner product kernel interface,

- time-integrator interface,

- time-varying system compatibility,

- tensor-based trajectory storage,

- functional paradigm software design.

1.2. Outline

This work is structured as follows: In Section 2 the empirical Gramian’s main application, projection-based model order reduction, is briefly described; followed by Section 3, presenting the mathematical definitions of the computable empirical Gramians. Section 4 summarizes the design decision for emgr, while Section 5 documents usage and configuration. Numerical examples are demonstrated in Section 6 and lastly, in Section 7, a short concluding remark is given.

2. Mathematical Preliminaries

The mathematical objects of interest are nonlinear parametric input-output systems, which frequently occur in physical, chemical, biological and technical models or spatially discretized partial differential equations (PDE). These control system models consist of a dynamical system (typically on error, i.e., an ordinary differential equation (ODE)) as well as an output function, and maps the input via the state to the output :

The potentially nonlinear vector-field and output functional both depend on the time , the state , input or control and the parameters . Together with an initial condition , this setup constitutes an initial value problem.

Model Reduction

The aim of model reduction is the algorithmic computation of surrogate reduced order models with lower computational complexity or memory footprint than the original full order model. For the sake of brevity, only combined state and parameter reduction is summarized here, which includes state-space reduction, parametric state-space reduction and parameter-space reduction as special cases; for an elaborate layout see [39].

Given the general, possibly nonlinear, input-output system (1), a combined state and parameter reduced order model:

with a reduced state , , and a reduced parameter , , is sought. Accordingly, a reduced vector-field and a reduced output functional describe the reduced system, for which the reduced system’s outputs should exhibit a small error compared to the full order model, yet preserving the parameter dependency:

A class of methods to obtain such a reduced order model with the associated requirements is described next.

- Projection-Based Combined Reduction

Projection-based combined state and parameter reduction is based on (bi-)orthogonal truncated projections for the state- and parameter-space respectively. The state-space trajectory , not too far from a steady-state , , , is approximated affinely using truncated reducing and reconstructing projections and , with :

The relevant parameter-space volume is also approximated by truncated reducing and reconstructing projections and , with :

relative to a nominal parameter . Given these truncated projections, a projection-based reduced order model is then obtained by:

Thus, to obtain a projection-based reduced order model with respect to the state- and parameter-space, the overall task is determining the truncated projections , , and .

It should be noted that this approach produces globally reduced order models, meaning , , , are valid over the whole operating region, which is an application-specific subspace of the Cartesian product of the full order state- and parameter-space .

- Gramian-Based Model Reduction

Gramian-based model reduction approximates the input-output behavior of a system by removing the least controllable and observable state components. To this end, the system is transformed to a representation in which controllability and observability are balanced. Given a controllability Gramian (Section 3.1.1) and observability Gramian (Section 3.1.2) to an input-output system, a balancing transformation [4] is computable; here in the variant from [40], utilizing the singular value decomposition (SVD):

Partitioning the columns of U and V based on the Hankel singular values (HSV) in D, , which indicate the balanced state’s relevance to the system’s input-output behavior, into , related to the large HSV and , associated to the small HSV,

then discarding the partitions associated to small singular values , corresponds to the balanced truncation method [4,41].

A cross Gramian (Section 3.1.4) encodes both controllability and observability in a single linear operator. For a symmetric system, a balancing transformation can then be obtained from an eigenvalue decomposition (EVD) of the cross Gramian [42,43]:

Alternatively, an approximate balancing transformation is obtained from an SVD of the cross Gramian [11,44]:

The truncated projections, and , are obtained in the same way as for balanced truncation. Using only the left or only the right singular vectors of for the (truncated) projections of the state-space, and their transpose as reverse transformation, results in orthogonal (Galerkin) projections [45]. This approach is called direct truncation method [11,27], i.e., .

Similarly, the parameter projection can be based on associated covariance matrices. A transformation aligning the parameters along their principal axes, resulting from an SVD of such a parameter covariance matrix [11,46,47]:

yields truncatable projections given by the singular vectors, with partitioning of and based on the singular values in .

3. Empirical Gramians

Classically, the controllability, observability and cross Gramians are computed for linear systems by solving (linear) matrix equations. The empirical Gramians are a data-driven extension to the classic system Gramians, and do not depend on the linear system structure. Computing system Gramians empirically by trajectory simulations was already motivated in [4], but systematically introduced in [2]. The central idea behind the empirical Gramians is the averaging over local Gramians for any varying quantity, such as inputs, initial states, parameters or time-dependent components around an operating point [48]. This approach is closely related to the concept of local controllability and local observability for nonlinear systems [49].

In the following, first the empirical Gramians for state-space system input-output coherence are summarized, then the empirical Gramians for parameter-space identifiability and combined state and parameter evaluation are described.

3.1. State-Space Empirical Gramians

Gramian-based controllability and observability analysis originates in linear system theory [50], which investigates linear (time-invariant) systems,

An obvious approach for nonlinear systems is a linearization at a steady-state [51], but this may obfuscate the original transient dynamics [52,53]. Alternatively, the nonlinear balancing for control affine systems from [54], based on controllability and observability energy functions, could be used. Yet practically, the associated nonlinear system Gramians require solutions to a Hamilton-Jacobi partial differential equation (nonlinear controllability Gramian) and a nonlinear Lyapunov equation (nonlinear observability Gramian) or a nonlinear Sylvester equation (nonlinear cross Gramian), which is currently not feasible for large-scale systems. A compromise between linearized and nonlinear Gramians are empirical Gramians [2,7].

Empirical Gramians are computed by systematically averaging system Gramians obtained from numerical simulations over locations in an operating region near a steady-state. An operating region is defined in this context by sets of perturbations for inputs/controls and the steady-state. Originally in [2], these perturbation sets are constructed by the Cartesian product of sets of directions (standard unit vectors), rotations (orthogonal matrices) and scales (positive scalars) for the input and steady-state respectively. In the empirical Gramian framework, the rotations are limited to the set of the unit matrix and negative unit matrix, as suggested in [2]. This constraint on the rotation entails many numerical simplifications and reduces the perturbation sets to directions (standard unit vectors) and scales (non-zero scalars):

Yet, only single input and state components can be perturbed at a time in this manner, which is often practically sufficient.

The original empirical Gramians use a centering of the trajectories around the temporal average and solely use impulse input type controls [2]. The related empirical covariance matrices center the trajectories around a steady-state and allow arbitrary step functions , , , [35,55]. The empirical Gramian framework allows to compute either as well as further centering variants (Section 5.4). In the following, empirical Gramians and empirical covariance matrices will be jointly referred to by the term “empirical Gramian”.

3.1.1. Empirical Controllability Gramian

The (linear) controllability (The term controllability is used instead of reachability as in [2,4,56].) Gramian quantifies how well the state of an underlying linear system is driven by the input and is defined as:

The empirical variant is given by the following definition based on [2,35].

Definition 1

(Empirical Controllability Gramian). Given non-empty sets and , the empirical controllability Gramian is defined as:

with the state trajectories for the input configurations , and the offsets , .

For an asymptotically stable linear system, delta impulse input and an arithmetic average over time as offset , the empirical controllability Gramian is equal to the controllability Gramian [2]. The numerical computation of this empirical Gramian requires trajectories and is related to POD.

3.1.2. Empirical Observability Gramian

The (linear) observability Gramian quantifies how well a change in the state of an underlying linear system is visible in the outputs and is defined as:

The empirical variant is given by the following definition based on [2,35].

Definition 2

(Empirical Observability Gramian). Given non-empty sets and , the empirical observability Gramian is defined as:

with the output trajectories for the initial state configurations , , and the offsets , , .

For an asymptotically stable linear system, no input and an arithmetic average over time as offset , the empirical observability Gramian is equal to the observability Gramian [2]. The numerical computation of this empirical Gramians requires trajectories.

3.1.3. Empirical Linear Cross Gramian

The (linear) cross Gramian [56,57] quantifies the controllability and observability, and thus minimality, of an underlying square, , linear system and is defined as:

Augmenting the linear system’s dynamical system component with its transposed system (The transposed system is equivalent to the negative adjoint system), induces an associated controllability Gramian of which the upper right block corresponds to the cross Gramian [58,59]:

The empirical variant restricted to the upper right block of this augmented controllability Gramian (4) is given by the following definition based on [60].

Definition 3

(Empirical Linear Cross Gramian). Given non-empty sets and , the empirical linear cross Gramian is defined as:

with the state trajectories and adjoint state trajectories for the input configurations , and the offsets , , .

For an asymptotically stable linear system, delta impulse input and an arithmetic average over time as offset , , the empirical linear cross Gramian is equal to the cross Gramian due to the result of the empirical controllability Gramian. The numerical computation of this empirical Gramian requires trajectories and is related to balanced POD [61].

3.1.4. Empirical Cross Gramian

Analytically, a cross Gramian for (control-affine) nonlinear gradient systems was developed in [62,63], yet the computation of this nonlinear cross Gramian (This is also called cross operator or cross map in this context) is infeasible for large systems. For (nonlinear) Single-Input-Single-Output (SISO) systems, the empirical variant of the cross Gramian is developed in [12], for (nonlinear) Multiple-Input-Multiple-Output (MIMO) systems in [11].

Definition 4

(Empirical Cross Gramian). Given non-empty sets , , and , the empirical cross Gramian is defined as:

with the state trajectories for the input configurations , the output trajectories for the initial state configurations , and the offsets , , , .

For an asymptotically stable linear system, delta impulse input and an arithmetic averages over time as offsets , , the empirical cross Gramian is equal to the cross Gramian [11,12]. The numerical computation of this empirical Gramian requires trajectories.

3.1.5. Empirical Non-Symmetric Cross Gramians

The (empirical) cross Gramian is only computable for square systems, and verifiably useful for symmetric or gradient systems [11,44,57]. In [39] an extension to the classic cross Gramian is proposed. Based on results from decentralized control [16], a non-symmetric cross Gramian is computable for non-square systems and thus non-symmetric systems. Given a partitioning of , and , from the linear system (3), the (linear) non-symmetric cross Gramian is defined as:

For this cross Gramian to the associated “average” SISO system, an empirical variant is then given by:

Definition 5

(Empirical Non-Symmetric Cross Gramian). Given non-empty sets , , and , the empirical non-symmetric cross Gramian is defined as:

with the state trajectories for the input configurations , the output trajectories for the initial state configurations , and the offsets , , , .

Corollary 1.

For an asymptotically stable linear system, delta impulse input = δ(t) and arithmetic averages over time as offsets , , the empirical non-symmetric cross Gramian is equal to the cross Gramian of the average SISO system.

Proof.

This is a direct consequence of [11] (Lemma 3). □

The numerical computation of the empirical non-symmetric cross Gramian requires trajectories; the same as for the empirical cross Gramian.

3.2. Parameter-Space Empirical Gramians

To transfer the idea of Gramian-based state-space reduction to parameter-space reduction, the concepts of controllability and observability are extended to the parameter-space. This leads to controllability-based parameter identification and observability-based parameter identification. Note that the observability-based parameter identification (and parameter reduction) is related to the active subspaces method [64].

3.2.1. Empirical Sensitivity Gramian

Controllability-based parameter identification can be realized using an approach from [46], which treats the parameters as (additional) constant inputs. The controllability Gramian for a linear system with linear parametrization (constant source or load) can be decomposed additively based on linear superposition:

Similar to [12], the trace of the parameter-controllability Gramians embodies a measure of (average) sensitivity, and holds approximately for systems with nonlinear parametrization [38].

Definition 6

(Empirical Sensitivity Gramian). The empirical sensitivity Gramian is given by a diagonal matrix with entries corresponding to the traces of the parameter-controllability Gramians,

The sum over all controllability Gramians can also be used for robust model reduction [5]. Similarly, treating the parameters as inputs, the cross Gramian’s trace can be utilized as a sensitivity measure [13].

3.2.2. Empirical Identifiability Gramian

For an observability-based parameter identification, the parameters are interpreted as additional states of the system [14,19]. This approach leads to the augmented system, in which the system’s state x is appended by the parameter and, since the parameters are (assumed) constant over time, the components of the vector-field associated to the parameter-states are zero:

leaving the parameter-state’s initial value for testing parameter perturbations. The observability Gramian to this augmented system, the augmented observability Gramian, has the block structure:

with the state-space observability Gramian , the parameter-space observability Gramian and the mixed state and parameter block . To isolate the parameter identifiability information, the state-space block is eliminated.

Definition 7

(Empirical Identifiability Gramian). The empirical identifiability Gramian is given by the Schur-complement of the empirical augmented observability Gramian for the lower right block:

Often, it is sufficient to approximate the empirical identifiability Gramian by the lower right block of the augmented observability Gramian :

Apart from the relation of the identifiability Gramian to the Fischer information matrix [19], also the connection of the (parameter) observability Gramian to the (parameter) Hessian matrix [65] is noted here.

3.2.3. Empirical Cross-Identifiability Gramian

If a system is square, the augmented system (5) remains square and for linear systems symmetry is also preserved. Hence, a cross Gramian of the augmented system is computable [11].

Definition 8

(Empirical Joint Gramian).

The empirical joint Gramian is given by the empirical cross Gramian of the augmented system.

The joint Gramian is an augmented cross Gramian and has a similar block structure as the augmented observability Gramian,

but due to the uncontrollable parameter-states, the lower (parameter-related) blocks are identically zero. Nonetheless, the observability-based parameter identifiability information can be extracted from the mixed block .

Definition 9

(Empirical Cross-Identifiability Gramian).

The empirical cross-identifiability Gramian is given by the Schur-complement of the symmetric part of the empirical joint Gramian for the lower right block:

Thus, the empirical joint Gramian enables the combined state and parameter analysis by the empirical cross Gramian and empirical cross-identifiability Gramian from a single matrix. Note that the empirical joint Gramian may also be computed based on the non-symmetric cross Gramian. Additionally, a parameter Gramian, such as or the , could be balanced with the loadability Gramian from [15].

3.3. Notes on Empirical Gramians

It should be noted that empirical Gramians only yield workable results if the operating region of the system is restricted to a single steady-state. If the trajectories used for the assembly of empirical Gramians are periodic or do not attain this steady-state, their performance is similar to time-limited balancing methods, see for example [66] and references therein. Overall, the quality of empirical-gramian-based methods depend largely on the quality of the measured or simulated (output) trajectory data. Yet, due to the data-driven nature of the empirical Gramians, even unstable systems or systems with inhomogeneous initial conditions are admissible.

The dominant computational cost in the assembly of empirical Gramians is the computation of trajectories. This is especially obvious from the observability-based empirical Gramians, which appear to perturb every component of the steady or initial state. Yet in practice, the number of trajectories can often be reduced based on knowledge about the underlying model or operating region. Furthermore, the trajectories are computable in parallel.

4. Implementation Details

This section states concisely the theoretical, practical and technical design decisions in the implementation of the empirical Gramian framework–emgr [3], as well as describes the unified and configurable approach to empirical Gramian computation.

4.1. Design Principles

emgr is realized using the high-level, interpreted Matlab programming language, which is chosen due to its widespread use, long-term compatibility and mathematical expressiveness. This enables first, a wide circulation due to compatibility with Mathworks Matlab® [67], and second, the usage of the open-source variant Gnu Octave [68]. Generally, the implementation of emgr follows the procedural programming paradigm, includes various functional programming techniques and avoids object-oriented programming.

Since empirical Gramians are computable by mere basic linear algebra operations, Matlab code can be evaluated efficiently by vectorization, which transfers computationally intensive tasks as bulk operations to the Basic Linear Algebra Subroutines (BLAS) back-end.

Overall, emgr is a reusable open-source toolbox, and encompasses less than 500 LoC (Lines of Code) in a single file and a cyclomatic complexity of <100 of the main function. Apart from a Matlab interpreter, emgr has no further dependencies, such as on other toolboxes. The source code is engineered with regard to the best practice guides [69] (coding style) and [70] (performance).

Furthermore, two variants of emgr are maintained: First, emgr_oct (See http://gramian.de/emgroct.m), uses Octave-specific language extension: default arguments and assignment operations, second, emgr_lgc (See http://gramian.de/emgrlgc.m), enables compatibility to Matlab versions before 2016b not supporting implicit expansion, also known as automatic broadcasting.

4.2. Parallelization

Apart from vectorization allowing the implicit use of single-instruction-multiple-data (SIMD) functionality for vectorized block operations, also multi-core parallelization is used to maximize use of available compute resources.

4.2.1. Shared Memory Parallelization

For shared memory systems with uniform memory access (UMA), two types of parallelization are utilized. First, an implicit parallelization may be applied by the interpreter for an additional acceleration of block operations. Second, explicit parallelization is available for the computation of different state and output trajectories, using parallel for-loops parfor (See http://mathworks.com/help/matlab/ref/parfor.html), but deactivated by default to guarantee replicable results, as the use of parfor does not guarantee a unique order of execution.

4.2.2. Heterogeneous Parallelization

The actual empirical Gramians result from inner products. In case of the default Euclidean inner product, this amounts to a dense matrix-matrix-multiplication (Implemented as Generalized Matrix Multiplication (GEMM) ) by BLAS), which can be efficiently computed by General Purpose Graphics Processing Units (GPGPUs). In the case of an integrated GPU with zero-copy shared memory architecture, such as uniform memory model (UMM) or heterogeneous unified memory access (hUMA) [71], is used, the assembly of the Gramian matrices can be performed with practically no overhead, since the trajectories, which are usually computed and stored in CPU memory space, do not need to be copied between CPU and GPU memory spaces. The GPU can directly operate on the shared memory.

4.2.3. Distributed Memory Parallelization

A disadvantage of empirical Gramian matrices is the quadratically growing memory requirements with respect to the state-space (and parameter-space) dimension, since for an N dimensional system, a (dense) empirical Gramian of dimension is computed. To combat this shortcoming, a specific property of the empirical cross Gramian can be exploited: The columns of the empirical cross Gramian, and thus the empirical joint Gramian, may be computed separately,

hence this distributed empirical cross Gramian [72] (Section 4.2) is computable in parallel and communication-free on a distributed memory computer system, or sequentially in a memory-economical manner as a low-rank empirical cross Gramian [73] on a unified memory computer system. This column-wise computability translates also to the empirical joint Gramian and the non-symmetric variants of the empirical cross and joint Gramian.

Based on this partitioning, a low-rank representation can be obtained in a memory-bound or compute-bound setting together with the hierarchical approximate proper orthogonal decomposition (HAPOD) [72]. This POD variant allows to directly compute a Galerkin projection from an arbitrary column-wise partitioning of the empirical cross Gramian.

5. Interface

emgr provides a uniform function call for the computation of all empirical Gramian types. The subsequent signature documentation is based on [47] and http://gramian.de (the current instance (2018-06) of http://gramian.de is preserved at https://archive.li/p0cIO.) Minimally, the emgr function requires five mandatory arguments (single letter):

additionally eight optional arguments (double letter) allow a usage by:

furthermore, a single argument variant may also be used,

which returns the current version number.

emgr(f,g,s,t,w)

emgr(f,g,s,t,w,pr,nf,ut,us,xs,um,xm,dp)

emgr(’version’)

5.1. Mandatory Arguments

For the minimal usage, the following five arguments are required:

- f

- handle to a function with the signature xdot = f(x,u,p,t) representing the system’s vector-field and expecting the arguments: current state x, current input u, (current) parameter p and current time t.

- g

- handle to a function with the signature y = g(x,u,p,t) representing the system’s output functional and expecting the arguments: current state x, current input u, (current) parameter p and current time t.If g = 1, the identity output functional is assumed.

- s

- three component vector s = [M,N,Q] setting the dimensions of the input , state and output .

- t

- two component vector t = [h,T] specifying the time-step width h and time horizon T.

- w

- character selecting the empirical Gramian type; for details see Section 5.2.

5.2. Features

The admissible characters to select the empirical Gramian type are as follows:

- ′c′

- Empirical controllability Gramian (see Section 3.1.1),emgr returns a matrix:

- empirical controllability Gramian matrix .

- ′o′

- Empirical observability Gramian (see Section 3.1.2),emgr returns a matrix:

- empirical observability Gramian matrix .

- ′x′

- Empirical cross Gramian (see Section 3.1.4),emgr returns a matrix:

- empirical cross Gramian matrix .

- ′y′

- Empirical linear cross Gramian (see Section 3.1.3),emgr returns a matrix:

- empirical linear cross Gramian matrix .

- ′s′

- Empirical sensitivity Gramian (see Section 3.2.1),emgr returns a cell array. holding:

- empirical controllability Gramian matrix ,

- empirical sensitivity Gramian diagonal .

- ′i′

- Empirical identifiability Gramian (see Section 3.2.2),emgr returns a cell array holding:

- empirical observability Gramian matrix ,

- empirical identifiability Gramian matrix .

- ′j′

- Empirical joint Gramian (see Section 3.2.3),emgr returns a cell array holding:

- empirical cross Gramian matrix ,

- empirical cross-identifiability Gramian matrix .

A cell array is a generic container (array) in the Matlab language.

5.2.1. Non-Symmetric Cross Gramian

The non-symmetric cross Gramian [39] (see Section 3.1.5) is a special variant of the cross Gramian for non-square and non-symmetric MIMO systems, which reduces to the regular cross Gramian for SISO systems. Since the computation is similar to the empirical cross Gramian and a non-symmetric variant of the empirical joint Gramian shall be computable too, instead of a Gramian type selected through the argument w, it is selectable via an option flag: Non-symmetric variants may be computed for the empirical cross Gramian (w = ′x′), empirical linear cross Gramian (w = ′y′) or empirical joint Gramian (w = ′j′) by activating the flag nf(7) = 1.

5.2.2. Parametric Systems

Parametric model order reduction is accomplished by averaging an empirical Gramian over a discretized parameter-space [6]. To this end the parameter sampling points, arranged as columns of a matrix, can be supplied via the optional argument pr.

5.2.3. Time-Varying Systems

Since empirical Gramians are purely based on trajectory data, they are also computable for time varying systems as described in [74]. The empirical Gramian framework can compute averaged Gramians for time varying systems [75], which are time independent matrices.

5.3. Optional Arguments

The eight optional arguments allow a detailed definition of the operating region and configuration of the computation.

- pr system parameters (Default value: 0)

- vector a column vector holding the parameter components,

- matrix a set of parameters, each column holding one parameter.

- nf twelve component vector encoding the option flags, for details see Section 5.4.

- ut input function (Default value: 1)

- handle function handle expecting a signature u_t = u(t),

- 0 pseudo-random binary input,

- 1 delta impulse input,

- ∞ decreasing frequency exponential chirp.

- us steady-state input (Default value: 0)

- scalar set all M steady-state input components to argument,

- vector set steady-state input to argument of expected dimension .

- xs steady-state (Default value: 0)

- scalar set all N steady-state components to argument,

- vector set steady-state to argument of expected dimension .

- um input scales (Default value: 1)

- scalar set all M maximum input scales to argument,

- vector set maximum input scales to argument of expected dimension ,

- matrix set scales to argument with M rows; used as is.

- xm initial state scales (Default value: 1)

- scalar set all N maximum initial state scales to argument,

- vector set maximum steady-state scales to argument of expected dimension ,

- matrix set scales to argument with N rows; used as is.

- dp inner product interface via a handle to a function with the signature z = dp(x,y) defining the dot product for the Gramian matrix computation (Default value: []).

Inner Product Interface

The empirical Gramian matrices are computed by inner products of trajectory data. A custom inner products for the assembly of the empirical Gramians matrix, can be set by the argument dp, which expects a handle to a function with the signature:

and the arguments:

z = dp(x,y)

- x

- matrix of dimension ,

- y

- matrix of dimension for .

The return value z is typically an matrix, but scalar or vector-valued z are admissible, too. By default, the Euclidean inner product, the standard matrix multiplication is used:

dp = @(x,y) mtimes(x,y).

Other choices are for example: covariance-weighted products for Gaussian-noise-driven systems yielding system covariances [76], reproducing kernel Hilbert spaces (RKHS) [77], such as the polynomial, Gaussian or Sigmoid kernels [78], or energy-stable inner products [79]. Also, weighted Gramians [36] and time-weighted system Gramians [80] can be computed using this interface, i.e.,

for a monomial of order k time-domain weighted inner product. Furthermore, the inner product interface may be used to directly compute the trace of an empirical Gramian by using a pseudo-kernel:

which exploits a property for computing the trace of a matrix product . This interface may also be used to compute only the empirical Gramian’s diagonal:

for input-output importance [81] or input-output coherence [57] (Ch. 13). Lastly, it is noted that offloading matrix multiplications to an accelerator such as a GPGPU, motivated in Section 4.2.2, can also be achieved using this interface.

dp = @(x,y) mtimes([0:h:T].k.*x,y)

dp = @(x,y) sum(sum(x.*y’))

dp = @(x,y) sum(x.*y’,2)

5.4. Option Flags

The vector nf contains 12 components, each representing an option with the default value zero and the following functionality:

- nf(1)

- Time series centering:

- = 0

- No centering,

- = 1

- Steady-state (for empirical covariance matrices),

- = 2

- Final state,

- = 3

- Arithmetic average over time (for empirical Gramians),

- = 4

- Root-mean-square over time,

- = 5

- Mid-range over time.

- nf(2)

- Input scale sequence:

- = 0

- Single scale: um ← um,

- = 1

- Linear scale subdivision: um ← um * [0.25, 0.5, 0.75, 1.0],

- = 2

- Geometric scale subdivision: um ← um * [0.125, 0.25, 0.5, 1.0],

- = 3

- Logarithmic scale subdivision: um ← um * [0.001, 0.01, 0.1, 1.0],

- = 4

- Sparse scale subdivision: um ← um * [0.01, 0.5, 0.99, 1.0].

- nf(3)

- Initial state scale sequence:

- = 0

- Single scale: xm ← xm,

- = 1

- Linear scale subdivision: xm ← xm * [0.25, 0.5, 0.75, 1.0],

- = 2

- Geometric scale subdivision: xm ← xm * [0.125, 0.25, 0.5, 1.0],

- = 3

- Logarithmic scale subdivision: xm ← xm * [0.001, 0.01, 0.1, 1.0],

- = 4

- Sparse scale subdivision: xm ← xm * [0.01, 0.5, 0.99, 1.0].

- nf(4)

- Input directions:

- = 0

- Positive and negative: um ← [-um, um],

- = 1

- Only positive: um ← um.

- nf(5)

- Initial state directions:

- = 0

- Positive and negative: xm ← [-xm, xm],

- = 1

- Only positive: xm ← xm.

- nf(6)

- Normalizing:

- = 0

- No normalization,

- = 1

- Scale with Gramian diagonal (see [82]),

- = 2

- Scale with steady-state (see [34]).

- nf(7)

- Non-Symmetric Cross Gramian, only , , :

- = 0

- Regular cross Gramian,

- = 1

- Non-symmetric cross Gramian.

- nf(8)

- Extra input for state and parameter perturbation trajectories, only , , , , :

- = 0

- No extra input,

- = 1

- Apply extra input (see [83]).

- nf(9)

- Center parameter scales, only , , :

- = 0

- No centering,

- = 1

- Center around arithmetic mean,

- = 2

- Center around logarithmic mean.

- nf(10)

- Parameter Gramian variant, only , , :

- = 0

- Average input-to-state (), detailed Schur-complement (, ),

- = 1

- Average input-to-output (), approximate Schur-complement (, ).

- nf(11)

- Empirical cross Gramian partition width, only , :

- = 0

- Full cross Gramian computation, no partitioning.

- < N

- Maximum partition size in terms of cross Gramian columns.

- nf(12)

- Partitioned empirical cross Gramian running index, only , :

- = 0

- No partitioning.

- > 0

- Index of the set of cross Gramian columns to be computed.

5.4.1. Schur-Complement

The observability-based parameter Gramians, the empirical identifiability Gramian and the empirical cross-identifiability Gramian , utilize an inversion as part of a (approximated) Schur-complement. Instead of using a Schur-complement solver or the pseudo-inverse, an approximate inverse with computational complexity based on [84] is utilized,

with the diagonal matrix D, and the matrix of off-diagonal elements . This approximate inverse is used by default for and .

5.4.2. Partitioned Computation

The partitioned empirical cross Gramian (Section 4.2.3) can be configured by the option flags nf(11) and nf(12), with nf(11) defining the maximum number of columns per partition, and nf(12) setting the running index of the current partition. Together with a partitioned singular value decomposition, such as the HAPOD [72], an empirical-cross-Gramian-based Galerkin projection is computable with minimal communication in parallel on a distributed memory system, or sequentially on a shared memory system [73].

5.5. Solver Configuration

To provide a problem-specific integrator to generate the state and output trajectories, a global variable named ODE is available, and expects a handle to a function with the signature:

comprising the arguments:

y = ODE(f,g,t,x0,u,p)

- f

- handle to a function with the signature xdot = f(x,u,p,t) representing the system’s vector-field and expecting the arguments: current state x, current input u, (current) parameter p and current time t.

- g

- handle to a function with the signature y = g(x,u,p,t) representing the system’s output functional and expecting the arguments: the current state x, current input u, (current) parameter p and current time t.

- t

- two component vector t = [h,T] specifying the time-step width h and time horizon T.

- x0

- column vector of dimension N setting the initial condition.

- u

- handle to a function with the signature u_t = u(t).

- p

- column vector of dimension P holding the (current) parameter.

The solver is expected to return a discrete trajectory matrix of dimension .

As a default solver for (nonlinear) initial value problems, the optimal second-order strong stability preserving (SSP) explicit Runge-Kutta method [85] is included in emgr. This single-step integrator is implemented in a low-storage variant, and the stability of this method can be increased by additional stages, which is configurable by a global variable named STAGES. The default number of stages is STAGES , yielding the SSP32 method.

5.6. Sample Usage

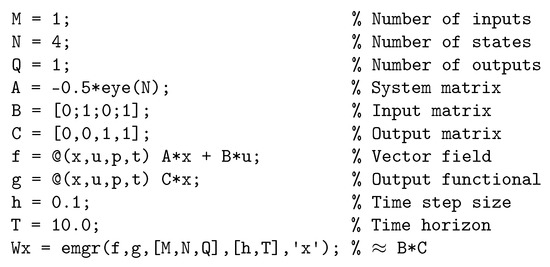

To illustrate the usage of emgr, the Matlab code for the computation of the empirical cross Gramian of a small linear system is presented in Figure 1. For demonstration purposes, this system has one uncontrollable and unobservable, one controllable and unobservable, one uncontrollable and observable, and one controllable and observable state:

Figure 1.

Sample code for the computation of the empirical cross Gramian of a non-minimal fourth order system, see Section 5.6.

The cross Gramian computes as:

which is approximately computed empirically following Section 3.1.4 in Figure 1.

6. Numerical Examples

In this section, empirical-Gramian-based model reduction techniques are demonstrated for three test systems using [68]; first, for a linear state-space symmetric MIMO system, second, for a hyperbolic SISO system, and third for a nonlinear SIMO system. All numerical tests are performed using Octave 4.4 [86].

6.1. Linear Verification

The first example is a linear test system of the form (3) and generated using the inverse Lyapunov procedure [87], in a variant that enforces state-space symmetric systems [88]. For state-space symmetric systems, , , all system Gramians are symmetric and equal [89]. The system is configured to have states and inputs and outputs. For the computation of the reduced order model, the empirical linear cross Gramian with an impulse input and a zero initial state is used, while for the trajectory simulation the default SSP32 (Section 5.5) integrator is utilized.

To quantify the quality of the resulting reduced order models, the error between the original full order model output and the reduced order model’s output is compared in the time-domain -norm,

In addition, the balanced truncation upper bound is assessed [41]:

for a reduced model of order n. Instead of impulse input, zero-centered, unit-variance Gaussian noise is used as input time series for the evaluation.

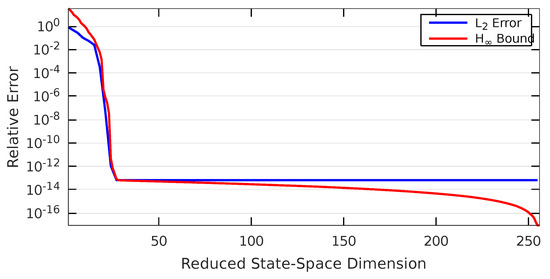

Figure 2 shows the reduced order model’s relative -norm model reduction error, as well as the upper bound for increasing reduced orders. The error evolves correctly tightly below the bound, until the numerical accuracy (double-precision floating point arithmetic) is reached.

Figure 2.

Model reduction error for the linear test system, see Section 6.1.

6.2. Hyperbolic Evaluation

The second numerical example is given by a one-dimensional transport equation. An input-output system is constructed by selecting the left boundary as the input and the right boundary as the output:

while the transport velocity is treated as a parameter. This partial differential equation system is spatially discretized by a first-order finite-difference upwind scheme, yielding a SISO ordinary differential equation system:

with . For this example, a spatial resolution of is chosen, hence , and . Since the system matrix is non-normal, using techniques such as POD may lead to unstable reduced order models. Thus, here a cross-Gramian-based balancing technique is used, guaranteeing stability of the reduced model. For training, impulse responses for the extremal velocities are used; for testing, a Gauss bell input is utilized over 10 uniformly random velocities, both utilizing the default integrator. The reduced order model quality is evaluated by the parametric norm [47]:

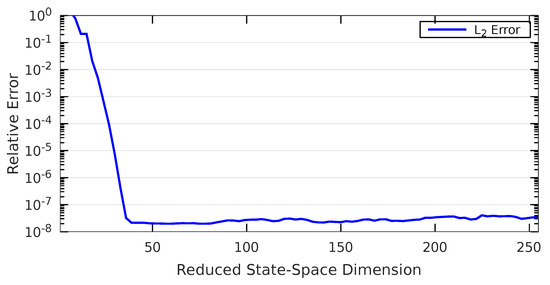

Even though the system is hyperbolic, a steep decay in error is obtained (Figure 3). Yet, due to the hyperbolicity and the parameter-dependence of the reduced order model, a lower overall numerical accuracy (≈) is achieved.

Figure 3.

Model reduction error for the hyperbolic test system, see Section 6.2.

6.3. Nonlinear Validation

The third example involves a parametric nonlinear system, based on the hyperbolic network model [90],

The structure of this system is similar to the linear system model (3), yet the vector-field includes a hyperbolic tangent nonlinearity, in which the parametrized activation is described by a diagonal gain matrix , . A negative Lehmer matrix (a Lehmer matrix is defined as , and is positive definite) is selected as system matrix , a vector of sequential cosine evaluations as input matrix , a binary matrix as output matrix, and parameters constrained to the interval . For this system a combined state and parameter reduction is demonstrated. To this end an empirical non-symmetric joint Gramian is computed, using again an impulse input , a zero initial state and the default integrator. The reduced order model quality is evaluated for the same input and initial state by the joint state and parameter norm , with respect to the reduced parameters for ten uniformly random samples from the admissible parameter-space.

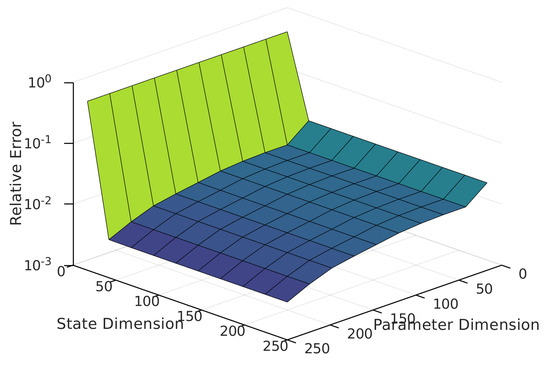

Figure 4 depicts the -norm model reduction error for increasing state- and parameter-space dimensions. The combined reduction errors decay for both: The reduced state-space and reduced parameter-space, yet faster for the state-space. As for the parametric model, the numerical accuracy is reduced due to the combined reduction.

Figure 4.

Model reduction error for the nonlinear test system, see Section 6.3.

6.4. On Hyper-Reduction

Projection-based model reduction for nonlinear systems (such as in Section 6.3) leads to a computational issue, the so-called lifting bottleneck, which results from the composition of the reconstructing projection with the original, (nonlinear) vector field f and the reducing projection :

in the reduced vector field (2). This means for nonlinear systems, the high-dimensional state has to be used in the reduced order model, as opposed to linear systems (3), where the truncated projections can be applied directly to the linear vector field components (The composition operators become matrix-matrix multiplications in the linear case) beforehand.

To reduce this computational complexity, hyper-reduction methods are utilized. Examples of hyper-reduction methods are: Gappy-POD [91], Missing Point Estimation (MPE) [92], Discrete Empirical Interpolation Method (DEIM) [93], Dynamic Mode Decomposition (DMD) [94] or numerical linearization [95]; for a comparison see [96]. These methods construct (linear) reduced representations of the nonlinearity in a data-driven manner.

emgr does not address hyper-reduction and thus it has to be applied in a separate post-processing step [97]. Yet, the trajectory data used to assemble the empirical Gramians can be recycled by interposing the hyper-reduction computation via the solver configuration (Section 5.5).

7. Concluding Remark

Empirical Gramians are a universal tool for nonlinear system and control theoretic applications with a simple, data-driven construction. The empirical Gramian framework - emgr - implements empirical Gramian computation for system input-output coherence and parameter identifiability evaluation. Possible future extensions of emgr may include Koopman Gramians [98], empirical Riccati covariance matrices [99], or empirical differential balancing [100]. Finally, further examples and applications can be found at the emgr project website: http://gramian.de.

Code Availability

The source code of the presented numerical examples can be obtained from:

and is authored by: Christian Himpe.

Funding

Supported by the German Federal Ministry for Economic Affairs and Energy, in the joint project: “MathEnergy—Mathematical Key Technologies for Evolving Energy Grids”, sub-project: Model Order Reduction (Grant number: 0324019B). Open access funding provided by Max Planck Society.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| PDE | Partial Differential Equation |

| ODE | Ordinary Differential Equation |

| MOR | Model Order Reduction |

| pMOR | parametric Model Order Reduction |

| nMOR | nonlinear Model Order Reduction |

| POD | Proper Orthogonal Decomposition |

| bPOD | balanced Proper Orthogonal Decomposition |

| SVD | Singular Value Decomposition |

| HSV | Hankel Singular Values |

| EVD | Eigenvalue Decomposition |

| SISO | Single-Input-Single-Output |

| MIMO | Multiple-Input-Multiple-Output |

| BLAS | Basic Linear Algebra System |

| LoC | Lines of Code |

| SIMD | Single Instruction Multiple Data |

| UMA | Unified Memory Access |

| GPGPU | General Purpose Graphics Processing Unit |

| GPU | Graphics Processing Unit |

| UMM | Unified Memory Model |

| hUMA | heterogeneous Unified Memory Access |

| CPU | Central Processing Unit |

| GEMM | GEneralized Matrix Multiplication |

| HAPOD | Hierarchical Approximate Proper Orthgoonal Decomposition |

| RKHS | Reproducing Kernel Hilbert Spaces |

| SSP | Strong Stability Preserving |

| SIMO | Single-Input-Multiple-Output |

| MPE | Missing Point Estimation |

| DEIM | Discrete Empirical Interpolation Method |

| DMD | Dynamic Mode Decomposition |

References

- Kalman, R.E. Mathematical description of linear dynamical systems. SIAM J. Control Optim. 1963, 1, 182–192. [Google Scholar] [CrossRef]

- Lall, S.; Marsden, J.E.; Glavaški, S. Empirical model reduction of controlled nonlinear systems. IFAC Proc. Vol. 1999, 32, 2598–2603. [Google Scholar] [CrossRef]

- Himpe, C. emgr—EMpirical GRamian Framework (Version 5.4). Available online: http://gramian.de (accessed on 26 June 2018).

- Moore, B.C. Principal component analysis in linear systems: Controllability, observability, and model reduction. IEEE Trans. Autom. Control 1981, 26, 17–32. [Google Scholar] [CrossRef]

- Sun, C.; Hahn, J. Model reduction in the presence of uncertainty in model parameters. J. Process Control 2006, 16, 645–649. [Google Scholar] [CrossRef]

- Himpe, C.; Ohlberger, M. The Empirical Cross Gramian for Parametrized Nonlinear Systems. IFAC-PapersOnLine 2015, 48, 727–728. [Google Scholar] [CrossRef]

- Hahn, J.; Edgar, T.F. Reduction of nonlinear models using balancing of empirical Gramians and Galerkin projections. In Proceedings of the 2000 American Control Conference, Chicago, IL, USA, 28–30 June 2000; Volume 4, pp. 2864–2868. [Google Scholar]

- Condon, M.; Ivanov, R. Model reduction of nonlinear systems. Compel-Int. J. Comp. Math. Electr. Electron. Eng. 2004, 23, 547–557. [Google Scholar] [CrossRef]

- Yao, S.; Deng, Y.; Yu, Z. Balanced Truncation on Empirical Gramians for Model-Order-Reduction of Non-Quasi-Static Effects in MOSFETs. In Proceedings of the 9th International Conference on Solid-State and Integrated-Circuit Technology, Beijing, China, 20–23 October 2008; pp. 309–312. [Google Scholar]

- Zhanfeng, M.; Chao, H. Structure-preserving balanced truncation for flexible spacecraft using cross Gramian. J. Beijing Univ. Aeronaut. Astronaut. 2008, 34, 1437–1440. [Google Scholar]

- Himpe, C.; Ohlberger, M. Cross-Gramian Based Combined State and Parameter Reduction for Large-Scale Control Systems. Math. Probl. Eng. 2014, 2014, 1–13. [Google Scholar] [CrossRef]

- Streif, S.; Findeisen, R.; Bullinger, E. Relating Cross Gramians and Sensitivity Analysis in Systems Biology. Theory Netw. Syst. 2006, 10, 437–442. [Google Scholar]

- Lystianingrum, V.; Hredzak, B.; Agelidis, V.G. Abnormal overheating detectability analysis based on cross Gramian for a supercapacitors string. In Proceedings of the Power and Energy Society General Meeting, Boston, MA, USA, 17–21 July 2016. [Google Scholar]

- Geffen, D.; Findeisen, R.; Schliemann, M.; Allgöwer, F.; Guay, M. Observability Based Parameter Identifiability for Biochemical Reaction Networks. In Proceedings of the 2008 American Control Conference, Seattle, WA, USA, 11–13 June 2008; pp. 2130–2135. [Google Scholar]

- Tolks, C.; Ament, C. Model Order Reduction of Glucose-Insulin Homeostasis Using Empirical Gramians and Balanced Truncation. IFAC-PapersOnline 2017, 50, 14735–14740. [Google Scholar] [CrossRef]

- Moaveni, B.; Khaki-Sedigh, A. Input-Output Pairing based on Cross-Gramian Matrix. In Proceedings of the International Joint Conference SICE-ICAS, Busan, Korea, 18–21 October 2006; pp. 2378–2380. [Google Scholar]

- Shaker, H.R.; Komareji, M. Control Configuration Selection for Multivariable Nonlinear Systems. Ind. Eng. Chem. Res. 2012, 51, 8583–8587. [Google Scholar] [CrossRef]

- Shaker, H.R.; Stoustrup, J. An interaction measure for control configuration selection for multivariable bilinear systems. Nonlinear Dyn. 2013, 72, 165–174. [Google Scholar] [CrossRef]

- Singh, A.K.; Hahn, J. Determining Optimal Sensor Locations for State and Parameter Estimation for Stable Nonlinear Systems. Ind. Eng. Chem. Res. 2005, 44, 5645–5659. [Google Scholar] [CrossRef]

- Saltik, M.B.; Özkan, L.; Weiland, S.; van den Hof, P.M.J. Sensor Configuration Problem: Application to a Membrane Separation Unit. IFAC-PapersOnLine 2016, 49, 189–194. [Google Scholar] [CrossRef]

- Summers, T.H.; Cortesi, F.L.; Lygeros, J. On Submodularity and Controllability in Complex Dynamical Networks. IEEE Trans. Control Netw. Syst. 2016, 3, 91–101. [Google Scholar] [CrossRef]

- Lawrence, D.; Myatt, J.H.; Camphouse, R.C. On Model Reduction via Empirical Balanced Truncation. In Proceedings of the American Control Conference, Portland, OR, USA, 8–10 June 2005; Volume 5, pp. 3139–3144. [Google Scholar]

- Hahn, J.; Kruger, U.; Edgar, T.F. Application of Model Reduction for Model Predictive Control. IFAC Proc. Vol. 2002, 15, 393–398. [Google Scholar] [CrossRef]

- Hahn, J.; Edgar, T.F. A Gramian Based Approach to Nonlinearity Quantification and Model Classification. Ind. Eng. Chem. Res. 2001, 40, 5724–5731. [Google Scholar] [CrossRef]

- Jiang, M.; Wu, J.; Jiang, L.; Li, X. A Gramians Based Method for Nonlinearity Quantification of Spatio-Temporal Systems. In Advanced Science and Technology Letters; SERSC: Haikou, China, 2016; Volume 121, pp. 38–42. [Google Scholar]

- Fernando, K.V.; Nicholson, H. On the Cauchy Index of Linear Systems. IEEE Trans. Autom. Control 1983, 28, 222–224. [Google Scholar] [CrossRef]

- Fortuna, L.; Fransca, M. Optimal and Robust Control: Advanced Topics with MATLAB; CRC Press: Boca Raton, FL, USA, 2012. [Google Scholar]

- Fu, J.; Zhong, C.; Ding, Y.; Zhou, J.; Zhong, C. An Information Theoretic Approach to Model Reduction based on Frequency-domain Cross-Gramian Information. In Proceedings of the 8th World Congress on Intelligent Control and Automation, Jinan, China, 7–9 July 2010; pp. 3679–3683. [Google Scholar]

- Halvarsson, B.; Castaño, M.; Birk, W. Uncertainty Bounds for Gramian-Based Interaction Measures. In Proceedings of the 14th WSEAS international conference on Systems: part of the 14th WSEAS CSCC multiconference, Corfu Island, Greece, 22–24 July 2010; Volume 2, pp. 393–398. [Google Scholar]

- Hrishikeshavan, V.; Humbert, J.S.; Chopra, I. Gramian Analysis of a Shrouded Rotor Micro Air Vehicle in Hover. J. Guid. Control Dyn. 2014, 37, 1684–1690. [Google Scholar] [CrossRef]

- Gugercin, S.; Antoulas, A.C.; Beattie, C. H2 Model Reduction for Large-Scale Linear Dynamical Systems. SIAM J. Matrix Anal. Appl. 2008, 30, 609–638. [Google Scholar] [CrossRef]

- Willcox, K.; Peraire, J. Balanced Model Reduction via the Proper Orthogonal Decomposition. AIAA J. 2002, 40, 2323–2330. [Google Scholar] [CrossRef]

- Rowley, C.W. Model reduction for fluids, using balanced proper orthogonal decomposition. Int. J. Bifurcat. Chaos 2005, 15, 997–1013. [Google Scholar] [CrossRef]

- Sun, C.; Hahn, J. Nonlinear Model Reduction Routines for MATLAB; Technical Report; Rensselaer Polytechnic Institute: Troy, NY, USA, 2006. [Google Scholar]

- Hahn, J.; Edgar, T.F. Balancing Approach to Minimal Realization and Model Reduction of Stable Nonlinear Systems. Ind. Eng. Chem. Res. 2002, 41, 2204–2212. [Google Scholar] [CrossRef]

- Choroszucha, R.B.; Sun, J.; Butts, K. Nonlinear Model Order Reduction for Predictive Control of the Diesel Engine Airpath. In Proceedings of the American Control Conference, Boston, MA, USA, 6–8 July 2016; pp. 5081–5086. [Google Scholar]

- Krener, A.; Ide, K. Measures of Unobservability. In Proceedings of the 48th IEEE Conference on Decision and Control, 2009 held jointly with the 2009 28th Chinese Control Conference, Shanghai, China, 16–18 December 2009; pp. 6401–6406. [Google Scholar]

- Himpe, C.; Ohlberger, M. A Unified Software Framework for Empirical Gramians. J. Math. 2013, 2013, 1–6. [Google Scholar] [CrossRef]

- Himpe, C.; Ohlberger, M. A note on the cross Gramian for non-symmetric systems. Syst. Sci. Control Eng. 2016, 4, 199–208. [Google Scholar] [CrossRef]

- Garcia, J.S.; Basilio, J.C. Computation of reduced-order models of multivariable systems by balanced truncation. Int. J. Syst. Sci. 2002, 33, 847–854. [Google Scholar] [CrossRef]

- Antoulas, A.C. Approximation of Large-Scale Dynamical Systems Volume 6 Advances in Design and Control; SIAM Publications: Philadelphia, PA, USA, 2005. [Google Scholar]

- Aldhaheri, R.W. Model order reduction via real Schur-form decomposition. Int. J. Control 1991, 53, 709–716. [Google Scholar] [CrossRef]

- Baur, U.; Benner, P. Gramian-Based Model Reduction for Data-Sparse Systems. SIAM J. Sci. Comput. 2008, 31, 776–798. [Google Scholar] [CrossRef]

- Sorensen, D.C.; Antoulas, A.C. The Sylvester equation and approximate balanced reduction. Numer. Linear Algebra Appl. 2002, 671–700. [Google Scholar] [CrossRef]

- Hahn, J.; Edgar, T.F. An improved method for nonlinear model reduction using balancing of empirical Gramians. Comput. Chem. Eng. 2002, 26, 1379–1397. [Google Scholar] [CrossRef]

- Sun, C.; Hahn, J. Parameter reduction for stable dynamical systems based on Hankel singular values and sensitivity analysis. Chem. Eng. Sci. 2006, 61, 5393–5403. [Google Scholar] [CrossRef]

- Himpe, C. Combined State and Parameter Reduction for Nonlinear Systems with an Application in Neuroscience. Ph.D. Thesis, Westfälische Wilhelms-Universität Münster, Münster, Germany, 2017. [Google Scholar]

- Keil, A.; Gouz;, J.L. Model Reduction of Modular Systems Using Balancing Methods; Technical Report; Technische Universität München: München, Germany, 2003. [Google Scholar]

- Stigter, J.D.; van Willigenburg, L.G.; Molenaar, J. An Efficient Method to Assess Local Controllability and Observability for Non-Linear Systems. IFAC-PapersOnLine 2018, 51, 535–540. [Google Scholar] [CrossRef]

- Hespanha, J. Linear Systems Theory; Princeton University Press: Princeton, NJ, USA, 2009. [Google Scholar]

- Ma, X.; De Abreu-Garcia, J.A. On the Computation of Reduced Order Models of Nonlinear Systems using Balancing Technique. In Proceedings of the 27th IEEE Conference on Decision and Control, Austin, TX, USA, 7–9 December 1988; Volume 2, pp. 1165–1166. [Google Scholar]

- Singh, A.K.; Hahn, J. On the Use of Empirical Gramians for Controllability and Observability Analysis. In Proceedings of the 2005 American Control Conference, Portland, OR, USA, 8–10 June 2005; Volume 2005, pp. 140–141. [Google Scholar]

- Dones, I.; Skogestad, S.; Preisig, H.A. Application of Balanced Truncation to Nonlinear Systems. Ind. Eng. Chem. Res. 2011, 50, 10093–10101. [Google Scholar] [CrossRef]

- Scherpen, J.M.A. Balancing for nonlinear systems. Syst. Control Lett. 1993, 21, 143–153. [Google Scholar] [CrossRef]

- Hahn, J.; Edgar, T.F.; Marquardt, W. Controllability and observability covariance matrices for the analysis and order reduction of stable nonlinear systems. J. Process Control 2003, 13, 115–127. [Google Scholar] [CrossRef]

- Fernando, K.V.; Nicholson, H. On the Structure of Balanced and Other Principal Representations of SISO Systems. IEEE Trans. Autom. Control 1983, 28, 228–231. [Google Scholar] [CrossRef]

- Fernando, K.V. Covariance and Gramian Matrices in Control and Systems Theory. Ph.D. Thesis, University of Sheffield, Sheffield, UK, 1982. [Google Scholar]

- Fernando, K.V.; Nicholson, H. On the Cross-Gramian for Symmetric MIMO Systems. IEEE Trans. Circuits Syst. 1985, 32, 487–489. [Google Scholar] [CrossRef]

- Shaker, H.R. Generalized Cross-Gramian for Linear Systems. In Proceedings of the 7th IEEE Conference on Industrial Electronics and Applications (ICIEA), Singapore, 18–20 July 2012; pp. 749–751. [Google Scholar]

- Baur, U.; Benner, P.; Haasdonk, B.; Himpe, C.; Martini, I.; Ohlberger, M. Comparison of Methods for Parametric Model Order Reduction of Time-Dependent Problems. In Model Reduction and Approximation: Theory and Algorithms; Benner, P., Cohen, A., Ohlberger, M., Willcox, K., Eds.; SIAM: Philadelphia, PA, USA, 2017; pp. 377–407. [Google Scholar]

- Barbagallo, A.; De Felice, V.F.; Nagarajan, K.K. Reduced Order Modelling of a Couette Flow Using Balanced Proper Orthogonal Decomposition. In Proceedings of the 2nd Young ERCOFTAC Workshop, Montestigliano, Italy, 10 March 2008. [Google Scholar]

- Ionescu, T.C.; Fujimoto, K.; Scherpen, J.M.A. Singular Value Analysis of Nonlinear Symmetric Systems. IEEE Trans. Autom. Control 2011, 56, 2073–2086. [Google Scholar] [CrossRef]

- Fujimoto, K.; Scherpen, J.M.A. On balanced truncation for symmetric nonlinear systems. In Proceedings of the International Symposium on Mathematical Theory of Networks and Systems, Groningen, The Netherlands, 7–11 July 2014; Volume 21, pp. 1498–1502. [Google Scholar]

- Constantine, P. Active Subspaces: Emerging Ideas for Dimension Reduction in Parameter Studies; SIAM Spotlights, SIAM: Philadelphia, PA, USA, 2015. [Google Scholar]

- Lieberman, C.E.; Fidkowski, K.; Willcox, K.; van Bloemen Waanders, B. Hessian-based model reduction: Large-scale inversion and prediction. Int. J. Numer. Methods Fluids 2013, 71, 135–150. [Google Scholar] [CrossRef]

- Jazlan, A.; Sreeram, V.; Togneri, R. Cross Gramian Based Time Interval Model Reduction. In Proceedings of the 5th Australien Control Conference (AUCC), Gold Coast, Australia, 5–6 November 2015; pp. 274–276. [Google Scholar]

- The MathWorks, Inc. MATLAB. Available online: http://www.matlab.com (accessed on 26 June 2018).

- The Octave Developers. GNU Octave. Available online: http://octave.org (accessed on 26 June 2018).

- Johnson, R.K. The Elements of MATLAB Style; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

- Altman, Y.M. Accelerating MATLAB Performance: 1001 Tips to Speed up MATLAB Programs; CRC Press: Boca Raton, FL, USA, 2015. [Google Scholar]

- Rogers, P.; Marci, J.; Marinkovic, S. Heterogeneous Uniform Memory Access; AMD: Santa Clara, CA, USA, 2013. [Google Scholar]

- Himpe, C.; Leibner, T.; Rave, S. Hierarchical Approximate Proper Orthogonal Decomposition. arXiv, 2018; arXiv:1607.05210. [Google Scholar]

- Himpe, C.; Leibner, T.; Rave, S.; Saak, J. Fast Low-Rank Empirical Cross Gramians. Proc. Appl. Math. Mech. 2017, 17, 841–842. [Google Scholar] [CrossRef]

- Condon, M.; Ivanov, R. Empirical Balanced Truncation of Nonlinear Systems. J. Nonlinear Sci. 2004, 14, 405–414. [Google Scholar] [CrossRef]

- Nilsson, O.; Rantzer, A. A novel approach to balanced truncation of nonlinear systems. In Proceedings of the 2009 European Control Conference (ECC), Budapest, Hungary, 23–26 August 2009. [Google Scholar]

- Nikiforuk, P.N.; Gupta, M.M. On stochastic perturbation theory for linear systems. In Proceedings of the 1969 IEEE Symposium on Adaptive Processes (8th) Decision and Control, University Park, PA, USA, 17–19 November 1969. [Google Scholar]

- Bouvrie, J.; Hamzi, B. Kernel Methods for the Approximation of Nonlinear Systems. SIAM J. Control Optim. 2017, 55, 2460–2492. [Google Scholar] [CrossRef]

- Fasshauer, G.; McCourt, M. Kernel-Based Approximation Methods Using MATLAB Volume 19 Interdisciplinary Mathematical Sciences; World Scientific: Singapore, 2015. [Google Scholar]

- Kalashnikova, I.; Barone, M.; Arunajatesan, S.; van Bloemen Waanders, B. Construction of energy-stable projection-based reduced order models. Appl. Math. Comput. 2014, 249, 569–596. [Google Scholar] [CrossRef]

- Schelfhout, G.; de Moor, B. Time-Domain Weighted Balanced Truncation. In Proceedings of the 3rd European Control Conference, Rome, Italy, 5–8 September 1995; pp. 1–4. [Google Scholar]

- Snowden, T.J.; van der Graaf, P.H.; Tindall, M.J. A combined model reduction algorithm for controlled biochemical systems. BMC Syst. Biol. 2017, 11, 1–18. [Google Scholar] [CrossRef] [PubMed]

- Eberle, C.; Ament, C. Identifiability and online estimation of diagnostic parameters with in the glucose insulin homeostasis. Biosystems 2012, 107, 135–141. [Google Scholar] [CrossRef] [PubMed]

- Powel, N.D.; Morgansen, K.A. Empirical Observability Gramian Rank Condition for Weak Observability of Nonlinear Systems with Control. In Proceedings of the 54th Annual Conference on Decision and Control, Osaka, Japan, 15–18 December 2015; pp. 6342–6348. [Google Scholar]

- Wu, M.; Yin, B.; Vosoughi, A.; Studer, C.; Cavallaro, J.R.; Dick, C. Approximate Matrix Inversion for High-Throughput Data Detection in the Large-Scale MIMO Uplink. In Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS2013), Beijing, China, 19–23 May 2013; pp. 2155–2158. [Google Scholar]

- Ketcheson, D.I. Highly Efficient Strong Stability-Preserving Runge-Kutta Methods with Low-Storage Implementations. SIAM J. Sci. Comput. 2008, 30, 2113–2136. [Google Scholar] [CrossRef]

- Eaton, J.W.; Bateman, D.; Hauberg, S.; Wehbring, R. GNU Octave Version 4.4.0 Manual: A High-Level Interactive Language for Numerical Computations. Available online: https://octave.org/octave.pdf (accessed on 26 June 2018).

- The MORwiki Community. MORwiki-Model Order Reduction Wiki. Available online: http://modelreduction.org (accessed on 26 June 2018).

- Himpe, C.; Ohlberger, M. Cross-Gramian-Based Model Reduction: A Comparison. In Model Reduction of Parametrized Systems; Benner, P., Ohlberger, M., Patera, A., Rozza, G., Urban, K., Eds.; Springer: Cham, Switzerland, 2017; Volume 17, pp. 271–283. [Google Scholar]

- Liu, W.Q.; Sreeram, V.; Teo, K.L. Model reduction for state-space symmetric systems. Syst. Control Lett. 1998, 34, 209–215. [Google Scholar] [CrossRef]

- Quan, Y.; Zhang, H.; Cai, L. Modeling and Control Based on a New Neural Network Model. In Proceedings of the American Control Conference, Arlington, VA, USA, 25–27 June 2001; Volume 3, pp. 1928–1929. [Google Scholar]

- Everson, R.; Sirovich, L. Karhunen-Loéve Procedure for Gappy Data. J. Opt. Soc. Am. A 1995, 12, 1657–1664. [Google Scholar] [CrossRef]

- Astrid, P. Fast Reduced Order Modeling Technique for Large Scale LTV Systems. In Proceedings of the American Control Conference, Boston, MA, USA, 30 June–2 July 2004; pp. 762–767. [Google Scholar]

- Chaturantabut, S.; Sorensen, D.C. Nonlinear model reduction via discrete empirical interpolation. SIAM J. Sci. Comput. 2010, 32, 2737–2764. [Google Scholar] [CrossRef]

- Williams, M.O.; Schmid, P.J.; Kutz, J.N. Hybrid Reduced-Order Integration with Proper Orthogonal Decomposition and Dynamic Mode Decomposition. Multiscale Model. Simul. 2013, 11, 522–544. [Google Scholar] [CrossRef]

- Moore, B.C. Principal Component Analysis in Nonlinear Systems: Preliminary Results. In Proceedings of the 18th IEEE Conference on Decision and Control including the Symposium on Adaptive Processes, Fort Lauderdale, FL, USA, 12–14 December 1979; Volume 2, pp. 1057–1060. [Google Scholar]

- Dimitriu, G.; Ştefănescu, R.; Navon, I.M. Comparative numerical analysis using reduced-order modeling strategies for nonlinear large-scale systems. J. Comput. Appl. Math. 2017, 310, 32–42. [Google Scholar] [CrossRef]

- Melchior, S.; Legat, V.; van Dooren, P. Gramian Based Model Reduction of Nonlinear MIMO Systems. In Proceedings of the Mathematical Theory of Networks and Systems, Melbourne, Australia, 9–13 July 2012. [Google Scholar]

- Yeung, E.; Liu, Z.; Hodas, N.O. A Koopman Operator Approach for Computing and Balancing Gramians for Discrete Time Nonlinear Systems. arXiv, 2017; arXiv:1709.08712. [Google Scholar]

- Choroszucha, R.B.; Sun, J. Empirical Riccati covariance matrices for closed-loop model order reduction of nonlinear systems by balanced truncation. In Proceedings of the American Control Conference, Seattle, WA, USA, 24–26 May 2017; pp. 3476–3482. [Google Scholar]

- Kawano, Y.; Scherpen, J.M.A. Empirical Differential Balancing for Nonlinear Systems. IFAC-PapersOnLine 2017, 50, 6326–6331. [Google Scholar] [CrossRef]

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).