New and Efficient Algorithms for Producing Frequent Itemsets with the Map-Reduce Framework

Abstract

1. Introduction

- A novel algorithm for mining closed frequent itemsets in large, distributed data settings using the Map-Reduce paradigm. Building on Map-Reduce makes our algorithm pragmatic and relatively easy to implement, maintain, and execute. In addition, our algorithm does not require the duplication elimination step that is common to most known algorithms; omitting this step makes both the mapper and the reducer more complicated, but it gives better performance.

- A general algorithm for incremental mining of frequent itemsets in general distributed environments, together with additional optimizations. Some of these optimizations are designed for the Map-Reduce environment but can also be applied to similar architectures.

- An extensive experimental evaluation of our new algorithms, showing their behavior under various conditions and their advantages over existing algorithms.

2. Background and Related Work

2.1. Association Rules and Frequent Itemsets

2.2. Mining Closed Frequent Itemsets Algorithms

2.3. Incremental Frequent Itemsets Mining

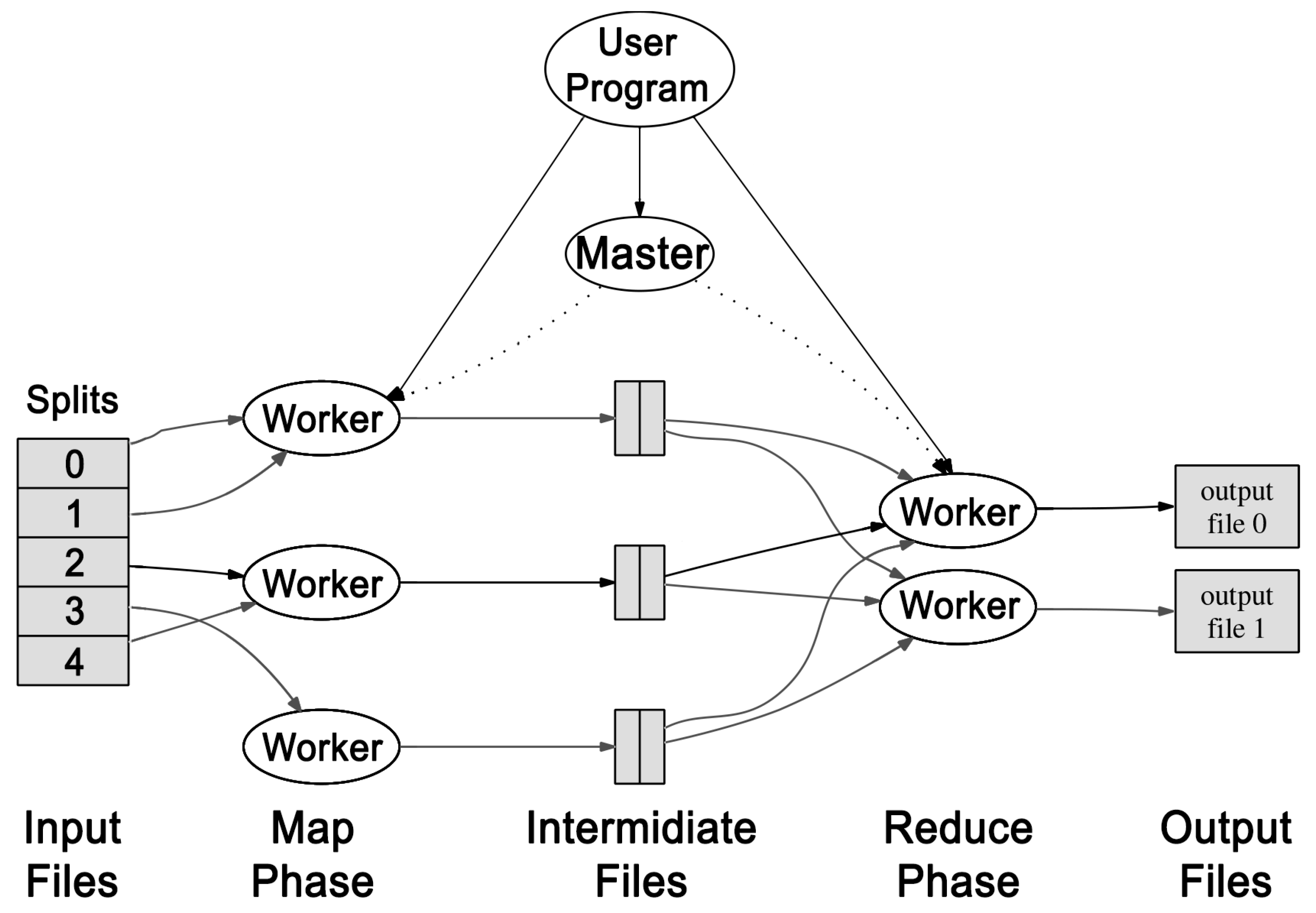

2.4. Map-Reduce Model

2.5. Incremental Computation in Map-Reduce

2.6. Map-Reduce Communication–Cost Model

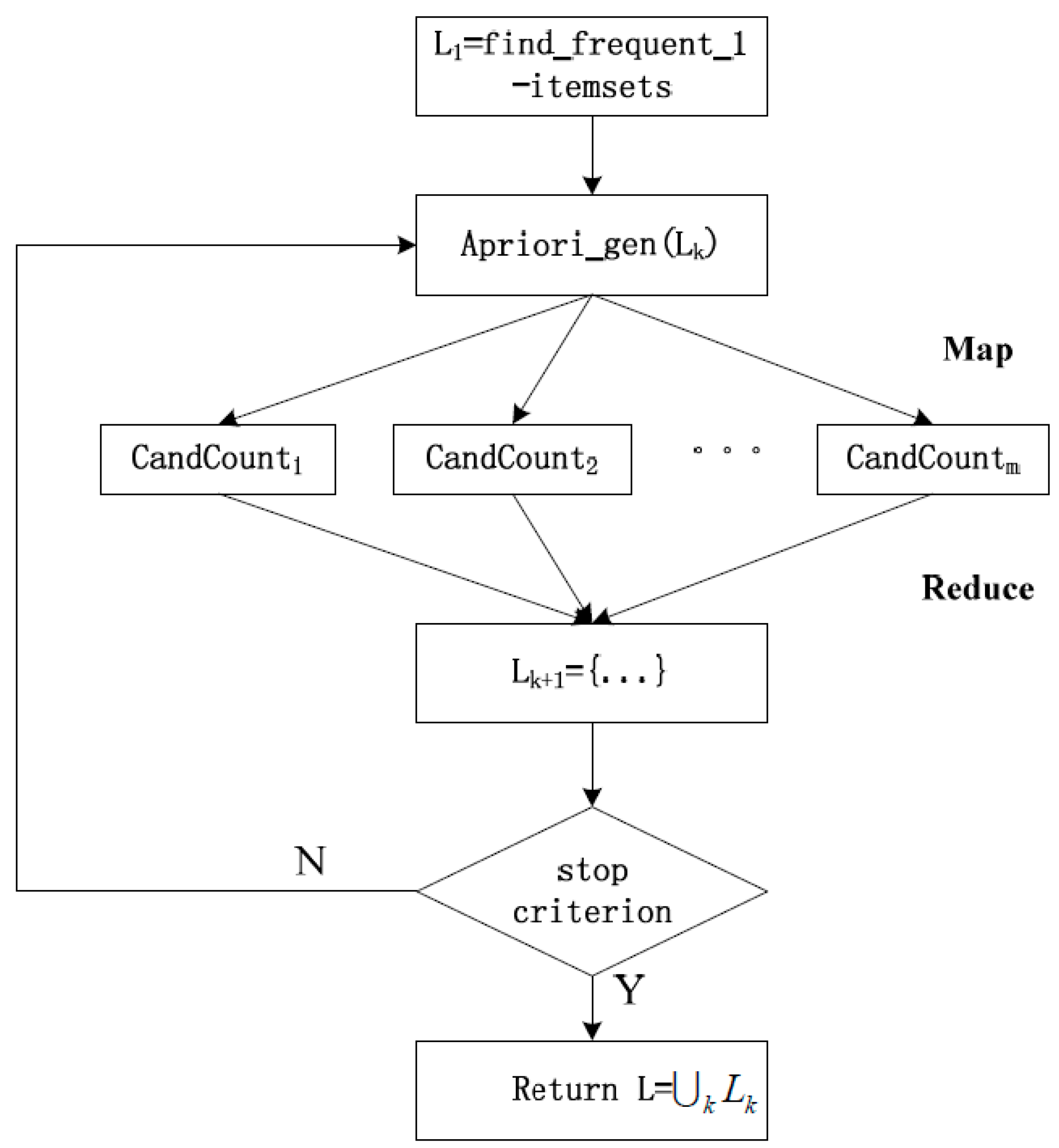

2.7. Apriori Map-Reduce Algorithms

2.8. Join Operation and Map-Reduce

3. Mining Closed Frequent Itemsets with Map-Reduce

3.1. Problem Definition

3.2. The Algorithm

3.2.1. Overview

- , the transaction database

- , the set of the closed frequent itemsets found in the previous iteration (, the input for the first iteration, is the empty set).

3.2.2. Definitions

3.2.3. Algorithm Steps

Map Step

Combine Step

Reduce Step

3.2.4. Run Example

3.2.5. Soundness

3.2.6. Completeness

3.2.7. Duplication Elimination

3.3. Experiments

3.3.1. Data

3.3.2. Setup

3.3.3. Measurement

3.3.4. Experiments Internals

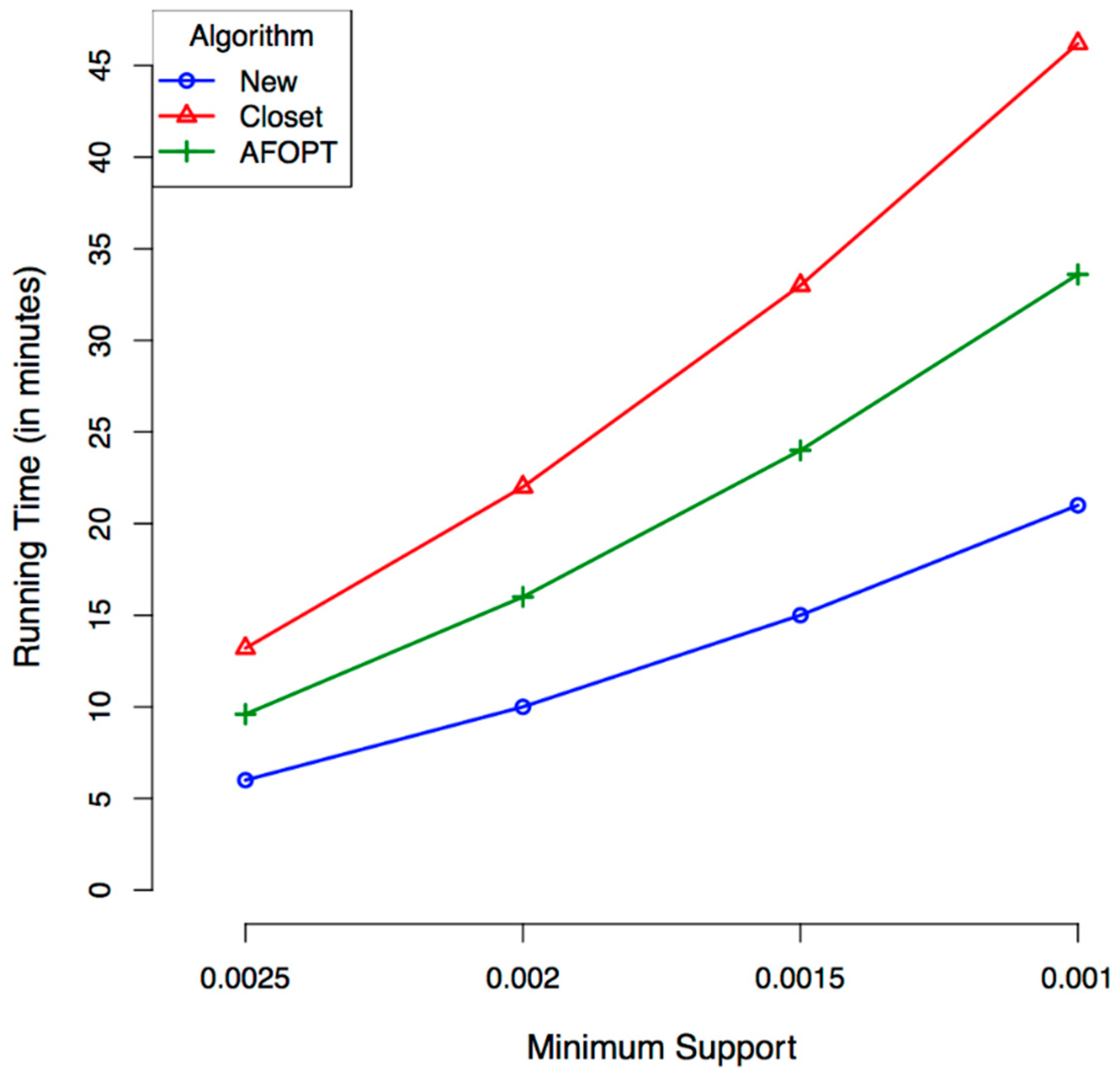

3.3.5. Results

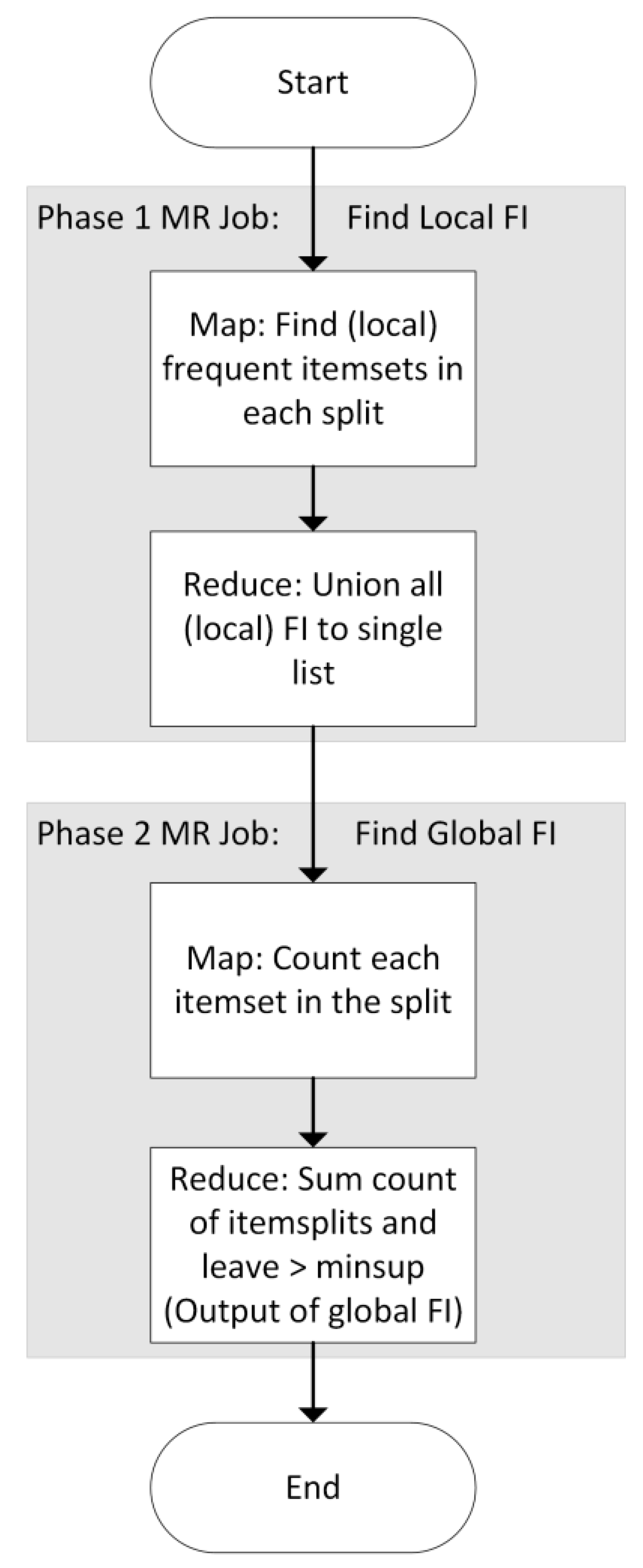

4. Incremental Frequent Itemset Mining with Map-Reduce

4.1. Problem Definition

4.2. The Algorithms

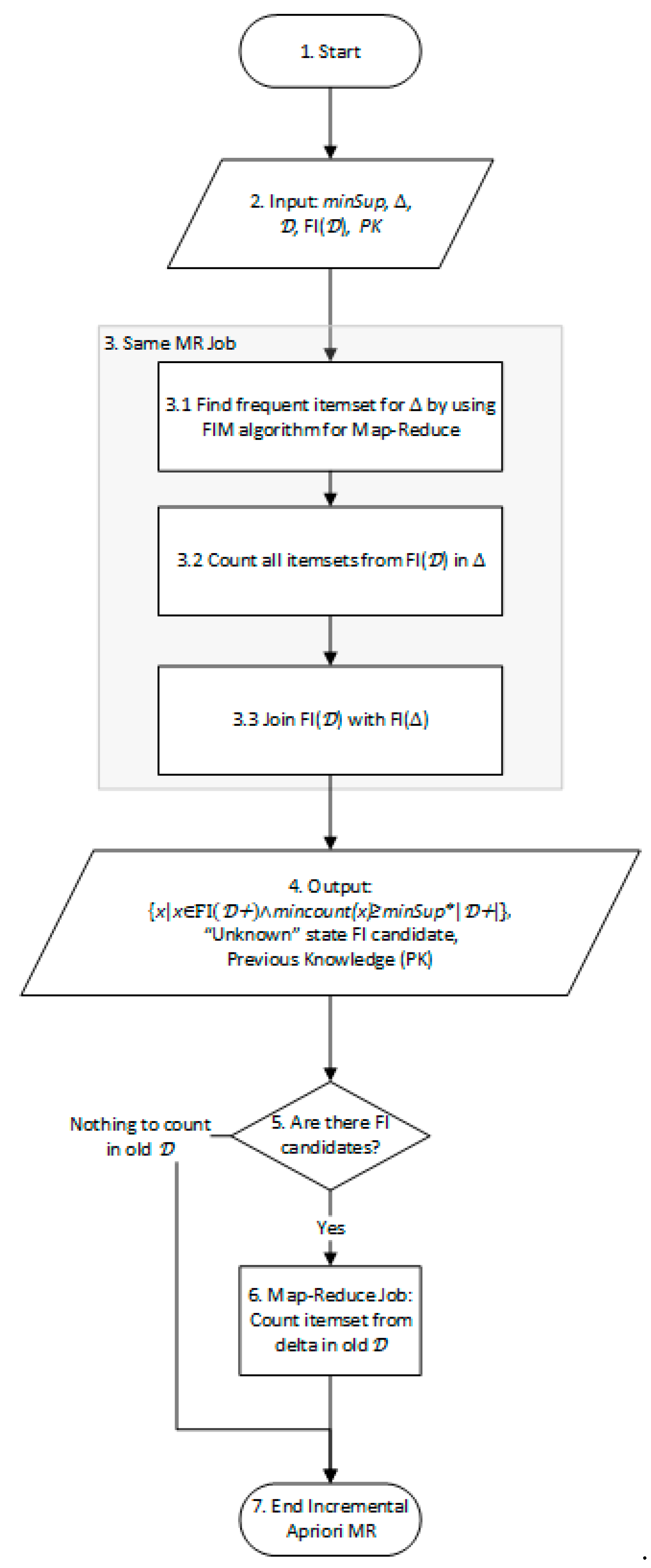

4.2.1. General Scheme

- In case that it is locally frequent in and old , then it is globally frequent so it may be outputted immediately (step 9).

- If it is locally frequent only in , then we need to count it in old (steps 10–11 by using the additional count MR job).

- If it is locally frequent in old , we need to count it in (steps 12–13 by using the same MR job as in steps 10–11 with other input).

- Find deltaFI using any suitable MR algorithm (which may consist of more than one job).

- Join MR job. Any Join algorithm may be used. The Mapper simply copies its input to its output (the identity function); the Reducer should write to three output files/directories (instead of just one), one for each case.

- Count itemsets inside the database. The same MR count algorithm may be used for both old and . Counts in both DBs could be executed in parallel on the same MR cluster.
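The three cases of the general scheme can be sketched in a few lines of Python. This is only an illustrative, single-machine sketch, not the Map-Reduce implementation described in the paper: the function name `join_local_results`, the name `delta` for the newly added transactions, and the dictionary representation of locally frequent itemsets (itemset mapped to its absolute count) are all assumptions made here.

```python
from typing import Dict, FrozenSet, Set, Tuple

Itemset = FrozenSet[str]


def join_local_results(
    old_fi: Dict[Itemset, int],
    delta_fi: Dict[Itemset, int],
) -> Tuple[Dict[Itemset, int], Set[Itemset], Set[Itemset]]:
    """Partition locally frequent itemsets into the three join outputs.

    old_fi   -- itemsets locally frequent in the old database (hypothetical input)
    delta_fi -- itemsets locally frequent in the new increment (hypothetical input)
    """
    frequent_now: Dict[Itemset, int] = {}   # frequent in both parts: output immediately
    count_in_old: Set[Itemset] = set()      # frequent only in delta: must be counted in old
    count_in_delta: Set[Itemset] = set()    # frequent only in old: must be counted in delta

    for itemset in old_fi.keys() | delta_fi.keys():
        in_old = itemset in old_fi
        in_delta = itemset in delta_fi
        if in_old and in_delta:
            # Assumption: counts are absolute and the two parts are disjoint,
            # so the combined count is the sum of the two local counts.
            frequent_now[itemset] = old_fi[itemset] + delta_fi[itemset]
        elif in_delta:
            count_in_old.add(itemset)
        else:
            count_in_delta.add(itemset)

    return frequent_now, count_in_old, count_in_delta
```

The two "must be counted" sets correspond to the inputs of the additional count job, which can then be run against the old database and the increment respectively.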

4.2.2. Early Pruning Optimizations

4.2.3. Early Pruning Example

4.2.4. Map-Reduce Optimized Algorithm

- When the is being split by the MR framework to Splits, it is being split to chunks of equal predefined sizes. Only the last chunk may be of different size. We need to make sure that the last chunk is larger than the previous chunk (rather than smaller). Fortunately, MR systems, like Hadoop, do append the last smaller input part to a previous chunk of predefined size. So, the last Split is actually larger than the others.

- If the input is still too small even when it is divided into the minimum number of Splits, it is preferable to control the number of Splits manually. In most cases, it is better to sacrifice parallelism and gain speed by using fewer workers, or even a single worker. Once again, the splitting process in Hadoop can be controlled via its configuration parameters.

- If is still very small for a single worker to process it effectively, it is better to use a non-incremental algorithm for the calculation of .
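As a rough illustration of how configuration parameters influence the number of Splits, the sketch below mirrors the split-size rule used by Hadoop's `FileInputFormat`, where the split size is `max(minSize, min(maxSize, blockSize))` and the bounds come from the properties `mapreduce.input.fileinputformat.split.minsize` and `mapreduce.input.fileinputformat.split.maxsize`. The helper names and the sizes used are hypothetical, and the split count is only an approximation that ignores the handling of the last, partial chunk.

```python
import math

MB = 1024 * 1024


def compute_split_size(block_size: int, min_size: int, max_size: int) -> int:
    """Split size rule: clamp the block size between the min and max split sizes."""
    return max(min_size, min(max_size, block_size))


def approx_num_splits(total_bytes: int, split_size: int) -> int:
    """Approximate number of Splits (and hence mappers) for one input file.

    Assumption: the special treatment of the last chunk is ignored here.
    """
    return max(1, math.ceil(total_bytes / split_size))


# Hypothetical 300 MB increment stored with 128 MB blocks.
default_size = compute_split_size(128 * MB, min_size=1, max_size=2**63 - 1)
raised_size = compute_split_size(128 * MB, min_size=512 * MB, max_size=2**63 - 1)

splits_default = approx_num_splits(300 * MB, default_size)  # several workers
splits_raised = approx_num_splits(300 * MB, raised_size)    # a single worker
```

Raising the minimum split size above the input size is one way to force a small increment onto a single worker, trading parallelism for lower per-task overhead.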

4.2.5. Reuse of Discarded Information

4.3. Experimental Evaluation

4.3.1. Data

4.3.2. Setup

4.3.3. Measurement

4.3.4. Experiment Internals

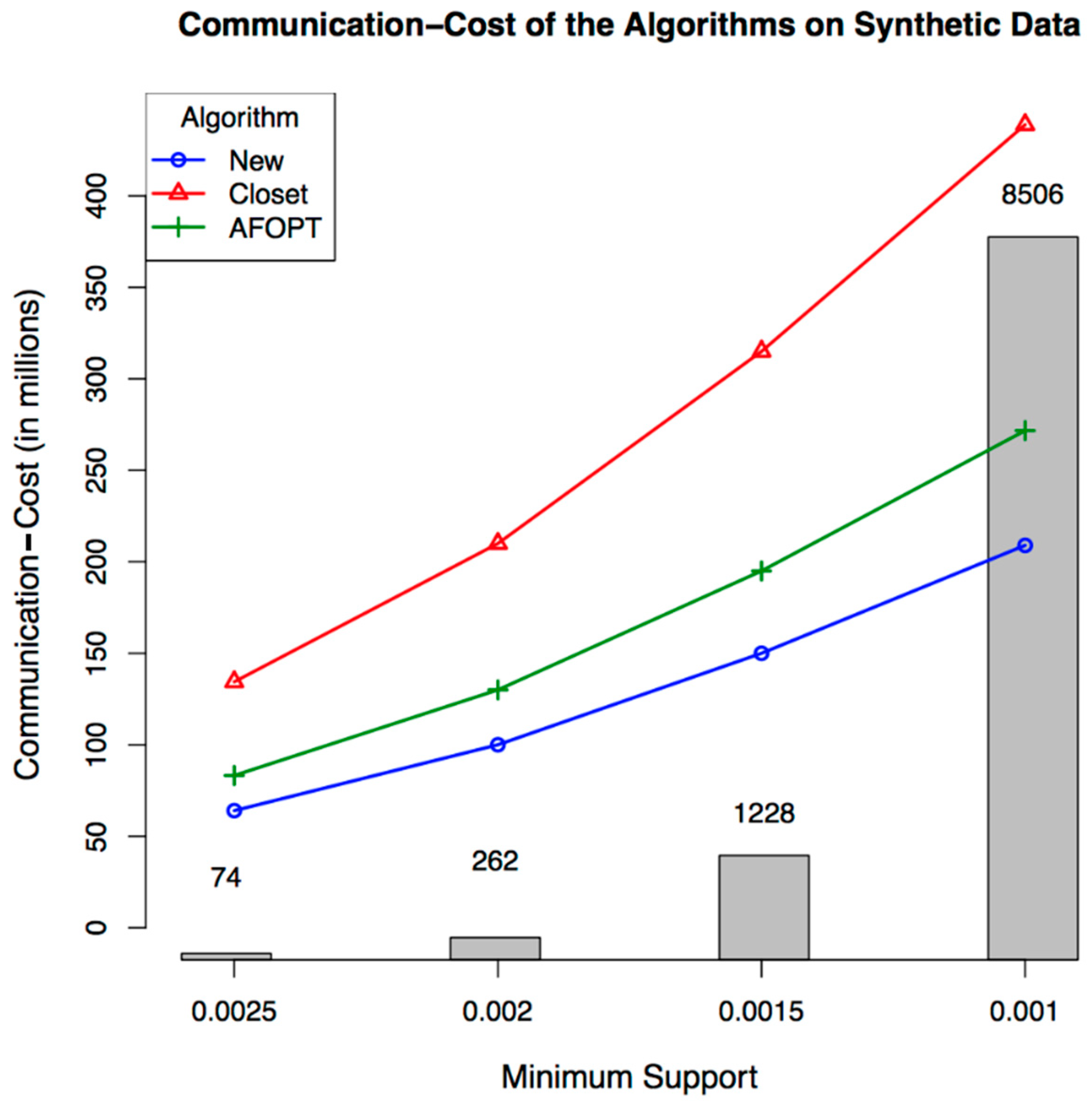

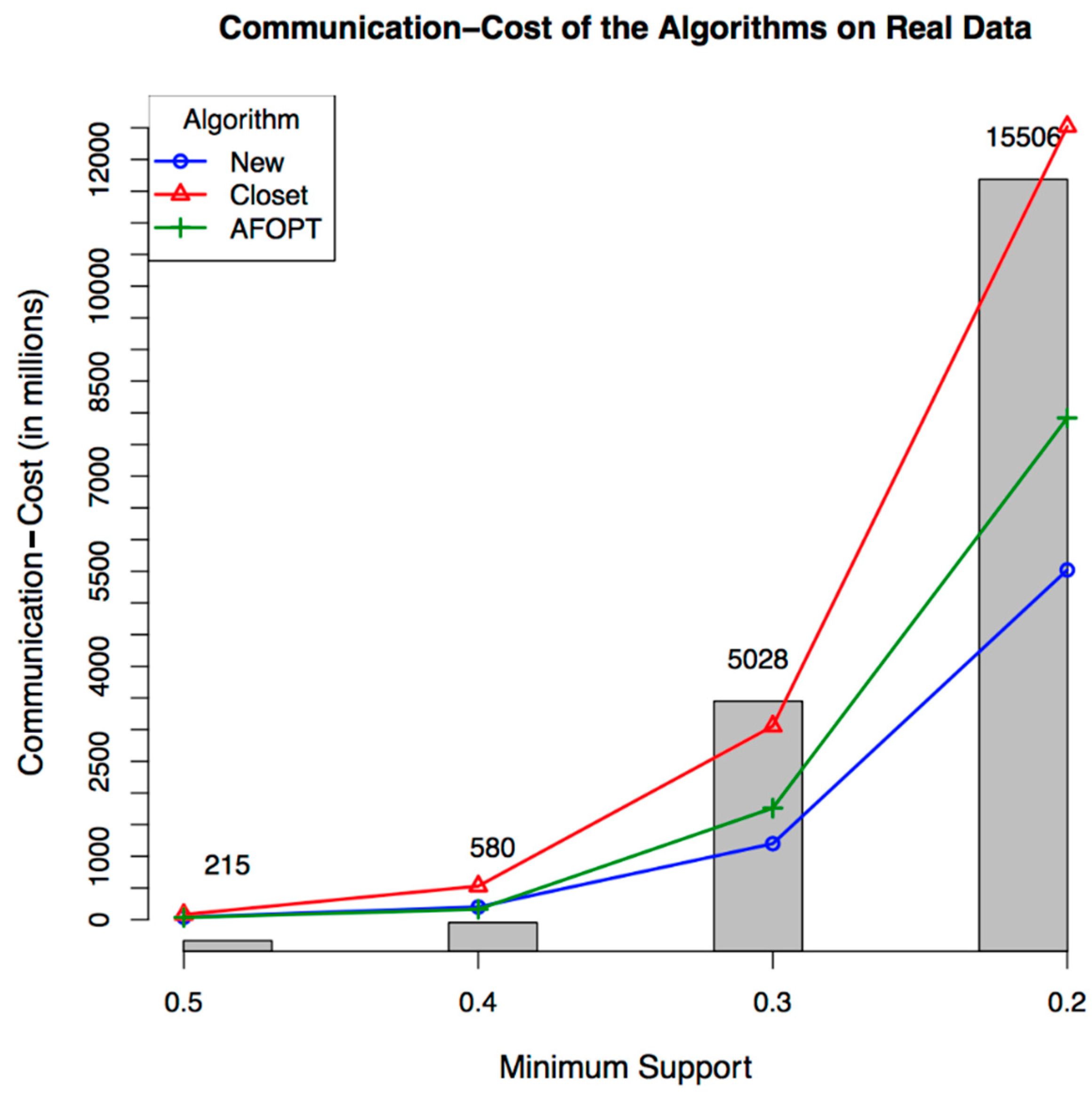

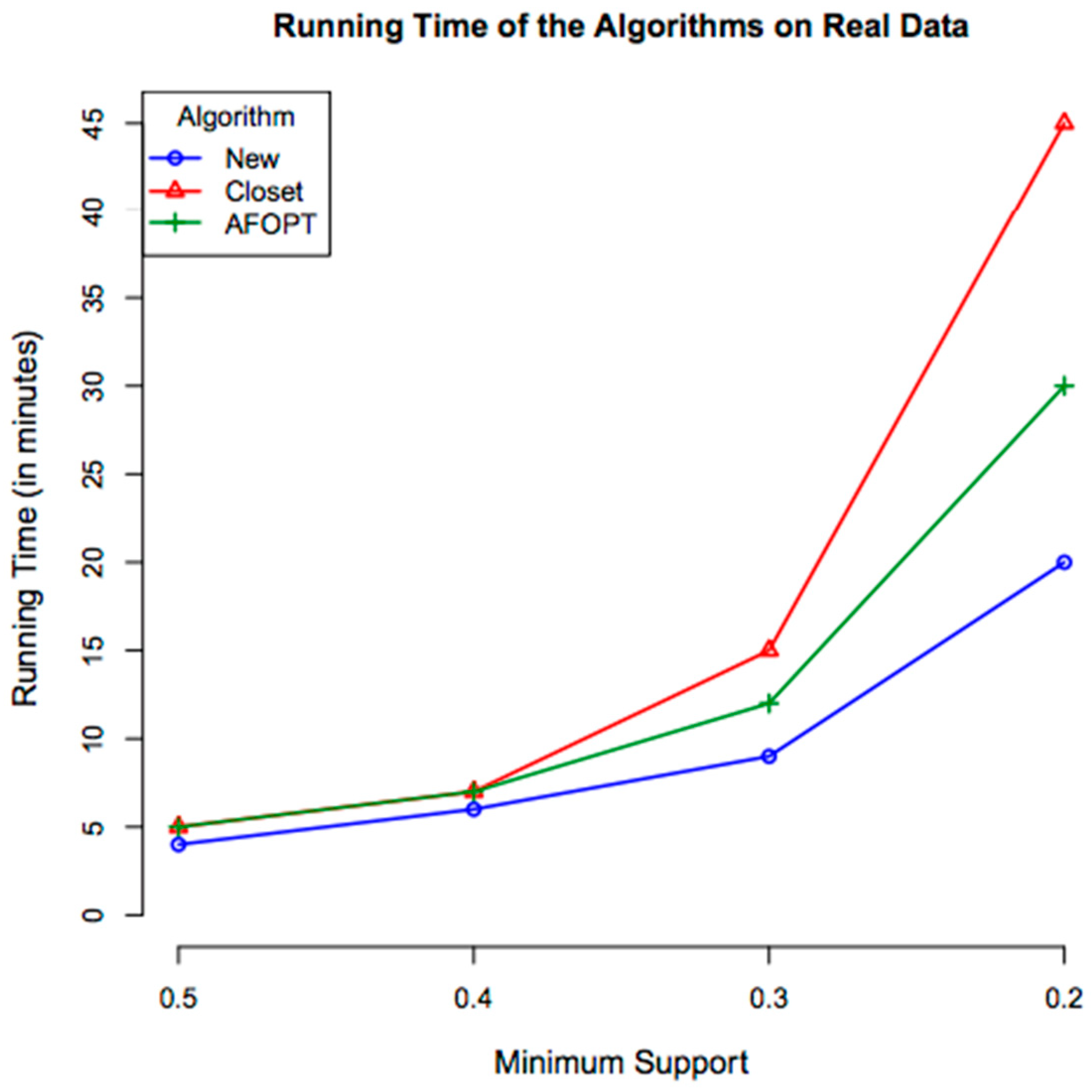

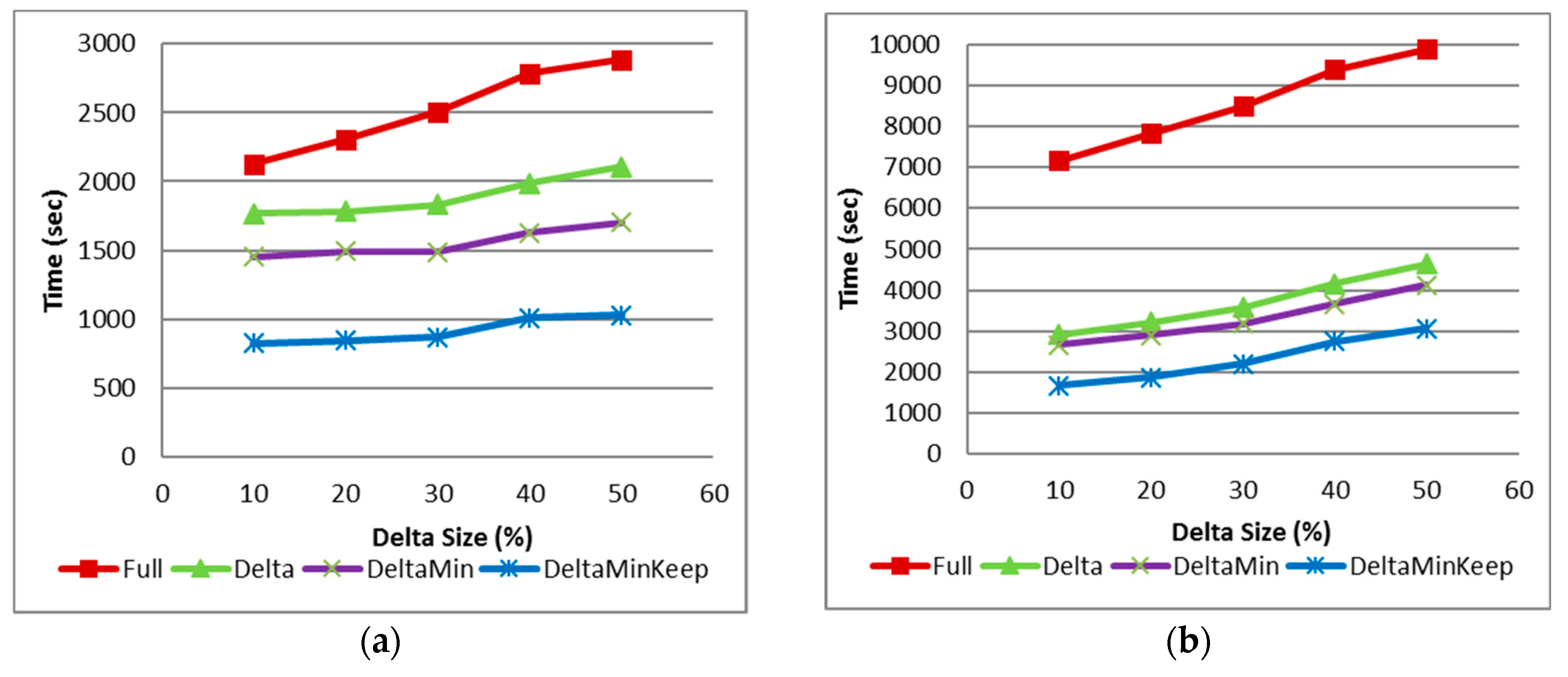

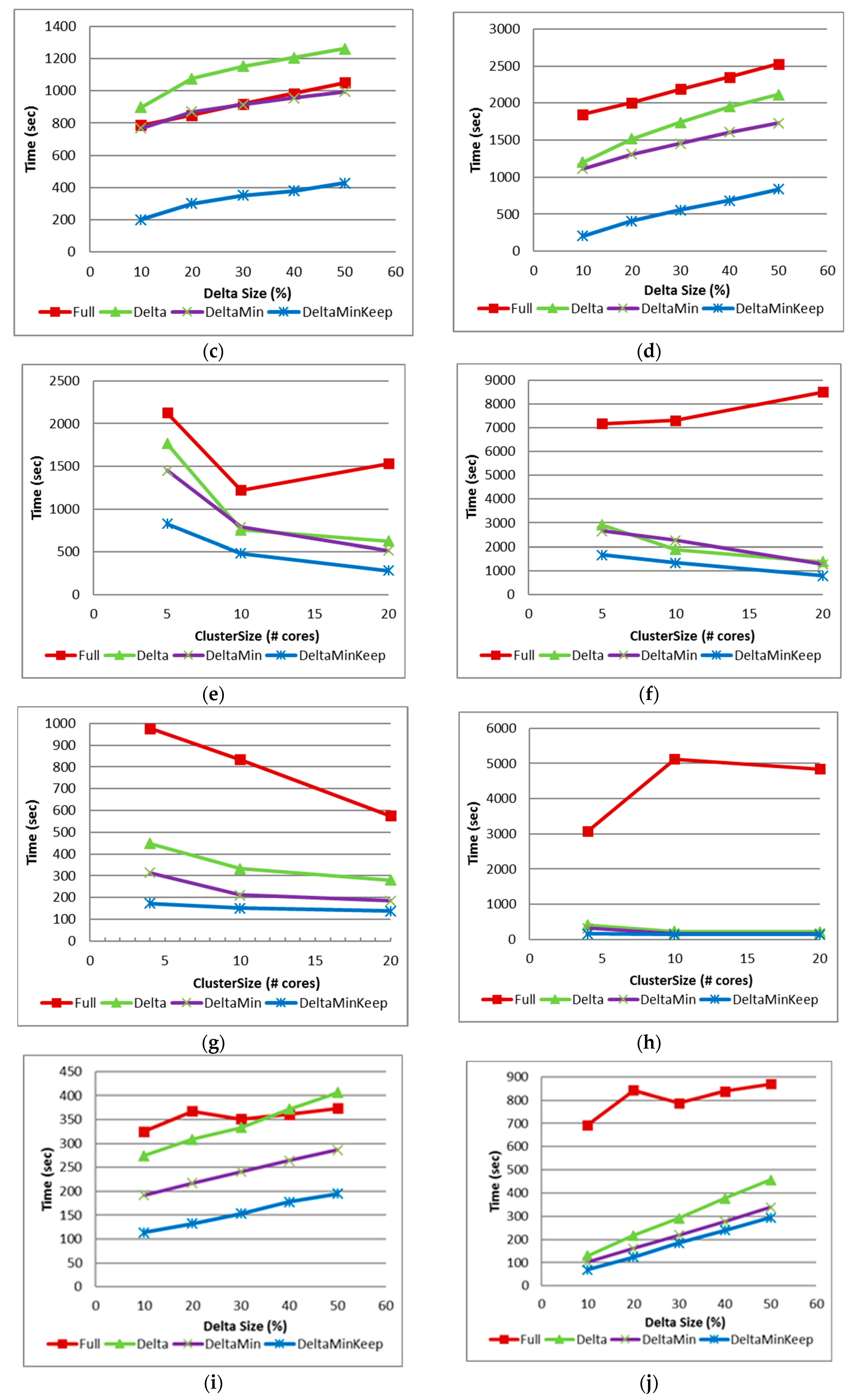

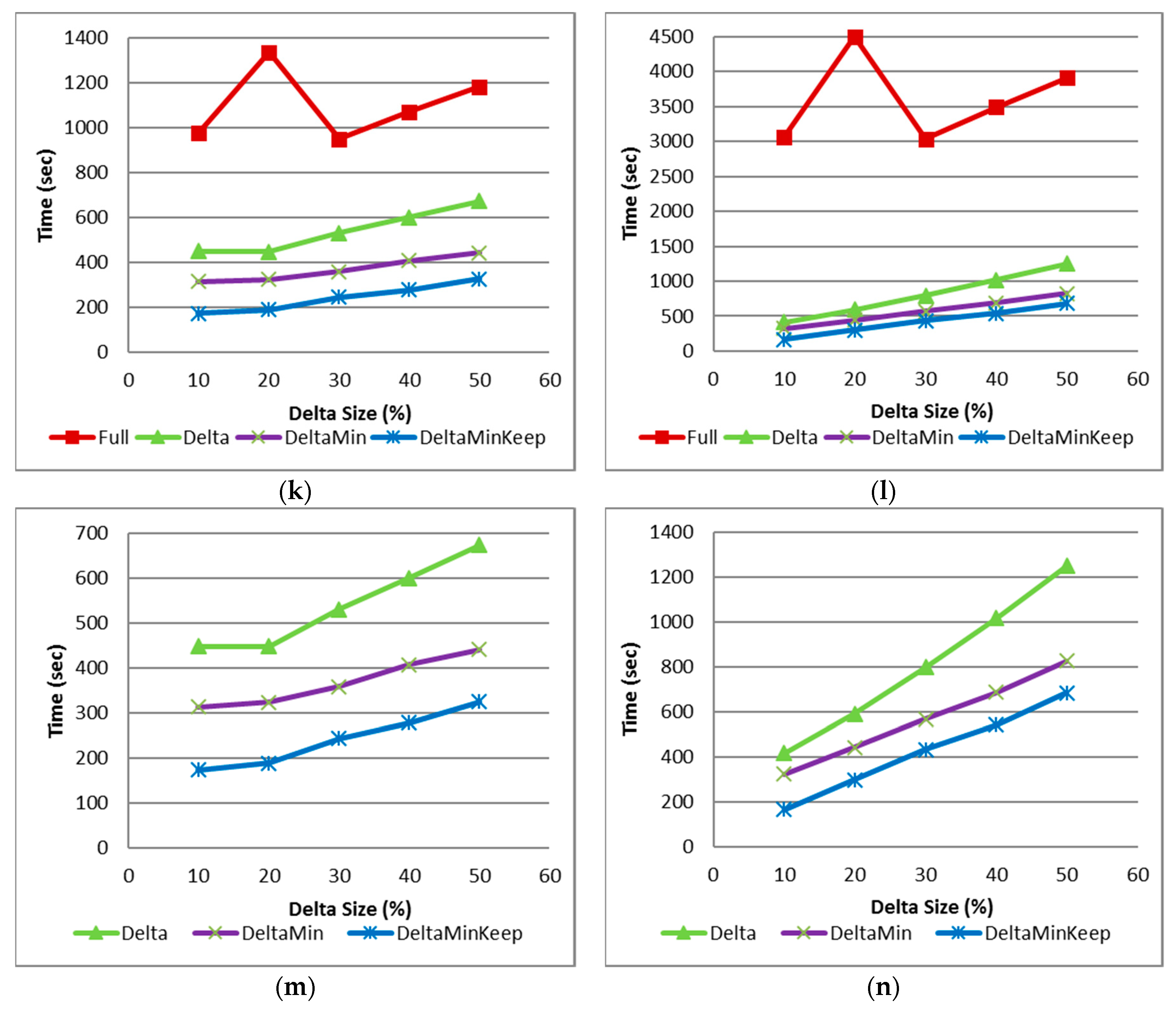

4.3.5. Results

4.3.6. Comparison to Previous Works

4.3.7. The Algorithm Relation to the Spark Architecture

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| | | |
|---|---|---|
| Frequent in | Frequent in | Unknown |
| Not frequent in | Unknown | Not frequent in |

| TID | Transaction |
|---|---|

| Closed Item Set | Generator | Support |
|---|---|---|
| 3 | | |
| 4 | | |
| 4 | | |
| 2 | | |
| 2 | | |
| 3 | | |

| | | |
|---|---|---|
| Transaction count | | |
| Min support level | | |
| A Count in the Split as found by the relevant mapper | 101 | Unknown (not locally frequent) |
| mincount of A | 101 | 0 (may not appear at all) |
| maxcount of A | 101 | |
| Total mincount of A | 101 | |
| Total maxcount of A | 200 | Less than min support level (201). Pruned away. |
| B Count in the Split as found by the relevant mapper | 101 | 100 |
| mincount of B | 101 | 100 |
| maxcount of B | 101 | 100 |
| Total mincount of B | 201 | Frequent in |
| Total maxcount of B | 201 | |

| | | |
|---|---|---|
| A Count | 5 | Unknown (was pruned) |
| mincount A | 5 | 0 |
| maxcount A | 5 | |
| Total mincount of A | 5 | |
| Total maxcount of A | 205 | Could be frequent in |
| B Count | 0 | 201 (was frequent in ) |
| mincount B | 0 | 201 |
| maxcount B | 0 | 201 |
| Total mincount of B | 201 | |
| Total maxcount of B | 201 | Less than minimum support level of . Pruned away. |
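The mincount/maxcount arithmetic in the early pruning rows above can be reproduced with a small Python sketch. It is a hedged illustration rather than the authors' code: the function names are hypothetical, and the local threshold of 100 for the second Split is an assumption inferred from the totals in the table (a total maxcount of 200 for A implies a maxcount of 99 where A was not locally frequent), since that cell is empty in the table.

```python
from typing import Iterable, Optional, Tuple

Bounds = Tuple[int, int]  # (mincount, maxcount)


def local_bounds(count: Optional[int], local_threshold: int) -> Bounds:
    """Bounds on an itemset's count in one part of the data.

    count is None when the mapper did not report the itemset as locally
    frequent; its true count is then between 0 and local_threshold - 1.
    """
    if count is None:
        return (0, local_threshold - 1)
    return (count, count)


def total_bounds(parts: Iterable[Bounds]) -> Bounds:
    """Sum the per-part bounds to get bounds on the global count."""
    parts = list(parts)
    return (sum(lo for lo, _ in parts), sum(hi for _, hi in parts))


def classify(bounds: Bounds, min_support: int) -> str:
    """Decide what the bounds alone tell us about global frequency."""
    lo, hi = bounds
    if hi < min_support:
        return "pruned"      # cannot reach the threshold
    if lo >= min_support:
        return "frequent"    # certainly reaches the threshold
    return "undecided"       # needs an exact count


# Itemsets A and B from the first table; 100 is the assumed local threshold.
a_bounds = total_bounds([local_bounds(101, 101), local_bounds(None, 100)])
b_bounds = total_bounds([local_bounds(101, 101), local_bounds(100, 100)])
a_status = classify(a_bounds, 201)
b_status = classify(b_bounds, 201)
```

The same `total_bounds` helper covers the second table, where a previously pruned itemset contributes the bounds recorded when it was discarded, for example `(0, 200)` for A.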

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gonen, Y.; Gudes, E.; Kandalov, K. New and Efficient Algorithms for Producing Frequent Itemsets with the Map-Reduce Framework. Algorithms 2018, 11, 194. https://doi.org/10.3390/a11120194
