Parameter Estimation of a Class of Neural Systems with Limit Cycles

Abstract

1. Introduction

1.1. Background

1.2. Parameter Estimation in Neural Model

1.3. Contributions

- We formulate the FitzHugh–Nagumo (FHN) neuron system as an identification model using the explicit forward Euler method.

- We propose a recursive least-squares (RLS) algorithm and a stochastic gradient (SG) algorithm to estimate the unknown parameters of the model.

- We extend the innovation concept in [24] and explore the multi-innovation recursive least-squares (MIRLS) algorithm and the multi-innovation stochastic gradient (MISG) algorithm for parameter estimation of the FHN neuron system.

- We show that using multiple innovations and repeatedly reusing the available data yields a faster convergence rate and better accuracy.
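To illustrate the first contribution, the sketch below shows how a forward Euler step can turn a neuron model into a regression that is linear in its unknown parameters. It assumes a common textbook FHN form, dv/dt = v - v^3/3 - w + I and dw/dt = eps (v + a - b w), which is not necessarily the parameterization used in this paper. The function names, step size `h`, and parameter values are hypothetical.

```python
import numpy as np

def simulate_fhn_euler(eps, a, b, current, h, steps, v0=0.0, w0=0.0):
    """Integrate an assumed textbook FHN form with the explicit forward Euler method."""
    v = np.empty(steps + 1)
    w = np.empty(steps + 1)
    v[0], w[0] = v0, w0
    for k in range(steps):
        v[k + 1] = v[k] + h * (v[k] - v[k] ** 3 / 3.0 - w[k] + current)
        w[k + 1] = w[k] + h * eps * (v[k] + a - b * w[k])
    return v, w

def recovery_regression(v, w, h):
    """Rewrite the Euler step of w as y(k) = phi(k)^T theta, theta = [eps, eps*a, eps*b]."""
    y = (w[1:] - w[:-1]) / h                                   # finite-difference output
    phi = np.column_stack([v[:-1], np.ones(len(v) - 1), -w[:-1]])
    return phi, y

# Hypothetical parameter values, chosen only for illustration.
v, w = simulate_fhn_euler(eps=0.08, a=0.7, b=0.8, current=0.5, h=0.01, steps=5000)
phi, y = recovery_regression(v, w, h=0.01)

# Batch least squares on noise-free data recovers theta up to rounding error.
theta_hat, *_ = np.linalg.lstsq(phi, y, rcond=None)
```

Once the model is in the form y(k) = phi(k)^T theta, recursive estimators such as RLS or SG can be applied to the same `phi` and `y` one sample at a time.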

1.4. Organization

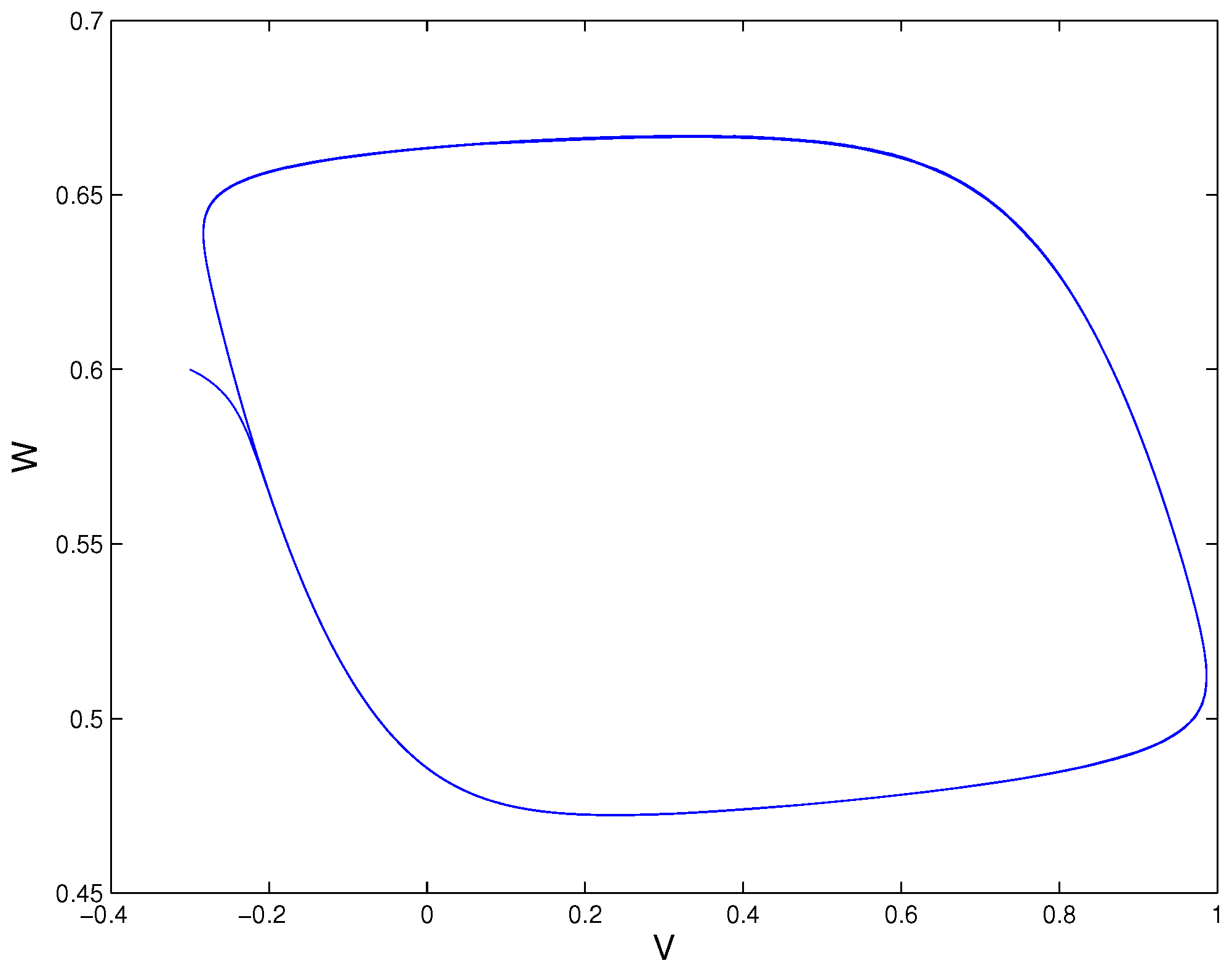

2. The Spiking Neuron Model

3. The Identification Model of Spiking Neurons

4. Parameter Estimation of the Spiking Neurons

4.1. Least-Squares Estimation Algorithms

| Algorithm 1 RLS algorithm |

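As a point of reference for Algorithm 1, here is a minimal sketch of the textbook RLS recursion for a model y(k) = phi(k)^T theta + noise. It is not the authors' listing. The synthetic data, the parameter vector `theta_true`, and the initial covariance `p0` are illustrative assumptions.

```python
import numpy as np

def rls(phis, ys, p0=1e6):
    """Textbook recursive least squares for y(k) = phi(k)^T theta + noise."""
    n = phis.shape[1]
    theta = np.zeros(n)
    P = p0 * np.eye(n)                      # large P(0): little trust in theta(0)
    for phi, y in zip(phis, ys):
        gain = P @ phi / (1.0 + phi @ P @ phi)
        theta = theta + gain * (y - phi @ theta)    # correct by the scalar innovation
        P = P - np.outer(gain, phi @ P)             # P(k) = P(k-1) - L(k) phi^T P(k-1)
    return theta

# Synthetic regression data with hypothetical parameters.
rng = np.random.default_rng(0)
theta_true = np.array([1.0, -0.5, 2.0])
phis = rng.normal(size=(200, 3))
ys = phis @ theta_true + 0.01 * rng.normal(size=200)

theta_rls = rls(phis, ys)
```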

| Algorithm 2 MIRLS algorithm |

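As a point of reference for Algorithm 2, the sketch below gives one common form of a multi-innovation least-squares recursion: at each step the scalar innovation is replaced by a vector built from the latest `p` samples. It is a generic sketch, not the authors' listing. The innovation length `p`, the initial covariance `p0`, and the synthetic data are assumptions.

```python
import numpy as np

def mirls(phis, ys, p=3, p0=1e6):
    """Multi-innovation RLS sketch: update with the last p samples at every step."""
    n = phis.shape[1]
    theta = np.zeros(n)
    P = p0 * np.eye(n)
    for k in range(len(ys)):
        lo = max(0, k - p + 1)               # shorter window during start-up
        Phi = phis[lo:k + 1].T               # n x m stacked regressors, m <= p
        E = ys[lo:k + 1] - Phi.T @ theta     # innovation vector
        S = np.eye(Phi.shape[1]) + Phi.T @ P @ Phi
        # gain = P Phi S^{-1}; S and P are symmetric, so solve instead of inverting
        gain = np.linalg.solve(S, Phi.T @ P).T
        theta = theta + gain @ E
        P = P - gain @ Phi.T @ P
    return theta

# Synthetic regression data with hypothetical parameters.
rng = np.random.default_rng(1)
theta_true = np.array([1.0, -0.5, 2.0])
phis = rng.normal(size=(200, 3))
ys = phis @ theta_true + 0.01 * rng.normal(size=200)

theta_mirls = mirls(phis, ys, p=3)
```

With `p=1` the window holds a single sample and the recursion reduces to ordinary RLS.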

4.2. Stochastic Gradient Estimation Algorithms

| Algorithm 3 SG algorithm |

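As a point of reference for Algorithm 3, here is a minimal sketch of the standard SG recursion with the normalizing scalar r(k) = r(k-1) + ||phi(k)||^2 and r(0) = 1. It is not the authors' listing. The data are synthetic and noise-free for simplicity, and `theta_true` is hypothetical.

```python
import numpy as np

def sg(phis, ys):
    """Stochastic gradient estimator with step size 1 / r(k)."""
    theta = np.zeros(phis.shape[1])
    r = 1.0                                   # r(0) = 1
    for phi, y in zip(phis, ys):
        r += phi @ phi                        # r(k) = r(k-1) + ||phi(k)||^2
        theta = theta + phi / r * (y - phi @ theta)
    return theta

# Noise-free synthetic data with hypothetical parameters.
rng = np.random.default_rng(2)
theta_true = np.array([1.0, -0.5, 2.0])
phis = rng.normal(size=(2000, 3))
ys = phis @ theta_true

theta_sg = sg(phis, ys)
err_initial = np.linalg.norm(theta_true)             # error of the zero initial guess
err_sg = np.linalg.norm(theta_sg - theta_true)
```

Because the step size shrinks like 1/k, the SG estimate typically improves much more slowly than a least-squares estimate on the same data.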

| Algorithm 4 MISG algorithm |

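As a point of reference for Algorithm 4, the sketch below extends the SG recursion to a length-`p` innovation vector. It is a generic sketch, not the authors' listing. Published variants differ in how r(k) is normalized; this one assumes r(k) = r(k-1) + ||phi(k)||^2. The innovation length `p` and the noise-free synthetic data are also assumptions.

```python
import numpy as np

def misg(phis, ys, p=3):
    """Multi-innovation SG sketch: gradient step built from the last p samples."""
    theta = np.zeros(phis.shape[1])
    r = 1.0                                   # r(0) = 1
    for k in range(len(ys)):
        lo = max(0, k - p + 1)                # shorter window during start-up
        Phi = phis[lo:k + 1].T                # n x m stacked regressors, m <= p
        E = ys[lo:k + 1] - Phi.T @ theta      # innovation vector
        r += phis[k] @ phis[k]                # assumed normalization (latest regressor)
        theta = theta + Phi @ E / r
    return theta

# Noise-free synthetic data with hypothetical parameters.
rng = np.random.default_rng(3)
theta_true = np.array([1.0, -0.5, 2.0])
phis = rng.normal(size=(2000, 3))
ys = phis @ theta_true

theta_misg = misg(phis, ys, p=3)
err_initial = np.linalg.norm(theta_true)             # error of the zero initial guess
err_misg = np.linalg.norm(theta_misg - theta_true)
```

With `p=1` the update reduces to the single-innovation SG step. Larger `p` reuses each sample in up to `p` consecutive updates.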

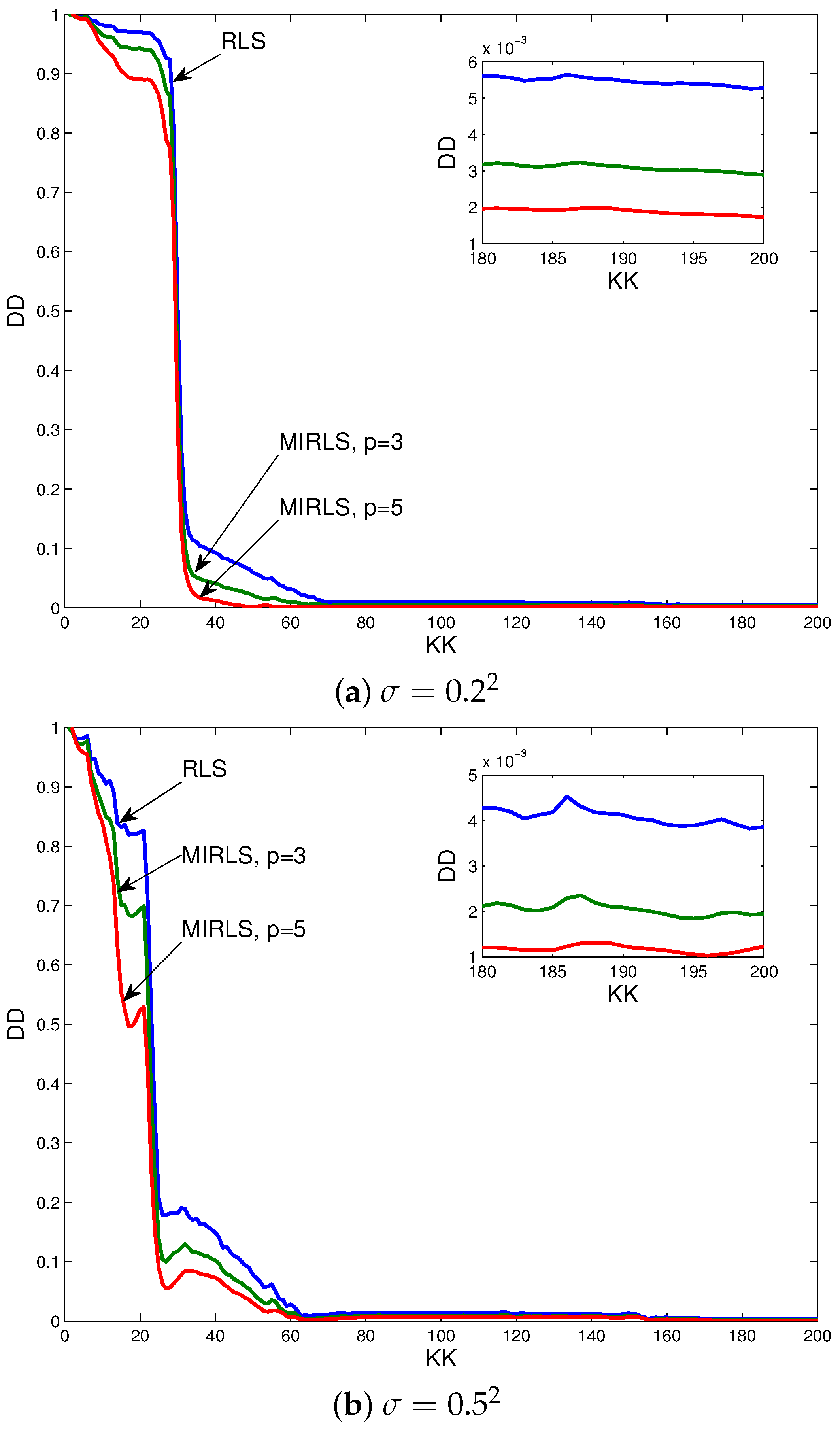

5. Simulations

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Buzsaki, G.; Draguhn, A. Neuronal oscillations in cortical networks. Science 2004, 304, 1926–1929.

2. Singer, W. Neuronal synchrony: A versatile code for the definition of relations. Neuron 1999, 24, 49–65.

3. Ljung, L. System Identification: Theory for the User; Prentice-Hall: Englewood Cliffs, NJ, USA, 1987.

4. Ljung, L. Perspectives on system identification. Annu. Rev. Control 2010, 34, 1–12.

5. Juang, J.N.; Phan, M.Q. Identification and Control of Mechanical Systems; Cambridge University Press: Cambridge, UK, 2001.

6. Moonen, M.; Ramos, J. A subspace algorithm for balanced state space system identification. IEEE Trans. Autom. Control 1993, 38, 1727–1729.

7. Ding, F. System Identification-New Theory and Methods; Science Press: Beijing, China, 2013.

8. Pappalardo, C.M.; Guida, D. A time-domain system identification numerical procedure for obtaining linear dynamical models of multibody mechanical systems. Archiv. Appl. Mech. 2018, 88, 1325–1347.

9. Pappalardo, C.M.; Guida, D. System identification algorithm for computing the modal parameters of linear mechanical systems. Machines 2018, 6, 1–20.

10. Lynch, E.P.; Houghton, C.J. Parameter estimation of neuron models using in-vitro and in-vivo electrophysiological data. Front. Neuroinf. 2015, 9, 10.

11. Duan, C.; Zhan, Y. The response of a linear monostable system and its application in parameters estimation for PSK signals. Phys. Lett. A 2016, 380, 1358–1362.

12. Pappalardo, C.M.; Guida, D. System identification and experimental modal analysis of a frame structure. Eng. Lett. 2018, 26, 56–68.

13. Kenné, G.; Ahmed-Ali, T.; Lamnabhi-Lagarrigue, F.; Arzandé, A. Nonlinear systems time-varying parameter estimation: application to induction motors. Electr. Power Syst. Res. 2008, 78, 1881–1888.

14. Tabak, J.; Murphey, R.; Moore, L.E. Parameter estimation methods for single neuron models. J. Comput. Neurosci. 2000, 9, 215–236.

15. Mullowney, P.; Iyengar, S. Parameter estimation for a leaky integrate-and-fire neuronal model from ISI data. J. Comput. Neurosci. 2008, 24, 179–194.

16. Vavoulis, D.V.; Straub, V.A.; Aston, J.A.D.; Feng, J. A self-organizing state-space-model approach for parameter estimation in Hodgkin-Huxley-type models of single neurons. PLoS Comput. Biol. 2012, 8, e1002401.

17. Jensen, A.; Ditlevsen, S.; Kessler, M.; Papaspiliopoulos, O. Markov chain Monte Carlo approach to parameter estimation in the FitzHugh–Nagumo model. Phys. Rev. E 2012, 86, 041114.

18. Arnold, A.; Lloyd, A.L. An approach to periodic, time-varying parameter estimation using nonlinear filtering. Inverse Probl. 2018, 34, 105005.

19. Concha, A.; Garrido, R. Parameter estimation of the FitzHugh–Nagumo neuron model using integrals over finite time periods. J. Comput. Nonlinear Dyn. 2015, 10, 021023.

20. Che, Y.; Geng, L.; Han, C.; Cui, S.; Wang, J. Parameter estimation of the FitzHugh–Nagumo model using noisy measurements for membrane potential. Chaos 2012, 22, 023139.

21. Ding, F. Hierarchical multi-innovation stochastic gradient algorithm for Hammerstein nonlinear system modeling. Appl. Math. Model. 2013, 37, 1694–1704.

22. Li, J.; Hua, C.; Tang, Y.; Guan, X. Stochastic gradient with changing forgetting factor-based parameter identification for Wiener systems. Appl. Math. Lett. 2014, 33, 40–45.

23. Chen, J.; Lv, L.; Ding, R. Multi-innovation stochastic gradient algorithms for dual-rate sampled systems with preload nonlinearity. Appl. Math. Lett. 2013, 26, 124–129.

24. Ding, F.; Chen, T. Performance analysis of multi-innovation gradient type identification methods. Automatica 2007, 43, 1–14.

25. Keener, J.; Sneyd, J. Mathematical Physiology; Springer: New York, NY, USA, 2009; pp. 1–47.

26. Danzl, P.; Hespanha, J.; Moehlis, J. Event-based minimum-time control of oscillatory neuron models: phase randomization, maximal spike rate increase, and desynchronization. Biol. Cybern. 2009, 101, 387–399.

27. Ding, F.; Wang, Y.J.; Dai, J.Y.; Li, Q.S.; Chen, Q.J. A recursive least squares parameter estimation algorithm for output nonlinear autoregressive systems using the input-output data filtering. J. Franklin Inst. 2017, 354, 6938–6955.

28. Xu, L.; Ding, F.; Gu, Y.; Alsaedi, A.; Hayat, T. A multi-innovation state and parameter estimation algorithm for a state space system with d-step state-delay. Signal Process. 2017, 140, 97–103.

| k | J | |||||||

|---|---|---|---|---|---|---|---|---|

| 10 | 3.7906 | −0.7233 | 0.6077 | 2.9444 | 2.6491 | 0.3230 | 98.2022 | |

| 20 | 1.6180 | 4.6890 | −6.8895 | 2.7357 | 1.8070 | 0.5639 | 97.0998 | |

| 50 | 94.7731 | 102.8236 | 8.3295 | 47.5574 | 0.9996 | 0.6066 | 5.9548 | |

| 100 | 99.1037 | 108.8017 | 9.7432 | 49.5408 | 1.0207 | 0.5576 | 1.0100 | |

| 150 | 99.2001 | 108.8906 | 9.7232 | 49.5928 | 1.0401 | 0.5390 | 0.9256 | |

| 200 | 99.5227 | 109.3771 | 9.8946 | 49.7620 | 1.0404 | 0.5346 | 0.5272 | |

| 10 | 19.0174 | −2.3066 | 7.1256 | 10.3718 | 4.2456 | 0.4154 | 91.6760 | |

| 20 | 11.4943 | 28.0891 | −11.5647 | 8.2026 | 2.0516 | 0.9504 | 82.3618 | |

| 50 | 93.5511 | 100.3815 | 7.4373 | 47.0843 | 1.2176 | 0.9968 | 7.7788 | |

| 100 | 98.8246 | 108.3058 | 9.5814 | 49.3799 | 1.0948 | 0.6696 | 1.4012 | |

| 150 | 98.9660 | 108.4155 | 9.5199 | 49.4549 | 1.1355 | 0.6290 | 1.2950 | |

| 200 | 99.7218 | 109.5163 | 9.8858 | 49.8592 | 1.1210 | 0.5971 | 0.3861 | |

| True values | 100.0000 | 110.0000 | 10.0000 | 50.0000 | 1.0000 | 0.5000 | ||

| k | J | |||||||

|---|---|---|---|---|---|---|---|---|

| 10 | 7.9850 | −1.6092 | 2.5997 | 4.8664 | 2.4115 | 0.3541 | 96.5310 | |

| 20 | 3.4710 | 8.9666 | −7.0001 | 3.6340 | 1.7240 | 0.5638 | 94.2987 | |

| 50 | 98.1459 | 107.4963 | 9.4579 | 49.1372 | 0.9894 | 0.6051 | 2.0867 | |

| 100 | 99.6327 | 109.4124 | 9.8139 | 49.8019 | 1.0195 | 0.5559 | 0.4751 | |

| 150 | 99.5767 | 109.3555 | 9.7988 | 49.7747 | 1.0364 | 0.5407 | 0.5280 | |

| 200 | 99.7563 | 109.6468 | 9.9260 | 49.8776 | 1.0393 | 0.5327 | 0.2896 | |

| 10 | 33.4907 | −4.5706 | 13.6947 | 17.0438 | 3.7755 | 0.4402 | 86.9092 | |

| 20 | 24.8108 | 41.9383 | −8.4833 | 14.4792 | 1.9920 | 0.9177 | 69.3805 | |

| 50 | 96.1344 | 104.1168 | 8.4045 | 48.2817 | 1.1906 | 0.9819 | 4.7325 | |

| 100 | 99.3021 | 108.8667 | 9.6535 | 49.6153 | 1.0919 | 0.6652 | 0.9166 | |

| 150 | 99.3244 | 108.8691 | 9.6029 | 49.6278 | 1.1276 | 0.6338 | 0.9145 | |

| 200 | 99.9346 | 109.7613 | 9.9147 | 49.9651 | 1.1189 | 0.5926 | 0.1935 | |

| True values | 100.0000 | 110.0000 | 10.0000 | 50.0000 | 1.0000 | 0.5000 | ||

| k | J | |||||||

|---|---|---|---|---|---|---|---|---|

| 500 | 13.3084 | −7.8497 | 13.6492 | 11.1506 | 1.0467 | 0.4382 | 96.3408 | |

| 1000 | 21.9925 | −4.8275 | 9.1816 | 21.6546 | 1.0218 | 0.5516 | 90.1498 | |

| 5000 | 61.4462 | 38.2353 | 2.5148 | 31.3570 | 1.0511 | 0.5234 | 53.3865 | |

| 10,000 | 79.7290 | 72.4560 | 5.9073 | 40.7280 | 1.0606 | 0.5298 | 27.9030 | |

| 15,000 | 88.3858 | 90.8851 | 7.0928 | 46.6020 | 1.0202 | 0.5696 | 14.5130 | |

| 20,000 | 94.5236 | 99.8808 | 8.9198 | 47.4423 | 0.9398 | 0.3133 | 7.5321 | |

| 500 | 15.7472 | −7.8692 | 14.3078 | 8.5323 | 1.0953 | 0.3400 | 95.9265 | |

| 1000 | 22.1267 | −4.0507 | 8.4011 | 23.2820 | 1.0782 | 0.6592 | 89.5044 | |

| 5000 | 61.7189 | 39.9100 | 1.8494 | 34.0418 | 1.1217 | 0.5866 | 52.0776 | |

| 10,000 | 81.1552 | 73.8568 | 5.7945 | 40.9238 | 0.8981 | 0.5475 | 26.7046 | |

| 15,000 | 90.2873 | 91.2729 | 7.7195 | 45.1400 | 1.0227 | 0.6214 | 13.8507 | |

| 20,000 | 95.0617 | 100.5981 | 8.9675 | 47.9177 | 0.8727 | 0.0131 | 6.9244 | |

| True values | 100.0000 | 110.0000 | 10.0000 | 50.0000 | 1.0000 | 0.5000 | ||

| k | J | |||||||

|---|---|---|---|---|---|---|---|---|

| 500 | 19.1634 | −11.8220 | 23.2437 | 16.5106 | 1.1374 | 0.4206 | 95.8047 | |

| 1000 | 35.0060 | −8.8759 | 18.5691 | 37.0688 | 1.0426 | 0.5921 | 86.7670 | |

| 5000 | 85.1399 | 57.0311 | −0.6404 | 42.6241 | 1.0992 | 0.5409 | 35.9599 | |

| 10,000 | 94.1694 | 90.4475 | 6.3213 | 47.8311 | 1.0994 | 0.5577 | 13.2635 | |

| 15,000 | 97.3389 | 103.1461 | 8.3135 | 49.9311 | 1.0338 | 0.6045 | 4.8003 | |

| 20,000 | 99.1619 | 107.5047 | 9.5947 | 49.7100 | 0.9374 | 0.2108 | 1.7150 | |

| 500 | 23.1916 | −11.9815 | 24.6474 | 12.4417 | 1.2785 | 0.2591 | 95.2371 | |

| 1000 | 35.3666 | −7.5615 | 17.1582 | 39.9121 | 1.1419 | 0.7780 | 85.7224 | |

| 5000 | 85.5678 | 59.2415 | −0.6955 | 44.1461 | 1.2350 | 0.6827 | 34.4612 | |

| 10,000 | 95.2375 | 91.8121 | 6.4314 | 47.7921 | 0.9014 | 0.6650 | 12.2575 | |

| 15,000 | 98.4425 | 103.4602 | 8.5951 | 49.2526 | 1.0869 | 0.7208 | 4.3983 | |

| 20,000 | 99.6694 | 108.1012 | 9.7009 | 50.1496 | 0.9396 | −0.2527 | 1.3341 | |

| True values | 100.0000 | 110.0000 | 10.0000 | 50.0000 | 1.0000 | 0.5000 | ||
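The column headers of the tables above are incomplete. In the rows checked below, however, the last numeric column matches the relative estimation error ||theta_hat - theta|| / ||theta|| x 100%, computed from the six estimates in that row and the "True values" row. The following sketch reproduces that check for two rows. The function name is hypothetical, and reading the column as a relative error is an inference from the numbers, not a statement taken from the text.

```python
import numpy as np

def relative_error_percent(theta_hat, theta_true):
    """Relative estimation error ||theta_hat - theta_true|| / ||theta_true|| in percent."""
    theta_hat = np.asarray(theta_hat, dtype=float)
    theta_true = np.asarray(theta_true, dtype=float)
    return 100.0 * np.linalg.norm(theta_hat - theta_true) / np.linalg.norm(theta_true)

theta_true = [100.0, 110.0, 10.0, 50.0, 1.0, 0.5]     # "True values" row

# First k = 200 row of the first table; the table lists 0.5272 in its last column.
first_table = relative_error_percent(
    [99.5227, 109.3771, 9.8946, 49.7620, 1.0404, 0.5346], theta_true)

# Last k = 20,000 row of the fourth table; the table lists 1.3341 in its last column.
fourth_table = relative_error_percent(
    [99.6694, 108.1012, 9.7009, 50.1496, 0.9396, -0.2527], theta_true)
```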

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lou, X.; Cai, X.; Cui, B. Parameter Estimation of a Class of Neural Systems with Limit Cycles. Algorithms 2018, 11, 169. https://doi.org/10.3390/a11110169