Transfer Learning-Based Multi-Sensor Approach for Predicting Keyhole Depth in Laser Welding of 780DP Steel

Abstract

1. Introduction

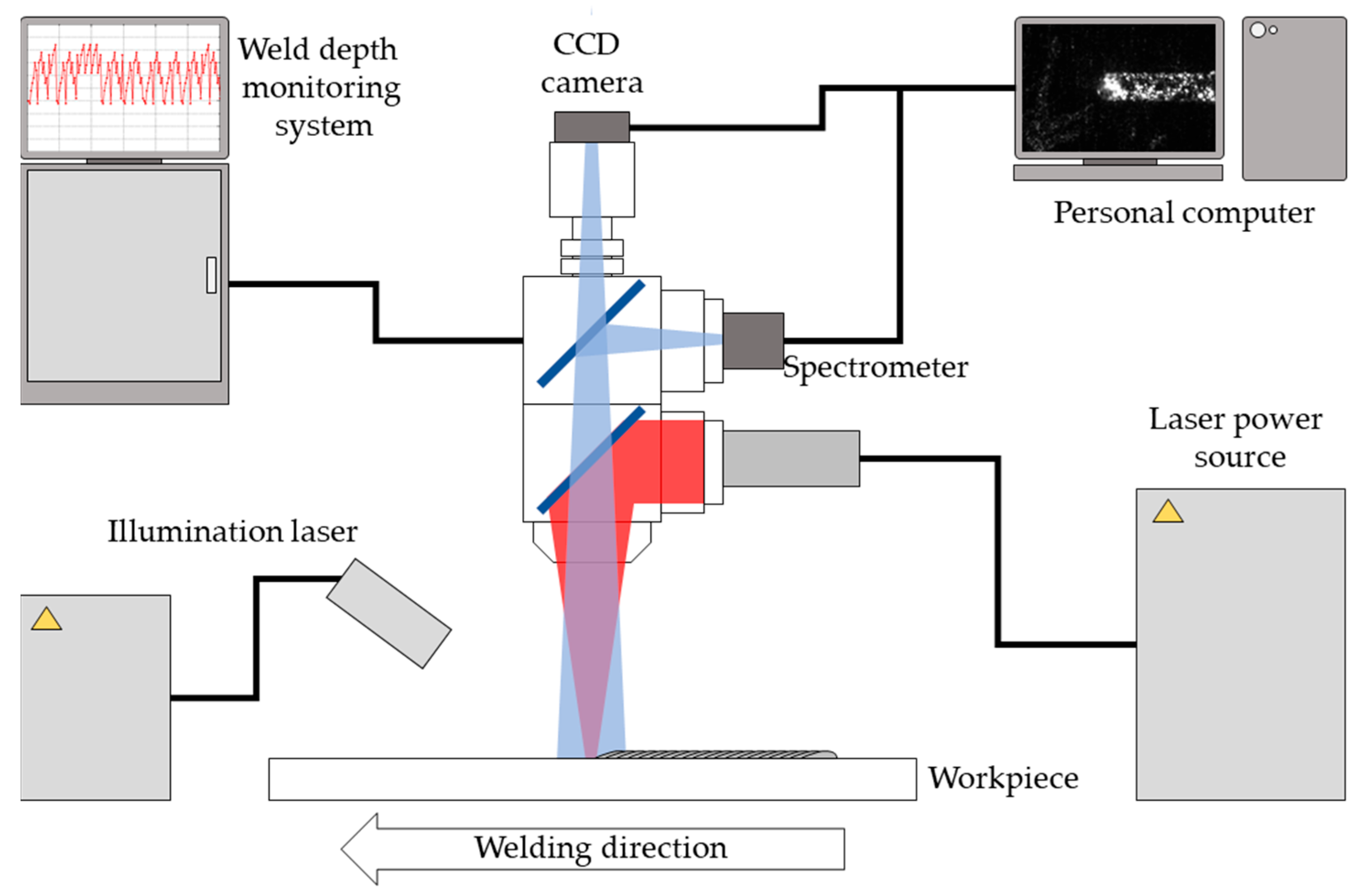

2. Experiments

3. Data Preprocessing and Models

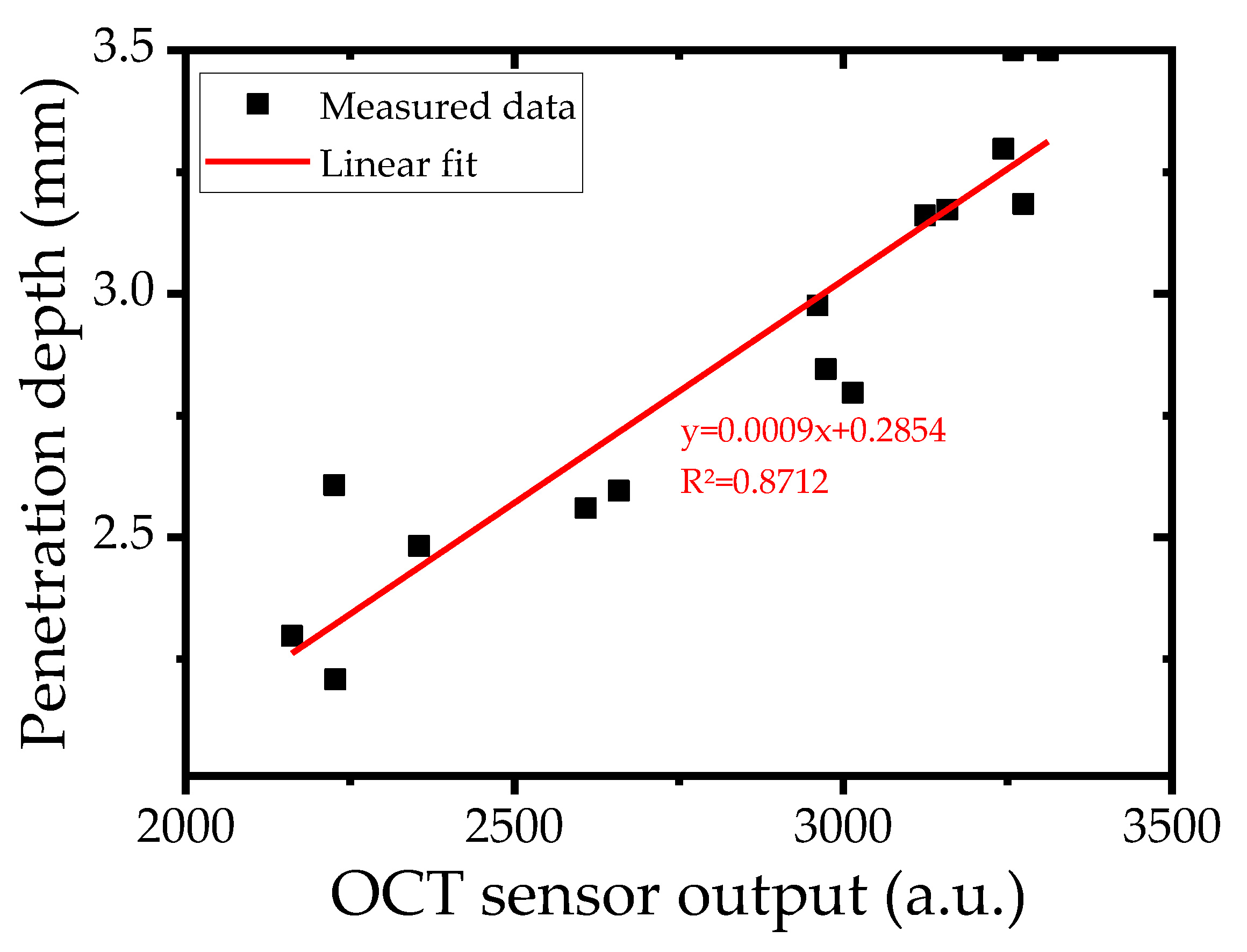

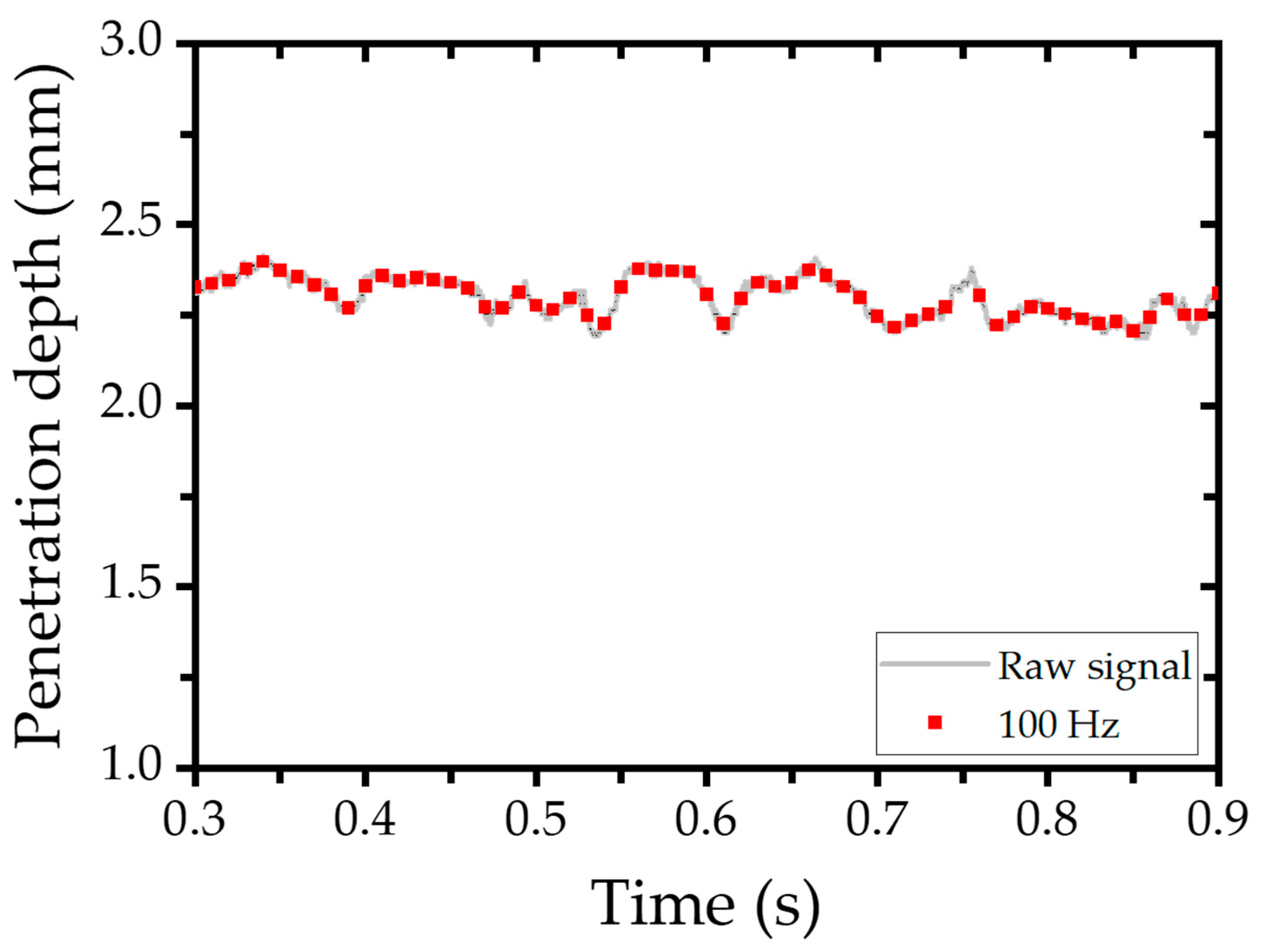

3.1. Penetration Depth Calibration

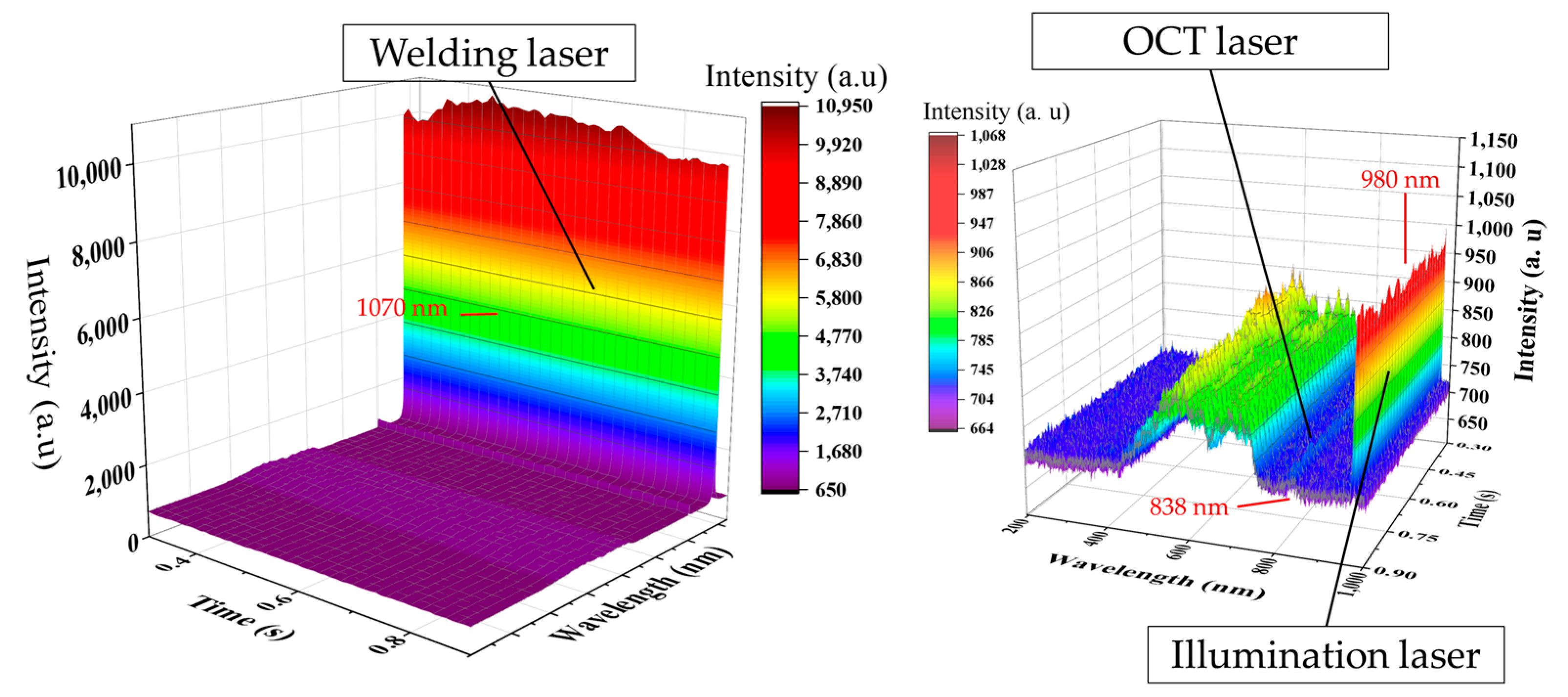

3.2. Data Preprocessing

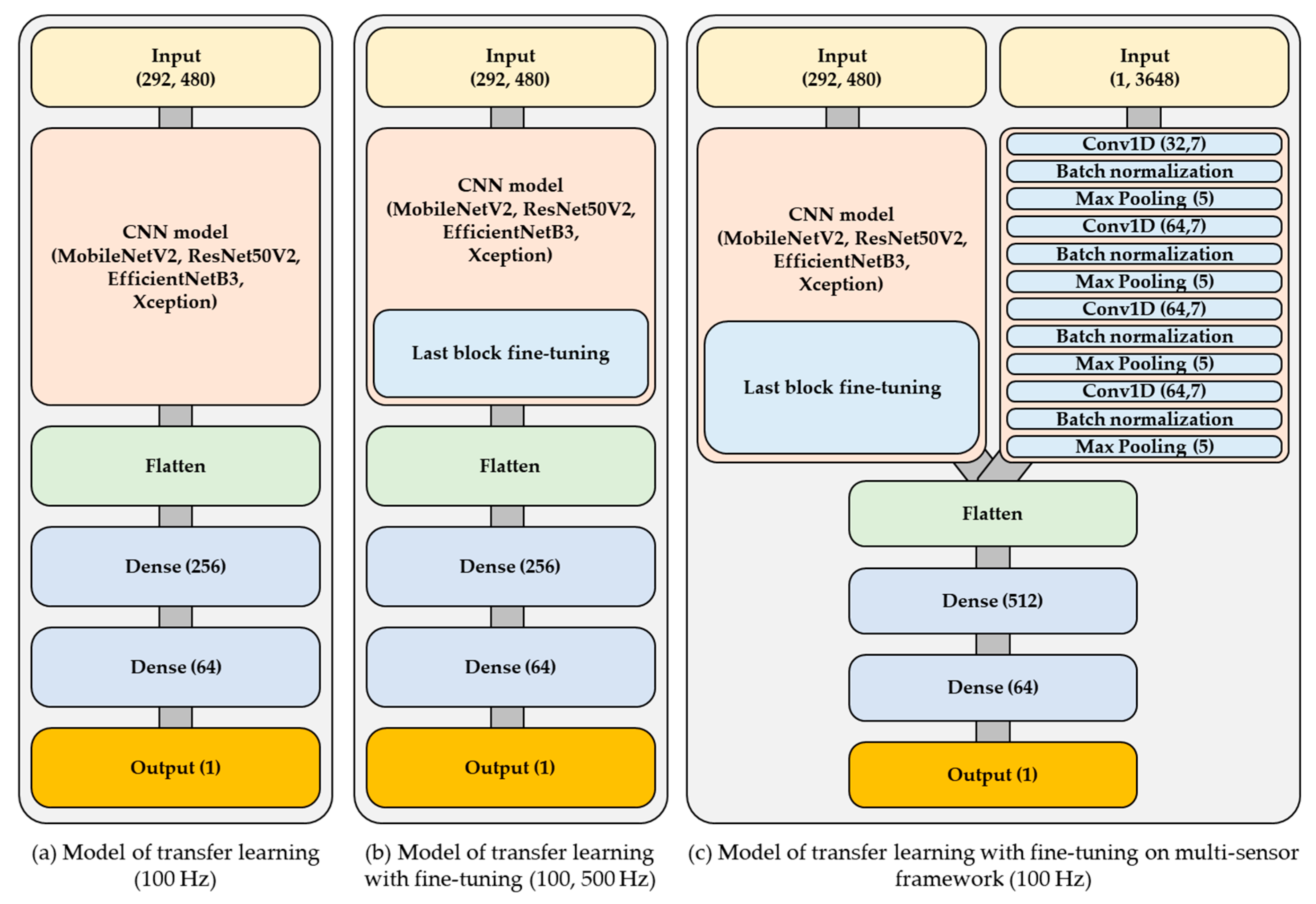

3.3. Deep Learning Models

3.4. Dataset and Optimization Method

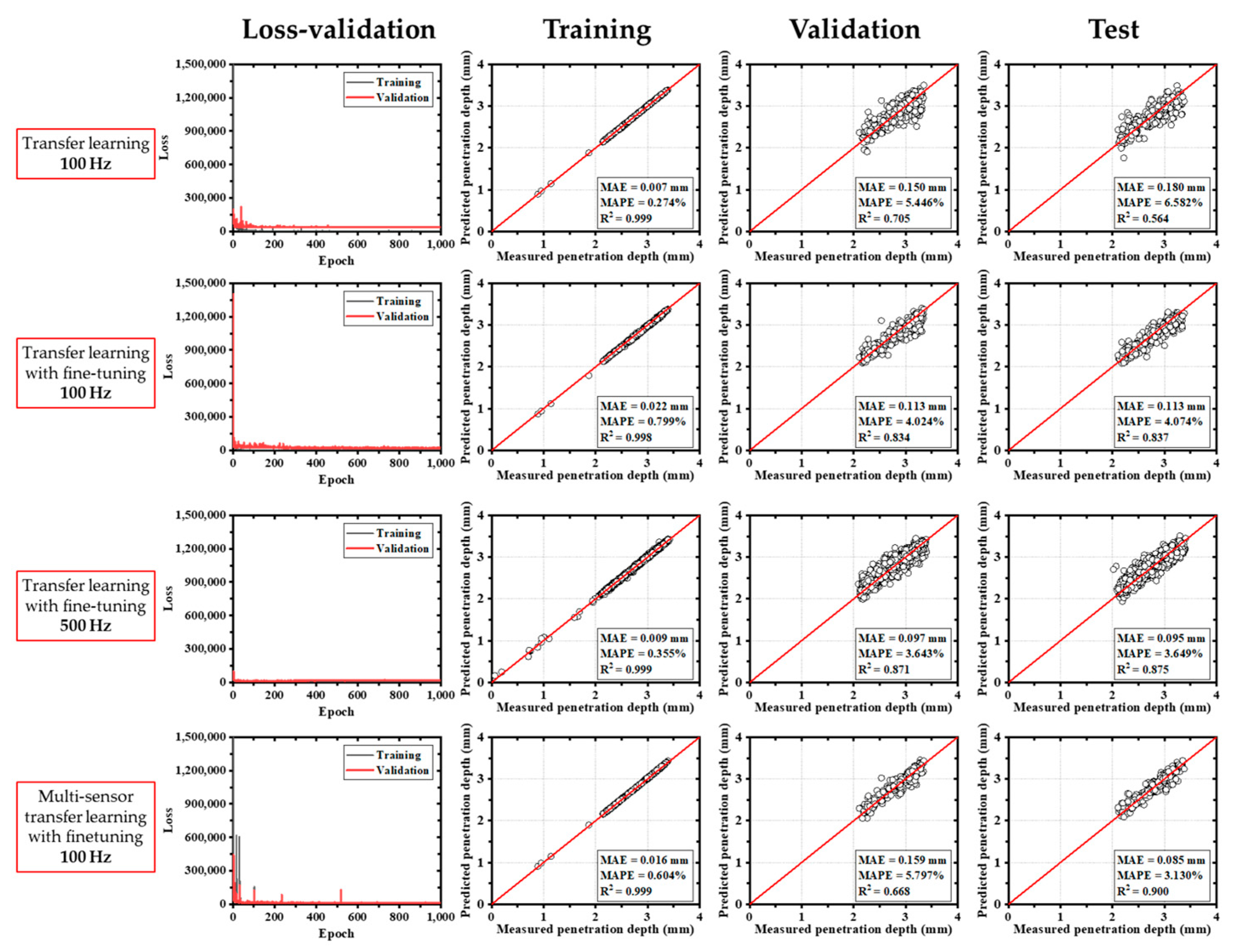

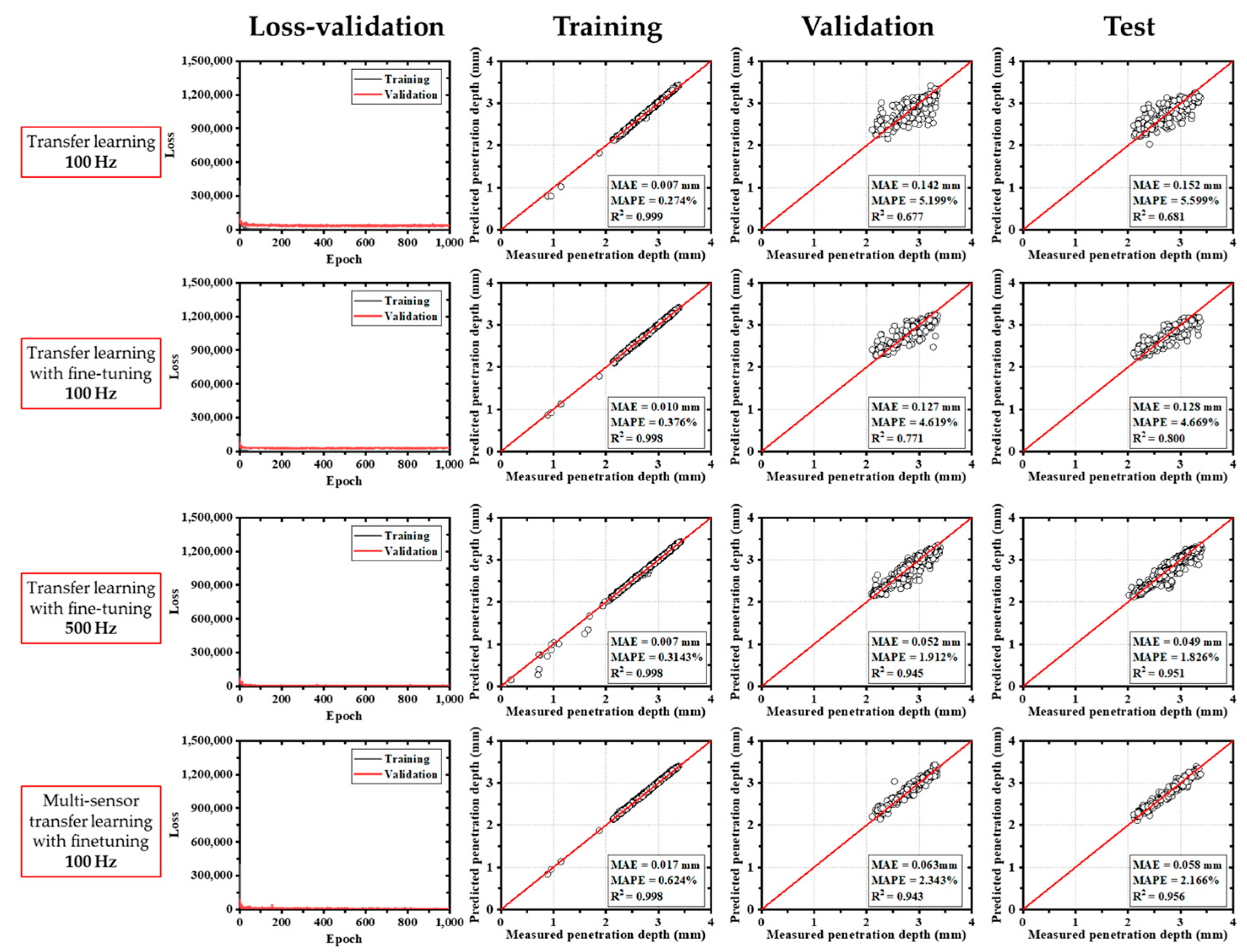

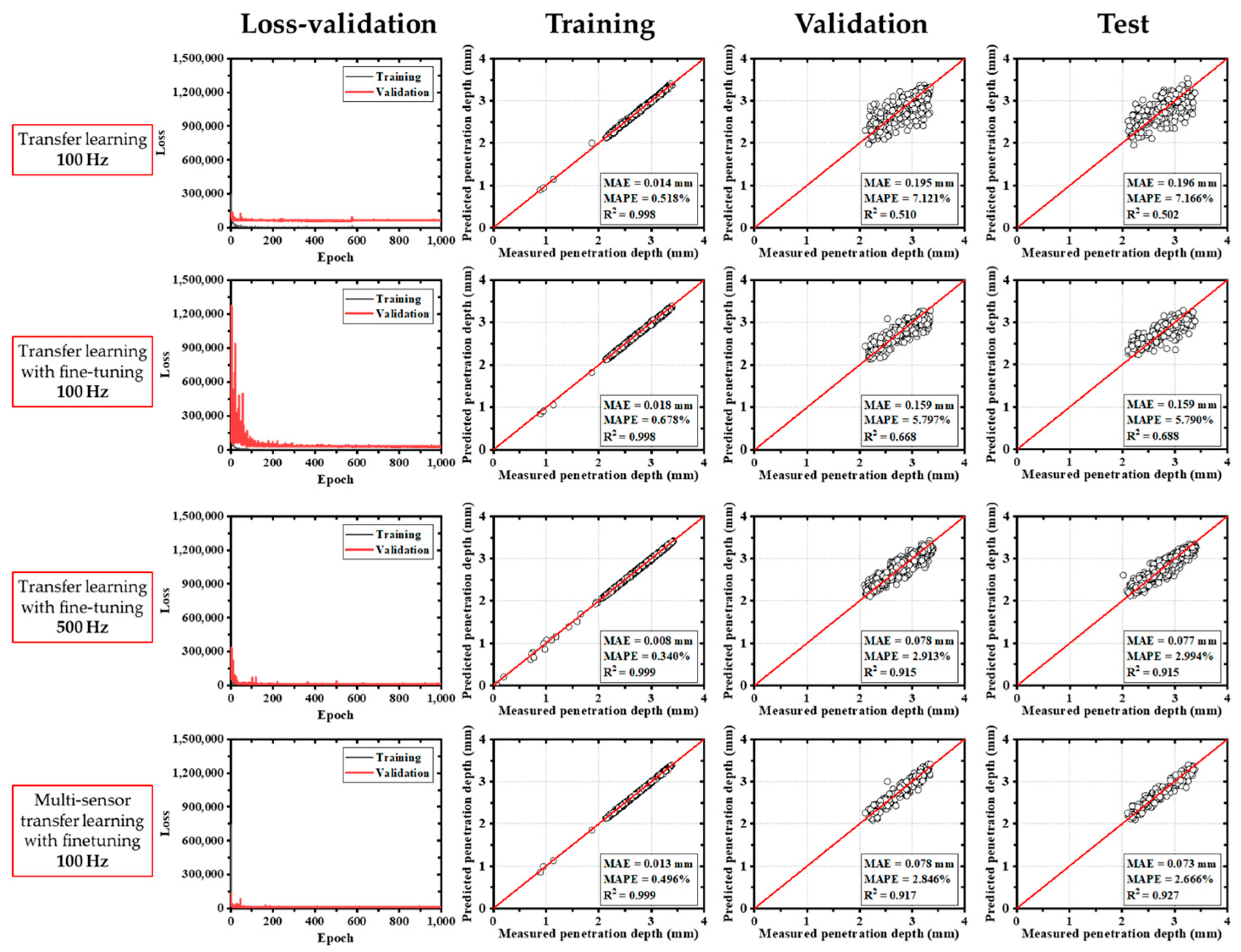

4. Results

5. Discussion

6. Conclusions

- (1) A transfer learning-based deep learning model was successfully implemented for predicting weld penetration depth. The best-performing configuration achieved a mean absolute error (MAE) of 0.049 mm and a coefficient of determination (R²) of 0.951. This MAE corresponds to approximately 1.5% of the material thickness, indicating high prediction accuracy.

- (2) CCD images and spectrometer signals were effective input features. The bandpass filter and illumination laser improved the quality and reliability of the captured images. In addition, the high-frequency OCT sensor provided robust reference measurements, minimizing the effect of keyhole instability and contributing to the model’s strong performance.

- (3) Although the experiments were limited to bead-on-plate (BOP) tests on 780DP steel, the methodology shows strong potential for generalization to other steels and welding conditions.
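The two metrics quoted in conclusion (1) can be illustrated with a minimal sketch. It assumes the standard definitions of MAE and R²; the function names and the depth values below are hypothetical and are not data or code from this study.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between measured and predicted depths."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical depths in mm, for illustration only (not measurements from the paper).
measured = [1.00, 1.50, 2.00, 2.50]
predicted = [1.05, 1.45, 2.10, 2.40]

mae = mean_absolute_error(measured, predicted)
r2 = r2_score(measured, predicted)
print(f"MAE = {mae:.3f} mm, R2 = {r2:.3f}")
```

Dividing the MAE by the sheet thickness gives the relative error of the kind quoted in conclusion (1); the thickness is not restated here, so that step is left out of the sketch.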

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | C | Mn | Si | P | S |
|---|---|---|---|---|---|
| Steel (780DP) | 0.12 | 2.6 | 0.6 | 0.3 | 0.003 |

| | Tensile Strength (MPa) | Elongation (%) |
|---|---|---|
| Steel (780DP) | Min. 780 | 14 |

| Parameter | Value |
|---|---|
| Laser power (W) | 1429–2750 |
| Welding speed (m/min) | 3, 4, 5, 6, 7 |
| Laser beam diameter (mm) | 0.27 |
| Focal length (mm) | 200 |

| Configuration | Metric | M | R | E | X |
|---|---|---|---|---|---|
| Transfer learning; uni-sensor 100 Hz | MAE (mm) | 0.007 | 0.007 | 0.007 | 0.014 |
| Transfer learning; uni-sensor 100 Hz | R² | 0.999 | 0.999 | 0.999 | 0.998 |
| Transfer learning with fine-tuning; uni-sensor 100 Hz | MAE (mm) | 0.009 | 0.022 | 0.010 | 0.018 |
| Transfer learning with fine-tuning; uni-sensor 100 Hz | R² | 0.999 | 0.998 | 0.998 | 0.998 |
| Transfer learning with fine-tuning; uni-sensor 500 Hz | MAE (mm) | 0.009 | 0.009 | 0.007 | 0.008 |
| Transfer learning with fine-tuning; uni-sensor 500 Hz | R² | 0.999 | 0.999 | 0.998 | 0.999 |
| Transfer learning with fine-tuning; multi-sensor 100 Hz | MAE (mm) | 0.010 | 0.016 | 0.017 | 0.013 |
| Transfer learning with fine-tuning; multi-sensor 100 Hz | R² | 0.999 | 0.999 | 0.998 | 0.999 |

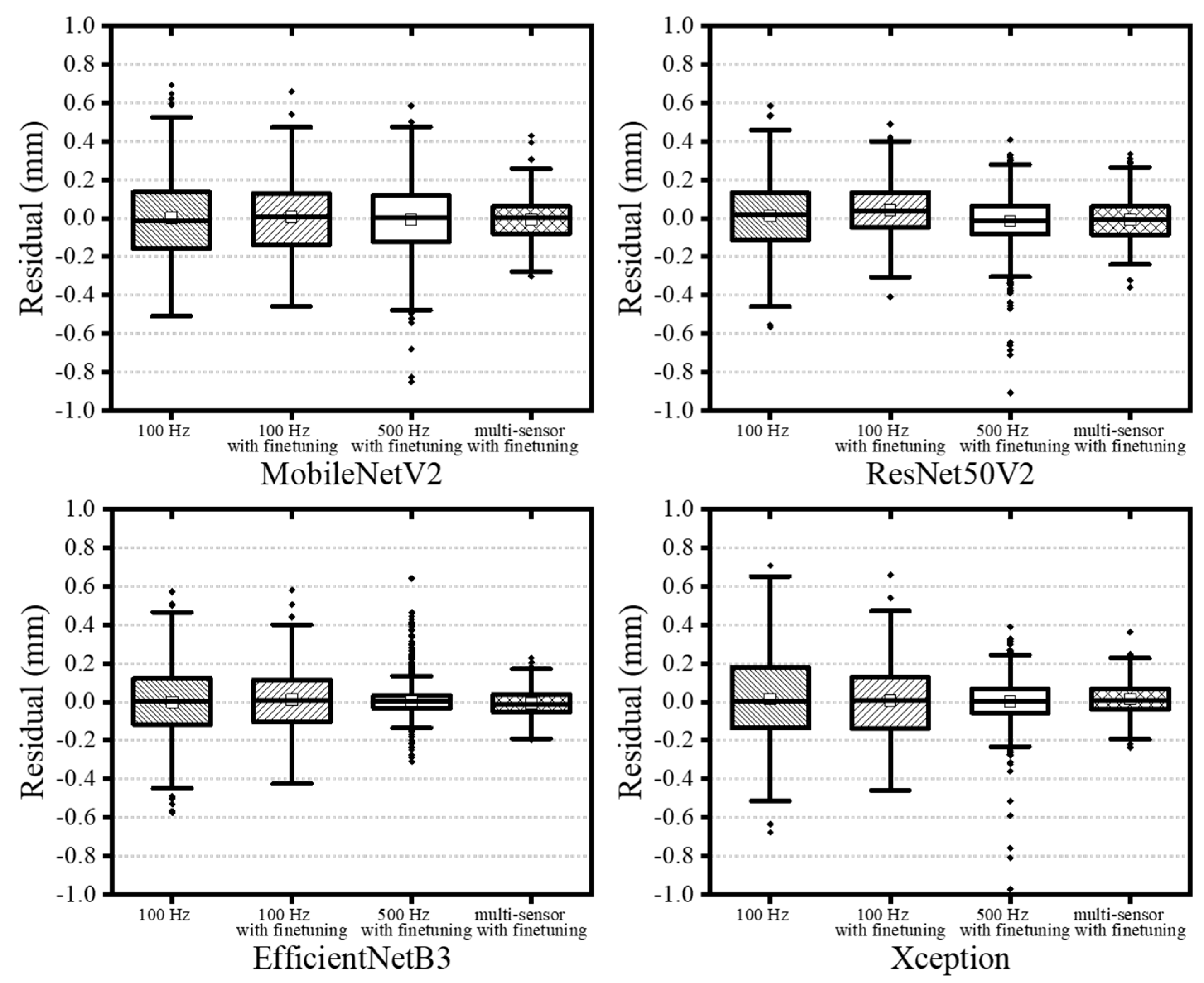

| Model | Transfer learning; uni-sensor 100 Hz | Transfer learning with fine-tuning; uni-sensor 100 Hz | ||||||

| M | R | E | X | M | R | E | X | |

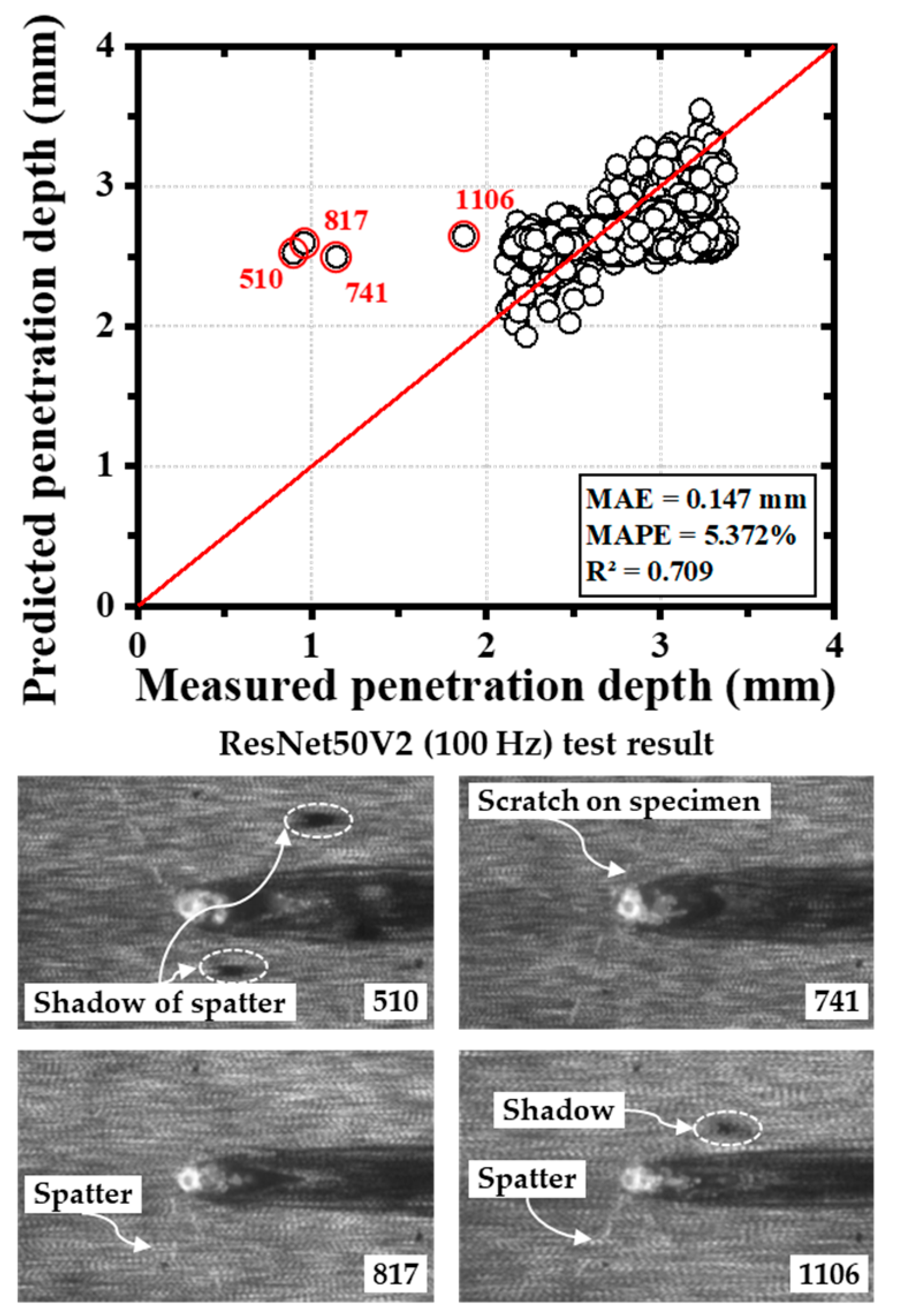

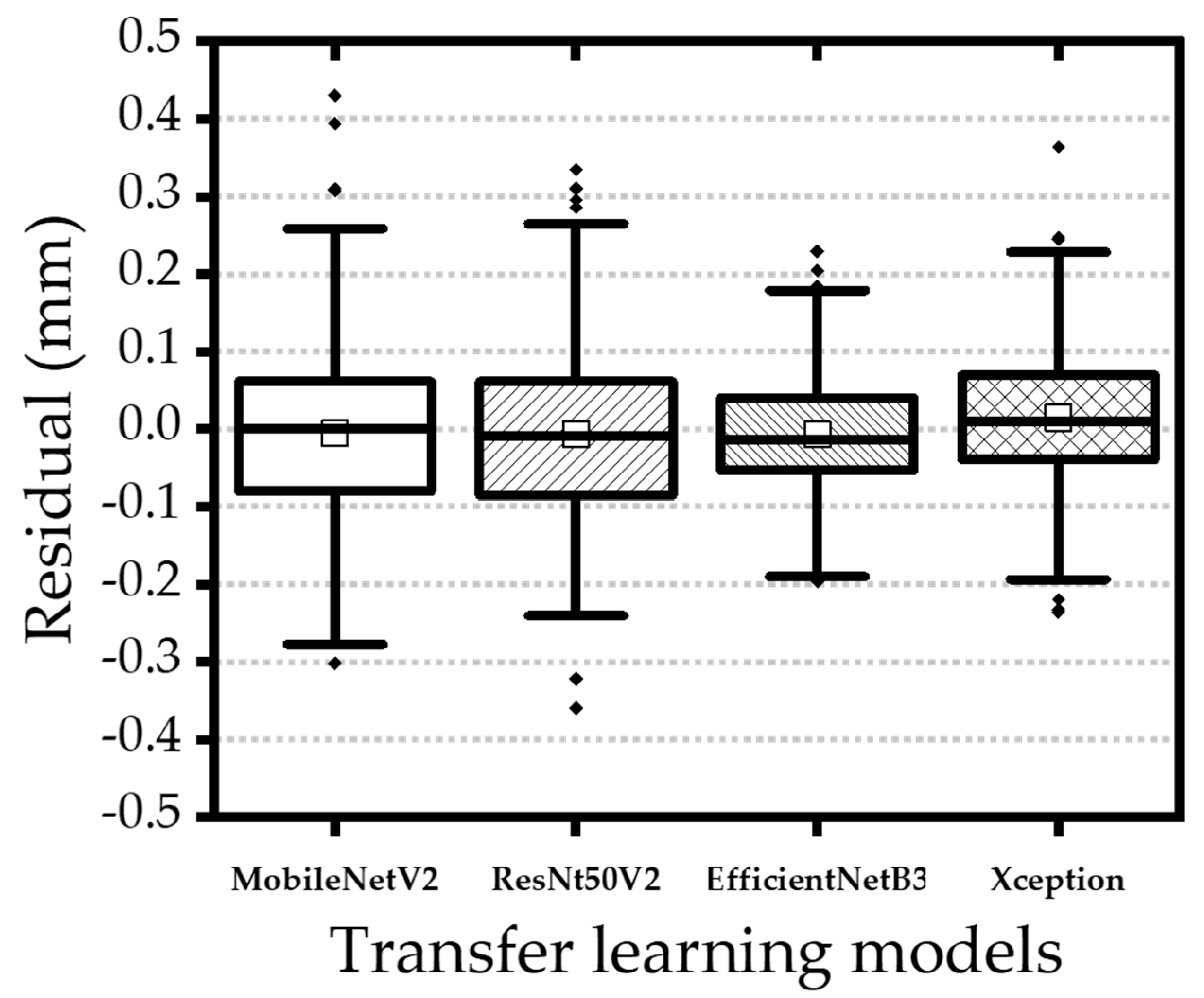

| MAE (mm) | 0.175 | 0.150 | 0.142 | 0.195 | 0.159 | 0.113 | 0.127 | 0.159 |

| R2 | 0.614 | 0.705 | 0.677 | 0.510 | 0.668 | 0.834 | 0.771 | 0.668 |

| Model | Transfer learning with fine-tuning; uni-sensor 500 Hz | Transfer learning with fine-tuning; multi-sensor 100 Hz | ||||||

| M | R | E | X | M | R | E | X | |

| MAE (mm) | 0.140 | 0.097 | 0.052 | 0.078 | 0.085 | 0.159 | 0.063 | 0.078 |

| R2 | 0.739 | 0.871 | 0.945 | 0.915 | 0.902 | 0.668 | 0.943 | 0.917 |

| Configuration | Metric | M | R | E | X |
|---|---|---|---|---|---|
| Transfer learning; uni-sensor 100 Hz | MAE (mm) | 0.180 | 0.180 | 0.152 | 0.196 |
| Transfer learning; uni-sensor 100 Hz | R² | 0.564 | 0.564 | 0.681 | 0.502 |
| Transfer learning with fine-tuning; uni-sensor 100 Hz | MAE (mm) | 0.159 | 0.113 | 0.128 | 0.159 |
| Transfer learning with fine-tuning; uni-sensor 100 Hz | R² | 0.688 | 0.837 | 0.800 | 0.688 |
| Transfer learning with fine-tuning; uni-sensor 500 Hz | MAE (mm) | 0.165 | 0.095 | 0.049 | 0.077 |
| Transfer learning with fine-tuning; uni-sensor 500 Hz | R² | 0.617 | 0.875 | 0.951 | 0.915 |
| Transfer learning with fine-tuning; multi-sensor 100 Hz | MAE (mm) | 0.086 | 0.085 | 0.058 | 0.073 |
| Transfer learning with fine-tuning; multi-sensor 100 Hz | R² | 0.901 | 0.900 | 0.956 | 0.927 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, B.-J.; Kim, Y.-M.; Kim, C. Transfer Learning-Based Multi-Sensor Approach for Predicting Keyhole Depth in Laser Welding of 780DP Steel. Materials 2025, 18, 3961. https://doi.org/10.3390/ma18173961