Strip Surface Defect Detection Algorithm Based on YOLOv5

Abstract

1. Introduction

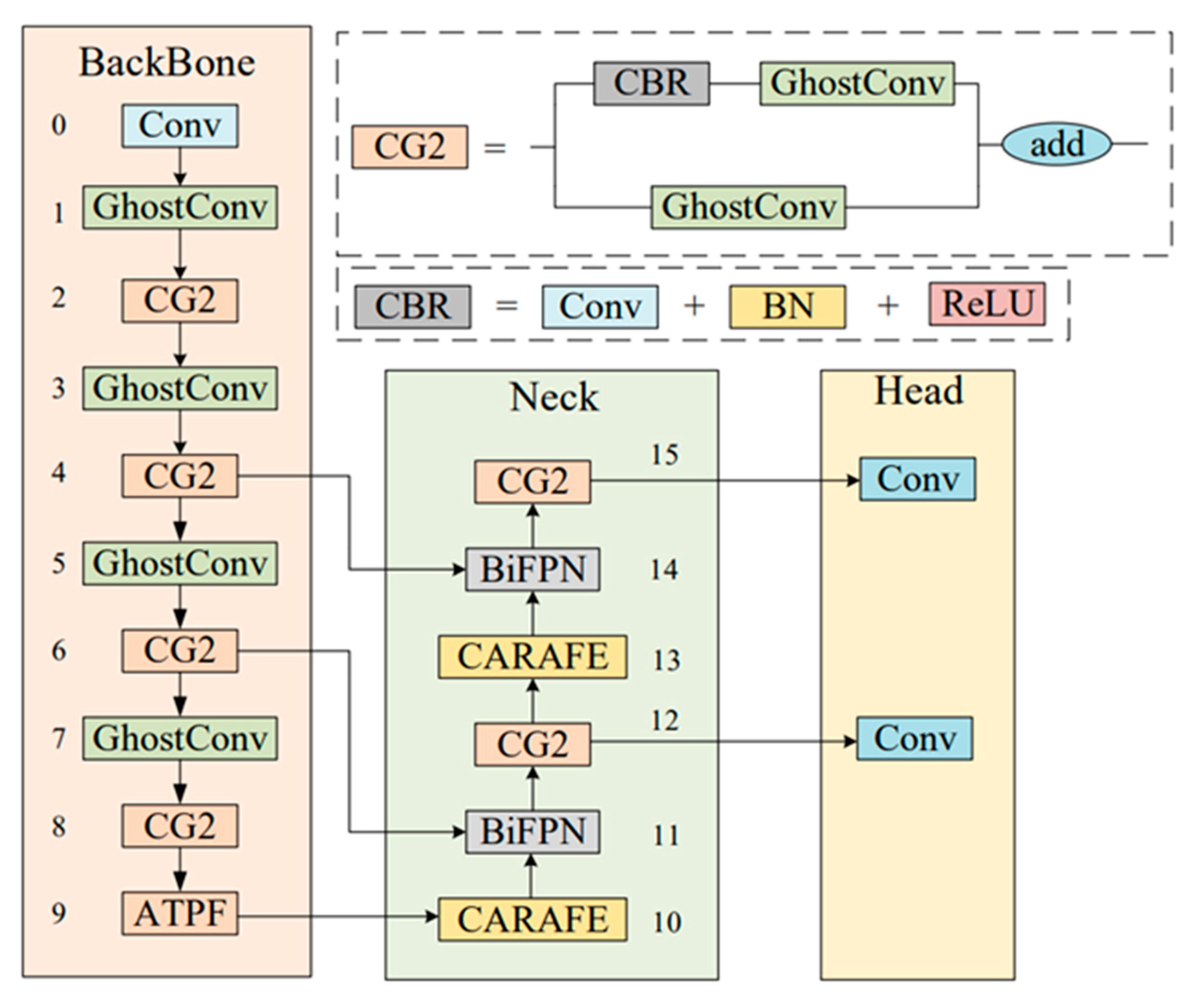

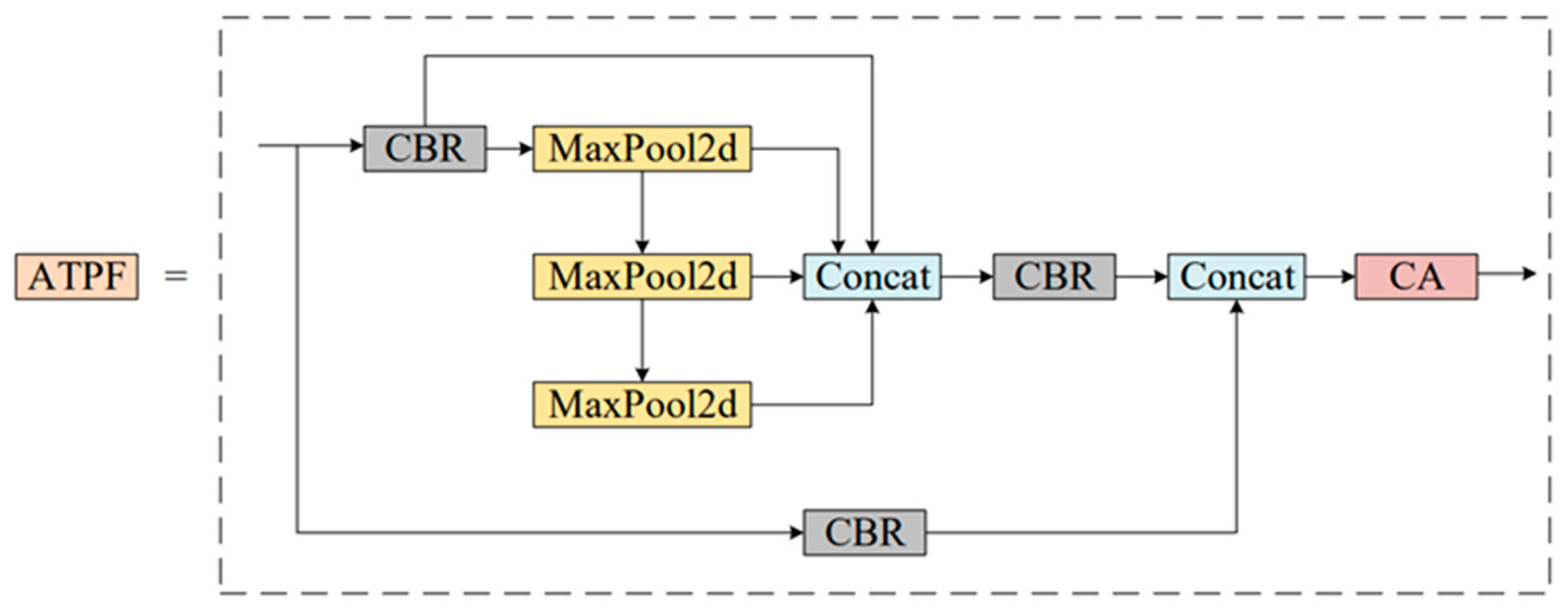

- This paper proposes an ATPF (Attention Pyramid-Fast) module for thorough feature extraction. The module fuses features of different scales and attends to location information over a large range at low computational cost, so that more useful feature information is extracted.

- Based on the ATPF module, a fast and accurate model framework for strip surface defect detection, CG-Net, is designed to realize automatic, rapid, and high-precision detection of strip surface defects.

- On the NEU-DET dataset, the mean average precision at an IoU threshold of 0.5 (mAP50) reaches 75.9%, mAP50:95 reaches 39.9%, and the detection speed reaches 105 frames per second (FPS).

- On the NEU-CLS dataset, mAP50 reaches 59.6%, mAP50:95 reaches 32.6%, and the detection speed reaches 110 FPS, which is higher than that of some advanced networks such as YOLOv5s and YOLOv3-tiny.

2. Related Work

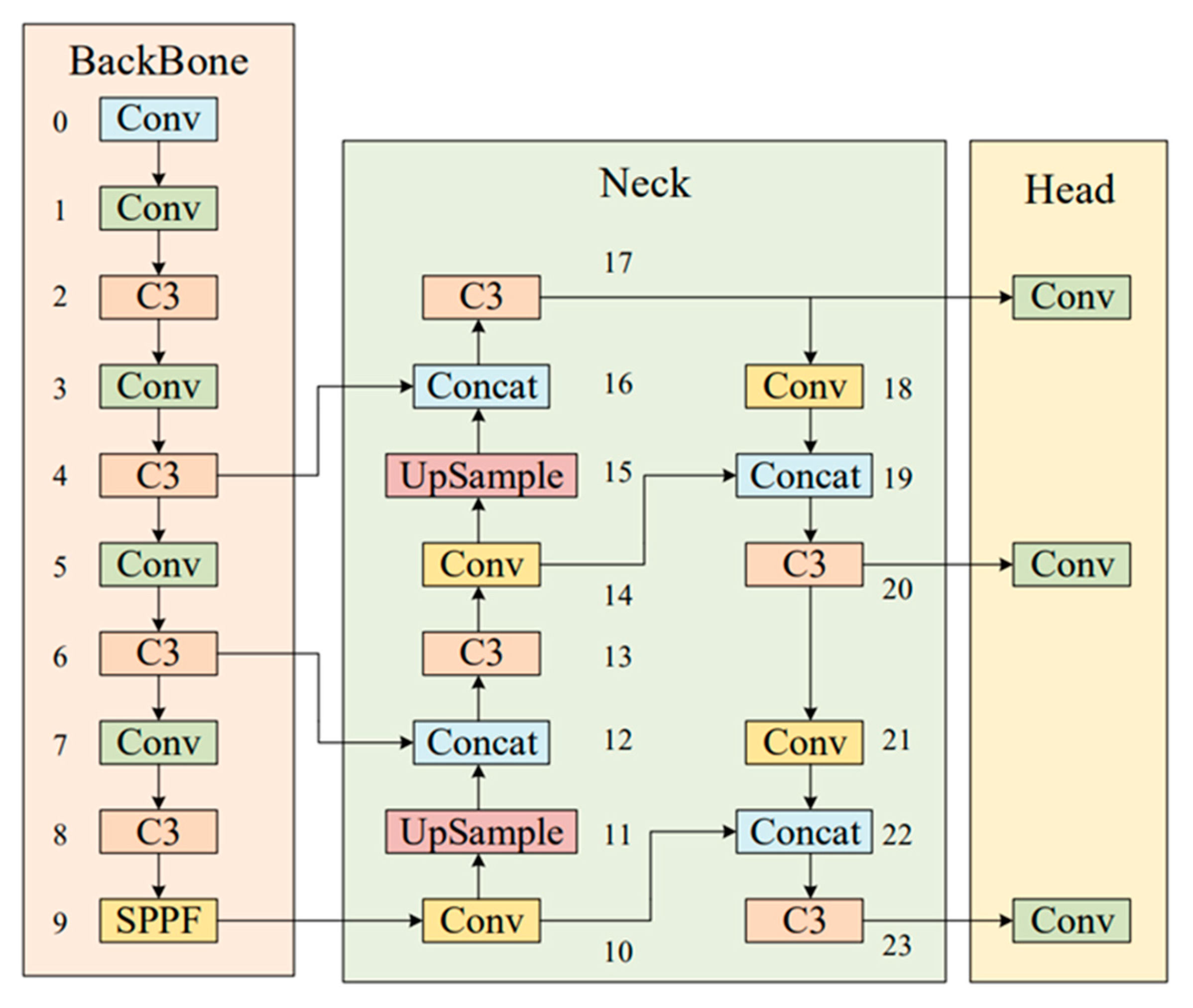

2.1. YOLOv5

- Input: The input stage preprocesses the training data, including mosaic (image-stitching) data augmentation [25] and adaptive image padding. To accommodate different datasets, YOLOv5 also performs adaptive anchor box calculation at the input, which automatically sets the initial anchor box sizes when the dataset changes.

- Backbone: A cross-stage partial network (CSP) [26] and spatial pyramid pooling-fast (SPPF) [27] are used to extract feature maps of different sizes from the input image through repeated convolution and pooling. The bottleneck CSP reduces the amount of computation and improves inference speed. The SPPF structure extracts features at different scales from the same feature map and can generate a three-scale feature map, which helps improve detection accuracy.

- Neck: The neck combines the conventional FPN [28] layer with the bottom-up feature pyramid (PAN) [29], integrating the extracted semantic features with the positional features. At the same time, features from the backbone layers and the detection layers are fused so that the model obtains richer feature information. Together, the two structures strengthen the fusion of features extracted from different layers of the backbone network and further improve detection capability.

- Head: The head predicts targets of different sizes on the feature maps. YOLOv5 inherits the multi-scale prediction head of YOLOv4 and predicts on three feature-map layers to improve detection performance across target sizes.
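
The adaptive image padding mentioned for the input stage is not specified further here. A minimal sketch of one common letterbox-style approach is given below: the image is resized with its aspect ratio preserved, and each side is then padded only up to the next multiple of the network stride. The function name `letterbox_geometry`, the 640-pixel target, and the stride of 32 are illustrative assumptions, not values taken from this paper.

```python
def letterbox_geometry(height, width, target=640, stride=32):
    """Return (new_h, new_w, pad_h, pad_w) for an aspect-preserving resize
    followed by minimal padding. target and stride are assumed values."""
    # Scale so that the longer side fits the target size.
    scale = min(target / height, target / width)
    new_h, new_w = round(height * scale), round(width * scale)
    # Pad only up to the next multiple of the stride rather than
    # filling the whole target square.
    pad_h = (target - new_h) % stride
    pad_w = (target - new_w) % stride
    return new_h, new_w, pad_h, pad_w


new_h, new_w, pad_h, pad_w = letterbox_geometry(333, 500)
print(new_h, new_w, pad_h, pad_w)    # 426 640 22 0
print(new_h + pad_h, new_w + pad_w)  # 448 640
```

In this example a 333 × 500 image is padded by only 22 rows instead of 214, which reduces the number of padded pixels the network has to process.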

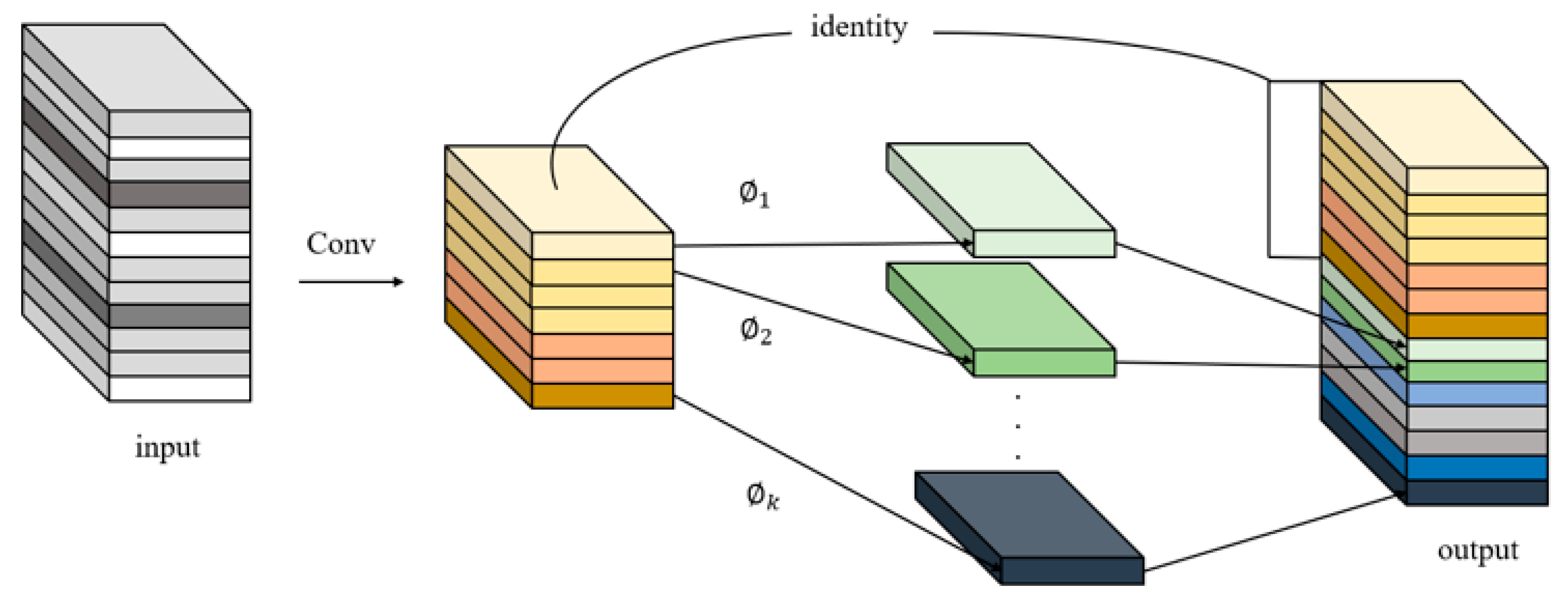
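
To illustrate how SPPF obtains several scales from one feature map, the sketch below chains three stride-1 max-pooling steps with the same kernel and keeps every intermediate result. It is a pure-Python illustration on a single-channel map; the kernel size of 5 and the helper names are assumptions, and the convolutions that surround the pooling in a real network are omitted.

```python
import random


def max_pool_same(x, k):
    """Stride-1 max pooling on a 2D list with same-size output.
    Out-of-bounds positions are ignored (equivalent to -inf padding)."""
    h, w, r = len(x), len(x[0]), k // 2
    return [[max(x[a][b]
                 for a in range(max(0, i - r), min(h, i + r + 1))
                 for b in range(max(0, j - r), min(w, j + r + 1)))
             for j in range(w)]
            for i in range(h)]


def sppf(x, k=5):
    """Chain three k x k poolings and keep every intermediate map."""
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    # In a network these four maps would be concatenated along channels.
    return [x, y1, y2, y3]


random.seed(0)
feature_map = [[random.random() for _ in range(16)] for _ in range(16)]
branches = sppf(feature_map)

# Two chained 5x5 poolings cover a 9x9 window, three cover a 13x13 window.
print(branches[2] == max_pool_same(feature_map, 9))   # True
print(branches[3] == max_pool_same(feature_map, 13))  # True
```

Reusing the previous pooling result gives the larger receptive fields without running separate large-kernel poolings, which is where the speed advantage of the "fast" variant comes from.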

2.2. Lightweight Network

3. Method

3.1. CG2 Module

3.2. ATPF Module

3.3. CARAFE

3.4. BiFPN

4. Experimental Simulation and Analysis

4.1. Dataset

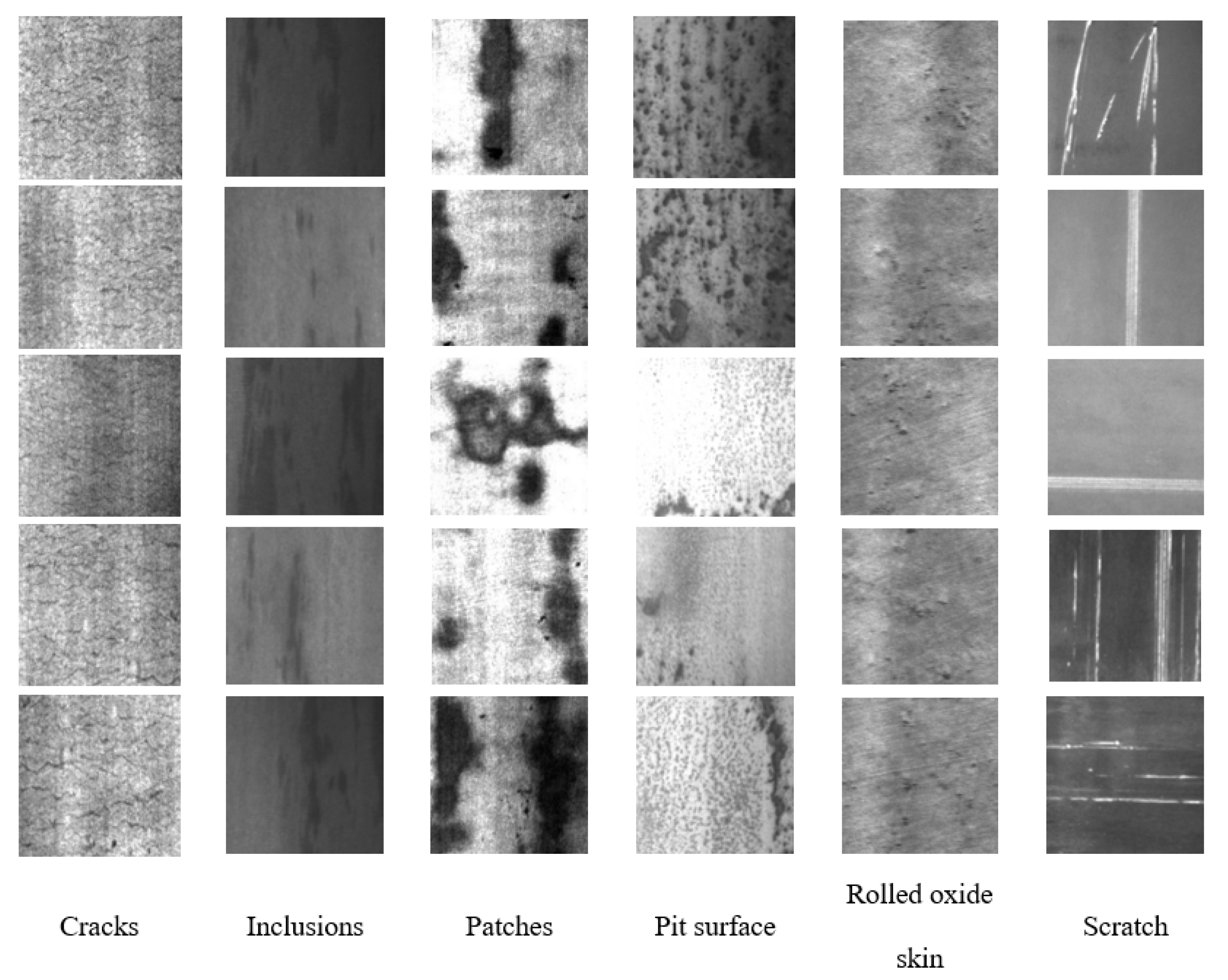

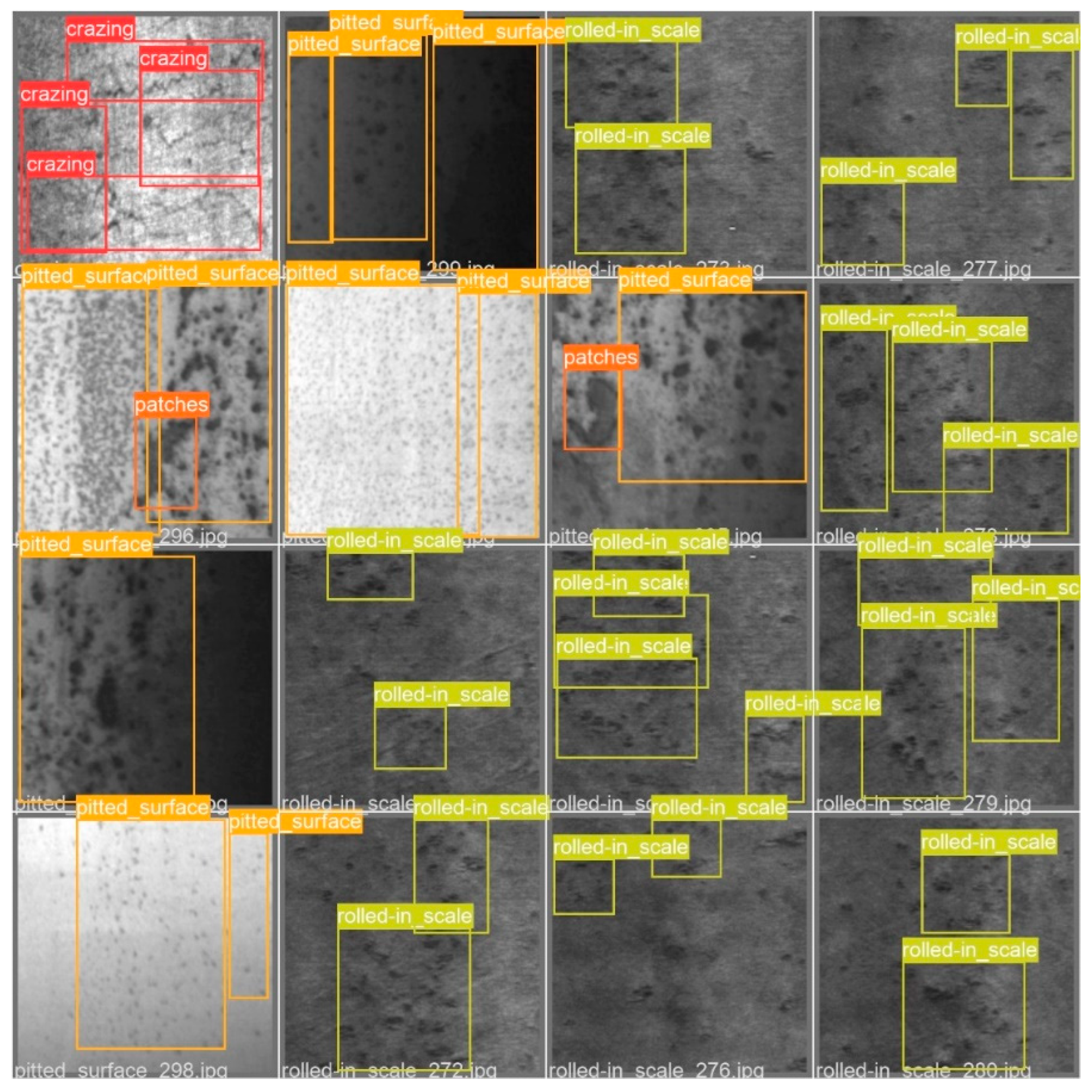

4.1.1. NEU-DET Dataset

4.1.2. NEU-CLS Dataset

4.2. Experimental Parameter Setting

4.3. Evaluation Index

- true positive (TP): a positive sample that is correctly predicted as positive;

- false positive (FP): a negative sample that is incorrectly predicted as positive;

- false negative (FN): a positive sample that is incorrectly predicted as negative.
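
From these counts, precision is TP / (TP + FP) and recall is TP / (TP + FN). The sketch below computes both, together with an average precision (AP) value obtained as the area under the precision-recall curve. The all-point interpolation used for AP is one common convention and is an assumption here, as are the function names and the toy detection list. mAP50 would then be the mean of such AP values over all classes at an IoU threshold of 0.5.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(matches, num_gt):
    """AP for one class. `matches` lists detections sorted by descending
    confidence: True for a TP, False for an FP. `num_gt` is the number
    of ground-truth objects (TP + FN)."""
    tp = fp = 0
    recalls, precisions = [], []
    for is_tp in matches:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing when read from left to right.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area of the resulting step curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


print(precision_recall(tp=3, fp=2, fn=1))                       # (0.6, 0.75)
print(average_precision([True, True, False, True, False], 4))   # 0.6875
```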

4.4. Ablation Experiment

4.5. Advanced Model Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kim, S.; Kim, W.; Noh, Y.-K.; Park, F.C. Transfer learning for automated optical inspection. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2517–2524.

2. Lv, X.; Duan, F.; Jiang, J.J.; Fu, X.; Gan, L. Deep metallic surface defect detection: The new benchmark and detection network. Sensors 2020, 20, 1562.

3. Liu, Y.; Yuan, Y.; Balta, C.; Liu, J. A light-weight deep-learning model with multi-scale features for steel surface defect classification. Materials 2020, 13, 4629.

4. Subramanyam, V.; Kumar, J.; Singh, S.N. Temporal synchronization framework of machine-vision cameras for high-speed steel surface inspection systems. J. Real-Time Image Process. 2022, 19, 445–461.

5. Kang, Z.; Yuan, C.; Yang, Q. The fabric defect detection technology based on wavelet transform and neural network convergence. In Proceedings of the 2013 IEEE International Conference on Information and Automation (ICIA), Yinchuan, China, 26–28 August 2013; pp. 597–601.

6. Anter, A.M.; Abd Elaziz, M.; Zhang, Z. Real-time epileptic seizure recognition using Bayesian genetic whale optimizer and adaptive machine learning. Future Gener. Comput. Syst. 2022, 127, 426–434.

7. Mandriota, C.; Nitti, M.; Ancona, N.; Stella, E.; Distante, A. Filter-based feature selection for rail defect detection. Mach. Vis. Appl. 2004, 15, 179–185.

8. Song, K.; Yan, Y. A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 2013, 285, 858–864.

9. Liu, J.; Cui, G.; Xiao, C. A Real-time and Efficient Surface Defect Detection Method Based on YOLOv4. J. Real-Time Image Process. 2022.

10. Tang, M.; Li, Y.; Yao, W.; Hou, L.; Sun, Q.; Chen, J. A strip steel surface defect detection method based on attention mechanism and multi-scale maxpooling. Meas. Sci. Technol. 2021, 32, 115401.

11. Li, Z.; Tian, X.; Liu, X.; Liu, Y.; Shi, X. A two-stage industrial defect detection framework based on improved-YOLOv5 and optimized-Inception-ResNetV2 models. Appl. Sci. 2022, 12, 834.

12. Liu, T.; He, Z.; Lin, Z.; Cao, G.-Z.; Su, W.; Xie, S. An Adaptive Image Segmentation Network for Surface Defect Detection. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14.

13. Shi, X.; Zhou, S.; Tai, Y.; Wang, J.; Wu, S.; Liu, J.; Xu, K.; Peng, T.; Zhang, Z. An Improved Faster R-CNN for Steel Surface Defect Detection. In Proceedings of the 2022 IEEE 24th International Workshop on Multimedia Signal Processing (MMSP), Shanghai, China, 26–28 September 2022; pp. 1–5.

14. Tian, R.; Jia, M. DCC-CenterNet: A rapid detection method for steel surface defects. Measurement 2022, 187, 110211.

15. Wang, H.; Li, Z.; Wang, H. Few-shot steel surface defect detection. IEEE Trans. Instrum. Meas. 2021, 71, 1–12.

16. Wang, K.; Guo, P. A robust automated machine learning system with pseudoinverse learning. Cogn. Comput. 2021, 13, 724–735.

17. Wang, K.; Guo, P.; Xin, X.; Ye, Z. Autoencoder, low rank approximation and pseudoinverse learning algorithm. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 948–953.

18. Liu, Y.; Wang, K.; Cheng, X. Human-Machine Collaborative Classification Model for Industrial Product Defect. In Proceedings of the 2021 17th International Conference on Computational Intelligence and Security (CIS), Chengdu, China, 19–22 November 2021; pp. 141–145.

19. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

20. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2778–2788.

21. Cardellicchio, A.; Solimani, F.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Detection of tomato plant phenotyping traits using YOLOv5-based single stage detectors. Comput. Electron. Agric. 2023, 207, 107757.

22. Lawal, O.M. YOLOv5-LiNet: A lightweight network for fruits instance segmentation. PLoS ONE 2023, 18, e0282297.

23. Shi, H.; Zhao, D. License Plate Recognition System Based on Improved YOLOv5 and GRU. IEEE Access 2023, 11, 10429–10439.

24. Li, C.; Yan, H.; Qian, X.; Zhu, S.; Zhu, P.; Liao, C.; Tian, H.; Li, X.; Wang, X.; Li, X. A domain adaptation YOLOv5 model for industrial defect inspection. Measurement 2023, 213, 112725.

25. Wu, C.; Wen, W.; Afzal, T.; Zhang, Y.; Chen, Y. A compact DNN: Approaching GoogLeNet-level accuracy of classification and domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5668–5677.

26. Kim, D.; Park, S.; Kang, D.; Paik, J. Improved center and scale prediction-based pedestrian detection using convolutional block. In Proceedings of the 2019 IEEE 9th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 8–11 September 2019; pp. 418–419.

27. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

28. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

29. Wang, W.; Xie, E.; Song, X.; Zang, Y.; Wang, W.; Lu, T.; Yu, G.; Shen, C. Efficient and accurate arbitrary-shaped text detection with pixel aggregation network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8440–8449.

30. Ma, S.; Zhang, X.; Jia, C.; Zhao, Z.; Wang, S.; Wang, S. Image and video compression with neural networks: A review. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 1683–1698.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

32. Li, X.; Wang, Z.; Geng, S.; Wang, L.; Zhang, H.; Liu, L.; Li, D. YOLOv3-Pruning (transfer): Real-time object detection algorithm based on transfer learning. J. Real-Time Image Process. 2022, 19, 839–852.

33. Zhang, W.; Wang, Z. FPFS: Filter-level pruning via distance weight measuring filter similarity. Neurocomputing 2022, 512, 40–51.

34. Lan, R.; Sun, L.; Liu, Z.; Lu, H.; Pang, C.; Luo, X. MADNet: A fast and lightweight network for single-image super resolution. IEEE Trans. Cybern. 2020, 51, 1443–1453.

35. Shin, Y.G.; Sagong, M.C.; Yeo, Y.J.; Kim, S.W.; Ko, S.J. PEPSI++: Fast and lightweight network for image inpainting. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 252–265.

36. Zhou, Q.; Wang, Y.; Fan, Y.; Wu, X.; Zhang, S.; Kang, B.; Latecki, L.J. AGLNet: Towards real-time semantic segmentation of self-driving images via attention-guided lightweight network. Appl. Soft Comput. 2020, 96, 106682.

37. Liu, C.; Gao, H.; Chen, A. A real-time semantic segmentation algorithm based on improved lightweight network. In Proceedings of the 2020 International Symposium on Autonomous Systems (ISAS), Guangzhou, China, 6–8 December 2020; pp. 249–253.

38. Liang, H.; Lee, S.C.; Seo, S. Automatic recognition of road damage based on lightweight attentional convolutional neural network. Sensors 2022, 22, 9599.

39. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

40. Wang, J.; Chen, K.; Xu, R.; Liu, Z.; Loy, C.C.; Lin, D. CARAFE: Content-aware reassembly of features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3007–3016.

41. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

42. Bao, Y.; Song, K.; Liu, J.; Wang, Y.; Yan, Y.; Yu, H.; Li, X. Triplet-graph reasoning network for few-shot metal generic surface defect segmentation. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.

| Number | GhostConv | CG2 | ATPF | CARAFE + BiFPN | mAP50 (%) | mAP50:95 (%) | Params (M) | GFLOPs (G) | FPS |
|---|---|---|---|---|---|---|---|---|---|
| 1 | — | — | — | — | 69.6 | 35.9 | 7.0 | 15.8 | 96 |
| 2 | √ | — | — | — | 74.1 | 38.0 | 3.9 | 11.4 | 120 |
| 3 | — | √ | — | — | 71.5 | 37.1 | 2.9 | 7.7 | 147 |
| 4 | — | — | √ | — | 72.2 | 38.6 | 4.6 | 13.1 | 114 |
| 5 | — | — | — | √ | 72.4 | 37.9 | 4.9 | 13.8 | 103 |
| 6 | √ | √ | √ | √ | 75.9 | 39.9 | 2.3 | 6.5 | 105 |

| Number | GhostConv | CG2 | ATPF | CARAFE + BiFPN | mAP50 (%) | mAP50:95 (%) | Params (M) | GFLOPs (G) | FPS |
|---|---|---|---|---|---|---|---|---|---|
| 1 | — | — | — | — | 57.6 | 30.6 | 7.0 | 15.8 | 99 |
| 2 | √ | — | — | — | 58.6 | 31 | 3.9 | 11.4 | 120 |
| 3 | — | √ | — | — | 58.4 | 30.8 | 2.9 | 7.7 | 145 |
| 4 | — | — | √ | — | 60.2 | 32.1 | 4.6 | 13.1 | 113 |
| 5 | — | — | — | √ | 57.9 | 30.8 | 4.9 | 13.8 | 103 |
| 6 | √ | √ | √ | √ | 60.8 | 32.6 | 2.3 | 6.5 | 110 |

| SE | CA | CBAM | ECA | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) |
|---|---|---|---|---|---|---|---|
| √ | — | — | — | 66.4 | 67.8 | 73.5 | 39.0 |
| — | √ | — | — | 73.4 | 68.7 | 75.9 | 39.9 |
| — | — | √ | — | 71.4 | 68 | 73 | 39.5 |
| — | — | — | √ | 67.9 | 68.2 | 73.1 | 38.6 |

| SE | CA | CBAM | ECA | P (%) | R (%) | mAP50 (%) | mAP50:95 (%) |
|---|---|---|---|---|---|---|---|
| √ | — | — | — | 59.4 | 63.1 | 61.1 | 31.9 |
| — | √ | — | — | 54.3 | 64.2 | 60.8 | 32.6 |
| — | — | √ | — | 58.9 | 62.2 | 59.6 | 32.5 |
| — | — | — | √ | 54.8 | 62.1 | 58 | 30.9 |

| Method | mAP50 (%) | mAP50:95 (%) | Params (M) | GFLOPs (G) | FPS |
|---|---|---|---|---|---|
| YOLOv3 | 73.1 | 37.0 | 61.5 | 154.6 | 40 |
| YOLOv3-tiny | 54 | 22.4 | 8.6 | 12.9 | 172 |
| YOLOv5-s | 69.6 | 35.9 | 7.0 | 15.8 | 96 |
| MobileNetv3-YOLOv5 | 71.9 | 36.6 | 5.0 | 11.3 | 72 |
| ShuffleNetv2-YOLOv5 | 63.7 | 31.5 | 3.8 | 8.0 | 83 |
| GhostNet-YOLOv5 | 73.2 | 36.6 | 4.7 | 7.6 | 74 |
| YOLOv7-tiny | 69.3 | 32.6 | 6.0 | 13.1 | 99 |
| CG-Net | 75.9 | 39.9 | 2.3 | 6.5 | 105 |
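
As a worked reading of the comparison above, the following sketch computes how CG-Net differs from the YOLOv5-s baseline on NEU-DET. It assumes that the five numeric columns are, in order, mAP50 (%), mAP50:95 (%), parameters (M), GFLOPs, and FPS; the dictionary keys and the helper `relative_reduction` are illustrative names only.

```python
# Values copied from the YOLOv5-s and CG-Net rows (assumed column order:
# mAP50, mAP50:95, Params (M), GFLOPs, FPS).
yolov5s = {"map50": 69.6, "params_m": 7.0, "gflops": 15.8, "fps": 96}
cg_net = {"map50": 75.9, "params_m": 2.3, "gflops": 6.5, "fps": 105}


def relative_reduction(old, new):
    """Percentage by which `new` is smaller than `old`."""
    return 100.0 * (old - new) / old


param_cut = relative_reduction(yolov5s["params_m"], cg_net["params_m"])
flops_cut = relative_reduction(yolov5s["gflops"], cg_net["gflops"])
map_gain = cg_net["map50"] - yolov5s["map50"]
fps_gain = cg_net["fps"] - yolov5s["fps"]

print(f"parameters: -{param_cut:.1f}%")   # parameters: -67.1%
print(f"GFLOPs:     -{flops_cut:.1f}%")   # GFLOPs:     -58.9%
print(f"mAP50:      +{map_gain:.1f} pts") # mAP50:      +6.3 pts
print(f"FPS:        +{fps_gain}")         # FPS:        +9
```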

| Method | mAP50 (%) | mAP50:95 (%) | Params (M) | GFLOPs (G) | FPS |
|---|---|---|---|---|---|
| YOLOv3 | 60.1 | 28.4 | 61.5 | 154.6 | 39 |
| YOLOv3-tiny | 38.9 | 14.1 | 8.6 | 12.9 | 222 |
| YOLOv5-s | 57.6 | 30.6 | 7.0 | 15.8 | 99 |
| MobileNetv3-YOLOv5 | 57.6 | 29.1 | 5.0 | 11.3 | 76 |
| ShuffleNetv2-YOLOv5 | 53.9 | 24.5 | 3.8 | 8.0 | 83 |
| GhostNet-YOLOv5 | 58.9 | 30.9 | 4.7 | 7.6 | 56 |
| YOLOv7-tiny | 54 | 26.1 | 6.0 | 13.1 | 101 |
| CG-Net | 60.8 | 32.6 | 2.3 | 6.5 | 110 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, H.; Yang, X.; Zhou, B.; Shi, Z.; Zhan, D.; Huang, R.; Lin, J.; Wu, Z.; Long, D. Strip Surface Defect Detection Algorithm Based on YOLOv5. Materials 2023, 16, 2811. https://doi.org/10.3390/ma16072811