Abstract

In this paper, eight variables of cement, blast furnace slag, fly ash, water, superplasticizer, coarse aggregate, fine aggregate and flow are used as network input and slump is used as network output to construct a back-propagation (BP) neural network. On this basis, the learning rate, momentum factor, number of hidden nodes and number of iterations are used as hyperparameters to construct 2-layer and 3-layer neural networks respectively. Finally, the response surface method (RSM) is used to optimize the parameters of the network model obtained previously. The results show that the network model with parameters obtained by the response surface method (RSM) has a better coefficient of determination for the test set than the model before optimization, and the optimized model has higher prediction accuracy. At the same time, the model is used to evaluate the influencing factors of each variable on slump. The results show that flow, water, coarse aggregate and fine aggregate are the four main influencing factors, and the maximum influencing factor of flow is 0.875. This also provides a new idea for quickly and effectively adjusting the parameters of the neural network model to improve the prediction accuracy of concrete slump.

1. Introduction

With the development of concrete technology, high-performance concrete and self-compacting concrete have come into wide use. Such concrete is designed not only for strength but also for durability and workability. Concrete is mixed at the mixing station and then transported to the site for pouring; the time spent in transit and waiting on the way degrades its workability. Slump loss directly affects the workability of concrete, causes difficulties for construction, may bring about construction accidents and affects the quality of the hardened concrete. Therefore, analyzing the causes of excessive slump loss is important for preventing it and thereby improving the workability of concrete.

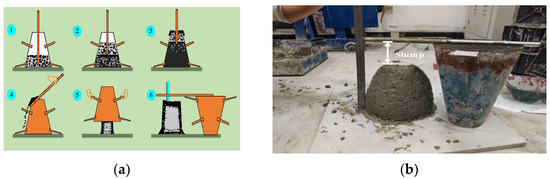

Concrete is one of the most in-demand and most adaptable construction materials worldwide [1]. Slump is an important indicator of the uniformity of concrete quality: it affects the hardening speed of concrete and can leave the strength after hardening low, which greatly affects the quality of the project. The test procedure is shown in Figure 1 [2]. Precise control of slump [3,4] is a prerequisite for ensuring the performance of concrete. In practical engineering, the slump test often requires considerable time, manpower and material resources, and it is difficult to obtain the test results quickly and accurately. Therefore, a concrete slump prediction model [5,6] is of great significance for both the theory and the application of construction engineering.

Figure 1.

Slump test process, (a) schematic diagram of the slump test operation process, step1: first concrete addition, step2: second concrete addition, step3: third concrete addition, step4: smoothing the mouth of the barrel, step5: demoulding, step6: slump measurement; (b) schematic diagram of slump test measurement.

With the advancement of science and technology and the development of the construction industry, civil engineering places ever higher requirements on the performance of concrete materials, while the traditional mix design method [7] relies largely on the experience of designers. Recently, civil and architectural researchers have gradually introduced artificial neural networks [8], genetic algorithms [9] and other artificial intelligence techniques into the optimization design of concrete mix ratios and have achieved a series of research results. X. Hu et al. [10] proposed an ensemble model for concrete strength prediction and gave measures to improve its prediction accuracy, such as feature optimization, ensemble algorithms, hyperparameter optimization, expanded sample data sets, richer data sources and data preprocessing. M. Shariati et al. [11] used a hybrid artificial neural network genetic algorithm (ANN-GA) as a new method to predict the strength of concrete containing slag and fly ash. The results show that the ANN-GA model can not only be adapted to the compressive strength prediction of concrete but can also produce better results than the artificial neural network back propagation (ANN-BP) model. I-Yeh [12,13] described methods for predicting the slump and compressive strength of high-performance concrete. Venkata et al. [14] proposed a feasibility assessment of the strength properties of self-compacting concrete based on artificial neural networks. Duan et al. [15] used an artificial neural network to predict the compressive strength of recycled aggregate concrete and achieved good results. Ji et al. [16] proposed an in-depth algorithmic study of concrete mix ratios using a neural network. Demir [17] predicted the elastic modulus of ordinary and high-strength concrete by using artificial neural networks. Vinay [18] predicted ready-mixed concrete slump based on a genetic algorithm. Li Dihong et al. [19] predicted the comprehensive properties of concrete with a back propagation neural network. I-Cheng Yeh [20] and Ashu Jain [21] each proposed an optimal design method for concrete mix ratios based on artificial neural networks. Wang Jizong [22], Chul-Hyun Lim [23], Liu Cuilan [24] and I-Cheng Yeh [25] applied the genetic algorithm to the optimal design of concrete mix ratios.

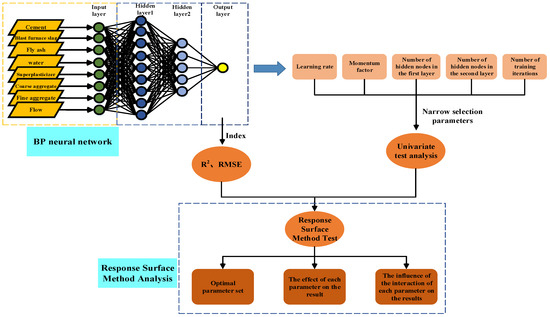

To sum up, although the neural network has been widely used in civil engineering, it is relatively rare to apply it to concrete slump prediction and model parameter optimization. Therefore, this paper plans to use the BP neural network model to predict the slump of concrete and uses eight variables, such as cement, blast furnace slag, fly ash, water, superplasticizer, coarse aggregate, fine aggregate and flow as the network input, and slump as the network output. The learning rate, momentum factor, number of hidden nodes and the number of iterations are used as hyperparameters to build 2-layer and 3-layer neural networks. Finally, the parameters of the prediction model are studied through single factor and RSM in order to obtain a wider range of optimal parameter solutions. The research process of this paper is shown in Figure 2.

Figure 2.

Research architecture diagram.

2. Establishment of a Concrete Slump Model Based on BP Neural Network

2.1. Introduction to BP Neural Network

Artificial neural networks, also known as neural networks (NNs), are composed of widely interconnected processing units. They can not only accurately classify [26,27] large amounts of unrelated data but also predict well. The function relating the input and output of each neuron is called the activation function; the Sigmoid activation used in this paper follows an S-shaped growth curve. A neural network model essentially imitates the structure and function of the human nervous system. It is a highly complex information processing system, and its network architecture is based on a specific mathematical model, as shown in Figure 2. Taking three layers as an example, the architecture comprises an input layer, a hidden layer and an output layer [28,29,30]. Most neural networks are trained by back propagation (BP): the BP algorithm adjusts the weights of the neurons through the gradient descent method [31] in order to minimize the error between the actual output and the expected output of the multilayer feedforward [32] neural network. Training continues until the mean square error over all training data falls within the specified error range.

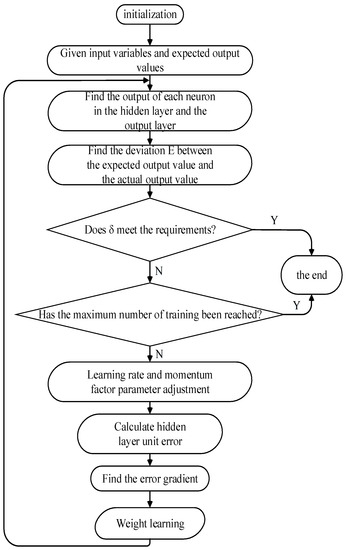

The BP algorithm is arguably the most successful neural network learning algorithm, and most neural networks used in practical tasks are trained with it. The network model in this paper has the basic structure of a BP neural network, namely an input layer, a hidden layer and an output layer. The signal enters through the input layer, and features are then extracted by the hidden layer. The neurons of different hidden layers may have different weights and biases for different inputs. The excitation of the input layer is transmitted to the hidden layer, and finally the output layer generates results according to the hidden layer outputs, the layer weights and the biases. The algorithm flow chart of the BP neural network is shown in Figure 3. The activation function adopts the Sigmoid function, and the operation principle of the Sigmoid function is as follows:

Figure 3.

BP neural network algorithm flow chart.

The first step is the linear feature normalization function. Linear normalization applies a linear transformation to the original data, mapping them onto [0, 1] so that the data are scaled proportionally. The formula is shown in Equation (1):

$$X_{\mathrm{norm}} = \frac{X - X_{\min}}{X_{\max} - X_{\min}} \tag{1}$$

where X is the original data, Xmin is the minimum value of the data, and Xmax is the maximum value of the data.

The total probability is 1; taking the input x as the exponent of the constant e and forming the reciprocal gives the Sigmoid function, which is defined as [33]:

$$f(x) = \frac{1}{1 + e^{-x}} \tag{2}$$

In this way, the output can be normalized like a probability, and its value lies between 0 and 1.
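As a minimal sketch of the two functions just described, the following Python snippet applies min-max normalization as in formula (1) and the standard logistic Sigmoid. The function names and the sample water contents are illustrative assumptions and do not come from the original study.

```python
import numpy as np

def min_max_normalize(x):
    """Map x linearly onto [0, 1] (column-wise for 2-D input).

    Assumes the values are not all identical, otherwise the
    denominator would be zero.
    """
    x = np.asarray(x, dtype=float)
    x_min = x.min(axis=0)
    x_max = x.max(axis=0)
    return (x - x_min) / (x_max - x_min)

def sigmoid(z):
    """Logistic sigmoid; the output lies strictly between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-np.asarray(z, dtype=float)))

# Hypothetical water contents (illustrative numbers only, not from the dataset).
water = np.array([160.0, 200.0, 240.0])
scaled = min_max_normalize(water)   # smallest value -> 0, largest -> 1
activated = sigmoid(scaled - 0.5)   # centred input, so the middle value maps to 0.5
```

Because the logistic function is bounded by 0 and 1, it pairs naturally with inputs and targets that have been scaled to a comparable range.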

The neurons in each layer of the network are trained by gradient descent with momentum, which is expressed as follows [34]:

$$d\omega = mc \cdot d\omega_{\mathrm{prev}} + (1 - mc) \cdot lr \cdot g\omega \tag{3}$$

where:

dω = the amount of change in the weight (threshold) of a single neuron;

dωprev = the amount of change in neuron weight (threshold) value in the previous iteration;

mc = momentum factor;

lr = learning rate;

gω = weight (threshold) gradient.
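To make the role of the symbols above concrete, here is a small sketch of one momentum update. It assumes the update takes the common form dω = mc·dωprev + (1 − mc)·lr·gω (the form used by MATLAB's `learngdm`), with gω taken as the descent direction, i.e. the negative gradient of the loss. The function name and the toy loss are hypothetical.

```python
import numpy as np

def momentum_step(w, loss_grad, d_w_prev, lr=0.1, mc=0.3):
    """One gradient-descent-with-momentum update.

    g_w is the descent direction (negative loss gradient), so the update
    reads d_w = mc * d_w_prev + (1 - mc) * lr * g_w. With mc = 0 this
    reduces to plain gradient descent with step size lr.
    """
    g_w = -np.asarray(loss_grad, dtype=float)
    d_w = mc * d_w_prev + (1.0 - mc) * lr * g_w
    return w + d_w, d_w

# Toy problem (assumption): minimise (w - 3)^2, whose gradient is 2 * (w - 3).
w, d_w = 0.0, 0.0
for _ in range(200):
    w, d_w = momentum_step(w, 2.0 * (w - 3.0), d_w)
```

A larger momentum factor gives the previous change more weight, which smooths the trajectory but shrinks the share of the current gradient in each step.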

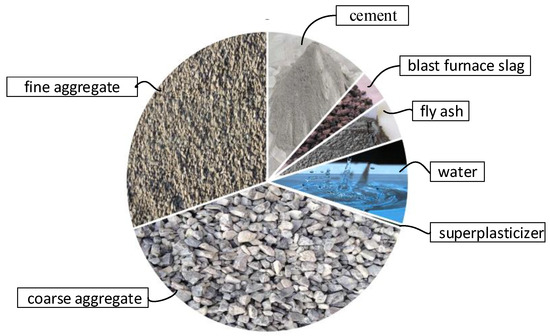

2.2. Samples and Network Input and Output

The dataset consists of 103 sets of experimental data taken from the public test data of Prof. I-Cheng Yeh [35]. It includes eight variables: cement, blast furnace slag, fly ash, water, superplasticizer, coarse aggregate, fine aggregate and flow. Flow (fluidity) refers to the ability of the cement mortar mixture to flow and fill the mold uniformly and densely under its own weight or mechanical vibration. Slump is one of the key indicators of the flow properties of concrete. The two influence each other, and the flow of the cement mortar affects the concrete slump, so including flow makes the results of the model more convincing. The concrete mix proportion is shown in Table 1 and Figure 4. Cement, blast furnace slag, fly ash, water, superplasticizer, coarse aggregate, fine aggregate and flow are the input variables of the neural network, and slump is the output variable.

Table 1.

Concrete mix ratio.

Figure 4.

Concrete composition.

2.3. Network Data Preprocessing

Data preprocessing can help improve the quality of data, which in turn helps improve the effectiveness and accuracy [36] of the data mining process. The specific work includes the following:

- (1) Shuffle the data: Shuffling the data before model training can effectively reduce the variance [37,38] and ensure that the model remains general while reducing overfitting.

- (2)

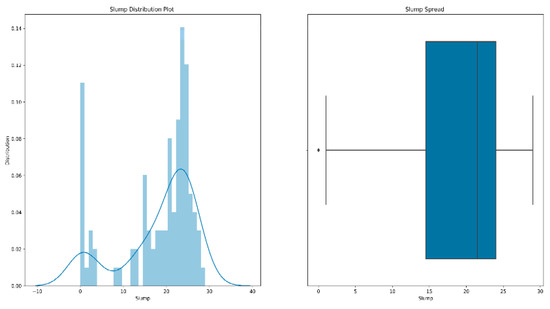

- Outlier processing: It is inevitable that we will have a few data points that are significantly different from other observations during training. A data point is considered to be an outlier [39] if it lies 1.5 times the interquartile range below the first quartile or above the third quartile. The existence of outliers will have a serious impact on the prediction results of the model. Therefore, our processing of outliers [40,41] is generally to delete them. Figure 5 is a box diagram of the model’s slump distribution.

Figure 5. Slump distribution map.

From the figure, we can see that the slump values are mostly concentrated between 14 and 24 cm. There are a few outliers below the lower limit, and the data are otherwise well behaved.

- (3) Split the dataset: the training set accounts for 70% and the test set for 30%, and in both sets the feature variables are kept separate from the target variable.

- (4) Standardize the data: There are three main methods for data standardization, namely minimum–maximum standardization, z-score standardization and decimal standardization. This paper adopts the z-score method, also called standard deviation normalization; the data processed by it have a mean of 0 and a variance of 1. It is currently the most commonly used standardization method. The formula is as follows:

  $$x' = \frac{x - \mu}{\sigma} \tag{4}$$

  where μ is the mean value of the corresponding feature and σ is the standard deviation.
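The four preprocessing steps listed above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `preprocess`, the random seed, the synthetic demo data, and the choice to compute the z-score statistics from the training set alone are not specified in the original text.

```python
import numpy as np

def preprocess(X, y, train_frac=0.7, seed=0):
    """Shuffle, drop IQR outliers in y, split 70/30, z-score the features."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)

    # (1) Shuffle rows so that the split does not depend on file order.
    order = rng.permutation(len(y))
    X, y = X[order], y[order]

    # (2) Delete samples whose target lies outside the 1.5 * IQR fences.
    q1, q3 = np.percentile(y, [25, 75])
    iqr = q3 - q1
    keep = (y >= q1 - 1.5 * iqr) & (y <= q3 + 1.5 * iqr)
    X, y = X[keep], y[keep]

    # (3) Split into training and test sets.
    n_train = int(round(train_frac * len(y)))
    X_train, X_test = X[:n_train], X[n_train:]
    y_train, y_test = y[:n_train], y[n_train:]

    # (4) z-score with training statistics only (an assumption of this sketch).
    mu, sigma = X_train.mean(axis=0), X_train.std(axis=0)
    return (X_train - mu) / sigma, (X_test - mu) / sigma, y_train, y_test

# Synthetic stand-in for the 103 x 8 feature matrix (not the real dataset).
demo_rng = np.random.default_rng(1)
X_demo = demo_rng.normal(size=(103, 8))
y_demo = demo_rng.normal(loc=18.0, scale=3.0, size=103)
y_demo[0] = -50.0  # one obvious outlier to exercise step (2)
X_tr, X_te, y_tr, y_te = preprocess(X_demo, y_demo)
```

Using only training statistics for standardization keeps information from the test set out of the model inputs; if the original study standardized the whole dataset at once, the resulting numbers would differ slightly.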

Part of the processed training data is shown in Table 2:

Table 2.

Normalized training set.

2.4. Model Parameter Selection

The optimization parameters of the slump prediction model considered in this study include the learning rate, the momentum factor, the number of hidden nodes in the first layer, the number of hidden nodes in the second layer and the number of iterations. The coefficient of determination (R2) and the root mean square error (RMSE) are used as evaluation indicators for the network model. The value ranges are shown in Table 3; the numbers of candidate two-layer and three-layer BP neural network models are 9 × 10 × 10 × 10 and 9 × 10 × 10 × 10 × 10, respectively. Because of the large number and variety of parameters, it is quite difficult to find the optimal parameter set for a high-quality concrete slump prediction model. There are three main methods for parameter optimization: algorithm-based methods, machine-assisted methods and manual parameter adjustment [42,43]. Based on the BP neural network algorithm, this study uses machine learning as the main method and optimizes the model parameters by manual adjustment.
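For reference, the two evaluation indicators can be computed as in the following sketch, which uses the usual definitions of RMSE and the coefficient of determination. The function names and the three sample values are illustrative only.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean square error between measured and predicted values."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

# Made-up slump values in cm, for illustration only.
measured = [10.0, 20.0, 30.0]
predicted = [12.0, 20.0, 28.0]
demo_rmse = rmse(measured, predicted)      # sqrt(8 / 3)
demo_r2 = r_squared(measured, predicted)   # 1 - 8 / 200 = 0.96
```

A lower RMSE and an R2 closer to 1 both indicate a better fit, which is why the two indicators move in opposite directions in the single-factor plots.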

Table 3.

Value range of neural network parameters.

The parameters of each optimization variable are shown in Table 4. The learning rate ranges from 0.1 to 1.0 in increments of 0.1; the momentum factor ranges from 0.2 to 1.0 in increments of 0.1; the number of hidden nodes in the first layer ranges from 3 to 12 in increments of 1; the number of hidden nodes in the second layer is selected in the same way as that in the first layer; and the number of training iterations ranges from 500 to 5000 in increments of 500.
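The size of the search space follows directly from these ranges. The short sketch below enumerates the candidate values and counts the combinations; the variable names are illustrative assumptions.

```python
import itertools

# Candidate levels built from the ranges and increments stated in the text.
learning_rates = [round(0.1 * k, 1) for k in range(1, 11)]    # 0.1 .. 1.0
momentum_factors = [round(0.1 * k, 1) for k in range(2, 11)]  # 0.2 .. 1.0
hidden_nodes = list(range(3, 13))                             # 3 .. 12
iterations = list(range(500, 5001, 500))                      # 500 .. 5000

# Two-layer network: one hidden layer, so four hyperparameters.
two_layer_grid = list(itertools.product(
    learning_rates, momentum_factors, hidden_nodes, iterations))
n_two_layer = len(two_layer_grid)

# Three-layer network: a second hidden layer multiplies the count again.
n_three_layer = n_two_layer * len(hidden_nodes)
```

With 9,000 and 90,000 candidate configurations, an exhaustive search would require training that many networks, which motivates narrowing the ranges before a finer optimization.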

Table 4.

Some examples of batch slump modeling.

3. Research on Two-Layer Neural Network

3.1. Single Factor Design Experiment of Two-Layer Neural Network

As seen in Table 3, the experimental factors of the two-layer neural network include the learning rate, the momentum factor, the number of hidden nodes in the first layer and the number of iterations. Because each parameter has many levels, a full search would require too many trials and would make it hard to identify the optimal parameter solution. Therefore, this study uses a single-factor experimental design based on the bisection method to obtain the optimal parameter solution without considering the interaction of the various factors, aiming to narrow the range of levels of each parameter.

Considering this, parameter optimization is carried out on the established slump model. First, the learning rate, momentum factor and the number of hidden nodes in the first layer are tentatively set to 0.5, 0.6 and 7, respectively, and the optimal number of iterations is obtained, as shown in Table 5. Then, with the other parameters held fixed, the optimal value of the learning rate is obtained, and the same procedure is applied to each remaining parameter in turn to finally obtain the optimal value of each parameter, as shown in Figure 6.
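The one-parameter-at-a-time procedure described here can be sketched as follows. This is a plain sweep over each parameter's levels rather than the bisection-based variant mentioned in the text, and `toy_rmse` is a purely hypothetical stand-in for "train the network and return its RMSE"; it is constructed so that its minimum sits at the values reported for Figure 6 and is not the study's actual error surface.

```python
def one_factor_at_a_time(score, start, levels, order):
    """Tune one parameter at a time while the others stay fixed.

    score  : function mapping a parameter dict to an error (lower is better)
    start  : initial (tentative) parameter dict
    levels : candidate values for each parameter
    order  : sequence in which the parameters are tuned
    """
    best = dict(start)
    for name in order:
        candidates = [dict(best, **{name: value}) for value in levels[name]]
        best = min(candidates, key=score)
    return best

def toy_rmse(p):
    """Hypothetical separable error surface used only for illustration."""
    return ((p["lr"] - 0.2) ** 2 + (p["mc"] - 0.3) ** 2
            + ((p["nodes"] - 4) / 10) ** 2
            + ((p["iters"] - 3500) / 5000) ** 2)

levels = {
    "lr": [round(0.1 * k, 1) for k in range(1, 11)],
    "mc": [round(0.1 * k, 1) for k in range(2, 11)],
    "nodes": list(range(3, 13)),
    "iters": list(range(500, 5001, 500)),
}
start = {"lr": 0.5, "mc": 0.6, "nodes": 7, "iters": 500}  # tentative values
best = one_factor_at_a_time(toy_rmse, start, levels,
                            order=["iters", "lr", "mc", "nodes"])
```

Because the toy surface has no interaction terms, a single pass reaches its minimum; on a real network the factors interact, which is why the text treats this result only as a way to narrow the ranges before applying RSM.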

Table 5.

The impact of different iteration times on network performance.

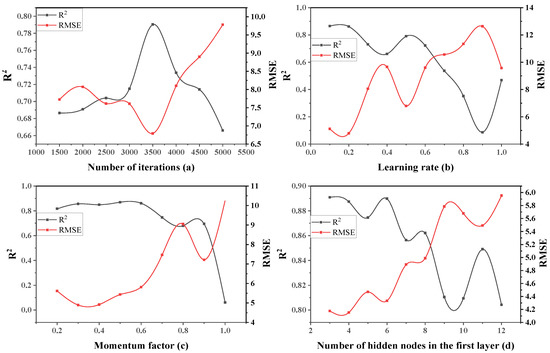

Figure 6.

The influence of each single factor on two-layer neural network performance.

It can be seen from Figure 6a that RMSE showed a trend of first decreasing and then increasing with the increasing number of iterations, R2 showed a trend of first increasing and then decreasing with the increasing number of iterations, and the network performance reached the best when the number of iterations was 3500. Figure 6b–d show that when the learning rate, momentum factor and the number of hidden nodes in the first layer are, respectively 0.2, 0.3 and 4, the network performance is in its best state.

According to this single-factor experimental design, a set of optimal parameter solutions that does not consider interaction is obtained, as shown in Table 6, thereby narrowing the range of levels of each factor.

Table 6.

Optimal parameter solution under single factor design with two-layer neural network.

3.2. Response Surface Method Test of Two-Layer Neural Network

Response surface methodology (RSM) is a method for optimizing experimental conditions that is suitable for problems involving nonlinear data. Through regression fitting of the process (as shown in Equation (5)) and the drawing of the response surface and contour lines, the response value corresponding to each factor level can easily be obtained. On the basis of these response values, the predicted optimal response value and the corresponding experimental conditions can be found.

$$Y = B_0 + \sum_{i=1}^{k} B_i X_i + \sum_{i=1}^{k} B_{ii} X_i^2 + \sum_{i<j} B_{ij} X_i X_j \tag{5}$$

where Y represents the response function (in our example, R2 and RMSE); Xi and Xj are the factors and k is the number of factors; B0 is a constant coefficient; Bi, Bii and Bij are the coefficients of the linear term, quadratic term [44] and interaction term, respectively.
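A second-order response surface with the constant, linear, quadratic and interaction coefficients named above can be fitted by ordinary least squares. The sketch below is not the Design-Expert procedure used in the study; the function names, the coded 3 × 3 design and the coefficient values used to generate the demo response are all assumptions for illustration.

```python
import itertools
import numpy as np

def quadratic_design_matrix(X):
    """Columns: 1, X_i, X_i^2, then X_i * X_j for every pair i < j."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(k)]
    cols += [X[:, i] ** 2 for i in range(k)]
    cols += [X[:, i] * X[:, j]
             for i, j in itertools.combinations(range(k), 2)]
    return np.column_stack(cols)

def fit_response_surface(X, y):
    """Least-squares estimate of [B0, B_i..., B_ii..., B_ij...]."""
    A = quadratic_design_matrix(X)
    coef, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return coef

# Two coded factors on a 3 x 3 grid with a made-up, noise-free response.
grid = np.array(list(itertools.product((-1, 0, 1), repeat=2)), dtype=float)
x1, x2 = grid[:, 0], grid[:, 1]
y_demo = 0.9 - 0.05 * x1 + 0.02 * x2 - 0.03 * x1 ** 2 + 0.01 * x1 * x2
coef = fit_response_surface(grid, y_demo)  # order: B0, B1, B2, B11, B22, B12
```

With real experimental runs the response contains noise, so the fitted coefficients are estimates whose significance is then judged by analysis of variance.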

3.2.1. Model Establishment and Significance Test of a Two-Layer Neural Network

Single-factor experiments show that the learning rate, the number of hidden nodes and the number of iterations have a significant impact on network performance. On this basis, R2 (Y1) and RMSE (Y2) are taken as the response values, and four factors that affect network performance are taken as the investigation factors: learning rate (X1), momentum factor (X2), number of hidden nodes (X3) and number of iterations (X4). The Box–Behnken test factors and levels are shown in Table 7, and the test results and analysis are shown in Table 8.
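For readers unfamiliar with the Box–Behnken layout, the following sketch generates the coded design points for k factors: every pair of factors is run at its four (±1, ±1) combinations while the remaining factors stay at the centre level 0, and centre runs are appended. The function name and the number of centre points are assumptions; the pairwise construction shown corresponds to the standard designs for three to five factors.

```python
import itertools
import numpy as np

def box_behnken(k, n_center=1):
    """Coded Box-Behnken design: rows are runs, columns are factors."""
    rows = []
    for i, j in itertools.combinations(range(k), 2):
        for a, b in itertools.product((-1, 1), repeat=2):
            row = [0] * k
            row[i], row[j] = a, b   # only this pair leaves the centre level
            rows.append(row)
    rows += [[0] * k for _ in range(n_center)]   # centre runs
    return np.array(rows)

# Four factors (two-layer network) with five assumed centre runs.
design_4 = box_behnken(4, n_center=5)
# Five factors (three-layer network), edge points only.
design_5 = box_behnken(5, n_center=0)
```

Each coded level (-1, 0, +1) is then mapped to the low, middle and high value chosen for the corresponding factor, so far fewer runs are needed than with a full three-level grid.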

Table 7.

Parameter optimization of Box–Behnken test factors and levels with two-layer neural network.

Table 8.

Parameter optimization of Box–Behnken test results and analysis with two-layer neural network.

When using Design-Expert V10.07 software to fit Y1 and Y2 in Table 8, the regression Equation can be obtained:

The analysis of variance is performed on the above regression equation, and the results are shown in Table 9 and Table 10.

Table 9.

Regression equation coefficients and significance test (R2) (two-layer neural network).

Table 10.

Regression equation coefficients and significance test (RMSE) (two-layer neural network).

From Table 9 and Table 10, it can be seen that F1 = 8.41 and F2 = 17.83, and the p values of Y1 and Y2 are less than 0.0001, which indicates that the model is very significant; the lack-of-fit term is greater than 0.05, which is not significant. This indicates that the regression equation fits the experiment well and that unknown factors interfere little with the experimental results, so the residuals are all caused by random errors and the model has high reliability. After analysis of variance, the primary and secondary order of the influence of the four factors on R2 and RMSE is X1 > X3 > X4 > X2, that is, learning rate > number of hidden nodes > number of iterations > momentum factor. In Y1, the first-order terms X1 and X3 have extremely significant effects on the results; in Y2, the first-order terms X1 and X3 have extremely significant effects on the results, and the interaction term X1X2 and the quadratic term X2² have significant effects on the results.

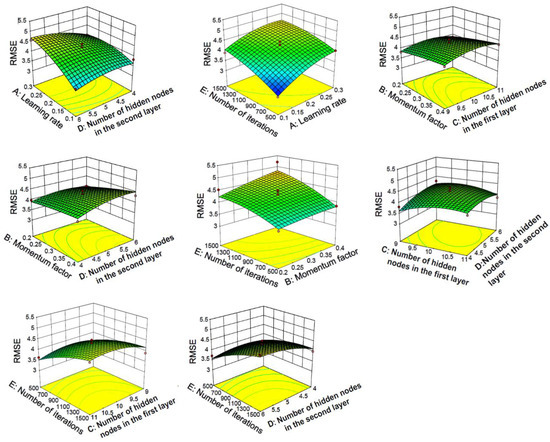

3.2.2. Response Surface Method Analysis of Two-Layer Neural Network

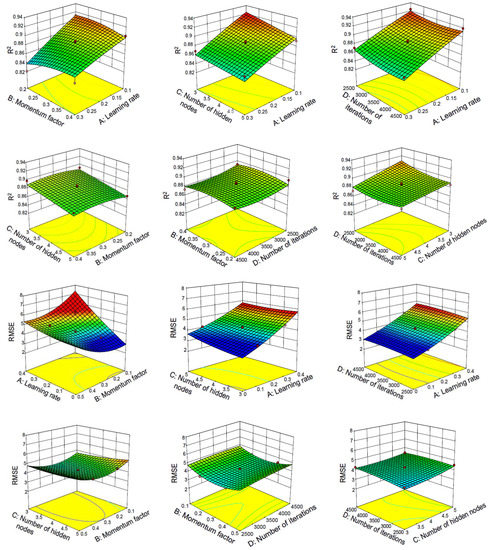

The response surface curve and contour lines of the interaction of learning rate, momentum factor, number of hidden nodes and number of iterations on R2 and RMSE are shown in Figure 7.

Figure 7.

Response surface and contour lines of the interaction of various factors on network performance with two-layer neural network.

The response surface diagram can intuitively indicate the degree of influence of factors on the response value. The more significant the influencing factor, the steeper the slope of the surface. The shape of the contour line can determine the strength of the interaction between the two variables. An ellipse indicates that the interaction between the two factors is significant, and a circle indicates that the interaction between the two factors is not significant.

Figure 7 shows the interaction of learning rate, momentum factor, number of iterations and number of hidden nodes on R2 and RMSE. As can be seen from the results, the interaction between the learning rate and the other three factors is the most significant, which is basically consistent with the significance conclusion obtained by the above analysis of variance. The optimal parameters of the model for predicting concrete slump based on a BP neural network are: lr = 0.1, mf = 0.3, noi = 2669 and nohn = 3, where lr stands for learning rate, mf stands for momentum factor, noi stands for number of iterations and nohn stands for the number of hidden nodes. The constructed model with the optimized parameters is used for verification on the training set, and the results are R2 = 0.927 and RMSE = 3.373. At the same time this optimized model is used for verification on the test set. After verification, the results are R2 = 0.91623 and RMSE = 3.60363. However, the R2 of the two-layer baseline model without RSM optimization is only 0.53484509.

3.2.3. Analysis of Slump Influencing Factors Based on Two-Layer Neural Network

Using the optimized parameters to build a two-layer neural network, the training set and the test set were evaluated and verified and the influencing factors of eight variables on the slump were obtained. A bar graph of the effect of each variable on slump is shown in Figure 8.

Figure 8.

A bar graph of each variable’s effect on slump with two-layer neural network.

It can be seen from Figure 8 that the order of influence on slump is flow > coarse aggregate > water > fine aggregate > fly ash > cement > blast furnace slag > superplasticizer. Flow and coarse aggregate are the top two influencing factors with 0.901 and 0.045, respectively. Superplasticizer and blast furnace slag are the two lowest influencing factors with 0.0006 and 0.0042, respectively.

4. Research on Three-Layer Neural Network

4.1. Single Factor Design Experiment of Three-Layer Neural Network

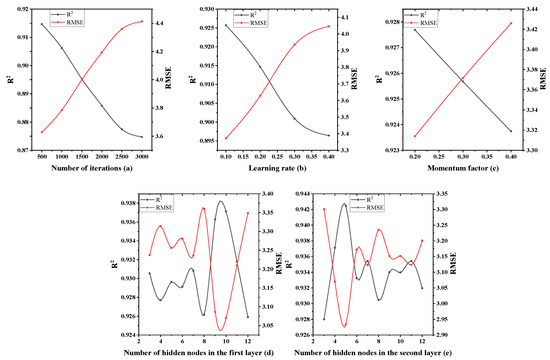

The single-factor optimization method of the parameters of the three-layer neural network model is similar to the two-layer neural network and, finally, the optimal value of each parameter is obtained, as shown in Figure 9.

Figure 9.

The influence of each single factor on three-layer neural network performance.

It can be seen from Figure 9a that RMSE and R2 show decreasing and increasing trends, respectively, as the number of iterations increases, and the network performance reaches its best when the number of iterations is 500. Figure 9b–e show that when the learning rate, momentum factor, the number of hidden nodes in the first layer and the number of hidden nodes in the second layer are 0.1, 0.2, 10 and 5, respectively, the network performance is in its best state.

According to this single-factor experimental design, a set of optimal parameter solutions that does not consider interaction is obtained, as shown in Table 11, thereby narrowing the range of levels of each factor.

Table 11.

Optimal parameter solution under single factor design with three-layer neural network.

4.2. Response Surface Method Test of Three-Layer Neural Network

4.2.1. Model Establishment and Significance Test of Three-Layer Neural Network

Single-factor experiments show that the learning rate, momentum factor and number of iterations have a significant impact on network performance. On this basis, R2 (Y1) and RMSE (Y2) are taken as the response values, and five factors that affect network performance are taken as the investigation factors: learning rate (X1), momentum factor (X2), number of hidden nodes in the first layer (X3), number of hidden nodes in the second layer (X4) and number of iterations (X5). The Box–Behnken test factors and levels are shown in Table 12, and the test results and analysis are shown in Table 13.

Table 12.

Parameter optimization Box–Behnken test factors and levels with three-layer neural network.

Table 13.

Parameter optimization Box–Behnken test results and analysis with three-layer neural network.

Using Design-Expert V10.07 software to fit Y1 and Y2 in Table 13, the regression equation can be obtained:

The analysis of variance is performed on the above regression equation, and the results are shown in Table 14 and Table 15.

Table 14.

Regression equation coefficients and significance test (R2) (three-layer neural network).

Table 15.

Regression equation coefficients and significance test (RMSE) (three-layer neural network).

From Table 14 and Table 15, it can be seen that F1 = 10.21 and F2 = 9.92, and the p values of Y1 and Y2 are less than 0.0001, which is very significant; the lack-of-fit term is greater than 0.05, which is insignificant and shows that the regression equation fits the experiment well. Unknown factors interfere little with the experimental results, indicating that the residuals are all caused by random errors and that this model has high reliability. After analysis of variance, the primary and secondary order of the five factors on R2 and RMSE is X1 > X5 > X2 > X4 > X3, that is, learning rate > number of iterations > momentum factor > number of hidden nodes in the second layer > number of hidden nodes in the first layer. In Y1, the primary terms X1, X2, X3 and X5 have a significant impact on the results; X4 has a significant impact on the results; the interaction term X1X3 has a significant impact on the results; and the quadratic terms X1², X3², X4² and X5² have a significant impact on the results. In Y2, the primary terms X1, X2 and X5 have a significant impact on the results; X4 has a significant impact on the results; the interaction term X1X4 has a significant impact on the results; the quadratic terms X1² and X3² have a significant impact on the results; and X4² and X5² have a significant impact on the results.

4.2.2. Response Surface Method Analysis of Three-Layer Neural Network

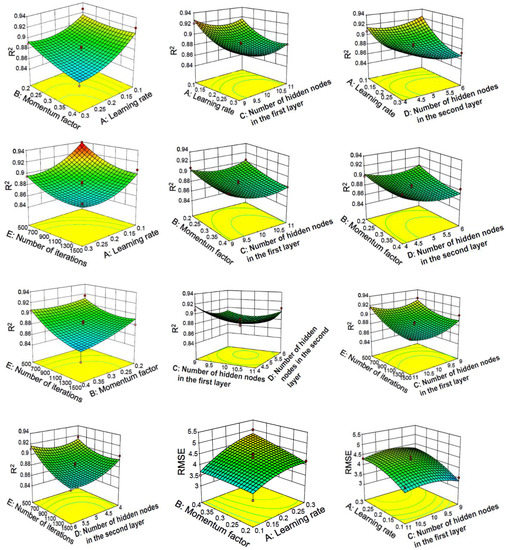

The response surface curve and contour lines of the interaction of learning rate, momentum factor, number of hidden nodes in the first layer, number of hidden nodes in the second layer and number of iterations on R2 and RMSE are shown in Figure 10.

Figure 10.

Response surface and contour lines of the interaction of various factors on network performance with three-layer neural network.

As shown in Figure 10, it can be seen from the steepness of the response surface that the learning rate, the number of iterations and the momentum factor have a significant impact on the response value. In contrast, the number of hidden nodes has a much weaker effect on the response value. Most of the contour lines are elliptical, indicating that the interaction between various factors is relatively large.

It can also be seen from Figure 10 that the three-layer neural network used for the verification of the test set has a similar conclusion to the two-layer neural network (optimization model R2 > baseline model R2, where the optimization model R2 = 0.94246, baseline model R2 = 0.94246) and the prediction performance of the three-layer neural network for the test set is better than that of the two-layer neural network.

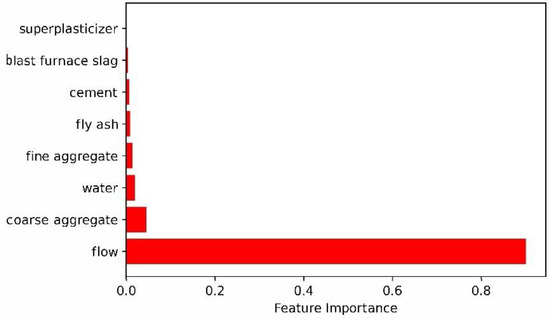

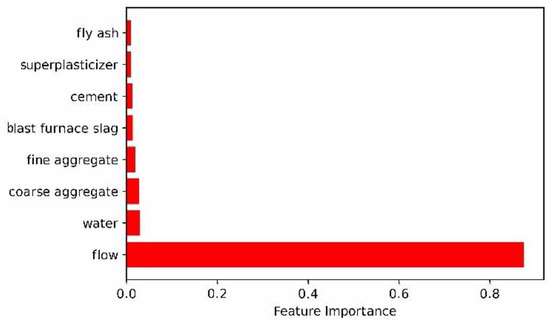

4.2.3. Analysis of Slump Influencing Factors Based on Three-Layer Neural Network

Using a method similar to the two-layer neural network, the influence factors of each variable on the slump in the three-layer neural network model can be obtained. A bar graph of each variable’s effect on slump is also shown in Figure 11.

Figure 11.

A bar graph of each variable’s effect on slump with three-layer neural network.

It can be seen from Figure 11 that the order of influence on slump is flow > water > coarse aggregate > fine aggregate > blast furnace slag > cement > superplasticizer > fly ash. Flow and water are the two top influencing factors with 0.875 and 0.030, respectively. Fly ash and superplasticizer are the two lowest influencing factors with 0.0099 and 0.0101, respectively.

5. Conclusions and Analysis

Through the response surface analysis method, the influence of each parameter on the neural network model at different levels is analyzed. As can be seen from the above:

- (1) In the two-layer neural network model, the learning rate has the most significant impact on the entire model, and changes in the other parameters have a weaker effect. The reason for this phenomenon is that as the learning rate increases, the network weights are updated by too large a step, the oscillation exceeds the range in which the model can train effectively and, finally, the prediction deviation of the system becomes too large. It can also be seen that the network performance of the two-layer neural network is relatively stable. A two-layer neural network constructed with the optimized parameters was used to evaluate the test set, and the results are R2 = 0.927 and RMSE = 3.373. At the same time, the unoptimized two-layer neural network was evaluated on the test set, and the result was only R2 = 0.91623.

- (2) In the three-layer neural network model, the interaction between the parameters is relatively strong, and its predictive ability is stronger than that of the two-layer neural network. A three-layer neural network constructed with the optimized parameters was used to evaluate the test set, and the results are R2 = 0.955 and RMSE = 2.781. At the same time, the unoptimized three-layer neural network was evaluated on the test set, and the result was only R2 = 0.94246. From the response surface graph, the coefficient of determination and the root mean square error, it can be seen that the three-layer neural network is more stable and more accurate.

- (3) Interestingly, it can be seen from Figure 8 and Figure 11 that the four main factors affecting the slump are flow, water, coarse aggregate and fine aggregate, which shows that the two-layer and three-layer neural networks follow the same pattern in evaluating the factors affecting the slump. There are nevertheless differences between the two networks. The two-layer neural network ranks coarse aggregate as the second most influential factor, while the three-layer neural network ranks water second. In addition, the influence factor of flow evaluated by the two-layer neural network exceeds 0.9, while the influence factors evaluated by the three-layer neural network are more reasonably distributed across the variables. Therefore, the prediction performance of the three-layer neural network is better than that of the two-layer neural network.

This paper uses the RSM to optimize the parameters of the BP neural network model for concrete slump. Models built with the optimized parameters were trained on the training set and verified on the test set, where they performed better than the unoptimized benchmark models. However, the research work in this paper still has the following shortcomings:

- (1)

- The concrete slump data set used in this experiment is small, which affects the accuracy of the conclusions to some extent. Expanding the data set could therefore give more accurate and reliable results.

- (2)

- The BP neural network used in this paper behaves like a “black box”, and many model parameters are not interpretable. A next step is to apply state-of-the-art deep learning algorithms (e.g., interpretable neural networks) to concrete slump prediction.

Author Contributions

Conceptualization, Y.Z., J.W. and L.F.; data curation, Y.L. (Yunrong Luo); formal analysis, Y.C. and L.L.; methodology, Y.L. (Yong Liu) and Y.C.; software, Y.Z.; supervision, L.F.; writing—original draft, Y.C.; writing—review and editing, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).