Analysis of the Region of Interest According to CNN Structure in Hierarchical Pattern Surface Inspection Using CAM

Abstract

1. Introduction

2. Methods

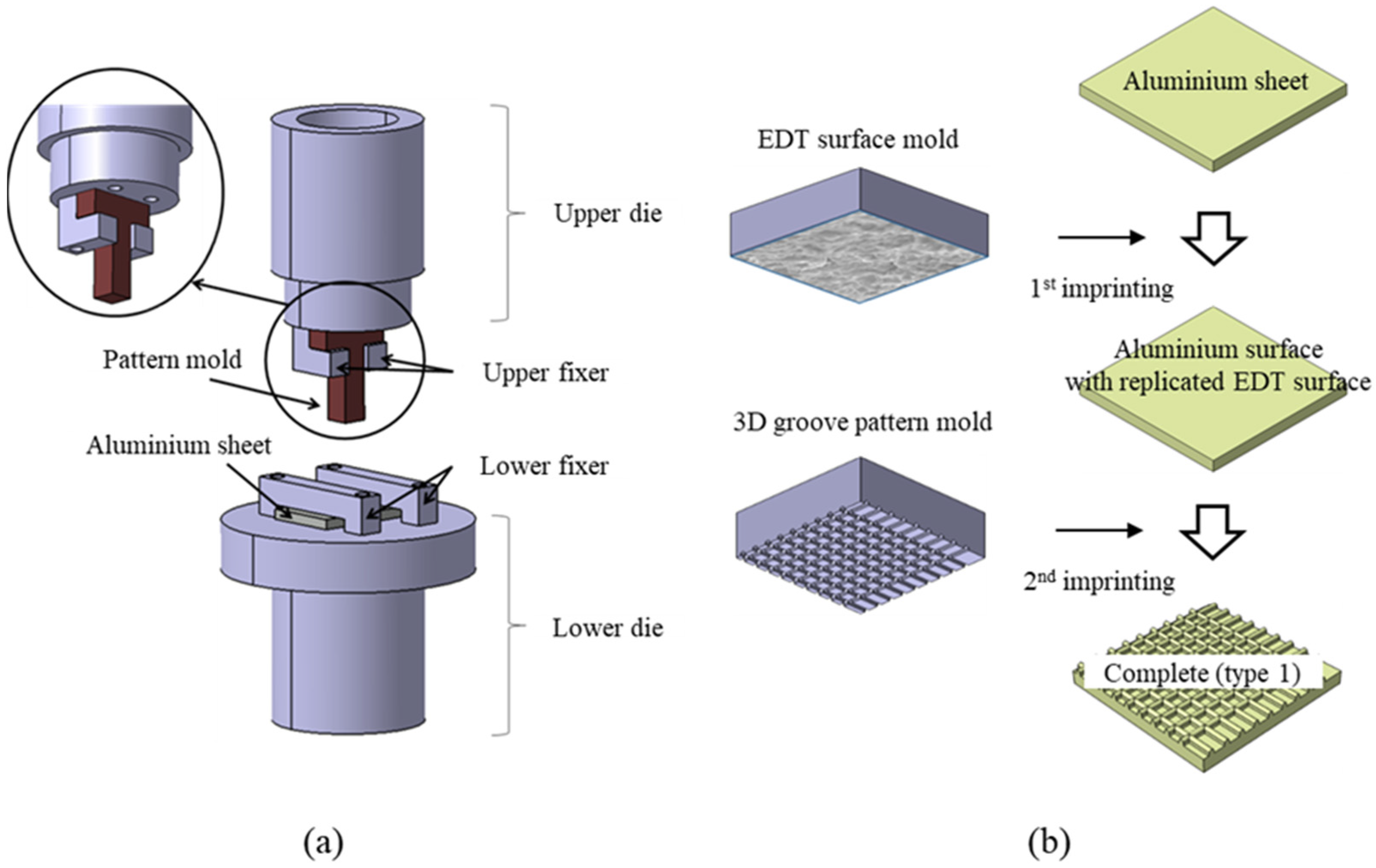

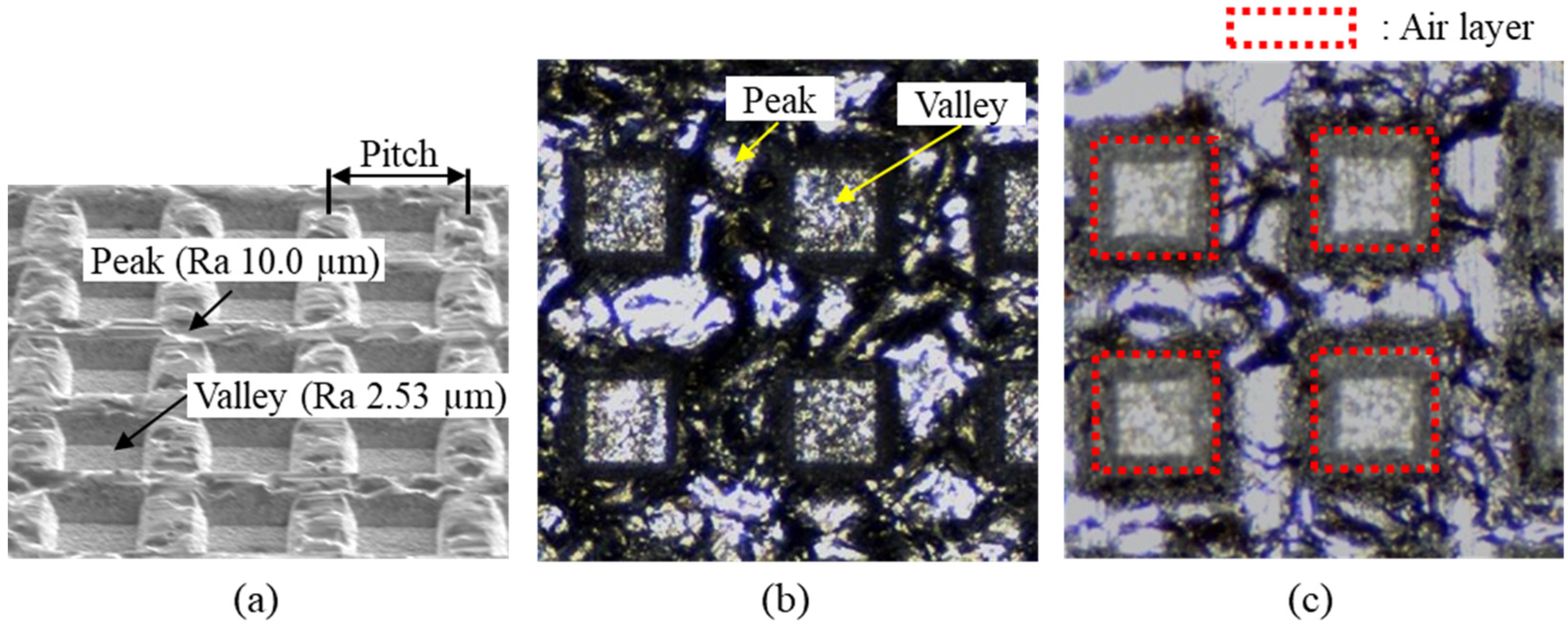

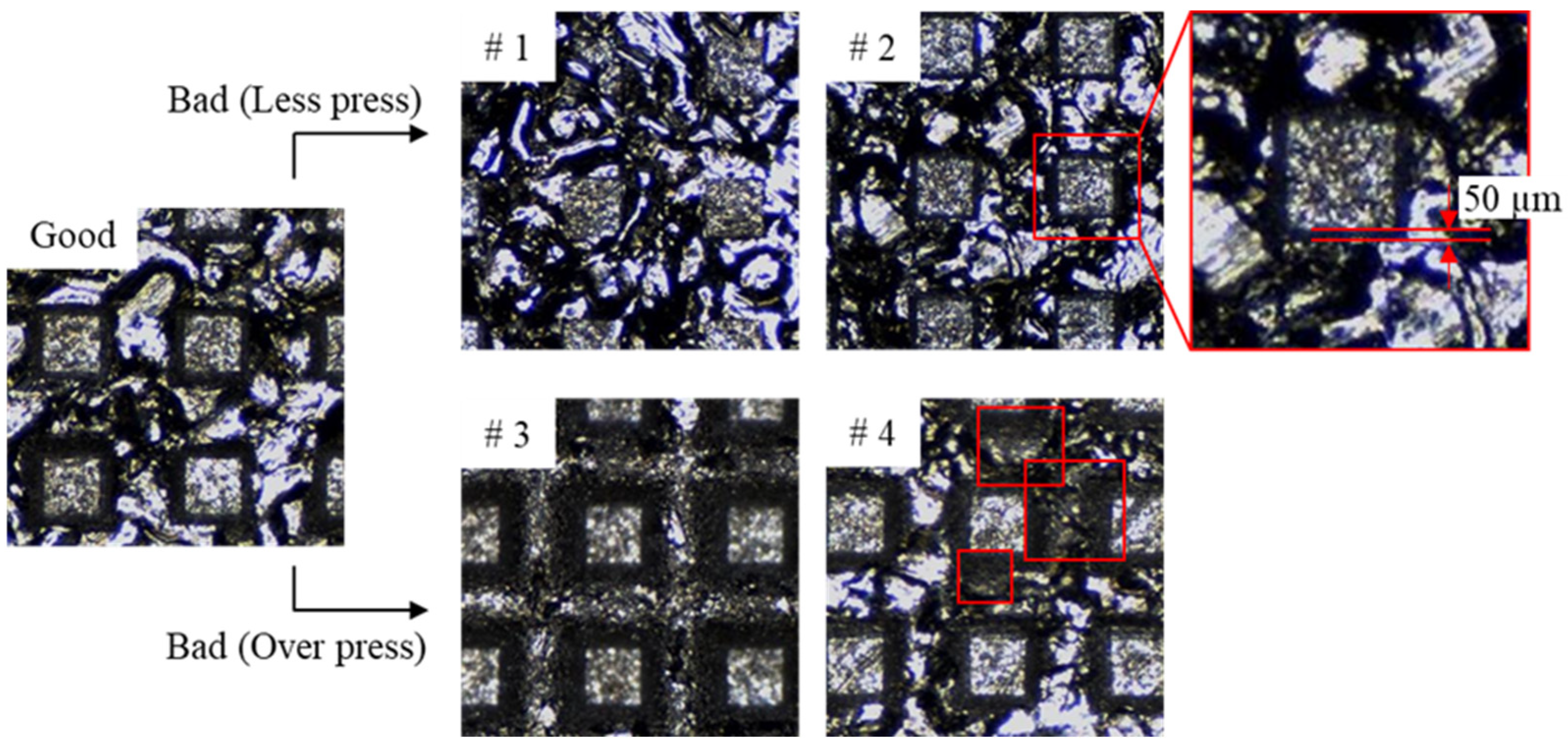

2.1. Imprinting Process

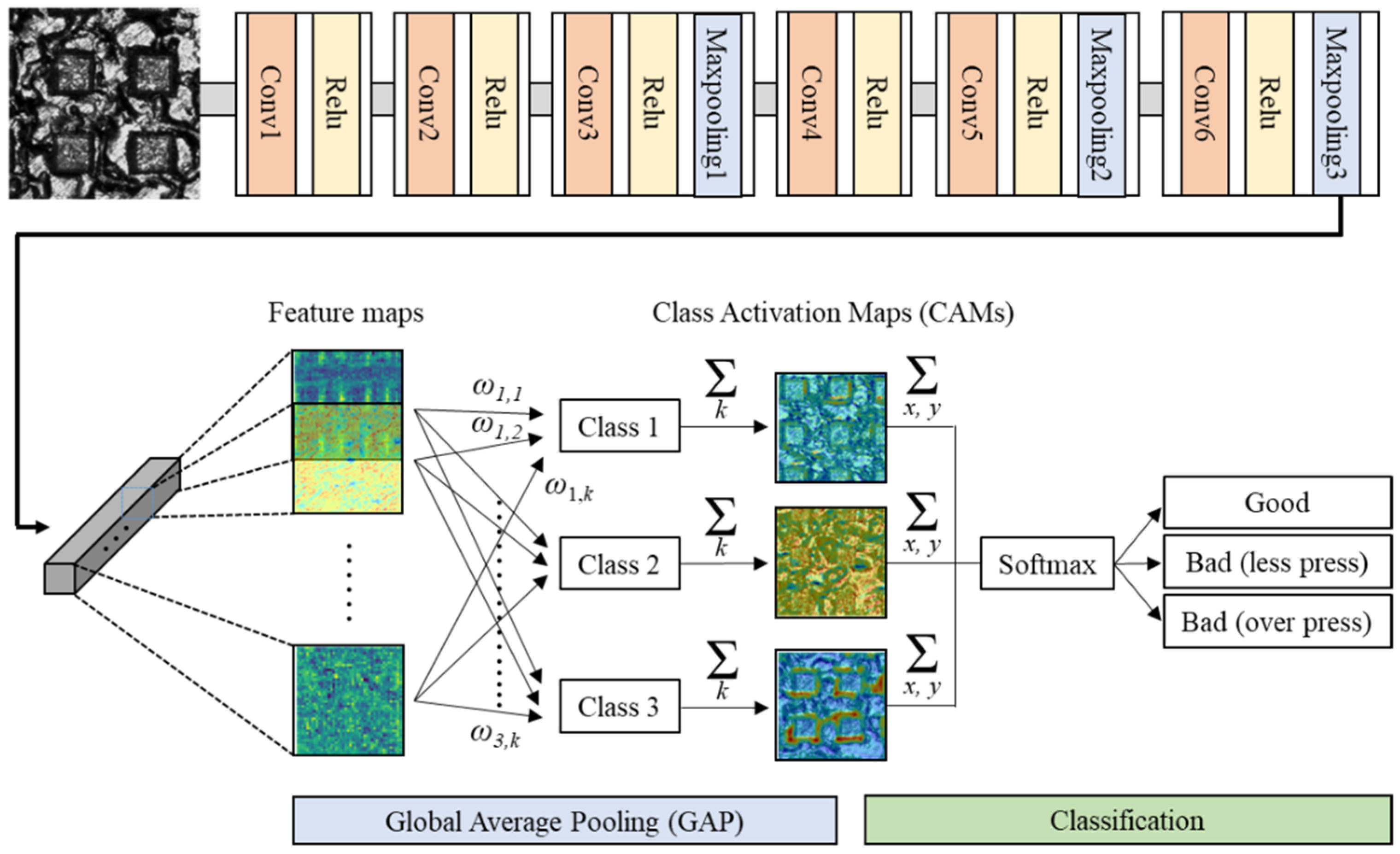

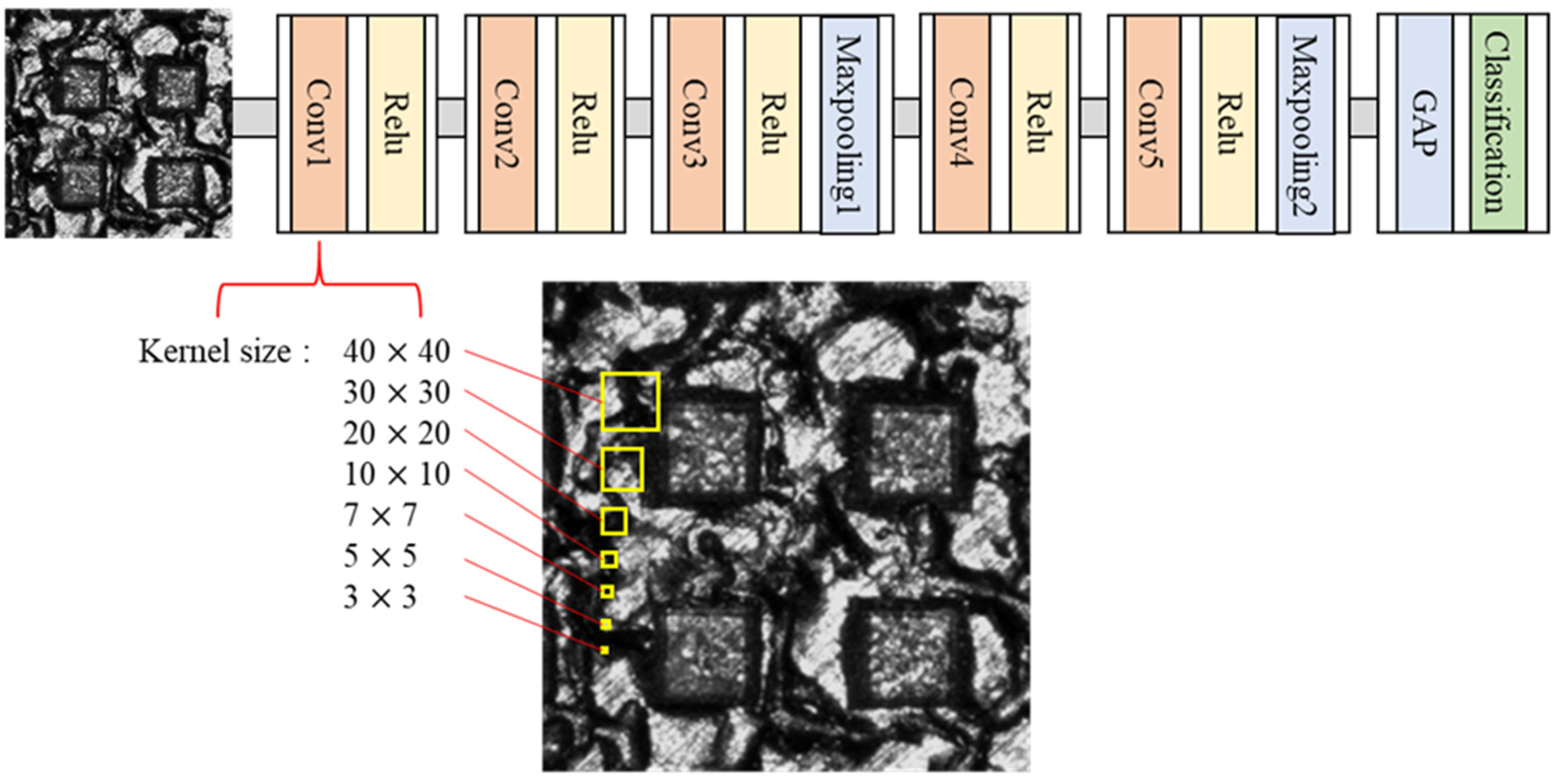

2.2. Defect Detection Method Based on CNN with CAM
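As a minimal sketch of the general class activation mapping (CAM) idea, and not necessarily the exact implementation used in this work: the map for a class is the weighted sum of the last convolutional feature maps, using the weights that connect each globally averaged feature map to that class. The function names and the toy 2 × 2 feature maps below are hypothetical and only illustrate the arithmetic.

```python
from typing import List

Map2D = List[List[float]]


def global_average_pool(feature_map: Map2D) -> float:
    """Mean of all spatial positions of one feature map."""
    total = sum(sum(row) for row in feature_map)
    count = len(feature_map) * len(feature_map[0])
    return total / count


def class_activation_map(feature_maps: List[Map2D], class_weights: List[float]) -> Map2D:
    """CAM(x, y) = sum over k of w_k * f_k(x, y) for a single class."""
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(class_weights, feature_maps):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


def class_score(feature_maps: List[Map2D], class_weights: List[float]) -> float:
    """Class score (before softmax) = sum over k of w_k * GAP(f_k)."""
    return sum(w * global_average_pool(f) for w, f in zip(class_weights, feature_maps))


# Hypothetical toy input: two 2 x 2 feature maps and their weights for one class.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
weights = [0.5, 1.0]

cam = class_activation_map(feature_maps, weights)
score = class_score(feature_maps, weights)
```

Because averaging and weighting are both linear, the class score equals the spatial mean of the CAM, which is why high values in the map can be read as the regions that drive the classification.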

2.3. Evaluation Metrics

3. Results and Discussion

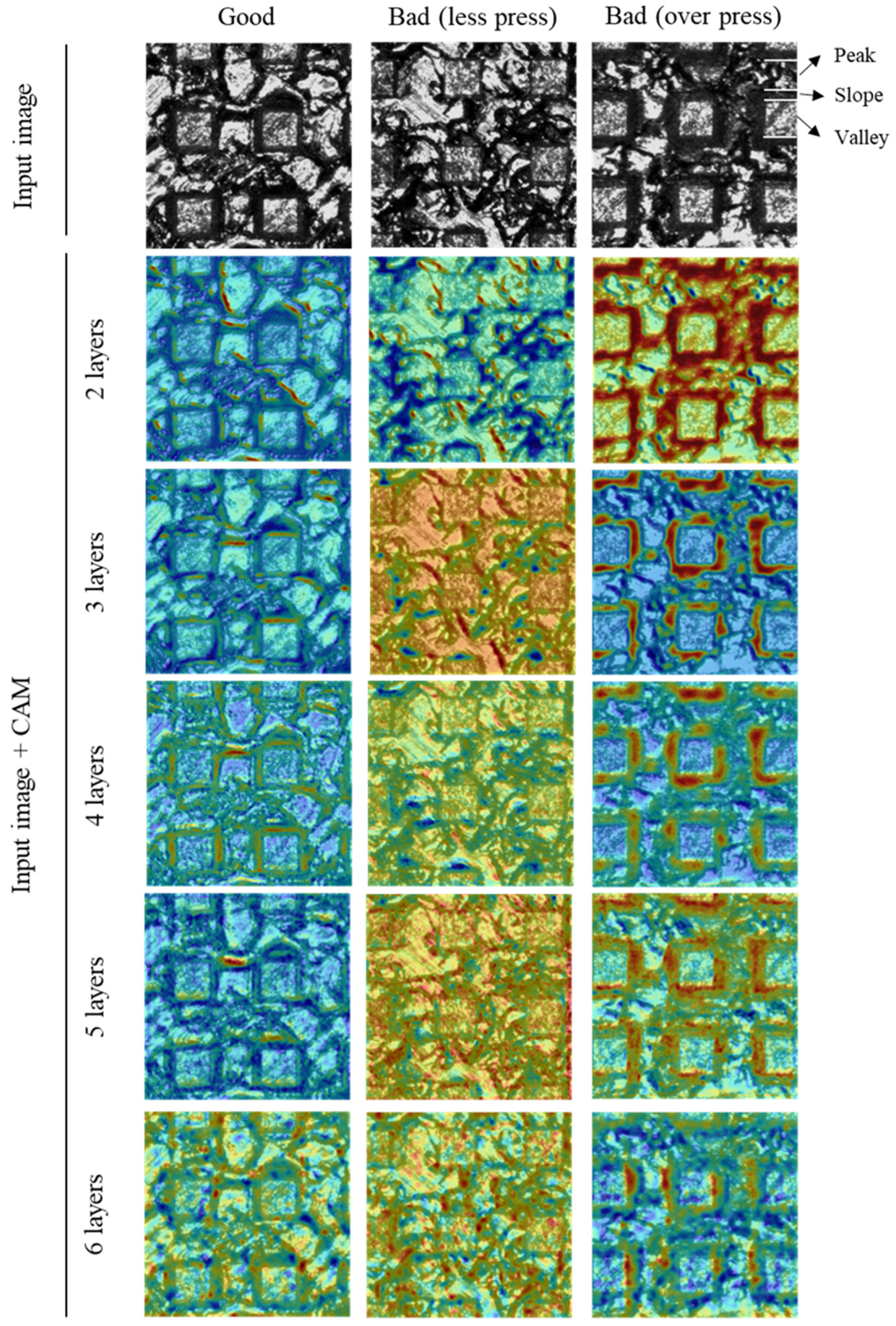

3.1. Effect of the Number of Convolutional Layers

3.1.1. The Reason Why TO_Rate Is Higher Than TL_Rate

3.1.2. The Effect of CNN Model Depth

3.1.3. The Reason Why Precision Is Higher Than Recall

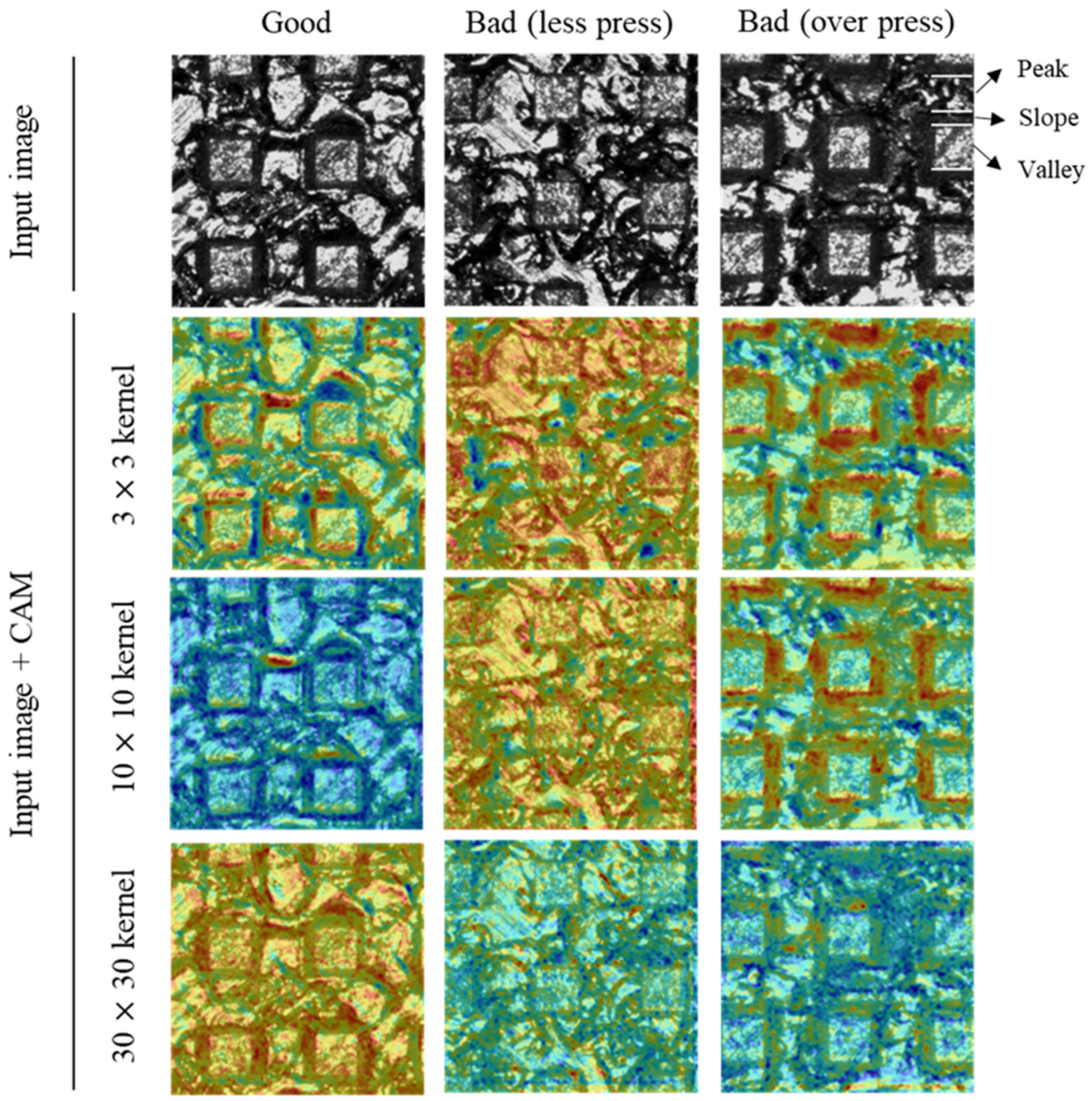

3.2. Effect of the Kernel Size

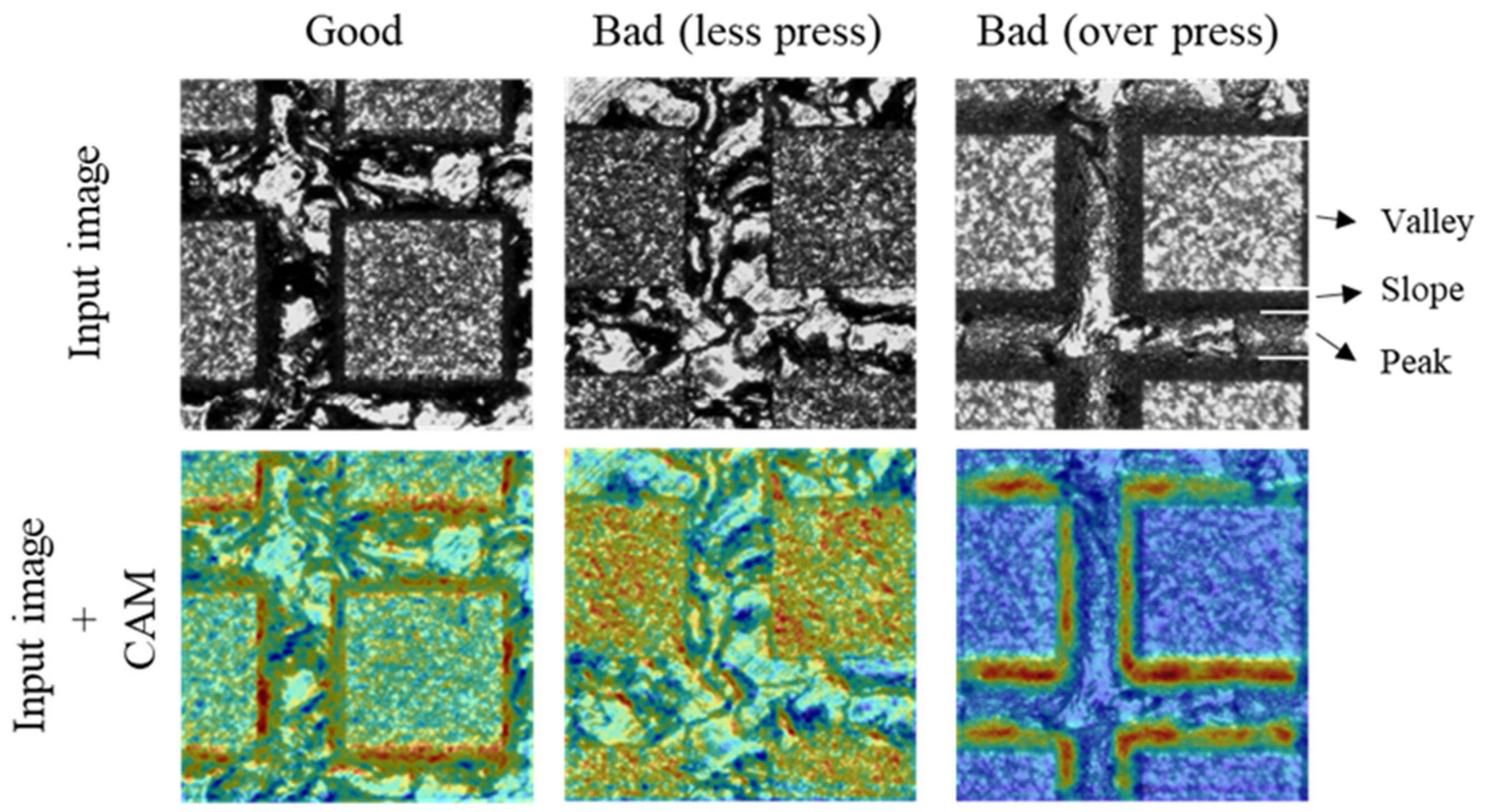

3.3. Compatibility

3.4. Comparison with Other CNN Structures

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Name | Structures | Kernel Size | Channel | Output Shape (x × y × k) |
|---|---|---|---|---|
| Input image | - | - | 1 (gray) | 360 × 360 × 1 |
| 1st layer | Conv 1 | 10 × 10 | 64 | 360 × 360 × 64 |
| 2nd layer | Conv 2 | 10 × 10 | 64 | 360 × 360 × 64 |
| 3rd layer | Conv 3 | 10 × 10 | 64 | 360 × 360 × 64 |
| | Max pooling 1 | 2 × 2 | - | 180 × 180 × 64 |
| 4th layer | Conv 4 | 10 × 10 | 64 | 180 × 180 × 64 |
| 5th layer | Conv 5 | 10 × 10 | 32 | 180 × 180 × 32 |
| | Max pooling 2 | 2 × 2 | - | 90 × 90 × 32 |
| 6th layer | Conv 6 | 10 × 10 | 32 | 90 × 90 × 32 |
| | Max pooling 3 | 2 × 2 | - | 45 × 45 × 32 |
| GAP | - | - | - | Nx × Ny × 3 |
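The output shapes in the layer table can be traced with a short sketch. It assumes stride-1 convolutions with "same" padding (spatial size unchanged, depth equal to the channel count) and 2 × 2 max pooling with stride 2 (spatial size halved); neither assumption is stated in the table itself, and the names `LAYERS` and `trace_shapes` are hypothetical.

```python
from typing import List, Optional, Tuple

# (kind, kernel size, output channels) for the six-layer model.
# Assumed: stride-1 "same" convolutions and stride-2 pooling.
LAYERS: List[Tuple[str, int, Optional[int]]] = [
    ("conv", 10, 64),
    ("conv", 10, 64),
    ("conv", 10, 64),
    ("pool", 2, None),
    ("conv", 10, 64),
    ("conv", 10, 32),
    ("pool", 2, None),
    ("conv", 10, 32),
    ("pool", 2, None),
]


def trace_shapes(height: int, width: int, channels: int,
                 layers: List[Tuple[str, int, Optional[int]]]) -> List[Tuple[int, int, int]]:
    """Return the (x, y, k) output shape after every layer."""
    shapes = []
    for kind, kernel, out_channels in layers:
        if kind == "conv":
            # "same" padding keeps x and y; depth becomes the channel count.
            channels = out_channels
        elif kind == "pool":
            # Non-overlapping pooling divides x and y by the window size.
            height //= kernel
            width //= kernel
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        shapes.append((height, width, channels))
    return shapes


shapes = trace_shapes(360, 360, 1, LAYERS)
for (kind, kernel, _), shape in zip(LAYERS, shapes):
    print(f"{kind:4s} {kernel:2d} x {kernel:<2d} -> {shape[0]} x {shape[1]} x {shape[2]}")
```

Under these assumptions the feature maps entering the GAP stage are 45 × 45 with 32 channels, so each CAM has a native resolution of 45 × 45 before any upsampling to the 360 × 360 input.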

| Type | Training Set | Test Set | Sum |
|---|---|---|---|
| Good | 1118 | 271 | 1389 |
| Bad (less press) | 1133 | 276 | 1409 |
| Bad (over press) | 1109 | 293 | 1402 |
| Total | 3360 | 840 | 4200 |

| | | Actual condition: Normal | Actual condition: Fault |
|---|---|---|---|
| Predicted condition | Normal | TP | FP |
| | Fault | FN | TN |
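Accuracy, precision, and recall follow from the four cells of the confusion matrix, with "Normal" treated as the positive class. The sketch below uses the standard definitions; the function name and the example counts are hypothetical and are not results from this study.

```python
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Standard metrics from confusion-matrix counts (Normal = positive class)."""
    total = tp + fp + fn + tn
    return {
        # Share of all samples that were classified correctly.
        "accuracy": (tp + tn) / total,
        # Share of samples predicted Normal that are actually Normal.
        "precision": tp / (tp + fp),
        # Share of actually Normal samples that were predicted Normal.
        "recall": tp / (tp + fn),
    }


# Hypothetical counts chosen only to make the arithmetic easy to follow.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=70)
for name, value in metrics.items():
    print(f"{name}: {value * 100:.1f}%")
```

With these counts, precision exceeds recall because false negatives (Normal samples predicted as Fault) outnumber false positives.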

| No. of Layers | Accuracy (%) | Precision (%) | Recall (%) | TL_Rate (%) | TO_Rate (%) |
|---|---|---|---|---|---|
| 2 layers | 78 | 75.6 | 82.7 | 66 | 75 |
| 3 layers | 87.9 | 92.7 | 81.4 | 92.5 | 97.5 |
| 4 layers | 88 | 96.4 | 82 | 97 | 98.7 |
| 5 layers | 92.7 | 97.1 | 88 | 95 | 99 |
| 6 layers | 92 | 92 | 92 | 94 | 96.4 |

| Kernel Size | Accuracy (%) | Precision (%) | Recall (%) | TL_Rate (%) | TO_Rate (%) |
|---|---|---|---|---|---|
| 3 × 3 | 92.7 | 90.5 | 95.4 | 88 | 92.2 |
| 5 × 5 | 93.7 | 91.7 | 96 | 83.5 | 95.7 |
| 7 × 7 | 92.4 | 93.2 | 91.4 | 90 | 98.9 |
| 10 × 10 | 92.7 | 97.1 | 88 | 95 | 99 |
| 20 × 20 | 91 | 94.2 | 87.4 | 94.4 | 98.5 |
| 30 × 30 | 75.7 | 98.7 | 52 | 99.2 | 96 |
| 40 × 40 | 60 | 94.1 | 21.4 | 100 | 97 |

| Name | Structures | Kernel Size | Channel | Output Shape (x × y × k) |
|---|---|---|---|---|
| Input image | - | - | 1 (gray) | 360 × 360 × 1 |
| 1st layer | Conv 1 | 7 × 7 | 16 | 360 × 360 × 16 |
| | Max pooling 1 | 2 × 2 | - | 180 × 180 × 16 |
| 2nd layer | Conv 2 | 5 × 5 | 32 | 180 × 180 × 32 |
| 3rd layer | Conv 3 | 5 × 5 | 32 | 180 × 180 × 32 |
| | Max pooling 2 | 2 × 2 | - | 90 × 90 × 32 |
| 4th layer | Conv 4 | 3 × 3 | 64 | 90 × 90 × 64 |
| 5th layer | Conv 5 | 3 × 3 | 64 | 90 × 90 × 64 |
| | Max pooling 3 | 2 × 2 | - | 45 × 45 × 64 |
| 6th layer | Fully-connected 1 | - | - | 512 |
| | Fully-connected 2 | - | - | 512 |
| Output | - | - | - | 3 |
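The size of this fully connected structure can be estimated from the table alone. The sketch below counts trainable weights, assuming every layer has a bias term and that the 45 × 45 × 64 output of the last pooling step is flattened and fed directly into the first fully connected layer; both are assumptions, and the helper names are hypothetical.

```python
def conv_params(kernel: int, in_channels: int, out_channels: int) -> int:
    """Weights plus biases of a square-kernel convolution layer."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


# (kernel size, input channels, output channels) for Conv 1 to Conv 5.
conv_spec = [(7, 1, 16), (5, 16, 32), (5, 32, 32), (3, 32, 64), (3, 64, 64)]
conv_total = sum(conv_params(k, c_in, c_out) for k, c_in, c_out in conv_spec)

# Assumed: the last pooled feature maps are flattened before Fully-connected 1.
flattened = 45 * 45 * 64
dense_spec = [(flattened, 512), (512, 512), (512, 3)]
dense_total = sum(dense_params(n_in, n_out) for n_in, n_out in dense_spec)

print(f"flattened inputs:       {flattened:,}")
print(f"convolution parameters: {conv_total:,}")
print(f"dense parameters:       {dense_total:,}")
```

Under these assumptions almost all parameters sit in the first fully connected layer, which is the part that a global-average-pooling head does not need.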

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moon, I.Y.; Lee, H.W.; Kim, S.-J.; Oh, Y.-S.; Jung, J.; Kang, S.-H. Analysis of the Region of Interest According to CNN Structure in Hierarchical Pattern Surface Inspection Using CAM. Materials 2021, 14, 2095. https://doi.org/10.3390/ma14092095
