Using the Machine Vision Method to Develop an On-machine Insert Condition Monitoring System for Computer Numerical Control Turning Machine Tools

Abstract

1. Introduction

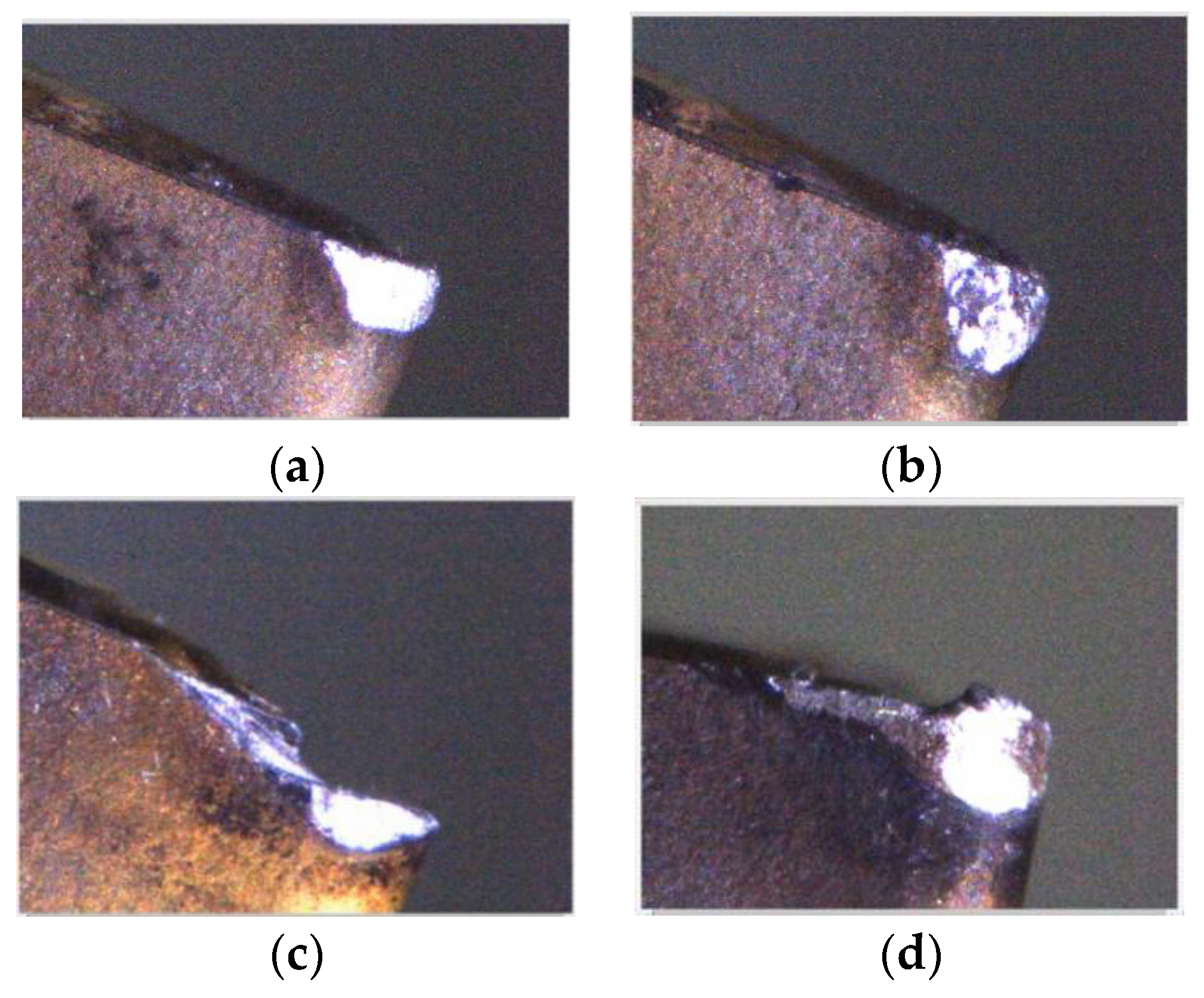

- Development of an on-machine insert condition monitoring system that can identify four insert conditions in a single inspection: fracture, built-up edge (BUE), chipping, and flank wear.

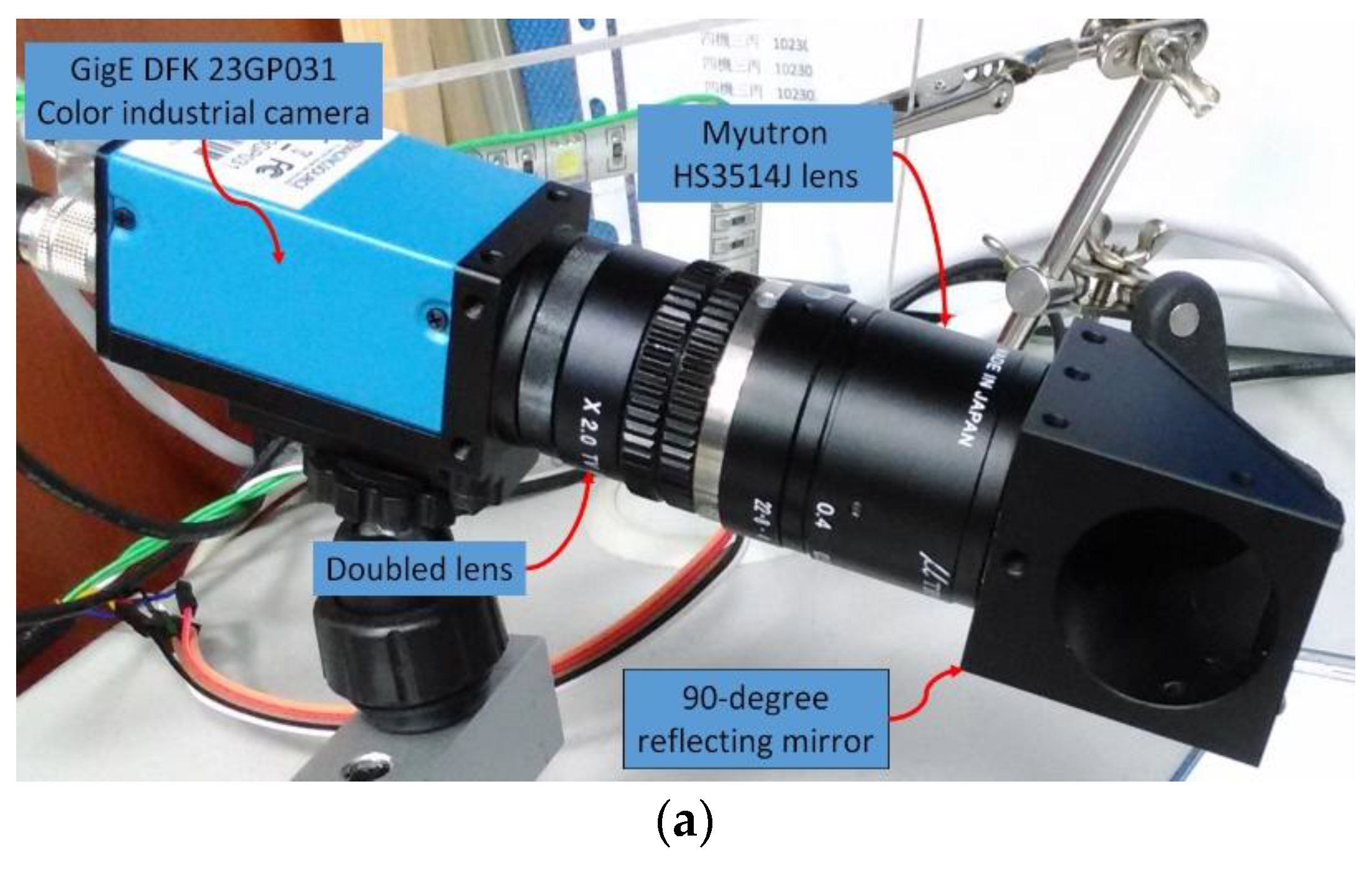

- Development of a mountable vision system with different light sources that captures good-quality insert images on the machine, so that the images can be analyzed accurately under different lighting conditions.

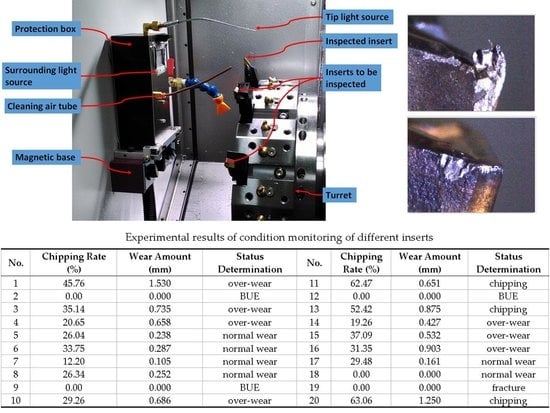

- Development of a contour and texture fusion inspection method that reduces the influence of environmental interference and accurately identifies insert conditions during inspection.

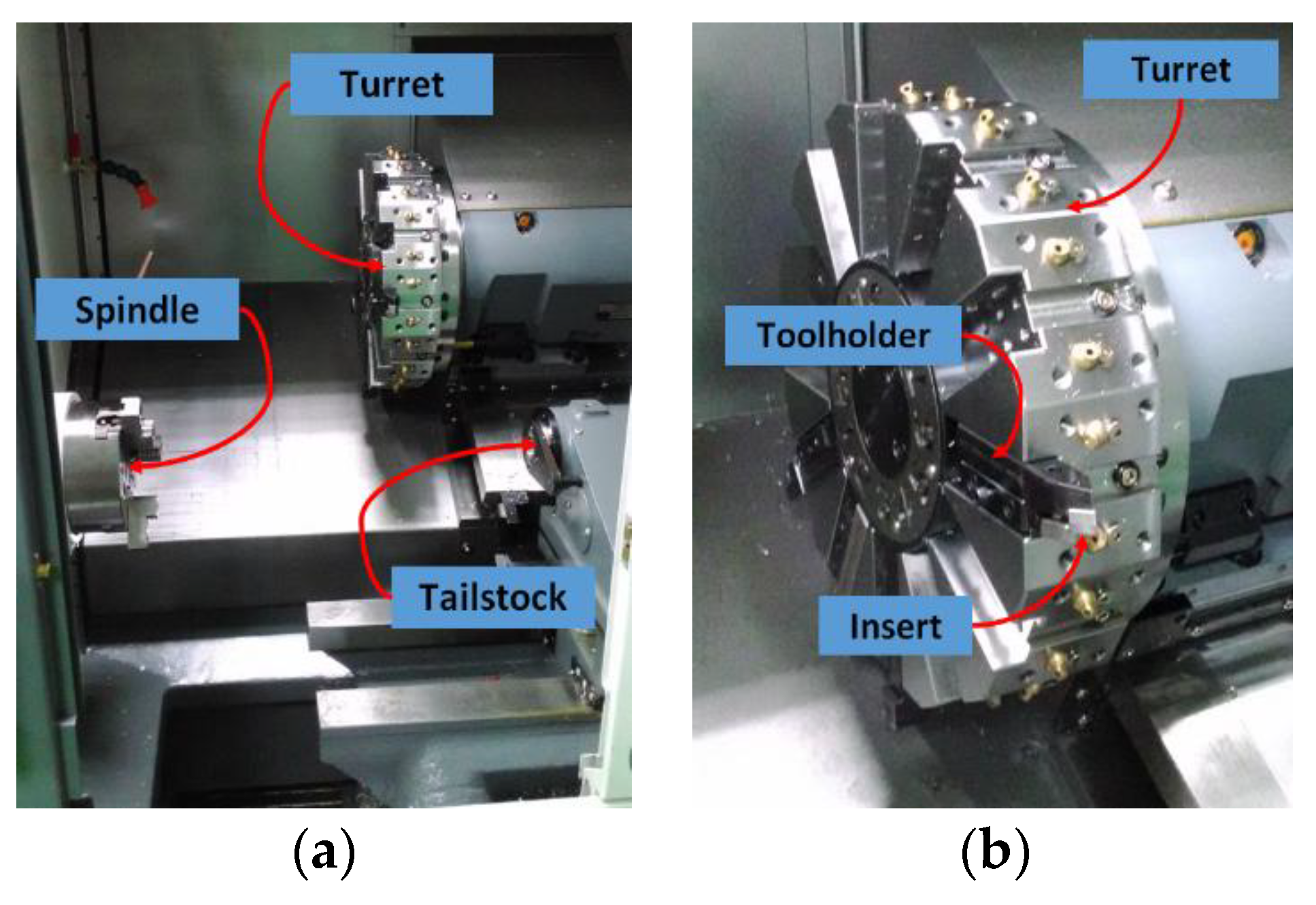

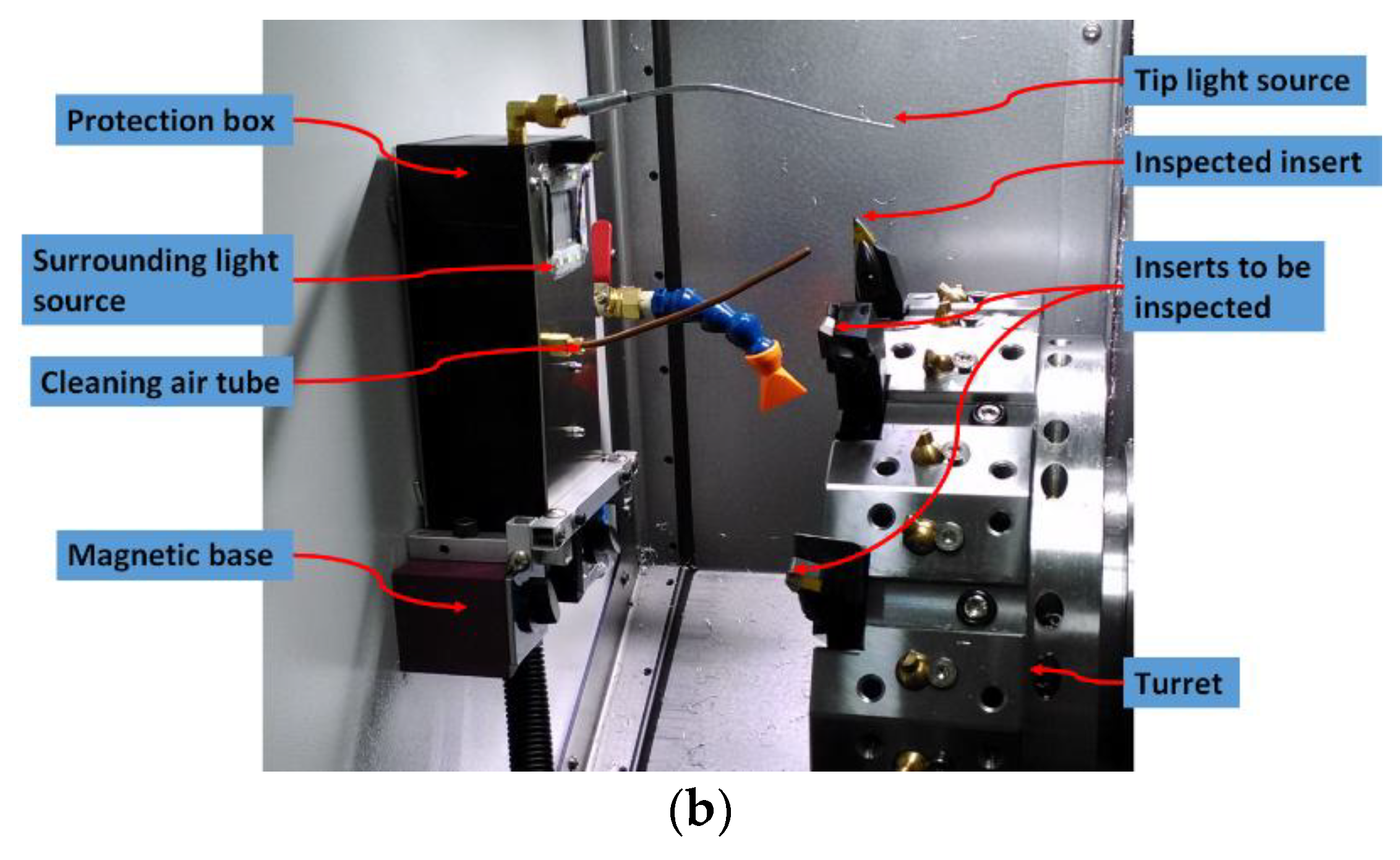

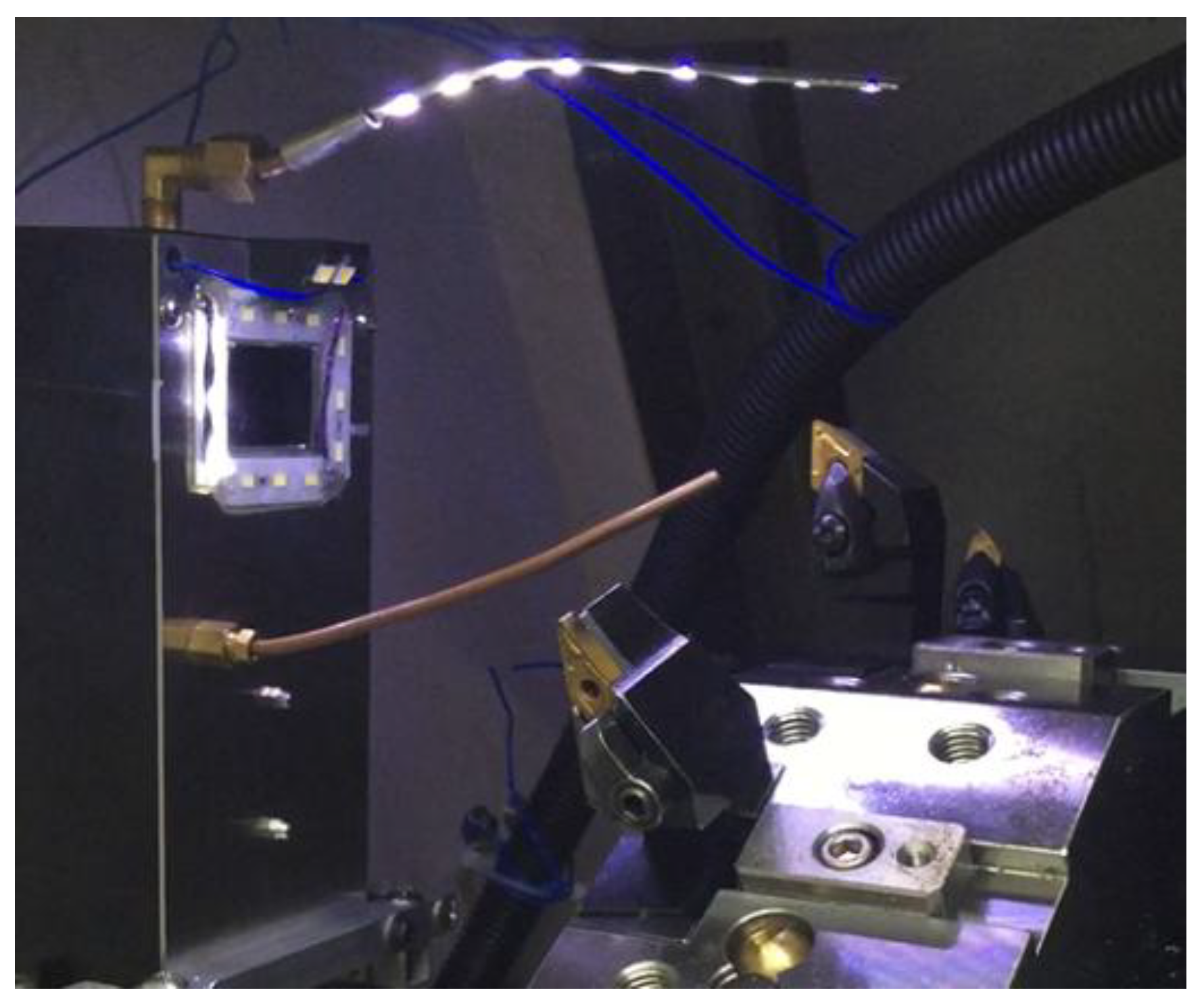

2. Introduction to the Experimental System and Equipment

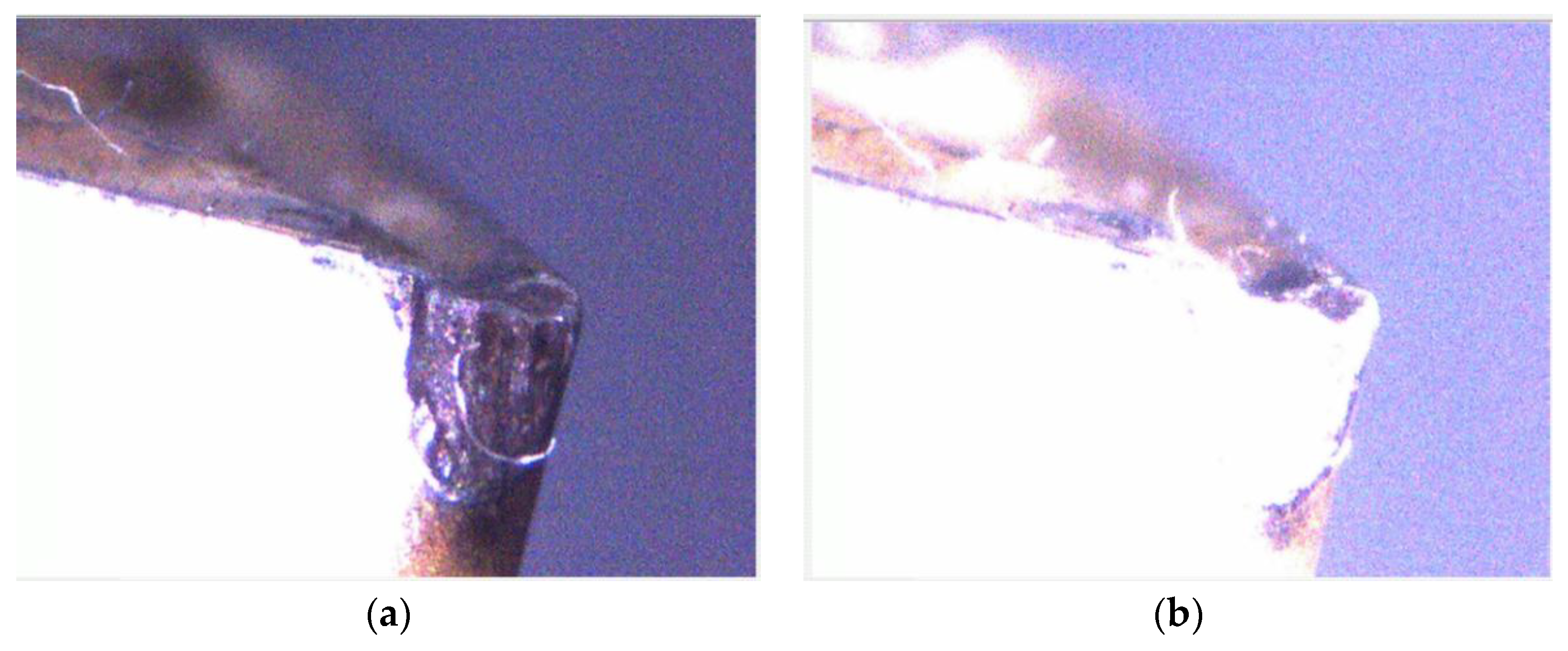

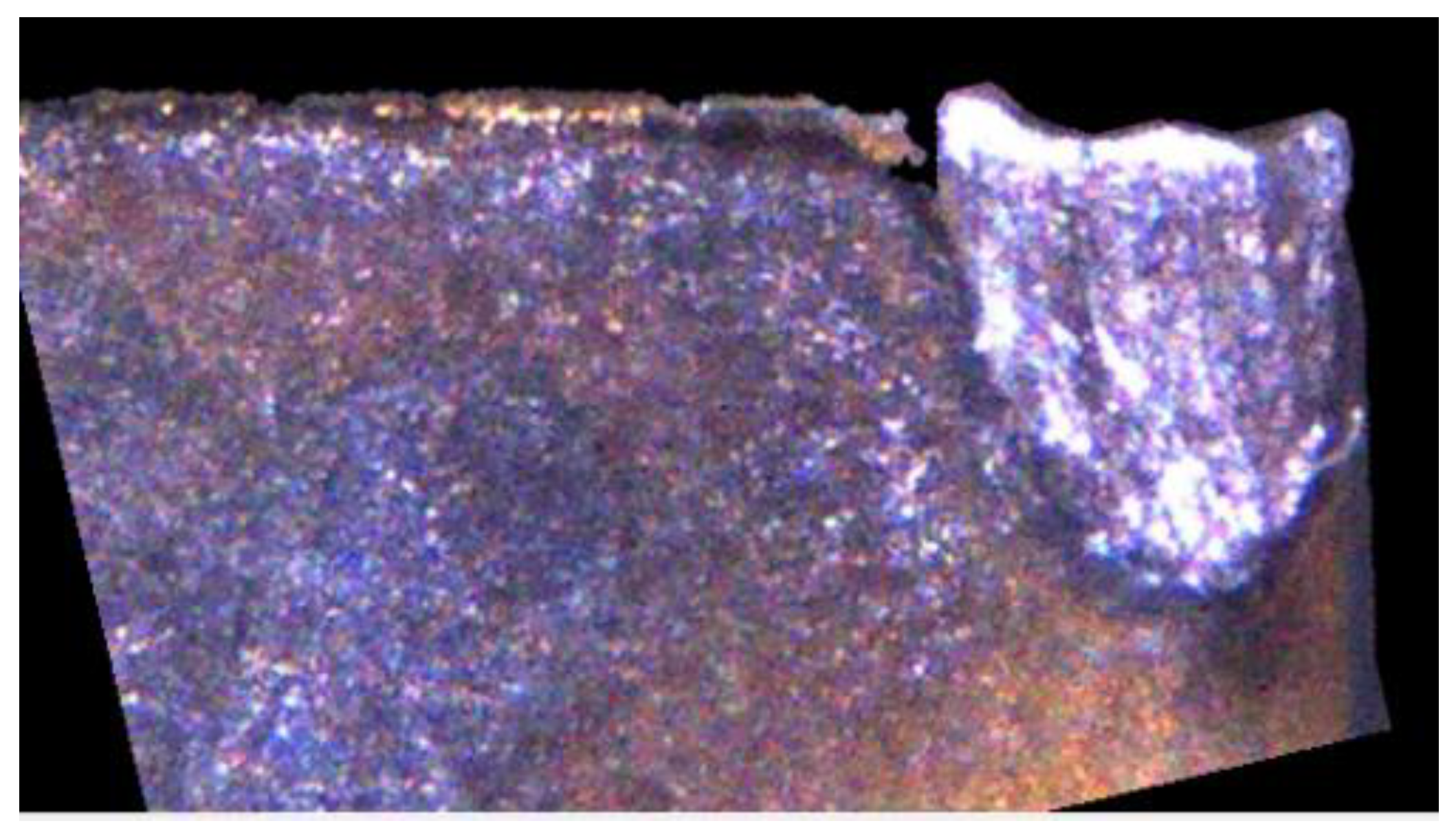

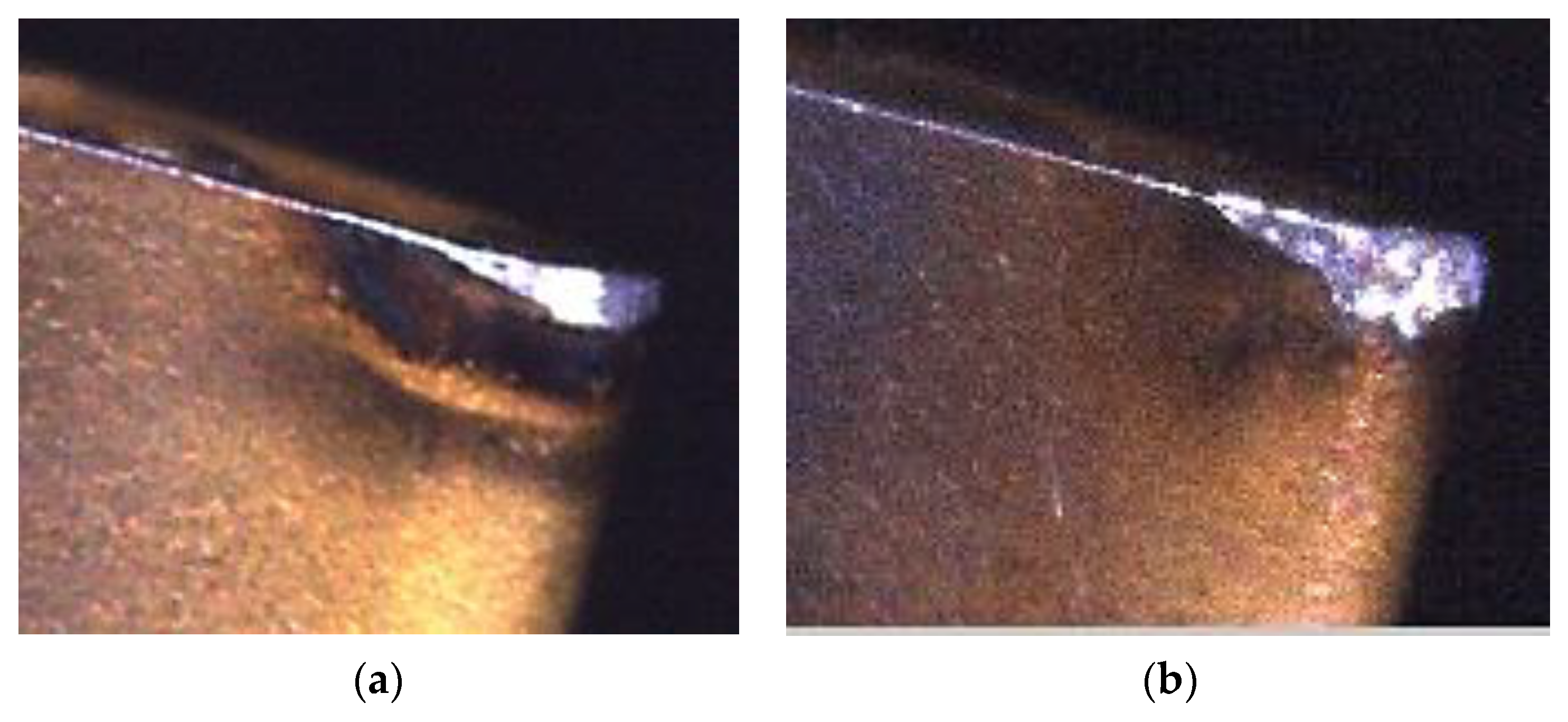

3. Insert Image Capture Process

4. Insert Condition Monitoring Classification Process

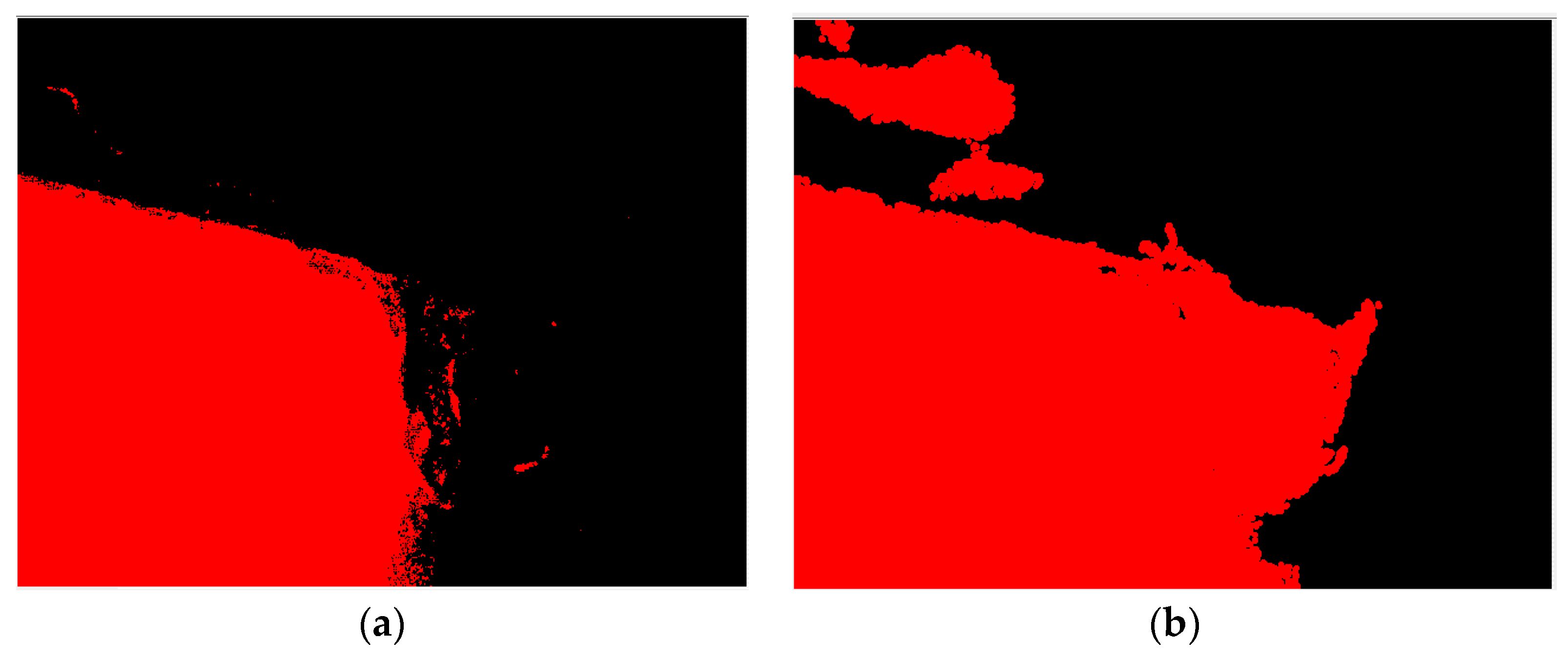

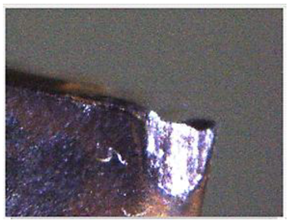

4.1. Insert Outer Profile Construction

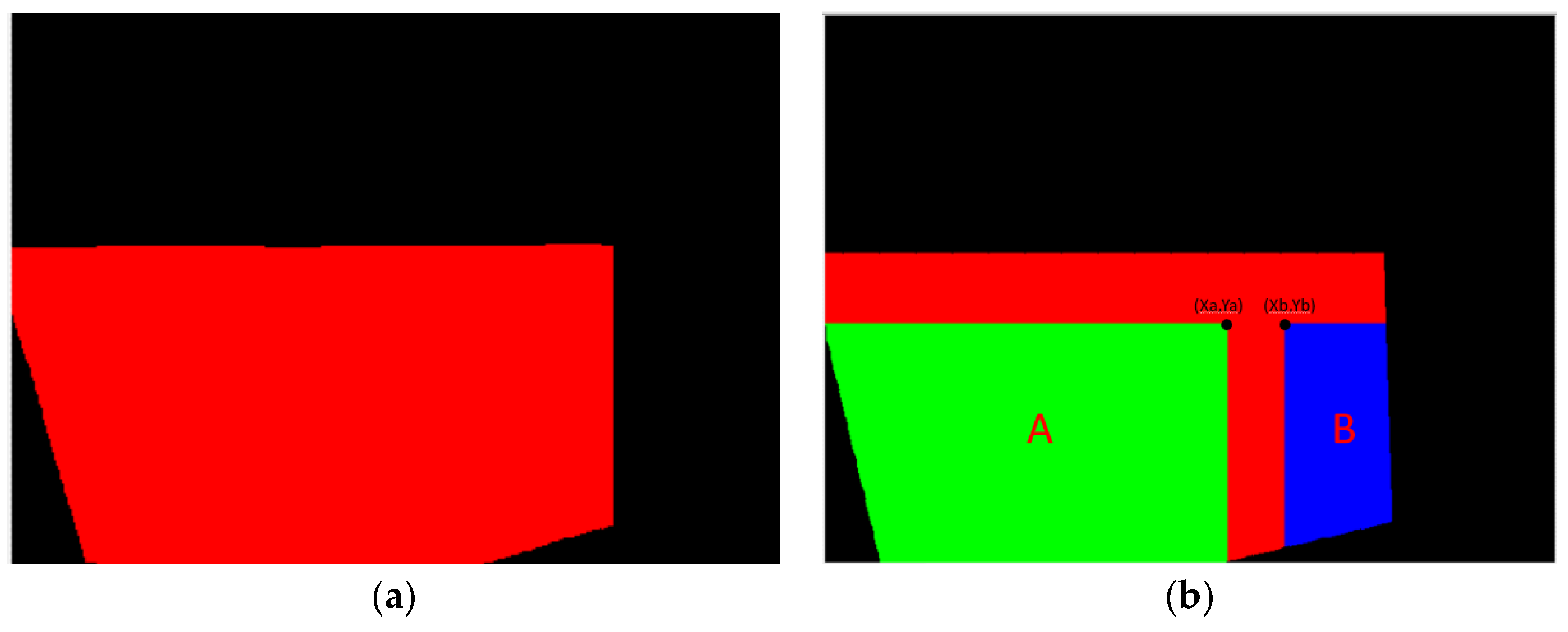

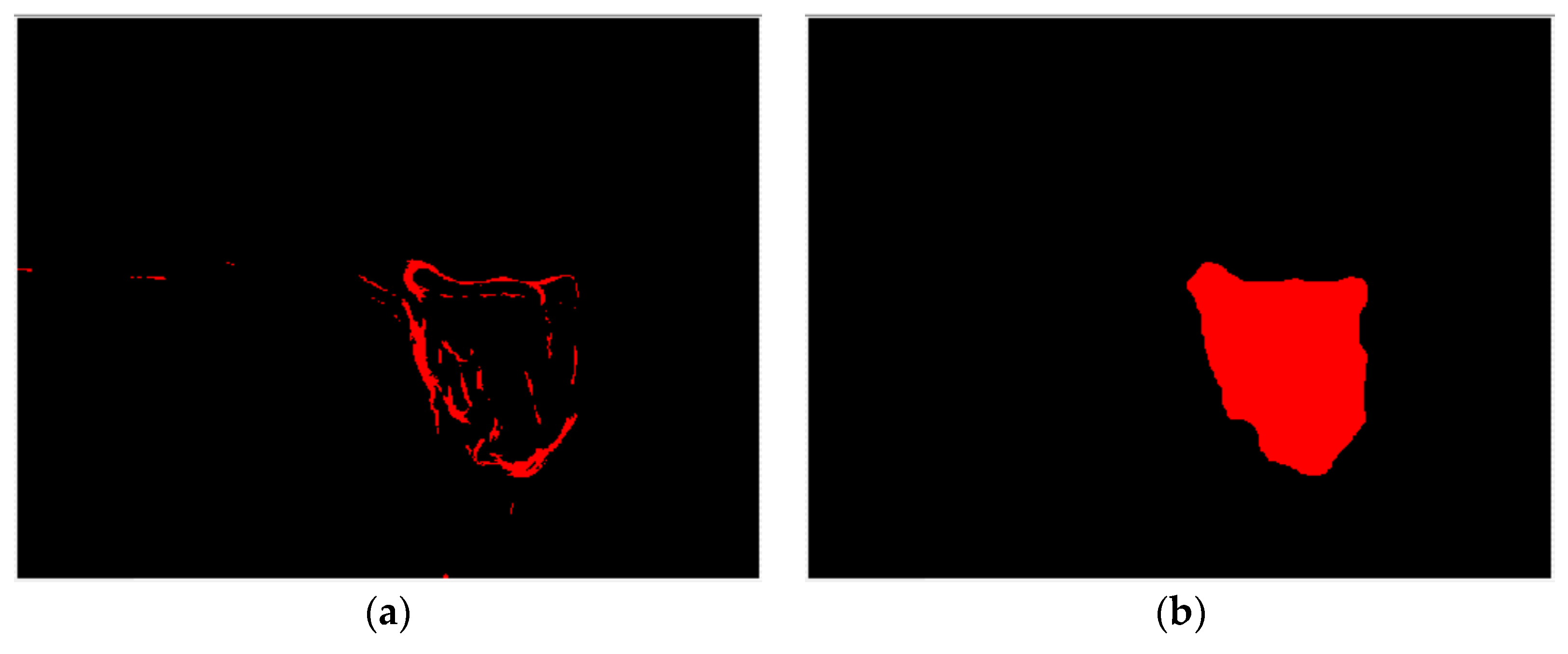

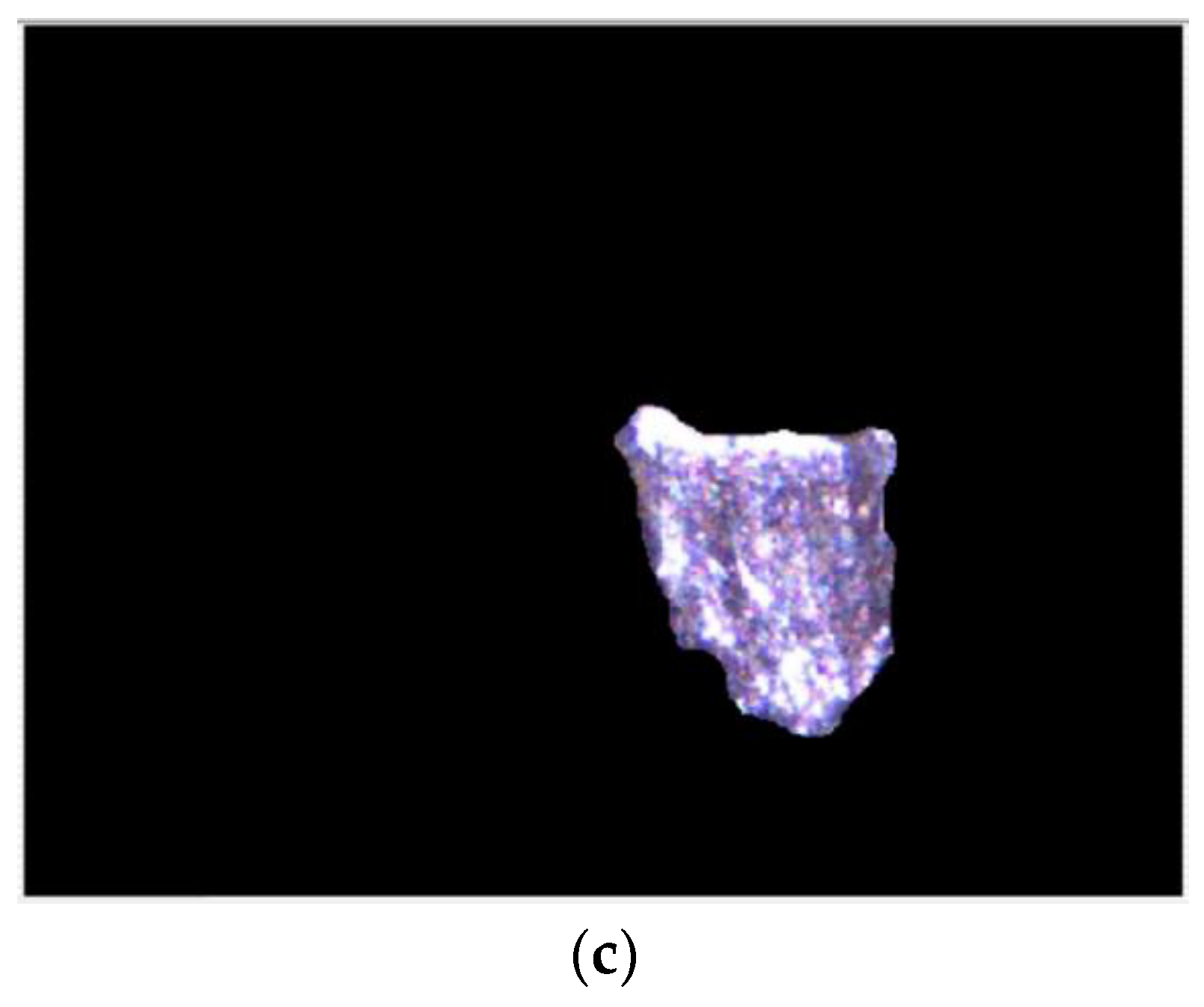

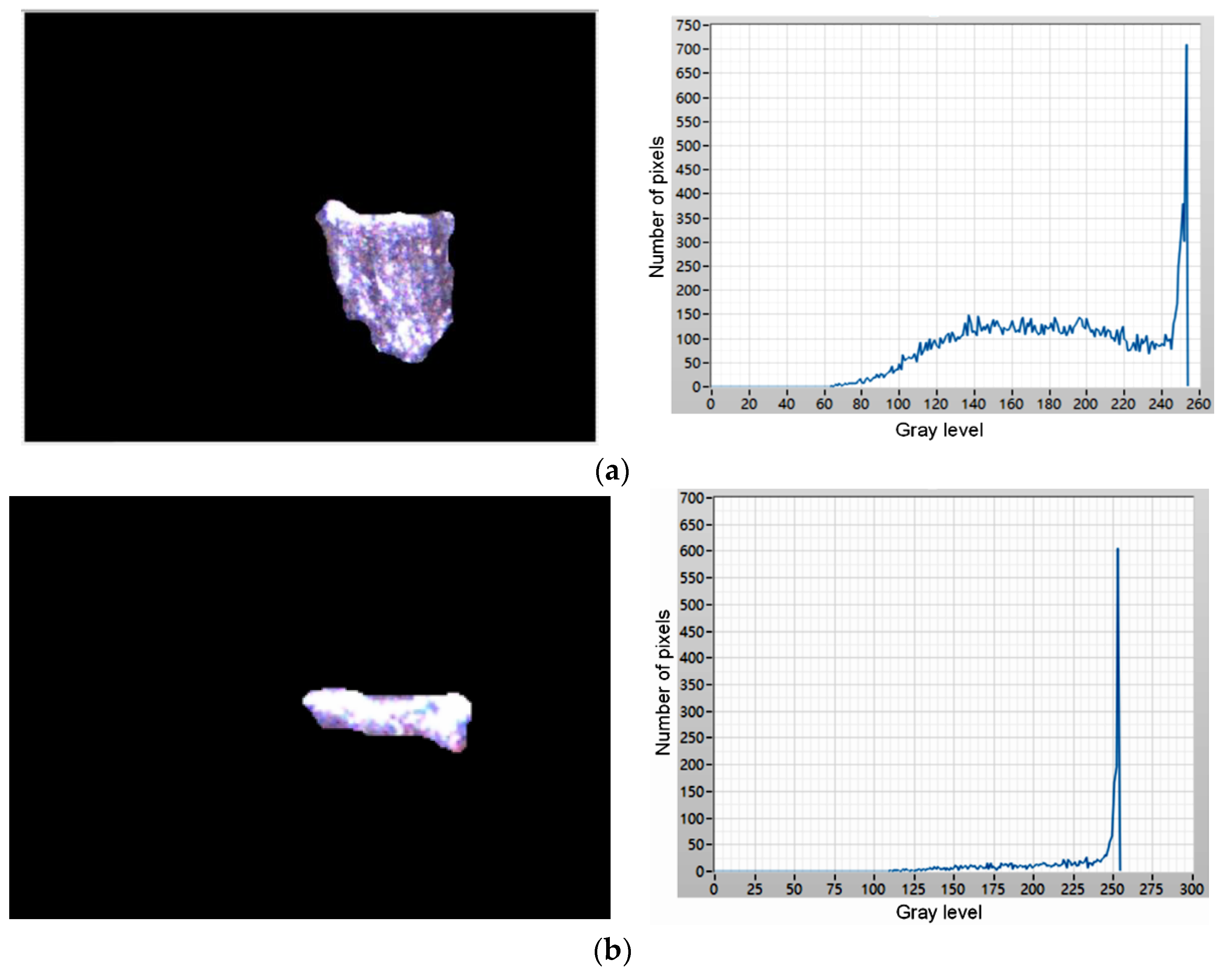

4.2. Insert Status Region Capture

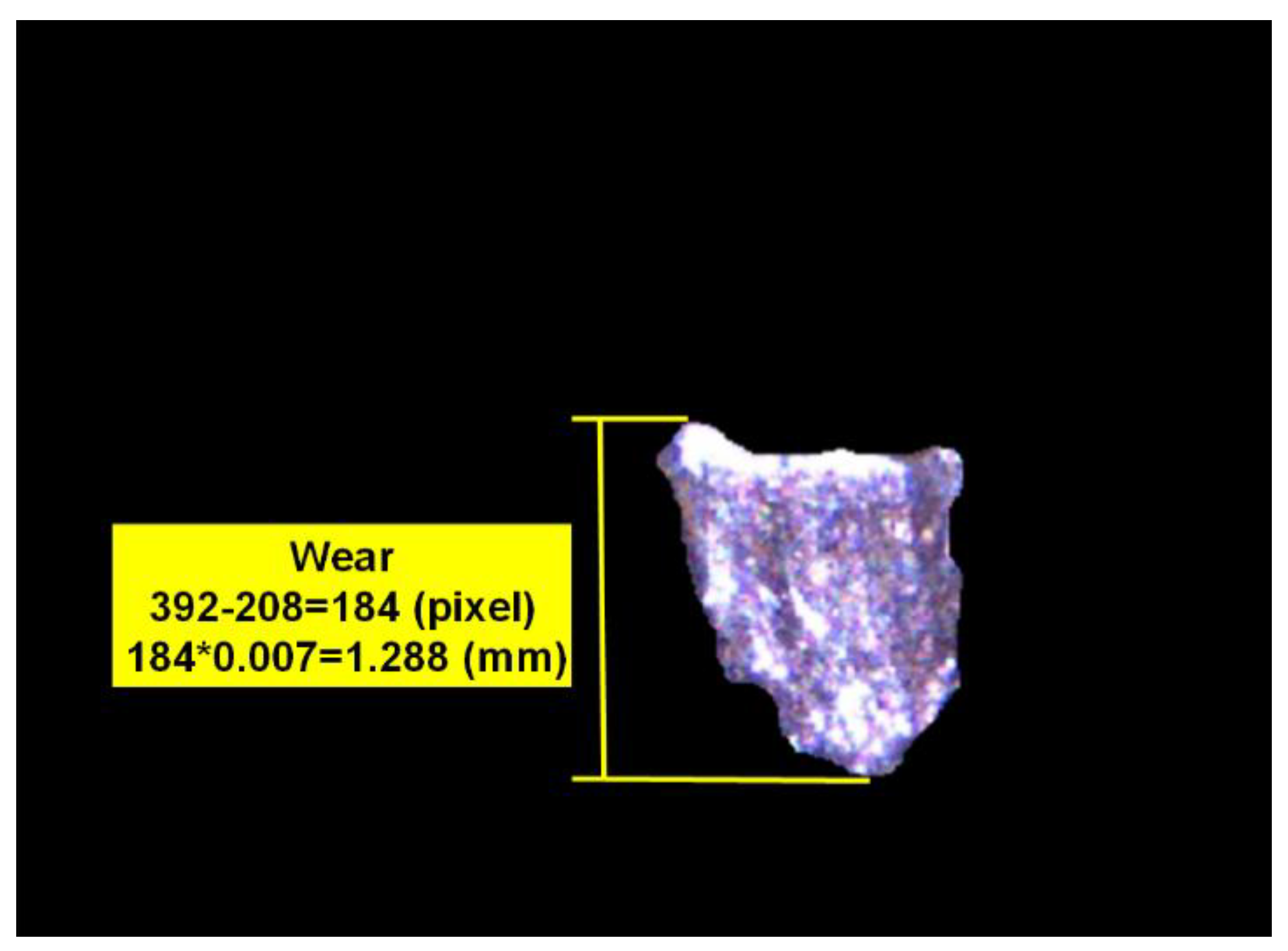

4.3. Wear Region Judgment and Calculation

5. Experiment Monitoring Insert Condition

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| No. | Chipping Rate (%) | Wear Amount (mm) | Status Determination |
|---|---|---|---|
| 1 | 45.76 | 1.530 | over-wear |
| 2 | 0.00 | 0.000 | BUE |
| 3 | 35.14 | 0.735 | over-wear |
| 4 | 20.65 | 0.658 | over-wear |
| 5 | 26.04 | 0.238 | normal wear |
| 6 | 33.75 | 0.287 | normal wear |
| 7 | 12.20 | 0.105 | normal wear |
| 8 | 26.34 | 0.252 | normal wear |
| 9 | 0.00 | 0.000 | BUE |
| 10 | 29.26 | 0.686 | over-wear |
| 11 | 62.47 | 0.651 | chipping |
| 12 | 0.00 | 0.000 | BUE |
| 13 | 52.42 | 0.875 | chipping |
| 14 | 19.26 | 0.427 | over-wear |
| 15 | 37.09 | 0.532 | over-wear |
| 16 | 31.35 | 0.903 | over-wear |
| 17 | 29.48 | 0.161 | normal wear |
| 18 | 0.00 | 0.000 | normal wear |
| 19 | 0.00 | 0.000 | fracture |
| 20 | 63.06 | 1.250 | chipping |
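The status labels in the table above can be reproduced by a simple threshold rule, sketched below purely as an illustration. The decision logic actually used in the paper is not given in this section, so the thresholds `CHIPPING_RATE_THRESHOLD_PCT` (50%) and `WEAR_THRESHOLD_MM` (0.3 mm) and the `bue_detected` / `fracture_detected` flags are hypothetical assumptions, chosen only to be consistent with the 20 rows. The flags are needed because the BUE rows (2, 9, 12), the fracture row (19), and the normal-wear row 18 all report 0.00% and 0.000 mm, so those conditions cannot be separated by the two numeric columns alone.

```python
# Illustrative status rule for a turning insert. NOT the authors' published
# method: the two thresholds and the two boolean flags are hypothetical.

CHIPPING_RATE_THRESHOLD_PCT = 50.0  # hypothetical chipping-rate limit (%)
WEAR_THRESHOLD_MM = 0.3             # hypothetical wear-amount limit (mm)


def classify_insert(chipping_rate_pct: float,
                    wear_mm: float,
                    bue_detected: bool = False,
                    fracture_detected: bool = False) -> str:
    """Return 'fracture', 'BUE', 'chipping', 'over-wear', or 'normal wear'."""
    # Fracture and BUE rows report zero chipping rate and zero wear, so they
    # are assumed to come from a separate check (e.g. a contour comparison).
    if fracture_detected:
        return "fracture"
    if bue_detected:
        return "BUE"
    # A large chipped fraction of the cutting edge takes priority over wear.
    if chipping_rate_pct > CHIPPING_RATE_THRESHOLD_PCT:
        return "chipping"
    # Otherwise, separate excessive wear from normal wear by wear amount.
    if wear_mm > WEAR_THRESHOLD_MM:
        return "over-wear"
    return "normal wear"


if __name__ == "__main__":
    # A few rows from the table: (No., chipping rate %, wear mm, table label)
    for no, rate, wear, label in [(1, 45.76, 1.530, "over-wear"),
                                  (6, 33.75, 0.287, "normal wear"),
                                  (11, 62.47, 0.651, "chipping")]:
        print(no, classify_insert(rate, wear), label)
```

Because the thresholds were fitted to only twenty samples, any chipping threshold from 45.76% up to (but not including) 52.42%, and any wear threshold from 0.287 mm up to (but not including) 0.427 mm, would reproduce the table equally well; the real system may use different values or additional image features.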

| No. | Chipping Rate (%) | Wear Amount (mm) |
|---|---|---|
| 1 | 52.886 | 1.302 |
| 2 | 52.993 | 1.288 |
| 3 | 52.922 | 1.288 |
| 4 | 52.831 | 1.302 |
| 5 | 53.335 | 1.288 |
| 6 | 53.003 | 1.288 |
| 7 | 52.820 | 1.302 |
| 8 | 52.746 | 1.295 |
| 9 | 51.878 | 1.281 |
| 10 | 52.760 | 1.302 |
| Average value | 52.817 | 1.294 |
| Standard deviation | 0.352 | 0.008 |
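The summary rows of the table above can be checked directly from the ten listed values. The short sketch below uses only Python's standard `statistics` module; the helper name `summarize` is introduced here for illustration. It shows that the reported standard deviation of the chipping rate (0.352%) corresponds to the population form (dividing by n = 10); the sample form (dividing by n − 1 = 9) would give about 0.371%. For the wear amount, both forms round to 0.008 mm.

```python
import statistics

# The ten rows of the table above.
chipping_rate_pct = [52.886, 52.993, 52.922, 52.831, 53.335,
                     53.003, 52.820, 52.746, 51.878, 52.760]
wear_mm = [1.302, 1.288, 1.288, 1.302, 1.288,
           1.288, 1.302, 1.295, 1.281, 1.302]


def summarize(values):
    """Return (mean, population standard deviation, sample standard deviation)."""
    return (statistics.mean(values),
            statistics.pstdev(values),   # divides by n
            statistics.stdev(values))    # divides by n - 1


if __name__ == "__main__":
    for name, data in [("chipping rate (%)", chipping_rate_pct),
                       ("wear amount (mm)", wear_mm)]:
        mean, pop_sd, samp_sd = summarize(data)
        print(f"{name}: mean={mean:.3f}, pstdev={pop_sd:.3f}, stdev={samp_sd:.3f}")
```

A small standard deviation relative to the mean (about 0.7% of the mean chipping rate) is what one would look for when judging measurement repeatability.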

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Sun, W.-H.; Yeh, S.-S. Using the Machine Vision Method to Develop an On-machine Insert Condition Monitoring System for Computer Numerical Control Turning Machine Tools. Materials 2018, 11(10), 1977. https://doi.org/10.3390/ma11101977