1. Introduction

With the global energy transition, renewable energy, energy storage systems (ESSs), and flexible adjustable loads (FALs) have further penetrated into power systems on a large scale [

1]; for distribution networks (DNs), they are challenged by both the source and load uncertainty and the interaction between them [

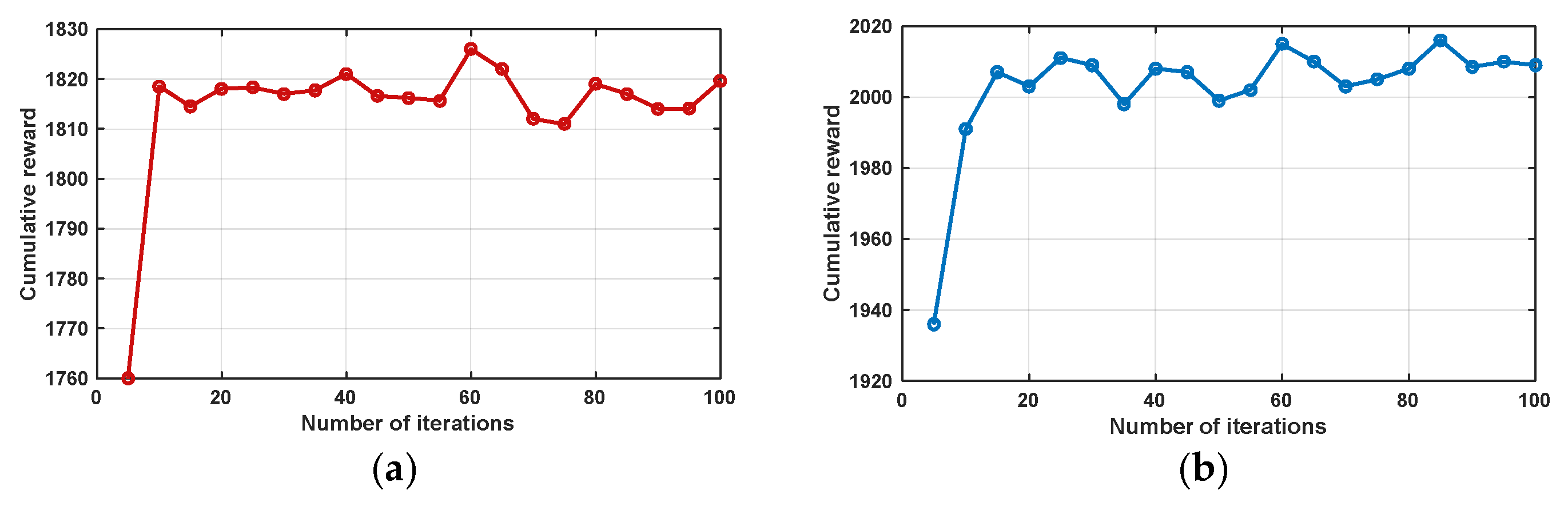

2], so the traditional DN management method (i.e., the full centralized control-based method [

3,

4]) demonstrates limited effectiveness in addressing the regulation of DNs with a high proportion of distributed generators (DGs), ESSs, and FALs [

5]. To improve the security, the control flexibility, the renewable energy resource’s utilization rate (RERUR), and the operation efficiency of DNs, the aggregation concept [

6,

7,

8] and the related dispatching strategy are regarded as an attractive technological path; the scattered DGs, ESSs, and FALs in DNs can be organized and controlled in the form of clusters (or groups), by which the number of the central controller’s control object can be significantly reduced to the number of the cluster, the controller of each cluster can only focus on regulating resources within the cluster, and all the cluster controllers can work in parallel; hence, more flexible, robust, and efficient operation performance can be obtained [

9]. However, how to achieve this aggregation/group process, ensuring that the aggregators can intelligently respond to grid demands while optimizing resource allocation, is a new and crucial technological challenge in realizing the above concept.

For the control and allocation of DN resources, conventional control algorithms [10,11,12] provide deterministic optimal or quasi-optimal solutions for every controllable resource. However, as the physical and mathematical scale of the problem grows, the computational complexity and the required model accuracy increase significantly, which limits the adaptability of conventional algorithms to the dynamic and uncertain objects in DNs [13]. Heuristic algorithms, by contrast, adapt better to modern DNs than traditional control methods [14,15,16], but their relatively slow convergence still inhibits their further application.

Reinforcement learning (RL) [17], a machine learning method, provides a new perspective on the dynamic management of DNs. It can automatically discover an optimal control strategy through trial-and-error interaction with the environment, without prior knowledge of an explicit model of the plant, which makes it promising for DNs characterized by time variation, complexity, and uncertainty, e.g., adjusting the output of DGs, FALs, and ESSs to achieve load balance, voltage control, and even frequency regulation in isolated networks [18]. By introducing hierarchical constrained RL and a gated recurrent unit, Ref. [19] proposes a power interaction optimization strategy among several microgrids that improves dispatching robustness and stability while simplifying the decision space. To reuse historical dispatching knowledge, Ref. [20] proposes a real-time power system dispatching optimization method integrated with deep transfer RL; by expanding the input and output channels of the deep learning network, knowledge of the state and action spaces is transferred to the expanded scenario, and the learning efficiency of the agents is improved. Ref. [21] presents a deep RL method to analyze and solve the optimal power flow (OPF) problem in DNs, in which operational knowledge is extracted from historical data and approximated by deep neural networks, achieving quasi-optimal decision-making performance.

In addition, for DNs with a high penetration of adjustable resources (ARs), multi-agent RL is regarded as an effective technical path for optimal dispatching. Ref. [22] presents a multi-agent deep RL (MADRL) method that mitigates voltage issues in DNs by learning a coordinated control strategy through a counter-training model. In [23], an attention mechanism is adopted to decompose the DN into several sub-DNs, and a modified MADRL is developed to execute distributed voltage-reactive power control. Furthermore, to enhance the agents' interregional cooperation, an interregional auxiliary reward mechanism is proposed in [24], and an evolutionary game strategy optimization method is further developed to improve voltage regulation performance in DNs.

As discussed above, RL shows great potential for optimal DN regulation, but little research has addressed dynamic cluster partitioning of DNs with RL-based methods, even though partitioning is normally regarded as a critical step in the effective organization and control of modern DNs [25,26,27,28,29]. During the cluster partitioning phase, the resources most conducive to achieving the DN's control objectives can be aggregated according to their inherent characteristics and the grid topology, which alleviates the computational burden of subsequent grid regulation stages and enhances control efficiency [27]. Furthermore, once the cluster partitioning model is well trained, its execution is freed from the constraints of traditional optimization models, which improves computational efficiency [28]. On this basis, it also strengthens the DN's decision-making capability regarding cluster responses under extreme operating conditions [29]. Hence, exploring an RL strategy suitable for dynamic DN cluster partitioning is valuable for raising the management level of smart DNs.

Based on the above discussion, this paper proposes an intelligent dynamic cluster partitioning strategy for DNs with an embedded self-consistent regulation framework. The main contributions of this paper are as follows:

- (1)

An environmental model with continuous state space, discrete action space and two dispatching performance-oriented reward functions is first developed for DN cluster partitioning.

- (2)

A novel random forest Q-learning network (RF-QN) with a node-based multi-agent parallel calculating framework and weight self-adjusting mechanism is developed and trained to implement the cluster partitioning, from which the generalization and robustness of the trained model are improved by taking advantage of both deep learning and decision trees.

- (3)

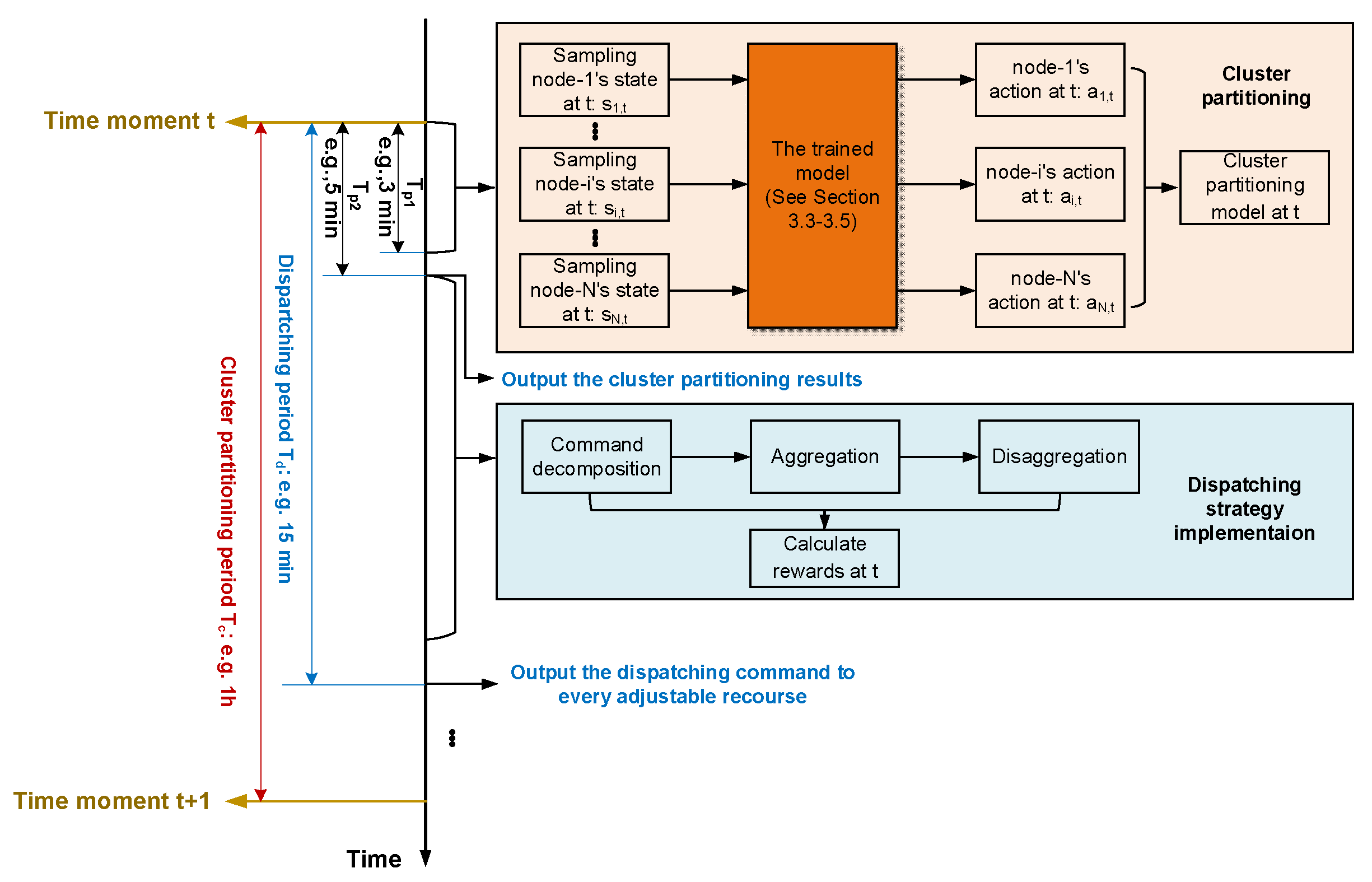

A dispatching framework with an intelligent dynamic cluster generating mechanism in each cluster partitioning period and a parallel cooperation mechanism between clusters in each regulating moment is proposed in this paper. It leverages the advantages of RL in handling uncertain and complex decision-making processes; while ensuring efficient utilization of the ARs within each cluster, the operation flexibility and robustness of the whole system are improved.

The remainder of this article is organized as follows. The cluster partitioning environmental model is developed in Section 2. The deep RL cluster partitioning strategy and its training method for implementing dynamic cluster partitioning are presented in Section 3. The overall dynamic cluster partitioning and dispatching framework is then discussed in Section 4. Section 5 gives the simulation results, and the conclusions are given in Section 6.

3. Deep Reinforcement Learning Cluster Partitioning Strategy

As discussed in Section 2, the environmental model for dynamic cluster partitioning is formulated as a Markov decision process with a continuous state space (reflecting real-time power variations) and a discrete action space (representing node-level cluster assignment choices). Within this framework, each node observes its operational state, including power limits, installed capacity, and upstream cluster affiliation, and selects an action to either join the cluster of its upstream neighbor or initiate a new cluster. Following the partitioning decision, a dispatch-oriented reward is computed from the renewable energy utilization, network loss, and voltage deviation. Dynamic cluster partitioning can therefore be treated as a sequential decision problem: in every time section, it seeks the resource grouping that best balances fluctuating power demand and supply, which makes it well suited to deep reinforcement learning.
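A minimal sketch of the node-level observation and a dispatch-oriented reward, assuming the reward is a weighted combination of the three indicators named above. The actual reward is given by (4) in Section 2 and is not reproduced here, so the names `NodeState` and `dispatch_reward`, the field names, the action encoding, and the weights `w_re`, `w_loss`, `w_v` are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeState:
    """Observation of one node (hypothetical field names; values in p.u.)."""
    p_min: float            # lower power limit of the node's adjustable resources
    p_max: float            # upper power limit
    capacity: float         # installed capacity
    upstream_cluster: int   # cluster number already assigned to the upstream node

    def as_features(self) -> list[float]:
        # Flatten into the continuous feature vector fed to the Q-function.
        return [self.p_min, self.p_max, self.capacity, float(self.upstream_cluster)]


# Action encoding assumed in this sketch: 1 = join upstream cluster, 2 = new cluster.
JOIN_UPSTREAM, NEW_CLUSTER = 1, 2


def dispatch_reward(rerur: float, network_loss: float, voltage_dev: float,
                    w_re: float = 1.0, w_loss: float = 1.0, w_v: float = 1.0) -> float:
    """Hypothetical scalarized reward: reward renewable utilization,
    penalize network loss and voltage deviation."""
    return w_re * rerur - w_loss * network_loss - w_v * voltage_dev
```

Raising `w_v` relative to `w_re` in this sketch would push the agent toward groupings that keep voltages flatter, at some cost in renewable utilization.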

3.1. Classical Deep Q-Learning Network (DQN) Model

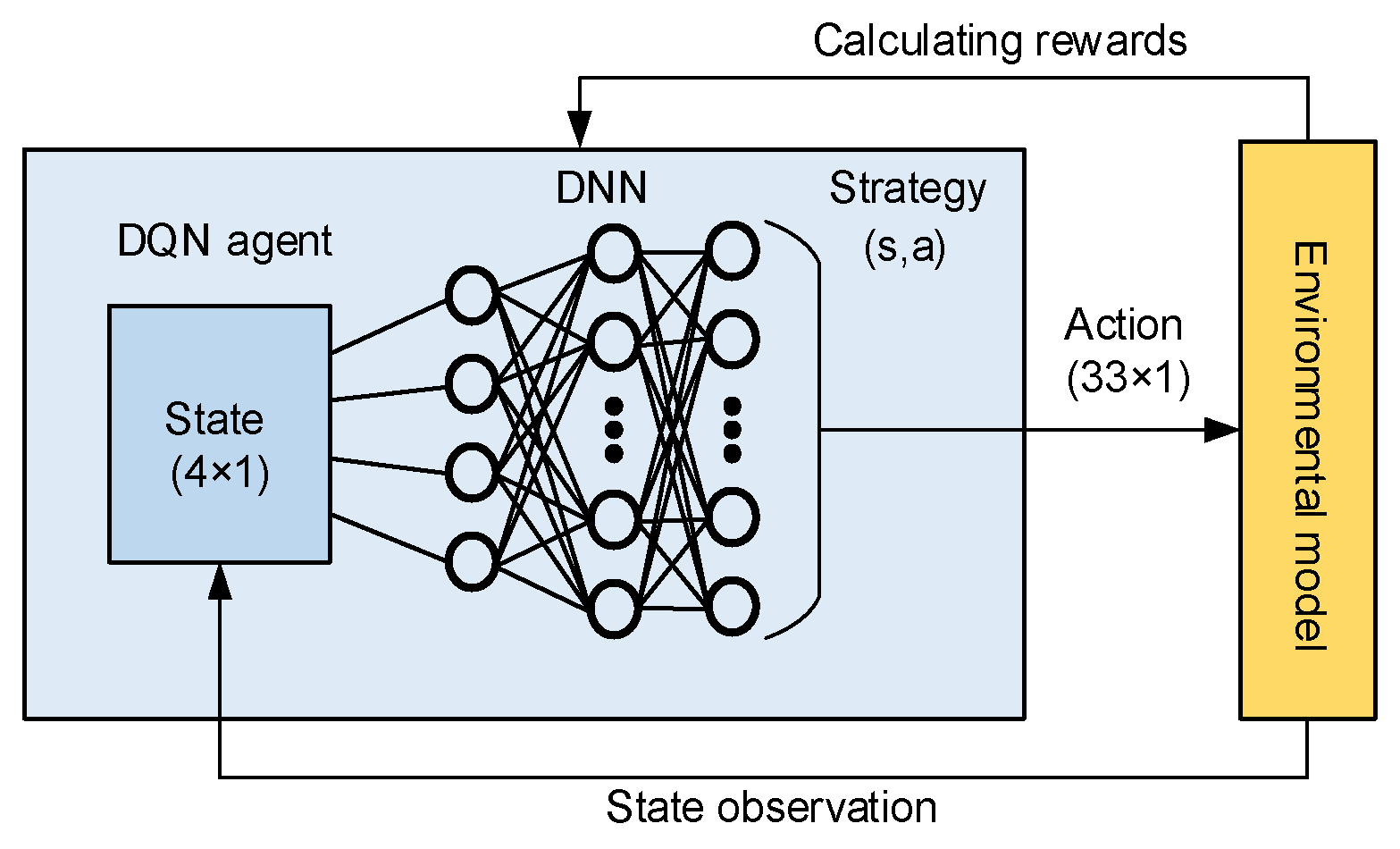

As can be seen from Figure 1, a deep neural network (DNN) is embedded in a deep Q-learning network (DQN) [31], which enables the DQN to fit an approximate Q-function by learning from the continuous state space (e.g., (1)) and thereby predict the expected reward of each possible state–action pair $(s, a)$.

By defining the Q-value of the DNN as $Q(s, a; \theta)$, where $\theta$ denotes the network weights, and the true Q-value as $Q^{*}(s, a)$, the loss function in the fitting process can be expressed as

$$L(\theta) = \mathbb{E}\left[\left(Q^{*}(s, a) - Q(s, a; \theta)\right)^{2}\right] \tag{10}$$

By minimizing $L(\theta)$ during training, the DNN achieves value network regression and finds the Q-value at every step, which is then used to realize dynamic cluster partitioning.

3.2. Random Forest Q-Learning Network (RF-QN) Model

To address the limitations of classical DQNs in handling noisy grid data and high-dimensional state spaces, we propose a random forest (RF) Q-learning network (RF-QN) that combines ensemble learning with reinforcement learning. As shown in Figure 2, the RF-QN replaces the DNN in a traditional DQN with an RF regression system. The RF-QN leverages ensemble learning to achieve better generalization (through feature subsampling and voting) and inherent stability against noisy grid data [32]. The integration provides three distinct advantages [33]: (1) the RF's parallelizable structure enables faster training; (2) decision trees naturally handle sparse data regimes, reducing dependence on the experience buffer; and (3) explicit feature importance outputs make cluster partitioning decisions interpretable.

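The loss function of the RF-QN is

$$L_{\mathrm{RF}} = \mathbb{E}\left[\left(Q^{*}(s, a) - Q_{\mathrm{RF}}(s, a)\right)^{2}\right]$$

where $Q^{*}(s, a)$ is the true Q-value and $Q_{\mathrm{RF}}(s, a)$ is the Q-function fitted by the RF.

By minimizing $L_{\mathrm{RF}}$ during training, the RF achieves value network regression and finds a more suitable Q-value for dynamic cluster partitioning.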
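To make the DNN-to-RF substitution concrete, the following is a dependency-free sketch of an RF regressor used as the RF Q-function $Q_{\mathrm{RF}}(s, a)$ by regressing targets on the concatenated vector $[s, a]$. It is a toy stand-in for a production random forest, with bootstrap sampling, a random feature subset at each split, and averaging of the tree outputs; the class names, hyperparameters, and the $[s, a]$ encoding are assumptions, not the paper's implementation.

```python
import random
from statistics import mean


def _sse(values):
    """Sum of squared errors around the mean (the variance-reduction criterion)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def _grow(X, y, depth, n_feat, rng, min_leaf=2):
    """Grow a regression tree; leaves are numbers, splits are (feature, thr, left, right)."""
    if depth == 0 or len(y) < 2 * min_leaf or len(set(y)) == 1:
        return mean(y)
    best = None
    for f in rng.sample(range(len(X[0])), n_feat):  # random feature subset per split
        for thr in sorted({row[f] for row in X})[:-1]:
            left = [i for i, row in enumerate(X) if row[f] <= thr]
            right = [i for i, row in enumerate(X) if row[f] > thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            cost = _sse([y[i] for i in left]) + _sse([y[i] for i in right])
            if best is None or cost < best[0]:
                best = (cost, f, thr, left, right)
    if best is None:
        return mean(y)
    _, f, thr, left, right = best
    return (f, thr,
            _grow([X[i] for i in left], [y[i] for i in left], depth - 1, n_feat, rng, min_leaf),
            _grow([X[i] for i in right], [y[i] for i in right], depth - 1, n_feat, rng, min_leaf))


def _predict(node, x):
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node


class TinyForest:
    """Bagged regression trees whose predictions are averaged."""

    def __init__(self, n_trees=20, max_depth=4, seed=0):
        self.n_trees, self.max_depth, self.seed = n_trees, max_depth, seed
        self.trees = []

    def fit(self, X, y):
        rng = random.Random(self.seed)
        n_feat = max(1, int(len(X[0]) ** 0.5))  # about sqrt(d) features per split
        self.trees = []
        for _ in range(self.n_trees):
            idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
            self.trees.append(_grow([X[i] for i in idx], [y[i] for i in idx],
                                    self.max_depth, n_feat, rng))
        return self

    def predict(self, x):
        return mean(_predict(tree, x) for tree in self.trees)


class RFQFunction:
    """Q_RF(s, a) approximated by a forest trained on [state features..., action] rows."""

    def __init__(self, actions, **forest_kwargs):
        self.actions = list(actions)
        self.forest = TinyForest(**forest_kwargs)

    def fit(self, states, actions, targets):
        rows = [list(s) + [float(a)] for s, a in zip(states, actions)]
        self.forest.fit(rows, list(targets))
        return self

    def q(self, s, a):
        return self.forest.predict(list(s) + [float(a)])

    def greedy(self, s):
        # Pick the action with the largest predicted Q-value.
        return max(self.actions, key=lambda a: self.q(s, a))
```

Note that `fit` always rebuilds the forest from scratch: the trees cannot be nudged by a gradient step the way DNN weights can, which is the limitation the rumination update in Section 3.3 addresses.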

3.3. Training and Parameter Update Principles

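For the DNN-integrated DQN [31], the update principle of the Q-value can be expressed as

$$Q(s, a) \leftarrow Q(s, a) + \alpha\left[r + \gamma \max_{a'} Q(s', a') - Q(s, a)\right] \tag{12}$$

where $\alpha$ is the learning rate, $\gamma$ is the discount factor, $r$ is the current reward, $s'$ is the state at the next moment, and $a'$ is the action at the next moment.

Based on the update principle in (12), the true Q-value $Q^{*}(s, a)$ can be approximated by the temporal difference (TD) target computed with the DNN's own estimate $Q(\cdot, \cdot; \theta)$, whose weights are $\theta$:

$$Q^{*}(s, a) \approx r + \gamma \max_{a'} Q(s', a'; \theta)$$

and (10) can be rewritten as

$$L(\theta) = \mathbb{E}\left[\left(r + \gamma \max_{a'} Q(s', a'; \theta) - Q(s, a; \theta)\right)^{2}\right]$$

By incrementally updating the Q-value network fitted by the DNN, its output gradually approaches the TD target and, in turn, the true Q-value.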
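The update rule (12) and the TD target that stands in for $Q^{*}(s, a)$ can be illustrated with a tabular Q-function stored in a dictionary. This is a minimal sketch under that simplification: the function names and the dictionary representation are hypothetical, and in the DQN a DNN replaces the table.

```python
def td_target(Q, r, s_next, actions, gamma):
    """r + gamma * max_a' Q(s', a'); unseen state-action pairs default to 0."""
    return r + gamma * max(Q.get((s_next, b), 0.0) for b in actions)


def q_update(Q, s, a, r, s_next, actions, alpha=0.1, gamma=0.9):
    """One application of the Q-learning update rule; returns the TD error."""
    q_old = Q.get((s, a), 0.0)
    delta = td_target(Q, r, s_next, actions, gamma) - q_old
    Q[(s, a)] = q_old + alpha * delta
    return delta


def td_loss(Q, batch, actions, gamma=0.9):
    """Mean squared TD error over a batch of (s, a, r, s') transitions."""
    errs = [(td_target(Q, r, s2, actions, gamma) - Q.get((s, a), 0.0)) ** 2
            for s, a, r, s2 in batch]
    return sum(errs) / len(errs)
```

For example, with `Q = {("s1", 1): 0.5, ("s1", 2): 0.2}`, calling `q_update(Q, "s0", 1, 1.0, "s1", [1, 2])` computes the target 1 + 0.9 × 0.5 = 1.45 and moves `Q[("s0", 1)]` from 0 to 0.145; repeated updates keep shrinking the TD error.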

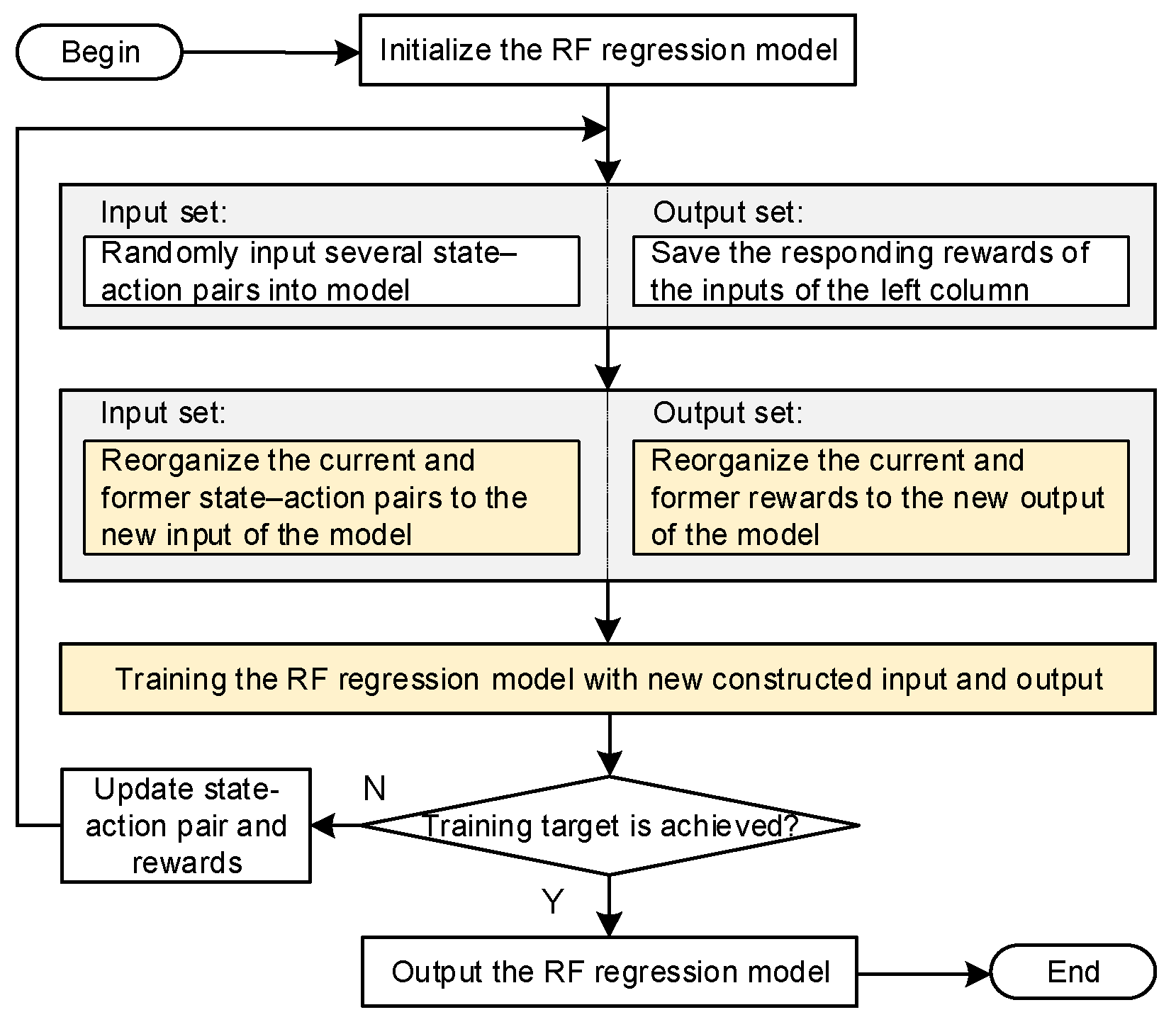

Unlike a DNN, the RF regression model in the proposed RF-QN cannot update its parameter weights in real time. To address this, a training-result rumination update mechanism for the regression model is proposed in this paper; its framework is shown in Figure 3.

As can be seen from Figure 3, an RF regression model is first initialized. Several state–action pairs $(s, a)$ are then randomly selected as the input set (note: they should be restricted within their operating boundaries), and the corresponding rewards given by the regression model are saved as the output set. Before each new regression model is trained, the current state–action pairs and rewards are combined with the input and output sets from the previous step to construct new input and output sets, which are then used to train the model. This process is repeated until the expected accuracy or the maximum number of training iterations is reached. Reconstructing the training set from the previous results and the current input and output sets in this way updates the RF's weights, and immature results are gradually abandoned in the process.
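The rumination update can be sketched as a batch-refit loop: because the regressor cannot be updated in place, each round rebuilds it from the previous input/output sets plus the newly collected pairs. In this sketch the regressor is passed in as a `fit` callable (an RF in the paper); the function name `rumination_training`, the `evaluate` callback, and the optional `max_size` window (one possible way to let old, immature samples age out) are assumptions.

```python
from typing import Callable, Iterable, List, Optional, Sequence, Tuple

Row = Sequence[float]


def rumination_training(
    fit: Callable[[List[Row], List[float]], Callable[[Row], float]],
    evaluate: Callable[[Callable[[Row], float]], float],
    batches: Iterable[Tuple[Sequence[Row], Sequence[float]]],
    tol: float,
    max_rounds: int,
    max_size: Optional[int] = None,
):
    """Refit a batch regressor on accumulated data until error <= tol or max_rounds.

    Each batch holds new state-action input rows and their reward outputs.
    Returns the last model and the per-round error history.
    """
    inputs: List[Row] = []
    outputs: List[float] = []
    model, history = None, []
    for round_no, (x_new, y_new) in enumerate(batches, start=1):
        inputs += list(x_new)      # previous input set + current pairs
        outputs += list(y_new)     # previous output set + current rewards
        if max_size is not None:   # optional window: drop the oldest samples
            inputs, outputs = inputs[-max_size:], outputs[-max_size:]
        model = fit(inputs, outputs)   # rebuild from scratch (no incremental update)
        history.append(evaluate(model))
        if history[-1] <= tol or round_no >= max_rounds:
            break
    return model, history
```

In an RF-QN setting, `fit` would wrap a random forest regressor; a trivial mean predictor is enough to exercise the data-reorganization logic on its own.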

3.4. Specific Training Procedure

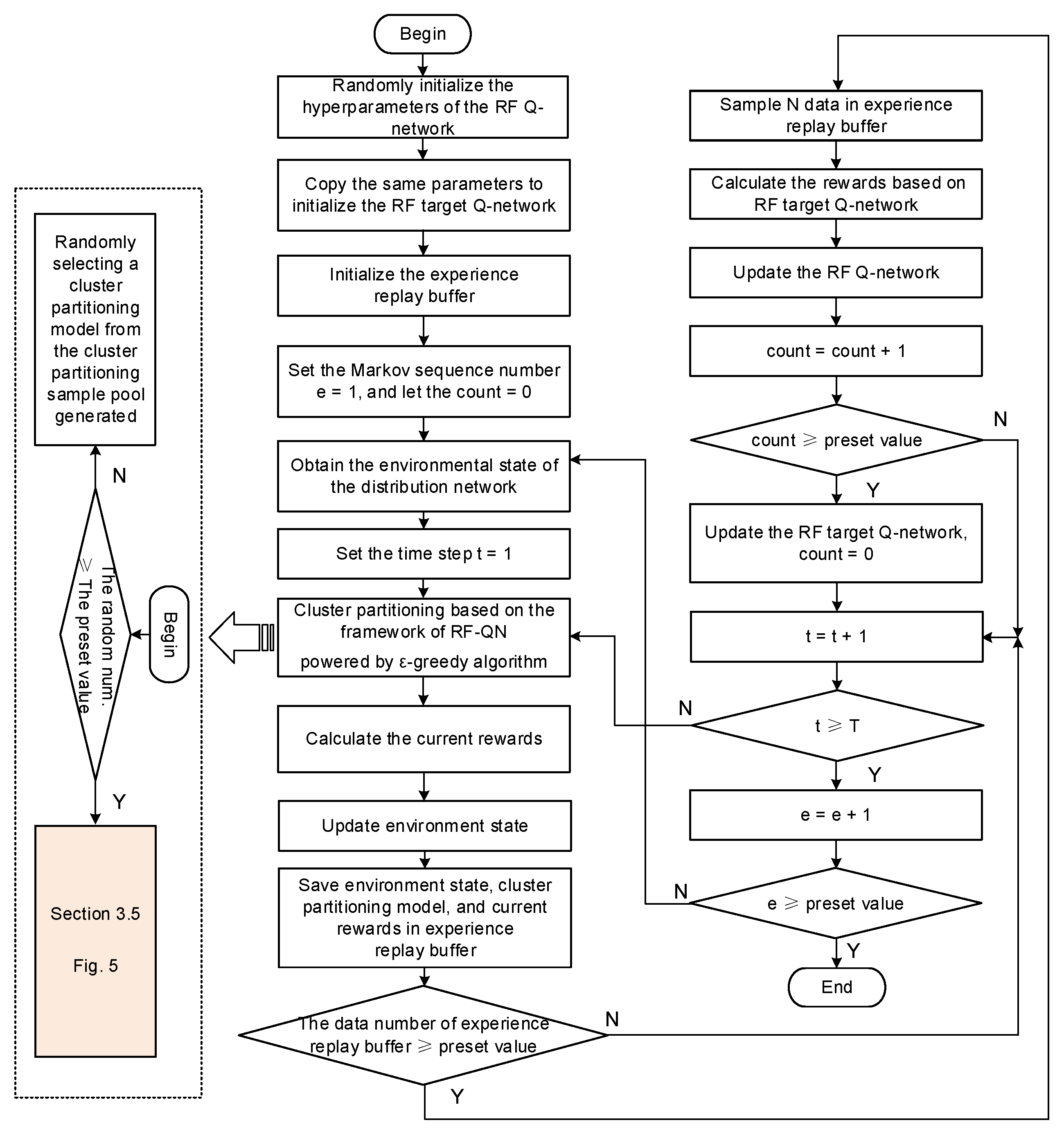

Figure 4 illustrates the detailed training procedure of the proposed RF-QN for dynamic cluster partitioning, which consists of the following steps:

Step 1: Initialize the hyperparameters of the RF Q-network and assign cluster number 1 to node-1.

Step 2: Build the current and target Q-networks of the RF model.

Step 3: Initialize the experience replay buffer (note: the buffer stores and retrieves experiences for each agent independently).

Step 4: Let Markov sequence number e = 1 and the training counter count = 0.

Step 5: Obtain the environmental state of the DN concerned, including the output power and installed capacity of the ARs (for specifications, refer to Section 3.5).

Step 6: Let the time step t = 1.

Step 7: Initialize the environmental states of the DN concerned, assign each node to a cluster using the ε-greedy algorithm, and record the current states, actions (i.e., cluster partitioning modes), and rewards in the experience replay buffer.

According to the ε-greedy algorithm, when the random number is larger than the preset value, the system randomly selects a cluster partitioning mode from the sample pool; otherwise, the procedure follows the process shown in Figure 5 (see Section 3.5).

Step 8: Calculate the current reward (see (4)) and update the environmental state (i.e., the output power and installed capacity of the ARs) at time step t.

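Step 9: Store the current states, actions, and rewards in the experience replay buffer. If the buffer reaches its preset capacity, randomly sample data to train the RF Q-network with the following objective:

$$\min\ \mathbb{E}\left[\left(r + \gamma \max_{a'} \hat{Q}_{\mathrm{RF}}(s', a') - Q_{\mathrm{RF}}(s, a)\right)^{2}\right]$$

where $Q_{\mathrm{RF}}$ is the current RF Q-function and $\hat{Q}_{\mathrm{RF}}$ is the trained target Q-network/function.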

Step 10: Update the Q-network and increment count by 1.

Step 11: If count exceeds the preset threshold, update the target Q-network by copying the current RF Q-network into it.

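Step 12: Increment t by 1 and proceed to the next training round until the last time step of the current Markov sequence is reached.

Step 13: Terminate the training process once all Markov sequences are completed (i.e., e reaches its preset value) and the last time step has been reached.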
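Steps 3-13 can be condensed into the loop skeleton below. It is a sketch, not the paper's code: the environment interface (`reset()`, and `step(s, a)` returning `(reward, next_state)`), the hyperparameter defaults, and the `TableQ` stand-in (a batch-refit lookup table used in place of the RF so the example stays dependency-free) are all hypothetical. Exploration follows the common convention of exploring with probability `epsilon`; the "random number larger than the preset value" rule in Step 7 is equivalent when the preset value equals `1 - epsilon`.

```python
import random
from collections import deque


class TableQ:
    """Hypothetical stand-in for the RF Q-function: refit from scratch on each batch."""

    def __init__(self):
        self.table = {}

    def fit(self, keys, targets):
        acc = {}
        for k, t in zip(keys, targets):
            total, n = acc.get(k, (0.0, 0))
            acc[k] = (total + t, n + 1)
        self.table = {k: total / n for k, (total, n) in acc.items()}

    def predict(self, key):
        return self.table.get(key, 0.0)

    def copy(self):
        clone = TableQ()
        clone.table = dict(self.table)
        return clone


def train(env, actions, episodes=50, steps=10, gamma=0.9, epsilon=0.2,
          buffer_size=100, batch_size=32, target_every=20, seed=0):
    rng = random.Random(seed)
    q, q_target = TableQ(), TableQ()          # Step 2: current and target Q-functions
    buffer = deque(maxlen=buffer_size)        # Step 3: experience replay buffer
    count = 0                                 # Step 4: training counter
    for e in range(1, episodes + 1):          # Markov sequence e
        s = env.reset()                        # Step 5: observe the DN state
        for t in range(1, steps + 1):         # Steps 6 and 12: time step t
            if rng.random() < epsilon:         # Step 7: explore
                a = rng.choice(actions)
            else:                              # exploit the current Q-function
                a = max(actions, key=lambda b: q.predict((s, b)))
            r, s_next = env.step(s, a)         # Step 8: reward and next state
            buffer.append((s, a, r, s_next))   # Step 9: store the transition
            if len(buffer) == buffer_size:     # train once the buffer is full
                batch = rng.sample(list(buffer), batch_size)
                keys = [(bs, ba) for bs, ba, _, _ in batch]
                targets = [br + gamma * max(q_target.predict((bn, b)) for b in actions)
                           for _, _, br, bn in batch]
                q.fit(keys, targets)           # Step 10: update the Q-function
                count += 1
                if count % target_every == 0:  # Step 11: periodic target update
                    q_target = q.copy()
            s = s_next
    return q                                   # Step 13: all sequences completed
```

Swapping `TableQ` for an RF-backed object with the same `fit`/`predict`/`copy` methods would recover the RF-QN structure described above.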

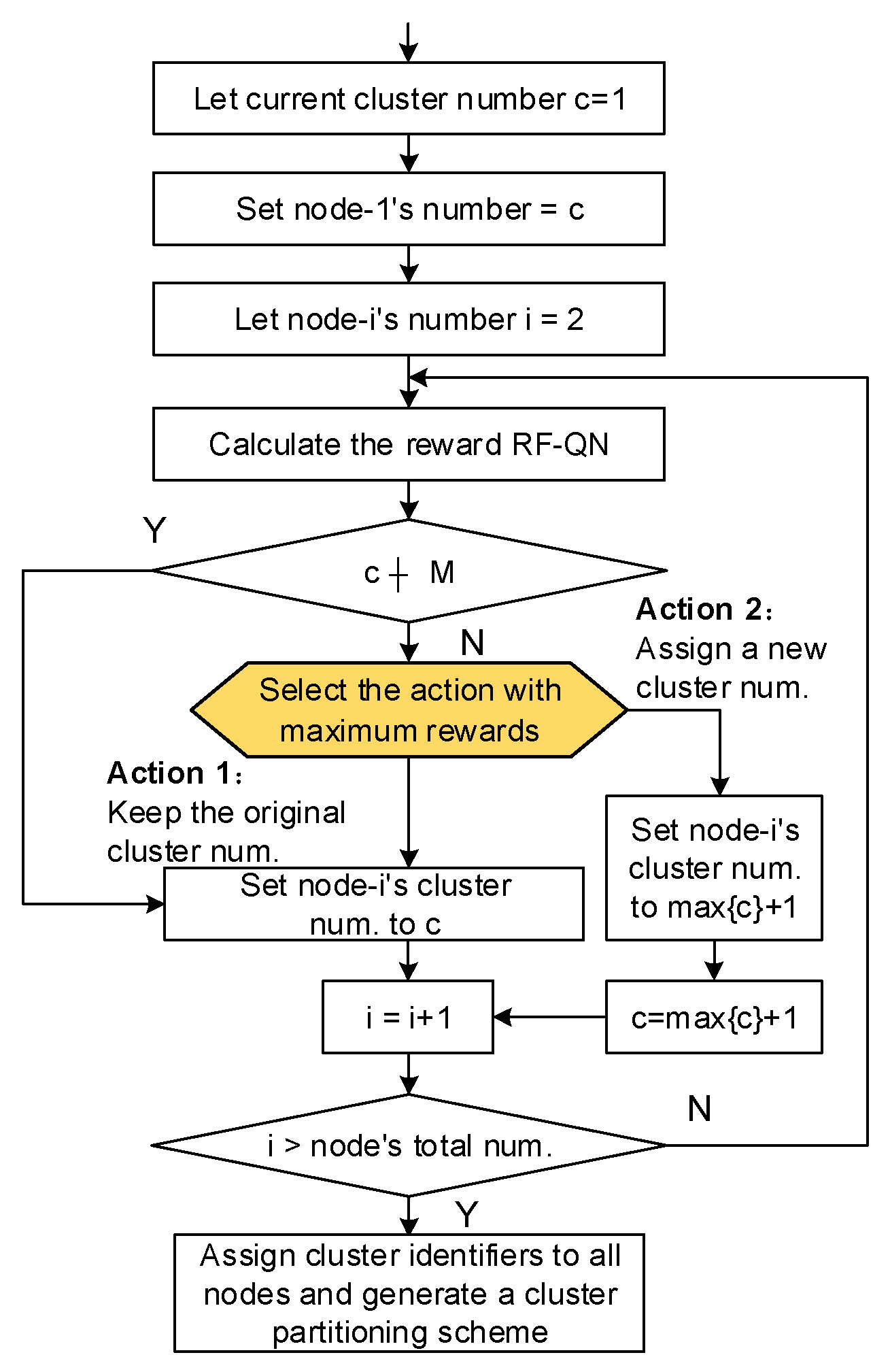

3.5. The RF-QN Model-Driven Cluster Partitioning Method

To fit into the training process of Figure 4, the cluster partitioning method driven by the RF-QN model is shown in Figure 5. The basic idea is to assign a cluster number to each node in the DN, after which nodes with the same cluster number are grouped together. As Figure 5 shows, the current cluster number and node-1's cluster number are first set to 1. The subsequent nodes, e.g., node-$i$, are then numbered based on the cluster number of their upstream node, and two possible cluster numbers can be assigned to node-$i$: action 1, joining the cluster of its upstream node; and action 2, starting a new cluster (see (2) and (3) in Section 2). The action with the largest RF-QN reward is implemented, and node-$i$'s cluster number is then determined based on (3). Finally, the current cluster number and the node number are updated, and the process repeats until every node has been assigned a cluster number.
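The node-by-node procedure of Figure 5 can be sketched as a single pass over a radial feeder. Assumptions: nodes are indexed from 0 (index 0 is node-1) and ordered so that every upstream node precedes its children, `parents[i]` gives the upstream node of node i, and `score(i, action, clusters)` stands in for the trained RF-QN's value of each candidate action; all of these names are hypothetical.

```python
from typing import Callable, Dict, List, Optional

JOIN_UPSTREAM, NEW_CLUSTER = 1, 2  # action 1 and action 2


def partition(parents: List[Optional[int]],
              score: Callable[[int, int, List[int]], float]) -> List[int]:
    """Assign a cluster number to every node, visiting upstream nodes first."""
    clusters = [1]  # node-1 starts cluster 1
    current = 1     # current (largest) cluster number
    for i in range(1, len(parents)):
        q_join = score(i, JOIN_UPSTREAM, clusters)
        q_new = score(i, NEW_CLUSTER, clusters)
        if q_join >= q_new:
            clusters.append(clusters[parents[i]])  # inherit the upstream cluster
        else:
            current += 1                            # open a new cluster
            clusters.append(current)
    return clusters


def groups(clusters: List[int]) -> Dict[int, List[int]]:
    """Group node numbers (1-based, as in the text) by cluster number."""
    out: Dict[int, List[int]] = {}
    for node, c in enumerate(clusters, start=1):
        out.setdefault(c, []).append(node)
    return out
```

For a five-node feeder with `parents = [None, 0, 1, 1, 3]` and a scorer that prefers a new cluster only at the fourth node, `partition` returns `[1, 1, 1, 2, 2]`, so nodes 1-3 form cluster 1 and nodes 4-5 form cluster 2. Ties are resolved in favor of joining the upstream cluster here, which is a design choice the text does not specify.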

6. Conclusions

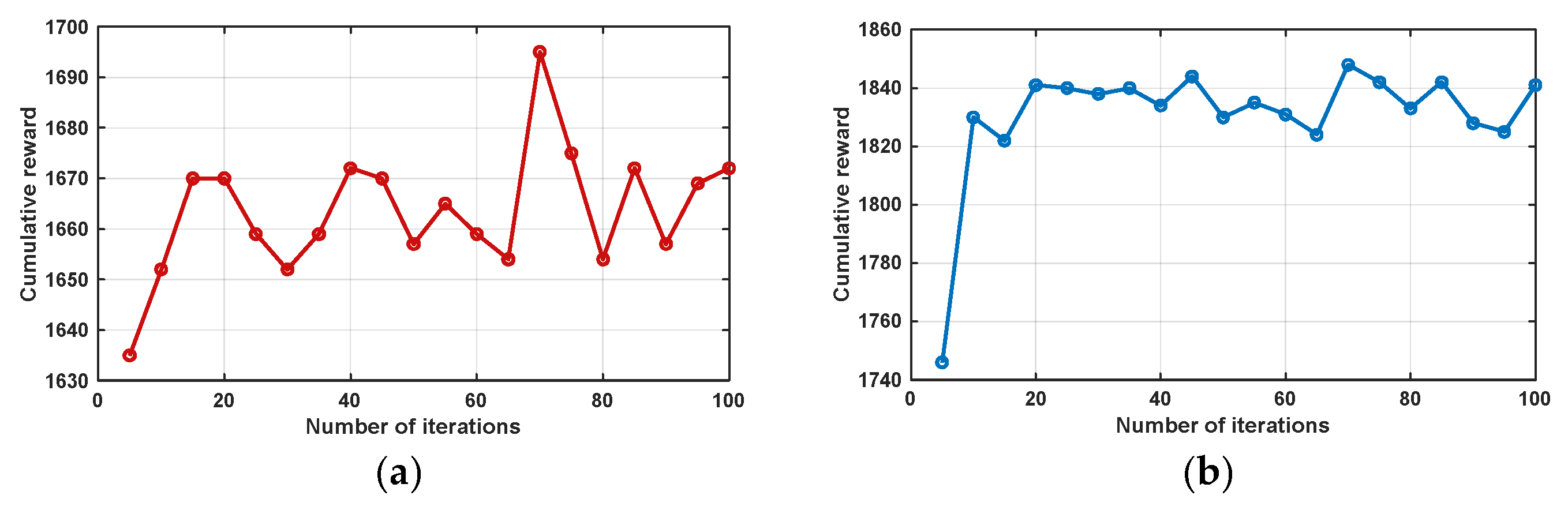

This paper aimed to develop an intelligent dynamic cluster partitioning and regulation strategy for distribution networks with high penetration of distributed and flexible resources, with the goals of improving operational efficiency, enhancing renewable energy utilization, and maintaining voltage stability while reducing computational complexity. To achieve these objectives, we first constructed an environment model based on a Markov decision process, which formally describes the state space, action space, and dispatch-oriented reward function for cluster partitioning. We then proposed a novel RF-QN (random forest Q-learning network) with a node-based multi-agent framework, incorporating a rumination update mechanism that enables adaptive weight adjustment during training, thereby enhancing the model’s generalization and robustness. Furthermore, an integrated dispatching framework was designed to realize dynamic cluster partitioning and cooperative regulation in practical operations.

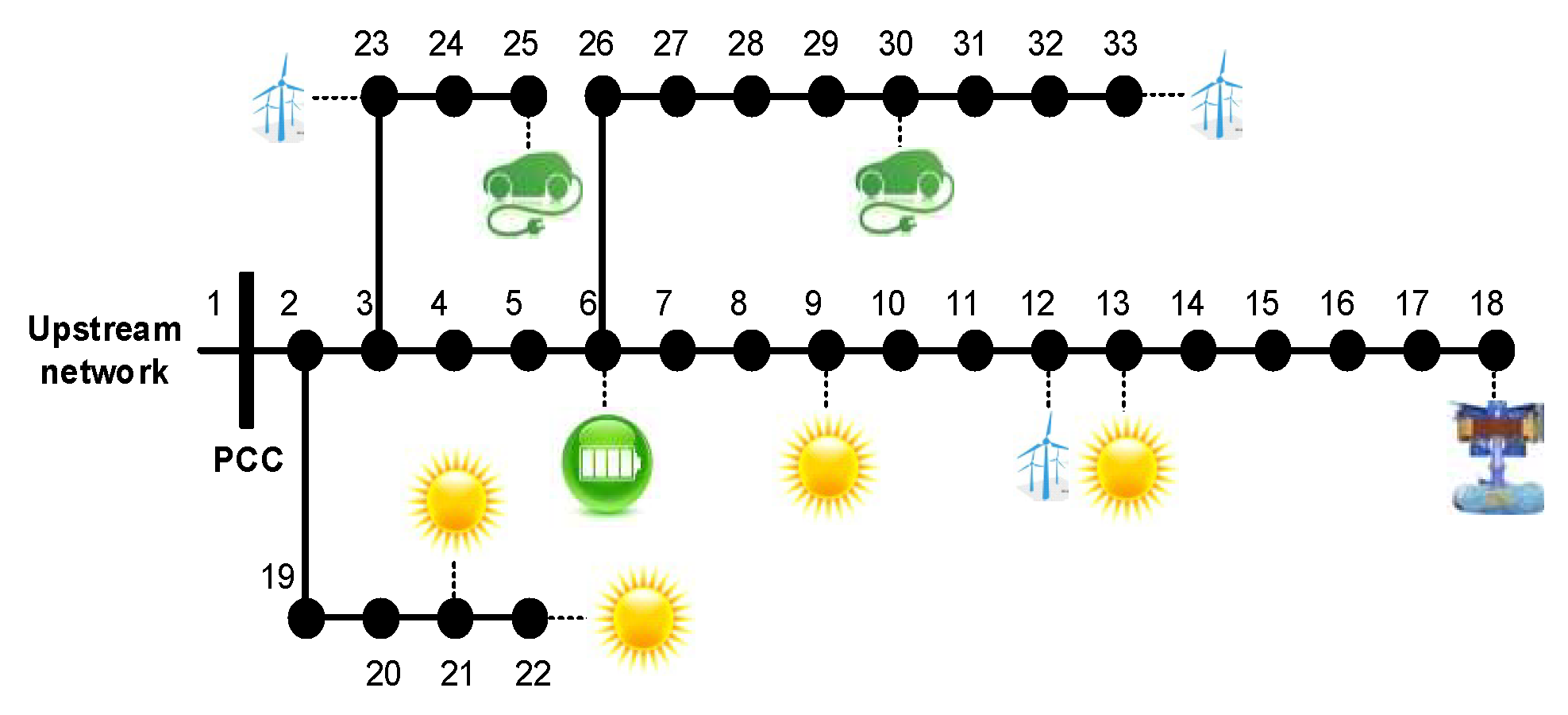

The proposed strategy was validated on a modified IEEE-33-node test system. The results demonstrate the following:

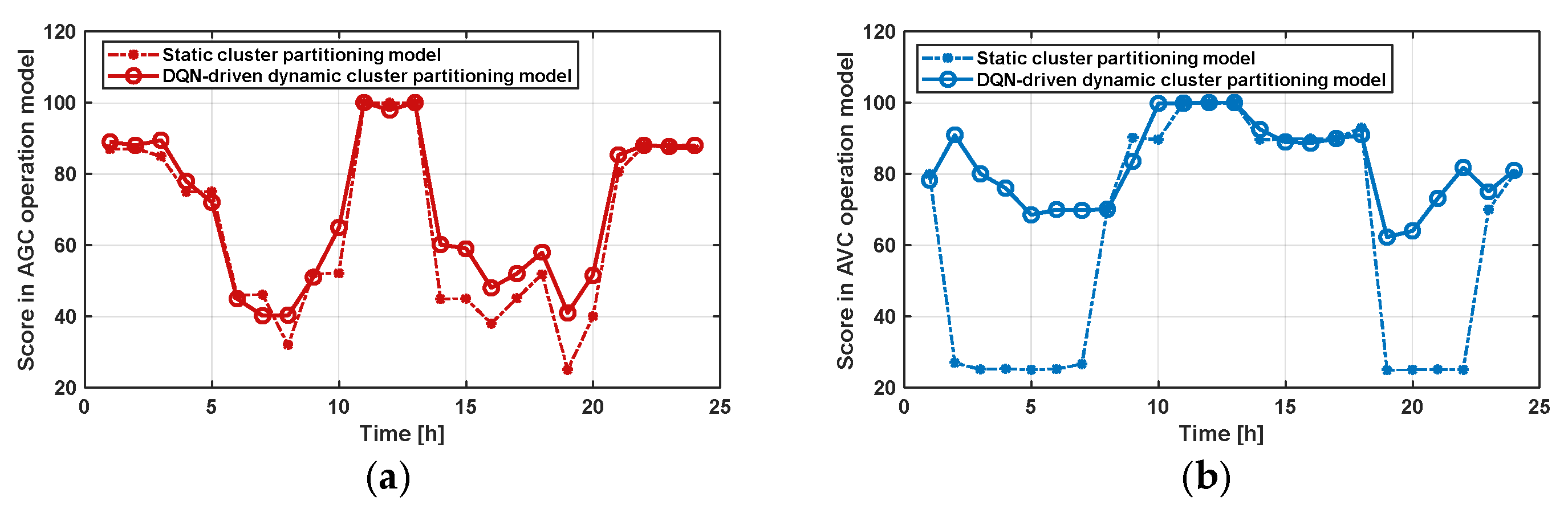

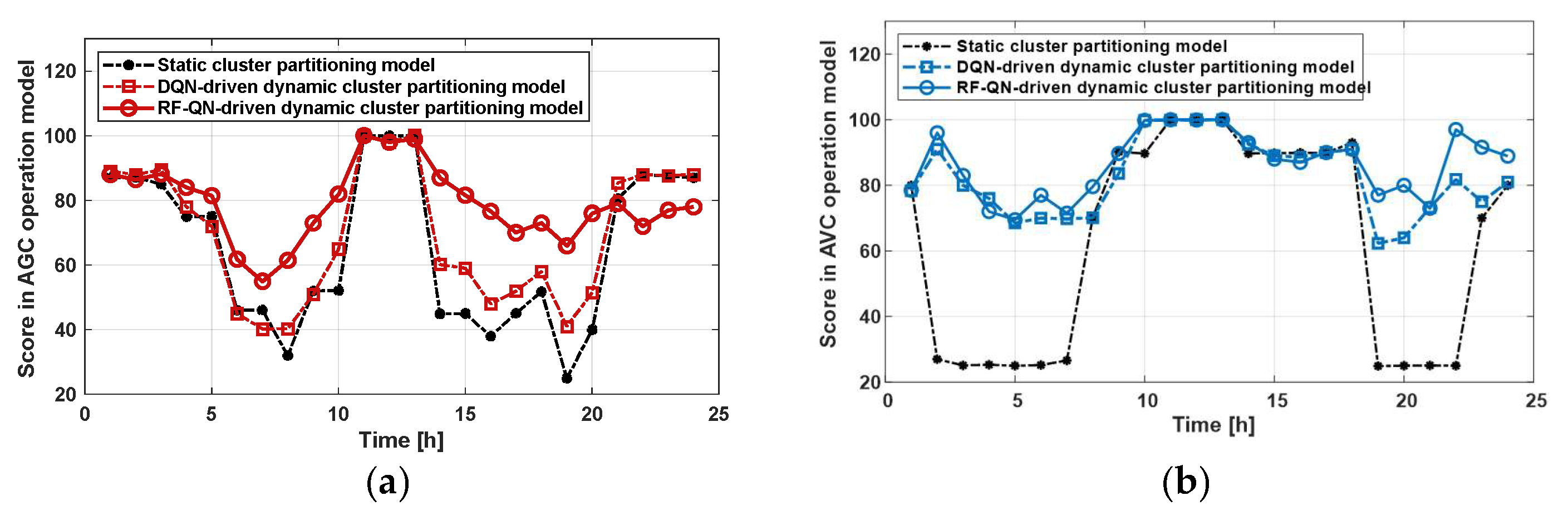

Significant Performance Improvement: Compared with static clustering and DQN-based dynamic clustering, the RF-QN approach increases the renewable energy utilization rate to 94.73%, reduces network losses to 0.0493 p.u., and lowers the voltage deviation rate to 2.984% under the AVC scenario (see Table 5).

Operational Efficiency: The cluster partitioning and dispatch execution time remains under one minute, meeting the requirements of real-time or intra-hour grid operation.

Practical Applicability: The framework is particularly suitable for modern distribution networks with high shares of photovoltaic, wind, energy storage, and electric vehicle charging loads, where traditional centralized or static control methods struggle with variability and uncertainty.

In conclusion, the objectives of this study have been successfully achieved. The proposed intelligent dynamic cluster partitioning strategy not only provides a scalable and efficient solution for resource aggregation and dispatch but also offers a novel integration of random forest and reinforcement learning that balances interpretability, robustness, and computational performance. Future work will focus on extending the method to larger-scale networks and more extreme operational scenarios to further validate its adaptability and resilience.