3.1. Fuzzy PIDF Plus FOPD

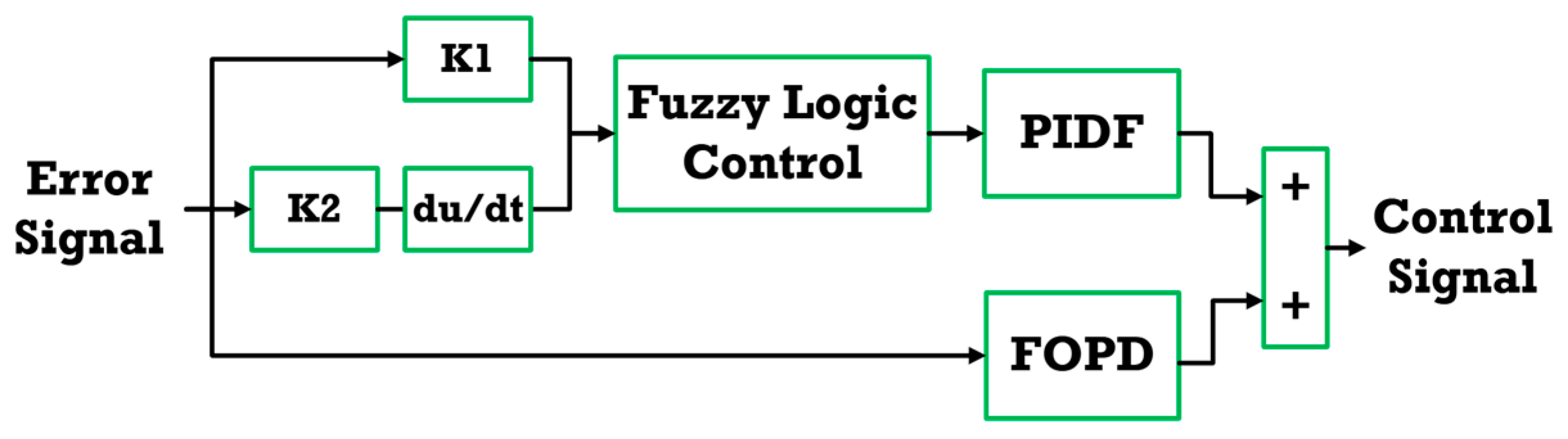

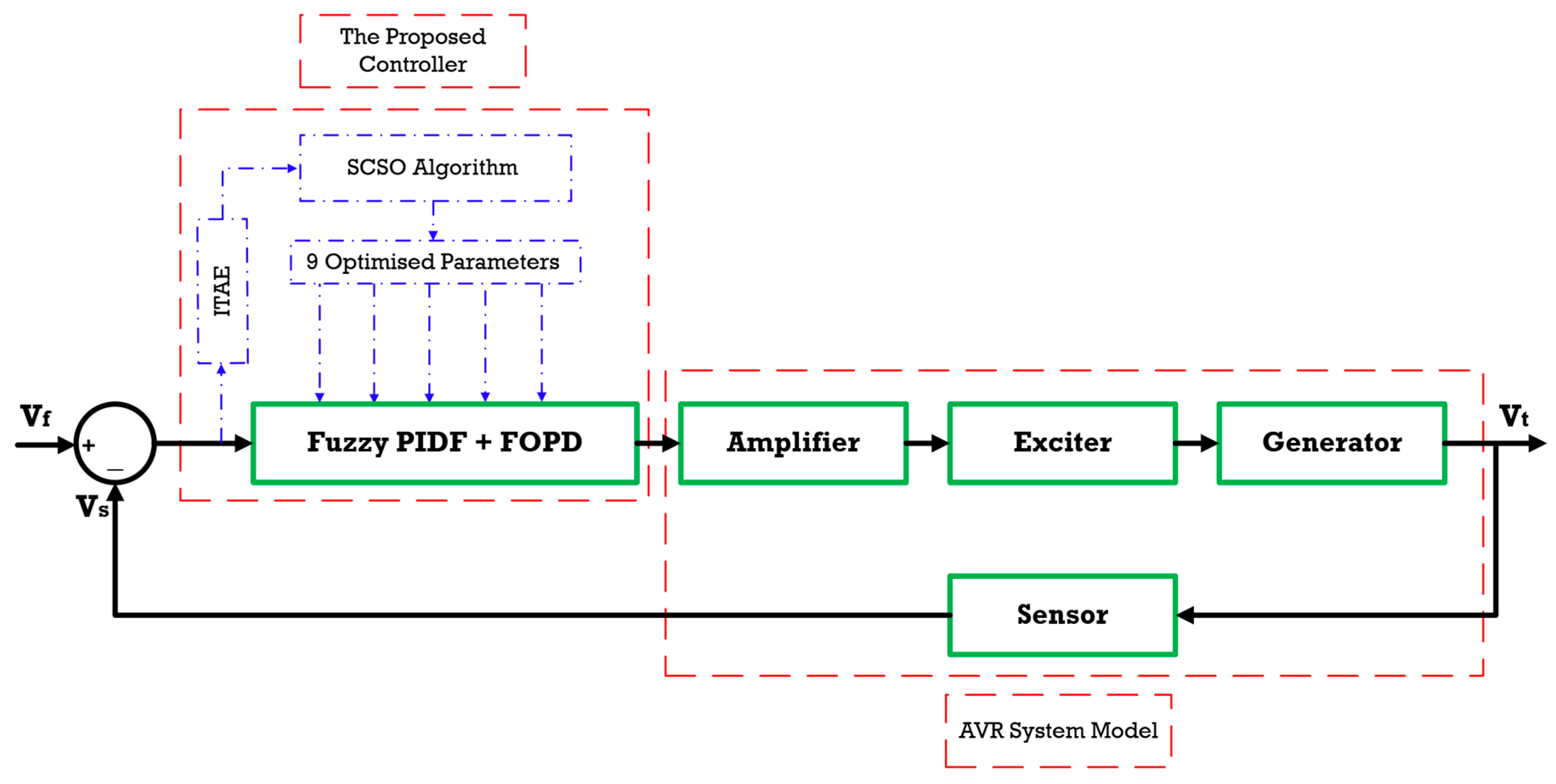

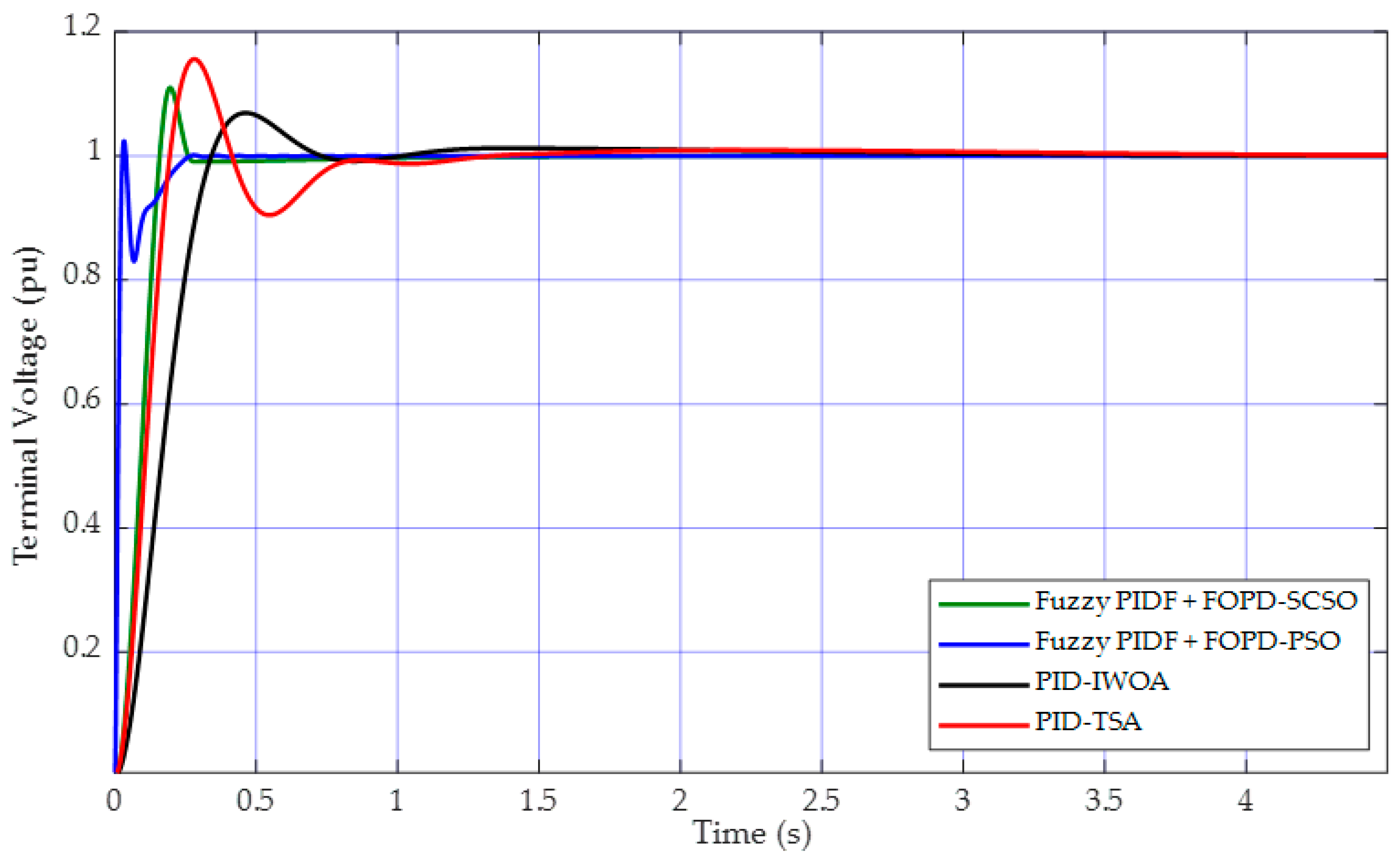

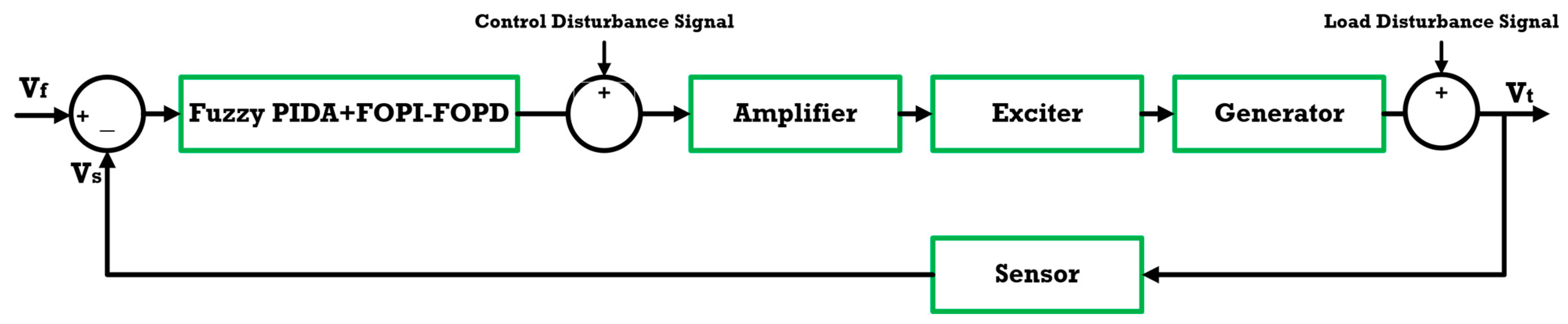

Figure 5 illustrates the structural schematic of the proposed control strategy, which integrates three core components: a fuzzy logic controller, a PIDF controller, and an FOPD controller. The fuzzy logic controller is meticulously designed with two primary input variables: the error signal and its derivative. These inputs are normalized using scaling factors, denoted as

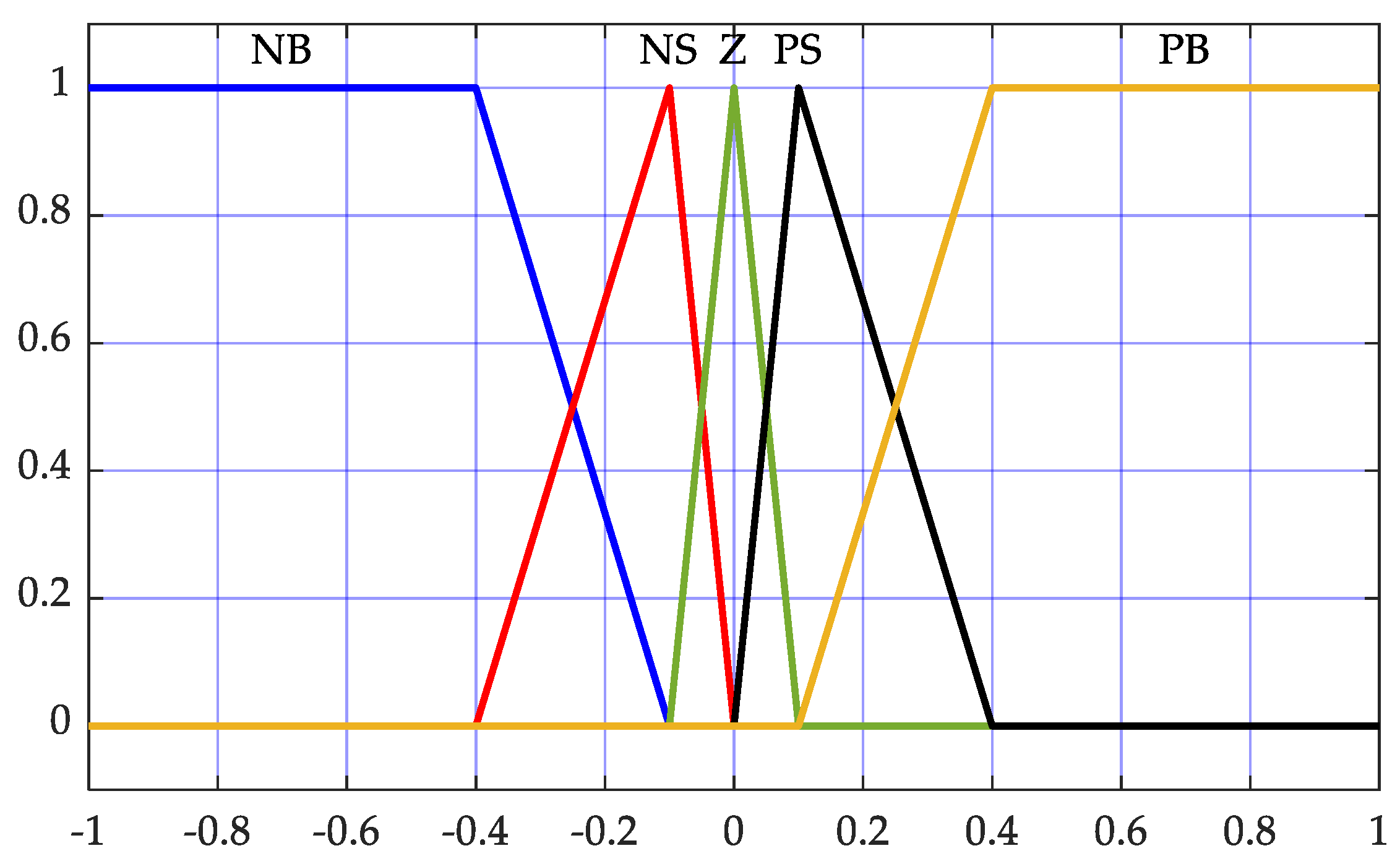

, respectively. The controller produces a single output, which is subsequently directed as an input to the PIDF controller. To maintain the computational simplicity and efficiency of the fuzzy controller, the design incorporates five triangular membership functions for both input and output variables, as depicted in

Figure 6. These membership functions are defined as Negative Big (NB), Negative Small (NS), Zero (Z), Positive Small (PS), and Positive Big (PB). The output of the fuzzy controller is determined through a rule base comprising 25 fuzzy rules, which are systematically outlined in

Table 4. These rules were formulated based on an in-depth analysis of the dynamic characteristics of the testbed model. The Mamdani inference mechanism is employed for the fuzzification process, facilitating the conversion of crisp input data into fuzzy sets. For defuzzification, the centroid method is applied, converting the fuzzy output into a precise, real-valued control signal. This methodological approach ensures both the computational efficiency and robust performance of the fuzzy logic controller. The PIDF controller is another form of the conventional PID controller, incorporating an additional gain parameter, referred to as the filter action gain with the derivative (

). The transfer function of the PIDF controller is presented in Equation (2), where

are the proportional, integral, derivative, and filter gains, respectively.
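The following Python sketch illustrates the fuzzy stage described above. It implements a Mamdani controller with five triangular membership functions per variable, min-based rule firing and implication, max aggregation, and centroid defuzzification. It is a minimal sketch, not the authors' implementation. Several elements are assumptions:

- the normalized universe [−1, 1] and the evenly spaced membership-function centres;
- the hypothetical scaling-factor arguments `k_e` and `k_de`;
- the rule table.

Table 4 is not reproduced here, so the hypothetical `RULES` table uses a common diagonal layout. In it, the output label index is the sum of the two input label indices, shifted and clipped to the five available labels.

```python
# Illustrative sketch; names, centres, and the rule table are hypothetical.
import numpy as np

LABELS = ["NB", "NS", "Z", "PS", "PB"]
CENTERS = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])  # assumed evenly spaced peaks on [-1, 1]
HALF_WIDTH = 0.5                                  # assumed half-width of each triangle
UNIVERSE = np.linspace(-1.0, 1.0, 401)            # discretized output universe

# Hypothetical 5x5 rule table standing in for Table 4:
# RULES[i][j] is the output label index when e is LABELS[i] and de is LABELS[j].
RULES = [[min(max(i + j - 2, 0), 4) for j in range(5)] for i in range(5)]


def tri_mf(x, center, half_width=HALF_WIDTH):
    """Triangular membership function with its peak at `center`."""
    return np.maximum(0.0, 1.0 - np.abs(x - center) / half_width)


def fuzzy_controller(e, de, k_e=1.0, k_de=1.0):
    """Mamdani inference (min firing/implication, max aggregation), centroid output."""
    e_n = np.clip(k_e * e, -1.0, 1.0)    # normalize with scaling factors, then saturate
    de_n = np.clip(k_de * de, -1.0, 1.0)
    mu_e = tri_mf(e_n, CENTERS)          # fuzzification: membership in NB..PB
    mu_de = tri_mf(de_n, CENTERS)
    aggregated = np.zeros_like(UNIVERSE)
    for i in range(5):
        for j in range(5):
            strength = min(mu_e[i], mu_de[j])  # rule firing strength (AND = min)
            if strength > 0.0:
                consequent = tri_mf(UNIVERSE, CENTERS[RULES[i][j]])
                aggregated = np.maximum(aggregated, np.minimum(strength, consequent))
    if aggregated.sum() == 0.0:
        return 0.0
    return float(np.sum(UNIVERSE * aggregated) / np.sum(aggregated))  # centroid


print(LABELS[RULES[4][3]], fuzzy_controller(0.3, -0.2))
```

For example, `fuzzy_controller(0.0, 0.0)` returns approximately 0. Only the Z/Z rule fires, and the Z output set is symmetric about zero.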

Concurrently, the FOPD controller takes the error signal as its input. Its transfer function is given in Equation (3). There, μ is the order of the fractional differentiator, and the other two parameters are the proportional and derivative gains of the FOPD controller.
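For reference, the PIDF and FOPD transfer functions are commonly written in the forms below. The symbols are chosen here for illustration and may differ from those of Equations (2) and (3):

- $K_P$, $K_I$, $K_D$, and $N$ are the PIDF proportional, integral, derivative, and filter gains;
- $K_p^{\mathrm{FO}}$ and $K_d^{\mathrm{FO}}$ are the FOPD proportional and derivative gains;
- $\mu$ is the non-integer differentiation order.

The factor $N/(s+N)$ is a first-order low-pass filter on the derivative action, so the PIDF term approaches an ideal PID as $N \to \infty$. The FOPD reduces to a conventional PD controller when $\mu = 1$.

```latex
C_{\mathrm{PIDF}}(s) = K_P + \frac{K_I}{s} + K_D \frac{N s}{s + N},
\qquad
C_{\mathrm{FOPD}}(s) = K_p^{\mathrm{FO}} + K_d^{\mathrm{FO}} s^{\mu}
```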

The final control signal is obtained by summing the output of the fuzzy-PIDF controller and the output of the FOPD controller. This combined architecture enhances the overall performance of the proposed control strategy.
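A discrete-time sketch of the combined control law, u = PIDF(fuzzy output) + FOPD(e), is given below. Both the discretization choices and all numeric values are assumptions for illustration:

- the PIDF filtered derivative is discretized with the backward-Euler method;
- the fractional derivative of order μ is approximated with a Grünwald–Letnikov sum over the full error history;
- the gains and sample time are hypothetical;
- the hypothetical `fuzzy_stub` is a simple saturating placeholder standing in for the fuzzy stage.

```python
# Illustrative sketch; class names, gains, and fuzzy_stub are hypothetical.

class PIDF:
    """PID with filtered derivative, C(s) = kp + ki/s + kd*n*s/(s + n), backward Euler."""

    def __init__(self, kp, ki, kd, n, dt):
        self.kp, self.ki, self.kd, self.n, self.dt = kp, ki, kd, n, dt
        self.integral = 0.0   # running integral term
        self.d_term = 0.0     # filtered derivative term
        self.prev_x = 0.0     # previous input sample

    def step(self, x):
        self.integral += self.ki * x * self.dt
        # Backward Euler applied to dD/dt + n*D = kd*n*dx/dt
        self.d_term = (self.d_term + self.kd * self.n * (x - self.prev_x)) / (1.0 + self.n * self.dt)
        self.prev_x = x
        return self.kp * x + self.integral + self.d_term


class FOPD:
    """kp*e + kd*D^mu e, with D^mu from a Grünwald-Letnikov sum over all past samples."""

    def __init__(self, kp, kd, mu, dt):
        self.kp, self.kd, self.mu, self.dt = kp, kd, mu, dt
        self.history = []     # e[0], e[1], ..., e[k]
        self.weights = [1.0]  # GL weights w_j = (-1)^j * binom(mu, j)

    def _weight(self, j):
        # Recursion w_j = w_{j-1} * (1 - (mu + 1) / j), extended on demand
        while len(self.weights) <= j:
            m = len(self.weights)
            self.weights.append(self.weights[-1] * (1.0 - (self.mu + 1.0) / m))
        return self.weights[j]

    def step(self, e):
        self.history.append(e)
        k = len(self.history) - 1
        gl_sum = sum(self._weight(j) * self.history[k - j] for j in range(k + 1))
        return self.kp * e + self.kd * gl_sum / self.dt ** self.mu


def fuzzy_stub(e, de):
    """Hypothetical placeholder for the fuzzy stage: saturated weighted sum."""
    return max(-1.0, min(1.0, e + 0.1 * de))


# Usage: unit error step with hypothetical gains and sample time.
dt = 0.01
pidf = PIDF(kp=1.0, ki=0.5, kd=0.2, n=100.0, dt=dt)
fopd = FOPD(kp=0.5, kd=0.1, mu=0.8, dt=dt)
prev_e = 0.0
u = []
for k in range(5):
    e = 1.0
    de = (e - prev_e) / dt
    u.append(pidf.step(fuzzy_stub(e, de)) + fopd.step(e))  # u = PIDF(fuzzy) + FOPD(e)
    prev_e = e
```

The implementation can be sanity-checked at two special orders:

- With μ = 1, the Grünwald–Letnikov weights reduce to 1, −1, 0, 0, and so on, so the FOPD term becomes a backward difference.
- With μ = 0, it returns the error itself.

In practice, the history is often truncated (the short-memory principle), or an Oustaloup approximation is used instead to keep the cost per step bounded.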

3.2. Sand Cat Swarm Optimization (SCSO), PSO Algorithm, and Objective Function

The Sand Cat Swarm Optimization (SCSO) algorithm is a nature-inspired metaheuristic derived from the behavior of sand cats in their natural habitat [38]. SCSO offers several advantages for complex engineering problems:

- It balances the exploration and exploitation phases through an adaptively decreasing sensitivity parameter. This helps it avoid local optima and can improve convergence.
- It has few algorithm-specific parameters, which simplifies implementation and reduces tuning effort.
- Its search capability allows it to adapt to dynamic environments, such as fluctuating load conditions in power systems.
- It is computationally inexpensive with modest memory requirements, which makes it a candidate for real-time applications.
- It has been applied both to global benchmark optimization and to practical problems, including smart grids, robotics, and bioinformatics.
- Its decentralized agent behavior allows it to scale to high-dimensional problem spaces.
- It does not require gradient information, so it can handle nonlinear or discontinuous objectives and constraints. This makes it suitable for multi-objective optimization and energy-efficient design tasks.

The algorithm emulates the sand cat’s specialized mechanisms for searching and hunting prey. Sand cats possess distinctive characteristics compared to domestic cats, such as the ability to detect low-frequency sounds, adapt to the harsh desert environment, and employ specialized hunting strategies. While their physical appearance is similar to that of domestic cats, sand cats are distinguished by a dense layer of fur on their palms and soles, aiding in their survival in arid terrains.

A notable behavioral adaptation of sand cats is their ability to detect low-frequency sounds, particularly those below 2 kHz, which they use to locate prey. This foraging and hunting ability forms the foundation of the SCSO algorithm, which is designed to identify solutions close to the global optimum. According to the No Free Lunch (NFL) theorem [39,40], no single metaheuristic algorithm outperforms all others on every optimization problem. Nevertheless, the SCSO algorithm has demonstrated reliable performance across a wide range of optimization tasks, making it a competitive choice among existing metaheuristic approaches.

SCSO begins by initializing a population of candidate solutions in the search space, a step shared by population-based metaheuristic algorithms. The candidates are generated randomly between lower and upper bounds defined by the constraints of the optimization problem, and these bounds limit where the search is conducted. The population is stored as a matrix. Its columns correspond to the decision variables, which set the dimensionality of the search space, and its rows correspond to the search agents. These randomly generated candidate solutions are refined iteratively as the algorithm converges toward an optimal solution.

To evaluate the quality of candidate solutions, a well-defined fitness (or cost) function tailored to the specific optimization problem is employed. Depending on the problem’s objective—whether maximization or minimization—the algorithm steers the search process toward the optimal solution. During each iteration, the SCSO algorithm ensures that all candidate solutions remain within the specified boundaries of the search space.
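The initialization, evaluation, and boundary steps described above can be sketched with NumPy as follows. This is a sketch under several assumptions:

- Out-of-range positions are simply clipped to the bounds. This is one common choice; the text does not specify the boundary-handling rule.
- The bounds for the nine gains are hypothetical.
- A sphere function stands in for the actual cost.

```python
# Illustrative sketch; function names and bounds are hypothetical.
import numpy as np

def initialize_population(n_agents, lb, ub, rng):
    """Random population: rows are search agents, columns are decision variables."""
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    return lb + rng.random((n_agents, lb.size)) * (ub - lb)

def enforce_bounds(pop, lb, ub):
    """Keep every candidate inside [lb, ub] by clipping (assumed boundary rule)."""
    return np.clip(pop, lb, ub)

def evaluate(pop, cost):
    """Cost of each row (candidate solution)."""
    return np.array([cost(x) for x in pop])

rng = np.random.default_rng(0)
lb, ub = np.zeros(9), np.full(9, 2.0)   # hypothetical bounds for nine gains
pop = initialize_population(50, lb, ub, rng)
fitness = evaluate(pop, lambda x: float(np.sum(x ** 2)))  # placeholder cost, not ITAE
best = pop[np.argmin(fitness)]  # minimization: the lowest cost is the best
```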

The search agents then explore the search space, updating their positions and moving toward regions where the optimal solution is likely to lie. This mimics the sand cat's hunting behavior, in which it gradually approaches the predicted location of its prey. SCSO's exploration and exploitation strategies are designed to balance global search with local refinement so that the agents converge to solutions near the global optimum. The specific search and hunting mechanisms differ among metaheuristic algorithms, which gives each algorithm its particular strengths and range of applicability.

In the SCSO algorithm, the prey-search mechanism builds on each sand cat's ability to detect low-frequency noise. The sensitivity range of each search agent lies within the interval [0, 2]. The general sensitivity range decreases from 2 to 0 over the course of the iterations, as given by Equations (4)–(6).

In Equation (4), the parameter representing the sand cat's ability to perceive low-frequency signals below 2 kHz is set to 2. Equation (4) also involves the current iteration number and the maximum number of iterations. Equations (7), (8a), and (8b) use three positions: the current position of the search agent, the best position found so far, and a random position within the search space.
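The sensitivity parameters described above can be sketched as follows. The sketch assumes the standard SCSO formulation:

- the general sensitivity range r_G decreases linearly;
- each agent's sensitivity range is r = r_G·rand;
- the phase-control parameter is R = 2·r_G·rand − r_G.

The symbol and function names are illustrative and may not match those of Equations (4)–(6).

```python
# Illustrative sketch; names follow the standard SCSO form and are hypothetical here.
import numpy as np

S_M = 2.0  # hearing-related parameter, set to 2 as stated in the text

def general_sensitivity(iter_c, iter_max, s_m=S_M):
    """General sensitivity range r_G, decreasing linearly from s_m to 0."""
    return s_m - s_m * iter_c / iter_max

def sample_r_and_R(r_g, rng):
    """Per-agent sensitivity range r in [0, r_G) and phase-control parameter R in [-r_G, r_G)."""
    r = r_g * rng.random()
    R = 2.0 * r_g * rng.random() - r_g
    return r, R

rng = np.random.default_rng(0)
for it in (0, 25, 49):
    r_g = general_sensitivity(it, 50)
    r, R = sample_r_and_R(r_g, rng)
    print(f"iter {it:2d}: r_G={r_g:.2f}  r={r:.3f}  R={R:+.3f}")
```

R is drawn from [−r_G, r_G]. The standard SCSO selects exploitation when |R| ≤ 1, so that condition always holds once r_G has fallen to 1 or below. With the linear schedule above, this is the second half of the run.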

In the SCSO algorithm, search agents follow a circular motion to explore the search space and investigate potential global solutions. This circular movement promotes diversification by letting agents move in different directions. A random angle, uniformly distributed between 0 and 360 degrees, enters the position update through its cosine to model this behavior [40].

The primary structural equations governing the SCSO algorithm are presented in Equation (9). These equations encapsulate the algorithm’s unique mechanisms for balancing exploration and exploitation, enabling robust convergence toward the global optimum by leveraging the sand cat’s biologically inspired hunting strategies.
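The position update of Equation (9) can be sketched as below, assuming it follows the standard SCSO rule:

- When |R| ≤ 1, the agent attacks. It moves around the best position with a step scaled by the cosine of a random angle.
- Otherwise, the agent searches. It moves relative to a randomly chosen agent.

This is an illustrative sketch, and the function and argument names are hypothetical.

```python
# Illustrative sketch; function and argument names are hypothetical.
import numpy as np

def scso_update(pos, best, population, r, R, rng):
    """Return the new position of one agent (per-dimension SCSO update)."""
    new = np.empty_like(pos, dtype=float)
    for j in range(pos.size):
        if abs(R) <= 1.0:
            # Exploitation (attack): circular motion around the best position.
            theta = rng.uniform(0.0, 2.0 * np.pi)          # random angle, 0 to 360 degrees
            pos_rnd = abs(rng.random() * best[j] - pos[j])  # random position term
            new[j] = best[j] - r * pos_rnd * np.cos(theta)
        else:
            # Exploration (search): move relative to a randomly chosen agent.
            cand = population[rng.integers(len(population))]
            new[j] = r * (cand[j] - rng.random() * pos[j])
    return new

rng = np.random.default_rng(0)
population = rng.uniform(-5.0, 5.0, size=(10, 3))
best = population[0]
print(scso_update(population[3], best, population, r=0.5, R=0.3, rng=rng))
```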

The fundamental operational principle of the Sand Cat Swarm Optimization (SCSO) method is to identify potential optimal solutions within a randomly initialized search space, drawing inspiration from the sand cat’s behavior of seeking and attacking prey. Depending on the problem at hand, the objective of the algorithm can be framed as either the minimization or maximization of an appropriate cost function.

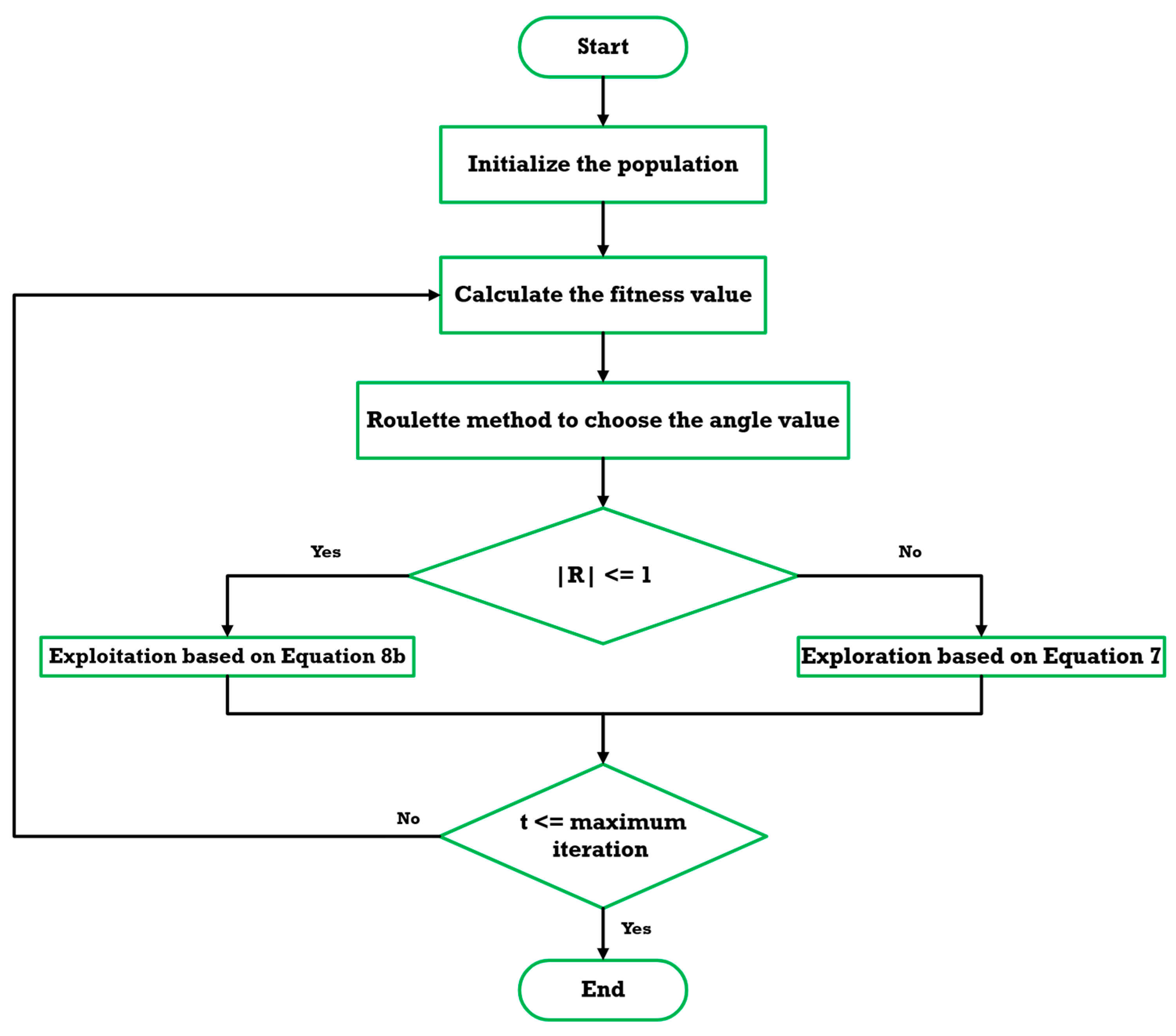

Figure 7 illustrates the flowchart of the SCSO algorithm. Based on this flowchart and the following procedural steps, the optimal control parameters can be determined:

Step 1: The initial phase configures the parameters of the SCSO-based tuning algorithm and sets the lower and upper bounds of the unknown parameters within the search space. For the AVR system, these parameters are the gains of the proposed controller, nine in total, as illustrated in Figure 8. The number of search agents and the maximum number of iterations must also be defined to guide the optimization process.

Step 2: Each search agent evaluates the fitness value of its corresponding candidate solution based on the problem’s defined cost function. This step facilitates the assessment of solution quality in the context of the optimization objective.

Step 3: The SCSO algorithm is then executed to determine the optimal solution. Based on the fitness values, the algorithm identifies the best score and the corresponding best position in the search space. It then updates the positions of the search agents according to Equation (9). Selecting an appropriate performance index for the metaheuristic algorithm is critical to achieving effective optimization.

Step 4: A predefined stopping criterion is incorporated as part of the SCSO algorithm. In this case, the stopping criterion is satisfied when the maximum number of iterations is reached, signaling the algorithm to terminate.
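Steps 1 to 4 can be combined into a compact SCSO minimizer such as the Python sketch below. It assumes the standard SCSO update rules (linear r_G schedule, |R| ≤ 1 switch, cosine-based attack) and clipping for boundary handling. The 9-dimensional sphere function in the usage lines is only a stand-in for the ITAE-based cost of the AVR controller, which would require a closed-loop simulation. The function name `scso` is hypothetical.

```python
# Illustrative sketch; the function name and the stand-in cost are hypothetical.
import numpy as np

def scso(cost, lb, ub, n_agents=50, max_iter=50, s_m=2.0, seed=0):
    """Minimal SCSO minimizer: returns best position, best cost, convergence curve."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    # Step 1: random initial population within the bounds.
    pos = lb + rng.random((n_agents, dim)) * (ub - lb)
    best_pos, best_cost = pos[0].copy(), np.inf
    curve = []

    def evaluate(agents, bp, bc):
        agents = np.clip(agents, lb, ub)               # keep agents inside the bounds
        costs = np.array([cost(a) for a in agents])    # Step 2: fitness of each agent
        i = int(np.argmin(costs))
        if costs[i] < bc:                              # Step 3: keep the best solution
            bp, bc = agents[i].copy(), float(costs[i])
        return agents, bp, bc

    for t in range(max_iter):                          # Step 4: fixed iteration budget
        pos, best_pos, best_cost = evaluate(pos, best_pos, best_cost)
        curve.append(best_cost)
        r_g = s_m - s_m * t / max_iter                 # general sensitivity, 2 -> 0
        for i in range(n_agents):
            r = r_g * rng.random()                     # agent sensitivity range
            R = 2.0 * r_g * rng.random() - r_g         # phase-control parameter
            for j in range(dim):
                if abs(R) <= 1.0:                      # exploitation (attack)
                    theta = rng.uniform(0.0, 2.0 * np.pi)
                    pos_rnd = abs(rng.random() * best_pos[j] - pos[i, j])
                    pos[i, j] = best_pos[j] - r * pos_rnd * np.cos(theta)
                else:                                  # exploration (search)
                    cand = pos[rng.integers(n_agents)]
                    pos[i, j] = r * (cand[j] - rng.random() * pos[i, j])
    pos, best_pos, best_cost = evaluate(pos, best_pos, best_cost)  # score final moves
    return best_pos, best_cost, curve

# Usage: 9 decision variables (as for the nine controller gains), sphere stand-in cost.
best_x, best_f, curve = scso(lambda x: float(np.sum(x ** 2)), [-5.0] * 9, [5.0] * 9)
print(best_f, len(curve))
```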

PSO is a nature-inspired optimization algorithm that simulates the collective behavior of bird flocks or fish schools. It initializes a population of particles (candidate solutions) that move within the search space. Each particle updates its velocity and position using a combination of its own best-known solution and the best solution found by the swarm. This iterative process lets PSO explore and exploit the search space efficiently, which makes it effective for complex optimization problems. The mechanism of the algorithm and its implementation are explained in detail in [16,41]. The PSO parameters used in this study are listed in Table 5.
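For reference, the canonical PSO update with a linearly decreasing inertia weight is commonly written as follows, with these illustrative symbols:

- $x_i^k$ and $v_i^k$ are the position and velocity of particle $i$ at iteration $k$;
- $p_i$ is the particle's personal best, and $g$ is the global best;
- $r_1, r_2$ are uniform random numbers in [0, 1].

The linear inertia schedule is an assumption consistent with the Wmax and Wmin parameters in Table 5.

```latex
\begin{aligned}
v_i^{k+1} &= w^k v_i^k + c_1 r_1 \left(p_i - x_i^k\right) + c_2 r_2 \left(g - x_i^k\right), \\
x_i^{k+1} &= x_i^k + v_i^{k+1}, \\
w^k &= w_{\max} - \left(w_{\max} - w_{\min}\right) \frac{k}{k_{\max}}, \qquad v_i^{k+1} \in [-v_{\max},\, v_{\max}].
\end{aligned}
```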

Here are the definitions of the PSO parameters:

- No. Particles (number of particles): the total number of particles (candidate solutions) in the swarm. Here, 50 particles are used.
- No. Iteration (maximum number of iterations): the number of iterations the algorithm runs before stopping, set to 50.
- Wmax (maximum inertia weight): the upper limit of the inertia weight, which controls how strongly a particle's previous velocity influences its current velocity. A larger value encourages global exploration.
- Wmin (minimum inertia weight): the lower limit of the inertia weight, which promotes local exploitation as the algorithm progresses.
- C1 (cognitive coefficient): the acceleration coefficient that weights the influence of a particle's personal best solution on its velocity update.
- C2 (social coefficient): the acceleration coefficient that weights the influence of the global best solution (the best solution found by any particle) on the particle's velocity update.
- Vmax (maximum velocity): the upper limit of a particle's velocity, which prevents excessive movement. It is set to 20% of the search-space range (upper bound − lower bound).
- Vmin (minimum velocity): the lower limit of a particle's velocity, set to −Vmax so that each velocity component stays within [−Vmax, Vmax].

These parameters govern the exploration and exploitation balance in the PSO algorithm, affecting its convergence speed and solution quality.
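A compact PSO implementation using the parameters listed above might look like the following sketch. Only the swarm size, the iteration count, and the Vmax/Vmin rule are taken from the text. The other settings are placeholders or assumptions:

- w_max = 0.9, w_min = 0.4, and c1 = c2 = 2.0 are common defaults, not the Table 5 values;
- the linear inertia schedule is assumed;
- the sphere cost stands in for the ITAE objective;
- the function name `pso` is hypothetical.

```python
# Illustrative sketch; w_max, w_min, c1, c2 defaults and all names are hypothetical.
import numpy as np

def pso(cost, lb, ub, n_particles=50, max_iter=50,
        w_max=0.9, w_min=0.4, c1=2.0, c2=2.0, seed=0):
    """Global-best PSO with linearly decreasing inertia and velocity clamping."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    v_max = 0.2 * (ub - lb)          # Vmax: 20% of the search range
    v_min = -v_max                   # Vmin = -Vmax
    x = lb + rng.random((n_particles, dim)) * (ub - lb)
    v = np.zeros((n_particles, dim))
    p_best = x.copy()                                # personal best positions
    p_cost = np.array([cost(p) for p in x])          # personal best costs
    g = int(np.argmin(p_cost))
    g_best, g_cost = p_best[g].copy(), float(p_cost[g])
    curve = []
    for k in range(max_iter):
        w = w_max - (w_max - w_min) * k / max_iter   # inertia: Wmax -> Wmin
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + c1 * r1 * (p_best - x) + c2 * r2 * (g_best - x)
        v = np.clip(v, v_min, v_max)                 # velocity limits
        x = np.clip(x + v, lb, ub)                   # stay inside the search space
        costs = np.array([cost(p) for p in x])
        improved = costs < p_cost
        p_best[improved] = x[improved]
        p_cost[improved] = costs[improved]
        g = int(np.argmin(p_cost))
        if p_cost[g] < g_cost:
            g_best, g_cost = p_best[g].copy(), float(p_cost[g])
        curve.append(g_cost)
    return g_best, g_cost, curve

best_x, best_f, curve = pso(lambda z: float(np.sum(z ** 2)), [-5.0] * 9, [5.0] * 9)
print(best_f, len(curve))
```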

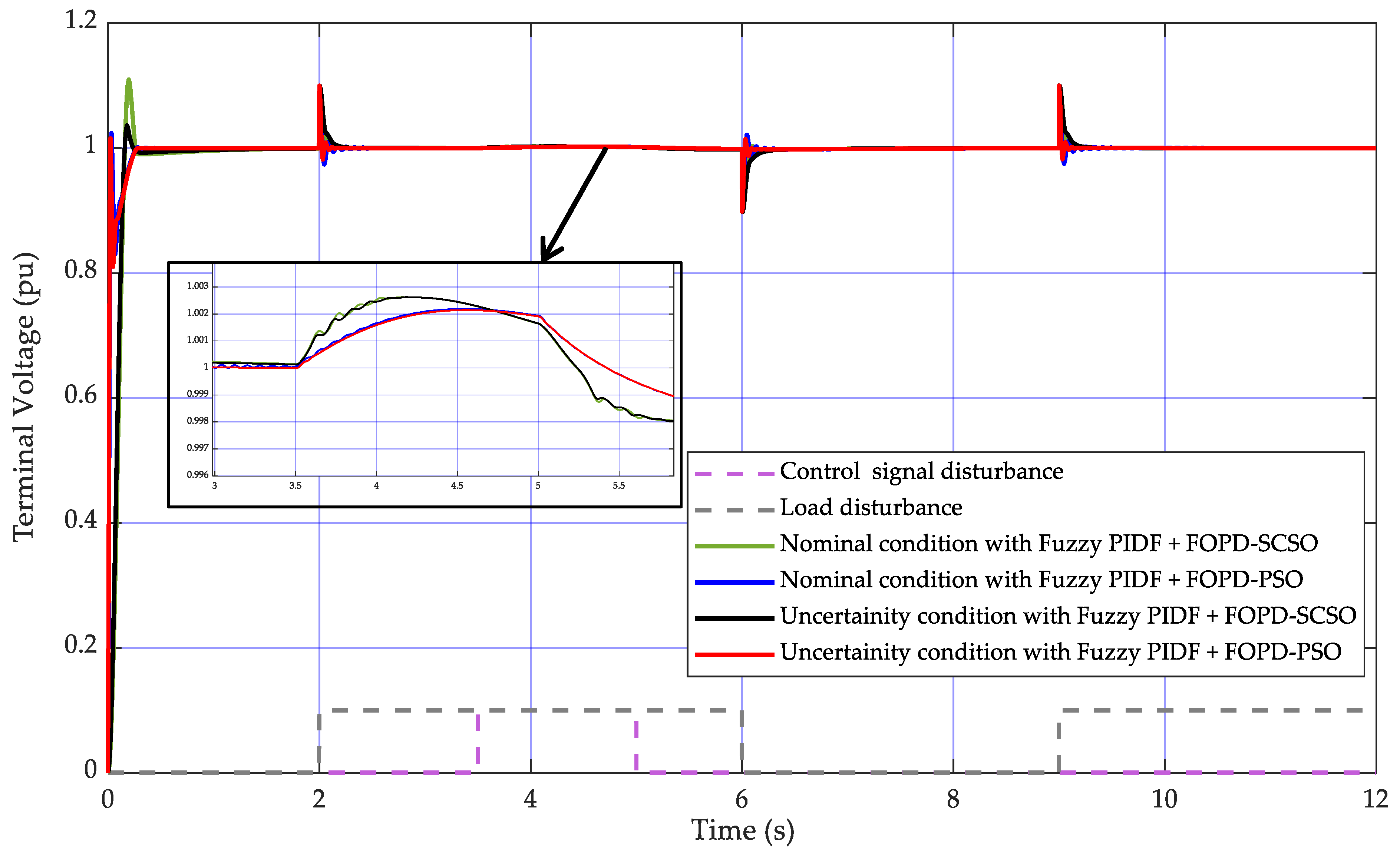

In this study, the gains of the proposed Fuzzy PIDF + FOPD controller for the AVR application were tuned with the SCSO algorithm by minimizing the integral of time-weighted absolute error (ITAE) cost function, given in Equation (10).
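Numerically, the ITAE index is usually evaluated on sampled simulation data. The sketch below assumes the standard definition $J = \int_0^{T} t\,|e(t)|\,dt$, where $e(t)$ is the error between the reference and the measured terminal voltage and $T$ is the simulation horizon. It approximates the integral with the trapezoidal rule. The function name `itae` is hypothetical.

```python
# Illustrative sketch; the function name is hypothetical.
import numpy as np

def itae(t, e):
    """Approximate ITAE = integral of t*|e(t)| dt with the trapezoidal rule."""
    t = np.asarray(t, dtype=float)
    y = t * np.abs(np.asarray(e, dtype=float))      # time-weighted absolute error
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(t)))

t = np.linspace(0.0, 20.0, 20001)
print(itae(t, np.exp(-t)))   # close to the analytical value 1 - 21*exp(-20)
```

In a tuning run, `e` would come from the closed-loop AVR step-response simulation for each candidate gain vector, and the returned value would be the cost passed to the optimizer.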

To ensure a sensible computational time for parameter tuning, the algorithm’s population size and the number of iterations were both set to 50. Additionally, the sensitivity range (

) was defined from 0 to 2, while the phase control range (R) was set as [−2

to 2

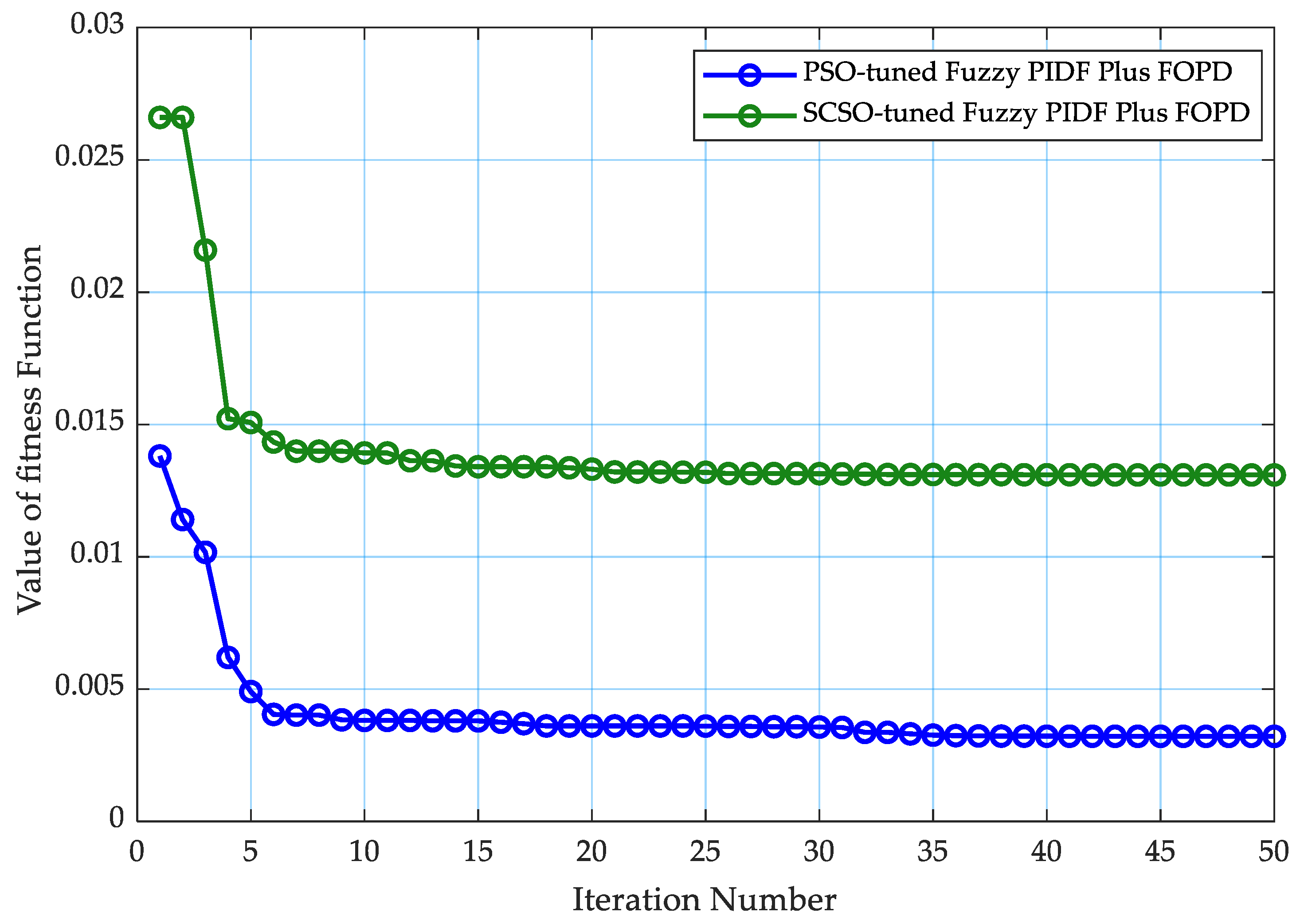

]. The convergence curves of the PSO algorithm- and SCSO algorithm-tuned proposed controller are illustrated in

Figure 9. The optimal parameters of the proposed AVR controller, obtained using the SCSO and PSO algorithms by minimizing the Integral of Time-weighted Absolute Error (ITAE), are summarized in

Table 6.