Enhanced Deep Representation Learning Extreme Learning Machines for EV Charging Load Forecasting by Improved Artemisinin Optimization and Multivariate Variational Mode Decomposition

Abstract

1. Introduction

2. Method

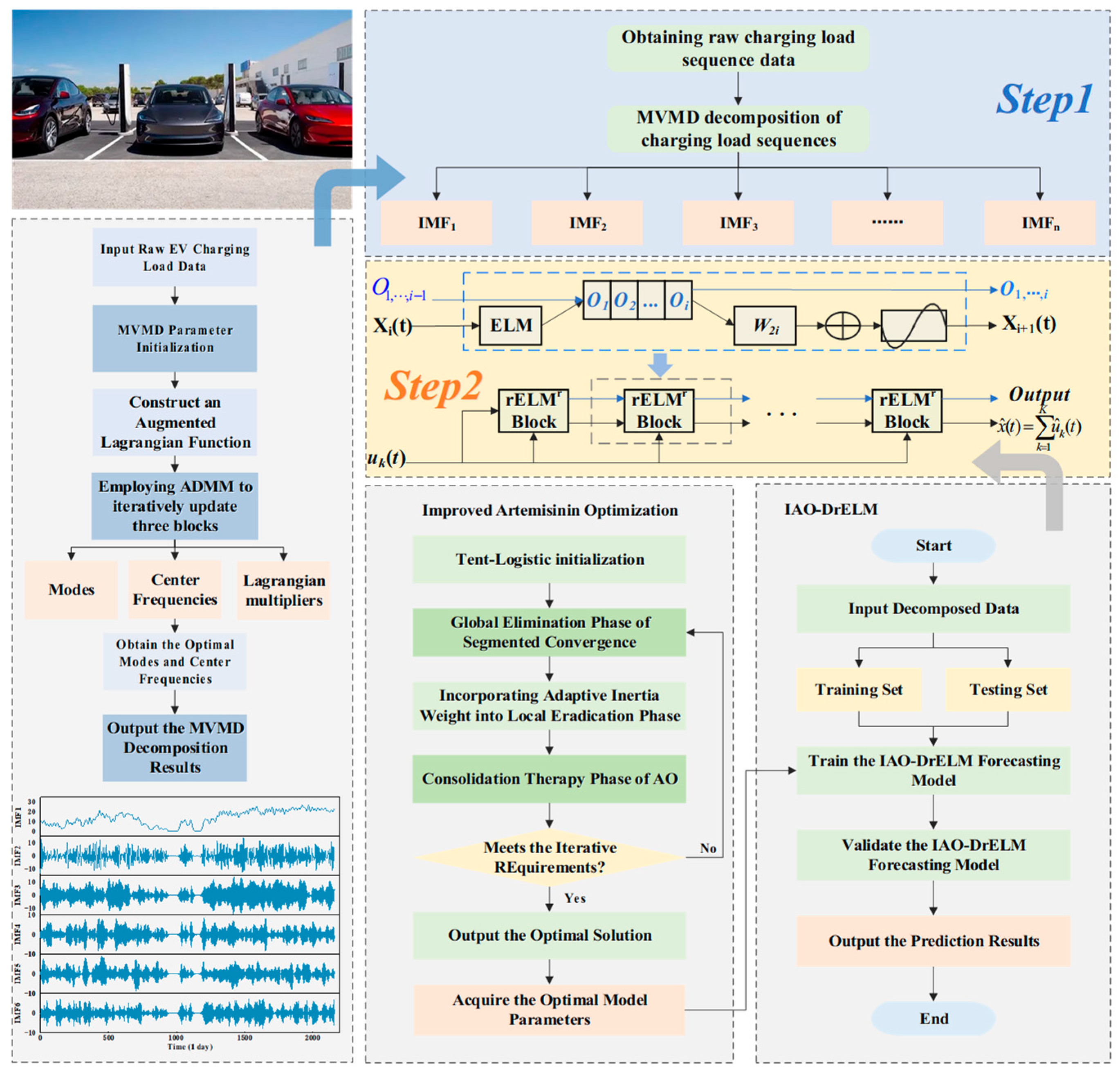

2.1. Multivariate Variational Mode Decomposition (MVMD)

2.2. Artemisinin Optimization (AO)

- (1) Initialization phase

- (2) Global elimination phase

- (3) Local eradication phase

- (4) Consolidation therapy phase

2.3. Deep Representation Learning Extreme Learning Machines (DrELMs)
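As a point of reference for the quantities listed in the Nomenclature (input weight matrix, hidden layer output, output weight vector, target values), the following is a minimal sketch of a single-layer ELM, the building block that a DrELM stacks. It is not the authors' implementation: the class name `BasicELM`, the `tanh` activation, the uniform weight range, and the small ridge term are all assumptions made for illustration, and the stacking of several ELM layers is not shown.

```python
import numpy as np


class BasicELM:
    """Single-hidden-layer ELM regressor (illustrative sketch, not the paper's code)."""

    def __init__(self, n_hidden=50, ridge=1e-6, seed=0):
        self.n_hidden = n_hidden  # number of hidden layer nodes
        self.ridge = ridge        # assumed small regularisation for numerical stability
        self.seed = seed

    def _hidden(self, X):
        # Hidden layer output H = g(XW + b); tanh is an assumed activation.
        return np.tanh(X @ self.W + self.b)

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        rng = np.random.default_rng(self.seed)
        # Input weights and biases are drawn at random and never trained.
        self.W = rng.uniform(-1.0, 1.0, size=(X.shape[1], self.n_hidden))
        self.b = rng.uniform(-1.0, 1.0, size=self.n_hidden)
        H = self._hidden(X)
        # Output weights from regularised least squares:
        # beta = (H^T H + ridge * I)^{-1} H^T y
        A = H.T @ H + self.ridge * np.eye(self.n_hidden)
        self.beta = np.linalg.solve(A, H.T @ y)
        return self

    def predict(self, X):
        return self._hidden(np.asarray(X, dtype=float)) @ self.beta


if __name__ == "__main__":
    x = np.linspace(0.0, 2.0 * np.pi, 200).reshape(-1, 1)
    y = np.sin(x).ravel()
    model = BasicELM().fit(x, y)
    print("training MSE:", np.mean((model.predict(x) - y) ** 2))
```

Only the output weights are solved for, which is why ELM training reduces to one linear solve rather than iterative backpropagation.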

2.4. Improved Artemisinin Optimization Algorithm

- (1) Tent-Logistic Double Chaotic Mapping Initialization

- (2) Segmented Nonlinear Convergence Drug Factors

- (3) Adaptive Inertia Weight Step Coefficient
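To make the first improvement more concrete, the sketch below shows one possible way to seed a population with Tent and Logistic chaotic maps instead of uniform random numbers. The way the two maps are coupled here (a Tent step followed by a Logistic step), the function name `tent_logistic_init`, and the parameter values `a = 0.7` and `mu = 4.0` are assumptions for illustration only; the exact formulation used for the IAO may differ.

```python
import numpy as np


def tent_logistic_init(pop_size, dim, lower, upper, a=0.7, mu=4.0, seed=0):
    """Chaotic population initialisation (hypothetical Tent + Logistic coupling)."""
    rng = np.random.default_rng(seed)
    # One chaotic state per dimension, started away from the fixed points 0 and 1.
    x = rng.uniform(0.05, 0.95, size=dim)
    pop = np.empty((pop_size, dim))
    for i in range(pop_size):
        # Tent map step: x/a on [0, a), (1 - x)/(1 - a) on [a, 1].
        x = np.where(x < a, x / a, (1.0 - x) / (1.0 - a))
        # Logistic map step applied to the Tent output (assumed coupling);
        # with mu = 4 the result stays in [0, 1].
        z = mu * x * (1.0 - x)
        # Scale the chaotic value into the search bounds.
        pop[i] = lower + z * (upper - lower)
    return pop


if __name__ == "__main__":
    population = tent_logistic_init(pop_size=30, dim=5, lower=-10.0, upper=10.0)
    print(population.shape, population.min(), population.max())
```

The Tent parameter is set away from 0.5 because the symmetric Tent map degenerates to zero after a few dozen iterations in binary floating-point arithmetic.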

3. Flowchart of the Charging Load Forecasting Model

4. Case Study

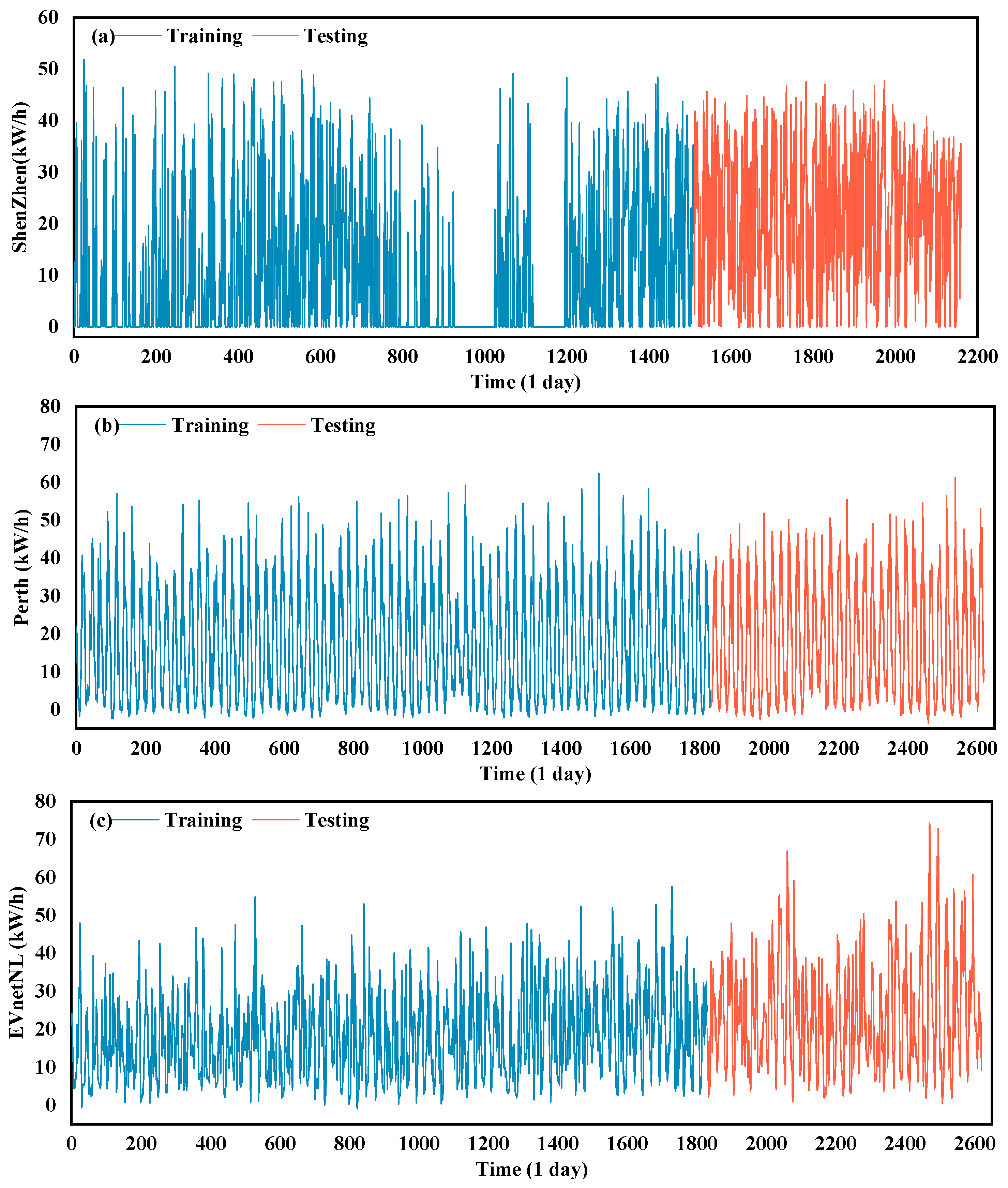

4.1. Data Sources

4.2. Data Processing

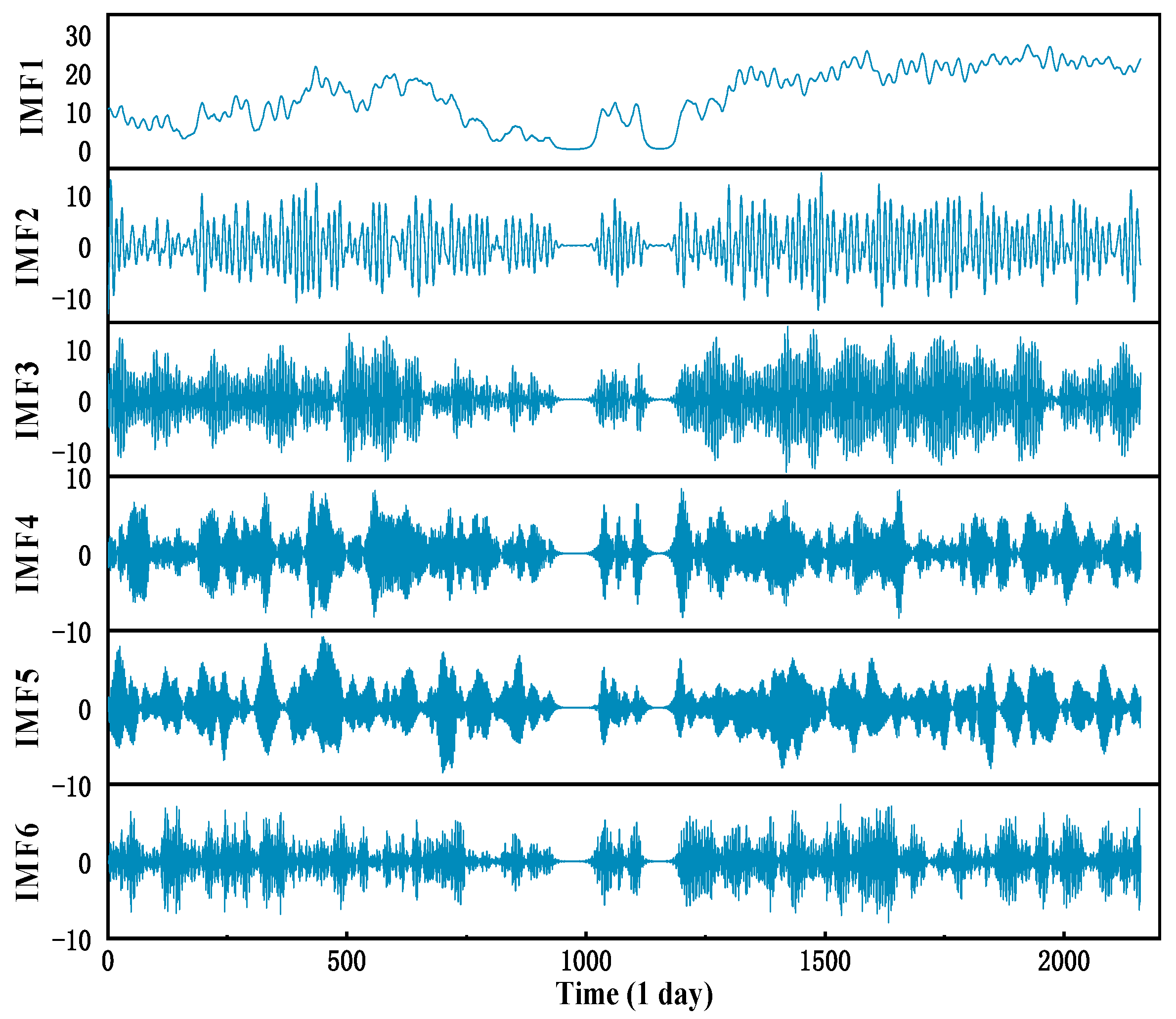

4.3. MVMD Decomposition

4.4. Model Performance Evaluation Metrics
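The four metrics reported in the result tables (MSE, RMSE, MAE, and R2) can be computed as in the minimal sketch below, which uses their standard textbook definitions. The function name `evaluate` and the dictionary output are illustrative choices, not taken from the paper; R2 is returned as a fraction, so multiply by 100 to compare with the percentage values in the tables.

```python
import numpy as np


def evaluate(y_true, y_pred):
    """Return MSE, RMSE, MAE and R2 for two equal-length sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = float(np.mean(err ** 2))       # mean squared error
    rmse = float(np.sqrt(mse))           # root mean squared error
    mae = float(np.mean(np.abs(err)))    # mean absolute error
    ss_res = float(np.sum(err ** 2))     # residual sum of squares
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot           # coefficient of determination
    return {"MSE": mse, "RMSE": rmse, "MAE": mae, "R2": r2}


if __name__ == "__main__":
    print(evaluate([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 6.0]))
```

Because RMSE is the square root of MSE, the two columns in each results table carry the same ranking information; MAE is less sensitive to a few large errors.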

5. Experimental Results and Discussion

5.1. Performance Comparison Between the DrELM and Sequential Models

- (1) Within a single model framework, the DrELM outperforms BP, LSTM, ELM, GRU, and Transformer across all four metrics: MSE, RMSE, MAE, and R2. Compared with the baseline ELM model, the DrELM reduces the MSE, RMSE, and MAE by 24.17%, 12.92%, and 9.68%, respectively, while increasing R2 to 89.43%. The results demonstrate that the DrELM has stronger nonlinear fitting and generalization capabilities when handling complex time series, which is why the DrELM was selected as the core predictor.

- (2) After signal decomposition is introduced, all decomposition-based hybrid models (EMD-DrELM, VMD-DrELM, and MVMD-DrELM) outperform the standalone DrELM model. Compared with the DrELM, the MVMD-DrELM reduces the MSE, RMSE, and MAE by 22.01%, 11.69%, and 12.98%, respectively, while the R2 value increases to 91.79%. The results demonstrate that decomposing the original signal into multiple, relatively stationary sub-modes effectively mitigates the interference that sequence non-stationarity causes in the prediction model.

- (3) All error metrics for the MVMD-DrELM are lower than those of the VMD-DrELM and the EMD-DrELM. Compared with the VMD-DrELM, its MSE, RMSE, and MAE are further reduced by 10.73%, 5.52%, and 4.84%, respectively. The results further validate the superiority of MVMD in processing multivariate signals, avoiding modal aliasing and information loss. The model reconstructs the final load sequence by linearly weighting and summing the predictions of each modal component. Experimental results demonstrate that this strategy maintains prediction consistency without introducing significant reconstruction error, further validating the feasibility and stability of the proposed framework.
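The percentage reductions quoted in the list above follow from the tabulated error values as (baseline − improved) / baseline × 100. The short sketch below recomputes the ELM-to-DrELM figures from the values reported in the results table; the helper name `relative_reduction` is an illustrative choice.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100.0


# Error values copied from the results table (ELM and DrELM rows).
elm = {"MSE": 26.5322, "RMSE": 5.1509, "MAE": 4.0213}
drelm = {"MSE": 20.1206, "RMSE": 4.4856, "MAE": 3.6319}

if __name__ == "__main__":
    for metric in ("MSE", "RMSE", "MAE"):
        pct = relative_reduction(elm[metric], drelm[metric])
        print(f"{metric}: {pct:.2f}% lower for DrELM than for ELM")
```

Run on these inputs, the helper reproduces the 24.17%, 12.92%, and 9.68% reductions stated in item (1); the same call with other table rows gives the figures in items (2) and (3).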

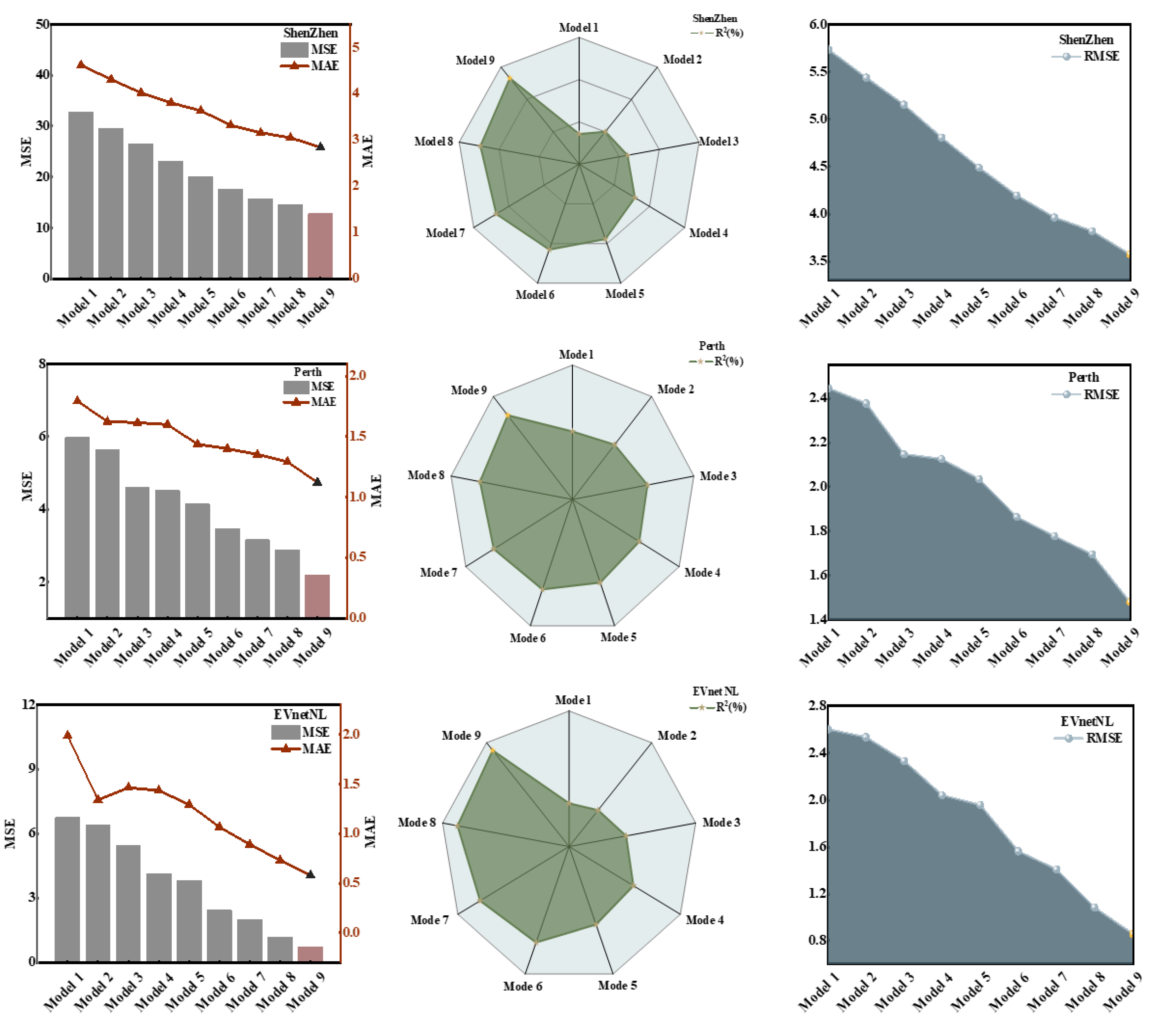

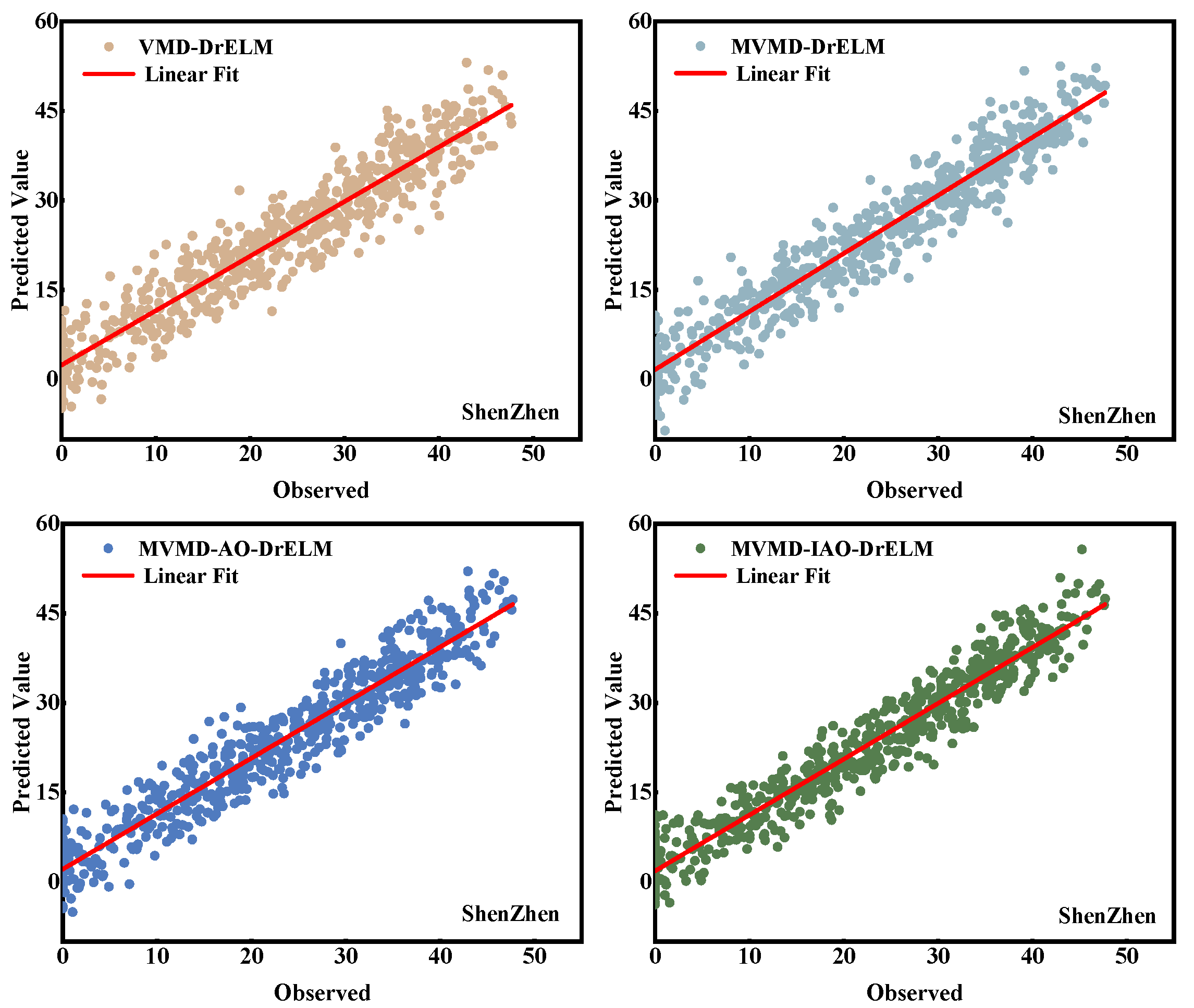

5.2. Analysis of the MVMD-IAO-DrELM Model Prediction Results

- (1) Compared with the eight other models, the proposed hybrid deep learning prediction model MVMD-IAO-DrELM gives the most accurate predictions on all four metrics: an MSE of 12.7700, an RMSE of 3.5735, an MAE of 2.8405, and an R2 of 93.29%.

- (2) Among the single models, the DrELM outperforms CNN, LSSVM, ELM, and RELM. Compared with the basic ELM model, the DrELM reduces the MSE, RMSE, and MAE by 24.17%, 12.92%, and 9.68%, respectively.

- (3) During model training on the Shenzhen dataset, the MSE, RMSE, and MAE values of the MVMD-DrELM were 15.6927, 3.9614, and 3.1604, respectively. These values are 22.01%, 11.69%, and 12.98% lower, respectively, than those of the single DrELM model.

- (4) The experiment also compared VMD and MVMD signal decomposition. MVMD performs better than VMD: relative to the VMD-DrELM, the MVMD-DrELM lowers the MSE, RMSE, and MAE by 10.73%, 5.52%, and 4.84%, respectively.

- (5) Compared with the MVMD-DrELM, the MVMD-AO-DrELM reduces the MSE by 7.15%, the RMSE by 3.64%, and the MAE by 3.51%. The experimental results show that the AO algorithm can optimize the parameters of the DrELM model, improving its efficiency and accuracy.

- (6) Compared with the MVMD-AO-DrELM model, the proposed MVMD-IAO-DrELM model further lowers the MSE from 14.5710 to 12.7700 (12.36%), the RMSE from 3.8172 to 3.5735 (6.38%), and the MAE from 3.0494 to 2.8405 (6.85%), and raises R2 from 92.35% to 93.29%. The experimental results demonstrate the positive impact of the IAO algorithm on parameter optimization for the prediction model.

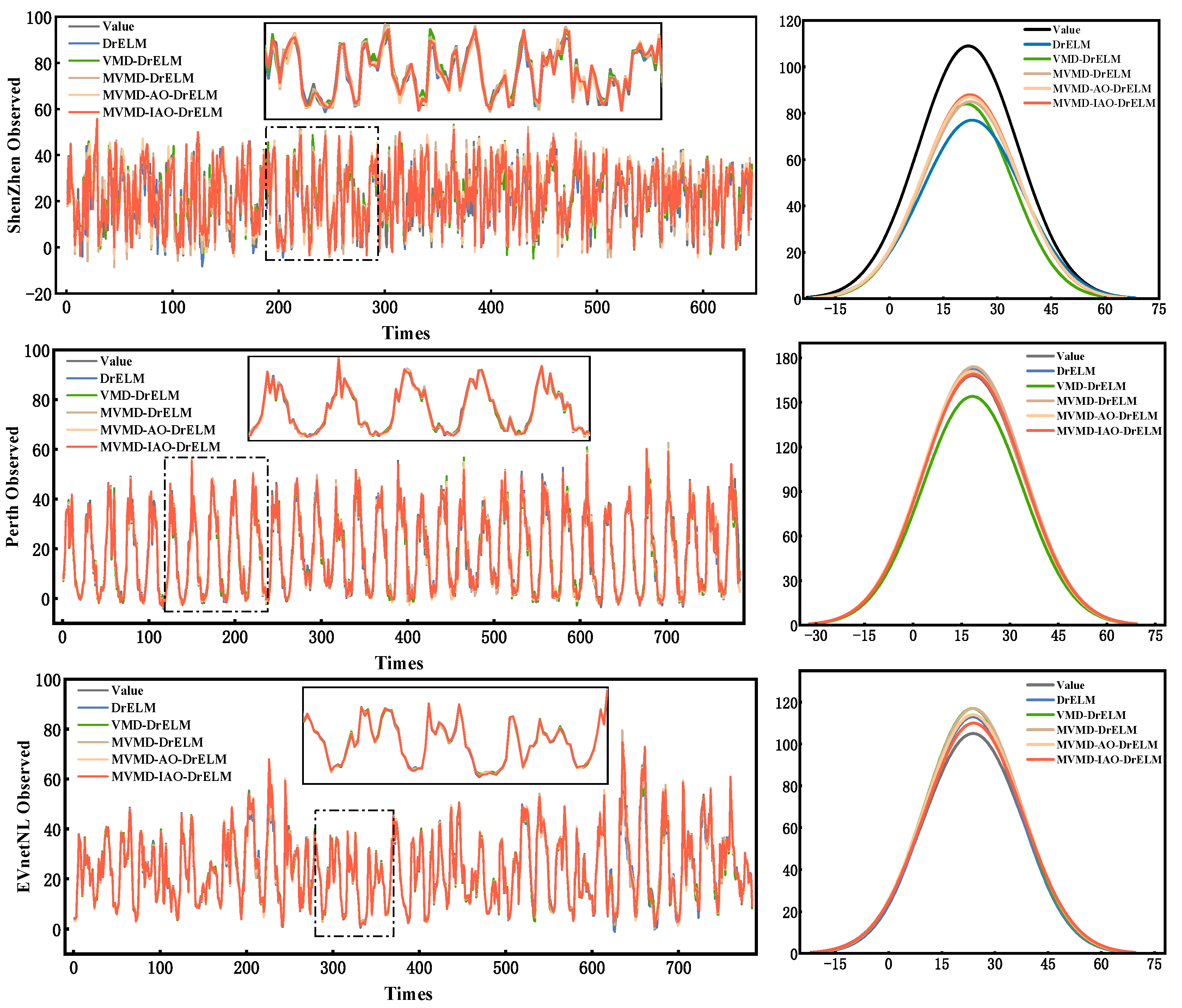

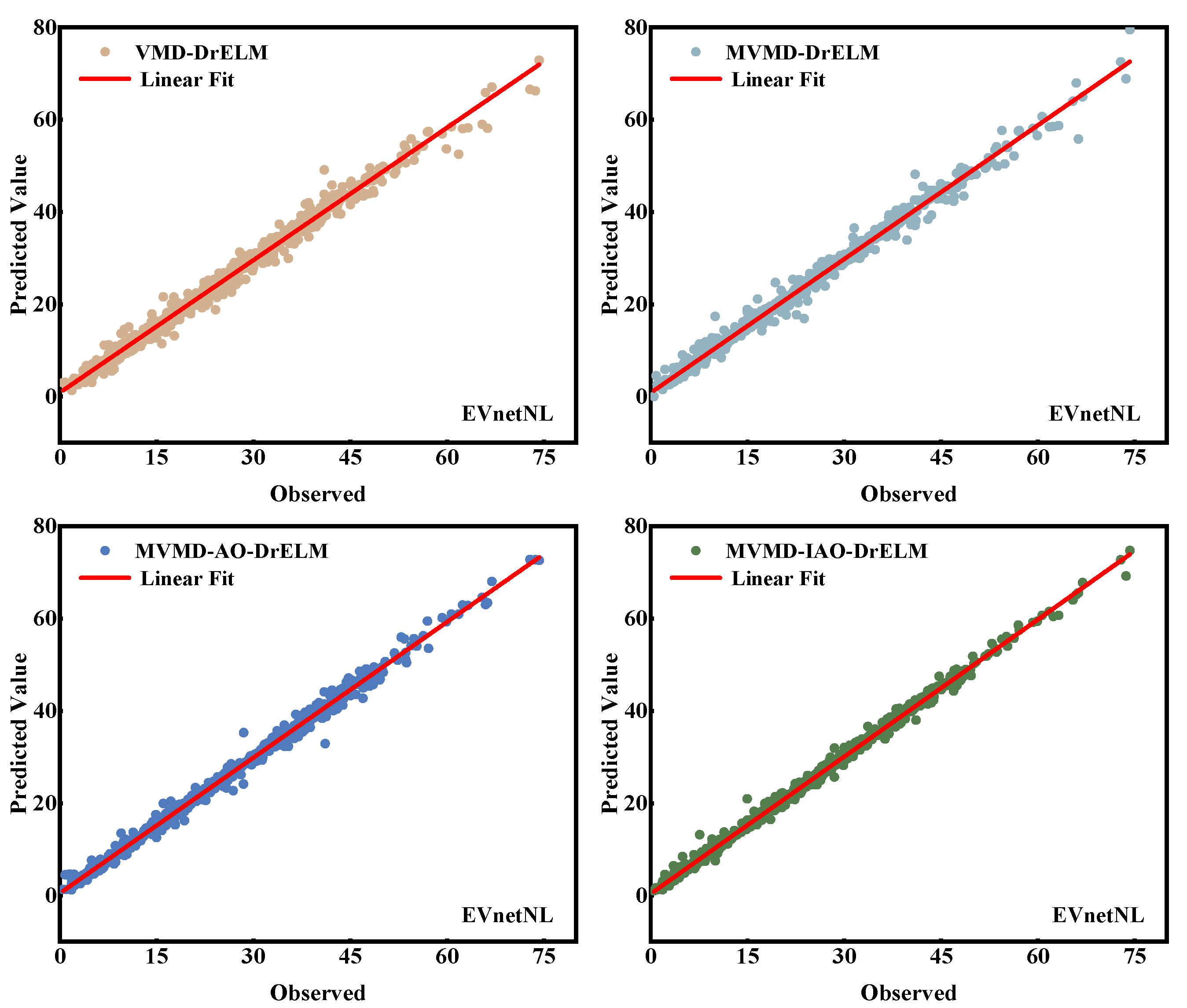

5.3. Combinatorial Predictive Model Generalization Ability Test

6. Conclusions

- (1) Improving the accuracy and reliability of the model in the presence of missing data and noise interference.

- (2) Investigating how trained models can adapt quickly to new regions or charging station types with minimal additional training, thereby improving their generalization capabilities and practical utility.

- (3) Strengthening the spatiotemporal feature extraction capabilities of the model by integrating external conditions such as weather, public holidays, and traffic volume.

- (4) Integrating the model with energy management systems to holistically optimize the coordinated operation between EVs and the power grid.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Form |
|---|---|
| AO | Artemisinin Optimization Algorithm |
| CNN | Convolutional Neural Network |
| DrELM | Deep Representation Learning Extreme Learning Machine |
| EEMD | Ensemble Empirical Mode Decomposition |
| ELM | Extreme Learning Machine |
| EMD | Empirical Mode Decomposition |
| EMS | Energy Management System |
| EVs | Electric Vehicles |
| GRU | Gated Recurrent Unit |
| IAO | Improved Artemisinin Optimization Algorithm |
| LSSVM | Least Squares Support Vector Machine |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| MVMD | Multivariate Variational Mode Decomposition |
| R2 | Coefficient of Determination |
| RELM | Regularized Extreme Learning Machine |
| RF | Random Forest |
| RMSE | Root Mean Squared Error |
| RNN | Recurrent Neural Network |
| SVM | Support Vector Machine |
| TCN | Temporal Convolutional Network |
| VMD | Variational Mode Decomposition |
| XGBoost | Extreme Gradient Boosting |

Nomenclature

| Symbol | Description |
|---|---|
| | Original time-series charging load data |
| | Final predicted charging load |
| | Decomposed modal component from MVMD |
| | Modal component |
| | Central frequencies |
| | Total number of decomposed modes |
| | Partial derivative operation with respect to time |
| | Penalty factor |
| | Lagrangian multiplier |
| | Size of the population |
| | Dimension |
| | Upper and lower bounds |
| | Probabilistic coefficient |
| | Drug concentration factor |
| | th iteration |
| | Maximum number of iterations |
| | Random and mutually exclusive indices |
| | Normalized fitness value |
| | Current optimal solution |
| | Number of ELM layers included in the model |
| | Hidden layer nodes |
| | Input weight matrix |
| | Hidden layer output |
| | Output weight vector |
| | Target value matrix |
| | Prediction results in the th layer |
| | Kernel function |
| | Weighting parameter |
| | Parameter controlling the upper and lower bounds of the weights |
| | Prediction function |
| | Total number of sample data |
| | True value |
| | Average value of the true value |
| | Predicted value |


| Models | MSE | RMSE | MAE | R2 (%) |
|---|---|---|---|---|
| BP | 33.2369 | 5.7651 | 4.5577 | 82.52 |
| LSTM | 27.1997 | 5.2153 | 4.1614 | 85.87 |
| ELM | 26.5322 | 5.1509 | 4.0213 | 86.05 |
| GRU | 26.9878 | 5.1950 | 4.0671 | 85.91 |
| Transformer | 24.5070 | 4.9505 | 3.9446 | 87.14 |
| DrELM | 20.1206 | 4.4856 | 3.6319 | 89.43 |
| EMD-DrELM | 18.0196 | 4.2449 | 3.3955 | 90.54 |
| VMD-DrELM | 17.5789 | 4.1927 | 3.3212 | 90.76 |
| MVMD-DrELM | 15.6927 | 3.9614 | 3.1604 | 91.79 |

| Models | MSE | RMSE | MAE | R2 (%) |
|---|---|---|---|---|
| CNN | 32.8351 | 5.7302 | 4.6190 | 83.57 |
| LSSVM | 29.5549 | 5.4364 | 4.3112 | 85.02 |
| ELM | 26.5322 | 5.1509 | 4.0213 | 86.05 |
| RELM | 23.0818 | 4.8044 | 3.8071 | 87.93 |
| DrELM | 20.1206 | 4.4856 | 3.6319 | 89.43 |
| VMD-DrELM | 17.5789 | 4.1927 | 3.3212 | 90.76 |
| MVMD-DrELM | 15.6927 | 3.9614 | 3.1604 | 91.79 |
| MVMD-AO-DrELM | 14.5710 | 3.8172 | 3.0494 | 92.35 |
| MVMD-IAO-DrELM | 12.7700 | 3.5735 | 2.8405 | 93.29 |

| Name | Models |
|---|---|
| Model 1 | CNN |
| Model 2 | LSSVM |
| Model 3 | ELM |
| Model 4 | RELM |
| Model 5 | DrELM |
| Model 6 | VMD-DrELM |
| Model 7 | MVMD-DrELM |
| Model 8 | MVMD-AO-DrELM |
| Model 9 | MVMD-IAO-DrELM |

| Models | MSE | RMSE | MAE | R2 (%) |
|---|---|---|---|---|
| CNN | 5.9688 | 2.4431 | 1.7932 | 97.53 |
| LSSVM | 5.6400 | 2.3749 | 1.6236 | 97.66 |
| ELM | 4.6047 | 2.1458 | 1.6157 | 98.09 |
| RELM | 4.5140 | 2.1246 | 1.5995 | 98.13 |
| DrELM | 4.1381 | 2.0342 | 1.4372 | 98.29 |
| VMD-DrELM | 3.4728 | 1.8635 | 1.4006 | 98.56 |
| MVMD-DrELM | 3.1582 | 1.7771 | 1.3523 | 98.69 |
| MVMD-AO-DrELM | 2.8724 | 1.6948 | 1.2919 | 98.81 |
| MVMD-IAO-DrELM | 2.1917 | 1.4805 | 1.1209 | 99.09 |

| Models | MSE | RMSE | MAE | R2 (%) |
|---|---|---|---|---|
| CNN | 6.7531 | 2.5987 | 1.9900 | 96.59 |
| LSSVM | 6.4075 | 2.5313 | 1.3411 | 96.75 |
| ELM | 5.4273 | 2.3296 | 1.4646 | 97.25 |
| RELM | 4.1482 | 2.0367 | 1.4381 | 97.89 |
| DrELM | 3.8171 | 1.9538 | 1.2935 | 98.06 |
| VMD-DrELM | 2.4338 | 1.5601 | 1.0671 | 98.76 |
| MVMD-DrELM | 1.9749 | 1.4053 | 0.8919 | 99.00 |
| MVMD-AO-DrELM | 1.1682 | 1.0808 | 0.7311 | 99.41 |
| MVMD-IAO-DrELM | 0.7346 | 0.8571 | 0.5801 | 99.63 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhong, A.; Li, H.; Tang, Z.; Zhang, Z. Enhanced Deep Representation Learning Extreme Learning Machines for EV Charging Load Forecasting by Improved Artemisinin Optimization and Multivariate Variational Mode Decomposition. Energies 2025, 18, 6061. https://doi.org/10.3390/en18226061
