Abstract

The early stage of architectural design plays a decisive role in determining building energy performance, yet conventional evaluation is typically deferred to later phases, restricting timely and data-informed feedback. This paper proposes EnergAI, a generative design framework that incorporates energy optimization objectives directly into the scheme generation process through large language models (e.g., GPT-4o, DeepSeek-V3.1-Think, Qwen-Max, and Gemini-2.5 pro). A dedicated dataset, LowEnergy-FormNet, comprising 2160 cases with site parameters, massing descriptors, and simulation outputs, was constructed to model site, form, and energy relationships. The framework encodes building massing into a parametric vector representation and employs hierarchical prompt strategies to establish a closed-loop compatibility with ClimateStudio. Experimental evaluations demonstrate that geometry-oriented and fuzzy-goal prompts achieve average annual reductions of approximately 16–17% in energy use intensity and 3–4% in energy cost compared with human designs, while performance-oriented structured prompts deliver the most reliable improvements, eliminating high-energy outliers and yielding an average EUI-saving rate above 50%. In cross-model comparisons under an identical toolchain, GPT-4o delivered the strongest and most stable optimization, achieving 63.3% mean EUI savings, nearly 13% higher than DeepSeek-V3.1-Think, Qwen-Max, and Gemini-2.5 baselines. These results demonstrate the feasibility and indicate the potential robustness of embedding performance constraints at the generation stage, providing a feasible approach to support proactive, data-informed early design.

1. Introduction

The early stage of architectural design is widely regarded as a core phase with a far-reaching impact on the final scheme. It is not only the period richest in design ideation and freest in spatial exploration, but also a critical window for determining a building’s energy consumption level and overall performance [1,2,3]. Yet in current practice, energy simulation is commonly deferred: performance assessment is often postponed until the middle or late design phases, or even the construction-document stage. This back-loaded control paradigm forces early design to rely primarily on experience or heuristic “rules,” thereby depriving the process of timely, data-driven feedback on energy performance [4,5,6,7]. Consistent with this view, reviews and multi-country surveys show Building Energy Simulation (BEM) is rarely embedded at the concept stage—used later mainly for validation—due to time costs, rapid design churn, and limited resources/skills; Østergård et al. (2016) highlighted that simulation tools, though valuable for early-stage decision-making, are often too complex for conceptual workflows [8]. Soebarto et al. (2015) similarly found that many architects consider BEM useful but too time-consuming to apply within the creative design process [9]. Similar patterns appear in UK practice and architect surveys, which cite time/budget pressure, weak interoperability/usability, and unclear roles as persistent barriers to early-stage modeling [10,11]. Two salient consequences follow. First, low-energy strategies remain fundamentally disconnected from the generative logic of architectural massing and spatial articulation, relegated instead to piecemeal retrofitting measures. These include localized post-occupancy adaptations and incremental equipment upgrades that yield only marginal performance gains through reactive optimization [12,13]. 
Second, once massing decisions are fixed early on, later energy optimization incurs higher design and construction costs, may compromise spatial quality and aesthetic intent, and typically yields limited returns [14,15]. Therefore, advancing sustainable architecture requires integrating real-time energy feedback into early design, enabling a proactive, design-driven approach to energy optimization rather than passive remediation [16,17].

With the rise of artificial intelligence, traditional design workflows are being progressively reconfigured. Generative design and performance-driven optimization methods have rapidly evolved into important instruments for early-stage exploration [18]. These approaches can rapidly produce diverse building forms. For example, Generative Adversarial Networks (GANs) have been widely used for façade morphology and style, massing layout, and early concept generation [19]. Variational Autoencoders (VAEs) have shown strong performance in residential plan generation and reconstruction [20]. However, despite their expressive power, most AI-driven form generators remain geometry-oriented: their objectives center on spatial efficiency or morphological diversity, with limited attention to performance constraints such as energy use or environmental impact [21,22,23]. In existing workflows, coupling Grasshopper with EnergyPlus enables energy-aware optimization, but each morphological change still requires re-simulation, delaying creative feedback [24]. Moreover, current datasets and generative models often emphasize façade or volumetric variety but lack explicit associations between building form and energy use, limiting their applicability to performance-driven design [25,26]. This reveals a persistent gap between form-making and energy reasoning during early-stage exploration [27,28,29]. For clarity, the full list of abbreviations used throughout this paper is provided in Appendix A (Table A1).

With the emergence of large language models (LLMs), generative design has entered a new phase that extends beyond geometric manipulation to semantic understanding. LLMs have achieved remarkable cross-domain generalization in fields such as autonomous driving, medical imaging, and social media [30,31,32,33]. For example, LLM4Drive enhances perception–reasoning–decision pipelines in autonomous systems [34,35]; in radiology, LLMs support image segmentation, interpretation, and automated report generation [36]; and in social-media contexts, SoMeLVLM achieves robust colloquial text–image fusion on large-scale corpora [37]. These examples demonstrate LLMs’ potential for multimodal understanding and contextual reasoning [38]. In architecture, however, LLM applications remain in early exploration, focusing primarily on natural-language-driven parametric modeling, BIM semantic parsing, and automated report generation [39,40]. Representative works such as ArchiLens and SAAF illustrate how LLMs can quantify architectural styles and enable façade segmentation for downstream parametric editing [41,42]. Yet the closed-loop process integrating geometry generation, performance evaluation, constraint solving, and re-generation remains underdeveloped, with limited exploration of synchronization between massing generation and energy optimization [43].

While LLMs open new avenues for semantic reasoning in design, energy evaluation continues to depend on traditional simulation frameworks, with Building Energy Simulation (BEM) serving as the cornerstone for assessing and optimizing building performance throughout the design life cycle [44,45]. Tools such as EnergyPlus, developed by the U.S. Department of Energy, provide dynamic, time-series analysis with high accuracy and are widely adopted as benchmarks in both research and practice [46]. For early design stages, integrated environments such as Ladybug Tools and Honeybee embed climate, daylighting, and solar-access analyses within Grasshopper, enabling rapid iterations and visual feedback [47,48]. However, these workflows still suffer from deferred assessment, high modeling complexity, and limited interoperability between BIM and BEM systems [49]. Manual geometry simplification, repeated parameter reconstruction, and slow data exchange continue to constrain their applicability in early conceptual phases. Moreover, as Royapoor et al. noted, the calibration of whole-building models often involves multi-metric and long-horizon acceptance criteria, further increasing comprehension and labor costs [50]. Consequently, even though simulation tools provide quantitative rigor, their lack of real-time feedback hampers proactive decision-making.

In response to the delayed feedback and fragmented workflows of conventional BEM-based evaluation, Performance-Driven Design (PDD) has emerged as a methodological framework that seeks to align architectural form-making with quantifiable objectives—such as energy efficiency, comfort, or daylight performance—through iterative simulation, trade-off analysis, and multi-objective optimization [51]. Yet mainstream implementations still rely on parametric modeling and evolutionary algorithms that, while effective optimizers, underrepresent architectural semantics and creative intent, and inherit the same feedback delays as conventional BEM workflows [52,53]. Rule-based generators similarly struggle to generalize across complex contexts or capture higher-level design logic [54,55,56]. Recent studies have begun to extend PDD with LLMs, enabling unified reasoning across semantics, functions, and performance metrics [57,58,59]. For instance, LLMs can extract spatial and quantitative constraints from natural-language briefs, generate parametric models, and interpret simulation outputs for design refinement [31]; FloorPlan-DeepSeek employs vectorized autoregressive “next-room prediction” to synthesize plans based on programmatic constraints [60]; and UrbanSense demonstrates how LLMs can translate urban performance metrics into interpretable textual feedback [61]. Nevertheless, current LLM-driven PDD frameworks lack an integrated dataset–semantics–performance coupling, particularly at the early massing stage, where energy objectives should guide geometric reasoning [62,63,64,65,66].

Building on the above gaps, this study proposes EnergAI, an AI-driven generative design framework that integrates energy optimization objectives into the initial stage of massing generation. The framework investigates the potential of large language models (LLMs) to guide early-stage architectural form generation through combined semantic reasoning and quantitative energy feedback, as well as the extent to which LLMs can approximate or enhance energy performance during conceptual design.

To address these questions, this study accomplishes two main tasks as follows:

(1) Dataset. The LowEnergy-FormNet dataset, a 2160-case dataset pairing site parameters (e.g., climate zone, orientation, and massing ratios) with simulation outputs (site EUI and energy cost) to model site–form–energy links.

(2) Framework. EnergAI, a large language model (LLM)-centered, early-stage performance-driven framework that generates low-energy massing from site constraints, aligning façade and energy performance.

Through these methodological advances, EnergAI aims to respond to the methodological and stage-level limitations observed in previous studies, which have largely focused on post-design analysis or localized optimization. By incorporating energy reasoning into the generative stage, the proposed framework seeks to contribute to a more integrated understanding between form generation and performance evaluation, providing a potential pathway toward coupling semantic reasoning with quantitative assessment in early architectural design.

2. Datasets

To investigate the relationships among site conditions, building form, and energy consumption, we developed a dedicated dataset called LowEnergy-FormNet. In this context, each “design scheme” corresponds to a unique building massing design (i.e., an architectural form with specific geometric and façade parameters) prepared for energy simulation analysis. The LowEnergy-FormNet dataset encompasses a total of 2160 building design schemes, covering both human-generated and AI-assisted examples. Specifically, 960 schemes were manually created by a group of 40 professional architects, who leveraged their expertise to refine each building’s shape and features for lower energy use. In parallel, another 1200 schemes were produced via a large language model (LLM)-assisted workflow, in which a separate cohort of 40 designers guided an LLM to generate building forms through prompt engineering. These AI-driven schemes span three distinct prompt strategy categories: (1) geometry-oriented prompts that explicitly instruct form modifications (e.g., adjusting proportions or orientation), (2) fuzzy-goal prompts that express broad energy-saving objectives without specifying actions, and (3) performance-oriented prompts that directly target specific energy performance metrics. The hierarchical structure and functional mechanism of the three types of prompts are detailed in Section 3.2. Throughout the LLM generation process, human designers played an active supervisory role, formulating the initial prompts, iteratively adjusting them, and filtering the LLM’s outputs, to ensure the resulting designs were feasible and aligned with the energy optimization goals.

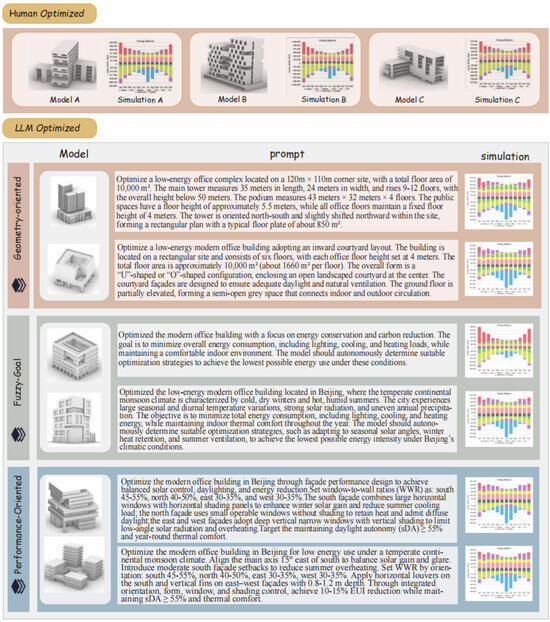

All design schemes in LowEnergy-FormNet are richly annotated with their input parameters and simulation outputs. Each entry records the relevant design parameters (such as climate zone, site context, building orientation, footprint dimensions, height, volumetric ratios, window-to-wall ratios, and shading depths) along with the resulting energy performance metrics (including annual site EUI and energy cost). For the LLM-generated subset, the complete text prompt used to generate each scheme is also preserved, providing full transparency into the AI’s guidance instructions. This comprehensive labeling enables detailed analysis of how variations in form or prompt strategy impact energy outcomes. Figure 1 shows two subsets of human design and human-regulated LLM design in the dataset, along with their corresponding annotations.

Figure 1. Examples of design schemes and corresponding annotations in the dataset.
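The annotation scheme described above can be pictured as one record per design scheme. The following is a minimal sketch, not the dataset's actual schema: the class name `SchemeRecord`, all field names, and the example prompt text are hypothetical, and only the kinds of information stored (design parameters, annual site EUI, energy cost, and the preserved prompt for LLM-generated schemes) come from the description in this section. The EUI and cost values in the example are the human-design averages reported in Section 4.1.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class SchemeRecord:
    """One annotated design scheme (hypothetical field names)."""
    scheme_id: str
    source: str                       # "human" or "llm"
    design_params: Dict[str, float]   # e.g. orientation, footprint, height, WWR
    eui_kwh_m2: float                 # annual site EUI
    energy_cost_usd_m2: float         # annual energy cost
    prompt_strategy: Optional[str] = None  # "geometry", "fuzzy", or "performance"
    prompt_text: Optional[str] = None      # full prompt, kept for LLM schemes

    def __post_init__(self) -> None:
        if self.source not in ("human", "llm"):
            raise ValueError(f"unknown source: {self.source}")
        # The text states that LLM-generated schemes preserve their prompt.
        if self.source == "llm" and not self.prompt_text:
            raise ValueError("LLM-generated records must keep their prompt")


human_example = SchemeRecord(
    scheme_id="H-0001",
    source="human",
    design_params={"L": 50.0, "W": 25.0, "H": 32.0, "R": 0.0},
    eui_kwh_m2=441.0,
    energy_cost_usd_m2=54.8,
)
llm_example = SchemeRecord(
    scheme_id="G-0001",
    source="llm",
    design_params={"L": 50.0, "W": 25.0, "H": 32.0, "R": 15.0},
    eui_kwh_m2=363.6,
    energy_cost_usd_m2=52.7,
    prompt_strategy="geometry",
    prompt_text="Rotate the massing by 15 degrees.",
)
```

Keeping the prompt on the record itself is what allows prompt strategy and energy outcome to be analyzed together, as the section describes.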

3. Methodology

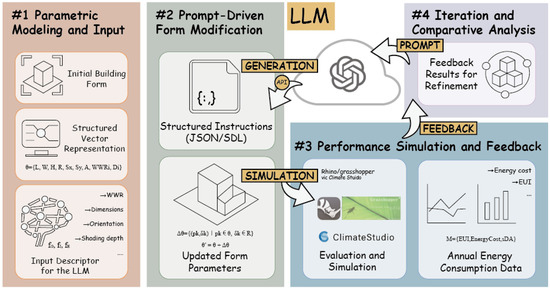

In this section, we propose the EnergAI early-stage performance optimization framework based on the LowEnergy-FormNet dataset. Centered on large language models (LLMs), this framework redefines the logic of performance-driven design in the early stages of architectural development. Specifically, the initial building form is first parameterized and transformed into a structured vector input, enabling effective parsing and reasoning within the semantic space of the LLM. Guided by prompt engineering, the LLM generates formalized modification instructions while preserving the original design intent, thereby achieving a cross-modal mapping from semantics to geometry. Subsequently, the building form is evaluated through a performance simulation engine that conducts multidimensional environmental-energy calculations. The resulting key performance indicators are then fed back into the LLM, forming a closed-loop iterative mechanism. Figure 2 shows the workflow.

Figure 2. EnergAI closed-loop iterative workflow of parametric input, LLM prompting, performance simulation, and semantic feedback.

3.1. Parametric Input of Building Form

In this study, parametric modeling of the building form serves not only as the starting point of the experiment, but also as the intermediary representation through which the large language model (LLM) can interpret and manipulate building geometry. To ensure experimental controllability and reproducibility, the baseline building form was first transformed into a parametric representation, with key geometric and constructional features defined in a vectorized structure.

Specifically, the building form can be represented as a parameter vector:

θ = {L, W, H, R, Sx, Sy, A, WWRi, Di}

where L, W, and H denote the length, width, and height of the massing, respectively; R denotes the overall orientation rotation angle; Sx and Sy represent the setback depths along the X and Y directions; A denotes the atrium ratio; WWRi indicates the window-to-wall ratio for each façade orientation (north, east, south, and west); and Di represents the depth of shading components for the corresponding façades.

To guarantee the feasibility of geometry and energy simulation, the parameter vector must satisfy a set of constraints:

θ ∈ Ω, Ω = {θ ∣ gj(θ) ≤ 0, j = 1, …, m}

Here, Ω represents the feasible design space, and the constraint functions gj(θ) encompass regulatory boundaries (e.g., minimum window-to-wall ratio and net height limits) and geometric validity (e.g., non-self-intersection and minimum shape coefficient), as well as experimental settings (e.g., allowable deviation of total floor area within ±10%). To improve the transparency and reproducibility of the constraint formulation, the numerical ranges and regulatory references of each parameter are summarized in Table 1. These limits define the feasible design space Ω in Equation (2), ensuring that the generated building forms comply with the energy efficiency and building design standards applicable to cold-climate regions in northern China (Beijing, ASHRAE Zone 4).

Table 1. Parameter ranges and corresponding regulatory or empirical references defining the feasible constraint space Ω (Beijing, ASHRAE Zone 4).

These parameter ranges are derived from both official building codes (GB 50176-2016 [74], GB 50189-2015 [67], GB 50352-2019 [70], GB 55015-2021 [73], and GB/T 50378-2019 [69]), empirical design data summarized in the Architectural Design Data Collection (3rd edition) (Vol. I and Vol. III) [68,72], and Beijing Urban Planning and Design Control Regulations (2020) [71]. Together they define a regulation-compliant and professionally validated constraint space for generative office-building design in cold climate regions.
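The parameter vector θ and the feasibility test θ ∈ Ω can be illustrated with a short sketch. This is an illustration under stated assumptions rather than the framework's implementation: the class `MassingParams`, the floor-area formula, and the window-to-wall ratio bounds `wwr_min` and `wwr_max` are hypothetical placeholders (the actual ranges are those of Table 1), while the 50 m height limit, the 4 m floor-to-floor height, and the 10,000 m² ±10% floor-area target are taken from the design brief in Section 3.5.

```python
from dataclasses import dataclass, replace
from typing import Callable, Dict, List


@dataclass
class MassingParams:
    L: float               # length (m)
    W: float               # width (m)
    H: float               # height (m)
    R: float               # orientation rotation angle (deg)
    Sx: float              # setback depth along X (m)
    Sy: float              # setback depth along Y (m)
    A: float               # atrium ratio (0..1)
    WWR: Dict[str, float]  # window-to-wall ratio per facade: N, E, S, W
    D: Dict[str, float]    # shading depth per facade (m)


# Each constraint g_j returns a value <= 0 when it is satisfied.
Constraint = Callable[[MassingParams], float]


def gross_floor_area(p: MassingParams, floor_height: float = 4.0) -> float:
    # Assumed formula: footprint minus atrium void, times number of floors.
    floors = int(p.H // floor_height)
    return p.L * p.W * (1.0 - p.A) * floors


def make_constraints(target_area: float = 10_000.0, tol: float = 0.10,
                     max_height: float = 50.0,
                     wwr_min: float = 0.20,   # hypothetical bound
                     wwr_max: float = 0.70,   # hypothetical bound
                     ) -> List[Constraint]:
    return [
        lambda p: p.H - max_height,
        lambda p: abs(gross_floor_area(p) - target_area) - tol * target_area,
        lambda p: wwr_min - min(p.WWR.values()),
        lambda p: max(p.WWR.values()) - wwr_max,
    ]


def is_feasible(p: MassingParams, constraints: List[Constraint]) -> bool:
    """theta is in Omega when every g_j(theta) <= 0."""
    return all(g(p) <= 0 for g in constraints)


constraints = make_constraints()
baseline = MassingParams(
    L=50.0, W=25.0, H=32.0, R=0.0, Sx=0.0, Sy=0.0, A=0.0,
    WWR={"N": 0.4, "E": 0.4, "S": 0.4, "W": 0.4},
    D={"N": 0.5, "E": 0.5, "S": 0.5, "W": 0.5},
)
too_tall = replace(baseline, H=60.0)  # violates the height constraint
```

Writing every rule as a function with the convention gj(θ) ≤ 0 keeps regulatory, geometric, and experimental limits in one list, so a candidate form is accepted only if all of them hold.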

Accordingly, the input to the LLM consists of two parts: the numerical parameter vector θ and the textual description d(θ) of the form, expressed as follows:

I = {θ, d(θ)}

The task of the LLM is to generate a structured set of modifications Δθ based on the input I, formulated as follows:

Δθ = {(pk, δk) ∣ pk ∈ θ, δk ∈ ℝ}

where pk represents the parameter to be modified, and δk denotes the magnitude of the modification. The updated building form parameters can then be expressed as follows:

θ′ = θ + Δθ

The resulting output is encoded in JSON/DSL format, enabling direct transfer into the Rhino/Grasshopper environment for subsequent performance simulation using ClimateStudio (https://www.solemma.com/climatestudio, accessed on 2 November 2025).
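As an illustration of the update θ′ = θ + Δθ, the sketch below parses a JSON-encoded modification set and applies it to a flat parameter dictionary. The JSON layout (a `modifications` list with `param` and `delta` keys) and the flattened key names such as `WWR_S` are assumptions made for this example; the source states only that the output is encoded in JSON/DSL format.

```python
import json
from typing import Dict


def apply_modifications(theta: Dict[str, float], delta_json: str) -> Dict[str, float]:
    """Return theta' = theta + delta without mutating the input."""
    delta = json.loads(delta_json)
    updated = dict(theta)
    for item in delta["modifications"]:
        name = item["param"]
        step = float(item["delta"])
        if name not in updated:
            # Reject parameters that are not part of theta.
            raise KeyError(f"unknown parameter: {name}")
        updated[name] = updated[name] + step
    return updated


theta = {"L": 50.0, "W": 25.0, "H": 32.0, "R": 0.0, "Sx": 0.0, "Sy": 0.0,
         "A": 0.0, "WWR_S": 0.40, "D_S": 0.5}

# Hypothetical LLM output: rotate by 15 degrees, deepen the south shading.
llm_output = """
{"modifications": [
    {"param": "R", "delta": 15},
    {"param": "D_S", "delta": 0.25}
]}
"""

theta_new = apply_modifications(theta, llm_output)
```

Rejecting unknown parameter names at this step is one simple way to catch malformed model output before it reaches the geometry model.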

3.2. Hierarchical Design of Prompt Strategies

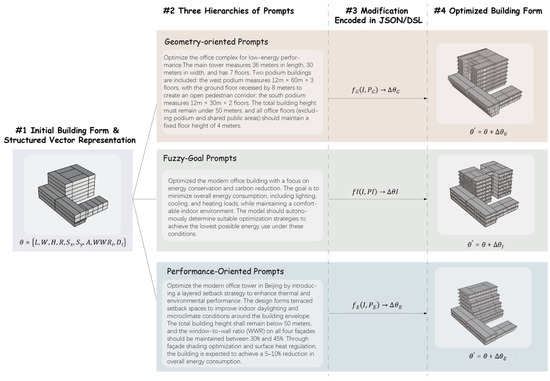

To systematically examine the role of prompt engineering in guiding large language models (LLMs) for performance-driven design optimization, this study develops a hierarchical framework of prompt strategies. The framework is designed to span different levels, ranging from geometry-oriented to performance-oriented prompts, thereby revealing the behavioral variations in LLMs under different cognitive constraints. A prompt can be abstracted as a mapping function:

f: I × P → Δθ

Here, I denotes the input information set (including the parametric form vector, textual description, and geometric illustration), P represents the set of prompt strategies, and Δθ refers to the parameter modification set generated by the LLM.

Different prompt strategies essentially constrain the mapping f in terms of its boundary conditions and the richness of information provided. Figure 3 shows the working mechanisms of the three prompt strategies. Detailed examples and formatting of the hierarchical prompt strategies can be found in Appendix B (Table A2).

Figure 3. Hierarchical structure of prompt strategies.

3.2.1. Geometry-Oriented Prompts

The core characteristic of geometry-oriented prompts lies in constraining form operations through explicit instructions, such as adjusting massing proportions, altering the window-to-wall ratio, or modifying shading depth. Such prompts emphasize direct control over specific geometric actions and facilitate the examination of whether an LLM can establish implicit logical connections between geometric modifications and energy performance outcomes. The corresponding mapping can be expressed as follows:

fG (I, PG) → ΔθG, ΔθG ⊆ {L, W, H, R, Sx, Sy}

Here, PG refers to geometry-related prompts, such as “rotate the massing by 15°” or “reduce the south façade window-to-wall ratio.” Such prompts highlight operational directness, but do not necessarily guarantee improvements in performance objectives.

3.2.2. Fuzzy-Goal Prompts

Fuzzy-goal prompts specify only an overarching performance improvement objective (e.g., “reduce annual energy consumption” or “decrease cooling load”), without prescribing explicit design interventions. At this level, the LLM is required to autonomously select optimization pathways, thereby demonstrating its implicit understanding and reasoning capacity with respect to performance objectives. The corresponding mapping can be formally expressed as follows:

fI(I, PI) → ΔθI, where PI = {Objective: minimize EUI}

At this stage, the LLM must autonomously determine modification pathways within the full parameter space θ:

ΔθI ⊆ θ

Such prompts are designed to test whether the LLM possesses the capability to translate performance objectives into reasonable design actions in the absence of explicit geometric constraints.

3.2.3. Performance-Oriented Prompts

Performance-oriented prompts not only specify explicit energy consumption objectives but also further delineate actionable design dimensions—for instance, reducing EUI through adjustments to building form, orientation, or shading. Such prompts couple performance objectives with corresponding design interventions, thereby minimizing uncertainty in the model’s output. The corresponding mapping can be expressed as follows:

fE(I, PE) → ΔθE, ΔθE ⊆ {WWRi, Di, R, Sx, Sy}

A typical example of PE is the following: “By adjusting orientation and shading, reduce annual cooling load by 10% while ensuring sDA ≥ 55%.” This type of prompt establishes explicit logical constraints between performance objectives and corresponding design operations. Through the above classification, this study establishes a hierarchical structure of prompt strategies, ranging from “operational intuition (geometry-oriented),” to “goal reasoning (fuzzy-goal),” and finally to “performance-design coupled reasoning (performance-oriented).” The three mapping functions, fG, fI, and fE, together constitute the distinct reasoning modes that an LLM can exhibit in design optimization tasks:

fG: To examine whether the LLM can reveal implicit energy-saving potential under explicit geometric operations;

fI: To evaluate whether the LLM can autonomously select effective design strategies when driven purely by performance objectives;

fE: To assess whether the LLM, under dual constraints of explicit objectives and specified design dimensions, can approximate the performance-optimal solution.

This mathematical formalization not only helps to delineate the experimental boundaries of different prompt strategies but also provides a unified framework for subsequent performance comparisons and sensitivity analyses.
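The three mappings differ mainly in which parameters a modification set is allowed to touch, which can be sketched as a simple membership check. The subsets below follow the mapping expressions given for fG, fI, and fE; the flattened per-façade key names (e.g., `WWR_S`, `D_N`), the strategy labels, and the function `out_of_scope` are hypothetical conveniences for this example.

```python
from typing import Dict, List, Set, Tuple

ORIENTATIONS = ("N", "E", "S", "W")

GEOMETRY: Set[str] = {"L", "W", "H", "R", "Sx", "Sy"}
FACADE: Set[str] = ({f"WWR_{o}" for o in ORIENTATIONS}
                    | {f"D_{o}" for o in ORIENTATIONS})
FULL_SPACE: Set[str] = GEOMETRY | {"A"} | FACADE

# Allowed parameter subsets per strategy, following f_G, f_I, and f_E.
ALLOWED: Dict[str, Set[str]] = {
    "geometry": GEOMETRY,                          # delta_G
    "fuzzy": FULL_SPACE,                           # delta_I: full theta
    "performance": FACADE | {"R", "Sx", "Sy"},     # delta_E
}

Modification = Tuple[str, float]  # (p_k, delta_k)


def out_of_scope(delta: List[Modification], strategy: str) -> List[str]:
    """List parameters in delta that the given strategy does not permit."""
    allowed = ALLOWED[strategy]
    return sorted(name for name, _ in delta if name not in allowed)


delta = [("R", 15.0), ("WWR_S", -0.1)]
```

For this example, `out_of_scope(delta, "geometry")` flags `WWR_S`, while the same modification set is fully in scope under the fuzzy-goal and performance-oriented strategies.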

3.3. Configuration of ClimateStudio

To ensure the scientific validity and comparability of the energy consumption and daylighting simulation results, this study employs ClimateStudio within the Rhino/Grasshopper environment as the performance evaluation engine, with rigorous standardization applied to its input parameters and operating conditions. First, the climatic boundary conditions are based on Typical Meteorological Year (TMYx 2004–2018) datasets, with the Beijing Capital International Airport weather station (CHN_BJ_545110) selected as the representative climatic site. This weather file provides hourly climate inputs across the entire year, including temperature, solar radiation, humidity, and wind speed. According to the Köppen classification, the climate corresponds to “temperate continental climate with dry cold winters and hot summers (Dwa)”, and under ASHRAE standards it is categorized as a mixed climate zone (Zone 4). In Beijing, the annual average temperature is 13 °C, the annual total solar radiation is 1764 kWh/m², and the average wind speed is 3 m/s. These boundary conditions establish a stable and representative climatic foundation for the energy simulations.

Regarding operational parameters, indoor thermal environment control conditions were defined in accordance with ASHRAE 62.1 and 90.1 standards: a cooling design temperature of 35.3 °C (99.6% confidence level) and a heating design temperature of −11 °C (0.04% confidence level). Annual Cooling Degree Days (CDD, base 18 °C, 999) and Heating Degree Days (HDD, base 18 °C, 2752) are used to quantify the characteristics of cooling and heating loads. Building usage conditions reflect a typical office program, encompassing occupant density, lighting power density, equipment loads, and operational schedules, thereby ensuring consistency and controllability in performance comparisons across experimental cases.

At the modeling level, all building volumes are subdivided by floor, with each floor represented by one core zone and four perimeter zones and configured into two systems. Through the Grasshopper interface, parameter modifications generated by the LLM are automatically mapped onto updates of the building geometry, window-to-wall ratio (WWR), shading components, and adjacent boundary conditions (ground and insulated boundaries). These geometric and physical data are ultimately transmitted to ClimateStudio to generate three core performance metrics: annual energy use intensity (EUI), annual energy cost, and spatial daylight autonomy (sDA) on a daily basis.

Through the standardized configuration of climatic data, operational parameters, and simulation settings, this study achieves cross-case comparability and cross-iteration reproducibility under varying experimental conditions, thereby ensuring the rigor and academic reliability of the performance analysis results.

3.4. LLM-ClimateStudio Experimental Interface

To enable performance-oriented design driven by natural language prompts, this study establishes an experimental interface linking the output of the large language model (LLM) with the ClimateStudio simulation environment. The primary objective of this interface is to translate the parameter modification set Δθ generated by the LLM into executable parametric geometry updates in Grasshopper and subsequently invoke ClimateStudio within the Rhino/Grasshopper environment to perform energy and daylighting simulations.

In this workflow, the form parameter vector θ′, modified by the LLM, is mapped into a parametric geometry model G(θ′), which, together with the fixed set of external environmental conditions, E = {EPW, Schedule, Material}, forms the input set for performance simulation. The performance simulation function can be formally expressed as follows:

M = F(G(θ′), E)

Here, F(·) denotes the simulation operator of ClimateStudio, and the resulting performance indicator set is

M = {EUI, EnergyCost, sDA}

The implementation mechanism of the experimental interface consists of three steps: (1) the Δθ output of the LLM is parsed into JSON/DSL format by automated scripts and transmitted to Grasshopper parameter nodes; (2) the parametric geometry G(θ′) is updated in real time within the Rhino environment, ensuring that geometric and physical properties satisfy the constraint conditions θ′ ∈ Ω; (3) the updated geometry and environmental parameters E are then transmitted to ClimateStudio, which runs performance simulations and generates the indicator set M. The results obtained are not only stored as experimental data but also fed back to the LLM as signals to drive further iterations and refinements of the prompts.

Through this interface design, a closed-loop coupling between LLM semantic generation and building performance simulation is achieved. Natural language prompts can directly act upon the architectural parameter vector θ, enabling iterative adjustments of design schemes and providing a stable and comparable data foundation for subsequent experiments on prompt strategy comparisons and sensitivity analyses.
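The closed loop described in this section can be sketched with stand-in functions. This is a schematic illustration only: `propose` stands in for the LLM call and `simulate` for the ClimateStudio run, neither of which is invoked here, and the toy EUI formula, the integer WWR percentages, and the rule of keeping a candidate only when its EUI improves are assumptions introduced for the example, not the procedure reported in the paper.

```python
from typing import Callable, Dict, List, Tuple

Theta = Dict[str, float]
Metrics = Dict[str, float]             # e.g. {"EUI": ..., "EnergyCost": ..., "sDA": ...}
Delta = List[Tuple[str, float]]        # [(p_k, delta_k), ...]


def optimize(theta: Theta,
             propose: Callable[[Theta, Metrics], Delta],
             simulate: Callable[[Theta], Metrics],
             is_feasible: Callable[[Theta], bool],
             n_iter: int = 5) -> Tuple[Theta, Metrics, List[Metrics]]:
    best_theta, best_m = theta, simulate(theta)
    history = [best_m]
    for _ in range(n_iter):
        # Steps 1-2: parse the proposed delta and update the parameters.
        candidate = dict(best_theta)
        for name, step in propose(best_theta, best_m):
            candidate[name] = candidate[name] + step
        if not is_feasible(candidate):   # enforce theta' in Omega
            continue
        # Step 3: run the simulation and feed the metrics back.
        m = simulate(candidate)
        history.append(m)
        if m["EUI"] < best_m["EUI"]:
            best_theta, best_m = candidate, m
    return best_theta, best_m, history


# Toy stand-ins (hypothetical): EUI grows with south WWR, given in percent.
def toy_simulate(t: Theta) -> Metrics:
    return {"EUI": 400 + t["WWR_S"]}


def toy_propose(t: Theta, m: Metrics) -> Delta:
    return [("WWR_S", -5)]


def toy_feasible(t: Theta) -> bool:
    return t["WWR_S"] >= 20


best_theta, best_m, history = optimize(
    {"WWR_S": 40}, toy_propose, toy_simulate, toy_feasible)
```

Passing the proposal, simulation, and feasibility steps in as callables keeps the loop itself independent of any particular model or simulation engine.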

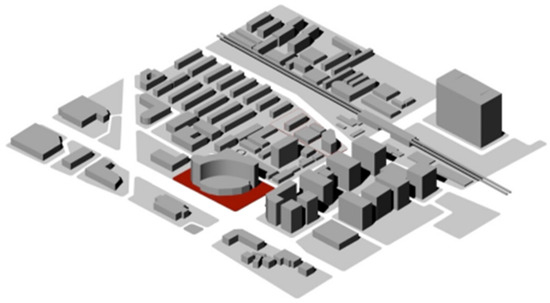

3.5. Case Study

The experimental design task was established in a real-world context with a clear goal of minimizing building energy consumption under given constraints. The site for the project is a flat 120 m × 120 m rectangular lot located at a busy intersection in Beijing’s Haidian District (as shown in Figure 4). Within this site and local regulatory limits, the challenge was to conceive an office building of approximately 10,000 m² total floor area (±10%), with an overall height below 50 m and a fixed 4 m floor-to-floor height. Critically, the brief prioritized energy efficiency: designers were tasked with creating a scheme that achieves the lowest possible operational energy use intensity (EUI) and energy cost, while still meeting the functional and spatial requirements of the office program.

Figure 4. Experimental site.

4. Experiment and Results

To pursue the low-energy design objective, the project was undertaken by two parallel teams of professionals using different methods. One team followed a conventional manual design process, relying on their architectural training and iterative refinement to improve the building’s performance. The other team employed the LLM-driven generative approach proposed in this study, leveraging a large language model to help explore energy-saving design alternatives through prompt engineering. In the LLM-guided workflow, the team implemented three levels of prompt strategies—(1) geometry-oriented prompts that suggested explicit geometric changes, (2) fuzzy-goal prompts that provided a general directive to reduce energy use, and (3) performance-oriented prompts that set specific numeric targets for improvement. Both teams ultimately produced a set of candidate schemes addressing the same design brief. Each scheme was then evaluated with a detailed energy simulation using ClimateStudio to obtain its annual EUI and energy cost. This allowed a direct quantitative comparison between the human-designed baseline and the LLM-generated designs under each prompt strategy. The following subsections present the performance results for each prompt category and analyze how each approach fared in reducing energy use compared to the manual design baseline.

4.1. Geometry-Oriented Prompt Generation Results

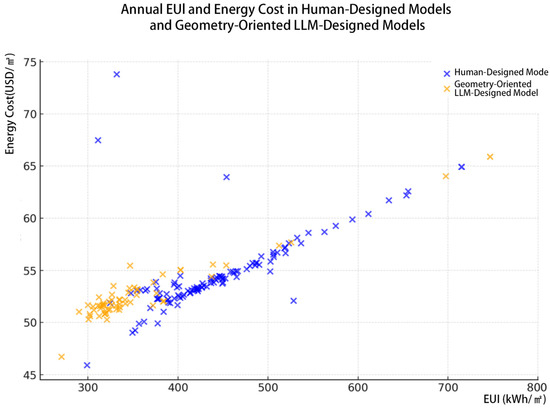

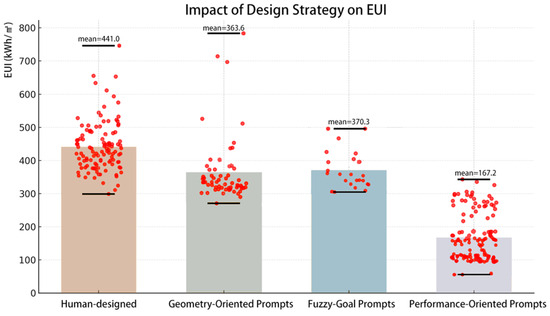

The models generated by the geometry-oriented prompts outperformed the human-designed models in overall energy performance: their average annual EUI was about 363.6 kWh/m², lower than the human models’ average of 441.0 kWh/m² (approximately a 17% reduction). Correspondingly, the average annual energy cost of the geometry-guided LLM schemes was about USD 52.7/m², also lower than the human models’ USD 54.8/m² (around a 4% reduction). This indicates that, with only geometric manipulation instructions, the LLM’s design schemes hold an advantage in average annual energy use intensity and cost over the manually designed schemes. Notably, the best-case LLM-generated model had an EUI of about 270.6 kWh/m², ~9.5% lower than the best human-designed case (~298.8 kWh/m²), showing that geometry-oriented generation can in some instances produce designs with lower energy use than human design. At the same time, the worst-case EUI of the LLM-generated models was about 783.6 kWh/m², well above the LLM average and slightly above the human models’ worst case (746.4 kWh/m²), though of the same order of magnitude. This suggests that the geometry-oriented prompt strategy does not by itself eliminate high-energy cases, even though the bulk of the generated schemes forms a more tightly clustered energy performance distribution than the human designs.

As shown in Figure 5, most scatter points for the geometry-oriented LLM-designed schemes lie in the lower-left region of the plot (EUI roughly 270–500 kWh/m2 and annual cost ~46–60 USD/m2), whereas the human schemes’ points are more dispersed. Human-designed EUIs mostly range from 300 to 600 kWh/m2 with a few high-energy outliers, and the upper limit of the annual cost reaches about 74 USD/m2. The energy costs of most human-designed schemes cluster in the low 50s (USD/m2), overlapping with those of the LLM schemes. Overall, the energy performance of the geometry-guided LLM schemes was more concentrated and tended to be better: the majority of LLM schemes had an EUI below the human average, and very few showed exceptionally high costs. Because the geometry prompts guided more reasonable massing modifications, the LLM-generated schemes achieved a degree of energy savings as a whole. However, since the prompt at this stage did not directly set an energy optimization goal, the energy-saving effect remained unstable across the LLM schemes: in a few cases, energy use did not decrease and even increased.

Figure 5. Scatter plot of annual EUI vs. annual energy cost for human-designed models and geometry-oriented LLM-designed models.

In summary, the first-stage results with geometry-oriented prompts show a trend of reduced average energy use compared to the manual designs, but the improvement margin is limited. This provides a reference for the subsequent use of refined prompts with explicit performance targets to further enhance energy optimization.

4.2. Fuzzy-Goal Prompt Generation Results

In the fuzzy-goal prompt experiment, the prompt only set a general performance improvement goal (e.g., “reduce annual energy consumption” or “reduce cooling load”) without specifying concrete design operations. This setup forced the LLM to autonomously choose an optimization path, thereby providing a more direct test of its implicit understanding and reasoning abilities regarding the energy-efficiency goal. The results show that fuzzy-goal prompts can still effectively guide the LLM to achieve energy optimization. Even without restricting geometric modifications, the energy metrics of LLM-generated schemes declined clearly compared to the manually designed models: their annual EUI averaged around 370.3 kWh/m2, well below the human models’ 441.0 kWh/m2 (approximately a 16% reduction); the mean annual energy cost was about USD 52.9/m2, also lower than the human models’ USD 54.8/m2 (around a 3% reduction). This further supports the feasibility and potential of large language models in early-stage building energy optimization. Compared to the human baseline, the LLM schemes under fuzzy goals show advantages in both annual EUI and energy cost. Furthermore, the fuzzy-goal prompts produced no clear counterexamples of worsened energy performance: energy use was reduced in the vast majority of schemes.

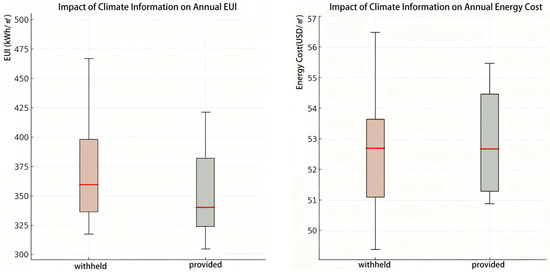

The experiment was further divided into two groups: in one group, the prompt explicitly provided detailed geographic and climate information of the project location (Beijing), and in the other group, no such information was given. As shown in Figure 6 and Table 2, providing this site-specific climate information had very little effect on the average energy-saving outcome: the difference in mean annual EUI and mean energy cost between the two groups was less than 1.5%. The group given Beijing’s climate conditions achieved an average EUI of about 373 kWh/m2, whereas the group without climate information had an average EUI of about 368 kWh/m2; both groups’ mean energy costs were around USD 53/m2, virtually the same. However, the distribution of results differed markedly between the two groups. When climate information was provided, the LLM achieved better performance in some schemes, with the lowest EUI dropping to about 305 kWh/m2, but that group’s worst result was also slightly higher than that of the “not informed” group, indicating that incorporating site climate knowledge caused some schemes to have higher energy use. Overall, providing climate information increased the variability of the outcomes: its solution space contained both excellent schemes with very low EUI and a few schemes that were somewhat worse than those of the other group, a dispersed pattern of “both better and worse.” This suggests that introducing climate knowledge enabled the LLM to explore more potential optimization paths tailored to the locale, but could also, through prompt interpretation biases, have led a minority of results away from the optimum. Despite the added uncertainty from the climate information, in both cases the large majority of schemes generated by fuzzy-goal prompts still outperformed the human baseline, and no obvious high-energy anomalies occurred.
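As a quick check of the “less than 1.5%” statement, the relative gap between the two group means can be worked out from the rounded EUI averages reported above. The overline notation and the subscripts are introduced here for illustration only.

```latex
\[
\frac{\left|\overline{\mathrm{EUI}}_{\text{climate}} - \overline{\mathrm{EUI}}_{\text{no climate}}\right|}
     {\overline{\mathrm{EUI}}_{\text{no climate}}}
= \frac{|373 - 368|}{368} \approx 0.014 = 1.4\%
\]
```

Because the inputs are rounded means, the result is only indicative; the exact figures are those listed in Table 2.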

Figure 6. Impact of climate information on EUI and energy cost.

Table 2. Comparison of EUI and energy cost between fuzzy-goal prompts with site climate information provided vs. withheld.

Overall, the fuzzy-goal prompt maintained a level of average energy savings comparable to that of the geometry-oriented prompt (about a 16% versus a 17% reduction in mean EUI), while also demonstrating strong flexibility in practical application.

4.3. Performance-Oriented Structured Prompt Generation Results

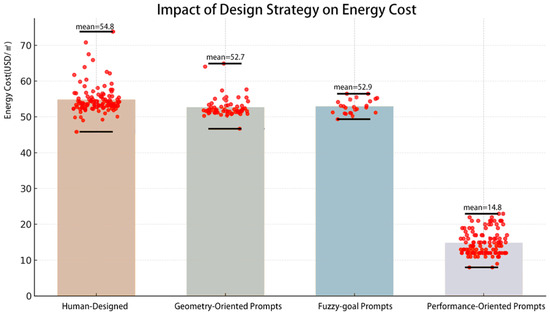

4.3.1. Overall Performance

As shown in Figure 7 and Figure 8, the performance-oriented structured prompt model performs best overall compared to the previous generation methods, with almost all of its schemes achieving drastically lower EUI and energy cost. The structured prompts cause the LLM-generated schemes’ EUI to decrease significantly, and the annual energy cost likewise drops substantially. Notably, both the human schemes and the LLM geometry-based schemes contained a few high-energy outliers, whereas the structured prompt schemes virtually eliminated any extremely high-energy designs, making the overall distribution more concentrated and demonstrating the robustness of our method. For example, the worst-case EUI of the human schemes was as high as 746 kWh/m2, and the worst geometry-based LLM scheme was about 784 kWh/m2, while among the performance-oriented LLM schemes, the highest EUI was only around 343 kWh/m2, and virtually no instances of increased energy use appeared. This shows that by directly embedding performance targets in the prompt, the LLM’s optimized schemes are far more reliable in terms of energy control—not only is the average level particularly low, but even the worst cases are effectively constrained.

Figure 7. Impact of design strategy on EUI and individual EUI data points.

Figure 8. Impact of design strategy on energy cost and individual energy cost data points.

4.3.2. Prompt Dimension Sensitivity

Before analyzing the sensitivity of each prompt dimension, we first examine the high-frequency terms across all prompt categories (Figure 9). This preliminary analysis helps to reveal the semantic structure of the prompt dataset and identify the design features most emphasized by the LLM during generation.

Figure 9. Word clouds of high-frequency terms in each prompt dimension.

By clustering the dataset of 800 natural language descriptions into four prompt dimensions (massing, orientation, fenestration, and shading) and noting for each performance-oriented structured prompt scheme whether it included (“Yes”) or did not include (“No”) a prompt related to that dimension, we can compute the EUI improvement rate for each case. This allows us to measure the relative contribution of each prompt dimension to energy savings. The word clouds in Figure 9 show the most frequently repeated terms within each cluster: massing is dominated by windows, WWR, and atrium; orientation by ventilation, south, and WWR; fenestration by WWR, different windows, and glazed curtain wall; and shading by WWR, deep overhang, and horizontal shading. Some recurring control elements, WWR in particular, thus emerge as key factors shared across the four prompt dimensions, frequently appearing in each and working together to drive down building energy use.
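The “Yes”/“No” labelling per dimension can be illustrated with a small sketch. Note that this swaps in simple keyword matching for the clustering used in the study, and that the keyword lists and the function name `tag_dimensions` are hypothetical, chosen only to echo a few terms named in the word clouds.

```python
from typing import Dict, List

# Hypothetical keyword lists (assumption): the study clusters descriptions,
# whereas this sketch only matches substrings for illustration.
DIMENSION_KEYWORDS: Dict[str, List[str]] = {
    "massing": ["massing", "atrium", "compact"],
    "orientation": ["orientation", "south", "rotate"],
    "fenestration": ["wwr", "window", "glazed curtain wall"],
    "shading": ["shading", "overhang", "louver"],
}


def tag_dimensions(prompt: str) -> Dict[str, bool]:
    """Return a Yes/No (True/False) flag per prompt dimension."""
    text = prompt.lower()
    return {
        dimension: any(keyword in text for keyword in keywords)
        for dimension, keywords in DIMENSION_KEYWORDS.items()
    }


example = "Rotate the long facade to face south and add a deep overhang."
flags = tag_dimensions(example)
print(flags)
```

Substring matching is deliberately crude: a term such as WWR appears in all four word clouds, so a real labelling step would need the clustering context to assign it to a single dimension.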

- Massing dimension: From Table 3 data, optimizing the building massing yields the greatest contribution to energy savings. When massing-related prompts are included, the schemes’ average energy saving rate is around 50.8%, about 5 percentage points higher than when massing prompts are not included. This indicates that optimizing the building form (e.g., compact massing, block shape, and appropriate cutouts or recesses) can significantly reduce energy consumption.

Table 3. Statistics of the influence of different prompt dimensions on EUI-saving rate (EUI-saving rate = (Baseline EUI − Current EUI)/Baseline EUI).

- Shading dimension: The second most impactful measure is solar shading. Schemes with shading prompts have an average saving rate of ~50.9%, about 4.1 points higher than those without shading. Studies have shown that external shading devices (such as overhangs and louvers) can effectively reduce solar heat gains and cooling loads, and our analysis likewise confirms the pronounced effect of shading on lowering energy use.

- Orientation dimension: Optimizing building orientation has a modest impact on energy use. Schemes including orientation-related prompts achieve an average saving rate of ~50.6%, about 2–3 percentage points higher than those without. The marginal benefit of orientation is relatively small and quite stable, with little variation across different baseline schemes (standard deviation only 0.040). This suggests that, when holding other parameters constant, moderately adjusting the orientation yields limited influence on energy consumption.

- Fenestration dimension: Fenestration (windows/openings) has the smallest impact among the four dimensions, with the difference in results being not very significant. Further improvements might be achieved by refining the dataset or exploring combinations of multiple dimensions to better leverage fenestration measures.
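The per-dimension comparison above can be sketched as follows, using the EUI-saving rate definition from the Table 3 caption. The records are made-up values for illustration only (they are not data from LowEnergy-FormNet), and the function names are assumptions.

```python
from statistics import mean


def eui_saving_rate(baseline_eui: float, current_eui: float) -> float:
    """EUI-saving rate = (Baseline EUI - Current EUI) / Baseline EUI."""
    return (baseline_eui - current_eui) / baseline_eui


# Hypothetical records: (baseline EUI, optimized EUI, dimension flags).
schemes = [
    (440.0, 200.0, {"massing": True, "shading": True}),
    (440.0, 240.0, {"massing": False, "shading": True}),
    (500.0, 250.0, {"massing": True, "shading": False}),
    (500.0, 300.0, {"massing": False, "shading": False}),
]


def dimension_effect(records, dimension):
    """Mean saving rate with and without a dimension, and their difference."""
    with_dim = [eui_saving_rate(b, c) for b, c, f in records if f.get(dimension, False)]
    without_dim = [eui_saving_rate(b, c) for b, c, f in records if not f.get(dimension, False)]
    # statistics.mean raises StatisticsError if either group is empty.
    return mean(with_dim), mean(without_dim), mean(with_dim) - mean(without_dim)


for dim in ("massing", "shading"):
    yes, no, delta = dimension_effect(schemes, dim)
    print(f"{dim}: with={yes:.3f}, without={no:.3f}, difference={delta:+.3f}")
```

The difference is expressed as a fraction, so multiplying by 100 gives the percentage-point gap quoted for each dimension.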

In summary, massing and shading are the most critical factors for energy savings, followed by orientation, and finally fenestration. The sensitivity analysis also shows that the improvements in saving rate for the massing and shading categories vary more across different baseline schemes (standard deviations about 0.12 and 0.08, respectively), suggesting that their effectiveness depends on the specific initial form and climate context. The improvements from orientation and fenestration, on the other hand, are relatively stable or minimal. The importance of the structured prompt dimensions can be ranked as massing > shading > orientation > fenestration. This aligns with common knowledge in building physics: building form and shading have the greatest impact on the thermal performance, orientation is secondary, and the window-to-wall ratio (fenestration) only becomes significant when combined with other measures.

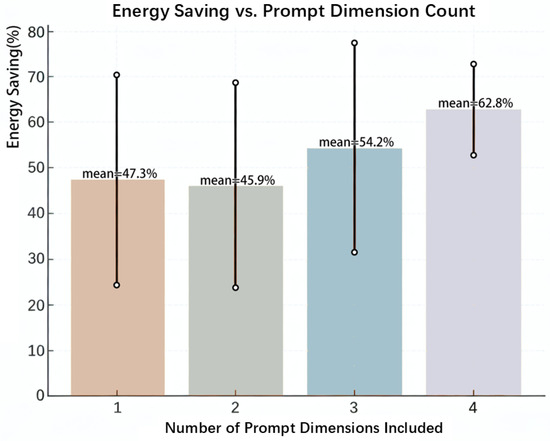

4.3.3. Synergy of Prompt Combinations

Beyond examining single dimensions, we also analyzed the effect of multi-dimension prompt combinations on energy savings. The structured-prompt-generated schemes were grouped according to the number of prompt dimensions they covered. As shown in Figure 10, schemes that cover three or four dimensions achieve markedly higher energy savings than those covering one or two. Relative to the baseline EUI, schemes covering only one dimension have an average energy saving rate of about 47.3%; covering two dimensions yields ~45.9% (nearly the same as the single-dimension case); covering three dimensions increases the average saving rate to ~54.2%; and covering four dimensions raises it to about 62.8%. Notably, the standard deviation of savings narrows for schemes covering three or four dimensions, indicating that multi-dimensional synergy not only raises average performance but also improves predictability. When a prompt combination covers multiple energy-saving dimensions, it produces synergistic benefits, whereas many schemes covering only one or two dimensions have energy saving rates below 50%. This indicates that optimizing building energy performance requires a comprehensive consideration of factors such as massing, orientation, fenestration, and shading, rather than adjusting a single parameter in isolation. There are positive coupling effects among these dimensions: for instance, jointly optimizing massing and shading can greatly enhance energy savings, while measures like window-to-wall ratio adjustments need to be coordinated with orientation and shading to achieve a larger effect.
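The grouping by number of covered dimensions can be sketched as below. The records are hypothetical values for illustration (not the study’s data), and the population standard deviation is used here as one possible measure of spread; the paper does not state which estimator it reports.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical (dimensions covered, EUI-saving rate) pairs, for illustration only.
records = [
    ({"shading"}, 0.48),
    ({"massing"}, 0.44),
    ({"massing", "orientation"}, 0.46),
    ({"orientation", "fenestration"}, 0.45),
    ({"massing", "orientation", "shading"}, 0.70),
    ({"massing", "fenestration", "shading"}, 0.56),
    ({"massing", "orientation", "fenestration", "shading"}, 0.62),
]


def summarize_by_count(rows):
    """Group saving rates by the number of dimensions and return (mean, spread)."""
    groups = defaultdict(list)
    for dims, rate in rows:
        groups[len(dims)].append(rate)
    return {n: (mean(rates), pstdev(rates)) for n, rates in sorted(groups.items())}


summary = summarize_by_count(records)
for n, (avg, spread) in summary.items():
    print(f"{n} dimension(s): mean={avg:.3f}, std={spread:.3f}")
```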

Figure 10. Mean energy-saving rate versus the number of prompt dimensions, with range bars indicated by black lines.

In Table 4, the massing–orientation–shading combination delivers the most outstanding energy savings: the sample’s average EUI improvement rate reaches 0.7055, the median is 0.7169, with even the lowest case at 0.6649 and the highest at 0.7218. This demonstrates that modifying the building volume and orientation while adding external shading can yield significant and stable energy benefits.

Table 4. Performance of the top 10 specific combinations in improving EUI-saving rate.

Among other combinations, the full four-dimension combination massing–orientation–fenestration–shading achieves an average improvement of 0.6032 (median 0.6629), but with large variability (minimum 0.4860 and maximum 0.7004). This suggests that, although the optimization measures are comprehensive, the coupling relationships between elements are complex, and improper design choices could suppress the overall effect. Shading alone as a strategy provides a relatively high benefit (average improvement 0.5940), confirming that shading measures by themselves can offer substantial energy savings.

The massing–orientation combination and massing–fenestration combination both have average improvements close to 0.59, while the orientation–fenestration–shading and massing–fenestration–shading combinations fall in the 0.55–0.58 range. This indicates that pairing orientation or fenestration adjustments with massing optimization provides a clear boost to savings, but adding multiple adjustment factors beyond that does not yield a linear increase in benefits. Moreover, for single dimensions, orientation (0.4809), massing (0.4283), and fenestration (0.4031) showed moderate performance, indicating that these measures implemented alone have limited energy-saving effects; in some samples they even produced negative improvements, meaning an unreasonable building form or window configuration can lead to higher energy use.
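A ranking of specific combinations by mean, median, minimum, and maximum improvement, of the kind summarized in Table 4, could be produced along the following lines. The rows are hypothetical (including one negative improvement, to mirror the cases noted above), and `rank_combinations` is an illustrative name rather than a function from the released code.

```python
from collections import defaultdict
from statistics import mean, median


def rank_combinations(rows, top_k=10):
    """Aggregate saving rates per dimension combination and rank by mean."""
    groups = defaultdict(list)
    for dims, rate in rows:
        # frozenset makes the key independent of the order of dimensions.
        groups[frozenset(dims)].append(rate)
    stats = [
        {
            "combo": "+".join(sorted(combo)),
            "mean": mean(rates),
            "median": median(rates),
            "min": min(rates),
            "max": max(rates),
            "n": len(rates),
        }
        for combo, rates in groups.items()
    ]
    return sorted(stats, key=lambda s: s["mean"], reverse=True)[:top_k]


# Hypothetical rows: (dimensions, EUI-saving rate).
rows = [
    (("massing", "orientation", "shading"), 0.66),
    (("shading", "massing", "orientation"), 0.72),
    (("shading",), 0.59),
    (("orientation",), 0.48),
    (("orientation",), -0.05),  # a negative improvement: energy use increased
]

ranking = rank_combinations(rows)
for entry in ranking:
    print(entry)
```

Reporting the minimum alongside the mean makes negative improvements visible, which a mean-only ranking would hide.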

Overall, shading measures played a crucial role in all combinations and, when combined with orientation or massing, they significantly enhanced energy performance. Sensible adjustments to window-to-wall ratio and massing details can further optimize energy use, but stacking too many strategies requires caution to avoid conflicts that diminish their effect. It is recommended that in early design one should prioritize the synergy of orientation and shading, and then, in combination with site conditions, selectively optimize the massing and window-to-wall ratio to achieve stable and efficient energy-saving goals.

4.4. Cross-Model Comparison Results

Building on the best prompt dimension combination from Section 4.3.3 (massing–orientation–shading), we compared the energy optimization performance of different LLMs under the same level of prompt detail (using performance-oriented structured prompts of equal granularity). Four models (GPT-4o, DeepSeek-V3.1-Think, Qwen-Max, and Gemini-2.5 Pro; hereafter GPT, DeepSeek, Qwen, and Gemini) were applied to optimize the same set of baseline building models. The results show that the schemes generated by the GPT model performed the best in terms of energy optimization, with an average EUI-saving rate of approximately 63.3%, clearly higher than those of the other three models (around 50%). Correspondingly, GPT’s annual energy cost-saving rate averaged about 73.9%, also better than those of DeepSeek, Qwen, and Gemini (the latter three had cost-saving rates in the ~68–70% range). This indicates that under the same prompt conditions, GPT can more accurately comprehend building energy performance requirements and implement effective modifications. By contrast, DeepSeek and Qwen achieved similar energy-saving results, with average EUI-saving rates of about 50.5% and 50.7%, and energy cost-saving rates around 68.5% and 69.8%, respectively. Gemini was slightly lower, with an average EUI-saving rate of roughly 49.9% and an energy cost-saving rate of about 68.8%. Overall, aside from GPT, the magnitude of energy reduction achieved by the other models was of the same order, and none reached the higher level of savings realized by GPT.

As shown in Table 5 and Table 6, under the same prompt strategy and baseline conditions, the GPT model demonstrated a building energy-efficient design generation capability that surpassed the other three LLMs, achieving a greater magnitude of energy reduction. This may be attributed to GPT’s deeper understanding of the performance objectives and a more diverse design generation approach—it can propose aggressive optimization schemes that attain over 65% energy savings, while also ensuring that all schemes have significant improvements with very few mistakes. DeepSeek and Qwen fall into a second tier, at roughly 80% of GPT’s performance level on average, with similar and relatively stable results. This indicates that these two models can achieve certain energy-saving benefits under optimization prompts, but they lack breakthrough ultra-efficient schemes. Meanwhile, Gemini-2.5 exhibited a high degree of consistency and a moderate level of energy improvement, with nearly all generated schemes converging around a 50% saving rate. It is speculated that its strategy for responding to prompts was relatively uniform, and therefore it failed to explore more highly efficient energy-saving designs.
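The gaps between models can be expressed both in percentage points and as a fraction of the best model’s result. The sketch below uses the mean EUI-saving rates quoted in this section; the variable names are illustrative assumptions.

```python
# Mean EUI-saving rates (%) as reported in Section 4.4.
mean_eui_saving = {"GPT": 63.3, "DeepSeek": 50.5, "Qwen": 50.7, "Gemini": 49.9}

best_model = max(mean_eui_saving, key=mean_eui_saving.get)
best = mean_eui_saving[best_model]

# Gap in percentage points and ratio to the best model, for every other model.
comparison = {
    model: {
        "gap_pp": round(best - value, 1),
        "ratio_to_best": round(value / best, 2),
    }
    for model, value in mean_eui_saving.items()
    if model != best_model
}

for model, result in comparison.items():
    print(f"{model}: {result['gap_pp']} points below {best_model}, "
          f"{result['ratio_to_best']:.0%} of its saving rate")
```

Keeping the two measures separate avoids ambiguity: a gap of about 13 percentage points corresponds to the second-tier models reaching roughly 80% of GPT’s saving rate.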

Table 5. Performance of different LLMs in terms of EUI-saving rate.

Table 6. Performance of different LLMs in terms of annual energy cost-saving rate.

5. Discussion

This study presents the EnergAI framework, which explores the potential of integrating large language models (LLMs) into early-stage architectural design for energy optimization. By embedding performance objectives directly within prompts, the framework illustrates a feasible pathway for linking semantic reasoning with quantitative performance control. Compared with the conventional “generate-evaluate” workflow, EnergAI incorporates energy constraints during the generative phase, thereby shortening the iteration cycle and facilitating more efficient decision-making. Experimental results indicate that when structured prompts specify parameters such as massing, orientation, and shading, the average energy use intensity (EUI) reduction slightly exceeds 50%, while high-energy outliers are effectively suppressed. These findings suggest a possible direction for connecting design semantics with performance-oriented reasoning in future generative design studies.

From an application perspective, EnergAI can rapidly identify potential energy-saving opportunities in the early design phase and deliver real-time feedback, lowering the threshold for non-experts to engage in energy cost control while significantly reducing design iterations and rework costs for professional architects. Furthermore, it can serve as an auxiliary tool for green building evaluation and review, offering quantitative support for energy compliance, performance benchmarking, and policy alignment.

Emphasizing energy efficiency may, however, constrain certain aspects of design freedom, such as geometry, orientation, and architectural expression. To mitigate this, EnergAI was designed to preserve semantic-level diversity within its prompt structure, maintaining creative variability while pursuing consistent energy objectives. Future extensions should incorporate additional performance criteria, such as daylighting, thermal comfort, structural integrity, and cost, to enable LLMs to support multi-objective optimization and generate more balanced design outcomes. The framework can also be extended with a module that integrates heuristic reasoning with language-model-based decision-making to automatically optimize window-to-wall ratios and glazing configurations. These extensions could enable the system to balance multiple objectives under predefined constraints through natural-language-driven reasoning, which would enhance EnergAI’s façade-level reasoning capacity and further improve its applicability in real-world architectural design workflows.

Despite its promising outcomes, EnergAI has several limitations. While embedding energy constraints in the generative process proved effective, all simulations were conducted in Beijing (ASHRAE Zone 4), representing a mixed climate. The model’s optimization behavior and prompt sensitivity may vary under other climates—for example, shading and ventilation dominate in hot-humid zones, whereas compact massing and thermal inertia prevail in hot-dry regions. These findings highlight the climate dependence of key design parameters and position the study as a proof of concept within a specific boundary. Future work will extend EnergAI across diverse ASHRAE zones to test its generalization and enhance climate-adaptive prompt design. In addition, the dataset used in this study mainly comprises modern office buildings with relatively homogeneous morphology and energy-use patterns. While the model performs well on such data, its generalizability to other building types and scales remains to be verified.

6. Conclusions

This study addresses the early conceptual design stage and introduces EnergAI, a framework that embeds energy performance objectives directly into the generative process of large language models (LLMs). Through multi-level comparative experiments, EnergAI achieved consistent improvements in energy-saving performance over baseline generative methods, confirming the effectiveness of incorporating performance objectives at the onset of design rather than applying post hoc adjustments. The key findings are as follows:

- Geometry-oriented and fuzzy-goal prompts result in average annual reductions of about 16–17% in energy use intensity (EUI) and 3–4% in energy costs, compared to designs created by humans.

- Performance-oriented structured prompts provide the most reliable improvements, effectively eliminating high-energy outliers and achieving an average EUI reduction of over 50%.

- In cross-model comparisons under an identical toolchain, GPT-4o delivered the strongest and most stable optimization, achieving 63.3% mean EUI savings, nearly 13 percentage points higher than the DeepSeek-V3.1-Think, Qwen-Max, and Gemini-2.5 baselines.

From a methodological standpoint, EnergAI establishes a quantifiable semantic coupling mechanism between design semantics and energy objectives, transforming the role of LLMs from passive generators of form to active agents of performance reasoning. This mechanism enables the model to interpret performance goals—such as energy use intensity and solar exposure—not merely as numerical constraints but as semantic drivers that shape spatial configuration, orientation, and massing decisions. Such integration redefines generative AI as a tool for reasoning about design logic rather than imitating stylistic patterns.

From an engineering perspective, EnergAI provides a replicable and extensible technical pathway for embedding performance-driven intelligence into architectural workflows. Its structured prompt system and feedback-guided iteration allow early-phase design exploration to incorporate simulation-informed performance data without manual post-processing. This workflow shortens the conventional generation–evaluation–adjustment loop, supports scalable deployment across different building typologies, and bridges the gap between data-driven analysis and creative synthesis. Ultimately, it contributes to the realization of AI-assisted, evidence-based sustainable design at both the conceptual and operational levels.

Overall, the research contributes a novel pathway linking artificial intelligence and architectural performance, demonstrating measurable progress toward data-informed, sustainability-oriented generative design. While current limitations in dataset diversity and model generalization remain, EnergAI lays a robust foundation for future extensions across multiple climatic regions, performance domains, and multi-objective optimization contexts, offering both academic and practical value to the evolving field of intelligent architectural design.

7. Data and Code Availability

The dataset and code supporting the findings of this study are hosted on GitHub at (https://github.com/LazarusPit0104/EnergAI, accessed on 1 November 2025). We used the repository at tag v1.0.0 (commit 1ed588c, released 5 November 2025). Experiments were conducted with Python 3.10.13 and PyTorch 2.3.1 (CUDA 12.1); the exact software environment is pinned in the accompanying environment.yml and requirements.txt files included with the release. The repository currently provides a partial release of the LowEnergy-FormNet dataset for validation and benchmarking. The dataset is distributed under the Creative Commons Attribution 4.0 International (CC BY 4.0) license, permitting reuse, redistribution, and adaptation with appropriate citation.

The complete dataset, training scripts, and model weights used in this research will be made publicly available upon publication under the MIT License, thereby ensuring transparency, reproducibility, and open scientific collaboration. Any additional materials required to reproduce the results are available from the corresponding author upon reasonable request.

Author Contributions

Conceptualization, J.Z., X.M. and S.L.; Methodology, J.Z., P.L. and X.M.; Validation, P.L. and R.L.; Formal analysis, J.Z., R.L., Y.D. and J.B.; Investigation, P.L., J.Y. and C.H.; Resources, J.Y.; Data curation, J.Z., R.L., J.Y., Y.D. and J.B.; Writing—original draft, J.Z. and P.L.; Writing—review & editing, R.L., J.Y., C.H., X.D., X.M. and S.L.; Visualization, P.L., Y.D. and J.B.; Supervision, X.M. and S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

This appendix shows the abbreviations mentioned in the text and their full names.

Table A1. The table of abbreviations.


| Full Name | Abbreviation |
|---|---|
| Building Energy Modeling | BEM |
| Generative Adversarial Networks | GANs |
| Variational Autoencoders | VAEs |
| Energy Use Intensity | EUI |
| Large Language Model | LLM |
| Building Information Modeling | BIM |
| Performance-Driven Design | PDD |
| Cooling Degree Days | CDD |
| Heating Degree Days | HDD |
| Window-to-Wall Ratio | WWR |
| Spatial Daylight Autonomy | sDA |

Appendix B

In this section, we provide a list of detailed examples of three types of prompts.

Table A2. The table of detailed prompts.


| Types of Prompts | Examples |
|---|---|
| Geometry-Oriented Prompts | |
| Fuzzy-Goal Prompts | |
| Performance-Oriented Prompts | |

References

- Negendahl, K. Building performance simulation in the early design stage: An introduction to integrated dynamic models. Autom. Constr. 2015, 54, 39–53. [Google Scholar] [CrossRef]

- Hemsath, T.L. Conceptual energy modeling for architecture, planning and design: Impact of using building performance simulation in early design stages. In Building Simulation; Air Infiltration and Ventilation Centre (AIVC): Ghent, Belgium, 2013. [Google Scholar]

- Garcia, E.G.; Zhu, Z. Interoperability from building design to building energy modeling. J. Build. Eng. 2015, 1, 33–41. [Google Scholar] [CrossRef]

- Donn, M. Simulation and the building performance gap. Build. Cities 2025, 6, 713–728. [Google Scholar] [CrossRef]

- Han, T.; Huang, Q.; Zhang, A.; Zhang, Q. Simulation-based decision support tools in the early design stages of a green building—A review. Sustainability 2018, 10, 3696. [Google Scholar] [CrossRef]

- Baba, A.; Mahdjoubi, L.; Olomolaiye, P.; Booth, C. State-of-the-art on buildings performance energy simulations tools for architects to deliver low impact building (LIB) in the UK. Int. J. Dev. Sustain. 2013, 2, 1867–1884. [Google Scholar]

- Hong, T.; Langevin, J.; Sun, K. Building Simulation: Ten Challenges. In Building Simulation; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11, pp. 871–898. [Google Scholar]

- Østergård, T.; Jensen, R.L.; Maagaard, S.E. Building simulations supporting decision making in early design—A review. Renew. Sustain. Energy Rev. 2016, 61, 187–201. [Google Scholar] [CrossRef]

- Soebarto, V.; Hopfe, C.; Crawley, D.; Rawal, R. Capturing the views of architects about building performance simulation to be used during design processes. In Proceedings of the BS2015: 14th Conference of International Building Performance Simulation Association, Hyderabad, India, 7–9 December 2015; pp. 7–9. [Google Scholar]

- Mahmoud, R.; Kamara, J.M.; Burford, N. Opportunities and limitations of building energy performance simulation tools in the early stages of building design in the UK. Sustainability 2020, 12, 9702. [Google Scholar] [CrossRef]

- Pitts, A. Passive house and low energy buildings: Barriers and opportunities for future development within UK practice. Sustainability 2017, 9, 272. [Google Scholar] [CrossRef]

- de Wilde, P. Ten questions concerning building performance analysis. Build. Environ. 2019, 153, 110–117. [Google Scholar] [CrossRef]

- Troup, L.; Phillips, R.; Eckelman, M.J.; Fannon, D. Effect of window-to-wall ratio on measured energy consumption in US office buildings. Energy Build. 2019, 203, 109434. [Google Scholar] [CrossRef]

- Shaghaghian, Z.; Shahsavari, F.; Delzendeh, E. Studying buildings outlines to assess and predict energy performance in buildings: A probabilistic approach. arXiv 2021, arXiv:2111.08844. [Google Scholar] [CrossRef]

- Sacks, R.; Eastman, C.; Lee, G.; Teicholz, P. BIM Handbook: A Guide to Building Information Modeling for Owners, Designers, Engineers, Contractors, and Facility Managers; John Wiley & Sons: Hoboken, NJ, USA, 2018. [Google Scholar]

- Attia, S.; Gratia, E.; De Herde, A.; Hensen, J.L. Simulation-based decision support tool for early stages of zero-energy building design. Build. Environ. 2012, 49, 2–15. [Google Scholar] [CrossRef]

- Ratti, C.; Raydan, D.; Steemers, K. Building form and environmental performance: Archetypes, analysis and an arid climate. Energy Build. 2003, 35, 49–59. [Google Scholar] [CrossRef]

- Zhong, C.; Yi’an Shi, L.H.C.; Wang, L. AI-enhanced performative building design optimisation and exploration. In Proceedings of the 29th International Conference on Computer-Aided Architectural Design Research in Asia, CAADRIA, Singapore, 24 April 2024; Volume 1, pp. 59–68. [Google Scholar]

- Luo, J.; Yu, B.; Peng, H.; Shi, Y.; Li, Y.; Fingrut, A. Deep Generative Modeling Tasks: Automatic Generation of Building Facades with Pix2Pix GAN for Hong Kong City Expansion and Renovation. Available online: https://www.researchgate.net/profile/Boyuan_Yu8/publication/374029492_Deep_generative_modeling_tasks_Automatic_generation_of_building_facades_with_Pix2Pix_GAN_for_Hong_Kong_city_expansion_and_renovation/links/65713ac0286c65604a947c91/Deep-generative-modeling-tasks-Automatic-generation-of-building-facades-with-Pix2Pix-GAN-for-Hong-Kong-city-expansion-and-renovation.pdf (accessed on 2 November 2025).

- Luo, G.; Zhou, X.; Liao, Y.; Ding, Y.; Liu, J.; Xia, Y.; Qi, H. Automated Residential Bubble Diagram Generation Based on Dual-Branch Graph Neural Network and Variational Encoding. Appl. Sci. 2025, 15, 4490.

- Samuelson, H.; Claussnitzer, S.; Goyal, A.; Chen, Y.; Romo-Castillo, A. Parametric energy simulation in early design: High-rise residential buildings in urban contexts. Build. Environ. 2016, 101, 19–31.

- Turrin, M.; von Buelow, P.; Stouffs, R. Design explorations of performance driven geometry in architectural design using parametric modeling and genetic algorithms. Adv. Eng. Inform. 2011, 25, 656–675.

- Lin, S.-H.E.; Gerber, D.J. Designing-in performance: A framework for evolutionary energy performance feedback in early stage design. Autom. Constr. 2014, 38, 59–73.

- Li, X.; Yuan, Y.; Liu, G.; Han, Z.; Stouffs, R. A predictive model for daylight performance based on multimodal generative adversarial networks at the early design stage. Energy Build. 2024, 305, 113876.

- Nauata, N.; Chang, K.-H.; Cheng, C.-Y.; Mori, G.; Furukawa, Y. House-gan: Relational generative adversarial networks for graph-constrained house layout generation. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 162–177.

- Kim Gi, H.; Shin Ry, H.; Kim Woo, D. DataNet Project: A framework for linking multi-faceted building energy datasets for effective performance analysis. In Proceedings of the ASim Conference 2024, IBPSA-Asia, Osaka, Japan, 8–10 December 2024; Volume 5, pp. 483–490.

- Ghimire, P.; Kim, K.; Acharya, M. Opportunities and challenges of generative AI in construction industry: Focusing on adoption of text-based models. Buildings 2024, 14, 220.

- Meselhy, A.; Almalkawi, A. A review of artificial intelligence methodologies in computational automated generation of high performance floorplans. Npj Clean Energy 2025, 1, 2.

- Ferrando, M.; Causone, F.; Hong, T.; Chen, Y. Urban building energy modeling (UBEM) tools: A state-of-the-art review of bottom-up physics-based approaches. Sustain. Cities Soc. 2020, 62, 102408.

- Wandelt, S.; Zheng, C.; Wang, S.; Liu, Y.; Sun, X. Large language models for intelligent transportation: A review of the state of the art and challenges. Appl. Sci. 2024, 14, 7455.

- Okur, E.; Kumar, S.H.; Sahay, S.; Nachman, L. Audio-visual understanding of passenger intents for in-cabin conversational agents. arXiv 2020, arXiv:2007.03876.

- Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Amin, M.; Hou, L.; Clark, K.; Pfohl, S.R.; Cole-Lewis, H.; et al. Toward expert-level medical question answering with large language models. Nat. Med. 2025, 31, 943–950.

- Brigham, N.G.; Gao, C.; Kohno, T.; Roesner, F.; Mireshghallah, N. Breaking news: Case studies of generative AI’s use in journalism. arXiv 2024, arXiv:2406.13706.

- Yang, Z.; Jia, X.; Li, H.; Yan, J. Llm4drive: A survey of large language models for autonomous driving. arXiv 2023, arXiv:2311.01043.

- Wu, Y.; Li, D.; Chen, Y.; Jiang, R.; Zou, H.P.; Huang, W.; Li, Y.; Fang, L.; Wang, Z.; Yu, P.S. Multi-agent autonomous driving systems with large language models: A survey of recent advances. arXiv 2025, arXiv:2502.16804.

- D’Antonoli, T.A.; Stanzione, A.; Bluethgen, C.; Vernuccio, F.; Ugga, L.; Klontzas, M.E.; Cuocolo, R.; Cannella, R.; Koçak, B. Large language models in radiology: Fundamentals, applications, ethical considerations, risks, and future directions. Diagn. Interv. Radiol. 2024, 30, 80.

- Zhang, X.; Kuang, H.; Mou, X.; Lyu, H.; Wu, K.; Chen, S.; Luo, J.; Huang, X.-J.; Wei, Z. Somelvlm: A large vision language model for social media processing. arXiv 2024, arXiv:2402.13022.

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A survey of large language models. arXiv 2023, arXiv:2303.18223.

- Kampelopoulos, D.; Tsanousa, A.; Vrochidis, S.; Kompatsiaris, I. A review of LLMs and their applications in the architecture, engineering and construction industry. Artif. Intell. Rev. 2025, 58, 250.

- Yin, J.; Zhong, J.; Zeng, P.; Li, P.; Zheng, H.; Zhang, M.; Lu, S. ArchShapeNet: An interpretable 3D-CNN framework for evaluating architectural shapes. Int. J. Archit. Comput. 2025, 23, 753–774.

- Zhong, J.; Yin, J.; Li, P.; Zeng, P.; Zang, M.; Luo, R.; Lu, S. ArchiLense: A Framework for Quantitative Analysis of Architectural Styles Based on Vision Large Language Models. arXiv 2025, arXiv:2506.07739.

- Li, P.; Yin, J.; Zhong, J.; Luo, R.; Zeng, P.; Zhang, M. Segment Any Architectural Facades (SAAF): An automatic segmentation model for building facades, walls and windows based on multi-modal semantics guidance. arXiv 2025, arXiv:2506.09071.

- Zeng, P.; Yin, J.; Huang, Y.; Zhong, J.; Lu, S. AI-based generation and optimization of energy-efficient residential layouts controlled by contour and room number. In Building Simulation; Tsinghua University Press: Beijing, China, 2025; pp. 1–29.

- Pan, Y.; Zhu, M.; Lv, Y.; Yang, Y.; Liang, Y.; Yin, R.; Yang, Y.; Jia, X.; Wang, X.; Zeng, F.; et al. Building energy simulation and its application for building performance optimization: A review of methods, tools, and case studies. Adv. Appl. Energy 2023, 10, 100135.

- Coakley, D.; Raftery, P.; Keane, M. A review of methods to match building energy simulation models to measured data. Renew. Sustain. Energy Rev. 2014, 37, 123–141.

- Crawley, D.B.; Lawrie, L.K.; Winkelmann, F.C.; Buhl, W.; Huang, Y.; Pedersen, C.O.; Strand, R.K.; Liesen, R.J.; Fisher, D.E.; Witte, M.J.; et al. EnergyPlus: Creating a new-generation building energy simulation program. Energy Build. 2001, 33, 319–331.

- Roudsari, M.S.; Pak, M.; Viola, A. Ladybug: A parametric environmental plugin for grasshopper to help designers create an environmentally-conscious design. In Building Simulation; IBPSA: Las Cruces, NM, USA, 2013; Volume 13, pp. 3128–3135.

- Ghobad, L.; Glumac, S. Daylighting and energy simulation workflow in performance-based building simulation tools. In Proceedings of the 2018 Building Performance Analysis Conference and Simbuild, Chicago, IL, USA, 26–28 September 2018; pp. 26–28.

- Bazjanac, V. IFC BIM-Based Methodology for Semi-Automated Building Energy Performance Simulation. 2008. Available online: https://escholarship.org/content/qt0m8238pj/qt0m8238pj.pdf (accessed on 2 November 2025).

- Royapoor, M.; Roskilly, T. Building model calibration using energy and environmental data. Energy Build. 2015, 94, 109–120.

- Lamberts, R.; Hensen, J.L. (Eds.) Building Performance Simulation for Design and Operation; Spon Press: London, UK, 2011.

- Shea, K.; Aish, R.; Gourtovaia, M. Towards integrated performance-driven generative design tools. Autom. Constr. 2005, 14, 253–264.

- Nguyen, A.-T.; Reiter, S.; Rigo, P. A review on simulation-based optimization methods applied to building performance analysis. Appl. Energy 2014, 113, 1043–1058.

- Huang, J.; Pytel, A.; Zhang, C.; Mann, S.; Fourquet, E.; Hahn, M.; Kinnear, K.; Lam, M.; Cowan, W. An Evaluation of Shape/Split Grammars for Architecture. Waterloo. 2009. Available online: https://cs.uwaterloo.ca/research/tr/2009/CS-2009-23A.pdf (accessed on 2 November 2025).

- Smelik, R.M.; Tutenel, T.; Bidarra, R.; Benes, B. A survey on procedural modelling for virtual worlds. Comput. Graph. Forum 2014, 33, 31–50.

- Schwarz, M.; Müller, P. Advanced procedural modeling of architecture. ACM Trans. Graph. (TOG) 2015, 34, 1–12.

- Lu, S.; Li, T.; Zhong, J.; Zeng, P.; Hao, T.; Zheng, H.; Li, P.; Jin, Z.; Peter, R.; Yin, J. FloorplanMAE: A self-supervised framework for complete floorplan generation from partial inputs. arXiv 2025, arXiv:2506.08363.

- Lu, S.; Li, T.; Zhong, J.; Zeng, P.; Hao, T.; Zheng, H.; Li, P.; Jin, Z.; Peter, R.; Yin, J. Drag2Build++: A drag-based 3D architectural mesh editing workflow based on differentiable surface modeling. Front. Archit. Res. 2025; in press.

- Du, C.; Esser, S.; Nousias, S.; Borrmann, A. Text2BIM: Generating building models using a large language model-based multi-agent framework. arXiv 2024, arXiv:2408.08054.

- Yin, J.; Zeng, P.; Zhong, J.; Li, P.; Zhang, M.; Luo, R.; Lu, S. FloorPlan-DeepSeek (FPDS): A multimodal approach to floorplan generation using vector-based next room prediction. arXiv 2025, arXiv:2506.21562.

- Yin, J.; Zhong, J.; Li, P.; Zeng, P.; Zhang, M.; Luo, R.; Lu, S. UrbanSense: A Framework for Quantitative Analysis of Urban Streetscapes leveraging Vision Large Language Models. arXiv 2025, arXiv:2506.10342.

- Papaioannou, I.; Korkas, C.; Kosmatopoulos, E. Smart Building Recommendations with LLMs: A Semantic Comparison Approach. Buildings 2025, 15, 2303.

- Li, L.; Qi, Z.; Ma, Q.; Gao, W.; Wei, X. Evolving multi-objective optimization framework for early-stage building design: Improving energy efficiency, daylighting, view quality, and thermal comfort. In Building Simulation; Tsinghua University Press: Beijing, China, 2024; Volume 17, pp. 2097–2123.

- Ali, U.; Bano, S.; Shamsi, M.H.; Sood, D.; Hoare, C.; Zuo, W.; Hewitt, N.; O’Donnell, J. Urban residential building stock synthetic datasets for building energy performance analysis. Data Brief 2024, 53, 110241.

- Onatayo, D.; Onososen, A.; Oyediran, A.O.; Oyediran, H.; Arowoiya, V.; Onatayo, E. Generative AI applications in architecture, engineering, and construction: Trends, implications for practice, education & imperatives for upskilling: A review. Architecture 2024, 4, 877–902.

- Wang, L.; Zhang, H.; Liu, X.; Ji, G. Exploring the synergy of building massing and façade design through evolutionary optimization. Front. Archit. Res. 2022, 11, 761–780.

- GB 50189-2015; Design Standard for Energy Efficiency of Public Buildings. Ministry of Housing and Urban-Rural Development of the People’s Republic of China: Beijing, China, 2015. (In Chinese)

- China Academy of Building Research. Architectural Design Data Collection, 3rd ed.; Vol. I: Architectural General Theory; China Architecture & Building Press: Beijing, China, 2016. (In Chinese)

- GB/T 50378-2019; Green Building Evaluation Standard (2024 Edition). Ministry of Housing and Urban-Rural Development of the People’s Republic of China: Beijing, China, 2019. (In Chinese)

- GB 50352-2019; Unified Standard for Civil Building Design. Ministry of Housing and Urban-Rural Development of the People’s Republic of China: Beijing, China, 2019. (In Chinese)

- Beijing Municipal Commission of Planning and Natural Resources. Beijing Urban Design Guidelines; Beijing Municipal Commission of Planning and Natural Resources: Beijing, China, 2020. (In Chinese)

- China Academy of Building Research. Architectural Design Data Collection, 3rd ed.; Vol. III: Office Buildings; China Architecture & Building Press: Beijing, China, 2016. (In Chinese)

- GB 55015-2021; General Code for Building Energy Conservation and Renewable Energy Utilization. Ministry of Housing and Urban-Rural Development of the People’s Republic of China: Beijing, China, 2021. (In Chinese)

- GB 50176-2016; Thermal Design Code for Civil Buildings. Ministry of Housing and Urban-Rural Development of the People’s Republic of China: Beijing, China, 2016. (In Chinese)

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).