1. Introduction

The increasing penetration of wind energy into national power grids demands accurate and reliable forecasting tools to ensure grid stability, optimize dispatch, and reduce balancing costs [1,2,3,4]. Among the various forecasting horizons, day-ahead wind power prediction plays a crucial role in energy market participation and operational planning [5]. However, the accuracy of such forecasts strongly depends on the availability and quality of historical wind speed and wind power generation data, which are often limited or unevenly distributed across regions. Wind resource monitoring networks tend to be concentrated in a few areas, resulting in data-rich locations surrounded by data-scarce ones, while operational data from early-life wind farms are, by definition, limited. This spatial imbalance creates a significant challenge: building accurate forecasting models in areas with limited historical data, where conventional statistical or machine learning models may underperform due to insufficient training samples.

In the meantime, various methods have been developed for wind power forecasting [6,7,8]. These methods fall into three categories: physical models using numerical weather prediction (NWP); statistical models that analyze stochastic processes, comprising both traditional statistical methods and machine learning; and hybrid models that combine the two previous approaches [9]. With regard to the forecasting horizon, three main categories of wind power forecasting models can be identified: ultra-short-term forecasting (0–4 h), short-term forecasting (0–72 h), and medium- to long-term forecasting, spanning weeks to months. Similarly, on the spatial side, models are normally divided into three categories: individual-level (a single wind turbine), wind farm-level, and regional-level forecasting of wind power [8]. Within statistical models, and machine learning specifically, a further distinction is drawn between traditional ML models and deep learning models, with the latter gaining prominence in wind power forecasting in recent years. Amongst deep learning models, and according to [8], spatial forecasting is normally addressed by convolutional neural networks (CNNs) [10] and deep belief networks (DBNs) [11], while the temporal forecasting dimension relies on recurrent neural networks (RNNs) [12], long short-term memory (LSTM) networks [13,14], and gated recurrent units (GRUs) [15]. Finally, to optimize model inputs in wind power forecasting, signal decomposition is often applied, for example through the integration of wavelet transform in CNNs [16].

Acknowledging the above, a hybrid CNN-LSTM model [16,17] was developed in the current study for short-term (day-ahead) wind power forecasting, integrating LSTM effectiveness within a wavelet-enhanced deep learning architecture. The main aim of this research is to evaluate the spatiotemporal generalization capability of such a model at the national level in Greece, with its training relying exclusively on a single, data-rich location of the Greek mainland (central Greece). Our hypothesis is that such a model, if properly designed and trained, can perform competitively in forecasting multi-step, day-ahead wind power at geographically distinct and data-scarce locations, without the need for location-specific retraining. To test this hypothesis, we trained the hybrid model, which combines discrete wavelet transform (DWT) with a deep learning architecture, on 11 years of hourly wind data from the aforementioned reference location, and then evaluated its performance across different geographical locations in Greece, each with only one year of data available. The key contributions of this paper are as follows:

We present a wavelet-enhanced deep learning model for day-ahead wind power forecasting, trained solely on one data-rich location.

We systematically assess the spatiotemporal forecasting performance of this model across multiple data-scarce sites.

We demonstrate the potential of such models to support national-scale wind forecasting and planning in regions with uneven data availability.

Overall, the results provide insight into the feasibility of reusing forecasting models trained on rich datasets for large-scale applications in the field of wind power forecasting. The remainder of this paper is organized as follows: In Section 2, we present the input data for the study and the methodological framework developed. Next, in Section 3, we provide the application results of our research, supported by a systematic analysis across different dimensions. Finally, in Section 4, we discuss the implications of our research and lay down the main conclusions of this study.

2. Data and Methodology

2.1. Input Data

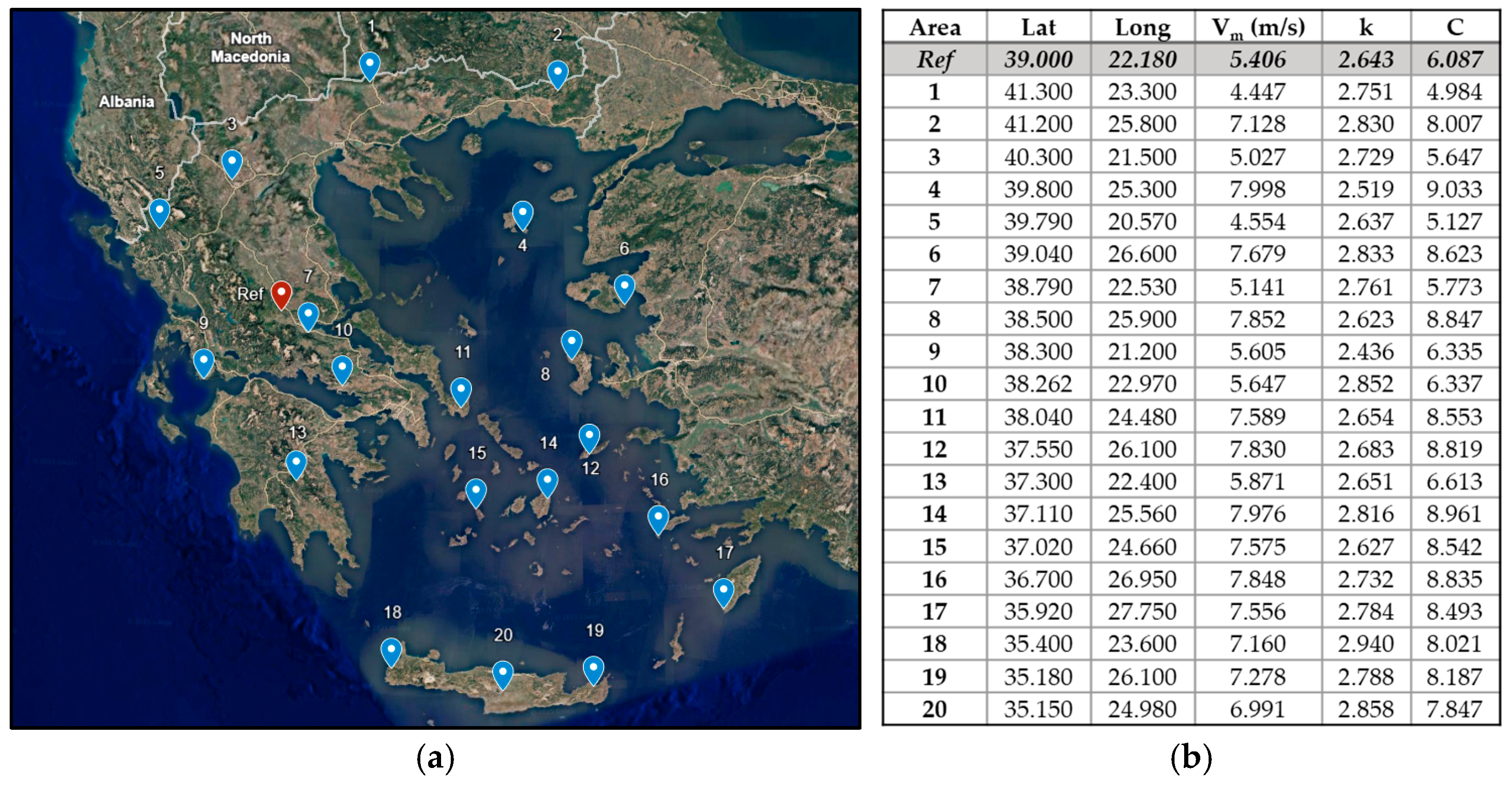

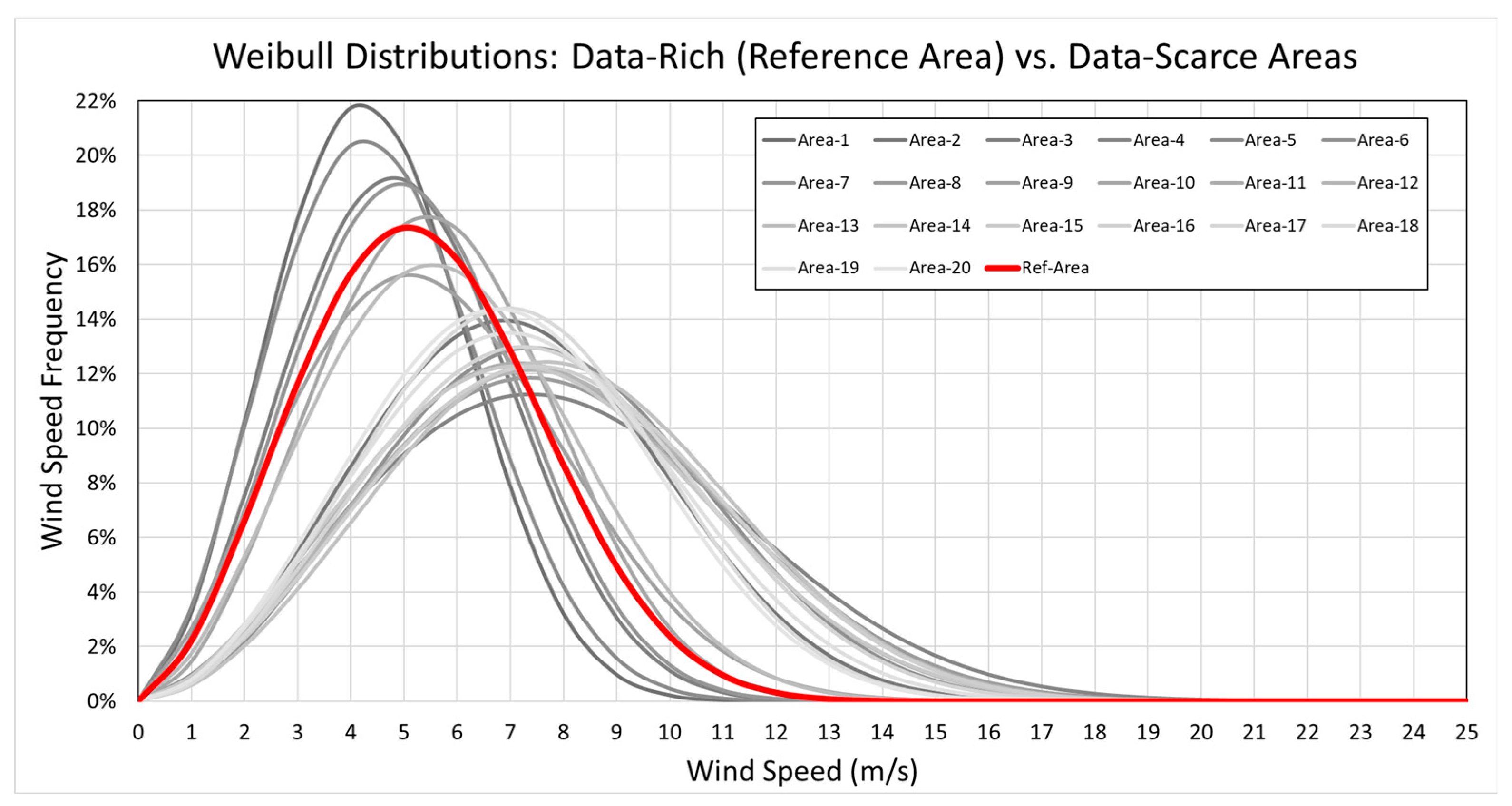

For this study, an extensive time-series dataset was used. This allowed the examination of broadly different wind potential cases, reflecting the different wind regimes of the Greek mainland and the Aegean Sea (Figure 1 and Figure 2). In more detail, we made use of open-source meteorological data (wind speed at hub height, air temperature, and air density) at a resolution of 0.625° × 0.5°, available from the MERRA-2 reanalysis model [18], and applied different dataset time horizons for the data-rich reference area (12-year dataset of hourly values) and the assumed data-scarce geographical locations (1-year dataset of hourly values for twenty areas in total; see Figure 1), adopting a hub height of 80 m.

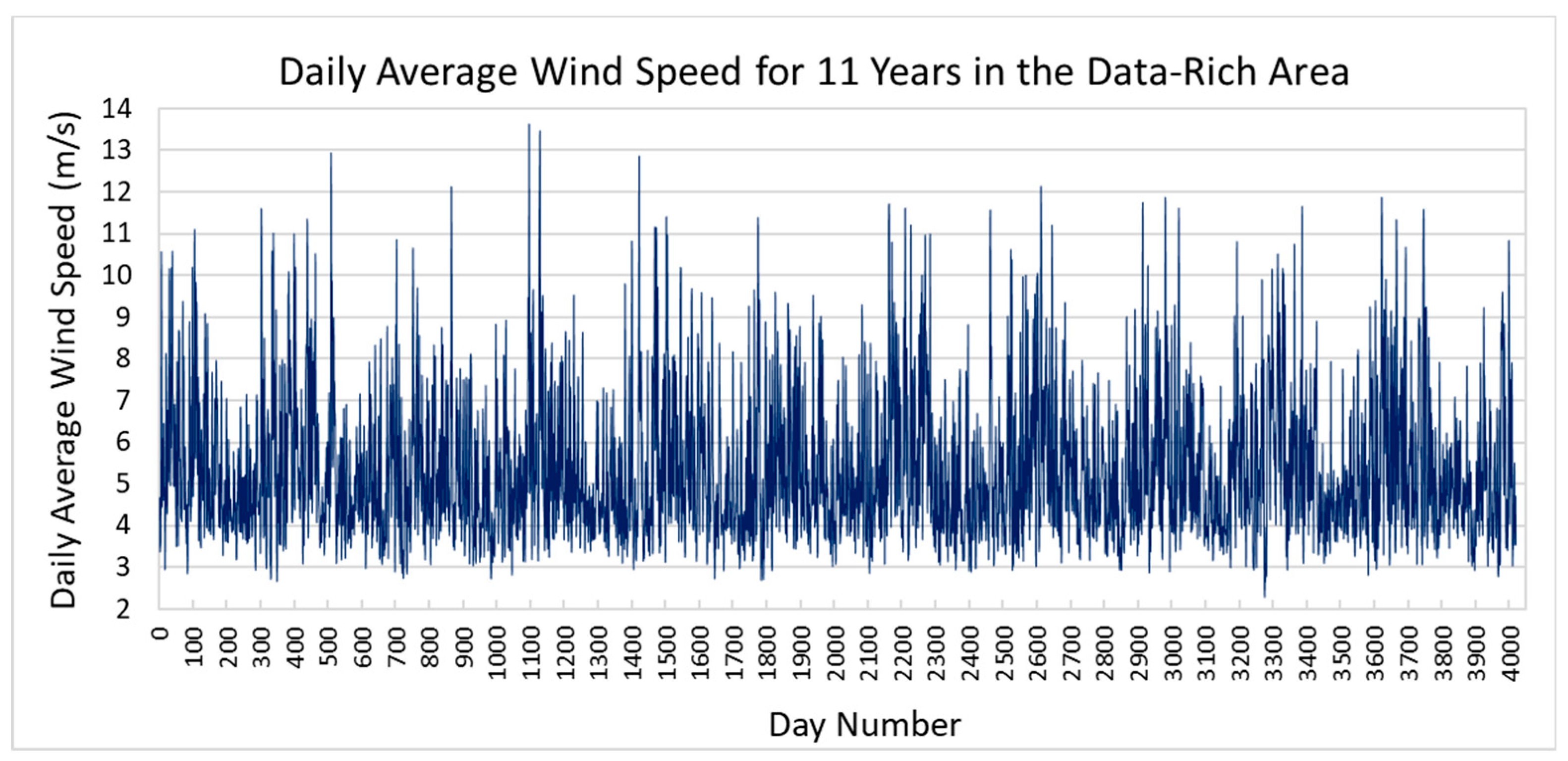

More specifically, the data-rich area features a dataset that spans from 2012 to 2023, following the Coordinated Universal Time (UTC) standard, and includes a total of 105,192 data points in each column, while, for the rest of the areas, the time span is limited to 2023 alone. The first 11-year period, selected to capture long-term interannual variations at affordable computational costs, was used for model training (see also Figure 3 for the relevant daily average wind speed values), while, to assess the predictive performance of the model, the year 2023 was used for all examined areas, including the reference one (Table 1). As such, the evaluation dataset contains 8760 data points, recorded at 1-h intervals. The preprocessing steps applied to the testing datasets mirrored those used during model training, ensuring consistency and readiness for prediction.

Due to the nature of time-series data, six time-related features were generated in order to assist in capturing potential patterns and periodicities. These are presented in Table 2. The processing of time-based data often involves extracting features that capture temporal patterns, which are not explicitly apparent in raw timestamps [19]. Cyclical representations of the time features were created for the 11-year dataset, as this method preserves the continuity of periodic variables like hours, days, weeks of year, months, and years.

In the context of this study, sine and cosine transformations were applied to hours, days, and months, a common approach in time-series modeling for handling the circular nature of these variables. For instance, midnight and 23:00 are close to each other, and without this transform, a machine learning model might not be able to capture this cyclical relationship. In the same context, a seventh feature was also created, corresponding to the estimated theoretical wind power available (see also Table 2).
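The cyclical encoding described above can be illustrated with a minimal sketch. The helper names `cyclical_encode` and `circle_distance` are hypothetical and are not taken from the study's code; the sketch only shows why 23:00 and midnight end up close to each other once an hour is mapped onto the unit circle.

```python
import math

def cyclical_encode(value, period):
    """Map a periodic value (e.g. hour of day) to a (sin, cos) pair on the unit circle."""
    angle = 2.0 * math.pi * value / period
    return math.sin(angle), math.cos(angle)

def circle_distance(a, b):
    """Euclidean distance between two encoded points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

midnight = cyclical_encode(0, 24)
eleven_pm = cyclical_encode(23, 24)
noon = cyclical_encode(12, 24)

# In raw form, 23 and 0 are 23 units apart; after encoding they are neighbours,
# while noon sits on the opposite side of the circle.
d_adjacent = circle_distance(midnight, eleven_pm)
d_opposite = circle_distance(midnight, noon)
```

The same function applies to days or months by changing `period` (for example, 12 for months).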

Finally, as far as the model target output is concerned (i.e., wind turbine power), the theoretical power curve of a commercial wind turbine was incorporated, which relates wind speed to the wind turbine power output. The wind power curve was obtained from the turbine’s specifications, indicating the anticipated power output at different wind speeds. The wind turbine used was a Vestas V60 (2000 kW), characterized by a cut-in wind speed of 3 m/s and a cut-out wind speed of 24 m/s, with its characteristics used in both training and testing datasets.
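As a rough illustration of how a power curve maps wind speed to power, the sketch below uses the cut-in speed (3 m/s), cut-out speed (24 m/s), and rated power (2000 kW) stated above. The manufacturer's curve is not reproduced in the text, so the cubic ramp and the 13 m/s rated wind speed are purely illustrative assumptions, and the function name is hypothetical.

```python
def theoretical_power_kw(v, cut_in=3.0, rated_speed=13.0, cut_out=24.0, rated_kw=2000.0):
    """Idealized power curve; rated_speed and the cubic ramp are assumptions."""
    if v < cut_in or v > cut_out:
        return 0.0          # turbine is idle below cut-in and shut down above cut-out
    if v >= rated_speed:
        return rated_kw     # output is capped at rated power
    # Assumed cubic interpolation between cut-in and rated wind speed
    frac = (v ** 3 - cut_in ** 3) / (rated_speed ** 3 - cut_in ** 3)
    return rated_kw * frac

# Made-up hourly wind speeds (m/s), for illustration only
sample_speeds = [2.0, 5.0, 9.0, 15.0, 26.0]
sample_power = [theoretical_power_kw(v) for v in sample_speeds]
```

In practice, the tabulated manufacturer curve would be interpolated instead of the assumed cubic ramp.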

At this point, it should be noted that the given wind turbine does not necessarily stand as a best-fit solution across all areas examined and that, amongst them, both very low- and very high-quality wind potential cases are present, thus challenging model performance in a broad space of investigation (see also the values of mean annual wind speed Vm and the relevant Weibull parameters, k and C, for each of the areas in Figure 1, and the corresponding Weibull curves in Figure 2).

2.2. Wavelet Transform and Methodological Framework Overview

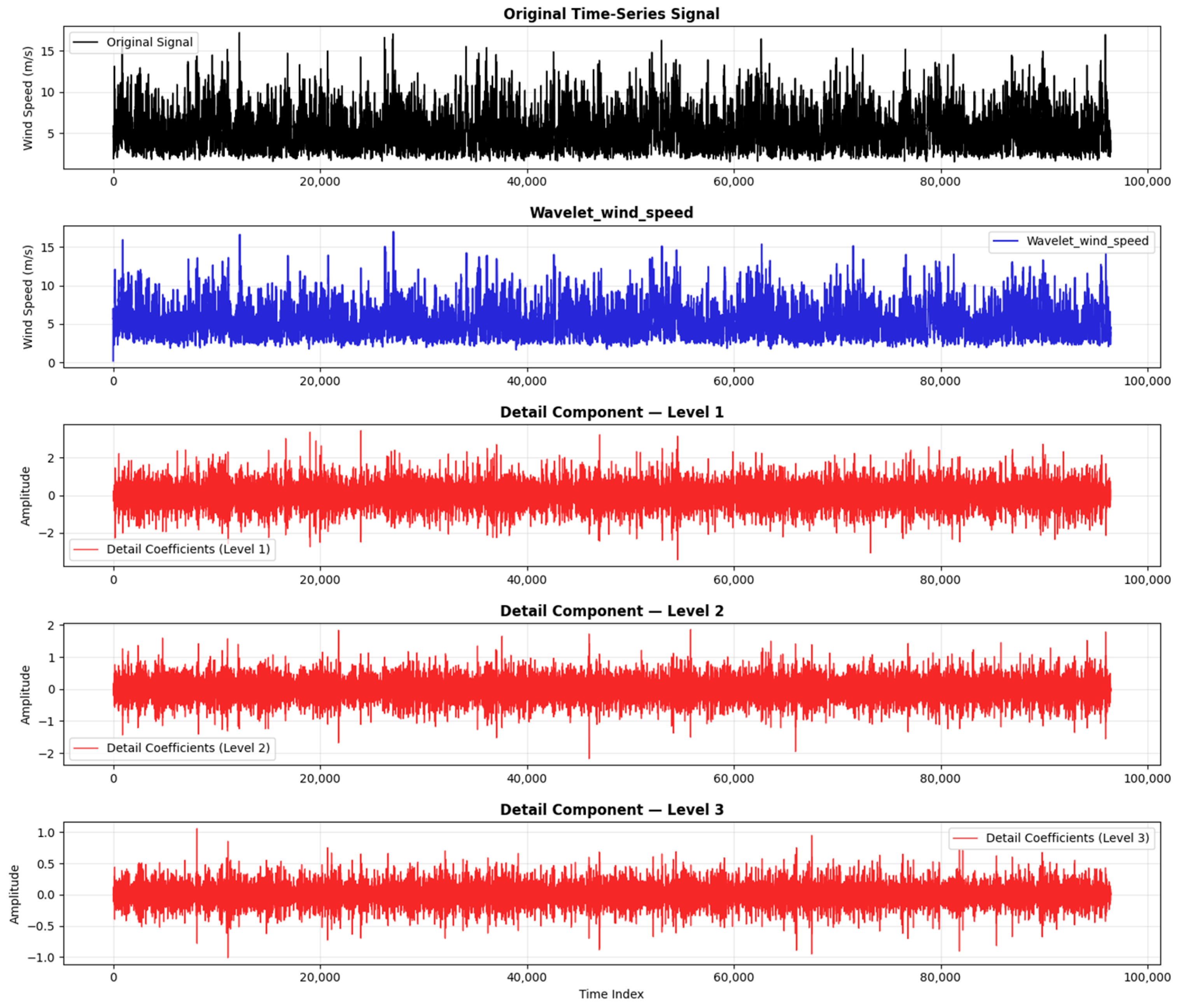

In time-series data analysis, accounting for inherent noise that can obscure underlying patterns is crucial. For a non-stationary signal like wind speed, which exhibits significant variability due to atmospheric flow dynamics, a signal processing technique that effectively captures local spectral characteristics of time-varying signals is essential.

Wavelet transform (WT) has emerged as a powerful hybridization approach when combined with various machine learning and deep learning models. It decomposes a complex signal into smaller components across different frequency–time scales, allowing for the extraction of features with varying resolutions, especially in the temporal domain. These derived signals subsequently serve as inputs for various prediction models [20], making WT suitable for forecasting in dynamic environments like wind power generation [21].

The fundamental concept of WT is to decompose a signal into different levels of resolution, known as multiresolution analysis. This decomposition allows for the extraction of both low-frequency trends and high-frequency transient information, which can prove significant in forecasting. Mathematically, the most general form of wavelet analysis is given by the continuous wavelet transform (CWT). For a signal x(t), the continuous wavelet transform is as follows:

$$\mathrm{CWT}_x(a,b)=\int_{-\infty}^{+\infty} x(t)\,\psi^{*}_{a,b}(t)\,dt,\qquad \psi_{a,b}(t)=\frac{1}{\sqrt{|a|}}\,\psi\!\left(\frac{t-b}{a}\right)$$

where

ψ*a,b is the wavelet function scaled by a (scale factor) and translated by b (time shift);

a controls frequency resolution, with higher values corresponding to lower frequencies;

b determines the time localization of the wavelet;

* denotes the complex conjugate.

While CWT provides a rich representation of the signal, it is computationally intensive and results in a highly redundant representation. Therefore, for practical applications, a discretized version of the transform, namely the discrete wavelet transform (DWT), is often used [22]. DWT preserves the main idea of multi-resolution decomposition, but achieves it through dyadic scaling and translation, making it computationally efficient. DWT decomposes a signal into approximation coefficients and detail coefficients, using a series of filtering operations, and comprises the technique used in the current research (see also Figure 4) as follows:

where

In more detail, DWT was applied across all non-time-based features (hub-height wind speed, air temperature, air density, and theoretical wind power), which were then fed into the CNN-LSTM. This is better illustrated in the data of

Table 3, also contextualized in the overall methodological framework currently applied, as presented in

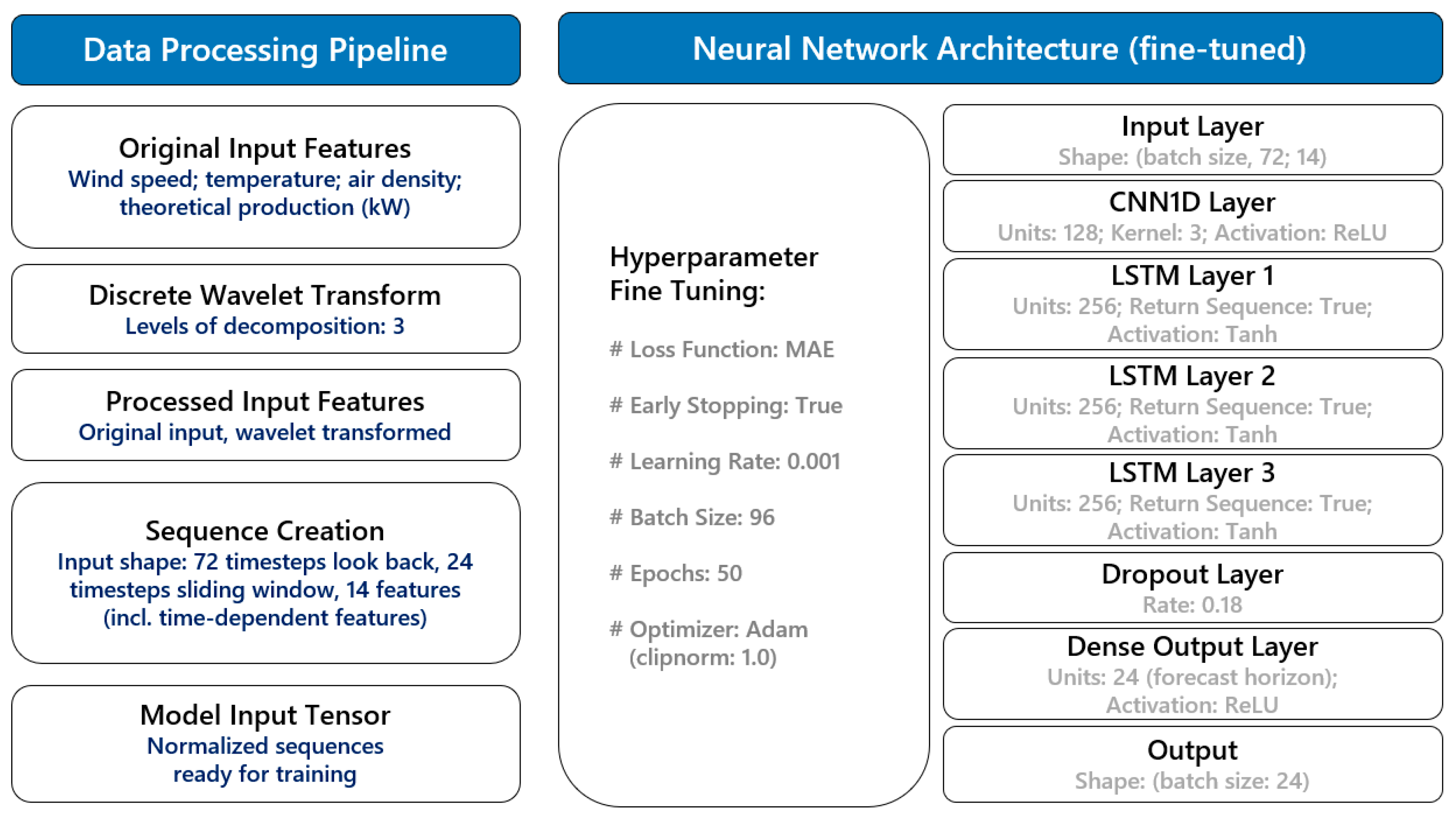

Figure 5.

The block diagram given in Figure 5 details the components of the data processing pipeline, together with the architecture of the forecasting model developed, aspects of which are further analyzed in the following sub-sections of the paper, i.e., Section 2.3, Section 2.4, Section 2.5 and Section 2.6.
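To make the DWT step of this section more concrete, the following sketch decomposes a short signal into approximation and detail coefficients through pairwise filtering and downsampling. The wavelet family and decomposition level used in the study are not stated in this section, so the Haar wavelet is assumed here purely for simplicity; the function names and the sample values are hypothetical.

```python
import math

def haar_dwt(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt: rebuilds the original samples."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / s, (a - d) / s])
    return out

def haar_wavedec(signal, levels):
    """Multilevel decomposition, returned as [cA_n, cD_n, ..., cD_1]."""
    coeffs = []
    current = list(signal)
    for _ in range(levels):
        current, detail = haar_dwt(current)
        coeffs.insert(0, detail)
    coeffs.insert(0, current)
    return coeffs

wind_speed = [5.1, 5.3, 6.0, 6.4, 7.2, 6.8, 6.1, 5.9]  # made-up hourly values (m/s)
cA, cD = haar_dwt(wind_speed)            # low-frequency trend, high-frequency detail
reconstructed = haar_idwt(cA, cD)        # matches wind_speed up to rounding
two_level = haar_wavedec(wind_speed, 2)  # dyadic scaling: lengths 2, 2, 4
```

Each level halves the number of coefficients, which is the dyadic scaling referred to above. A dedicated wavelet library would normally be used instead of hand-written filters.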

2.3. Sliding Window and Look-Back Period

The sliding window technique is a widely used method in time-series forecasting. It is commonly applied in recursive forecasting models, where future values are predicted based on past data. In the context of wind power forecasting, the sliding window approach uses a fixed number of past data points as input features for predicting the next value in the series. Wind power is directly influenced by wind speed patterns that span multiple hours, and a sliding window allows the model to consider these patterns explicitly. The look-back period is a critical parameter that can be tuned to the specific characteristics of the wind turbine and local weather conditions: a longer window may help capture seasonal trends or longer cycles, while a shorter window is better suited to short-term variability. By providing a fixed-size window of past data, the model can learn the patterns and trends needed to make accurate forecasts.

In this research, direct forecasting was applied, with a look-back period of 72 h and a sliding window of 24 h. The direct forecasting approach involves training a model specifically for a given forecasting horizon, unlike the recursive approach, where predictions are used as inputs for subsequent predictions. This means that a dedicated model is trained for each prediction step, such as forecasting wind power output for hour t + 1 h, t + 2 h, and so on, without relying on intermediate predictions.
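The windowing described above can be sketched as follows. The 72 h look-back comes from the text; reading the 24 h sliding window as both the forecast horizon and the step between consecutive windows is an assumption, and `make_windows` is a hypothetical helper, not the study's code.

```python
def make_windows(series, look_back=72, horizon=24, stride=24):
    """Split a series into (input, target) pairs for direct multi-step forecasting.

    Each input holds `look_back` past values and each target holds the next
    `horizon` values; consecutive windows start `stride` steps apart.
    """
    inputs, targets = [], []
    last_start = len(series) - look_back - horizon
    for start in range(0, last_start + 1, stride):
        split = start + look_back
        inputs.append(series[start:split])
        targets.append(series[split:split + horizon])
    return inputs, targets

hourly = list(range(240))   # placeholder for 10 days of hourly values
X, y = make_windows(hourly)
```

With direct forecasting, each of the 24 target positions is predicted from the same 72 h input, so no predicted value is fed back into the model.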

2.4. Hyperparameter Tuning

In developing deep neural networks, determining the optimal combination of hyperparameters is a crucial step that directly affects the model’s performance. Hyperparameters are configuration variables set before the learning process begins, and they govern various aspects of model training, including network architecture, learning rates, and the size of mini-batches. They significantly influence both the convergence of the training process and the model’s generalization ability on unseen data. Hyperparameter tuning is the process of finding the best hyperparameter values for a model.

Some common hyperparameters include the number of neurons, which controls each layer’s capacity to represent complex patterns. Too few neurons may result in underfitting, while too many can lead to overfitting. Another hyperparameter is the batch size, which is the number of samples the model processes before updating the weights. A smaller batch size leads to more frequent updates, while a larger one provides smoother gradient estimates at the cost of fewer updates per epoch. Learning rate is a critical hyperparameter that controls how much the model’s parameters are adjusted with each update. A learning rate that is too high can cause the model to converge too quickly to a suboptimal solution or to diverge, while a learning rate that is too low can slow down the training process. Lastly, the dropout rate is a regularization hyperparameter that prevents overfitting by randomly turning off a proportion of neurons during training.

In the search for the best combination of these hyperparameters, various techniques can be employed, such as grid search, random search, and more sophisticated methods, like Bayesian optimization or Hyperband. In this study, an Optuna search was applied to automate the process of finding the best configuration based on predefined metrics, resulting in the final models for this research. The set of parameters eventually adopted corresponded to 128 neurons for the input CNN layer and 256 neurons for each of the hidden LSTM layers, with a learning rate of 0.001 and a dropout rate of 0.18.
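The idea of an automated search can be sketched with plain random search, one of the simpler techniques listed above. This is a stand-in and not the Optuna procedure used in the study: the search ranges, the helper names, and the toy objective are all assumptions, and a real run would train the network and return its validation error in place of `toy_validation_loss`.

```python
import math
import random

# Hypothetical search space; the ranges actually explored in the study are not given
SEARCH_SPACE = {
    "cnn_units": [32, 64, 128, 256],
    "lstm_units": [64, 128, 256, 512],
    "dropout": (0.0, 0.5),
    "learning_rate": (1e-4, 1e-2),   # sampled on a log scale
}

def sample_config(rng):
    """Draw one hyperparameter configuration at random."""
    lo, hi = SEARCH_SPACE["learning_rate"]
    return {
        "cnn_units": rng.choice(SEARCH_SPACE["cnn_units"]),
        "lstm_units": rng.choice(SEARCH_SPACE["lstm_units"]),
        "dropout": rng.uniform(*SEARCH_SPACE["dropout"]),
        "learning_rate": 10 ** rng.uniform(math.log10(lo), math.log10(hi)),
    }

def toy_validation_loss(config):
    """Placeholder objective standing in for a full training and validation run."""
    return (math.log10(config["learning_rate"]) + 3.0) ** 2 + (config["dropout"] - 0.2) ** 2

def random_search(objective, n_trials, seed=0):
    """Evaluate n_trials random configurations and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        loss = objective(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

best_config, best_loss = random_search(toy_validation_loss, n_trials=50)
```

Frameworks such as Optuna follow the same evaluate-and-compare loop but choose the next configuration adaptively instead of uniformly at random.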

2.5. Optimizers

Optimizers are algorithms or methods that adjust the weights of the neural network to minimize the loss function during training. The choice of optimizer has a direct impact on how quickly the model converges and how well it generalizes to new data. The target of the optimizer is to find the global or near-global minimum. Several optimizers are commonly used in deep learning, each with its advantages and disadvantages. The optimizers that were tested in the context of this study are the following:

Stochastic Gradient Descent (SGD): One of the most basic optimizers, SGD updates the model’s weights by calculating the gradient of the loss function with respect to the parameters using only a small batch of data. Although SGD is often seen as a simple and effective optimizer, it can prove slow in converging and may also become stuck in local minima.

Adam (Adaptive Moment Estimation): A more advanced optimizer that combines momentum with per-parameter adaptive learning rates. Adam adjusts the effective learning rate dynamically by keeping running averages of past gradients and of their squares, which typically leads to faster convergence. It is widely used because of its ability to handle sparse gradients and its robustness across different tasks.

RMSprop (Root Mean Square Propagation): RMSprop also adapts the learning rate based on the magnitude of recent gradients, making it suitable for problems in which the gradients vary widely across parameters.

Nadam (Nesterov-accelerated Adaptive Moment Estimation): An extension of Adam, Nadam incorporates Nesterov momentum, which helps improve the optimizer’s convergence speed by looking ahead at the gradient direction. The given optimizer combines the benefits of adaptive learning rates from Adam and the acceleration from Nesterov momentum, making it effective in handling noisy gradients and offering faster convergence.

Choosing the right optimizer is essential because it affects the model’s ability to converge efficiently. A poor choice may result in slow training, oscillating gradients, or even divergence. Finding the best-working optimizer for a specific task requires trial and error, which is why automated mechanisms are pivotal for identifying the best-suited optimizer and, by extension, the best-fitting hyperparameters. In this context, the optimizer eventually adopted in this study was Adam.
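To illustrate how the update rules of two of the optimizers listed above differ, the sketch below applies plain gradient descent and Adam to a one-dimensional quadratic loss. With a deterministic loss there is no mini-batch sampling, so `sgd_step` shows only the update rule of SGD. The loss function, learning rate, and step count are illustrative assumptions, while the Adam defaults (β1 = 0.9, β2 = 0.999, ε = 1e-8) are the commonly used ones.

```python
import math

def sgd_step(w, grad, lr=0.1):
    """Plain gradient step: move against the gradient."""
    return w - lr * grad

def adam_step(w, grad, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update using bias-corrected running averages of grad and grad^2."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)

def grad_fn(w):
    """Gradient of the toy loss (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)

w_sgd, w_adam = 0.0, 0.0
adam_state = {"m": 0.0, "v": 0.0, "t": 0}
for _ in range(200):
    w_sgd = sgd_step(w_sgd, grad_fn(w_sgd))
    w_adam = adam_step(w_adam, grad_fn(w_adam), adam_state)
```

Note that the first Adam step has magnitude close to the learning rate regardless of the gradient's scale, which is the per-parameter normalization at work.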

2.6. Activation Functions

Activation functions are crucial components in neural networks, as they define the output of each neuron. The purpose of an activation function is to introduce non-linearity into the network, enabling it to model complex relationships in the data. Without activation functions, the network would behave like a linear model regardless of its depth, limiting its capacity to solve non-linear problems. There are several widely used activation functions, each suited to different types of tasks and architectures. In this study, the following activation functions were used:

ReLU (Rectified Linear Unit): ReLU is perhaps the most commonly used activation function in deep learning models nowadays. It works by outputting the input directly if it is positive; otherwise, it returns zero. This simple non-linearity helps to introduce sparsity into the model, allowing only a fraction of neurons to be active at any given time. This sparsity makes ReLU very computationally efficient, while its non-linearity allows the model to capture complex patterns. ReLU was used in both the CNN and dense output layers of the model of the current study.

Tanh (Hyperbolic Tangent): Tanh squashes input values to a range between −1 and 1 and, being zero-centered, allows for stronger gradient signals than the sigmoid function. Tanh is typically used in cases for which the model needs to output a value that ranges between negative and positive values. However, like the sigmoid function, it is prone to vanishing gradients in deeper networks. Tanh was used in the LSTM layers of the model in the current study.
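The two activation functions can be written out in a few lines. This is a minimal scalar sketch for illustration (deep learning frameworks apply the same functions element-wise to tensors), and the `tanh_grad` helper is added only to show the vanishing-gradient behavior mentioned above.

```python
import math

def relu(x):
    """Pass positive inputs through unchanged; output zero otherwise."""
    return max(0.0, x)

def tanh(x):
    """Squash the input into the open interval (-1, 1)."""
    return math.tanh(x)

def tanh_grad(x):
    """Derivative of tanh; it shrinks towards zero for large |x|."""
    return 1.0 - math.tanh(x) ** 2

inputs = [-5.0, -1.0, 0.0, 1.0, 5.0]
relu_out = [relu(x) for x in inputs]        # negative inputs are zeroed (sparsity)
tanh_out = [tanh(x) for x in inputs]        # outputs saturate near -1 and 1
tanh_slopes = [tanh_grad(x) for x in inputs]  # slopes are tiny at the extremes
```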

2.7. Statistical Evaluation Indices

To evaluate the models’ performance, three key metrics were employed.

Mean absolute error (MAE): This metric calculates the average absolute difference between the predicted and actual values. A lower MAE indicates that the model is making predictions that are close to the actual values on average. MAE is particularly useful for understanding the overall error magnitude and is easy to interpret.

Mean absolute percentage error (MAPE): MAPE is expressed as a percentage, making it easier to interpret across different datasets. It measures the accuracy of the model by quantifying the average percentage difference between predicted and actual values. A lower MAPE indicates better model performance, with smaller deviations from the actual data.

Coefficient of determination (R2): R2 represents the proportion of variance in the dependent variable that is predictable from the independent variables. An R2 equal to 0 indicates that the model explains none of the variability, and an R2 equal to 1 indicates that the model explains all of the variability.
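The three indices can be computed as in the sketch below, which uses their standard textbook definitions. MAPE is undefined where the actual value is zero, which occurs for wind power below the cut-in speed; how the study handled such samples is not stated here, so skipping them is an assumption of this sketch. The sample values are made up.

```python
def mae(actual, predicted):
    """Mean absolute error, in the units of the target variable."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def mape(actual, predicted):
    """Mean absolute percentage error (%); zero-valued actuals are skipped (assumption)."""
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted) if a != 0]
    if not ratios:
        raise ValueError("MAPE is undefined when all actual values are zero")
    return 100.0 * sum(ratios) / len(ratios)

def r2(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_a = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_a) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R2 is undefined for a constant actual series")
    return 1.0 - ss_res / ss_tot

actual_kw = [100.0, 200.0, 400.0]      # made-up values, for illustration only
predicted_kw = [110.0, 190.0, 380.0]
scores = (mae(actual_kw, predicted_kw),
          mape(actual_kw, predicted_kw),
          r2(actual_kw, predicted_kw))
```

Because MAPE divides by the actual value, low-output hours weigh heavily on it, which is worth keeping in mind when comparing sites with different wind potential.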

4. Discussion and Conclusions

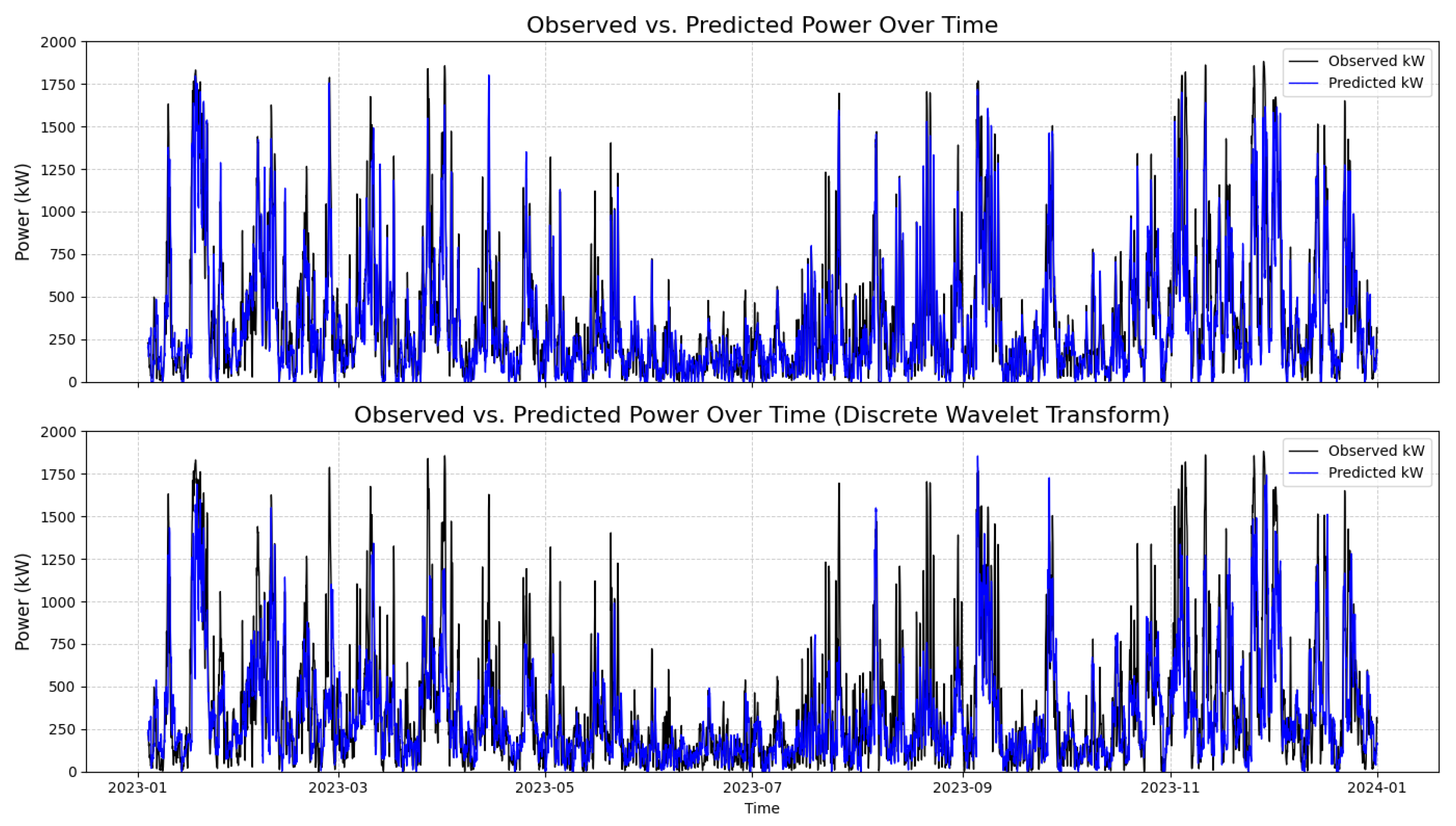

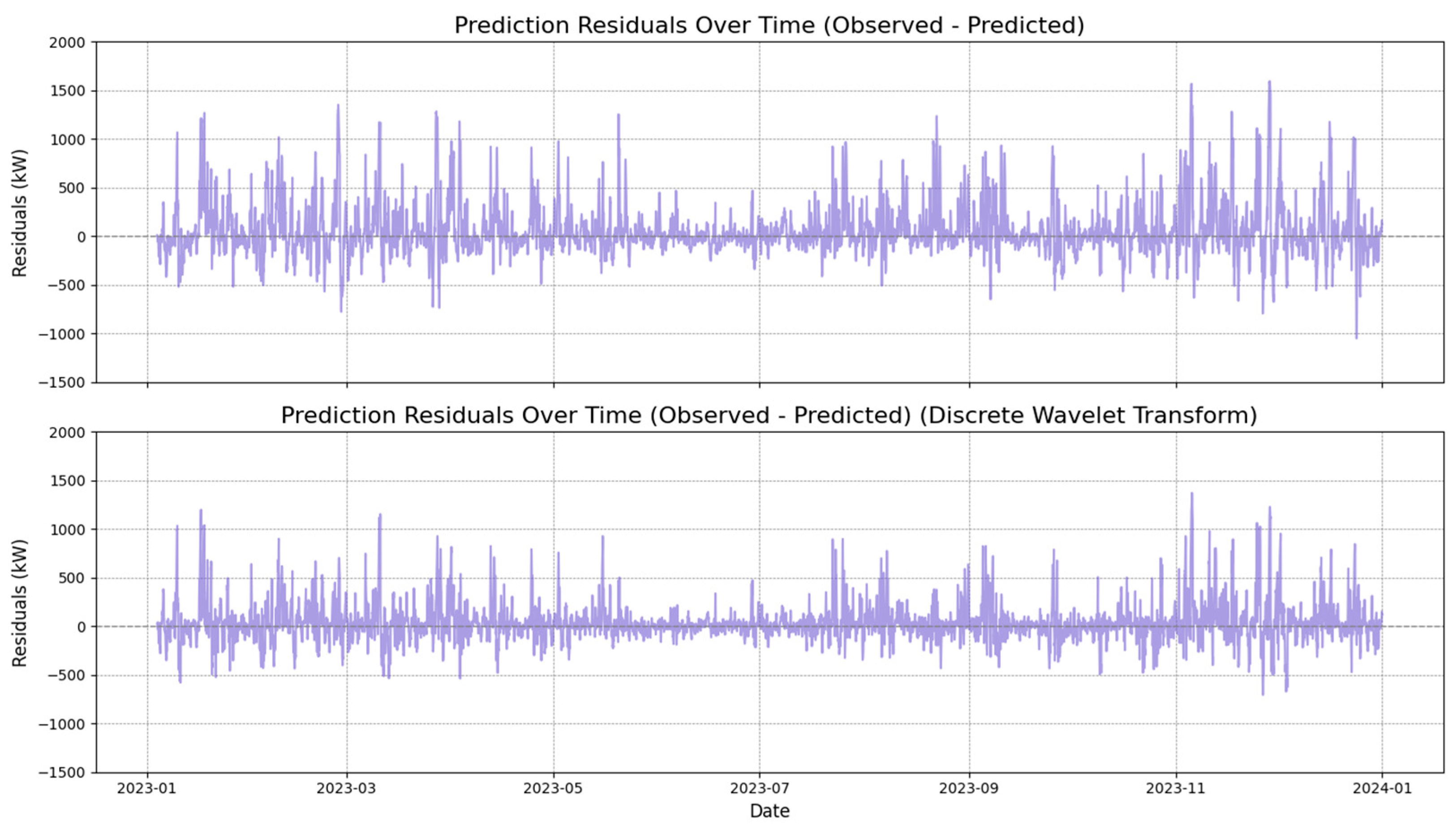

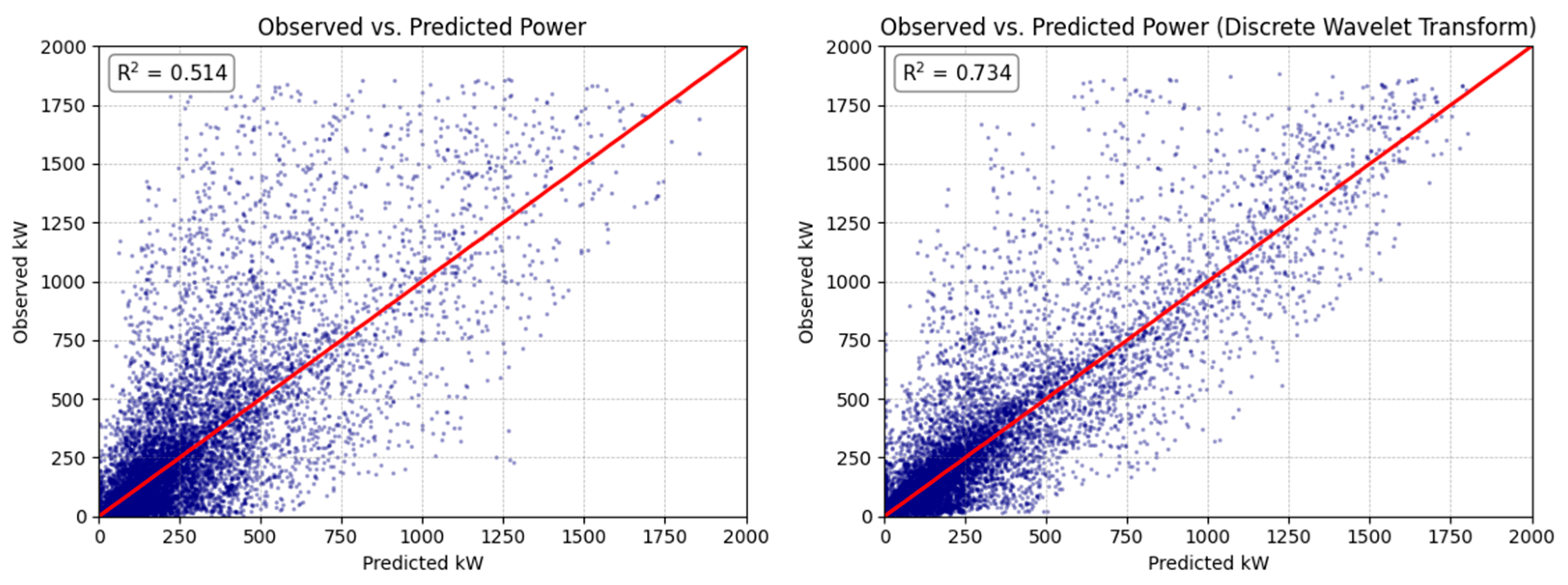

In the current study, we explored different aspects of spatiotemporal forecasting dynamics, mapping the performance of a hybrid DWT-enhanced forecasting model. The model was trained on rich, long-term, historical data of wind power from a given location on the Greek mainland (central Greece), and was then used to test the hypothesis that it can deliver sufficient day-ahead forecasting performance when applied to data-scarce areas across the broader Greek territory.

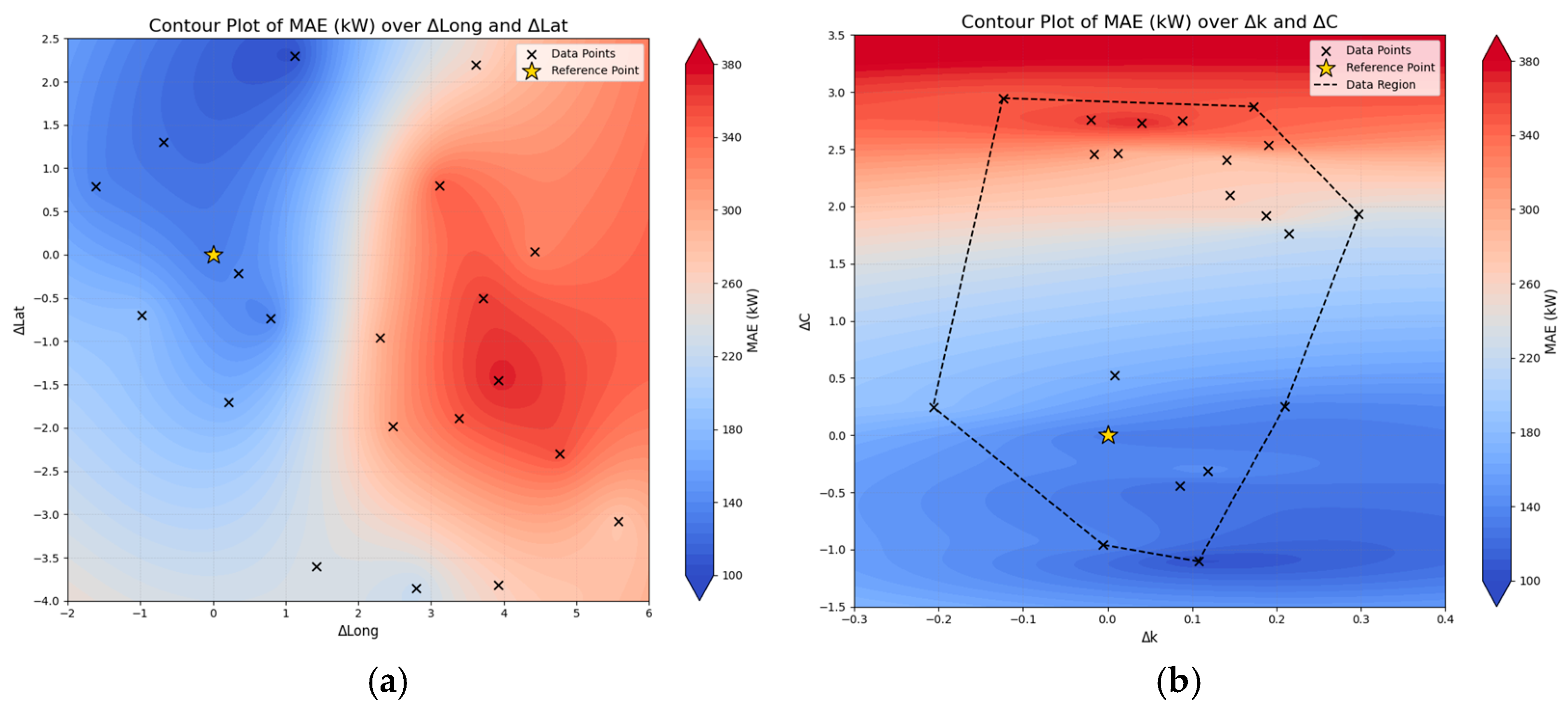

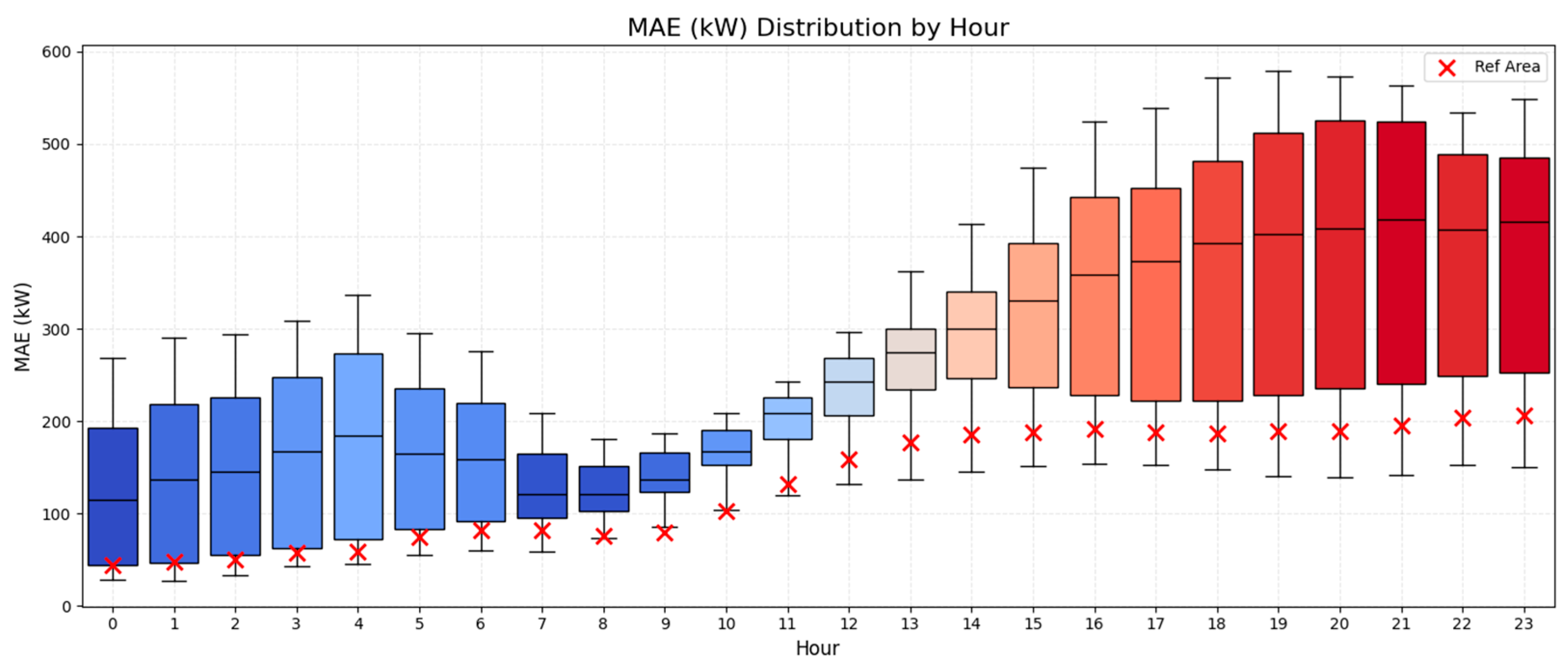

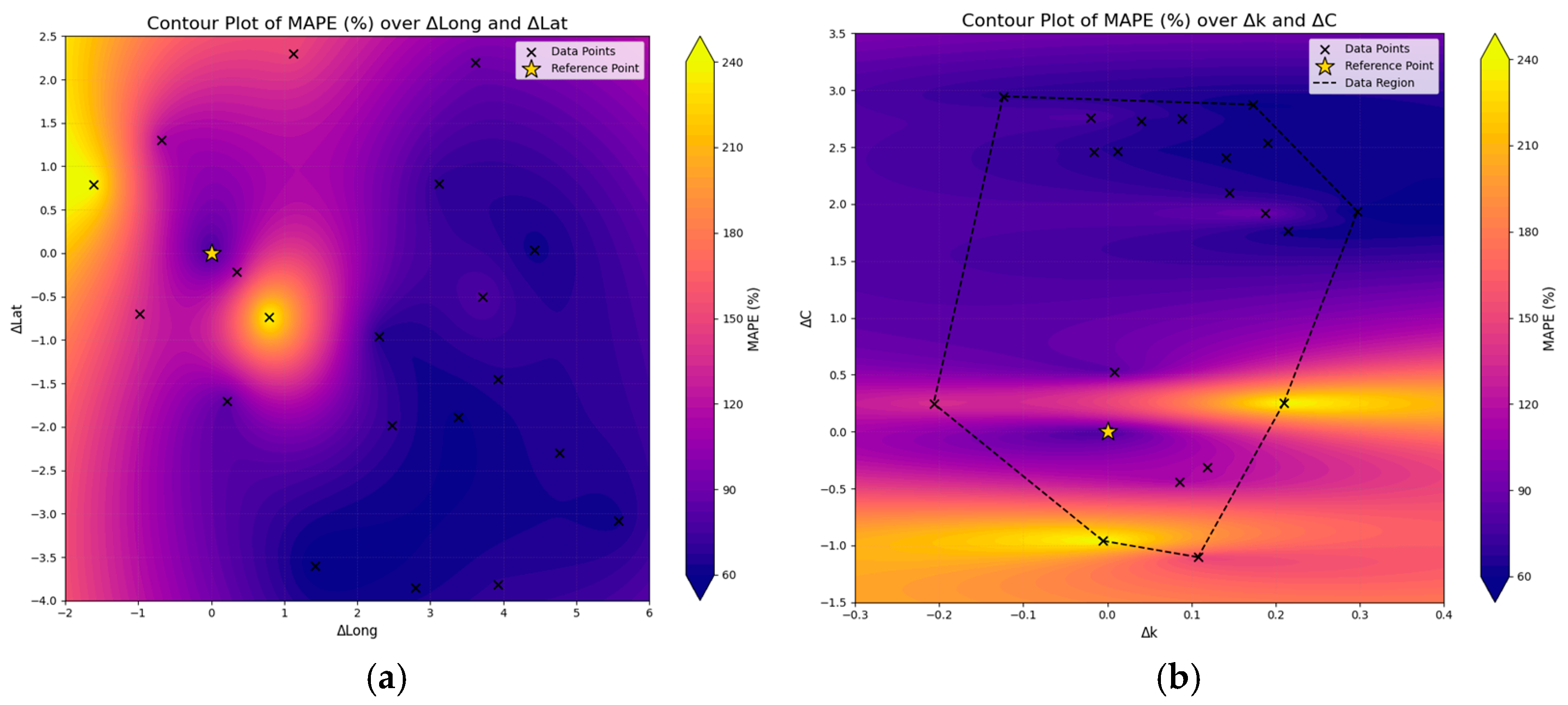

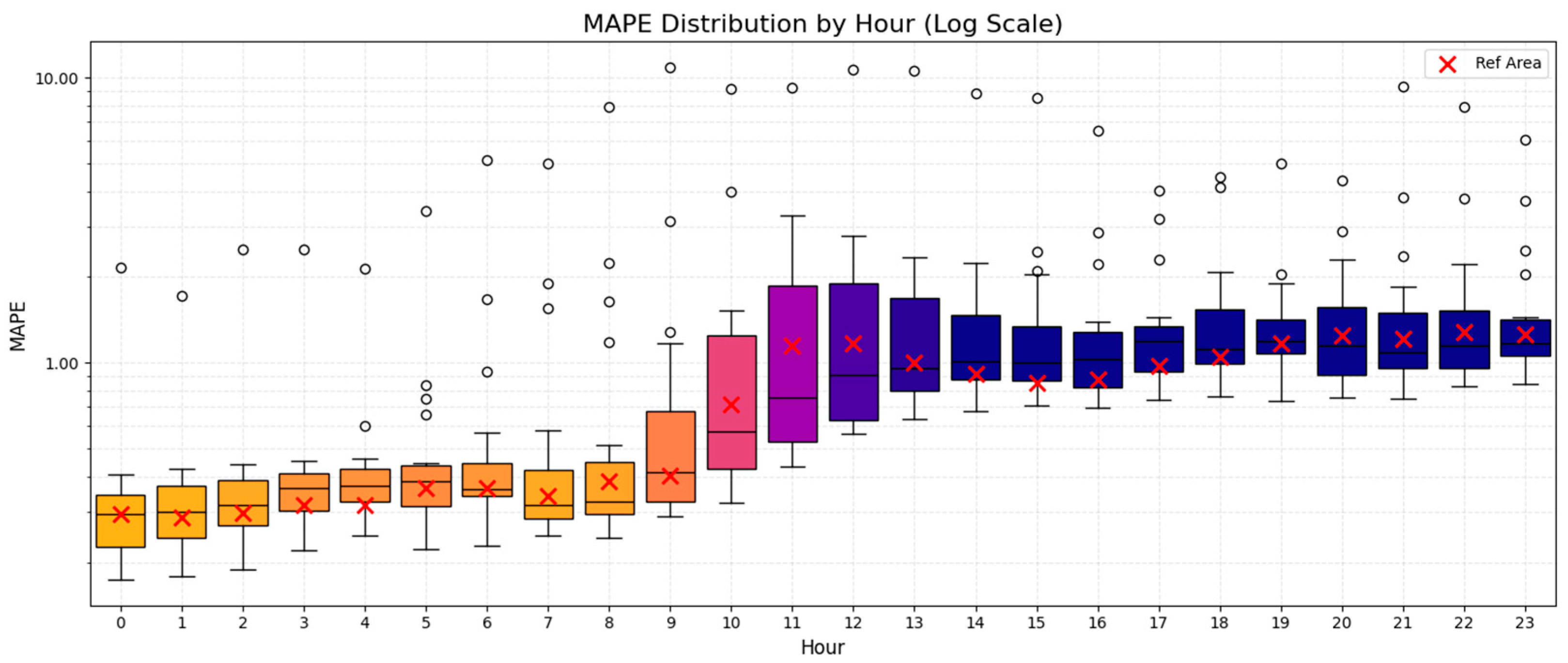

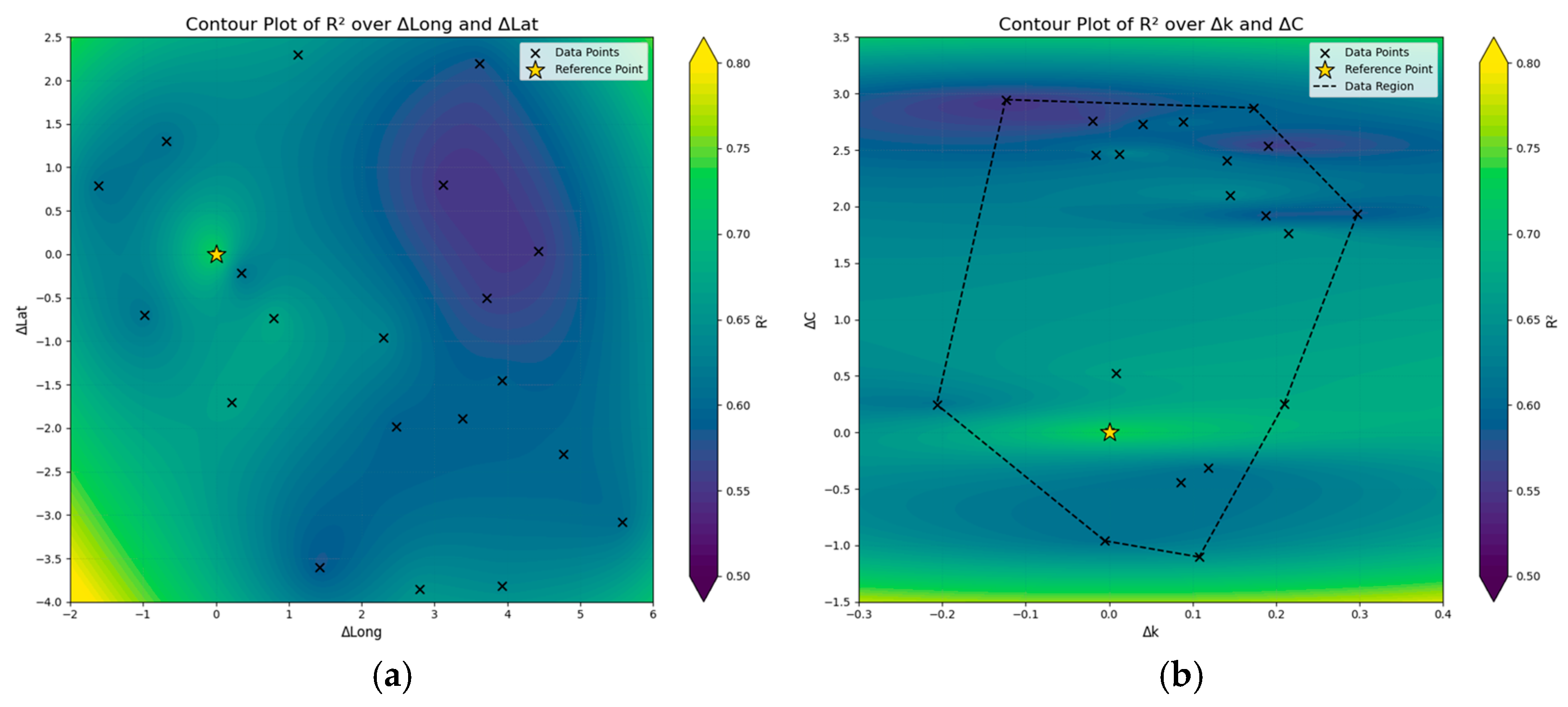

The analysis encompassed three problem dimensions, i.e., the spatial dimension, the temporal dimension, and also a third dimension considering local wind potential characteristics, relying at the same time on three key statistical indices, i.e., MAE, MAPE, and R2. The spatial and wind potential dimensions were approached in the context of an annual evaluation regarding the estimation of relevant indices, while the temporal one provided more detailed insights on the performance of the model, further disaggregating results over the 24-h period of the day-ahead forecasting horizon.

Our analysis revealed two main regions for which the model presented different patterns of performance. The first region corresponds to the Greek mainland, where the area used to train the model is also located, and the second region refers to the Aegean Sea. This geographical distinction also coincides with two regions of different wind potential characteristics. The Aegean Sea enjoys high- and very high-quality wind potential, while the same does not hold for most of the Greek mainland.

In this regard, MAE was considerably higher in the Aegean Sea region, driven by the higher-quality wind potential, while MAPE showed the inverse behavior, demonstrating that the model performs better in higher-quality wind potential areas with regard to the given metric. The coefficient of determination (R2), on the other hand, was consistent with the trends noted in MAE, exhibiting a lower goodness of fit for the Aegean Sea area. While the model presented different patterns of performance, especially between the two main regions identified, its overall performance can be deemed sufficient, showcasing its capacity to capture the dynamics of wind potential variation over the broader geographical area of Greece.

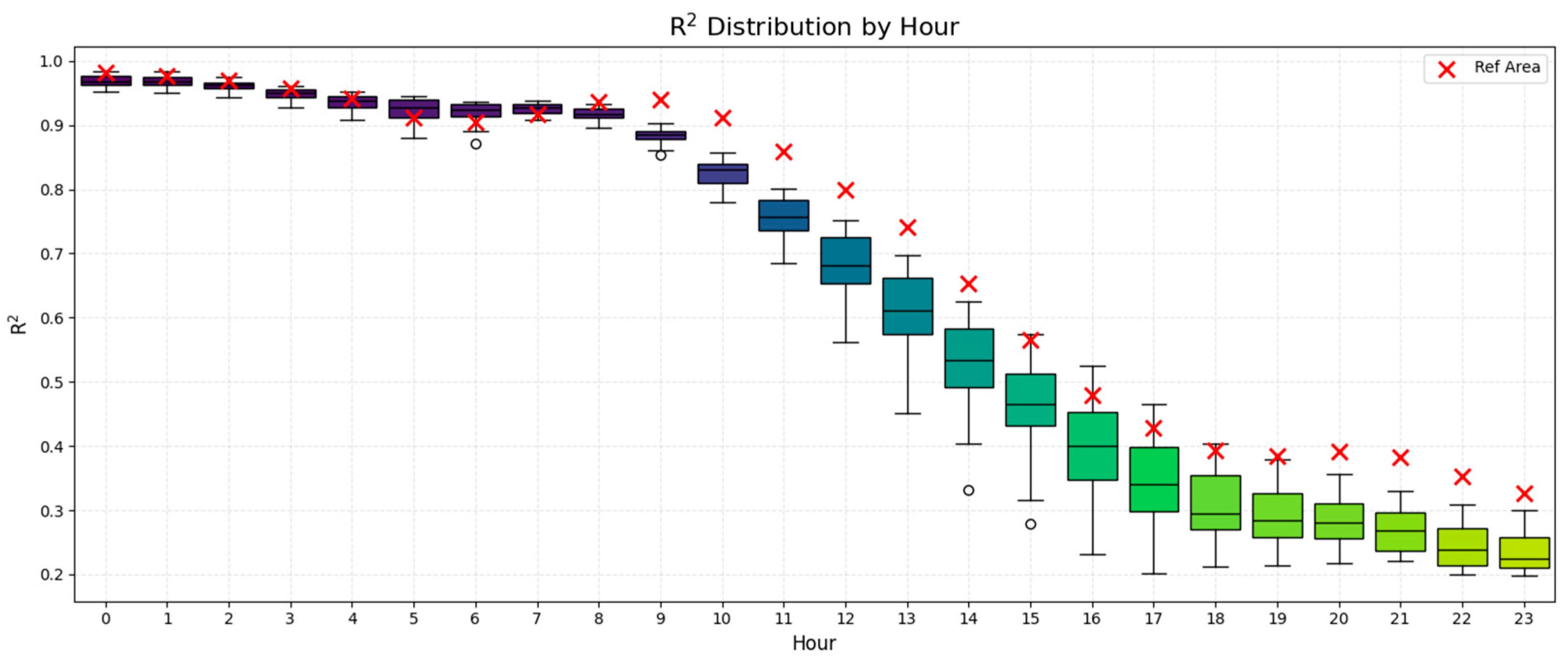

With regard to the temporal dimension of the problem, our analysis revealed different patterns of variation over the 24-h forecasting horizon. MAE and MAPE behaved in a similar fashion, with the relevant values increasing in the course of the day and showing a somewhat stabilizing trend over the last quarter of the day. Uncertainty, understood as the spatially driven variation in indices within the pool of examined areas, was at its minimum for MAE and at its maximum for MAPE around noontime hours. The coefficient of determination, on the other hand, exhibited higher uncertainty during afternoon hours, with a steep decline over the entire 24-h span of the day. At the same time, the model showed high levels of adjustment to the reference area for MAE and R2, whereas MAPE presented better results for a significant portion of the examined areas and time periods over the course of the day.

In accordance with the above, the temporal dimension seems to have the strongest impact on model performance. This becomes more pronounced in the case of R2 and MAE and fades in the case of MAPE. On the other hand, MAE is more sensitive to wind potential characteristics and slightly less influenced by the geographical distribution of examined areas, whereas MAPE is largely affected by Δk and, to a smaller extent, by ΔLong. Finally, R2 is found to vary within a limited range of values in relation to the spatial and wind potential dimensions during the second half of the 24-h horizon. This is also relevant to the overall model performance, since, according to the temporal dimension analysis, the statistical indices deteriorate past the first 12 h of the day. Therefore, and despite being challenged by the presence of regions with highly distinctive wind potential characteristics, it can be argued that, under the assumption of a ~12-h forecasting horizon, the model is able to successfully outweigh the impact of spatial variation at the national level, with only limited distortions across the Greek territory.

In light of this, similar intra-day or shorter-horizon forecasting models offer a prospective solution to the problem of data scarcity in several areas: by leveraging data-rich areas for model training, they may provide firm estimations of the anticipated wind power generation patterns. Furthermore, this also positions our research in the planning domain, adding value to common wind farm design approaches through a deeper analysis that touches upon operational aspects as well.

Additionally, and in the context of further research, the developed methodology can both be evaluated in a broader geographical context and validated on the basis of actual operational data from already existing wind farms. By expanding the problem space of our analysis, new dynamics could emerge, while, by challenging the capacity of the proposed approach to provide sufficient forecasting results for actual wind projects, the commitment of otherwise necessary training resources and the need for big data availability could be effectively addressed. Beyond that, the application of clustering techniques, such as in [25], may lead to a deeper understanding of the model’s performance as far as regional correlation aspects amongst the examined areas are concerned.