Efficient Autonomy: Autonomous Driving of Retrofitted Electric Vehicles via Enhanced Transformer Modeling

Abstract

1. Introduction

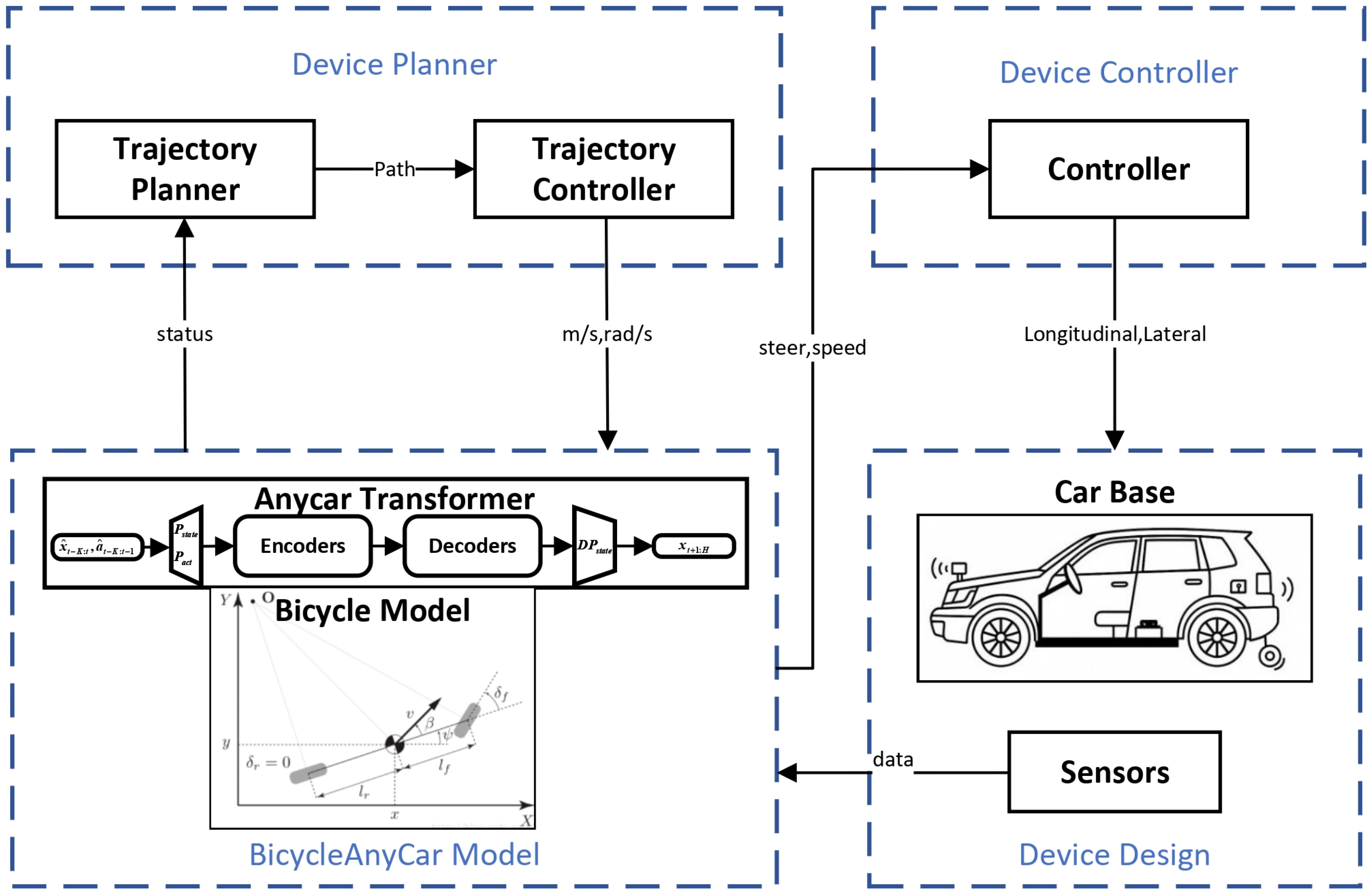

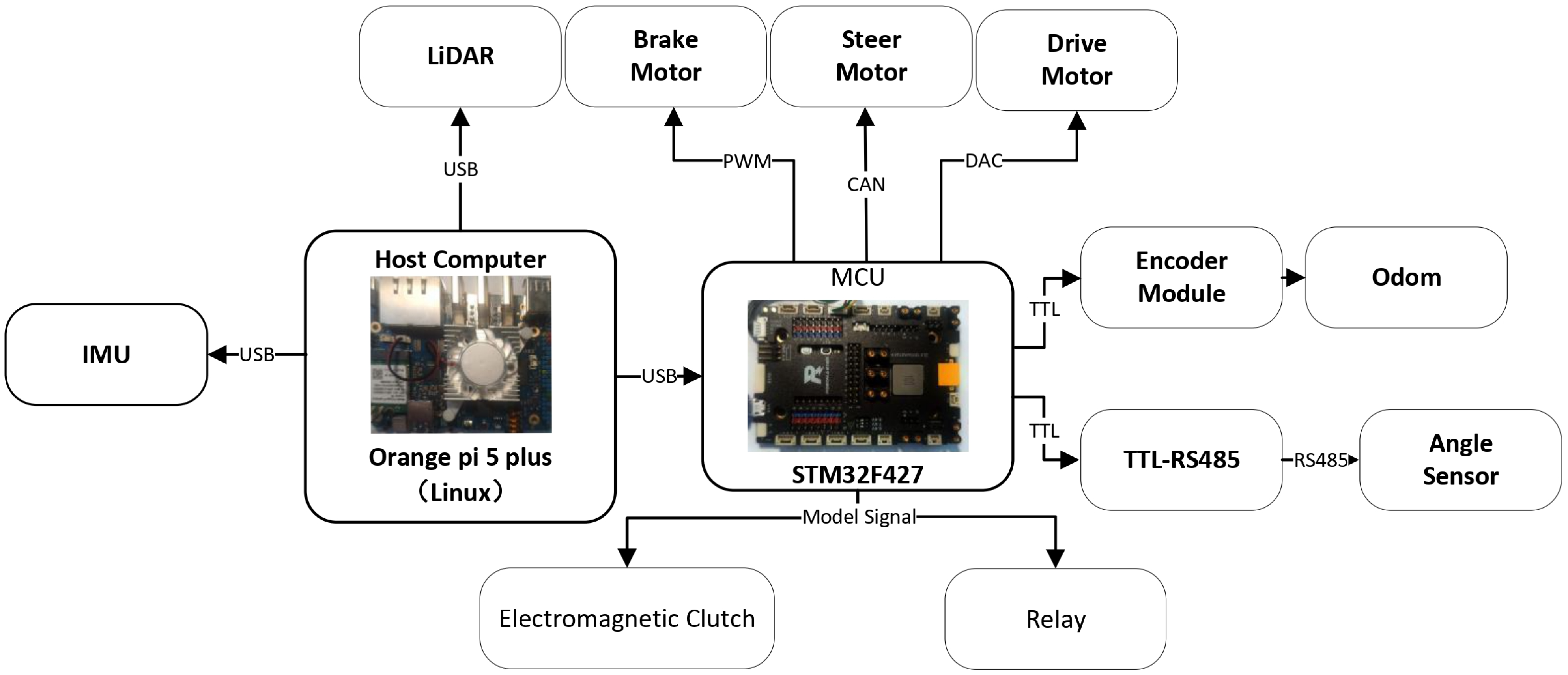

2. Design and Development of an Autonomous Driving System

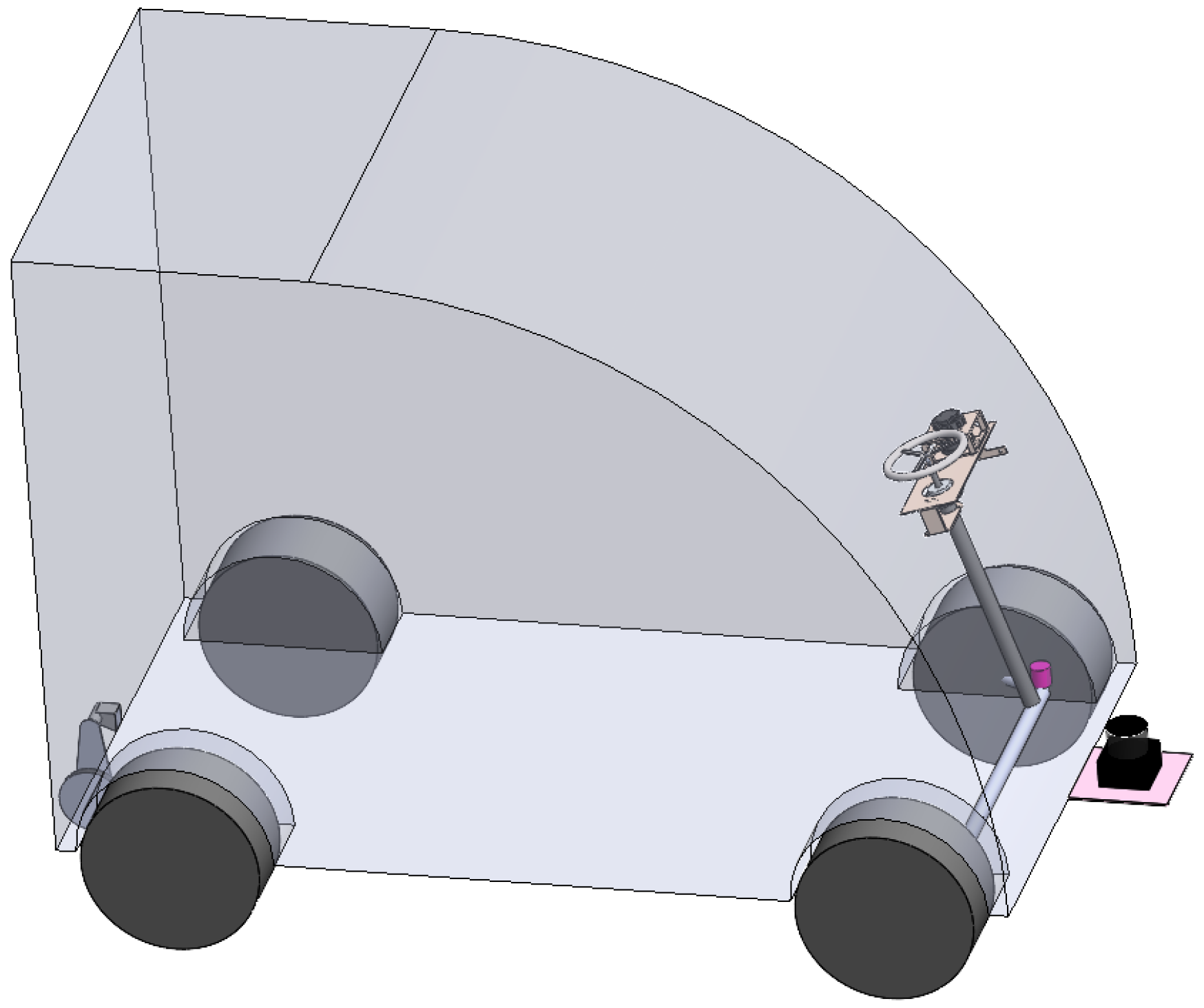

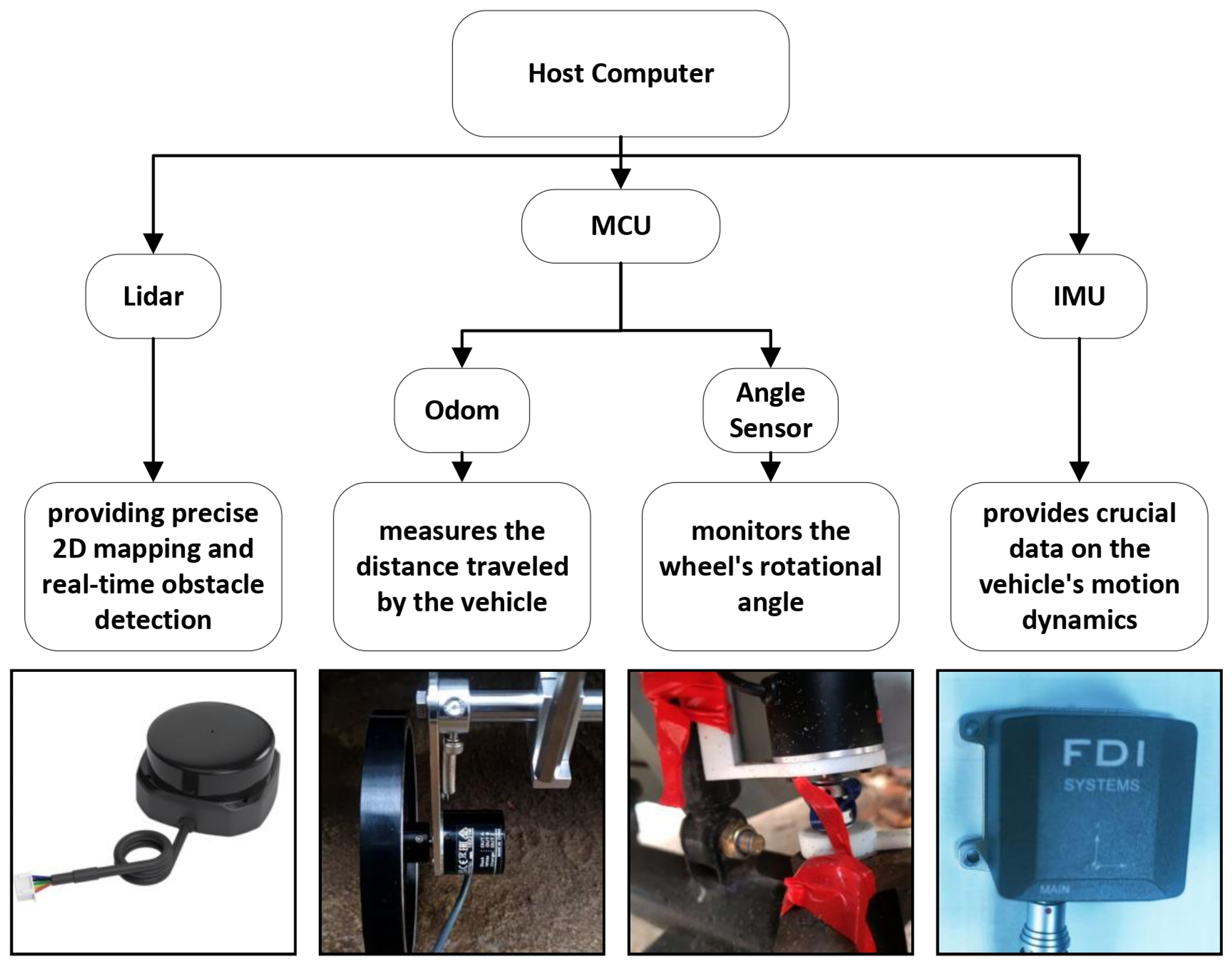

2.1. Device Description

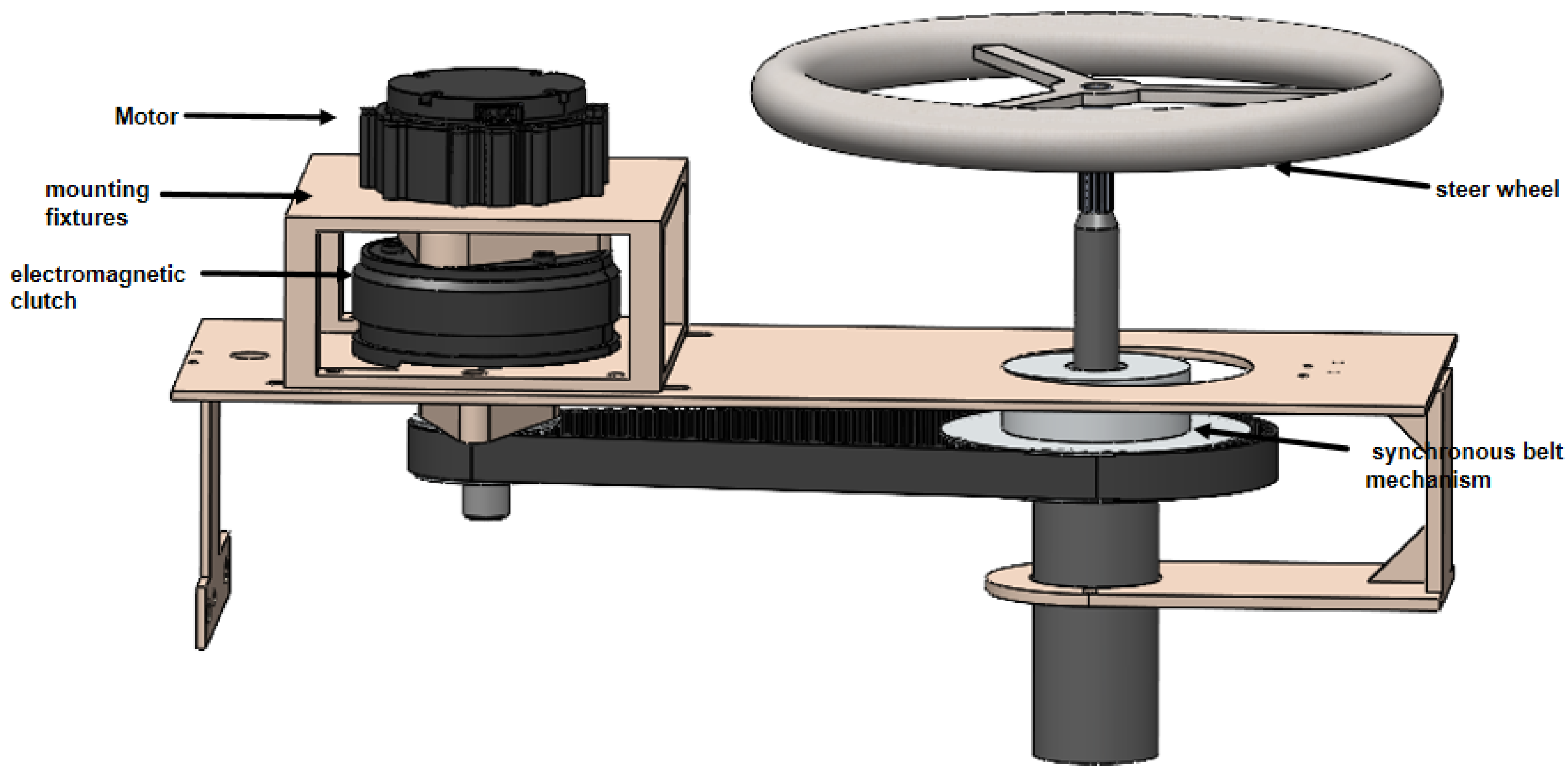

2.1.1. Steering Wheel

2.1.2. Brake

2.1.3. Accelerator

2.1.4. Gear Shift

2.1.5. Sensors

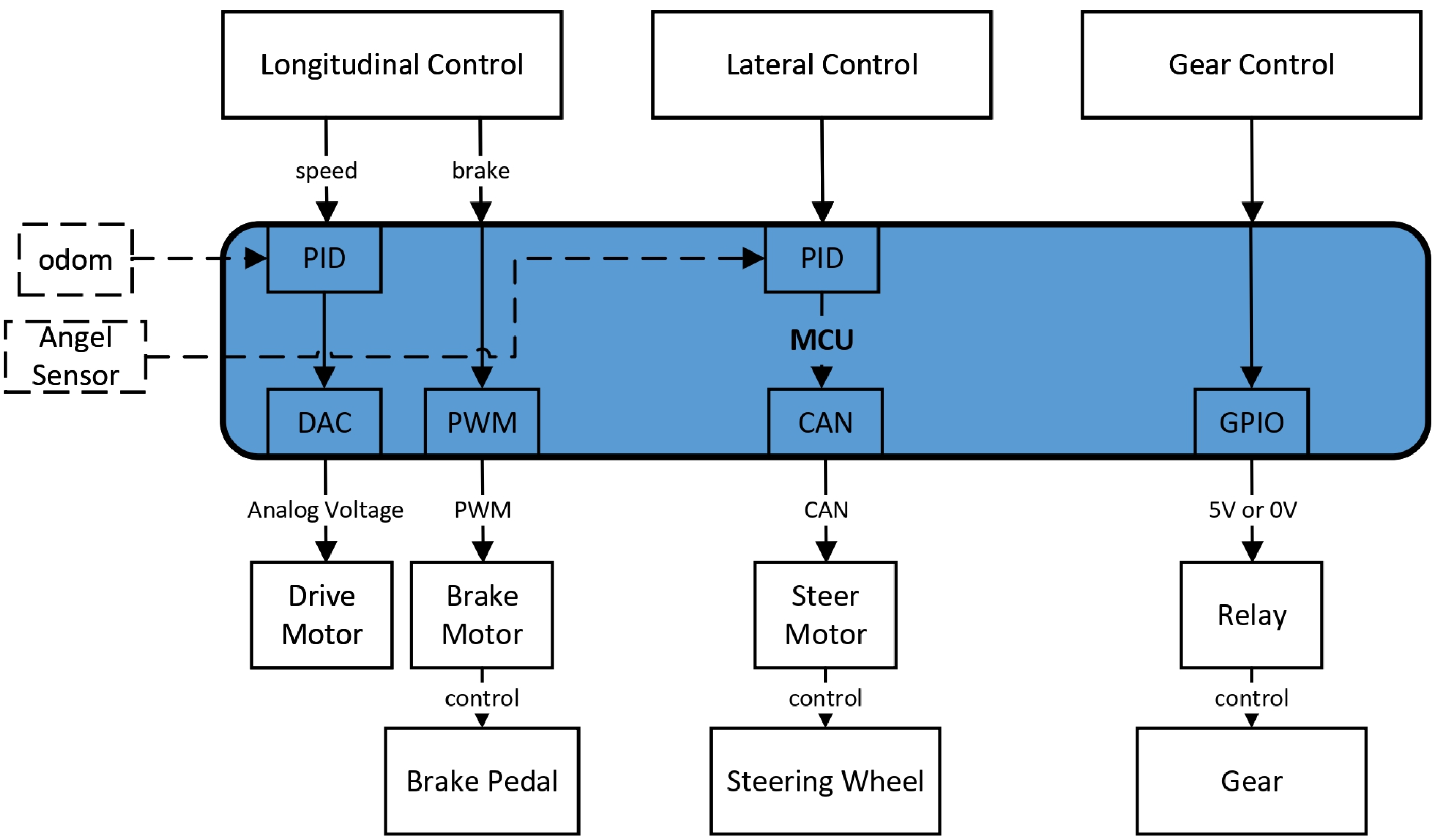

2.2. Device Controller

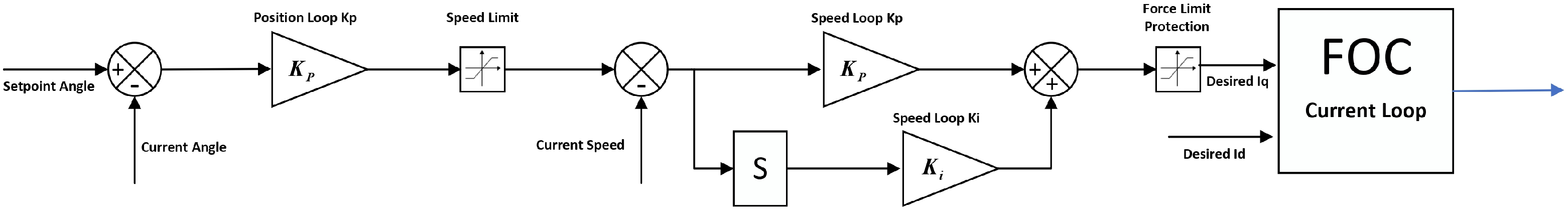

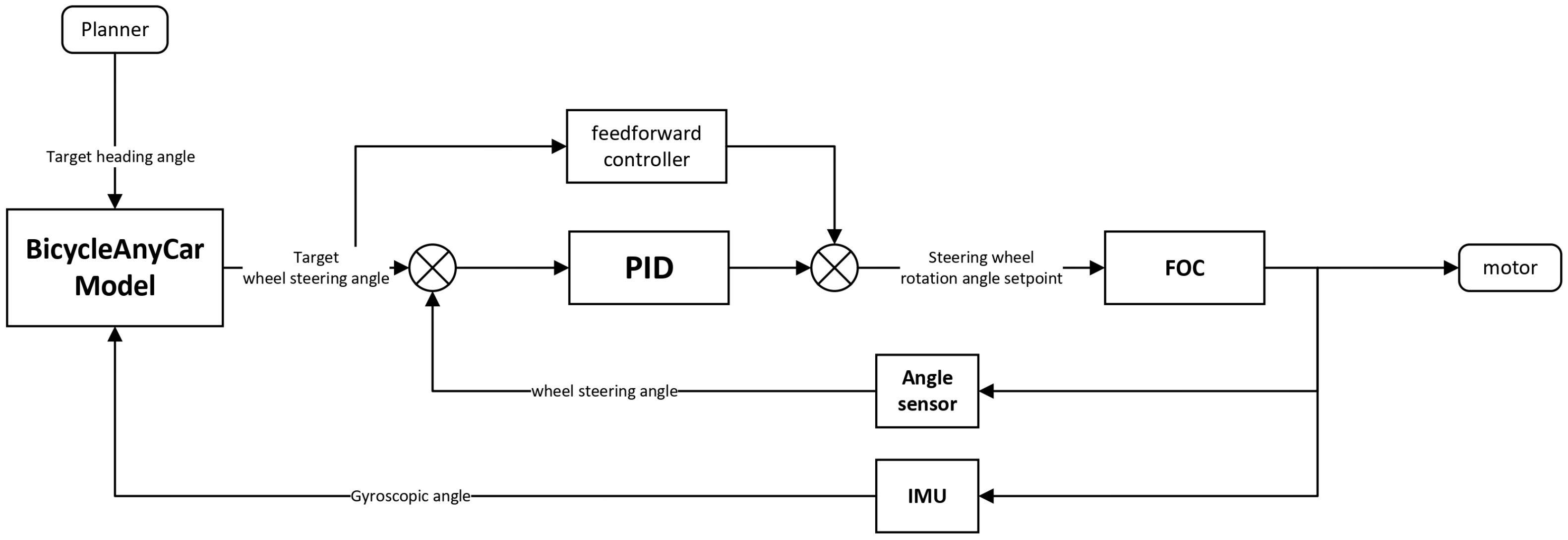

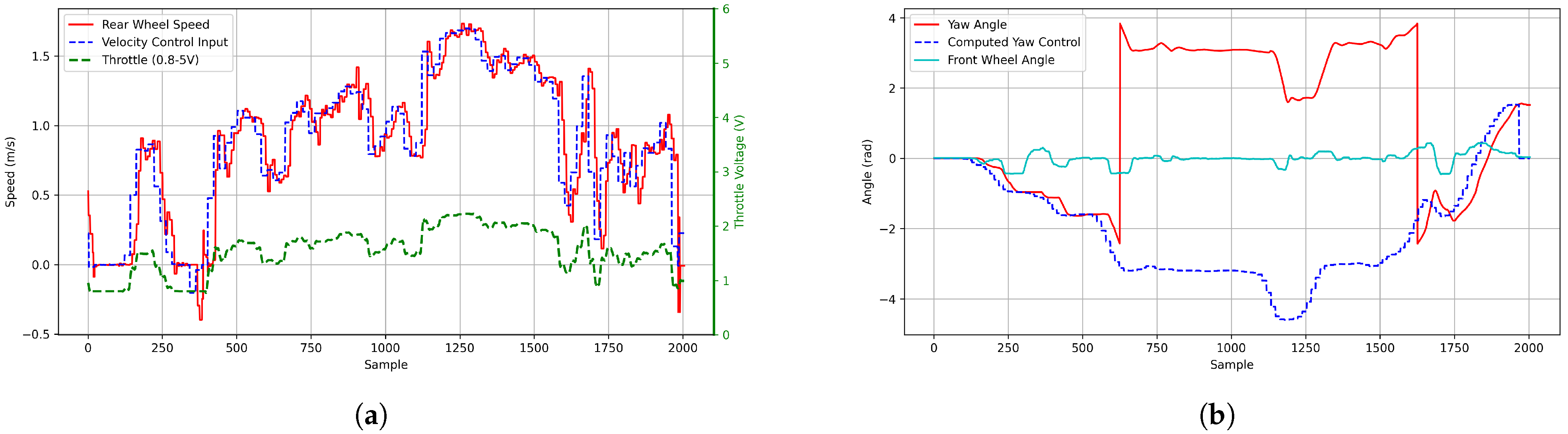

2.2.1. Lateral Control

2.2.2. Longitudinal Control

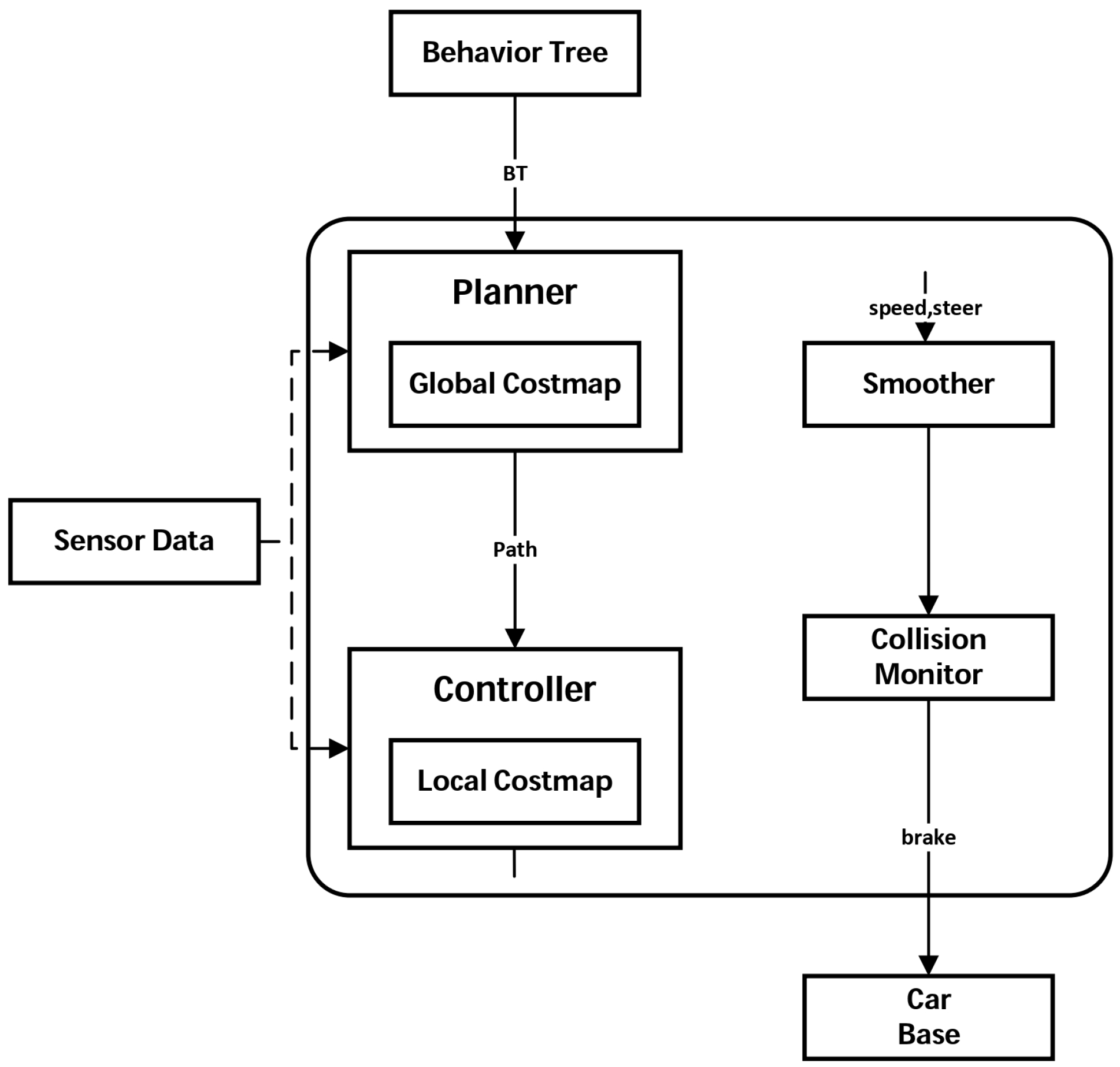

2.3. Device Planner

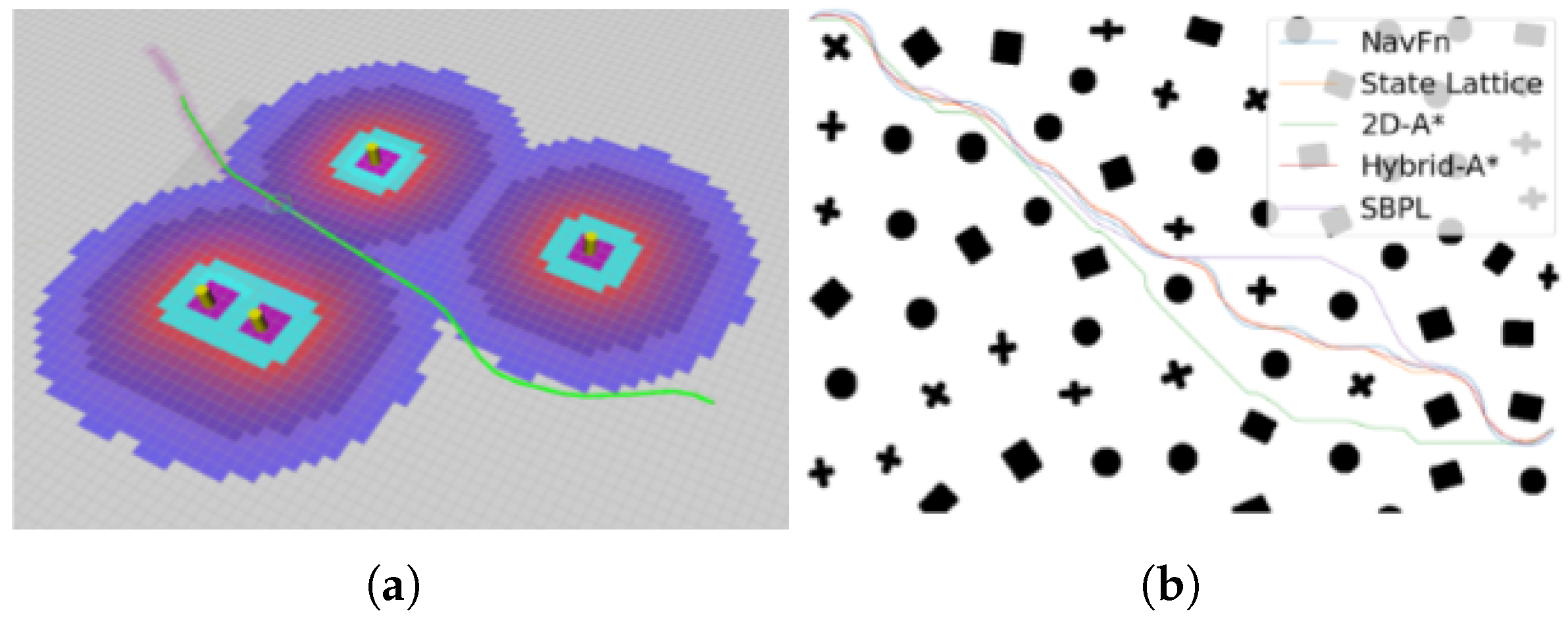

2.3.1. Trajectory Plan

- The first heuristic, Constrained Heuristics, accounts only for the vehicle’s non-holonomic constraints and ignores obstacles and other environmental information. Using the vehicle’s kinematic properties alone, it calculates the shortest path from the endpoint to every other point.

- The second heuristic, Unconstrained Heuristics, is the dual of the first: it accounts only for obstacle information and ignores the vehicle’s non-holonomic constraints. It applies 2D dynamic programming (essentially the traditional 2D A* algorithm) to calculate the shortest path from each node to the destination.
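The obstacle-aware heuristic can be illustrated with a short Python sketch. It is a minimal example under stated assumptions, not the planner used in this work: the occupancy grid format, the 8-connected motion set, and the function names `unconstrained_heuristic` and `combined_heuristic` are hypothetical. Hybrid A* implementations commonly take the maximum of the two heuristics, which is what `combined_heuristic` assumes; the constrained value (for example a Dubins or Reeds-Shepp path length) is supplied by the caller rather than computed here.

```python
import heapq
import math


def unconstrained_heuristic(grid, goal):
    """Shortest obstacle-aware distance from every free cell to the goal.

    grid[r][c] == 1 marks an obstacle, 0 marks free space (assumed format).
    Runs Dijkstra outward from the goal on an 8-connected grid, which is the
    2D dynamic programming step; vehicle kinematics are ignored.
    Diagonal moves are allowed to cut obstacle corners in this simple sketch.
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[math.inf] * cols for _ in range(rows)]
    goal_r, goal_c = goal
    dist[goal_r][goal_c] = 0.0
    heap = [(0.0, goal_r, goal_c)]
    moves = [(-1, 0), (1, 0), (0, -1), (0, 1),
             (-1, -1), (-1, 1), (1, -1), (1, 1)]
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r][c]:
            continue  # stale queue entry
        for dr, dc in moves:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + math.hypot(dr, dc)
                if nd < dist[nr][nc]:
                    dist[nr][nc] = nd
                    heapq.heappush(heap, (nd, nr, nc))
    return dist


def combined_heuristic(constrained_cost, cost_map, cell):
    """Take the larger of the two estimates for a given grid cell."""
    return max(constrained_cost, cost_map[cell[0]][cell[1]])


if __name__ == "__main__":
    grid = [[0, 1, 0],
            [0, 1, 0],
            [0, 0, 0]]
    cost_map = unconstrained_heuristic(grid, goal=(0, 2))
    print(combined_heuristic(2.0, cost_map, (0, 0)))
```

Because the cost map is computed once per goal, each node expansion during the search only needs a table lookup for the obstacle-aware term.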

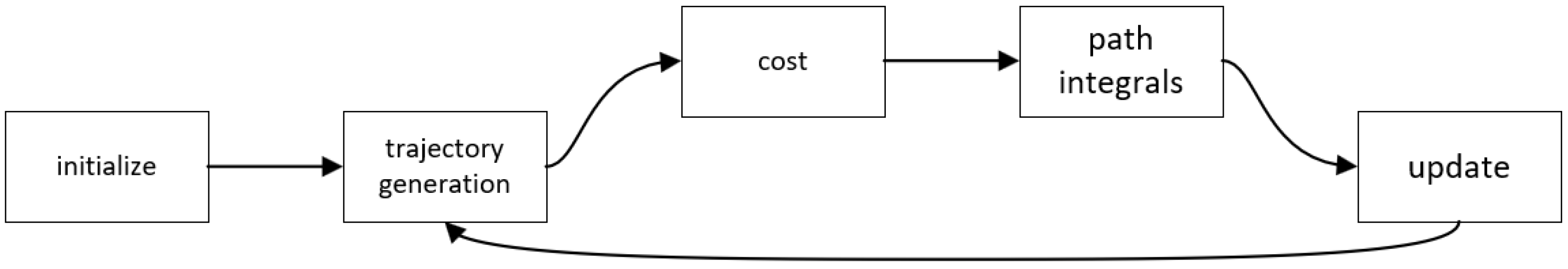

2.3.2. Trajectory Control

- Trajectory generation perturbs the current control sequence and propagates it through the vehicle’s dynamic model, producing a set of candidate trajectories over multiple simulations.

- Cost calculation evaluates each generated trajectory with a cost function that considers factors such as target navigation and collision avoidance.

- Path integration is the key concept of MPPI. At each time step, it computes the final control sequence as a weighted average over all sampled trajectories, with lower-cost trajectories receiving larger weights.
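The three steps above can be sketched in a few lines of Python. This is an illustrative example only: the unicycle rollout, the cost terms, the noise scales `sigma`, and the temperature `lam` are assumptions chosen for brevity and are not the vehicle model or tuning used on the platform.

```python
import math
import random


def rollout(state, controls, dt=0.1):
    """Simulate a unicycle model; state = (x, y, yaw), controls = [(v, omega)]."""
    x, y, yaw = state
    path = []
    for v, omega in controls:
        x += v * math.cos(yaw) * dt
        y += v * math.sin(yaw) * dt
        yaw += omega * dt
        path.append((x, y, yaw))
    return path


def trajectory_cost(path, goal, obstacles, safe_radius=0.5):
    """Terminal distance to the goal plus a penalty per near-obstacle sample."""
    cost = math.hypot(path[-1][0] - goal[0], path[-1][1] - goal[1])
    for x, y, _ in path:
        for ox, oy in obstacles:
            if math.hypot(x - ox, y - oy) < safe_radius:
                cost += 100.0
    return cost


def mppi_step(state, nominal, goal, obstacles,
              samples=200, sigma=(0.2, 0.4), lam=1.0, rng=None):
    """One MPPI update: sample, score, and average the control perturbations."""
    if rng is None:
        rng = random.Random(0)
    horizon = len(nominal)
    noises, costs = [], []
    for _ in range(samples):
        # Trajectory generation: perturb the nominal controls and simulate.
        noise = [(rng.gauss(0.0, sigma[0]), rng.gauss(0.0, sigma[1]))
                 for _ in range(horizon)]
        controls = [(u[0] + n[0], u[1] + n[1]) for u, n in zip(nominal, noise)]
        # Cost calculation for this candidate trajectory.
        costs.append(trajectory_cost(rollout(state, controls), goal, obstacles))
        noises.append(noise)
    # Path integration: exponential weights, shifted by the minimum cost
    # for numerical stability, then a weighted average per time step.
    best = min(costs)
    weights = [math.exp(-(c - best) / lam) for c in costs]
    total = sum(weights)
    updated = []
    for t in range(horizon):
        dv = sum(w * n[t][0] for w, n in zip(weights, noises)) / total
        dw = sum(w * n[t][1] for w, n in zip(weights, noises)) / total
        updated.append((nominal[t][0] + dv, nominal[t][1] + dw))
    return updated


if __name__ == "__main__":
    plan = mppi_step((0.0, 0.0, 0.0), [(0.5, 0.0)] * 10, (2.0, 0.0), [(1.0, 0.3)])
    print(plan[0])
```

In a receding-horizon loop, only the first control of the returned sequence would be applied before the sequence is shifted and the update repeated.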

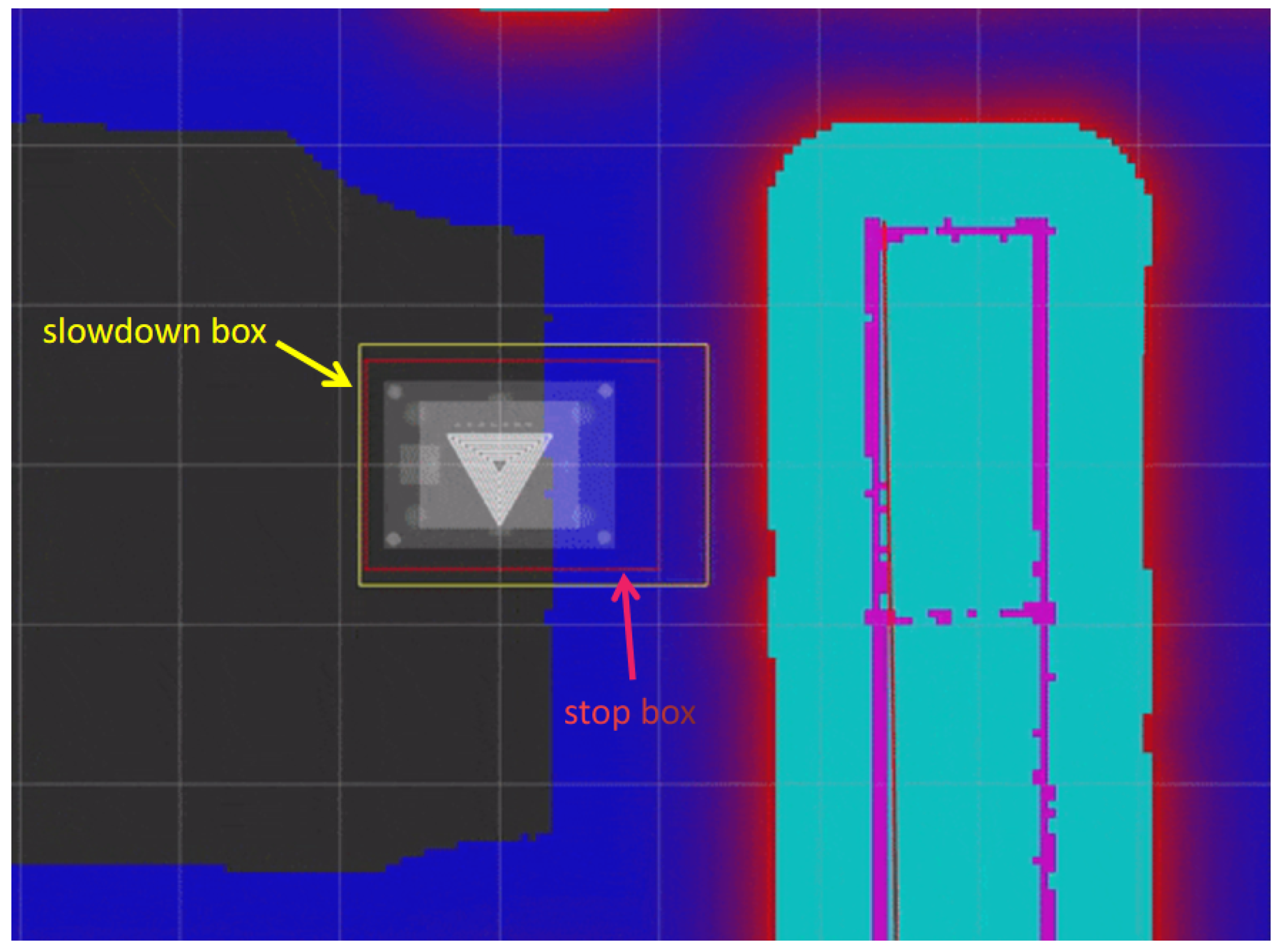

2.3.3. Collision Monitor

2.3.4. Localization

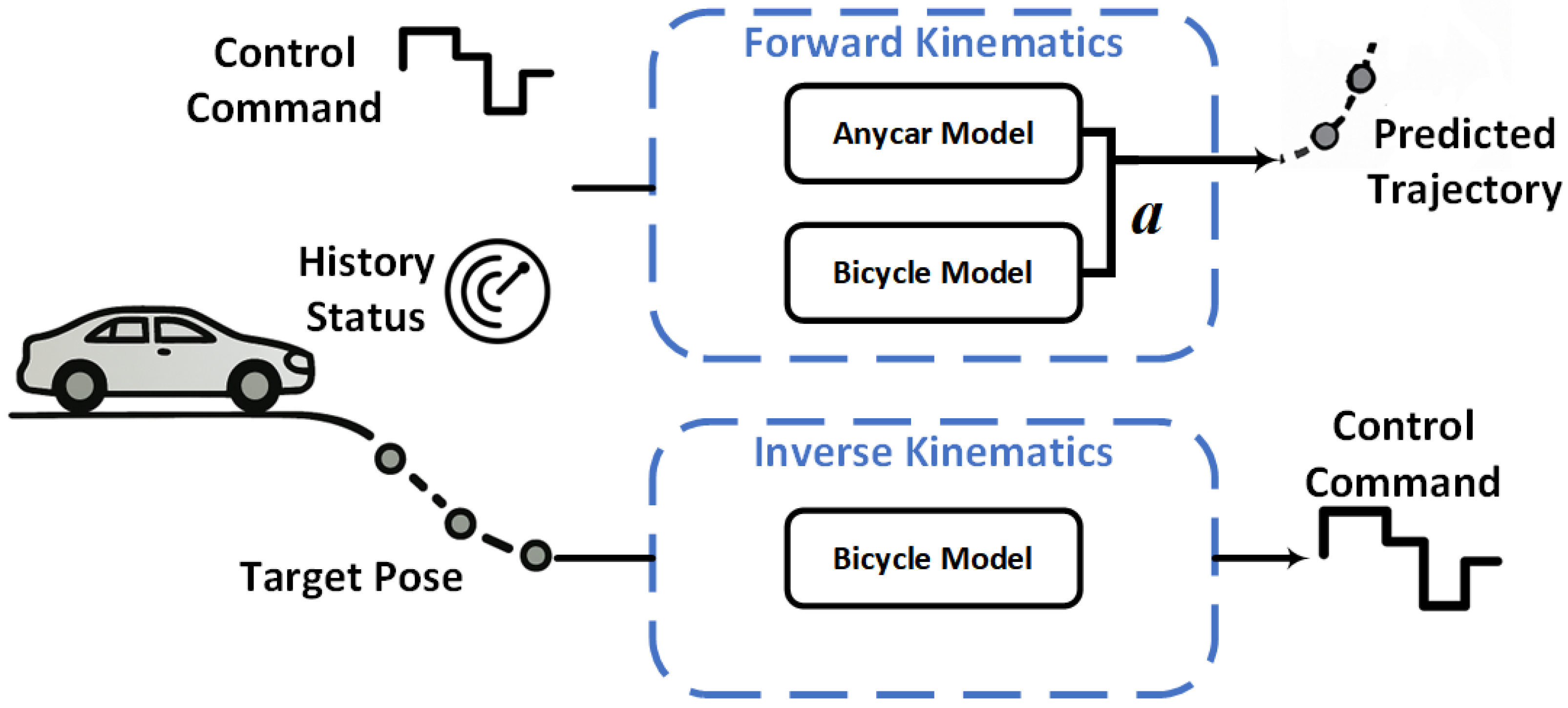

3. BicycleAnyCar Model

3.1. Bicycle Model

| Symbol | Description |
|---|---|
| C | The rear axle center of the vehicle |
| | Distance from C to the front axle center |
| | Distance from C to the rear axle center |
| R | Turning radius of C |
| | Turning radius of the rear axle center |
| | Yaw angle |
| | Front wheel steering angle |
| | Sideslip angle |
| v | Velocity of C |
| w | Angular velocity of C about point O |
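For orientation, the quantities in the table can be related through the standard kinematic bicycle model. The Python sketch below is an assumption-laden illustration rather than the formulation used in this work: the names `l_f` and `l_r` (distances from C to the front and rear axle centers), `delta` (front wheel steering angle), and `beta` (sideslip angle) are hypothetical stand-ins for symbols that are not shown in the table, and the reference point C is assumed to lie between the axles so that both distances are positive.

```python
import math


def bicycle_step(state, v, delta, l_f, l_r, dt):
    """Advance the pose (x, y, yaw) of reference point C by one time step.

    v     : velocity of C
    delta : front wheel steering angle in radians
    l_f   : distance from C to the front axle center (assumed name)
    l_r   : distance from C to the rear axle center (assumed name, > 0)
    """
    x, y, yaw = state
    # Sideslip angle at C for a front-steered vehicle.
    beta = math.atan(l_r / (l_f + l_r) * math.tan(delta))
    x += v * math.cos(yaw + beta) * dt
    y += v * math.sin(yaw + beta) * dt
    # Angular velocity of C about the instantaneous center of rotation O.
    yaw += v / l_r * math.sin(beta) * dt
    return (x, y, yaw)


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)
    for _ in range(10):
        pose = bicycle_step(pose, v=1.0, delta=0.1, l_f=1.2, l_r=1.3, dt=0.1)
    print(pose)
```

Under these assumptions the turning radius of C follows as R = v / w = l_r / sin(beta), which links the R, v, and w rows of the table.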

3.2. Transformer Model

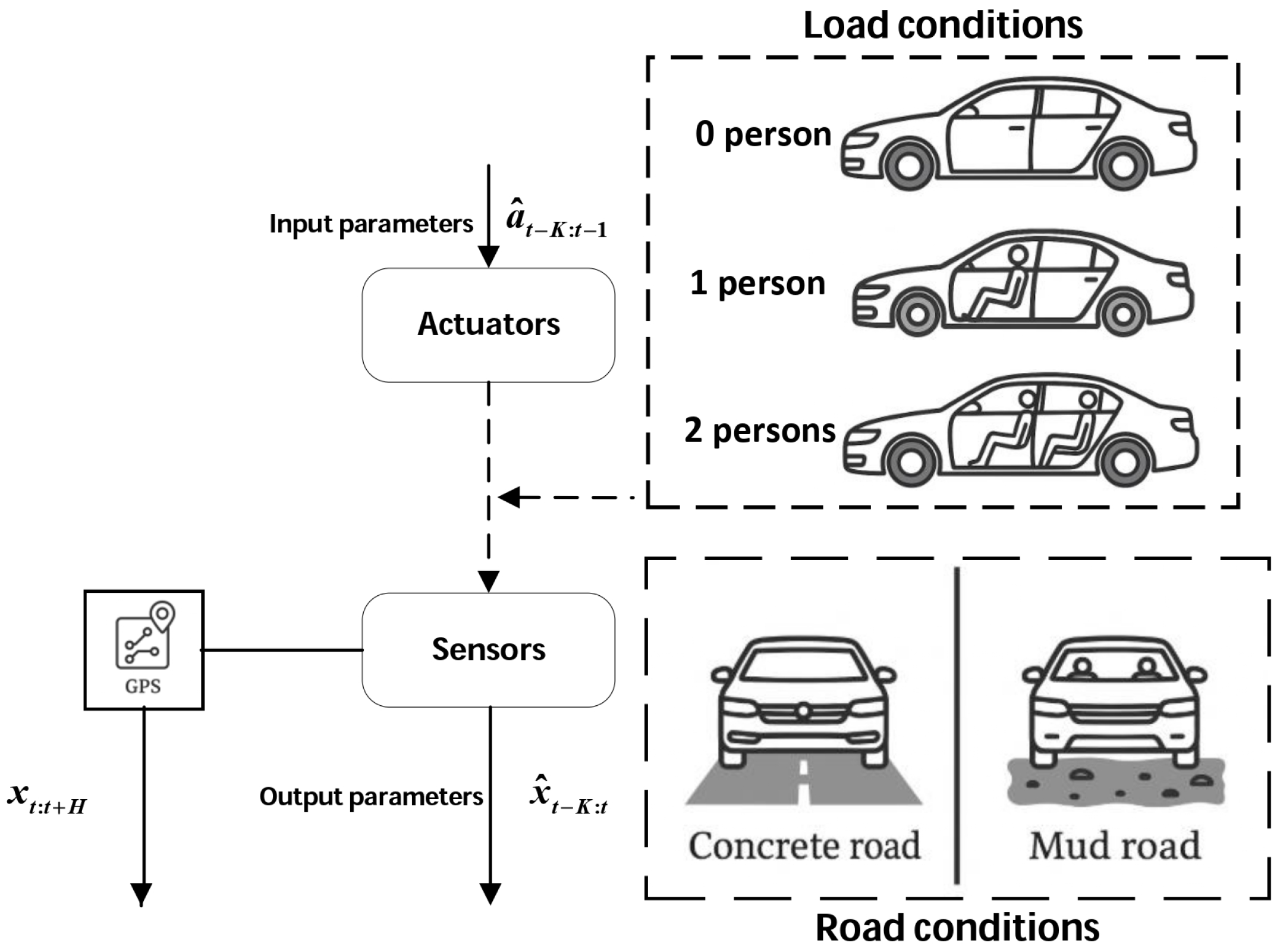

- Load Perturbation Modeling Mechanism: Multiple simulation scenarios featuring variable payload mass and distribution configurations are constructed to emulate the dynamic loading and unloading patterns observed in real-world freight operations. This augmentation enhances the model’s capability to perceive and represent the impact of load variations on vehicular dynamics.

- Execution Latency Injection Strategy: Artificial disturbances—such as actuator response delays and servo control offsets—are introduced during simulated control processes to capture the non-ideal system behaviors commonly induced by retrofitted platforms. These perturbations reflect the influence of heterogeneous transmission systems and customized controller integration, thereby improving the realism and robustness of training data.

- Environmental Heterogeneity Sampling Mechanism: Data acquisition scenarios are extended to encompass a range of terrain compositions (e.g., asphalt, soil) and traction load conditions, which enrich the diversity of the input state space. This design facilitates improved modeling of external environmental factors and their complex interactions with vehicle-level dynamics.
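As one concrete illustration of the latency injection idea, the Python sketch below wraps a command stream in a fixed delay plus a constant offset. The class name `DelayedActuator`, the tick-based delay, and the constant offset are assumptions made for this example; the actual disturbance models used to generate the training data are not specified here.

```python
from collections import deque


class DelayedActuator:
    """Simulated actuator that lags commands and adds a servo offset.

    delay_steps : number of control ticks a command is held back (assumed)
    offset      : constant bias added to every executed command (assumed)
    initial     : value executed until the first delayed command arrives
    """

    def __init__(self, delay_steps, offset=0.0, initial=0.0):
        self._buffer = deque([initial] * delay_steps)
        self._offset = offset

    def step(self, command):
        """Queue the new command and return the one executed this tick."""
        self._buffer.append(command)
        return self._buffer.popleft() + self._offset


if __name__ == "__main__":
    steering = DelayedActuator(delay_steps=2, offset=0.5)
    executed = [steering.step(cmd) for cmd in [1.0, 2.0, 3.0, 4.0]]
    print(executed)  # [0.5, 0.5, 1.5, 2.5]
```

Sampling `delay_steps` and `offset` from a range per simulated episode would give the model a spread of non-ideal actuator behaviors to learn from.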

- Under conditions such as high-speed driving, sharp turns, or complex terrain, the fusion weight assigned to the transformer output is set to a lower range (e.g., 0.2–0.4), thereby emphasizing the physically grounded bicycle model to ensure trajectory stability and physical consistency.

- In contrast, within unstructured environments, scenarios with significant sensor noise, or frequent dynamic disturbances, the same weight is increased to a higher range (e.g., 0.6–0.8), allowing the transformer model to leverage its strengths in temporal representation and error tolerance.
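The weighting scheme can be sketched as a convex combination of the two model outputs. This is an assumption: the exact fusion formula is not reproduced in this text, and the name `alpha`, the function `fuse_predictions`, and the two constants (midpoints of the quoted ranges) are hypothetical. Only position components are blended here, because averaging yaw angles directly would need wrap-around handling.

```python
# Illustrative weights taken as midpoints of the ranges quoted above.
STABILITY_WEIGHT = 0.3   # high speed, sharp turns, complex terrain
ROBUSTNESS_WEIGHT = 0.7  # unstructured, noisy, or disturbed scenarios


def fuse_predictions(bicycle_xy, transformer_xy, alpha):
    """Blend two position predictions.

    alpha is the share given to the transformer output, so alpha = 0
    returns the bicycle model prediction and alpha = 1 the transformer one.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple((1.0 - alpha) * b + alpha * t
                 for b, t in zip(bicycle_xy, transformer_xy))


if __name__ == "__main__":
    bicycle = (10.0, 2.0)
    transformer = (10.4, 2.2)
    print(fuse_predictions(bicycle, transformer, STABILITY_WEIGHT))
    print(fuse_predictions(bicycle, transformer, ROBUSTNESS_WEIGHT))
```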

4. Results

4.1. Overview of Experimental Platform and Testing Environment

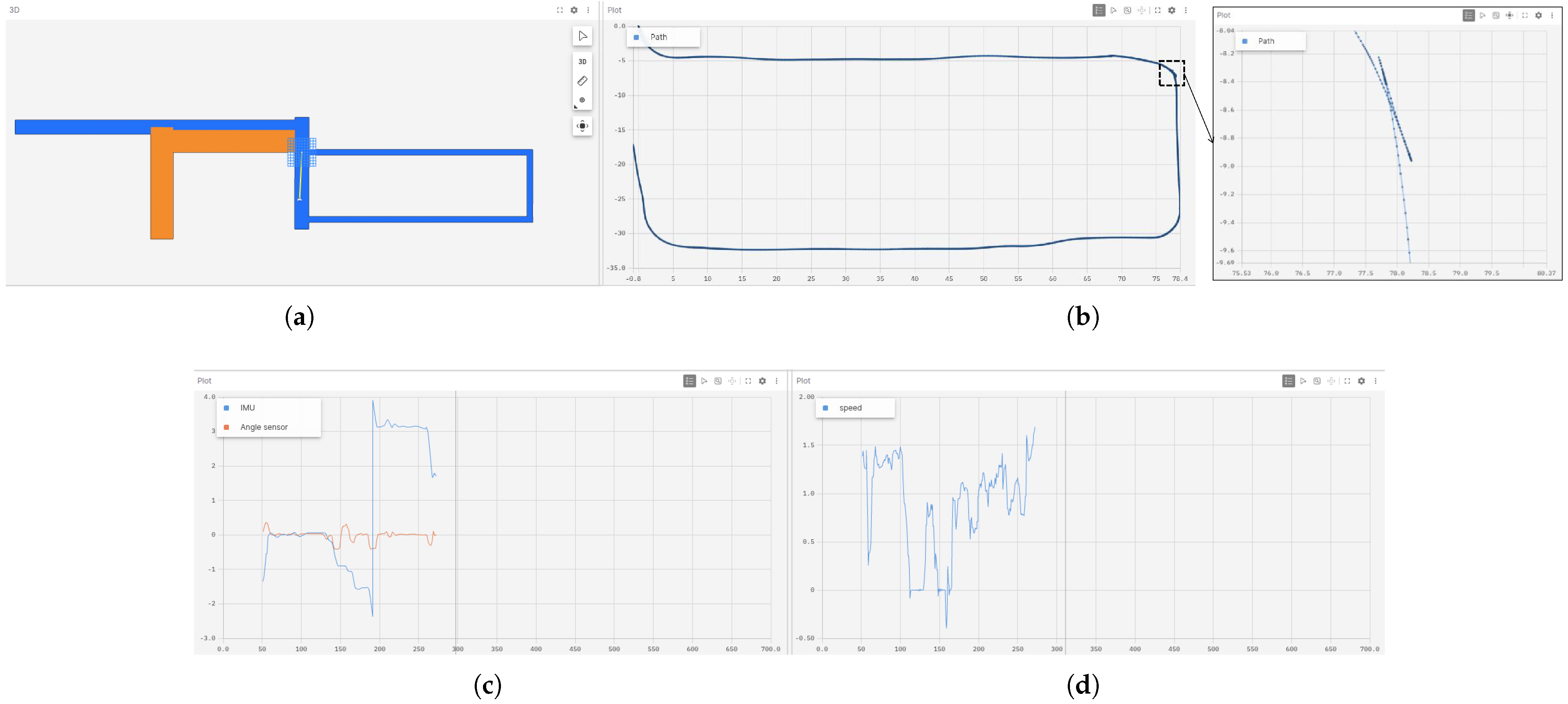

4.2. Vehicle Model Validation Test

- First, a bicycle model is constructed based on the fusion of odometry (odom) data and wheel steering angle sensor inputs. The resulting trajectory is depicted as a green dashed line in Figure 20.

- Second, building upon the aforementioned model, an Extended Kalman Filter is incorporated to integrate data from an IMU, thereby enhancing the system’s capability to perceive and estimate dynamic motion. This trajectory is illustrated as a red dashed line.

- Finally, the method proposed in this paper further leverages a sequence learning model based on the transformer architecture, enabling deep modeling and synergistic inference of multi-source data. The resulting trajectory, represented by a blue solid line, demonstrates the significant advantages of the proposed approach in terms of both path accuracy and stability.

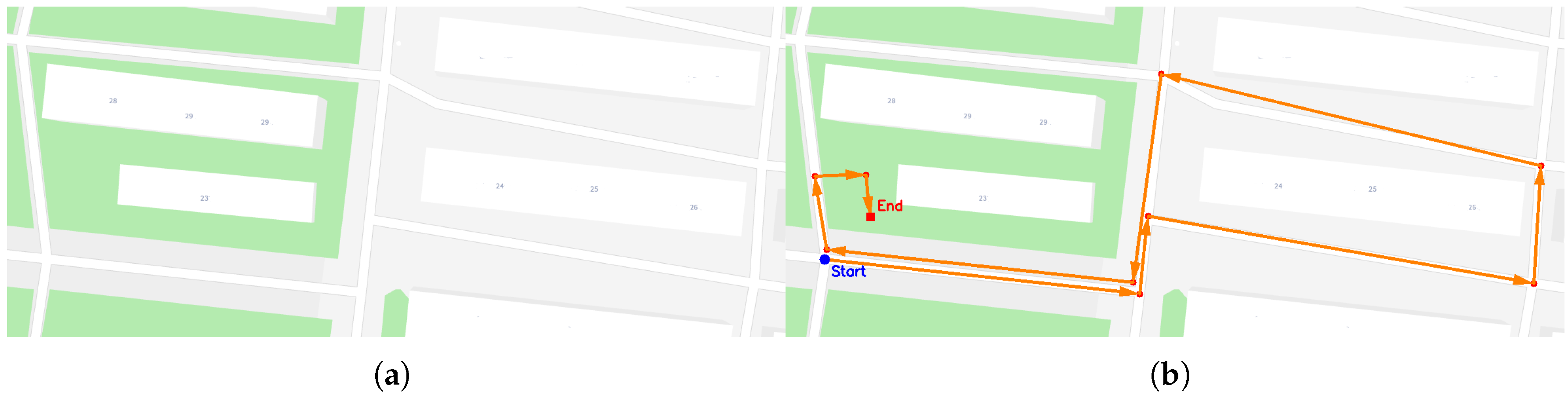

4.3. Autonomous Navigation System Evaluation

5. Conclusions

- A modular unmanned ground vehicle (UGV) system is developed for low-risk environments, featuring dual-mode autonomous and manual control. The system offers high deployment flexibility and cost-efficiency, making it suitable for open-field scenarios such as farms and mining sites, in contrast to conventional high-cost, fully integrated autonomous platforms.

- A distributed algorithmic framework is proposed for low-cost platforms, comprising lateral and longitudinal PID controllers deployed on microcontrollers, and a hybrid A* global planner with an MPPI-based local trajectory optimizer running on the host computing unit. This architecture enables effective coordination between planning and control under resource-constrained hardware conditions, enhancing both system stability and deployment scalability.

- Building upon the AnyCar architecture and leveraging a custom dataset and experimental platform, this study designs and optimizes a vehicle dynamics modeling approach—termed the BicycleAnyCar Model. By integrating the classical bicycle kinematic model with deep temporal modeling capabilities, the proposed method effectively captures nonlinear dynamic behaviors inherent to retrofitted platforms. Experimental results demonstrate that it significantly outperforms conventional models in both trajectory estimation accuracy and control stability.

- The system’s trajectory tracking performance and control resilience are validated on a retrofitted platform under real-world disturbances such as actuation delay, demonstrating strong engineering adaptability and scalability.

- Currently, the transformer model is deployed on a remote 3090 GPU server. Although single-pass inference latency is maintained within 0.05 s, the overall responsiveness in navigation tasks is still challenged by network-induced delays, which may interfere with time-sensitive control cycles. To improve system real-time capability and robustness, a local inference acceleration strategy is proposed—compressing segments of the transformer model and deploying them on edge devices or onboard platforms, combined with low-latency inference engines to reduce dependence on remote communication channels.

- During navigation experiments, sporadic failures have been observed in vehicle posture adjustment or the execution of low-level control commands, leading to situations where the navigation module is unable to resolve a feasible trajectory, thus causing the vehicle to enter a stalled state. To address this, a recovery mechanism with redundant control logic is recommended. This mechanism would activate emergency routines—such as trajectory backtracking and autonomous posture correction—when navigation failure or control deviation is detected, thereby enhancing system recoverability and continuity under dynamically changing operational conditions.

- In future work, refinement of the proposed methodology will focus on developing more efficient and generalizable modeling and control strategies to improve system robustness and responsiveness in complex environments. In addition, systematic benchmarking against mainstream machine learning architectures, such as LSTM, GRU, and GNN, will be conducted to comprehensively evaluate the performance and cross-domain applicability of the approach.

- To align with the broader analytical focus on power consumption and cost-effective transportation, an integrated energy monitoring mechanism will be introduced in subsequent development stages, enabling systematic evaluation of control strategies in terms of energy efficiency and operational economy.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Path | Number of Valid Trajectory Points | Total Number of Sampled Points | Trajectory Coverage Ratio |
|---|---|---|---|
| B | 896 | 1640 | 54.6% |
| C | 1872 | 1974 | 94.8% |
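The coverage ratios in the table are consistent with dividing the number of valid trajectory points by the total number of sampled points. The short Python check below assumes that definition; the function name `coverage_ratio` is hypothetical.

```python
def coverage_ratio(valid_points, sampled_points):
    """Fraction of sampled points that count as valid trajectory points."""
    if sampled_points <= 0:
        raise ValueError("sampled_points must be positive")
    return valid_points / sampled_points


if __name__ == "__main__":
    for path, valid, total in [("B", 896, 1640), ("C", 1872, 1974)]:
        print(f"Path {path}: {coverage_ratio(valid, total):.1%}")
```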

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, K.; Zheng, X.; Peng, Z.-J.; Zhang, C.-C.; Tang, J.-J.; Mao, K.-M. Efficient Autonomy: Autonomous Driving of Retrofitted Electric Vehicles via Enhanced Transformer Modeling. Energies 2025, 18, 5247. https://doi.org/10.3390/en18195247
