A Hybrid Framework for Offshore Wind Power Forecasting: Integrating CNN-BiGRU-XGBoost with Advanced Feature Engineering and Analysis

Abstract

1. Introduction

2. Wind Power Data Analysis and Preprocessing Methods

2.1. Data Source

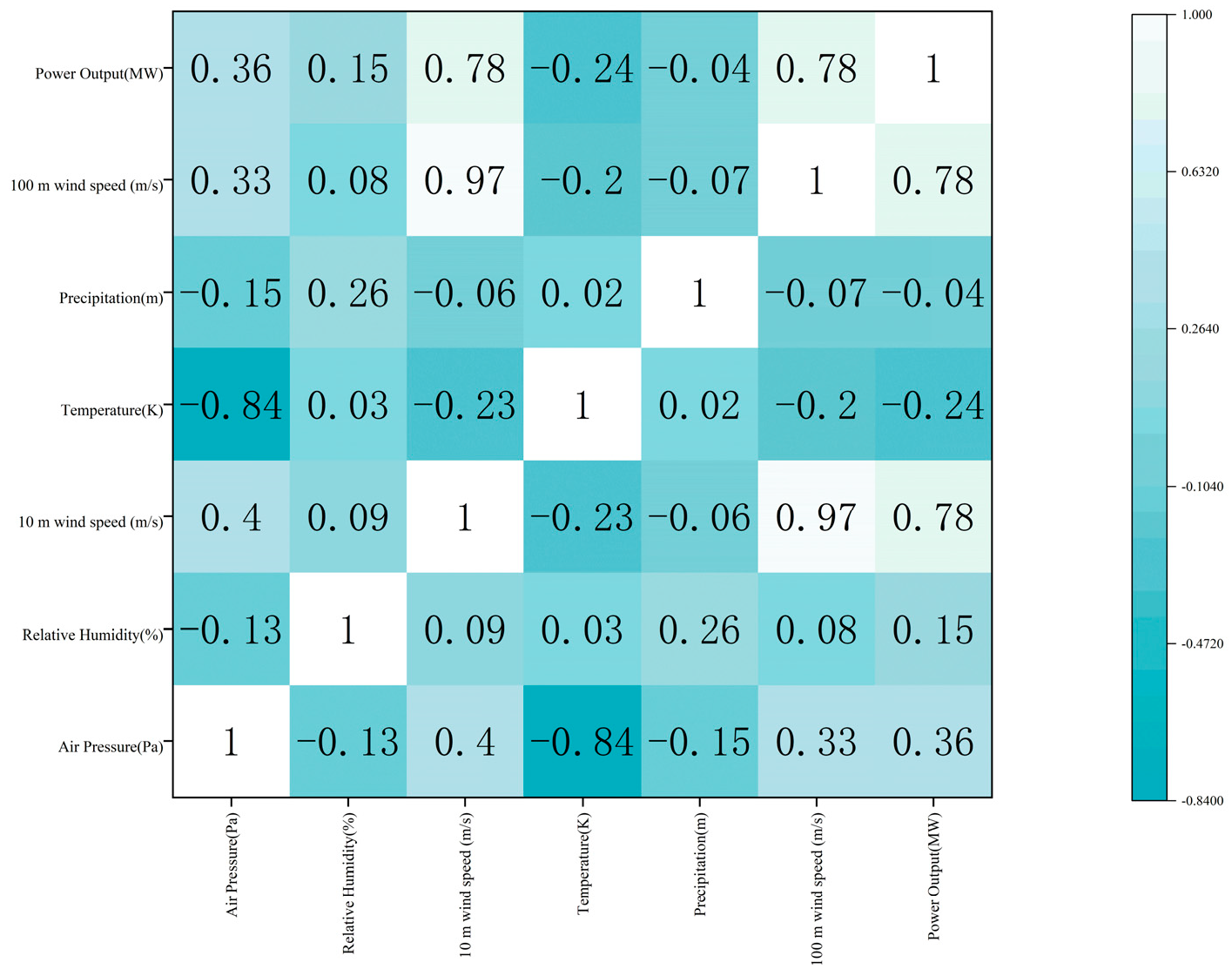

2.2. Correlation Analysis

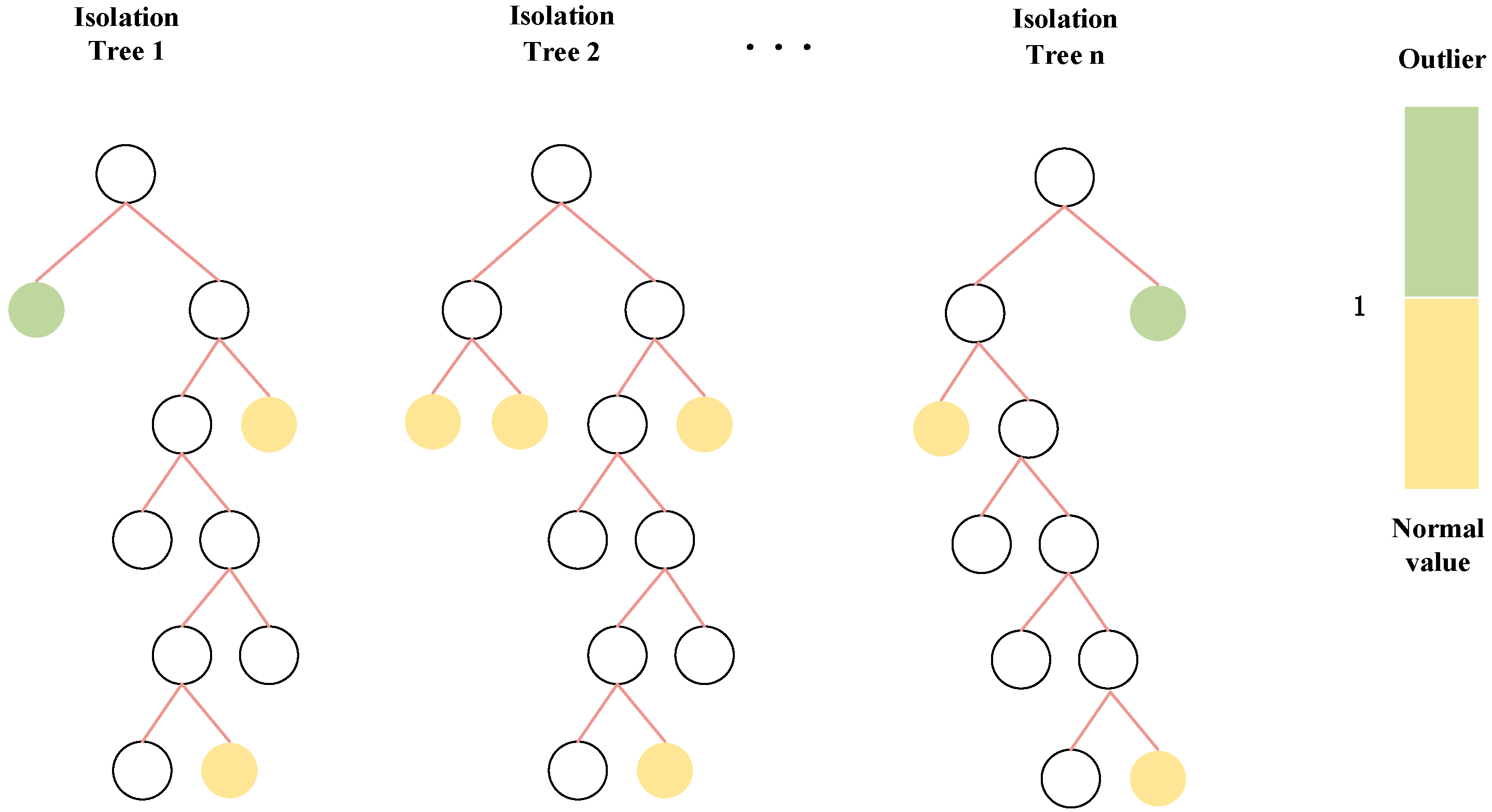

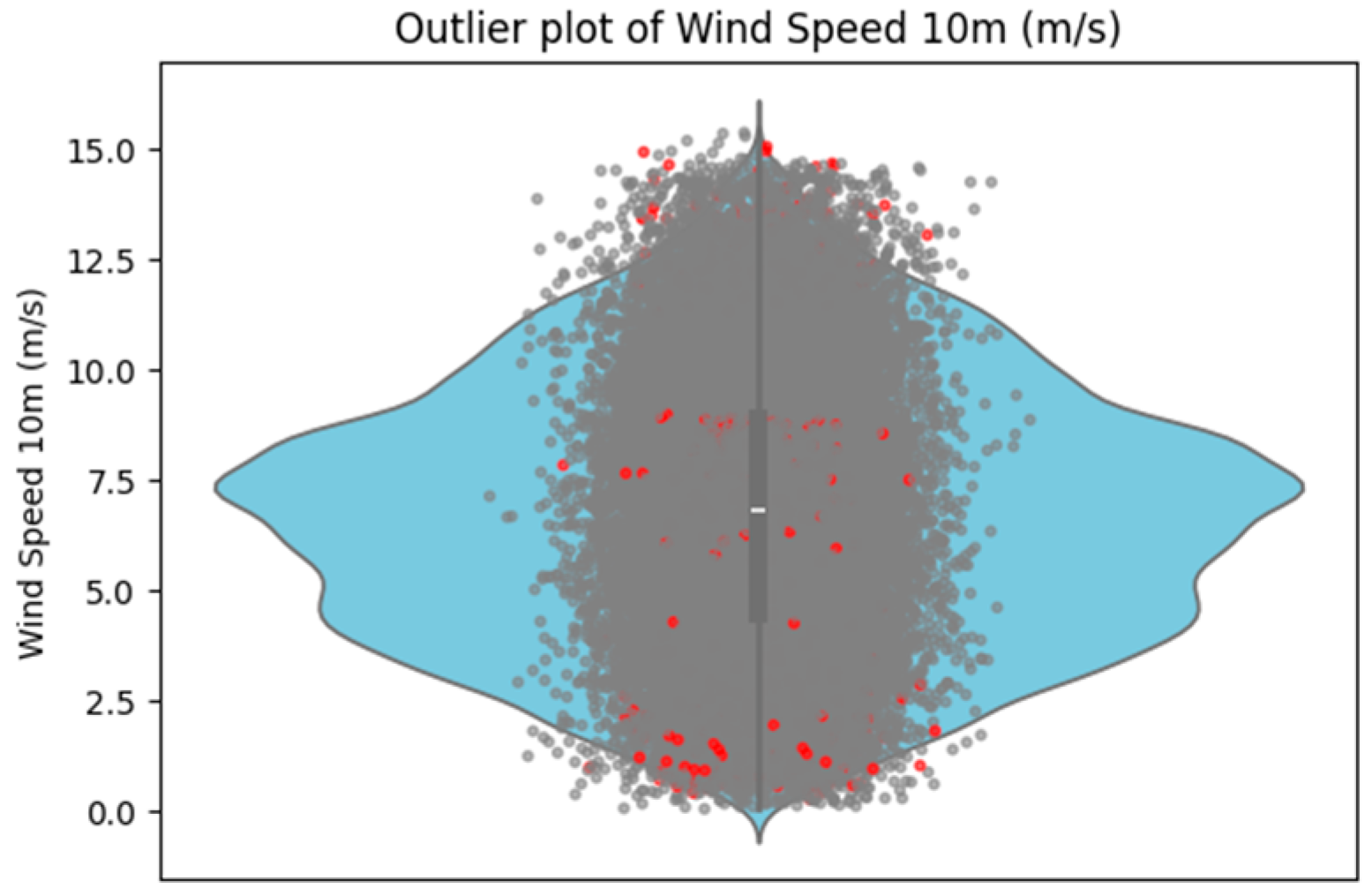

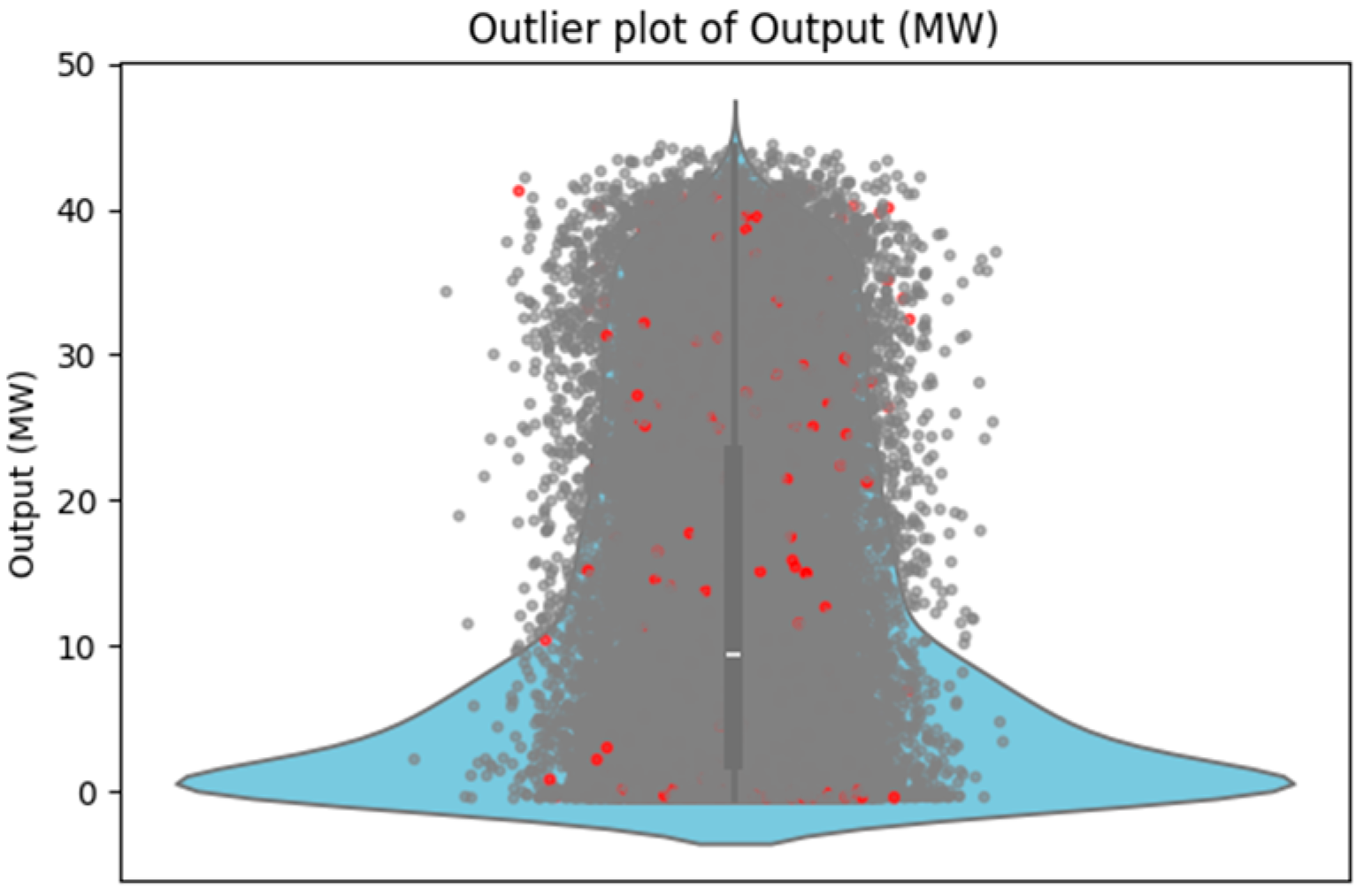

2.3. Data Processing

2.4. Experimental Setup

3. Research Methods

3.1. CNN-BiGRU

3.2. XGBoost Algorithm

3.3. Randomized SearchCV

3.4. Ridge Regression

4. Construction and Evaluation of the Forecasting Model

4.1. Model Construction

- (1)

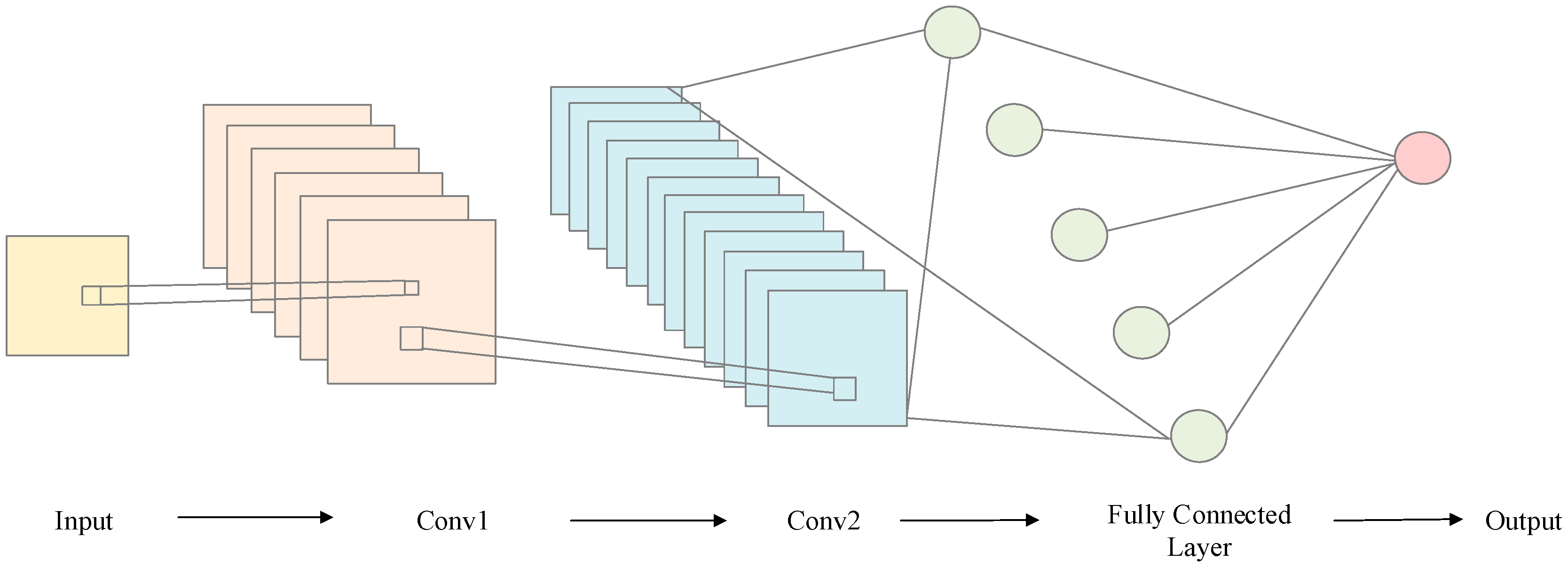

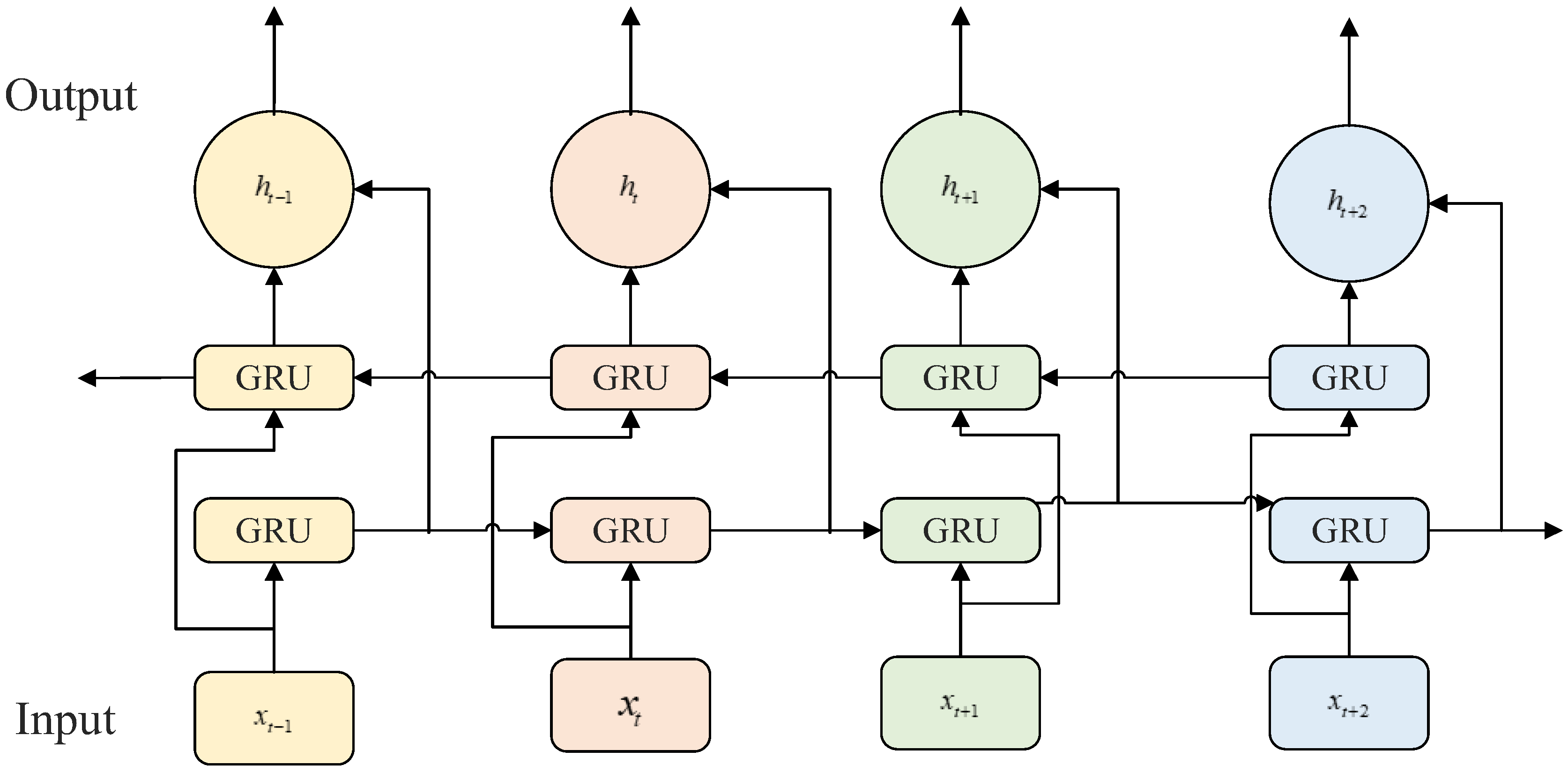

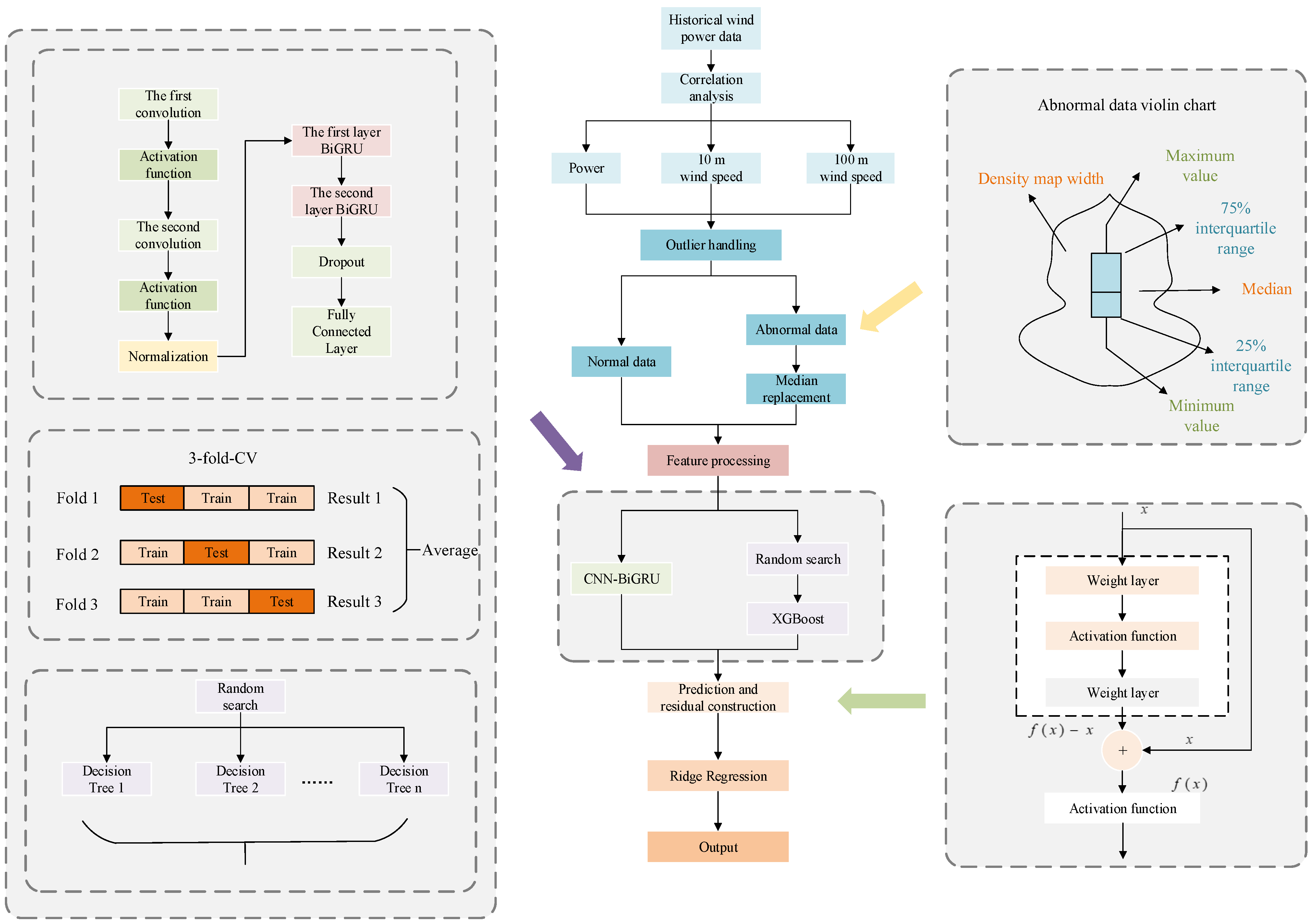

- Select the number of filters and kernel sizes for the Convolutional Neural Network (CNN). Unlike conventional CNN structures, the pooling layer is removed in this design. For the Bidirectional GRU (BiGRU), the number of units in the two layers and the dropout rate are determined to construct a deep learning model capable of extracting local features and modeling both short- and long-term dependencies in time series data. The workflow of the CNN-BiGRU forecasting model is shown in Figure 10.

- (2) Introduce XGBoost as a second base learner. Its key parameters (learning rate, maximum tree depth, minimum child weight, subsample ratio, feature sampling ratio, and maximum number of iterations) are selected. XGBoost's nonlinear modeling capability is leveraged to form a second, independent prediction pathway alongside CNN-BiGRU.

- (3) The predictions of the CNN-BiGRU and XGBoost models, together with their corresponding residuals, are combined into a four-dimensional fusion vector. Ridge regression is then applied to this vector as the meta-learner, which enhances convergence efficiency and improves the model's ability to fit error structures. The overall modeling process is illustrated in Figure 11.
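The fusion step in (3) can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the source does not say how residuals are obtained at prediction time, so the sketch assumes the residuals of the previous time step are used; the data are synthetic; and the helper names (`build_fusion_features`, `ridge_fit`, `ridge_predict`, `solve_linear`) are hypothetical.

```python
import math
import random


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def ridge_fit(X, y, alpha=1.0):
    """Closed-form ridge regression on centred data (intercept unpenalised)."""
    n, d = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(d)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(d)] for row in X]
    yc = [v - y_mean for v in y]
    # Normal equations: (Xc^T Xc + alpha * I) w = Xc^T yc
    A = [[sum(r[i] * r[j] for r in Xc) + (alpha if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    b = [sum(r[i] * t for r, t in zip(Xc, yc)) for i in range(d)]
    w = solve_linear(A, b)
    intercept = y_mean - sum(wj * mj for wj, mj in zip(w, x_mean))
    return w, intercept


def ridge_predict(X, w, intercept):
    """Apply the fitted linear meta-learner to each fusion vector."""
    return [intercept + sum(wj * xj for wj, xj in zip(w, row)) for row in X]


def build_fusion_features(y, p_cnn, p_xgb):
    """Four-dimensional fusion vector for each time step t >= 1.

    ASSUMPTION: residuals come from the previous step (t - 1), because the
    current residual would require the value that is being predicted.
    """
    X, target = [], []
    for t in range(1, len(y)):
        X.append([p_cnn[t], p_xgb[t],
                  y[t - 1] - p_cnn[t - 1], y[t - 1] - p_xgb[t - 1]])
        target.append(y[t])
    return X, target


def rmse(y_true, y_pred):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


# Synthetic stand-ins for the two base-model outputs (not the paper's data).
rng = random.Random(42)
y = [50 + 30 * math.sin(t / 10) for t in range(200)]
p_cnn = [v + rng.gauss(0, 3) for v in y]  # hypothetical CNN-BiGRU predictions
p_xgb = [v + rng.gauss(0, 4) for v in y]  # hypothetical XGBoost predictions

X, target = build_fusion_features(y, p_cnn, p_xgb)
w, intercept = ridge_fit(X, target, alpha=1.0)
fused = ridge_predict(X, w, intercept)

print("CNN-BiGRU RMSE:", round(rmse(target, p_cnn[1:]), 4))
print("XGBoost RMSE:  ", round(rmse(target, p_xgb[1:]), 4))
print("Fused RMSE:    ", round(rmse(target, fused), 4))
```

The printed errors are in-sample and only show the mechanics of the fusion; a real evaluation would fit the meta-learner on one period and score it on a later, held-out period.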

4.2. Evaluation Metrics

- (1) Root Mean Square Error (RMSE)

- (2) Mean Absolute Error (MAE)

- (3) Coefficient of determination (R²)
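For reference, the three metrics listed above can be computed as follows. This is a minimal sketch using their standard textbook definitions, which the paper is assumed to follow; the function names and the sample values are illustrative only.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error: sqrt(mean((y - y_hat)^2))."""
    n = len(y_true)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / n)


def mae(y_true, y_pred):
    """Mean absolute error: mean(|y - y_hat|)."""
    n = len(y_true)
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / n


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    ss_tot = sum((a - mean_y) ** 2 for a in y_true)
    return 1.0 - ss_res / ss_tot


# Illustrative values, not taken from the paper.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 9.0]

print(round(rmse(y_true, y_pred), 4))  # 0.3536
print(round(mae(y_true, y_pred), 4))   # 0.25
print(round(r2(y_true, y_pred), 4))    # 0.975
```

Lower RMSE and MAE indicate smaller errors, while R² closer to 1 indicates that the predictions explain more of the variance in the observed power.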

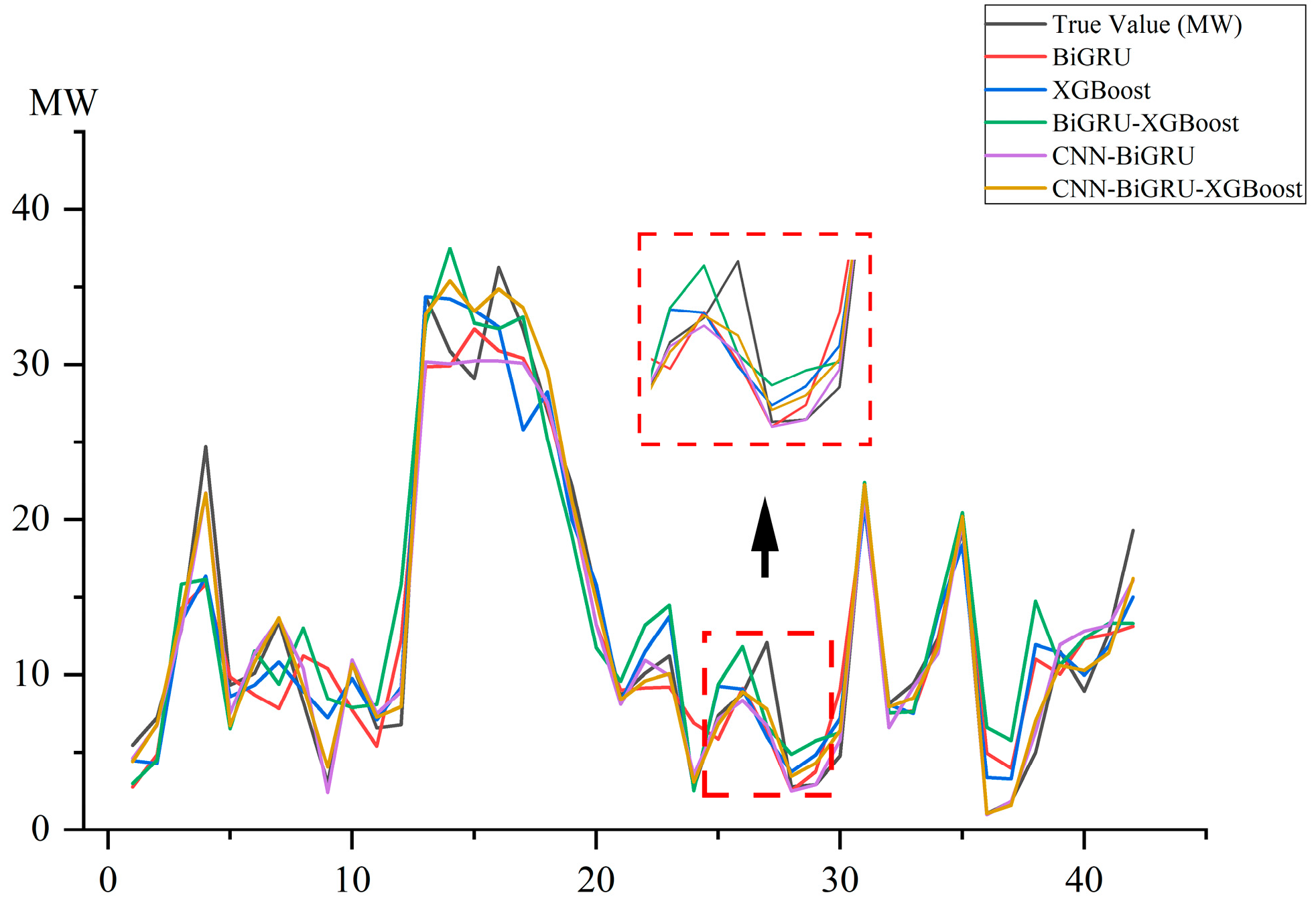

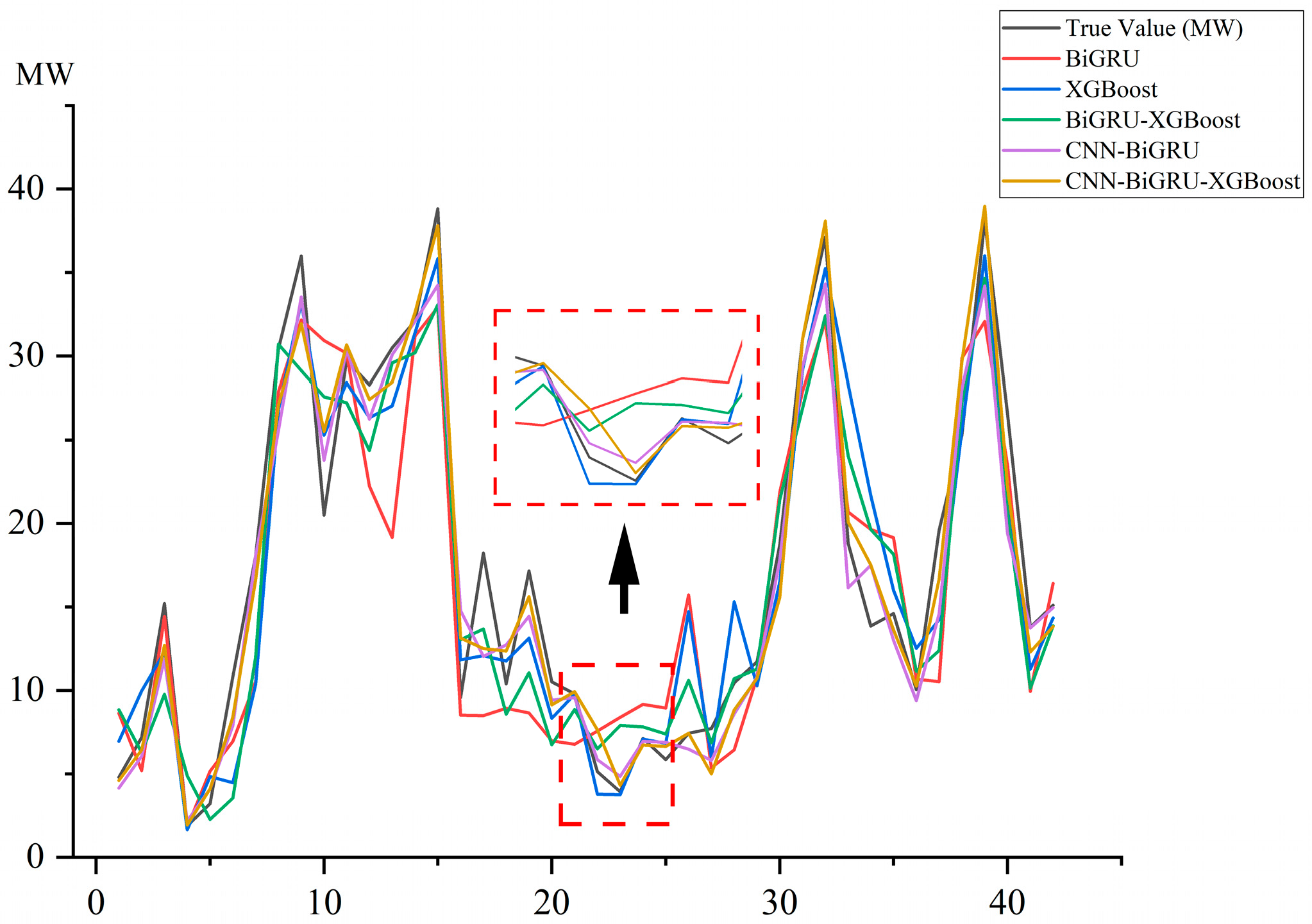

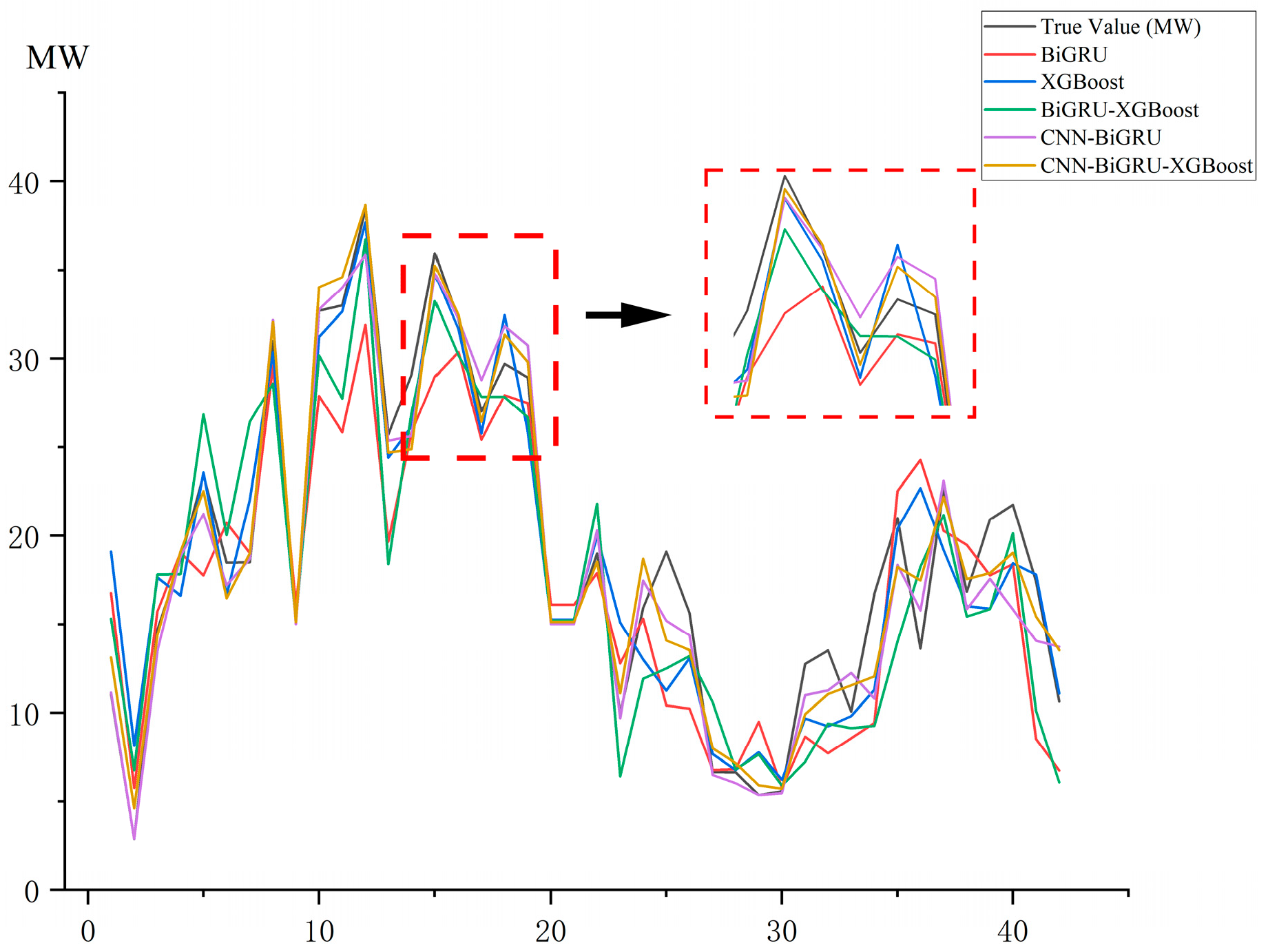

4.3. Results Analysis and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Module | Parameter | Setting Value |
|---|---|---|
| ARIMA | Autoregressive order | 5 |
| | Degree of differencing | 1 |
| | Moving average order | 0 |
| SVM | Penalty coefficient | 100 |
| | Insensitive loss parameter (ε) | 0.01 |
| CNN | Number of convolutional filters | 64, 128 |
| | Kernel size | 3 |
| BiGRU | Number of units | 128, 64 |
| | Dropout rate | 0.3 |
| XGBoost | Number of base learners | 300, 500 (tuning range) |
| | Maximum tree depth | 6, 8 (tuning range) |
| | Minimum child weight | 1, 5 (tuning range) |
| | Number of CV folds | 3 |
| | Number of random searches | 20 |
| | Random seed | 42 |
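The XGBoost search settings in this table (tuning ranges, 20 random searches, 3 CV folds, seed 42) can be illustrated with a small standard-library sketch of the sampling step. Several things here are assumptions rather than facts from the source: each "tuning range" is read as an inclusive integer interval (it could equally be two discrete candidates), the folds are contiguous blocks, and `sample_configs` and `contiguous_folds` are hypothetical helpers, not part of scikit-learn or XGBoost.

```python
import random

# Tuning ranges from the parameter table. Treating each pair as an inclusive
# integer interval is an ASSUMPTION.
SEARCH_SPACE = {
    "n_estimators": (300, 500),     # number of base learners
    "max_depth": (6, 8),            # maximum tree depth
    "min_child_weight": (1, 5),     # minimum child weight
}
N_ITER = 20    # number of random searches
CV_FOLDS = 3   # number of CV folds
SEED = 42      # random seed


def sample_configs(space, n_iter, seed):
    """Draw n_iter candidate configurations uniformly from the search space."""
    rng = random.Random(seed)
    return [{name: rng.randint(lo, hi) for name, (lo, hi) in space.items()}
            for _ in range(n_iter)]


def contiguous_folds(n_samples, k):
    """Split indices 0..n_samples-1 into k contiguous validation folds."""
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


configs = sample_configs(SEARCH_SPACE, N_ITER, SEED)
folds = contiguous_folds(10, CV_FOLDS)

print(len(configs), "candidate configurations")
print(folds)  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
```

In a full search, each candidate would be trained and scored on every fold, and the configuration with the best mean validation score would be kept. Fixing the seed makes the sampled candidates reproducible across runs.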

| Contamination Rate (%) | RMSE | MAE | R² |
|---|---|---|---|
| 1 | 1.5226 | 0.9755 | 0.9828 |
| 5 | 1.4076 | 0.9216 | 0.9857 |
| 10 | 2.8592 | 1.4332 | 0.9622 |
| No Outlier Treatment | 3.0665 | 1.5638 | 0.9601 |

| Meta-Learner | RMSE | MAE | R² |
|---|---|---|---|
| Ridge | 1.4076 | 0.9216 | 0.9857 |
| Lasso | 2.8599 | 1.5642 | 0.9652 |
| ElasticNet | 2.4963 | 1.2964 | 0.9703 |

| Season | Model | RMSE | MAE | R² |
|---|---|---|---|---|
| Spring | TNN | 3.3838 | 2.3019 | 0.8936 |
| | LSTM | 4.5341 | 2.1293 | 0.9552 |
| | ARIMA-BiGRU-XGBoost | 4.5354 | 3.9571 | 0.7865 |
| | SVM-BiGRU-XGBoost | 3.2344 | 2.2349 | 0.8914 |
| | CNN-BiGRU-XGBoost | 1.5131 | 0.9623 | 0.9862 |
| Summer | TNN | 3.6641 | 2.4553 | 0.8924 |
| | LSTM | 3.5521 | 2.3821 | 0.9455 |
| | ARIMA-BiGRU-XGBoost | 4.7579 | 3.2256 | 0.8164 |
| | SVM-BiGRU-XGBoost | 3.6167 | 2.2939 | 0.8939 |
| | CNN-BiGRU-XGBoost | 1.4962 | 0.9519 | 0.9876 |
| Autumn | TNN | 3.3213 | 2.4588 | 0.9342 |
| | LSTM | 3.3455 | 2.5419 | 0.9281 |
| | ARIMA-BiGRU-XGBoost | 4.6878 | 4.0207 | 0.8674 |
| | SVM-BiGRU-XGBoost | 3.1227 | 2.2005 | 0.9411 |
| | CNN-BiGRU-XGBoost | 1.5565 | 1.0422 | 0.9858 |
| Winter | TNN | 3.6245 | 2.6541 | 0.9122 |
| | LSTM | 2.9377 | 1.8751 | 0.9488 |
| | ARIMA-BiGRU-XGBoost | 4.8608 | 4.1057 | 0.8399 |
| | SVM-BiGRU-XGBoost | 3.6313 | 2.7069 | 0.9105 |
| | CNN-BiGRU-XGBoost | 1.6479 | 1.1571 | 0.9813 |

| Season | Model | RMSE | MAE | R² |
|---|---|---|---|---|
| Spring | BiGRU | 4.1164 | 2.9740 | 0.8241 |
| | XGBoost | 3.4382 | 2.4600 | 0.8773 |
| | BiGRU-XGBoost | 4.0352 | 2.9418 | 0.8310 |
| | CNN-BiGRU | 2.5786 | 1.4556 | 0.9309 |
| | CNN-BiGRU-XGBoost | 1.5131 | 0.9623 | 0.9862 |
| Summer | BiGRU | 4.2426 | 2.9217 | 0.8539 |
| | XGBoost | 3.7731 | 2.5709 | 0.8845 |
| | BiGRU-XGBoost | 4.3831 | 3.0199 | 0.8441 |
| | CNN-BiGRU | 2.1538 | 1.2628 | 0.9624 |
| | CNN-BiGRU-XGBoost | 1.4962 | 0.9519 | 0.9876 |
| Autumn | BiGRU | 4.1562 | 2.9817 | 0.8957 |
| | XGBoost | 3.2693 | 2.3971 | 0.9354 |
| | BiGRU-XGBoost | 3.9361 | 2.8529 | 0.9064 |
| | CNN-BiGRU | 2.1283 | 1.4298 | 0.9727 |
| | CNN-BiGRU-XGBoost | 1.5565 | 1.0422 | 0.9858 |
| Winter | BiGRU | 4.3391 | 3.2338 | 0.8725 |
| | XGBoost | 3.8517 | 2.8889 | 0.8995 |
| | BiGRU-XGBoost | 4.5001 | 3.3596 | 0.8628 |
| | CNN-BiGRU | 2.3680 | 1.6028 | 0.9620 |
| | CNN-BiGRU-XGBoost | 1.6479 | 1.1571 | 0.9813 |

| Season | Model | RMSE, Peaks (≥80% Max) | RMSE, Valleys (≤20% Max) | MAE, Peaks (≥80% Max) | MAE, Valleys (≤20% Max) | R², Peaks (≥80% Max) | R², Valleys (≤20% Max) |
|---|---|---|---|---|---|---|---|
| Spring | ARIMA-BiGRU-XGBoost | 9.3556 | 4.7814 | 9.1362 | 4.5449 | 0.8113 | 0.7397 |
| | SVM-BiGRU-XGBoost | 5.2874 | 1.9466 | 4.399 | 1.3865 | 0.8342 | 0.8013 |
| | CNN-BiGRU-XGBoost | 2.4892 | 1.0047 | 2.207 | 0.6939 | 0.9613 | 0.9133 |
| Summer | ARIMA-BiGRU-XGBoost | 12.5977 | 2.2705 | 12.433 | 1.5485 | 0.7907 | 0.7628 |
| | SVM-BiGRU-XGBoost | 5.7427 | 1.8867 | 4.1554 | 1.4506 | 0.7817 | 0.8288 |
| | CNN-BiGRU-XGBoost | 1.8729 | 1.0169 | 1.4544 | 0.6359 | 0.96846 | 0.9727 |
| Autumn | ARIMA-BiGRU-XGBoost | 7.1143 | 5.1437 | 6.8367 | 4.9463 | 0.8674 | 0.8365 |
| | SVM-BiGRU-XGBoost | 3.8818 | 1.9793 | 2.3923 | 1.4157 | 0.9411 | 0.7841 |
| | CNN-BiGRU-XGBoost | 2.0201 | 1.0046 | 1.3183 | 0.6737 | 0.9612 | 0.9679 |
| Winter | ARIMA-BiGRU-XGBoost | 8.7629 | 5.6997 | 8.5122 | 5.0295 | 0.8171 | 0.7088 |
| | SVM-BiGRU-XGBoost | 5.0923 | 4.3397 | 4.2429 | 2.5472 | 0.8954 | 0.8363 |
| | CNN-BiGRU-XGBoost | 2.8766 | 1.3691 | 1.9626 | 0.6756 | 0.9613 | 0.9731 |
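The peak and valley segments used in this table can be reproduced with a short sketch. It assumes that "Max" means the maximum observed power in the evaluated series (the source does not say whether it is the observed maximum or the rated capacity); the helper names and sample values are hypothetical.

```python
import math


def segment_indices(y_true, peak_frac=0.8, valley_frac=0.2):
    """Return index lists for the peak and valley segments.

    ASSUMPTION: "Max" is the maximum observed value in y_true.
    """
    y_max = max(y_true)
    peaks = [i for i, v in enumerate(y_true) if v >= peak_frac * y_max]
    valleys = [i for i, v in enumerate(y_true) if v <= valley_frac * y_max]
    return peaks, valleys


def segment_errors(indices, y_true, y_pred):
    """RMSE and MAE restricted to the given (non-empty) index list."""
    errors = [y_true[i] - y_pred[i] for i in indices]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    mae = sum(abs(e) for e in errors) / len(errors)
    return rmse, mae


# Illustrative values, not taken from the paper.
y_true = [2.0, 10.0, 50.0, 85.0, 100.0, 15.0]
y_pred = [3.0, 12.0, 48.0, 80.0, 97.0, 15.0]

peaks, valleys = segment_indices(y_true)
peak_rmse, peak_mae = segment_errors(peaks, y_true, y_pred)
valley_rmse, valley_mae = segment_errors(valleys, y_true, y_pred)

print(peaks, valleys)                                 # [3, 4] [0, 1, 5]
print(round(peak_rmse, 4), round(peak_mae, 4))        # 4.1231 4.0
print(round(valley_rmse, 4), round(valley_mae, 4))    # 1.291 1.0
```

Scoring the two segments separately shows whether a model degrades at high output, where ramp events matter most, or at low output, where relative errors grow.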

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Pan, J.; Wang, J. A Hybrid Framework for Offshore Wind Power Forecasting: Integrating CNN-BiGRU-XGBoost with Advanced Feature Engineering and Analysis. Energies 2025, 18, 5153. https://doi.org/10.3390/en18195153
