Short-Term Load Forecasting in the Greek Power Distribution System: A Comparative Study of Gradient Boosting and Deep Learning Models

Abstract

1. Introduction

1.1. Motivation and Incitement

1.2. Literature Review and Research Gaps

1.3. Identified Gaps

- Studies using the ENTSO-E hourly load dataset for Greece often report relatively high forecasting errors, showing the difficulty of accurately predicting national-level demand.

- Existing DL-based approaches provide high accuracy but are often computationally expensive and lack interpretability.

- Many studies focus on single-model forecasting, whereas hybrid and ensemble approaches could better handle diverse demand patterns.

- The use of real-time IoT and smart meter data in STLF remains underexplored, limiting adaptability in dynamic grid environments.

1.4. Novelty, Contributions, and Paper Organization

- Comprehensive Model Evaluation: Benchmarking and comparing LightGBM, LSTM, GRU, CNN, and CNN-LSTM hybrid models for short-term load forecasting on a real-world Greek dataset.

- Realistic and Extensive Dataset: Using a multivariate dataset spanning nine years, enabling testing under realistic operational conditions.

- Advanced Data Preprocessing: Implementing KNN imputation, feature engineering, and data normalization to improve prediction quality.

- Evaluation with Multiple Metrics: Assessing models using MAE, RMSE, MAPE, R², and NRMSE, providing a comprehensive evaluation framework.

- Addressing Forecasting Challenges: Tackling common STLF issues such as overfitting, computational resource limitations, and hyperparameter optimization to ensure model robustness.

- Explicit Trade-Off Analysis: Quantifying and discussing the trade-offs between accuracy, model complexity, and generalization across DL architectures and LightGBM, providing actionable guidance for model selection under real-world constraints.

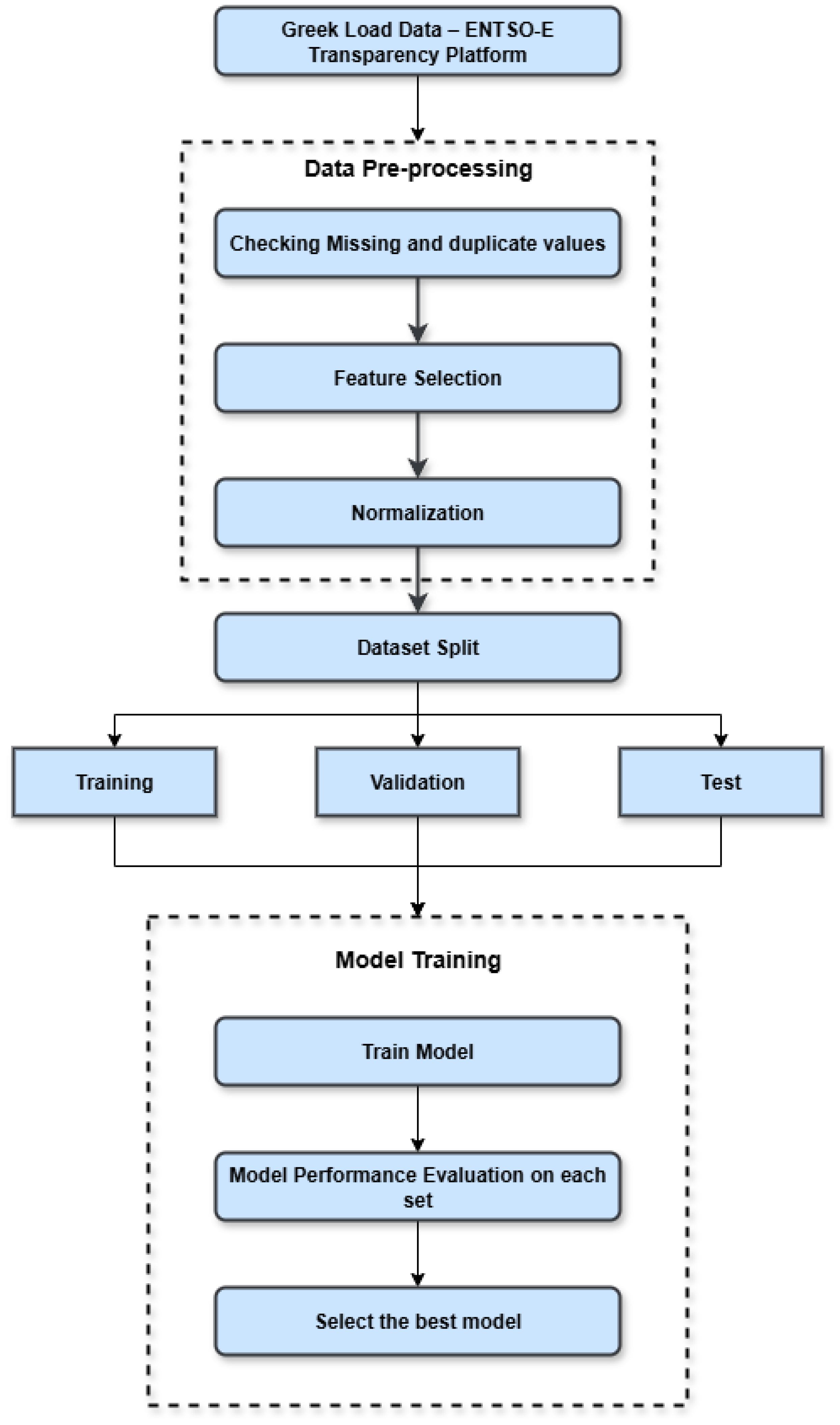

2. Data Curation and Analysis

2.1. Dataset Description

2.2. Data Preprocessing and Feature Engineering

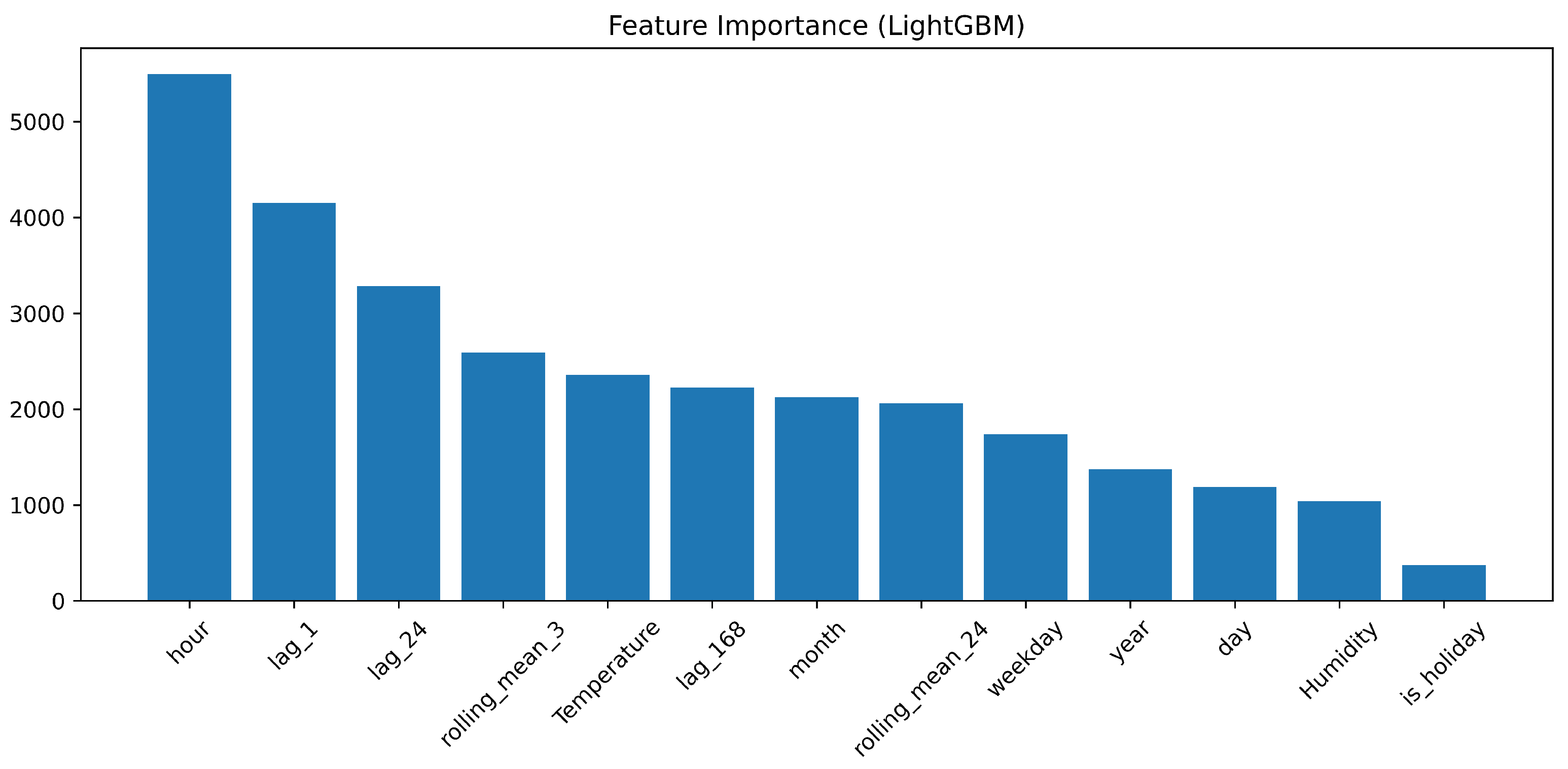

- lag_1: Load at the previous hour;

- lag_24: Load at the same hour on the previous day;

- lag_168: Load at the same hour on the previous week.

- rolling_mean_3: 3 h moving average;

- rolling_mean_24: 24 h moving average.
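The lag and rolling-mean features listed above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' pipeline: the function name `add_lag_and_rolling_features` is hypothetical, and the rolling means are computed over the hours strictly before the target hour, which is an assumption made here to avoid leaking the target into its own features (the source does not state the window alignment).

```python
def add_lag_and_rolling_features(load):
    """Build lag and rolling-mean features from an hourly load series.

    `load` is a list of hourly load values in chronological order.
    Rows are only produced once a full week of history is available,
    so the first 168 hours yield no feature rows.
    """
    lags = (1, 24, 168)   # previous hour, previous day, previous week
    windows = (3, 24)     # 3 h and 24 h moving averages
    start = max(lags)     # first index with complete history

    rows = []
    for t in range(start, len(load)):
        row = {"load": load[t]}
        for k in lags:
            row[f"lag_{k}"] = load[t - k]
        for w in windows:
            # Assumption: average the w hours before t, excluding hour t itself.
            past = load[t - w:t]
            row[f"rolling_mean_{w}"] = sum(past) / w
        rows.append(row)
    return rows


if __name__ == "__main__":
    hourly_load = [float(h) for h in range(200)]  # synthetic placeholder data
    features = add_lag_and_rolling_features(hourly_load)
    print(len(features), features[0])
```

With a pandas `Series`, the same features are commonly built with `shift(k)` for the lags and `shift(1).rolling(w).mean()` for the leakage-free moving averages.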

2.3. Feature Importance Analysis

3. Model Architectures and Experimental Setup

3.1. CNN (Convolutional Neural Network)

3.2. LSTM (Long Short-Term Memory)

3.3. Hybrid Model (CNN-LSTM)

3.4. GRU (Gated Recurrent Unit)

3.5. LightGBM (Light Gradient Boosting Machine)

3.6. Experimental Setup and Model Training

4. Results and Discussion

4.1. Performance Evaluation Metrics

4.1.1. Mean Absolute Error (MAE)

4.1.2. Root Mean Squared Error (RMSE)

4.1.3. Mean Squared Error (MSE)

4.1.4. Mean Absolute Percentage Error (MAPE)

4.1.5. Normalized Root Mean Squared Error (NRMSE)

4.1.6. Coefficient of Determination (R²)
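The six metrics named in Sections 4.1.1 to 4.1.6 can be computed with the standard definitions, as in the sketch below. The function name `regression_metrics` is hypothetical. NRMSE is normalized here by the range of the observed values (max minus min); this is an assumption, since the normalization used in the paper is not stated in this section, and normalizing by the mean is another common choice.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE, MAPE (%), NRMSE, and R^2 for two equal-length lists."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)

    # MAPE is undefined when an observed value is zero; national load is
    # strictly positive, so no special handling is added here.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n

    # Assumption: NRMSE is RMSE divided by the range of the observations.
    nrmse = rmse / (max(y_true) - min(y_true))

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot

    return {"MAE": mae, "MSE": mse, "RMSE": rmse,
            "MAPE": mape, "NRMSE": nrmse, "R2": r2}


if __name__ == "__main__":
    observed = [100.0, 200.0, 300.0, 400.0]   # synthetic values, not paper data
    predicted = [110.0, 190.0, 310.0, 390.0]
    for name, value in regression_metrics(observed, predicted).items():
        print(f"{name}: {value:.4f}")
```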

4.2. Prediction Results and Comparative Analysis

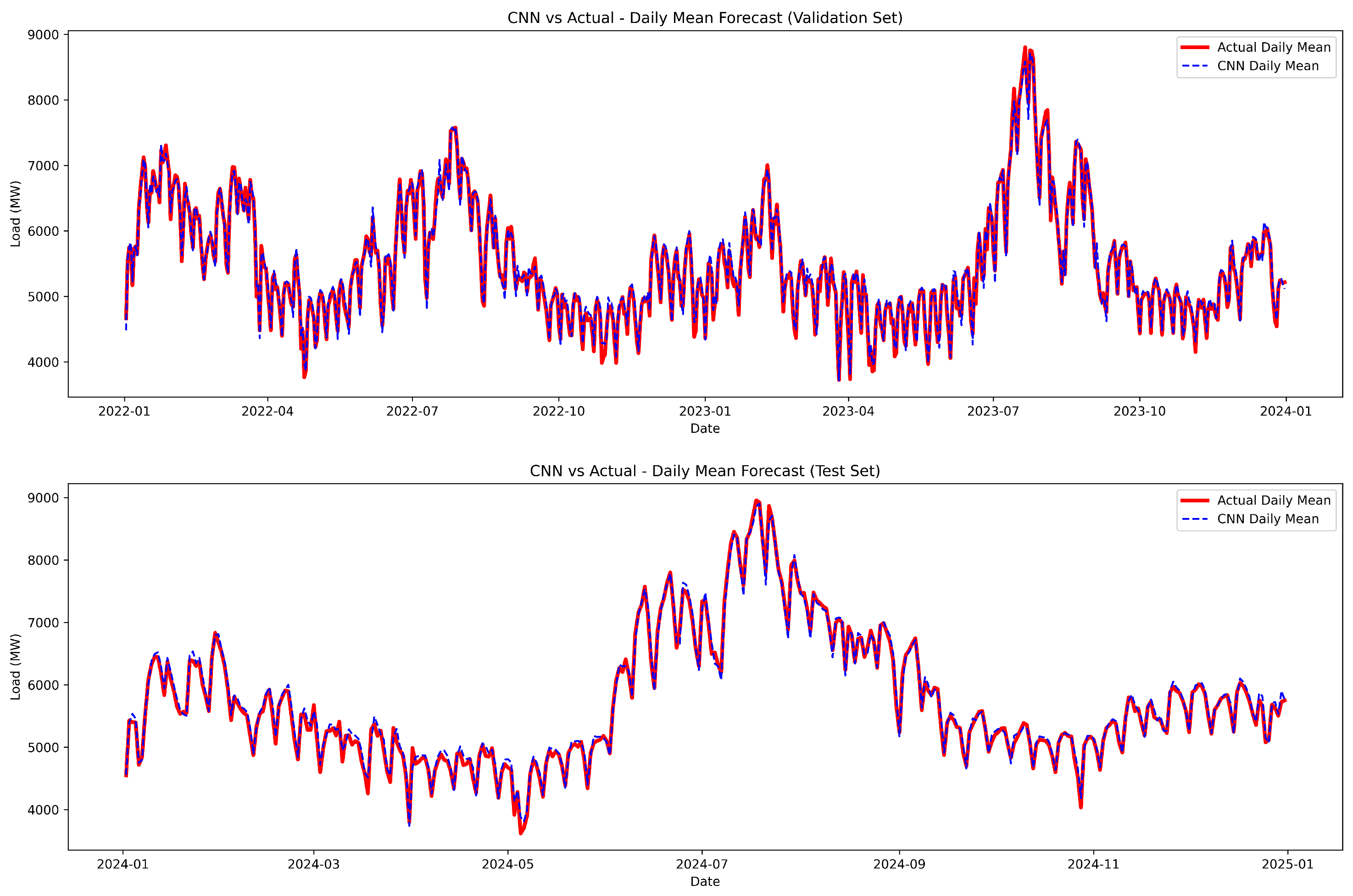

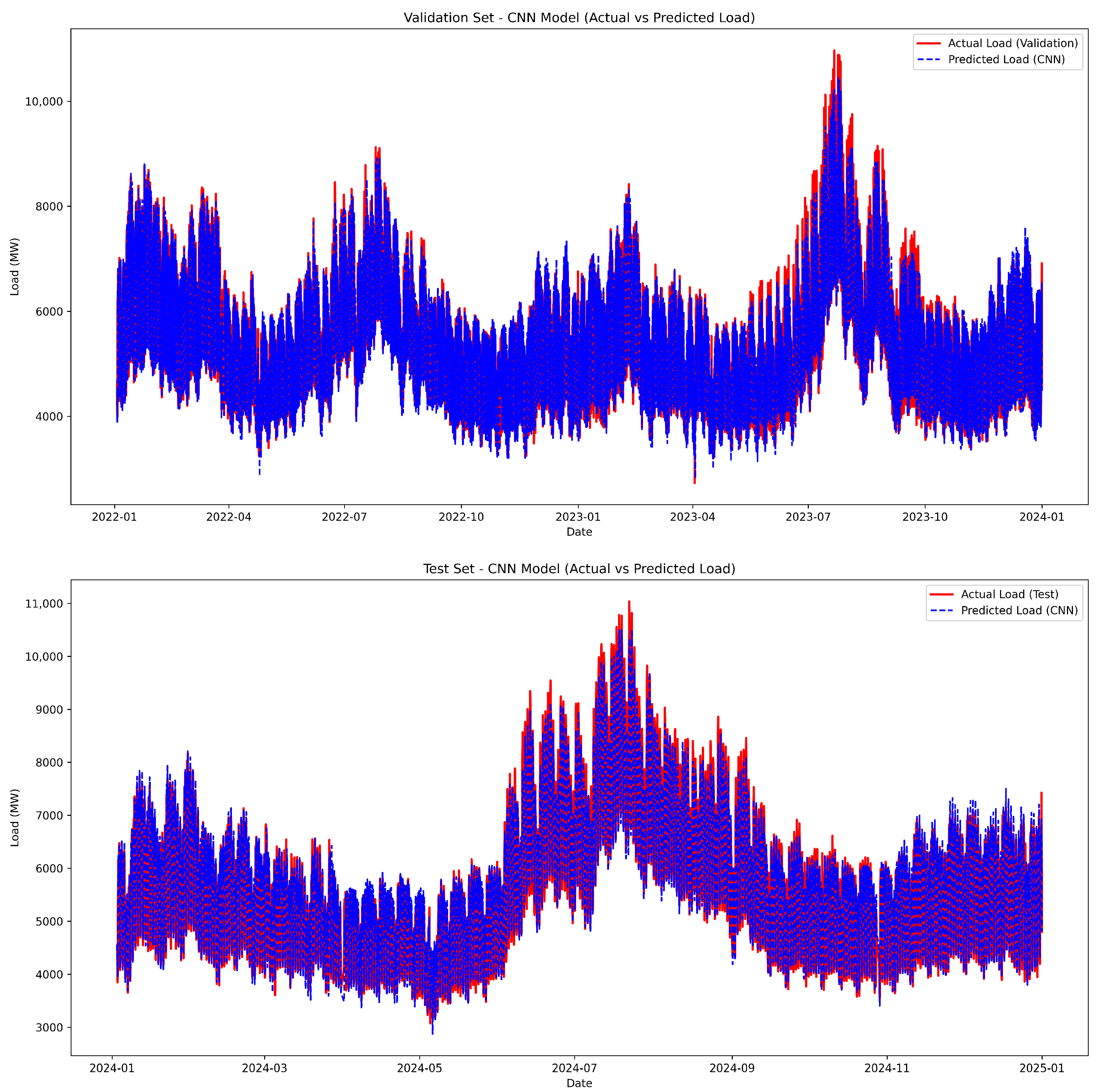

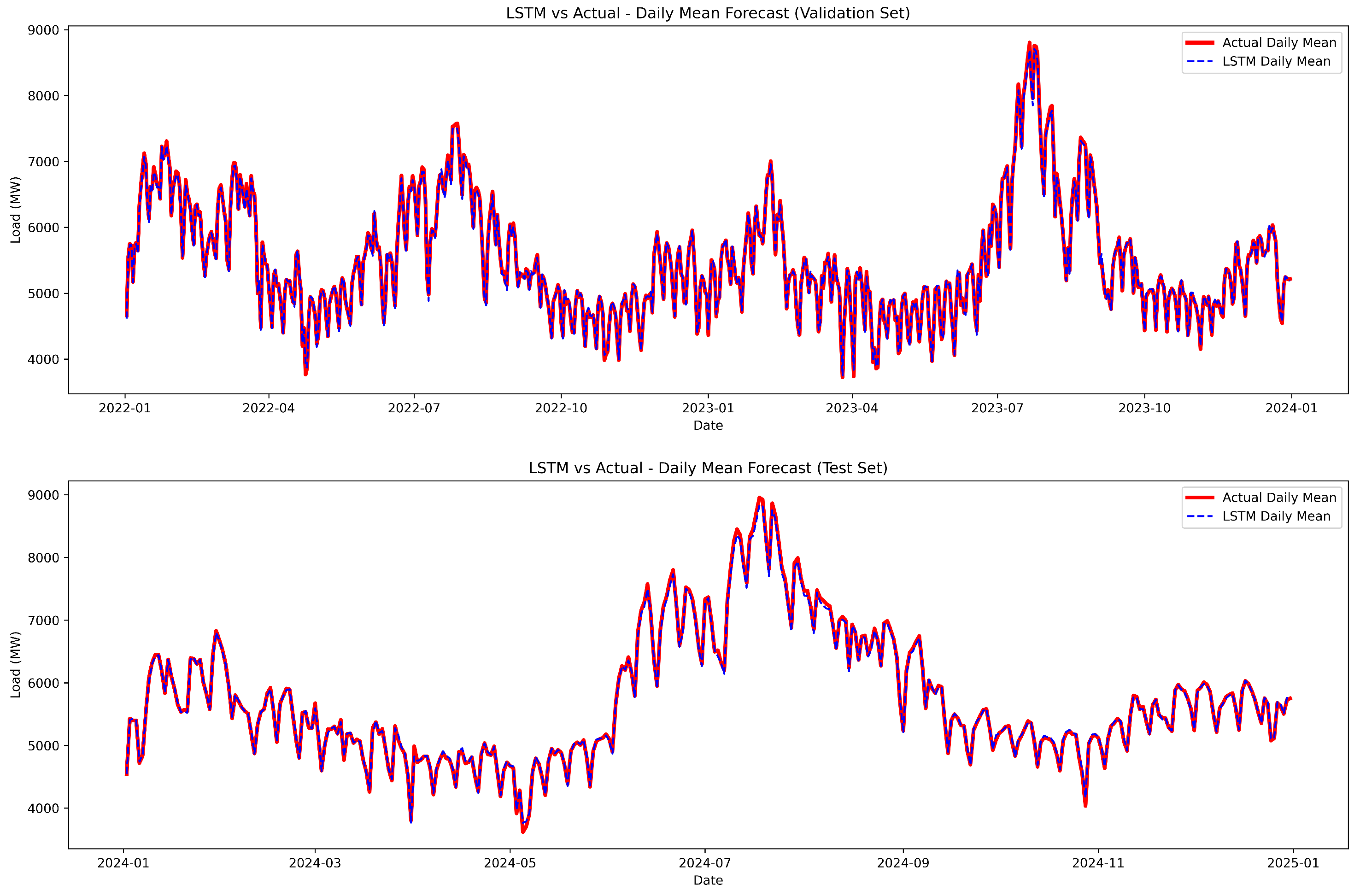

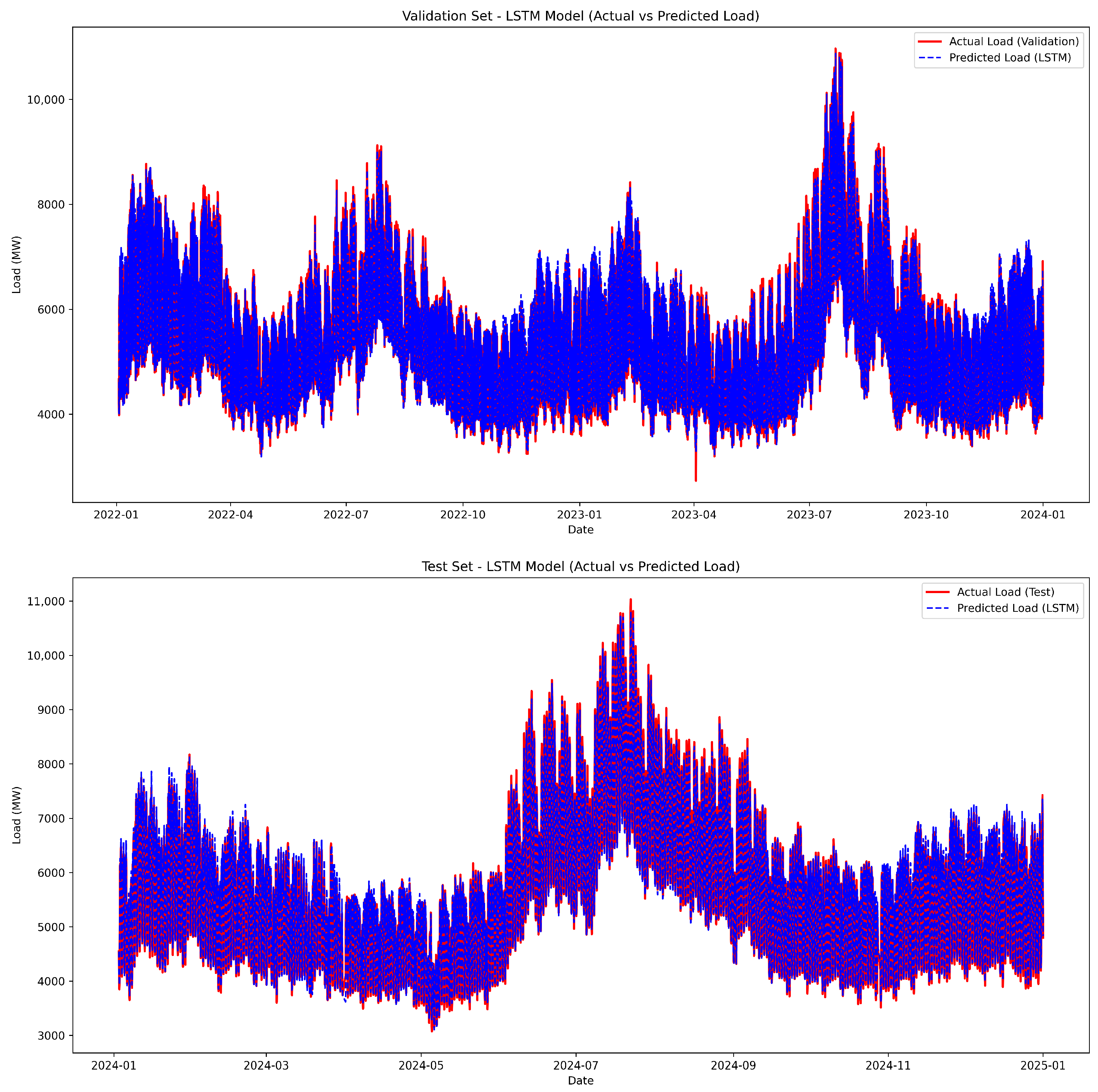

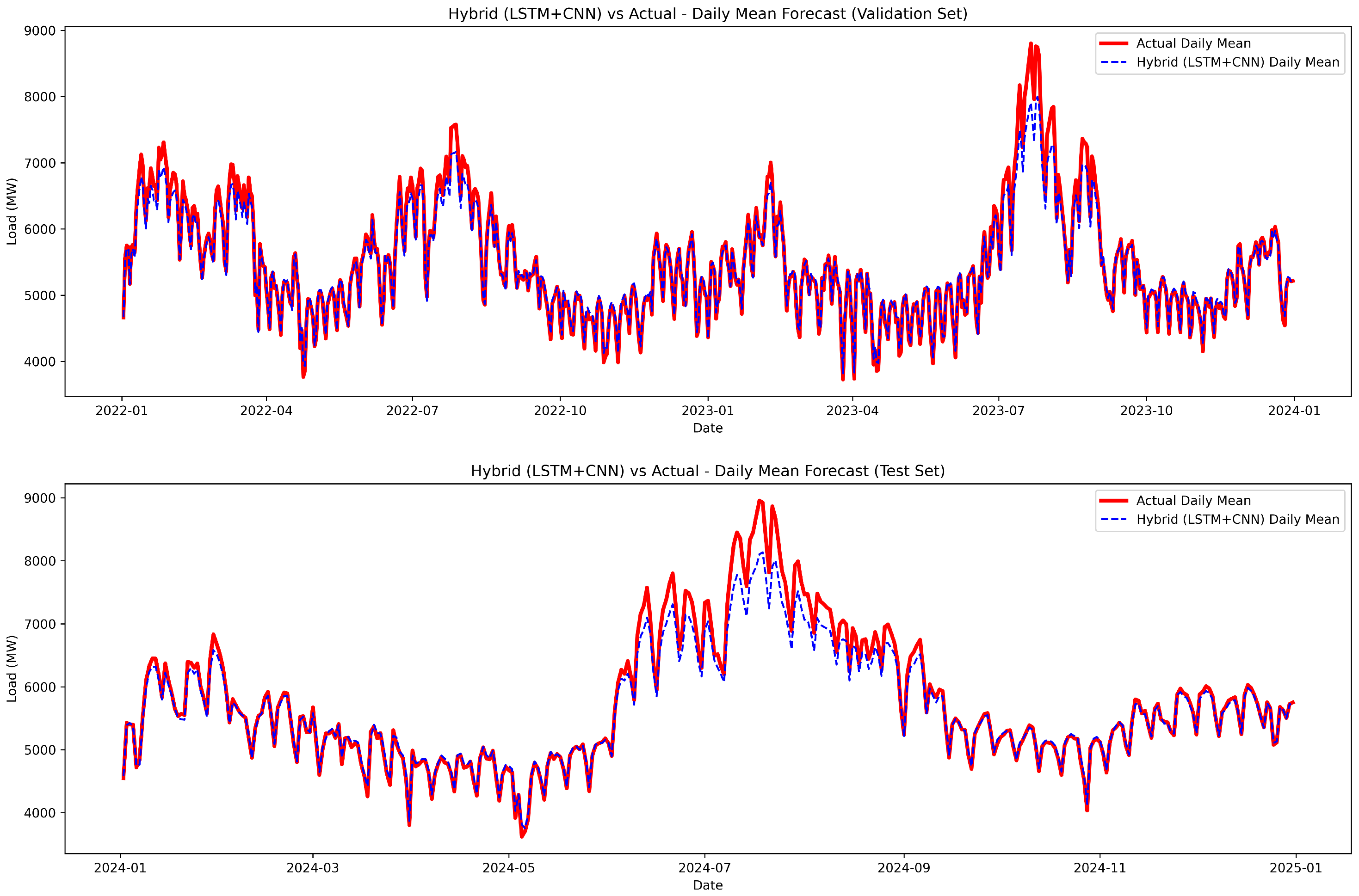

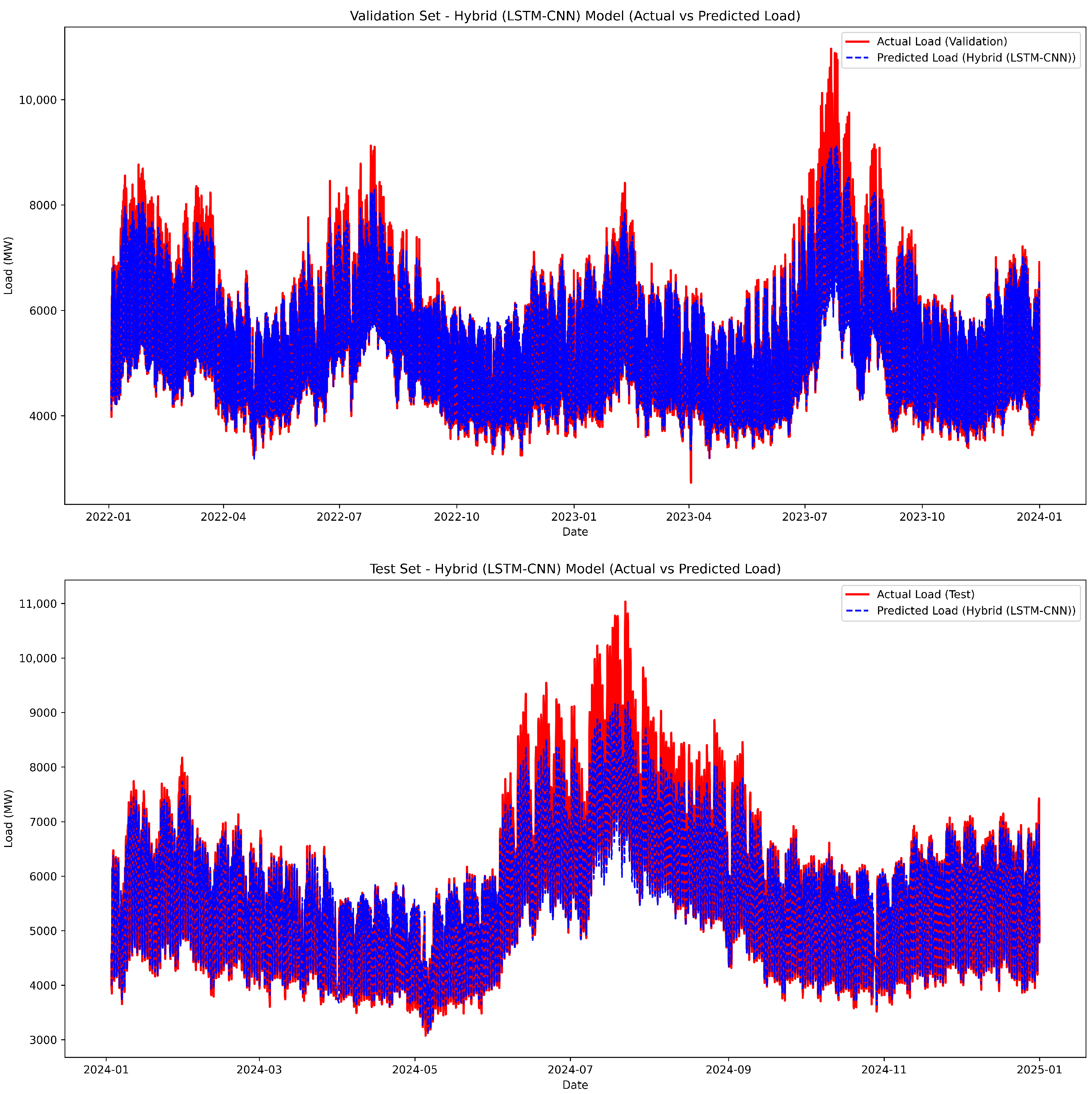

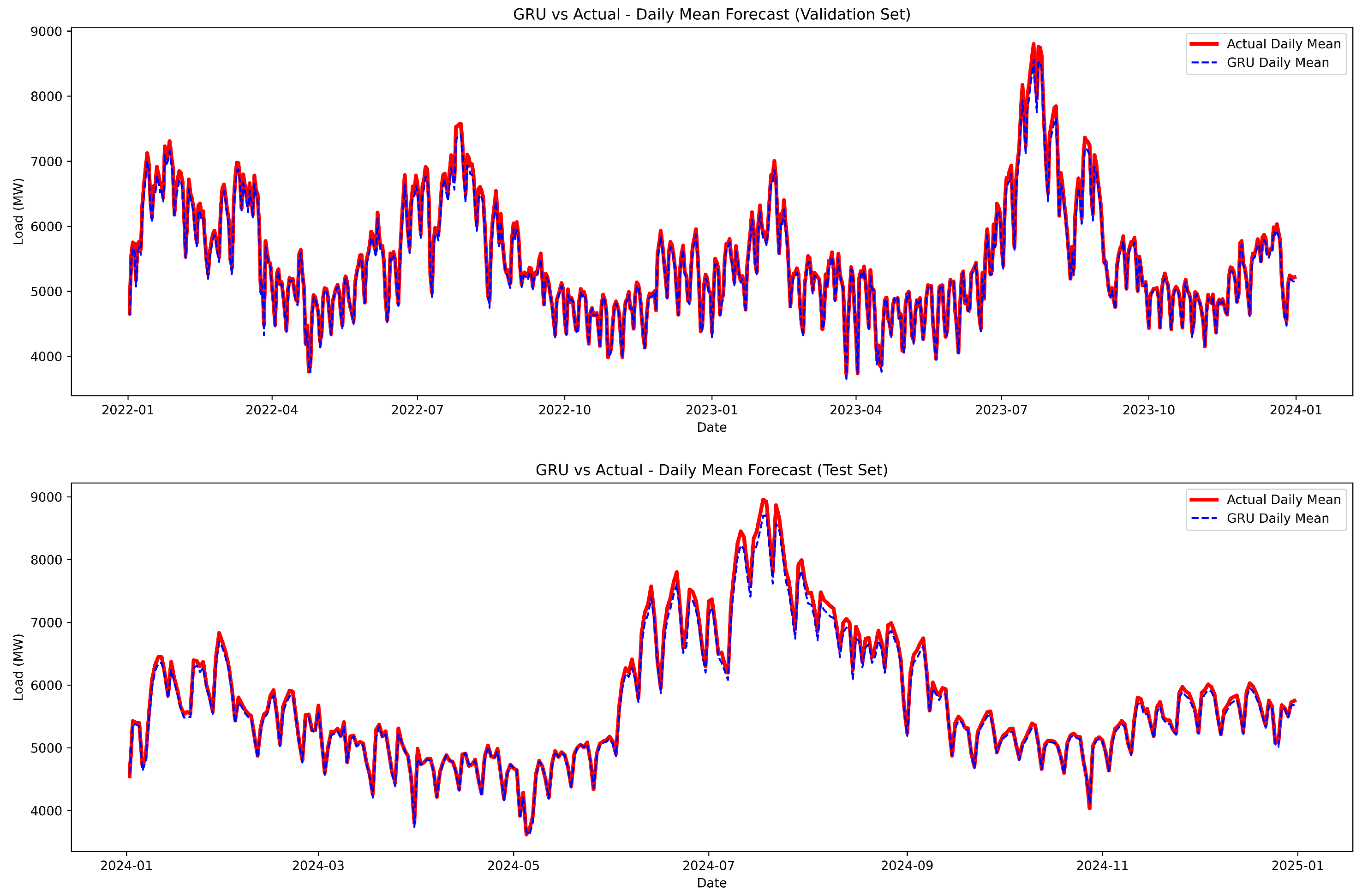

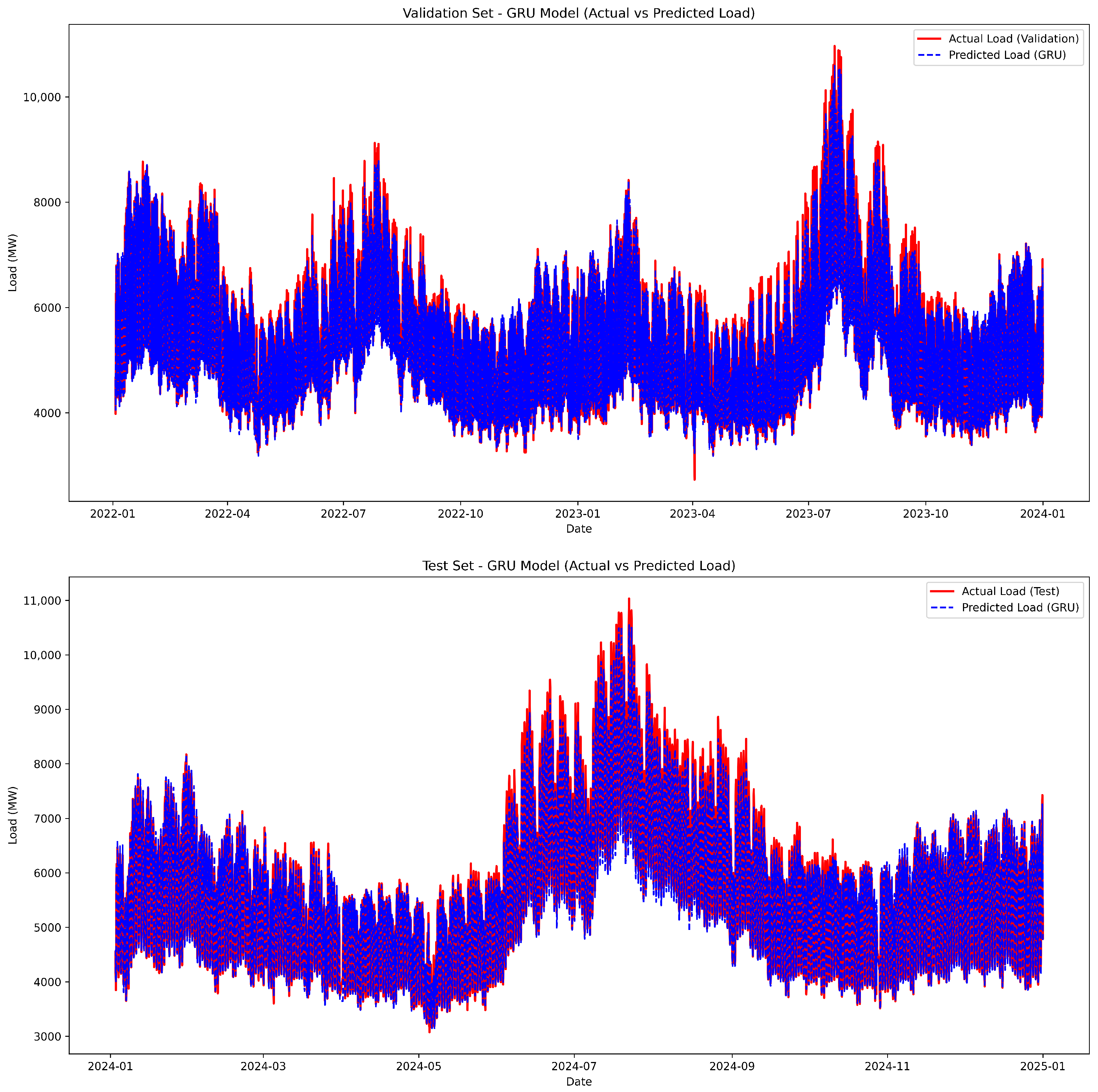

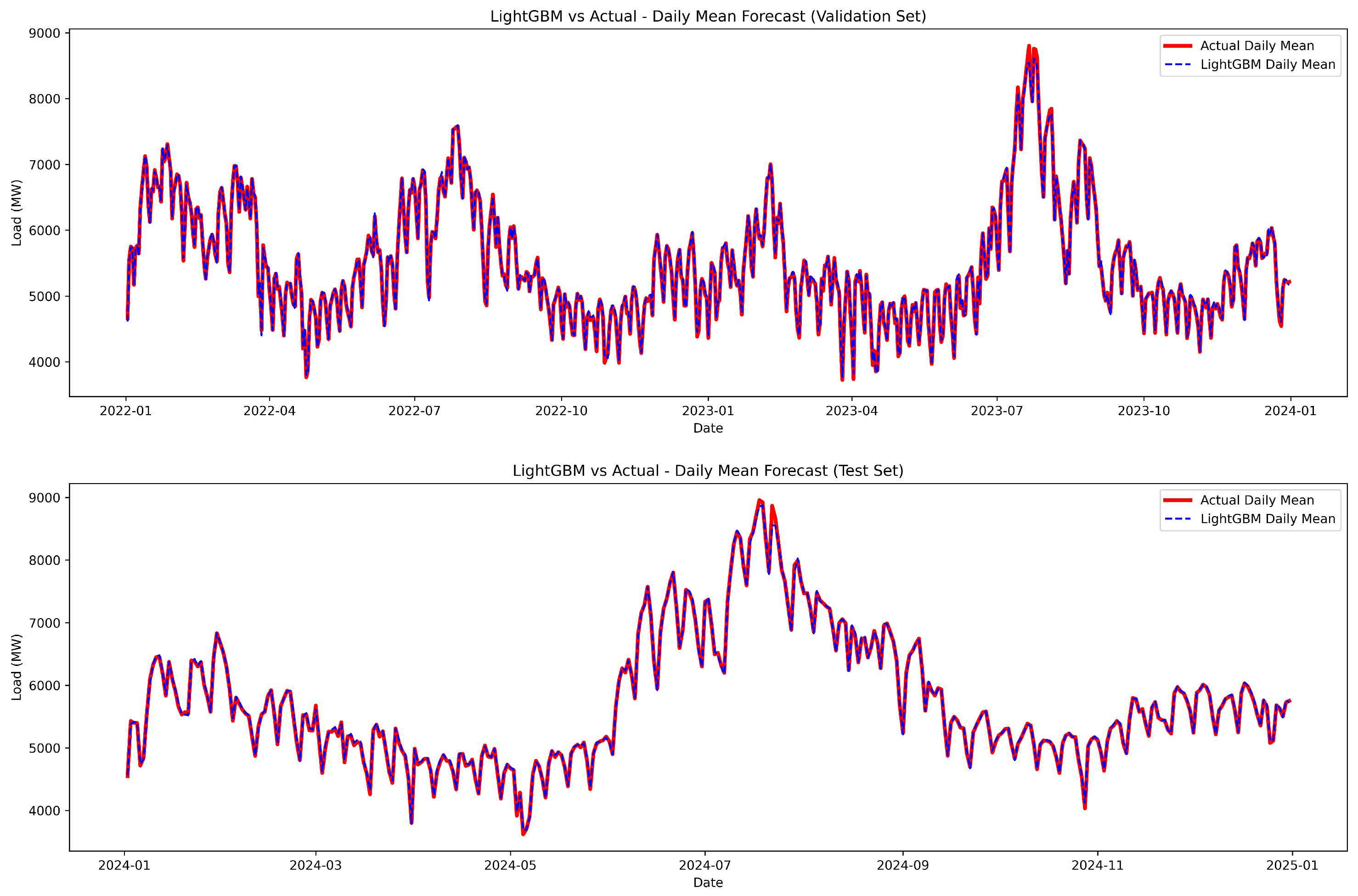

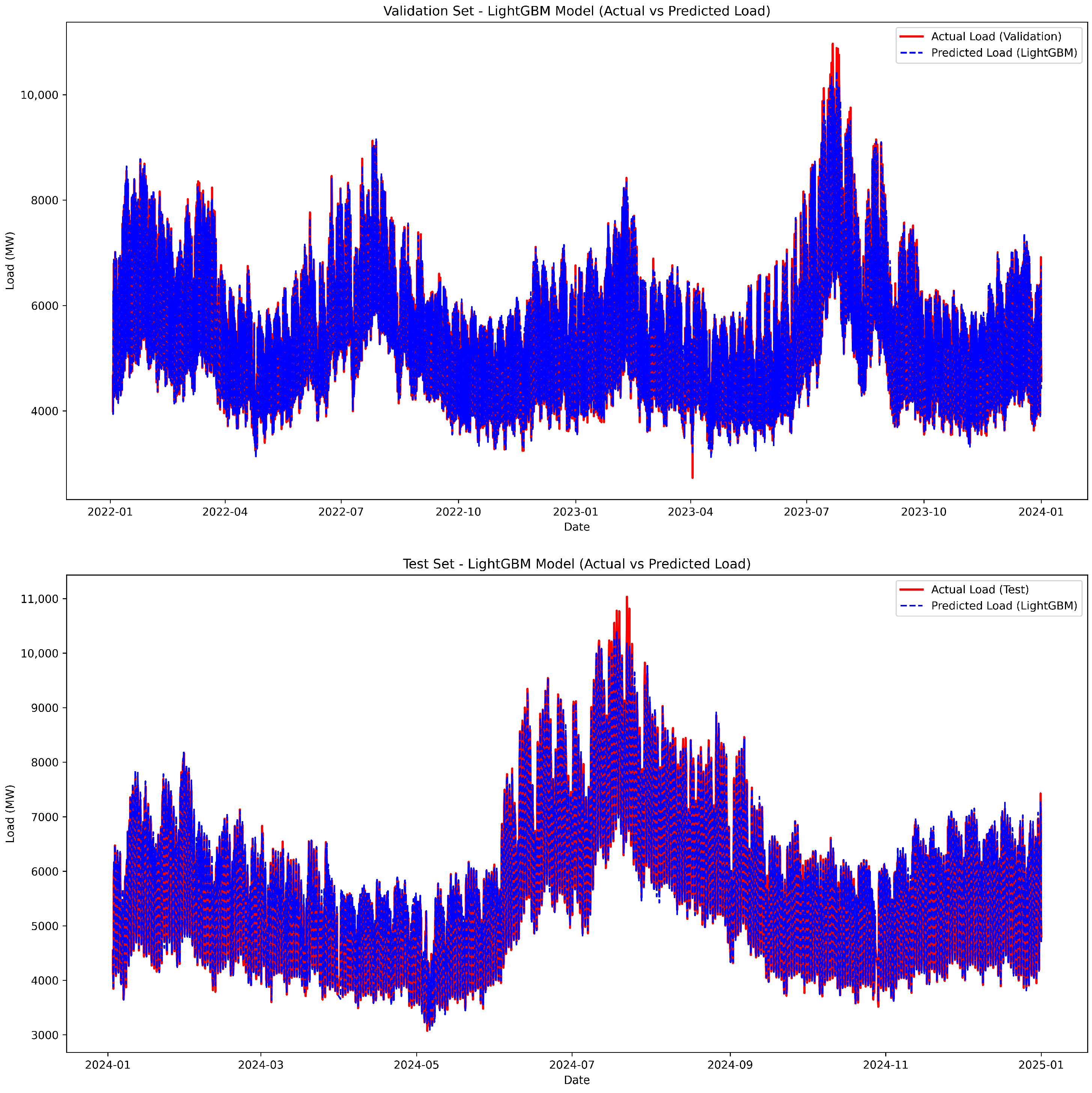

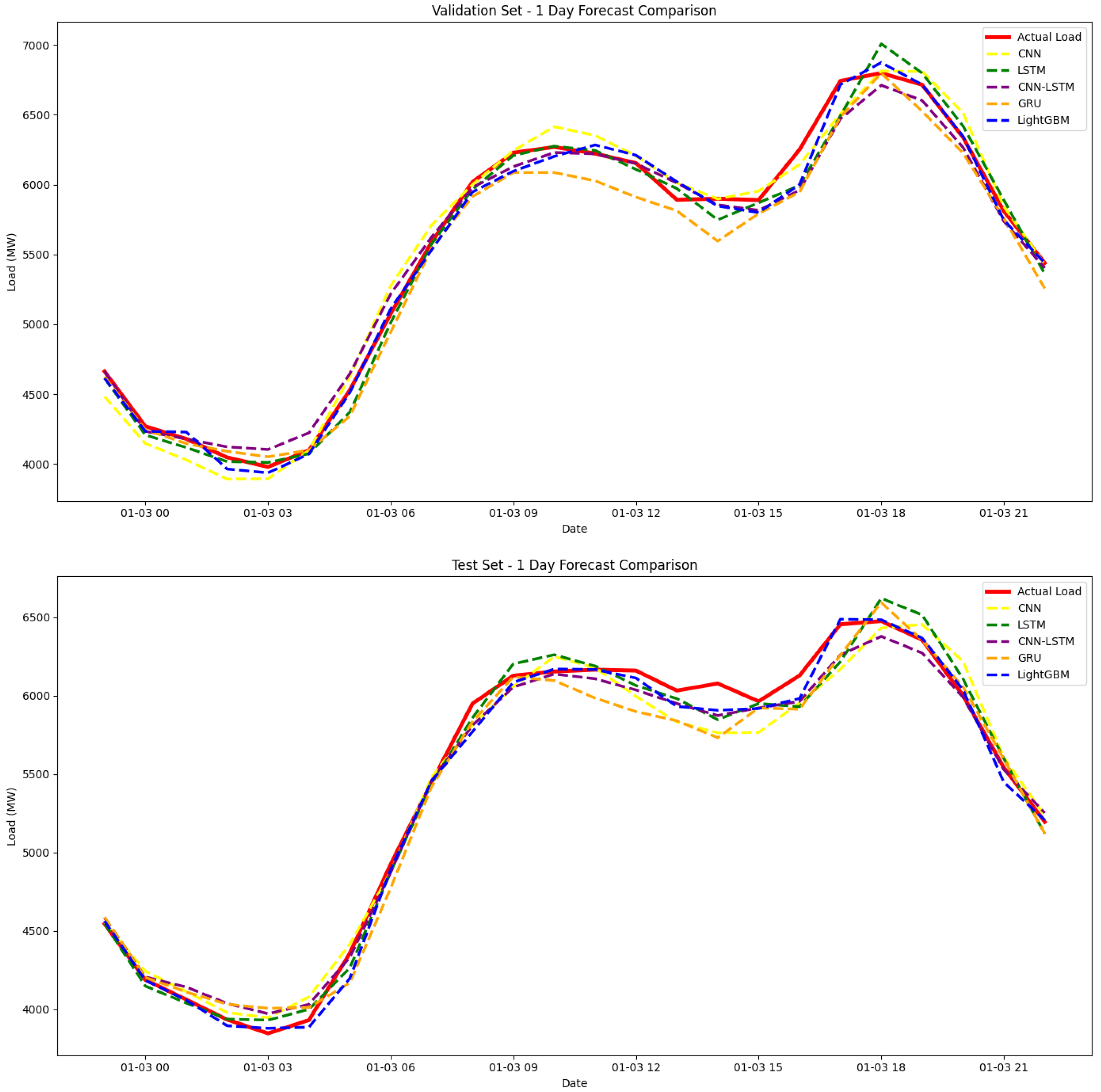

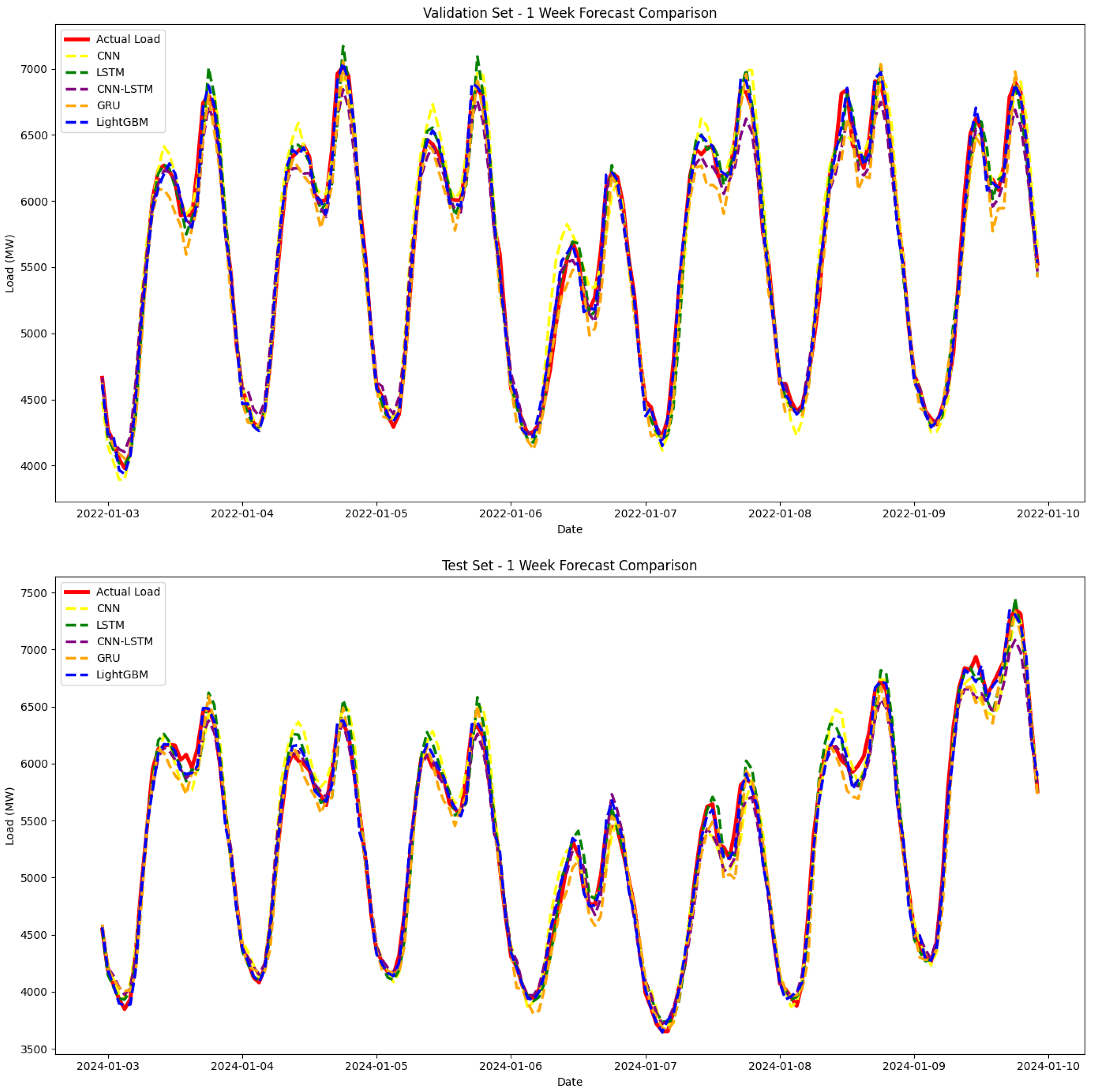

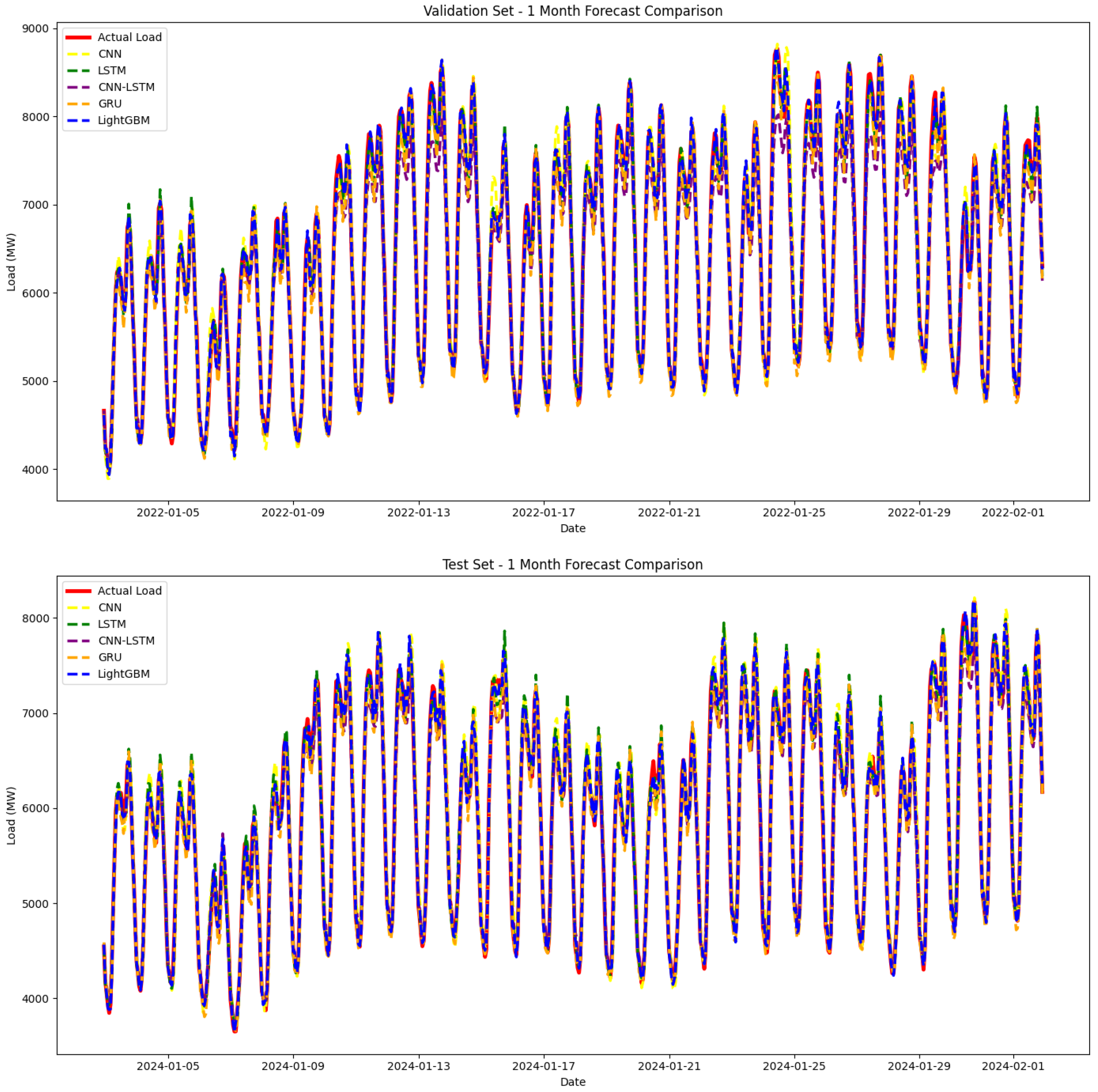

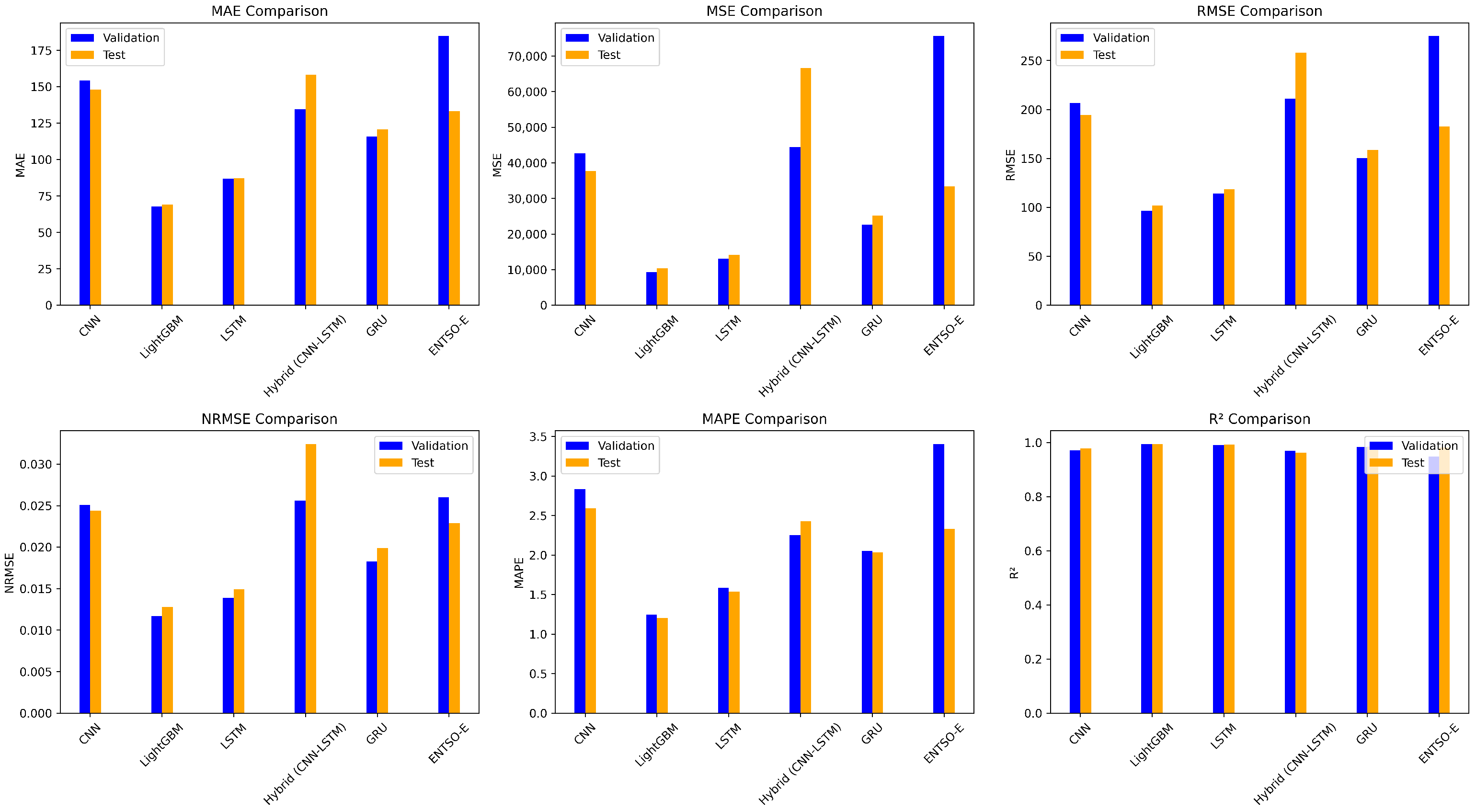

4.3. Practical Deployment Challenges

- Data Latency: LSTM, CNN, and hybrid (CNN-LSTM) models are more computationally intensive and may face delays in providing real-time predictions, particularly during periods of rapid demand changes. Minimizing latency in such cases is critical for operational decision-making.

- Computational Cost: LSTM and CNN models require more computational power, which could be a barrier in smart grid environments with resource constraints. GRU, being computationally simpler than LSTM, trains and infers somewhat faster than LSTM. LightGBM stands out for its much shorter training time and lower memory requirements, making it suitable for real-time applications at scale.

- Real-Time Constraints: Smart grids require frequent updates to forecasts based on incoming data. LSTM and hybrid (CNN-LSTM) models might require additional time to process and update their predictions, making them less ideal for applications where immediate decisions are critical. LightGBM, due to its feature-engineering approach and faster processing times, is better suited for real-time forecasting applications.
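The latency and throughput concerns above can be checked empirically before deployment. The sketch below times any prediction callable over a batch and reports predictions per second. Both `measure_throughput` and the stand-in model `persistence_forecast` are hypothetical names introduced for illustration; a trained model's predict method would take the place of the stand-in, and results depend heavily on hardware and batch size.

```python
import time


def measure_throughput(predict_fn, batch, repeats=5):
    """Return predictions per second for `predict_fn` on `batch`.

    The best (shortest) of `repeats` timed runs is used to reduce the
    influence of background load on the measurement.
    """
    if not batch:
        raise ValueError("batch must not be empty")

    predict_fn(batch)  # warm-up run, excluded from timing

    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        predict_fn(batch)
        best = min(best, time.perf_counter() - start)

    # Guard against a timer too coarse to resolve a very fast call.
    return len(batch) / best if best > 0 else float("inf")


def persistence_forecast(batch):
    """Hypothetical stand-in model: predicts the lag_1 value of each row."""
    return [row[0] for row in batch]


if __name__ == "__main__":
    # Each row holds [lag_1, lag_24, lag_168]; values are synthetic.
    rows = [[5000.0 + i, 4900.0 + i, 4800.0 + i] for i in range(10_000)]
    print(f"{measure_throughput(persistence_forecast, rows):,.0f} predictions/s")
```

Timing the full path from raw input to forecast, including feature construction, gives a more realistic picture than timing the model call alone.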

4.4. Computational Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| API | Application Programming Interface |
| ARIMA | Autoregressive Integrated Moving Average |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| CNN-LSTM | Convolutional Neural Network - Long Short-Term Memory |
| DBD-FELF | Dynamic Block-Diagonal Fuzzy Electric Load Forecaster |
| DL | Deep Learning |
| EEMD | Ensemble Empirical Mode Decomposition |
| EMD | Empirical Mode Decomposition |
| ENTSO-E | European Network of Transmission System Operators for Electricity |
| FF ANN | Feed-Forward Artificial Neural Network |
| GBR | Gradient Boosting Regressor |
| GPU | Graphics Processing Unit |
| GRU | Gated Recurrent Unit |
| IoT | Internet of Things |
| KNN | K-Nearest Neighbor |
| LightGBM | Light Gradient Boosting Machine |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| ML | Machine Learning |
| MSE | Mean Squared Error |
| MW | Megawatt |
| MWh | Megawatt-hour |
| NRMSE | Normalized Root Mean Squared Error |
| R² | Coefficient of Determination |
| RBFNNs | Radial Basis Function Neural Networks |
| RMSE | Root Mean Squared Error |
| RNNs | Recurrent Neural Networks |
| SSA | Singular Spectrum Analysis |
| STLF | Short-Term Load Forecasting |
| SVR | Support Vector Regression |
| SVD | Singular Value Decomposition |
| TL | Transfer Learning |
| VSTLF | Very Short-Term Load Forecasting |
| XGBoost | Extreme Gradient Boosting Regressor |

References

1. Koukaras, P.; Mustapha, A.; Mystakidis, A.; Tjortjis, C. Optimizing building short-term load forecasting: A comparative analysis of machine learning models. Energies 2024, 17, 1450.

2. Ullah, K.; Ahsan, M.; Hasanat, S.M.; Haris, M.; Yousaf, H.; Raza, S.F.; Tandon, R.; Abid, S.; Ullah, Z. Short-term load forecasting: A comprehensive review and simulation study with CNN-LSTM hybrids approach. IEEE Access 2024, 12, 111858–111881.

3. Yuan, M.; Xie, J.; Liu, C.; Xu, Z. Short-term load forecasting for an industrial building based on diverse load patterns. Energy 2025, 334, 137481.

4. Junior, M.Y.; Freire, R.Z.; Seman, L.O.; Stefenon, S.F.; Mariani, V.C.; dos Santos Coelho, L. Optimized hybrid ensemble learning approaches applied to very short-term load forecasting. Int. J. Electr. Power Energy Syst. 2024, 155, 109579.

5. Cheng, X.; Wang, L.; Zhang, P.; Wang, X.; Yan, Q. Short-term fast forecasting based on family behavior pattern recognition for small-scale users load. Clust. Comput. 2022, 25, 2107–2123.

6. Varelas, G.; Tzimas, G.; Alefragis, P. A new approach in forecasting Greek electricity demand: From high dimensional hourly series to univariate series transformation. Electr. J. 2023, 36, 107305.

7. Arvanitidis, A.I.; Bargiotas, D.; Daskalopulu, A.; Laitsos, V.M.; Tsoukalas, L.H. Enhanced short-term load forecasting using artificial neural networks. Energies 2021, 14, 7788.

8. Sideratos, G.; Ikonomopoulos, A.; Hatziargyriou, N.D. A novel fuzzy-based ensemble model for load forecasting using hybrid deep neural networks. Electr. Power Syst. Res. 2020, 178, 106025.

9. Stamatellos, G.; Stamatelos, T. Short-term load forecasting of the Greek electricity system. Appl. Sci. 2023, 13, 2719.

10. Stratigakos, A.; Bachoumis, A.; Vita, V.; Zafiropoulos, E. Short-term net load forecasting with singular spectrum analysis and LSTM neural networks. Energies 2021, 14, 4107.

11. Kandilogiannakis, G.; Mastorocostas, P.; Voulodimos, A.; Hilas, C. Short-Term Load Forecasting of the Greek Power System Using a Dynamic Block-Diagonal Fuzzy Neural Network. Energies 2023, 16, 4227.

12. Stergiou, K.; Karakasidis, T.E. Application of deep learning and chaos theory for load forecasting in Greece. Neural Comput. Appl. 2021, 33, 16713–16731.

13. Tzortzis, A.M.; Pelekis, S.; Spiliotis, E.; Karakolis, E.; Mouzakitis, S.; Psarras, J.; Askounis, D. Transfer learning for day-ahead load forecasting: A case study on European national electricity demand time series. Mathematics 2023, 12, 19.

14. Panapakidis, I.P.; Skiadopoulos, N.; Christoforidis, G.C. Combined forecasting system for short-term bus load forecasting based on clustering and neural networks. IET Gener. Transm. Distrib. 2020, 14, 3652–3664.

15. Katya, E. Exploring Feature Engineering Strategies for Improving Predictive Models in Data Science. Res. J. Comput. Syst. Eng. 2023, 4, 201–215.

16. Murti, D.M.P.; Pujianto, U.; Wibawa, A.P.; Akbar, M.I. K-Nearest Neighbor (K-NN) based Missing Data Imputation. In Proceedings of the 2019 5th International Conference on Science in Information Technology (ICSITech), Yogyakarta, Indonesia, 23–24 October 2019; pp. 83–88.

17. Bichri, H.; Chergui, A.; Hain, M. Investigating the Impact of Train/Test Split Ratio on the Performance of Pre-Trained Models with Custom Datasets. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 2.

18. Wang, C.; Li, X.; Shi, Y.; Jiang, W.; Song, Q.; Li, X. Load forecasting method based on CNN and extended LSTM. Energy Rep. 2024, 12, 2452–2461.

19. Jalali, S.M.J.; Ahmadian, S.; Khosravi, A.; Shafie-khah, M.; Nahavandi, S.; Catalão, J.P.S. A Novel Evolutionary-Based Deep Convolutional Neural Network Model for Intelligent Load Forecasting. IEEE Trans. Ind. Inform. 2021, 17, 8243–8253.

20. Koukaras, P.; Bezas, N.; Gkaidatzis, P.; Ioannidis, D.; Tzovaras, D.; Tjortjis, C. Introducing a novel approach in one-step ahead energy load forecasting. Sustain. Comput. Inform. Syst. 2021, 32, 100616.

21. Alhussein, M.; Aurangzeb, K.; Haider, S.I. Hybrid CNN-LSTM model for short-term individual household load forecasting. IEEE Access 2020, 8, 180544–180557.

22. Yunita, A.; Pratama, M.I.; Almuzakki, M.Z.; Ramadhan, H.; Akhir, E.A.P.; Firdausiah Mansur, A.B.; Basori, A.H. Performance analysis of neural network architectures for time series forecasting: A comparative study of RNN, LSTM, GRU, and hybrid models. MethodsX 2025, 15, 103462.

23. Park, J.; Hwang, E. A two-stage multistep-ahead electricity load forecasting scheme based on LightGBM and attention-BiLSTM. Sensors 2021, 21, 7697.

24. Nti, I.K.; Teimeh, M.; Nyarko-Boateng, O.; Adekoya, A.F. Electricity load forecasting: A systematic review. J. Electr. Syst. Inf. Technol. 2020, 7, 13.

25. Steurer, M.; Hill, R.J.; Pfeifer, N. Metrics for evaluating the performance of machine learning based automated valuation models. J. Prop. Res. 2021, 38, 99–129.

26. Tanoli, I.K.; Mehdi, A.; Algarni, A.D.; Fazal, A.; Khan, T.A.; Ahmad, S.; Ateya, A.A. Machine learning for high-performance solar radiation prediction. Energy Rep. 2024, 12, 4794–4804.

| Metric | Train | Validation | Test |
|---|---|---|---|
| MAE | 126.8867 | 154.0706 | 148.0502 |
| MSE | 31,255.7571 | 42,715.0626 | 37,676.0284 |
| RMSE | 176.7930 | 206.6762 | 194.1031 |
| R² | 0.9763 | 0.9706 | 0.9789 |
| MAPE (%) | 2.2022 | 2.8368 | 2.5900 |
| NRMSE | 0.0235 | 0.0251 | 0.0244 |

| Metric | Train | Validation | Test |
|---|---|---|---|
| MAE | 92.2249 | 86.7305 | 87.2611 |
| MSE | 17,917.3677 | 13,067.9785 | 14,085.0742 |
| RMSE | 133.8558 | 114.3153 | 118.6806 |
| R² | 0.9864 | 0.9910 | 0.9921 |
| MAPE (%) | 1.5973 | 1.5870 | 1.5339 |
| NRMSE | 0.0178 | 0.0139 | 0.0149 |

| Metric | Train | Validation | Test |
|---|---|---|---|
| MAE | 139.1704 | 134.6142 | 158.2293 |
| MSE | 44,559.4056 | 44,445.2303 | 66,591.6128 |
| RMSE | 211.0910 | 210.8204 | 258.0535 |
| R² | 0.9662 | 0.9694 | 0.9628 |
| MAPE (%) | 2.1921 | 2.2496 | 2.4263 |
| NRMSE | 0.0280 | 0.0256 | 0.0324 |

| Metric | Train | Validation | Test |
|---|---|---|---|
| MAE | 127.2923 | 115.6403 | 120.6414 |
| MSE | 29,515.2823 | 22,622.5201 | 25,217.7061 |
| RMSE | 171.8001 | 150.4078 | 158.8008 |
| R² | 0.9776 | 0.9844 | 0.9859 |
| MAPE (%) | 2.1491 | 2.0508 | 2.0330 |
| NRMSE | 0.0228 | 0.0183 | 0.0199 |

| Metric | Train | Validation | Test |
|---|---|---|---|
| MAE | 45.5114 | 67.8065 | 69.1205 |
| MSE | 4644.3277 | 9260.6456 | 10,335.7831 |
| RMSE | 68.1493 | 96.2322 | 101.6651 |
| R² | 0.9965 | 0.9936 | 0.9942 |
| MAPE (%) | 0.8022 | 1.2450 | 1.2025 |
| NRMSE | 0.0090 | 0.0117 | 0.0128 |

| Metric | ANN [7] | SVD-ARIMA [6] | FF ANN [9] | ENTSO-E | LightGBM |
|---|---|---|---|---|---|
| MAE | 112.9198 | 220.5342 | - | 133.3072 | 69.1205 |
| MSE | 22,111.6668 | - | - | 33,334.4298 | 10,335.7831 |
| RMSE | - | 267.3871 | - | 182.5771 | 101.6651 |
| R² | - | - | - | 0.9813 | 0.9942 |
| MAPE (%) | 1.92 | 4.3286 | 2.61 | 2.3282 | 1.2025 |
| NRMSE | - | - | 0.036 | 0.0229 | 0.0128 |
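To read the comparison table in relative terms, the short sketch below computes the percentage reduction in each error metric for LightGBM against the ENTSO-E column, using only values copied from the table. The helper name `relative_reduction` is hypothetical, and the other baselines are left out because the table reports only some metrics for them.

```python
def relative_reduction(baseline, model):
    """Percentage by which `model` error is lower than `baseline` error."""
    return 100.0 * (baseline - model) / baseline


# (ENTSO-E, LightGBM) pairs taken from the comparison table.
table_values = {
    "MAE": (133.3072, 69.1205),
    "RMSE": (182.5771, 101.6651),
    "MAPE (%)": (2.3282, 1.2025),
    "NRMSE": (0.0229, 0.0128),
}

reductions = {
    metric: relative_reduction(baseline, model)
    for metric, (baseline, model) in table_values.items()
}

if __name__ == "__main__":
    for metric, pct in reductions.items():
        print(f"{metric}: {pct:.1f}% lower than the ENTSO-E column")
```

On these figures the reductions fall between roughly 44% and 48%, depending on the metric.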

| Model | Training Time | Inference Speed | Resource Usage |
|---|---|---|---|
| LightGBM | 3.55 s (CPU) | >10,000/s | Low, <10 MB |
| CNN | ∼8 min (GPU) | ∼4500/s | Moderate |
| LSTM | ∼12 min (GPU) | ∼3200/s | High |
| GRU | ∼10 min (GPU) | ∼3600/s | Medium |
| CNN-LSTM | ∼18 min (GPU) | ∼2800/s | Highest |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shiblee, M.F.H.; Koukaras, P. Short-Term Load Forecasting in the Greek Power Distribution System: A Comparative Study of Gradient Boosting and Deep Learning Models. Energies 2025, 18, 5060. https://doi.org/10.3390/en18195060
