Acoustic-Based Condition Recognition for Pumped Storage Units Using a Hierarchical Cascaded CNN and MHA-LSTM Model

Abstract

1. Introduction

2. Methods and Datasets

2.1. Method

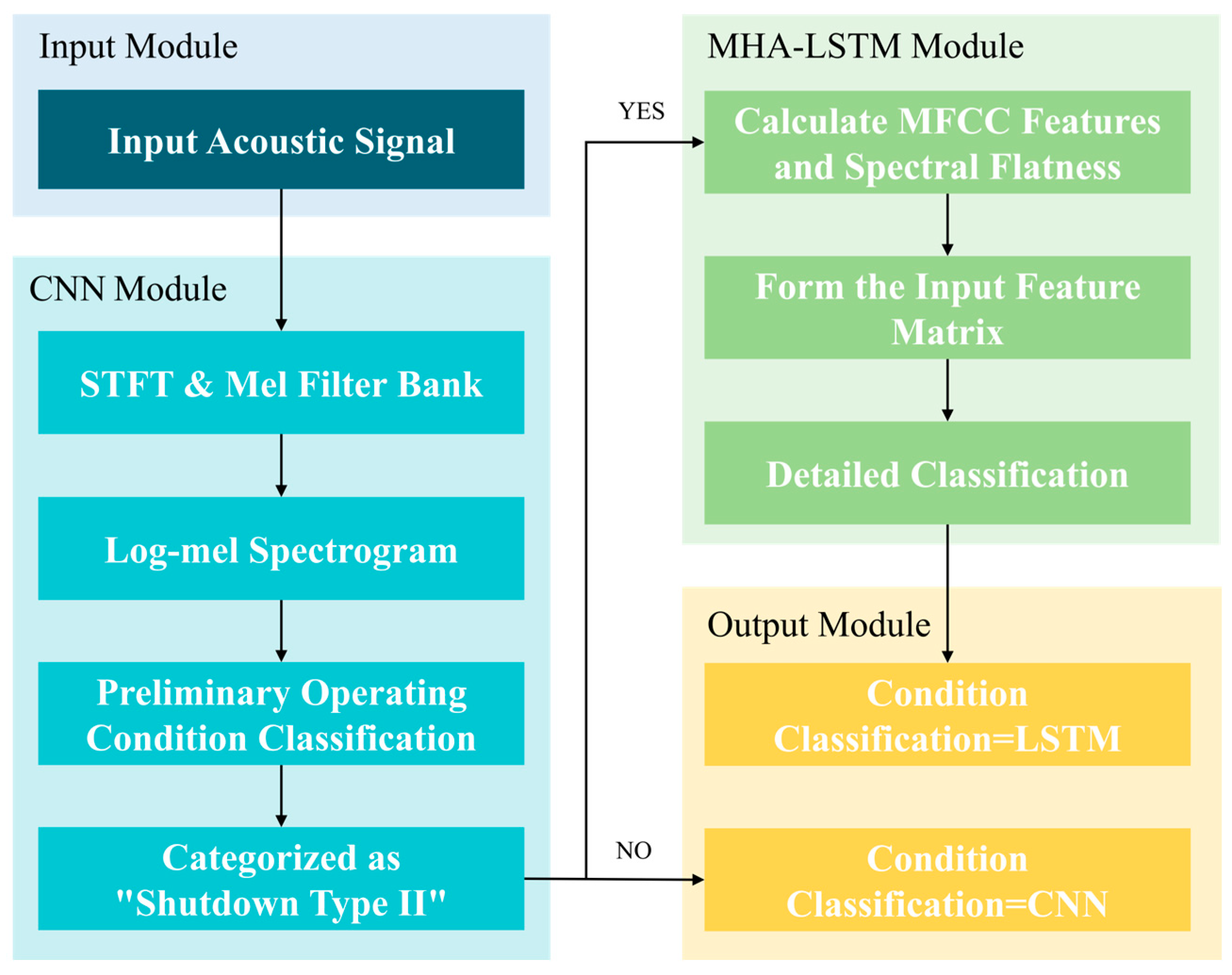

2.1.1. Hierarchical Cascade

2.1.2. Convolutional Neural Network

2.1.3. Long Short-Term Memory Network

2.1.4. Multi-Head Attention

2.1.5. MHA-LSTM

2.1.6. Assessment of Indicators

2.2. Datasets

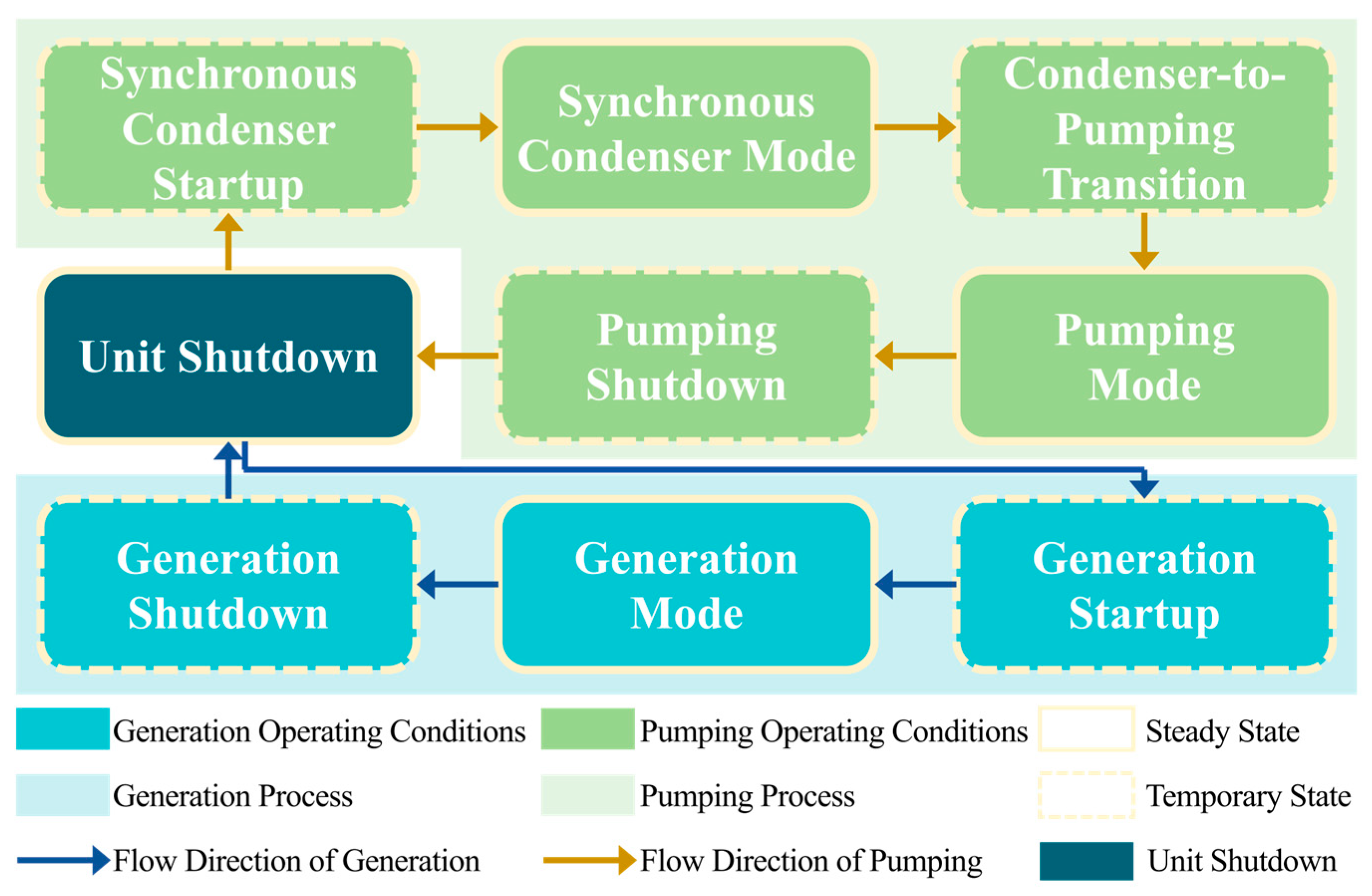

2.2.1. Working Condition Type

2.2.2. Data Collection

3. Results

3.1. Frequency-Domain Feature Analysis

3.1.1. Data Preprocessing

3.1.2. Mel Spectrogram Comparison

3.2. Analysis of Model Results

3.2.1. CNN Model Results

3.2.2. MHA-LSTM Model Results

3.2.3. CNN+MHA-LSTM Model Results

3.3. Comparative Evaluation of Models

4. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Layer | Kernel Size | Stride | Number of Channels | Output Size |
|---|---|---|---|---|
| Conv2D | 3 × 3 | 1 × 1 | 32 | 222 × 222 × 32 |
| MaxPooling2D | 2 × 2 | 2 × 2 | 32 | 111 × 111 × 32 |
| Conv2D | 3 × 3 | 1 × 1 | 64 | 109 × 109 × 64 |
| MaxPooling2D | 2 × 2 | 2 × 2 | 64 | 54 × 54 × 64 |
| Conv2D | 3 × 3 | 1 × 1 | 128 | 52 × 52 × 128 |
| MaxPooling2D | 2 × 2 | 2 × 2 | 128 | 26 × 26 × 128 |
| Flatten | / | / | / | 26 × 26 × 128 |
| Dense | / | / | 128 | 128 |
| Dropout | / | / | / | 128 |
| Dense (Softmax) | / | / | 8 | 8 |
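
The output sizes listed for the convolution and pooling layers can be reproduced with simple shape arithmetic. The sketch below is only a consistency check, not the authors' implementation. It assumes a square 224 × 224 input and unpadded ("valid") windows; neither is stated in the table, but both are implied by the 222 × 222 output of the first 3 × 3 convolution. The helper names `conv_out` and `trace_shapes` are illustrative.

```python
def conv_out(size: int, kernel: int, stride: int) -> int:
    """Spatial size after an unpadded convolution or pooling window."""
    return (size - kernel) // stride + 1


# (layer name, kernel, stride, channels) copied from the table above.
LAYERS = [
    ("Conv2D", 3, 1, 32),
    ("MaxPooling2D", 2, 2, 32),
    ("Conv2D", 3, 1, 64),
    ("MaxPooling2D", 2, 2, 64),
    ("Conv2D", 3, 1, 128),
    ("MaxPooling2D", 2, 2, 128),
]


def trace_shapes(input_size: int = 224):
    """Return (name, height, width, channels) after each layer.

    input_size=224 is an assumption inferred from the table, not a stated value.
    """
    shapes = []
    size = input_size
    for name, kernel, stride, channels in LAYERS:
        size = conv_out(size, kernel, stride)
        shapes.append((name, size, size, channels))
    return shapes


shapes = trace_shapes()
for name, height, width, channels in shapes:
    print(f"{name:<13} {height} x {width} x {channels}")

# Flatten turns the last feature map into a single vector.
flat = shapes[-1][1] * shapes[-1][2] * shapes[-1][3]
print("Flatten ->", flat)
```

Under these assumptions the flattened vector fed to the first dense layer has 26 × 26 × 128 = 86,528 elements.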

| Condition Type | Total Samples | Training Set | Testing Set |
|---|---|---|---|
| unit shutdown | 500 | 350 | 150 |
| generation mode | 500 | 350 | 150 |
| generation startup | 177 | 124 | 53 |
| generation shutdown | 470 | 329 | 141 |
| pumping shutdown | 267 | 187 | 80 |
| pumping mode | 500 | 350 | 150 |
| synchronous condenser startup | 301 | 211 | 90 |
| condenser-to-pumping transition | 167 | 117 | 50 |
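
The training and testing counts above are consistent with a per-class split of roughly 70% to 30%. The ratio is inferred from the numbers rather than stated, so the following is a sketch under that assumption. The function name `split_counts` and the round-half-up rule are illustrative choices.

```python
# Total samples per condition, copied from the table above.
TOTALS = {
    "unit shutdown": 500,
    "generation mode": 500,
    "generation startup": 177,
    "generation shutdown": 470,
    "pumping shutdown": 267,
    "pumping mode": 500,
    "synchronous condenser startup": 301,
    "condenser-to-pumping transition": 167,
}


def split_counts(total: int, train_tenths: int = 7):
    """Split one class into (train, test), rounding the training share half up.

    train_tenths=7 encodes the assumed 70% training share.
    """
    train = (total * train_tenths + 5) // 10
    return train, total - train


splits = {name: split_counts(total) for name, total in TOTALS.items()}
for name, (train, test) in splits.items():
    print(f"{name:<32} train={train:>3} test={test:>3}")

train_total = sum(train for train, _ in splits.values())
test_total = sum(test for _, test in splits.values())
print("overall:", train_total, "train /", test_total, "test")
```

Splitting each class separately keeps the minority conditions, such as the 167-sample condenser-to-pumping transition, represented in both sets.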

| Classification | Precision | Recall | F1 Score |
|---|---|---|---|
| Unit Shutdown | 0.99 | 1.00 | 0.99 |
| Shutdown Class II | 0.96 | 0.92 | 0.94 |
| Generation Mode | 0.97 | 0.99 | 0.98 |
| Generation Startup | 0.74 | 0.85 | 0.79 |
| Pumping Mode | 0.95 | 0.97 | 0.96 |
| Synchronous Condenser Mode | 0.95 | 0.96 | 0.96 |
| Synchronous Condenser Startup | 0.85 | 0.81 | 0.83 |
| Condenser-to-Pumping Transition | 0.89 | 0.78 | 0.83 |

| Classification | Precision | Recall |
|---|---|---|
| generation shutdown | 0.95 | 0.95 |
| pumping shutdown | 0.91 | 0.91 |
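
Read together, the result tables suggest a two-stage flow. A first-stage classifier treats the two shutdown transitions as one merged class ("Shutdown Class II"), and a second-stage classifier then separates generation shutdown from pumping shutdown. The sketch below shows only that routing logic. Which network serves which stage is an assumption based on the paper title and section order. `cascade_predict`, `toy_stage1`, and `toy_stage2` are hypothetical names, and the toy classifiers are stand-ins, not trained models.

```python
from typing import Any, Callable

Classifier = Callable[[Any], str]

# Label of the merged class, as it appears in the first-stage results table.
MERGED_SHUTDOWN = "Shutdown Class II"


def cascade_predict(sample: Any, stage1: Classifier, stage2: Classifier) -> str:
    """Route a sample through the coarse classifier, then refine if needed."""
    coarse = stage1(sample)
    if coarse == MERGED_SHUTDOWN:
        # Only the merged shutdown class is passed to the second stage.
        return stage2(sample)
    return coarse


# Toy stand-ins (hypothetical): each just reads a precomputed label.
def toy_stage1(sample: dict) -> str:
    return sample["coarse"]


def toy_stage2(sample: dict) -> str:
    return sample["fine"]


examples = [
    {"coarse": "Pumping Mode", "fine": "unused"},
    {"coarse": MERGED_SHUTDOWN, "fine": "generation shutdown"},
    {"coarse": MERGED_SHUTDOWN, "fine": "pumping shutdown"},
]
labels = [cascade_predict(s, toy_stage1, toy_stage2) for s in examples]
print(labels)
```

With this routing, a mistake made by the first stage cannot be corrected by the second, so the final per-class scores for the two shutdown conditions depend on both stages.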

| Classification | Precision | Recall | F1 Score |
|---|---|---|---|
| Unit Shutdown | 0.99 | 1.00 | 0.99 |
| Generation Shutdown | 0.91 | 0.84 | 0.87 |
| Generation Mode | 0.97 | 0.99 | 0.98 |
| Generation Startup | 0.74 | 0.85 | 0.79 |
| Pumping Shutdown | 0.86 | 0.88 | 0.87 |
| Pumping Mode | 0.95 | 0.97 | 0.96 |
| Synchronous Condenser Mode | 0.95 | 0.96 | 0.96 |
| Synchronous Condenser Startup | 0.85 | 0.81 | 0.83 |
| Condenser-to-Pumping Transition | 0.89 | 0.78 | 0.83 |
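
The F1 column can be cross-checked against the precision and recall columns, assuming the standard definition of F1 as the harmonic mean of the two, F1 = 2PR / (P + R). The source does not show the formula here, so this is a sketch under that assumption. Because the table reports values rounded to two decimals, the recomputed scores can differ from the reported ones by about 0.01.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
REPORTED = {
    "Unit Shutdown": (0.99, 1.00, 0.99),
    "Generation Shutdown": (0.91, 0.84, 0.87),
    "Generation Mode": (0.97, 0.99, 0.98),
    "Generation Startup": (0.74, 0.85, 0.79),
    "Pumping Shutdown": (0.86, 0.88, 0.87),
    "Pumping Mode": (0.95, 0.97, 0.96),
    "Synchronous Condenser Mode": (0.95, 0.96, 0.96),
    "Synchronous Condenser Startup": (0.85, 0.81, 0.83),
    "Condenser-to-Pumping Transition": (0.89, 0.78, 0.83),
}

recomputed = {name: f1_score(p, r) for name, (p, r, _) in REPORTED.items()}
for name, (_, _, reported) in REPORTED.items():
    print(f"{name:<32} reported={reported:.2f} recomputed={recomputed[name]:.4f}")
```

The lowest score belongs to Generation Startup, where a precision of 0.74 pulls the F1 score down to 0.79 despite a recall of 0.85.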

| Classification Model | Accuracy (%) | Precision (%) |
|---|---|---|
| CNN | 57.56 | 55.45 |
| LSTM | 76.46 | 66.65 |
| GRU | 78.50 | 69.81 |
| CNN+MHA-LSTM | 92.22 | 92.26 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kong, L.; Hu, N.; Zheng, H.; Zhou, X.; Wang, J.; Li, W.; Lu, Y.; Zhang, Z.; Lin, J. Acoustic-Based Condition Recognition for Pumped Storage Units Using a Hierarchical Cascaded CNN and MHA-LSTM Model. Energies 2025, 18, 4269. https://doi.org/10.3390/en18164269