Modeling the Higher Heating Value of Spanish Biomass via Neural Networks and Analytical Equations

Abstract

1. Introduction

2. Materials and Methods

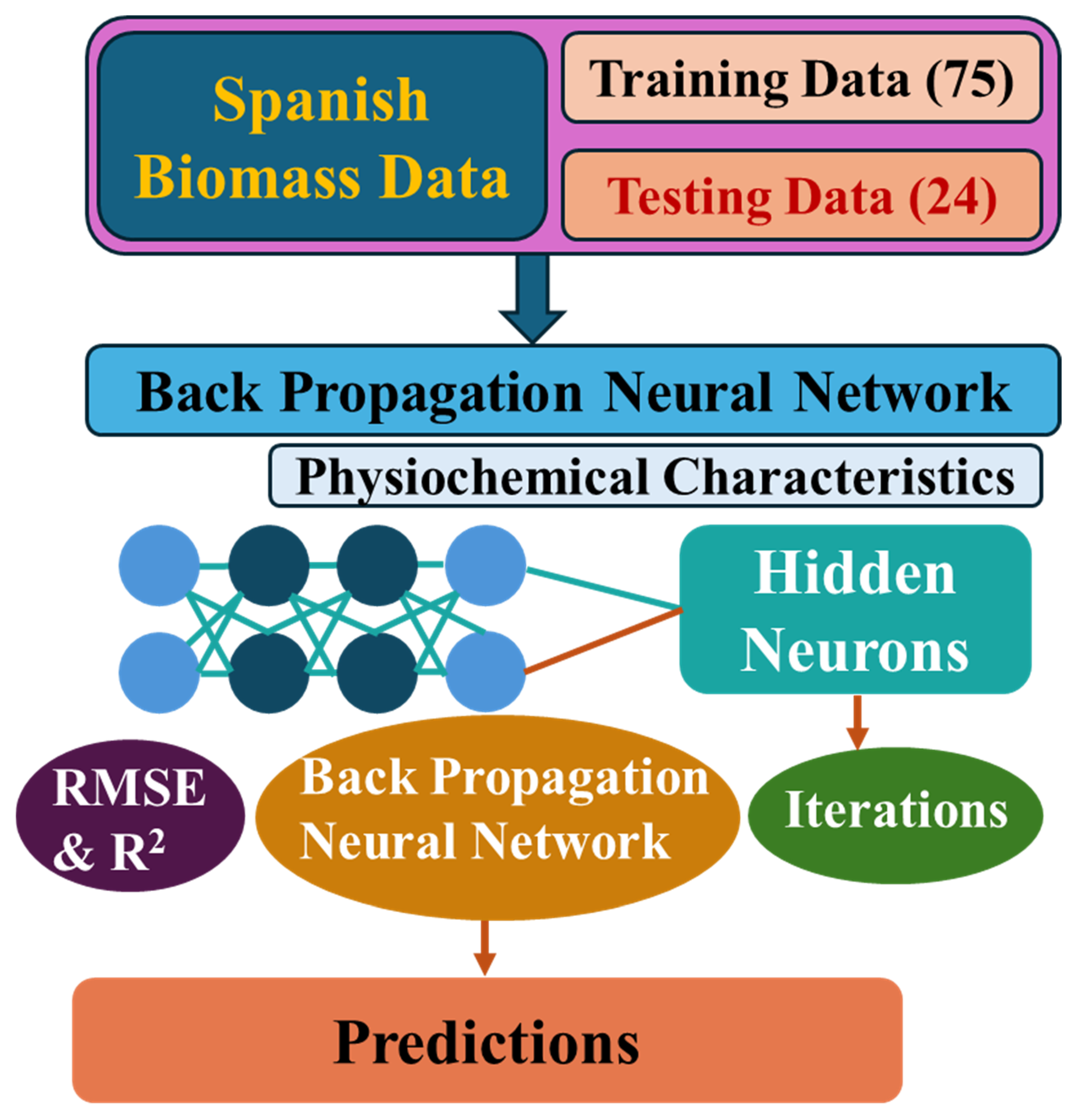

2.1. Dataset and Preprocessing

2.2. ANN Model Architecture

2.3. Training Procedure

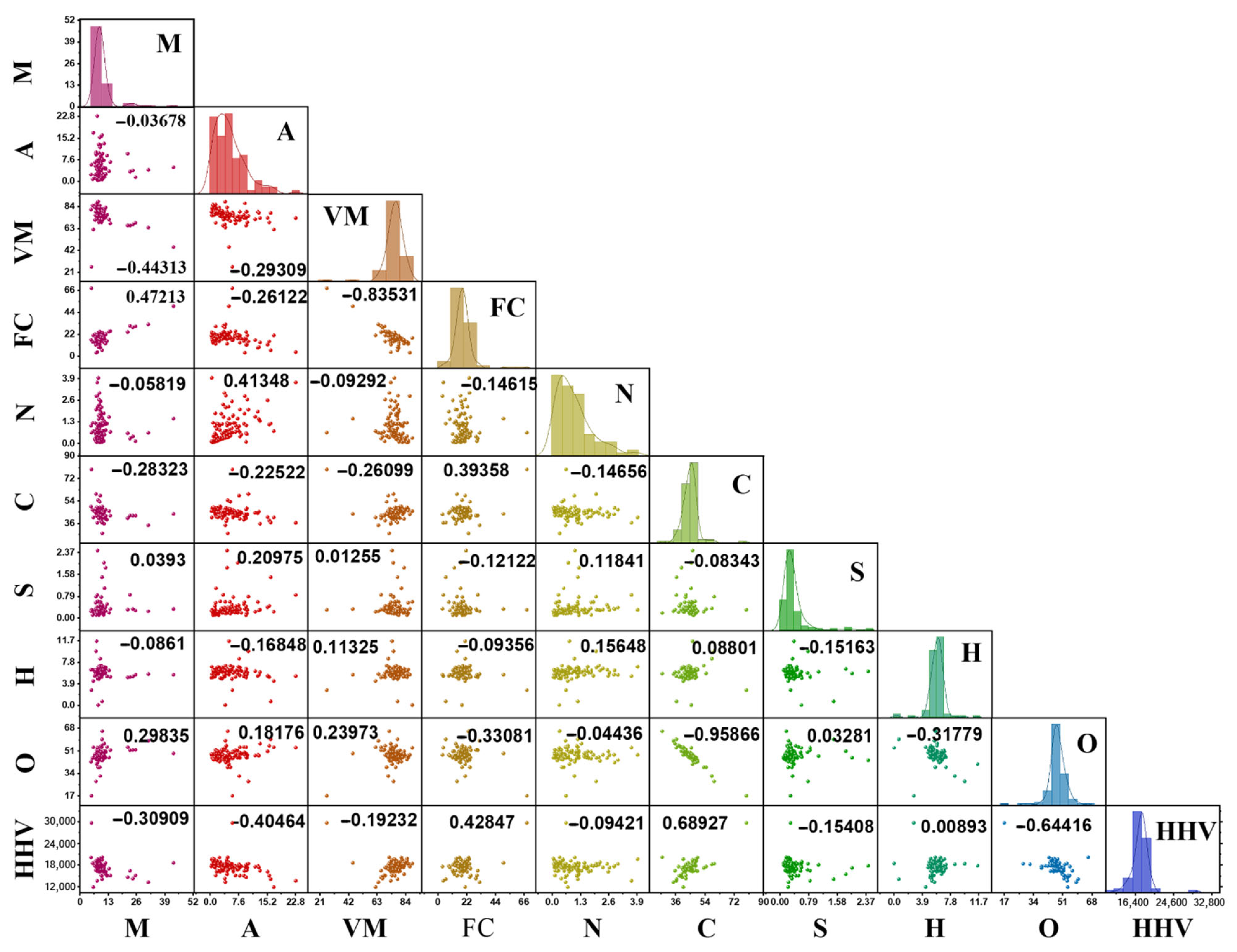

2.4. Scatter Matrix Plots

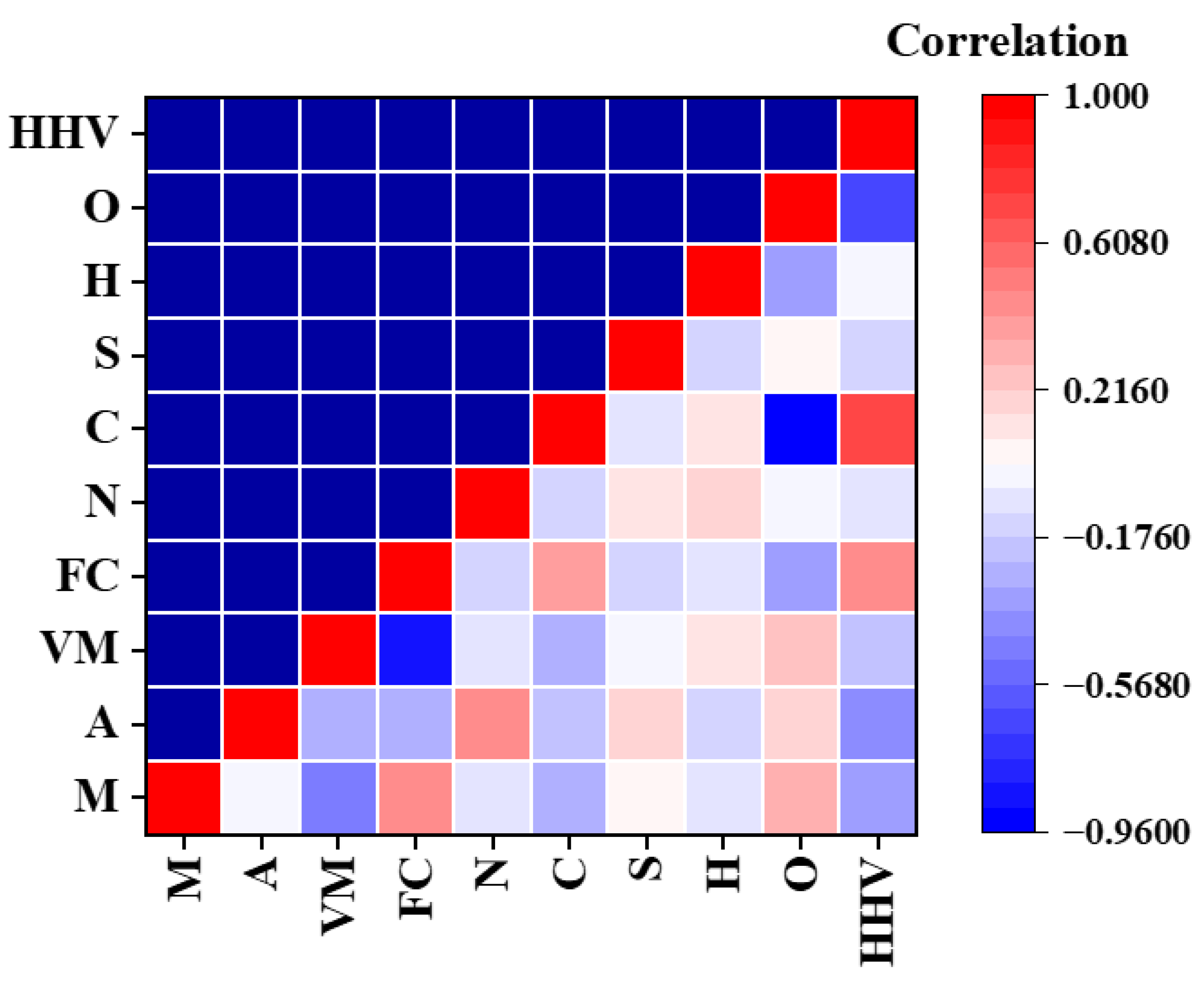

2.5. Correlation Heatmap

3. Results and Discussion

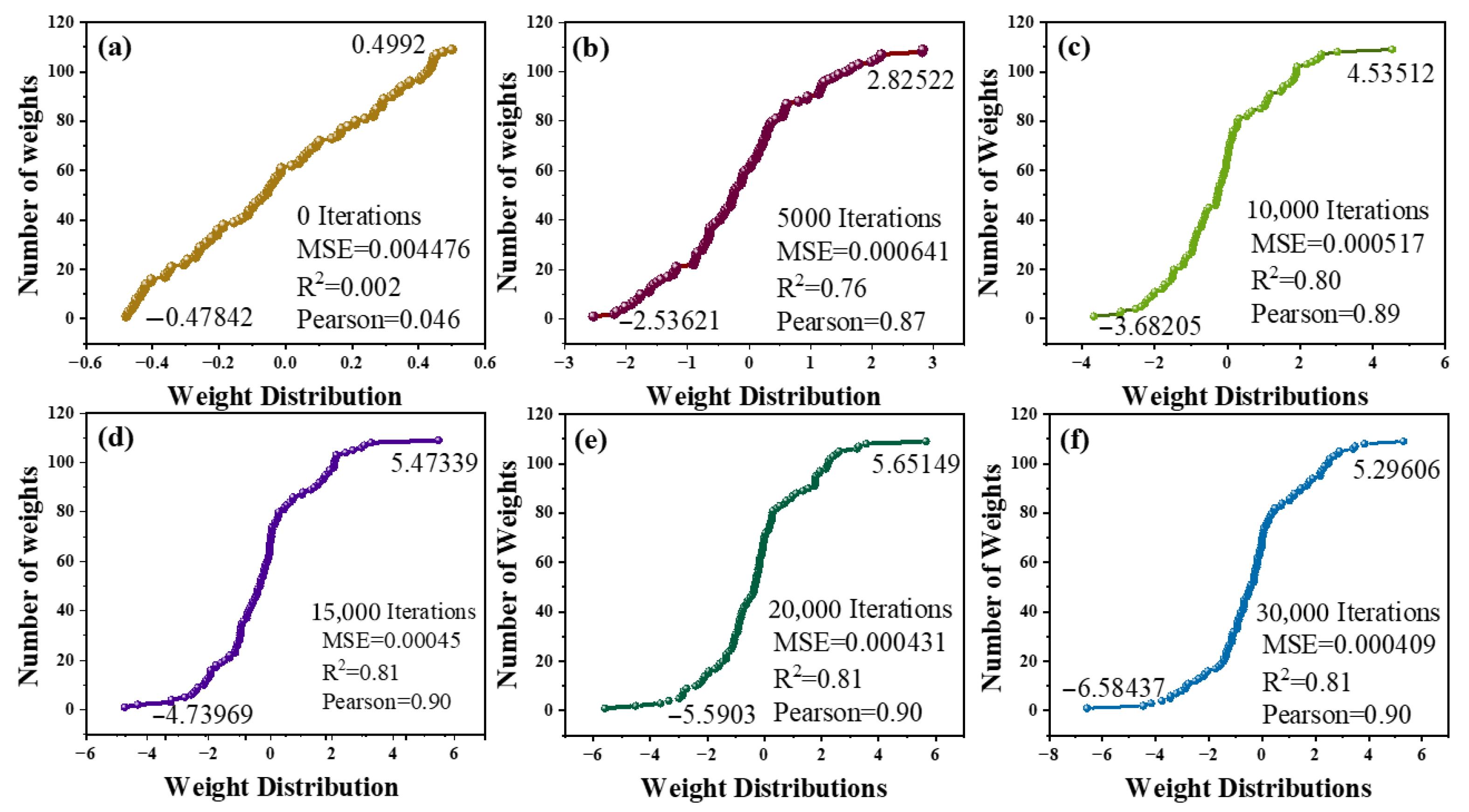

3.1. Transformation of the ANN Model Synaptic Weights

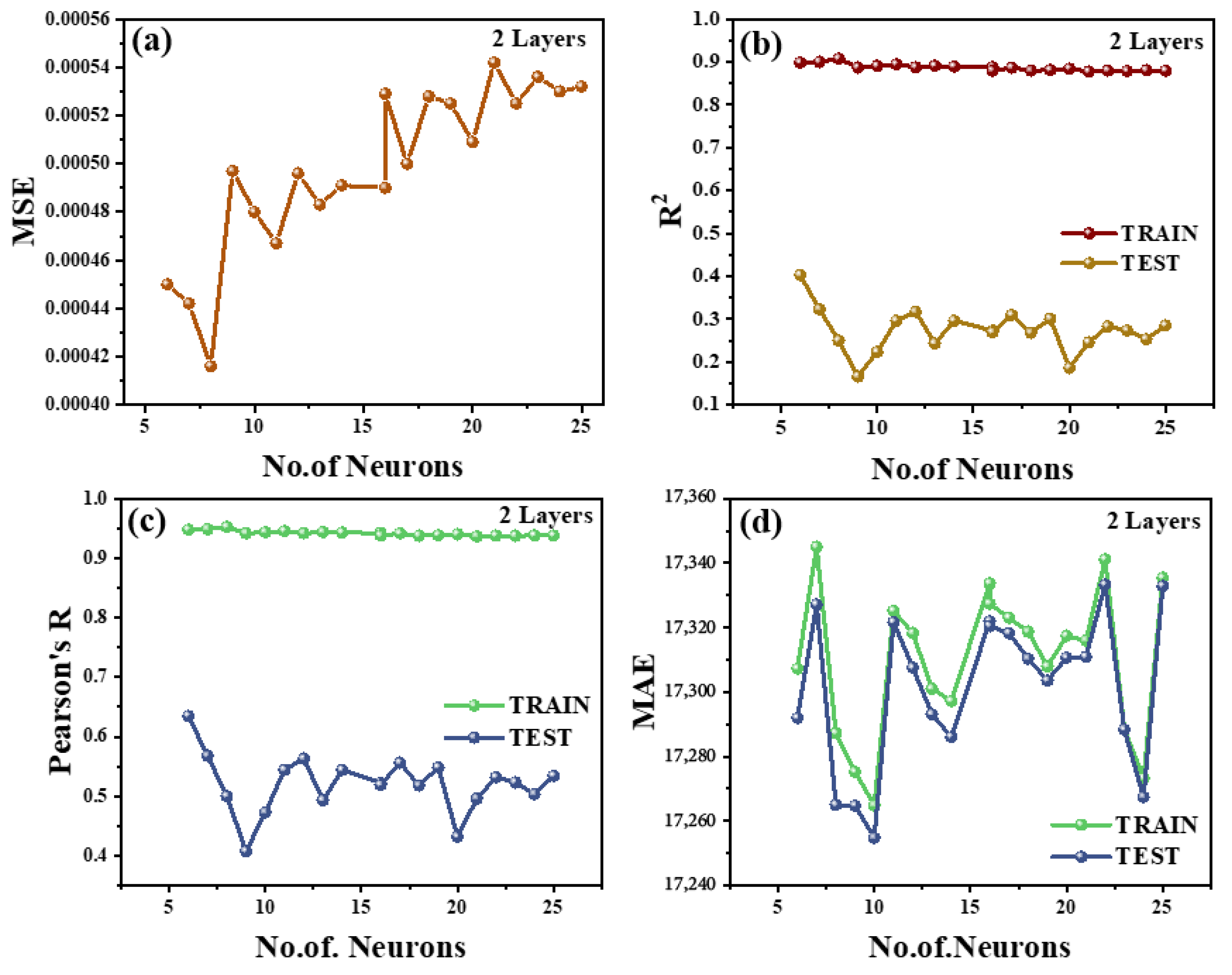

3.2. Neural Network Architecture and Hyperparameter Tuning Results

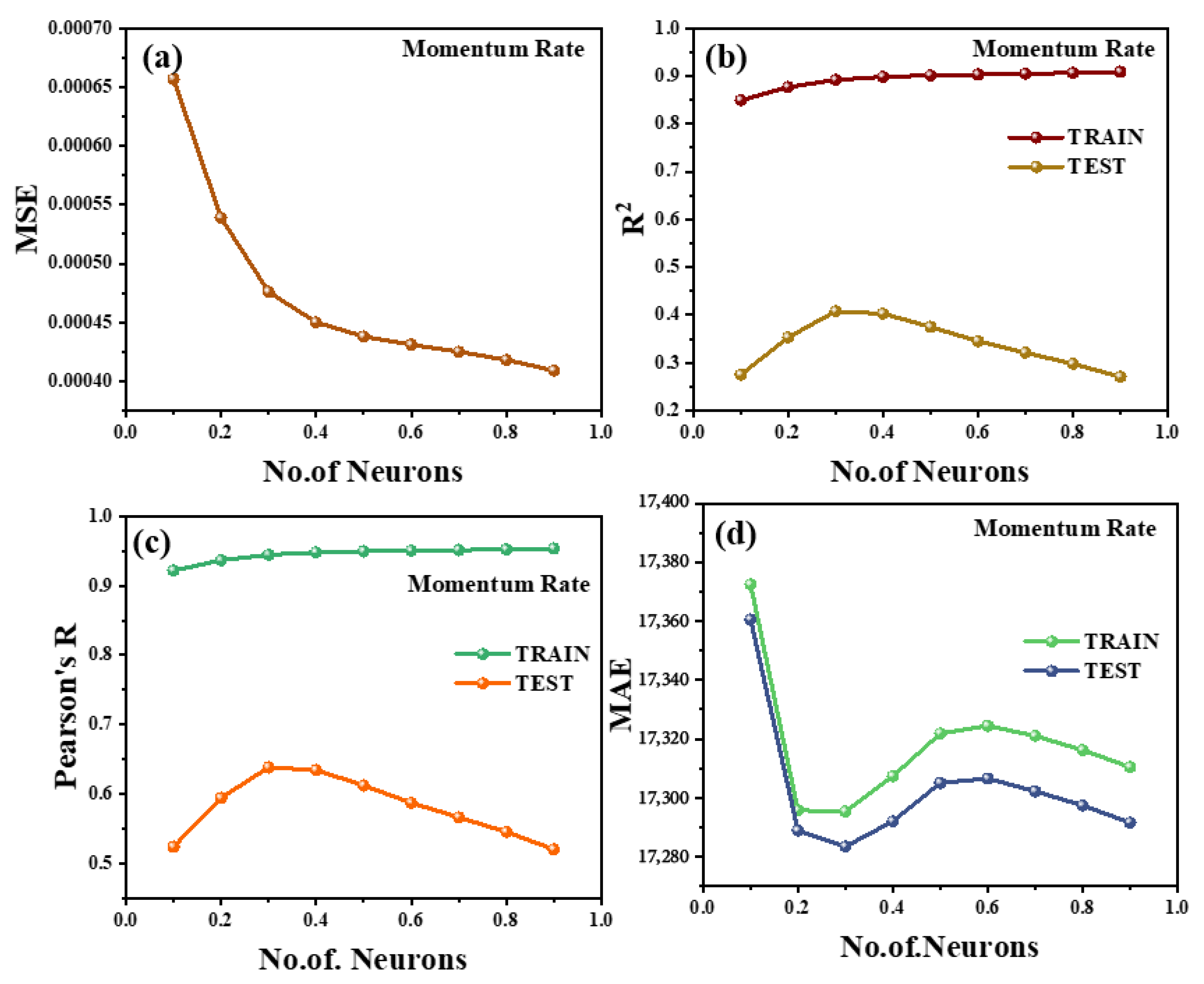

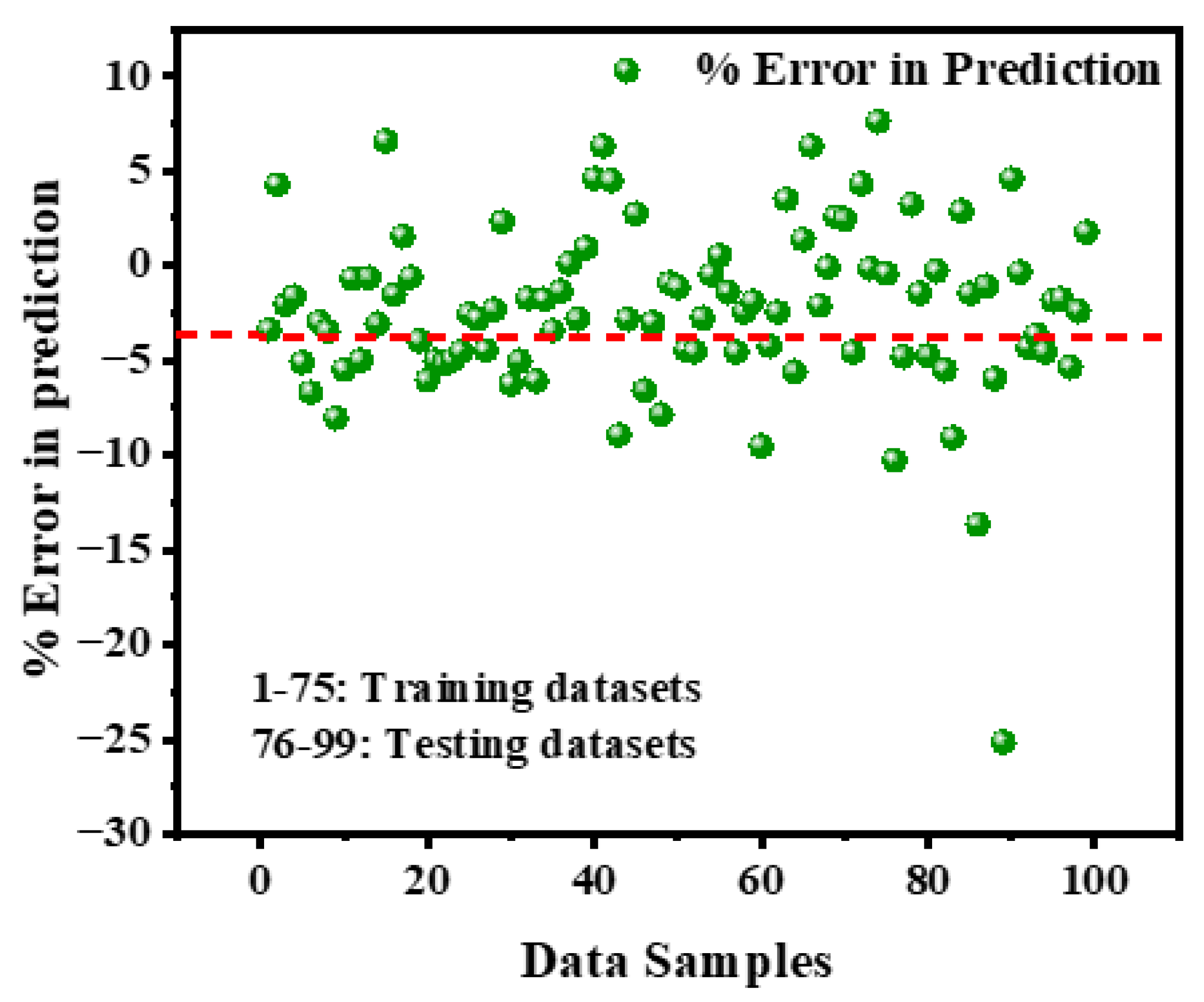

3.3. Momentum Rate (α) Tuning

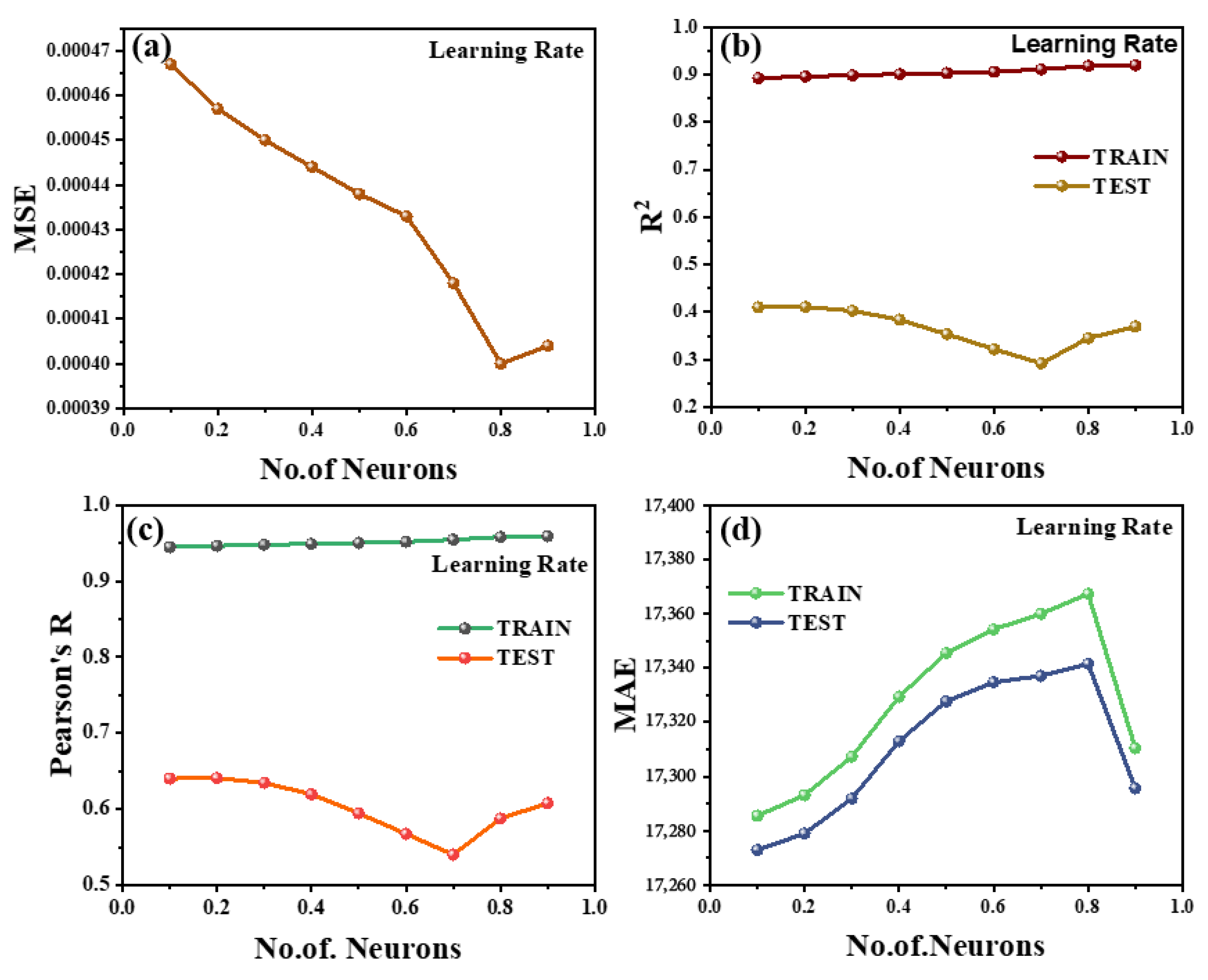

3.4. Learning Rate (η) Tuning

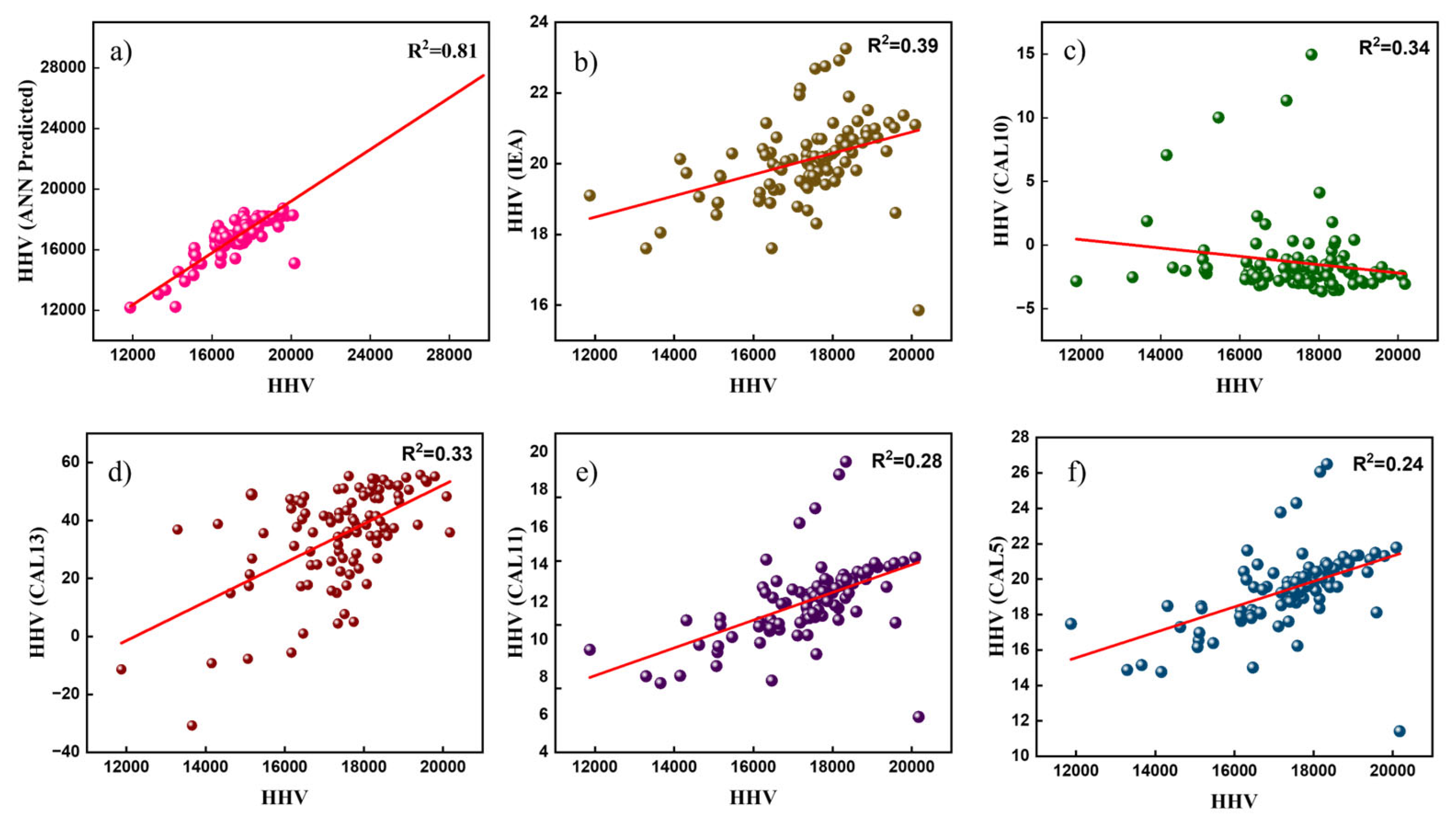

3.5. Comparison of ANN Models with Analytical Equation Predictions

3.6. Comparison with Multivariable Linear Regression

3.7. Generalization Performance on Non-Spanish Biomass Samples

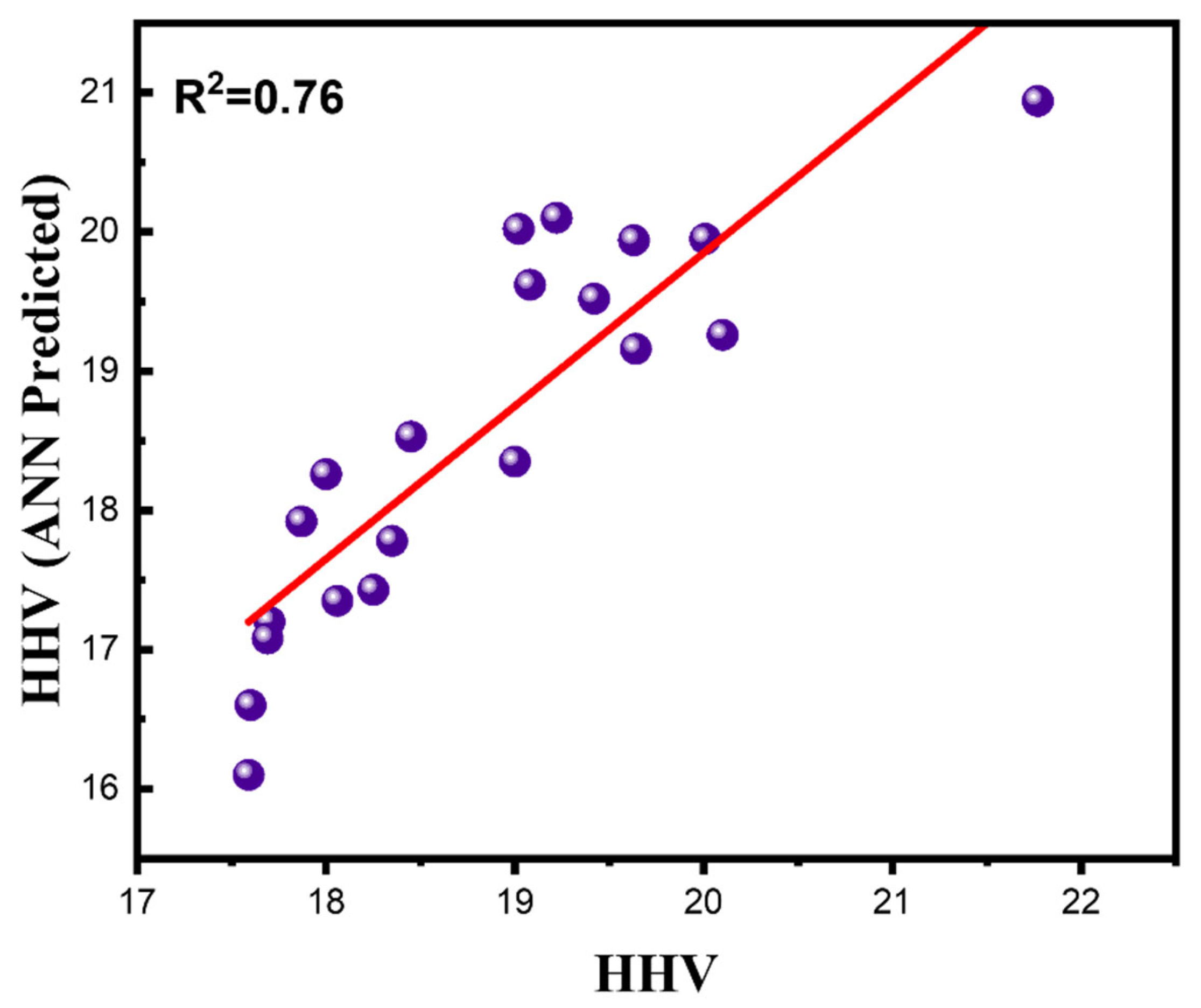

3.8. Index of Relative Importance (IRI) for the Estimation of the Qualitative Influence of Input Variables on Output

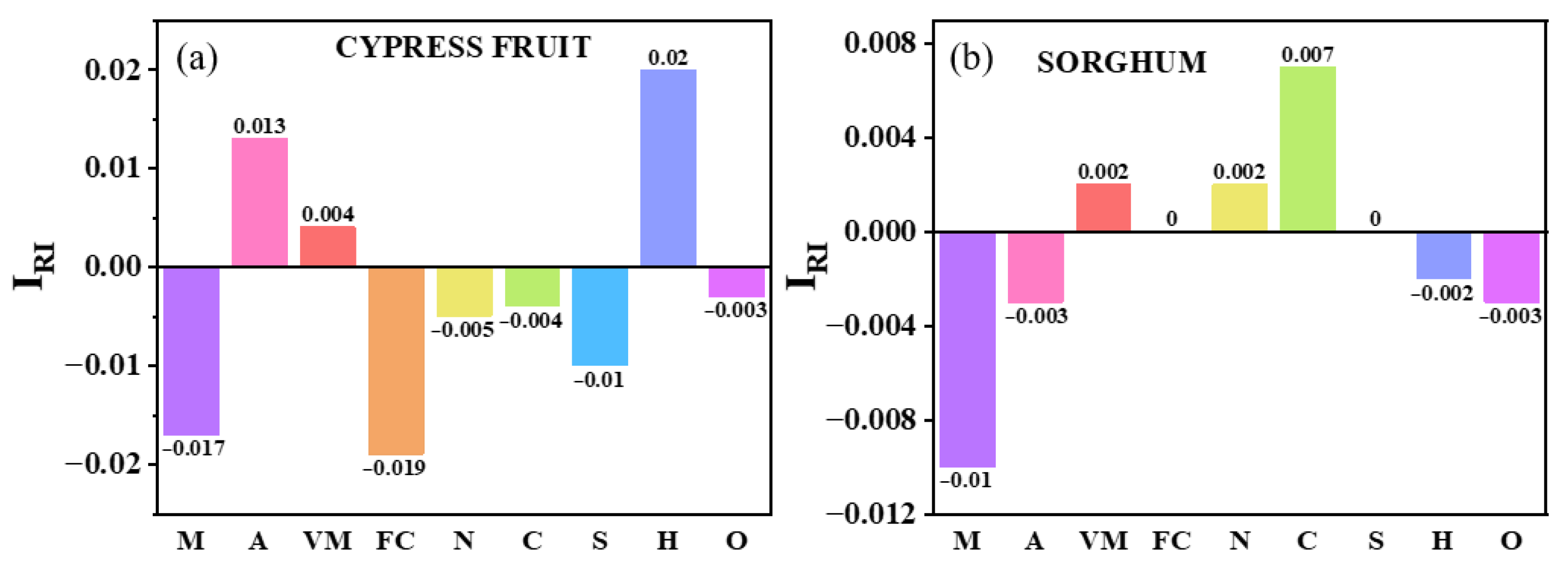

3.9. GUI Implementation

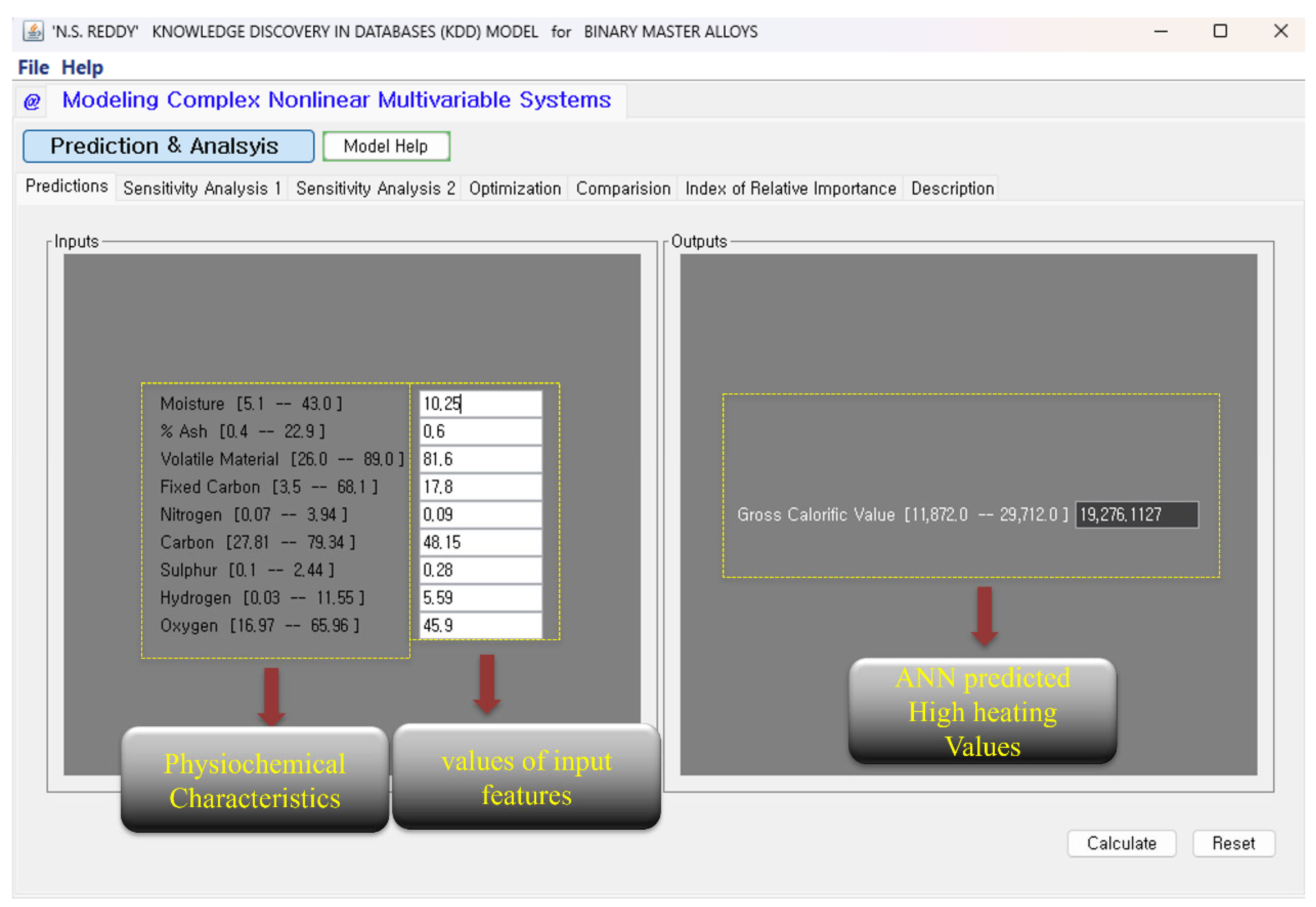

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

References

1. Mignogna, D.; Szabó, M.; Ceci, P.; Avino, P. Biomass Energy and Biofuels: Perspective, Potentials, and Challenges in the Energy Transition. Sustainability 2024, 16, 7036.

2. Perea-Moreno, M.-A.; Salmerón-Manzano, E.; Perea-Moreno, A.-J. Biomass as Renewable Energy: Worldwide Research Trends. Sustainability 2019, 11, 863.

3. Kujawska, J.; Kulisz, M.; Oleszczuk, P.; Cel, W. Improved Prediction of the Higher Heating Value of Biomass Using an Artificial Neural Network Model Based on the Selection of Input Parameters. Energies 2023, 16, 4162.

4. Shehab, M.; Stratulat, C.; Ozcan, K.; Boztepe, A.; Isleyen, A.; Zondervan, E.; Moshammer, K. A comprehensive analysis of the risks associated with the determination of biofuels’ calorific value by bomb calorimetry. Energies 2022, 15, 2771.

5. Noushabadi, A.S.; Dashti, A.; Ahmadijokani, F.; Hu, J.; Mohammadi, A.H. Estimation of higher heating values (HHVs) of biomass fuels based on ultimate analysis using machine learning techniques and improved equation. Renew. Energy 2021, 179, 550–562.

6. Qian, C.; Li, Q.; Zhang, Z.; Wang, X.; Hu, J.; Cao, W. Prediction of higher heating values of biochar from proximate and ultimate analysis. Fuel 2020, 265, 116925.

7. Aladejare, A.E.; Onifade, M.; Lawal, A.I. Application of metaheuristic based artificial neural network and multilinear regression for the prediction of higher heating values of fuels. Int. J. Coal Prep. Util. 2022, 42, 1830–1851.

8. Zhou, L.; Song, Y.; Ji, W.; Wei, H. Machine learning for combustion. Energy AI 2022, 7, 100128.

9. Ishtiaq, M.; Tiwari, S.; Panigrahi, B.B.; Seol, J.B.; Reddy, N.S. Neural Network-Based Modeling of the Interplay between Composition, Service Temperature, and Thermal Conductivity in Steels for Engineering Applications. Int. J. Thermophys. 2024, 45, 1–31.

10. Pattanayak, S.; Loha, C.; Hauchhum, L.; Sailo, L. Application of MLP-ANN models for estimating the higher heating value of bamboo biomass. Biomass Convers. Biorefinery 2021, 11, 2499–2508.

11. Abdollahi, S.A.; Ranjbar, S.F.; Jahromi, D.R. Applying feature selection and machine learning techniques to estimate the biomass higher heating value. Sci. Rep. 2023, 13, 16093.

12. Güleç, F.; Pekaslan, D.; Williams, O.; Lester, E. Predictability of higher heating value of biomass feedstocks via proximate and ultimate analyses—A comprehensive study of artificial neural network applications. Fuel 2022, 320, 123944.

13. Aghel, B.; Yahya, S.I.; Rezaei, A.; Alobaid, F. A Dynamic Recurrent Neural Network for Predicting Higher Heating Value of Biomass. Int. J. Mol. Sci. 2023, 24, 5780.

14. García, R.; Pizarro, C.; Lavín, A.G.; Bueno, J.L. Spanish biofuels heating value estimation. Part I: Ultimate analysis data. Fuel 2014, 117, 1130–1138.

15. García, R.; Pizarro, C.; Lavín, A.G.; Bueno, J.L. Spanish biofuels heating value estimation. Part II: Proximate analysis data. Fuel 2014, 117, 1139–1147.

16. García, R.; Pizarro, C.; Lavín, A.G.; Bueno, J.L. Characterization of Spanish biomass wastes for energy use. Bioresour. Technol. 2012, 103, 249–258.

17. Demirbas, A. Combustion characteristics of different biomass fuels. Prog. Energy Combust. Sci. 2004, 30, 219–230.

18. Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning; Springer: Singapore, 2006; Volume 4.

19. Li, J.; Yuan, X.; Kuruoglu, E.E. Exploring Weight Distributions and Dependence in Neural Networks with α-Stable Distributions. IEEE Trans. Artif. Intell. 2024, 5, 5519–5529.

20. Zhang, J.; Li, H.; Sra, S.; Jadbabaie, A. Neural network weights do not converge to stationary points: An invariant measure perspective. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022.

21. Ismailov, V.E. A three layer neural network can represent any multivariate function. J. Math. Anal. Appl. 2023, 523, 127096.

22. Demirbaş, A. Calculation of higher heating values of biomass fuels. Fuel 1997, 76, 431–434.

23. Graboski, M.; Bain, R. Properties of biomass relevant to gasification. In Biomass Gasification-Principles and Technology; Noyes Data Corporation: Park Ridge, NJ, USA, 1981; pp. 41–69.

24. Adapa, P.K.; Schoenau, G.J.; Tabil, L.G.; Sokhansanj, S.; Crerar, B. Pelleting of fractionated alfalfa products. In Proceedings of the 2003 ASAE Annual Meeting, American Society of Agricultural and Biological Engineers, Las Vegas, NV, USA, 27–30 July 2003.

25. Callejón-Ferre, A.; Velázquez-Martí, B.; López-Martínez, J.; Manzano-Agugliaro, F. Greenhouse crop residues: Energy potential and models for the prediction of their higher heating value. Renew. Sustain. Energy Rev. 2011, 15, 948–955.

26. Kathiravale, S. Modeling the heating value of Municipal Solid Waste. Fuel 2003, 82, 1119–1125.

27. Abu-Qudais, M.; Abu-Qdais, H.A. Energy content of municipal solid waste in Jordan and its potential utilization. Energy Convers. Manag. 2000, 41, 983–991.

28. Meraz, L.; Domínguez, A.; Kornhauser, I.; Rojas, F. A thermochemical concept-based equation to estimate waste combustion enthalpy from elemental composition. Fuel 2003, 82, 1499–1507.

29. Sheng, C.; Azevedo, J. Estimating the higher heating value of biomass fuels from basic analysis data. Biomass Bioenergy 2005, 28, 499–507.

30. Mason, D.M.; Gandhi, K. Formulas for Calculating the Heating Value of Coal and Coal Char: Development, Tests, and Uses; No. CONF-800814-25; Institute of Gas Technology: Chicago, IL, USA, 1980.

31. Channiwala, S.A.; Parikh, P.P. A unified correlation for estimating HHV of solid, liquid and gaseous fuels. Fuel 2002, 81, 1051–1063.

32. Jenkins, B.M.; Baxter, L.L.; Miles, T.R., Jr.; Miles, T.R. Combustion properties of biomass. Fuel Process. Technol. 1998, 54, 17–46.

33. Matveeva, A.; Bychkov, A. How to train an artificial neural network to predict higher heating values of biofuel. Energies 2022, 15, 7083.

34. Brandić, I.; Pezo, L.; Voća, N.; Matin, A. Biomass Higher Heating Value Estimation: A Comparative Analysis of Machine Learning Models. Energies 2024, 17, 2137.

35. Daskin, M.; Erdoğan, A.; Güleç, F.; Okolie, J.A. Generalizability of empirical correlations for predicting higher heating values of biomass. Energy Sources Part A Recover. Util. Environ. Eff. 2024, 46, 5434–5450.

36. Yang, X.; Li, H.; Wang, Y.; Qu, L. Predicting higher heating value of sewage sludges via artificial neural network based on proximate and ultimate analyses. Water 2023, 15, 674.

37. Esteves, B.; Sen, U.; Pereira, H. Influence of chemical composition on heating value of biomass: A review and bibliometric analysis. Energies 2023, 16, 4226.

38. Thipkhunthod, P.; Pharino, C.; Kitchaicharoen, J.; Phungrassami, H. Predicting the heating value of sewage sludges in Thailand from proximate and ultimate analyses. Fuel 2005, 84, 849–857.

39. Callejón-Ferré, A.J.; López-Martínez, J.A.; Manzano-Agugliaro, F.; Díaz-Pérez, M. Energy potential of greenhouse crop residues in southeastern Spain and evaluation of their heating value predictors. Renew. Sustain. Energy Rev. 2011, 15, 948–955.

40. Chang, Y.F.; Chang, C.N.; Chen, Y.W. Multiple regression models for lower heating value of municipal solid waste in Taiwan. J. Environ. Manag. 2007, 85, 891–899.

41. Yin, C.Y. Prediction of higher heating value of biomass from proximate and ultimate analyses. Fuel 2011, 90, 1128–1132.

42. Cordero, T.; Marquez, F.; Rodriguez-Mirasol, J.; Rodriguez, J.J. Predicting heating values of lignocellulosics from proximate analysis. Fuel 2001, 80, 1567–1571.

43. Parikh, J.; Channiwala, S.A.; Ghosal, G.K. A correlation for calculating HHV from proximate analysis of solid fuels. Fuel 2005, 84, 487–494.

44. Majumder, A.K.; Jain, R.; Banerjee, S.; Barnwal, J.P. An approach for developing an analytical relation of the HHV from proximate analysis of coal. Fuel 2008, 87, 3077–3081.

45. Ahmaruzzaman, M. A review on the utilization of fly ash. Prog. Energy Combust. Sci. 2010, 36, 327–363.

46. Jiménez, L.; González, F. Thermal behaviour of lignocellulosic residues treated with phosphoric acid. Fuel 1991, 70, 947–950.

47. Goutal, M. Sur le pouvoir calorifique de la houille. Comptes Rendus L’académie Sci. 1902, 134, 477–479.

48. Kucukbayrak, S.; Goksel, M.; Gok, S. Estimation of calorific values of Turkish lignites using neural networks and regression analysis. Fuel 1991, 70, 979–981.

49. Matsuoka, K.; Kuramoto, K.; Suzuki, Y. Modification of Dulong’s formula to estimate heating value of gas, liquid and solid fuels. Fuel Process. Technol. 2016, 152, 399–405.

50. Mason, D.M.; Gandhi, K. Formulas for heating value of coal and coal char. Fuel Process. Technol. 1983, 7, 11–22.

51. Arvidsson, M.; Morandin, M.; Harvey, S. Biomass gasification-based syngas production for a conventional oxo synthesis plant-greenhouse gas emission balances and economic evaluation. J. Clean. Prod. 2015, 99, 192–205.

52. Skodras, G.; Grammelis, O.P.; Basinas, P.; Kakaras, E.; Sakellaropoulos, G. Pyrolysis and combustion characteristics of biomass and waste-derived feedstock. Ind. Eng. Chem. Res. 2006, 45, 3791–3799.

53. Brandic, I.; Pezo, L.; Bilandzija, N.; Peter, A.; Suric, J.; Voca, N. Comparison of Different Machine Learning Models for Modelling the Higher Heating Value of Biomass. Mathematics 2023, 11, 2098.

54. Lehtonen, E.; Anttila, P.; Hakala, K.; Luostarinen, S.; Lehtoranta, S.; Merilehto, K.; Lehtinen, H.; Maharjan, A.; Mäntylä, V.; Niemeläinen, O.; et al. An open web-based GIS service for biomass data in Finland. Environ. Model. Softw. 2024, 176, 105972.

55. Capareda, S.C. Comprehensive Biomass Characterization in Preparation for Conversion. In Sustainable Biochar for Water and Wastewater Treatment, 1st ed.; Mohan, D., Pittman, C.U., Mlsna, T.E., Eds.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 1–37.

56. Yahya, A.M.; Adeleke, A.A.; Nzerem, P.; Ikubanni, P.P.; Ayuba, S.; Rasheed, H.A.; Gimba, A.; Okafor, I.; Okolie, J.A.; Paramasivam, P. Comprehensive Characterization of Some Selected Biomass for Bioenergy Production. ACS Omega 2023, 8, 43771–43791.

57. Dashti, A.; Noushabadi, A.S.; Raji, M.; Razmi, A.; Ceylan, S.; Mohammadi, A.H. Estimation of biomass higher heating value (HHV) based on the proximate analysis: Smart modeling and correlation. Fuel 2019, 257, 115931.

58. Database for the Physico-Chemical Composition of (Treated) Lignocellulosic Biomass, Micro- and Macroalgae, Various Feedstocks for Biogas Production and Biochar. Available online: https://phyllis.nl/ (accessed on 1 July 2024).

59. Simon, F.; Girard, A.; Krotki, M.; Ordoñez, J. Modelling and simulation of the wood biomass supply from the sustainable management of natural forests. J. Clean. Prod. 2021, 282, 124487.

60. Malhi, A.; Knapic, S.; Främling, K. Explainable Agents for Less Bias in Human-Agent Decision Making. In Proceedings of the Explainable, Transparent Autonomous Agents and Multi-Agent Systems: Second International Workshop (EXTRAAMAS 2020), Auckland, New Zealand, 9–13 May 2020.

| Variable | Minimum | Mean | Maximum | Std. Deviation |
|---|---|---|---|---|
| Moisture (M) | 5.1 | 10.25 | 43 | 5.15 |
| Ash (A) | 0.4 | 5.35 | 22.9 | 4.23 |
| Volatile Material (VM) | 45.2 | 76.07 | 89 | 5.97 |
| Fixed Carbon (FC) | 3.5 | 18.68 | 50.2 | 5.80 |
| Nitrogen (N) | 0.07 | 1.01 | 3.94 | 0.82 |
| Carbon (C) | 27.81 | 44.62 | 59.59 | 4.33 |
| Sulfur (S) | 0.1 | 0.38 | 2.44 | 0.36 |
| Hydrogen (H) | 0.03 | 5.95 | 11.55 | 1.22 |
| Oxygen (O) | 27.86 | 48.01 | 65.96 | 4.84 |

| Hyperparameter | Final Value |
|---|---|
| Input Features | 9 (M, A, VM, FC, C, H, N, S, O) |
| Output Feature | 1 (HHV) |
| Network Architecture | 9-6-6-1 |
| Activation Function | Logistic (Sigmoid) |
| Learning Rate (η) | 0.3 |
| Momentum Rate (α) | 0.4 |
| Iterations | 15,000 |
| Training/Test Split | 75/24 (75%/25%) |
| Normalization Method | Min–max scaling to [0.1, 0.9] |
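As a rough illustration of the configuration listed above, the following Python sketch scales the nine inputs to [0.1, 0.9], passes them through a 9-6-6-1 network with logistic activations, and shows one gradient-descent weight step with momentum. The feature ranges are taken from the descriptive-statistics table. The input ordering, the random weight initialization, the helper names (`scale`, `init_layer`, `forward`, `momentum_step`), and the exact form of the momentum update are assumptions made for illustration, not details reported here. The network is untrained, so its output carries no physical meaning.

```python
import math
import random

# (minimum, maximum) per input, copied from the descriptive-statistics table.
# The ordering of the inputs is an assumption.
FEATURE_RANGES = {
    "M": (5.1, 43.0), "A": (0.4, 22.9), "VM": (45.2, 89.0),
    "FC": (3.5, 50.2), "N": (0.07, 3.94), "C": (27.81, 59.59),
    "S": (0.1, 2.44), "H": (0.03, 11.55), "O": (27.86, 65.96),
}


def scale(value, lo, hi, a=0.1, b=0.9):
    """Min-max scale value from [lo, hi] to [a, b]."""
    return a + (b - a) * (value - lo) / (hi - lo)


def sigmoid(z):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    """Hypothetical initialization: n_in weights plus one trailing bias per neuron."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def forward(layers, inputs):
    """Propagate inputs through fully connected sigmoid layers."""
    activations = inputs
    for layer in layers:
        # zip stops at len(activations), so the trailing bias w[-1] is added separately.
        activations = [
            sigmoid(w[-1] + sum(wi * ai for wi, ai in zip(w, activations)))
            for w in layer
        ]
    return activations


def momentum_step(weight, grad, prev_delta, eta=0.3, alpha=0.4):
    """Classical momentum update (assumed form): delta = -eta*grad + alpha*prev_delta."""
    delta = -eta * grad + alpha * prev_delta
    return weight + delta, delta


rng = random.Random(0)
sizes = [9, 6, 6, 1]  # 9-6-6-1 architecture
network = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

# Column means from the descriptive-statistics table, used only as a test point.
sample = {"M": 10.25, "A": 5.35, "VM": 76.07, "FC": 18.68, "N": 1.01,
          "C": 44.62, "S": 0.38, "H": 5.95, "O": 48.01}
x = [scale(sample[name], *FEATURE_RANGES[name]) for name in FEATURE_RANGES]
y_scaled = forward(network, x)[0]  # scaled output of the untrained network
```

Converting the scaled output back to an HHV would require the minimum and maximum HHV of the training data, which are not listed in the tables here.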

| No. | Model | Fuel Type | HHV Prediction Equation | R² | Reference |
|---|---|---|---|---|---|
| 1 | IEA | Coal | $\mathrm{HHV} = 0.3491\cdot C + 1.1783\cdot H - 0.0151\cdot N + 0.1005\cdot S - 0.1034\cdot O - 0.0211\cdot \mathrm{Ash}$ | 0.39 | [22,23,24] |
| 2 | CAL10 | Biomass | $\mathrm{HHV} = 4.622 + 7.912\cdot H^{-1} - 0.001\cdot \mathrm{Ash}^{2} + 0.006\cdot C^{2} + 0.018\cdot N^{2}$ | 0.34 | [25] |
| 3 | CAL4 | Biomass | $\mathrm{HHV} = -1.563 - 0.0251\cdot \mathrm{Ash} + 0.475\cdot C - 0.385\cdot H + 0.102\cdot N$ | 0.33 | [25] |
| 4 | CAL13 | Biomass | $\mathrm{HHV} = 86.191 - 2.051\cdot \mathrm{Ash} - 1.781\cdot C - 237.722\cdot \mathrm{Ash}^{-1} + 0.030\cdot \mathrm{Ash}^{2} + 0.025\cdot C^{2} + 0.026\cdot N^{2}$ | 0.33 | [25] |
| 5 | CAL11 | Biomass | $\mathrm{HHV} = 23.668 - 7.032\cdot H - 0.002\cdot \mathrm{Ash}^{2} + 0.005\cdot C^{2} + 0.771\cdot H^{2} + 0.019\cdot N^{2}$ | 0.28 | [25] |
| 6 | CAL5 | Biomass | $\mathrm{HHV} = -0.465 - 0.0342\cdot \mathrm{Ash} - 0.019\cdot \mathrm{VM} + 0.483\cdot C - 0.388\cdot H + 0.124\cdot N$ | 0.24 | [25] |
| 7 | CAL6 | Biomass | $\mathrm{HHV} = -0.603 - 0.033\cdot \mathrm{Ash} - 0.019\cdot \mathrm{VM} + 0.485\cdot C - 0.380\cdot H + 0.124\cdot N + 0.030\cdot S$ | 0.24 | [25] |
| 8 | CAL8 | Biomass | $\mathrm{HHV} = -0.417 - 0.012\cdot \mathrm{VM} - 0.035\cdot(\mathrm{Ash} + C) + 0.518\cdot(C + N) - 0.393\cdot(H + N)$ | 0.22 | [25] |
| 9 | STE | MSW | $\mathrm{HHV} = 81\cdot(C - 3\cdot O/8) + 57.3\cdot O/8 + 345\cdot(H - O/10) + 25\cdot S - 6\cdot(9\cdot H + M)$ | 0.22 | [26,27] |
| 10 | MER | Wastes | $\mathrm{HHV} = (1 - M/100)(-0.3708\cdot C - 1.1124\cdot H + 0.1391\cdot O - 0.3178\cdot N - 0.1391\cdot S)$ | 0.20 | [28] |
| 11 | S&A3 | Biomass | $\mathrm{HHV} = -1.3675 + 0.3137\cdot C + 0.7009\cdot H + 0.0318\cdot O^{*}$, where $O^{*} = 100 - C - H - \mathrm{Ash}$ | 0.19 | [29] |
| 12 | G&D | Coal | $\mathrm{HHV} = [654.3\cdot H/100 - \mathrm{Ash}]\cdot[C/3 + H - O/8 - S/8]$ | 0.18 | [30] |
| 13 | G&B | Biomass | $\mathrm{HHV} = 0.328\cdot C + 1.4306\cdot H - 0.0237\cdot N + 0.0929\cdot S - (1 - \mathrm{Ash}/100)(40.11\cdot H/C) + 0.3466$ | 0.18 | [23,29,31] |
| 14 | CAL12 | Biomass | $\mathrm{HHV} = 8.725 + 0.0007\cdot(\mathrm{Ash}^{2}\cdot H) + 0.0004\cdot(\mathrm{VM}^{2}\cdot H) + 0.0002\cdot(C^{2}\cdot N) - 0.014\cdot(H^{2}\cdot \mathrm{Ash}) + 0.626\cdot(S^{2}\cdot A) - 3.692\cdot(S^{2}\cdot N)$ | 0.16 | [25] |
| 15 | WIL | MSW | $\mathrm{HHV} = (1 - M/100)(-0.3279\cdot C - 1.5330\cdot H + 0.1668\cdot O + 0.0242\cdot N - 0.0928\cdot S)$ | 0.15 | [28] |
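To make the tabulated correlations concrete, the sketch below evaluates three of them (IEA, CAL4, and S&A3) for a single composition. It assumes, as is customary for such correlations but not stated in the table, that compositions are given as mass percentages. The input values are the column means from the descriptive-statistics table, used here only as a plausible test point rather than a measured sample, and the function names are illustrative.

```python
def hhv_iea(C, H, N, S, O, Ash):
    """IEA correlation (row 1 of the table)."""
    return (0.3491 * C + 1.1783 * H - 0.0151 * N
            + 0.1005 * S - 0.1034 * O - 0.0211 * Ash)


def hhv_cal4(C, H, N, Ash):
    """CAL4 correlation (row 3 of the table)."""
    return -1.563 - 0.0251 * Ash + 0.475 * C - 0.385 * H + 0.102 * N


def hhv_sa3(C, H, Ash):
    """S&A3 correlation (row 11); oxygen is taken by difference."""
    o_by_difference = 100.0 - C - H - Ash
    return -1.3675 + 0.3137 * C + 0.7009 * H + 0.0318 * o_by_difference


# Column means from the descriptive-statistics table (illustrative test point).
mean_comp = {"C": 44.62, "H": 5.95, "N": 1.01, "S": 0.38, "O": 48.01, "Ash": 5.35}

estimates = {
    "IEA": hhv_iea(**mean_comp),
    "CAL4": hhv_cal4(mean_comp["C"], mean_comp["H"], mean_comp["N"], mean_comp["Ash"]),
    "S&A3": hhv_sa3(mean_comp["C"], mean_comp["H"], mean_comp["Ash"]),
}

for name, value in estimates.items():
    print(f"{name}: {value:.2f}")
```

For this test point the three correlations return approximately 17.5, 17.3, and 18.2, a spread of about one unit for the same composition.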


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jayapal, A.; Ordonez Morales, F.; Ishtiaq, M.; Kim, S.Y.; Reddy, N.G.S. Modeling the Higher Heating Value of Spanish Biomass via Neural Networks and Analytical Equations. Energies 2025, 18, 4067. https://doi.org/10.3390/en18154067
