Electricity Load Forecasting Method Based on the GRA-FEDformer Algorithm

Abstract

1. Introduction

- (1) A GRA-FEDformer method is proposed that combines grey relational analysis (GRA), a mathematical–statistical method, with a mixture of experts decomposition block. The decomposition block separates the long-time-scale and short-time-scale components of the load and processes them individually, so that the model better learns the characteristics of power load variation.

- (2) A frequency enhancement block based on the fast Fourier transform (FFT) replaces the self-attention mechanism. It transforms the time series into the frequency domain, so that important features of the series are captured from a global perspective.

- (3) GRA is applied to power load forecasting to screen the sampled input variables and retain those with high information entropy values.
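A minimal sketch of GRA-based screening, assuming the textbook formulation: each candidate series and the load series are min–max normalized, the grey relational coefficient is computed at every time step, and its mean (the grey relational grade) ranks the candidates. The normalization, the distinguishing coefficient ρ = 0.5, the 0.7 cut-off, the function names and the toy data are all assumptions for illustration, not values from the paper.

```python
from typing import Dict, List, Sequence


def min_max_normalize(series: Sequence[float]) -> List[float]:
    """Scale a series to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0] * len(series)
    return [(v - lo) / (hi - lo) for v in series]


def grey_relational_grades(
    reference: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    rho: float = 0.5,
) -> Dict[str, float]:
    """Grey relational grade of every candidate series with respect to the reference.

    rho is the distinguishing coefficient; 0.5 is the conventional (assumed) choice.
    """
    ref = min_max_normalize(reference)
    # Difference sequences Delta_i(k) = |x0(k) - xi(k)| on normalized data
    deltas = {
        name: [abs(r - c) for r, c in zip(ref, min_max_normalize(series))]
        for name, series in candidates.items()
    }
    every_delta = [d for seq in deltas.values() for d in seq]
    d_min, d_max = min(every_delta), max(every_delta)
    if d_max == 0:  # every candidate coincides with the reference
        return {name: 1.0 for name in deltas}
    grades = {}
    for name, seq in deltas.items():
        # Grey relational coefficient at each time step, averaged into the grade
        coeffs = [(d_min + rho * d_max) / (d + rho * d_max) for d in seq]
        grades[name] = sum(coeffs) / len(coeffs)
    return grades


def select_features(grades: Dict[str, float], threshold: float) -> List[str]:
    """Keep candidates whose grade reaches the threshold, strongest first."""
    kept = [name for name, grade in grades.items() if grade >= threshold]
    return sorted(kept, key=lambda name: grades[name], reverse=True)


# Hypothetical toy data: a load series and two candidate input variables.
load = [310.0, 295.0, 330.0, 360.0, 345.0, 320.0]
features = {
    "temperature": [21.0, 20.0, 23.0, 26.0, 25.0, 22.0],
    "humidity": [60.0, 75.0, 58.0, 40.0, 70.0, 65.0],
}
grades = grey_relational_grades(load, features)
print(grades)
print(select_features(grades, threshold=0.7))  # hypothetical cut-off
```

On this toy data temperature tracks the load closely (grade about 0.93) while humidity does not (about 0.61), so only temperature passes the 0.7 cut-off.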

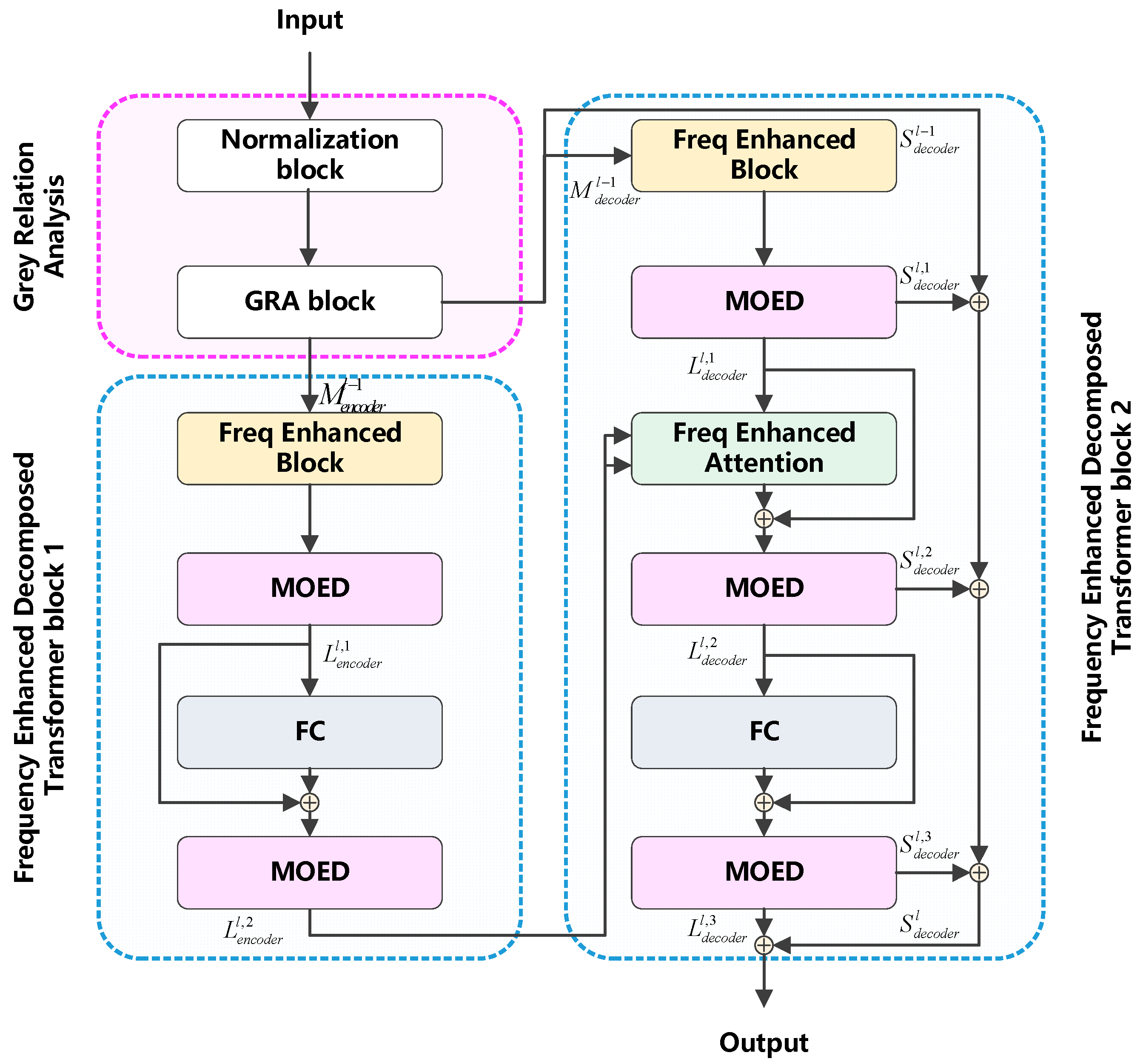

2. GRA-FEDformer Model

2.1. Model Framework

2.2. Grey Relational Analysis

2.3. FEDformer

2.4. Frequency Enhancement Block
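A minimal single-channel sketch of a frequency enhancement block, assuming a FEDformer-style design: transform the series to the frequency domain, keep only a subset of Fourier modes, multiply each kept mode by a complex weight, zero the remaining modes, and transform back. A naive O(N²) DFT stands in for the FFT for readability (both compute the same transform), the weights are fixed placeholders rather than learned parameters, and `select_modes` with its `"low"` and `"random"` strategies is a hypothetical helper illustrating the kind of frequency mode selection strategies compared in Section 4.2.3.

```python
import cmath
import random
from typing import List, Sequence


def rdft(x: Sequence[float]) -> List[complex]:
    """Half spectrum X_k, k = 0..N//2, of a real series (naive O(N^2) DFT)."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n // 2 + 1)
    ]


def irdft(spec: Sequence[complex], n: int) -> List[float]:
    """Inverse of rdft: rebuild the conjugate-symmetric spectrum, keep the real part."""
    full = [0j] * n
    for k, value in enumerate(spec):
        full[k] = value
        if 0 < k < n - k:  # mirror every bin except DC and, for even n, Nyquist
            full[n - k] = value.conjugate()
    return [
        (sum(full[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]


def select_modes(n: int, m: int, strategy: str = "random", seed: int = 0) -> List[int]:
    """Hypothetical helper: pick m of the n//2 + 1 available frequency indices."""
    available = n // 2 + 1
    m = min(m, available)
    if strategy == "low":  # keep only the lowest frequencies
        return list(range(m))
    if strategy == "random":  # random subset mixing low and high frequencies
        return sorted(random.Random(seed).sample(range(available), m))
    raise ValueError(f"unknown strategy: {strategy}")


def frequency_enhanced_block(
    x: Sequence[float], modes: Sequence[int], weights: Sequence[complex]
) -> List[float]:
    """Transform to frequency, scale the selected modes, zero the rest, transform back."""
    spec = rdft(x)
    kept = [0j] * len(spec)
    for k, w in zip(modes, weights):
        # w is a placeholder for a learned complex weight (assumption)
        kept[k] = spec[k] * w
    return irdft(kept, len(x))


x = [1.0, 3.0, 2.0, 5.0, 4.0, 0.0, 1.0, 2.0]
smoothed = frequency_enhanced_block(x, select_modes(len(x), 3, "low"), [1.0, 1.0, 1.0])
print(smoothed)
```

Keeping all modes with unit weights reproduces the input exactly, while keeping only a few low modes acts as a smoother; the learned weights in a real model would instead reshape the retained spectrum.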

2.5. Frequency Enhancement Attention
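A sketch of frequency enhancement attention under similar assumptions: queries, keys and values are moved to the frequency domain, the same M modes are taken from every channel, attention scores are computed between modes instead of between time steps, and the mixed modes are zero-padded and transformed back to the query length. The tanh activation, the plain (unconjugated) product of complex coefficients, the omission of learned projections, and all function names are assumptions of this sketch rather than the paper's exact formulation.

```python
import cmath
from typing import List, Sequence

Series = List[List[float]]  # channels x time steps


def rdft(x: Sequence[float]) -> List[complex]:
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n // 2 + 1)]


def irdft(spec: Sequence[complex], n: int) -> List[float]:
    full = [0j] * n
    for k, value in enumerate(spec):
        full[k] = value
        if 0 < k < n - k:  # conjugate symmetry of a real signal's spectrum
            full[n - k] = value.conjugate()
    return [(sum(full[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def modes_of(series: Series, modes: Sequence[int]) -> List[List[complex]]:
    """Fourier coefficients of every channel at the chosen modes: shape M x D."""
    spectra = [rdft(channel) for channel in series]
    return [[spec[m] for spec in spectra] for m in modes]


def frequency_enhanced_attention(q: Series, k: Series, v: Series, modes: Sequence[int]) -> Series:
    """Attention between M selected Fourier modes (assumed tanh activation)."""
    q_f, k_f, v_f = modes_of(q, modes), modes_of(k, modes), modes_of(v, modes)
    # M x M complex scores, passed through tanh
    attn = [[cmath.tanh(sum(a * b for a, b in zip(qi, kj))) for kj in k_f] for qi in q_f]
    # Weighted sum of the value modes: M x D
    mixed = [[sum(attn[i][j] * v_f[j][d] for j in range(len(modes))) for d in range(len(v))]
             for i in range(len(modes))]
    n_out = len(q[0])
    out = []
    for d in range(len(v)):
        spec = [0j] * (n_out // 2 + 1)  # unselected modes stay zero (padding)
        for i, m in enumerate(modes):
            spec[m] = mixed[i][d]
        out.append(irdft(spec, n_out))
    return out


q = [[1.0, 0.5, -0.2, 0.3, 0.8, -0.1, 0.0, 0.4],
     [0.2, -0.3, 0.6, 0.1, -0.5, 0.7, 0.2, -0.4]]
out = frequency_enhanced_attention(q, q, q, modes=[0, 1, 2])
print([len(channel) for channel in out])  # [8, 8]
```

Because the score matrix is M × M rather than N × N, the cost of the attention step depends on the number of retained modes, not on the sequence length.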

2.6. Mixture of Experts Decomposition Block
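The mixture of experts decomposition named in contribution (1) can be sketched as several moving-average "experts" with different window lengths whose outputs are blended with softmax weights into a trend, the remainder being the seasonal part. Short windows follow short-time-scale changes and long windows the long-time-scale trend. In FEDformer the mixing weights are produced from the input by a learned layer; the fixed `logits`, the edge-replication padding, the kernel sizes and the toy data below are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def moving_average(x: Sequence[float], kernel: int) -> List[float]:
    """Centered moving average; the ends are padded by repeating the first/last value."""
    if kernel < 1 or kernel % 2 == 0:
        raise ValueError("kernel must be a positive odd integer")
    pad = (kernel - 1) // 2
    padded = [x[0]] * pad + list(x) + [x[-1]] * pad
    return [sum(padded[i:i + kernel]) / kernel for i in range(len(x))]


def softmax(logits: Sequence[float]) -> List[float]:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_decompose(
    x: Sequence[float], kernels: Sequence[int], logits: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Split x into (seasonal, trend) with a softmax-weighted mix of moving-average experts."""
    weights = softmax(logits)  # fixed logits: hypothetical stand-in for learned weights
    experts = [moving_average(x, k) for k in kernels]
    trend = [sum(w * e[t] for w, e in zip(weights, experts)) for t in range(len(x))]
    seasonal = [v - tr for v, tr in zip(x, trend)]
    return seasonal, trend


load = [10.0, 12.0, 15.0, 11.0, 9.0, 13.0, 16.0, 12.0, 10.0, 14.0]
seasonal, trend = moe_decompose(load, kernels=[3, 5, 7], logits=[0.2, 0.5, 0.3])
print(trend)
```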

2.7. Frequency Domain Representation of Time Series Variables
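For reference, the standard discrete Fourier transform pair that maps a length-$N$ series $x_n$ to its frequency-domain coefficients $X_k$ and back is shown below; the symbols are chosen here and may differ from the paper's notation.

$$
X_k = \sum_{n=0}^{N-1} x_n \, e^{-i 2\pi k n / N}, \qquad k = 0, 1, \dots, N-1,
$$

$$
x_n = \frac{1}{N} \sum_{k=0}^{N-1} X_k \, e^{i 2\pi k n / N}, \qquad n = 0, 1, \dots, N-1.
$$

For a real-valued load series, $X_{N-k} = \overline{X_k}$, so only the $\lfloor N/2 \rfloor + 1$ coefficients $X_0, \dots, X_{\lfloor N/2 \rfloor}$ are independent. Each $X_k$ describes a periodic component completing $k$ cycles over the window, with amplitude $|X_k|$ and phase $\arg X_k$.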

3. Power Load Forecasting Method Based on GRA-FEDformer

4. Experimental Results

4.1. Dataset Description

4.2. Validation of Model Performance

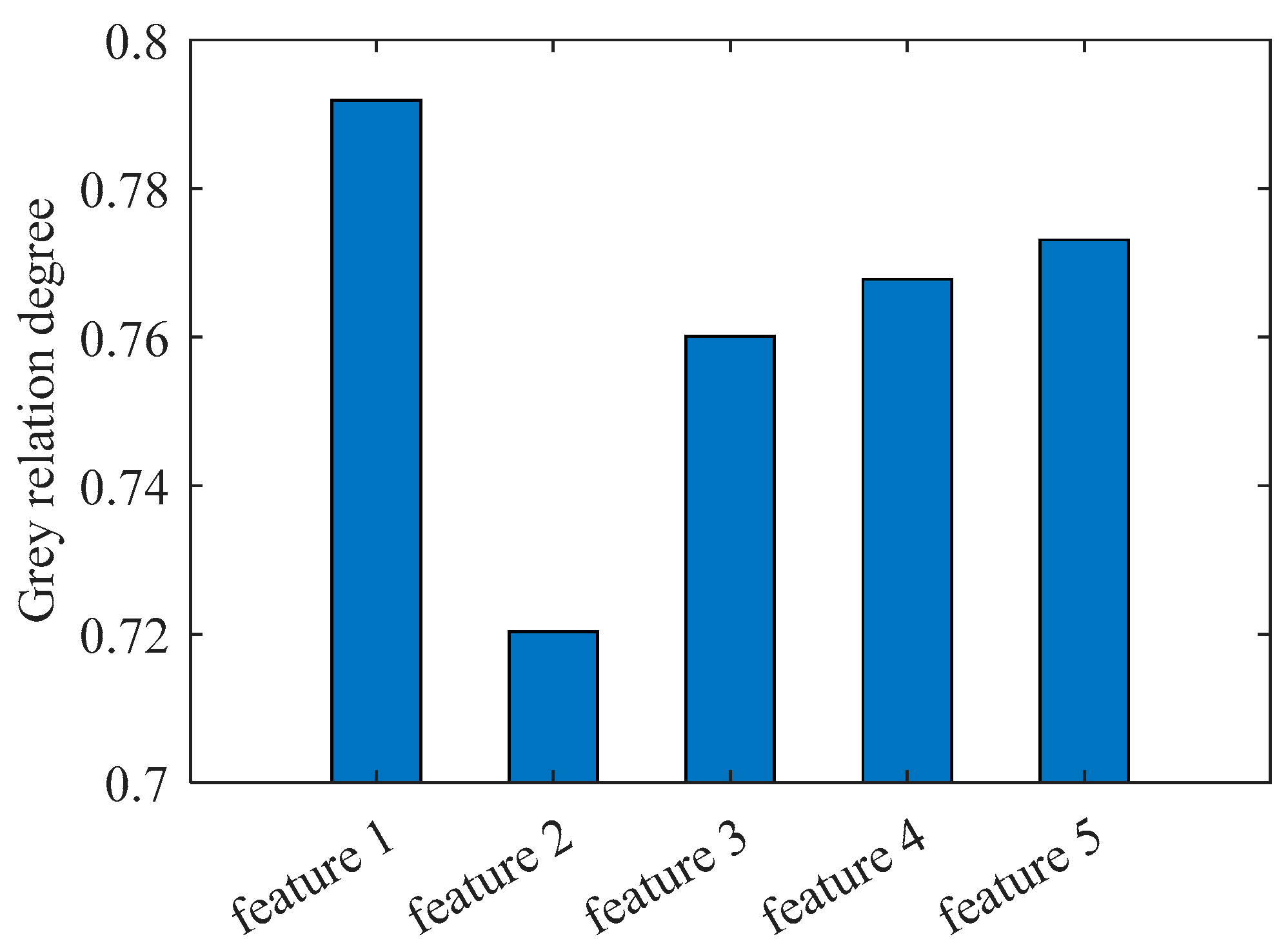

4.2.1. GRA-Based Feature Selection Method

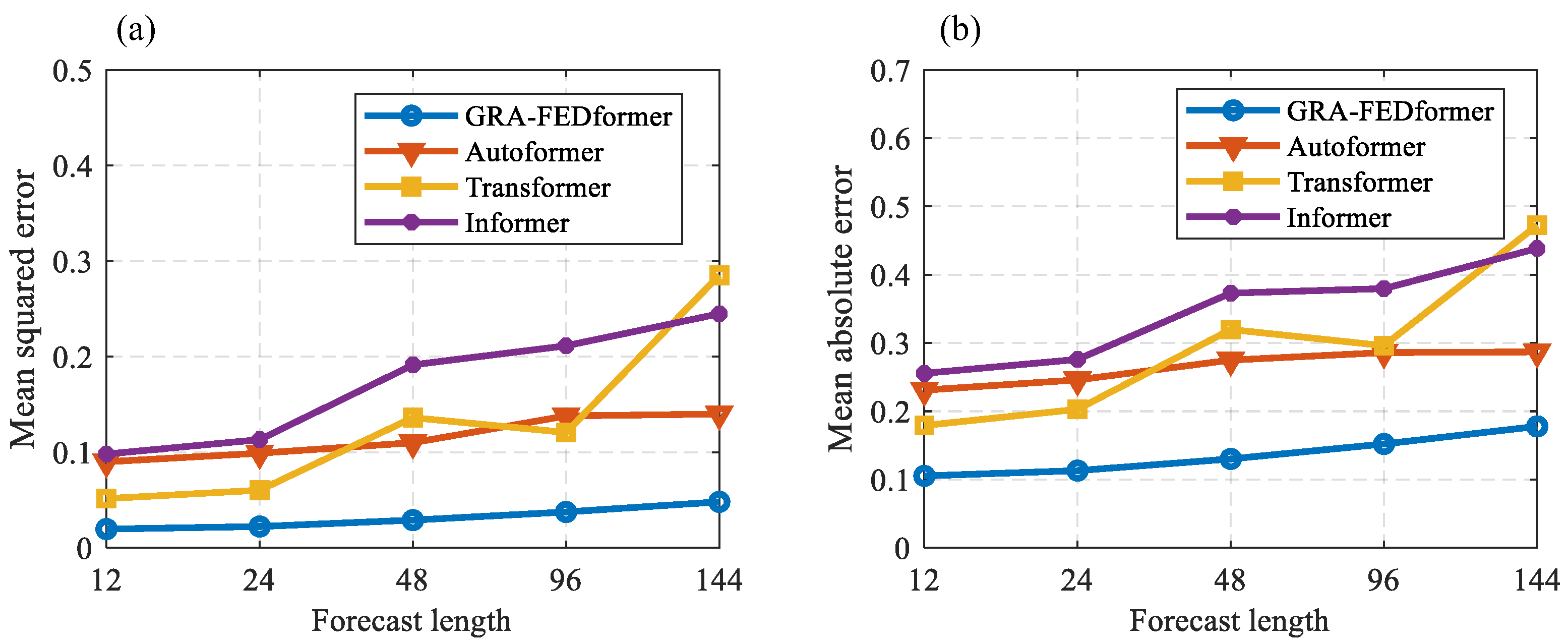

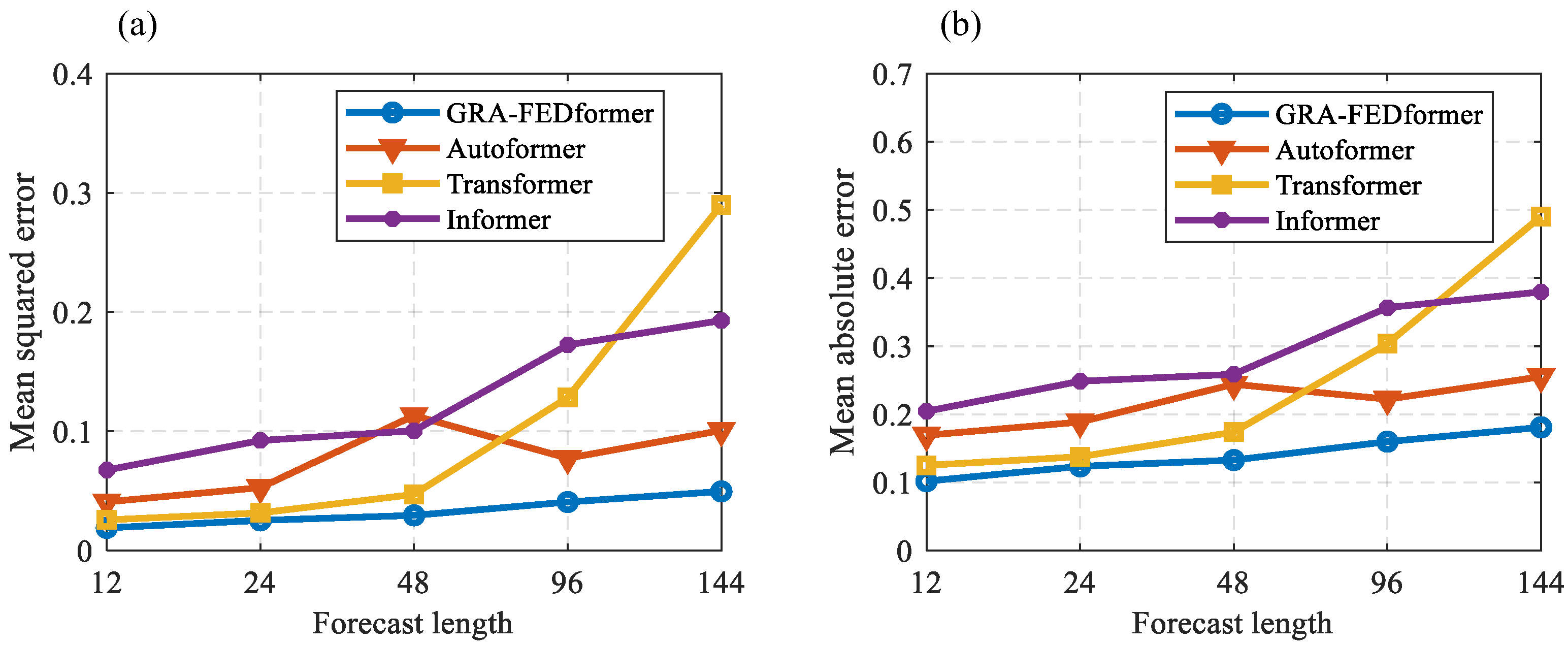

4.2.2. Analysis of the Results of Comparative Experiments

4.2.3. Performance Validation for Frequency Mode Selection Strategies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Wei, N.; Yin, C.; Yin, L.; Tan, J.; Liu, J.; Wang, S.; Qiao, W.; Zeng, F. Short-term load forecasting based on WM algorithm and transfer learning model. Appl. Energy 2024, 353, 122087.

- [2] Wang, X.; Yao, Z.; Papaefthymiou, M. A real-time electrical load forecasting and unsupervised anomaly detection framework. Appl. Energy 2023, 330, 120279.

- [3] Mohandes, M. Support vector machines for short-term electrical load forecasting. Int. J. Energy Res. 2002, 26, 335–345.

- [4] Lee, C.M.; Ko, C.N. Short-term load forecasting using lifting scheme and ARIMA models. Expert Syst. Appl. 2011, 38, 5902–5911.

- [5] Cui, Z.; Hu, W.; Zhang, G.; Huang, Q.; Chen, Z.; Blaabjerg, F. Knowledge-Informed Deep Learning Method for Multiple Oscillation Sources Localization. IEEE Trans. Power Syst. 2025, 40, 2811–2814.

- [6] Bashir, T.; Haoyong, C.; Tahir, M.F.; Liquang, Z. Short term electricity load forecasting using hybrid prophet-LSTM model optimized by BPNN. Energy Rep. 2022, 8, 1678–1686.

- [7] Lin, T.; Guo, T.; Aberer, K. Hybrid neural networks for learning the trend in time series. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017.

- [8] Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-term residential load forecasting based on LSTM recurrent neural network. IEEE Trans. Smart Grid 2017, 10, 841–851.

- [9] Chiu, M.C.; Hsu, H.W.; Chen, K.S.; Wen, C.Y. A hybrid CNN-GRU based probabilistic model for load forecasting from individual household to commercial building. Energy Rep. 2023, 9, 94–105.

- [10] Li, S.; Li, W.; Cook, C.; Zhu, C.; Gao, Y. Independently recurrent neural network (IndRNN): Building a longer and deeper RNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- [11] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30, Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: New York, NY, USA, 2018.

- [12] Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- [13] Wu, Y.; Liao, K.; Chen, J.; Wang, J.; Chen, D.Z.; Gao, H.; Wu, J. D-former: A u-shaped dilated transformer for 3d medical image segmentation. Neural Comput. Appl. 2023, 35, 1931–1944.

- [14] Ran, P.; Dong, K.; Liu, X.; Wang, J. Short-term load forecasting based on CEEMDAN and Transformer. Electr. Power Syst. Res. 2023, 214, 108885.

- [15] Qingyong, Z.; Jiahua, C.; Gang, X.; Shangyang, H.; Kunxiang, D. TransformGraph: A novel short-term electricity net load forecasting model. Energy Rep. 2023, 9, 2705–2717.

- [16] Wang, C.; Wang, Y.; Ding, Z.; Zheng, T.; Hu, J.; Zhang, K. A transformer-based method of multienergy load forecasting in integrated energy system. IEEE Trans. Smart Grid 2022, 13, 2703–2714.

- [17] Ming, L.; Chaoshan, S.; Yunfei, T.; Guihao, W.; Yonghang, L. An electricity load forecasting based on improved Autoformer. Comput. Technol. Dev. 2024, 35, 107–112.

- [18] Jiang, Y.; Gao, T.; Dai, Y.; Si, R.; Hao, J.; Zhang, J.; Gao, D.W. Very short-term residential load forecasting based on deep-autoformer. Appl. Energy 2022, 328, 120120.

- [19] Cleveland, R.B.; Cleveland, W.S.; McRae, J.E.; Terpenning, I. STL: A seasonal-trend decomposition procedure based on loess. J. Off. Stat. 1990, 6, 3–73.

- [20] Wen, Q.; He, K.; Sun, L.; Zhang, Y.; Ke, M.; Xu, H. RobustPeriod: Robust time-frequency mining for multiple periodicity detection. In Proceedings of the 2021 International Conference on Management of Data, Virtual, 20–25 June 2021; Association for Computing Machinery: New York, NY, USA, 2021.

- [21] Gong, M.; Zhao, Y.; Sun, J.; Han, C.; Sun, G.; Yan, B. Load forecasting of district heating system based on Informer. Energy 2022, 253, 124179.

- [22] Drineas, P.; Mahoney, M.W.; Muthukrishnan, S. Relative-error CUR matrix decompositions. SIAM J. Matrix Anal. Appl. 2008, 30, 844–881.

- [23] Zhou, H. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; AAAI Press: Washington, DC, USA, 2021.

- [24] Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. In Advances in Neural Information Processing Systems 34, Proceedings of the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), Vancouver, BC, Canada, 6–14 December 2021; Curran Associates, Inc.: New York, NY, USA, 2021.

| Ref. | Year | Model | Suitable for Long Time Series Forecasting | Suitable for Short Time Series Forecasting |
|---|---|---|---|---|
| [11] | 2017 | Transformer | No | Yes |
| [14] | 2023 | CEEMDAN+Transformer | No | Yes |
| [15] | 2023 | GCN+Transformer | No | Yes |
| [16] | 2022 | Multiple-Decoder Transformer | No | Yes |
| [17] | 2024 | CDConv+Autoformer | No | Yes |
| [18] | 2022 | MLP+Autoformer | No | Yes |
| [21] | 2022 | Informer | No | Yes |
| Proposed | - | GRA+FEDformer | Yes | Yes |

| Forecast Length (Steps) | Proposed MSE | Proposed MAE | Autoformer MSE | Autoformer MAE | Transformer MSE | Transformer MAE | Informer MSE | Informer MAE |
|---|---|---|---|---|---|---|---|---|
| 12 | 0.019 | 0.105 | 0.090 | 0.231 | 0.051 | 0.179 | 0.098 | 0.255 |
| 24 | 0.022 | 0.113 | 0.099 | 0.246 | 0.060 | 0.202 | 0.113 | 0.276 |
| 48 | 0.029 | 0.130 | 0.110 | 0.275 | 0.136 | 0.319 | 0.191 | 0.373 |
| 96 | 0.037 | 0.152 | 0.138 | 0.286 | 0.120 | 0.296 | 0.211 | 0.379 |
| 144 | 0.048 | 0.177 | 0.140 | 0.287 | 0.285 | 0.472 | 0.244 | 0.438 |

| Forecast Length (Steps) | Proposed SD | Proposed MOE | Autoformer SD | Autoformer MOE | Transformer SD | Transformer MOE | Informer SD | Informer MOE |
|---|---|---|---|---|---|---|---|---|
| 12 | 0.139 | ±0.273 | 0.272 | ±0.534 | 0.204 | ±0.400 | 0.225 | ±0.440 |
| 24 | 0.146 | ±0.287 | 0.306 | ±0.601 | 0.193 | ±0.378 | 0.255 | ±0.501 |
| 48 | 0.170 | ±0.334 | 0.247 | ±0.484 | 0.244 | ±0.477 | 0.258 | ±0.506 |
| 96 | 0.193 | ±0.379 | 0.362 | ±0.709 | 0.258 | ±0.506 | 0.299 | ±0.586 |
| 144 | 0.201 | ±0.392 | 0.272 | ±0.729 | 0.353 | ±0.692 | 0.281 | ±0.551 |

| Forecast Length (Steps) | Proposed MSE | Proposed MAE | Autoformer MSE | Autoformer MAE | Transformer MSE | Transformer MAE | Informer MSE | Informer MAE |
|---|---|---|---|---|---|---|---|---|
| 12 | 0.019 | 0.101 | 0.040 | 0.169 | 0.025 | 0.125 | 0.067 | 0.204 |
| 24 | 0.025 | 0.123 | 0.052 | 0.188 | 0.031 | 0.137 | 0.092 | 0.248 |
| 48 | 0.029 | 0.132 | 0.113 | 0.244 | 0.047 | 0.173 | 0.100 | 0.258 |
| 96 | 0.040 | 0.159 | 0.077 | 0.222 | 0.128 | 0.303 | 0.172 | 0.356 |
| 144 | 0.049 | 0.180 | 0.100 | 0.255 | 0.289 | 0.490 | 0.192 | 0.379 |

| Forecast Length (Steps) | Proposed SD | Proposed MOE | Autoformer SD | Autoformer MOE | Transformer SD | Transformer MOE | Informer SD | Informer MOE |
|---|---|---|---|---|---|---|---|---|
| 12 | 0.137 | ±0.269 | 0.190 | ±0.373 | 0.152 | ±0.299 | 0.201 | ±0.395 |
| 24 | 0.158 | ±0.309 | 0.218 | ±0.427 | 0.154 | ±0.303 | 0.221 | ±0.433 |
| 48 | 0.172 | ±0.336 | 0.336 | ±0.658 | 0.174 | ±0.341 | 0.256 | ±0.502 |
| 96 | 0.201 | ±0.394 | 0.269 | ±0.527 | 0.219 | ±0.430 | 0.251 | ±0.493 |
| 144 | 0.222 | ±0.435 | 0.298 | ±0.585 | 0.255 | ±0.500 | 0.257 | ±0.505 |
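The MOE values in the result tables are consistent with MOE = 1.96 × SD, the half-width of a normal 95% interval (for example, 1.96 × 0.139 ≈ 0.272 and 1.96 × 0.362 ≈ 0.709). The sketch below computes the four reported quantities from paired actual and predicted values; treating SD as the sample standard deviation of the prediction errors is an assumption, since the section does not state which estimator was used, and the function name is hypothetical.

```python
import statistics
from typing import Dict, Sequence

Z_95 = 1.96  # two-sided 95% quantile of the standard normal distribution


def error_summary(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """MSE, MAE, standard deviation of the errors, and a 95% margin of error."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    errors = [p - a for a, p in zip(actual, predicted)]
    sd = statistics.stdev(errors)  # sample SD: an assumption about the paper's estimator
    return {
        "MSE": sum(e * e for e in errors) / len(errors),
        "MAE": sum(abs(e) for e in errors) / len(errors),
        "SD": sd,
        "MOE": Z_95 * sd,
    }


summary = error_summary([1.0, 2.0, 3.0, 4.0], [1.5, 1.5, 3.5, 3.5])
print(summary)
```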

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jin, X.; Pan, T.; Yu, H.; Wang, Z.; Cao, W. Electricity Load Forecasting Method Based on the GRA-FEDformer Algorithm. Energies 2025, 18, 4057. https://doi.org/10.3390/en18154057

Jin X, Pan T, Yu H, Wang Z, Cao W. Electricity Load Forecasting Method Based on the GRA-FEDformer Algorithm. Energies. 2025; 18(15):4057. https://doi.org/10.3390/en18154057

Chicago/Turabian Style: Jin, Xin, Tingzhe Pan, Heyang Yu, Zongyi Wang, and Wangzhang Cao. 2025. "Electricity Load Forecasting Method Based on the GRA-FEDformer Algorithm" Energies 18, no. 15: 4057. https://doi.org/10.3390/en18154057

APA Style: Jin, X., Pan, T., Yu, H., Wang, Z., & Cao, W. (2025). Electricity Load Forecasting Method Based on the GRA-FEDformer Algorithm. Energies, 18(15), 4057. https://doi.org/10.3390/en18154057