Intelligent Management of Renewable Energy Communities: An MLaaS Framework with RL-Based Decision Making

Abstract

1. Introduction

2. Background

2.1. Role of COP26 in Energy Transition

2.2. Existing REC Solutions

2.3. Predictive Models in REC Settings

3. Methodology

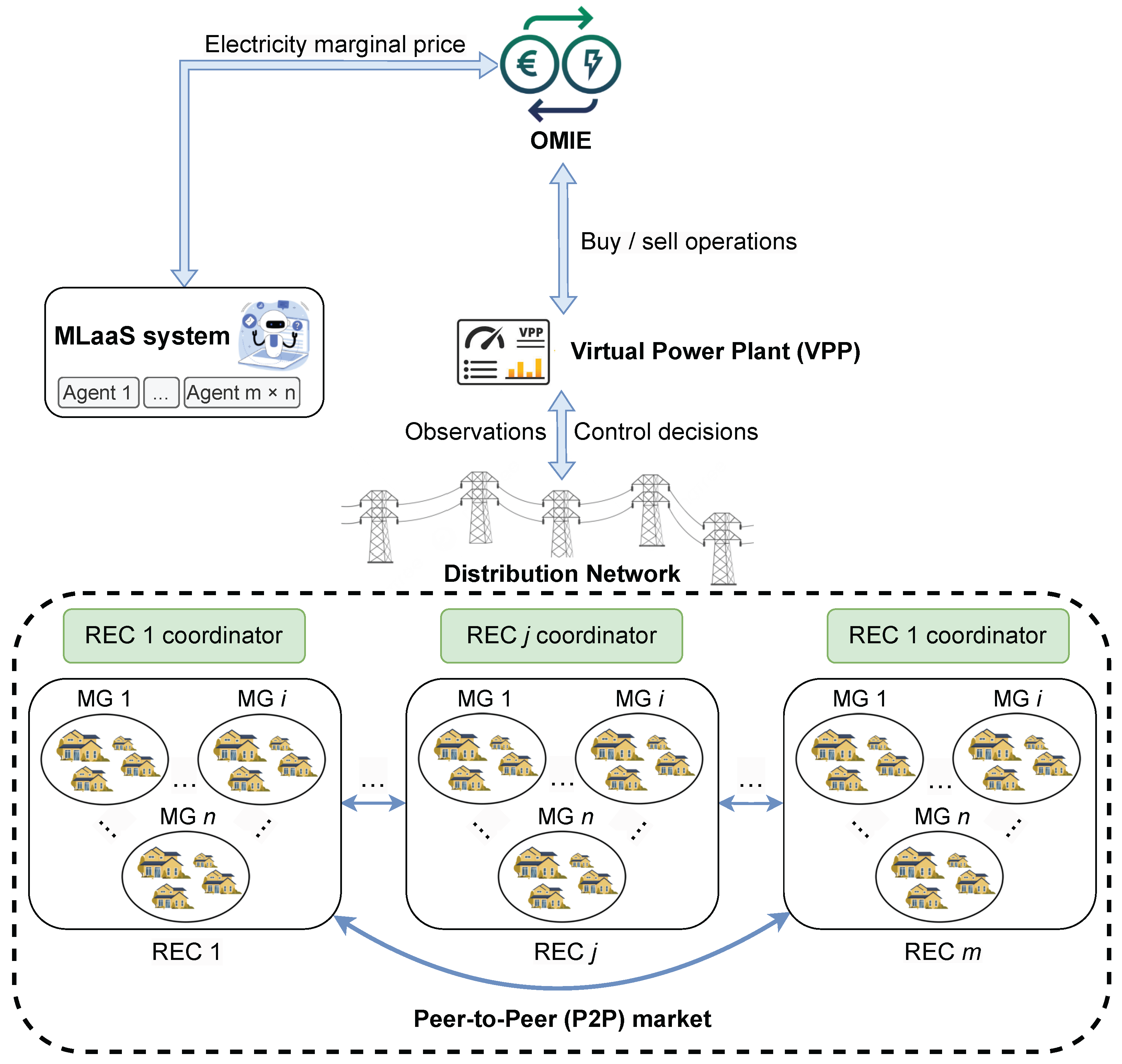

3.1. Application Context

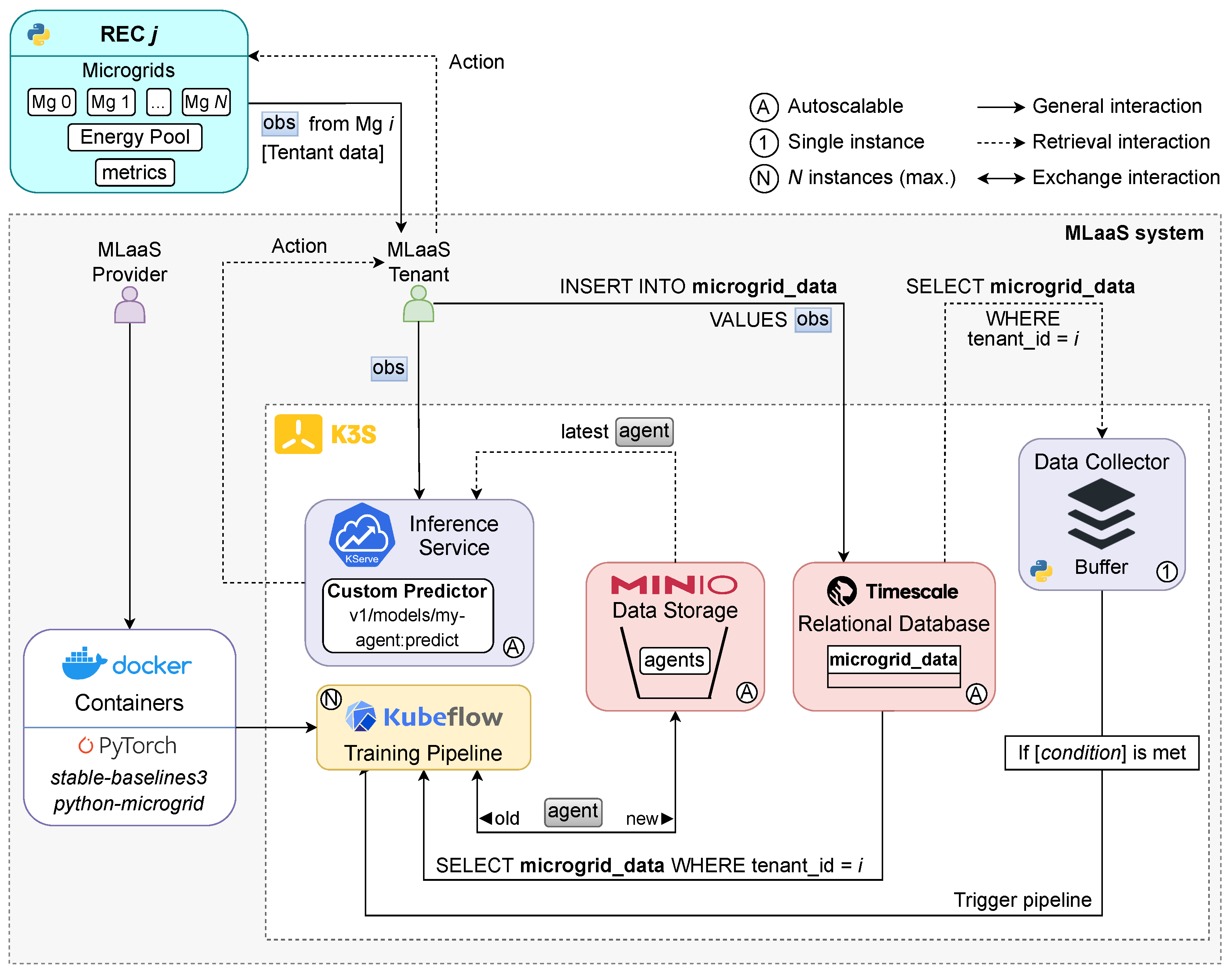

3.2. Proposed MLaaS Solution

3.3. RL Formulation

- Base state vector element at index i (e.g. energy load, PV generation, etc.);

- Two cyclical features for the current hour;

- Three forecasted energy prices at different steps (initial, intermediate, and final).
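Cyclical hour features of this kind are commonly obtained by projecting the hour onto the unit circle, so that 23:00 and 00:00 end up close together. The following is a minimal sketch under that assumption; the sine/cosine pair, the 24-hour period, and the name `encode_hour` are illustrative choices rather than details confirmed by the source.

```python
import math


def encode_hour(hour: int) -> tuple[float, float]:
    """Map an hour of the day to a (sin, cos) pair on the unit circle.

    Assumption: a 24-hour period; the function name is hypothetical.
    """
    angle = 2.0 * math.pi * (hour % 24) / 24.0
    return math.sin(angle), math.cos(angle)


def circular_distance(h1: int, h2: int) -> float:
    """Euclidean distance between two encoded hours."""
    s1, c1 = encode_hour(h1)
    s2, c2 = encode_hour(h2)
    return math.hypot(s1 - s2, c1 - c2)


# Midnight and 23:00 are as close as midnight and 01:00.
wrap_gap = circular_distance(23, 0)
next_gap = circular_distance(0, 1)
```

With a raw integer hour, the agent would instead see a jump of 23 units between 23:00 and 00:00, which is the discontinuity this encoding avoids.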

- Energy imported from the grid (kWh);

- Energy exported to the grid (kWh);

- Cost of trading 1 kWh of energy with the grid (same value for importation/exportation, in this case);

- Cost of operation i (loss load, overgeneration, battery use and genset).
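One plausible way to combine these terms into a per-step cost is sketched below. It assumes that the grid term is the single trading price multiplied by net imports (imports minus exports) and that the operation costs are simply summed. The exact expression used by the authors is not recoverable from this text, so the formula and the name `step_cost` are assumptions.

```python
def step_cost(e_import_kwh: float,
              e_export_kwh: float,
              grid_price: float,
              operation_costs: list[float]) -> float:
    """Per-step cost of a microgrid decision (illustrative assumption).

    grid_price is the cost of trading 1 kWh, applied symmetrically to
    imports (a cost) and exports (a revenue).
    """
    grid_cost = grid_price * (e_import_kwh - e_export_kwh)
    return grid_cost + sum(operation_costs)


# Example: 10 kWh imported, 2 kWh exported at 0.5 per kWh,
# plus battery-use and genset costs of 1.0 and 0.25.
cost = step_cost(10.0, 2.0, 0.5, [1.0, 0.25])
reward = -cost  # an RL agent would typically maximise the negative cost
```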

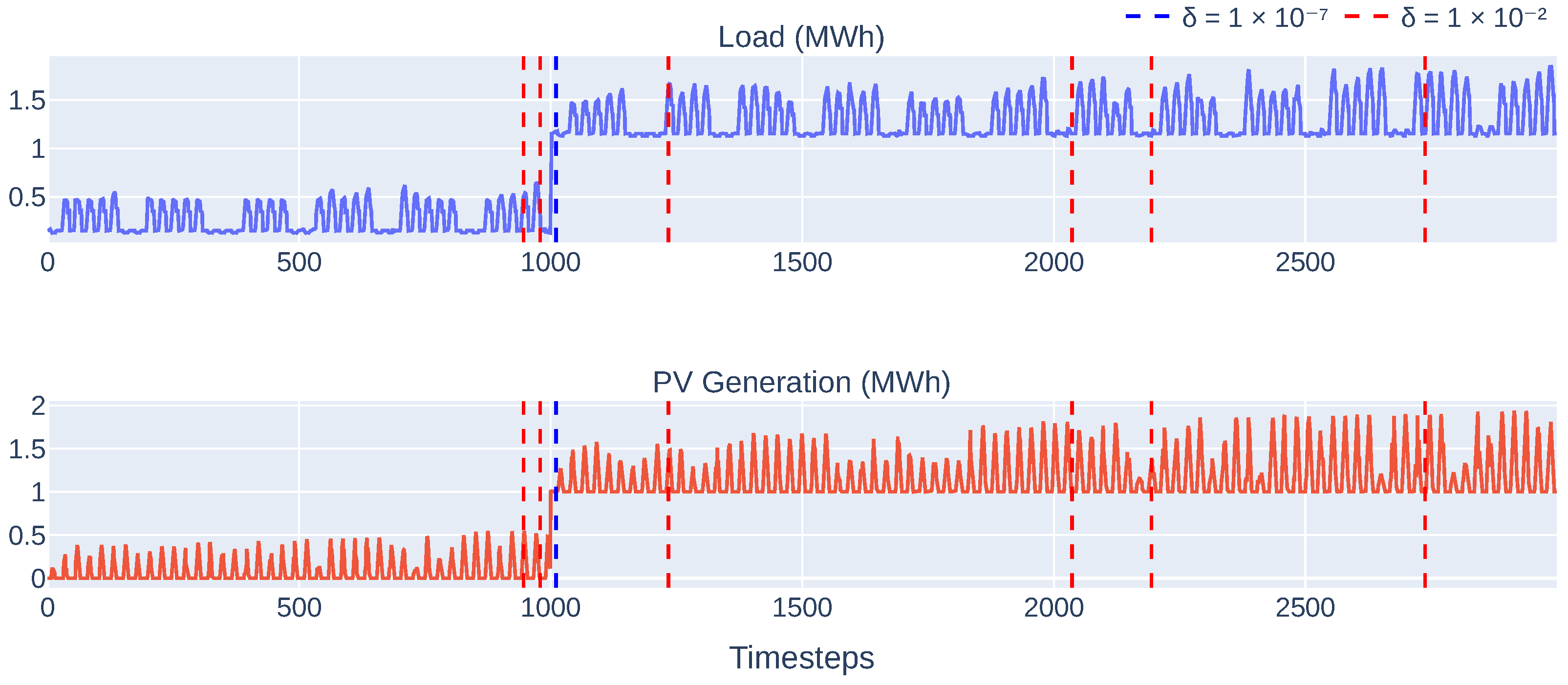

3.4. Available Energy Profiles

4. Development

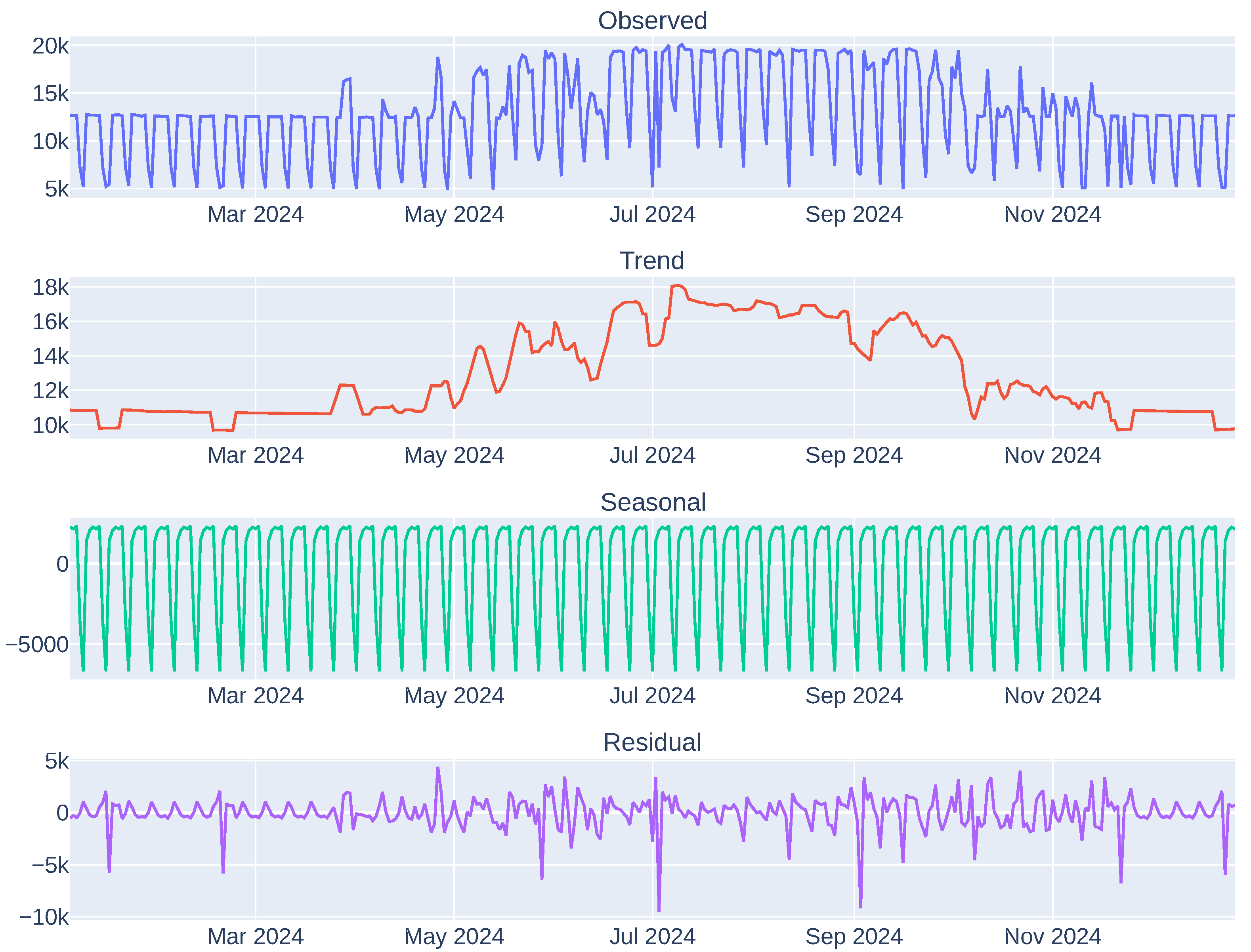

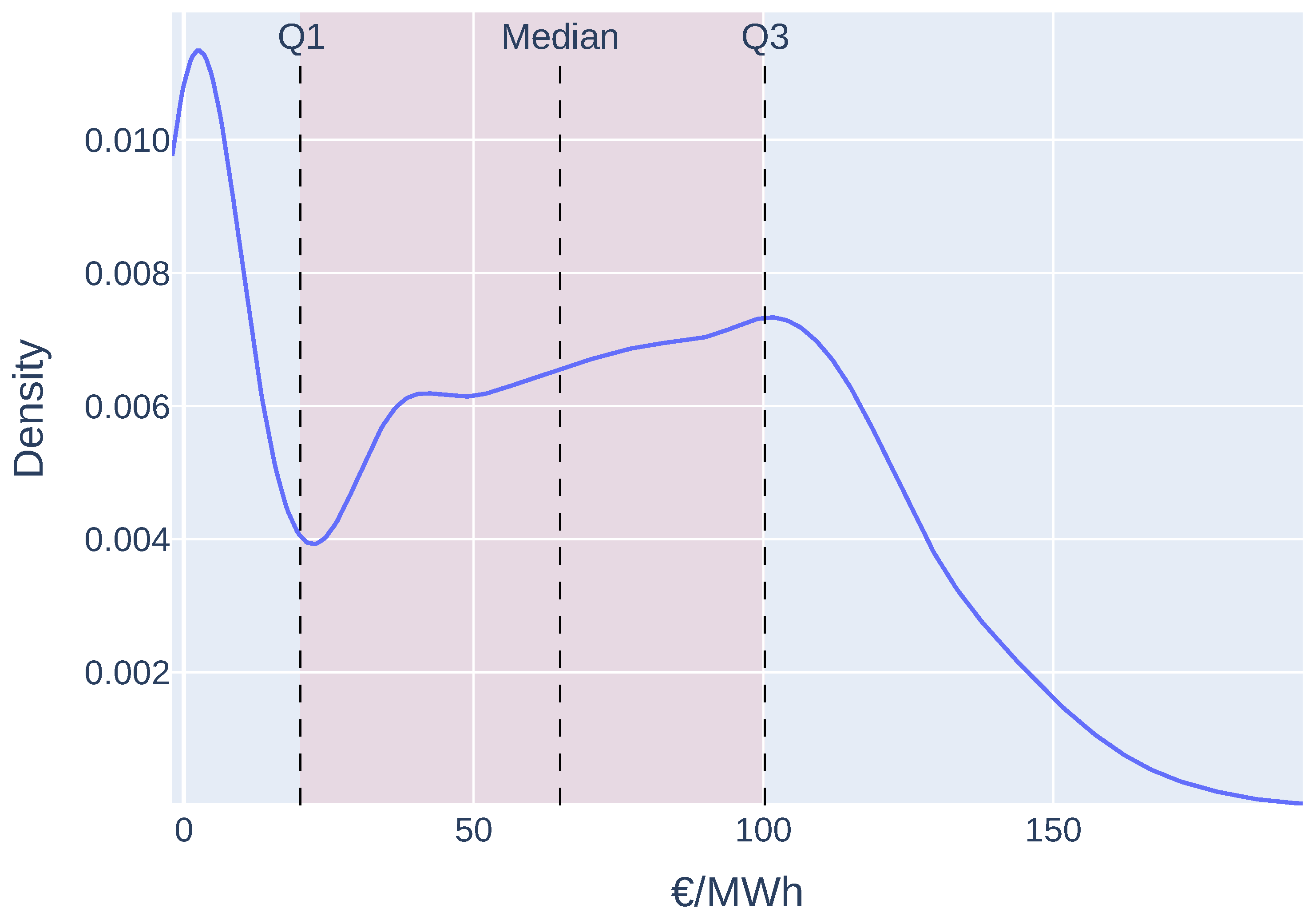

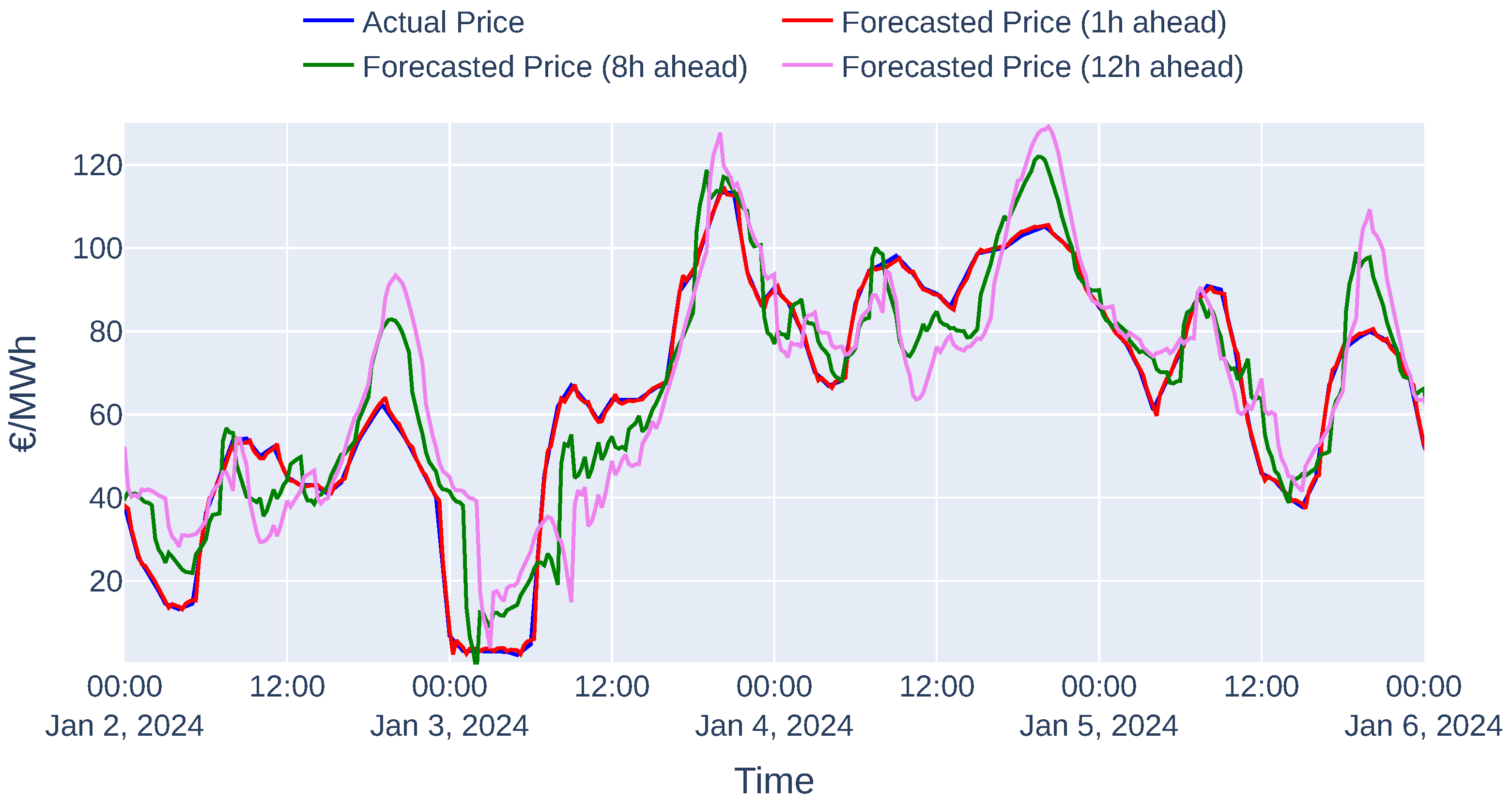

4.1. Energy Price Forecasting

4.2. Intelligent Agents

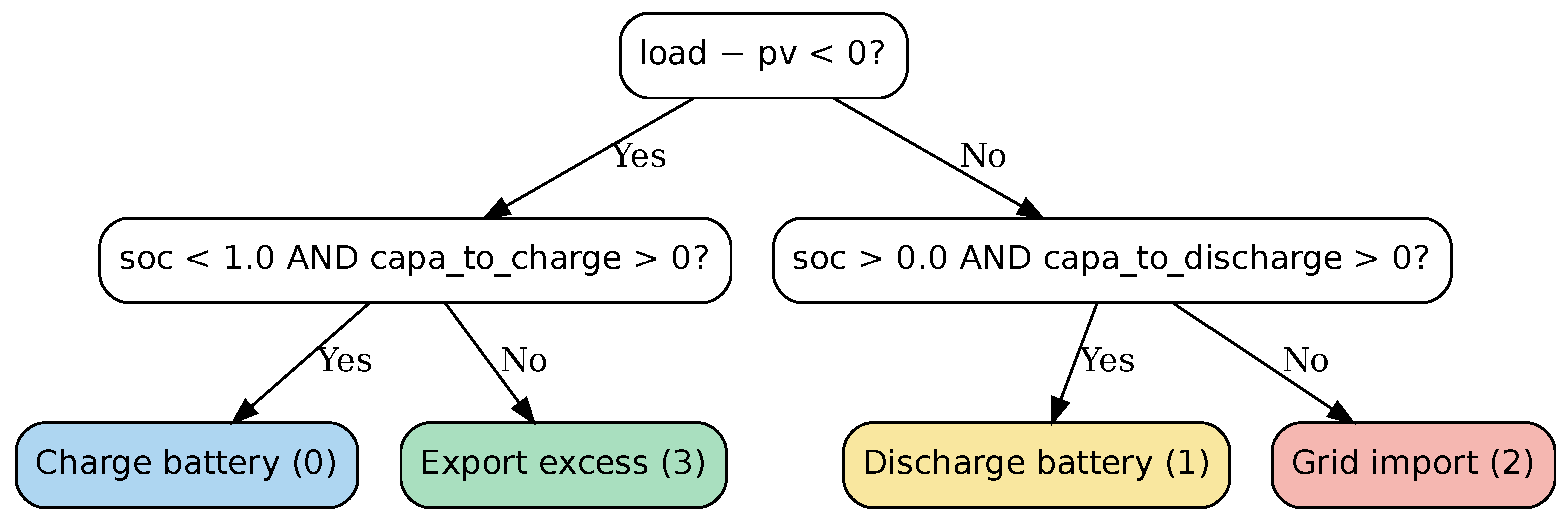

4.2.1. Heuristics-Based Agent (Baseline)

4.2.2. Algorithm 1: Deep Q-Learning (DQN)

- $Q^*(s, a)$: Optimal action–value function;

- $r$: Immediate reward after taking action $a$ in state $s$;

- $\gamma$: Discount factor that controls the importance of future rewards;

- $s'$: Next state;

- $a'$: Next action.
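Taken together, these quantities are the ingredients of the Bellman optimality equation that DQN approximates with a neural network. In its standard textbook form (given here as general background, not as a transcription of the source's own equation), with $Q^*$ the optimal action–value function, $r$ the immediate reward, $\gamma$ the discount factor, and $s'$, $a'$ the next state and action:

$$
Q^*(s, a) = \mathbb{E}_{s'}\left[\, r + \gamma \max_{a'} Q^*(s', a') \,\right]
$$

In practice the expectation is replaced by sampled transitions drawn from the replay buffer, and the right-hand side is evaluated with a periodically updated target network.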

4.2.3. Algorithm 2: Proximal Policy Optimisation (PPO)

- $\gamma$: Discount factor that controls the importance of future rewards.

- $\lambda$: GAE parameter that controls the bias–variance tradeoff.

- $\delta_t$: Temporal difference error between expected and actual returns.

- $r_t$: Reward at time step $t$.

- $V(s)$: Estimated value function at state $s$.

- $r_t(\theta)$: Policy ratio at time step $t$.

- $\hat{A}_t$: Advantage estimate at time step $t$.

- $\epsilon$: Clipping parameter that constrains the ratio.
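The two pieces these quantities feed into, generalised advantage estimation (GAE) and the clipped surrogate objective, can be sketched in plain Python as follows. This is a minimal illustration of the standard formulations from the PPO and GAE papers, assuming a single rollout with no episode termination inside it; the function names are hypothetical and this is not the implementation used by the authors.

```python
def gae_advantages(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalised advantage estimates for one rollout.

    delta_t = r_t + gamma * V(s_{t+1}) - V(s_t)
    A_t     = delta_t + gamma * lam * A_{t+1}
    Assumes no terminal state occurs inside the rollout.
    """
    next_values = list(values[1:]) + [last_value]
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * next_values[t] - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


def clipped_surrogate(ratios, advantages, eps=0.2):
    """Mean of min(r_t * A_t, clip(r_t, 1 - eps, 1 + eps) * A_t)."""
    terms = []
    for ratio, adv in zip(ratios, advantages):
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)


adv = gae_advantages([1.0, 1.0], [0.0, 0.0], 0.0, gamma=1.0, lam=1.0)
objective = clipped_surrogate([1.5], [1.0])
```

Setting the GAE parameter to 1.0 reduces the estimate to the discounted return minus the value baseline, while the clipping keeps a single update from moving the policy ratio far outside the interval around 1.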

4.2.4. Algorithm 3: Advantage Actor–Critic (A2C)

4.2.5. Algorithm Comparison

4.3. Retraining Strategy

4.4. Inter-REC Energy Trading

- Price of the buyer’s/seller’s bid.

- Amount of energy in the buyer’s/seller’s bid.

- c: A constant that determines the weight of each price in the final transaction price.
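A common way to settle a matched pair of bids with such a weighting constant is a convex combination of the two prices. The sketch below assumes that form, with c weighting the buyer's price and (1 - c) the seller's, a trade only when the buyer's price is at least the seller's, and the traded quantity equal to the smaller of the two bid amounts. All three rules and the name `settle_trade` are assumptions made for illustration, not the mechanism confirmed by the source.

```python
from typing import Optional, Tuple


def settle_trade(buyer_price: float, buyer_qty: float,
                 seller_price: float, seller_qty: float,
                 c: float = 0.5) -> Optional[Tuple[float, float]]:
    """Return (transaction_price, traded_quantity) or None if no match.

    Assumed rules: bids match when buyer_price >= seller_price; the
    price is c * buyer_price + (1 - c) * seller_price; the quantity is
    the smaller of the two bid amounts.
    """
    if buyer_price < seller_price:
        return None
    price = c * buyer_price + (1.0 - c) * seller_price
    quantity = min(buyer_qty, seller_qty)
    return price, quantity


deal = settle_trade(buyer_price=10.0, buyer_qty=5.0,
                    seller_price=6.0, seller_qty=3.0, c=0.5)
no_deal = settle_trade(buyer_price=5.0, buyer_qty=1.0,
                       seller_price=6.0, seller_qty=1.0)
```

With c = 0.5 the surplus between the two bid prices is split evenly; values closer to 1 favour the seller, and values closer to 0 favour the buyer.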

4.5. Intra-REC Energy Exchange

- i, j: Tenant indexes;

- N: Number of tenants in the community;

- Amount of energy exported (positive) or imported (negative) by tenant x;

- Current market’s energy marginal price;

- Amount of energy available in the energy pool.

5. Evaluation

5.1. Experiment Setup

5.2. Quality of Energy Price Forecasts

- $y_i$/$\hat{y}_i$: Actual value/predicted value of the energy price at index i.

- n: Number of samples in the test/validation set.
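For reference, the error metrics listed in the abbreviations (MAE, RMSE, and MAPE) can be computed from actual and predicted prices as in the sketch below. These are the standard definitions; the function names and the sample values are illustrative and are not taken from the experiments.

```python
import math


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Undefined when an actual value is zero, which can happen with
    electricity prices, so it should be used with care.
    """
    ratios = (abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * sum(ratios) / len(actual)


# Illustrative values only.
y_true = [100.0, 200.0]
y_pred = [110.0, 180.0]
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

RMSE penalises large errors more heavily than MAE, so reporting both gives a sense of how much of the error comes from occasional large misses.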

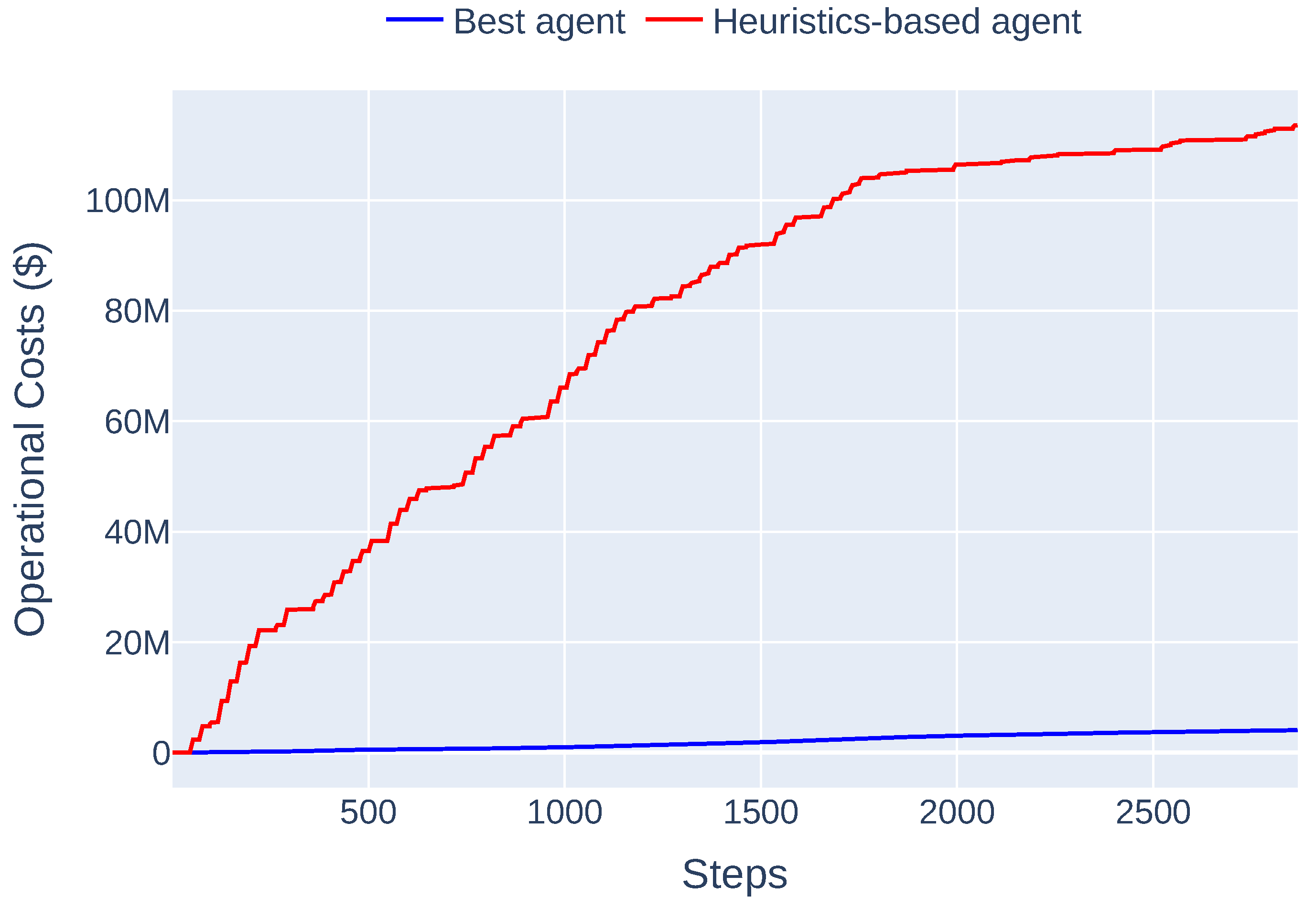

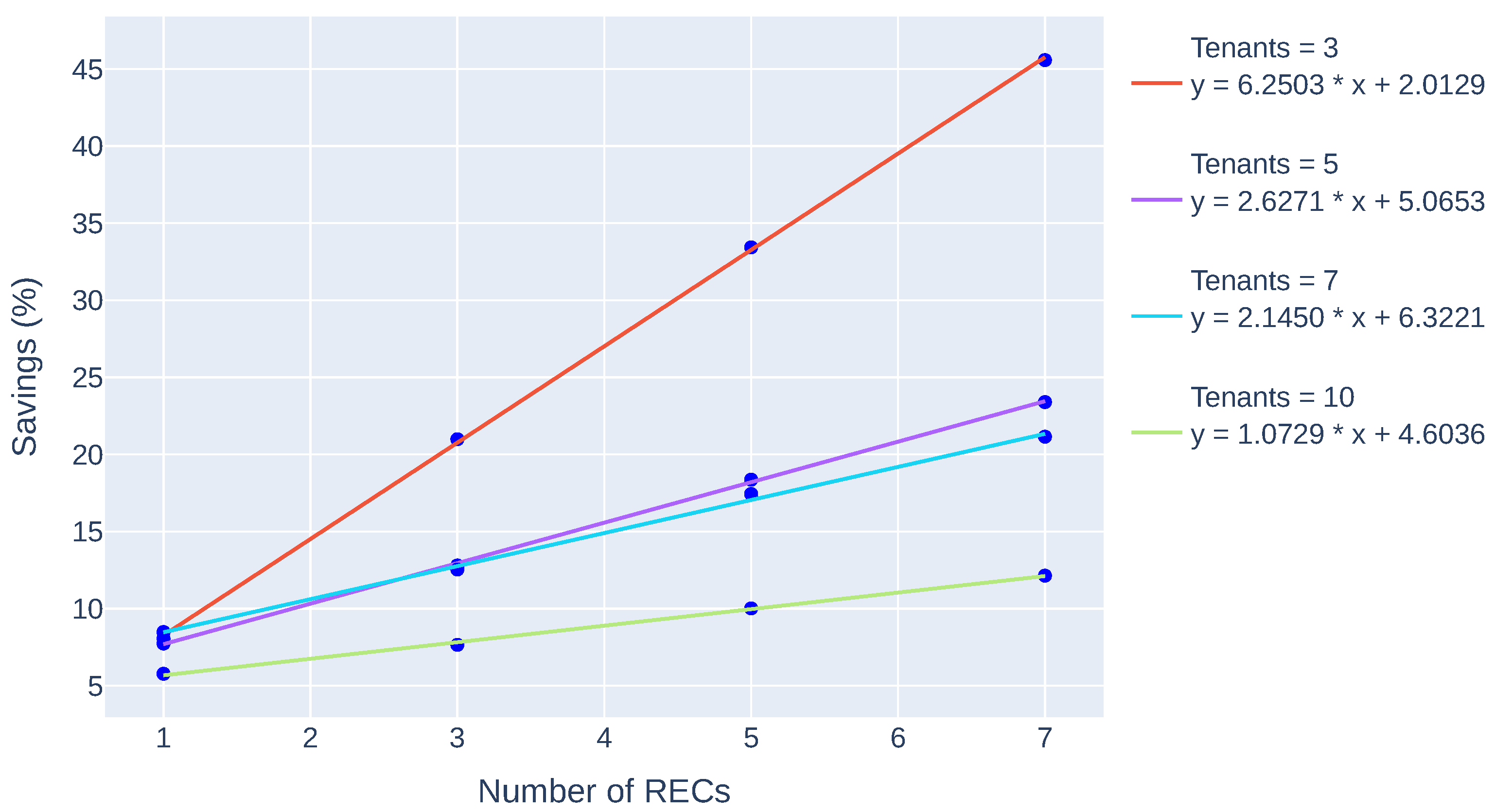

5.3. Simulation Benchmark

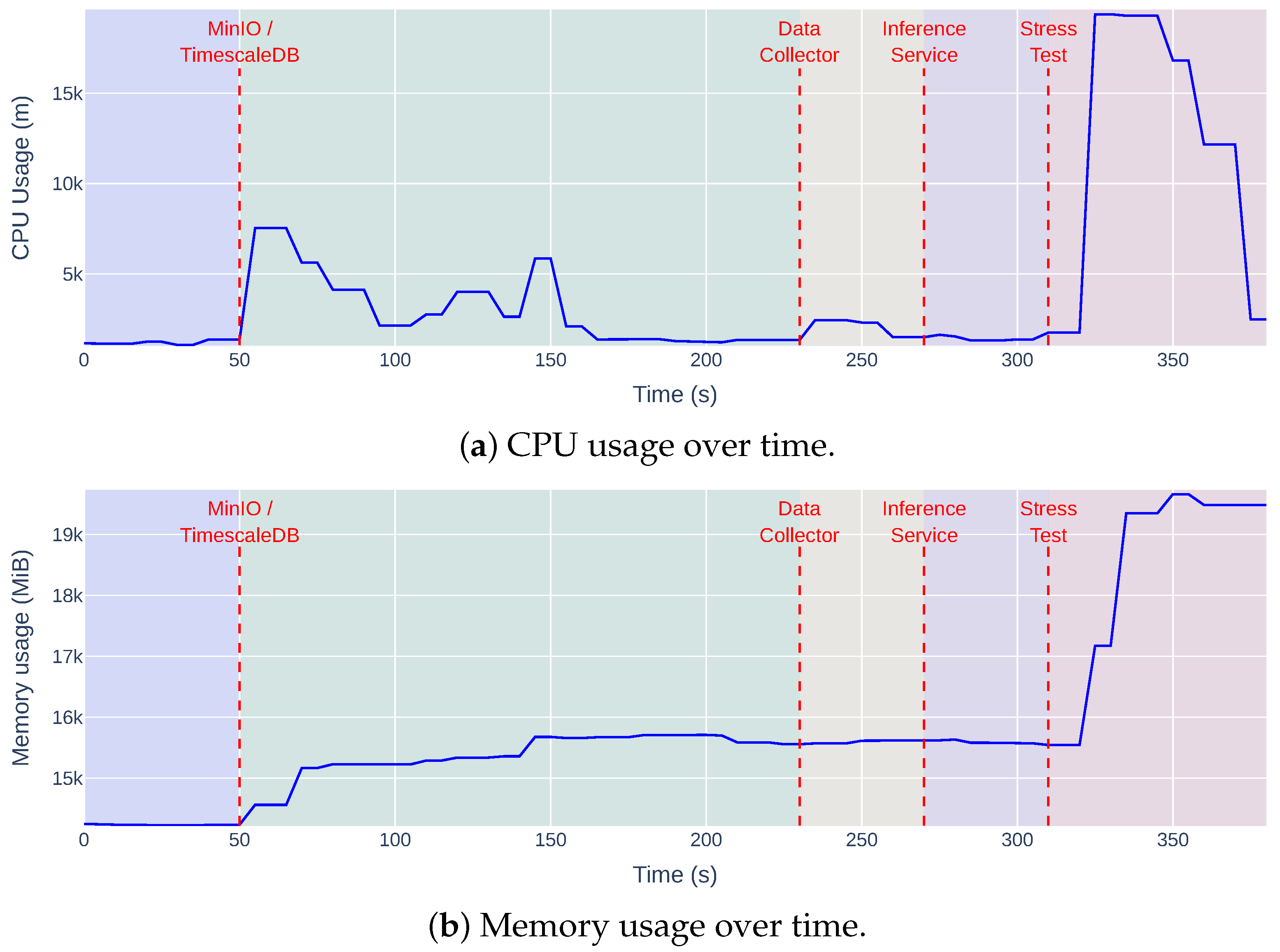

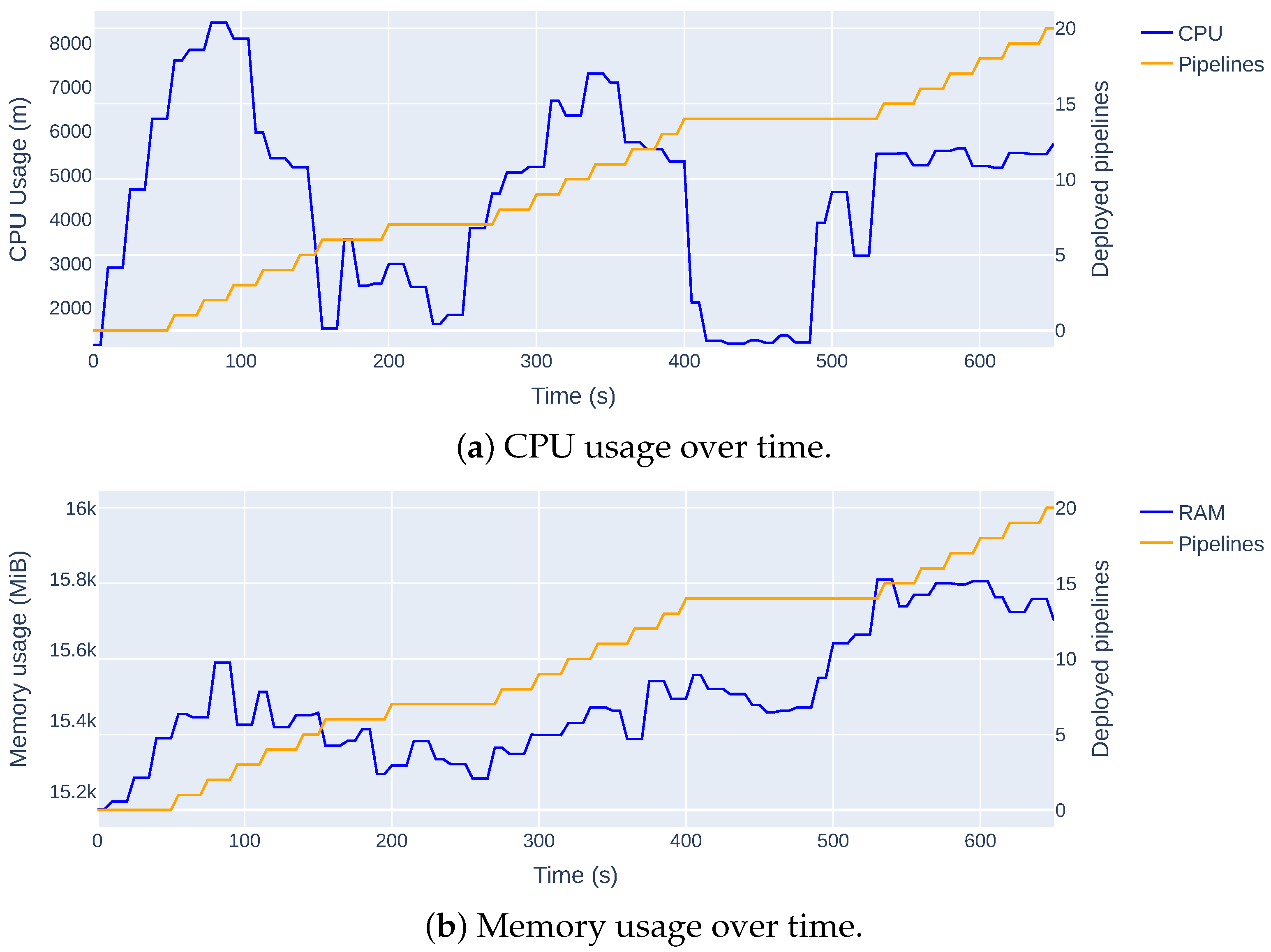

5.4. Infrastructure Validation

6. Conclusions

6.1. Limitations and Future Work

6.2. Final Considerations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |
| RL | Reinforcement Learning |
| MLaaS | Machine Learning as a Service |
| MLOps | Machine Learning Operations |
| REC | Renewable Energy Community |
| VPP | Virtual Power Plant |
| NEMO | Nominated Electricity Market Operator |
| OMIE | Operador do Mercado Ibérico de Energia |
| EMS | Energy Management System |
| PV | Photovoltaic |
| BESS | Battery Energy Storage System |
| P2P | Peer-to-Peer |
| ANN | Artificial Neural Network |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| RMSE | Root Mean Squared Error |
| MAPE | Mean Absolute Percentage Error |
| EDA | Exploratory Data Analysis |
| HPA | Horizontal Pod Autoscaler |
| KFP | Kubeflow Pipelines |
| kWh | Kilowatt-hour |
| MWh | Megawatt-hour |

References

1. Gielen, D.; Boshell, F.; Saygin, D.; Bazilian, M.D.; Wagner, N.; Gorini, R. The role of renewable energy in the global energy transformation. Energy Strategy Rev. 2019, 24, 38–50.

2. Soeiro, S.; Ferreira Dias, M. Renewable energy community and the European energy market: Main motivations. Heliyon 2020, 6, e04511.

3. United Nations. Transforming our World: The 2030 Agenda for Sustainable Development; United Nations, Department of Economic and Social Affairs: New York, NY, USA, 2015; Volume 1, p. 41.

4. Notton, G.; Nivet, M.L.; Voyant, C.; Paoli, C.; Darras, C.; Motte, F.; Fouilloy, A. Intermittent and stochastic character of renewable energy sources: Consequences, cost of intermittence and benefit of forecasting. Renew. Sustain. Energy Rev. 2018, 87, 96–105.

5. Zhang, L.; Ling, J.; Lin, M. Artificial intelligence in renewable energy: A comprehensive bibliometric analysis. Energy Rep. 2022, 8, 14072–14088.

6. Alwan, N.T.; Majeed, M.H.; Shcheklein, S.E.; Ali, O.M.; PraveenKumar, S. Experimental Study of a Tilt Single Slope Solar Still Integrated with Aluminum Condensate Plate. Inventions 2021, 6, 77.

7. Praveenkumar, S.; Gulakhmadov, A.; Kumar, A.; Safaraliev, M.; Chen, X. Comparative Analysis for a Solar Tracking Mechanism of Solar PV in Five Different Climatic Locations in South Indian States: A Techno-Economic Feasibility. Sustainability 2022, 14, 11880.

8. Conte, F.; D’Antoni, F.; Natrella, G.; Merone, M. A new hybrid AI optimal management method for renewable energy communities. Energy AI 2022, 10, 100197.

9. Mai, T.T.; Nguyen, P.H.; Haque, N.A.N.M.M.; Pemen, G.A.J.M. Exploring regression models to enable monitoring capability of local energy communities for self-management in low-voltage distribution networks. IET Smart Grid 2022, 5, 25–41.

10. Du, Y.; Mendes, N.; Rasouli, S.; Mohammadi, J.; Moura, P. Federated Learning Assisted Distributed Energy Optimization. arXiv 2023, arXiv:2311.13785.

11. Matos, M.; Almeida, J.; Gonçalves, P.; Baldo, F.; Braz, F.J.; Bartolomeu, P.C. A Machine Learning-Based Electricity Consumption Forecast and Management System for Renewable Energy Communities. Energies 2024, 17, 630.

12. Giannuzzo, L.; Minuto, F.D.; Schiera, D.S.; Lanzini, A. Reconstructing hourly residential electrical load profiles for Renewable Energy Communities using non-intrusive machine learning techniques. Energy AI 2024, 15, 100329.

13. Kang, H.; Jung, S.; Jeoung, J.; Hong, J.; Hong, T. A bi-level reinforcement learning model for optimal scheduling and planning of battery energy storage considering uncertainty in the energy-sharing community. Sustain. Cities Soc. 2023, 94, 104538.

14. Denysiuk, R.; Lilliu, F.; Recupero, D.R.; Vinyals, M. Peer-to-peer Energy Trading for Smart Energy Communities. In Proceedings of the 12th International Conference on Agents and Artificial Intelligence, Valletta, Malta, 22–24 February 2020; Volume 1: ICAART. INSTICC. SciTePress: Setúbal, Portugal, 2020; pp. 40–49.

15. Limmer, S. Empirical Study of Stability and Fairness of Schemes for Benefit Distribution in Local Energy Communities. Energies 2023, 16, 1756.

16. Guedes, W.; Oliveira, C.; Soares, T.A.; Dias, B.H.; Matos, M. Collective Asset Sharing Mechanisms for PV and BESS in Renewable Energy Communities. IEEE Trans. Smart Grid 2024, 15, 607–616.

17. Zhang, N.; Yan, J.; Hu, C.; Sun, Q.; Yang, L.; Gao, D.W.; Guerrero, J.M.; Li, Y. Price-Matching-Based Regional Energy Market With Hierarchical Reinforcement Learning Algorithm. IEEE Trans. Ind. Informatics 2024, 20, 11103–11114.

18. Karakolis, E.; Pelekis, S.; Mouzakitis, S.; Markaki, O.; Papapostolou, K.; Korbakis, G.; Psarras, J. Artificial intelligence for next generation energy services across Europe–the I-Nergy project. In Proceedings of the International Conferences e-Society 2022 and Mobile Learning 2022, Online, 12–14 March 2022; ERIC: New York, NY, USA, 2022.

19. Coignard, J.; Janvier, M.; Debusschere, V.; Moreau, G.; Chollet, S.; Caire, R. Evaluating forecasting methods in the context of local energy communities. Int. J. Electr. Power Energy Syst. 2021, 131, 106956.

20. Aliyon, K.; Ritvanen, J. Deep learning-based electricity price forecasting: Findings on price predictability and European electricity markets. Energy 2024, 308, 132877.

21. Dimitropoulos, N.; Sofias, N.; Kapsalis, P.; Mylona, Z.; Marinakis, V.; Primo, N.; Doukas, H. Forecasting of short-term PV production in energy communities through Machine Learning and Deep Learning algorithms. In Proceedings of the 2021 12th International Conference on Information, Intelligence, Systems & Applications (IISA), Chania Crete, Greece, 12–14 July 2021; pp. 1–6.

22. Krstev, S.; Forcan, J.; Krneta, D. An overview of forecasting methods for monthly electricity consumption. Teh. Vjesn. 2023, 30, 993–1001.

23. Belenguer, E.; Segarra-Tamarit, J.; Pérez, E.; Vidal-Albalate, R. Short-term electricity price forecasting through demand and renewable generation prediction. Math. Comput. Simul. 2025, 229, 350–361.

24. Sousa, H.; Gonçalves, R.; Antunes, M.; Gomes, D. Privacy-Preserving Energy Optimisation in Home Automation Systems. In Proceedings of the Joint International Conference on AI, Big Data and Blockchain, Vienna, Austria, 19–21 August 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 3–14.

25. Gonçalves, R.; Magalhães, D.; Teixeira, R.; Antunes, M.; Gomes, D.; Aguiar, R.L. Accelerating Energy Forecasting with Data Dimensionality Reduction in a Residential Environment. Energies 2025, 18, 1637.

26. Raffin, A.; Hill, A.; Gleave, A.; Kanervisto, A.; Ernestus, M.; Dormann, N. Stable-Baselines3: Reliable Reinforcement Learning Implementations. J. Mach. Learn. Res. 2021, 22, 1–8.

27. Fuente, N.D.L.; Guerra, D.A.V. A Comparative Study of Deep Reinforcement Learning Models: DQN vs. PPO vs. A2C. arXiv 2024, arXiv:2407.14151.

28. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602.

29. Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

30. Schulman, J.; Moritz, P.; Levine, S.; Jordan, M.; Abbeel, P. High-Dimensional Continuous Control Using Generalized Advantage Estimation. arXiv 2018, arXiv:1506.02438.

31. Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.P.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. arXiv 2016, arXiv:1602.01783.

32. Huang, S.; Kanervisto, A.; Raffin, A.; Wang, W.; Ontañón, S.; Dossa, R.F.J. A2C is a special case of PPO. arXiv 2022, arXiv:2205.09123.

33. Bifet, A.; Gavalda, R. Learning from time-changing data with adaptive windowing. In Proceedings of the 2007 SIAM International Conference on Data Mining, Minneapolis, MN, USA, 26–28 April 2007; SIAM: Philadelphia, PA, USA, 2007; pp. 443–448.

34. Kiedanski, D.; Kofman, D.; Horta, J. PyMarket - A simple library for simulating markets in Python. J. Open Source Softw. 2020, 5, 1591.

35. OMIE. European Market. Available online: https://www.omie.es/en (accessed on 4 January 2025).

36. Henri, G.; Levent, T.; Halev, A.; Alami, R.; Cordier, P. pymgrid: An Open-Source Python Microgrid Simulator for Applied Artificial Intelligence Research. arXiv 2020, arXiv:2011.08004.

37. Mystakidis, A.; Koukaras, P.; Tsalikidis, N.; Ioannidis, D.; Tjortjis, C. Energy Forecasting: A Comprehensive Review of Techniques and Technologies. Energies 2024, 17, 1662.

| Ref. | Energy Forecasting | Energy Optimisation | Fairness | Privacy | ML Pipeline | Continual Evaluation |
|---|---|---|---|---|---|---|
| [8] | ✓ | ✓ | X | X | X | X |
| [9] | ✓ | X | X | ✓ | X | X |
| [10] | ✓ | ✓ | ✓ | ✓ | X | X |
| [11] | ✓ | ✓ | X | X | X | X |
| [12] | ✓ | X | X | ✓ | X | X |
| [13] | X | ✓ | X | X | X | ✓ |
| [14] | X | ✓ | P2P | X | X | X |
| [15] | X | ✓ | ✓ | X | X | X |
| [16] | X | ✓ | ✓ | X | X | X |
| [17] | X | ✓ | X | ✓ | X | X |
| [18] | Concept | Concept | X | X | Concept | Concept |
| Count | 5 | 8 | 5 | 4 | 0 | 1 |

| Ref. | Target | Temporal Resolution | Univariate/Multivariate (U/M) | Lookback Window | Forecast Window | Performance |
|---|---|---|---|---|---|---|
| [8] | PV production (kW); Consumption | 15 min | U | 60 h | 24 h | MAE: 1.60; MAE: 2.15 |
| [9] | Congestion | 15 min | M | - | - | RMSE: 0.88 |
| [11] | Consumption | 30 min | M | 24 h | 24 h | RMSE: 4.13 |
| [19] | Demand | 15 min | U | 2 weeks | 48 h | MAPE: 10.00% |
| [20] | Electricity Price | 1 h | U | 7 d | 24 h | MAE: 18.86 |
| [21] | PV production (kWh) | 1 h | M | 10 h | 1 h | RMSE: 1.08 |
| [22] | Consumption | 1 month | U | 5 years | 1 year | MAPE: 2.67% |
| [23] | Electricity Price | 1 h | M | 30 d | 2 d | rMAE: 8.18% |

| No. | Operation(s) | Entities | Load Supply | Surplus Handling |
|---|---|---|---|---|
| 0 | Charge battery | Battery | X | ✓ |
| 1 | Discharge battery | Battery | ✓ | X |
| 2 | Import from grid | Grid | ✓ | X |
| 3 | Export to grid | Grid | X | ✓ |
| 4 | Use genset | Genset | ✓ | X |
| 5 | Charge battery (fully) with energy from PV panels and/or the grid, then export PV surplus | Battery + Grid | X | ✓ |
| 6 | Discharge battery, then use genset | Battery + Genset | ✓ | X |
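The seven operations in this table form a discrete action space, which can be encoded as an enumeration. The sketch below mirrors the table's numbering and its Load Supply and Surplus Handling columns. The identifier names and the `candidate_actions` helper, which picks the relevant subset from the sign of the net load, are hypothetical additions for illustration.

```python
from enum import IntEnum


class Action(IntEnum):
    """Discrete actions, numbered as in the operations table."""
    CHARGE_BATTERY = 0
    DISCHARGE_BATTERY = 1
    IMPORT_FROM_GRID = 2
    EXPORT_TO_GRID = 3
    USE_GENSET = 4
    CHARGE_BATTERY_THEN_EXPORT = 5
    DISCHARGE_BATTERY_THEN_GENSET = 6


# Actions marked as supplying load / handling surplus in the table.
SUPPLIES_LOAD = {Action.DISCHARGE_BATTERY, Action.IMPORT_FROM_GRID,
                 Action.USE_GENSET, Action.DISCHARGE_BATTERY_THEN_GENSET}
HANDLES_SURPLUS = {Action.CHARGE_BATTERY, Action.EXPORT_TO_GRID,
                   Action.CHARGE_BATTERY_THEN_EXPORT}


def candidate_actions(net_load_kwh: float) -> list[Action]:
    """Hypothetical helper: actions relevant to a deficit or a surplus.

    net_load_kwh > 0 means demand exceeds PV generation.
    """
    chosen = SUPPLIES_LOAD if net_load_kwh > 0 else HANDLES_SURPLUS
    return sorted(chosen)


deficit_options = candidate_actions(2.5)
surplus_options = candidate_actions(-1.0)
```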

| Hyperparameter | Value |
|---|---|
| Replay buffer size | 50,000 |
| Number of transitions before learning starts | 1000 |
| Initial exploration rate | 1.0 |
| Final exploration rate | 0.02 |
| Exploration fraction | 0.25 |
| Steps between target network updates | 10,000 |
| Reward discount factor ($\gamma$) | 0.99 |

| Hyperparameter | Value |
|---|---|
| Number of epochs per policy update | 10 |
| Number of steps until policy update | 2048 |
| Mini-batch size | 32 |
| Reward discount factor ($\gamma$) | 0.99 |
| GAE bias–variance parameter ($\lambda$) | 0.95 |
| Clipping parameter ($\epsilon$) | 0.2 |

| Hyperparameter | Value |
|---|---|
| Number of steps until policy update | 5 |
| Mini-batch size | 1 |
| Reward discount factor ($\gamma$) | 0.99 |
| GAE bias–variance parameter ($\lambda$) | 1.0 |
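The three hyperparameter tables for DQN, PPO, and A2C can be written down as keyword-argument dictionaries. The sketch below assumes the agents are built with Stable-Baselines3, which the reference list cites, and uses that library's constructor argument names. The mapping from table rows to argument names is an interpretation, and the A2C mini-batch size has no direct constructor argument there, so it is left out.

```python
# Values are copied from the hyperparameter tables; the keys are
# Stable-Baselines3 constructor argument names (an assumed mapping).
DQN_KWARGS = {
    "buffer_size": 50_000,             # replay buffer size
    "learning_starts": 1_000,          # transitions before learning starts
    "exploration_initial_eps": 1.0,
    "exploration_final_eps": 0.02,
    "exploration_fraction": 0.25,
    "target_update_interval": 10_000,  # steps between target updates
    "gamma": 0.99,
}

PPO_KWARGS = {
    "n_epochs": 10,      # epochs per policy update
    "n_steps": 2048,     # steps collected before each update
    "batch_size": 32,    # mini-batch size
    "gamma": 0.99,
    "gae_lambda": 0.95,
    "clip_range": 0.2,
}

A2C_KWARGS = {
    "n_steps": 5,
    "gamma": 0.99,
    "gae_lambda": 1.0,
}

# Usage sketch (requires stable-baselines3 and an environment `env`,
# neither of which is defined here):
#   from stable_baselines3 import PPO
#   model = PPO("MlpPolicy", env, **PPO_KWARGS)
```

Keeping the settings in plain dictionaries makes it straightforward to log them alongside each training run and to sweep over alternatives such as the network architecture or learning rate.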

| Aspect | DQN | PPO | A2C |
|---|---|---|---|
| Policy Type | Implicit via Q-values | Directly parametrised | Directly parametrised |
| Action Space | Discrete | Discrete/Continuous | Discrete/Continuous |
| Exploration | $\epsilon$-greedy | Stochastic policy | Stochastic policy |
| Stability | Moderate (replay buffer) | High | Low |
| Computational Cost | Moderate (replay buffer) | High (multiple epochs) | Low (1 update/rollout) |

| Lookback Window | Forecasting Window | MAE | Val. MAE | RMSE | Val. RMSE |
|---|---|---|---|---|---|
| 12 | 8 | 3.78 ± 0.16 | 5.18 ± 1.00 | 6.29 ± 0.38 | 8.73 ± 2.05 |
| 12 | 12 | 4.99 ± 0.23 | 7.42 ± 1.63 | 7.97 ± 0.56 | 11.75 ± 2.74 |
| 12 | 24 | 7.23 ± 0.33 | 11.93 ± 3.02 | 10.74 ± 0.69 | 17.32 ± 4.14 |
| 24 | 8 | 3.92 ± 0.30 | 4.98 ± 0.93 | 6.45 ± 0.42 | 8.51 ± 2.17 |
| 24 | 12 | 5.11 ± 0.29 | 6.81 ± 1.40 | 8.11 ± 0.57 | 11.11 ± 2.86 |
| 24 | 24 | 7.36 ± 0.36 | 11.09 ± 2.53 | 10.92 ± 0.68 | 16.29 ± 3.91 |
| 48 | 8 | 3.91 ± 0.41 | 4.88 ± 0.93 | 6.38 ± 0.46 | 8.26 ± 2.20 |
| 48 | 12 | 5.19 ± 0.32 | 6.80 ± 1.08 | 8.15 ± 0.57 | 11.03 ± 2.47 |
| 48 | 24 | 7.33 ± 0.43 | 11.32 ± 2.75 | 10.86 ± 0.73 | 16.44 ± 4.03 |

| i | Agent | Activation Function | Network Arch. | Learning Rate | Baseline (M$) | Cost (M$) | Diff. (M$) | % |
|---|---|---|---|---|---|---|---|---|
| 0 | A2C | ReLU | [128, 128] | 0.001 | 0.19 | 0.05 | 0.14 | 72.68 |
| 1 | DQN | ReLU | [64, 64] | 0.0001 | 23.70 | 3.65 | 20.00 | 84.61 |
| 2 | DQN | ReLU | [64, 64] | 0.0001 | 36.00 | 1.75 | 34.30 | 95.14 |
| 3 | DQN | Tanh | [64, 64] | 0.001 | 35.90 | 1.58 | 34.40 | 95.60 |
| 4 | DQN | ReLU | [64, 64] | 0.0001 | 32.40 | 4.38 | 28.10 | 86.51 |
| 5 | PPO | Tanh | [128, 128] | 0.001 | 114.00 | 4.07 | 109.00 | 96.41 |
| 6 | PPO | Tanh | [128, 128] | 0.001 | 46.30 | 1.76 | 44.50 | 96.20 |
| 7 | DQN | ReLU | [64, 64] | 0.0001 | 15.40 | 1.21 | 14.20 | 92.14 |
| 8 | PPO | Tanh | [128, 128] | 0.001 | 9.74 | 3.64 | 6.09 | 62.58 |
| 9 | DQN | ReLU | [64, 64] | 0.0001 | 3.57 | 1.94 | 1.63 | 45.67 |

| Agent | Activation Function | Network Arch. | Learning Rate | % |
|---|---|---|---|---|
| PPO | Tanh | [64, 64] | 0.001 | <+0.01 |
| PPO | Tanh | [64, 64] | 0.0001 | <+0.01 |
| A2C | Tanh | [64, 64] | 0.001 | <+0.01 |
| DQN | Tanh | [128, 128] | 0.0001 | <+0.01 |
| A2C | Tanh | [128, 128] | 0.0001 | +0.13 |
| DQN | Tanh | [64, 64] | 0.0005 | +0.77 |
| DQN | ReLU | [64, 64] | 0.0001 | +0.94 |
| DQN | ReLU | [128, 128] | 0.0001 | +0.40 |
| DQN | Tanh | [64, 64] | 0.001 | +0.36 |
| DQN | Tanh | [128, 128] | 0.001 | 6.22 * |

| R | T | Heuristics-based Agent: NN | Heuristics-based Agent: NY | Heuristics-based Agent: YY | Heuristics-based Agent: Sav. | Heuristics-based Agent: % | Best Agent: NN | Best Agent: NY | Best Agent: YY | Best Agent: Sav. | Best Agent: % |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 8.39 | 8.19 * | 8.19 * | 0.21 | 2.47 | 7.37 | 6.78 * | 6.78 * | 0.59 | 8.06 |
| 1 | 5 | 17.56 | 17.03 * | 17.03 * | 0.54 | 3.05 | 15.51 | 14.31 * | 14.31 * | 1.20 | 7.74 |
| 1 | 7 | 27.23 | 26.25 * | 26.25 * | 0.98 | 3.61 | 23.30 | 21.33 * | 21.33 * | 1.98 | 8.48 |
| 1 | 10 | 37.29 | 36.58 * | 36.58 * | 0.72 | 1.92 | 32.75 | 30.86 * | 30.86 * | 1.89 | 5.77 |
| 3 | 3 | 8.35 | 8.18 | 7.90 | 0.45 | 5.41 ± 0.46 | 7.36 | 7.22 | 5.82 | 1.55 | 20.99 ± 0.31 |
| 3 | 5 | 17.47 | 16.85 | 16.26 | 1.21 | 6.95 ± 0.22 | 15.55 | 15.00 | 13.56 | 1.99 | 12.80 ± 0.41 |
| 3 | 7 | 27.23 | 26.30 | 25.54 | 1.69 | 6.20 ± 0.19 | 23.44 | 22.64 | 20.50 | 2.94 | 12.54 ± 0.28 |
| 3 | 10 | 37.27 | 36.45 | 35.77 | 1.50 | 4.02 ± 0.13 | 32.82 | 32.11 | 30.31 | 2.51 | 7.65 ± 0.13 |
| 5 | 3 | 8.37 | 8.20 | 7.62 | 0.74 | 8.88 ± 0.37 | 7.40 | 7.25 | 4.93 | 2.47 | 33.43 ± 0.51 |
| 5 | 5 | 17.51 | 16.86 | 15.62 | 1.88 | 10.75 ± 0.26 | 15.58 | 15.01 | 12.72 | 2.86 | 18.36 ± 0.35 |
| 5 | 7 | 27.23 | 26.31 | 24.71 | 2.53 | 9.28 ± 0.39 | 23.44 | 22.59 | 19.35 | 4.09 | 17.44 ± 0.37 |
| 5 | 10 | 37.31 | 36.53 | 35.25 | 2.06 | 5.53 ± 0.19 | 32.84 | 32.14 | 29.55 | 3.29 | 10.02 ± 0.19 |
| 7 | 3 | 8.38 | 8.19 | 7.39 | 0.98 | 11.76 ± 0.49 | 7.42 | 7.27 | 4.04 | 3.38 | 45.58 ± 0.42 |
| 7 | 5 | 17.50 | 16.83 | 15.06 | 2.44 | 13.94 ± 0.25 | 15.60 | 15.01 | 11.95 | 3.65 | 23.40 ± 0.39 |
| 7 | 7 | 27.27 | 26.32 | 24.06 | 3.21 | 11.76 ± 0.35 | 23.51 | 22.64 | 18.53 | 4.97 | 21.15 ± 0.31 |
| 7 | 10 | 37.34 | 36.60 | 34.77 | 2.56 | 6.87 ± 0.28 | 32.90 | 32.18 | 28.91 | 3.99 | 12.14 ± 0.29 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gonçalves, R.; Gomes, D.; Antunes, M. Intelligent Management of Renewable Energy Communities: An MLaaS Framework with RL-Based Decision Making. Energies 2025, 18, 3477. https://doi.org/10.3390/en18133477
