1. Introduction

Reactive power optimization in modern power grids continues to evolve as technologies shift towards intelligent, real-time, and coordinated operation to cope with the complexity and variability of power system operation. This shift enhances the operational efficiency, economic viability, and reliability of the grid. Reasonable strategies for reactive power control and allocation can reduce energy consumption and operating costs, thereby improving the stability and economic efficiency of the power system. Conversely, insufficient or improperly distributed reactive power in the distribution system may increase line losses and voltage fluctuations. Modern reactive power optimization (RPO) also faces several challenges, including demand growth, the limitations of traditional reactive power compensation devices, and intricate grid topology [1].

The RPO of distribution networks is a subproblem of the optimal power flow problem. RPO adjusts the reactive power in the power system and thereby improves its efficiency [2]. The primary objective of RPO is to reach optimal operating conditions while satisfying the given constraints by coordinating control variables such as generator voltages and transformer voltage ratios, with the aim of reducing network losses and improving voltage levels [3,4].

Early RPO models generally used a single objective function, such as minimizing reactive power losses or optimizing the reactive power distribution. In recent years, with increasing demands for grid stability and reliability, researchers have begun to explore multi-objective optimization problems. In addition to minimizing reactive power losses, RPO models now consider other objectives, such as improving voltage stability, power factor, and reactive power balance. The optimization problem has therefore evolved from a single-objective RPO problem to a multi-objective reactive power optimization (MORPO) problem [5,6]. The MORPO problem is essentially a nonlinear optimization problem with multiple constraints, variables, and objectives [7,8].

To address this issue, numerous scholars have proposed solutions, which fall mainly into two categories: classical optimization techniques and artificial intelligence optimization techniques. The classical optimization techniques include gradient methods [9], interior point methods [10], linear programming [11], and nonlinear programming methods [12]. To solve multi-objective problems, traditional approaches generally transform the MORPO problem into a single-objective optimization problem by using weighted methods [13], ε-constraint methods [14], and fuzzy decision-making methods [15]. However, traditional methods have inherent drawbacks, such as computational complexity, limited flexibility, and the inability to solve constrained problems involving nonlinear and discontinuous functions. Therefore, artificial intelligence optimization algorithms have gradually been employed to tackle multi-objective optimization problems. Recently, the MORPO problem has been successfully addressed with metaheuristic optimization methods, such as particle swarm optimization (PSO) [16,17], the sine cosine algorithm (SCA) [18], the sparrow search algorithm (SSA) [19], the imperialist competitive algorithm (ICA) [20], the cuckoo search algorithm (CSA) [21,22], the genetic algorithm (GA) [23], the beetle antenna search (BAS) algorithm [24], the NSGA-II algorithm [25], the grey wolf optimization (GWO) algorithm [26,27], and the bacterial foraging optimization (BFO) algorithm [28].

The PSO algorithm, known for its low memory requirements and fast convergence, has been widely adopted in the field of MORPO [29]. In reference [30], an improved RPO algorithm was proposed with the minimization of power loss as the primary objective function; the improvement was achieved by enhancing the inertia weight strategy and the acceleration coefficients. In reference [31], the L-index was incorporated to enhance the static voltage stability of electrical power systems. To handle multi-objective problems such as minimizing both the power loss and the L-index, a crossover operator was introduced to increase the diversity of PSO. Additionally, a chaotic sequence based on logistic mapping replaced the random sequence in PSO to enhance its global search and exploitation abilities. In reference [32], the potential effects of integrating distributed generation (DG) into the power distribution network were discussed, and an improved second-order oscillatory PSO algorithm was presented to enhance the efficiency and convergence properties of multi-objective optimization. It should be noted that the PSO algorithm requires multiple iterations to converge and can easily become trapped in a local optimum [33].

The SSA is a novel nature-inspired algorithm that draws inspiration from the behavior of sparrows [34]. It has attracted wide attention among scholars and remains under active research. In reference [35], a multi-objective optimization model was established with investment cost, environmental sustainability, and power supply quality as the objective functions. The Levy flight strategy was then incorporated into the SSA to enhance the ability of the multi-objective sparrow search algorithm to escape local optima. In reference [36], a chaotic sparrow search algorithm (CLSSA) based on the logarithmic spiral strategy and the adaptive step size strategy was proposed. The experimental findings demonstrated the practicality of the proposed approach in solving engineering problems. In reference [37], an improved point selection strategy was integrated with the SSA, which resolved the convergence degradation observed in high-dimensional multi-objective optimization problems and improved the performance of the algorithm. Based on the above research, it can be observed that despite its excellent performance in optimization problems, the SSA has some inherent drawbacks, such as slow convergence and the possibility of becoming trapped in local optima.

According to the aforementioned research, this paper proposes a method for MORPO in distribution networks using the improved sparrow search algorithm–particle swarm optimization (ISSA-PSO) algorithm. The specific findings and contributions of the paper can be summarized as follows:

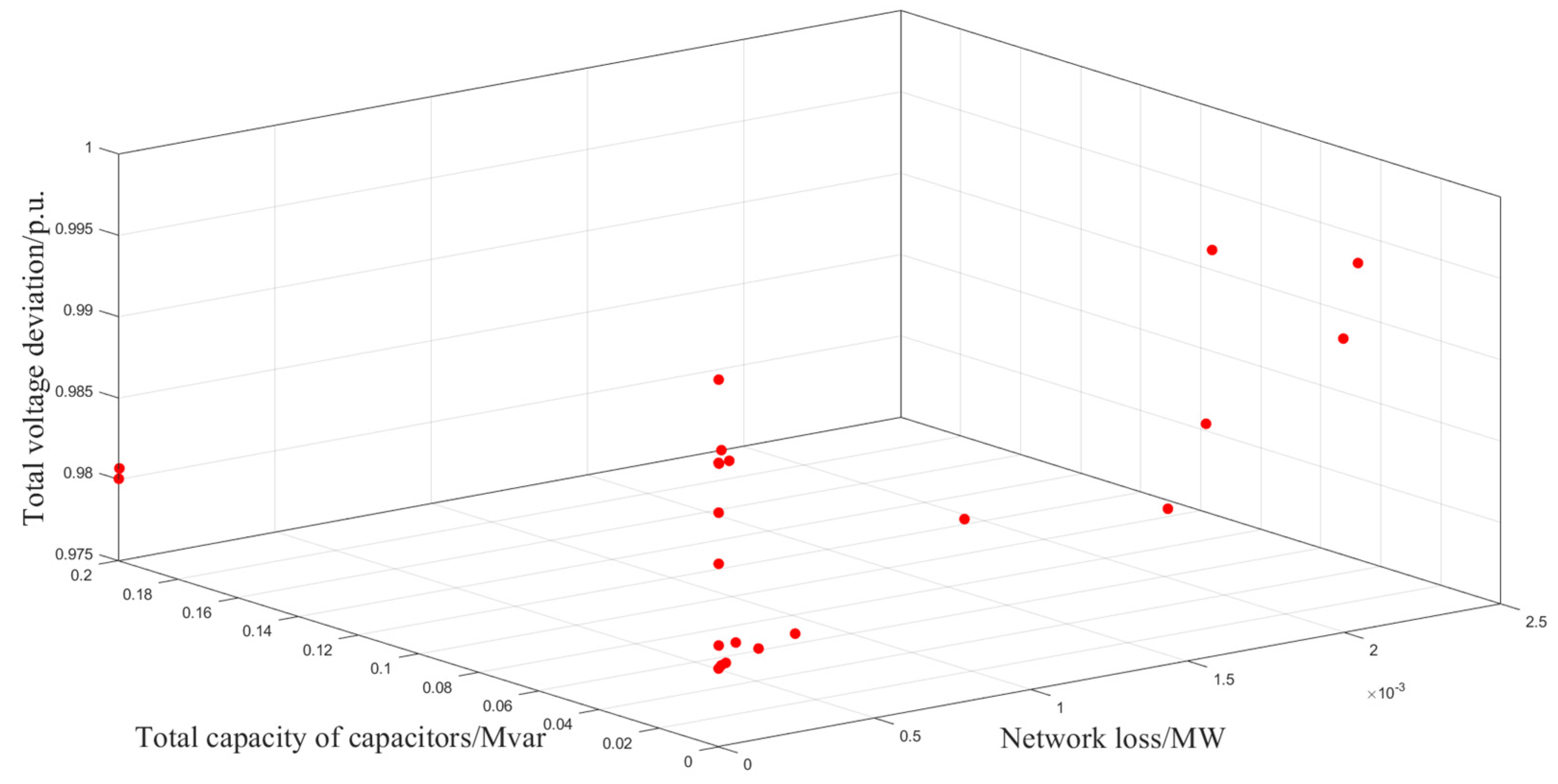

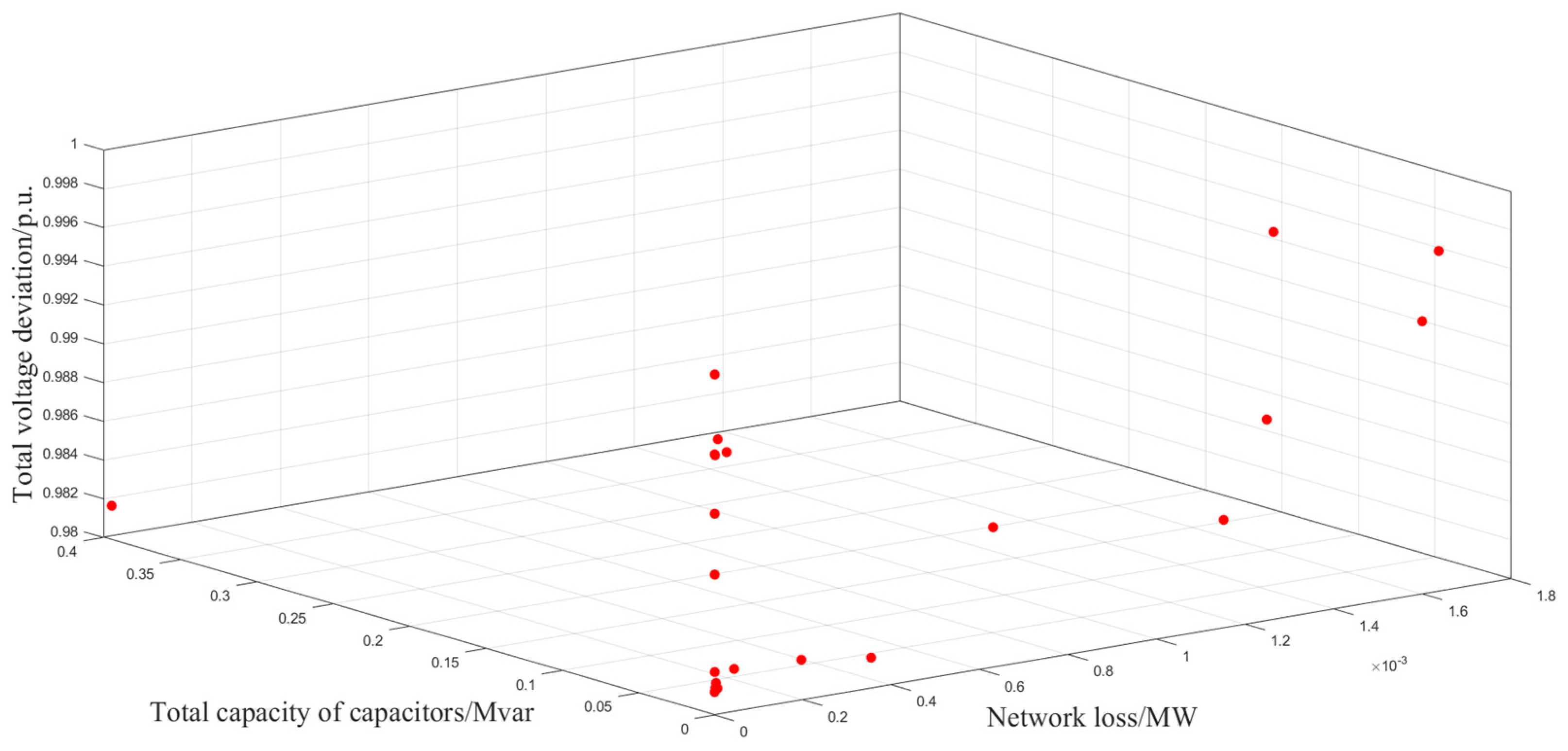

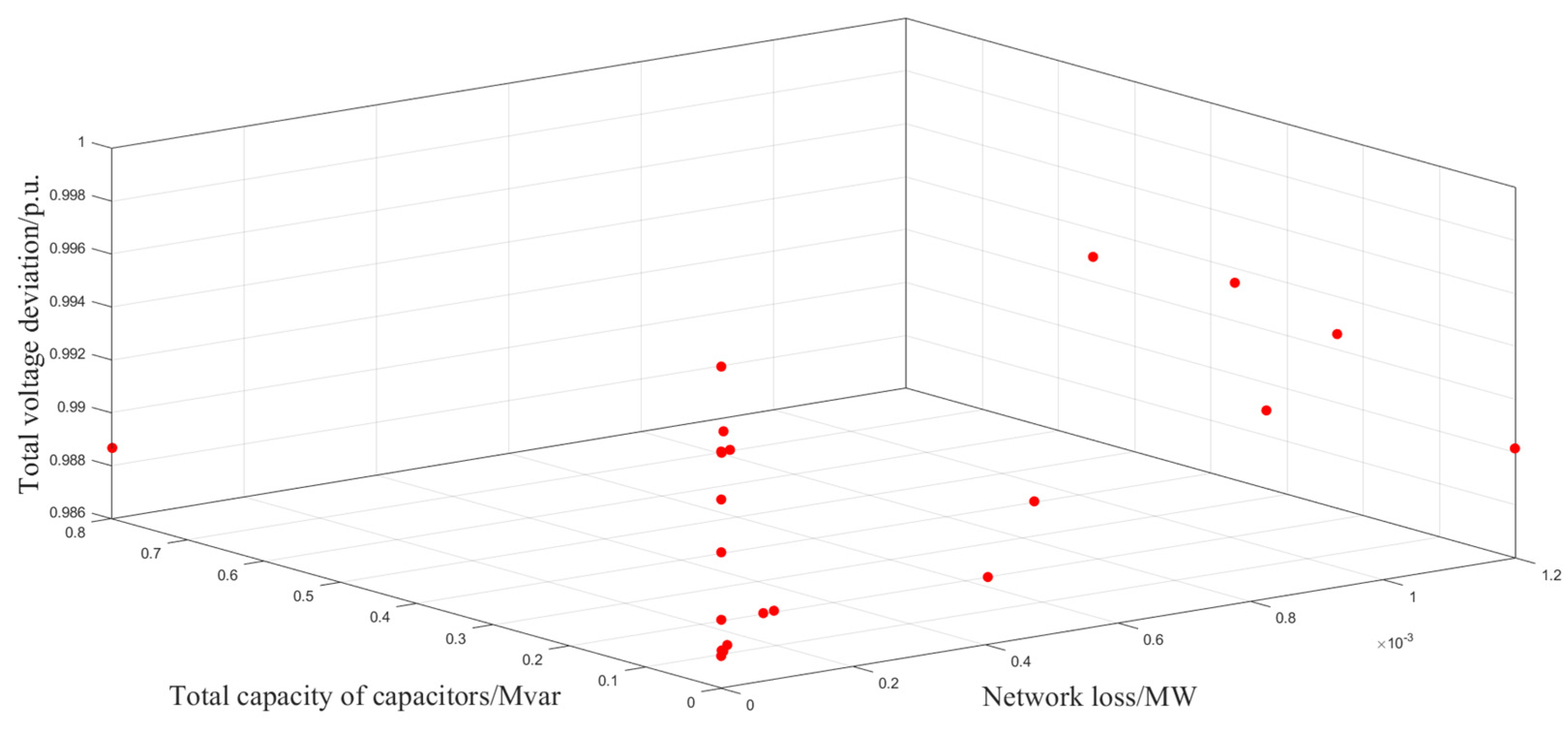

(1) This paper establishes a MORPO model, where the objective function consists of minimizing active power loss, minimizing total compensation of reactive power compensation devices, and minimizing the sum of node voltage deviations.

(2) Inspired by the aforementioned research, this paper presents the ISSA-PSO algorithm, which addresses the low convergence accuracy of PSO while retaining the strong global search capability and efficiency of the SSA. The algorithm incorporates two enhancements. The first uses a tent chaotic mapping mechanism to initialize the population and increase its diversity. The second introduces a three-stage differential evolution mechanism to strengthen the algorithm’s global exploration capability.

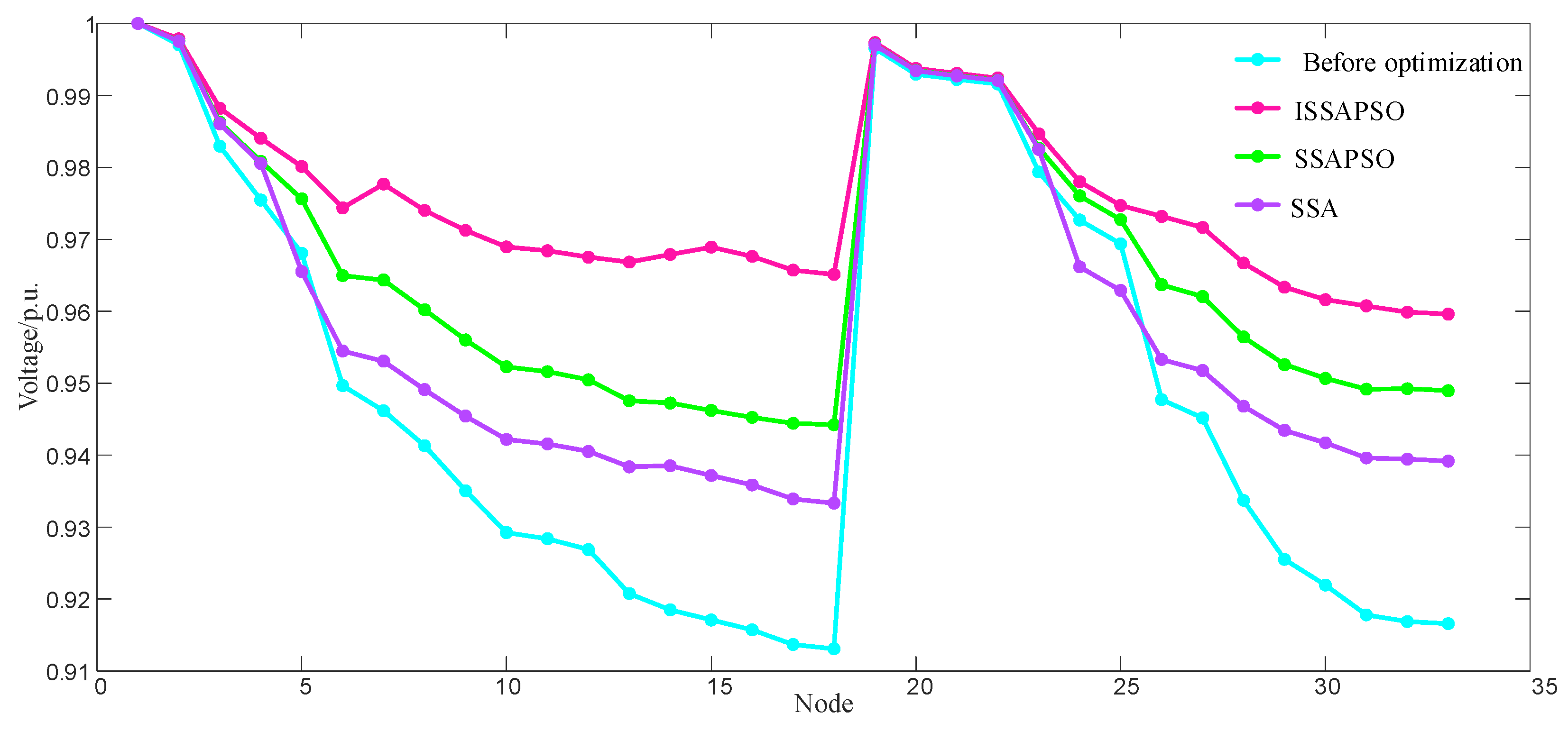

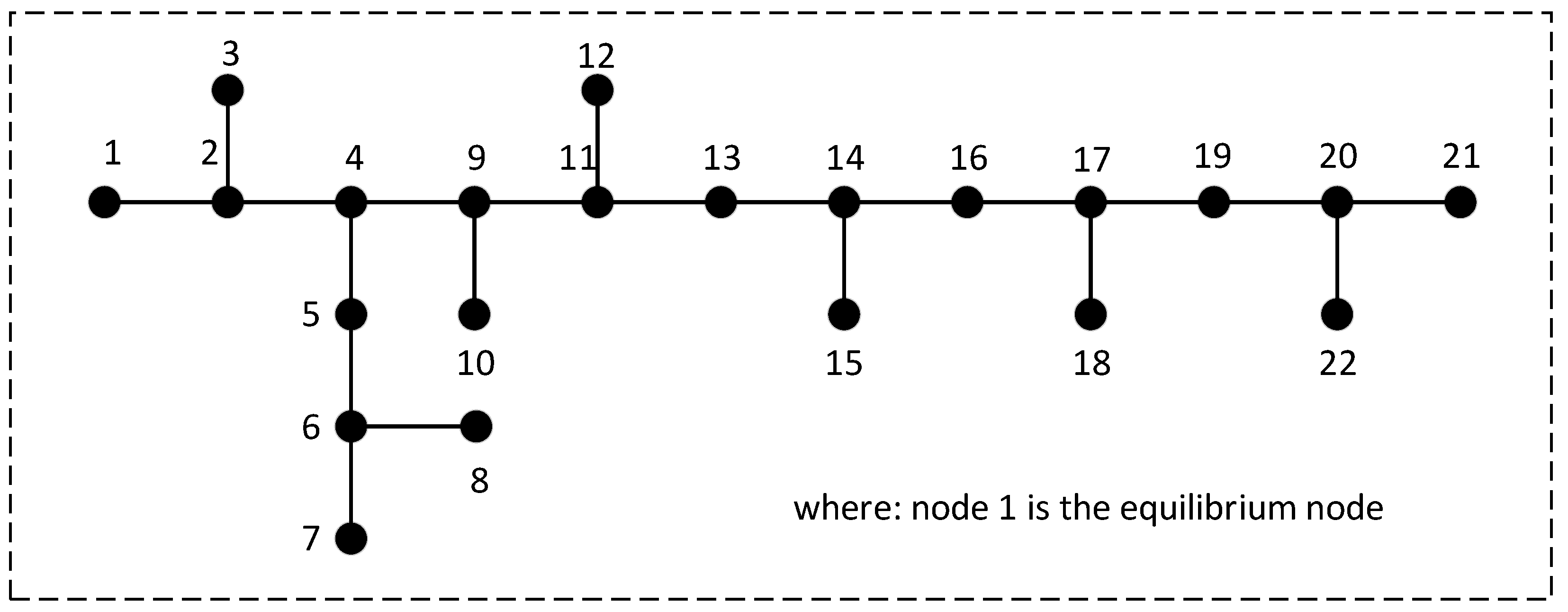

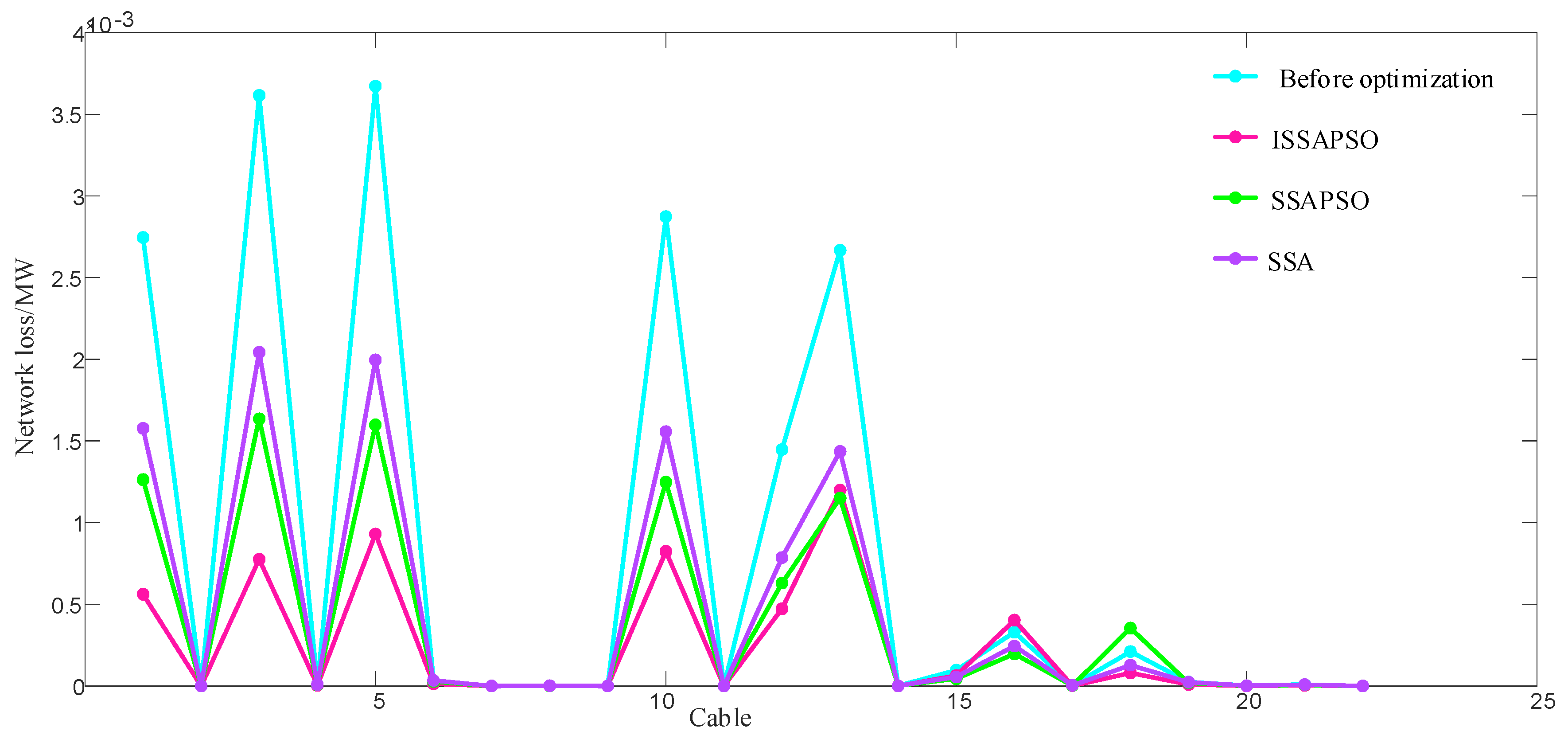

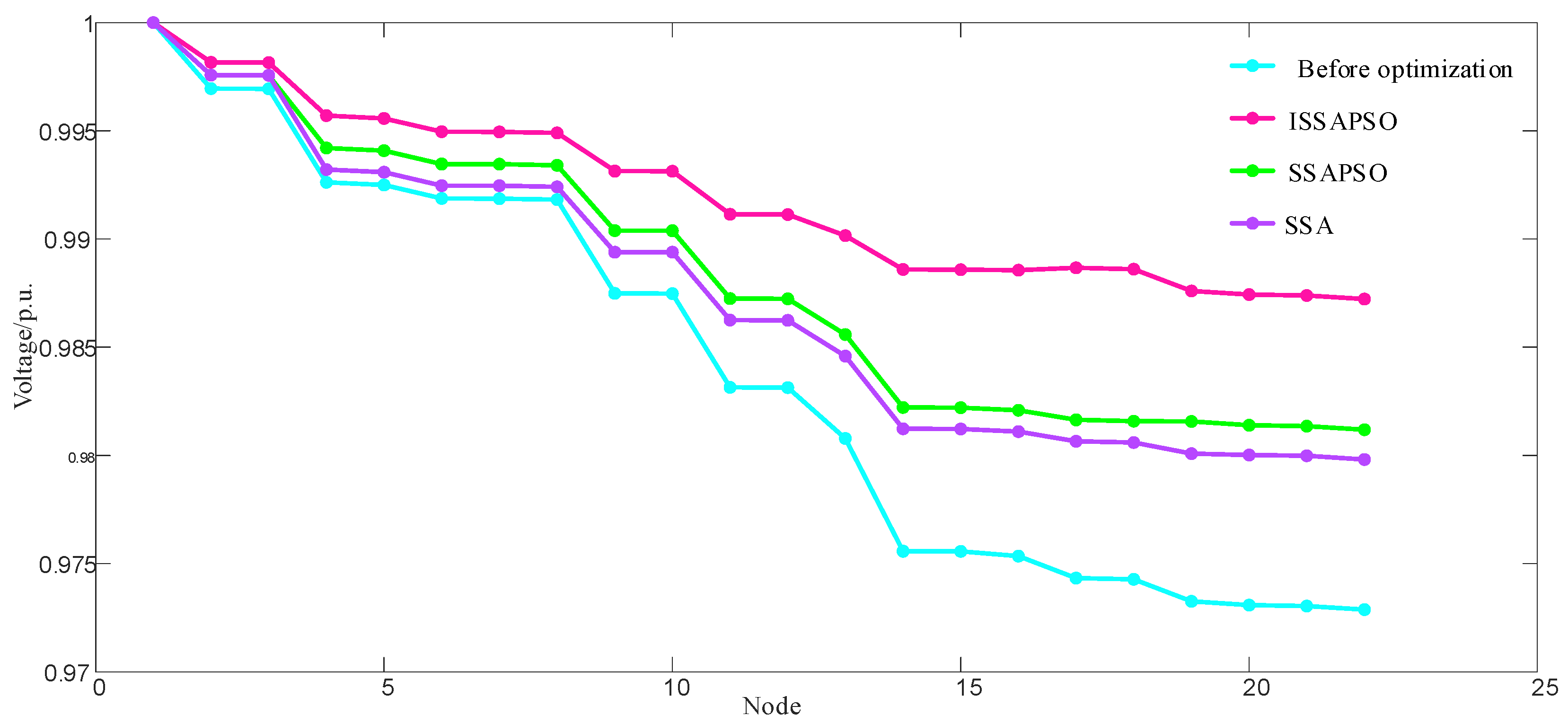

(3) The effectiveness of the ISSA-PSO algorithm is verified by simulations on the IEEE 33-node system and a practical 22-node system. Compared with the SSA, the PSO algorithm, and the SSA-PSO algorithm, the proposed strategy performs better in terms of tracking speed, accuracy, and dependability.

3. The Improved Particle Swarm Optimization–Sparrow Search Algorithm

3.1. Sparrow Search Algorithm

The SSA is an intelligent algorithm inspired by the foraging behavior of sparrows. It offers strong capabilities for local exploration and global optimization. The foraging process involves two primary roles: the discoverer and the follower. The discoverer, which has a higher energy level, provides the direction to the food source for the population. The remaining individuals serve as followers that trail the discoverer in search of food and may compete with it for resources. Moreover, a certain proportion of sparrows remain vigilant, which allows them to evade potential predators.

The discoverer forages for food and guides the movement of the entire population. Therefore, the discoverer may search for food over a wider range than the followers. The formula for updating the position of the discoverer is as follows:

$$X_{i,j}^{t+1} = \begin{cases} X_{i,j}^{t} \cdot \exp\left(\dfrac{-i}{\alpha \cdot iter_{\max}}\right), & R_2 < ST \\ X_{i,j}^{t} + Q \cdot L, & R_2 \geq ST \end{cases}$$

where $X_{i,j}^{t}$ is the value of the i-th individual in the j-th dimension after t iterations; $iter_{\max}$ is the maximum number of iterations; $R_2$ is the early warning value; $\alpha$ is a random number, $\alpha \in (0,1]$; $ST$ is the safety threshold, $ST \in (0.5,1]$; $Q$ is a random number obeying the normal distribution; and $L$ is a matrix whose elements are all 1.

If $R_2 < ST$, no predators are present, and the discoverer enters an extensive exploration mode. Otherwise, if $R_2 \geq ST$, predators have been detected nearby, and all the sparrows must quickly move to other safe areas.
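The discoverer rule can be illustrated with a minimal Python sketch. It assumes the standard SSA form of the update; the function name `update_discoverer`, the use of one scalar `alpha` per update, and the 1-based rank `i` are illustrative assumptions rather than details taken from this paper.

```python
import math
import random


def update_discoverer(x, i, iter_max, st, rng=random):
    """Return the new position of discoverer i (1-based rank) from position x."""
    r2 = rng.random()  # early warning value R2
    if r2 < st:
        # No predator detected: contract every coordinate towards the origin.
        alpha = 1.0 - rng.random()  # random number in (0, 1], never zero
        factor = math.exp(-i / (alpha * iter_max))
        return [xj * factor for xj in x]
    # Predator detected: shift all coordinates by one normal random number Q
    # (Q multiplied by the all-ones matrix L).
    q = rng.gauss(0.0, 1.0)
    return [xj + q for xj in x]
```

Setting `st` above 1 forces the exploration branch and setting it below 0 forces the escape branch, which is convenient for checking each case separately.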

Once the followers perceive that the discoverer has found an area with better food, they leave their current location and move to the discoverer’s position to compete for the food. If they succeed in occupying the position, they obtain the discoverer’s food; otherwise, they continue to follow the established rules. The formula for updating the position of the follower is as follows:

$$X_{i,j}^{t+1} = \begin{cases} Q \cdot \exp\left(\dfrac{X_{worst}^{t} - X_{i,j}^{t}}{i^{2}}\right), & i > n/2 \\ X_{P}^{t+1} + \left| X_{i,j}^{t} - X_{P}^{t+1} \right| \cdot A^{+} \cdot L, & \text{otherwise} \end{cases}$$

where $X_{P}$ is the optimal position occupied by the discoverer; $X_{worst}$ is the current global worst position; $n$ is the population size; and $A$ is a $1 \times d$ matrix whose elements are randomly assigned 1 or −1, with $A^{+} = A^{T}(AA^{T})^{-1}$.
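As a hedged illustration of the follower rule, the sketch below assumes the standard SSA form, in which the worse-ranked half of the followers (i > n/2) flies elsewhere and the rest move next to the discoverer's best position. The name `update_follower` is hypothetical. For a 1 × d matrix A with entries ±1, the pseudo-inverse reduces to A transposed divided by d, so the second branch adds the same scalar step to every coordinate.

```python
import math
import random


def update_follower(x, i, n, x_p, x_worst, rng=random):
    """Return the new position of follower i (1-based rank) in a population of n."""
    d = len(x)
    if i > n / 2:
        # Poorly ranked follower: fly to another area to search for food.
        q = rng.gauss(0.0, 1.0)
        return [q * math.exp((xw - xj) / i ** 2) for xj, xw in zip(x, x_worst)]
    # Otherwise forage around the discoverer's best position x_p.
    a = [rng.choice((-1.0, 1.0)) for _ in range(d)]
    step = sum(abs(xj - pj) * aj for xj, pj, aj in zip(x, x_p, a)) / d
    return [pj + step for pj in x_p]
```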

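For the vigilant sparrows that are aware of danger, the mathematical model can be expressed as follows:

$$X_{i,j}^{t+1} = \begin{cases} X_{best}^{t} + \beta \cdot \left| X_{i,j}^{t} - X_{best}^{t} \right|, & f_i > f_g \\ X_{i,j}^{t} + K \cdot \left( \dfrac{\left| X_{i,j}^{t} - X_{worst}^{t} \right|}{(f_i - f_w) + \varepsilon} \right), & f_i = f_g \end{cases}$$

where $X_{best}$ is the current global optimal position; $\beta$ is the step size control parameter, a random number conforming to a Gaussian distribution with a mean of 1 and a variance of 1; $K$ is a random number in $[-1,1]$; $f_i$ is the present fitness value of the sparrow; $f_g$ is the current global best fitness value; $f_w$ is the current global worst fitness value; and $\varepsilon$ is a small constant that avoids division by zero.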
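The vigilance rule can be sketched in the same way. This is a minimal sketch under the assumption that the standard SSA form applies; `update_vigilant` and its arguments are hypothetical names. The default `beta_mean=1.0` follows the value stated in the text, and it is exposed as a parameter because some SSA descriptions use a mean of 0.

```python
import random


def update_vigilant(x, f_i, x_best, f_g, x_worst, f_w,
                    beta_mean=1.0, eps=1e-50, rng=random):
    """Return the new position of a sparrow that is aware of danger."""
    if f_i > f_g:
        # Sparrow at the edge of the group: move towards the global best position.
        beta = rng.gauss(beta_mean, 1.0)  # step size control parameter
        return [xb + beta * abs(xj - xb) for xj, xb in zip(x, x_best)]
    # Sparrow already at the best fitness: take a random step scaled by its
    # distance to the worst position; eps avoids division by zero.
    k = rng.uniform(-1.0, 1.0)
    return [xj + k * abs(xj - xw) / ((f_i - f_w) + eps)
            for xj, xw in zip(x, x_worst)]
```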

3.2. Particle Swarm Optimization

PSO is a swarm intelligence algorithm inspired by the foraging behavior of birds. It solves optimization problems by emulating birds searching a multidimensional space. In the PSO algorithm, the position of each particle represents a candidate solution in the problem space. Formulas (14) and (15) express the velocity and position updates of the PSO algorithm, respectively.

$$v_{i}^{t+1} = \omega v_{i}^{t} + c_1 r_1 \left( p_{best} - x_{i}^{t} \right) + c_2 r_2 \left( g_{best} - x_{i}^{t} \right) \tag{14}$$

$$x_{i}^{t+1} = x_{i}^{t} + v_{i}^{t+1} \tag{15}$$

where $v_{i}^{t}$ and $v_{i}^{t+1}$ are the velocities of the i-th particle at the t and t + 1 iterations; $x_{i}^{t}$ and $x_{i}^{t+1}$ are the corresponding positions; $c_1$ and $c_2$ are acceleration factors; $p_{best}$ and $g_{best}$ are the local and global optimal positions of the particles; $r_1$ and $r_2$ are random numbers ranging from 0 to 1; and $\omega$ is the inertia weight.
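The velocity and position updates of Formulas (14) and (15) can be sketched for a single particle as follows. This is a minimal illustration of the textbook PSO step; the function name `pso_step` and the default values of `w`, `c1`, and `c2` are illustrative assumptions, not settings reported in this paper.

```python
import random


def pso_step(x, v, p_best, g_best, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Apply one PSO velocity and position update; return (new_x, new_v)."""
    new_x, new_v = [], []
    for xj, vj, pj, gj in zip(x, v, p_best, g_best):
        r1, r2 = rng.random(), rng.random()  # fresh random numbers in [0, 1)
        # Velocity: inertia term + cognitive pull (p_best) + social pull (g_best).
        vj_new = w * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        new_v.append(vj_new)
        # Position: move the particle by its new velocity.
        new_x.append(xj + vj_new)
    return new_x, new_v
```

When a particle already sits on both its personal best and the global best, only the inertia term remains, so its velocity simply decays by the factor `w`.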

The advantages of the PSO algorithm include ease of implementation, no need for gradient information, and applicability to both continuous and discrete optimization problems. However, the PSO algorithm also has drawbacks, such as its susceptibility to becoming trapped in local optima and its sensitivity to initialization.

3.3. Sparrow Search Algorithm–Particle Swarm Optimization

To address the limited local search capacity and insufficient search accuracy of PSO, the SSA is introduced. To overcome search stagnation and the difficulty of escaping a limited search space, a subgroup of the PSO population, referred to as sparrows, is incorporated; these sparrows are further classified into discoverers, trackers, and sentinels.

The formula is then updated as follows:

where $\omega$ is the weight coefficient, with an initial value of 0.5; $c_1$ and $c_2$ are the learning factors, with initial values of 0.1 and 0.5, respectively; $p_{best}$ is the individual optimal position; $g_{best}$ is the global optimal position; and $r_1$ and $r_2$ are random numbers.

The formula for updating the position of each discoverer is defined as follows:

where $X_{i,j}^{t}$ is the coordinate of sparrow i in dimension j in the t-th generation, where j = 2; $\alpha$ is a random number; $A$ is an alert value, $A \in [0,1]$; $ST$ is the safety threshold; and $Q$ is a random number obeying the normal distribution.

When $A < ST$, there is no danger nearby, and the discoverer may search within a broader spatial range. When $A \geq ST$, the observer perceives danger. Some sparrows follow the discoverer’s actions as followers; upon the discovery of food, these followers approach and compete with the discoverer for it. A small portion of the followers, lacking sufficient food, fly to other areas to forage and replenish their energy. The formula for updating the position of the follower is defined as follows:

where $N$ is the population size, $N = 100$; $X_{P}$ is the best currently discovered food source; and $X_{worst}$ is the worst current global food source.

The formula for updating the position of the observer is as follows:

where $X_{best}$ is the optimal food source discovered by the population of sparrows; $\beta$ is the step size adjustment factor; $\varepsilon$ is a minuscule constant; $K$ is a random number; $f_i$ is the present fitness value; and $f_g$ is the current global best fitness value.

The weight factor of the algorithm follows a sinusoidal variation and is represented by the following equation:

where $\omega_{\max} = 1$ and $\omega_{\min} = 0.5$; $k$ is the iteration number; $k_{\max}$ is the maximum number of iterations; and $\omega(k)$ is the inertia weight factor for the k-th iteration.

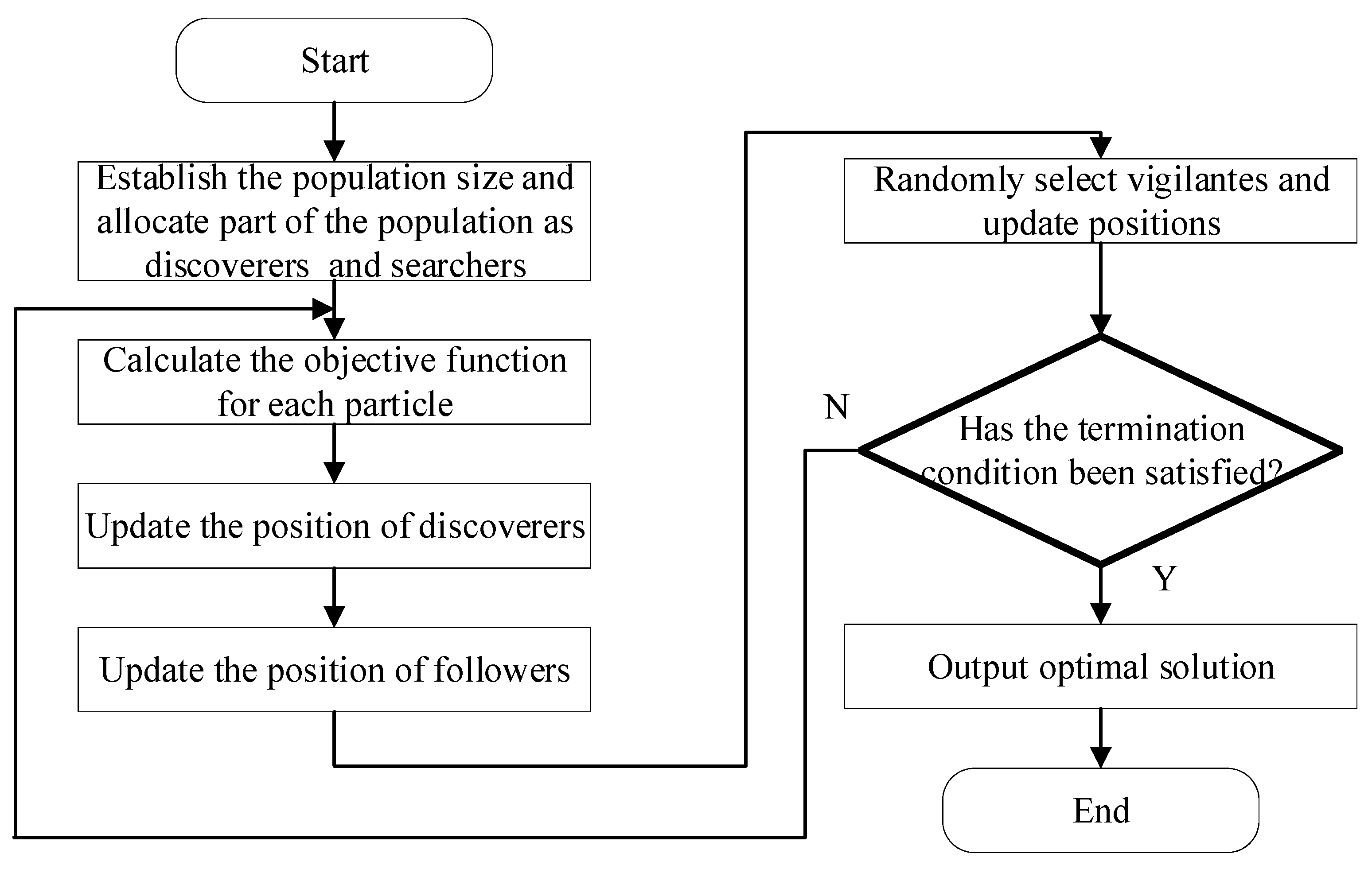

The flowchart of the SSA-PSO algorithm is shown in Figure 1.

3.4. The Improved SSA-PSO Algorithm

The SSA-PSO algorithm combines the advantages of the SSA and the PSO algorithm, significantly improving optimization accuracy and efficiency. However, the SSA-PSO algorithm still has room for improvement. Therefore, this paper further improves SSA-PSO in two ways: the first improvement introduces a chaotic mapping mechanism to enhance the diversity of the population during initialization, and the second introduces a three-stage differential evolution mechanism to improve the global exploration capability of the algorithm.

A tent chaotic map is a piecewise linear one-dimensional map. Compared with the logistic map, it exhibits a uniform power spectral density, a uniform probability density, and ideal correlation characteristics, along with a faster iteration rate. The mathematical expression is as follows:

$$x_{n+1} = \begin{cases} a x_{n}, & 0 \leq x_{n} < 0.5 \\ a \left( 1 - x_{n} \right), & 0.5 \leq x_{n} \leq 1 \end{cases}$$

When a ≤ 1, the tent chaotic map is in a stable state; when 1 < a < 2, the tent chaotic map is in a chaotic state; when a = 2, the tent chaotic map becomes the central tent map. The mathematical expression is as follows:

$$x_{n+1} = \begin{cases} 2 x_{n}, & 0 \leq x_{n} < 0.5 \\ 2 \left( 1 - x_{n} \right), & 0.5 \leq x_{n} \leq 1 \end{cases}$$
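A minimal Python sketch of population initialization with the basic tent map (not the enhanced variant) is given here. The function names, the seed value `x0 = 0.37`, and the default `a = 1.99` are illustrative assumptions. The default stays slightly below 2 because, in double-precision arithmetic, the a = 2 map discards one mantissa bit per step and collapses to exactly 0 after a few dozen iterations.

```python
def tent_sequence(x0, length, a=1.99):
    """Iterate the tent map from x0 and return the generated values."""
    values = []
    x = x0
    for _ in range(length):
        # Piecewise linear map: rising branch below 0.5, falling branch above.
        x = a * x if x < 0.5 else a * (1.0 - x)
        values.append(x)
    return values


def tent_init_population(n, dim, lower, upper, x0=0.37, a=1.99):
    """Map one chaotic sequence onto n individuals of dimension dim in [lower, upper]."""
    seq = tent_sequence(x0, n * dim, a)
    return [[lower + (upper - lower) * seq[i * dim + j] for j in range(dim)]
            for i in range(n)]
```

For example, `tent_init_population(30, 3, -5.0, 5.0)` returns 30 three-dimensional individuals inside the search bounds, whereas `tent_sequence(0.37, 100, a=2.0)` ends at 0.0, which illustrates the numerical collapse mentioned above.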

The tent chaotic map exhibits good ergodicity, and its computation is suitable for long data sequences. However, the tent map has the drawback of small unstable periods. Therefore, the following enhancements for the tent mapping are proposed.

Three-stage differential evolution (TSDE) is an evolutionary algorithm for solving optimization problems and an enhanced version of the differential evolution (DE) algorithm. The essence of TSDE lies in iteratively optimizing individuals to seek the optimal solution. In each generation, the fitness of the parent population is compared with that of the offspring population, and the superior individuals are preserved, while less fit individuals are replaced by newly generated ones. Individuals with lower fitness can be improved by adopting better mutation and crossover strategies. Compared with the traditional DE algorithm, TSDE uses a three-stage design, which enhances the stability and convergence of the algorithm. Additionally, TSDE can be customized with mutation and crossover operations tailored to the characteristics of specific problems. This adaptability and flexibility enable TSDE to tackle diverse optimization problems effectively. The three stages of TSDE are as follows:

Initialization phase: During this stage, the population needs to be initialized by generating a set of candidate solutions. Common methods for initialization include random generation, uniform distribution, or specific initialization based on the characteristics of the problem. The expression in the initialization phase is as follows:

where $C$ is the consumption factor with Levy flight characteristics.

where $N(0,1)$ is the probability density function of a normal distribution with mean 0 and standard deviation 1; and $v_1$ and $v_2$ follow the standard normal distribution.
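The text describes C only as a factor with Levy flight characteristics built from standard normal variables. As a hedged sketch, the code below assumes the form used in artificial ecosystem-based optimization, C = 0.5 · v1 / |v2| with v1 and v2 drawn from N(0, 1); this form, the names `v1` and `v2`, and the function name `consumption_factor` are assumptions, not details confirmed by this paper.

```python
import random


def consumption_factor(rng=random):
    """Draw one heavy-tailed factor C = 0.5 * v1 / |v2| (assumed form)."""
    v1 = rng.gauss(0.0, 1.0)  # standard normal numerator
    v2 = rng.gauss(0.0, 1.0)  # standard normal denominator
    # The ratio of two normal variables is heavy-tailed, so occasional large
    # values of C produce long, Levy-like jumps.
    return 0.5 * v1 / abs(v2)
```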

Mutation and crossover phase: During this phase, new individuals are generated by selecting parent individuals and performing mutation and crossover operations. Specifically, the mutation operation introduces small perturbations to the parent individuals to obtain new individuals, while the crossover operation combines the new individuals with the original individuals to produce offspring individuals. The expressions for the mutation and crossover phase are as follows:

where $X_{best}^{t}$ is the optimal individual from the t-th iteration.

Selection phase: During this phase, individuals with higher fitness from both the parent and offspring populations are chosen as parents for the next generation based on a predetermined strategy. The expression for the selection phase is as follows:

where $r$ is a random number.
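The mutation, crossover, and selection phases described above can be illustrated with one generation of classic differential evolution. This sketch is the textbook DE/best/1/bin scheme for a minimization problem and is not the TSDE variant of this paper; the function name `de_generation`, the scale factor `f`, and the crossover rate `cr` are illustrative assumptions.

```python
import random


def de_generation(pop, fitness, f=0.5, cr=0.9, rng=random):
    """Run one generation of classic DE/best/1/bin with greedy selection."""
    scores = [fitness(ind) for ind in pop]
    best = pop[scores.index(min(scores))]
    dim = len(pop[0])
    new_pop = []
    for idx, target in enumerate(pop):
        # Mutation: perturb the best individual with a scaled difference
        # of two other randomly chosen individuals.
        others = [p for k, p in enumerate(pop) if k != idx]
        r1, r2 = rng.sample(others, 2)
        mutant = [b + f * (p - q) for b, p, q in zip(best, r1, r2)]
        # Binomial crossover: at least one coordinate comes from the mutant.
        j_rand = rng.randrange(dim)
        trial = [m if (rng.random() < cr or j == j_rand) else t
                 for j, (m, t) in enumerate(zip(mutant, target))]
        # Selection: keep the trial only if it is no worse than its parent.
        new_pop.append(trial if fitness(trial) <= scores[idx] else target)
    return new_pop
```

Because selection is greedy, the best fitness in the population never gets worse from one generation to the next, which is the property that the selection phase relies on.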

The flowchart of the ISSA-PSO algorithm is illustrated in Figure 2.

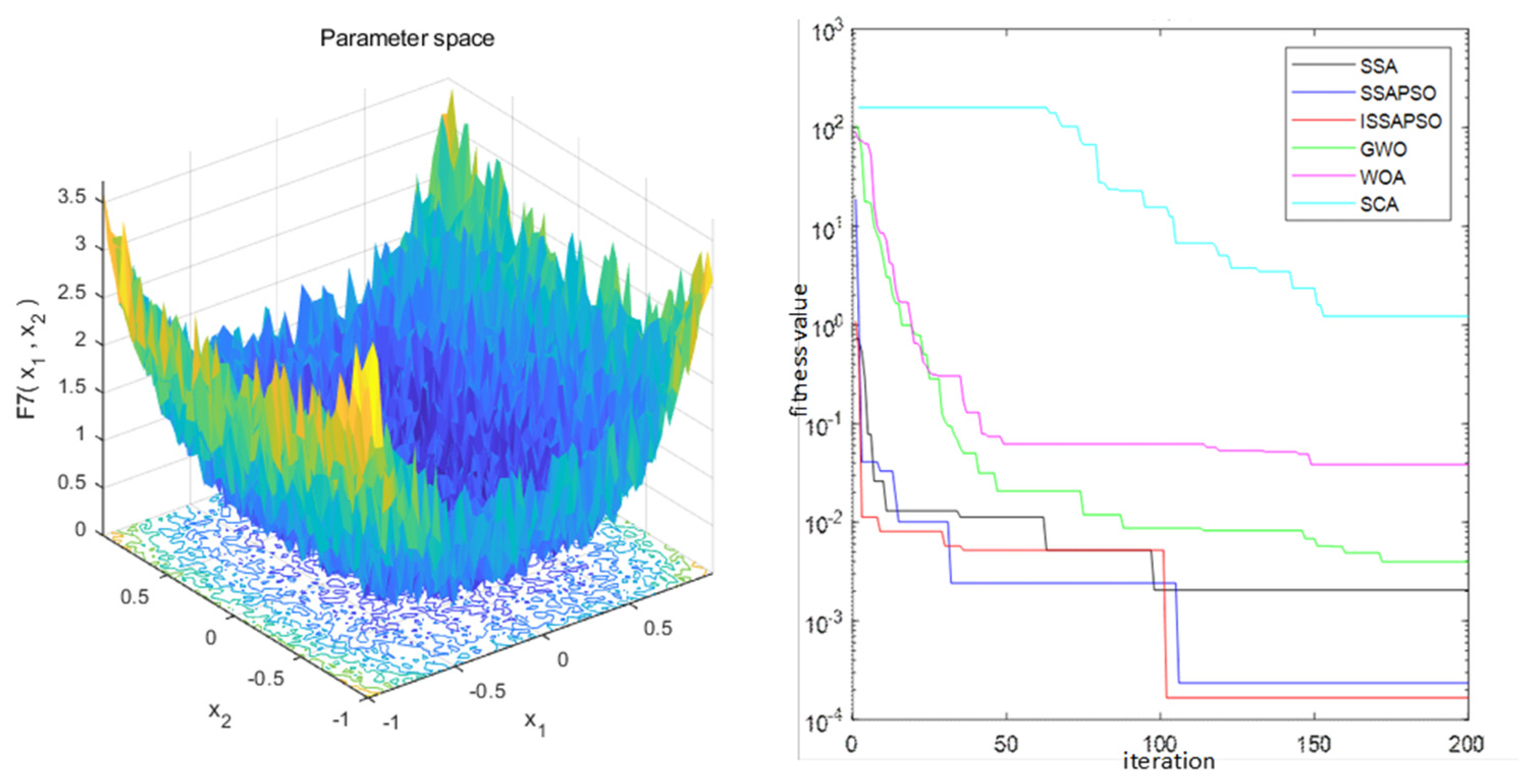

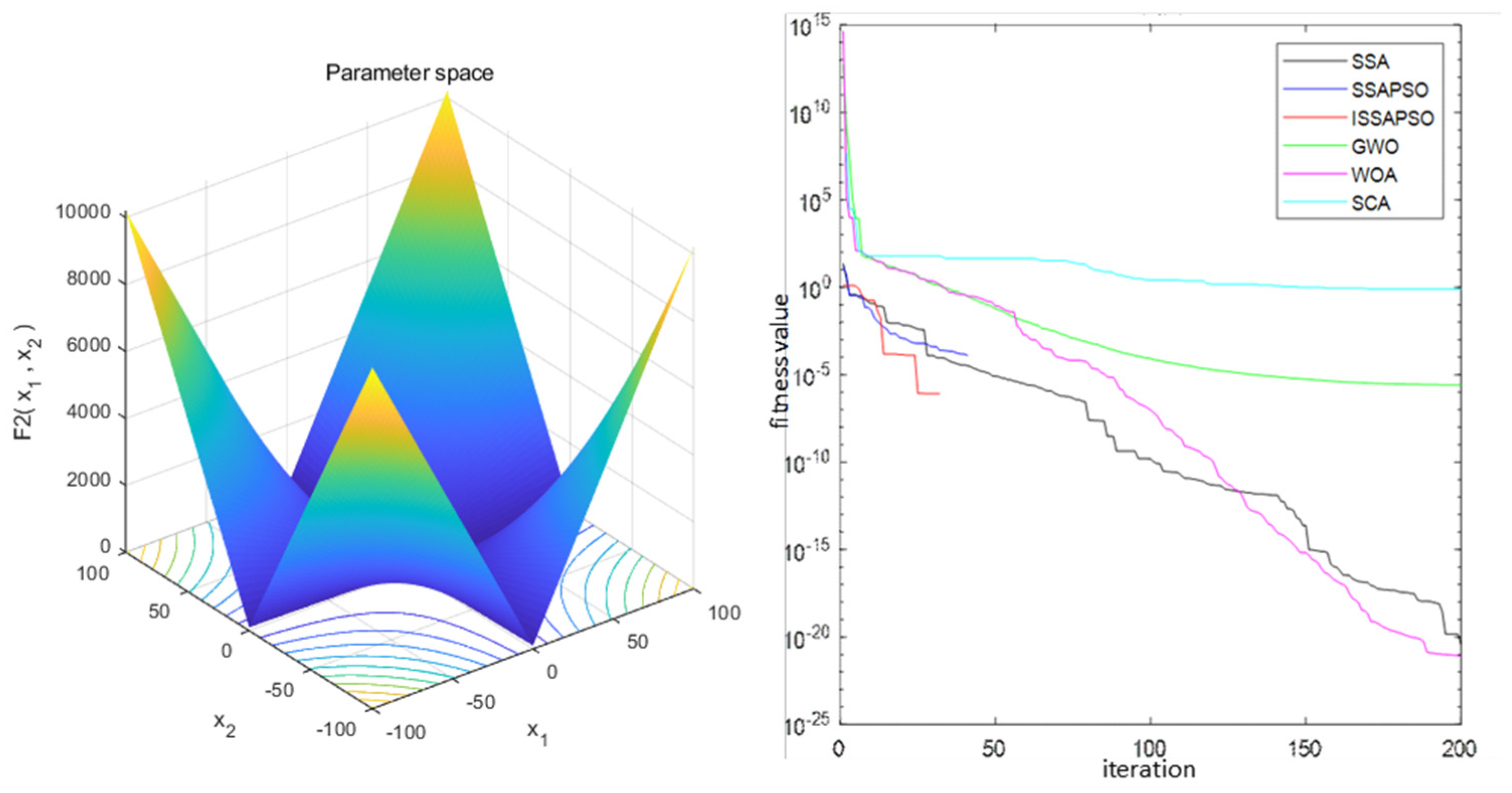

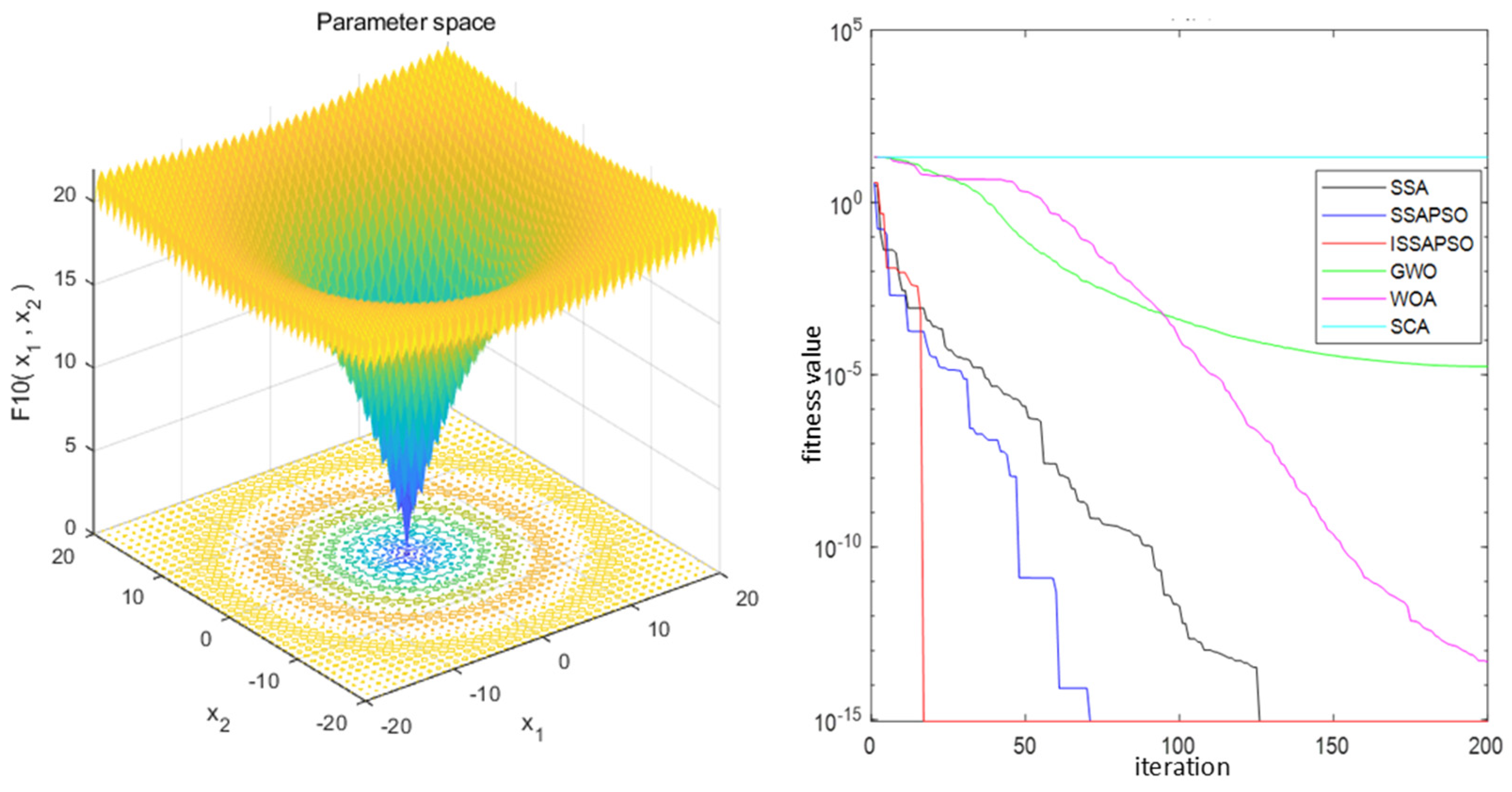

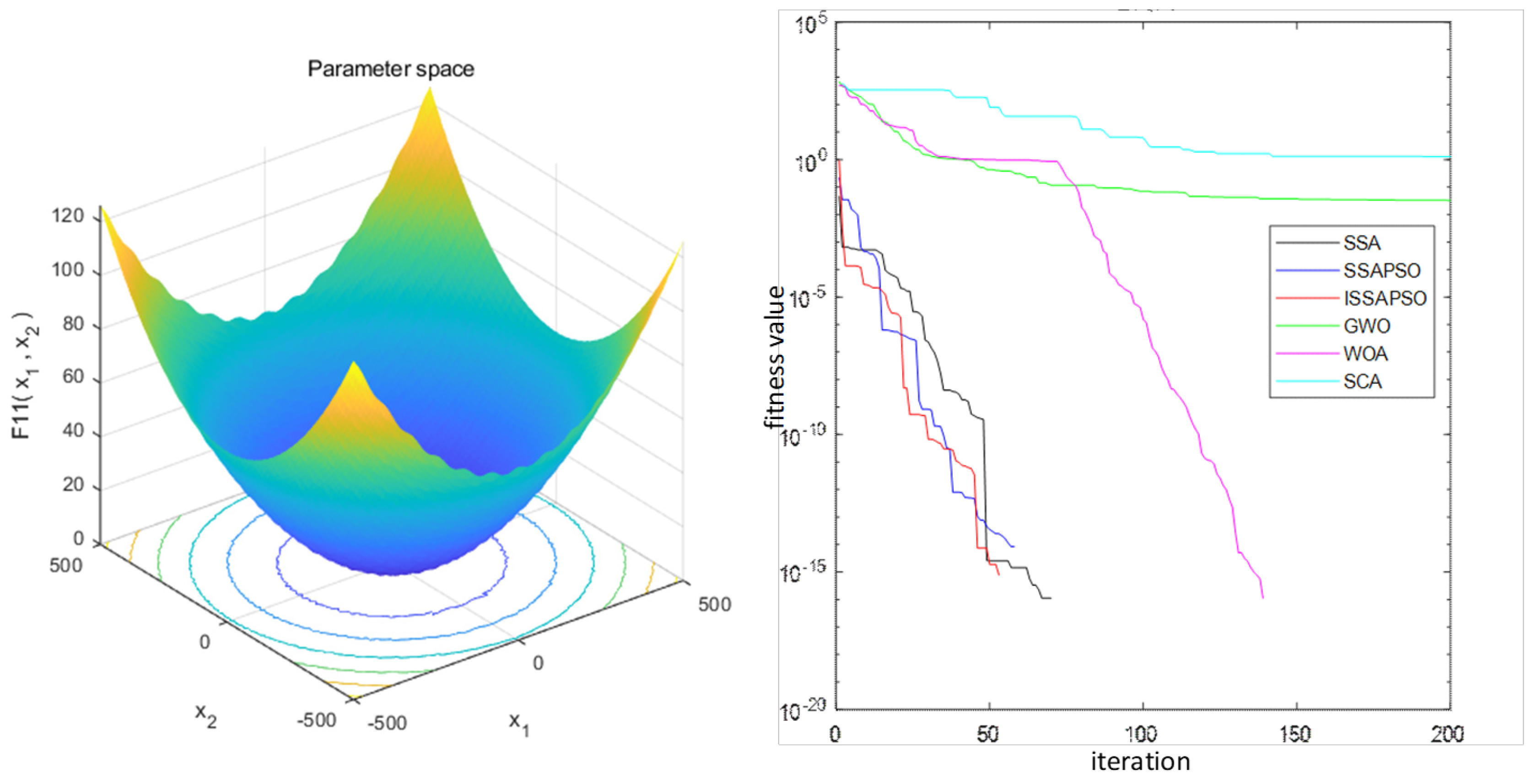

3.5. Function Test and Result Analysis

(1) Parameter setting

This section uses the MATLAB 2019b platform to verify and analyze the computational performance of the ISSA-PSO algorithm on test functions. All the comparison algorithms use a population size of 30 and 200 iterations.

(2) Test function

To verify the performance of the algorithm, this paper selects four standard test functions, which are shown in Table 1. To further validate the convergence speed of the algorithm, the iterative speed of the proposed ISSA-PSO algorithm is compared with that of the SSA, SSA-PSO, GWO, whale optimization algorithm (WOA), and SCA on the four test functions, as shown in Figure 3, Figure 4, Figure 5 and Figure 6. The figures show that ISSA-PSO has clear performance advantages on the unimodal test functions and that it consistently reaches the optimal value with the fastest iteration speed.